1. Introduction

Each year 15 million babies are born around the world, and 11.4% of the pregnancies end up in early deliveries, which is around 1 in 10 [

1]. Of these, 15–20% are medically indicated as having Preeclampsia, Intrauterine Growth Restriction (IUGR) or Abruption. Furthermore, babies born between 32–33 weeks have a 95% chance of survival, and these chances decrease along with the birth term down to a 17% chance for babies born in the 23rd week [

2]. These chances of survival are associated with the complication of the born babies, and 20% of them might face temperature, breathing and feeding problems. Similarly, 5% most likely face development complications. Additionally, the delivery cost of premature baby is, on average, 7.4% higher when compared with the normal health newborn.

IUGR is a dangerous condition that occurs during pregnancy and that indicates that the fetus is growing slowly and weighs less than the 10% for gestational age. The weight and development of the neonatal depends on the number of gestational weeks [

3]. Comparing IUGR fetuses’ weights to the healthy neonate helps to identify the critical point of Fetal Heart Rate (FHR) and when to intervene to maintain the newborn’s life [

4]. Early analysis and monitoring of HR and prenatal (antepartum) data of the fetus will help the doctors take the necessary actions by intervening at the right time to prevent deaths of premature babies. Effective analysis of these significant parameters of premature babies will definitely increase the number of healthier babies and reduce the risk of further complications.

An automatic intelligent system based on artificial intelligence (AI), machine learning (ML) and deep learning (DL) has revolutionized the diagnostic process. The ML system has demonstrated the significant performance in various fields including health using different types of data, such as clinical data, X-ray, computerized tomography (CT) scan, cardiotocography (CTG), electromyography (EMG) and genomic data [

5,

6,

7,

8,

9,

10]. Notwithstanding the implication of ML models in healthcare, it also lacks comprehensibility and transparency and is considered a black box technique [

11]. Consequently, it is necessary to develop a model with enhanced interpretability. Therefore, in the proposed study, EAI was used along with the ML techniques for early diagnosis of fetal health using FHR for Antepartum Fetal Monitoring (AFM) to classify the fetus as healthy or IUGR. A number of studies using ML and DL techniques in healthcare have been reported; however, very few of them used EAI [

12].

This paper is organized as follows:

Section 2 consists of literature reviews of related work;

Section 3 consists of material and methods, including the dataset description and a description of the classifiers;

Section 4 consists of experiments, results and discussion; finally,

Section 5 contains the conclusion.

2. Review of Related Literatures

The use of FHR for AFM to predict fetal health is an important method adopted in clinical practice to preserve fetal well-being during gestation. In this section, we outline several studies that employ machine learning to classify the IUGR and healthy fetuses by using the FHR parameters.

Gurgen et al. [

4] used Support Vector Machines (SVM) to explore the risks of IUGR, which is associated with fetal hypoxia, leading to fetal development disorders. In the study, IUGR was predicted in two stages: during the first stage by using indicators such as non-invasive Doppler pulsatility index (PI), resistance index (RI), middle cerebral artery (MCA), etc., analyzing them and then using SVM to classify the fetus as “reactive” or “non-reactive and/or fetal distress (FD)”. The second stage involved verifying the correctness of the diagnosis through a nonstress test (NST) tool. The model was tested using 44 preterm pregnancies, with and without IUGR. They found that features such as Doppler indicators PI, RI and MCA are significant in achieving the greatest accuracy (0.81), specificity (0.933), sensitivity (0.625) and positive predictive value (PPV) (0.862).

In addition, Signorini et al. [

13] used several ML techniques such as Random Forest (RF), Classification Trees (CT), Logistic Regression (LR) and SVM for antepartum fetal monitoring and detected the pathology during pregnancy using physiology-based heart rate features. The dataset consisted of 60 IUGR and 60 healthy fetus and had time, frequency and non-linear parameters. Similarly, the models were trained with 11 cardiac rate features extracted from prenatal Cardiotocographic (CTG) recording. They found that RF and CT achieved the greatest accuracy (0.911).

Furthermore, another study [

14] was conducted on children born under IUGR conditions to identify the latent risk clinical attribute. The dataset consisted of 41 IUGR (18 male) and 34 healthy (22 male). The features were collected through 24 h monitoring of blood pressure and the electrocardiogram (ECG). Moreover, the same features were collected 9 years after birth. Several classifiers, such as LR, Extreme Gradient Boosting (XGBoost) and SVM, were assembled, and these achieved 0.947 accuracy. The proposed model will help in predicting latent risks of IUGR children through monitoring the collected attributes during their development.

Similarly, Zhao et al. [

15] study aimed to propose a system for computerized analysis of FHR signal to assist medical services in decision making. The proposed system extracted 47 features from a collection of (linear and nonlinear) domains: morphological, time and frequency. Three ML algorithms were used: DT, SVM and Adaptive Boosting (AdaBoost). To enhance the system’s performance, several feature selection methods were used: Mann–Whitney–Wilcoxon Statistical Test (ST) using the

p-value as a difference determination; Principal Component Analysis (PCA). The data was obtained from CTU-UHB in the obstetrics ward at the University Hospital in Brno, Czech Republic, from 2009 until 2012. It comprises a subset, which includes 9164 intrapartum CTG recordings; out of these recordings, 552 CTG signals were selected. The results have proved that AdaBoost outperformed the other classifier. The selected feature using Mann–Whitney–Wilcoxon ST gave better results when compared to the original dataset, with an accuracy and sensitivity of 0.92. In conclusion, the results have shown the efficiency and effectiveness of the proposed solution, with a comprehensive analysis of FHR signal to assist the medical services in their decision-making through intelligent prediction. However, to the best of the authors’ knowledge, more features should be added to enhance the system’s performance level.

Furthermore, a study [

16] was conducted with the aim of finding a limited set of parameters that could be used to identify the early recognition of IUGR fetuses. The data set was the FHR Signals of 120 women (60 IUGRs and 60 normal) during their pregnancy. Several classifiers, such as LR, Naïve Bayes (NB), SVM-RBF, SVM Linear and Classification trees, were used. The study achieved the highest accuracy (0.925) using LR and linear SVM. These results show that the method should be widely used in clinics for predicting IUGR Fetuses. Similarly, Comert and Kocamaz’s [

17] study used several ML techniques, such as Artificial Neural Networks (ANN), SVM, Extreme Learning Machines (ELM), Radial Base Function Networks (RBFN) and RF. Their dataset was created by SisProto software and consisted of 2126 instances and 21 features. The FHR signals were classified as either normal or hypoxic. All algorithms showed acceptable performance level. However, ANN achieved more accurate and reliable performance, with a sensitivity of 0.997 and specificity of 0.97.

Moreover, Signorini and Magenes’ [

18] study aimed to show and discuss the acquired outcomes from Normal and IUGR populations of fetuses based on the time series of the FHR signal analysis by using Phase Rectified Signal Analysis (PRSA) to identify indices that can reduce the risk of diseases early in the pregnancy duration. The dataset consisted of 122 subjects (61 healthy and 61 IUGR) between 32–35 weeks of pregnancy, and the parameters were STV, LTI, and delta. On the other hand, the ApEn and SampEn were both calculated and used as nonlinear indices with the Lempel Ziv parameter, which is used to recognize the patterns of the FHR signal. By using LR, the study achieved an accuracy of 0.925. There are several influential factors that affect FHR variability, and these may vary between linear and nonlinear, so that only the multivariate approach can improve the differentiation between the healthy and distressed fetuses.

Chaaban et al. [

19] noted that one of the complications of Hypertensive Disorders of Pregnancy (HDP) is IUGR, which changes the behavior of features extracted from FHRs. The study aimed to extract a new set of kurtosis-based features and classical time-based features, such as Sample Entropy and Fuzzy Entropy, from the FHR signal. These features were used to discover their effect on HDP and IUGR. K-means and SVM algorithm were used on 50 IUGR and 50 normal pregnancies. The study found that kurtosis-based features and SVM achieved the highest specificity and precision (1) and a sensitivity of 0.67. Similarly, Moreira et al. [

20] presented an analysis of ML methods that can assist in detection of the fetus problem, especially the low-birth-weight problem. DT, SVM, K Nearest Neighbor (KNN), Boosting, Bagging and subspace KNN algorithms were used. The dataset consisted of 104 pregnant women suffering from hypertensive disorder during the gestation. The study found that bagged tree algorithm is the best one when compared with the other algorithms; it achieved an accuracy of 0.849.

Krupa et al. [

21] discussed an approach for FHR interpretation based on Empirical Mode Decomposition (EMD) and SVM to classify obtained FHR records as “at risk” or “normal”. The FHR records were obtained from 15 subjects and the dataset consisted of 90 randomly selected records of specific duration. These records were labeled as “normal” or “at risk” by specialized doctors. The proposed approach used EMD standard deviation as an input to SVM for the classification of FHR samples. The study achieved the geometric mean of 0.815 and the Kappa value was 0.684. This method has shown an acceptable level of validity to be implemented for the FHR classification signals. However, in order to obtain a significant clinical accurate result, the method requires validation on a larger dataset, as well as further investigation of the use of EMD and SVM classifying approaches for studying the effects of sampling rates on extracted features.

Similarly, Pini et al. [

22] developed an SVM model to predict late IUGR using the FHR. The study was performed using the CTG of 160 healthy and 102 late IUGR-pregnant women. A radial-based kernel method was used in the SVM. However, the features were selected using the RFE method. In addition to the feature extracted from the CTG, they also considered some additional features, such as GA, maternal age and the fetal gender. As a result of the non-availability of the fetus gender in most of the countries they performed experiments with and without the fetus gender. However, no significant difference appeared between this and the outcome of the classifier performance during the two previously mentioned experiments. They have achieved the highest accuracy (0.84), a sensitivity of 0.843 and a specificity of 0.85.

The studies have shown the significance of ML in monitoring fetus health during the gestation period [

10,

23,

24]. Furthermore, as there are different approaches in interpreting FHR, cardiotocography is widely implemented in hospitals to provide fetal monitoring. However, some studies have been conducted for antepartum fetal monitoring using fetal heart rate data. The studies have achieved good results but can be further improved and investigated using other ML algorithms. Furthermore, there is a need to find the most significant features that can help in the early prediction of IUGR fetus. Therefore, in this study, we aimed to develop a model with enhanced performance and that would reduce the number of features for monitoring fetal health. Moreover, EAI was used to enhance the interpretability of the ML models, to generate confidence in the prediction, to add to comprehensibility and to help doctors in their decision-making. To the best of our knowledge, EAI techniques have never been used to predict IUGR before.

4. Experiments and Results

The study was implemented in Python 3.8.9. Sklearn library ver. 0.23.2 was used to implement ML models. Other libraries included NumPy ver. 1.18.5, Pandas ver. 1.3.3, matplotlib ver. 3.3.1 and Dalex ver. 1.4.1. The dataset was partitioned into training and testing using 70–30 distribution. A normalization technique was applied to SVM, since it is a linear-based model, to make it normally distributed in a standard scaler. Furthermore, a Recursive Feature Elimination (RFE) feature selection technique was applied while grid search and cross-validation were performed to find the best values for each algorithm parameter.

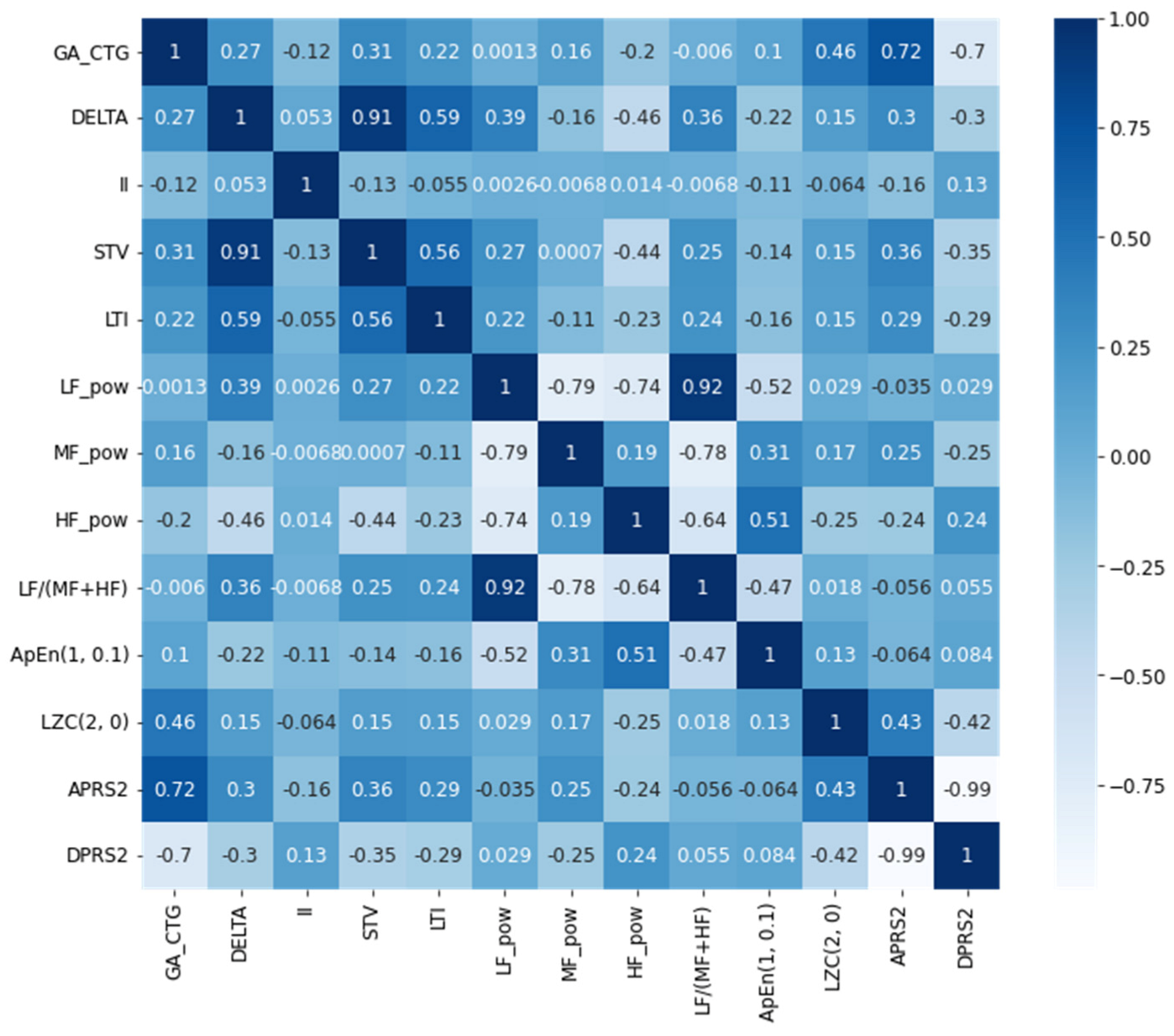

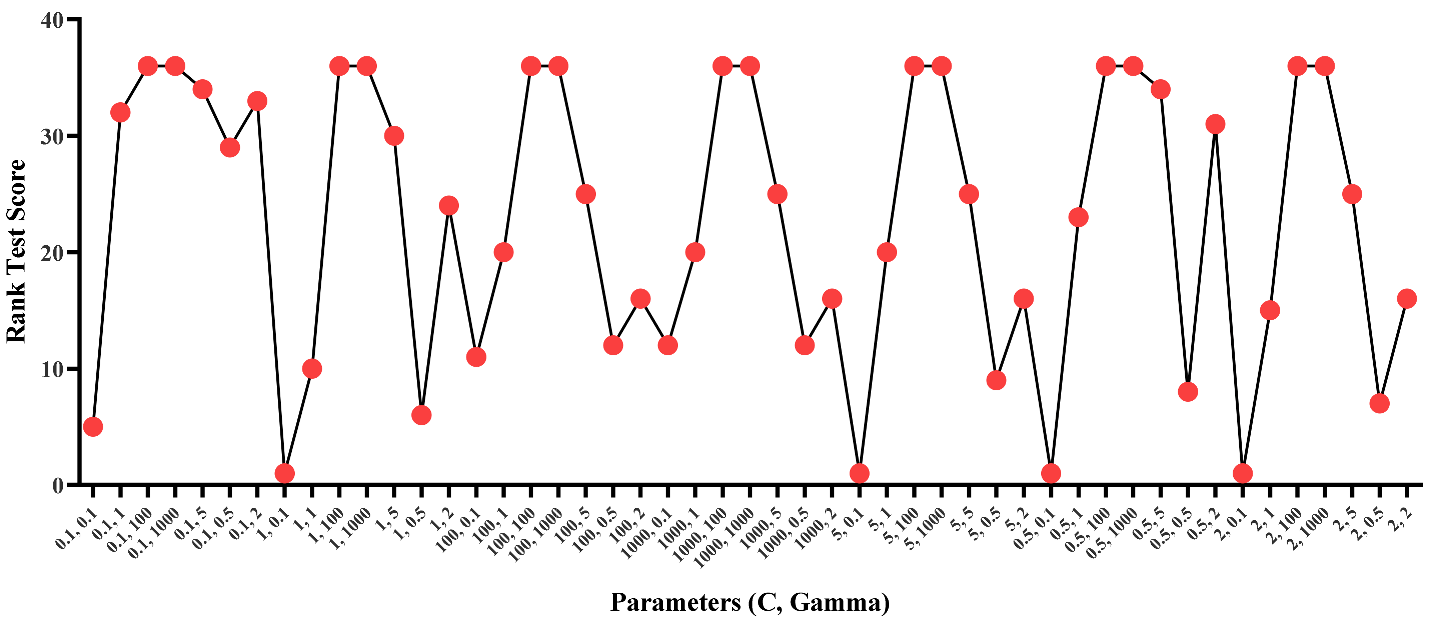

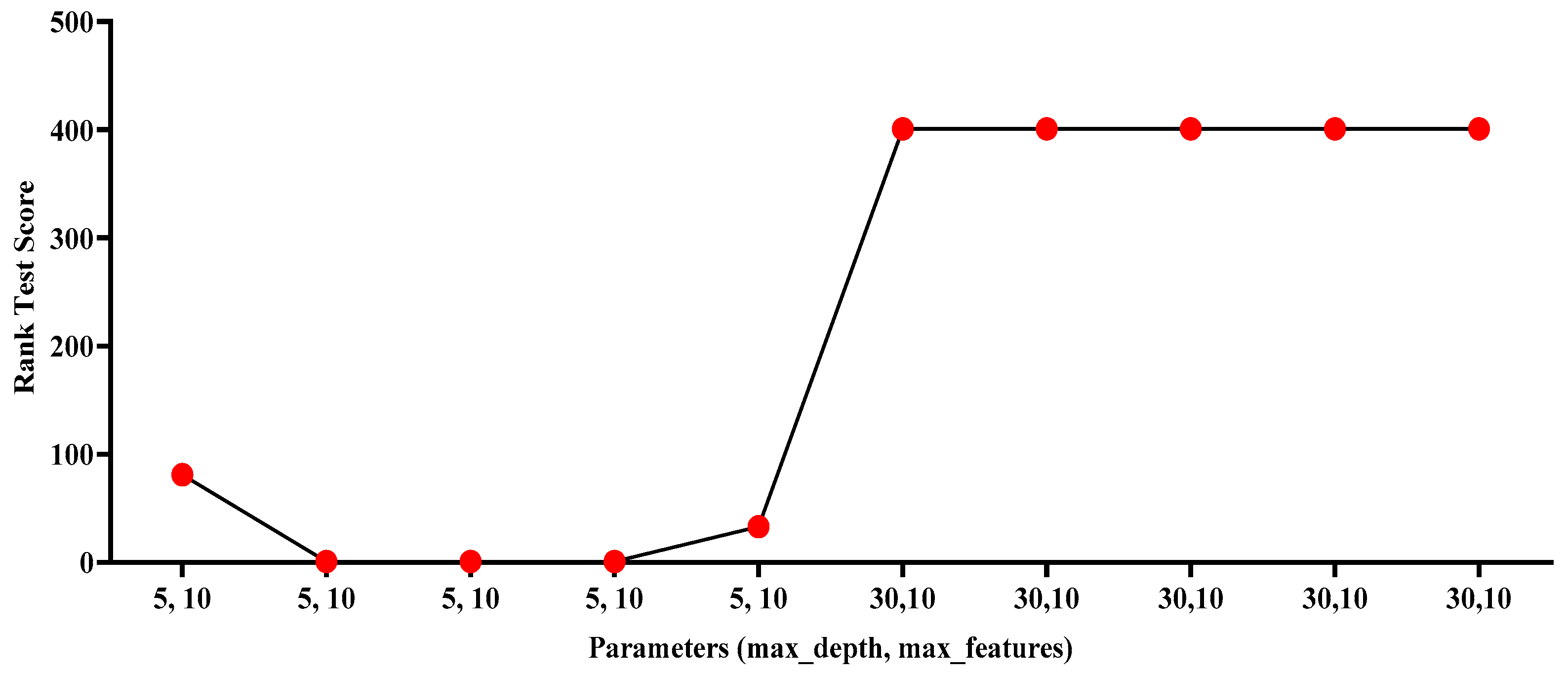

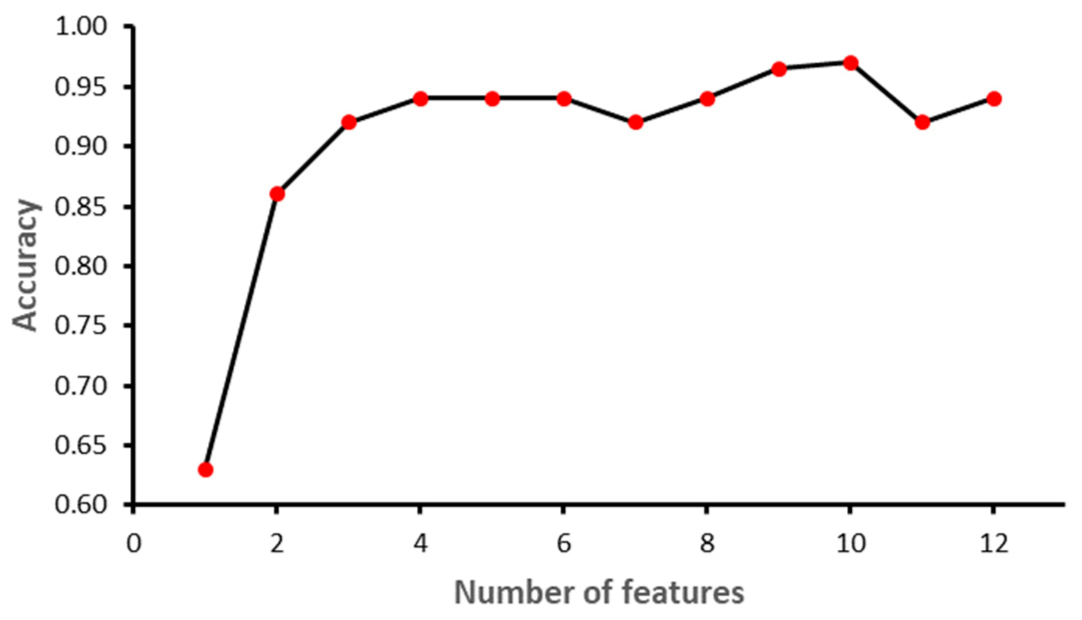

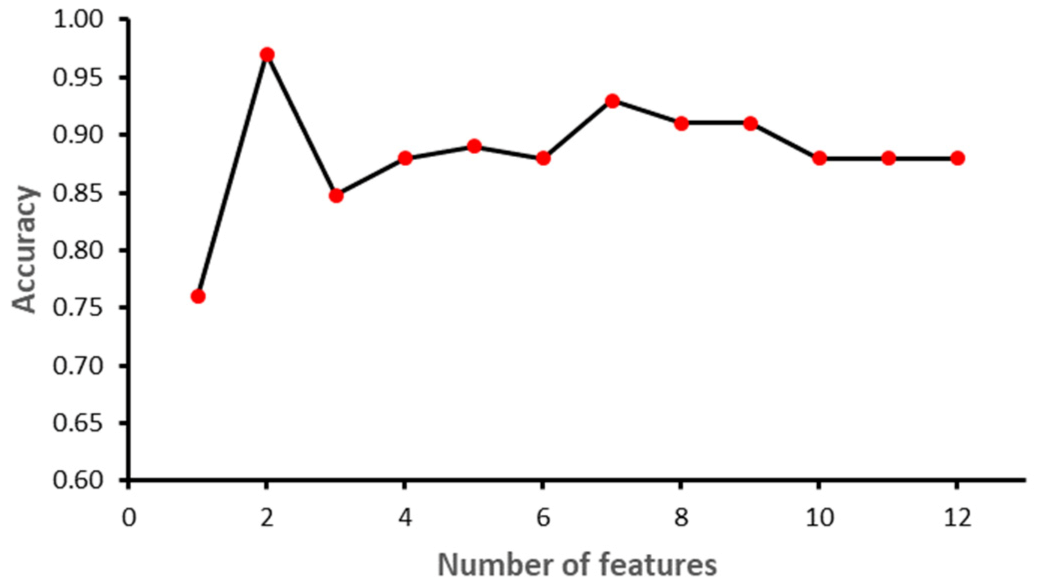

Figure 6,

Figure 7,

Figure 8 and

Figure 9 show the outcome of the RFE for each classifier.

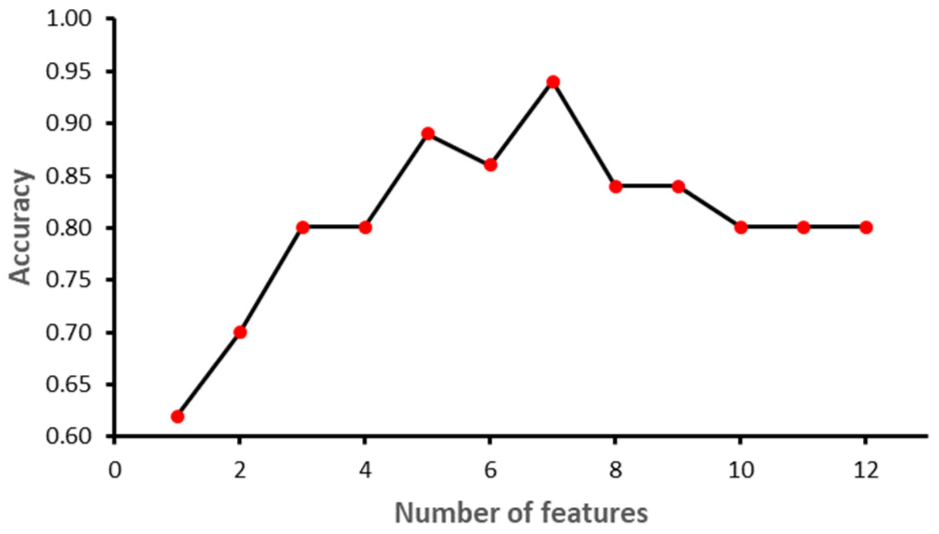

Figure 6 shows that with seven features, while the SVM model achieved the highest performance in terms of accuracy. Initially, the performance of the model improved with the increase in the number of features, but with eight features the performance deteriorated. The selected features for SVM model were: ‘II’, ‘STV’, ‘LTI’, ‘MF_pow’, ‘HF_pow’, ‘LF/(MF + HF)’ and ‘ApEn(1, 0)’. However, for the RF model, the best performance was achieved with 10 features: ‘GA_CTG’, ‘DELTA’, ‘STV’, ‘LTI’, ‘LF_pow’, ‘HF_pow’, ‘LF/(MF + HF)’, ‘ApEn(1, 0.1)’, ‘LZC(2, 0)’ and ‘APRS’.

Figure 7 shows the impact of feature selection on the performance of the RF model. The performance of the RF algorithm initially increased as the features were added, but after six features the performance degraded slightly; however, by eight features the performance start to increase again. Similarly, KNN achieved the best performance with two features, namely GA_CTG’ and ’LZC (2,0), as shown in the

Figure 8. Finally, the GB model produced the best performance with three features: ‘GA_CTG’, ‘STV’ and ‘LTI’, as shown in

Figure 9.

Table 6 contains the selected features using RFE for each classifier.

The evaluation parameters used for comparing the classifiers’ performance in the study are Accuracy (ACC), Sensitivity (SN), Specificity (SP), Positive Predicted Value (PPV), Negative Predicted Value (PPV) and F1-score. The formula for the evaluation measures is represented in the equations below.

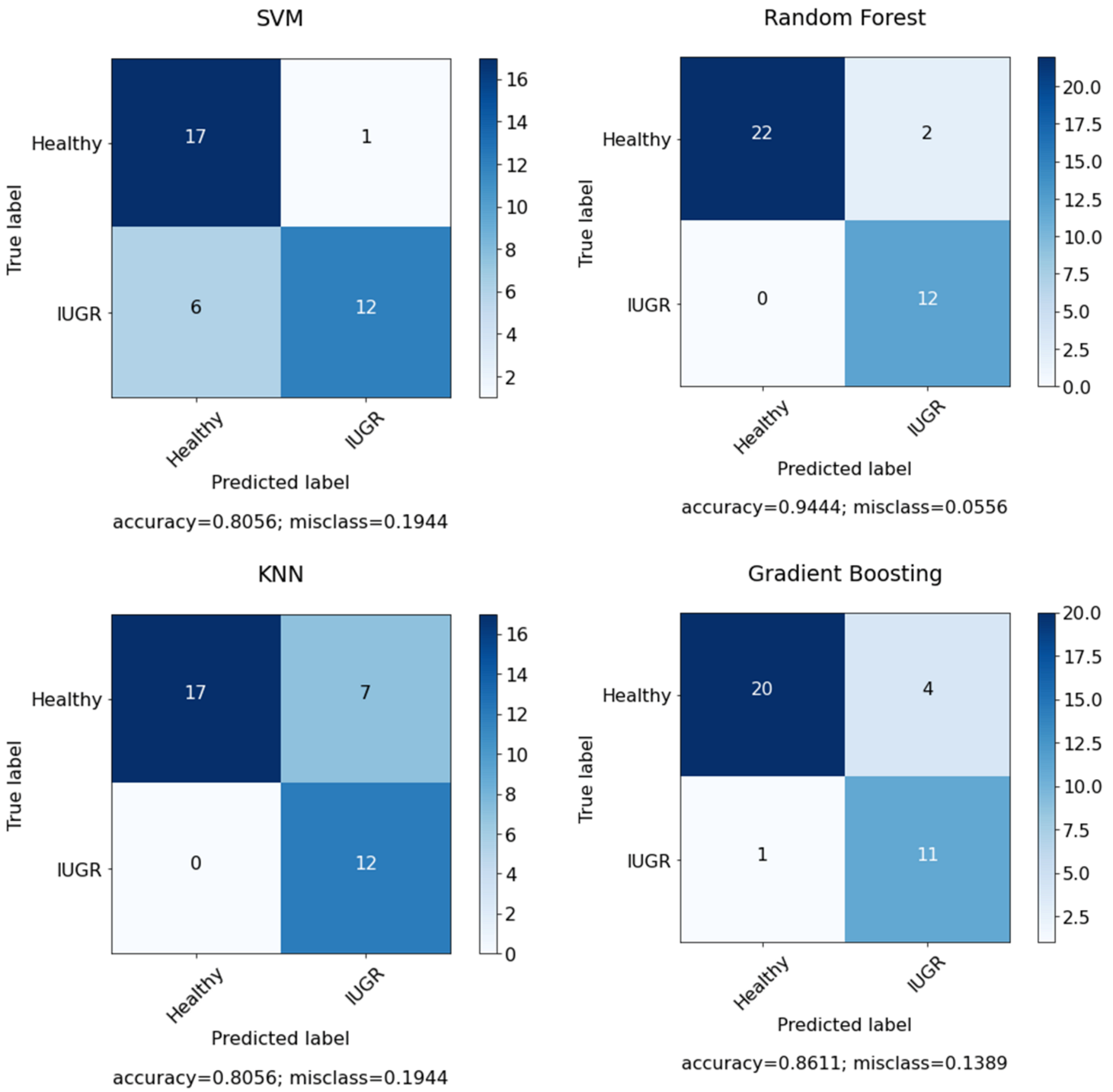

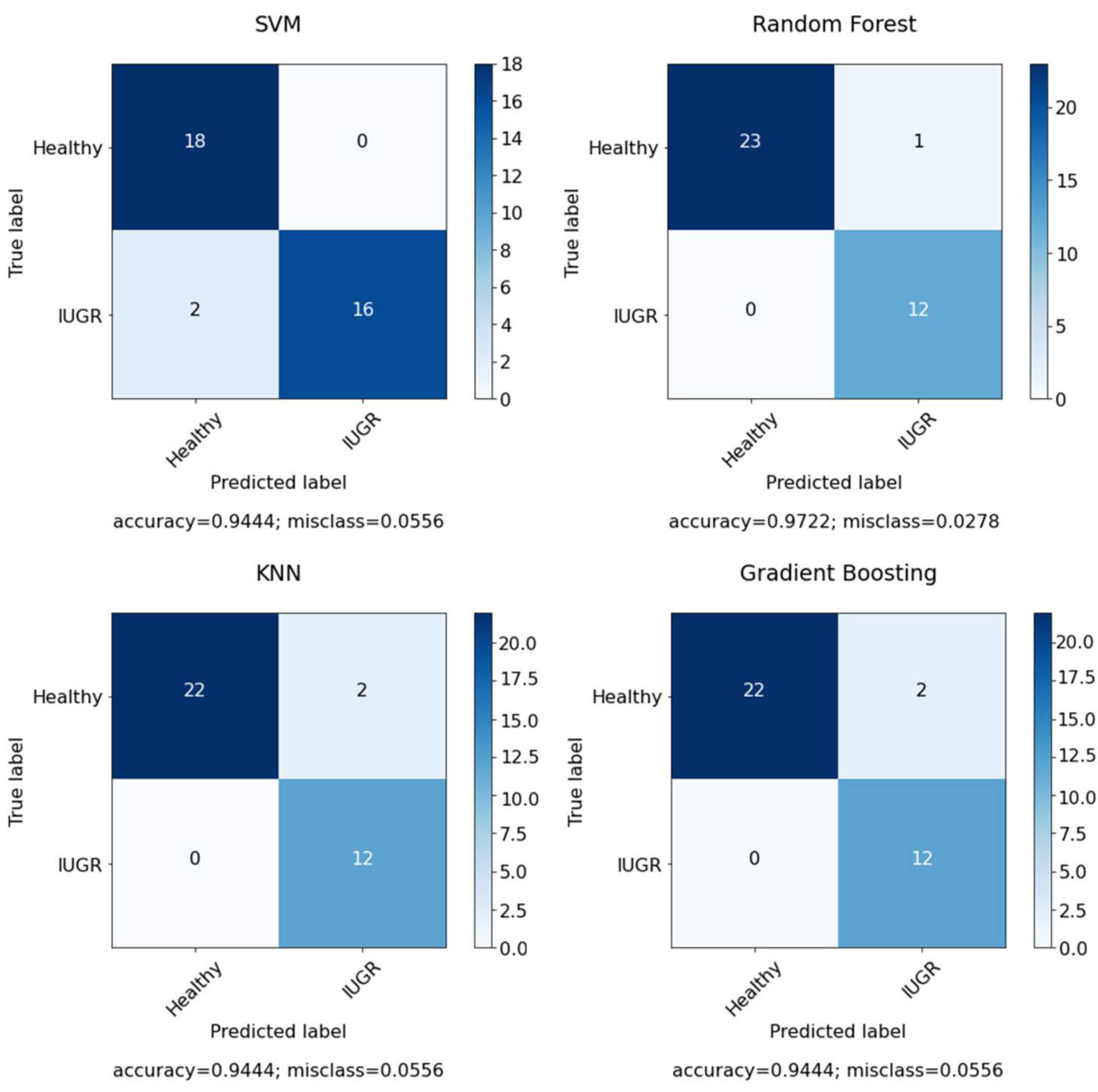

Table 7 indicates the performance comparison of classifiers with and without feature selection technique using dataset I.

After applying feature selection with RFE, the accuracy increased to 0.92 in SVM, with n = 7 selected features; however, after optimizing the model with grid search by using the C and gamma parameters, the results increased to 0.94. In the case of RF, we applied feature selection with RFE and achieved the same with an accuracy of 0.94 with random state = 45 to have fixed accuracy results in each run and with n = 10 selected features. After that, the model was optimized with grid search by using the number of estimators, the maximum depth, the minimum samples split, the minimum samples leaf and the maximum features. The results increased to an accuracy of 0.97 with random state = 50 and n = 10 selected features. However, KNN model performance gives the same result with and without optimization. Due to the fact that KNN model is a lazy learner model and there are not huge parameters in the model. There was a minor increase in PPV and F1-score. However, the performance of the GB model was greatly improved after the optimization and feature selection.

Figure 10 presents the confusion matrix for the selected features using dataset I. However,

Figure 11 shows the confusion matrix for the selected features and optimized models using dataset I.

The performance of the models was also compared with the baseline study. Initially, the performance of the model was compared with Signorini et al. [

13], and later the best performing model was tested on Pini et al.’s [

22] dataset using the selected features. Similar to baseline, RF outperformed the other models in terms of ACC, SN, PPV and NPV, while the SP value of the baseline study is similar to that of the proposed study. Furthermore, the number of features were also reduced, although Pini et al. [

22] achieved the highest results with SVM by using the radial based kernel function. The proposed RF model has achieved better results in term of ACC, SP, PPV and NPV. However, the SN of the proposed model is slightly higher than that of Pini et al. [

22].

Table 8 presents the comparison of the proposed study and the baseline study.

Generating Explanation Using Explainable Artificial Intelligence (EAI)

Despite the significant outcome of the ML and DL models in the prediction, these techniques are also considered as a black box and lack interpretability and comprehensibility [

30]. In the current study the explanation was generated using Shapley Additive Explanations (SHAP) and Local Interpretable Model-agnostic Explanations (LIME) to explain the prediction. SHAP uses game theory to generate the explanations to identify how each feature contributes to the prediction.

Figure 12 shows the global mean importance mean (SHAP values). However,

Figure 13 presents the information on one of the samples that is decomposed into attributes and the impact of each attribute, as well as the combined impact of the attributes for that particular sample. LIME is used to generate the explanation.

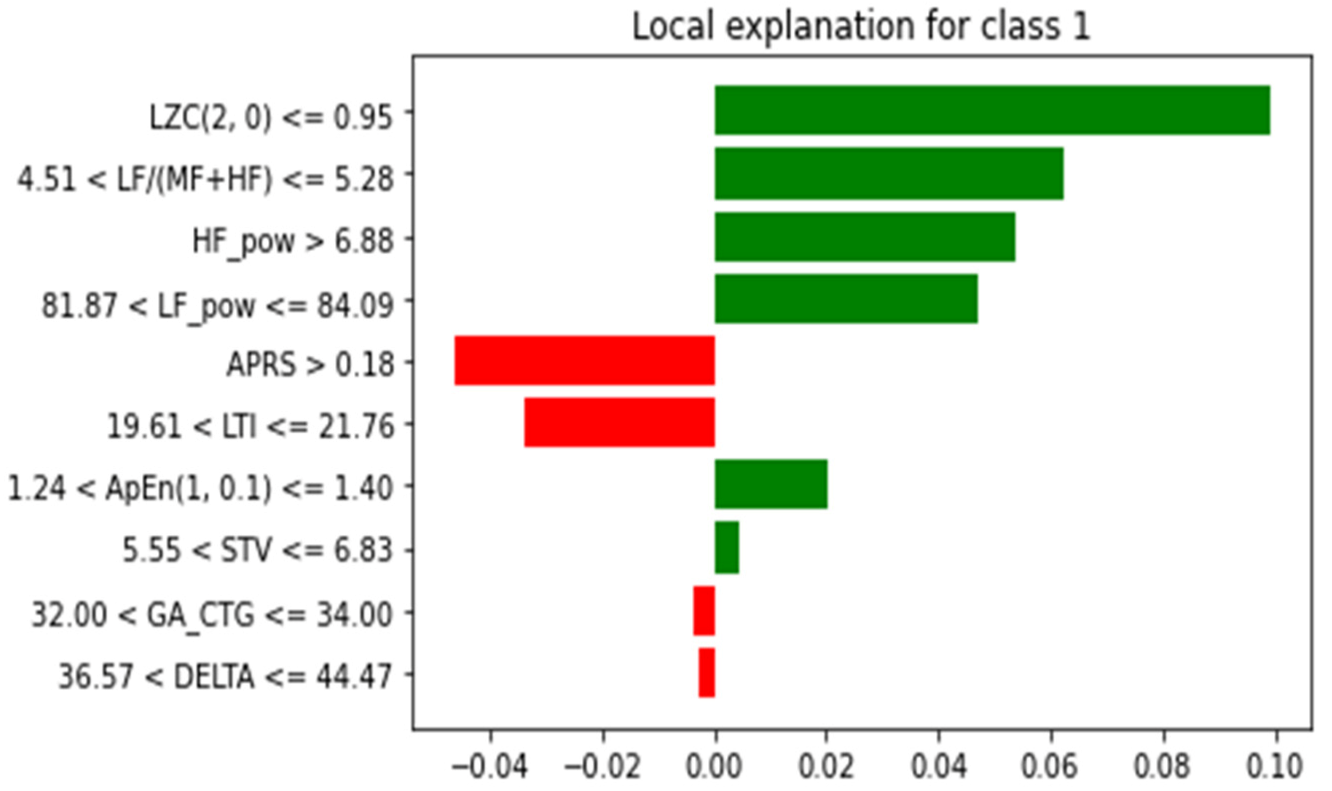

As can be seen from

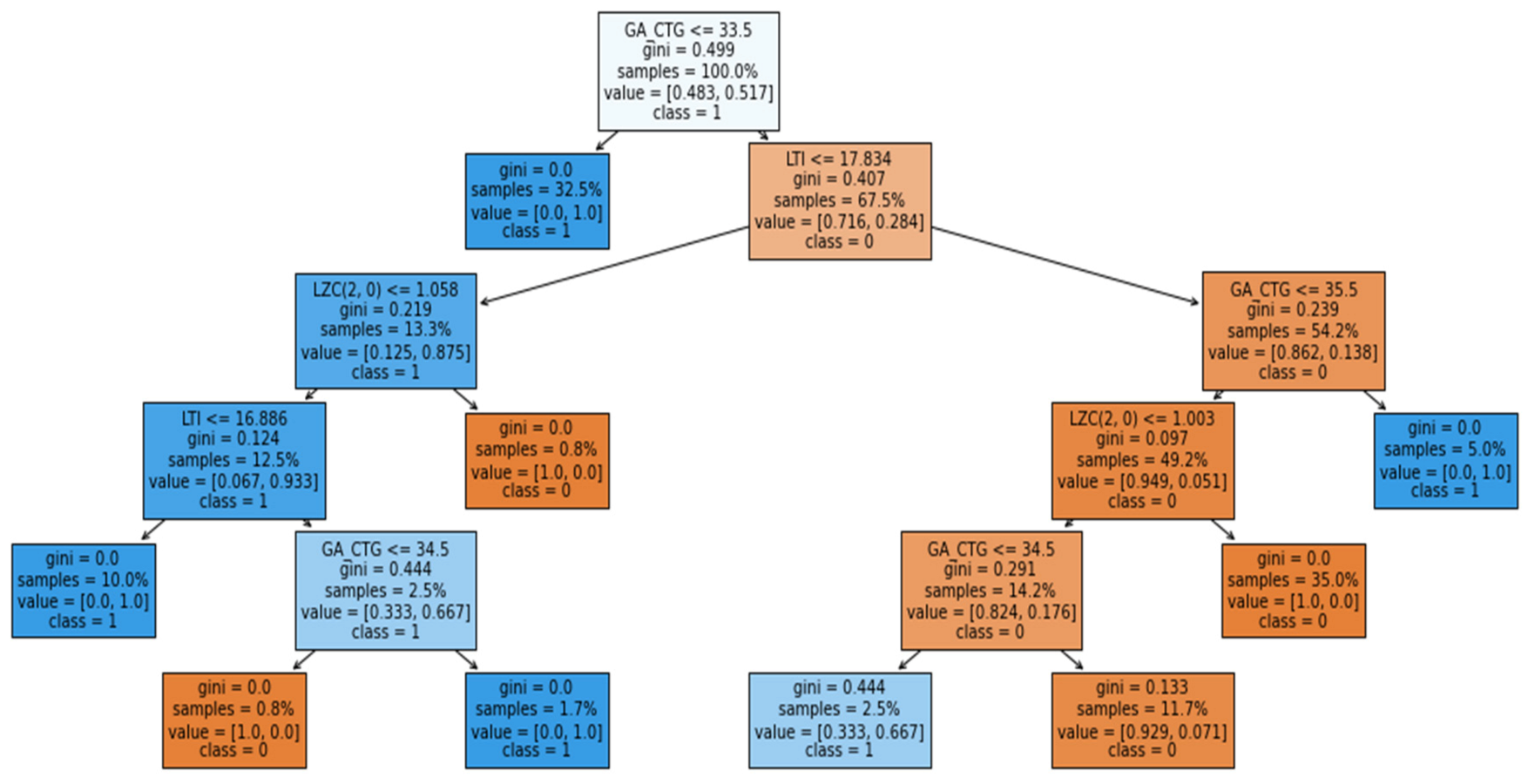

Figure 13, for predicting the IUGR represented as class 1, the green bar indicates that it supports the model that makes the prediction. In the figure, the name of the attribute is mentioned along with some value and range. This indicates that, during prediction, these specific values of the attributes help the model make the prediction (represented by the green bar). However, when represented by red, they indicate that the specific values of these attributes, such as APRS > 0.18, 81.87 < LF_pow <= 84.09, 32.00 < GA <= 34.00 and 36.57 < DELTA <= 44.47, not very well supported. However, this does not mean that these attributes are not significant; it only explains the prediction of that particular sample using the proposed RF model. In addition, for further interpretation the explanation of the RF model was generated in terms of the tree structure, as shown in

Figure 14.

The main contribution of the study is:

To introduce Explainable Artificial Intelligence (EAI) in fetus antepartum monitoring using FHR.

To propose an enhanced model with improved performance and a reduced set of features.

To further validate the model with another dataset. Indeed, the model outperformed the two baseline models.

In spite of all these advantages, there is one limitation and that is the size of the dataset.