seL4 Microkernel for Virtualization Use-Cases: Potential Directions towards a Standard VMM

Abstract

1. Introduction

- To bring the community together to build a standard VMM as a shared effort, by highlighting the challenges and potential directions of a standard VMM;

- This article does not intend to define a standard VMM. Instead, it presents potential key design principles and the feature set support needed toward seL4 VMM standardization. The items presented in this paper can serve as the basis for an extended version with a more comprehensive list of required properties and features.

2. Why seL4?

3. Background

3.1. Microkernel & Monolithic Kernel

3.2. Virtualization

3.3. Related Hypervisors and VMMs

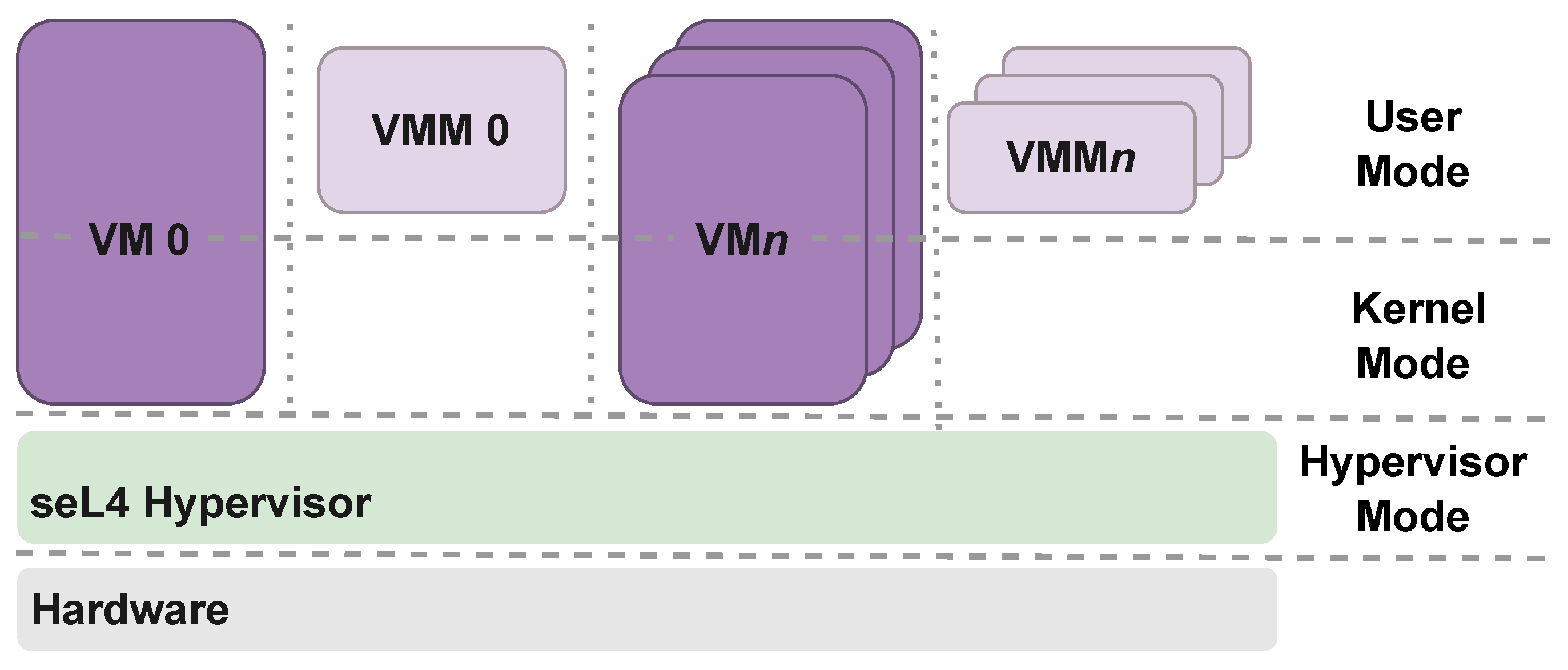

3.4. seL4 VMM

4. Philosophy of a Standard VMM

4.1. Design Principles

4.1.1. Official and Open Source

4.1.2. Modular: Maintainable and Upgradable

4.1.3. Portable: Hardware Independence

4.1.4. Scalable: Application-Agnostic

4.1.5. Secured by Design

4.2. Feature Set Support Tenets

4.2.1. System Configuration

4.2.2. Multicore & Time Isolation

- Direct Mapping Configuration: multiple single-core VMs run concurrently, physically distributed over dedicated CPUs. Figure 3 shows the Direct Mapping Configuration approach.

- Hybrid Multiprocessing Configuration: as in the Direct Mapping Configuration, multiple single-core VMs can run on dedicated CPUs; in addition, multicore VMs can run across different CPUs. Figure 4 shows the Hybrid Multiprocessing Configuration approach.

- vCPU Scheduling: the ability to schedule threads on each pCPU based on their priority, credit-based time slicing, or budgets, depending on the selected algorithm. For example, whether vCPU migration (a vCPU moving from pCPU 0 to pCPU 1) is supported could be a design configuration option, as could the possibility of binding a set of vCPUs to specific pCPUs. Another potential configuration is static partitioning, where all vCPUs are assigned to pCPUs at design time and cannot change at run time. In addition, supporting dynamic and static VM configurations together in a hybrid mode could be desirable. A multiprocessing protocol with acquire/release ownership of vCPUs should also be supported. The seL4 kernel provides a priority-based round-robin scheduler that chooses the next thread to run on a specific processing core. It considers only runnable threads, that is, threads that have been resumed and are not blocked on any IPC operation, and picks the runnable thread with the highest priority (priorities range from 0 to 255). When multiple runnable TCBs have the same priority, they are scheduled in first-in, first-out round-robin order. The seL4 kernel scheduler could be extended to VMMs.

- pIRQ/vIRQ ownership: physical interrupts (pIRQs) shall be virtualized (vIRQs), which requires a multiprocessing protocol with simple acquire/release ownership of interrupts per pCPU/vCPU target. In addition, support for hardware-assisted interrupt controllers with multicore support is required.

- Inter-vCPU/inter-pCPU communication: another key aspect of multiprocessing architectures is the ability of pCPUs to communicate with one another. Communication between vCPUs is equally important, so a standard VMM must support not only inter-pCPU but also inter-vCPU communication. Communication is central to multiprocessing protocols, but it should be designed so that it is easy to verify and validate.
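To make the difference between the Direct Mapping and Hybrid Multiprocessing Configurations concrete, the following C sketch classifies a static VM-to-pCPU assignment. It is a hypothetical illustration, not part of seL4 or of any existing VMM: `struct vm_cfg`, `classify_config`, and the bitmask encoding are assumptions, and for simplicity the sketch also assumes that no pCPU is shared between VMs in either configuration.

```c
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical static VM description: one bit per pCPU the VM runs on. */
struct vm_cfg {
    uint32_t pcpu_mask; /* bit i set => the VM has a vCPU pinned to pCPU i */
};

enum cfg_kind { CFG_INVALID, CFG_DIRECT_MAPPING, CFG_HYBRID };

/* Number of pCPUs used by a VM (bits set in its mask). */
static unsigned count_bits(uint32_t m)
{
    unsigned n = 0;
    while (m != 0) {
        m &= m - 1; /* clear the lowest set bit */
        n++;
    }
    return n;
}

/*
 * Classify a static configuration (assumption: pCPUs are never shared):
 * - CFG_DIRECT_MAPPING: every VM is single-core and owns a dedicated pCPU.
 * - CFG_HYBRID: at least one VM spans several pCPUs.
 * - CFG_INVALID: a VM has no pCPU, or two VMs claim the same pCPU.
 */
enum cfg_kind classify_config(const struct vm_cfg *vms, size_t n)
{
    uint32_t used = 0;
    bool all_single = true;

    for (size_t i = 0; i < n; i++) {
        unsigned cores = count_bits(vms[i].pcpu_mask);
        if (cores == 0 || (used & vms[i].pcpu_mask) != 0)
            return CFG_INVALID;
        used |= vms[i].pcpu_mask;
        if (cores > 1)
            all_single = false;
    }
    return all_single ? CFG_DIRECT_MAPPING : CFG_HYBRID;
}
```

In a static-partitioning setup, a check of this kind could run at design time and reject any configuration classified as `CFG_INVALID` before the system image is built.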
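The seL4 scheduling policy described in the vCPU Scheduling item (pick the highest-priority runnable thread, and rotate threads of equal priority in first-in, first-out order) can be modeled with one FIFO ready queue per priority. The C sketch below is a simplified model, not seL4's implementation: all names are hypothetical, each call to `rq_pick_next` is treated as the start of a new time slice, and blocked threads are dropped lazily when they reach the head of their queue rather than when they block.

```c
#include <stdbool.h>
#include <stddef.h>

#define NUM_PRIOS 256 /* seL4 thread priorities range from 0 to 255 */

/* Minimal stand-in for a thread control block (hypothetical fields). */
struct tcb {
    int id;
    unsigned prio;    /* 0 (lowest) .. 255 (highest) */
    bool runnable;    /* resumed and not blocked on an IPC operation */
    struct tcb *next; /* link in its priority's ready queue */
};

/* One FIFO ready queue per priority level. */
struct ready_queues {
    struct tcb *head[NUM_PRIOS];
    struct tcb *tail[NUM_PRIOS];
};

/* Append a thread to the tail of the queue for its priority. */
void rq_enqueue(struct ready_queues *rq, struct tcb *t)
{
    t->next = NULL;
    if (rq->tail[t->prio] != NULL)
        rq->tail[t->prio]->next = t;
    else
        rq->head[t->prio] = t;
    rq->tail[t->prio] = t;
}

/*
 * Choose the thread for the next time slice: the first runnable thread in
 * the highest non-empty priority queue. The chosen thread is rotated to the
 * tail of its queue, so equal-priority threads take turns in FIFO order.
 * Threads found not runnable are removed from the queue.
 */
struct tcb *rq_pick_next(struct ready_queues *rq)
{
    for (int p = NUM_PRIOS - 1; p >= 0; p--) {
        while (rq->head[p] != NULL) {
            struct tcb *t = rq->head[p];
            rq->head[p] = t->next;
            if (rq->head[p] == NULL)
                rq->tail[p] = NULL;
            if (t->runnable) {
                rq_enqueue(rq, t);
                return t;
            }
        }
    }
    return NULL; /* nothing runnable: an idle thread would run */
}
```

With two runnable threads at priority 10 and one at priority 5, successive calls alternate between the two priority-10 threads; the priority-5 thread runs only once neither of them is runnable. A production scheduler would typically replace the linear scan over priorities with a priority bitmap.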
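The acquire/release ownership protocol called for both for vCPUs and for pIRQ/vIRQ targets can be as simple as one atomic owner field per resource. The following C11 sketch is an assumption about how such a protocol might look (the `owned_resource` type and its functions are hypothetical): acquisition succeeds only when the resource is unowned, and only the current owner can release it.

```c
#include <stdatomic.h>
#include <stdbool.h>

#define OWNER_NONE (-1) /* resource currently unowned */

/* Hypothetical ownership record for a vCPU or a virtualized interrupt. */
struct owned_resource {
    atomic_int owner; /* id of the owning pCPU/vCPU, or OWNER_NONE */
};

void resource_init(struct owned_resource *r)
{
    atomic_init(&r->owner, OWNER_NONE);
}

/* Take ownership for 'who' (a non-negative id); fails if already owned. */
bool resource_acquire(struct owned_resource *r, int who)
{
    int expected = OWNER_NONE;
    return atomic_compare_exchange_strong_explicit(
        &r->owner, &expected, who,
        memory_order_acquire, memory_order_relaxed);
}

/* Give up ownership; fails unless 'who' is the current owner. */
bool resource_release(struct owned_resource *r, int who)
{
    int expected = who;
    return atomic_compare_exchange_strong_explicit(
        &r->owner, &expected, OWNER_NONE,
        memory_order_release, memory_order_relaxed);
}
```

Because each operation is a single compare-and-swap on one word, the protocol has very few states, which keeps it amenable to verification and validation.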
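Inter-vCPU and inter-pCPU communication is often built on shared memory. As one possible illustration (an assumption, not a mechanism defined by seL4 or by this article), the C11 sketch below implements a single-producer, single-consumer message ring that one vCPU could use to pass messages to another. The names are hypothetical, and the signal that wakes the receiver, for example an seL4 notification or an inter-processor interrupt, is omitted.

```c
#include <stdatomic.h>
#include <stdbool.h>
#include <stdint.h>

#define RING_SIZE 8u /* must be a power of two so counters can wrap */

/* Hypothetical shared-memory mailbox between two vCPUs. */
struct msg_ring {
    uint32_t slots[RING_SIZE];
    atomic_uint head; /* next slot to read; written only by the consumer */
    atomic_uint tail; /* next slot to write; written only by the producer */
};

void ring_init(struct msg_ring *r)
{
    atomic_init(&r->head, 0u);
    atomic_init(&r->tail, 0u);
}

/* Producer side: returns false if the ring is full. */
bool ring_send(struct msg_ring *r, uint32_t msg)
{
    unsigned tail = atomic_load_explicit(&r->tail, memory_order_relaxed);
    unsigned head = atomic_load_explicit(&r->head, memory_order_acquire);
    if (tail - head == RING_SIZE)
        return false;
    r->slots[tail % RING_SIZE] = msg;
    /* release: the slot write becomes visible before the new tail */
    atomic_store_explicit(&r->tail, tail + 1, memory_order_release);
    return true;
}

/* Consumer side: returns false if the ring is empty. */
bool ring_recv(struct msg_ring *r, uint32_t *msg)
{
    unsigned head = atomic_load_explicit(&r->head, memory_order_relaxed);
    unsigned tail = atomic_load_explicit(&r->tail, memory_order_acquire);
    if (head == tail)
        return false;
    *msg = r->slots[head % RING_SIZE];
    /* release: the slot is read before the producer may reuse it */
    atomic_store_explicit(&r->head, head + 1, memory_order_release);
    return true;
}
```

Since each index has exactly one writer, the ring needs no locks, and its invariants (0 ≤ tail − head ≤ RING_SIZE) are small enough to state and check formally.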

4.2.3. Memory Isolation

- Configurable VM Virtual Address Space: multiple virtual address spaces are an important feature supported by high-end processors and are of equally paramount importance for hardware-assisted virtualization. There should be separate virtual address spaces for the different software entities: the hypervisor, the VMM, and their respective VMs. User-controlled, configurable address spaces are also important features for VMs, for example for (i) setting up contiguous virtual address ranges from fragmented physical memory, as well as small memory segments shared with other VMs; (ii) hiding physical platform memory segments or devices from the VMs; and (iii) avoiding the need to recompile a non-relocatable VM image.

- Device memory isolation by hardware support or purely in software: devices that are connected to the System-on-Chip (SoC) bus interconnect as bus masters can initiate DMA read and write transactions to and from main memory. This memory, typically DRAM, is physically shared and logically partitioned among the VMs by the hypervisor. A standard VMM could meet the following requirements: (i) a device can only access the memory of the VM it belongs to; (ii) the device could understand the virtual address space of its VM; and (iii) the virtualization layer could intercept all accesses to the device, decode only those intended to configure its DMA engine, perform the corresponding address translation if needed, and control access to specific physical memory regions. To meet these three requirements, a standard VMM requires support for either an IOMMU (with one- or two-stage translation regimes) or software mediation mechanisms.

- Cache isolation through page coloring: micro-architectural hardware features such as pipelines, branch predictors, and caches are typically available and essential for well-performing CPUs. These hardware enhancements are mostly considered software-transparent, but they leave traces behind and open up backdoors that attackers can exploit to break memory isolation and, consequently, compromise the memory confidentiality of a given VM. One mitigation for this problem is page coloring applied in software, which could be an optional feature of a standard VMM. Page coloring assigns frames to VMs so that frames belonging to different VMs do not collide in the same cache sets; as a result, a cache line allocated by one VM cannot evict a cache line previously allocated by another VM. By partitioning the cache into different colors, this technique can, to some extent, protect shared caches against cache-timing side-channel attacks; however, it strongly depends on architectural and platform parameters such as the cache size, the number of ways, and the page size granularity used to configure the virtual address space. The L1 cache is typically small and private to each pCPU, while the L2 cache is typically larger and, as the last-level cache, shared among several pCPUs. Colors could be assigned to sets of VMs based on their criticality level. For example, if the hardware allows up to four colors, one color could be shared by a set of noncritical VMs, another assigned to a real-time VM for deterministic behavior, and the remaining two assigned to security- and performance-critical VMs that require increased cache utilization and, at the same time, isolation against side-channel attacks.
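The first use case of a configurable VM address space, presenting fragmented physical memory to a VM as one contiguous range, can be illustrated with a region table. With hardware-assisted virtualization, this mapping would normally be held in stage-2 page tables; the C sketch below uses a flat list of regions instead, and `struct gpa_region` and `gpa_to_hpa` are hypothetical names. Guest addresses with no entry are simply not visible to the VM, which corresponds to use case (ii).

```c
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical mapping of a guest-physical range onto host-physical memory. */
struct gpa_region {
    uint64_t guest_base; /* start of the range as seen by the VM */
    uint64_t host_base;  /* start of the backing physical memory */
    uint64_t size;       /* length in bytes */
};

/*
 * Translate a guest-physical address to a host-physical address.
 * Returns false if no region covers the address, i.e., the memory or
 * device behind it is hidden from the VM.
 */
bool gpa_to_hpa(const struct gpa_region *map, size_t n,
                uint64_t gpa, uint64_t *hpa)
{
    for (size_t i = 0; i < n; i++) {
        if (gpa >= map[i].guest_base && gpa - map[i].guest_base < map[i].size) {
            *hpa = map[i].host_base + (gpa - map[i].guest_base);
            return true;
        }
    }
    return false;
}
```

For example, a 2 MiB guest range starting at the illustrative address 0x40000000 could be backed by two non-adjacent 1 MiB host regions at 0x80000000 and 0x90100000; the VM sees one contiguous block and needs no relocation.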
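When no IOMMU is available, requirement (i) of device memory isolation has to be enforced by software mediation: the virtualization layer intercepts the device's DMA configuration and checks the requested buffer against the memory owned by the device's VM. The C sketch below shows only that bounds check; the region type and function name are hypothetical, and a real mediator would also have to translate addresses and decode the device-specific register interface.

```c
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical host-physical memory region owned by a VM. */
struct vm_mem_region {
    uint64_t base;
    uint64_t size;
};

/*
 * Accept a DMA request for [addr, addr + len) only if the whole buffer
 * lies inside one region owned by the VM the device is assigned to.
 */
bool dma_request_allowed(const struct vm_mem_region *owned, size_t n,
                         uint64_t addr, uint64_t len)
{
    if (len == 0)
        return false;
    for (size_t i = 0; i < n; i++) {
        if (addr < owned[i].base)
            continue;
        uint64_t off = addr - owned[i].base;
        /* compare against the remaining size to avoid overflow in addr + len */
        if (off < owned[i].size && len <= owned[i].size - off)
            return true;
    }
    return false;
}
```

Checking the remaining size instead of computing `addr + len` matters here because the buffer address and length come from a potentially untrusted VM and could be chosen to wrap around.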
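The number of available colors and the color of each frame follow from the cache geometry. For a physically indexed, set-associative cache whose set-index bits extend above the page offset, the number of colors is the size of one way divided by the page size, and a frame's color is its page frame number modulo that count. The C sketch below applies this rule; the function names and the 32-color limit of the mask are assumptions for illustration.

```c
#include <stdbool.h>
#include <stdint.h>

/* Colors = (cache size / ways) / page size; 1 if a way is not larger than a page. */
unsigned num_colors(uint64_t cache_size, unsigned ways, uint64_t page_size)
{
    uint64_t way_size = cache_size / ways;
    uint64_t colors = way_size / page_size;
    return colors > 0 ? (unsigned)colors : 1u;
}

/* Color of the physical page containing paddr. */
unsigned page_color(uint64_t paddr, uint64_t page_size, unsigned colors)
{
    return (unsigned)((paddr / page_size) % colors);
}

/* A VM may only receive frames whose color bit is set in its mask. */
bool frame_allowed(uint64_t paddr, uint64_t page_size, unsigned colors,
                   uint32_t vm_color_mask)
{
    unsigned color = page_color(paddr, page_size, colors);
    if (color >= 32) /* this sketch's mask covers at most 32 colors */
        return false;
    return ((vm_color_mask >> color) & 1u) != 0;
}
```

For instance, an illustrative 256 KiB, 16-way cache with 4 KiB pages yields 256 KiB / 16 / 4 KiB = 4 colors, matching the four-color example above. A VMM could then give the noncritical VMs the mask `0x1`, the real-time VM `0x2`, and the two critical VMs `0x4` and `0x8`, so that their frames never share cache sets.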

4.2.4. Hypervisor-Agnostic I/O Virtualization and Its Derivations

- VirtIO can be used to interface VMs with host device drivers. A standard VMM could support VirtIO driver back-ends and front-ends on top of seL4. VirtIO interfaces can be connected to open-source technologies such as QEMU, crosvm, and Firecracker, among others. In this scenario, these open-source technologies execute in the user space of a VM other than the one using the device itself. This approach helps achieve reusability, portability, and scalability. Figure 5 shows this approach for a VirtIO Net scenario in which a guest VM consumes the services provided by a back-end host VM.

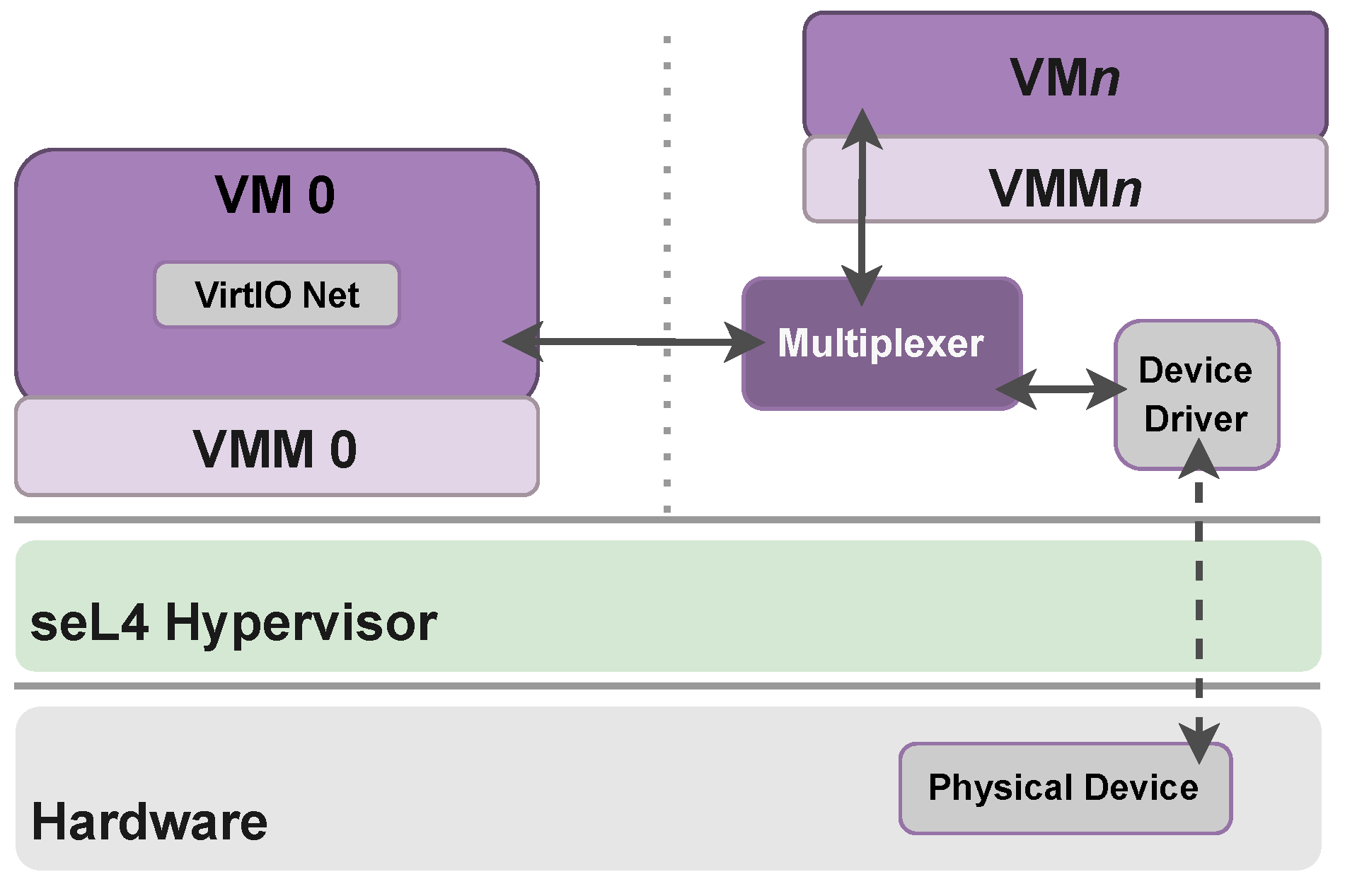
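VirtIO front-ends and back-ends exchange buffers through virtqueues. The C sketch below shows how a back-end, such as the host VM in the VirtIO Net scenario above, might walk a chain of split-virtqueue descriptors. The `virtq_desc` layout and the `NEXT`/`WRITE` flag values follow the VirtIO 1.1 specification; `walk_chain` is a hypothetical helper, indirect descriptors are not handled, and a little-endian host is assumed, so no byte swapping is done.

```c
#include <stdbool.h>
#include <stdint.h>

/* Split-virtqueue descriptor layout (VirtIO 1.1); fields are little-endian. */
struct virtq_desc {
    uint64_t addr;  /* guest-physical buffer address */
    uint32_t len;   /* buffer length in bytes */
    uint16_t flags;
    uint16_t next;  /* index of the next descriptor if F_NEXT is set */
};

#define VIRTQ_DESC_F_NEXT  1 /* chain continues via 'next' */
#define VIRTQ_DESC_F_WRITE 2 /* buffer is device-writable */

/*
 * Walk the chain starting at 'head' and total the bytes the device may
 * read (driver-to-device) and write (device-to-driver). Returns false on
 * a malformed chain: an out-of-range index or more than qsize entries.
 */
bool walk_chain(const struct virtq_desc *table, uint16_t qsize, uint16_t head,
                uint64_t *readable, uint64_t *writable)
{
    uint16_t idx = head;
    *readable = 0;
    *writable = 0;
    for (uint16_t steps = 0; steps < qsize; steps++) {
        if (idx >= qsize)
            return false;
        const struct virtq_desc *d = &table[idx];
        if (d->flags & VIRTQ_DESC_F_WRITE)
            *writable += d->len;
        else
            *readable += d->len;
        if (!(d->flags & VIRTQ_DESC_F_NEXT))
            return true;
        idx = d->next;
    }
    return false; /* longer than the queue itself: the chain loops */
}
```

Bounding the walk by the queue size matters because the front-end VM is not trusted: a malformed or malicious chain could otherwise keep the back-end looping indefinitely.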

- VirtIO interfaces can also be connected to formally verified native device drivers, which increases the security of the whole system. Moreover, a verified device driver can be multiplexed, switching device access between multiple clients. The multiplexer is transparent to native clients, as it uses the same protocol that (native) clients use to access an exclusively owned device. Figure 6 shows device virtualization through a multiplexer. In this example, each device has a single driver, encapsulated either in a native component or in a virtual machine, and is multiplexed securely between clients (https://trustworthy.systems/projects/TS/drivers/, accessed on 6 November 2022).
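The multiplexer idea can be sketched as a component that owns the single driver and serves per-client request queues in turn. In the hypothetical C sketch below, `struct request` stands for the same request format a client would use with an exclusively owned device, which is what makes the multiplexer transparent; the round-robin policy, queue sizes, and all names are assumptions for illustration, not the design of the verified drivers referenced above.

```c
#include <stdbool.h>
#include <stddef.h>

#define MAX_CLIENTS 4
#define QUEUE_LEN   8

/* Hypothetical request format, identical for exclusive and shared access. */
struct request {
    int op;
    int arg;
};

/* Per-client FIFO of pending requests. */
struct client_queue {
    struct request reqs[QUEUE_LEN];
    size_t head, count;
};

struct mux {
    struct client_queue clients[MAX_CLIENTS];
    size_t next_client; /* round-robin cursor */
};

/* Client side: enqueue a request; returns false if the client's queue is full. */
bool client_submit(struct mux *m, size_t c, struct request r)
{
    struct client_queue *q = &m->clients[c];
    if (q->count == QUEUE_LEN)
        return false;
    q->reqs[(q->head + q->count) % QUEUE_LEN] = r;
    q->count++;
    return true;
}

/*
 * Pick the next request for the single driver, visiting clients in
 * round-robin order so no client can monopolize the device. *client
 * receives the owner so the completion can be routed back to it.
 */
bool mux_next(struct mux *m, struct request *out, size_t *client)
{
    for (size_t i = 0; i < MAX_CLIENTS; i++) {
        size_t c = (m->next_client + i) % MAX_CLIENTS;
        struct client_queue *q = &m->clients[c];
        if (q->count > 0) {
            *out = q->reqs[q->head];
            q->head = (q->head + 1) % QUEUE_LEN;
            q->count--;
            *client = c;
            m->next_client = (c + 1) % MAX_CLIENTS;
            return true;
        }
    }
    return false;
}
```

If client 0 submits two requests and client 2 submits one, the driver sees them in the order client 0, client 2, client 0, rather than draining client 0 first.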

5. Discussion Topics

5.1. VMM API

5.2. Formal Methods

6. Conclusions

Author Contributions

Funding

Conflicts of Interest


Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Matos, E.d.; Ahvenjärvi, M. seL4 Microkernel for Virtualization Use-Cases: Potential Directions towards a Standard VMM. Electronics 2022, 11, 4201. https://doi.org/10.3390/electronics11244201
