FPSNET: An Architecture for Neural-Network-Based Feature Point Extraction for SLAM

Abstract

1. Introduction

- A new convolution layer data flow is implemented to manage the streaming data using row-based feature processing.

- A separate state controller (SC) and a bus arbiter and decoder module are deployed.

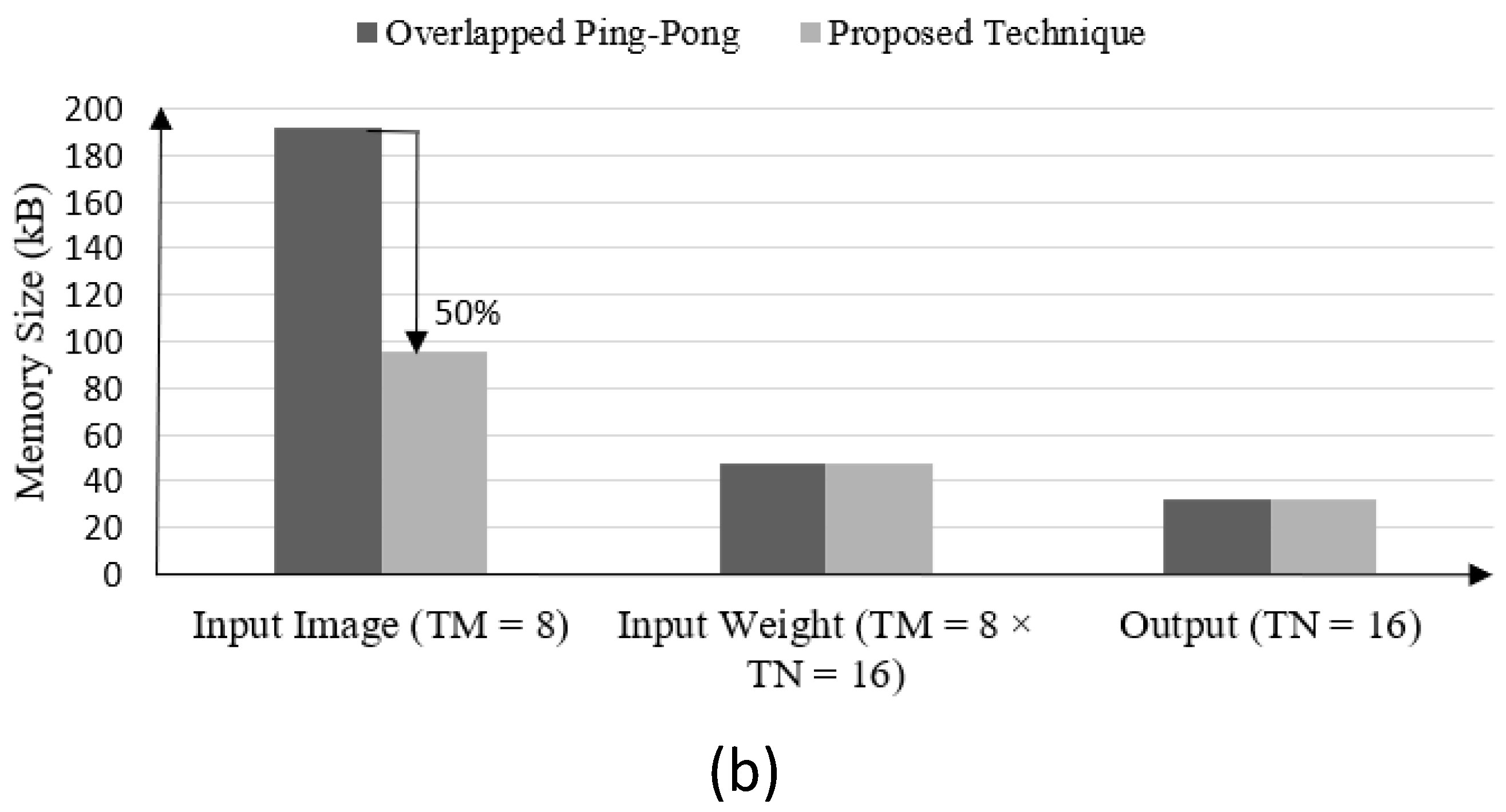

- A multi-bank memory system employing feature and synapse parallelism is designed to meet the data access demand, which avoids the traditional ping-pong buffer.

- Hardware implementation of the ReLU activation function is optimized.

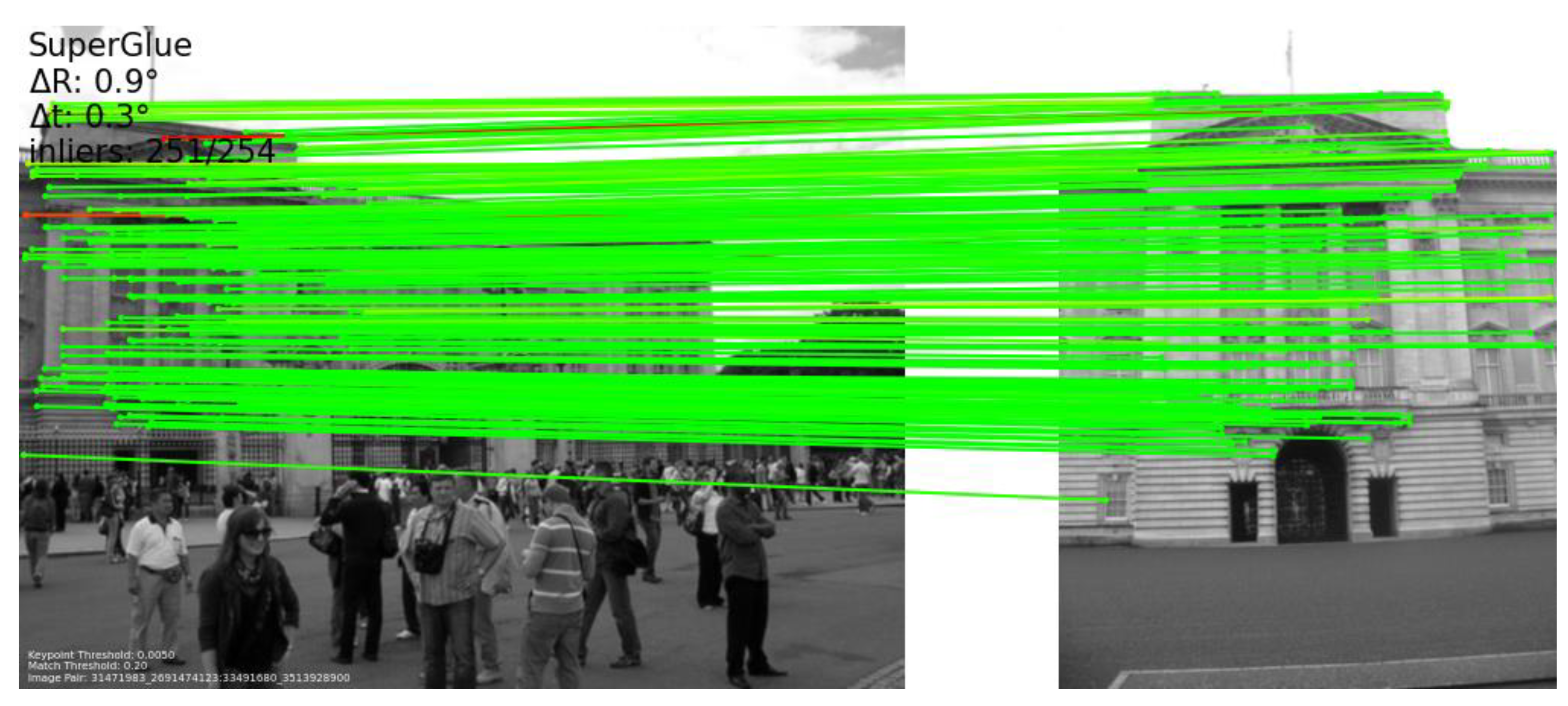

2. SLAM Based on SuperPoint Neural Network

2.1. CNN-Based SLAM

2.2. SuperPoint Architecture

2.3. Parallelism in Convolutional Neural Network

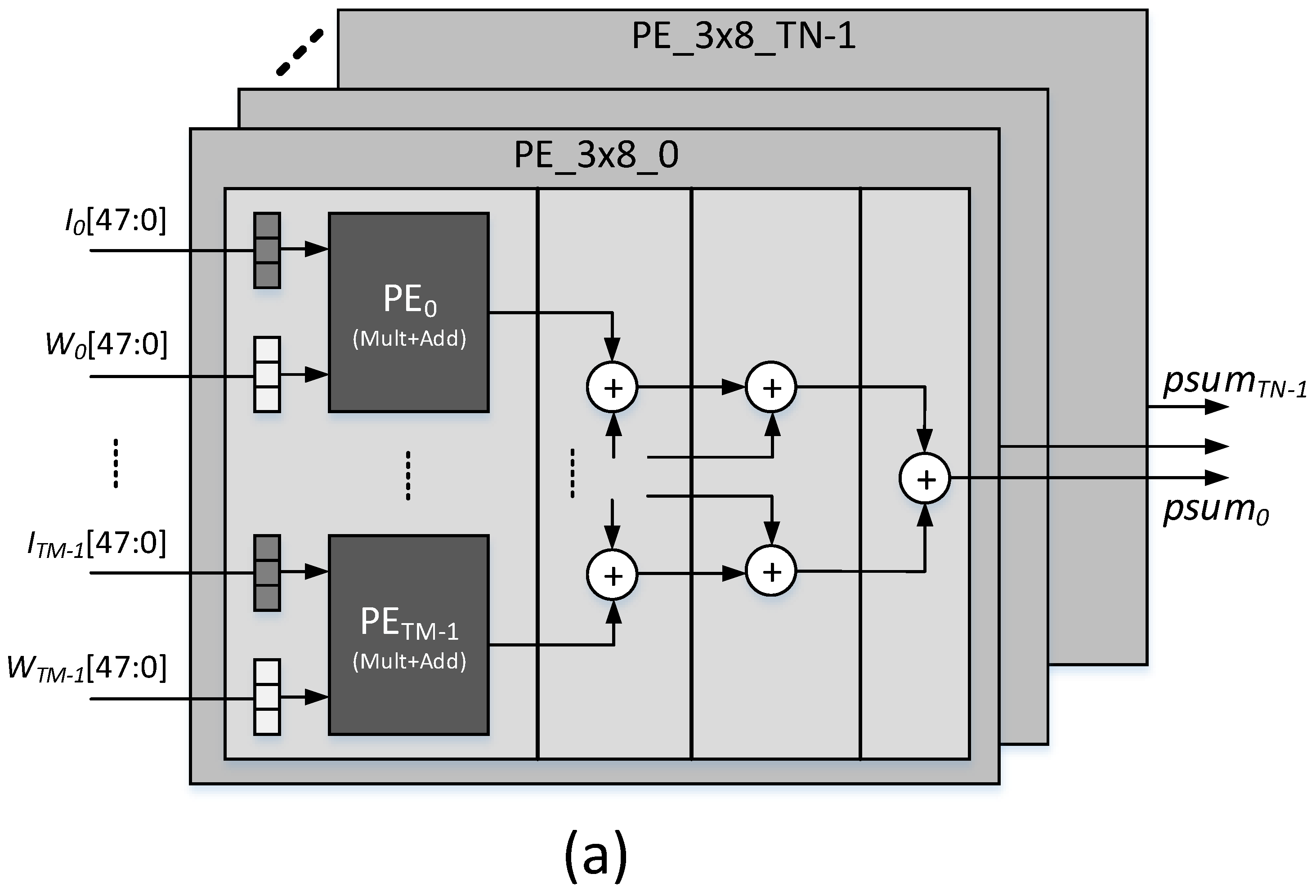

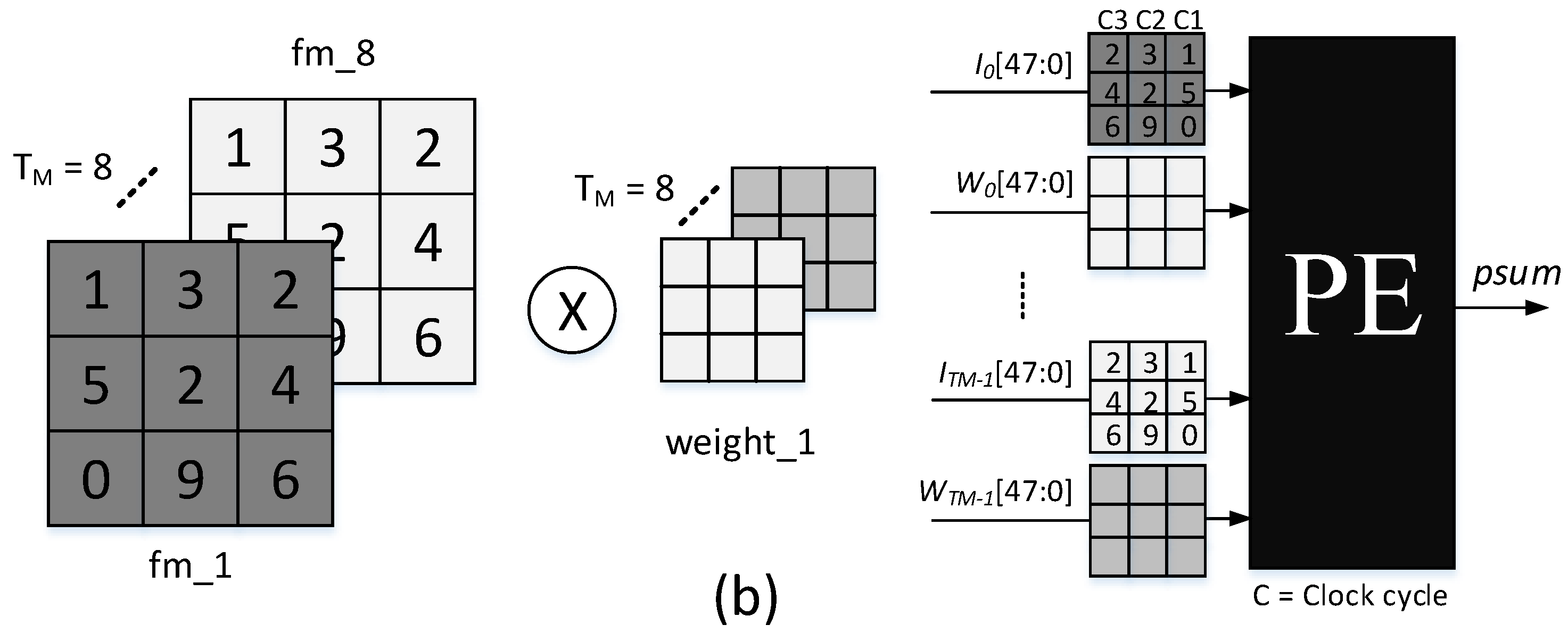

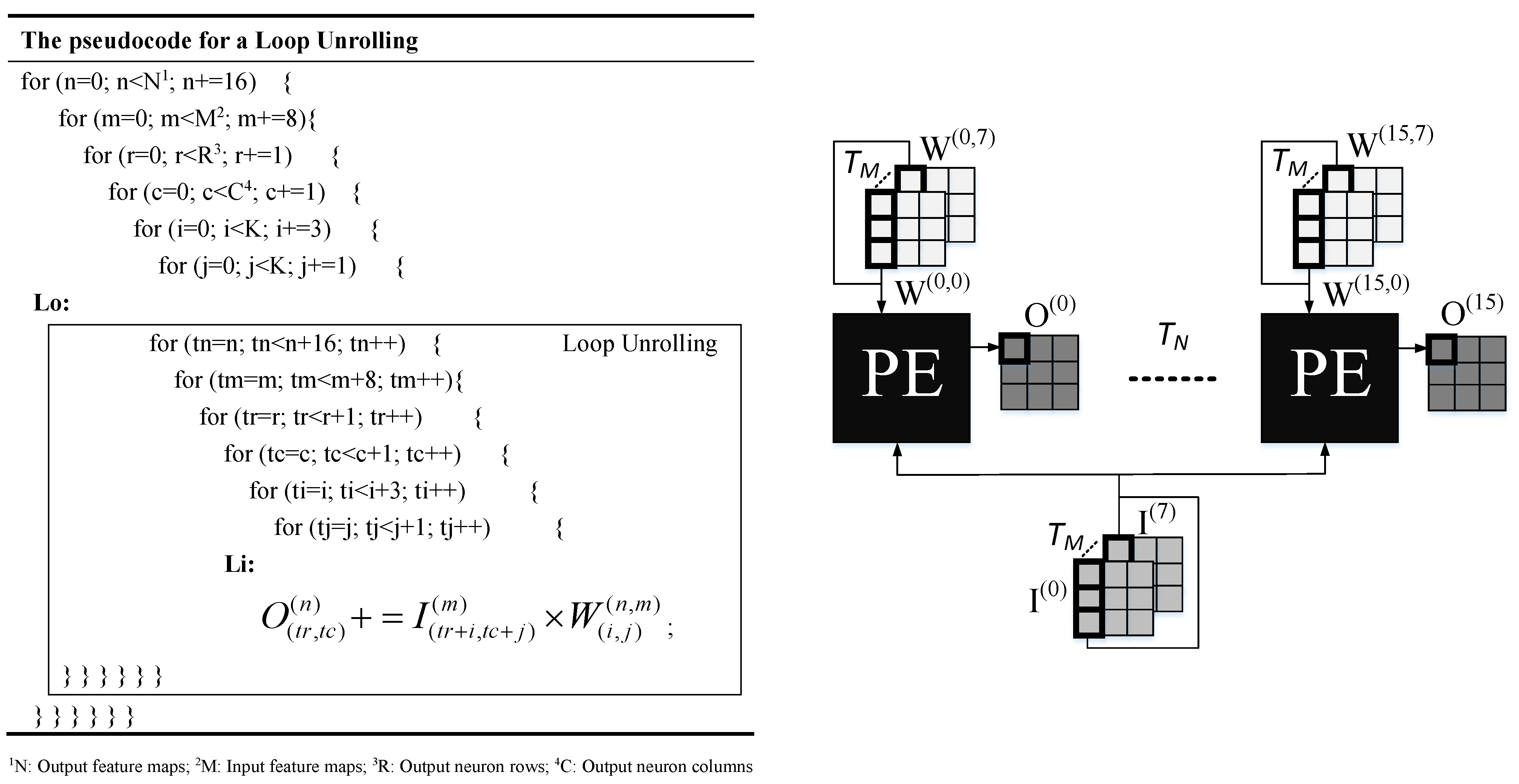

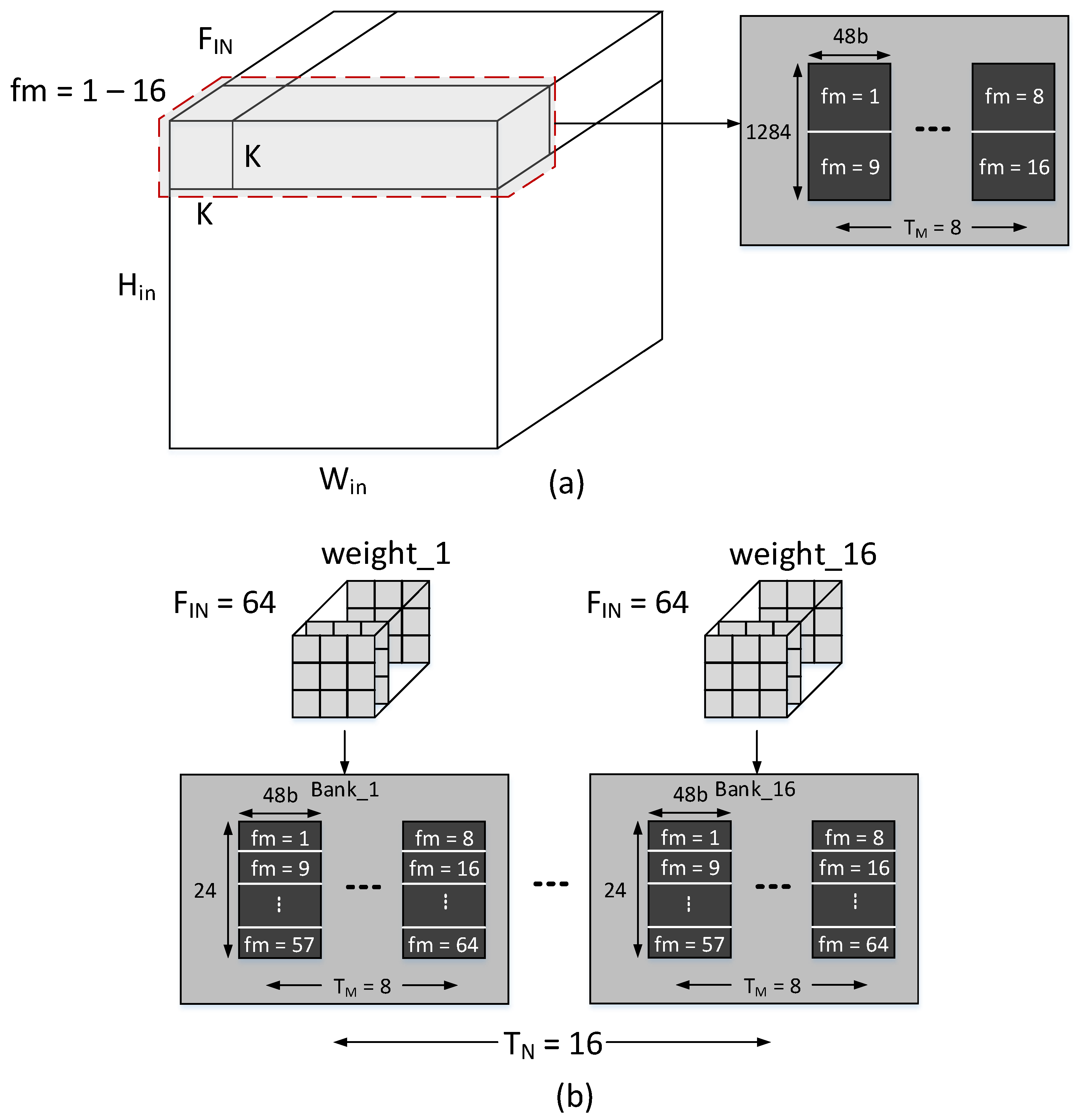

- The loops over feature maps can be unrolled by two factors, one for the input and one for the output feature maps: several input feature maps are processed at the same time while several output feature maps are generated. This is called feature map parallelism (FP).

- The loops over neurons are also unrolled, so that several neurons of one output feature map are processed at the same time. This is called neuron parallelism (NP).

- Synapse-related loops are also unrolled, so that several synapses of one kernel are computed in one processing step. This is called synapse parallelism (SP).
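
The three kinds of unrolling listed above can be illustrated with a small software model. The following is a minimal sketch, not the FPSNET data path: the tile factors `t_in`, `t_out`, `t_neuron` and `t_syn` are hypothetical names, stride 1 without padding is assumed, and the innermost four loops stand in for work that parallel hardware would perform in one step.

```python
def conv2d_reference(x, w):
    """Direct convolution (stride 1, no padding).

    x[c_in][row][col] is the input, w[c_out][c_in][k_row][k_col] the kernels.
    """
    c_in, h, wd = len(x), len(x[0]), len(x[0][0])
    c_out, k = len(w), len(w[0][0])
    oh, ow = h - k + 1, wd - k + 1
    y = [[[0] * ow for _ in range(oh)] for _ in range(c_out)]
    for co in range(c_out):
        for r in range(oh):
            for c in range(ow):
                for ci in range(c_in):
                    for i in range(k):
                        for j in range(k):
                            y[co][r][c] += x[ci][r + i][c + j] * w[co][ci][i][j]
    return y


def conv2d_tiled(x, w, t_in=2, t_out=2, t_neuron=2, t_syn=3):
    """Same result, with the loops split into tiles (all factors are assumed)."""
    c_in, h, wd = len(x), len(x[0]), len(x[0][0])
    c_out, k = len(w), len(w[0][0])
    oh, ow = h - k + 1, wd - k + 1
    y = [[[0] * ow for _ in range(oh)] for _ in range(c_out)]
    for co0 in range(0, c_out, t_out):
        for ci0 in range(0, c_in, t_in):
            for r in range(oh):
                for c0 in range(0, ow, t_neuron):
                    for i in range(k):
                        for j0 in range(0, k, t_syn):
                            # The four loops below model one parallel step.
                            for co in range(co0, min(co0 + t_out, c_out)):          # FP (output maps)
                                for ci in range(ci0, min(ci0 + t_in, c_in)):        # FP (input maps)
                                    for c in range(c0, min(c0 + t_neuron, ow)):     # NP
                                        for j in range(j0, min(j0 + t_syn, k)):     # SP
                                            y[co][r][c] += x[ci][r + i][c + j] * w[co][ci][i][j]
    return y


# Small integer test case: 3 input maps of 5x6, 4 output maps, 3x3 kernels.
x = [[[(ci + r * c) % 5 for c in range(6)] for r in range(5)] for ci in range(3)]
w = [[[[(co + ci + i - j) % 3 for j in range(3)] for i in range(3)]
      for ci in range(3)] for co in range(4)]
print(conv2d_tiled(x, w) == conv2d_reference(x, w))  # → True
```

Because the tiles partition every loop range exactly once, the tiled version accumulates the same products as the reference; only the order of accumulation changes.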

3. FPSNET Architecture and Data Path

3.1. Data Path Optimization for Pipelined Architecture

3.2. Architecture Overview

3.3. Configuration Parameters

3.4. Arbiter and Decoder

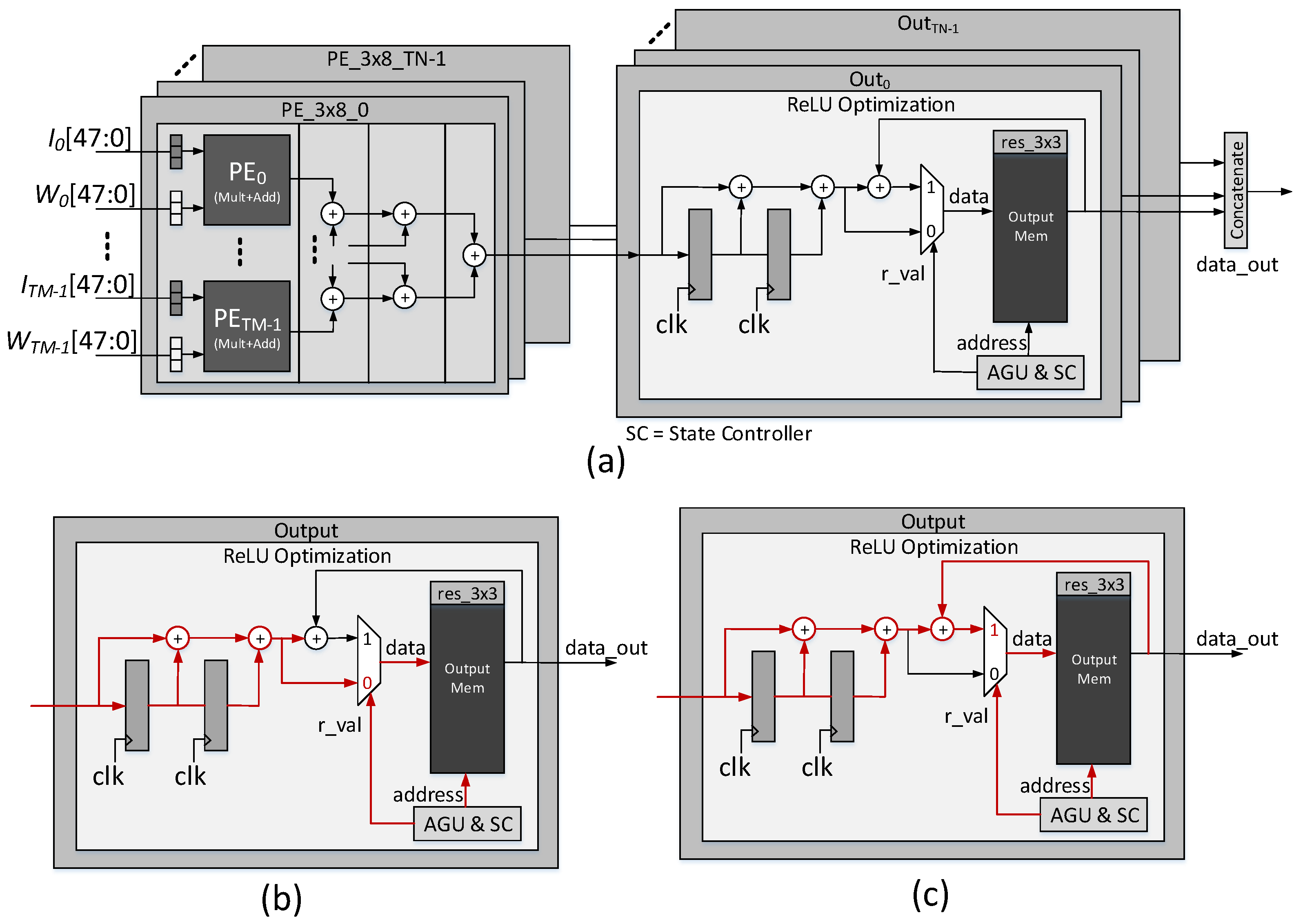

3.5. Processing Element (PE) Design

3.6. On-Chip Memory Architecture

4. Proposed Optimization Techniques

4.1. Data Arrangement in Memories

4.2. Address Generation Unit (AGU)

| Algorithm 1 | AGU for input image reading | |
|---|---|---|
| Require: | … | |
| Require: | … : stride | |
| 1: | … | |
| 2: | … | |
| 3: | while … do | ▹ Valid signal from SC |
| 4: | … | |
| 5: | if … then | |
| 6: | … | |
| 7: | end if | |
| 8: | end while | |
| 9: | for … do | |
| 10: | if … then | |
| 11: | … | |
| 12: | else | |
| 13: | … | |
| 14: | end if | |
| 15: | end for | |
| 16: | … | |

| Algorithm 2 | AGU for input weights reading |
|---|---|
| Require: | … |
| Require: | … : Iteration Number |
| Require: | … : Feature Count |
| 1: | … |
| 2: | … |
| 3: | … |
| 4: | while … do |
| 5: | … |
| 6: | if … then |
| 7: | … |
| 8: | end if |
| 9: | end while |
| 10: | for … do |
| 11: | if … then |
| 12: | … |
| 13: | … |
| 14: | else if … then |
| 15: | … |
| 16: | … |
| 17: | end if |
| 18: | end for |
| 19: | … |

4.3. Memory Optimization

4.4. ReLU Hardware Optimization

5. ASIC Implementation and Results

5.1. ASIC Design

5.2. Network Architecture

5.3. ReLU Optimization and Simulation

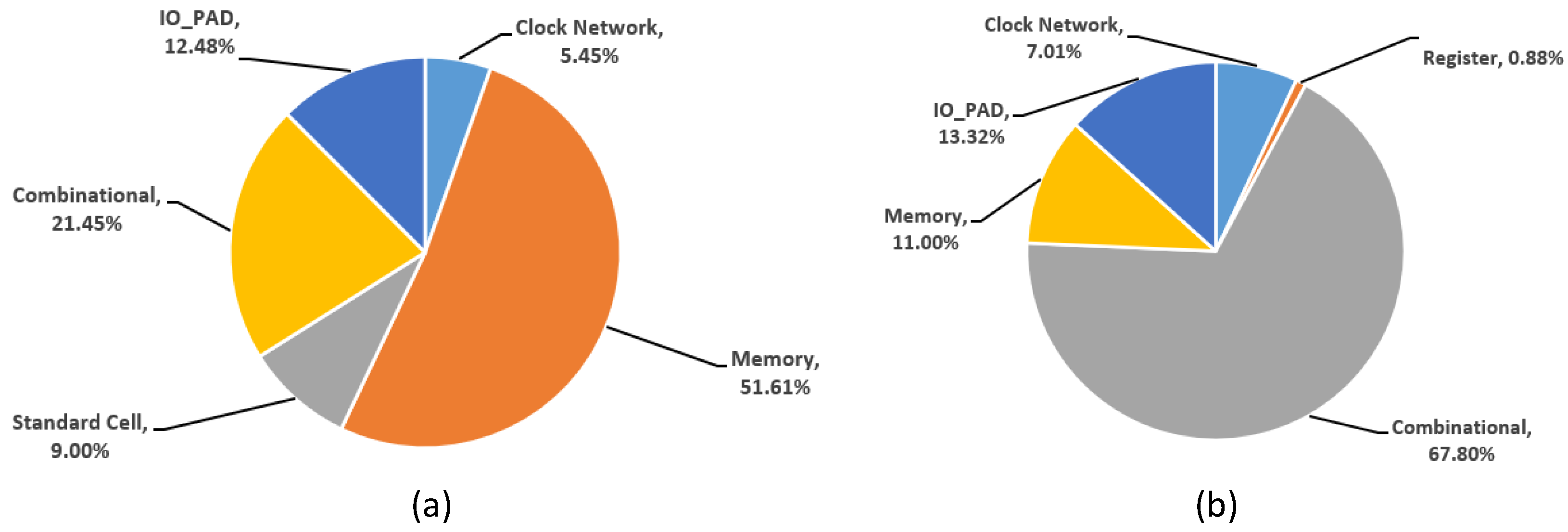

5.4. Area and Power of PE Unit

5.5. Input Image and Weight Memories

5.6. Reusability of Data

5.7. Implementation Results and Comparison

5.7.1. Overall Chip Area and Power Consumption

5.7.2. Comparison with Other ASIC Designs

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. Gao, X.; Zhang, T. Introduction to Visual SLAM: From Theory to Practice; Springer Nature: Singapore, 2021.

2. Chen, W.; Shang, G.; Ji, A.; Zhou, C.; Wang, X.; Xu, C.; Li, Z.; Hu, K. An Overview on Visual SLAM: From Tradition to Semantic. Remote Sens. 2022, 14, 3010.

3. Li, D.; Shi, X.; Long, Q.; Liu, S.; Yang, W.; Wang, F.; Wei, Q.; Qiao, F. DXSLAM: A Robust and Efficient Visual SLAM System with Deep Features. arXiv 2020, arXiv:2008.05416.

4. Bay, H.; Tuytelaars, T.; Van Gool, L. SURF: Speeded Up Robust Features. In Proceedings of the Computer Vision—ECCV 2006, Graz, Austria, 7–13 May 2006; Leonardis, A., Bischof, H., Pinz, A., Eds.; Springer: Berlin/Heidelberg, Germany, 2006; pp. 404–417.

5. Rublee, E.; Rabaud, V.; Konolige, K.; Bradski, G. ORB: An efficient alternative to SIFT or SURF. In Proceedings of the 2011 International Conference on Computer Vision, Barcelona, Spain, 6–13 November 2011; pp. 2564–2571.

6. Lowe, D. Object recognition from local scale-invariant features. In Proceedings of the Seventh IEEE International Conference on Computer Vision, Corfu, Greece, 20–27 September 1999; Volume 2, pp. 1150–1157.

7. DeTone, D.; Malisiewicz, T.; Rabinovich, A. SuperPoint: Self-Supervised Interest Point Detection and Description. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), Salt Lake City, UT, USA, 18–22 June 2018; pp. 337–33712.

8. Simo-Serra, E.; Trulls, E.; Ferraz, L.; Kokkinos, I.; Fua, P.; Moreno-Noguer, F. Discriminative Learning of Deep Convolutional Feature Point Descriptors. In Proceedings of the 2015 IEEE International Conference on Computer Vision (ICCV), Santiago, Chile, 7–13 December 2015; pp. 118–126.

9. Radenović, F.; Tolias, G.; Chum, O. Fine-Tuning CNN Image Retrieval with No Human Annotation. IEEE Trans. Pattern Anal. Mach. Intell. 2019, 41, 1655–1668.

10. Zhang, W.; Jiang, Y.; Din Farrukh, F.U.; Zhang, C.; Xie, X. A Portable Accelerator of Proximal Policy Optimization for Robots. In Proceedings of the 2021 IEEE International Conference on Integrated Circuits, Technologies and Applications (ICTA), Zhuhai, China, 24–26 November 2021; pp. 171–172.

11. Dong, P.; Li, Z.; Chen, Z.; Yao, R.; Deng, H.; Zhang, W.; Zhang, Y.; Chen, L.; Wang, C.; An, F. A 139 fps pixel-level pipelined binocular stereo vision accelerator with region-optimized semi-global matching. In Proceedings of the 2021 IEEE Asian Solid-State Circuits Conference (A-SSCC), Busan, Korea, 7–10 November 2021; pp. 1–3.

12. Yu, J.; Xu, Z.; Zeng, S.; Yu, C.; Qiu, J.; Shen, C.; Xu, Y.; Dai, G.; Wang, Y.; Yang, H. INCA: INterruptible CNN Accelerator for Multi-tasking in Embedded Robots. In Proceedings of the 2020 57th ACM/IEEE Design Automation Conference (DAC), San Francisco, CA, USA, 20–24 July 2020; pp. 1–6.

13. Xu, Z.; Yu, J.; Yu, C.; Shen, H.; Wang, Y.; Yang, H. CNN-based Feature-point Extraction for Real-time Visual SLAM on Embedded FPGA. In Proceedings of the 2020 IEEE 28th Annual International Symposium on Field-Programmable Custom Computing Machines (FCCM), Fayetteville, AR, USA, 3–6 May 2020; pp. 33–37.

14. Liu, Y.; Li, J.; Huang, K.; Li, X.; Qi, X.; Chang, L.; Long, Y.; Zhou, J. MobileSP: An FPGA-Based Real-Time Keypoint Extraction Hardware Accelerator for Mobile VSLAM. IEEE Trans. Circuits Syst. I Regul. Pap. 2022, 69, 3190300.

15. Sun, H.; Deng, Q.; Liu, X.; Shu, Y.; Ha, Y. An Energy-Efficient Stream-Based FPGA Implementation of Feature Extraction Algorithm for LiDAR Point Clouds With Effective Local-Search. IEEE Trans. Circuits Syst. I Regul. Pap. 2022, 3212075.

16. Parashar, A.; Rhu, M.; Mukkara, A.; Puglielli, A.; Venkatesan, R.; Khailany, B.; Emer, J.; Keckler, S.W.; Dally, W.J. SCNN: An accelerator for compressed-sparse convolutional neural networks. In Proceedings of the 2017 ACM/IEEE 44th Annual International Symposium on Computer Architecture (ISCA), Toronto, ON, Canada, 24–28 June 2017; pp. 27–40.

17. Li, J.; Jiang, S.; Gong, S.; Wu, J.; Yan, J.; Yan, G.; Li, X. SqueezeFlow: A Sparse CNN Accelerator Exploiting Concise Convolution Rules. IEEE Trans. Comput. 2019, 68, 1663–1677.

18. Lee, J.; Kim, C.; Kang, S.; Shin, D.; Kim, S.; Yoo, H.J. UNPU: An Energy-Efficient Deep Neural Network Accelerator With Fully Variable Weight Bit Precision. IEEE J. Solid-State Circuits 2019, 54, 173–185.

19. Lu, W.; Yan, G.; Li, J.; Gong, S.; Han, Y.; Li, X. FlexFlow: A Flexible Dataflow Accelerator Architecture for Convolutional Neural Networks. In Proceedings of the 2017 IEEE International Symposium on High Performance Computer Architecture (HPCA), Austin, TX, USA, 4–8 February 2017; pp. 553–564.

20. Shin, D.; Lee, J.; Lee, J.; Lee, J.; Yoo, H.J. DNPU: An Energy-Efficient Deep-Learning Processor with Heterogeneous Multi-Core Architecture. IEEE Micro 2018, 38, 85–93.

21. Yin, S.; Ouyang, P.; Tang, S.; Tu, F.; Li, X.; Zheng, S.; Lu, T.; Gu, J.; Liu, L.; Wei, S. A High Energy Efficient Reconfigurable Hybrid Neural Network Processor for Deep Learning Applications. IEEE J. Solid-State Circuits 2018, 53, 968–982.

22. Chen, Y.H.; Yang, T.J.; Emer, J.; Sze, V. Eyeriss v2: A Flexible Accelerator for Emerging Deep Neural Networks on Mobile Devices. IEEE J. Emerg. Sel. Top. Circuits Syst. 2019, 9, 292–308.

23. Nguyen, D.T.; Nguyen, T.N.; Kim, H.; Lee, H.J. A High-Throughput and Power-Efficient FPGA Implementation of YOLO CNN for Object Detection. IEEE Trans. Very Large Scale Integr. (VLSI) Syst. 2019, 27, 1861–1873.

24. Zhang, C.; Li, P.; Sun, G.; Guan, Y.; Xiao, B.; Cong, J. Optimizing FPGA-based Accelerator Design for Deep Convolutional Neural Networks. In Proceedings of the 2015 ACM/SIGDA International Symposium on Field-Programmable Gate Arrays, Monterey, CA, USA, 22–24 February 2015.

25. Sun, F.; Wang, C.; Gong, L.; Xu, C.; Zhang, Y.; Lu, Y.; Li, X.; Zhou, X. A High-Performance Accelerator for Large-Scale Convolutional Neural Networks. In Proceedings of the 2017 IEEE International Symposium on Parallel and Distributed Processing with Applications and 2017 IEEE International Conference on Ubiquitous Computing and Communications (ISPA/IUCC), Guangzhou, China, 12–15 December 2017; pp. 622–629.

26. Farrukh, F.U.D.; Zhang, C.; Jiang, Y.; Zhang, Z.; Wang, Z.; Wang, Z.; Jiang, H. Power Efficient Tiny Yolo CNN Using Reduced Hardware Resources Based on Booth Multiplier and WALLACE Tree Adders. IEEE Open J. Circuits Syst. 2020, 1, 76–87.

27. Li, H.; Fan, X.; Jiao, L.; Cao, W.; Zhou, X.; Wang, L. A high performance FPGA-based accelerator for large-scale convolutional neural networks. In Proceedings of the 2016 26th International Conference on Field Programmable Logic and Applications (FPL), Lausanne, Switzerland, 29 August–2 September 2016; pp. 1–9.

28. Jokic, P.; Emery, S.; Benini, L. Improving Memory Utilization in Convolutional Neural Network Accelerators. IEEE Embed. Syst. Lett. 2021, 13, 77–80.

29. Karl, E.; Wang, Y.; Ng, Y.G.; Guo, Z.; Hamzaoglu, F.; Meterelliyoz, M.; Keane, J.; Bhattacharya, U.; Zhang, K.; Mistry, K.; et al. A 4.6 GHz 162 Mb SRAM Design in 22 nm Tri-Gate CMOS Technology With Integrated Read and Write Assist Circuitry. IEEE J. Solid-State Circuits 2013, 48, 150–158.

30. Horowitz, M. 1.1 Computing’s energy problem (and what we can do about it). In Proceedings of the 2014 IEEE International Solid-State Circuits Conference Digest of Technical Papers (ISSCC), San Francisco, CA, USA, 9–13 February 2014; pp. 10–14.

31. Yang, X.S.; Pu, J.; Rister, B.; Bhagdikar, N.; Richardson, S.; Kvatinsky, S.; Ragan-Kelley, J.; Pedram, A.; Horowitz, M. A Systematic Approach to Blocking Convolutional Neural Networks. arXiv 2016, arXiv:1606.04209.

32. Yuan, Z.; Liu, Y.; Yue, J.; Yang, Y.; Wang, J.; Feng, X.; Zhao, J.; Li, X.; Yang, H. STICKER: An Energy-Efficient Multi-Sparsity Compatible Accelerator for Convolutional Neural Networks in 65-nm CMOS. IEEE J. Solid-State Circuits 2020, 55, 465–477.

33. Mur-Artal, R.; Montiel, J.M.M.; Tardós, J.D. ORB-SLAM: A Versatile and Accurate Monocular SLAM System. IEEE Trans. Robot. 2015, 31, 1147–1163.

34. Engel, J.; Schöps, T.; Cremers, D. LSD-SLAM: Large-Scale Direct Monocular SLAM. In Proceedings of the Computer Vision—ECCV 2014, Zurich, Switzerland, 6–12 September 2014; Fleet, D., Pajdla, T., Schiele, B., Tuytelaars, T., Eds.; Springer International Publishing: Cham, Switzerland, 2014; pp. 834–849.

35. Kang, R.; Shi, J.; Li, X.; Liu, Y.; Liu, X. DF-SLAM: A Deep-Learning Enhanced Visual SLAM System based on Deep Local Features. arXiv 2019, arXiv:1901.07223.

36. Simonyan, K.; Zisserman, A. Very Deep Convolutional Networks for Large-Scale Image Recognition. arXiv 2014, arXiv:1409.1556.

37. Qiu, J.; Wang, J.; Yao, S.; Guo, K.; Li, B.; Zhou, E.; Yu, J.; Tang, T.; Xu, N.; Song, S.; et al. Going Deeper with Embedded FPGA Platform for Convolutional Neural Network. In Proceedings of the 2016 ACM/SIGDA International Symposium on Field-Programmable Gate Arrays, Association for Computing Machinery (FPGA ’16), New York, NY, USA, 21–23 February 2016; pp. 26–35.

38. Shen, Y.; Ferdman, M.; Milder, P. Maximizing CNN Accelerator Efficiency Through Resource Partitioning. In Proceedings of the 44th Annual International Symposium on Computer Architecture, Association for Computing Machinery (ISCA ’17), New York, NY, USA, 24–28 June 2017; pp. 535–547.

39. Lu, L.; Liang, Y.; Xiao, Q.; Yan, S. Evaluating Fast Algorithms for Convolutional Neural Networks on FPGAs. In Proceedings of the 2017 IEEE 25th Annual International Symposium on Field-Programmable Custom Computing Machines (FCCM), Napa, CA, USA, 30 April–2 May 2017; pp. 101–108.

40. Liang, S.; Yin, S.; Liu, L.; Luk, W.; Wei, S. FP-BNN: Binarized neural network on FPGA. Neurocomputing 2018, 275, 1072–1086.

41. Chen, Y.H.; Krishna, T.; Emer, J.S.; Sze, V. Eyeriss: An Energy-Efficient Reconfigurable Accelerator for Deep Convolutional Neural Networks. IEEE J. Solid-State Circuits 2017, 52, 127–138.

42. Lin, T.; Maire, M.; Belongie, S.J.; Bourdev, L.D.; Girshick, R.B.; Hays, J.; Perona, P.; Ramanan, D.; Dollár, P.; Zitnick, C.L. Microsoft COCO: Common Objects in Context. arXiv 2014, arXiv:1405.0312.

43. Sarlin, P.E.; DeTone, D.; Malisiewicz, T.; Rabinovich, A. SuperGlue: Learning Feature Matching With Graph Neural Networks. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020; pp. 4937–4946.

44. Shin, D.; Lee, J.; Lee, J.; Yoo, H.J. 14.2 DNPU: An 8.1TOPS/W reconfigurable CNN-RNN processor for general-purpose deep neural networks. In Proceedings of the 2017 IEEE International Solid-State Circuits Conference (ISSCC), San Francisco, CA, USA, 5–9 February 2017; pp. 240–241.

45. Moons, B.; Uytterhoeven, R.; Dehaene, W.; Verhelst, M. 14.5 Envision: A 0.26-to-10TOPS/W subword-parallel dynamic-voltage-accuracy-frequency-scalable Convolutional Neural Network processor in 28 nm FDSOI. In Proceedings of the 2017 IEEE International Solid-State Circuits Conference (ISSCC), San Francisco, CA, USA, 5–9 February 2017; pp. 246–247.

| Signal Name | Register Bit(s) | Information |
|---|---|---|
| Conv | | Convolution start command |
| SpL | | Special command for 1st Conv1a input feature |
| | | Convolution layer group number |
| | | Iteration number for input features |
| | | Stored number of input features in each memory |
| ConvP | | Convolution done pulse |

| Mode | | | |
|---|---|---|---|
| Image Mem. WR | 001 | xxxx | 000-111 |
| Weights Mem. WR | 010 | 0000-1111 | 000-111 |
| Output Mem. RD | 011 | xxxx | 000-011 |
| Control Reg. WR | 100 | xxxx | xxx |
| Status Reg. RD | 101 | xxxx | xxx |
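
The mode codes in the table above can be read as a small decode table. The sketch below is illustrative only: the three-bit codes are copied from the table, while the 10-bit packing, the bit positions, and the names `field_a` and `field_b` are assumptions, since the column headers did not survive.

```python
# Mode codes copied from the table; the word layout below is hypothetical.
MODES = {
    0b001: "Image Mem. WR",
    0b010: "Weights Mem. WR",
    0b011: "Output Mem. RD",
    0b100: "Control Reg. WR",
    0b101: "Status Reg. RD",
}


def decode(word):
    """Split a 10-bit word into (mode name, 4-bit field, 3-bit field).

    Assumed layout: bits [9:7] mode, bits [6:3] field_a, bits [2:0] field_b.
    """
    if not 0 <= word < (1 << 10):
        raise ValueError("expected a 10-bit word")
    mode = (word >> 7) & 0b111
    field_a = (word >> 3) & 0b1111
    field_b = word & 0b111
    if mode not in MODES:
        raise ValueError(f"unused mode code {mode:03b}")
    return MODES[mode], field_a, field_b


print(decode(0b010_1111_111))  # → ('Weights Mem. WR', 15, 7)
```

Rejecting unused codes such as `000` mirrors the fact that the table lists only five of the eight possible mode values.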

| Conv. Layer No. | P | | | | | | | | |
|---|---|---|---|---|---|---|---|---|---|
| Conv1a | 1 | 64 | 480 | 640 | True | 1 | 642 | 1 | 24 |
| Conv1b | 64 | 64 | 480 | 640 | True | 2 | 1284 | 4 | 24 |
| Conv2a | 64 | 64 | 240 | 320 | True | 4 | 1288 | 2 | 24 |
| Conv2b | 64 | 64 | 240 | 320 | True | 4 | 1288 | 2 | 24 |
| Conv3a | 64 | 128 | 120 | 160 | True | 8 | 1296 | 1 | 48 |
| Conv3b | 128 | 128 | 120 | 160 | True | 8 | 1296 | 2 | 48 |
| Conv4a | 128 | 128 | 60 | 80 | True | 16 | 1312 | 1 | 48 |
| Conv4b | 128 | 128 | 60 | 80 | True | 16 | 1312 | 1 | 48 |
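
Most column headers of the table above were lost, but the numbers show a regular pattern that a short check makes visible. The sketch assumes that the fifth column is the feature-map width and that a one-pixel border is added on each side; under that reading, the eighth column equals the seventh column multiplied by the padded width. The function name `padded_words` and the interpretation of the columns are assumptions, not statements from the source.

```python
# (layer, width, multiplier column, listed value), copied from the table rows.
ROWS = [
    ("Conv1a", 640, 1, 642),
    ("Conv1b", 640, 2, 1284),
    ("Conv2a", 320, 4, 1288),
    ("Conv2b", 320, 4, 1288),
    ("Conv3a", 160, 8, 1296),
    ("Conv3b", 160, 8, 1296),
    ("Conv4a", 80, 16, 1312),
    ("Conv4b", 80, 16, 1312),
]


def padded_words(width, multiplier, pad=1):
    """multiplier * (width + 2 * pad); `pad` is an assumed one-pixel border."""
    return multiplier * (width + 2 * pad)


# Collect every row whose listed value does not follow the assumed pattern.
mismatches = [name for name, width, mult, listed in ROWS
              if padded_words(width, mult) != listed]
print(mismatches)  # → []
```

The multiplier doubles each time the feature-map width halves, so the product stays close to 1300 for every layer after Conv1a.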

| Quantization | AUC@5° | AUC@10° | AUC@20° | Precision | Matching Score |
|---|---|---|---|---|---|
| Baseline (32-bit floating point) | 39.50 | 59.74 | 75.95 | 98.67 | 23.85 |
| Our work (16-bit fixed point) | 36.76 | 56.84 | 73.46 | 98.54 | 33.85 |
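
For readers unfamiliar with the 16-bit fixed-point representation compared in the table above, the following is a minimal sketch of how a floating-point value can be mapped to a signed 16-bit code. The split into 8 integer and 8 fractional bits (`FRAC_BITS = 8`) is an assumption chosen for illustration; the source only states "16-bit fixed point" and does not give the format.

```python
FRAC_BITS = 8  # assumed Q8.8 split, not taken from the source


def to_fixed(value, frac_bits=FRAC_BITS):
    """Round to the nearest representable step and saturate to signed 16 bits."""
    scaled = round(value * (1 << frac_bits))
    return max(-32768, min(32767, scaled))


def to_float(code, frac_bits=FRAC_BITS):
    """Convert a signed 16-bit code back to a floating-point value."""
    return code / (1 << frac_bits)


print(to_fixed(1.5))             # → 384
print(to_float(to_fixed(0.3)))   # → 0.30078125
```

With 8 fractional bits the rounding error is at most half a step, that is 1/512, and values outside the representable range saturate instead of wrapping around.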

| Metrics | OJCAS [26] | This Work |
|---|---|---|
| Precision (bit) | 16 | 16 |
| Technology (nm) | 65 | 65 |
| Area (mm²) | 0.0739 | 0.0494 |
| Frequency (MHz) | 250 | 300 |
| No. of operations | 48 | 48 |
| No. of MACs | 24 | 24 |
| Power (mW) | 8.725 | 7.115 |

| Metrics | Eyeriss ISSCC2016 [41] | DNPU ISSCC2017 [44] | ENVISION ISSCC2017 [45] | THINKER JSSC18 [21] | STICKER JSSC19 [32] | Our Work (FPSNET) |
|---|---|---|---|---|---|---|
| Technology (nm) | 65 | 65 | 28 | 65 | 65 | 65 |
| Core Area (mm²) | 12.3 | 16.0 | 1.9 | 14.4 | 7.8 | 8.3 |
| Voltage (V) | 1.17 | 1.1 | 1.1 | 1.2 | 1.0 | 1.2 |
| On-chip SRAM (kB) | 181.5 | 290 | 144 | 348 | 170 | 176 |
| Core Frequency (MHz) | 200 | 200 | 200 | 200 | 200 | 250 |
| Number of PEs | 168 | 776 | 256 | 512 | 256 | 16 |
| Number of MACs | 168 | 768 | 512 | 1024 | 256 | 384 |
| Peak Performance (TOPS) | 0.084 @ 16b | 0.300 @ 16b | 0.076 @ 4b | 0.410 @ 8b | 0.102 @ 8b | 0.204 @ 16b |
| Bit-width (bits) | 16 | 4/8/16 | 4/8/16 | 8/16 | 8 | 16 |
| Power (mW) | 450 | 279 | 300 | 386 | 248 | 203 |
| Power Efficiency (TOPS/W) | 0.14 | 1.0 | 0.26 | 1.06 | 0.411 | 1.0 |
| Efficiency w.r.t. Area (GOPS/mm²) | 6.83 | 18.75 | 40.0 | 28.47 | 13.08 | 24.73 |
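
The last two rows of the comparison table are derived quantities. The sketch below recomputes them from the rounded table values, assuming that area efficiency is peak performance divided by core area and that power efficiency is peak performance divided by power; these definitions are the usual ones but are not spelled out in the surviving text. With the rounded inputs, the area-efficiency row is reproduced for the five reference designs, while a few other entries (for example the FPSNET area efficiency) come out slightly different from the listed values.

```python
# name: (peak performance in GOPS, core area in mm^2, power in mW), from the table.
DESIGNS = {
    "Eyeriss": (84, 12.3, 450),
    "DNPU": (300, 16.0, 279),
    "ENVISION": (76, 1.9, 300),
    "THINKER": (410, 14.4, 386),
    "STICKER": (102, 7.8, 248),
    "FPSNET": (204, 8.3, 203),
}


def area_efficiency(gops, area_mm2):
    """Peak performance per unit core area, in GOPS/mm^2."""
    return gops / area_mm2


def power_efficiency(gops, power_mw):
    """Peak performance per unit power; GOPS/mW is numerically TOPS/W."""
    return gops / power_mw


for name, (gops, area, power) in DESIGNS.items():
    print(f"{name:9s} {area_efficiency(gops, area):6.2f} GOPS/mm^2 "
          f"{power_efficiency(gops, power):5.2f} TOPS/W")
```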


© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Farrukh, F.U.D.; Zhang, W.; Zhang, C.; Wang, Z.; Jiang, H. FPSNET: An Architecture for Neural-Network-Based Feature Point Extraction for SLAM. Electronics 2022, 11, 4168. https://doi.org/10.3390/electronics11244168
