A 3D Scene Information Enhancement Method Applied in Augmented Reality

Abstract

1. Research Background

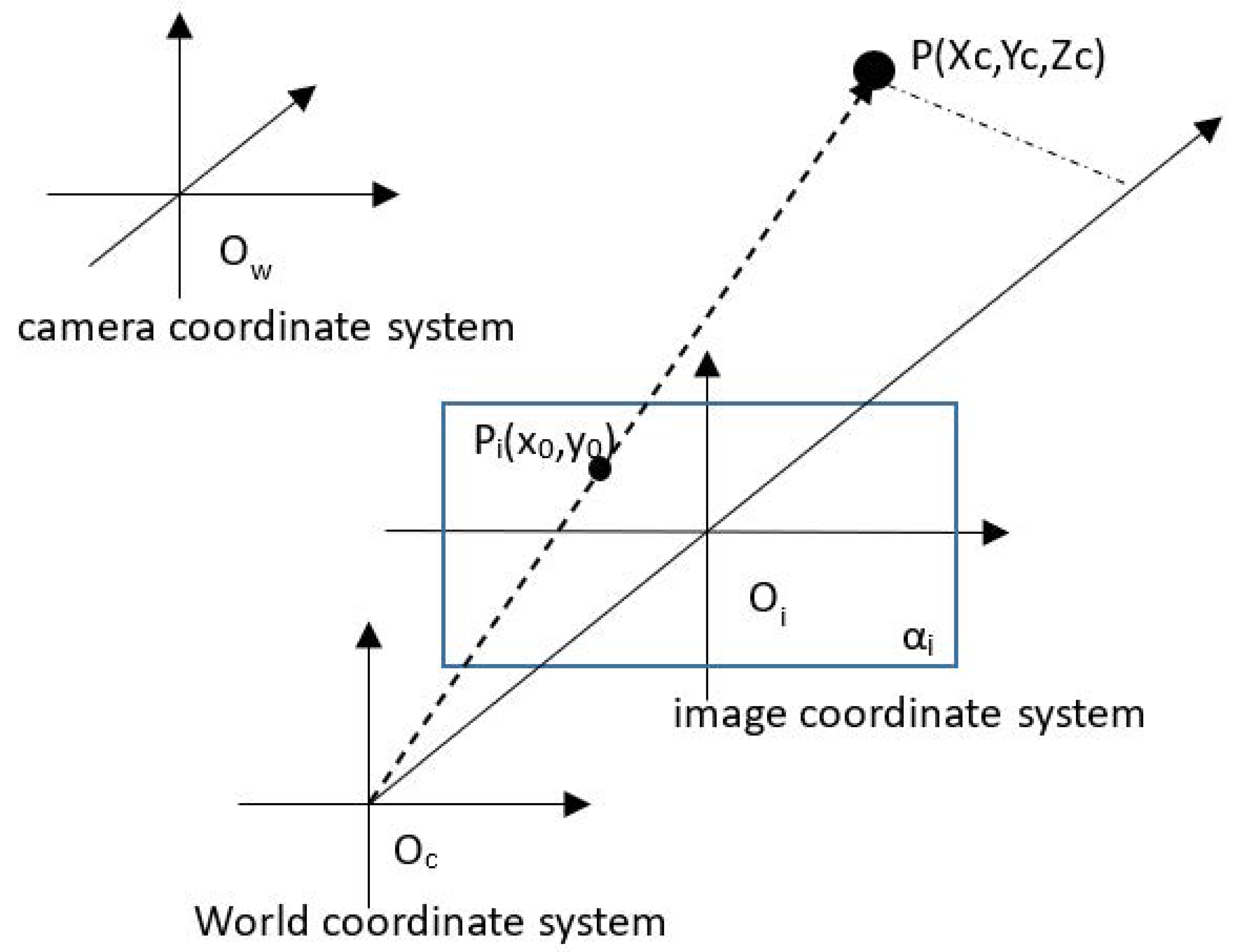

2. Principle of the Method

3. Experiments

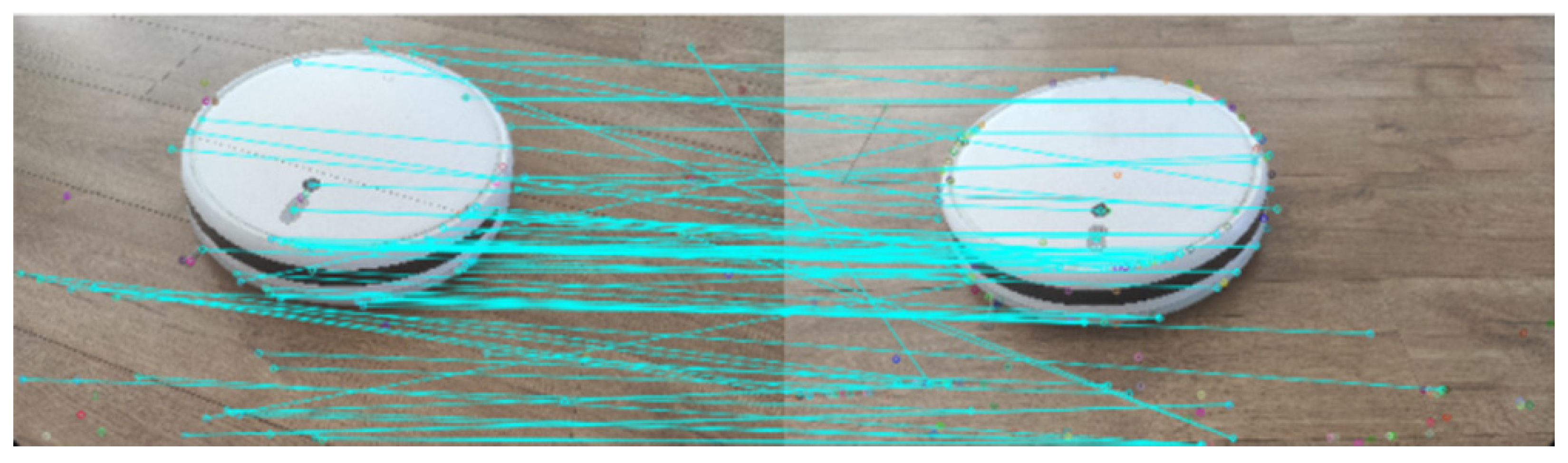

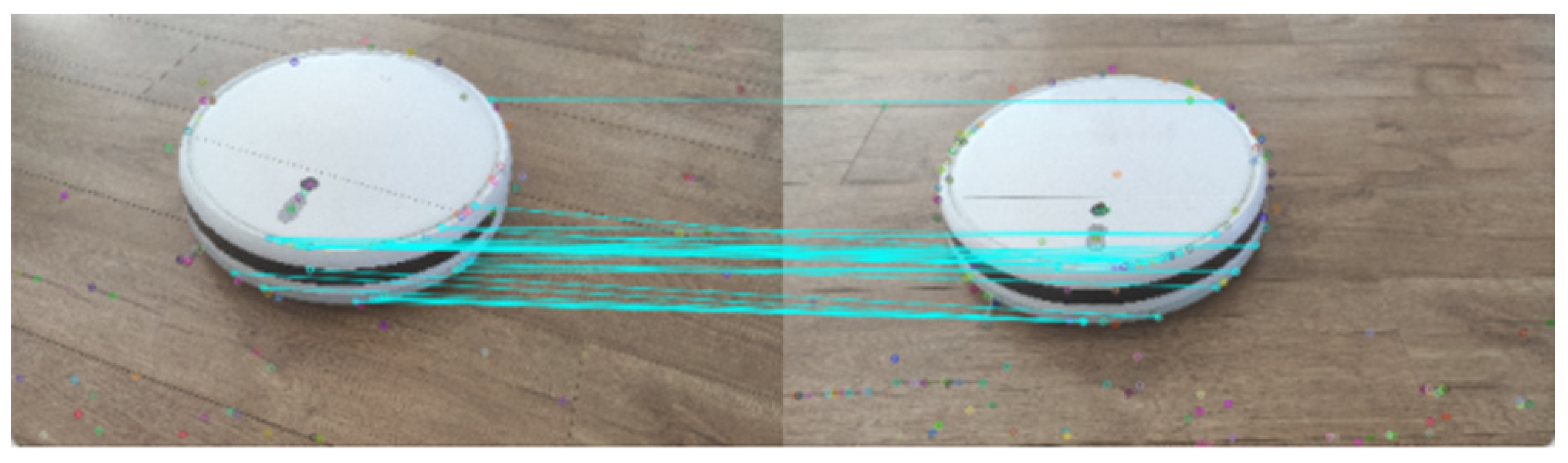

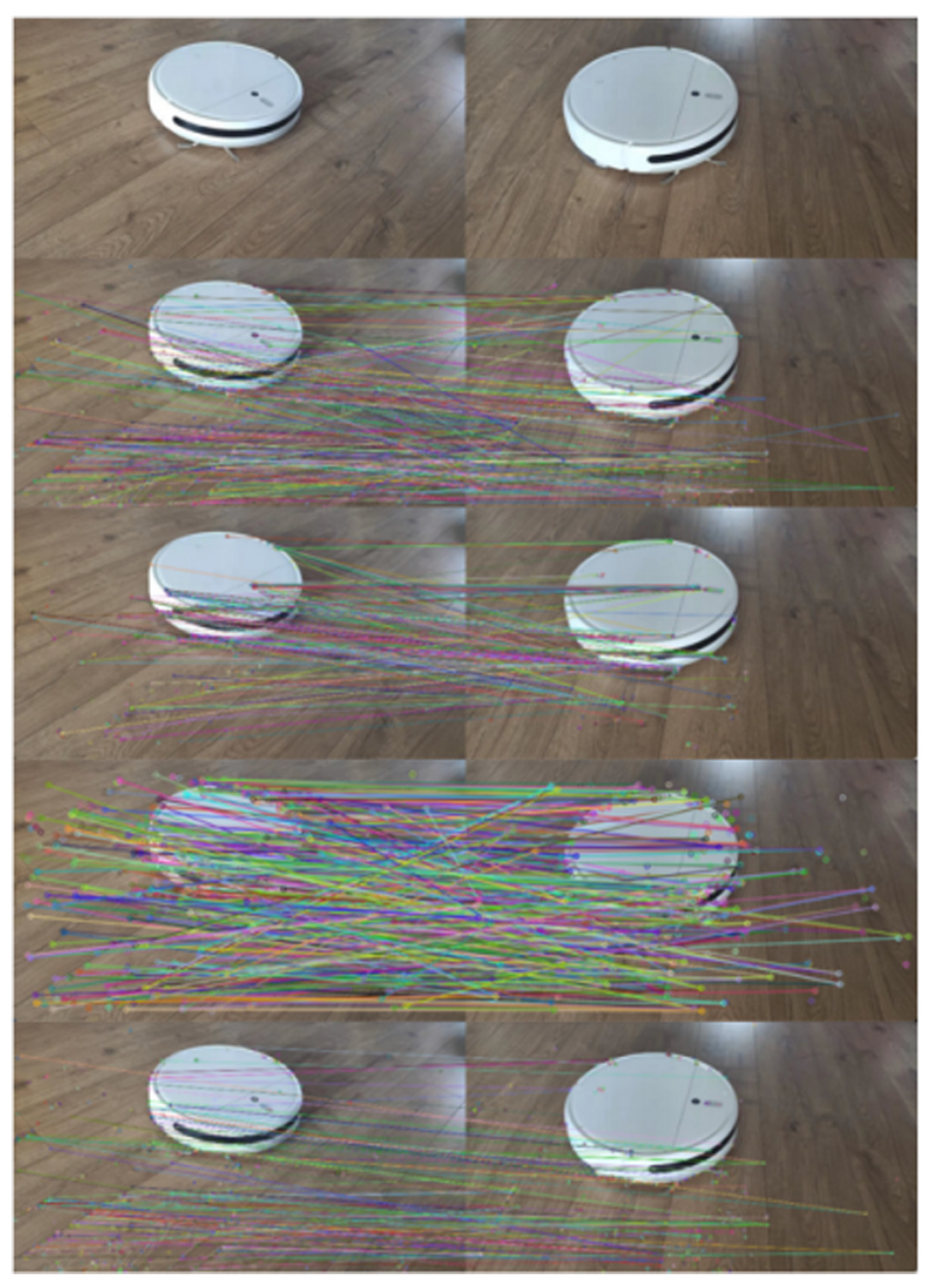

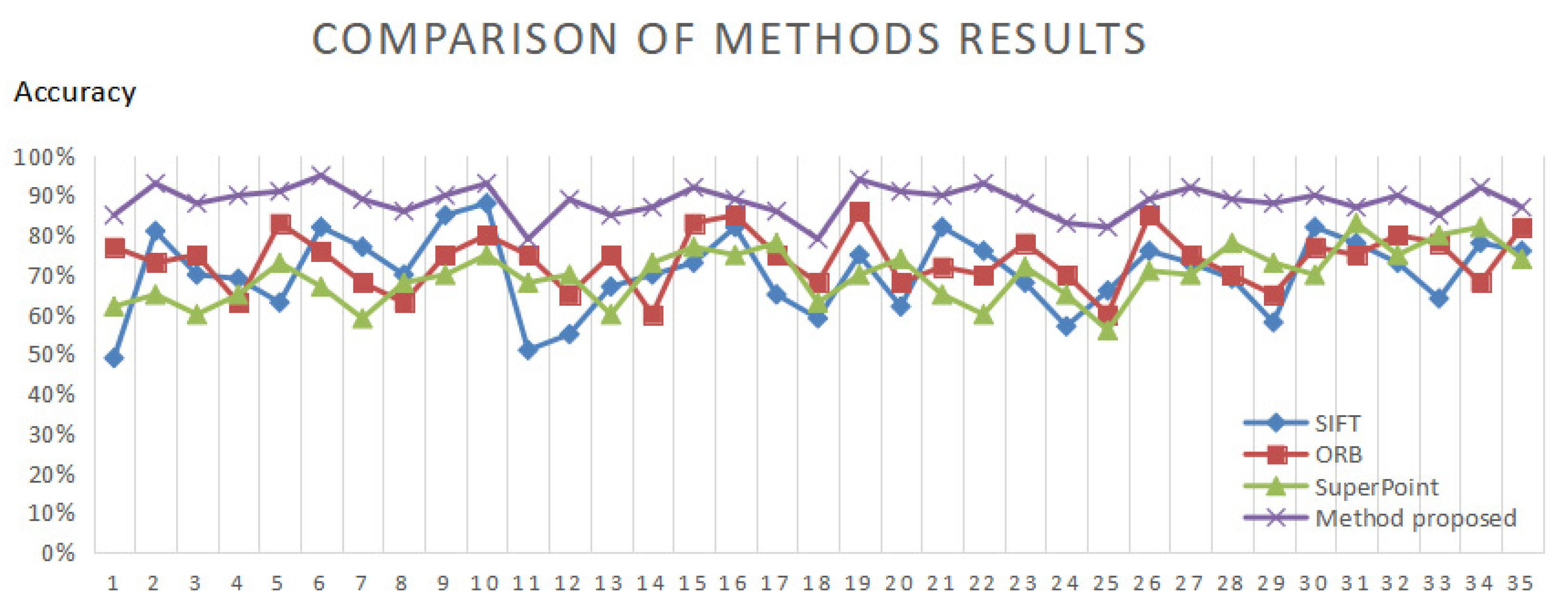

3.1. Feature Points Extraction and Matching Method Based on SIFT

- A point in the real scene that appears in both images must correspond one-to-one between them: it cannot be projected to several points in the other image. Therefore, any one-to-many matches must be mismatches (polluted results) and can be removed.

- Between two images taken from adjacent viewing angles, the scene content does not change much, so the angle between the horizontal and the line connecting a pair of corresponding points should also stay small. Therefore, matched pairs whose connecting line has a large slope must be mismatches and can be removed.
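The two filtering rules above can be sketched as follows. This is a minimal illustration, not the authors' code. Matches are assumed to be plain `((x_left, y_left), (x_right, y_right))` pixel-coordinate pairs, such as could be exported from any SIFT matcher. The two images are assumed to be laid side by side, with the right image offset by `image_width`. The angle threshold `max_angle_deg` is a hypothetical parameter. Applying the one-to-many check in both directions is also an assumption.

```python
import math
from collections import Counter
from typing import List, Tuple

Point = Tuple[float, float]
Match = Tuple[Point, Point]  # (point in left image, point in right image)


def remove_one_to_many(matches: List[Match]) -> List[Match]:
    """Drop every match whose left or right point occurs in more than one match."""
    left_counts = Counter(left for left, _ in matches)
    right_counts = Counter(right for _, right in matches)
    return [(left, right) for left, right in matches
            if left_counts[left] == 1 and right_counts[right] == 1]


def remove_steep_matches(matches: List[Match], image_width: float,
                         max_angle_deg: float = 10.0) -> List[Match]:
    """Drop matches whose connecting line (images side by side) is too steep."""
    kept = []
    for (xl, yl), (xr, yr) in matches:
        dx = (xr + image_width) - xl  # right image is drawn image_width pixels to the right
        dy = yr - yl
        angle = math.degrees(math.atan2(abs(dy), abs(dx)))
        if angle <= max_angle_deg:
            kept.append(((xl, yl), (xr, yr)))
    return kept


def filter_matches(matches: List[Match], image_width: float,
                   max_angle_deg: float = 10.0) -> List[Match]:
    """Apply the one-to-one constraint first, then the slope constraint."""
    return remove_steep_matches(remove_one_to_many(matches), image_width, max_angle_deg)
```

Running the cheap one-to-one check first shrinks the candidate set before the per-match slope test. The two rules are independent, though, so their order does not change the final result.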

3.2. Plane Recognition

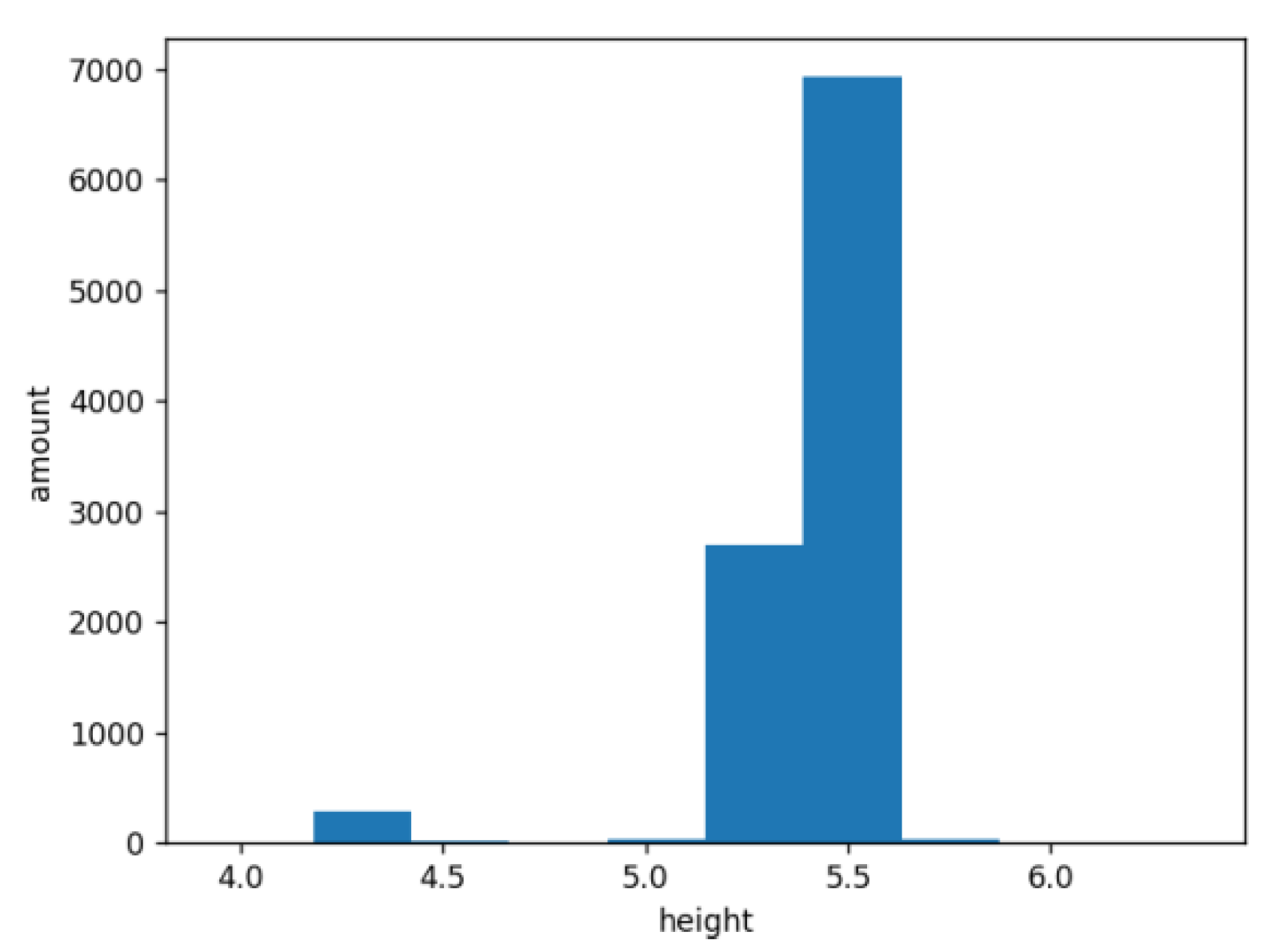

- Compute statistics on the height values of the scene's point cloud. A plane is expected at any height where many points are concentrated. Figure 8 shows the number of points at each height: most points fall within two height ranges, so the scene is inferred to contain two planes. The smaller point set corresponds to the upper plane of the sweeper, and the larger point set corresponds to the ground.
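The height analysis behind Figure 8 can be approximated with a simple histogram. This sketch is an assumption, not the authors' implementation. The input is taken to be the vertical coordinate of each point, already extracted. Heights are grouped into bins of width `bin_size`, and consecutive bins holding at least `min_count` points are merged into one candidate plane height range. Both parameters are hypothetical and would need tuning for a real scan.

```python
import math
from collections import Counter
from typing import List, Tuple


def dense_height_ranges(heights: List[float], bin_size: float,
                        min_count: int) -> List[Tuple[float, float, int]]:
    """Return (low, high, point_count) for each run of consecutive dense height bins."""
    counts = Counter(math.floor(h / bin_size) for h in heights)
    dense_bins = sorted(b for b, c in counts.items() if c >= min_count)
    runs: List[List[int]] = []  # each run: [first_bin, end_bin_exclusive, point_count]
    for b in dense_bins:
        if runs and b == runs[-1][1]:
            runs[-1][1] = b + 1  # bin is adjacent to the current run: extend it
            runs[-1][2] += counts[b]
        else:
            runs.append([b, b + 1, counts[b]])  # start a new candidate plane height range
    return [(lo * bin_size, hi * bin_size, n) for lo, hi, n in runs]
```

With real data, the range holding fewer points would be taken as the sweeper's upper plane and the range holding more as the ground, following the reasoning in the bullet above.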

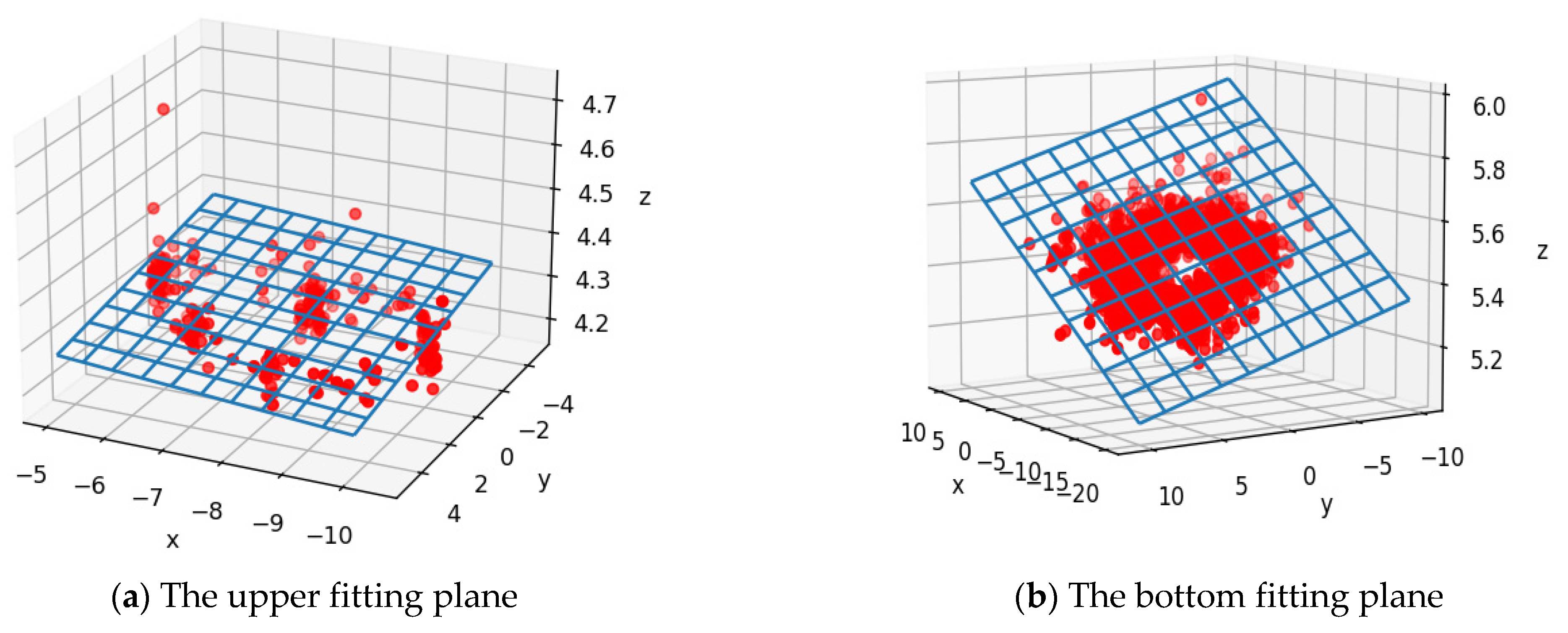

- The points in the two height ranges are extracted to form two point sets, and a plane is fitted to each set with the RANSAC algorithm. Because the two fits are independent, they can be executed in parallel. Figure 9 shows the fitted planes: Figure 9a for the small point set and Figure 9b for the large point set.

- Once the plane equations are fitted, the size of the sweeper's upper plane can be determined from the coordinate range of the small point set.
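A minimal sketch of the per-set plane fitting and size estimation described above. It implements textbook RANSAC: repeatedly fit a plane through three random points and keep the hypothesis with the most inliers within `threshold`. It does not necessarily match the exact variant used in the paper. `threshold`, `iterations`, and `seed` are hypothetical parameters. As a further assumption, the size of the upper plane is taken to be its x/y extent, with z vertical.

```python
import math
import random
from typing import List, Optional, Sequence, Tuple

Point3 = Tuple[float, float, float]
Plane = Tuple[float, float, float, float]  # (a, b, c, d) with a*x + b*y + c*z + d = 0


def plane_from_points(p: Point3, q: Point3, r: Point3) -> Optional[Plane]:
    """Plane through three points with a unit normal, or None if they are collinear."""
    u = [q[i] - p[i] for i in range(3)]
    v = [r[i] - p[i] for i in range(3)]
    n = [u[1] * v[2] - u[2] * v[1],  # normal = u x v
         u[2] * v[0] - u[0] * v[2],
         u[0] * v[1] - u[1] * v[0]]
    norm = math.sqrt(sum(k * k for k in n))
    if norm < 1e-12:
        return None
    a, b, c = (x / norm for x in n)
    return (a, b, c, -(a * p[0] + b * p[1] + c * p[2]))


def ransac_plane(points: Sequence[Point3], threshold: float, iterations: int = 200,
                 seed: int = 0) -> Tuple[Plane, List[Point3]]:
    """Classical RANSAC: keep the three-point plane hypothesis with the most inliers."""
    pts = list(points)
    rng = random.Random(seed)
    best_plane: Optional[Plane] = None
    best_inliers: List[Point3] = []
    for _ in range(iterations):
        plane = plane_from_points(*rng.sample(pts, 3))
        if plane is None:
            continue  # degenerate (collinear) sample
        a, b, c, d = plane
        # With a unit normal, |a*x + b*y + c*z + d| is the point-to-plane distance.
        inliers = [pt for pt in pts if abs(a * pt[0] + b * pt[1] + c * pt[2] + d) <= threshold]
        if len(inliers) > len(best_inliers):
            best_plane, best_inliers = plane, inliers
    if best_plane is None:
        raise ValueError("no non-degenerate plane hypothesis found")
    return best_plane, best_inliers


def xy_extent(points: Sequence[Point3]) -> Tuple[float, float]:
    """Width and depth of a point set along x and y (z assumed vertical)."""
    xs = [pt[0] for pt in points]
    ys = [pt[1] for pt in points]
    return max(xs) - min(xs), max(ys) - min(ys)
```

`ransac_plane` is called independently on each point set, so the two calls could be handed to separate worker processes (for example with `concurrent.futures.ProcessPoolExecutor`). That is one way to realize the parallel execution mentioned above.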

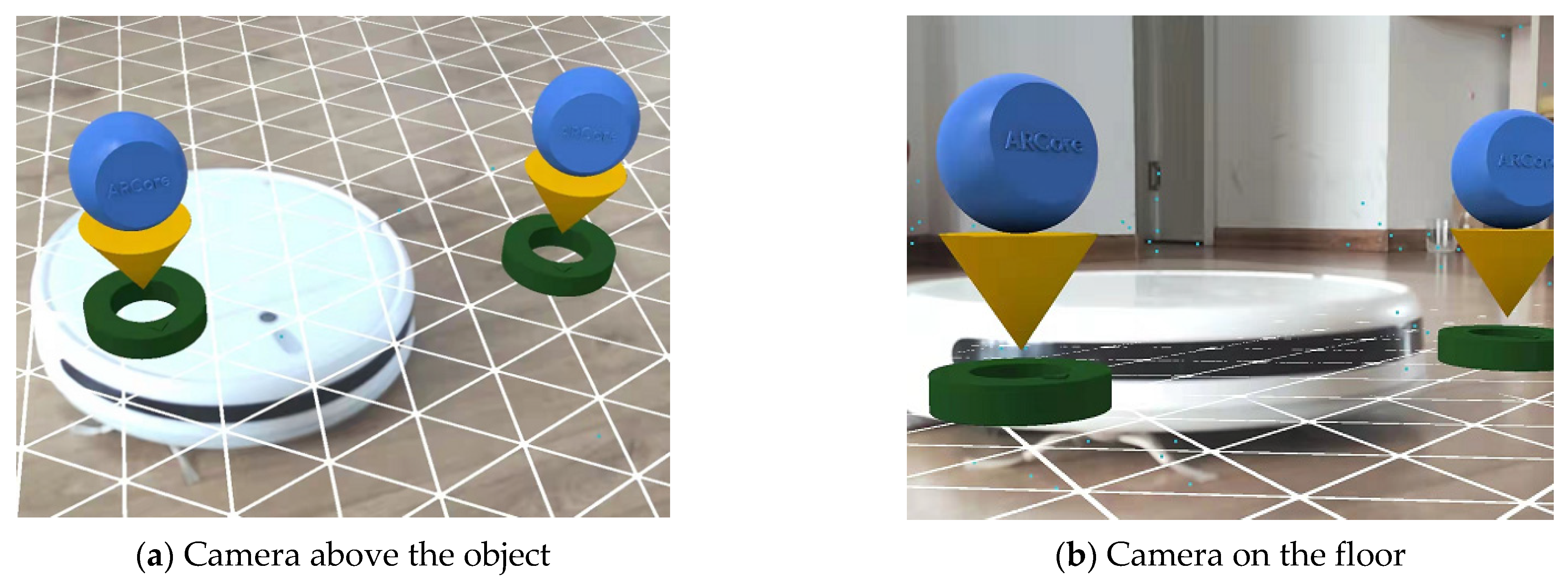

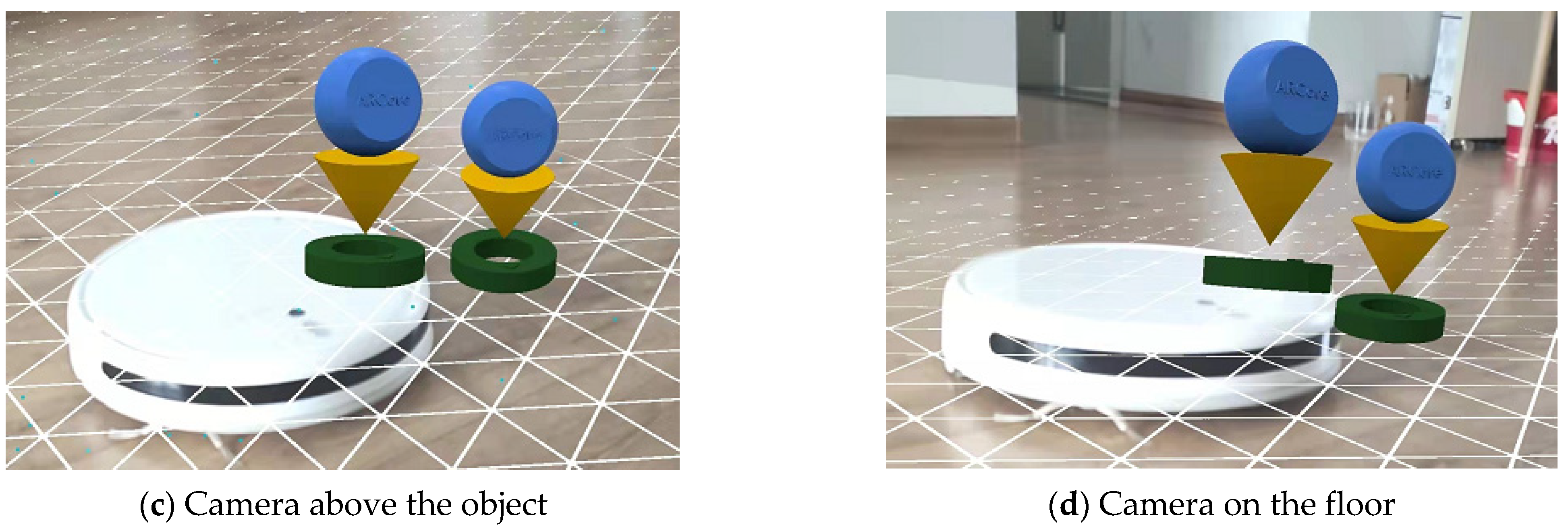

4. Effect Combined with ARCore

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Algorithm | Feature Points (Left) | Feature Points (Right) | Matched Pairs | Accuracy |
|---|---|---|---|---|
| SIFT | 513 | 377 | 183 | 75.41% |
| ORB | 455 | 468 | 259 | 68.34% |
| SuperPoint | 2023 | 1470 | 408 | 59.31% |
| Proposed Method | 513 | 377 | 123 | 89.43% |

| Method | Recognized Planes | Accuracy | Time Cost (s) | Efficiency Improvement |
|---|---|---|---|---|
| Classical RANSAC | 1 | 50% | 0.193 | 0% |
| Proposed Method | 2 | 100% | 0.012 (upper plane); 0.165 (bottom plane) | 14.5% |
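The 14.5% improvement reported for the proposed method is consistent with the two plane fits running in parallel. In that case the total time equals that of the slower fit (the bottom plane). This is an interpretation rather than something the table states:

$$
\text{Improvement} = \frac{t_{\text{RANSAC}} - \max(t_{\text{upper}},\, t_{\text{bottom}})}{t_{\text{RANSAC}}}
= \frac{0.193 - \max(0.012,\, 0.165)}{0.193} = \frac{0.028}{0.193} \approx 14.5\%
$$

Summing the two times instead (0.177 s) would give only about 8.3%.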


© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Li, B.; Wang, X.; Gao, Q.; Song, Z.; Zou, C.; Liu, S. A 3D Scene Information Enhancement Method Applied in Augmented Reality. Electronics 2022, 11, 4123. https://doi.org/10.3390/electronics11244123
