Abstract

Container technology enables rapid deployment of computing services, while edge computing reduces task computing latency and improves performance. However, different edge servers support containers of limited types, number and performance, so a sensible task deployment strategy with a rapid policy response is essential. Therefore, this paper jointly optimizes task deployment, offloading decisions, edge caching and resource allocation to minimize the overall energy consumption of a mobile edge computing (MEC) system composed of multiple mobile devices (MDs) and multiple edge servers hosting different containers. The problem is formulated as a combinatorial optimization problem with multiple discrete variables, subject to constraints on container type, transmission power, latency, task offloading and deployment strategy. To solve this NP-hard problem and obtain a sub-optimal policy quickly, this paper proposes an energy-efficient edge caching and task deployment policy based on Deep Q-Learning (DQCD). First, the exponential action space consisting of offloading decisions, task deployment and caching policies is pruned to accelerate model training. Then, the model is iteratively optimized using a deep neural network. Finally, sub-optimal task deployment, offloading and caching policies are obtained from the trained model. Simulation results show that the proposed algorithm converges within very few iterations and, compared with other algorithms, substantially reduces both system energy consumption and policy response delay.

1. Introduction

With the advent of the 5G era, the internet and mobile devices have developed rapidly, generating massive amounts of computing tasks and data. However, mobile devices have limited computing capability, and offloading computing tasks to remote cloud servers incurs too much delay to meet the needs of delay-sensitive applications such as cognitive assistance, mobile gaming and virtual and augmented reality (VR/AR) [1]. Mobile edge computing moves computing tasks and data from centralized cloud servers to the edge of the network [2], so that tasks are processed closer to the devices that generate them. This improves the utilization of underlying resources and the quality of service (QoS) experienced by users, which addresses the above problems.

Compared with mobile devices, edge servers have greater computing capability and storage. Mobile devices can offload delay-sensitive tasks to edge servers, which not only meets user needs but also reduces the energy consumption of the mobile devices. In [3], an algorithm based on global search is used to find the optimal offloading strategy that minimizes the weighted sum of task completion delay and system energy consumption. In research on computation offloading, containers are also attractive: they are lightweight, fast to deploy, small in footprint, highly portable and secure, and they can provide high-quality computing services for computing-intensive tasks such as virtual and augmented reality and mobile games. In [4], multiple containers are deployed in each system for a single application, which improves the overall efficiency of the system.

The above works do not study multi-user, multi-edge-server environments, in particular the case where the number of tasks is large and the edge servers cannot process all of them in time to meet latency requirements. In such cases, user request data can be cached on edge servers, which greatly reduces the delay and energy consumption of task processing. In [5], the authors study MEC cache management to improve the quality of service of mobile augmented reality (MAR) services. In [6], a data-driven approach based on model-free reinforcement learning is adopted to optimize edge cache allocation.

For deployment strategies in mobile edge computing, researchers have proposed optimization methods such as dynamic programming [7] and genetic algorithms [3], along with various improvements. However, the execution time of these algorithms increases exponentially with the number of users, which does not meet the practical need for a rapid response of the deployment strategy. With the development of artificial intelligence, optimization methods based on deep reinforcement learning (DRL) have received increasing attention [8]. A key advantage of DRL is that it can obtain a sub-optimal deployment strategy while greatly shortening the algorithm execution time in multi-MD, multi-server environments. Moreover, the above studies have not investigated in depth the joint optimization of edge caching, computation offloading and task deployment strategies.

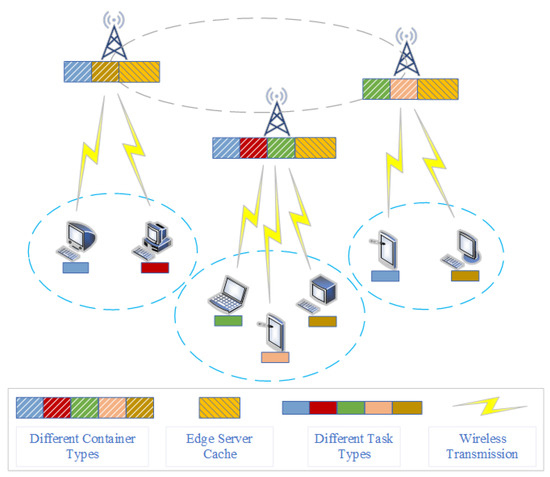

In summary, given massive computing tasks and data and the limited computing power of mobile devices, the computing power and storage capacity of edge servers can be used to reduce the delay and energy consumption of processing tasks. By jointly optimizing computing power, latency, task offloading, task deployment and cache deployment in an edge computing environment, the utilization of the underlying computing and communication resources is maximized and system energy consumption is minimized. Using DQN to solve this NP-hard problem with discrete variables not only yields a sub-optimal solution for system energy consumption but also keeps the processing time of the algorithm within the delay requirement. The edge computing environment considered here consists of multiple container-based edge servers and multiple MDs. As shown in Figure 1, the task of each MD in the MEC system can be executed locally or offloaded to a suitable edge server. At the same time, the system must decide which MDs' data to cache on each edge server, so as to make the best use of system resources and minimize total energy consumption while meeting the delay requirement.

Figure 1.

The multi-MD multi-container multi-server MEC system.

In this paper, we study container-based edge caching and task deployment in the MEC system and use DRL to obtain a near-optimal task deployment strategy. Specifically, a DQN-empowered efficient edge caching and task deployment (DQCD) algorithm is proposed to minimize energy consumption. The main contributions of this paper are as follows:

- A joint optimization problem of edge caching and task deployment is studied with the objective of minimizing the total system energy consumption in a multi-MD multi-container multi-base station (BS) MEC system.

- A DQN-empowered energy-efficient edge caching and task deployment algorithm is proposed to generate near-optimal edge caching, task deployment and offloading decision strategies. During iterative training, the DNN gradually improves its output toward the optimal or sub-optimal task deployment strategy.

- DQCD is compared with baselines in terms of convergence speed, deployment time and energy consumption. DQCD not only achieves near-optimal performance but also reduces execution latency and achieves convergence with very few iterations.

The remainder of this paper is organized as follows. Section 2 reviews related work. Section 3 presents the system model and problem formulation. In Section 4, we propose an energy-efficient edge caching and task deployment algorithm based on DQN (DQCD) to solve the formulated problem. Section 5 presents the simulation results and discussion. Finally, Section 6 concludes the paper.

2. Related Work

Edge computing has been the subject of numerous studies. In [9], a novel permissionless mobile system based on QR codes and NFC technology is proposed for privacy-preserving smart contact tracing. An urban safety analysis system that infers safety indices from multiple sources of cross-domain urban data is proposed in [10]. These studies address practical applications of edge computing but do not study delay and energy consumption in the system. In [11], a smartphone-assisted positioning algorithm is proposed, in which the positioning scheme and the communication between the service areas of the remote control are formulated. In [12], a container engine is suggested that makes it easy to create, manage and delete containerized applications; running several containers incurs little overhead and consumes little CPU power. Several studies use container technology to boost the network performance of edge computing; for example, containers are used to administer and monitor applications [13]. One of the most useful aspects of containers for network edge computing is their scalability. In light of this, an IoT resource management framework adopts a federated domain-based strategy, in which resources provided by IoT-based edge devices such as smartphones or smart vehicles can be used more effectively through containers that are dynamically allocated at runtime.

Computational offloading, in which resource-constrained devices shift resource-intensive computation to resource-rich nearby infrastructure, addresses the shortcomings of mobile devices in storage, computational performance and energy efficiency [14]. In [15], the authors consider a wearable camera that uploads real-time video to a remote distribution server over a cellular network and maximize the quality of the uploaded video while meeting latency requirements. Computation offloading reduces transmission time while also relieving the load on the core network. MEC enables new, sophisticated applications to run on user equipment (UE), and computation offloading is a key edge computing technology. Most of the many relevant research findings focus on two core issues: offloading decisions and resource allocation. Offloading decisions concern whether to offload a task from the mobile device, how much of it to offload and how to offload it, while resource allocation concerns where the offloaded workload should be executed.

The UE in an offloading system typically consists of a decision engine, a system parser and a code parser. An offloading decision is executed in three steps, and whether a component can be offloaded depends on the application type and on how its code and data are partitioned. First, the code parser determines what can be offloaded. Then, the system parser monitors various parameters, such as the available bandwidth, the size of the data to be offloaded or the energy consumed by running applications locally [16]. Finally, the decision engine decides whether to offload. According to the optimization target of the offloading decision, computational offloading can be divided into three categories: reducing latency, reducing energy consumption, and balancing energy consumption and delay. Once the delay requirement of the device is satisfied, energy consumption is usually the user's secondary concern. In [17], energy harvesting methods are introduced for wearable devices. In [18], the data communication power consumption of wearable devices is minimized while meeting delay requirements.

Base station caching, mobile content distribution networks and transparent caching are examples of mobile edge caching systems. Cache acceleration technology can improve content delivery efficiency and user experience. Once content is cached at the edge of the mobile network, users can access it nearby, which eliminates repetitive content transfers and relieves the load on the backhaul and core networks. Edge caching can also reduce the network latency of user requests and thus improve users' network experience. In addition, edge caching enables the mobile network to offer enhanced services to tenants and users [19]. In [20], a Cognitive Agent (CA) is proposed to help users pre-cache content and perform tasks on MEC, and to coordinate communication and caching so as to reduce the burden on MEC.

In [21], a coordinate descent (CD) method that searches along one variable dimension at a time is proposed for task deployment decisions in edge computing. In [22], the authors study a similar heuristic search technique that iteratively adjusts binary offloading decisions in multi-server MEC networks. Convex relaxation is another widely used heuristic. For instance, Ref. [23] relaxes integer variables to continuous values between 0 and 1, and [24] approximates binary constraints by quadratic constraints. However, heuristic algorithms with lower complexity cannot guarantee solution quality. Moreover, both search-based approaches and convex relaxation methods require a large number of iterations to reach satisfactory local optima, which makes them unsuitable for fast-fading channels.
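To make the "one variable dimension at a time" idea concrete, here is a generic sketch of coordinate descent over a binary offloading vector. It is an illustration of the technique only, not the exact method of [21]: the cost function is a hypothetical placeholder supplied by the caller, and a flip is kept only if it strictly lowers the cost.

```python
from typing import Callable, List


def coordinate_descent(cost: Callable[[List[int]], float], n: int,
                       max_rounds: int = 10) -> List[int]:
    """Greedy coordinate descent over a binary offloading vector.

    x[i] = 1 means task i is offloaded, x[i] = 0 means it runs locally.
    Each round visits the coordinates one at a time and keeps a flip
    only if it lowers the cost.
    """
    x = [0] * n                      # start from all-local execution
    best = cost(x)
    for _ in range(max_rounds):
        improved = False
        for i in range(n):           # search along one dimension at a time
            x[i] ^= 1                # tentatively flip coordinate i
            c = cost(x)
            if c < best:
                best, improved = c, True
            else:
                x[i] ^= 1            # revert the flip
        if not improved:             # no single flip helps: local optimum
            break
    return x
```

For a separable toy cost with local energies [3, 1, 4] and offloading energies [1, 2, 2], the search returns [1, 0, 1]. With coupled costs (for example, shared server capacity) it can stop at a local optimum, which is the quality limitation noted above.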

Our work is motivated by the advantages of deep reinforcement learning in solving reinforcement learning problems with large state and action spaces [25]. Deep reinforcement learning has a wide range of applications and has been applied successfully to route planning, indoor positioning, edge computing and other fields. In [26], the authors use a reinforcement-learning-based multi-objective hyper-heuristic algorithm for smart city route planning. The goal of [27] was to draw indoor maps automatically with reinforcement learning based on changes in AP signal strength across different rooms. In [28], the authors use deep learning techniques to build a fine-grained, facility-labeled indoor floor plan system. In [29], the authors train on WiFi fingerprints to construct indoor floor plans. In particular, deep neural networks (DNNs) are used to learn the best mapping from the state space to the action space from training data samples [30]. Regarding deep-reinforcement-learning-based offloading in MEC networks, Ref. [31] proposed a distributed deep learning-based offloading (DDLO) technique for MEC networks that exploits parallel computing. To improve computational performance in energy-harvesting MEC networks, Ref. [32] presented an offloading approach based on Deep Q-Network (DQN). In [33], the authors examined a DQN-based online computation offloading approach under random task arrivals in a comparable network environment. However, both of these DQN-based works take the computation offloading and task deployment decisions as the state input vector and consider neither the container and task types nor the edge cache.

3. System Model

In this paper, we study a multi-MD multi-server environment in a given area, as shown in Figure 1. The containers on each edge server provide computing services to resource-limited mobile devices, and each edge server has a certain amount of storage for caching user data. Let M denote the set of edge servers; the MDs are indexed by i, and MDi denotes the i-th MD. The network is divided into several small areas, and each MDi can communicate wirelessly with the edge server that covers its area. Services are abstractions of the applications requested by MDs; examples include video streaming, face recognition and augmented reality. A computation task can only be executed on a BS that hosts a container of the corresponding type.

Assume that all MDs are randomly distributed within the coverage of the edge servers and that all edge servers are connected by wired links. To support multiple access, all MDs follow the Orthogonal Frequency Division Multiple Access (OFDMA) protocol. All tasks are assumed to have the same delay constraint T, i.e., each task must be completed within this execution deadline, and each MD generates one indivisible task within T. The task of MDi is characterized by the size of its input data, the number of CPU cycles required to process 1 bit, its task type and its time constraint. The goal is to make edge offloading and task deployment decisions that minimize the total energy consumption of the system under the constraints of task type, computing power, delay and task offloading.

3.1. Task Deployment Model

The task of each MD can be computed locally or transferred to the most suitable edge server in M. Assume that MDi is within the coverage of one edge server. We introduce the decision matrix a, whose element $a_{ij}$ is the task placement decision that determines whether the computing task of MDi is offloaded to server j; $a_{ij}=1$ means that task i is offloaded to edge server j. In this case, the task is first transmitted from MDi to the edge server covering it and then forwarded to edge server j. Because edge servers are interconnected by optical cables, this paper ignores the data transmission delay between edge servers. The task deployment strategy is denoted as follows:
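For reference, the binary deployment variable described above can be written as follows. This is a sketch reconstructed from the prose, not the paper's original equation: the at-most-one-server constraint follows from the assumption that each task is indivisible, and reading $\sum_{j} a_{ij}=0$ as local execution is our interpretation.

```latex
a_{ij} =
\begin{cases}
1, & \text{if the task of MD}_i \text{ is offloaded to edge server } j \in M,\\
0, & \text{otherwise},
\end{cases}
\qquad
\sum_{j \in M} a_{ij} \le 1 .
```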

3.2. Edge Cache Model

We introduce the decision matrix b as the caching strategy, whose element $b_{ij}$ determines whether the data of MDi are cached on server j; $b_{ij}=1$ means that the data of MDi are cached on edge server j. The caching strategy is defined as follows:

The storage of each edge server is limited. Given the maximum storage space allocated to each edge server and the data size of each MD, the following cache constraint must hold:
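A minimal sketch of how the caching matrix b and the storage constraint can be checked in code, assuming the constraint takes the usual knapsack-style form: for every server j, the total size of the MD data cached on j must not exceed that server's storage limit. The function name and the arguments `data_size` and `capacity` are hypothetical.

```python
from typing import Sequence


def cache_is_feasible(b: Sequence[Sequence[int]],
                      data_size: Sequence[float],
                      capacity: Sequence[float]) -> bool:
    """Check the per-server storage constraint for a binary caching matrix.

    b[i][j] == 1 means the data of MD i are cached on edge server j.
    data_size[i] is the size of MD i's data; capacity[j] is server j's limit.
    """
    for j in range(len(capacity)):
        used = sum(data_size[i] for i in range(len(b)) if b[i][j] == 1)
        if used > capacity[j]:       # server j would overflow
            return False
    return True
```

For example, with b = [[1, 0], [1, 0], [0, 1]], data sizes [2.0, 3.0, 4.0] and capacities [5.0, 4.0], server 0 stores 5.0 and server 1 stores 4.0, so the strategy is feasible; raising the second MD's data size to 3.5 makes it infeasible.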

3.3. Task Placement Model

Different MDs have different task types, and different edge servers host different container types, each of which can process a limited number and type of tasks. Let the container type set of edge server j be given. The computing task of MDi can be offloaded to, or its data cached on, edge server j only if the container type set of server j includes the task type of MDi:
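One way to encode this matching condition, assuming each server's container types are given as a set of task-type labels, is a boolean mask that marks which (MD, server) pairs may appear in the deployment and caching matrices. The labels and the function name are hypothetical.

```python
from typing import List, Sequence, Set


def compatibility_mask(task_types: Sequence[str],
                       container_types: Sequence[Set[str]]) -> List[List[bool]]:
    """mask[i][j] is True iff server j hosts a container matching MD i's task type.

    Entries that are False force a_ij = 0 and b_ij = 0.
    """
    return [[t in types for types in container_types] for t in task_types]
```

With task types ["video", "face", "ar"] and servers hosting {"video", "ar"} and {"face"}, the mask is [[True, False], [False, True], [True, False]].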

3.4. Transfer Model

When the computing task of MDi is offloaded to an edge server, its upload depends on the transmit power of MDi in the current time block, the channel gain of the uplink and the channel bandwidth. The number of MDs offloading at the same time does not exceed the number of sub-channels, so each MD is affected only by environmental noise, and the offloading time obtained from Shannon's formula is

The energy consumption of offloading the task from MDi to the edge server is
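The offloading time and energy can be sketched as follows, assuming the common forms used in OFDMA-based MEC models: an uplink rate of B·log2(1 + p·h/σ²) with no inter-user interference (each MD occupies its own sub-channel), an upload time equal to the data size divided by that rate, and an upload energy equal to transmit power times upload time. The function name and arguments are hypothetical.

```python
import math
from typing import Tuple


def offload_time_and_energy(data_bits: float, power_w: float, gain: float,
                            bandwidth_hz: float, noise_w: float) -> Tuple[float, float]:
    """Return (upload time in s, upload energy in J) for one task.

    Rate from Shannon's formula: r = B * log2(1 + p * h / noise).
    """
    rate = bandwidth_hz * math.log2(1.0 + power_w * gain / noise_w)  # bit/s
    t_up = data_bits / rate                                            # s
    return t_up, power_w * t_up                                        # E = p * t
```

For 10^6 bits, p = 1 W, h = 3, σ² = 1 W and B = 1 MHz, the SNR is 3, the rate is 2 Mbit/s, and the upload takes 0.5 s and 0.5 J.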

3.5. Computational Model

The computing model consists of two parts: local computing and offloaded computing on the selected edge server. All tasks must be completed within T. Given the time required to complete the task locally on the MD and the time required to complete it on the edge server, the following time constraints hold:

3.5.1. Local Computing

Each MD has one task within T that cannot be divided into subtasks, and the MD allocates a CPU frequency to this task within T. The local computing time is then

In local computing, the longer the computing time (i.e., the lower the CPU frequency), the smaller the energy consumption. Given the chip energy coefficient of the MD, the local computing energy consumption is

Given the maximum computing frequency of the MD, the CPU frequency allocated to local computing is also constrained by
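Under the widely used dynamic-power model (an assumption here, since the original formulas are not reproduced), local execution time is d·c/f and local energy is κ·f²·d·c, where d is the input size in bits, c the CPU cycles per bit, f the allocated frequency and κ the chip energy coefficient. Because energy grows with f², the cheapest feasible choice is the lowest frequency that still meets T, namely f = d·c/T, provided it does not exceed the MD's maximum frequency. The function name and arguments are hypothetical.

```python
from typing import Optional, Tuple


def local_min_energy(d_bits: float, cycles_per_bit: float, deadline_s: float,
                     f_max_hz: float, kappa: float) -> Optional[Tuple[float, float]]:
    """Return (frequency, energy) of the cheapest local execution meeting the deadline.

    Returns None if even f_max cannot finish the task within the deadline.
    """
    total_cycles = d_bits * cycles_per_bit
    f_needed = total_cycles / deadline_s           # lowest frequency meeting T
    if f_needed > f_max_hz:
        return None                                # local computing infeasible
    energy = kappa * f_needed ** 2 * total_cycles  # E = kappa * f^2 * cycles
    return f_needed, energy
```

For d = 10^6 bits, c = 100 cycles/bit, T = 0.5 s and κ = 10^-27, the required frequency is 0.2 GHz and the energy is about 4 mJ; if the MD's maximum frequency is only 0.1 GHz, local execution is infeasible.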

3.5.2. Edge Server Computing

Once all tasks are deployed, the selected server j executes the computing tasks immediately. Computing resources in different containers of an edge server do not interfere with each other, so containers execute in parallel, whereas tasks within the same container are executed serially. The computing resources that edge servers allocate to tasks are constrained as follows:

where the upper bound is the maximum computing resources that the container can allocate. Let the chip energy coefficient of edge server j be given; the CPU frequency allocated to the task on the edge server is

The longer the computing time on the edge server (i.e., the lower the allocated CPU frequency), the smaller the energy consumption. The computing energy consumption on the edge server side is
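The edge-side energy can be sketched in the same spirit as local computing, under two labeled assumptions: the time available for edge computing is the deadline minus the upload time (inter-server forwarding delay is ignored, as stated in Section 3.1), and the server uses the same dynamic-power model κ_j·f²·d·c with the lowest frequency that meets this budget, capped by the container's maximum. The function name and arguments are hypothetical.

```python
from typing import Optional


def edge_energy(d_bits: float, cycles_per_bit: float, deadline_s: float,
                t_upload_s: float, f_container_max_hz: float,
                kappa_server: float) -> Optional[float]:
    """Edge-side computing energy when the task runs as slowly as the deadline allows.

    Returns None if the upload alone exhausts the deadline or if the container
    cannot provide the required frequency.
    """
    t_compute = deadline_s - t_upload_s             # time left after uploading
    if t_compute <= 0:
        return None
    total_cycles = d_bits * cycles_per_bit
    f = total_cycles / t_compute                    # lowest feasible frequency
    if f > f_container_max_hz:
        return None
    return kappa_server * f ** 2 * total_cycles     # E = kappa_j * f^2 * cycles
```

With a 1.0 s deadline and a 0.5 s upload, a task of 10^8 cycles needs 0.2 GHz on the server; for κ_j = 10^-28 this costs about 0.4 mJ.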

3.6. Problem Formulation

The problem of minimizing the total energy consumption of the system by jointly optimizing the edge caching and task deployment strategies is formulated as follows:

This is a combinatorial optimization problem with multiple discrete variables, and it is NP-hard.
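Because the objective itself is not reproduced here, the following sketch evaluates one plausible version of it for given deployment and caching matrices a and b: each MD pays its local energy if its task is not offloaded, and otherwise the upload energy plus the edge computing energy on the chosen server. How caching enters the objective is an assumption: we assume that if MD i's data are already cached on the executing server, the upload is skipped. The function name and per-term energy tables are hypothetical.

```python
from typing import Sequence


def total_energy(a: Sequence[Sequence[int]], b: Sequence[Sequence[int]],
                 e_local: Sequence[float],
                 e_upload: Sequence[Sequence[float]],
                 e_edge: Sequence[Sequence[float]]) -> float:
    """Total system energy for binary matrices a (deployment) and b (caching).

    e_local[i]: energy of computing task i locally.
    e_upload[i][j]: energy of uploading task i's data to server j.
    e_edge[i][j]: energy of computing task i on server j.
    Assumption: if b[i][j] == 1, the data are already on server j (no upload).
    """
    total = 0.0
    for i, row in enumerate(a):
        if sum(row) == 0:                     # not offloaded: run locally
            total += e_local[i]
            continue
        j = list(row).index(1)                # indivisible task: one server
        upload = 0.0 if b[i][j] == 1 else e_upload[i][j]
        total += upload + e_edge[i][j]
    return total
```

In a DRL formulation, a function like this can serve directly as the environment: the reward of a candidate strategy is the negative of its total energy.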

4. Problem Solution

This paper uses deep Q-learning to solve this NP-hard problem, in which an optimal or sub-optimal solution must be found among all possible edge caching and task deployment strategies. Combinatorial optimization problems with multiple discrete variables can be solved by global search algorithms such as the genetic algorithm (GA) or by heuristics based on dynamic programming (DP). However, as the number of MDs increases, the search space becomes too large, and such algorithms take a long time to obtain an optimal or sub-optimal solution. Therefore, they do not meet the need for a rapid policy response in practical scenarios.

For this reason, this paper proposes an efficient edge caching and task deployment (DQCD) algorithm based on deep Q-learning, which shortens the execution time of the algorithm while obtaining an optimal or sub-optimal solution. The algorithm consists of two parts: generating the edge caching and task deployment strategy, and updating it. In each time block, the strategy is generated by feeding the feature vectors of the tasks and servers into the DNN, which outputs a predicted task deployment strategy. The reward of the predicted strategy is then computed, and the newly obtained action is stored in the buffer. During the strategy update in the time block, training samples are read from the buffer to train the neural network, which updates the weights and biases of the model. In the next time block, the newly trained model is fed with new features to generate the next task deployment policy. Through this iterative process, the DNN model gradually converges toward the optimal or sub-optimal task deployment strategy.
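The generate, evaluate, store and train cycle described above can be sketched as follows. This is a deliberately simplified illustration, not the authors' implementation: a linear model stands in for the DNN, each time block is treated as a one-step decision so that the Q-learning target reduces to the immediate reward (no bootstrapped next-state term), and exploration is epsilon-greedy. The bounded `deque` plays the role of the limited buffer, so only recent samples are used for training. All names (`dqcd_sketch`, `LinearQ`, `candidates`, `features`, `reward`) and default values are hypothetical.

```python
import random
from collections import deque
from typing import Callable, List, Sequence, Tuple

Action = Tuple[int, ...]  # hypothetical encoding of one joint deployment/caching choice


class LinearQ:
    """Tiny linear stand-in for the DNN: Q(s, a) = w . phi(s, a)."""

    def __init__(self, dim: int, lr: float = 0.05) -> None:
        self.w = [0.0] * dim
        self.lr = lr

    def q(self, x: Sequence[float]) -> float:
        return sum(wi * xi for wi, xi in zip(self.w, x))

    def update(self, x: Sequence[float], target: float) -> None:
        # One SGD step on the squared error (Q(s, a) - target)^2 / 2.
        err = self.q(x) - target
        self.w = [wi - self.lr * err * xi for wi, xi in zip(self.w, x)]


def dqcd_sketch(states: Sequence[object],
                candidates: Callable[[object], List[Action]],
                features: Callable[[object, Action], List[float]],
                reward: Callable[[object, Action], float],
                steps: int, memory_size: int = 64, train_interval: int = 5,
                batch_size: int = 16, epsilon: float = 0.1,
                seed: int = 0) -> Tuple[LinearQ, List[Action]]:
    rng = random.Random(seed)
    s0 = states[0]
    model = LinearQ(len(features(s0, candidates(s0)[0])))
    memory: deque = deque(maxlen=memory_size)   # bounded: old samples are evicted
    chosen: List[Action] = []
    for t in range(steps):
        s = states[t % len(states)]             # features of time block t
        acts = candidates(s)                    # pruned action set for this state
        if rng.random() < epsilon:              # occasional exploration
            a = rng.choice(acts)
        else:                                   # otherwise act greedily on predicted Q
            a = max(acts, key=lambda c: model.q(features(s, c)))
        r = reward(s, a)                        # e.g. negative system energy
        memory.append((features(s, a), r))
        chosen.append(a)
        if (t + 1) % train_interval == 0:       # periodic policy update
            batch = rng.sample(list(memory), min(batch_size, len(memory)))
            for x, y in batch:
                model.update(x, y)              # one-step target: immediate reward
    return model, chosen
```

In a toy problem with two candidate actions whose rewards are -3 and -1 (negative energies), the learned Q-values come to rank the lower-energy action first, so the greedy policy selects it.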

Directly generating the full policy space, whose size grows exponentially with the number of MDs, and searching it for an optimal or sub-optimal solution would require a huge amount of work. This paper therefore prunes the action space used to generate edge caching and task deployment strategies according to container types, task types, edge cache sizes and similar conditions. On the one hand, the computing tasks of MDs have different types, and edge servers host containers of different numbers and types; by matching task types with container types, infeasible entries of the strategy matrix can be pruned. On the other hand, the cache size of each edge server is limited, so the policy matrix can also be pruned according to the maximum cache capacity of each edge server. These pruning operations accelerate both the response of the edge caching and task deployment strategies and the convergence of model training.
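The two pruning rules can be illustrated with a small enumeration sketch, assuming placements are encoded per MD as 0 for local execution or j + 1 for server j, and that the caching candidates for one server are the subsets of type-compatible MDs whose total data size fits its storage. The function names and encoding are hypothetical, and the paper's exact pruning procedure may differ.

```python
from itertools import product
from typing import List, Sequence, Set, Tuple


def pruned_placements(task_types: Sequence[str],
                      server_types: Sequence[Set[str]]) -> List[Tuple[int, ...]]:
    """Enumerate placement vectors, keeping only type-compatible servers.

    Entry i is 0 for local execution or j + 1 for offloading MD i to server j.
    """
    options = []
    for t in task_types:
        allowed = [0] + [j + 1 for j, types in enumerate(server_types) if t in types]
        options.append(allowed)
    return list(product(*options))


def pruned_cache_sets(data_size: Sequence[float], task_types: Sequence[str],
                      types_j: Set[str], capacity_j: float) -> List[Tuple[int, ...]]:
    """All subsets of MDs whose data can be cached on one server (type match + capacity)."""
    eligible = [i for i, t in enumerate(task_types) if t in types_j]
    result = []
    for mask in product([0, 1], repeat=len(eligible)):
        chosen = [i for i, m in zip(eligible, mask) if m]
        if sum(data_size[i] for i in chosen) <= capacity_j:
            result.append(tuple(chosen))
    return result
```

For three MDs and two servers, the unpruned placement space has 3^3 = 27 vectors; with task types ["video", "face", "ar"] and server container types [{"video", "ar"}, {"face"}], only 2 × 2 × 2 = 8 remain.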

The key elements of the model definition of the algorithm are as follows:

- (1) State: the state of the system is a set of parameters describing the MEC system; in each time block it consists of the feature vectors of the tasks and servers that are input to the DNN.

- (2) Action: given the state as input, the DNN chooses one of the pruned deployment strategies described above. The action consists of the task deployment vector and the edge cache deployment.

- (3) Reward: the goal of this paper is to minimize system energy consumption, so the reward in each time block is derived from the objective function (15).
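A compact summary of these three elements, using hypothetical symbols ($s_t$, $\mathbf{x}_t$, $\mathbf{y}_t$, $\mathcal{A}_{\text{pruned}}$, $E_{\text{total}}$) that do not appear in the original text. Taking the reward as the negative total energy is an assumption: it is one common way to turn a minimization objective such as (15) into a reward to be maximized.

```latex
\begin{aligned}
s_t &= \big(\text{task feature vectors},\ \text{server feature vectors}\big) \text{ in time block } t,\\
a_t &= (\mathbf{x}_t, \mathbf{y}_t) \in \mathcal{A}_{\text{pruned}},
  \quad \mathbf{x}_t:\ \text{task deployment},\ \mathbf{y}_t:\ \text{edge cache deployment},\\
r_t &= -E_{\text{total}}(s_t, a_t).
\end{aligned}
```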

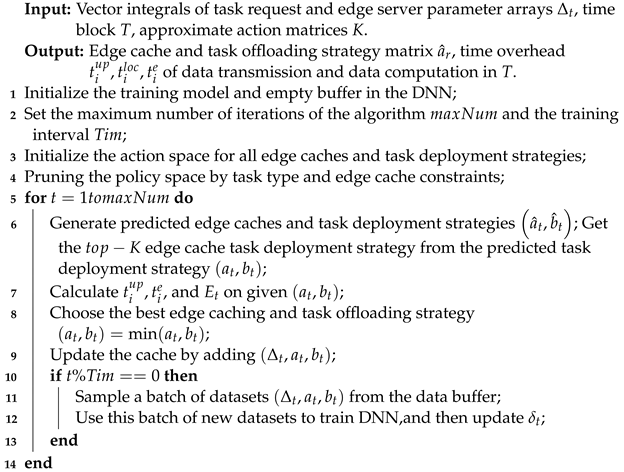

In summary, the DNN continuously learns from the best state-action pairs selected in the current state and generates better edge caching and task deployment strategies through self-iteration. Because the buffer available to the DNN is limited, the DNN learns only from the most recent edge caching and task deployment strategies and then generates new data samples with the latest strategy. This closed-loop self-learning mechanism of reinforcement learning improves the edge caching and task deployment strategies over continuous iterations. The pseudocode of DQCD is shown in Algorithm 1.

Algorithm 1: Energy-efficient edge caching and task deployment based on DQN (DQCD) to solve the problem of minimizing system energy consumption.

5. Simulation Results

In the simulation, unless otherwise specified, we study an MEC system composed of three MEC servers and multiple MDs. The main parameters follow [1], and the remaining parameters are as follows. When an MD transmits to an edge server, the sub-channel bandwidth follows a uniform distribution (in Mbps), and the task transmission power follows a uniform distribution (in W). For the local computing model, the maximum CPU frequency of each MD follows a uniform distribution (in GHz), and the number of CPU cycles required to process 1 bit follows a uniform distribution (in cycles). For the edge computing model, the maximum CPU frequency of each edge server follows a uniform distribution (in GHz), and the number of CPU cycles required to process 1 bit follows a uniform distribution (in cycles). We also fix the maximum number of iterations, the training interval and the length of each time block (in seconds). The comparison algorithms are as follows: the GACD algorithm applies a genetic algorithm to perform a global search over the edge cache and task deployment strategy matrices; the DPCD algorithm applies dynamic programming to perform a heuristic search over the same matrices; and the RandCD algorithm uses random edge caching and task deployment strategies.

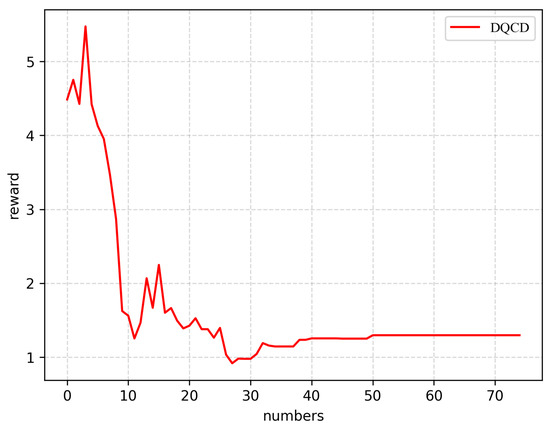

5.1. Algorithm Convergence Verification

During training of the DQCD algorithm, the deep neural network model is updated iteratively, and the test data set is fed into the current model to obtain the deployment strategy. Figure 2 shows that the DQCD algorithm eventually converges, reaching a relatively stable sub-optimal solution at around the 50th group.

Figure 2.

DQCD training convergence process.

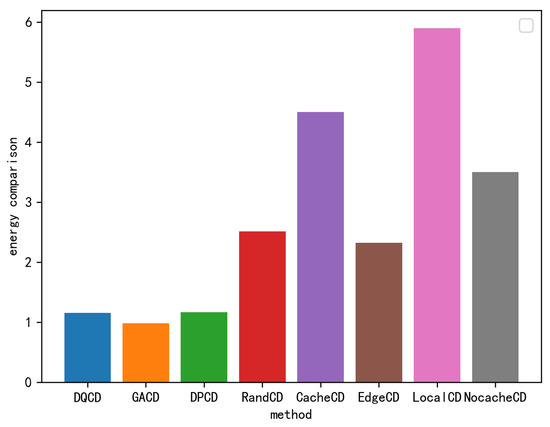

5.2. Algorithm Feasibility Verification

Figure 3 compares the energy consumption of DQCD with that of the GACD, DPCD and RandCD algorithms under a delay constraint of T = 1.0 in an environment with 3 edge servers and 10 MDs. To verify the necessity of considering both computation offloading and edge caching, DQCD is also compared with variants that only consider caching (CacheCD), only consider offloading to edge computing (EdgeCD), only consider local computing (LocalCD), and do not consider caching (NocacheCD). The figure shows that DQCD reaches a sub-optimal solution and consumes clearly less energy than CacheCD, EdgeCD, LocalCD and NocacheCD, which demonstrates the effectiveness of the proposed joint edge caching and task deployment algorithm in reducing energy consumption.

Figure 3.

Energy consumption comparison.

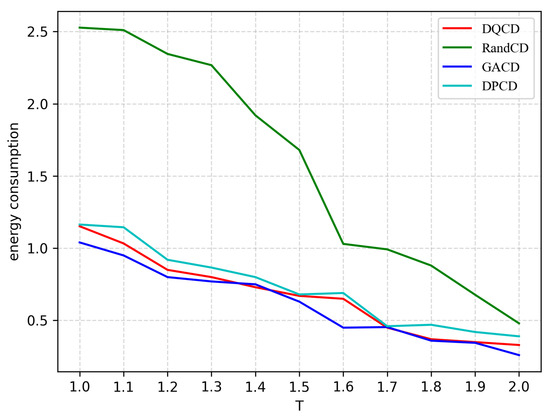

Figure 4 compares the energy consumption of the algorithms as T increases from 1.0 to 2.0 in an environment with 3 edge servers and 10 MDs. Compared with the GACD algorithm, DQCD achieves sub-optimal or even optimal task deployment strategies. Compared with DPCD, DQCD reduces energy consumption, and compared with RandCD, DQCD substantially reduces overall energy consumption.

Figure 4.

Comparison of energy consumption.

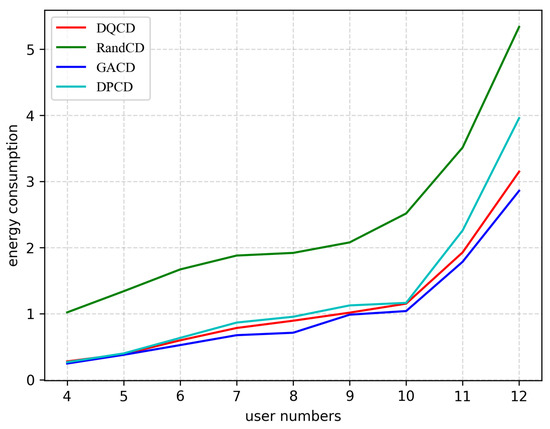

Figure 5 compares the energy consumption and algorithm execution time with 3 edge servers and T = 1.0 as the number of MDs increases from 4 to 12. In terms of energy consumption, DQCD achieves a sub-optimal task deployment strategy compared with GACD, slightly reduces energy consumption compared with DPCD, and greatly reduces overall energy consumption compared with RandCD. In terms of execution time, DQCD is slightly slower than RandCD but much faster than GACD and DPCD, and its advantage becomes more pronounced as the number of users increases.

Figure 5.

Comparison of execution time.

The above simulation results show that the proposed DQCD algorithm achieves performance close to the optimal solution for the task deployment problem in the multi-MD multi-server MEC system while providing a rapid policy response.

6. Conclusions

This paper studies the problem of minimizing system energy consumption in an MEC system composed of multiple MDs and multiple servers, under constraints on container type, computing capability, delay, task offloading and deployment. Task offloading, task deployment and edge caching strategies are all discrete variables, so the energy minimization problem is an NP-hard combinatorial optimization problem with multiple discrete variables. To minimize energy consumption and achieve a fast policy response, this paper proposes a deep Q-learning-based joint optimization algorithm for edge caching and task deployment (DQCD), which prunes the policy space to accelerate model convergence. A deep neural network model that approaches the optimal solution is obtained through iterative training. Simulation results show that, compared with existing baseline algorithms, DQCD not only achieves near-optimal performance but also effectively reduces the execution delay.

The scenarios studied in this paper are currently small in scale because of limited computing resources. Larger-scale scenarios with more edge servers and users would require more powerful computing resources for model training, and we plan to obtain such resources so that larger-scale scenarios can be evaluated. This is the next step of our work.

Author Contributions

Conceptualization, Y.L.; Methodology, Y.L.; Software, P.W.; Resources, C.D.; Data curation, P.W.; Writing—original draft, P.W.; Writing—review & editing, C.D. and Y.L.; Supervision, L.M.; Project administration, L.M. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

The raw data are available on request.

Acknowledgments

We would like to thank all participants.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Xu, J.; Chen, L.; Zhou, P. Joint service caching and task offloading for mobile edge computing in dense networks. In Proceedings of the IEEE INFOCOM 2018-IEEE Conference on Computer Communications, Honolulu, HI, USA, 16–19 April 2018; pp. 207–215. [Google Scholar]

- Ananthanarayanan, G.; Bahl, P.; Bodík, P.; Chintalapudi, K.; Philipose, M.; Ravindranath, L.; Sinha, S. Real-time video analytics: The killer app for edge computing. Computer 2017, 50, 58–67. [Google Scholar] [CrossRef]

- Liu, Z.; Wang, X.; Wang, D.; Lan, Y.; Hou, J. Mobility-aware task offloading and migration schemes in scns with mobile edge computing. In Proceedings of the 2019 IEEE Wireless Communications and Networking Conference (WCNC), Marrakesh, Morocco, 15–18 April 2019; pp. 1–6. [Google Scholar]

- Aruna, K.; Pradeep, G. Performance and scalability improvement using iot-based edge computing container technologies. SN Comput. Sci. 2020, 1, 91. [Google Scholar] [CrossRef]

- Seo, Y.-J.; Lee, J.; Hwang, J.; Niyato, D.; Park, H.-S.; Choi, J.K. A novel joint mobile cache and power management scheme for energy-efficient mobile augmented reality service in mobile edge computing. IEEE Wirel. Commun. Lett. 2021, 10, 1061–1065. [Google Scholar] [CrossRef]

- Ben-Ameur, A.; Araldo, A.; Chahed, T. Cache allocation in multi-tenant edge computing via online reinforcement learning. arXiv 2022, arXiv:2201.09833.

- Lei, L.; Xu, H.; Xiong, X.; Zheng, K.; Xiang, W. Joint computation offloading and multiuser scheduling using approximate dynamic programming in NB-IoT edge computing system. IEEE Internet Things J. 2019, 6, 5345–5362.

- Chen, X.; Zhang, H.; Wu, C.; Mao, S.; Ji, Y.; Bennis, M. Optimized computation offloading performance in virtual edge computing systems via deep reinforcement learning. IEEE Internet Things J. 2018, 6, 4005–4018.

- Peng, Z.; Huang, J.; Wang, H.; Wang, S.; Chu, X.; Zhang, X.; Chen, L.; Huang, X.; Fu, X.; Guo, Y.; et al. BU-Trace: A permissionless mobile system for privacy-preserving intelligent contact tracing. In Proceedings of the International Conference on Database Systems for Advanced Applications, Taipei, Taiwan, 11–14 April 2021; pp. 381–397.

- Peng, Z.; Xiao, B.; Yao, Y.; Guan, J.; Yang, F. U-Safety: Urban safety analysis in a smart city. In Proceedings of the 2017 IEEE International Conference on Communications (ICC), Paris, France, 21–25 May 2017; pp. 1–6.

- Jeong, J.P.; Yeon, S.; Kim, T.; Lee, H.; Kim, S.M.; Kim, S.-C. SALA: Smartphone-assisted localization algorithm for positioning indoor IoT devices. Wirel. Netw. 2018, 24, 27–47.

- Morabito, R. A performance evaluation of container technologies on Internet of Things devices. In Proceedings of the 2016 IEEE Conference on Computer Communications Workshops (INFOCOM WKSHPS), San Francisco, CA, USA, 10–14 April 2016; pp. 999–1000.

- Zeng, H.; Wang, B.; Deng, W.; Zhang, W. Measurement and evaluation for Docker container networking. In Proceedings of the 2017 International Conference on Cyber-Enabled Distributed Computing and Knowledge Discovery (CyberC), Nanjing, China, 12–14 October 2017; pp. 105–108.

- Mach, P.; Becvar, Z. Mobile edge computing: A survey on architecture and computation offloading. IEEE Commun. Surv. Tutor. 2017, 19, 1628–1656.

- Li, J.; Peng, Z.; Xiao, B. Smartphone-assisted smooth live video broadcast on wearable cameras. In Proceedings of the 2016 IEEE/ACM 24th International Symposium on Quality of Service (IWQoS), Beijing, China, 20–21 June 2016; pp. 1–6.

- Cao, K.; Liu, Y.; Meng, G.; Sun, Q. An overview on edge computing research. IEEE Access 2020, 8, 85714–85728.

- Hesham, R.; Soltan, A.; Madian, A. Energy harvesting schemes for wearable devices. AEU-Int. J. Electron. Commun. 2021, 138, 153888.

- Li, J.; Peng, Z.; Gao, S.; Xiao, B.; Chan, H. Smartphone-assisted energy efficient data communication for wearable devices. Comput. Commun. 2017, 105, 33–43.

- He, Y.; Yu, F.R.; Zhao, N.; Leung, V.C.; Yin, H. Software-defined networks with mobile edge computing and caching for smart cities: A big data deep reinforcement learning approach. IEEE Commun. Mag. 2017, 55, 31–37.

- Wang, R.; Li, M.; Peng, L.; Hu, Y.; Hassan, M.M.; Alelaiwi, A. Cognitive multi-agent empowering mobile edge computing for resource caching and collaboration. Future Gener. Comput. Syst. 2020, 102, 66–74.

- Bi, S.; Zhang, Y.J. Computation rate maximization for wireless powered mobile-edge computing with binary computation offloading. IEEE Trans. Wirel. Commun. 2018, 17, 4177–4190.

- Tran, T.X.; Pompili, D. Joint task offloading and resource allocation for multi-server mobile-edge computing networks. IEEE Trans. Veh. Technol. 2018, 68, 856–868.

- Guo, S.; Xiao, B.; Yang, Y.; Yang, Y. Energy-efficient dynamic offloading and resource scheduling in mobile cloud computing. In Proceedings of the IEEE INFOCOM 2016-The 35th Annual IEEE International Conference on Computer Communications, San Francisco, CA, USA, 10–14 April 2016; pp. 1–9.

- Dinh, T.Q.; Tang, J.; La, Q.D.; Quek, T.Q. Offloading in mobile edge computing: Task allocation and computational frequency scaling. IEEE Trans. Commun. 2017, 65, 3571–3584.

- Mnih, V.; Kavukcuoglu, K.; Silver, D.; Rusu, A.A.; Veness, J.; Bellemare, M.G.; Graves, A.; Riedmiller, M.; Fidjeland, A.K.; Ostrovski, G.; et al. Human-level control through deep reinforcement learning. Nature 2015, 518, 529–533.

- Yao, Y.; Peng, Z.; Xiao, B.; Guan, J. An efficient learning-based approach to multi-objective route planning in a smart city. In Proceedings of the 2017 IEEE International Conference on Communications (ICC), Paris, France, 21–25 May 2017; pp. 1–6.

- Jiang, Y.; Xiang, Y.; Pan, X.; Li, K.; Lv, Q.; Dick, R.P.; Shang, L.; Hannigan, M. Hallway based automatic indoor floorplan construction using room fingerprints. In Proceedings of the 2013 ACM International Joint Conference on Pervasive and Ubiquitous Computing, Zurich, Switzerland, 8–12 September 2013; pp. 315–324.

- Peng, Z.; Gao, S.; Xiao, B.; Wei, G.; Guo, S.; Yang, Y. Indoor floor plan construction through sensing data collected from smartphones. IEEE Internet Things J. 2018, 5, 4351–4364.

- Shin, H.; Chon, Y.; Cha, H. Unsupervised construction of an indoor floor plan using a smartphone. IEEE Trans. Syst. Man Cybern. Part C (Appl. Rev.) 2011, 42, 889–898.

- LeCun, Y.; Bengio, Y.; Hinton, G. Deep learning. Nature 2015, 521, 436–444.

- Min, M.; Xiao, L.; Chen, Y.; Cheng, P.; Wu, D.; Zhuang, W. Learning-based computation offloading for IoT devices with energy harvesting. IEEE Trans. Veh. Technol. 2019, 68, 1930–1941.

- Chen, X.; Zhang, H.; Wu, C.; Mao, S.; Ji, Y.; Bennis, M. Performance optimization in mobile-edge computing via deep reinforcement learning. In Proceedings of the 2018 IEEE 88th Vehicular Technology Conference (VTC-Fall), Chicago, IL, USA, 27–30 August 2018; pp. 1–6.

- Lillicrap, T.P.; Hunt, J.J.; Pritzel, A.; Heess, N.M.O.; Erez, T.; Tassa, Y.; Silver, D.; Wierstra, D.P. Continuous Control with Deep Reinforcement Learning. U.S. Patent 10,776,692, 15 September 2020.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).