2. Related Works

This section reviews routing protocols for UWSNs and analyzes their advantages and disadvantages.

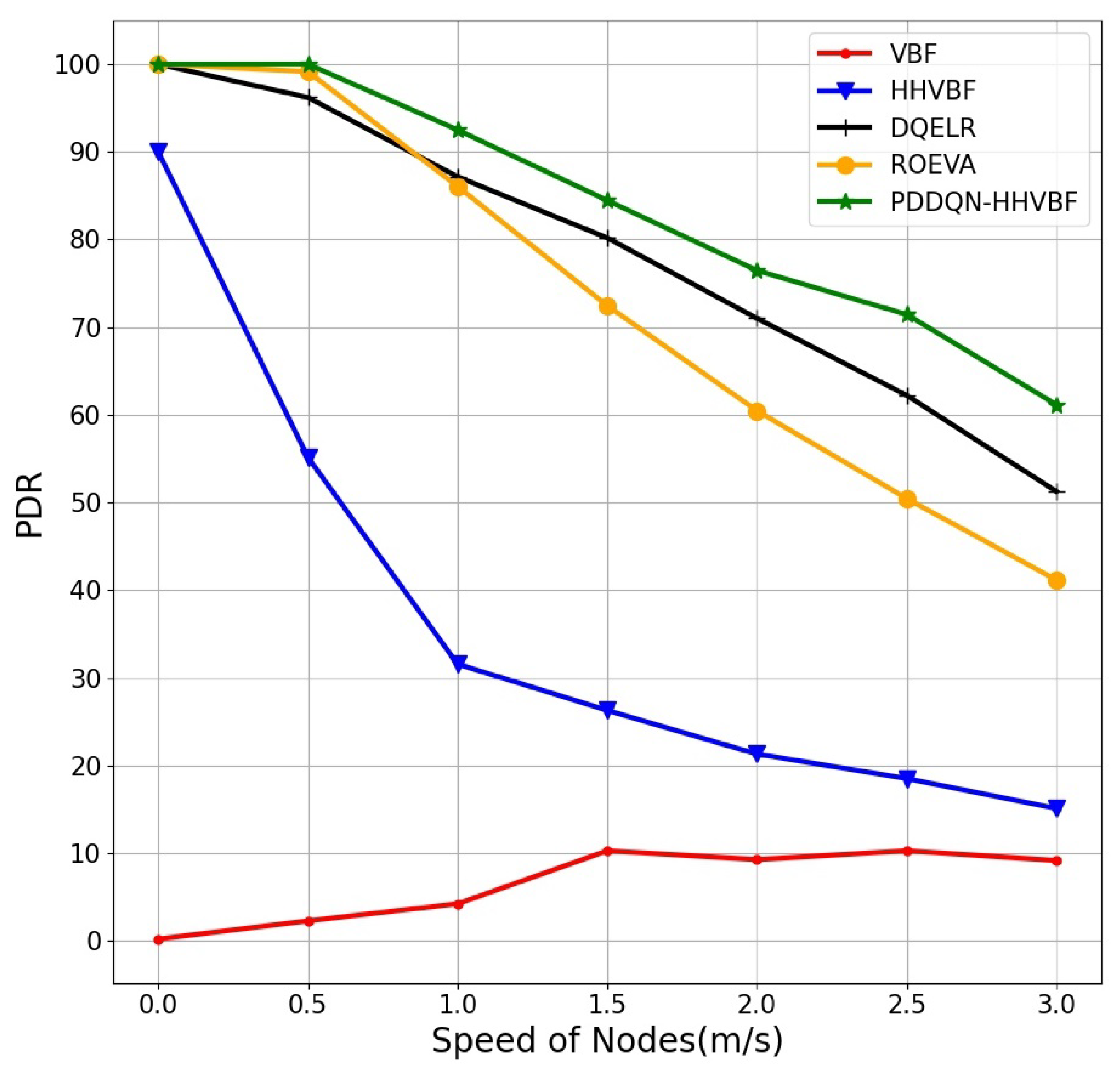

There are some location-based routing protocols, such as VBF (Vector-Based Forwarding) [

12], HHVBF (Hop-by-Hop Vector-Based Forwarding) [

13] and AHHVBF (Adaptive Hop-by-Hop Vector-Based Forwarding) [

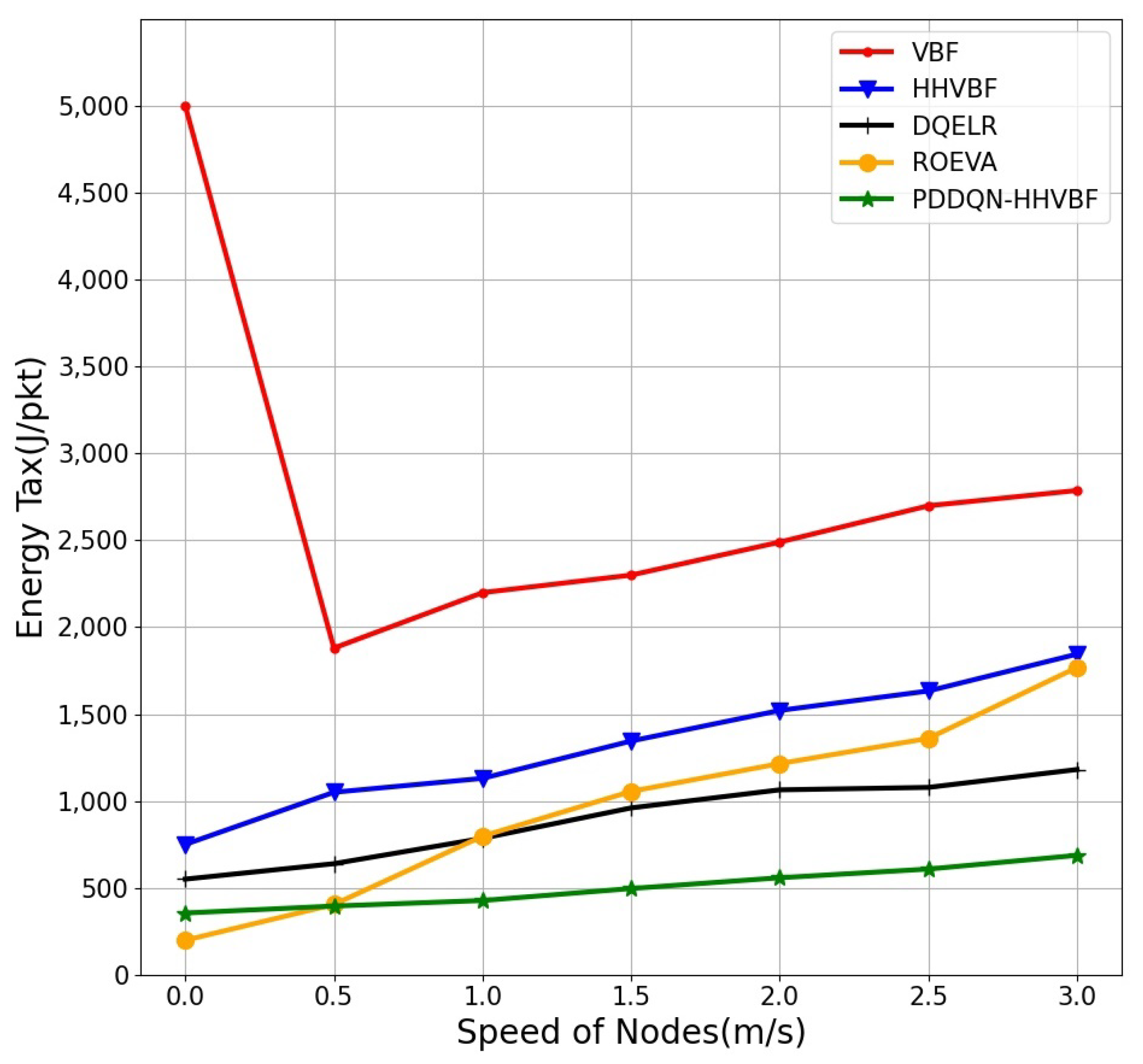

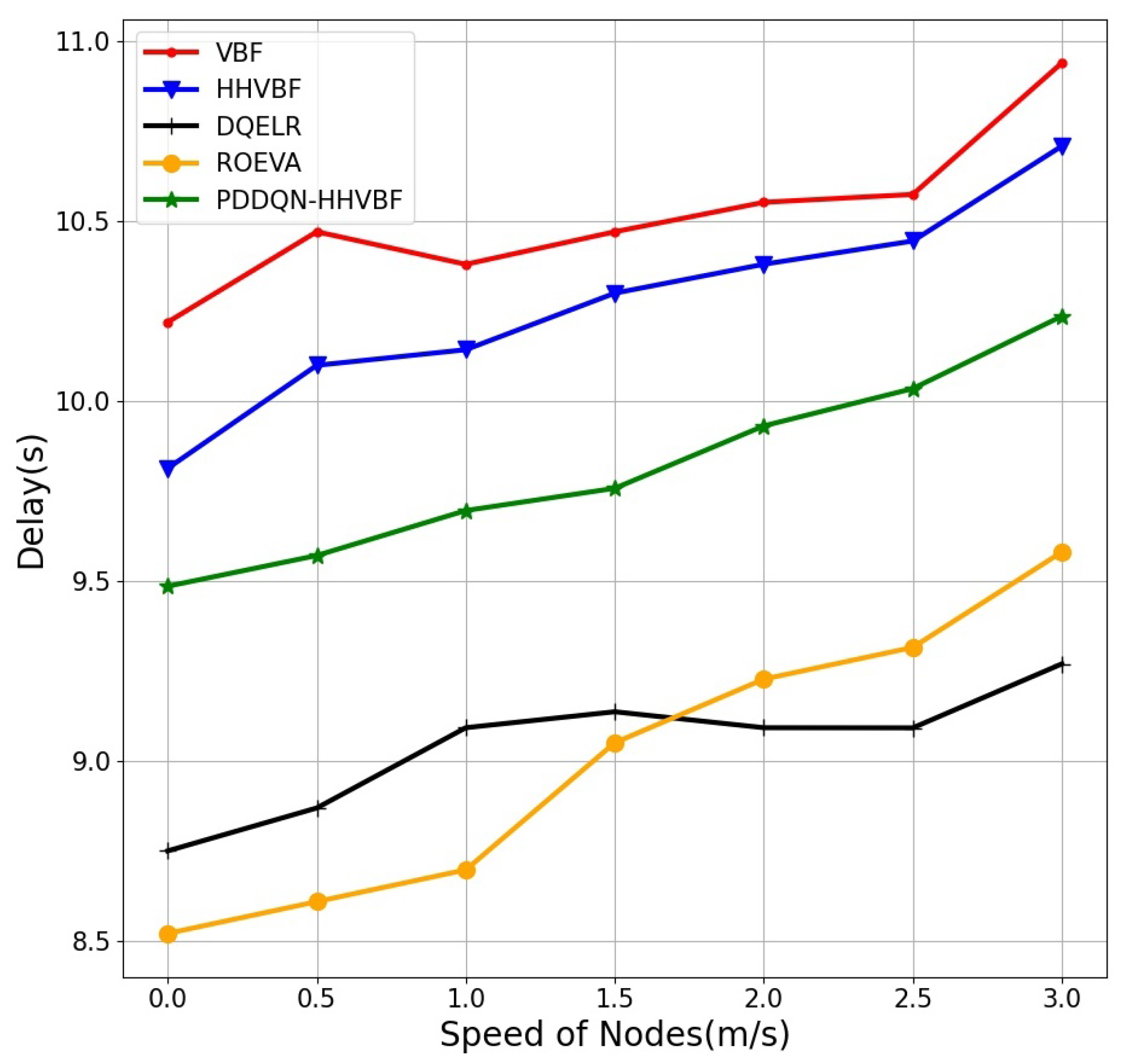

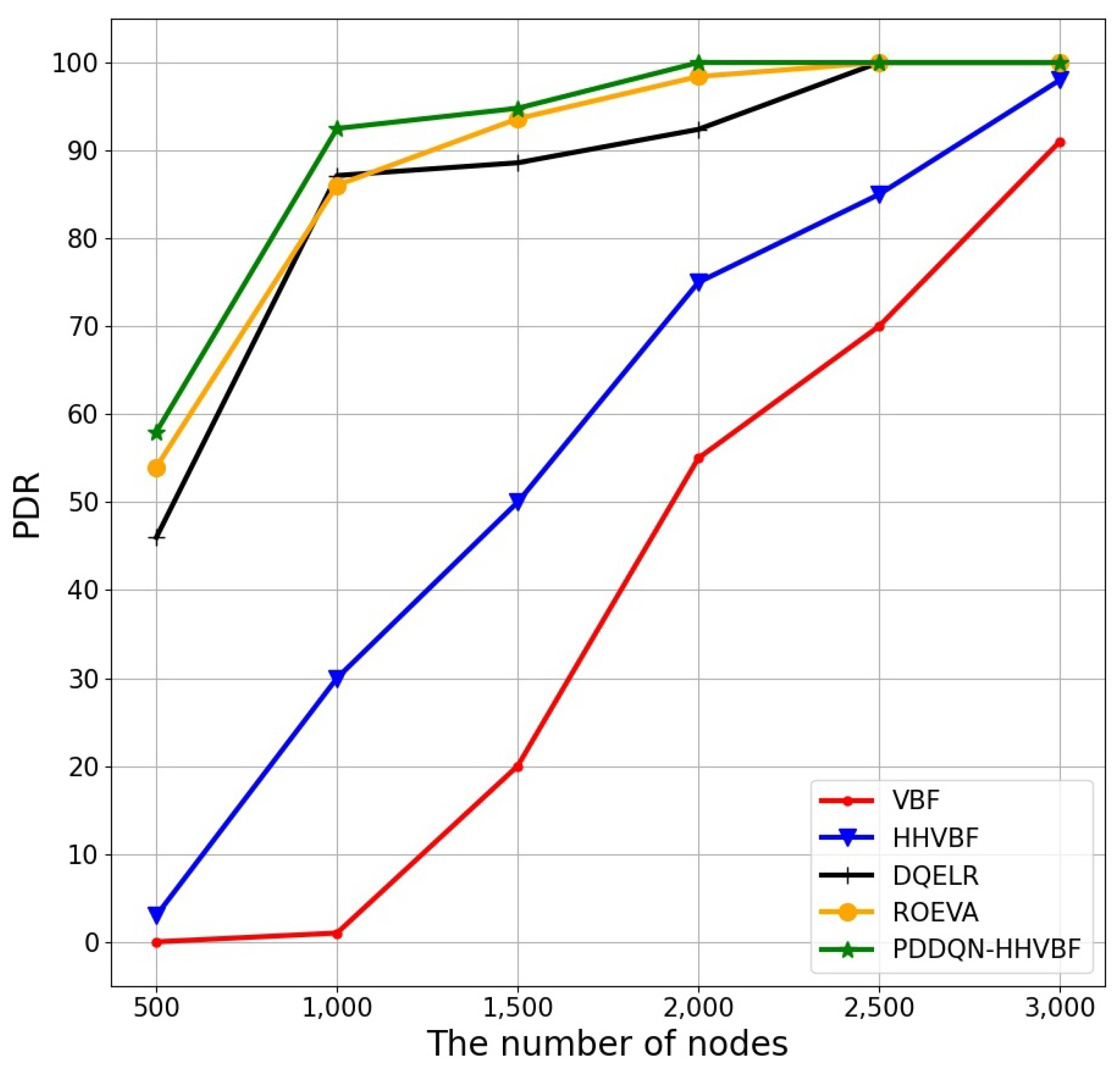

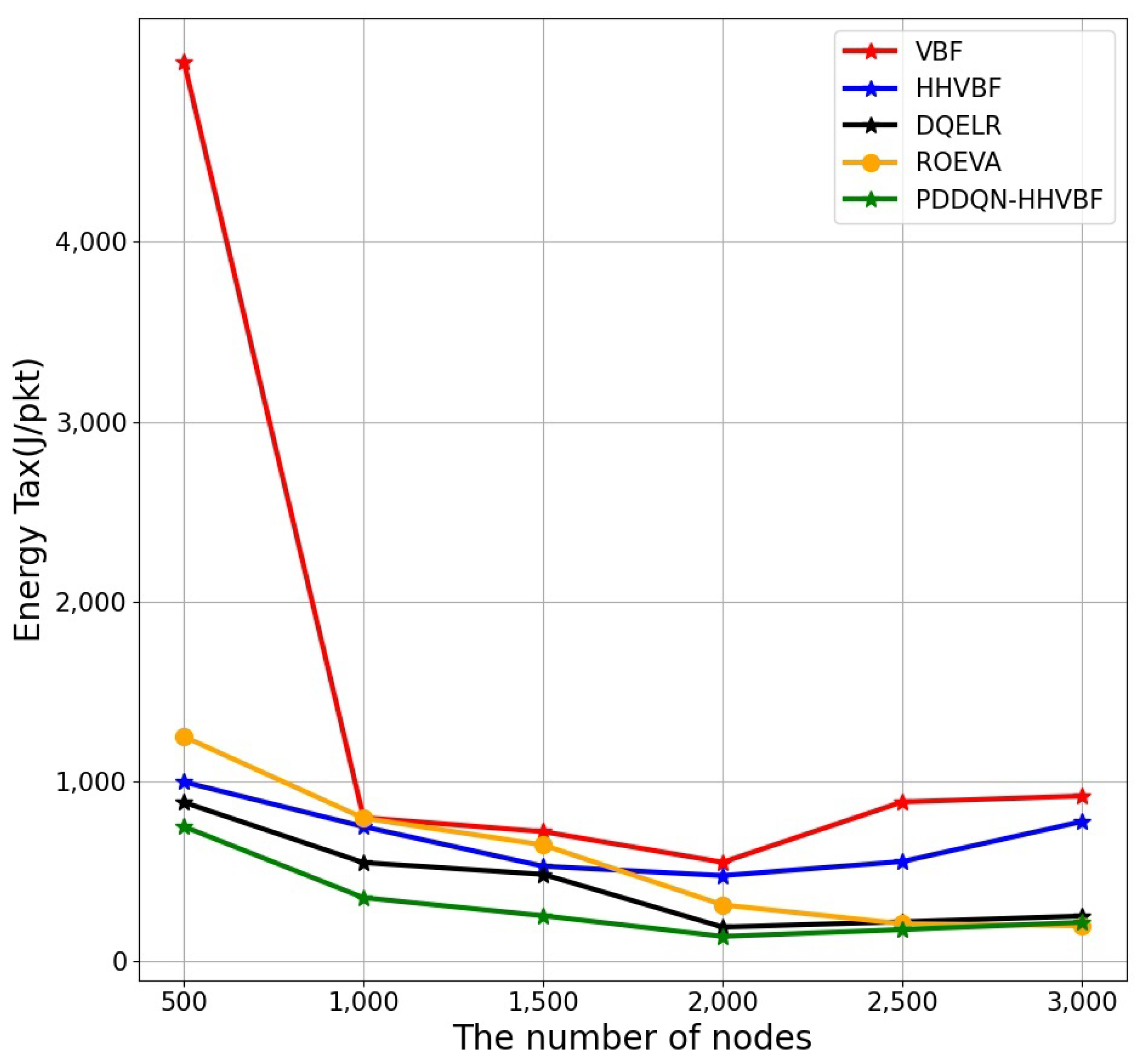

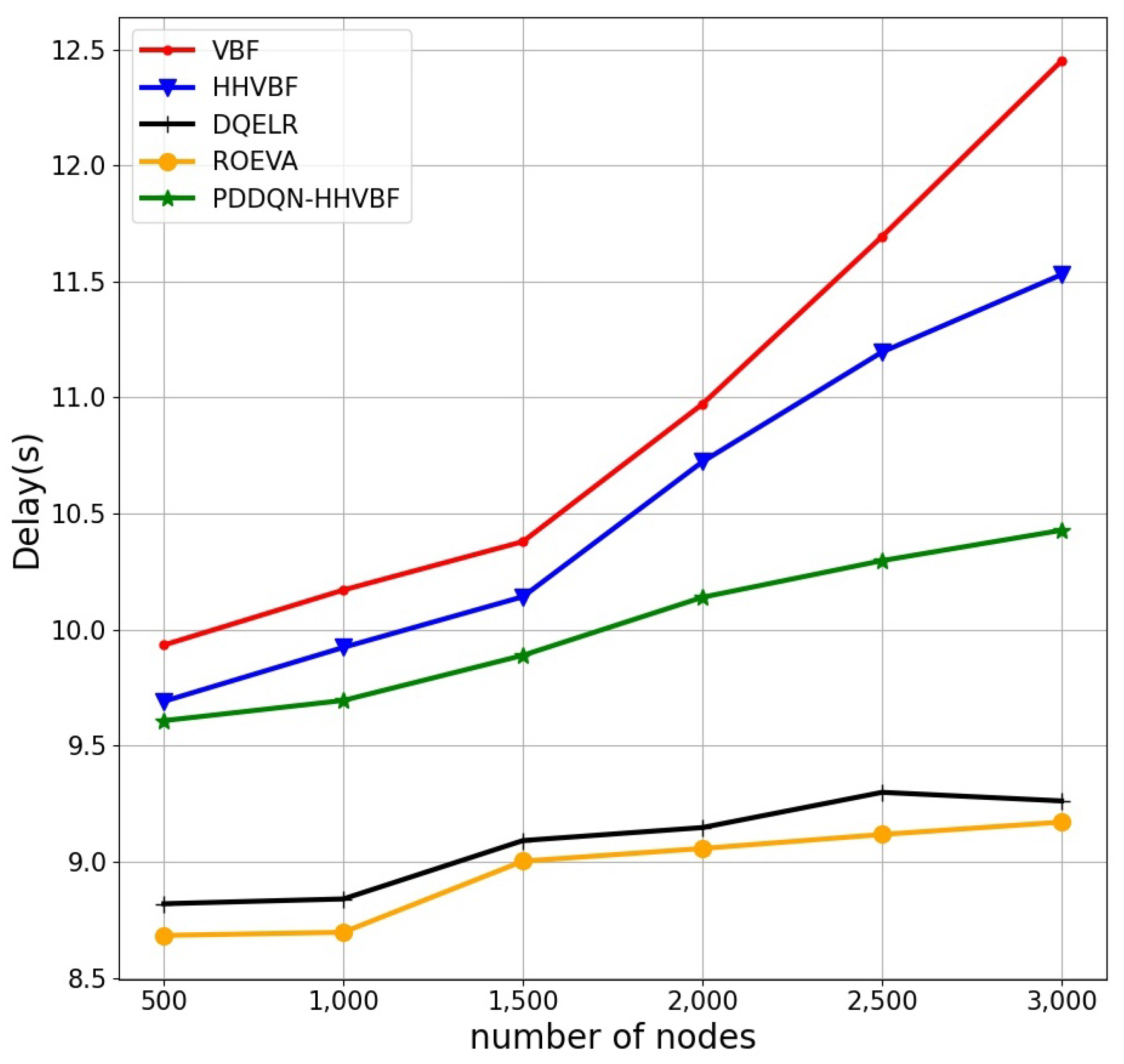

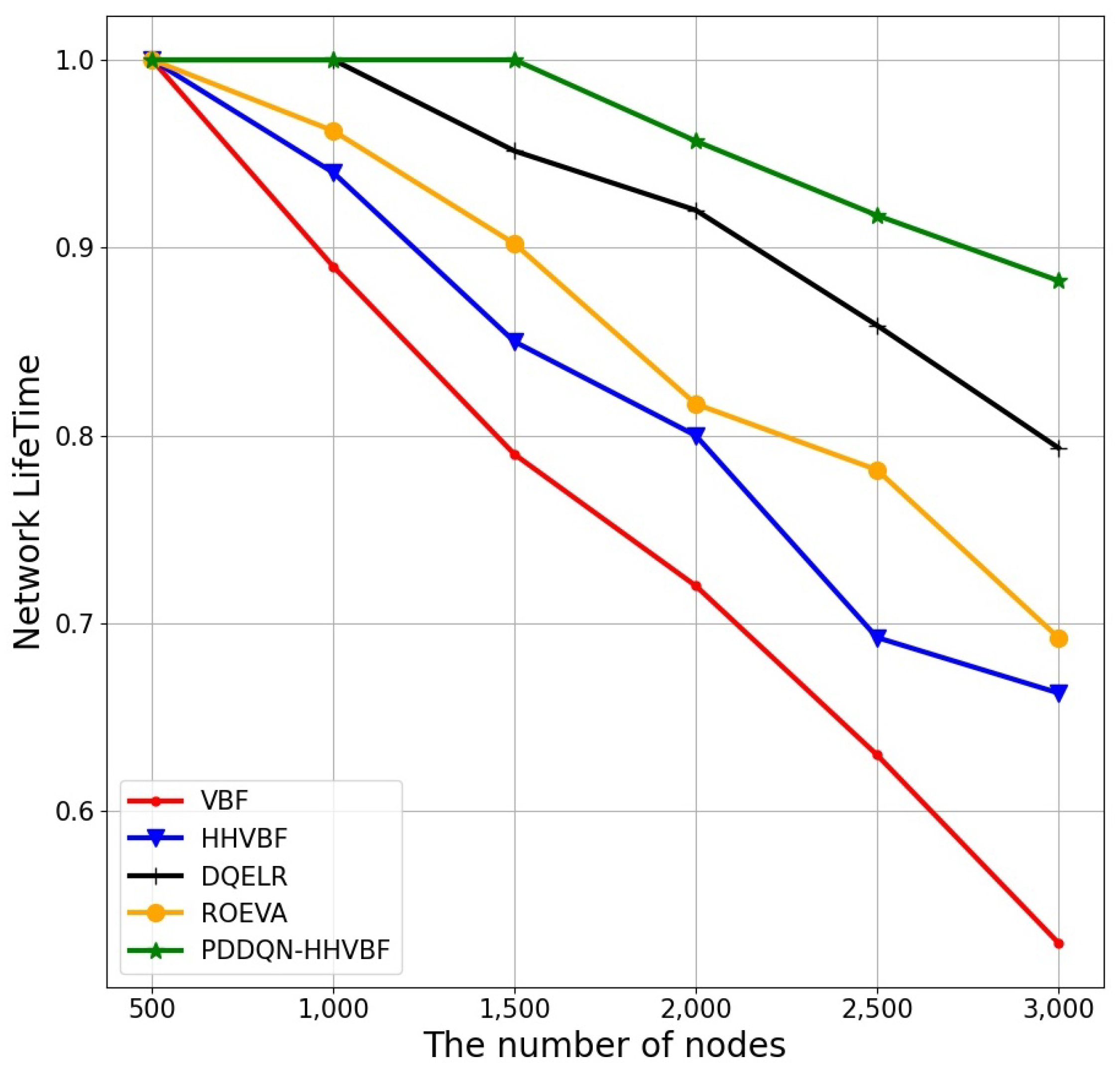

14]. VBF utilizes location information to improve the energy efficiency of dynamic networks. In VBF, data packets are delivered within the range of the routing pipeline, and nodes within this range will be used more frequently than nodes outside this range, which will lead to unbalanced energy consumption and shorten network life. In order to solve the problem of VBF, HHVBF, and AHHVBF are proposed, respectively. The HH-VBF protocol and the AHHVBF protocol also use the idea of routing pipeline. Unlike VBF, both HHVBF, and AHHVBF do not use a fixed pipeline to transmit data, but establish a new pipeline after each data transmission. In this way, compared with VBF, HHVBF, and AHHVBF protocols can still transmit data efficiently in areas with low node density, so they have higher packet delivery ratio, and each node can be used evenly, resulting in longer network lifetime. However, using the two protocol, a large network with a large number of nodes has a high data collision rate and low network lifetime.

There are also depth-based routing protocols. In [15], Yan et al. proposed the depth-based routing (DBR) protocol, which effectively reduces energy consumption and the number of collisions. DBR uses a greedy algorithm to deliver data packets from the sender to the receiver according to depth information. However, if there is no surface receiver near a node, the data packets carried by that node are discarded. In addition, data packets can only be transmitted bottom-up, which cannot meet the requirement of surface receivers to transmit data downward. Based on DBR, the fuzzy depth-based routing protocol (FDBR) [16] comprehensively considers hop count, depth and energy information to select forwarding nodes, thereby improving the energy efficiency and end-to-end delay of UWSNs. High latency is also a challenge for underwater acoustic communication because of the slow propagation speed of acoustic signals. Diao et al. [17] proposed the EE-DBR protocol, which no longer uses the depth threshold of DBR to narrow down the set of candidate nodes; instead, it uses ToA (Time of Arrival) technology [18] to remove a fixed area and thereby narrow down the candidate forwarding set. However, when nodes are deployed sparsely, the algorithm cannot effectively solve the routing hole problem. Other depth-based routing protocols include VAPR (Void-Aware Pressure Routing) [19], IVAR (Inherently Void Avoidance Routing) [20], and OVAR (Opportunistic Void Avoidance Routing) [21]. These methods use hop count, distance, forwarding direction and other information to keep packets away from routing voids during forwarding. However, to guarantee the data transfer rate, these methods sacrifice real-time performance, so the end-to-end delay is high. Moreover, a state change in some nodes also leads to state changes in many other nodes, resulting in higher network overhead.

Although traditional routing protocols have improved network performance, the underwater environment is relatively harsh, and routing protocols must still deal with many constraints [22]. Multi-Agent Reinforcement Learning (MARL) [23] has been successfully applied in multiple domains involving distributed decision making, and a fully distributed underwater routing problem can be regarded as a cooperative multi-agent system. In [24], an adaptive, energy-efficient, and lifetime-aware routing protocol based on Q-learning (QELAR) is proposed, in which each node uses Q-learning to compute its own routing decision. QELAR considers both the energy consumption of sensor nodes and the residual energy distribution among adjacent nodes when designing the Q-value function, thereby optimizing the total energy consumption and the network lifetime. However, the simulation model adopted by QELAR assumes fixed nodes, which does not suit scenarios where ocean currents cause nodes to drift. To improve real-time performance, an underwater multistage routing protocol based on Q-learning (MURAO) is proposed in [25]. MURAO uses clustering to divide the sensor nodes into clusters of multiple sensors. The nodes in a cluster aggregate the collected data at the cluster head, and the cluster head routes the data to the sink node over multiple hops. MURAO allows multiple clusters to route data to sink nodes in parallel, which improves real-time performance but increases redundant data in the network and may cause data collisions. In [3], DQELR, a DQN-based routing decision protocol with adaptive energy consumption and delay, is proposed; it effectively prolongs the network lifetime of UWSNs. In addition, the protocol adaptively selects the optimal node as the relay node according to the energy and depth state in the communication phase. However, this protocol uses DQN, which converges slowly, does not consider the routing hole problem in sparse networks, and is applicable only to a single scenario. Ref. [26] proposed a reinforcement-learning-based routing congestion avoidance (RCAR) protocol. To improve energy efficiency and reduce end-to-end latency, RCAR makes routing decisions based on congestion and energy. However, it does not consider void holes and cannot guarantee transmission reliability. Moreover, Zhou et al. [27] designed a Q-learning-based localization-free routing protocol (QLFR) for UWSNs, which focuses on the characteristics of high energy consumption and high latency and aims to prolong the network lifetime as well as reduce the end-to-end delay. However, the protocol does not consider the problem that nodes with large Q values become routing holes under the influence of ocean current movement. Chen et al. [28] proposed a Q-learning-based multi-hop cooperative routing protocol for UWSNs named QMCR, which automatically chooses the nodes with the maximum Q-value as forwarders based on distance information. To seek optimal routing policies, the Q-value is calculated by jointly considering the residual energy and depth information of sensor nodes throughout the routing process. In addition, they define two cost functions (a depth-related cost and an energy-related cost) for Q-learning in order to reduce delay and extend the network lifetime. The algorithm considers the overall energy consumption of the system but not the energy consumption of individual nodes. Therefore, frequently used relay nodes may deplete their energy early, which shortens the network lifetime. To address routing holes, Zhang et al. [1] proposed RLOR, a routing algorithm based on Q-learning that combines the advantages of opportunistic routing and reinforcement learning. RLOR is a distributed routing approach that comprehensively considers the peripheral status of nodes to select appropriate relay nodes. Additionally, RLOR employs a recovery mechanism that enables packets to bypass void areas efficiently and continue forwarding, which improves the data delivery rate in some sparse networks. However, this protocol performs well only in sparse networks. For large-scale UWSNs, it does not effectively reduce data collisions, and its throughput and energy consumption performance are poor. Zhu et al. [29] developed a reinforcement-learning-based opportunistic routing protocol to enhance transmission reliability and reduce energy consumption. A reward function was developed to seek optimal routing rules. Before a data packet is forwarded, a two-hop availability check is performed, which avoids routing holes and identifies trap nodes. However, this protocol focuses only on static nodes; when nodes move under the influence of ocean currents, it performs worse.

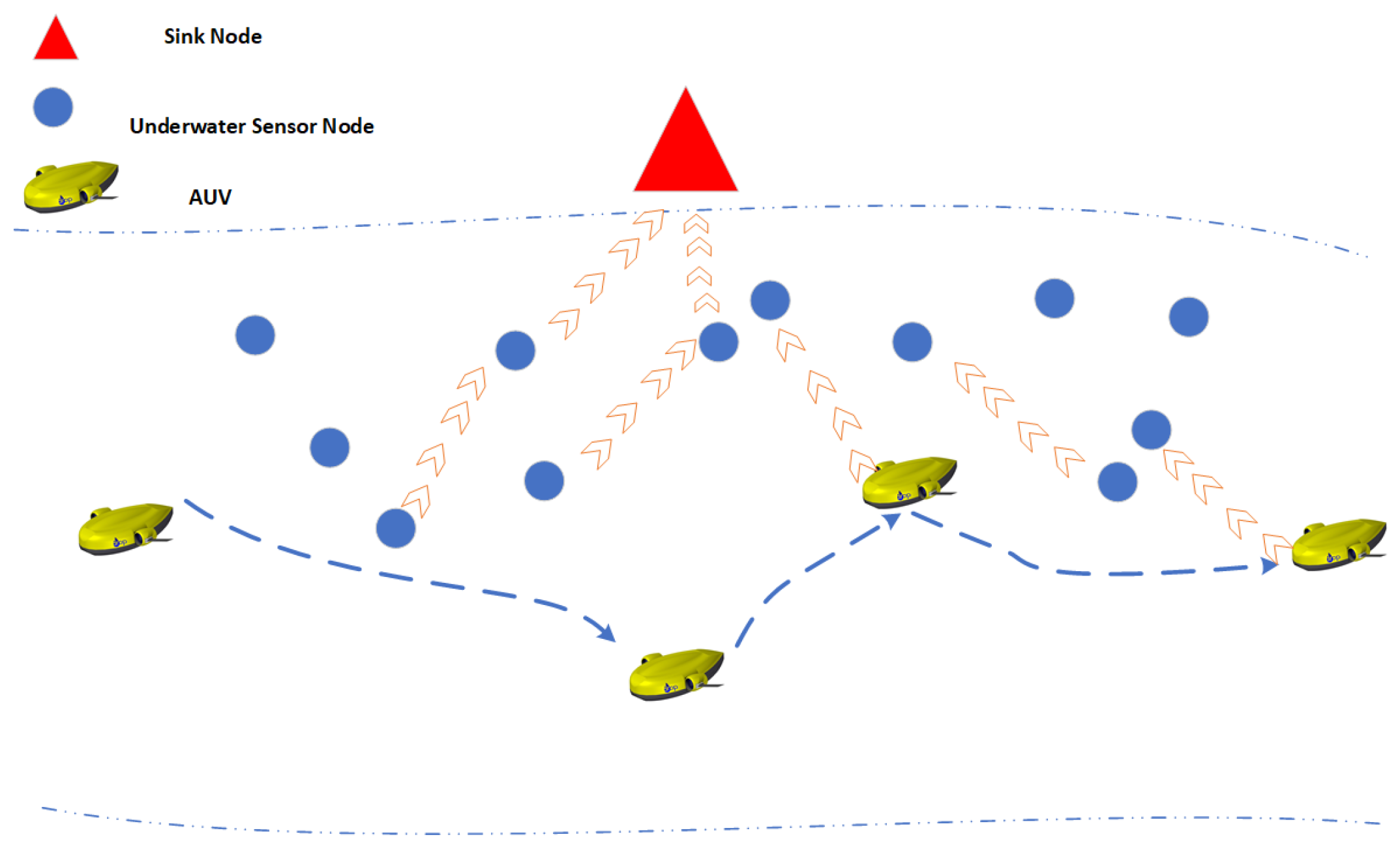

Although the above methods can reduce the delay, packet loss ratio and energy consumption of UWSNs to a certain extent, there are no good research results for M-UWSNs in which mobile nodes act as source nodes. Moreover, many RL-based underwater routing protocols focus on finding paths with few hops rather than paths with short distances, even though the long communication distance in the ocean is a significant cause of packet collisions and energy loss underwater. Thus, the problems of short network lifetime, high energy consumption, and low packet delivery ratio in M-UWSNs have not been well solved. To address these problems, this paper proposes the PDDQN-HHVBF protocol, which aims to find the optimal relay node so as to maximize the packet delivery ratio, optimize energy consumption and prolong the network lifetime. In addition, for the case where the AUV cannot find a suitable relay node during forwarding, this paper proposes the “Store-Carry-Forward” mechanism. In this way, the AUV avoids wasting a lot of energy on searching for relay nodes or sending the same data packet repeatedly.

3. Reinforcement Learning

The PDDQN-HHVBF protocol uses reinforcement learning to find the optimal relay node. This section introduces the concept of reinforcement learning and the related algorithms, namely Q-learning, DQN, and DDQN.

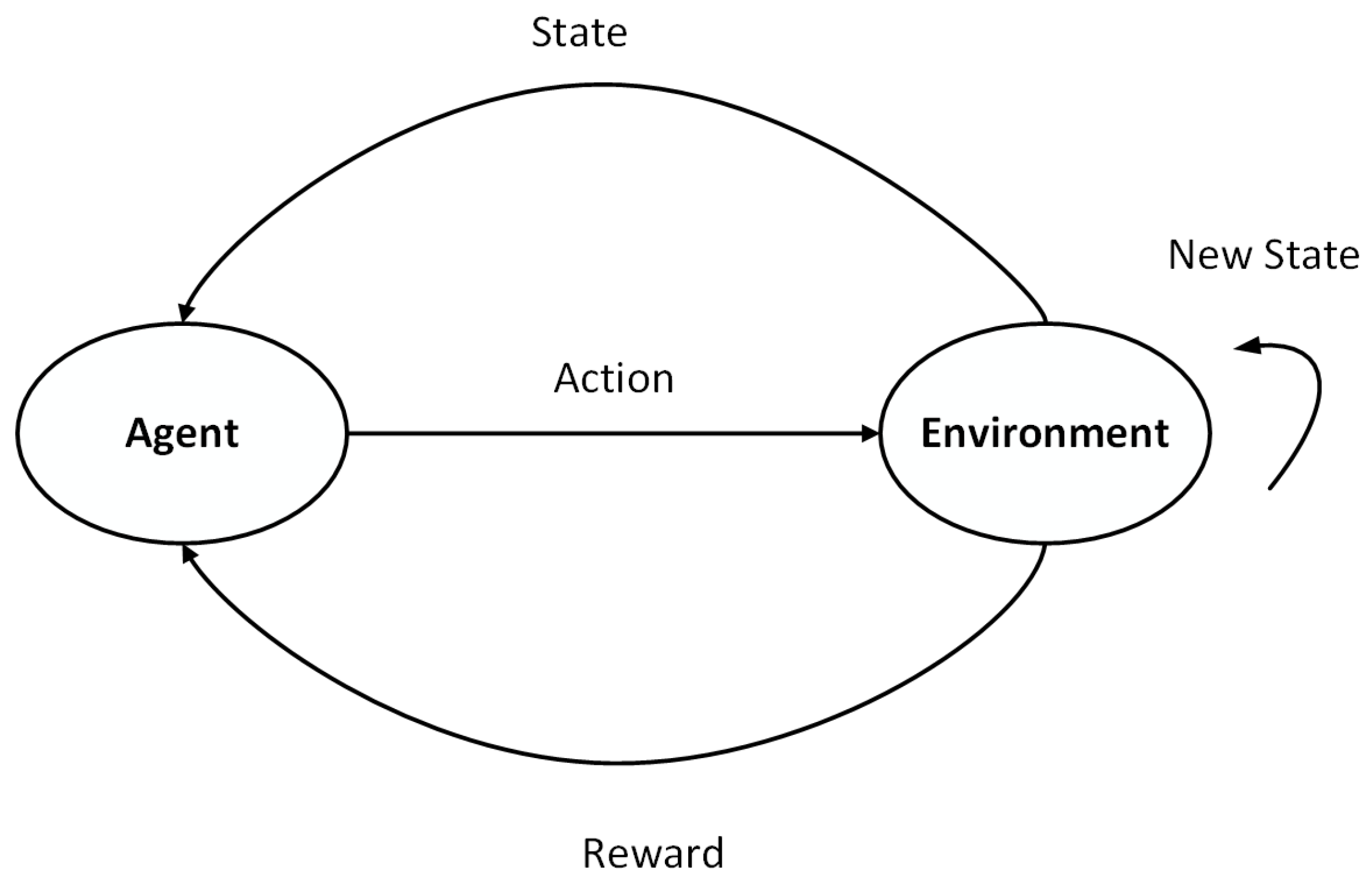

Reinforcement Learning (RL) is one of the paradigms and methodologies of machine learning. It describes and solves the problem of an agent learning, through interaction with the environment, a strategy that maximizes returns or achieves specific goals. Most RL methods are based on estimating value functions of states or of state–action pairs, which assess how good it is for an agent to be in a given state in the environment, or how well it performs a task [3]. Usually, the samples in RL form a time series, and the RL problem is modeled as a Markov decision process (MDP). The MDP is the theoretical basis for the use of RL in this paper. The schematic diagram of the MDP is shown in Figure 2.

Q-learning [3] is an important RL algorithm that is widely used in various agent systems. It is guaranteed to converge under certain conditions and has become the most widely used RL algorithm. When using Q-learning, the agent selects an action in the current state of the environment. After the environment receives the action, it transitions to a new state and feeds back a reward signal to the agent according to the changed state. The agent then chooses its next action according to the received reward signal. In general, the state parameters are random, and the action selected by the agent according to the random state is also random. Therefore, the set of state–action pairs, together with the rules for changing the state, constitutes a Markov decision process. During this process, a sequence of states, actions and rewards is formed. The expansion of this sequence is shown in Formula (1):

$$s_0, a_0, r_0, s_1, a_1, r_1, \ldots, s_i, a_i, r_i, \ldots, s_n \tag{1}$$

where $s_0$ is the initial state of the environment, $s_i$ refers to the state of the agent after $i$ iterations, $a_i$ denotes the action selected in the state $s_i$, and $r_i$ is the reward value fed back by the environment after the agent performs $a_i$. At the same time, Q-learning operates on a Markov decision process, that is, the next state $s_{i+1}$ depends only on the current environmental state $s_i$ and the action $a_i$ selected in that state. The MDP ends when the state of the agent is a terminal state $s_n$, that is, when the agent reaches a certain set of states. The learning goal of the agent using Q-learning is to maximize the cumulative future return. Q-learning estimates the reward value function according to the environmental state and the actions taken at different times. Through multiple iterations of learning, the estimated reward value function approaches the real reward value function. At the same time, the agent needs to evaluate each action and its expected reward value in each environmental state. The reward value function of the action $a$ performed in the environmental state $s$ is $Q(s, a)$, and the expected value of $Q(s, a)$ is shown in Formula (2):

$$Q(s, a) = \mathbb{E}\left[R_i \mid s_i = s,\ a_i = a\right] \tag{2}$$

where $R_i$ refers to the return obtained by the agent after selecting the action $a$ in the state $s$. The learning goal of Q-learning is to find the action with the maximum reward value, that is, to maximize $Q(s, a)$. The $Q$ function satisfies the Bellman equation, and the maximization of $Q(s, a)$ is expressed as Formula (3):

$$Q^{*}(s, a) = \mathbb{E}\left[r + \gamma \max_{a'} Q^{*}(s', a') \mid s, a\right] \tag{3}$$

where $Q^{*}(s', a')$ is the estimated value of selecting an action in the next state $s'$, and $a'$ represents an action that the agent may perform in the state $s'$. The Q value in the formula can also be obtained by an iterative method. In that case, however, the Q values stored in the agent need to be updated in each iteration, which wastes a lot of time and space, so this method is not adopted. In experimental work, only partial samples can be collected for learning. Therefore, we use the Temporal Difference (TD) algorithm to calculate and update the Q value. The update method is shown in Formula (4):

$$Q(s, a) \leftarrow Q(s, a) + \alpha\left[r + \gamma \max_{a'} Q(s', a') - Q(s, a)\right] \tag{4}$$

where $\alpha$ is the learning rate of the agent, $\gamma$ is the discount factor of the reward value, and $a'$ is an action that the agent may perform in the state $s'$. According to the formula, the agent chooses, among all possible actions, the one that maximizes $Q(s', a')$. The agent uses a two-dimensional table to store the environmental states, the actions taken and the corresponding reward values. In addition, it queries the executable action $a$ according to the state $s$ and obtains the corresponding reward value $Q(s, a)$.
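The table-based update described above can be sketched in a few lines. This is a minimal illustration of the standard temporal-difference Q-learning step, not the implementation used in this paper; the function name `q_learning_update`, the state and action labels, and the values of the learning rate and discount factor are assumptions chosen for the example.

```python
from collections import defaultdict


def q_learning_update(q_table, state, action, reward, next_state, next_actions,
                      alpha=0.1, gamma=0.9):
    """Apply one temporal-difference update to Q(state, action)."""
    # Largest estimated value reachable from the next state (0 if it is terminal).
    best_next = max((q_table[(next_state, a)] for a in next_actions), default=0.0)
    td_target = reward + gamma * best_next
    td_error = td_target - q_table[(state, action)]
    q_table[(state, action)] += alpha * td_error
    return q_table[(state, action)]


# Hypothetical two-dimensional table: keys are (state, action) pairs.
q_table = defaultdict(float)
q_table[("s1", "right")] = 1.0

new_q = q_learning_update(q_table, "s0", "right", reward=0.5,
                          next_state="s1", next_actions=["left", "right"])
print(new_q)
```

The table grows with every new state–action pair that is visited, which is the storage cost discussed in the text.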

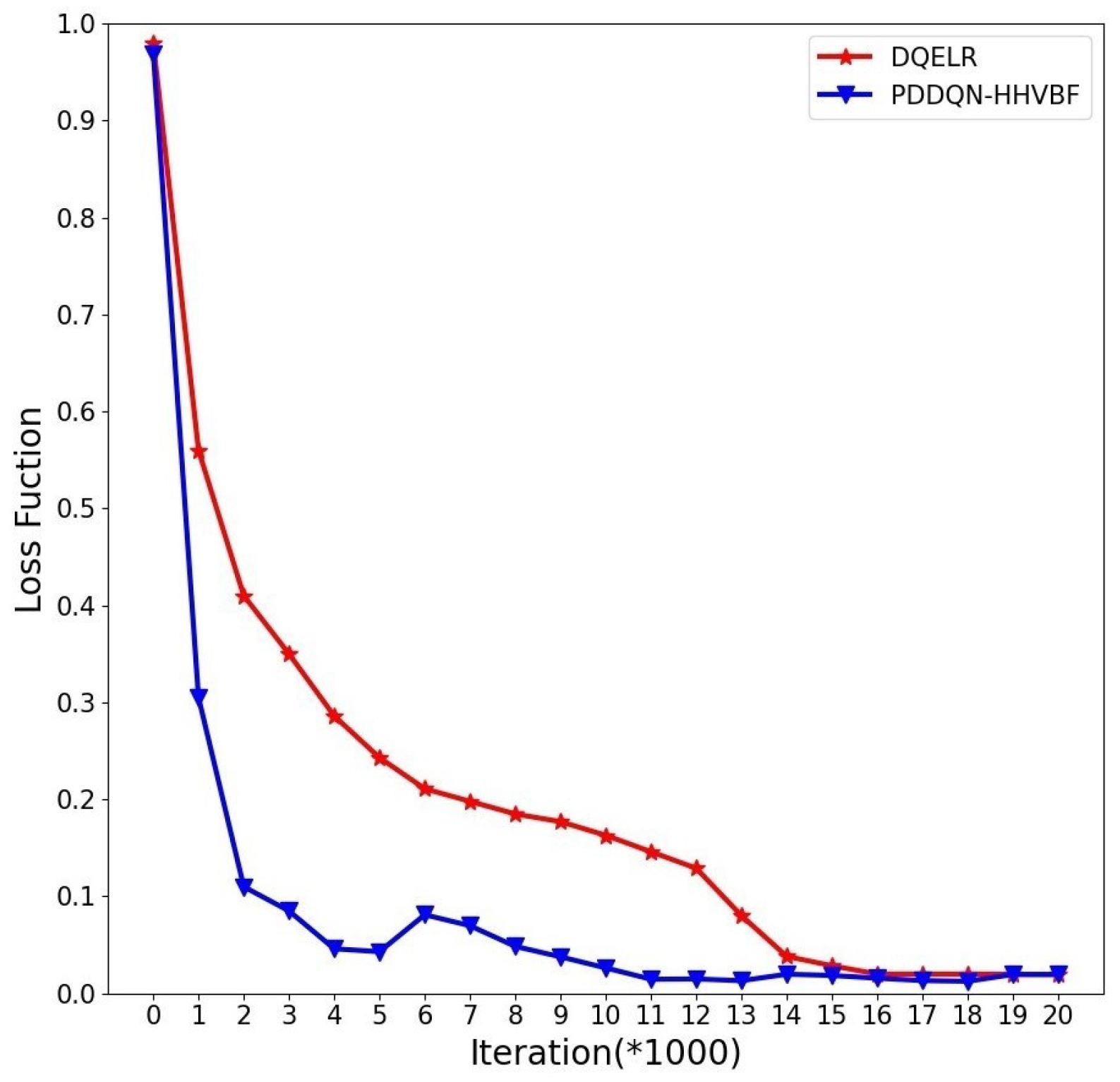

Q-learning stores all states of the agent's environment, the corresponding actions, and the resulting reward values in a two-dimensional table. When the environment is complex, the number of states is very large, and many actions may be taken in each state. The table of state–action reward values then becomes too large: it not only occupies a lot of the agent's storage space but also makes querying the Q table take too long. The Q-learning algorithm is therefore only suitable for simple environments, and its efficiency is very low in complex environments. Instead of building a Q table, which takes up a lot of space, DQN [30] combines a neural network with Q-learning and obtains a function that calculates the Q value by training and fitting the parameter $\theta$. This not only saves the agent's storage space but also avoids spending a lot of time querying the Q table. For a given environment state, DQN outputs the return value of each action, and the agent then only needs to select and execute the action with the maximum return value. The learning logic of DQN is to train the neural network parameter $\theta$ on multiple $(s, a, r, s')$ samples so that the predicted return value function approximates the real return value function. The key to this approach is training the neural network, so a loss function must be defined. DQN uses the mean squared error as the objective function. The loss function is shown in Formula (5):

$$L(\theta) = \mathbb{E}\left[\left(y - Q(s, a; \theta)\right)^{2}\right], \qquad y = r + \gamma \max_{a'} Q(s', a'; \theta) \tag{5}$$

where $y$ is the target value for updating the Q value in DQN, and $Q(s, a; \theta)$ is the current Q value predicted by the neural network. Then, we calculate the gradient of the loss function with respect to the neural network weight parameter $\theta$, using Formula (6):

$$\nabla_{\theta} L(\theta) = -2\,\mathbb{E}\left[\left(y - Q(s, a; \theta)\right) \nabla_{\theta} Q(s, a; \theta)\right] \tag{6}$$

Here the target $y$ is treated as a constant, and $\nabla_{\theta} Q(s, a; \theta)$ can be calculated from the network structure. Then, we use the gradient descent method to update the parameter $\theta$ until the loss function converges. However, consecutive states are strongly correlated with the action inputs. An action in one state generates a reward value, and updating the parameters affects the actions in other states. So, compared with the Q-learning algorithm, DQN is unstable, and this instability can greatly affect its convergence speed. Reference [31] proposed an experience replay method to solve the DQN instability problem. With this method, when the agent uses new data samples to update the parameters, it also randomly samples previously learned data to learn again, thereby avoiding the instability caused by the correlation between state and action. Specifically, the state, action, reward and next state $(s, a, r, s')$ of the agent after each learning step are stored in an experience pool $D$. In addition, each time the reward function is updated, samples from the pool are reused for training to minimize the objective function, as shown in Equation (7):

$$L(\theta) = \mathbb{E}_{(s, a, r, s') \sim U(D)}\left[\left(r + \gamma \max_{a'} Q(s', a'; \theta) - Q(s, a; \theta)\right)^{2}\right] \tag{7}$$

where $(s, a, r, s') \sim U(D)$ denotes that the experience is drawn from $D$ uniformly at random during experience replay, $s'$ indicates the next state reached from the state $s$, and $a'$ indicates an action that may be performed in the state $s'$. Experience replay based on uniform sampling avoids the strong correlation between data samples, which makes the training samples approximately independent and identically distributed. Experience replay also enables reinforcement learning to learn from past strategies, which makes the learned parameters more stable. However, this method is sensitive to the size of the experience pool. If too much experience is used, the learning time is too long and convergence is too slow. In addition, there is a correlation between the target value ($r + \gamma \max_{a'} Q(s', a'; \theta)$) and the expected value of the current state $Q(s, a; \theta)$, because both are computed with the same parameters. To address these problems, researchers proposed a method [32] that uses a new neural network to generate the target value and uses it as the final policy. The idea is to calculate the target value and the current state estimate separately, which decouples the target value from $Q(s, a; \theta)$. This method is called the DDQN (Double Deep Q-Network) algorithm, and its loss function is given in Formula (8):

$$L(\theta) = \mathbb{E}_{(s, a, r, s') \sim U(D)}\left[\left(r + \gamma\, Q'\!\left(s', \arg\max_{a'} Q(s', a'; \theta); \theta^{-}\right) - Q(s, a; \theta)\right)^{2}\right] \tag{8}$$

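where $\theta^{-}$ is the weight parameter of the target Q-network. During training, $\theta^{-}$ is periodically synchronized from the parameter $\theta$ of the current Q-network. The target value function of DDQN is shown in Formula (9):

$$y = \begin{cases} r, & \text{if } s' \text{ ends the task} \\ r + \gamma\, Q'\!\left(s', \arg\max_{a'} Q(s', a'; \theta); \theta^{-}\right), & \text{otherwise} \end{cases} \tag{9}$$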

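If the state $s'$ is the state that ends the task, then $y = r$. Otherwise, $y = r + \gamma\, Q'(s', \arg\max_{a'} Q(s', a'; \theta); \theta^{-})$. Here, $Q$ and $Q'$ have the same network structure: the $Q$ network is the one whose parameters are updated and is used to select the action $a$, while the $Q'$ network is held fixed and is used as the target network to calculate the Q value.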
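The difference between the DQN target and the DDQN target can be illustrated with plain lists of Q values. The sketch below assumes that the Q values of the next state have already been produced by the current network and by the target network; the function names `dqn_target` and `ddqn_target` and all numbers are hypothetical and only serve the example.

```python
def dqn_target(reward, next_q_target, done, gamma=0.9):
    """DQN target: the same value estimates both select and evaluate the action."""
    if done:
        return reward
    return reward + gamma * max(next_q_target)


def ddqn_target(reward, next_q_current, next_q_target, done, gamma=0.9):
    """Double DQN target for one transition.

    next_q_current: Q values of the next state from the current network.
    next_q_target:  Q values of the next state from the target network.
    """
    if done:
        return reward
    # The current network selects the action ...
    best_action = max(range(len(next_q_current)), key=lambda a: next_q_current[a])
    # ... and the target network evaluates it.
    return reward + gamma * next_q_target[best_action]


# Illustrative values for a next state with three possible actions.
next_q_current = [1.0, 2.0, 0.5]
next_q_target = [3.0, 1.5, 4.0]

y_dqn = dqn_target(1.0, next_q_target, done=False)
y_ddqn = ddqn_target(1.0, next_q_current, next_q_target, done=False)
print(y_dqn, y_ddqn)
```

In this example the DQN target takes the largest target-network value, whereas the DDQN target uses the value of the action preferred by the current network, which is smaller here.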

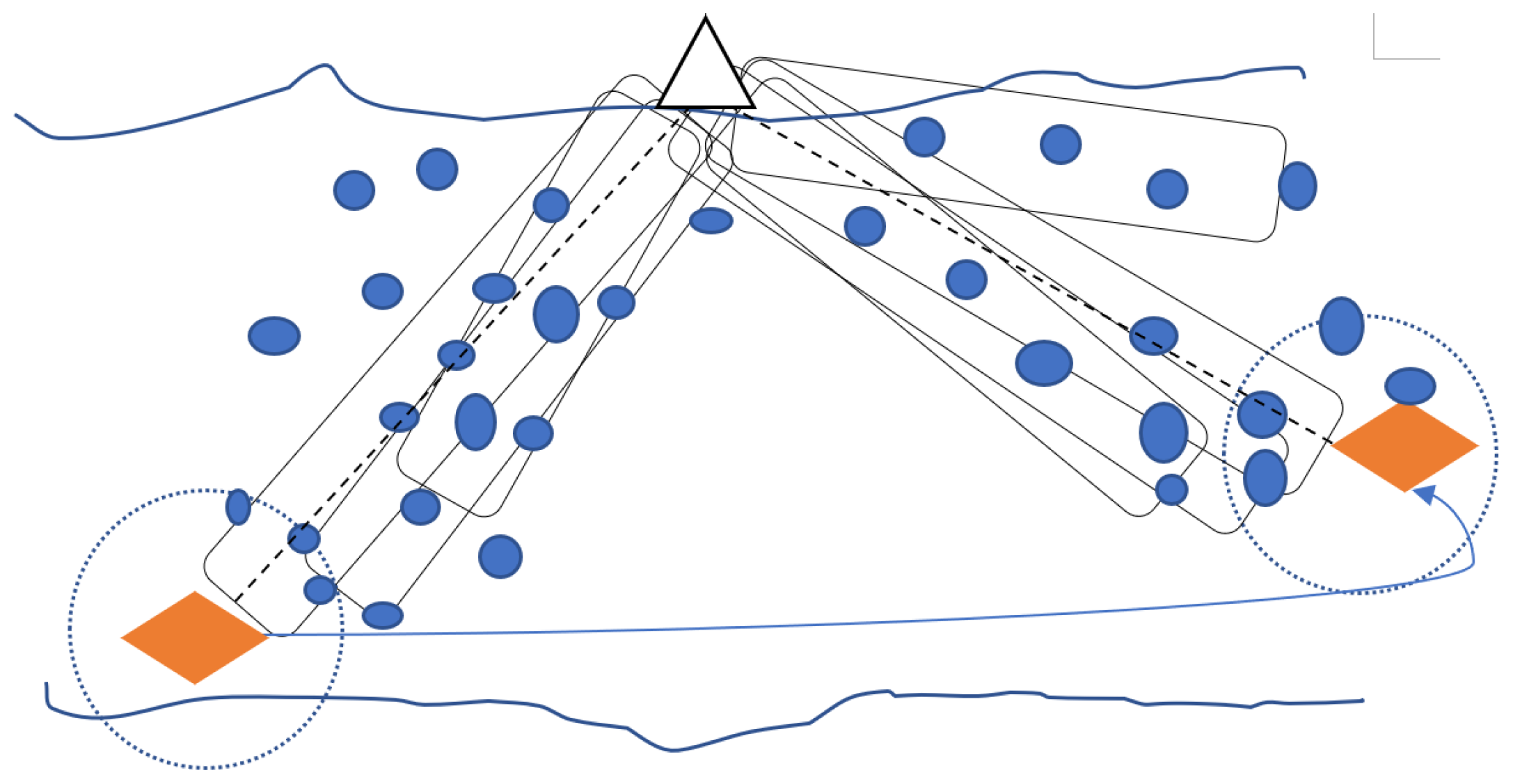

4. PDDQN-HHVBF Protocol

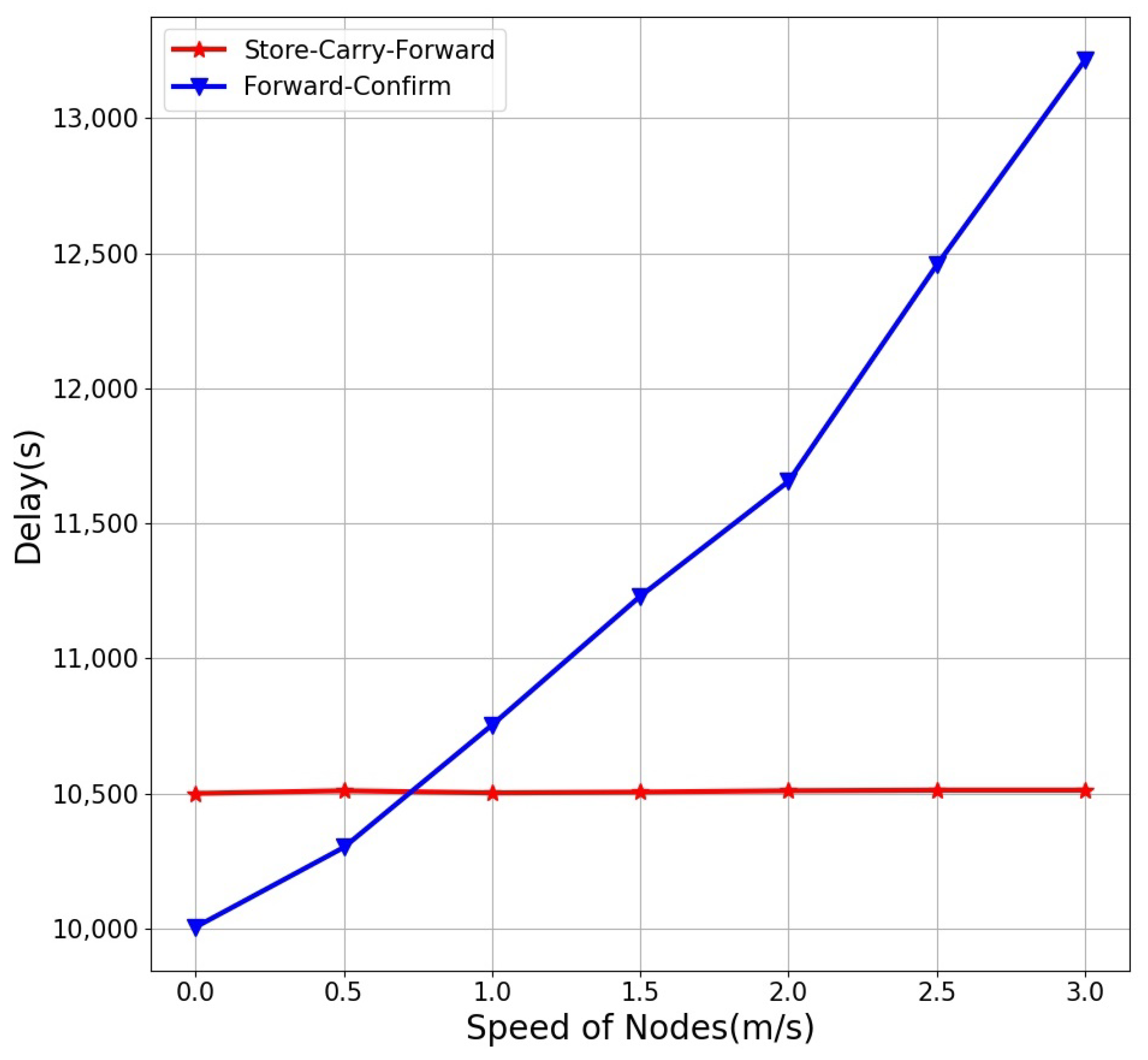

In large-scale M-UWSNs, researchers usually deploy nodes with an appropriate density to meet the mission requirements. However, ocean currents cause the nodes to drift, so the node density becomes low in some areas and too high in others. Routing holes may arise if the AUV sends data to a low-density area. Conversely, if data are forwarded to a high-density area, the same node may be selected as the relay by multiple senders, which causes high delay due to data collisions or repeated backoffs when data are sent at the same time. In addition, the more relay nodes there are to choose from, the slower the algorithm converges. In view of these problems, this paper proposes PDDQN-HHVBF, which combines the virtual pipeline method of the HHVBF protocol, in which data are transmitted within a pipeline parameterized by the distance vector, with the DDQN algorithm based on empirical priority to find relay nodes. The DDQN algorithm based on empirical priority tries to avoid routing holes and finds a route that can successfully deliver data to the Sink node. The virtual pipeline makes the data packet propagate toward the Sink node along the shortest distance, and this short-distance routing greatly reduces the collision probability of data packets in large-scale M-UWSNs. In addition, PDDQN-HHVBF fully considers the energy of candidate relay nodes so that the energy load of the nodes is balanced, which prolongs the lifetime of the M-UWSNs. Finally, this paper proposes a “Store-Carry-Forward” mechanism. With this mechanism, when the AUV cannot find a suitable relay node, it does not forward data temporarily; instead, it continues to store and carry the data and to collect new data packets along its track until it finds a suitable relay node. Then, the AUV forwards all stored data to that relay node.
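The “Store-Carry-Forward” behaviour described above can be sketched as a small buffer on the AUV side. This is only an illustration under simple assumptions: the class name `StoreCarryForwardBuffer`, the packet labels and the relay identifier are hypothetical, and the decision of whether a suitable relay exists is taken as given (`relay` is `None` when none was found).

```python
from collections import deque


class StoreCarryForwardBuffer:
    """Hypothetical AUV-side buffer illustrating the Store-Carry-Forward idea."""

    def __init__(self):
        self.stored = deque()

    def handle(self, packet, relay):
        """Store the new packet and forward everything once a relay is available.

        Returns the list of (relay, packet) transmissions made in this step.
        """
        self.stored.append(packet)
        if relay is None:
            return []  # keep carrying the data along the track
        sent = [(relay, p) for p in self.stored]
        self.stored.clear()
        return sent


buffer = StoreCarryForwardBuffer()
first = buffer.handle("pkt-1", relay=None)       # no relay: data are carried
second = buffer.handle("pkt-2", relay=None)      # still no relay
third = buffer.handle("pkt-3", relay="node-7")   # relay found: all data forwarded
print(first, second, third)
```

Each packet is transmitted once, when a relay is available, rather than being re-sent while no relay can be found.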

This section introduces the PDDQN-HHVBF routing protocol in detail from five aspects: the establishment of the virtual pipeline, the setting of candidate relay nodes, the DDQN algorithm based on empirical priority, the selection of the optimal relay node, and the “Store-Carry-Forward” mechanism of the AUV.

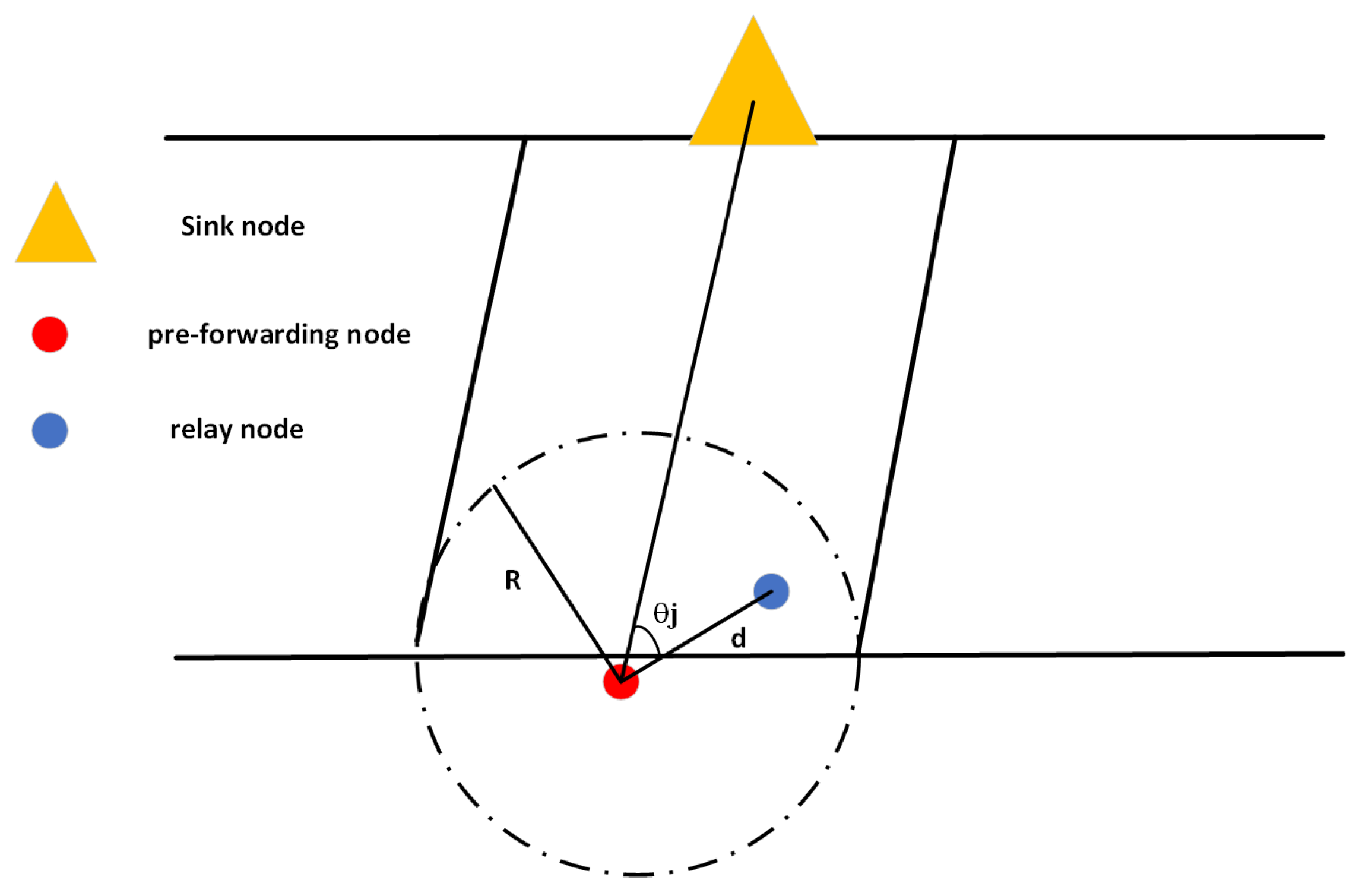

4.1. Establishment of the Virtual Pipeline

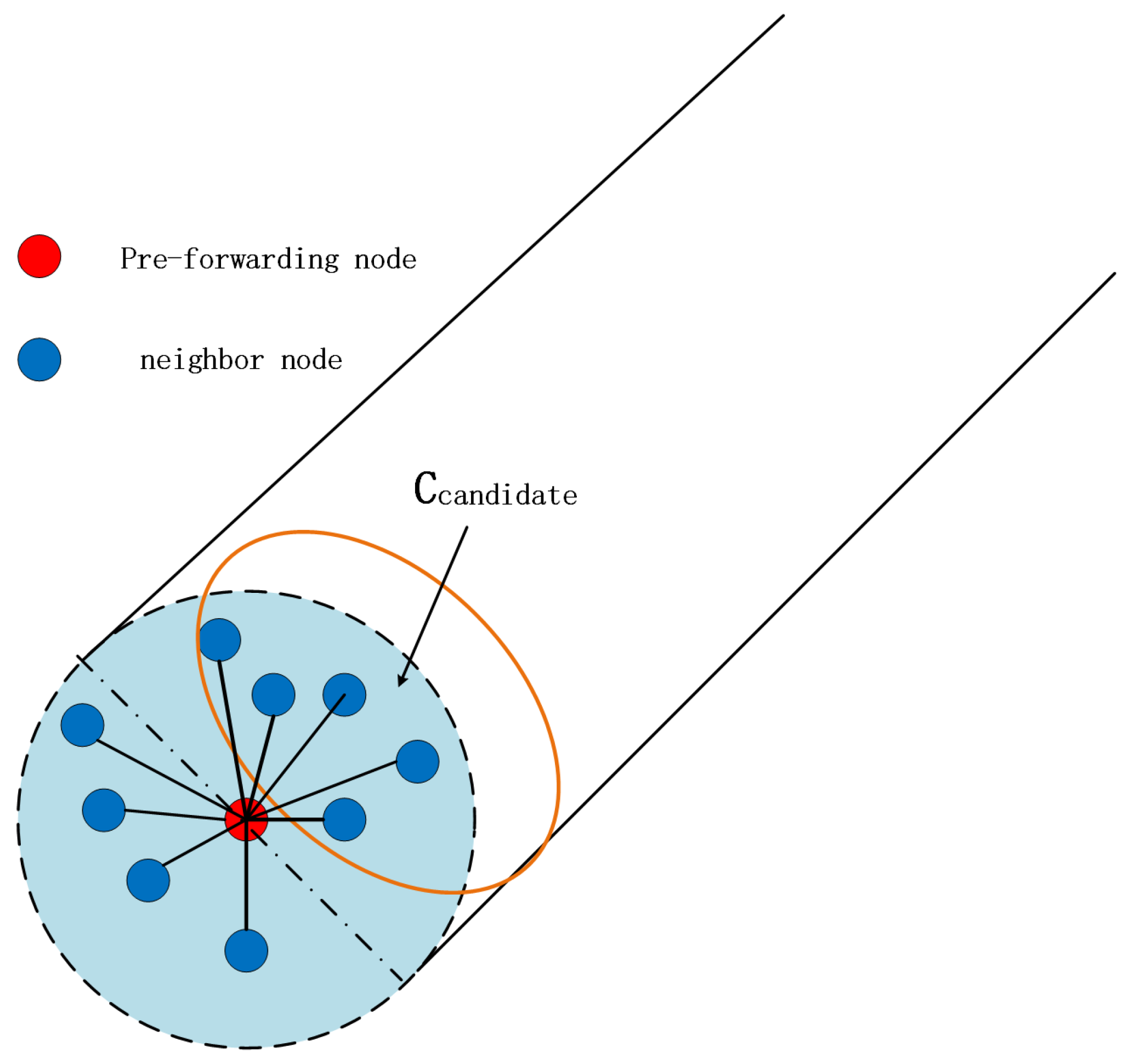

This part introduces how the virtual pipeline is established. Before the AUV or any node in the M-UWSNs transmits data, it establishes a virtual pipeline with the Sink node, as shown in Figure 3. In Figure 3, the radius of the pipeline is $R$, and $d$ is the distance over which the node delivers data to the relay node. This paper assumes that the AUV and all nodes have the same transmission range, and that the virtual pipeline radius and the transmission range are both equal to $R$. The nodes in the pipeline are the nodes that the sending node can transmit to, that is, the candidate relay nodes.

When a node transmits data, the nodes inside the virtual pipeline can serve as candidate relay nodes, while the nodes outside the virtual pipeline cannot. As shown in Figure 4, when the node holding the data packet successfully transmits the data to a relay node, the relay node becomes the new holder of the data packet, and a new virtual pipeline is established between this node and the Sink node.

4.2. Setting of Candidate Relay Nodes

The previous part described how a virtual pipeline is established when a node forwards data. This part describes how the candidate relay nodes are determined when a node within a pipeline forwards data. Before sending data, the sending node first sends a request packet, which requests the location information, energy information and candidate relay node information of all nodes around it. Nodes in M-UWSNs are defined as in Formula (10), where n and m represent the sensor node and the sensor node number, respectively. As shown in Figure 5, the node that is about to forward data (the pre-forwarding node) first finds its neighbor nodes: a node that satisfies Formula (11) is a neighbor of the pre-forwarding node. After the neighbor nodes have been found, each of them must be checked to determine whether it lies in the virtual pipeline established between the pre-forwarding node and the Sink node. As shown in Figure 3, this check uses the angle marked in the figure. When a neighbor node satisfies Formula (12), it lies in the virtual pipeline established between the pre-forwarding node and the Sink node, that is, it is a candidate relay node when the pre-forwarding node forwards data. Formula (12) thus yields the set of candidate relay nodes of the sending node, and all of its candidate relay nodes can be selected in this way. At the same time, a candidate relay node may drift away because of ocean currents, which leads to routing holes. To prevent this, every candidate relay node calculates the number of its own candidate relay nodes and passes it to the pre-forwarding node. A larger number means that more relay nodes can be selected and that routing holes are less likely.
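The two-step selection above (neighbors first, then pipeline membership) can be sketched geometrically. The conditions in the code are assumptions made for illustration and are not taken from Formulas (11) and (12): a node is treated as a neighbor if it is within the transmission range, and as lying in the pipeline if its perpendicular distance to the sender-to-Sink axis does not exceed the pipeline radius and it makes positive progress toward the Sink. The function name, the coordinates, and the use of separate `tx_range` and `pipe_radius` parameters are hypothetical.

```python
import math


def _sub(a, b):
    return tuple(x - y for x, y in zip(a, b))


def _dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def candidate_relays(sender, sink, nodes, tx_range, pipe_radius):
    """Return the names of nodes that are neighbors of `sender` and lie in the pipeline.

    Assumed tests (illustrative, not the paper's formulas):
      * neighbor:    distance to the sender <= tx_range
      * in pipeline: perpendicular distance to the sender->Sink axis <= pipe_radius
      * progress:    positive projection onto the sender->Sink axis
    """
    axis = _sub(sink, sender)
    axis_len = math.sqrt(_dot(axis, axis))
    if axis_len == 0:
        raise ValueError("sender and sink coincide")
    selected = []
    for name, position in nodes.items():
        offset = _sub(position, sender)
        d = math.sqrt(_dot(offset, offset))
        if d == 0 or d > tx_range:
            continue  # not a neighbor
        along = _dot(offset, axis) / axis_len                 # d * cos(angle)
        radial = math.sqrt(max(d * d - along * along, 0.0))   # d * sin(angle)
        if along > 0 and radial <= pipe_radius:
            selected.append(name)
    return selected


# Hypothetical positions (x, y, z) in metres; the Sink is at the surface.
nodes = {
    "a": (0.0, 5.0, -80.0),    # close to the axis and toward the Sink
    "b": (25.0, 0.0, -95.0),   # in range but far from the axis
    "c": (0.0, 0.0, -120.0),   # in range but behind the sender
    "d": (0.0, 0.0, -50.0),    # on the axis but out of range
}
relays = candidate_relays(sender=(0.0, 0.0, -100.0), sink=(0.0, 0.0, 0.0),
                          nodes=nodes, tx_range=30.0, pipe_radius=10.0)
print(relays)
```

The length of the returned list corresponds to the number of candidate relay nodes that a node reports to the pre-forwarding node.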

4.3. DDQN Algorithm Based on Empirical Priority

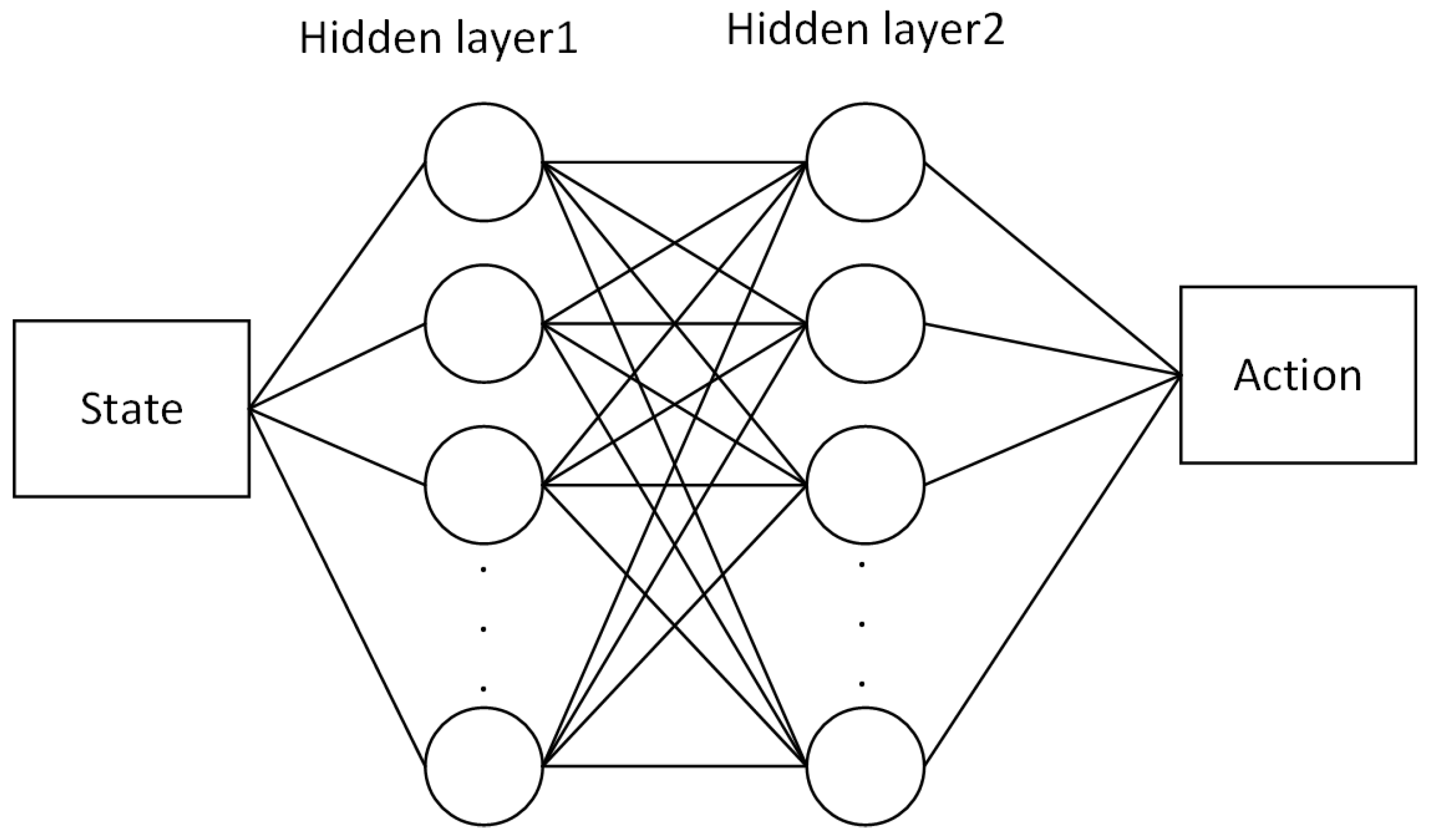

This part will detail the deep reinforcement learning algorithm used in the PDDQN-HHVBF protocol. The pre-forwarding data node will select the optimal relay node according to the predicted reward value of the neural network parameters obtained from the training, specifically using the DDQN based on the priority experience value playback. The neural network used in this method mainly includes two parts: the current network and the target network. Among them, the current network is mainly responsible for the current state

s of the root candidate relay node to give the action

a to be executed, the network is optimized based on the policy gradient. In addtion, the target network is the evaluation of the output action

a of the current network in the state

s. The target network is based on a value function. In the routing decision problem solved in this paper, the current network adopts a four-layer structure, and the network uses a fully connected network. The state

s is used as the output layer, the second and third layers are hidden layers, and the last layer is the output of action

a. In this network, the hidden layer uses the ReLu activation function for nonlinearization, and the output layer uses the Tanh activation function for nonlinearization, where the output range is

, and the current network of the strategy is shown in

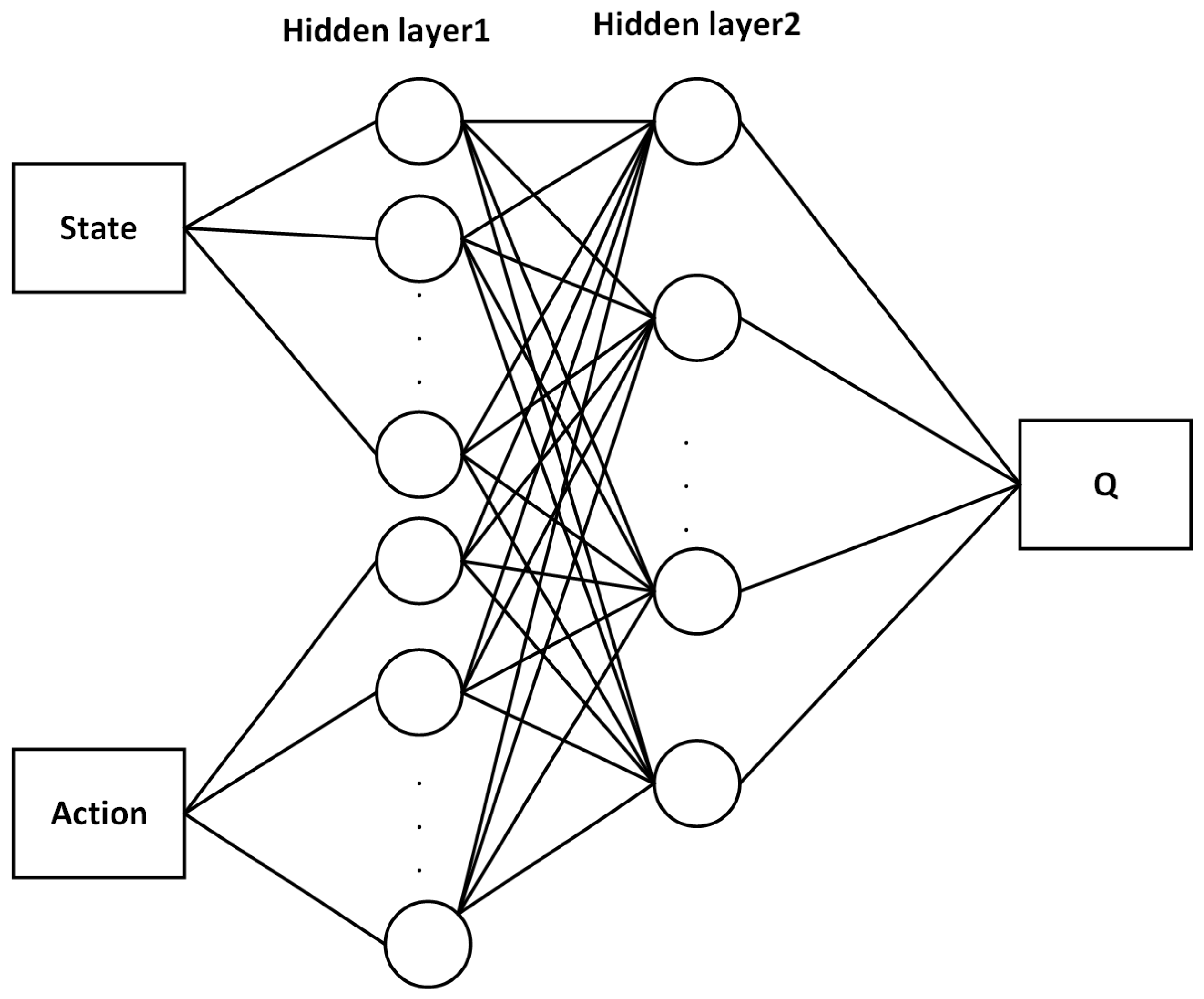

Figure 6. The target network also uses a four-layer network structure, and also uses a fully connected structure. The state–action pair

is used as the input layer, the second layer and the third layer are used as the hidden layer, and the last layer outputs the evaluation value

Q of the state and action pair. The target network is shown in

Figure 7.

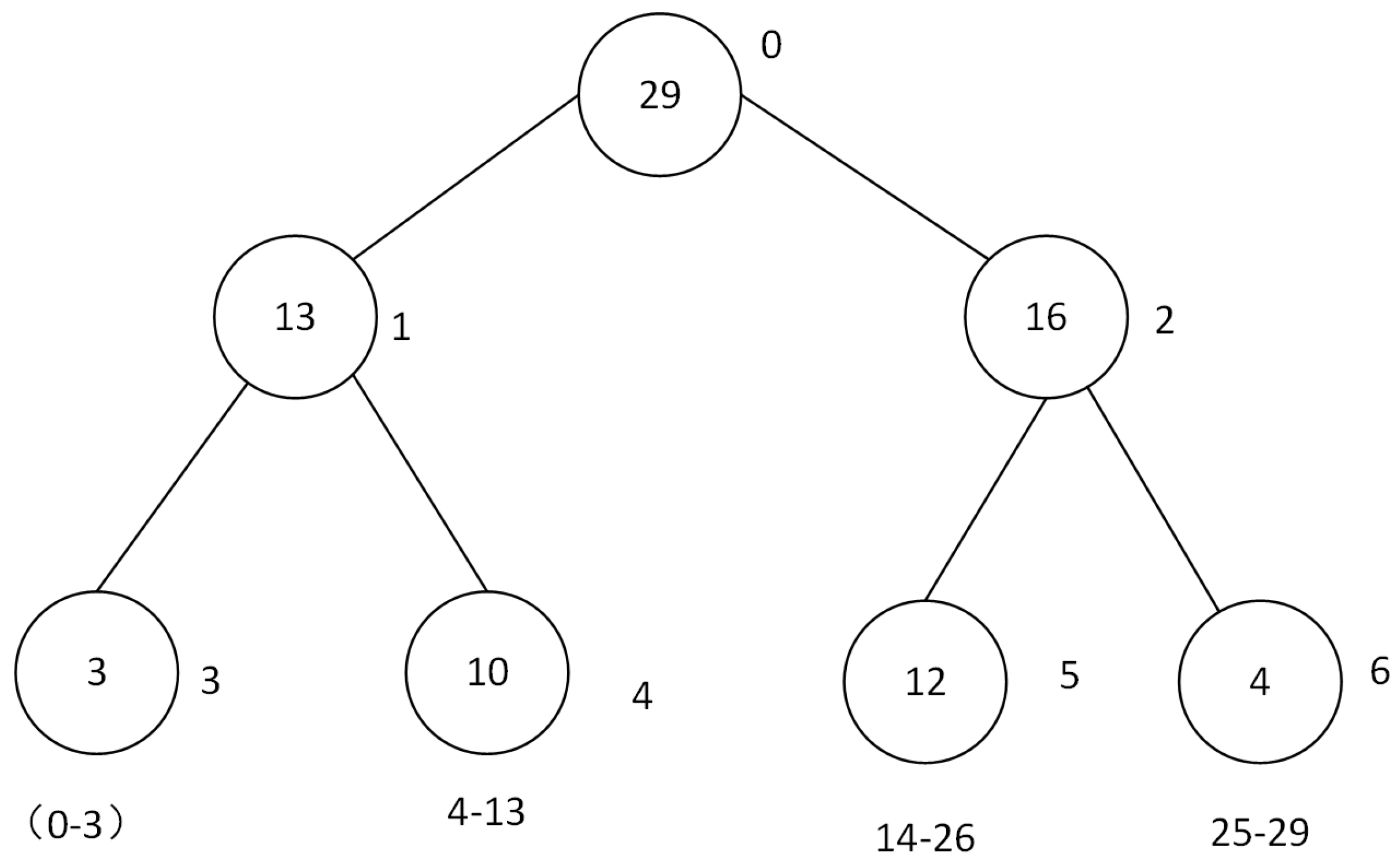

The experience replay mechanism mentioned above avoids the strong correlation between data samples to a certain extent, but it is not efficient, because samples differ in importance and therefore contribute differently to the agent's learning. Researchers therefore proposed a priority-based experience replay mechanism: when samples are extracted from the experience pool during replay, they are sorted and sampled according to their priority $p_i$, from large to small. However, the samples drawn in this way are too concentrated, which makes the error shrink slowly and the training time too long. Especially during function estimation, samples with high initial training errors are replayed frequently, which causes the learning results to overfit. In response to these problems, this paper combines the advantages of priority replay and random uniform sampling: random sampling is introduced into the priority replay mechanism so that the replayed samples are not too concentrated and do not lead to overfitting. The sampling probability of sample $i$ is defined as Formula (13):

$$P(i) = \frac{p_i^{\alpha}}{\sum_{k} p_k^{\alpha}} \tag{13}$$

In the above formula, $p_i$ is the priority of sample $i$, and $\alpha$ is the priority sampling factor (distinct from the learning rate). When $\alpha$ is 0, sampling follows a uniform random distribution; when $\alpha$ is greater than 0, samples with higher priority are more likely to be drawn, but the high-error data are not always sampled. Because the research scenario of this paper is the routing problem of large-scale M-UWSNs, the required sample pool is large. During the storing and sampling of samples, searching and sorting make the algorithm too complex, which slows convergence and wastes a lot of computation time. For this reason, this paper uses the binary tree structure SumTree to store and find samples, which reduces the time spent on searching and sorting. The structure of SumTree is shown in Figure 8.
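The effect of the priority sampling factor can be checked numerically. The sketch below assumes the proportional form commonly used in prioritized experience replay, in which each priority is raised to the power of the factor and then normalized; whether this matches Formula (13) exactly is an assumption, and the function name and priority values are illustrative.

```python
def sampling_probabilities(priorities, factor):
    """Return sampling probabilities proportional to priority ** factor."""
    scaled = [p ** factor for p in priorities]
    total = sum(scaled)
    return [s / total for s in scaled]


# With a factor of 0 every sample is equally likely (uniform sampling).
uniform = sampling_probabilities([1.0, 2.0, 4.0, 8.0], factor=0.0)

# With a factor of 1 the probability is directly proportional to the priority.
proportional = sampling_probabilities([1.0, 3.0], factor=1.0)

print(uniform, proportional)
```

Intermediate values of the factor interpolate between these two cases, so high-priority samples are favoured without being the only ones replayed.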

As shown in Figure 8, all experience replay samples are stored in the leaf nodes of the SumTree. Each leaf node represents one sample and stores both the sample data and the priority of the sample. The internal nodes of the tree do not store sample data; the value of each internal node is the sum of the priorities of its two child nodes. When the system samples, it first divides the leaf nodes of the entire SumTree into multiple intervals according to the total priority and the number of samples, as shown in the figure. The interval is calculated as in Formula (14), and one sample is then drawn from each interval by searching the tree from top to bottom. The sampling procedure is given in Table 1.
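A compact array-based SumTree illustrates the storage and top-down sampling described above. This is a generic sketch of the data structure rather than the procedure of Table 1; the class and method names, the equal-length intervals (total priority divided by the number of samples to draw), and the example priorities are assumptions.

```python
import random


class SumTree:
    """Binary tree whose leaves hold priorities and whose internal nodes hold sums."""

    def __init__(self, capacity):
        self.capacity = capacity
        self.tree = [0.0] * (2 * capacity - 1)  # internal nodes first, then leaves
        self.data = [None] * capacity
        self.write = 0                           # next leaf slot to overwrite

    def total(self):
        return self.tree[0]                      # root = sum of all priorities

    def add(self, priority, sample):
        leaf = self.write + self.capacity - 1
        self.data[self.write] = sample
        self.update(leaf, priority)
        self.write = (self.write + 1) % self.capacity

    def update(self, leaf, priority):
        change = priority - self.tree[leaf]
        self.tree[leaf] = priority
        while leaf != 0:                         # propagate the change up to the root
            leaf = (leaf - 1) // 2
            self.tree[leaf] += change

    def get(self, value):
        """Descend from the root to the leaf whose cumulative range contains value."""
        idx = 0
        while 2 * idx + 1 < len(self.tree):      # stop when idx is a leaf
            left = 2 * idx + 1
            if value <= self.tree[left]:
                idx = left
            else:
                value -= self.tree[left]
                idx = left + 1
        return self.data[idx - self.capacity + 1], self.tree[idx]

    def sample(self, batch_size, rng=random):
        """Draw one sample from each of batch_size equal-length priority intervals."""
        segment = self.total() / batch_size
        return [self.get(rng.uniform(i * segment, (i + 1) * segment))[0]
                for i in range(batch_size)]


tree = SumTree(capacity=4)
for priority, sample in [(1.0, "a"), (2.0, "b"), (3.0, "c"), (4.0, "d")]:
    tree.add(priority, sample)

print(tree.total(), tree.get(2.5), tree.sample(4))
```

Both updating a priority and locating a sample only walk one root-to-leaf path, so no sorting of the whole pool is needed.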

4.4. Selection of the Optimal Relay Node

This part will specifically introduce how to select a relay node for forwarding based on the DDQN algorithm based on empirical priority after the candidate relay node set is determined above. The PDDQN-HHVBF routing protocol provides a complete execution process for the data packets from the AUV to the Sink node. In this paper, it is assumed that nodes can obtain their own residual energy and three-dimensional coordinates in M-UWSNs. In addtion, all nodes in M-UWSNs will drift due to the movement of ocean currents, but the moving distance is limited in a short time, that is, the network topology is stable relatively in a short time. Compared with the long routing propagation time in the underwater acoustic channel and the high energy consumption, the delay and energy consumption of calculating the

Q value are very low, so it is not calculated separately in this paper. The process of requesting the

Q value is shown in

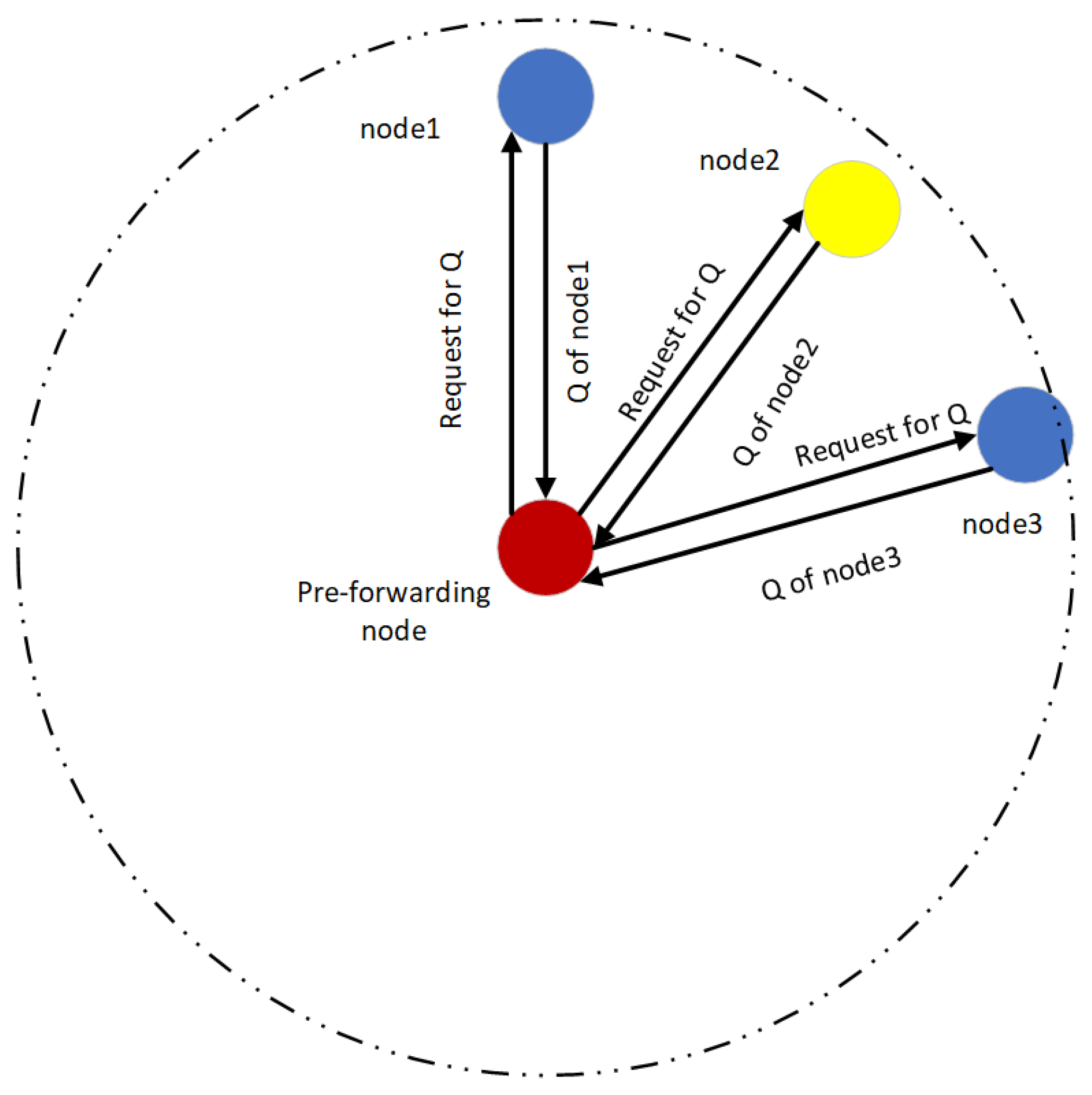

Figure 9.

As shown in Figure 9, the pre-forwarding node requests the Q values of the candidate relay nodes before sending data, and the request packet carries the location information of the pre-forwarding node so that the location parameter of the Q value can be calculated. After receiving the request, each candidate relay node calculates its Q value and returns it to the pre-forwarding node. After receiving the Q values of the candidate relay nodes, the pre-forwarding node (the Source node in Figure 9) selects the node with the largest Q value as the relay node to transmit data, that is, node 2 in Figure 9.

Each node in M-UWSNs can be regarded as an agent. The position information, energy, and number of candidate relay nodes of each sensor node can be regarded as the current state. Forwarding to the relay node selected by the sending node can be regarded as the action taken by the agent according to the state, and a Q value is obtained each time the data packet is forwarded to a relay node. In this protocol, the sending node therefore first sends a Q value request packet to all candidate relay nodes, and each candidate relay node calculates its Q value and returns it to the sending node. The sending node selects the candidate relay node with the largest Q value to forward the data, and the chosen node then forwards the data toward the Sink node hop by hop in the same way. When a node calculates the Q value, it needs the residual energy, the location information, and the number of candidate relay nodes. The residual energy is given by Formula (15). The location information follows reference [13], as shown in Formula (16). The third input is the number of candidate relay nodes.
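The request-and-select exchange described above can be sketched as follows. This is a minimal illustration, not the protocol's implementation: the `Candidate` class, the `q_estimator` callable (a stand-in for whatever trained network a node uses), and the function names are all hypothetical, and the acoustic request/reply packets are reduced to plain function calls.

```python
from dataclasses import dataclass
from typing import Callable, Dict, Optional, Sequence, Tuple

Position = Tuple[float, float, float]  # three-dimensional coordinates


@dataclass(frozen=True)
class Candidate:
    """A candidate relay node (hypothetical structure for illustration)."""
    node_id: int
    # Assumption: each node can map the requester's position to a Q value.
    q_estimator: Callable[[Position], float]


def request_q_values(sender_pos: Position,
                     candidates: Sequence[Candidate]) -> Dict[int, float]:
    """Stand-in for the request/reply exchange: every candidate computes
    its own Q value from the sender's position and returns it."""
    return {c.node_id: c.q_estimator(sender_pos) for c in candidates}


def select_relay(sender_pos: Position,
                 candidates: Sequence[Candidate]) -> Optional[int]:
    """Return the id of the candidate with the largest Q value,
    or None when there is no candidate at all."""
    replies = request_q_values(sender_pos, candidates)
    if not replies:
        return None
    return max(replies, key=replies.get)


# Three candidates with fixed, made-up Q values; node 2 has the largest.
demo_candidates = [
    Candidate(1, lambda pos: 0.4),
    Candidate(2, lambda pos: 0.9),
    Candidate(3, lambda pos: 0.6),
]
chosen = select_relay((0.0, 0.0, 100.0), demo_candidates)
print(chosen)  # prints 2
```

Repeating `select_relay` at each chosen node gives the hop-by-hop forwarding toward the Sink node.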

Formula (15) involves the existing energy of the node and the initial energy of the node. In Formula (16), as illustrated in Figure 3, R is the radius of the pipeline, d is the distance over which the pre-forwarding node delivers data to the relay node, and the angle is the one illustrated in Figure 3.

The larger the residual energy value, the more energy the node has left, which means that the node has been used less frequently. In addition, subsequently using nodes with a large residual energy value does not lead to energy exhaustion and the end of the lifetime of M-UWSNs. The virtual pipeline radius R is fixed, so the location parameter is determined by d and the angle, and it is inversely related to the variable term of Formula (16): when that term is smaller, the location parameter is larger. The shorter the transmission distance between nodes, the smaller the collision probability. When the transmission distance d is constant, the smaller the angle, the closer the candidate relay node is to the Sink node, and the higher the probability of successful data forwarding.

The larger the number of candidate relay nodes, the more relay nodes can be selected and the lower the possibility of routing holes caused by ocean current movement. Therefore, the strategy of the PDDQN-HHVBF protocol proposed in this paper is to find candidate relay nodes with large residual energy, a large location parameter, and a large number of candidate relay nodes for routing and forwarding. Accordingly, the reward function [32] is calculated from these three quantities, and a reward is generated by each state and action pair of the candidate relay node. The return value is set as in Formula (17), where a, b, and c are the weight parameters of the residual energy, the location parameter, and the number of candidate relay nodes, respectively. The neural network learns converged parameters through training iterations. The return value of the other nodes lies between 0 and c, whereas the reward of the Sink node is always set to 1; this value applies when a node can transmit data directly to the Sink node. In this way, data are preferentially transmitted to the Sink node, which avoids data looping. The node thus avoids selecting nodes with lower residual energy while reducing the number of detours. In summary, the strategy when looking for relay nodes is to prefer more residual energy, a higher location parameter, and more candidate relay nodes.
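To make the role of the three weights concrete, the following sketch shows one way such a reward could be computed. It rests on assumptions that the text above does not confirm: the reward is taken to be a plain weighted sum of the three inputs, the residual energy is taken to be the ratio of existing to initial energy, and the function names, argument names, and weight values are hypothetical. Only the rule that the Sink node always receives a reward of 1 comes from the text.

```python
def residual_energy_ratio(existing_energy: float, initial_energy: float) -> float:
    """Assumed form of the residual energy input: existing / initial energy."""
    if initial_energy <= 0:
        raise ValueError("initial_energy must be positive")
    return existing_energy / initial_energy


def reward(energy_ratio: float,
           location_param: float,
           n_candidates: float,
           a: float, b: float, c: float,
           is_sink: bool = False) -> float:
    """Assumed weighted-sum reward with weights a, b, c.

    The Sink node is always given a reward of 1, as stated in the text,
    so that forwarding directly to the Sink node is preferred.
    """
    if is_sink:
        return 1.0
    return a * energy_ratio + b * location_param + c * n_candidates


# Made-up inputs and weights, for illustration only.
ratio = residual_energy_ratio(existing_energy=50.0, initial_energy=100.0)
r_node = reward(ratio, location_param=0.5, n_candidates=2, a=0.4, b=0.4, c=0.1)
r_sink = reward(ratio, location_param=0.5, n_candidates=2, a=0.4, b=0.4, c=0.1,
                is_sink=True)
print(r_node < r_sink)
```

Under this assumed form, a candidate with more residual energy, a larger location parameter, or more candidate relay nodes of its own receives a larger reward, which matches the selection strategy stated above.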

4.5. “Store-Carry-Forward” Mechanism

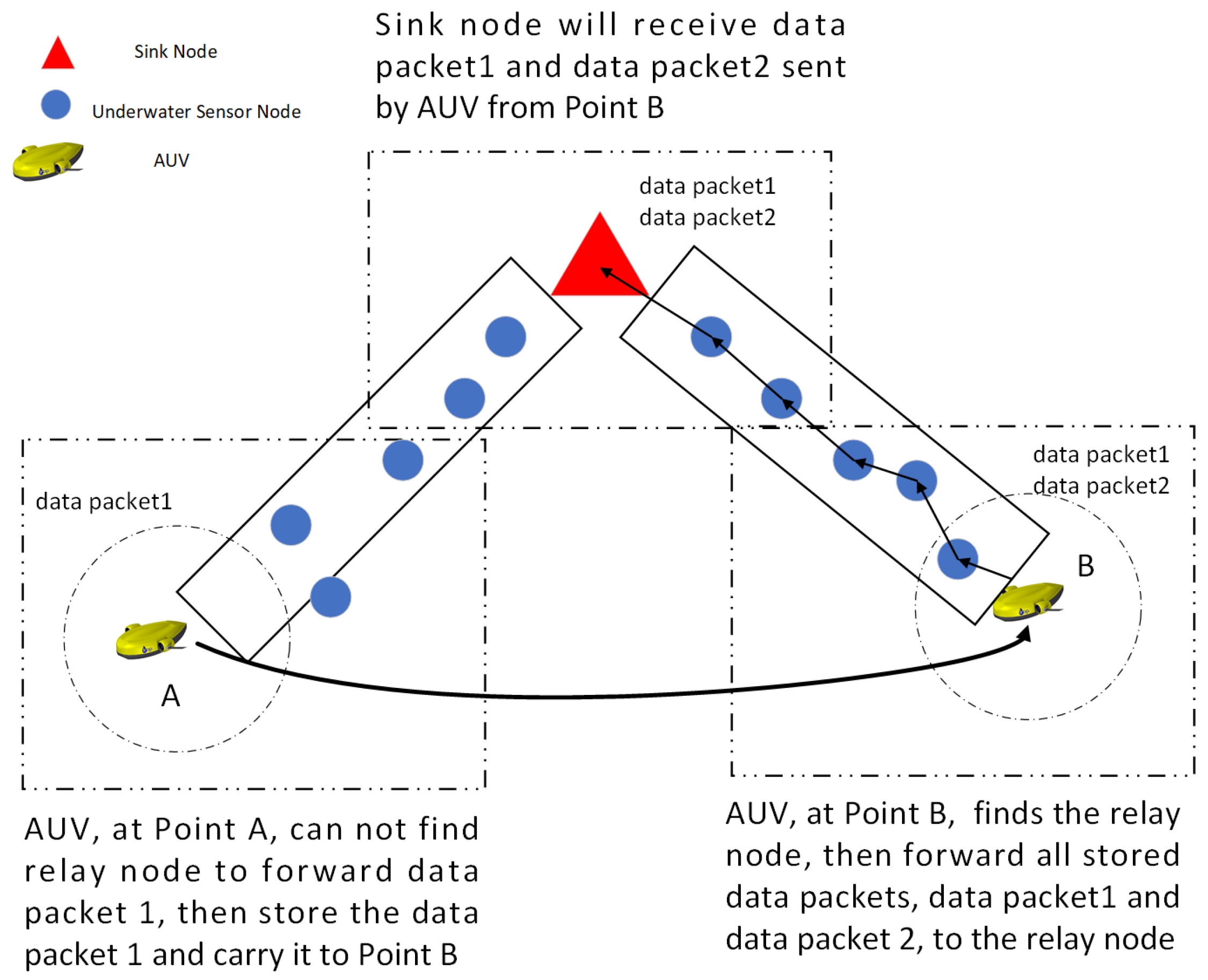

This part introduces the “Store-Carry-Forward” mechanism of the AUV. After the AUV collects data, the data packets are forwarded hop by hop to the Sink node. However, during transmission, ocean current movement may cause all candidate relay nodes to drift out of the AUV transmission range, so the transmission of the AUV data packets fails. If the AUV keeps retransmitting data packets in this area until the transmission succeeds, it wastes a large amount of energy. Alternatively, all candidate relay nodes may perform very poorly: if the AUV transmits data to relay nodes with low Q values, a routing hole is very likely to occur, which eventually leads to packet loss. Therefore, with the “Store-Carry-Forward” mechanism, the AUV does not forward data when it cannot find a suitable relay node. Instead, it stores and carries the packets and continues to collect new data packets along its track until it finds a suitable relay node. The AUV then forwards all stored data packets to that relay node.

As shown in Figure 10, the AUV needs to transmit data packet 1 to the Sink node after collecting data at Point A. However, there is no suitable relay node within the AUV transmission range, so the AUV activates the “Store-Carry-Forward” mechanism to store and carry data packet 1 to Point B. While moving from Point A to Point B, the AUV continues to collect data, which forms data packet 2. After reaching Point B, the AUV searches for a relay node. If the situation were the same as at Point A, it would continue to carry the two data packets to the next forwarding location. In Figure 10, however, the AUV finds a suitable relay node at Point B, so it transmits the two data packets to the relay node together. The Sink node then receives both data packet 1 and data packet 2, sent by the AUV from Point B.
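The Point A and Point B scenario can be sketched as a small buffer that is flushed only when a suitable relay node exists. This is an illustrative sketch under assumptions: the text does not define how "suitable" is decided, so a hypothetical Q value cutoff `q_threshold` is used here, and the class and method names are invented for the example.

```python
from typing import Dict, List, Optional, Tuple


class AUV:
    """Minimal store-carry-forward buffer (hypothetical structure)."""

    def __init__(self, q_threshold: float) -> None:
        # Assumption: a relay is "suitable" when its Q value reaches this cutoff.
        self.q_threshold = q_threshold
        self.buffer: List[str] = []

    def collect(self, packet: str) -> None:
        """Store a newly collected data packet."""
        self.buffer.append(packet)

    def try_forward(self, candidate_q: Dict[int, float]
                    ) -> Optional[Tuple[int, List[str]]]:
        """Forward all stored packets to the best candidate if it is suitable.

        Returns (relay_id, packets) on success. Returns None, and keeps
        carrying the packets, when there is no candidate in range or the
        best Q value is below the cutoff.
        """
        if not candidate_q:
            return None
        best = max(candidate_q, key=candidate_q.get)
        if candidate_q[best] < self.q_threshold:
            return None
        sent, self.buffer = self.buffer, []
        return best, sent


auv = AUV(q_threshold=0.5)
auv.collect("data packet 1")              # collected at Point A
at_point_a = auv.try_forward({})          # no relay in range: keep carrying
auv.collect("data packet 2")              # collected between Point A and Point B
at_point_b = auv.try_forward({7: 0.8})    # suitable relay found at Point B
print(at_point_a, at_point_b)
```

Carrying instead of retransmitting at Point A is what saves the AUV's energy in this sketch: nothing is sent until `try_forward` finds a candidate above the cutoff, and then both packets leave together.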