Energy-Aware Live VM Migration Using Ballooning in Cloud Data Center

Abstract

1. Introduction

- Pre-copy—Firstly, the data are transferred while the VM serves the customer. After a certain point, the VM is turned off and transferred to the destination host/server.

- Post-copy—Firstly, the VM is turned off and transferred to the destination host/server. Then, the data are transferred while the VM serves the customer.

- Hybrid—Data are transferred both before and after the VM is moved.

- Pre-copy—Disk blocks are transferred before memory pages.

- Post-copy—Disk blocks are transferred after memory pages.

- Hybrid—Disk blocks and memory pages are transferred simultaneously.

- Energy consumption—The overall energy usage in cloud data centers raises the cost to customers. Virtualization and live VM migration are the primary means of reducing it, but live VM migration performed too frequently can have the opposite effect and increase energy consumption.
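The pre-copy, post-copy, and hybrid strategies listed above can be contrasted with a small timing model. The sketch below is illustrative only. It assumes a VM with a fixed number of memory pages, a constant page-dirtying rate, a fixed per-page transfer time, and a fixed time to move the CPU and device state while the VM is halted. The function names and the parameters `dirty_rate`, `t_state`, `stop_threshold`, and `max_rounds` are hypothetical and do not come from the paper. Pre-copy is modeled as iterative rounds that resend the pages dirtied during the previous round, post-copy as a single pass after resumption, and hybrid as one pre-copy round followed by post-copy.

```python
from dataclasses import dataclass


@dataclass
class MigrationResult:
    total_time: float  # s from start of migration until the last page arrives
    downtime: float    # s during which the VM is halted


def pre_copy(pages: int, dirty_rate: float, t_page: float, t_state: float,
             stop_threshold: int = 10, max_rounds: int = 30) -> MigrationResult:
    """Resend dirtied pages in rounds while the VM runs, then stop and copy the rest."""
    elapsed = 0.0
    to_send = pages  # the first round sends the whole memory
    for _ in range(max_rounds):
        if to_send <= stop_threshold:
            break
        round_time = to_send * t_page
        elapsed += round_time
        # pages dirtied while this round was on the wire (never more than the VM has)
        to_send = min(pages, int(dirty_rate * round_time))
    downtime = to_send * t_page + t_state  # stop-and-copy: residual pages plus state
    return MigrationResult(elapsed + downtime, downtime)


def post_copy(pages: int, t_page: float, t_state: float) -> MigrationResult:
    """Halt, move only the CPU/device state, resume, then send each page once."""
    downtime = t_state
    return MigrationResult(downtime + pages * t_page, downtime)


def hybrid(pages: int, dirty_rate: float, t_page: float, t_state: float) -> MigrationResult:
    """One pre-copy round, then post-copy for the pages dirtied during that round."""
    first_round = pages * t_page
    dirtied = min(pages, int(dirty_rate * first_round))
    downtime = t_state
    return MigrationResult(first_round + downtime + dirtied * t_page, downtime)
```

With `pre_copy(1000, 4, 0.125, 1.0)` the model needs seven pre-copy rounds before the dirty set drops to 10 pages or fewer, whereas `post_copy(1000, 0.125, 1.0)` sends every page exactly once. The trade-off is that under post-copy the VM runs at the destination before all of its pages have arrived.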

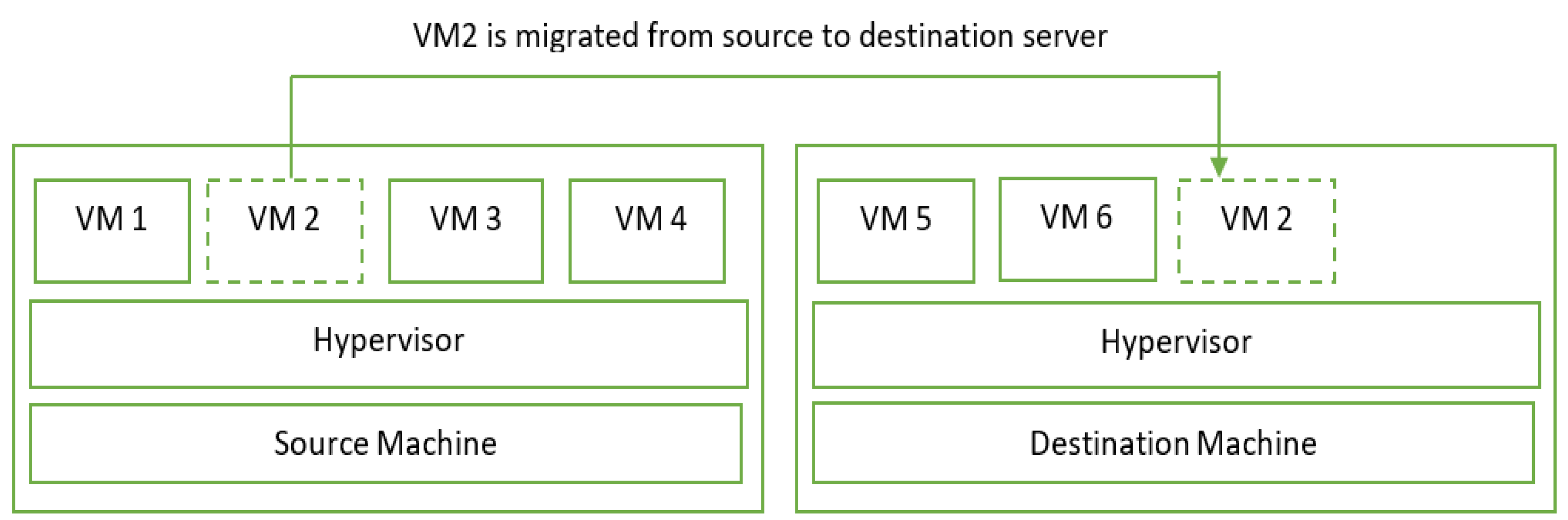

- Migration time—The time elapsed between the initiation of VM migration and the resumption of the VM on the destination server.

- Downtime—The period during which the VM remains halted so that it can finally be moved to the destination server.

- Resource utilization—A measure of how efficiently a server's capacity is used in the data center.

- Makespan—The total working time of the servers, measured from the submission of the first VM to the completion of the last VM.

- Atomicity—The property that a migration completes successfully without disturbing other VMs.

- Convergence—The point at which the difference between the memory and storage states of the source and destination servers is almost nil, signifying that the data have been copied successfully.
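The definitions above translate directly into arithmetic on event timestamps. The following sketch assumes that the start of migration, the halt on the source, the resumption on the destination, and each VM's submission and completion times are recorded; the class and function names are hypothetical. The paper's exact resource-utilization formula (from [32]) is not given in this section, so the ratio of utilized CPU to CPU capacity used here is an assumption based on the `CPU_utili` and `CPUi` entries in the abbreviation table.

```python
from dataclasses import dataclass


@dataclass
class MigrationEvents:
    start: float   # s: migration initiated on the source server
    halt: float    # s: VM halted on the source server
    resume: float  # s: VM resumed on the destination server


def migration_time(ev: MigrationEvents) -> float:
    # initiation of migration until resumption on the destination server
    return ev.resume - ev.start


def downtime(ev: MigrationEvents) -> float:
    # interval during which the VM is halted
    return ev.resume - ev.halt


def makespan(submit_times: list[float], completion_times: list[float]) -> float:
    # submission of the first VM until completion of the last VM
    return max(completion_times) - min(submit_times)


def resource_utilization(cpu_used: float, cpu_capacity: float) -> float:
    # ASSUMPTION: utilized CPU divided by the CPU capacity of the server
    return cpu_used / cpu_capacity
```

For example, a VM halted at 200 s and resumed at 215 s, after a migration that began at 0 s, has a migration time of 215 s and a downtime of 15 s.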

2. Related Work

3. Proposed Algorithm

- Before migration, execute ballooning, the process of deleting unused data (memory pages and storage disk blocks) from the VM's storage.

- Use pre-copy to migrate the current state of the memory pages and storage disk blocks.

- Power off the VM, transfer it to the target server, and restart it.

- Use post-copy to migrate the remaining memory pages and disk blocks.

- Calculate the migration time and downtime.
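The five steps above can be turned into a simple timing model. This is a sketch under stated assumptions, not the authors' simulator: it uses the per-unit transfer time (10 s), halt-to-resume time (10 s), and destination setup time (5 s) from the experimental testbed, treats ballooning as instantaneous, and assumes that total migration time is the sum of the pre-copy transfer, the halt with setup, and the post-copy transfer. The function name `lmb_timing` and the page and block counts passed to it are hypothetical.

```python
TRANS_T = 10.0  # s to send one memory page or disk block (testbed value)
S_T = 10.0      # s between halt at the source and resumption at the destination
SET_T = 5.0     # s to set up the VM at the destination


def lmb_timing(pre_units: int, post_units: int) -> tuple[float, float]:
    """Return (total migration time, downtime) in seconds for one LMB migration.

    pre_units:  pages + blocks sent in the pre-copy stage after ballooning
    post_units: pages + blocks left over and sent in the post-copy stage
    """
    pre_copy = pre_units * TRANS_T      # step 2: VM still running on the source
    downtime = S_T + SET_T              # step 3: halt, move, set up, restart
    post_copy = post_units * TRANS_T    # step 4: VM already running on the destination
    return pre_copy + downtime + post_copy, downtime
```

For instance, `lmb_timing(20, 4)` gives a total migration time of 255 s and a downtime of 15 s. In this model the downtime is fixed by the testbed constants rather than by the page count, which is consistent with the constant LMB downtime reported in the Results.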

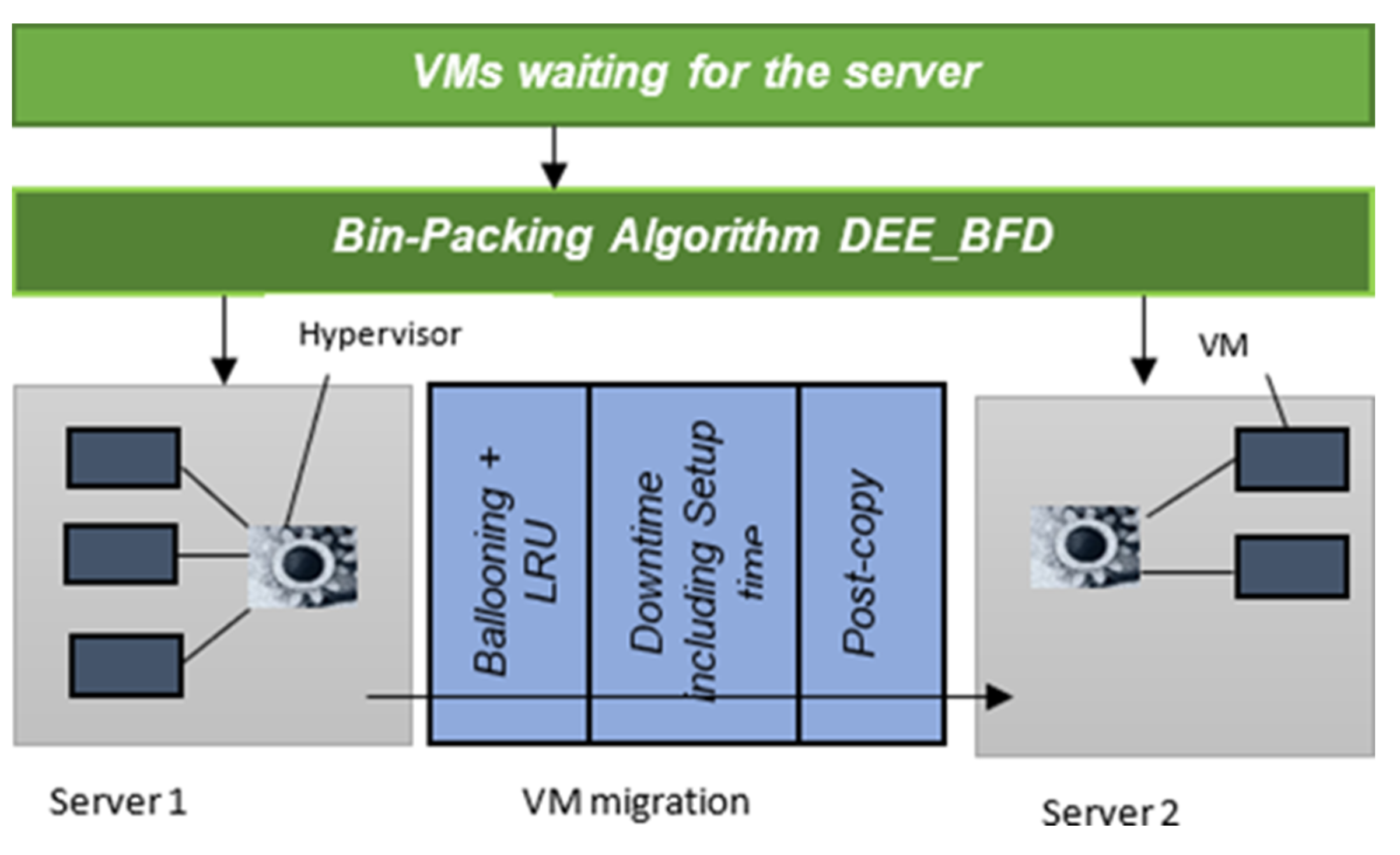

- Ballooning is combined with the least recently used (LRU) page replacement technique.

- The pre-copy step is discarded to reduce the transmission time.

- The VM is set up at the destination in parallel with ballooning: the configuration details of the VM are sent from the source to the destination server beforehand, which saves time.
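LRU-based ballooning can be illustrated with an ordered map from page (or disk-block) identifiers to their last-use time, kept in least-recently-used order. The sketch below is hypothetical: the class name `LRUBalloon`, the `idle_limit` parameter, and the rule "reclaim everything idle for longer than `idle_limit`" are assumptions, since the exact eviction threshold is not specified here. It also assumes timestamps are passed in non-decreasing order.

```python
from collections import OrderedDict


class LRUBalloon:
    """Reclaims the least recently used pages or disk blocks of a VM."""

    def __init__(self) -> None:
        # identifier -> last-use time, ordered from least to most recently used
        self._last_use: OrderedDict[str, float] = OrderedDict()

    def touch(self, item: str, now: float) -> None:
        # record a use (or creation) and move the item to the most-recent end
        self._last_use[item] = now
        self._last_use.move_to_end(item)

    def inflate(self, now: float, idle_limit: float) -> list[str]:
        """Reclaim every item idle for longer than idle_limit, oldest first."""
        reclaimed = []
        while self._last_use:
            item, last_used = next(iter(self._last_use.items()))
            if now - last_used <= idle_limit:
                break  # every remaining item was used more recently
            self._last_use.popitem(last=False)  # drop the least recently used item
            reclaimed.append(item)
        return reclaimed

    def resident(self) -> list[str]:
        # items that remain and would still have to be migrated
        return list(self._last_use)
```

Only the identifiers returned by `resident()` would then need to be transferred, which is how ballooning shortens the subsequent copy stage.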

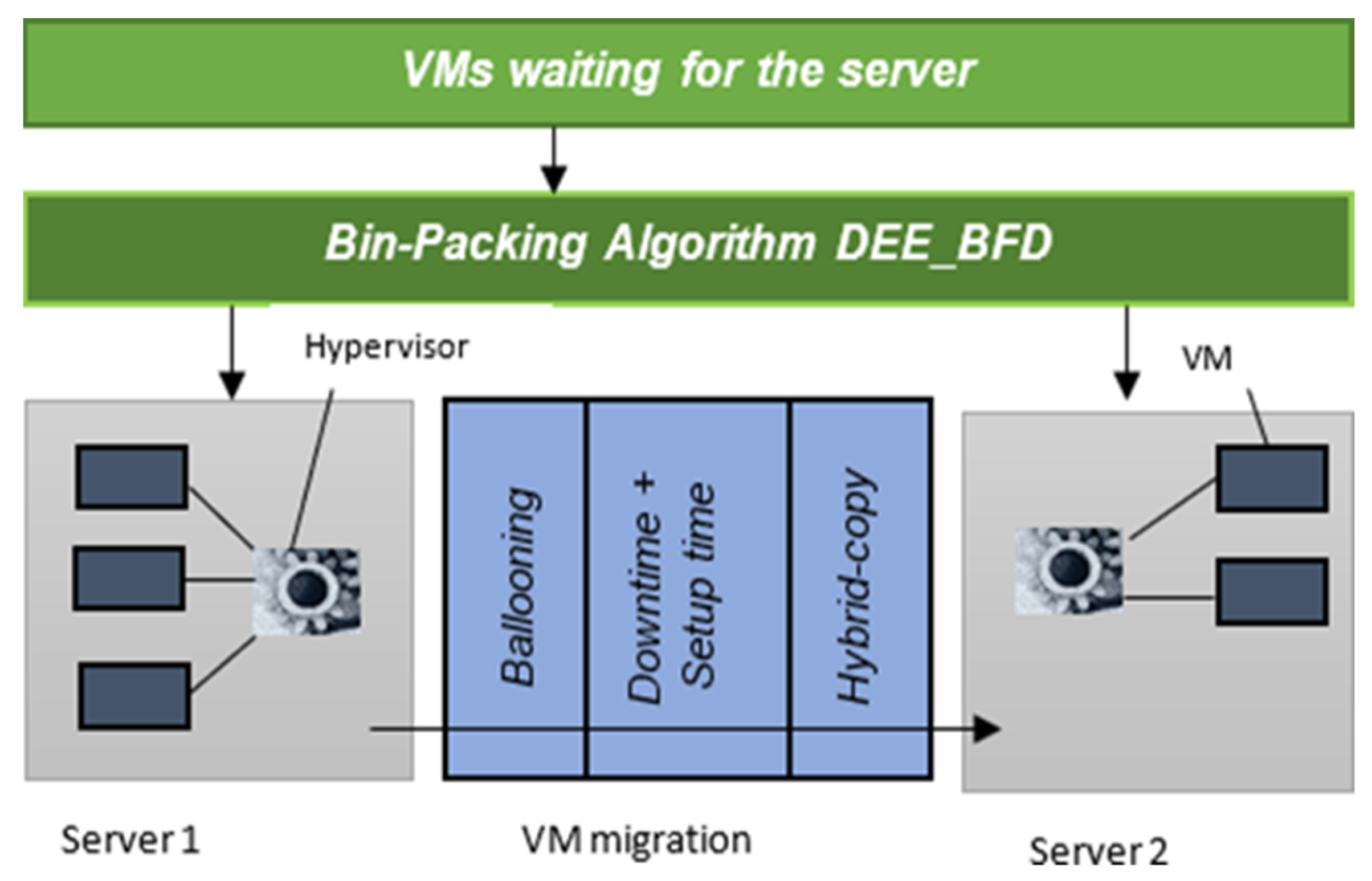

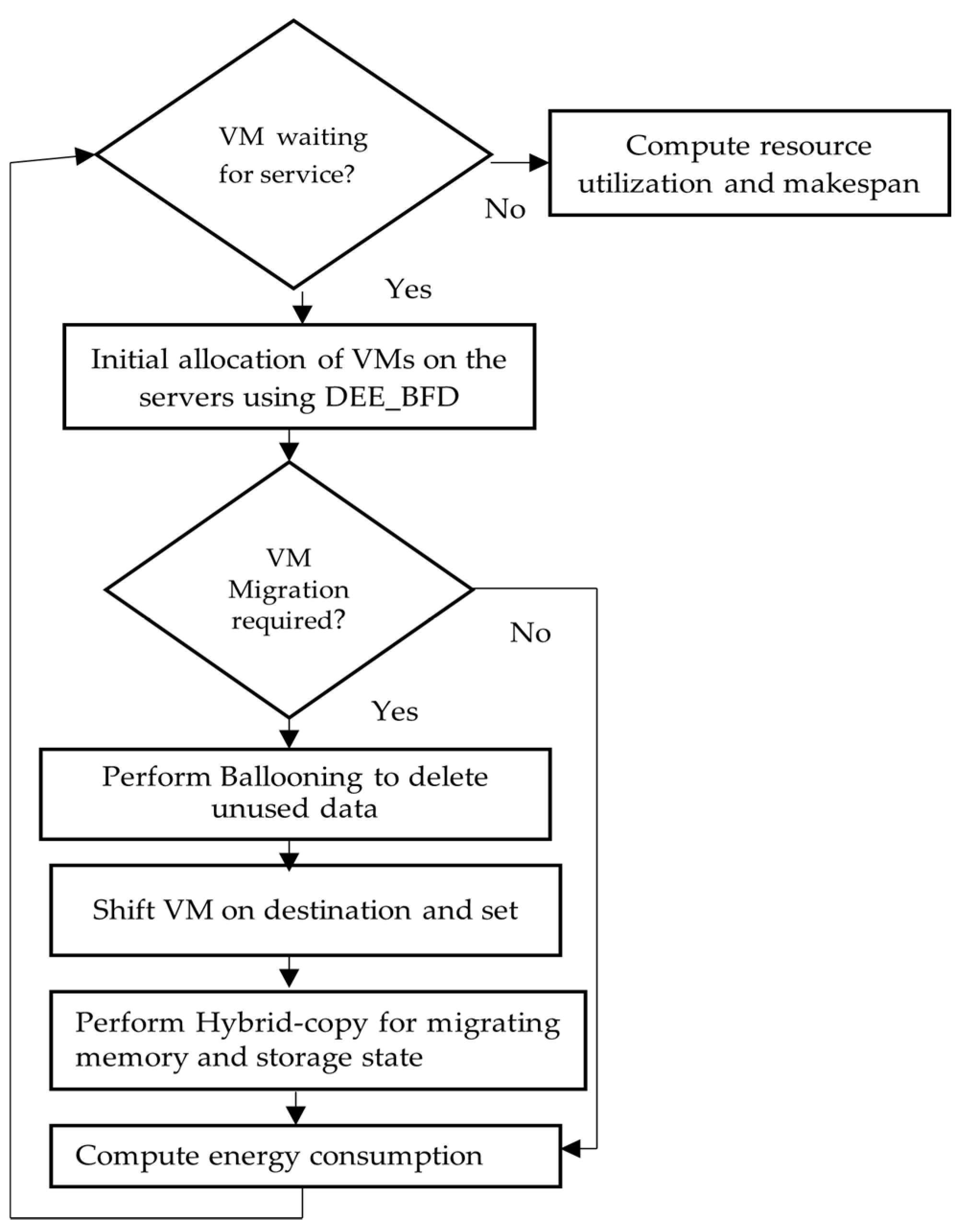

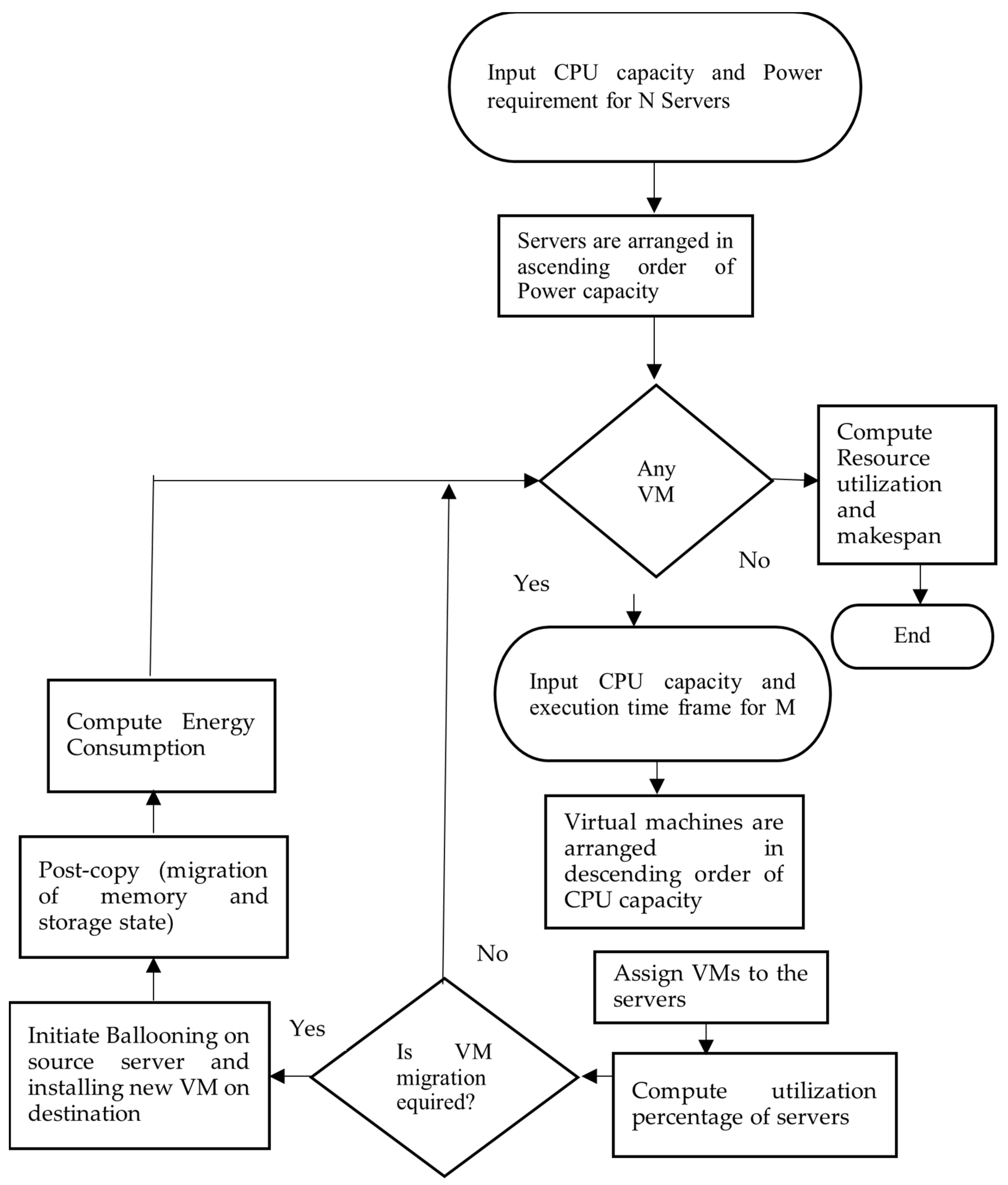

- Live migration with ballooning (LMB)—As presented in Algorithm 1, the waiting VMs are first allotted to the available servers. If a server is overloaded, the VM with the minimum CPU capacity is selected for migration from it to another server that has sufficient capacity. Ballooning is performed first to delete unused pages. Then, the memory and disk storage state is migrated in the pre-copy stage. After this data has been copied, the VM is turned off at the source server and resumed at the destination once its configuration has been set up. Next, the remaining memory and storage state is migrated in the post-copy stage. Once the migration is over, energy consumption, total migration time, and downtime are computed. When no VMs are left, resource utilization and makespan are calculated. The framework is shown in Figure 2, and the flowchart is shown in Figure 3.
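The selection rule in LMB (migrate the minimum-CPU-capacity VM off an overloaded server to a server with sufficient space) can be sketched as follows. The `Server` class, the overload test (total VM CPU demand exceeding server CPU capacity), and the first-fit choice of destination are assumptions made for illustration; Algorithm 1 may differ in these details.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Server:
    name: str
    cpu_capacity: int                                   # instruction cycles
    vms: dict[str, int] = field(default_factory=dict)   # VM name -> CPU demand

    def load(self) -> int:
        return sum(self.vms.values())

    def overloaded(self) -> bool:
        return self.load() > self.cpu_capacity

    def free(self) -> int:
        return self.cpu_capacity - self.load()


def plan_migration(src: Server, servers: list[Server]) -> tuple[str, str] | None:
    """Return (VM to move, destination server), or None if no move is needed or possible."""
    if not src.overloaded():
        return None
    vm = min(src.vms, key=lambda name: src.vms[name])  # minimum-CPU-capacity VM
    for dst in servers:                                 # first server with enough room
        if dst is not src and dst.free() >= src.vms[vm]:
            return vm, dst.name
    return None
```

In a hypothetical placement where server 3 (1500 instruction cycles, as in the server table) hosts VMs of 1200 and 800 cycles, it is overloaded, so the 800-cycle VM is moved to server 2 if that server has at least 800 cycles free.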

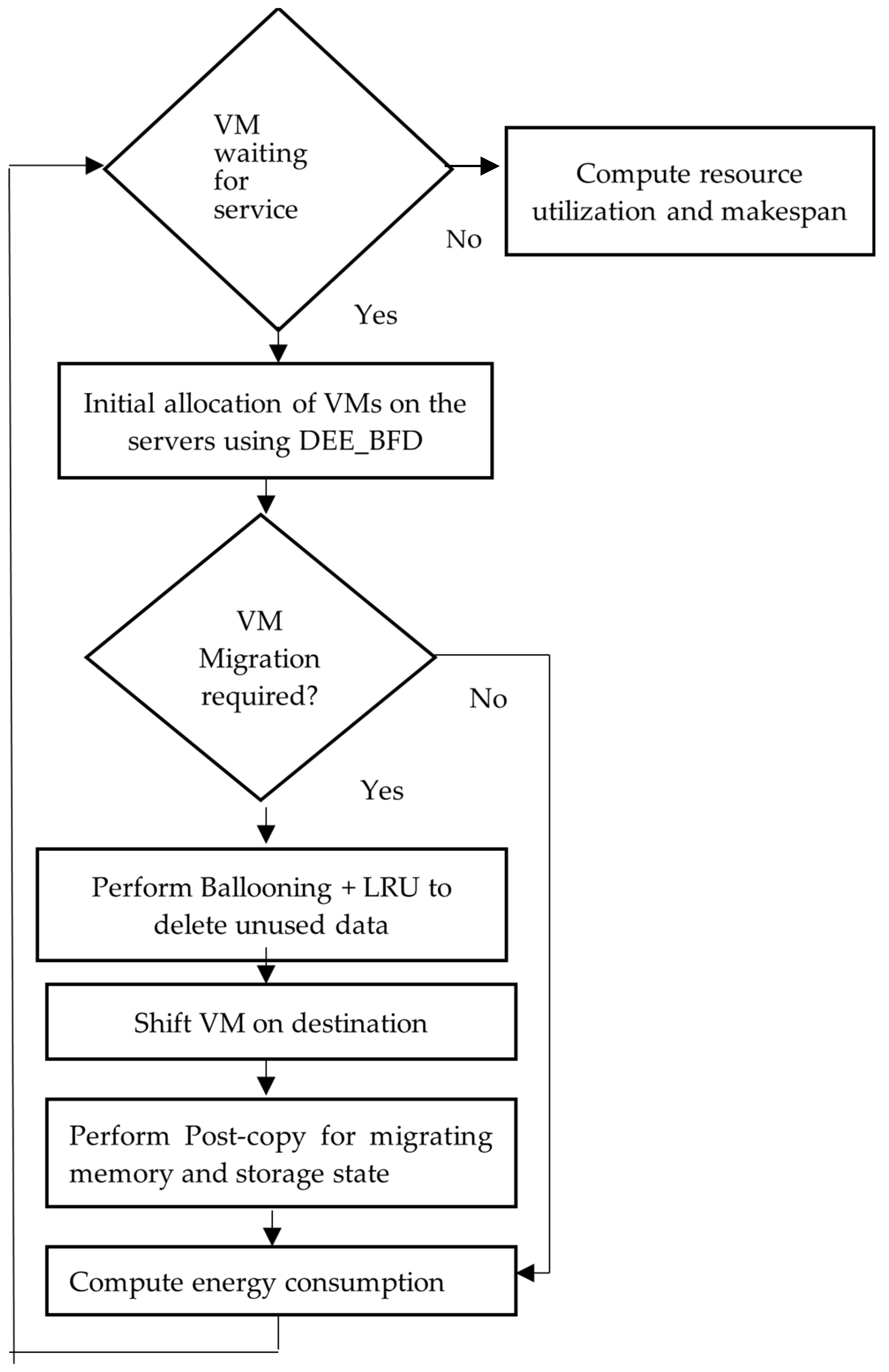

- Live migration with efficient ballooning (LMEB)—As presented in Algorithm 2, the waiting VMs are first allotted to the available servers. If a server is overloaded, the VM with the minimum CPU capacity is selected for migration from it to another server that has sufficient capacity. Ballooning is performed first to delete unused pages and disk blocks using the least recently used (LRU) technique, which takes into account the time at which each page or block was generated. Then, the VM is turned off at the source server and resumed at the destination after its configuration has been set up. Next, the memory and storage state is migrated in the post-copy stage. Once the migration is over, energy consumption, total migration time, and downtime are calculated. If no VMs are left waiting, resource utilization and makespan are computed. The framework is shown in Figure 4, and the flowchart is shown in Figure 5.
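The effect of the LMEB changes on timing can be sketched with the testbed constants (10 s per page or block, 10 s from halt to resumption, 5 s setup). This is a simplified model under assumptions that the text does not state: the destination setup runs concurrently with ballooning, so only the longer of the two counts toward migration time, and the function name `lmeb_timing` and its `units` and `balloon_time` inputs are hypothetical.

```python
TRANS_T = 10.0  # s to send one memory page or disk block (testbed value)
S_T = 10.0      # s between halt at the source and resumption at the destination
SET_T = 5.0     # s to set up the VM at the destination


def lmeb_timing(units: int, balloon_time: float = 0.0) -> tuple[float, float]:
    """Return (total migration time, downtime) in seconds for one LMEB migration.

    units:        pages + blocks left after LRU ballooning
    balloon_time: duration of ballooning; destination setup overlaps it
    """
    before_halt = max(balloon_time, SET_T)  # setup proceeds in parallel with ballooning
    downtime = S_T                          # setup is no longer inside the halt
    post_copy = units * TRANS_T             # single post-copy stage, no pre-copy
    return before_halt + downtime + post_copy, downtime


lmb_downtime = S_T + SET_T  # LMB sets up the VM while it is halted
print(lmeb_timing(23))                                              # (245.0, 10.0)
print(f"{(lmb_downtime - lmeb_timing(23)[1]) / lmb_downtime:.0%}")  # 33%
```

In this model the 10 s versus 15 s downtime follows only from moving the setup out of the halt; the total migration time depends on the hypothetical unit count.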

Algorithm 1. Live migration with ballooning (LMB)

Algorithm 2. Live migration with efficient ballooning (LMEB)

4. Experimental Testbed

- The maximum CPU capacity of a server was 3000 instruction cycles.

- The maximum power capacity of a server was 500 kWh.

- The maximum CPU capacity of a VM was 1500 instruction cycles.

- A single memory page or disk block took 10 s to transfer to the destination server over the network connection.

- The transfer process consumed 10 kWh of energy per second.

- The time between switching off the VM at the source server and resuming it at the destination server was 10 s.

- The setup time of the VM at the destination server, using the configuration of the VM running on the source server, was 5 s.
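The testbed parameters can be collected in one place and used to estimate the energy spent on data transfer. The sketch below assumes that transfer energy accrues only while pages or blocks are on the network (number of units × 10 s × 10 kWh per second). The `Testbed` class and `transfer_energy` function are hypothetical, and the sketch is not intended to reproduce the energy values reported in the Results, which depend on further quantities not listed here.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Testbed:
    server_cpu_max: int = 3000       # instruction cycles
    server_power_max: float = 500.0  # kWh, unit as given in the testbed description
    vm_cpu_max: int = 1500           # instruction cycles
    trans_t: float = 10.0            # s to transfer one memory page or disk block
    energy_per_s: float = 10.0       # kWh consumed per second of transfer
    s_t: float = 10.0                # s from halt at the source to resumption
    set_t: float = 5.0               # s to set up the VM at the destination


def transfer_energy(units: int, tb: Testbed = Testbed()) -> float:
    """Energy (kWh) for sending `units` pages/blocks, counting transfer time only."""
    return units * tb.trans_t * tb.energy_per_s


print(transfer_energy(5))  # 500.0
```

Using a `Testbed()` instance as a default argument is safe here because the dataclass is frozen and therefore cannot be mutated between calls.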

5. Results

5.1. Statistical Analysis

5.2. Discussion

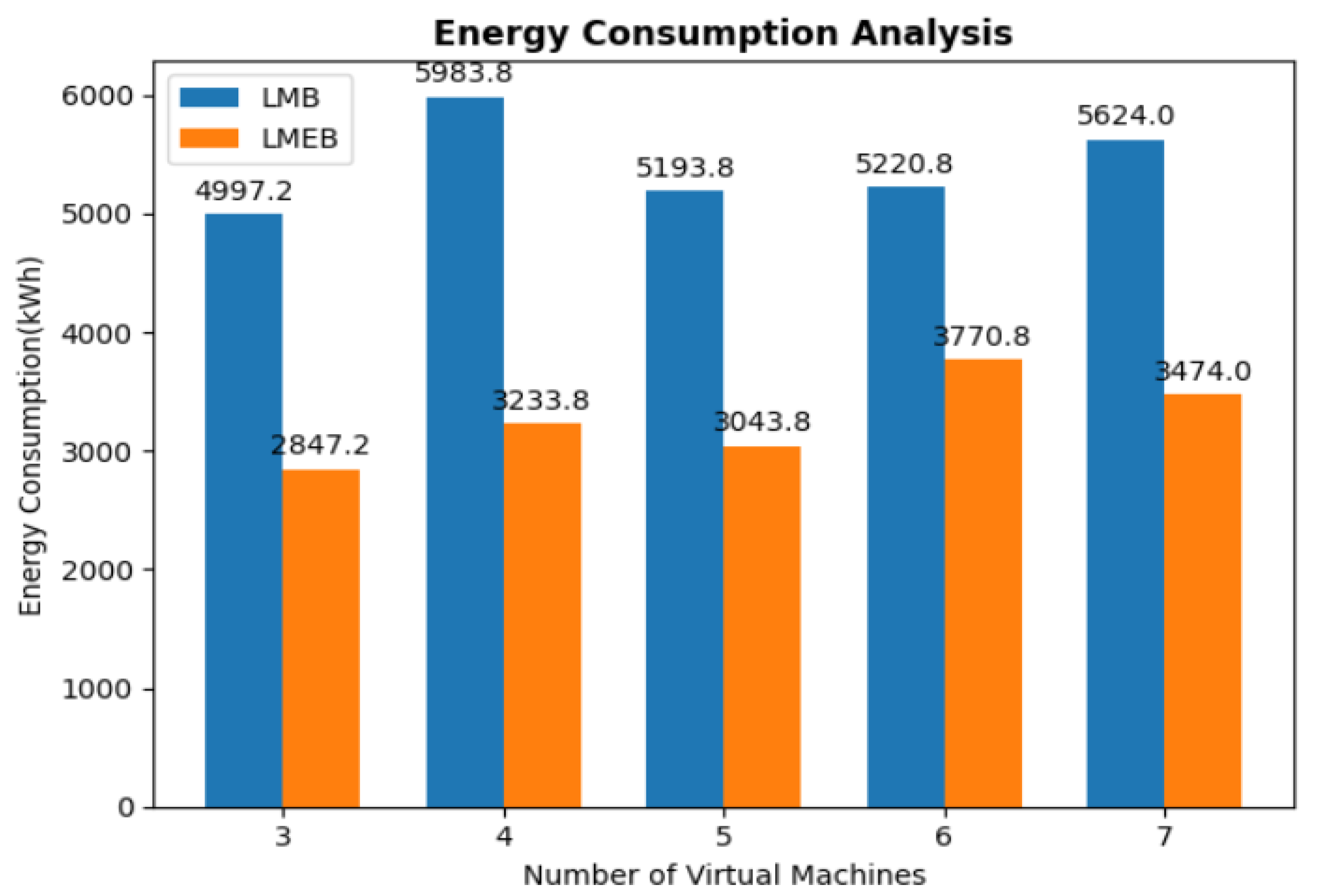

- Energy consumption—The standard deviation and variance were computed, and the results are presented in Table 11. The variance indicates the consistency of an algorithm: the lower the value, the greater the consistency. LMEB had the lower variance, so its energy consumption was more consistent than that of LMB. LMEB was also more energy efficient: the average energy consumption of the existing algorithm (LMB) was 5403.92 kWh, and that of the proposed algorithm (LMEB) was 3273.92 kWh, a reduction of 39%.
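The figures quoted above can be checked directly from the per-configuration energy values in the results. The short script below uses only the Python standard library; it shows that the standard deviation and variance in Table 11 correspond to the sample (n − 1) definitions and recomputes the reduction in average energy consumption.

```python
from statistics import mean, stdev, variance

# Energy consumption (kWh) for 3, 4, 5, 6 and 7 VMs, from the results
lmb = [4997.2, 5983.8, 5193.8, 5220.8, 5624]
lmeb = [2847.2, 3233.8, 3043.8, 3770.8, 3474]

for name, data in (("LMB", lmb), ("LMEB", lmeb)):
    # statistics.stdev and statistics.variance use the sample (n - 1) definition
    print(f"{name}: mean={mean(data):.2f} sd={stdev(data):.2f} var={variance(data):.0f}")

reduction = (mean(lmb) - mean(lmeb)) / mean(lmb)
print(f"reduction={reduction:.1%}")
```

Running it prints means of 5403.92 and 3273.92 kWh, standard deviations of 396.17 and 361.79, variances of 156950 and 130894, and a reduction of 39.4%.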

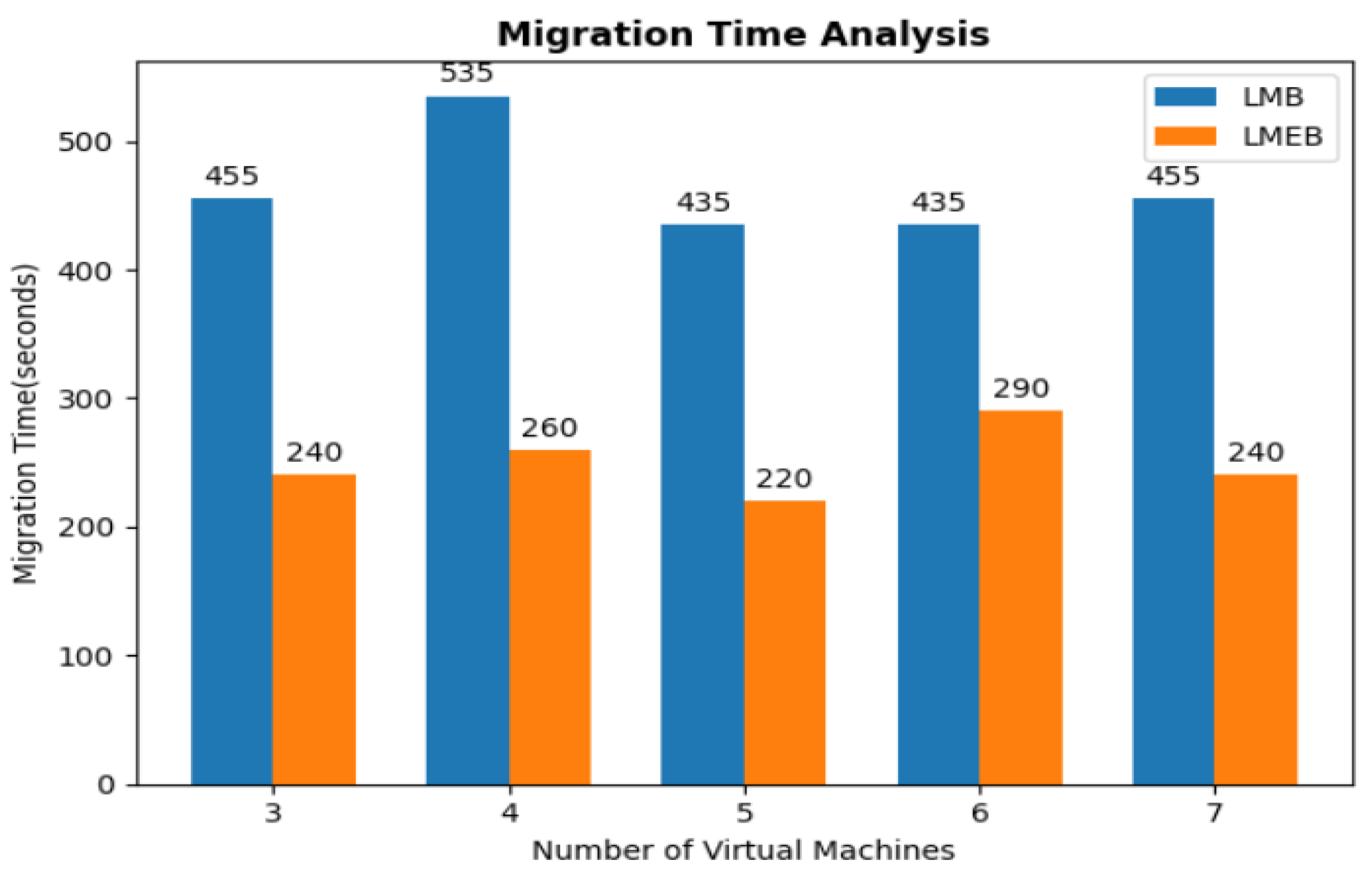

- Migration time—According to Table 9, the migration time of LMEB was smaller than that of LMB. The average migration time of LMB was 463 s, and that of LMEB was 250 s, so it was reduced by 46%.

- Downtime—The downtime depends on the network properties between the source and destination servers and was constant across all cases for each algorithm. The downtime of LMB was 15 s, and that of LMEB was 10 s, a reduction of approximately 33%. LMEB has the smaller downtime because it does not include the setup time (5 s) of the virtual machine at the destination.

- Resource utilization—The values were identical for both algorithms in all cases, so LMB and LMEB do not differ in terms of resource utilization.

- Atomicity and convergence—LMEB was designed to preserve both properties. Convergence is preserved because data are transferred only once, during the post-copy stage, so the data at the source and destination machines do not diverge. Atomicity is preserved because the migration process was not affected by any other resources and therefore completed successfully.
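The migration-time and downtime reductions quoted above follow from the migration-time results and the constant downtimes. The check below uses only the standard library; the helper name `reduction` is hypothetical. Note that a drop in downtime from 15 s to 10 s is a reduction of one third.

```python
from statistics import mean

# Total migration time (s) for 3, 4, 5, 6 and 7 VMs, from the results
lmb_time = [455, 535, 435, 435, 455]
lmeb_time = [240, 260, 220, 290, 240]


def reduction(old: float, new: float) -> float:
    """Relative reduction when a quantity falls from `old` to `new`."""
    return (old - new) / old


print(mean(lmb_time), mean(lmeb_time))                       # 463 250
print(f"{reduction(mean(lmb_time), mean(lmeb_time)):.0%}")   # 46%
print(f"{reduction(15, 10):.0%}")                            # 33% (downtime, LMB to LMEB)
```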

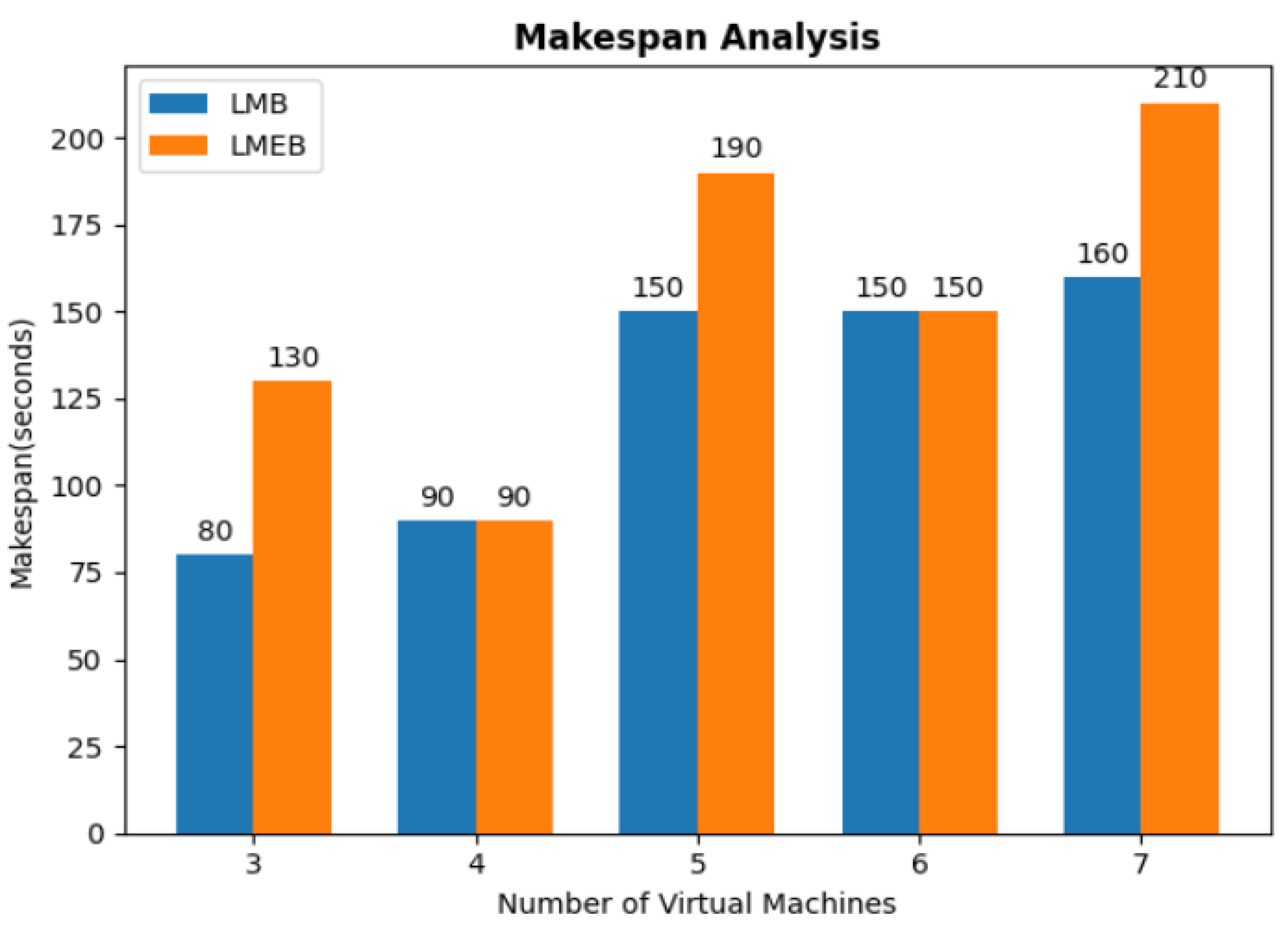

- Makespan—According to Table 10, the makespan of LMEB was larger than that of LMB in three of the five cases (3, 5, and 7 VMs) and equal in the other two. Therefore, LMEB does not guarantee a reduction in the total working time of the servers.

5.3. Complexity

6. Workflow of Cloud Data Center

7. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Savu, L. Cloud Computing: Deployment Models, Delivery Models, Risks and Research Challenges. In Proceedings of the 2011 International Conference on Computer and Management, CAMAN 2011, Wuhan, China, 19–21 May 2011.

- Xing, Y.; Zhan, Y. Virtualization and cloud computing. Lect. Notes Electr. Eng. 2012, 143, 305–312.

- Kapil, D.; Pilli, E.S.; Joshi, R.C. Live virtual machine migration techniques: Survey and research challenges. In Proceedings of the 2013 3rd IEEE International Advance Computing Conference, IACC, Ghaziabad, India, 22–23 February 2013; pp. 963–969.

- Zhang, F.; Liu, G.; Fu, X.; Yahyapour, R. A Survey on Virtual Machine Migration: Challenges, Techniques, and Open Issues. IEEE Commun. Surv. Tutor. 2018, 20, 1206–1243.

- Hines, M.R.; Gopalan, K. Post-Copy Based Live Virtual Machine Migration Using Adaptive Pre-Paging and Dynamic Self-Ballooning. In Proceedings of the 2009 ACM SIGPLAN/SIGOPS International Conference on Virtual Execution Environments (VEE’09), Washington, DC, USA, 11–13 March 2009; pp. 51–60.

- Zhang, X.; Huo, Z.; Ma, J.; Meng, D. Exploiting data deduplication to accelerate live virtual machine migration. In Proceedings of the IEEE International Conference on Cluster Computing, ICCC 2010, Heraklion, Greece, 20–24 September 2010; pp. 88–96.

- Mashtizadeh, A.; Celebi, E.; Garfinkel, T.; Cai, M. The design and evolution of live storage migration in VMware ESX. In Proceedings of the 2011 USENIX Annual Technical Conference, USENIX ATC 2011, Portland, OR, USA, 15–17 June 2011; pp. 187–200.

- Murtazaev, A.; Oh, S. Sercon: Server consolidation algorithm using live migration of virtual machines for green computing. IETE Tech. Rev. 2011, 28, 212–231.

- Adhianto, L.; Banerjee, S.; Fagan, M.; Krentel, M.; Marin, G.; Mellor-Crummey, J.; Tallent, N.R. HPCTOOLKIT: Tools for performance analysis of optimized parallel programs. Concurr. Comput. Pract. Exp. 2010, 22, 685–701.

- Jeong, J.; Kim, S.H.; Kim, H.; Lee, J.; Seo, E. Analysis of virtual machine live-migration as a method for power-capping. J. Supercomput. 2013, 66, 1629–1655.

- Jin, H.; Deng, L.; Wu, S.; Shi, X.; Chen, H.; Pan, X. MECOM: Live migration of virtual machines by adaptively compressing memory pages. Future Gener. Comput. Syst. 2014, 38, 23–35.

- Jenitha, V.H.A.; Veeramani, R. Dynamic memory Allocation using ballooning and virtualization in cloud computing. IOSR J. Comput. Eng. 2014, 16, 19–23.

- Wang, C.; Hao, Z.; Cui, L.; Zhang, X.; Yun, X. Introspection-Based Memory Pruning for Live VM Migration. Int. J. Parallel Program. 2017, 45, 1298–1309.

- Zhang, F.; Fu, X.; Yahyapour, R. LayerMover: Storage migration of virtual machine across data centers based on three-layer image structure. In Proceedings of the 2016 IEEE 24th International Symposium on Modeling, Analysis and Simulation of Computer and Telecommunication Systems, MASCOTS 2016, London, UK, 19–21 September 2016; pp. 400–405.

- Wu, T.Y.; Guizani, N.; Huang, J.S. Live migration improvements by related dirty memory prediction in cloud computing. J. Netw. Comput. Appl. 2017, 90, 83–89.

- Deshmukh, P.P.; Amdani, S.Y. Virtual Memory Optimization Techniques in Cloud Computing. In Proceedings of the 2018 3rd IEEE International Conference on Research in Intelligent and Computing in Engineering, RICE 2018, San Salvador, El Salvador, 22–24 August 2018.

- Patel, M.; Chaudhary, S.; Garg, S. Improved pre-copy algorithm using statistical prediction and compression model for efficient live memory migration. Int. J. High Perform. Comput. Netw. 2018, 11, 55–65.

- Sun, G.; Liao, D.; Anand, V.; Zhao, D.; Yu, H. A new technique for efficient live migration of multiple virtual machines. Future Gener. Comput. Syst. 2016, 55, 74–86.

- Kaur, J.; Chana, I. Review of Live Virtual Machine Migration Techniques in Cloud Computing. In Proceedings of the 2018 International Conference on Circuits and Systems in Digital Enterprise Technology, ICCSDET 2018, Kottayam, India, 21–22 December 2018; pp. 1–6.

- Chashoo, S.F.; Malhotra, D. VM-Mig-framework: Virtual machine migration with and without ballooning. In Proceedings of the 2018 5th International Conference on Parallel, Distributed and Grid Computing, PDGC 2018, Solan, India, 20–22 December 2018; pp. 368–373.

- Silva, D.D.; Eds, L.j.Z.; Hutchison, D. Cloud Computing – CLOUD 2019; Springer International Publishing: Berlin/Heidelberg, Germany, 2019; Volume 11513, pp. 99–113.

- Mazrekaj, A.; Nuza, S.; Zatriqi, M.; Alimehaj, V. An overview of virtual machine live migration techniques. Int. J. Electr. Comput. Eng. 2019, 9, 4433–4440.

- He, T.; Buyya, R. A Taxonomy of Live Migration Management in Cloud Computing. arXiv 2021, arXiv:2112.02593.

- Mishra, P.; Aggarwal, P.; Vidyarthi, A.; Singh, P.; Khan, B.; Alhelou, H.H.; Siano, P. VMShield: Memory Introspection-Based Malware Detection to Secure Cloud-Based Services against Stealthy Attacks. IEEE Trans. Ind. Inform. 2021, 17, 6754–6764.

- Karmakar, K.; Das, R.K.; Khatua, S. An ACO-based multi-objective optimization for cooperating VM placement in cloud data center. J. Supercomput. 2022, 78, 3093–3121.

- Yin, L.; Zhou, J.; Sun, J. A stochastic algorithm for scheduling bag-of-tasks applications on hybrid clouds under task duration variations. J. Syst. Softw. 2022, 184, 111123.

- Bui, K.T.; Ho, H.D.; Pham, T.V.; Tran, H.C. Virtual machines migration game approach for multi-tier application in infrastructure as a service cloud computing. IET Netw. 2020, 9, 326–337.

- Pande, S.K.; Panda, S.K.; Das, S.; Sahoo, K.S.; Luhach, A.K.; Jhanjhi, N.Z.; Alroobaea, R.; Sivanesan, S. A resource management algorithm for virtual machine migration in vehicular cloud computing. Comput. Mater. Contin. 2021, 67, 2647–2663.

- Tran, C.H.; Bui, T.K.; Pham, T.V. Virtual machine migration policy for multi-tier application in cloud computing based on Q-learning algorithm. Comput. 2022, 104, 1285–1306.

- Gupta, A.; Namasudra, S. A novel technique for accelerating live migration in cloud computing. Autom. Softw. Eng. 2022, 29, 34.

- Tuli, K.; Kaur, A.; Malhotra, M. Efficient virtual machine migration algorithms for data centers in cloud computing. In Proceedings of the International Conference on Innovative Computing and Communications, Delhi, India, 25–26 March 2022; p. 473.

- Gupta, K.; Katiyar, V. Energy aware virtual machine migration techniques for cloud environment. Int. J. Comput. Appl. 2016, 141, 11–16.

- Kalra, M.; Singh, S. A review of metaheuristic scheduling techniques in cloud computing. Egypt. Inform. J. 2015, 16, 275–295.

- Gupta, N.; Gupta, K.; Gupta, D.; Juneja, S.; Turabieh, H.; Dhiman, G.; Kautish, S.; Viriyasitavat, W. Enhanced virtualization-based dynamic bin-packing optimized energy management solution for heterogeneous clouds. Math. Probl. Eng. 2022, 2022, 8734198.

- Datta, P.; Sharma, B. A survey on IoT architectures, protocols, security and smart city based applications. In Proceedings of the 8th International Conference on Computing, Communications and Networking Technologies, ICCCNT 2017, Delhi, India, 3–5 July 2017.

- Bali, M.S.; Gupta, K.; Koundal, D.; Zaguia, A.; Mahajan, S.; Pandit, A.K. Smart architectural framework for symmetrical data offloading in IOT. Symmetry 2021, 13, 1889.

- Mohammadzadeh, A.; Masdari, M.; Gharehchopogh, F.S.; Jafarian, A. A hybrid multi-objective metaheuristic optimization algorithm for scientific workflow scheduling. Clust. Comput. 2021, 24, 1479–1503.

- Mohammadzadeh, A.; Masdari, M.; Gharehchopogh, F. An improved grey wolves optimization algorithm for workflow scheduling in cloud computing environment. J. Soft Comput. Inf. Technol. 2020, 8, 17–29.

- Gharehpasha, S.; Masdari, M.; Jafarian, A. Power efficient virtual machine placement in cloud data centers with a discrete and chaotic hybrid optimization algorithm. Clust. Comput. 2021, 24, 1293–1315.

- Gharehpasha, S.; Masdari, M.; Jafarian, A. Virtual machine placement in cloud data centers using a hybrid multi-verse optimization algorithm. Artif. Intell. Rev. 2021, 54, 2221–2257.

- Masdari, M.; Gharehpasha, S.; Jafarian, A. An optimal vm placement in cloud data centers based on discrete chaotic whale optimization algorithm. J. Adv. Comput. Eng. Technol. 2020, 6, 201–212.

- Gharehpasha, S.; Masdari, M.; Jafarian, A. The placement of virtual machines under optimal conditions in cloud datacenter. Inf. Technol. Control. 2019, 48, 545–556.

| Abbreviation | Definition |
|---|---|
| CPUi | CPU capacity of server and VM |
| POWi | Power capacity of server |
| TIMEi | Execution time of VM |
| UTILi | Utilization factor of server |
| SR | Source server for VM migration |
| DS | Destination server for VM migration |
| Num_Pagess | Number of memory pages used by VM selected for migration |
| Num_Blockss | Number of disk blocks used by VM selected for migration |
| Transt | Total time needed to transfer memory page or disk block from one server to the other |
| St | Time between halt of VM at source and resumption at destination server |
| Sett | Time required to set up VM at destination |
| TMT | Total migration time |
| Pi(F,t) [31] | Power capacity of the ith server as a function of placement "F" and time "t" |
| Ui(F,t) [31] | Utilization factor of the ith server in terms of placement "F" and time "t" |
| Resutili [32] | Resource utilization of the ith server |
| Makespan [32] | Time from submission of the 1st VM to completion of the last VM |
| CPU_utili | Utilized CPU value on the ith server |
| Tvmj | Execution time of VM j |
| Tvm_maxi | Maximum time of any VM on server i |

| Server No. | 1 | 2 | 3 | 4 | 5 |
|---|---|---|---|---|---|
| CPU capacity (instruction cycles) | 2500 | 2000 | 1500 | 3000 | 2700 |
| Power capacity (kWh) | 300 | 200 | 450 | 350 | 500 |

| Virtual Machine No. | 1 | 2 | 3 |
|---|---|---|---|
| CPU capacity (instruction cycles) | 1200 | 800 | 1000 |
| Execution time (seconds) | 30 | 50 | 40 |

| Virtual Machine No. | 1 | 2 | 3 | 4 |
|---|---|---|---|---|
| CPU capacity (instruction cycles) | 1200 | 800 | 1000 | 2000 |
| Execution time (seconds) | 30 | 50 | 40 | 20 |

| Virtual Machine No. | 1 | 2 | 3 | 4 | 5 |
|---|---|---|---|---|---|
| CPU capacity (instruction cycles) | 1200 | 800 | 1000 | 1300 | 700 |
| Execution time (seconds) | 30 | 50 | 40 | 20 | 90 |

| Virtual Machine No. | 1 | 2 | 3 | 4 | 5 | 6 |
|---|---|---|---|---|---|---|
| CPU capacity (instruction cycles) | 1200 | 800 | 1000 | 1300 | 700 | 600 |
| Execution time (seconds) | 30 | 50 | 40 | 20 | 90 | 40 |

| Virtual Machine No. | 1 | 2 | 3 | 4 | 5 | 6 | 7 |
|---|---|---|---|---|---|---|---|
| CPU capacity (instruction cycles) | 1200 | 800 | 1000 | 1300 | 900 | 750 | 2000 |
| Execution time (seconds) | 30 | 50 | 40 | 20 | 90 | 40 | 10 |

| No. of Virtual Machines | LMB energy consumption (kWh) | LMEB energy consumption (kWh) |
|---|---|---|
| 3 | 4997.2 | 2847.2 |
| 4 | 5983.8 | 3233.8 |
| 5 | 5193.8 | 3043.8 |
| 6 | 5220.8 | 3770.8 |
| 7 | 5624 | 3474 |

| No. of Virtual Machines | LMB migration time (s) | LMEB migration time (s) |
|---|---|---|
| 3 | 455 | 240 |
| 4 | 535 | 260 |
| 5 | 435 | 220 |
| 6 | 435 | 290 |
| 7 | 455 | 240 |

| No. of Virtual Machines | LMB makespan | LMEB makespan |
|---|---|---|
| 3 | 80 | 130 |
| 4 | 90 | 90 |
| 5 | 150 | 190 |
| 6 | 150 | 150 |
| 7 | 160 | 210 |

| Energy consumption statistic | LMB | LMEB |
|---|---|---|
| Standard deviation | 396.17 | 361.79 |
| Variance | 156,950 | 130,894 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Gupta, N.; Gupta, K.; Qahtani, A.M.; Gupta, D.; Alharithi, F.S.; Singh, A.; Goyal, N. Energy-Aware Live VM Migration Using Ballooning in Cloud Data Center. Electronics 2022, 11, 3932. https://doi.org/10.3390/electronics11233932

Gupta N, Gupta K, Qahtani AM, Gupta D, Alharithi FS, Singh A, Goyal N. Energy-Aware Live VM Migration Using Ballooning in Cloud Data Center. Electronics. 2022; 11(23):3932. https://doi.org/10.3390/electronics11233932

Chicago/Turabian Style: Gupta, Neha, Kamali Gupta, Abdulrahman M. Qahtani, Deepali Gupta, Fahd S. Alharithi, Aman Singh, and Nitin Goyal. 2022. "Energy-Aware Live VM Migration Using Ballooning in Cloud Data Center" Electronics 11, no. 23: 3932. https://doi.org/10.3390/electronics11233932

APA Style: Gupta, N., Gupta, K., Qahtani, A. M., Gupta, D., Alharithi, F. S., Singh, A., & Goyal, N. (2022). Energy-Aware Live VM Migration Using Ballooning in Cloud Data Center. Electronics, 11(23), 3932. https://doi.org/10.3390/electronics11233932