1. Introduction

Denial of service (DoS) attacks have been one of the most persistent problems in network security for many years. Although numerous detection and prevention schemes have been devised, the threat of DoS and distributed DoS (DDoS) attacks persists, and the number of attacks increases every year [1]. DDoS attacks remain a real threat going forward, as they continue to increase. According to the report in [2], a DDoS intelligence system detected 57,116 attacks in the third quarter of 2022. Most of the attacks were launched in the U.S.: the share was 45.95% in the second quarter of 2022 and 39.60% in the third quarter. Worldwide, this ratio increased from 38.69% in Q3 2021 to 53.53% in Q3 2022. According to [3], malicious DDoS attacks increased by 60% during the first six months of 2022 compared to the same period in 2021. These statistics point to an alarming situation for smart systems and show the need to improve the security of smart devices. In recent years, several large IT companies have faced DDoS attacks; one of the biggest was launched on AWS in February 2020 [4], with traffic of approximately 2.3 terabits per second (Tbps). Similarly, GitHub was targeted by a DDoS attack in 2018.

In today’s world, the internet plays a vital role in many aspects of human life, such as education, transport, banking [5], hospitals, entertainment, personal use, administration, trade, communication [6,7,8], e-commerce [9], environment [10], and many more [11,12]. It has made human life easier by revolutionizing communication and technology. As technology advances, however, security risks also emerge and grow. Internet security is no exception: data damage, theft of resources, breaches of confidentiality, social harassment, and online fraud have increased substantially [13,14,15,16].

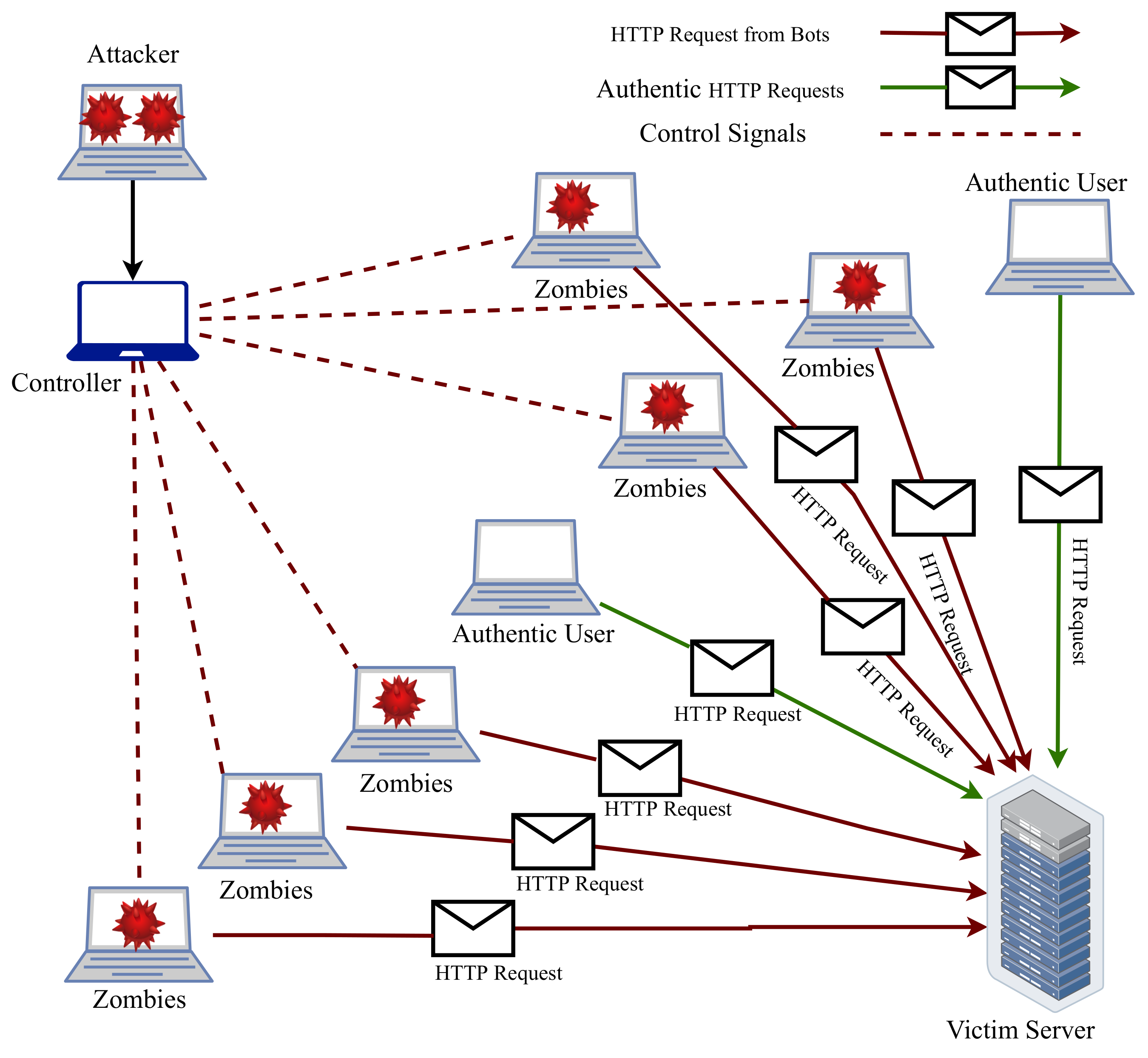

Data availability is one of the significant challenges in network security, and DDoS is one of the leading attacks that compromise the availability of a network. It does so by repeatedly requesting the targeted resources in order to strain the services provided by the system. In a DDoS attack, requests come from multiple systems to a single target, as shown in Figure 1. Through DDoS attacks, malicious users can completely disrupt the availability of systems and services to legitimate users. DDoS attacks continue to grow and put network security at risk. Traditionally, DDoS attacks use a collection of compromised systems known as a botnet. The major objective of the attack is to exhaust server resources such as memory, bandwidth, and processing capacity, so that services become unavailable to legitimate users. A comprehensive review of recent DDoS attacks is provided in [17], while many mitigation techniques are discussed in [18,19,20]. DoS attacks can be classified along several dimensions. Based on the network protocol, DoS attacks are divided into transport-layer and application-layer attacks.

This study mainly focuses on application-layer DoS/DDoS attacks. In network-layer DDoS attacks, attackers exploit half-open connections over the transmission control protocol (TCP) or user datagram protocol (UDP) and send numerous forged payloads, normally using internet protocol (IP) spoofing. In application-layer DDoS attacks, by contrast, attackers send many requests to exhaust well-known application services such as the hypertext transfer protocol (HTTP) and the domain name system (DNS). These requests are indistinguishable from legitimate user requests at the network level, although the attacks produce a higher rate of bogus requests than of legitimate ones. They are hard to detect because the connections are already established and the requests appear to originate from authorized users.

This study proposes a system to detect DDoS attacks using machine learning techniques. Monitoring network traffic manually to protect a system from attackers is very difficult, so a smart protection system that detects attacks automatically is needed. The proposed system is simple yet effective and, in the comparison reported in this study, performs better than existing approaches. The contributions of this research are as follows:

An efficient and effective DDoS/DoS attack detection framework is proposed, which can perform early detection of several attacks, such as HTTP flood and stealth DDoS/DoS attacks. For experiments, the 'Application layer DDoS attack dataset' is used. The aim is higher attack detection performance at reduced computational complexity.

A novel feature selection approach is developed that selects highly significant features by combining selected features from principal component analysis (PCA) and singular value decomposition (SVD).

Experiments are performed with several machine learning models: logistic regression (LR), gradient boosting machine (GBM), random forest (RF), and extra tree classifier (ETC). Performance is compared with state-of-the-art approaches in terms of accuracy, precision, recall, and F1 score.
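The multi-feature idea in the second contribution can be illustrated with a minimal NumPy sketch. It assumes that "multi-features" means concatenating the leading PCA components with the leading SVD components; the component counts (`k_pca`, `k_svd`), the function names, and the random stand-in data are hypothetical and are not taken from the study.

```python
import numpy as np


def pca_features(X, k):
    """Project X onto its top-k principal components (data is centered first)."""
    Xc = X - X.mean(axis=0)
    # Rows of Vt are the principal axes, ordered by decreasing singular value.
    _, _, Vt = np.linalg.svd(Xc, full_matrices=False)
    return Xc @ Vt[:k].T


def svd_features(X, k):
    """Project X onto its top-k right singular vectors (no centering)."""
    _, _, Vt = np.linalg.svd(X, full_matrices=False)
    return X @ Vt[:k].T


def multi_features(X, k_pca, k_svd):
    """Concatenate the PCA and SVD projections column-wise (assumed combination rule)."""
    return np.hstack([pca_features(X, k_pca), svd_features(X, k_svd)])


rng = np.random.default_rng(0)
X = rng.normal(size=(200, 78))   # random stand-in for 78 traffic attributes
Z = multi_features(X, k_pca=5, k_svd=5)
print(Z.shape)                   # (200, 10)
```

The reduced matrix `Z` would then be passed to the classifiers in place of the original 78 attributes.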

This study is organized into five sections. Section 2 presents the related work. Section 3 explains the materials and methods, including the dataset, machine learning methods, and the proposed methodology. Results are discussed in Section 4, while Section 5 concludes the study.

2. Related Work

Several network attack detection and protection techniques have been introduced in recent years. Intrusion detection systems (IDS) can be classified into signature-based, anomaly-based, and hybrid structures [21]. The first type detects attacks by comparing an event against a database of known signatures. The second detects attacks from deviations between the current state and a stored profile of the normal state. In both cases, an alert is triggered when a match is found or a deviation is detected. Signature-based IDS are known for a low false alarm rate; however, it is very challenging to collect and maintain signatures for all possible attack variations [22]. Anomaly-based detection techniques can also identify other types of attacks, but they require more evaluation resources. Hybrid techniques combine both approaches to obtain the benefits of each [23,24]. Flooding attacks have drawn much attention and have been highlighted in recent surveys on cloud computing, wireless networks, big data, etc. [25,26,27].
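The difference between the three IDS structures can be shown with a toy sketch. The signature strings, the baseline values, and the three-standard-deviation threshold below are illustrative assumptions, not taken from any of the cited systems.

```python
from statistics import mean, stdev

# Hypothetical signature database: payload fragments of known attacks.
SIGNATURES = {"/etc/passwd", "' OR 1=1", "<script>"}


def signature_alert(payload):
    """Signature-based check: alert when a known pattern appears in the payload."""
    return any(sig in payload for sig in SIGNATURES)


def anomaly_alert(baseline, observed, threshold=3.0):
    """Anomaly-based check: alert when the observed value deviates from the
    baseline mean by more than `threshold` standard deviations."""
    mu, sigma = mean(baseline), stdev(baseline)
    return abs(observed - mu) > threshold * sigma


def hybrid_alert(payload, baseline, observed):
    """Hybrid check: alert if either detector fires."""
    return signature_alert(payload) or anomaly_alert(baseline, observed)


# Requests per second seen during normal operation (made-up numbers).
baseline_rps = [100, 110, 90, 105, 95]
print(hybrid_alert("GET /index.html", baseline_rps, 400))  # True
```

In this example the payload matches no signature, so only the anomaly check raises the alert.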

Recently, several classification approaches have been proposed for DDoS attacks. At the protocol level, DDoS attacks can be classified into two categories: network-level and application-level flooding attacks [28]. One of the major challenges in DDoS defense is early detection and impact mitigation, which requires several additional features that are not present in current approaches [29]. An HTTP-based approach is presented in [30] for the detection of HTTP flooding attacks through data sampling. The study uses the cumulative sum (CUSUM) algorithm to classify traffic as malicious or normal. Traffic analysis is based on the number of application-layer requests and the number of zero-sized packets. Results indicate that the approach achieves a detection rate between 80% and 86% with a 20% sampling rate.
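As a rough illustration of cumulative-sum change detection on a request-rate series, the following sketch implements a generic one-sided detector. It is not the exact procedure of [30]; the `target`, `slack`, and `threshold` parameters and the sample series are assumptions chosen for the example.

```python
def cusum_alarm(samples, target, slack, threshold):
    """Return the index of the first sample at which the one-sided cumulative
    sum statistic exceeds `threshold`, or None if it never does."""
    s = 0.0
    for i, x in enumerate(samples):
        # Accumulate only upward deviations beyond target + slack.
        s = max(0.0, s + (x - target - slack))
        if s > threshold:
            return i
    return None


# Made-up requests-per-second series with a flood starting at index 5.
requests_per_second = [10, 12, 9, 11, 10, 30, 35, 40, 38]
print(cusum_alarm(requests_per_second, target=10, slack=2, threshold=40))  # 6
```

The statistic stays at zero during normal traffic and crosses the threshold at the second flooded sample.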

A detection system for DDoS attacks that uses the D-FACE algorithm is presented in [31]. This mechanism uses generalized information distance (GID) metrics and generalized entropy (GE) for the detection of various DDoS attacks. The method requires substantial involvement of internet service providers (ISP), which restricts its industrial use. The Sky-Shield system was developed in [32] to defend against DDoS attacks at the application layer. To detect irregularities in traffic, it measures the divergence between two hash sketches. In the mitigation phase, blacklisting, whitelisting, and user filtering are carried out as defensive mechanisms. The results are evaluated on customized datasets. Sky-Shield mainly focuses on the detection of flooding attacks at the application layer, especially on the HTTP protocol, so it remains vulnerable to transport- and network-level flooding attacks. Another method for DDoS flooding attack detection is the semi-supervised approach in [33]. Two different clustering algorithms are used, and the final label is decided by voting. The CICIDS 2017 dataset is used for evaluation.

The study [34] deployed machine learning and deep learning models for DDoS attack detection. Experiments were performed with models such as the gated recurrent unit (GRU), RF, and LR on the CICDoS2017 and CICDDoS2019 datasets. The models achieved a 0.99 accuracy score on unseen test data and also performed well in a simulated network. Another study [35] detected malicious traffic using an ensemble classifier, extra boosting forest (EBF), with a PCA feature selection technique. Experiments were conducted on the UNSW-NB15 and IoTID20 datasets individually, as well as on both combined, and achieved 0.985 and 0.984 accuracy scores, respectively. The authors of [36] investigated three supervised machine learning algorithms, KNN, RF, and NB, for DDoS attack detection. NB outperformed the other models with a 0.985 accuracy score.

Along the same lines, the study [37] proposed an approach to detect application-layer DDoS attacks in real time using machine learning techniques. The authors deployed a multilayer perceptron (MLP) and RF with and without a big data approach. In both settings, RF achieved an accuracy score of 0.999, while MLP achieved 0.990 without big data and 0.993 with the big data approach. The study [38] performed experiments for DDoS attack prediction using supervised machine learning models, analyzing the performance of several models such as LR, Bayesian naive Bayes (BNB), random tree (RT), KNN, and the REPTree algorithm. KNN performed best with a 0.998 accuracy score. Similarly, [39] investigated DDoS traffic attack detection using a hybrid SVM-RF model. The authors derived novel features from the dataset to train the proposed model and achieved a 0.988 accuracy. An analytical summary of the discussed research works is provided in Table 1. In the literature, high-accuracy results are reported by studies that deploy complex deep learning models, at a high computational cost. Conversely, simple methods have a low computational cost but show poor attack detection accuracy. We aim to overcome this limitation by developing an approach that performs well in terms of both accuracy and computational cost.

4. Results and Discussion

This section discusses the results of the experiments for DoS/DDoS attack detection. For this purpose, multiple experiments are performed using the machine learning models with a dataset comprising application layer DoS/DDoS attacks.

4.1. Results without Feature Selection

In the first set of experiments, machine learning models were applied without any feature selection technique to classify normal and malicious traffic. A total of 78 attributes were used, and the results are given in Table 6.

Results show that tree-based ensemble models perform better than linear models, because linear models only perform well when the data are linearly separable. Increasing the number of training samples also helps to increase the overall accuracy of the models. Table 6 shows that better accuracy is achieved by training with 50,000 samples than with 25,000 samples. Adding more training samples is expected to refine the accuracy further.

Table 7 compares performance on the individual attack classes with 50,000 training samples. RF achieves the highest precision, recall, and F1 score for all classes, while GBM and ETC perform slightly worse, as shown in Figure 3. LR shows the worst performance, especially for DDoS attacks.
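For reference, per-class precision, recall, and F1 score can be computed from true and predicted labels as in the following sketch. The label sequences are made up for illustration and do not reproduce any value from Table 7.

```python
def per_class_scores(y_true, y_pred):
    """Return {label: {"precision", "recall", "f1"}} computed one class at a time."""
    labels = sorted(set(y_true) | set(y_pred))
    pairs = list(zip(y_true, y_pred))
    scores = {}
    for label in labels:
        tp = sum(t == label and p == label for t, p in pairs)  # true positives
        fp = sum(t != label and p == label for t, p in pairs)  # false positives
        fn = sum(t == label and p != label for t, p in pairs)  # false negatives
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        denom = precision + recall
        f1 = 2 * precision * recall / denom if denom else 0.0
        scores[label] = {"precision": precision, "recall": recall, "f1": f1}
    return scores


# Toy labels for the three traffic classes used in this study.
y_true = ["benign", "benign", "DoS", "DoS", "DDoS", "DDoS"]
y_pred = ["benign", "DoS", "DoS", "DoS", "DDoS", "benign"]
scores = per_class_scores(y_true, y_pred)
```

A class can have perfect precision and poor recall at the same time (as 'DDoS' does here), which is why the per-class breakdown is more informative than overall accuracy.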

4.2. Performance Using Multi-Features

In the second set of experiments, feature selection techniques, namely PCA and SVD, were used to select important features from the data samples. Applying these techniques improved the results of all learning models, as shown in Table 8 and Table 9. The multi-features from PCA and SVD boosted the accuracy of the tree-based ensemble models, and the results of LR also improved.

Table 8 shows that accuracy with all features is lower than with PCA + SVD, because PCA and SVD together provide prominent features that help the models’ training. It also shows that feature engineering techniques are valuable for machine learning and support the optimization of results. Experimental results show that the ensemble models achieve the highest accuracy, because ensemble models use several decision trees and often perform better than a single decision tree in classification. As mentioned above, this study has multiple target classes, namely 'benign', 'DDoS', and 'DoS', and per-class accuracy varies, as shown in Table 9. LR shows the lowest accuracy for DDoS attack detection among all models, with a score of 0.92.

Figure 4 shows that all machine learning models achieve their best results when trained using multi-features from PCA and SVD. PCA and SVD provide appropriate features that play a significant role in optimizing the performance of machine learning models. Compared to the other models, RF consistently shows better performance for all classes of network attacks.

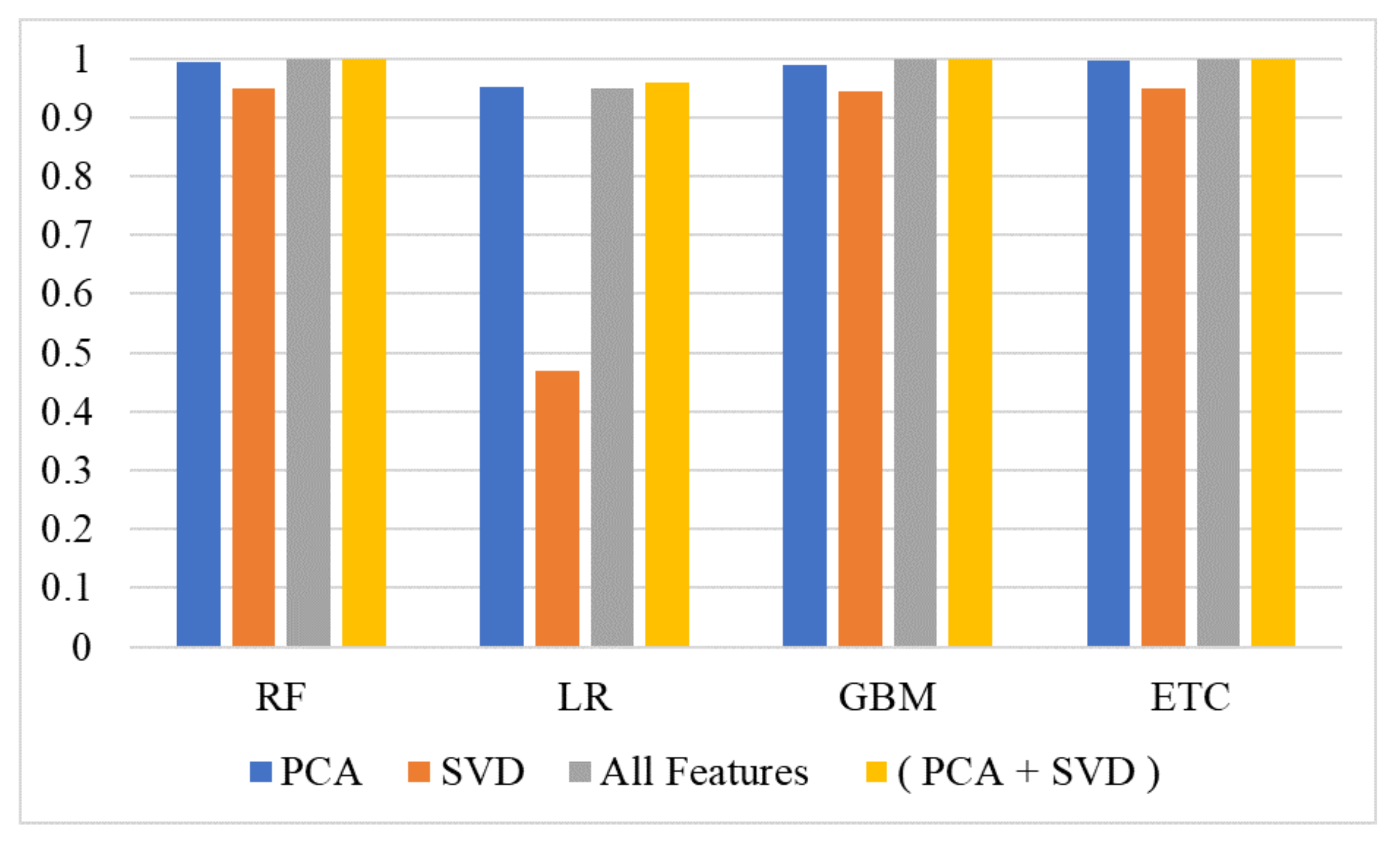

4.3. Performance Comparison Using Different Features

In addition to the experiments without feature selection and with multi-features, experiments were performed using all the features and the individual PCA and SVD features, to compare their performance with the proposed multi-features. Table 10 shows the importance of multi-features for attack detection: they give the best performance among all the feature sets used in the experiments.

Results using PCA and SVD features alone show that SVD performs worse than PCA, especially for LR. Using all features also gives good performance; however, performance improves further when multi-features are used, as shown in Figure 5.

PCA gives better results than SVD because PCA captures correlations in the data and reduces redundancy by constructing a smaller set of new features. With multi-features from SVD and PCA, all learning models improved their results, and the tree-based ensemble models obtained 100% accuracy.
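One concrete difference between the two techniques is centering: PCA is equivalent to an SVD of the mean-centered data, whereas a plain SVD works on the raw values. The sketch below demonstrates this on synthetic data with a large constant offset. It assumes that "SVD features" here refers to a truncated SVD of uncentered data, and it does not establish that centering explains the results reported above.

```python
import numpy as np

rng = np.random.default_rng(1)
# Toy data with a large constant offset in every column.
X = rng.normal(size=(100, 4)) + 50.0

# PCA view: singular values of the centered data give the covariance eigenvalues.
Xc = X - X.mean(axis=0)
s_centered = np.linalg.svd(Xc, compute_uv=False)
pca_variances = s_centered**2 / (X.shape[0] - 1)
cov_eigenvalues = np.sort(np.linalg.eigvalsh(np.cov(X, rowvar=False)))[::-1]

# Plain SVD on the raw data: the first direction mostly captures the offset.
_, s_raw, Vt_raw = np.linalg.svd(X, full_matrices=False)

print(np.allclose(pca_variances, cov_eigenvalues))  # True
print(np.round(np.abs(Vt_raw[0]), 2))               # close to [0.5 0.5 0.5 0.5]
```

Without centering, the leading SVD component points along the column means rather than along the direction of largest variance, so the two methods can yield different leading features from the same data.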

4.4. K-Fold Cross Validation

A 10-fold cross-validation was performed to show the significance of the proposed multi-features approach. Results of the 10-fold cross-validation with both 50 k and 25 k samples are given in Table 11. Results show that the tree-based models maintain high accuracy under 10-fold cross-validation with a standard deviation of +/−0.00, while LR had a 0.96 accuracy score. LR tends to perform better when the number of features is high relative to the number of samples, which is not the case with the current dataset.
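The k-fold procedure itself can be sketched in plain Python as follows. The `fit` and `score` callables are hypothetical placeholders for any model, and the sketch uses contiguous folds without shuffling or stratification, which a real experiment would normally add.

```python
from statistics import mean, pstdev


def k_fold_indices(n_samples, k):
    """Yield (train_idx, test_idx) pairs for k contiguous folds."""
    # Spread the remainder over the first folds so sizes differ by at most one.
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        test_idx = list(range(start, start + size))
        train_idx = list(range(0, start)) + list(range(start + size, n_samples))
        yield train_idx, test_idx
        start += size


def cross_validate(fit, score, X, y, k=10):
    """Train on k-1 folds, score on the held-out fold, return (mean, std) of scores."""
    scores = []
    for train_idx, test_idx in k_fold_indices(len(X), k):
        model = fit([X[i] for i in train_idx], [y[i] for i in train_idx])
        scores.append(score(model, [X[i] for i in test_idx], [y[i] for i in test_idx]))
    return mean(scores), pstdev(scores)
```

A reported value such as "accuracy with +/−0.00 standard deviation" corresponds to the pair returned by `cross_validate` when every fold yields the same score.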

4.5. Performance of Deep Learning Models

Deep learning models were also deployed in this study for performance comparison. In this regard, CNN-LSTM [65], LSTM [66], CNN [67], and a recurrent neural network (RNN) were used for DDoS attack detection. The deep learning models were trained on the multi-features using 25 k and 50 k samples. Results using 25 k samples with multi-features are given in Table 12.

Results for 50 k samples with multi-features are shown in Table 13. The models perform well on 50 k samples: CNN achieved the highest accuracy score of 0.994, while RNN performed worst among the deep learning models with a 0.961 accuracy score. RNN performs poorly because its simple recurrent architecture does not perform well on large datasets. However, decreasing the size of the dataset increases its performance, as shown in Table 12. With 25 k samples, CNN achieves the highest accuracy of 1.00 using the multi-features approach, while all other models show a 0.99 accuracy score on average.

4.6. Computational Complexity of Multi-Features

One objective of this study is to obtain high DDoS attack detection accuracy with reduced computational complexity. Using all 78 features requires a substantial amount of time for model training, which can be reduced using the multi-features approach without compromising the performance of the models.

Table 14 shows the computation time for each model using 25 k and 50 k samples with the multi-features technique and with all 78 features. The computation time using all 78 features is significantly higher than with the proposed multi-features approach. In addition, despite the lower number of features, the accuracy obtained is higher than with all 78 features.
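Timings of this kind can be collected with a small harness such as the one below. The workload `column_means` is only a stand-in whose cost grows with the number of features; it is not one of the study's models, and the row and column counts are arbitrary.

```python
import time


def time_call(fn, *args, repeats=3):
    """Return the best wall-clock time in seconds over `repeats` calls of fn(*args)."""
    best = float("inf")
    for _ in range(repeats):
        start = time.perf_counter()
        fn(*args)
        best = min(best, time.perf_counter() - start)
    return best


def column_means(rows):
    """Stand-in workload: cost is proportional to rows x columns."""
    n_cols = len(rows[0])
    return [sum(r[j] for r in rows) / len(rows) for j in range(n_cols)]


rows_all = [[float(i + j) for j in range(78)] for i in range(2000)]  # 78 features
rows_reduced = [r[:10] for r in rows_all]                            # 10 features
t_all = time_call(column_means, rows_all)
t_reduced = time_call(column_means, rows_reduced)
print(f"all features: {t_all:.4f} s, reduced: {t_reduced:.4f} s")
```

Taking the best of several repeats reduces the influence of background load on the measurement.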

4.7. Comparison with Existing Studies

A performance comparison was carried out with state-of-the-art studies to show the significance of the proposed multi-features approach for DDoS attack detection. We selected the most recent studies that have worked on similar tasks, implemented their models as per the given implementation details, and tested them on the dataset used in this study, in the same environment that was used for the proposed approach. The performance comparison results are shown in Table 15. The proposed approach performs better than the existing approaches while having a simple architecture and showing robust results. We used state-of-the-art methods in combination with feature selection to obtain high accuracy. As a result, the results are good in terms of both accuracy and efficiency, as most of the models achieved 100% accuracy scores using multi-features.

Studies on DDoS attack detection predominantly work on optimizing the models to boost their performance. This study, on the other hand, primarily focuses on selecting the optimal features for increased attack detection accuracy. The study [36] used NB for DDoS attack classification, and [34] used both deep learning and machine learning models, including LSTM, GRU, CNN, MLP, RF, LR, and KNN. The study [39] worked on an ensemble model and combined SVM and RF to achieve better performance. In this comparison, the proposed approach outperforms the previous studies.

4.8. Discussion

The purpose of the system is to detect DDoS attacks using machine learning. With the recent increase in DDoS attacks, an automated approach is needed to mitigate the risk of such attacks. The proposed approach has the potential to be used in real time, as it is simple and effective and provides robust results. It can be implemented on network interfaces to detect DDoS attacks. Compared to existing complex approaches that require high computational resources, the proposed approach is more efficient in its use of computational resources. Additionally, the attack detection accuracy is high, which provides more security against DDoS attacks. The use of a smaller yet effective hybrid feature set makes it work faster and better.

An SSL DDoS attack targets the SSL handshake protocol either by sending worthless data to the SSL server, which results in connection issues for legitimate users, or by abusing the SSL handshake protocol itself. An HTTP flood DDoS attack uses HTTP POST and GET requests to access the server. Since this study targets attacks on the application layer, attacks on protocols such as HTTP can also be covered by the proposed approach.

5. Conclusions

The internet has persistently been exploited by DoS/DDoS attacks, and the number of these attacks has substantially increased over the past few years. Despite the available advanced and sophisticated attack detection approaches, network security remains a challenging problem today. This study proposes a machine learning-based framework to detect DDoS/DoS attacks at the application layer by using multi-features. For multi-features, the best features from PCA and SVD are extracted to obtain superior performance. Extensive experiments are carried out using the LR, RF, GBM, and ETC models, as well as the CNN, LSTM, CNN-LSTM, and RNN models, with different dataset sizes and different features. Experimental results indicate that PCA features tend to show better performance than SVD features. Although using all features also gives good results, multi-features further optimize the models’ performance. The RF model outperforms all other models by obtaining 100% accuracy when used with multi-features. On average, the tree-based ensemble models show better performance than linear models. This study shows excellent results, yet has several limitations. First, the approach is tested on a single dataset and requires further experiments regarding attacks on other layers. Second, the impact of dataset size is not investigated. Increasing the size might produce better results for deep learning models. Third, the influence of feature set size is also not analyzed, which we intend to address in future work.