Federated AI-Enabled In-Vehicle Network Intrusion Detection for Internet of Vehicles †

Abstract

1. Introduction

1.1. Related Work

1.1.1. DNN-Enabled IVN Intrusion Detection

1.1.2. DRL-Based Federated Client Selection for IoV

1.2. Contributions

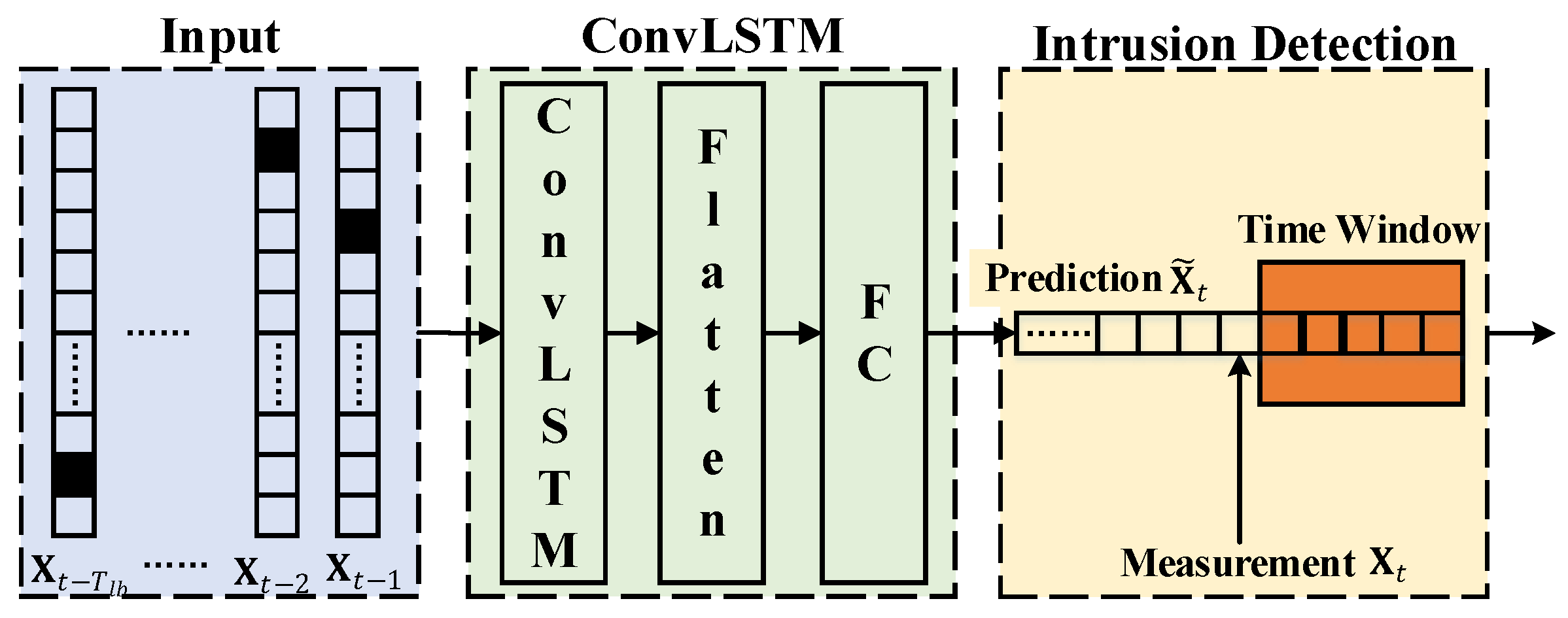

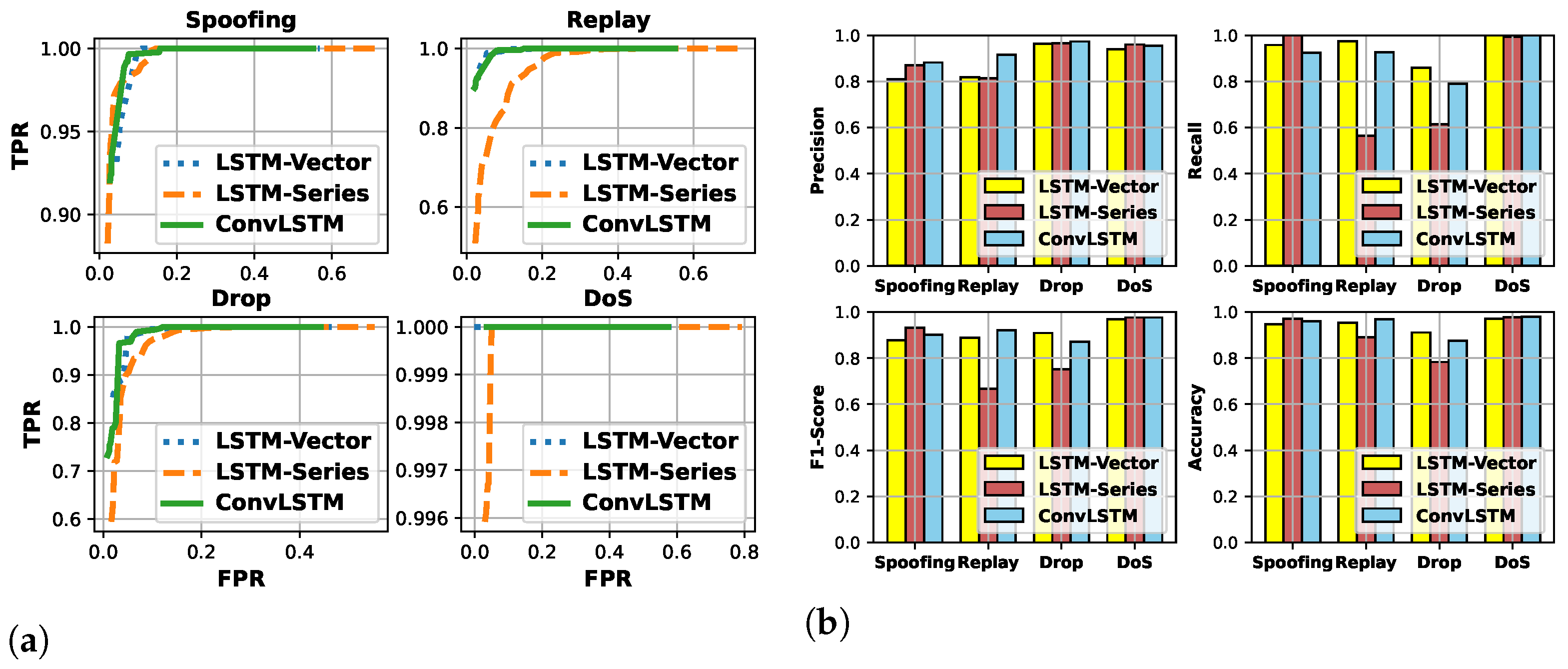

- A ConvLSTM-based intrusion detection method is developed for malicious attack detection. Simulation results indicate that, with ConvLSTM, the input ID vector is first processed by a convolutional filter and then modeled by the LSTM for prediction, which markedly reduces the model size and improves the convergence rate while keeping the detection accuracy above 95%.

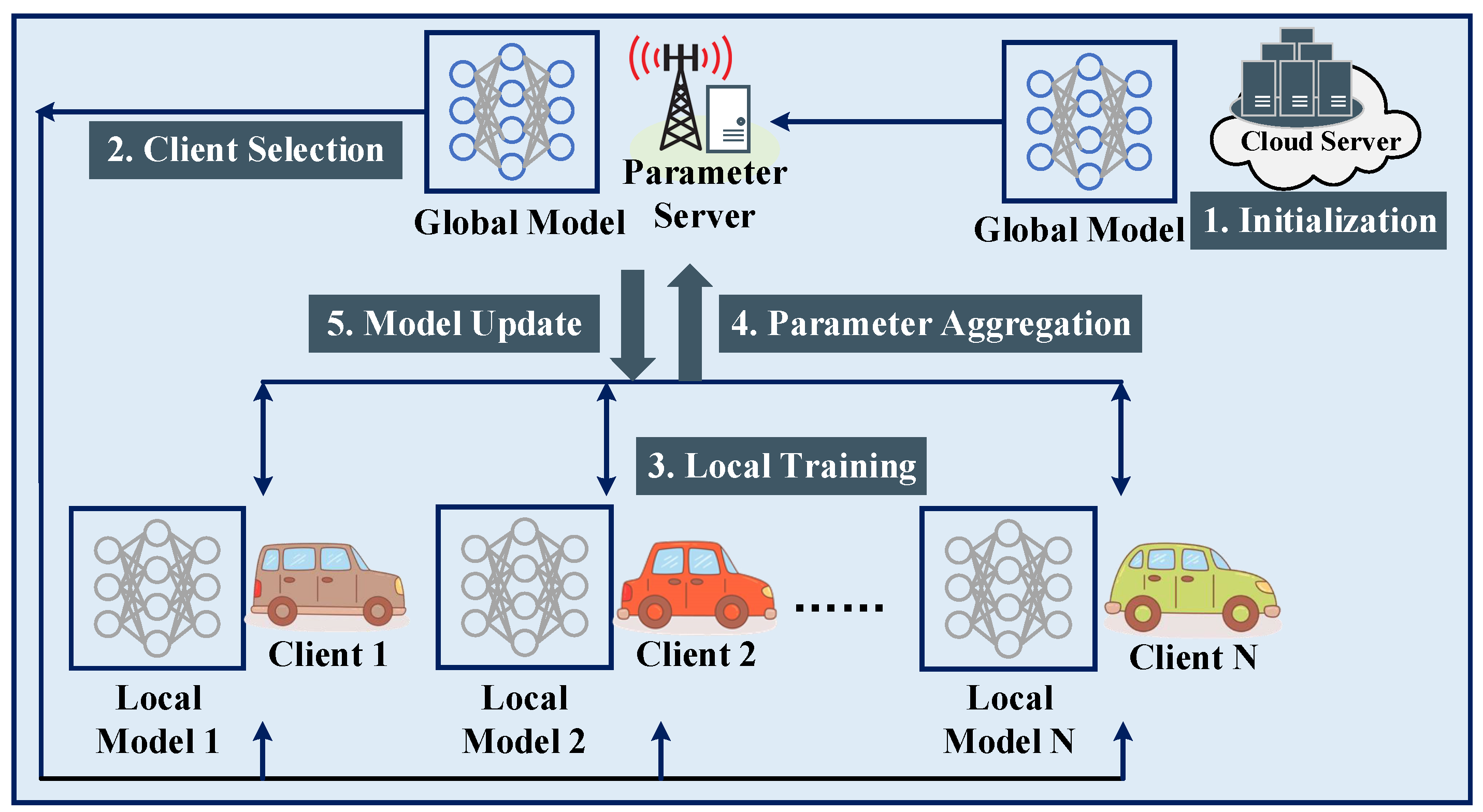

- A client-server FL framework is built for ConvLSTM training. To further optimize federated training of the ConvLSTM model, the FCS problem is formulated as an MDP.

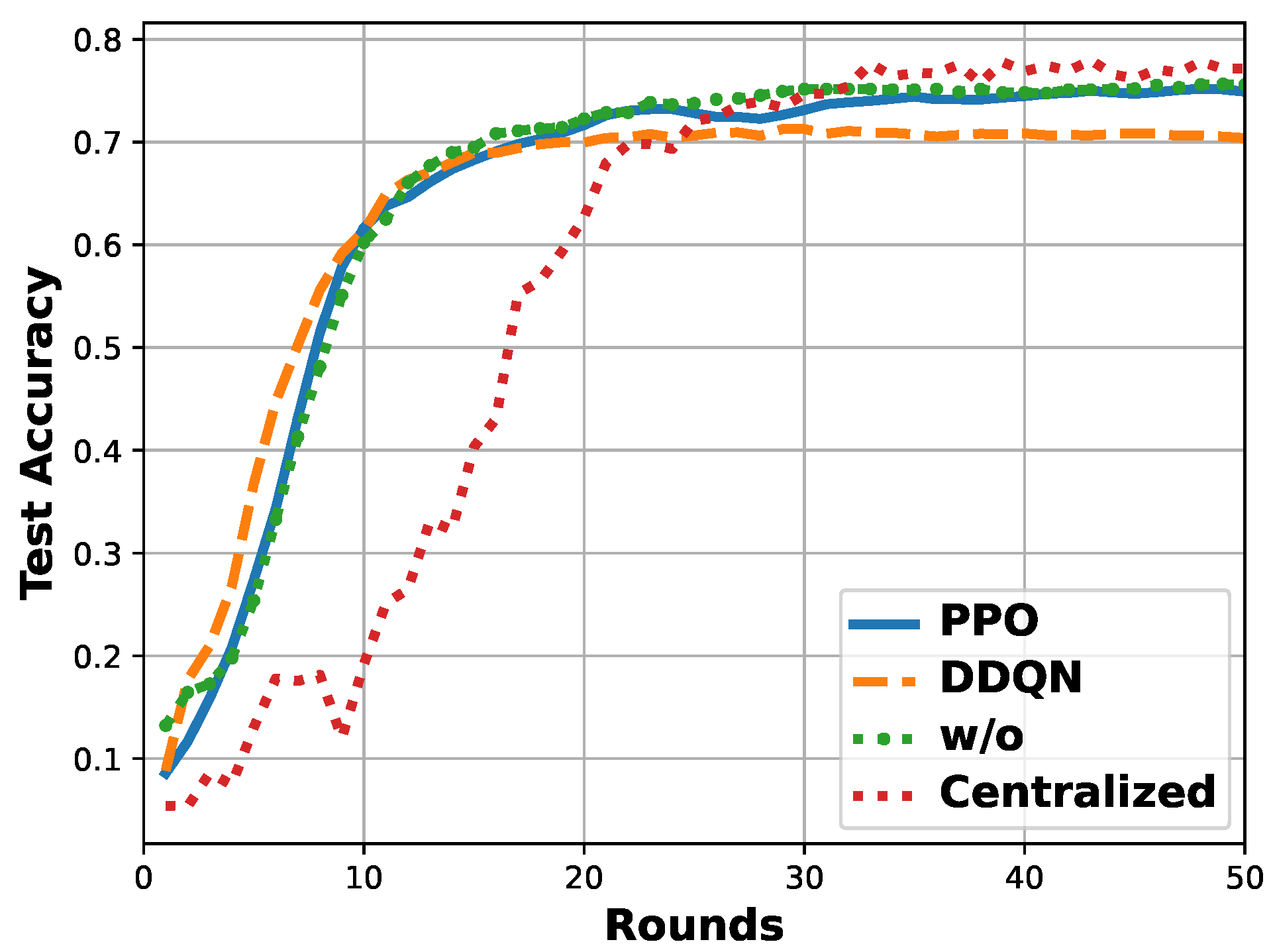

- A PPO-based FCS scheme is proposed to solve the MDP. Simulation results show that the proposed scheme jointly optimizes the convergence rate, model accuracy, and related overhead of FL compared with the benchmarks: centralized model training, fundamental FL without an FCS scheme, and FL with the DDQN-based FCS scheme.
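The client-server FL framework in the contributions above relies on the server combining client updates into a global model. A minimal sketch of that step, assuming a FedAvg-style sample-weighted average (the aggregation rule is not stated in this section, so this is an assumption) and a hypothetical flat `Weights` representation of the model parameters:

```python
from typing import Dict, List, Sequence

# Hypothetical representation: layer name -> flat list of parameters.
Weights = Dict[str, List[float]]


def fedavg(client_weights: Sequence[Weights], num_samples: Sequence[int]) -> Weights:
    """Return the sample-weighted average of the clients' parameters."""
    if not client_weights or len(client_weights) != len(num_samples):
        raise ValueError("need one sample count per client")
    total = sum(num_samples)
    if total <= 0:
        raise ValueError("total sample count must be positive")

    aggregated: Weights = {}
    for name, reference in client_weights[0].items():
        acc = [0.0] * len(reference)
        for weights, n in zip(client_weights, num_samples):
            # Each client contributes in proportion to its local data size.
            for i, value in enumerate(weights[name]):
                acc[i] += value * n / total
        aggregated[name] = acc
    return aggregated


# Two hypothetical clients holding 100 and 300 local samples.
clients = [{"dense": [1.0, 2.0]}, {"dense": [3.0, 6.0]}]
global_weights = fedavg(clients, num_samples=[100, 300])
print(global_weights)
```

With client selection in place, only the selected vehicles would appear in `client_weights`; the averaging itself is unchanged.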

2. Preliminaries

2.1. Fundamental System Model

2.1.1. Communication Model

2.1.2. Latency Model

2.1.3. Energy Consumption Model

2.1.4. Local Data Quality Model

2.2. Malicious Attacks

3. ConvLSTM-Based In-Vehicle Network Intrusion Detection

3.1. One-Hot Encoding

3.2. ConvLSTM Neural Network

3.3. Intrusion Detection

4. Federated ConvLSTM Neural Network Model Training with Client Selection

4.1. Problem Formulation for Federated Client Selection

4.1.1. State Space S

4.1.2. Action Space A

4.1.3. State Transition P

4.1.4. Reward Function R

4.2. PPO-Based Federated Client Selection

Algorithm 1. PPO-based FCS Scheme

4.3. Federated ConvLSTM Neural Network Model Training

4.3.1. Neural Network Model Initialization

4.3.2. Federated Client Selection

- The MEC server replies with an acknowledgment to the candidate vehicles of the same vehicle series. Subsequently, the global ConvLSTM model is downloaded to the candidates.

- After the pre-round training, the candidate vehicles upload their local information to the MEC server: the trained model parameters, the available CPU-cycle frequency, and the remaining energy.

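- The MEC server then selects N vehicles out of K candidates by executing the PPO-based FCS scheme, i.e., Algorithm 1. The state of a candidate vehicle includes its available CPU-cycle frequency, remaining energy, weight divergence, and uplink data rate. The CPU-cycle frequency and remaining energy are reported by the candidates. The weight divergence is calculated from the uploaded model parameters by using (8). In this work, the uplink data rate is estimated by the communication model (1). In practical application scenarios, it is measured through onsite communications.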

- The MEC server notifies the selected vehicles with an acceptance notice and the unselected vehicles with a rejection notice.
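The steps above have the MEC server assemble a per-candidate state before running the selection policy. The sketch below illustrates one way to do that. It assumes the weight divergence takes the relative L2 form commonly used for non-IID federated learning, since Equation (8) is not reproduced in this section, and the state field names are hypothetical.

```python
import math
from typing import Dict, List


def weight_divergence(local: List[float], global_: List[float]) -> float:
    """Assumed form: ||w_local - w_global|| / ||w_global|| (relative L2 distance)."""
    if len(local) != len(global_):
        raise ValueError("parameter vectors must have the same length")
    diff = math.sqrt(sum((l - g) ** 2 for l, g in zip(local, global_)))
    norm = math.sqrt(sum(g ** 2 for g in global_))
    if norm == 0.0:
        raise ValueError("global weights must be non-zero")
    return diff / norm


def candidate_state(cpu_hz: float, energy_j: float,
                    local_w: List[float], global_w: List[float],
                    uplink_bps: float) -> Dict[str, float]:
    """Bundle reported and server-computed quantities (field names are hypothetical)."""
    return {
        "cpu_hz": cpu_hz,          # reported by the candidate
        "energy_j": energy_j,      # reported by the candidate
        "divergence": weight_divergence(local_w, global_w),  # computed by the server
        "uplink_bps": uplink_bps,  # estimated or measured by the server
    }


# Hypothetical candidate: 3 GHz CPU, 500 J remaining, 10 Mbit/s uplink.
state = candidate_state(3e9, 500.0, [3.0, 4.5], [3.0, 4.0], 10e6)
print(state["divergence"])
```

A smaller divergence suggests local data that agrees more closely with the global model, which is why it can serve as a data-quality signal for the selection policy.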

4.3.3. Local Model Training

4.3.4. Global Model Update by Parameter Aggregation

4.3.5. Local Model Update

5. Performance Evaluation

5.1. Experimental Settings

5.1.1. Fundamentals

5.1.2. Dataset

5.1.3. Attack Models

5.1.4. Evaluation Metrics

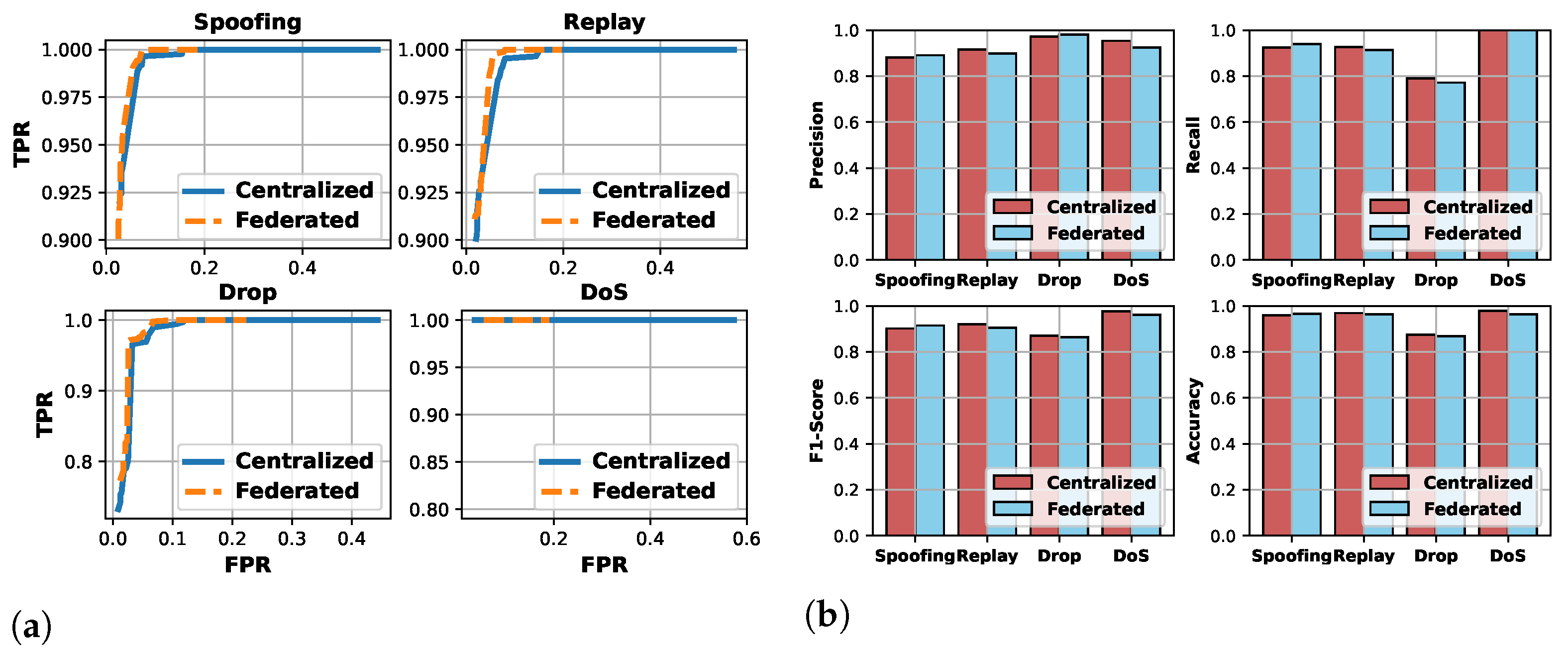

5.2. Analysis of IVN Intrusion Detection

5.3. Analysis of Federated Client Selection

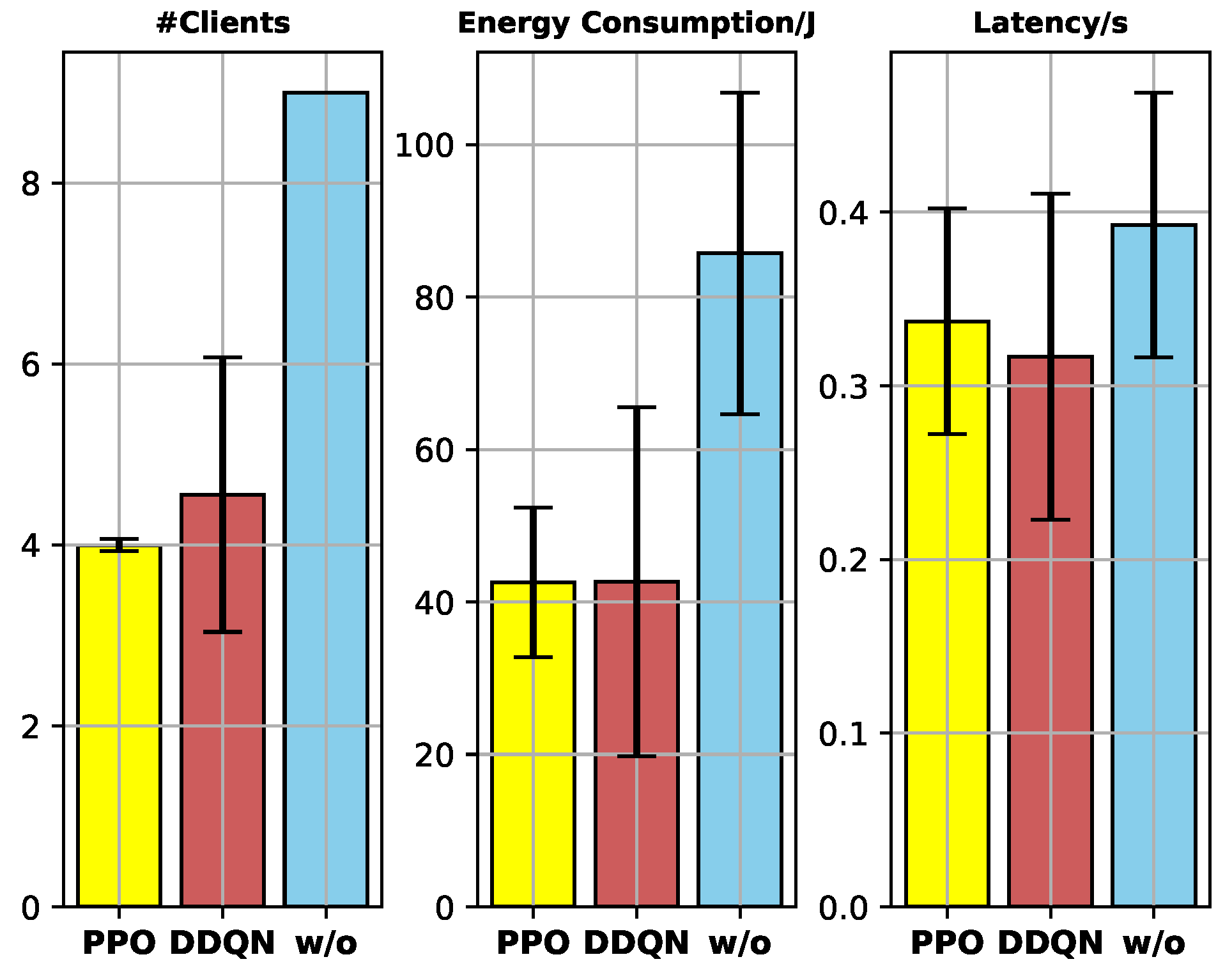

- Federated model training converges faster than centralized training when the total number of training samples is the same, because in each round the FL clients train locally in parallel and jointly contribute to the model update.

- Compared with fundamental FL, the proposed FCS scheme dramatically reduces the system overhead while maintaining model training accuracy, because the unselected clients have low data quality and would contribute little to the model training.

- Compared with the DDQN-based FCS scheme, the proposed FCS scheme is more stable, with a 95.52% lower standard deviation in the number of selected clients. This is because PPO is a policy-gradient DRL algorithm that optimizes the policy directly, and its clip function stabilizes the policy update.
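The stabilizing effect of the clip function mentioned above can be seen in the per-sample PPO clipped surrogate objective. The following is a minimal sketch of that standard objective, not the training loop of the proposed scheme. The default `epsilon = 0.2` is a common choice and is an assumption here, since the value used in the experiments is not given in this section.

```python
def clipped_surrogate(ratio: float, advantage: float, epsilon: float = 0.2) -> float:
    """PPO clipped surrogate for one sample.

    ratio     -- new-policy probability divided by old-policy probability
    advantage -- estimated advantage of the action taken
    epsilon   -- clip range (assumed default)
    """
    clipped_ratio = max(1.0 - epsilon, min(ratio, 1.0 + epsilon))
    # Taking the minimum removes any incentive to push the ratio outside
    # [1 - epsilon, 1 + epsilon] in the direction that improves the objective.
    return min(ratio * advantage, clipped_ratio * advantage)


# Positive advantage: the gain is capped once the ratio exceeds 1 + epsilon.
capped = clipped_surrogate(ratio=1.5, advantage=1.0)
# Negative advantage: the objective is capped once the ratio drops below 1 - epsilon.
floored = clipped_surrogate(ratio=0.5, advantage=-1.0)
# Inside the clip range the objective is the plain policy-gradient term.
unclipped = clipped_surrogate(ratio=1.1, advantage=2.0)
print(capped, floored, unclipped)
```

Because the objective stops rewarding large changes in the probability ratio, a single update cannot move the policy far from the one that collected the data.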

6. Conclusions

Author Contributions

Funding

Conflicts of Interest

Abbreviations

| AI | Artificial Intelligence |

| AWGN | Additive White Gaussian Noise |

| BS | Base Station |

| CAN | Controller Area Network |

| ConvLSTM | Convolutional Long Short-Term Memory |

| C-V2X | Cellular-Vehicle-to-Everything |

| DDPG | Deep Deterministic Policy Gradient |

| DoS | Denial-of-Service |

| DNN | Deep Neural Network |

| DRL | Deep Reinforcement Learning |

| FCS | Federated Client Selection |

| FL | Federated Learning |

| FPR | False Positive Rate |

| GAN | Generative Adversarial Network |

| HTM | Hierarchical Temporal Memory |

| IoV | Internet of Vehicles |

| ICV | Intelligent Connected Vehicle |

| IVN | In-Vehicle Network |

| MDP | Markov Decision Process |

| MEC | Mobile Edge Computing |

| MLP | Multi-Layer Perceptron |

| OBU | On-Board Unit |

| PPO | Proximal Policy Optimization |

| ROC | Receiver Operating Characteristic |

| RSU | Road-Side Unit |

| TPR | True Positive Rate |

| uRLLC | ultra-Reliable Low-Latency Communications |

| V2I | Vehicle-to-Infrastructure |

| V2X | Vehicle-to-Everything |


| Notation | Description | Notation | Description |
|---|---|---|---|
| | Uplink data rate | B | Channel bandwidth |
| | Transmission power | d | Distance |
| | Path loss exponent | | AWGN power |
| | Time cost of local training | x | Data size for local training |
| q | CPU cycles/bit | f | CPU-cycle frequency |
| | Energy consumption of local training | | Effective switched capacitance |
| | Time cost of model uploading | | Energy consumption of model uploading |
| X | Model size | | Weight divergence |
| | Weight of neural network model | L | Length of ID vector |
| | Prediction error | | Fixed value |
| | Time window size of cumulative error | | Detection threshold |
| | State | | Action |
| | Reward | | Set of trajectories |
| N | Number of selected clients | K | Number of candidate clients |
| | Policy with parameters | | Value function with parameters |
| | Clip function | | Hyperparameter of the clip function |
| | Advantage function | | Temporal difference error |
| | Discount factor | | Smoothing factor |

| Parameter | Value |
|---|---|
| CPU cycles/bit q | 1000 |
| CPU-cycle frequency f | [2, 6] GHz |
| Effective switched capacitance | |
| Transmission power | [15, 23] dBm |
| Transmission bandwidth B | 20 MHz |
| AWGN power | mW |
| Path loss exponent | 2 |
| Rayleigh distribution coefficient | |
| Vertical distance between BS and road | 100 m |
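The parameters above feed the latency, energy, and communication models. The sketch below uses forms that are common in the MEC literature (time = q·x/f, energy = κ·q·x·f², and a Shannon-type uplink rate with distance-based path loss). These forms are assumptions, since the equations themselves are not reproduced here. The capacitance `kappa`, the noise power, the channel gain, and the data size are hypothetical placeholders, not values from the table.

```python
import math


def dbm_to_watt(p_dbm: float) -> float:
    """Convert a power level from dBm to watts."""
    return 10 ** (p_dbm / 10) / 1000


def local_training_time(x_bits: float, q: float, f_hz: float) -> float:
    """Assumed form: seconds needed to process x bits at q cycles/bit and f Hz."""
    return q * x_bits / f_hz


def local_training_energy(x_bits: float, q: float, f_hz: float, kappa: float) -> float:
    """Assumed form: kappa * (total CPU cycles) * f^2, in joules."""
    return kappa * q * x_bits * f_hz ** 2


def uplink_rate(bandwidth_hz: float, p_watt: float, channel_gain: float,
                distance_m: float, alpha: float, noise_watt: float) -> float:
    """Assumed Shannon-type rate with path loss d^(-alpha), in bit/s."""
    snr = p_watt * channel_gain * distance_m ** (-alpha) / noise_watt
    return bandwidth_hz * math.log2(1 + snr)


Q = 1000          # CPU cycles/bit (from the table)
B = 20e6          # bandwidth in Hz (from the table)
ALPHA = 2.0       # path loss exponent (from the table)
X_BITS = 8e6      # hypothetical local data size
KAPPA = 1e-28     # hypothetical effective switched capacitance
NOISE_W = 1e-5    # hypothetical AWGN power in watts

t_local = local_training_time(X_BITS, Q, f_hz=2e9)
e_local = local_training_energy(X_BITS, Q, f_hz=2e9, kappa=KAPPA)
rate = uplink_rate(B, dbm_to_watt(20.0), 1.0, 100.0, ALPHA, NOISE_W)
print(t_local, e_local, rate)
```

Under these forms, raising the CPU-cycle frequency shortens local training linearly but increases its energy quadratically, which is the trade-off a client selection policy has to weigh.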

| LSTM-Vector | Input | Output | Activation |
|---|---|---|---|
| 1st FC | (None, 50, 45) | (None, 50, 128) | tanh |
| 2nd FC | (None, 50, 128) | (None, 50, 128) | tanh |
| 1st LSTM | (None, 50, 128) | (None, 50, 512) | tanh |
| 2nd LSTM | (None, 50, 512) | (None, 512) | tanh |
| 3rd FC | (None, 512) | (None, 45) | softmax |

| LSTM-Series | Input | Output | Activation |
|---|---|---|---|
| 1st FC | (None, 50, 45) | (None, 50, 128) | tanh |
| 1st LSTM | (None, 50, 128) | (None, 50, 128) | tanh |
| 2nd LSTM | (None, 50, 128) | (None, 128) | tanh |
| 2nd FC | (None, 128) | (None, 1) | sigmoid |

| ConvLSTM | Input | Output | Activation |
|---|---|---|---|
| ConvLSTM | (None, 50, 1, 45, 1) | (None, 1, 37, 64) | tanh |
| Flatten | (None, 1, 37, 64) | (None, 2368) | |
| Dense | (None, 2368) | (None, 45) | softmax |
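The ConvLSTM layer shapes listed above can be checked with a little arithmetic. The sketch below one-hot encodes a window of 50 IDs into 45-dimensional vectors and recomputes the layer output sizes. The kernel width of 9 is not stated in the table. It is inferred from the 45 → 37 reduction under the assumption of an unpadded ("valid") convolution with stride 1, and the ID indices are hypothetical.

```python
from typing import List

TIME_STEPS = 50    # window length (from the table)
ID_VOCAB = 45      # one-hot ID vector length (from the table)
FILTERS = 64       # output channels of the ConvLSTM layer (from the table)
KERNEL_WIDTH = 9   # assumption: inferred from 45 -> 37 with valid padding


def one_hot(index: int, size: int) -> List[int]:
    """Return a one-hot vector of the given size with a 1 at `index`."""
    if not 0 <= index < size:
        raise ValueError("index out of range")
    return [1 if i == index else 0 for i in range(size)]


def valid_conv_width(input_width: int, kernel_width: int, stride: int = 1) -> int:
    """Output width of an unpadded 1-D convolution."""
    return (input_width - kernel_width) // stride + 1


# Hypothetical window of ID indices, encoded to a 50 x 45 matrix that would be
# reshaped to (50, 1, 45, 1) before entering the ConvLSTM layer.
window_ids = [i % ID_VOCAB for i in range(TIME_STEPS)]
encoded = [one_hot(i, ID_VOCAB) for i in window_ids]

conv_width = valid_conv_width(ID_VOCAB, KERNEL_WIDTH)  # width after the ConvLSTM layer
flat_size = 1 * conv_width * FILTERS                   # size after Flatten
print(len(encoded), len(encoded[0]), conv_width, flat_size)
```

The flattened size matches the 2368 inputs of the final Dense layer, which supports the inferred kernel width without confirming it.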

| Spoofing | LSTM-Vector | LSTM-Series | ConvLSTM | Replay | LSTM-Vector | LSTM-Series | ConvLSTM |
|---|---|---|---|---|---|---|---|
| Precision | 0.809 | 0.871 | 0.882 | Precision | 0.818 | 0.813 | 0.916 |
| Recall | 0.958 | 1 | 0.924 | Recall | 0.974 | 0.565 | 0.926 |
| F1-Score | 0.877 | 0.931 | 0.902 | F1-Score | 0.889 | 0.667 | 0.921 |
| Accuracy | 0.947 | 0.971 | 0.960 | Accuracy | 0.953 | 0.890 | 0.969 |

| Drop | LSTM-Vector | LSTM-Series | ConvLSTM | DoS | LSTM-Vector | LSTM-Series | ConvLSTM |
|---|---|---|---|---|---|---|---|
| Precision | 0.963 | 0.966 | 0.973 | Precision | 0.939 | 0.960 | 0.954 |
| Recall | 0.860 | 0.614 | 0.790 | Recall | 1 | 0.993 | 1 |
| F1-Score | 0.909 | 0.751 | 0.871 | F1-Score | 0.968 | 0.976 | 0.976 |
| Accuracy | 0.911 | 0.783 | 0.875 | Accuracy | 0.971 | 0.978 | 0.979 |

| | Spoofing | Replay | Drop | DoS |
|---|---|---|---|---|
| Precision | 0.891 | 0.899 | 0.981 | 0.925 |
| Recall | 0.940 | 0.913 | 0.772 | 1 |
| F1-Score | 0.915 | 0.906 | 0.864 | 0.961 |
| Accuracy | 0.966 | 0.963 | 0.869 | 0.964 |
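The F1-scores in the table above follow from the listed precision and recall as their harmonic mean, F1 = 2PR / (P + R). A short check, using only the values reported in the table:

```python
def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# (precision, recall, reported F1) per attack type, copied from the table.
reported = {
    "Spoofing": (0.891, 0.940, 0.915),
    "Replay": (0.899, 0.913, 0.906),
    "Drop": (0.981, 0.772, 0.864),
    "DoS": (0.925, 1.0, 0.961),
}

recomputed = {attack: round(f1_score(p, r), 3) for attack, (p, r, _) in reported.items()}
for attack, (_, _, f1) in reported.items():
    print(f"{attack}: recomputed {recomputed[attack]}, reported {f1}")
```

The Drop attack shows why F1 is reported alongside precision: a precision of 0.981 coexists with a recall of 0.772, and the harmonic mean is pulled toward the lower of the two.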

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Yang, J.; Hu, J.; Yu, T. Federated AI-Enabled In-Vehicle Network Intrusion Detection for Internet of Vehicles. Electronics 2022, 11, 3658. https://doi.org/10.3390/electronics11223658
