Abstract

Visual localization is employed for indoor navigation and embedded in various applications, such as augmented reality and mixed reality. Image retrieval and geometrical measurement are the primary steps in visual localization, and the key to improving localization efficiency is to reduce the time consumption of the image retrieval. Therefore, a hierarchical clustering-based image-retrieval method is proposed to hierarchically organize an off-line image database, resulting in control of the time consumption of image retrieval within a reasonable range. The image database is hierarchically organized by two stages: scene-level clustering and sub-scene-level clustering. In scene-level clustering, an improved cumulative sum algorithm is proposed to detect change points and then group images by global features. On the basis of scene-level clustering, a feature tracking-based method is introduced to further group images into sub-scene-level clusters. An image retrieval algorithm with a backtracking mechanism is designed and applied for visual localization. In addition, a weighted KNN-based visual localization method is presented, and the estimated query position is solved by the Armijo–Goldstein algorithm. Experimental results indicate that the running time of image retrieval does not linearly increase with the size of image databases, which is beneficial to improving localization efficiency.

1. Introduction

With the development of communication technology, smart mobile terminals, such as smartphones and tablet personal computers, have become indispensable in modern society. Various applications on smart mobile terminals bring convenience to many aspects of people’s lives, and one example is navigation applications. The Global Navigation Satellite System (GNSS) allows individuals to acquire position information at any moment in outdoor environments [1,2,3,4]. Navigation and positioning services play crucial roles in public traffic, maritime transportation, and aviation. As the interior environments of buildings become increasingly complex, demands for indoor positioning services continue to rise. However, due to the shielding effect of structures, GNSS signals are incapable of penetrating buildings, so users cannot obtain reliable positioning services from the GNSS indoors. Therefore, stable and efficient indoor localization methods that are independent of satellite signals have become a research hotspot in recent years. Numerous daily activities can benefit from indoor localization technologies, such as shopping in large malls, finding books in libraries, and planning routes in railway stations and airports.

Many signal-based localization technologies have been investigated for querying indoor position information, such as WiFi-based [5,6], Bluetooth-based [7,8], and UWB-based [9,10,11] methods. All these methods, however, require investments in localization infrastructures. For example, WiFi-based approaches demand that a mobile terminal should receive signals transmitted by more than one access point [12,13]. Generally, more densely distributed access points contribute to improving the accuracy of localization systems. Similar to WiFi-based methods, high-density base stations must be deployed in indoor environments for Bluetooth-based and UWB-based localization systems. For the implementation of indoor localization, cost investments restrict the development of signal-based systems. By contrast, visual-based localization achieves high accuracy with hardly any infrastructure.

Another category of indoor localization approaches estimates users’ positions iteratively with an Inertial Measurement Unit (IMU) [14,15]. However, IMU-based systems are prone to cumulative errors that grow with iterative position estimation, especially over a long trajectory. In addition, since IMU-based methods cannot determine the absolute positions of devices, they are generally combined with other localization technologies [16,17,18]. Unlike IMU-based methods, visual localization can achieve either absolute or relative position estimation [19].

Visual localization aims at estimating the position of a camera (i.e., the query camera) mounted on a smart mobile terminal by image retrieval and geometrical measurement. Specifically, visual localization is usually implemented in known environments in which visual features are collected and stored as database images in the off-line stage [20]. In the on-line stage, a user captures query images with the query camera, and the images are then uploaded to the server. In the process of position estimation, the matched database images are retrieved on the server, and the positions of the query images are calculated by localization algorithms. The accuracy and efficiency of image retrieval determine the performance of the entire localization system. The typical technology is Content-Based Image Retrieval (CBIR), the core of which is finding the database images most similar to the query image based on visual features. However, the retrieval task is challenging, because the number of database images is large, and there is no feasible strategy to organize the images in the database.

Therefore, in this paper, a hierarchical clustering-based image retrieval algorithm is proposed to organize database images, and an image retrieval strategy is investigated to improve retrieval efficiency. Moreover, a visual localization method is presented based on the Armijo–Goldstein principle. The main contributions of this paper are summarized as follows:

- (1) A global feature-based image clustering algorithm is proposed, in which the change-point detection method is adopted to identify which database images are captured in the same indoor scene. By this means, images captured in the same scene are clustered in a group, which achieves scene-level image clustering.

- (2) A local feature-based image clustering algorithm is presented, in which feature tracking is employed to further group the images that are in one scene-level cluster, by which means database images are grouped into sub-scene-level clusters.

- (3) A hierarchical clustering-based image retrieval algorithm is introduced, and visual localization is achieved based on the Armijo–Goldstein principle.

The remainder of this paper is organized as follows: Related work is reviewed in Section 2. Section 3 and Section 4 investigate the image clustering algorithms based on change-point detection and feature tracking, respectively. In addition, image retrieval and visual localization are explored in Section 4. In Section 5, the performances of the image retrieval and visual localization are evaluated. A discussion of the experimental results is presented in Section 6, and conclusions are drawn in the last section.

2. Related Works

Computer vision is a field of artificial intelligence that plays an essential role in widespread applications such as object tracking, object detection, image classification, and image retrieval [21,22,23,24]. The use of computer vision for pedestrian visual localization began in 2006, and then a typical framework of visual localization was determined [25,26]. Specifically, geo-tagged images are acquired as database images in the off-line stage, and then the position of the query image is estimated based on database images by image retrieval and geometrical measurement.

Recently, many studies have focused on visual localization, either in indoor or outdoor environments, with almost the same technical route [27,28,29]. The users’ positions are always estimated by query images in the condition of known or unknown camera-intrinsic parameters. One advantage of indoor visual localization is that the interior scenes of buildings can be reconstructed by mapping equipment [30]. Thus, compared with other positioning approaches, the vision-based methods provide users with more indoor-detail information and a better service experience [31]. Indoor visual localization contains three key technologies, which include: (1) 3D indoor mapping on the off-line stage (including database image acquisition), (2) image retrieval in the database, and (3) position estimation of the query camera. Image retrieval for visual localization can usually be divided into two phases: coarse retrieval and fine retrieval [32]. Specifically, global features on images can be utilized to achieve coarse image retrieval, and local features are appropriate for fine retrieval, resulting in obtaining the matched database image with the query image.

Colors, textures, and shapes are essential information to describe the global features of an image. Various global features, such as color moments, HSV histograms, wavelet transforms, and Gabor wavelet transforms, are extracted from images and used in the CBIR system [33,34]. Generally, more than one feature is selected to form a high-dimensional feature vector in order to overcome the limitations of a single feature. However, high-dimensional feature vectors would bring a heavy burden in measuring the similarity of images. Based on Gabor features, a global feature named Gist was proposed by Oliva et al., designed for scene recognition [35]. Gist features have already been widely used in indoor image retrieval and have achieved some remarkable results, which indicate that Gist features have the potential to address the image retrieval problem in visual localization [36,37].

Compared with global visual features, local features are more suitable for fine image retrieval (i.e., finding the database image most similar to the query image). In recent years, research on local feature extraction has attracted much attention. Speeded Up Robust Features (SURF) [38] and Scale-Invariant Feature Transform (SIFT) [39] are the most widely used local features in the fields of object recognition, image stitching, visual tracking, and so on. In a visual localization system, local features are applied to both image retrieval and position estimation. Visual features employed in localization perform well in measuring image similarity and estimating users’ positions. An efficient alternative to SIFT or SURF (i.e., Oriented FAST and Rotated BRIEF, ORB) was proposed by Rublee et al., which has been widely used in visual SLAM and has achieved promising results [40,41]. According to the existing literature, ORB features, which use FAST key points described by BRIEF descriptors, are also well suited to content-based image retrieval [42,43].

Most researchers of visual localization focus on position estimation algorithms, such as the authors of [29,44,45], but few of them pay attention to image retrieval in the localization system, much less to off-line image database organization. A typical hierarchical indexing scheme is proposed in [46], but only coarse retrieval is presented, and the best-matched database image cannot be found by this scheme. A well-organized image database contributes to improving the accuracy and efficiency of a localization system. In other words, a scalable image retrieval method is desired to fit different sizes of the database of the indoor localization system. Specifically, the search time of a scalable image system should not increase linearly with the number of database images, so that the response time of retrieval can be limited within a reasonable range. Therefore, aiming at the demand for visual localization, a database image hierarchical clustering method based on change-point detection and feature tracking is investigated in this paper. With the results of image retrieval, visual localization is executed to estimate users’ positions.

Visual localization in indoor environments has received widespread attention in recent years due to its extensive applications, such as the mobile museum tourist guide [47] and in-building emergency response [48]. Visual localization is also called image-based localization, in which images are employed as practical signals for localization [49]. Strictly speaking, visual localization is one of the fingerprinting-based localization methods, since images captured at known locations (i.e., database images) serve as fingerprints for position estimation. By image retrieval, the database images most similar to the query image are selected as fingerprints to calculate the query positions [50]. The majority of recent visual localization works focus on position estimation by projective geometry under the condition of known camera-intrinsic parameters [51,52,53]. However, the internal parameters of different cameras are not easy to obtain in practical localization scenarios. Therefore, research on image retrieval-based visual localization is crucial and necessary.

Only a limited number of works concentrate on image retrieval-based visual localization without camera calibration parameters. A representative work is the TUM indoor navigation system, in which the nearest neighbor (NN) method is employed for localization (i.e., the position attached to the most similar database image is identified as the query position) [49]. In many fingerprinting-based localization applications, finding the nearest neighbor to the query is regarded as an effective way to acquire the query position [54,55]. However, nearest neighbor-based visual localization may have a significant positioning error in some situations. For example, when common objects are visible in both the query image and a fingerprint image captured far from the query position, that fingerprint may be selected as the nearest neighbor, which degrades accuracy because an improper neighbor participates in the position calculation. To solve this problem, the K-nearest neighbor (KNN) method is applied in fingerprinting-based localization [56,57]. The KNN method selects the K-nearest fingerprint images and takes the average of their position coordinates as the estimated query position, avoiding the contingency of relying on a single nearest fingerprint image [58]. It is worth noting that each nearest neighbor in the KNN method contributes equally to the position estimation, which is unreasonable, because the unweighted average of the neighbor positions rarely coincides with the query position. A more reasonable approach is to assign a larger weight to a nearest neighbor that is more similar to the query. Therefore, a weighted KNN (WKNN) method is presented in this paper to solve the estimated position of the query, taking full consideration of image similarities.
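The difference between equal and similarity-based weighting can be illustrated with a minimal sketch. It assumes weights proportional to a similarity score and a closed-form weighted mean; this is an illustrative simplification, not the Armijo–Goldstein-based solver presented in this paper, and the function names, positions, and similarity values are hypothetical.

```python
import numpy as np

def knn_position(positions, similarities, k):
    """Plain KNN: unweighted mean of the k most similar fingerprint positions."""
    positions = np.asarray(positions, dtype=float)
    similarities = np.asarray(similarities, dtype=float)
    top = np.argsort(similarities)[::-1][:k]  # indices of the k largest similarities
    return positions[top].mean(axis=0)

def wknn_position(positions, similarities, k):
    """Weighted KNN: weights proportional to similarity (an assumed weighting rule)."""
    positions = np.asarray(positions, dtype=float)
    similarities = np.asarray(similarities, dtype=float)
    top = np.argsort(similarities)[::-1][:k]
    weights = similarities[top] / similarities[top].sum()
    return weights @ positions[top]

# Hypothetical fingerprints: 2D positions and similarity scores to the query.
positions = [[0.0, 0.0], [2.0, 0.0], [10.0, 10.0]]
similarities = [0.9, 0.3, 0.1]

print(knn_position(positions, similarities, k=2))   # midpoint of the two neighbors
print(wknn_position(positions, similarities, k=2))  # pulled toward the more similar one
```

With these numbers, the weighted estimate lies closer to the fingerprint with similarity 0.9, whereas plain KNN places the query midway between the two neighbors.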

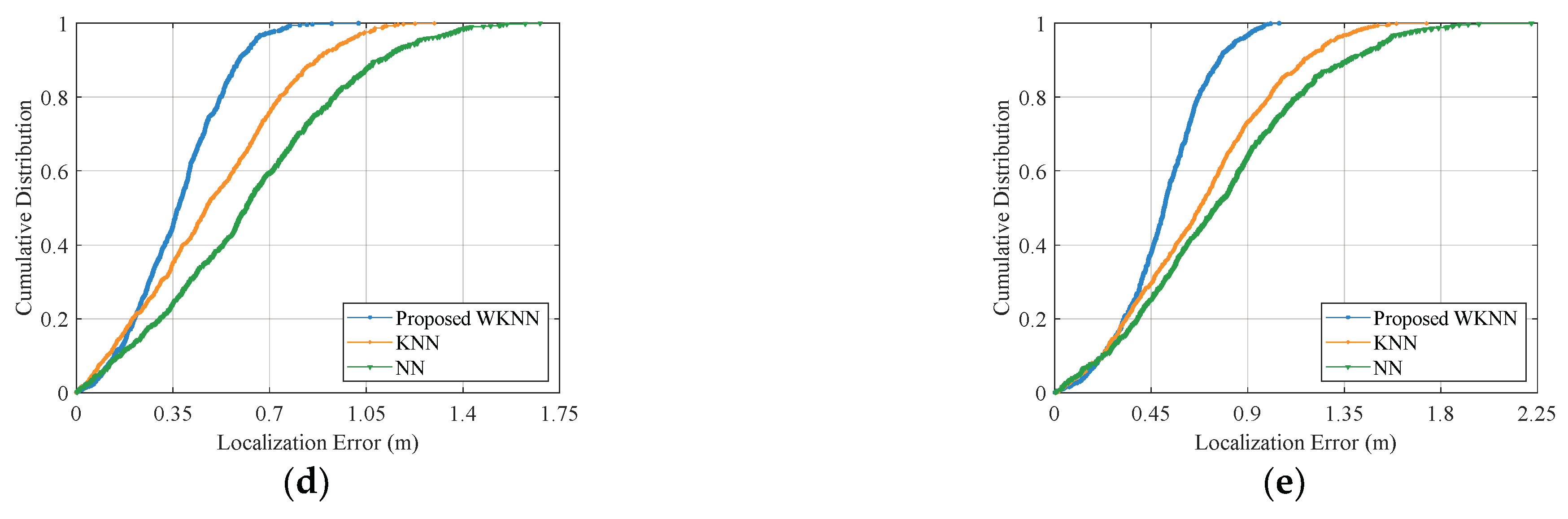

3. Image Clustering Based on Change-Point Detection in Global Features

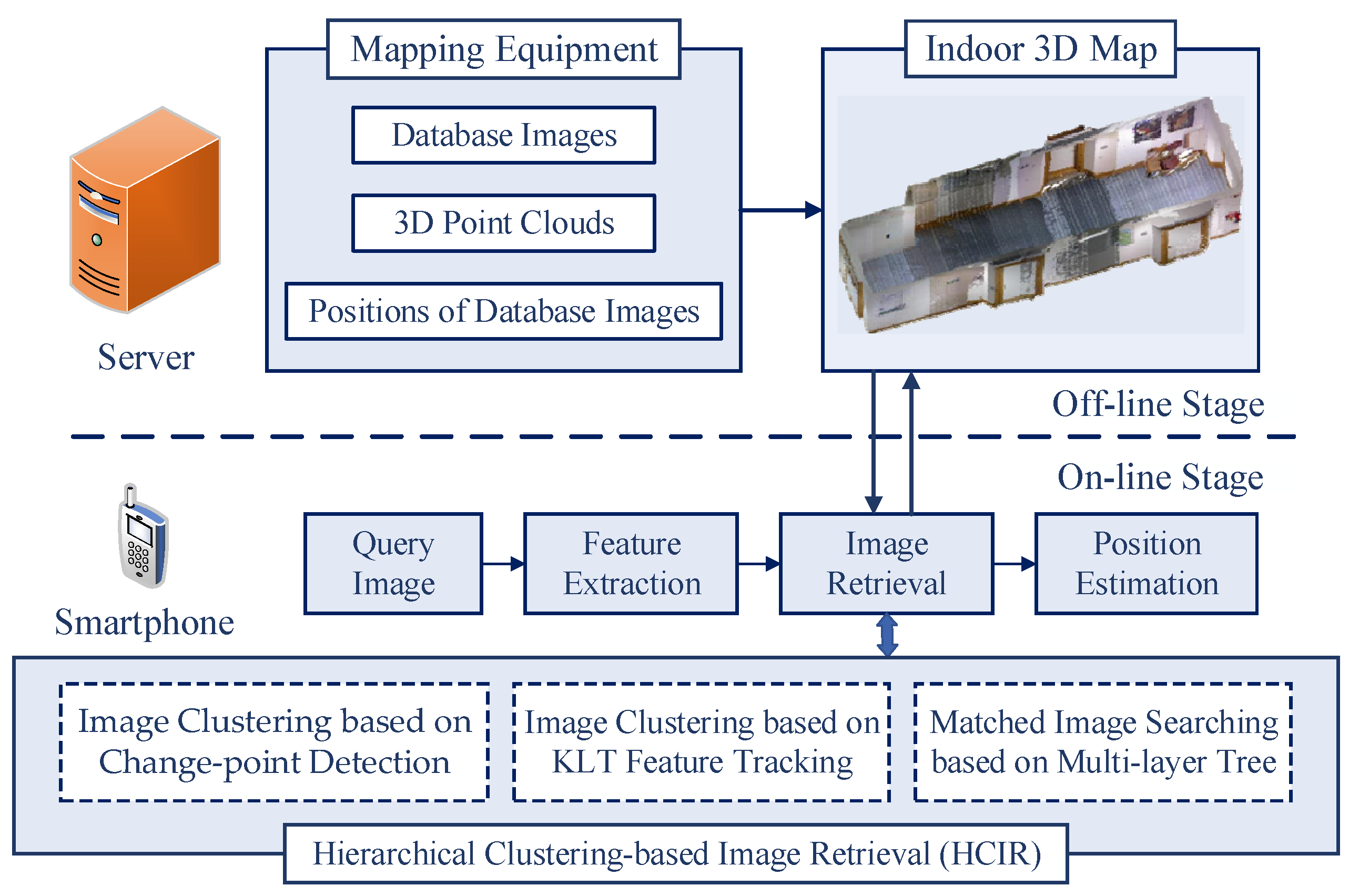

A typical indoor visual localization system contains two stages: an on-line stage and an off-line stage, as shown in Figure 1. In the off-line stage, images are captured by the database camera mounted on the mapping equipment, and poses of the equipment are recorded simultaneously. In order to construct an indoor 3D map, Microsoft Kinects and laser scanners are also mounted on the mapping equipment [19]. An off-line database should be generated before the implementation of visual localization. It contains the essential elements for localization: database images, poses (including orientations and positions) of the equipment, and indoor 3D maps.

Figure 1. System model of indoor visual localization.

In the on-line stage, a query image is captured by the user and uploaded to the server by wireless networks. The most similar database images (i.e., matched database images) to the query image are retrieved based on the visual features extracted from the images. Then, the position of the matched database images can be employed to estimate the position of the query camera. Accuracy and efficiency of image retrieval are the key to ensuring the good performance of the system. A hierarchical clustering-based image retrieval is proposed in this paper and mainly discussed in the following.

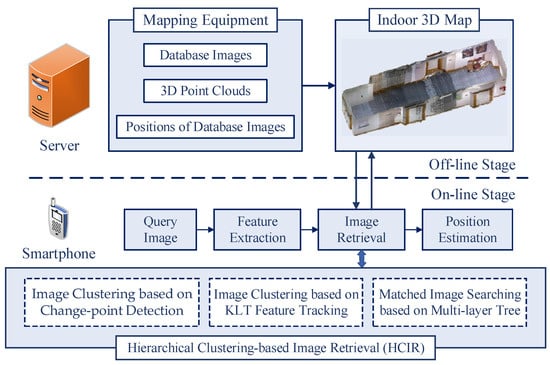

3.1. Feature Extraction and Pre-Processing

Gist is a scene-centered global feature commonly used in scene classification and place recognition. In this paper, Gist features are employed for scene-level image clustering by change-point detection. When the off-line database (i.e., an indoor 3D map) is created, database images are successively acquired by the mapping equipment in the same indoor scene, and the visual features of these images are highly correlated. In contrast, when the mapping equipment moves from one indoor scene to another, the correlations of the captured database images are weakened. Based on this characteristic of database images, change points can be detected in the global features extracted from the images, so that the database images captured in the same indoor scene are grouped into one cluster, which achieves scene-level image clustering. The center of each cluster represents the main features of the scene, so the clusters can be searched in order of the difference between the query image and the cluster centers.

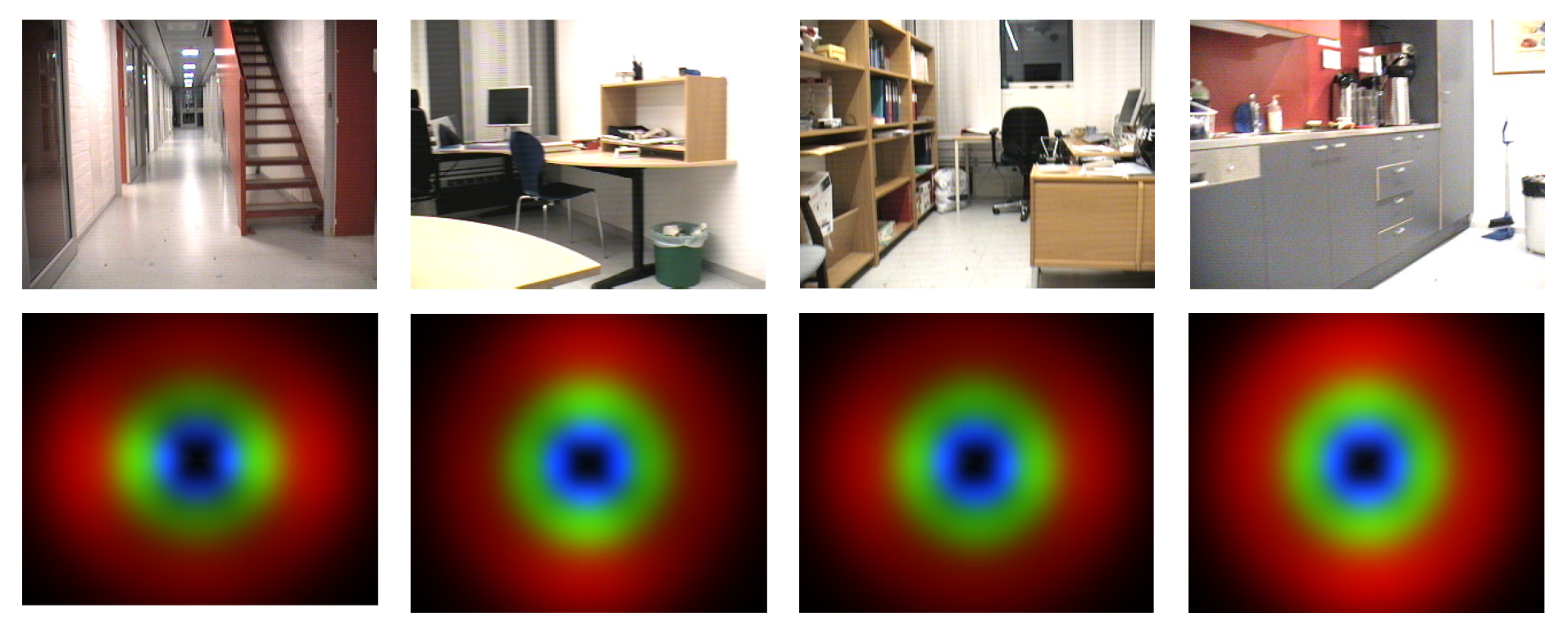

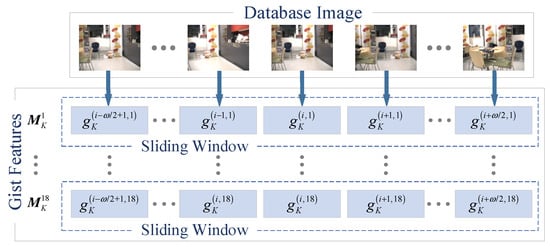

To reduce computational complexity, visual feature vectors should keep a low dimension, so each image is treated as a whole from which global features are extracted. For each query image and database image, a bank of filters with three scales and six orientations ($\theta_1, \theta_2, \ldots, \theta_6$) is used in Gist feature extraction, where the scale index $s$ and the orientation $\theta$ represent spatial scale levels and cardinal orientations, respectively. During feature extraction, images are convolved with the multi-channel filters, and the filtering results are concatenated into 18-dimensional ($3 \times 6$) feature vectors. $\mathbf{G}_Q$ and $\mathbf{G}_D$ denote the global features extracted from the query image and the database images, respectively. Figure 2 shows examples of database images and the corresponding spectrograms of Gist features.

Figure 2. Examples of database images and the corresponding spectrograms of Gist features.
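The 18-dimensional descriptor can be sketched as follows. This is a Gist-like approximation under stated assumptions, not the exact filter bank of this paper: the Gabor kernel form, the wavelengths (4, 8, 16 pixels), the kernel size, and evenly spaced orientations are hypothetical choices, and each filter response is averaged over the whole image to give one value per scale-orientation pair.

```python
import numpy as np

def gabor_kernel(size, wavelength, theta, sigma):
    """Real-valued Gabor kernel with zero mean (assumed form)."""
    half = size // 2
    y, x = np.mgrid[-half:half + 1, -half:half + 1]
    xr = x * np.cos(theta) + y * np.sin(theta)  # coordinate along the filter orientation
    envelope = np.exp(-(x ** 2 + y ** 2) / (2.0 * sigma ** 2))
    kernel = envelope * np.cos(2.0 * np.pi * xr / wavelength)
    return kernel - kernel.mean()

def gist_like_descriptor(image, wavelengths=(4.0, 8.0, 16.0), n_orient=6):
    """Return a 3 x 6 = 18-dimensional global descriptor for a grayscale image.

    The image must be at least 31 x 31 pixels (the assumed kernel size).
    """
    image = np.asarray(image, dtype=float)
    spectrum = np.fft.fft2(image)
    features = []
    for wavelength in wavelengths:            # three scales
        for o in range(n_orient):             # six orientations
            theta = np.pi * o / n_orient
            kernel = gabor_kernel(31, wavelength, theta, sigma=0.5 * wavelength)
            # Circular convolution via FFT; the kernel is zero-padded to the image size.
            response = np.fft.ifft2(spectrum * np.fft.fft2(kernel, s=image.shape)).real
            features.append(np.abs(response).mean())
    return np.array(features)

# Synthetic test image: vertical stripes with an 8-pixel period.
x = np.arange(64)
stripes = np.tile(np.cos(2.0 * np.pi * x / 8.0), (64, 1))
descriptor = gist_like_descriptor(stripes)
print(descriptor.shape)  # (18,)
```

For the striped test image, the largest element of the descriptor is the one whose filter matches the stripe period and direction, which is the property that lets such features separate scenes with different dominant structures.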

For visual localization, the query image is captured by a hand-held smartphone, and image retrieval is completed on the server side after the image is uploaded to the server. The Gist feature vectors of the query image and the database images are separately presented by $\mathbf{G}_Q = [g^{Q}_{1}, g^{Q}_{2}, \ldots, g^{Q}_{18}]$ and $\mathbf{G}^{i}_{D} = [g^{i}_{1}, g^{i}_{2}, \ldots, g^{i}_{18}]$, where $g^{Q}_{p}$ and $g^{i}_{q}$ denote the p-th and q-th elements in the feature vectors $\mathbf{G}_Q$ and $\mathbf{G}^{i}_{D}$ ($1 \le p \le 18$ and $1 \le q \le 18$), and $i$ is the index of database images. The database images successively acquired in the same indoor scene have high visual correlations. However, once the mapping equipment switches to another scene, the correlations decrease. The change-point detection method is employed to detect this change in the visual correlations between database images, so that the database images captured in the same scene are grouped into one cluster.

If there are $N$ database images in total in the indoor 3D map (i.e., the off-line database), $N$ feature vectors can be extracted from the database images. Therefore, a Gist feature matrix $\mathbf{G}$ can be obtained by organizing all feature vectors:

$$
\mathbf{G} =
\begin{bmatrix}
g^{1}_{1} & g^{1}_{2} & \cdots & g^{1}_{18} \\
g^{2}_{1} & g^{2}_{2} & \cdots & g^{2}_{18} \\
\vdots & \vdots & \ddots & \vdots \\
g^{N}_{1} & g^{N}_{2} & \cdots & g^{N}_{18}
\end{bmatrix}
\tag{1}
$$

To achieve scene-level clustering, change-point detection acts on each column in $\mathbf{G}$. Specifically, change points should be separately detected in the column vector $\mathbf{g}_j = [g^{1}_{j}, g^{2}_{j}, \ldots, g^{N}_{j}]^{\mathsf{T}}$, where $1 \le j \le 18$. Because feature extraction is noisy, the Gist features should be pre-processed. Therefore, the Kalman filter and the Kalman smoother are used in this paper to recover the visual correlations of database images acquired in the same scene. The purpose of feature pre-processing is to avoid false detection caused by noise, namely, detecting a change point without a scene change.

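If the state variable and the observed variable of features are separately set as $x_k$ and $z_k$, the system equation is:

$$
x_k = A x_{k-1} + w_{k-1}, \qquad z_k = H x_k + v_k
\tag{2}
$$

where $A$ and $H$ are gain matrices. The process noise $w_k$ and measurement noise $v_k$ satisfy:

$$
w_k \sim N(0, Q), \qquad v_k \sim N(0, R)
\tag{3}
$$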

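where $N(\mu, \sigma^2)$ denotes a normal distribution with the expectation $\mu$ and the variance $\sigma^2$. The initial value of $x_k$ is defined as $x_0 \sim N(\mu_0, P_0)$, where $\mu_0$ satisfies $\mu_0 = E[x_0]$ and $P_0$ satisfies $P_0 = E[(x_0 - \mu_0)(x_0 - \mu_0)^{\mathsf{T}}]$ [59].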

The discrete Kalman filter estimates the process state by feedback control. The typical Kalman filter can be divided into two parts, namely, the time-update part and the measurement-update part. In the time-update part, to obtain the prior estimate of the next time state, the Kalman filter calculates the state variables of the current time and the estimated covariance of errors by the update equation. In the measurement-update section, new observations are combined with prior estimates to obtain more reasonable posterior estimates by feedback operations.

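When using a discrete Kalman filter to process data, the prior error covariance $P_k^{-}$ before system updating should be calculated by:

$$
P_k^{-} = A P_{k-1} A^{\mathsf{T}} + Q
\tag{4}
$$

where $P_{k-1}$ is the error covariance after system updating at the previous step, $A$ and $H$ are the gain matrices of the system equation, and $Q$ and $R$ are the variances of the process noise and the measurement noise. The Kalman gain $K_k$ can be further calculated based on the error covariance by:

$$
K_k = P_k^{-} H^{\mathsf{T}} \left( H P_k^{-} H^{\mathsf{T}} + R \right)^{-1}
\tag{5}
$$

According to the un-updated error covariance and Kalman gain, the error covariance can be updated by:

$$
P_k = \left( I - K_k H \right) P_k^{-}
\tag{6}
$$

Then, the posterior estimate of the state variable (updated value) can be obtained by:

$$
\hat{x}_k = \hat{x}_k^{-} + K_k \left( z_k - H \hat{x}_k^{-} \right)
\tag{7}
$$

where the prior estimate of the state variable $\hat{x}_k^{-}$ can be obtained by the extrapolation formula:

$$
\hat{x}_k^{-} = A \hat{x}_{k-1}
\tag{8}
$$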

System iterative updates can be achieved by Equations (7) and (8), which achieves Kalman estimation for all measured values.

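After Kalman filtering, Kalman smoothing needs to be performed on the filtering results. According to the Kalman backward smoothing equations, the smoothed estimate of the state variable $\hat{x}_k^{s}$ and the smoothed error covariance $P_k^{s}$ can be obtained by:

$$
\hat{x}_k^{s} = \hat{x}_k + J_k \left( \hat{x}_{k+1}^{s} - \hat{x}_{k+1}^{-} \right), \qquad
P_k^{s} = P_k + J_k \left( P_{k+1}^{s} - P_{k+1}^{-} \right) J_k^{\mathsf{T}}
\tag{9}
$$

where $\hat{x}_k$ and $P_k$ are the filtered (posterior) estimate and error covariance, $\hat{x}_{k+1}^{-}$ and $P_{k+1}^{-}$ are the corresponding prior quantities, and $J_k$ is defined as:

$$
J_k = P_k A^{\mathsf{T}} \left( P_{k+1}^{-} \right)^{-1}
\tag{10}
$$

The optimized vector $\tilde{\mathbf{g}}_j = [\tilde{g}^{1}_{j}, \tilde{g}^{2}_{j}, \ldots, \tilde{g}^{N}_{j}]^{\mathsf{T}}$ can be obtained based on the Kalman smoother by Equations (9) and (10), where $\tilde{g}^{i}_{j}$ is the feature element after Kalman filtering and smoothing, $N$ is the total number of database images, and $j$ is the index of feature vectors. As 18 Gist features are extracted from a database image, the range of $j$ satisfies $1 \le j \le 18$.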
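The filtering and smoothing pass over one feature column can be sketched as below. This is a minimal scalar sketch, assuming a random-walk state model with scalar gains `a` and `h` and hand-picked noise variances `q` and `r`; these parameter values and the function name are hypothetical and are not taken from this paper.

```python
import numpy as np

def kalman_smooth(z, a=1.0, h=1.0, q=1e-3, r=1e-1, x0=None, p0=1.0):
    """Scalar Kalman filter followed by a backward (Rauch-Tung-Striebel) smoother."""
    z = np.asarray(z, dtype=float)
    n = z.size
    x_prior = np.zeros(n)
    p_prior = np.zeros(n)
    x_post = np.zeros(n)
    p_post = np.zeros(n)

    x_prev = z[0] if x0 is None else x0  # assumed initialization
    p_prev = p0
    for k in range(n):
        # Time update: extrapolate the state and the error covariance.
        x_prior[k] = a * x_prev
        p_prior[k] = a * p_prev * a + q
        # Measurement update: gain, posterior estimate, posterior covariance.
        gain = p_prior[k] * h / (h * p_prior[k] * h + r)
        x_post[k] = x_prior[k] + gain * (z[k] - h * x_prior[k])
        p_post[k] = (1.0 - gain * h) * p_prior[k]
        x_prev, p_prev = x_post[k], p_post[k]

    # Backward smoothing pass, starting from the last filtered estimate.
    x_s = x_post.copy()
    p_s = p_post.copy()
    for k in range(n - 2, -1, -1):
        j = p_post[k] * a / p_prior[k + 1]
        x_s[k] = x_post[k] + j * (x_s[k + 1] - x_prior[k + 1])
        p_s[k] = p_post[k] + j * (p_s[k + 1] - p_prior[k + 1]) * j
    return x_s, p_s

# Hypothetical noisy feature column around a constant level.
rng = np.random.default_rng(0)
noisy_column = 2.0 + 0.3 * rng.standard_normal(200)
smoothed_column, smoothed_var = kalman_smooth(noisy_column)
```

In practice the function would be applied to each of the 18 columns of the Gist feature matrix separately; the ratio `q / r` controls how strongly the column is smoothed and would need tuning so that genuine scene changes are not smoothed away.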

3.2. Image Clustering Based on CUSUM Change-Point Detection

Change-point detection on Gist features of database images is to group the successive database images between change points into one cluster, thereby realizing scene-level database image clustering. For a random process that occurs in chronological order, change-point detection is detecting whether the distribution or distribution parameters of random elements in the process suddenly change at a certain moment. In this paper, change points are detected on the Gist features extracted from successive database images, thereby finding the database image in which the indoor scene changes. When the change-point detection on all Gist features is completed, the database images between the change points are grouped into one cluster, and these images are deemed to be acquired in the same scene (e.g., office, kitchen, corridor, etc.). The rationality of the algorithm is that when constructing the off-line 3D indoor map, the database camera successively captures the database images in the same scene. Therefore, the obtained database images are successive in the same scene. That is, the Gist features of the database images have a certain correlation. Once the indoor scene changes, it is possible to perceive the occurrence of such a change by detecting the change points of the Gist features.

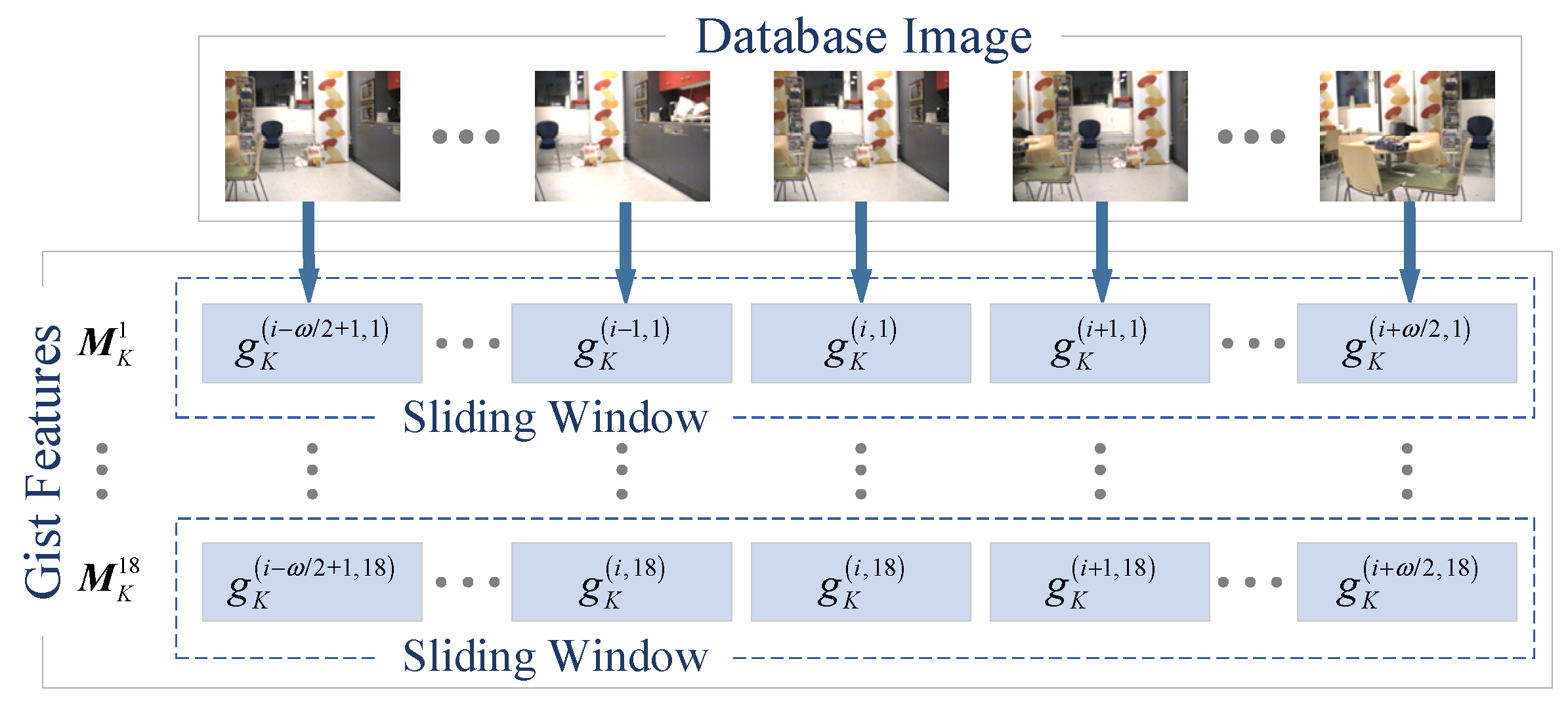

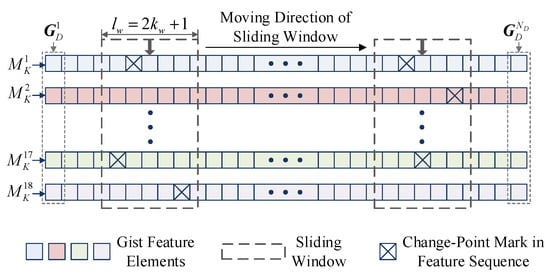

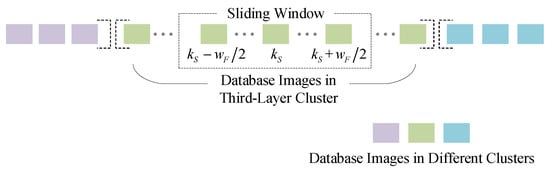

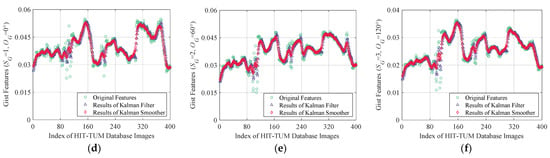

The CUSUM (Cumulative Sum) algorithm used for change-point detection in this paper is an anomaly detection method commonly used in industrial fields. The CUSUM algorithm is generally applied to all data to detect change points. For data located at a certain position in the sequence, other data in front of and behind this position are used for change-point detection. However, such a detection method will undoubtedly increase the time overhead, especially as the amount of historical data becomes larger and larger with time, which will eventually cause excessive time overhead. Therefore, a sliding window is introduced to constrain the number of Gist features that need to be processed in change-point detection, as shown in Figure 3.

Figure 3. Diagram of sliding windows in CUSUM change-point detection.

As shown in Figure 3, an 18-dimensional Gist feature vector can be extracted with different scales and orientations for each database image. After Kalman filtering and smoothing, each Gist feature can be represented as $\tilde{g}^{i}_{j}$, where the superscript $i$ indicates the index of the database image, and the subscript $j$ indicates the element position in the feature vector. Gist features extracted from different database images that are located in the same column $j$ share the same scale and orientation. In order to constrain the data size during change-point detection, a sliding window of size $w$ is implemented in CUSUM change-point detection. Specifically, if the currently detected position is $i$, and the corresponding Gist feature is $\tilde{g}^{i}_{j}$, only the features inside the sliding window around $\tilde{g}^{i}_{j}$ are considered. In addition, the change-point detection algorithm is only applied to features that have the same scale and orientation. Different Gist feature sequences, such as $\tilde{\mathbf{g}}_1$, $\tilde{\mathbf{g}}_2$, $\ldots$, and $\tilde{\mathbf{g}}_{18}$, should be detected separately.

In CUSUM change-point detection, the sequence of successively acquired database images is treated as a time series, so the acquisition time corresponds to the index of the database image in the sequence. Therefore, the change-point detection in this paper detects the position at which the change point appears in the image sequence. The CUSUM change-point detection algorithm estimates the position of the change point in the sequence by calculating the parameter models of Gist feature sequences. The probability density function is employed to determine the positions of change points in a Gist feature sequence. For a Gist feature sequence $\tilde{\mathbf{g}}_j$, a sub-sequence within the sliding window can be obtained, and change-point detection only acts on the feature element $\tilde{g}^{i}_{j}$. According to the position of $\tilde{g}^{i}_{j}$, the sub-sequence can be divided into two sub-sequences: $\mathbf{s}_A$ (the features preceding $\tilde{g}^{i}_{j}$ in the window) and $\mathbf{s}_B$ (the features following it).

According to the Neyman–Pearson lemma, the core of CUSUM change-point detection can be considered a hypothesis-test problem. For this hypothesis test, the null hypothesis H0 is the case that the feature element is not a change point. In this case, the indoor scene corresponding to the database image does not change. In contrast to the null hypothesis, the alternative hypothesis H1 indicates that the scene changes, in which case the feature element does not satisfy the previous parameter model. The purpose of the CUSUM algorithm is to monitor the feature sequence and determine the point at which hypothesis H0 gives way to H1.

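For the sub-sequences $\mathbf{s}_A$ and $\mathbf{s}_B$ in the sliding window, the feature elements in the sequences are considered independent variables that follow a normal distribution, so two parameter models, namely, parameter model A and parameter model B, can be obtained.

The probability density functions of the two parameter models are $p_A(x)$ and $p_B(x)$, which satisfy:

$$
p_A(x) = \frac{1}{\sqrt{2\pi}\,\sigma_A} \exp\!\left( -\frac{(x - \mu_A)^2}{2\sigma_A^2} \right), \qquad
p_B(x) = \frac{1}{\sqrt{2\pi}\,\sigma_B} \exp\!\left( -\frac{(x - \mu_B)^2}{2\sigma_B^2} \right)
$$

where $\mu_A$ and $\sigma_A^2$ are the expectation and variance of parameter model A, and $\mu_B$ and $\sigma_B^2$ are the expectation and variance of parameter model B.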

If the hypothesis test is applied to the feature elements in the sliding window, the probability density function under the null hypothesis H0 is $p_A(\cdot)$, and the probability density function under the alternative hypothesis H1 is $p_B(\cdot)$. Thus, a likelihood ratio function relating to the feature element $\tilde{g}^{i}_{j}$ can be obtained by:

Based on the likelihood ratio function, a cumulative sum function can be defined as:

For the CUSUM algorithm, the position of the change point can be calculated by a given threshold $h$:

According to the detection principle shown in (17), a threshold must be set in advance when employing the typical CUSUM algorithm for change-point detection. However, in many cases, it is difficult to determine the threshold for change-point detection due to the complexity and diversity of indoor scenes. Therefore, an improved cumulative sum change-point detection (ICSCD) algorithm is proposed to identify change points without a given threshold.
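The dependence of the typical CUSUM algorithm on a preset threshold can be seen in a minimal sketch. It assumes the common recursive (Page) form of CUSUM for a shift in the mean of Gaussian data with known pre-change mean `mu0`, post-change mean `mu1`, and standard deviation `sigma`; the exact form of the detection rule in (17) may differ, and all names and values here are hypothetical.

```python
def cusum_detect(x, mu0, mu1, sigma, threshold):
    """Return the first index at which the cumulative sum exceeds the threshold.

    Returns None if no alarm is raised. Each increment is the Gaussian
    log-likelihood ratio of the post-change model against the pre-change model.
    """
    g = 0.0
    for k, xk in enumerate(x):
        s = (mu1 - mu0) / sigma ** 2 * (xk - 0.5 * (mu0 + mu1))
        g = max(0.0, g + s)  # reset at zero so evidence before the change is discarded
        if g > threshold:
            return k
    return None

# Hypothetical noise-free sequence with a mean shift at index 10.
sequence = [0.0] * 10 + [1.0] * 10
alarm_high = cusum_detect(sequence, mu0=0.0, mu1=1.0, sigma=0.5, threshold=5.0)
alarm_low = cusum_detect(sequence, mu0=0.0, mu1=1.0, sigma=0.5, threshold=1.0)
print(alarm_high, alarm_low)
```

The alarm index moves with the threshold: a larger threshold delays detection, while a smaller one raises the alarm sooner but is more easily triggered by noise. This sensitivity is what motivates a threshold-free variant.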

Since the probability density functions of the two sub-sequences in the sliding window are subject to a normal distribution, the likelihood ratio function can be expanded as:

If the variances of parameter model A and parameter model B are considered identical (i.e., $\sigma_A^2 = \sigma_B^2 = \sigma^2$), then the likelihood ratio function can be simplified as:
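For reference, the standard derivation under these assumptions is sketched here. It assumes that the likelihood ratio is taken in logarithmic form, $s(x) = \ln\left[ p_B(x) / p_A(x) \right]$, with the H1 model in the numerator; the symbols $s$, $\mu_A$, $\mu_B$, $\sigma_A$, $\sigma_B$, and $\sigma$ follow the usual Gaussian notation and may differ from the notation of the numbered equations.

$$
\begin{aligned}
s(x) &= \ln \frac{p_B(x)}{p_A(x)}
      = \ln \frac{\sigma_A}{\sigma_B}
        + \frac{(x - \mu_A)^2}{2\sigma_A^2}
        - \frac{(x - \mu_B)^2}{2\sigma_B^2}, \\
s(x)\big|_{\sigma_A = \sigma_B = \sigma}
     &= \frac{(x - \mu_A)^2 - (x - \mu_B)^2}{2\sigma^2}
      = \frac{\mu_B - \mu_A}{\sigma^2} \left( x - \frac{\mu_A + \mu_B}{2} \right).
\end{aligned}
$$

Under the equal-variance assumption, the variance only scales the result, so the numerator $(\mu_B - \mu_A)\left( x - \tfrac{\mu_A + \mu_B}{2} \right)$ carries all the information about whether $x$ is better explained by model B than by model A.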

The cumulative sum function of $\tilde{g}^{i}_{j}$ in the proposed ICSCD algorithm is defined as:

where the expectations $\mu_A$ and $\mu_B$ corresponding to parameter models A and B can be calculated by:

According to Equation (20), the cumulative sum function depends on three variables: the Gist feature element $\tilde{g}^{i}_{j}$ tested as the change point, the expectation $\mu_A$ of parameter model A, and the expectation $\mu_B$ of parameter model B. Therefore, the numerator of Equation (20) can be defined as a change-point detection function to monitor whether the indoor scene changes at position $i$:

Based on the above analysis, Gist feature sequences can be processed by the change-point detection function. The specific process is as follows: first, the values of the change-point detection function are calculated for every feature element $\tilde{g}^{i}_{j}$ whose sliding window lies entirely within the sequence, where $w$ is the size of the window and $N$ is the total number of database images. Second, the peaks of the discrete values of the function are detected, and the peaks correspond to the change points in the feature sequences. More than one Gist feature sequence is extracted from the database images (18 feature sequences are extracted in this paper, i.e., $\tilde{\mathbf{g}}_j$, $1 \le j \le 18$), and each feature sequence needs to be detected separately. Therefore, a strategy is needed to integrate the change points detected in each feature sequence and find the images corresponding to indoor scene changes.
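The two steps above can be sketched for a single feature sequence. The sketch assumes a detection function of the form $(\mu_B - \mu_A)\left( x - \tfrac{\mu_A + \mu_B}{2} \right)$, with $\mu_A$ and $\mu_B$ taken as the sample means of the `w` elements before and after the tested element; this form, the exclusion of the tested element from both sub-sequences, and the simple peak rule with a minimum height are all assumptions, not the exact definitions of this paper.

```python
import numpy as np

def detection_values(seq, w):
    """Evaluate the assumed change-point detection function with a sliding window."""
    seq = np.asarray(seq, dtype=float)
    n = seq.size
    values = np.zeros(n)  # positions without a complete window keep the value 0
    for i in range(w, n - w):
        mu_a = seq[i - w:i].mean()          # sample mean of the elements before i
        mu_b = seq[i + 1:i + 1 + w].mean()  # sample mean of the elements after i
        values[i] = (mu_b - mu_a) * (seq[i] - 0.5 * (mu_a + mu_b))
    return values

def find_peaks_simple(values, min_height):
    """Indices of local maxima that exceed min_height (hypothetical peak rule)."""
    peaks = []
    for i in range(1, len(values) - 1):
        if values[i] > min_height and values[i] > values[i - 1] and values[i] >= values[i + 1]:
            peaks.append(i)
    return peaks

# Hypothetical smoothed feature sequence with a scene change at image 30.
seq = np.concatenate([np.zeros(30), np.ones(30)])
peaks = find_peaks_simple(detection_values(seq, w=10), min_height=0.1)
print(peaks)  # [30]
```

Because the function is evaluated relative to the local means on both sides, no global threshold on the cumulative sum is needed; only the peak rule has to separate genuine changes from small fluctuations.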

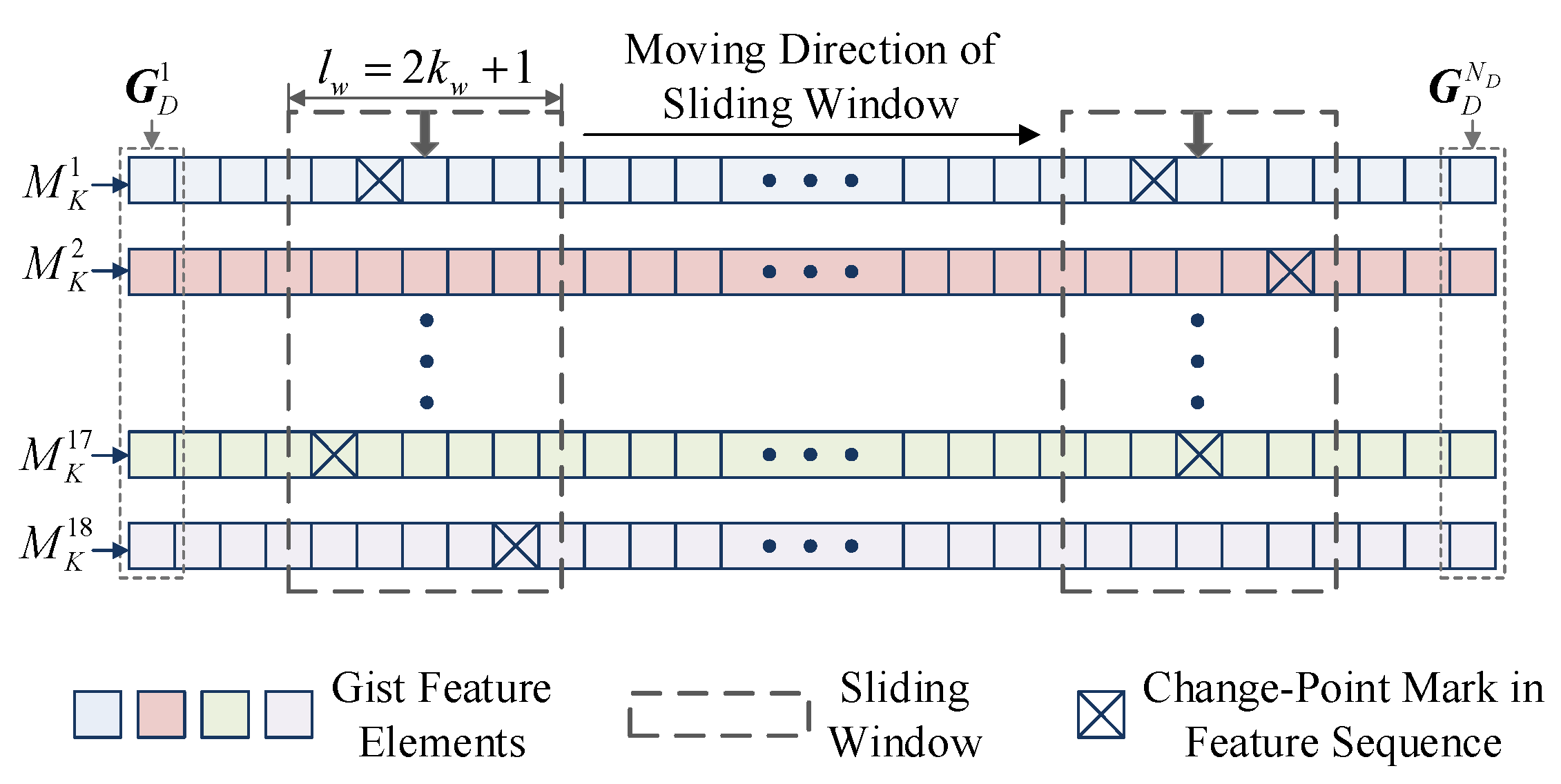

Since 18 Gist feature elements are extracted from each database image, an $N \times 18$ matrix containing change-point marks can be obtained for $N$ database images. Each mark in the matrix represents whether the Gist feature element at this position is a change point. Specifically, if the feature element at one position is detected as a change point, the value of the mark at this position is defined as 1. Otherwise, the value is defined as 0. As shown in Figure 4, a window with the size $l$ ($l$ is an odd number, namely $l = 2k + 1$, and $k$ is a positive integer) slides on the matrix, and the values of the marks in the window are added up. The accumulated value of the marks is the total number of change points in the window. If the accumulated value of the marks in the window exceeds a given threshold, the center of the window is considered the position of the change point.

Figure 4. Diagram of change-point detection for database images.
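The integration of change points across the 18 feature sequences can be sketched as a voting step. The sketch assumes that the mark matrix has one row per database image and one column per Gist feature, and that the window covers an odd number of consecutive rows across all columns; the function name, window size, and threshold are hypothetical.

```python
import numpy as np

def fuse_change_points(marks, window, threshold):
    """Return the image indices at which the marks inside the window exceed the threshold."""
    marks = np.asarray(marks)
    if window % 2 == 0:
        raise ValueError("window must be an odd number")
    half = window // 2
    per_image = marks.sum(axis=1)  # number of marked features for each image
    change_images = []
    for center in range(half, marks.shape[0] - half):
        total = per_image[center - half:center + half + 1].sum()
        if total > threshold:
            change_images.append(center)
    return change_images

# Hypothetical marks: different features fire on images 9, 10, and 11.
marks = np.zeros((20, 18), dtype=int)
marks[9, :6] = 1
marks[10, 6:12] = 1
marks[11, 12:] = 1
print(fuse_change_points(marks, window=3, threshold=12))  # [10]
```

In this example no single image collects more than six marks, but the window centered on image 10 gathers all 18, so slightly misaligned detections from different features still agree on one scene change.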

4. Feature Tracking-Based Image Clustering, Hierarchical Retrieval and Visual Localization

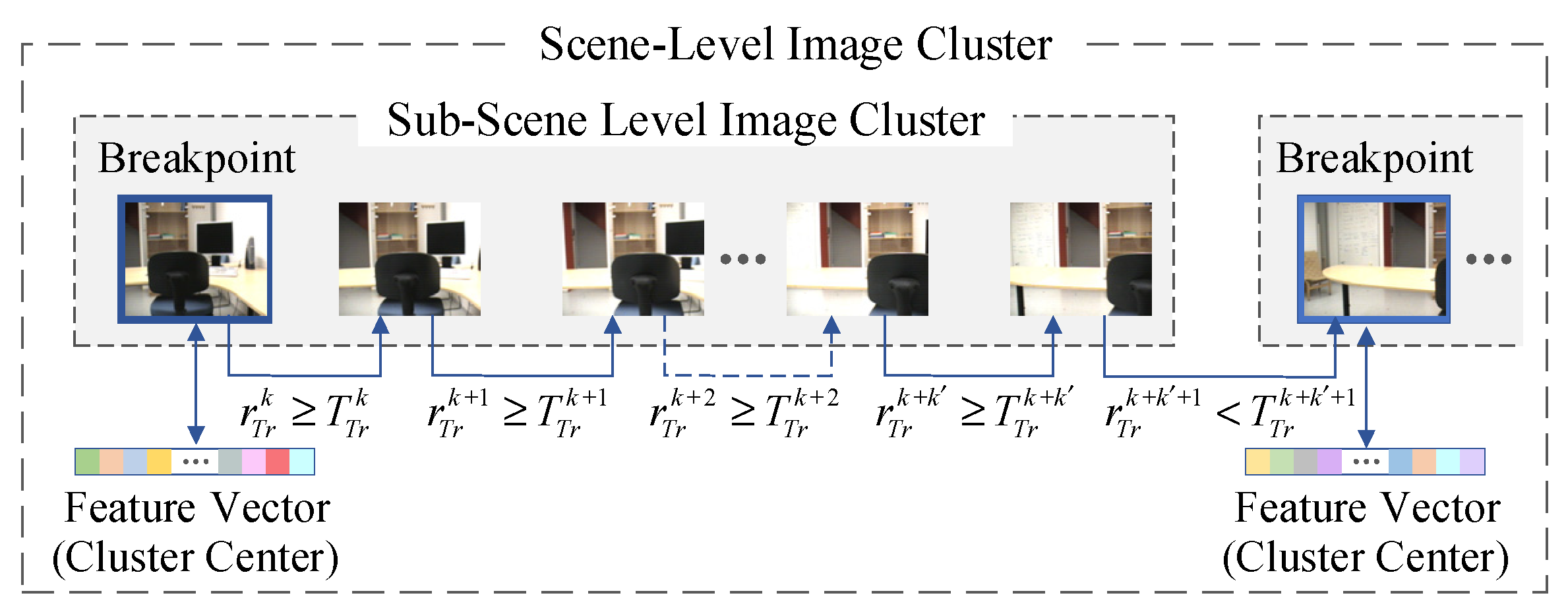

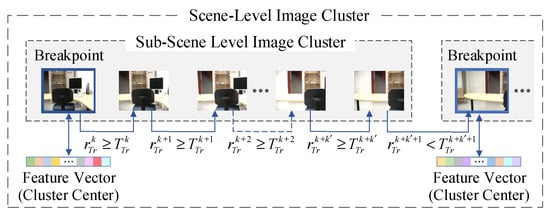

Based on the proposed ICSCD algorithm, scene-level database image clustering can be achieved by global visual features of database images. However, in the implementation of database construction, there are usually too many database images acquired in the same indoor scene, which leads to excessive time overheads of image retrieval in the on-line stage. Therefore, the database images in the same scene-level cluster are further grouped to achieve sub-scene-level clustering. For sub-scene-level clustering, local visual features of images are employed. In the process of local feature tracking, if the change rate of the number of tracked features is below the given threshold, the database image is defined as a breakpoint image. The database images between the two breakpoint images are acquired in the same sub-scene, so the images between the breakpoints are grouped into one sub-cluster.
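The breakpoint rule can be sketched as follows, assuming that the change rate is the relative change in the number of tracked features between adjacent images and that a breakpoint is declared when this rate falls below a negative threshold. The definition of the rate, the threshold value, and the feature counts are hypothetical.

```python
def find_breakpoints(tracked_counts, rate_threshold):
    """Indices of images whose tracked-feature count drops sharply (assumed rule)."""
    breakpoints = []
    for i in range(1, len(tracked_counts)):
        prev, cur = tracked_counts[i - 1], tracked_counts[i]
        if prev == 0:
            continue  # no features to track from the previous image
        rate = (cur - prev) / prev
        if rate < rate_threshold:
            breakpoints.append(i)
    return breakpoints

def split_at_breakpoints(n_images, breakpoints):
    """Group image indices into sub-clusters delimited by the breakpoint images."""
    edges = [0] + list(breakpoints) + [n_images]
    return [list(range(a, b)) for a, b in zip(edges[:-1], edges[1:]) if b > a]

# Hypothetical numbers of tracked features in one scene-level cluster.
counts = [200, 190, 185, 60, 58, 55, 20, 19]
breaks = find_breakpoints(counts, rate_threshold=-0.5)
print(breaks)                                     # [3, 6]
print(split_at_breakpoints(len(counts), breaks))  # [[0, 1, 2], [3, 4, 5], [6, 7]]
```

Here each breakpoint image starts a new sub-cluster; whether the breakpoint image belongs to the preceding or the following sub-cluster is a design choice that the sketch fixes arbitrarily.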

4.1. Image Clustering Based on KLT Feature Tracking

A database image can be described by the gray-level function $I(x, y, t)$, where $(x, y)$ is the position of a pixel on the image, and $t$ represents the time stamp. Since database images are successively acquired, the time stamp $t$ is equivalent to the image index. In addition, the interval of image acquisition is small, resulting in high visual correlations between database images, which is the precondition for using feature tracking for image breakpoint detection. When the database camera moves within a sub-scene, adjacent database images overlap, and a certain number of local features on the images can be tracked. According to this characteristic of database images, a KLT (Kanade–Lucas–Tomasi) feature tracking-based image-clustering method is proposed in this section.

The features are continuously tracked between the two adjacent database images with index and by the KLT algorithm. Let denote an image cluster in the scene-level clustering results. In the off-line stage, the local visual features (i.e., ORB features) are extracted from images and stored in the database used in the sub-scene-level image clustering. Let present an ORB feature matrix consisting of the vector , where is the index of the database image, is the index of feature vectors, and is the index of elements in a feature vector. For each ORB feature extracted from an image, there is a feature vector (i.e., ) and a position vector (i.e., ) corresponding to the ORB feature, where is expressed in the image coordinate system.

To track features, a rectangular window on the image needs to be set whose length is pixels and whose width is pixels. Based on the assumptions of constant brightness, time continuity, and spatial consistency in the rectangular window, it is considered that the matching feature points on the database image satisfy the following relationship:

where is the gray value of the feature point located at on the i-th database image. For the next database image, is the gray value of the feature located at . and denote the displacement distances in the X and Y directions on the image, respectively. Equation (24) indicates that for the matching feature points on the database image, only the displacement changes occur on the adjacent images, and the magnitude of the gray values does not change. The core of feature tracking is to solve .

In order to obtain the displacement change, a sum of the squared intensity difference function is defined as:

Taking the derivative of the sum of the squared intensity difference function and setting it to zero, the optimal solution of the displacement can be obtained by:

where and are abbreviated to and , respectively.

Based on the Taylor formula, the first-order approximation of Equation (26) on can be obtained by:

Equation (27) can be further expressed as:

where , , and are:

Let Equation (28) be equal to zero, and the optimal solution can be obtained by:
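For reference, the standard Lucas–Kanade least-squares derivation that this subsection follows can be written compactly as below. The notation is assumed for illustration ($I$ and $J$ for two adjacent images, $W$ for the tracking window, $\mathbf{d} = (d_x, d_y)^{\top}$ for the displacement, $\mathbf{g}$ for the image gradient) and may differ from the symbols used in the numbered equations of this paper.

```latex
% Brightness constancy between adjacent images I (index t) and J (index t+1)
I(x, y) = J(x + d_x,\; y + d_y)

% Sum of squared intensity differences over the tracking window W
\epsilon(\mathbf{d}) = \sum_{(x, y) \in W} \bigl[ J(x + d_x,\; y + d_y) - I(x, y) \bigr]^{2}

% First-order Taylor expansion of J around d = 0, with g = (\partial J/\partial x, \partial J/\partial y)^T
J(x + d_x,\; y + d_y) \approx J(x, y) + \mathbf{g}^{\top} \mathbf{d}

% Setting the derivative of \epsilon with respect to d to zero
\sum_{(x, y) \in W} \mathbf{g} \bigl[ J(x, y) - I(x, y) + \mathbf{g}^{\top} \mathbf{d} \bigr] = \mathbf{0}

% Resulting 2x2 linear system and its solution
G = \sum_{(x, y) \in W} \mathbf{g}\,\mathbf{g}^{\top}, \qquad
\mathbf{e} = \sum_{(x, y) \in W} \bigl[ I(x, y) - J(x, y) \bigr] \mathbf{g}, \qquad
\mathbf{d} = G^{-1} \mathbf{e}
```

The system is solvable only when $G$ is well conditioned, which is why tracking is restricted to textured points such as corners.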

Initialization should be applied to database image set , which means that the first database image in the set is defined as a breakpoint. From the second database image in the set , the KLT algorithm is employed to track local features between the adjacent images. Let denote the index of the breakpoint image, and then the number of tracked features can be presented by the vector , where is the number of tracked features corresponding to the image with index . To determine the position of the breakpoint image, a threshold is required to monitor the number of tracked features. For the database image with index , the corresponding breakpoint image-detection threshold is defined as:

where is the scale coefficient, and the change rate of the number of tracked features is .

The change rate is compared with the threshold to decide whether an image is a breakpoint image. Specifically, if , the database image with index is regarded as a breakpoint image, as shown in Figure 5. Breakpoint detection is applied to each scene-level clustering result, so that all breakpoint images in the database image set can be found. The database images between two breakpoint images, together with the first of the two breakpoint images, are grouped into one cluster, which achieves the sub-scene-level image clustering. The feature vector of the breakpoint image of each cluster is the cluster center. That is, the feature vector of the first image in the cluster is the cluster center (as shown in Figure 5). In the i-th scene-level cluster, the center of the j-th sub-scene-level cluster can be denoted by , which is an ORB feature vector.

Figure 5.

Illustration of sub-scene-level clustering based on feature tracking.
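The grouping step can be illustrated with a short sketch. It simplifies the procedure above in two ways that are assumptions of this example: the change rate is taken to be the ratio of consecutive tracked-feature counts, and a fixed threshold `min_rate` replaces the adaptive threshold with a scale coefficient. The function names and the sample counts are hypothetical.

```python
def detect_breakpoints(tracked_counts: list[int], min_rate: float) -> list[int]:
    """Return the indices of breakpoint images within one scene-level cluster.

    tracked_counts[i] is the number of features tracked into image i.
    The first image is always a breakpoint (initialization step).
    """
    breakpoints = [0]
    for i in range(1, len(tracked_counts)):
        previous = tracked_counts[i - 1]
        rate = tracked_counts[i] / previous if previous > 0 else 0.0
        if rate < min_rate:  # sharp drop in tracked features: new sub-scene
            breakpoints.append(i)
    return breakpoints

def group_by_breakpoints(n_images: int, breakpoints: list[int]) -> list[list[int]]:
    """Each sub-cluster runs from a breakpoint up to, but not including, the next one."""
    bounds = breakpoints + [n_images]
    return [list(range(bounds[k], bounds[k + 1])) for k in range(len(breakpoints))]

# Hypothetical tracked-feature counts for eight consecutive images.
counts = [200, 190, 185, 60, 180, 175, 50, 170]
bps = detect_breakpoints(counts, min_rate=0.5)
print(bps)
print(group_by_breakpoints(len(counts), bps))
```

The first image of each group is the breakpoint image, so its feature vector would serve as the sub-cluster center.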

4.2. Hierarchical Image Retrieval and Visual Localization

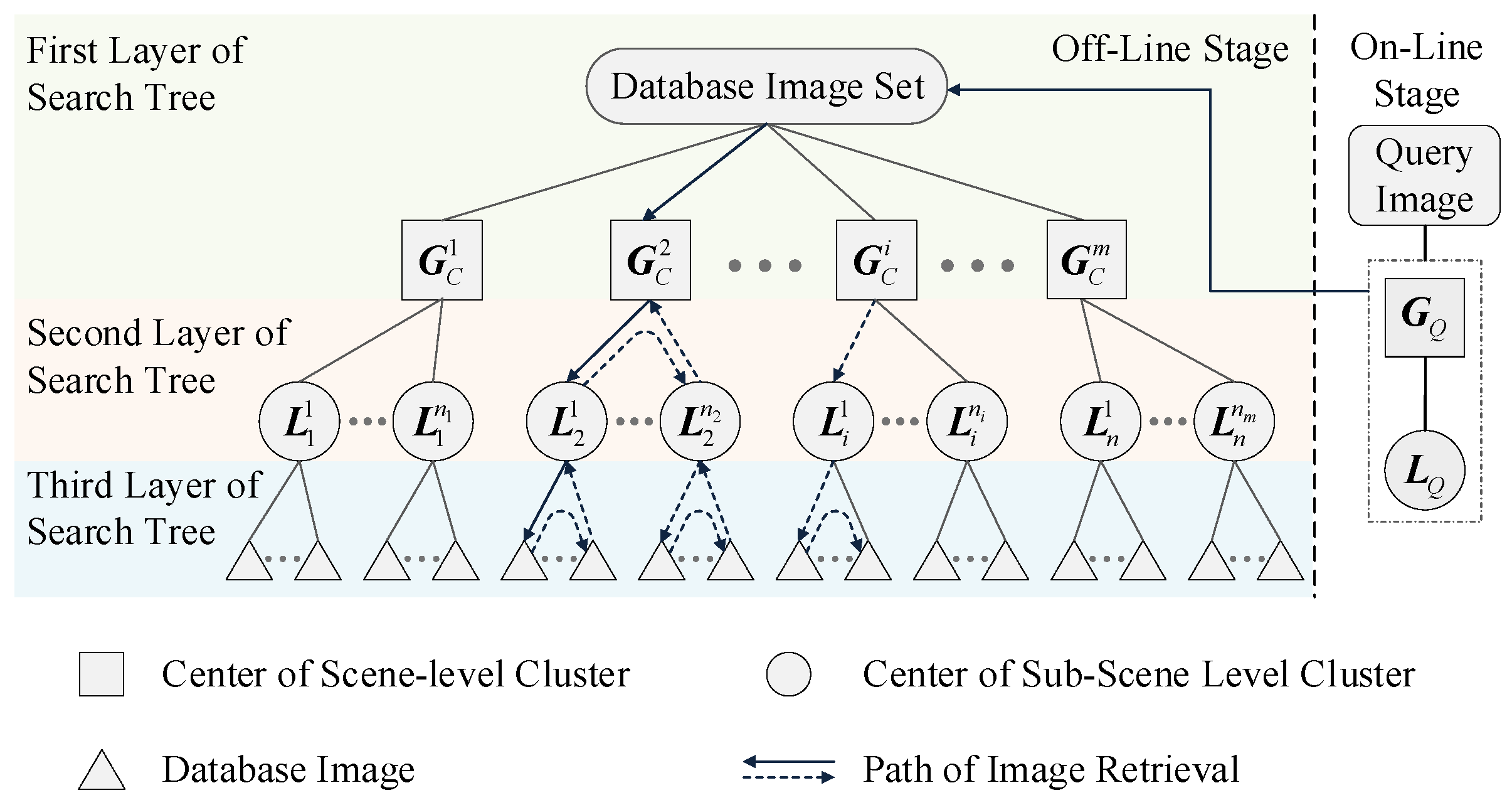

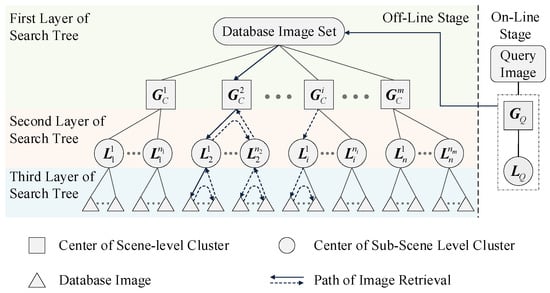

In the off-line stage, database images are hierarchically grouped, and the clustering results are organized into a search tree with three layers: (1) the first layer contains the centers of scene-level clusters, (2) the second layer consists of the centers of sub-scene-level clusters, and (3) the third layer contains the database images in sub-scene-level clusters. It should be noted that the center of a scene-level cluster is a global feature vector (i.e., a Gist feature vector), and the center of a sub-scene-level cluster is a local feature vector (i.e., an ORB feature vector). As shown in Figure 6, there are m clustering results in the first layer of the search tree, and each of them corresponds to more than one second-layer result. In addition, the database images grouped into one sub-cluster are associated with the center of that sub-cluster. Based on the organized multi-layer search tree, a hierarchical clustering-based image retrieval (HCIR) algorithm is proposed for visual localization.

Figure 6.

Schematic diagram of search tree in image hierarchical retrieval.

Based on the multi-layer search tree shown in Figure 6, hierarchical image retrieval is applied to find the most similar database image to the query image. In the on-line stage, global and local features are extracted from the query image and uploaded to the server by wireless networks. Then, the similarity between the query image and the centers of scene-level clusters can be defined as:

where is the Gist feature vector extracted from the query image, and is a scene-level cluster center.

By measuring similarities between the global features of the query image and the scene-level cluster centers, the scene-level clusters can be ranked. The query image then searches the scene-level clusters in ranked order. When the most similar scene-level cluster is found, the sub-scene-level clusters in that cluster are sorted. Specifically, suppose a query image needs to find its most similar database image in a given scene-level cluster. In that case, the local features extracted from the query image are matched with the center of each sub-scene-level cluster in it. The number of matched local features reflects the similarity between the query image and each sub-scene cluster center, and the sub-scene-level clusters are searched in descending order of this number. In this way, both scene-level clusters and sub-scene-level clusters are ranked by the visual similarity between the query image and the cluster centers. Following the ranked clusters, local feature matching is then executed in order between the query image and the database images in the third-layer clusters.
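The two ranking steps can be sketched as follows. Euclidean distance between Gist vectors is used here as a stand-in for the global similarity measure, which is an assumption of this example, and the function names and sample values are hypothetical.

```python
import numpy as np

def rank_scene_clusters(query_gist: np.ndarray, scene_centers: np.ndarray) -> list[int]:
    """Order scene-level clusters from most to least similar to the query.

    scene_centers has shape (n_clusters, n_gist_elements). A smaller Euclidean
    distance is treated as a higher similarity (assumed measure).
    """
    distances = np.linalg.norm(scene_centers - query_gist, axis=1)
    return [int(i) for i in np.argsort(distances)]

def rank_sub_clusters(match_counts: list[int]) -> list[int]:
    """Order sub-scene-level clusters by the number of local features matched
    between the query image and each sub-cluster center, largest first."""
    return sorted(range(len(match_counts)), key=lambda j: match_counts[j], reverse=True)

# Hypothetical two-element "Gist" vectors and match counts.
query = np.array([0.0, 0.0])
centers = np.array([[3.0, 4.0], [1.0, 0.0], [0.0, 2.0]])
print(rank_scene_clusters(query, centers))
print(rank_sub_clusters([12, 40, 25]))
```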

Let denote the number of matched features between the query image and the ks-th database image. Then, the feature matching ratio can be defined as:

where and is the number of the ORB features extracted from the query image.

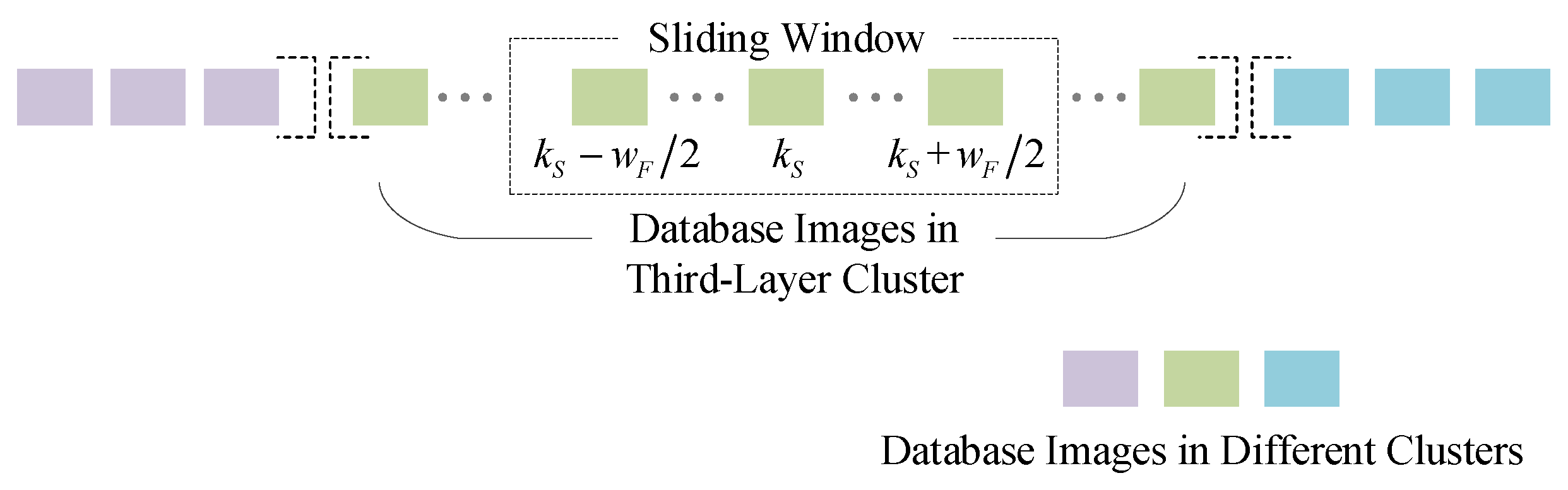

The matched features are used in visual localization, and more matched features contribute to improving localization accuracy. In addition, more matched features indicate that the query image is closer to the database image. Therefore, the best-matched database image with the query image is desired to estimate the position of the query camera in visual localization. Since database images are successively captured, when the query image is matched with database images, the trend of feature-matching ratios presents regularity. Specifically, if the query image and the database image are acquired in the same scene, when the query image is orderly matched with the database image, the trend of matching ratios first increases and then decreases. The reason is that when the query image gradually approaches the best-matched database image, the matching ratios will gradually increase until the ratio reaches a maximum value. At this position, the database image is best matched with the query image. After that, the distance between the query image and the best-matched database image gradually increases, and the matching ratios decrease. Based on the above analysis, if the maximum value of the matching ratios can be found, the best-matched database image can be determined. The method of finding the best-matched database image is named the maximum similarity method in this paper.

To find the best-matched database image, a sliding window should be set as shown in Figure 7. In a sliding window with the size , the index of the image at the center is . If the image with the index is determined as the best-matched database image, the matching ratio of the database image should satisfy:

where is the threshold of the matching ratio. The threshold ensures that the database images and the query image are captured in the same scene.

Figure 7.

Diagram of maximum similarity method for finding the best-matched database image.
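A minimal sketch of the maximum similarity method is given below. It assumes that the acceptance condition is "the matching ratio at the window center is the largest in the window and is not below the threshold", which is one plausible reading of the rule above. The names `find_best_match`, `half_width`, and `min_ratio` are hypothetical.

```python
from typing import Optional

def find_best_match(ratios: list[float], half_width: int, min_ratio: float) -> Optional[int]:
    """Return the index of the first image whose matching ratio is a local
    maximum inside a window of 2 * half_width + 1 images and is at least
    min_ratio. Return None if no window center qualifies."""
    for center in range(half_width, len(ratios) - half_width):
        window = ratios[center - half_width : center + half_width + 1]
        if ratios[center] >= min_ratio and ratios[center] == max(window):
            return center
    return None

# Matching ratios that first rise and then fall, as described in the text.
ratios = [0.10, 0.20, 0.35, 0.60, 0.40, 0.30]
print(find_best_match(ratios, half_width=1, min_ratio=0.5))
```

Because the scan stops at the first qualifying peak, images after the peak do not need to be matched, which is where the saving over a global search comes from.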

According to the ranking results of the scene-level and sub-scene-level clusters, the query image searches the clusters in order until it finds the best-matched database image. It may happen that the query image has been matched with all the database images of the most similar scene-level cluster and the best-matched database image has still not been found. Therefore, a backtracking mechanism is introduced in the proposed hierarchical retrieval. With this mechanism, when the best-matched database image cannot be found after comparison with all the database images in a scene-level cluster, the query image returns to the top of the search tree and continues with the next cluster in the ranking, and so on. In the worst case, all database images are compared with the query image, and the best-matched database image is still not found. The database image with the maximal matching ratio is then taken as the best-matched image, although in this case the distance between the query image and that database image may be large.
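The control flow of retrieval with backtracking can be sketched as follows. The search tree is represented as nested lists that are already ranked, and a simple threshold on the best matching ratio stands in for the sliding-window test. Both choices, along with the names `retrieve_with_backtracking`, `match_ratio`, and `min_ratio`, are assumptions of this example.

```python
from typing import Callable, Optional

def retrieve_with_backtracking(
    ranked_tree: list[list[list[int]]],
    match_ratio: Callable[[int], float],
    min_ratio: float,
) -> tuple[Optional[int], int]:
    """Search ranked scene clusters -> ranked sub-clusters -> images.

    Returns (best image id, number of images compared). If no image reaches
    min_ratio, the image with the maximal ratio over the whole database is
    returned, which mirrors the worst case described in the text.
    """
    best_id, best_ratio, compared = None, -1.0, 0
    for scene_cluster in ranked_tree:      # moving to the next entry is the backtracking step
        for sub_cluster in scene_cluster:
            for image_id in sub_cluster:
                ratio = match_ratio(image_id)
                compared += 1
                if ratio > best_ratio:
                    best_id, best_ratio = image_id, ratio
            if best_ratio >= min_ratio:    # accepted: stop without visiting later clusters
                return best_id, compared
    return best_id, compared

# Hypothetical matching ratios for six database images in two scene clusters.
ratios = {0: 0.1, 1: 0.2, 2: 0.3, 3: 0.7, 4: 0.4, 5: 0.2}
tree = [[[0, 1], [2]], [[3, 4], [5]]]
print(retrieve_with_backtracking(tree, ratios.get, min_ratio=0.5))
```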

By the proposed HCIR algorithm, the best-matched database image with the query image can be found in the database, and the best-matched database image has the following characteristics: (1) the database image is captured in the same scene as the query image; (2) there are a number of matching feature points between the query image and the database image. If the query image is considered to be coincident with the position of the best-matched database image, a preliminary position estimation of the query camera can be achieved. However, this position estimation method is subject to the acquisition density of the database images. In order to improve the localization accuracy, the top-K best-matched database images are selected and used to estimate the position of the query image.

4.3. Visual Localization Based on Weighted KNN Method and Armijo–Goldstein Algorithm

In practical localization scenarios, images with higher similarity tend to be captured closer together. That is, the more similar the query image and a database image are, the smaller the distance between their capture positions is. Based on this observation, a weighted KNN-based visual localization method is proposed, in which a matched database image with a higher similarity is assigned a larger weight. The similarity between images is evaluated by the number of matched feature points.

The top-K best-matched database images with the query image are regarded as the nearest neighbors to estimate the query position, so the localization error function can be defined as:

where is the estimated position of the query image, and is the position of the database image. is the weight that can be calculated by:

where denotes the number of matched feature points between the query image and the database image.

For Equation (37), the Armijo–Goldstein algorithm is used to solve the estimated position of the query image (i.e., the position of the query camera) [60]. The gradient vector of at is:

According to the gradient vector, the search direction can be further determined by . The procedure of visual positioning can be treated as a line search, as shown in Algorithm 1. The count flag , index , maximum number of iterations (=5000), threshold (=), amplification coefficient (=0.4), and step length (=0.01) are set as inputs.

| Algorithm 1: Visual localization based on Armijo–Goldstein algorithm |

|

With the visual localization method, the estimated position of the query camera can be achieved by solving the line search problem, in which the similarity between the query image and the database images is reflected by the weight . Therefore, the estimated position of the query camera is closer to the database images with high similarity.
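The position estimation can be illustrated with the sketch below. It makes several assumptions that should be checked against the paper's own equations: the error function is taken to be the weighted sum of squared distances to the top-K database positions, the weights are the normalized numbers of matched feature points, and the line search is plain backtracking with the Armijo sufficient-decrease condition only. The parameter values (`c1`, `shrink`, `tol`) are illustrative and are not the inputs of Algorithm 1.

```python
import numpy as np

def weighted_knn_position(positions, match_counts, max_iter=5000, tol=1e-8,
                          c1=1e-4, shrink=0.5):
    """Estimate the query position from the top-K database positions.

    positions: (K, 2) array of database image positions.
    match_counts: K numbers of matched feature points (used as raw weights).
    """
    positions = np.asarray(positions, dtype=float)
    weights = np.asarray(match_counts, dtype=float)
    weights = weights / weights.sum()              # assumed weight normalization

    def cost(p):
        return float(np.sum(weights * np.sum((positions - p) ** 2, axis=1)))

    def grad(p):
        return 2.0 * np.sum(weights[:, None] * (p - positions), axis=0)

    p = positions.mean(axis=0)                     # start from the unweighted mean
    for _ in range(max_iter):
        g = grad(p)
        if np.linalg.norm(g) < tol:
            break
        d = -g                                     # steepest-descent search direction
        step = 1.0
        # Shrink the step until the Armijo sufficient-decrease condition holds.
        while cost(p + step * d) > cost(p) + c1 * step * float(g @ d):
            step *= shrink
        p = p + step * d
    return p

# Hypothetical neighbours: the first one has twice as many matches as the others.
print(weighted_knn_position([[0, 0], [2, 0], [0, 2]], [100, 50, 50]))
```

Under these assumptions the minimizer is simply the weighted centroid of the neighbour positions, so the line search is not strictly needed. It matters when the error function is not a plain quadratic, which may be the case for the function actually used in the paper.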

In summary, visual localization is achieved by two steps: hierarchical clustering-based image retrieval and query camera position estimation. Since the proposed visual localization method dispenses with camera calibration, it can be widely used in different application scenarios and applied to various smart mobile terminals.

4.4. Performance Analysis on Hierarchical Image Retrieval

The proposed HCIR algorithm decreases the on-line search time at the cost of additional off-line processing time for image clustering. However, the clustering results are reusable: once database image clustering is completed, the results can be applied repeatedly to on-line image retrieval. The proposed algorithm achieves multi-layer image clustering. Compared with the single-layer clustering algorithm (i.e., only scene-level image clustering is implemented), an advantage of the proposed algorithm is that the search time does not increase linearly with the database size. Next, the computation performance of the proposed algorithm and the single-layer clustering-based algorithm is analyzed in detail. For clustering-based image retrieval, the on-line time consumption contains five parts: (1) the time to extract the global features of the query image, (2) the time to extract the local features of the query image, (3) the time to measure the similarity of global features between the query image and database images, (4) the time to match the local features between the query image and database images, and (5) the time to sort database images according to their similarity to the query image. If there are scene-level clusters in the first layer, and each cluster contains sub-scene-level clusters, the average running time of the single-layer clustering-based retrieval algorithm is:

where is the number of scene-level clusters that have been retrieved when the backtracking mechanism is enabled, and and are the numbers of database images in the scene-level cluster and the sub-scene-level cluster, respectively.

The average running time of the proposed image retrieval algorithm is:

where is the image number of sub-scene-level clusters that have been retrieved when the backtracking mechanism is enabled. In this case, if the query image does not obtain a matched database image after retrieving a complete scene-level and sub-scene-level clustering result, the time consumption of the two processes is and , respectively. The total number of database images satisfies: and , where and are image numbers of the scene-level cluster and the sub-scene-level cluster, respectively.

For single-layer clustering-based image retrieval, database images are matched with the query in order, so the average retrieval time of an image cluster is . Similarly, for the proposed algorithm, the average retrieval time of a sub-scene-level cluster is . According to the principle of multi-layer clustering, the image number is far more than . As a result, with the proposed HCIR algorithm the query image can find its matched database image in a cluster in less time. Compared with the single-layer clustering algorithm, the proposed algorithm has three additional time overheads (i.e., the time to retrieve the results, the time to match features, and the time to sort database images). However, in practical applications, feature-sorting time is much shorter than feature-matching time, and in most cases, the value of is zero. Therefore, the sum of the retrieval time and the feature-matching time is still less than the retrieval time . If the best-matched database image can be obtained without triggering the backtracking mechanism (i.e., ), the difference in time consumption between the single-layer clustering algorithm and the proposed algorithm is:

Compared with the multi-layer clustering-based algorithm, there are more database images contained in the cluster for the single-layer clustering-based algorithms. Moreover, as a multi-layer clustering-based algorithm, the proposed HCIR algorithm has priorities for retrieving the image clusters with high similarities to the query, so that the matched image can be found by searching fewer database images. Therefore, the structure of a multi-layer search tree is beneficial in reducing retrieval time consumption.
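The effect of the extra layer on the number of local-feature matching operations can be illustrated with a rough count. The sketch below is not the running-time model of this section: it only counts matching operations inside the first-ranked scene-level cluster, assumes that no backtracking is triggered, and uses hypothetical cluster sizes.

```python
def single_layer_matches(images_per_scene_cluster: int) -> int:
    """Upper bound on matches when every image in the scene cluster is compared."""
    return images_per_scene_cluster

def multi_layer_matches(sub_clusters_per_scene: int, images_per_sub_cluster: int) -> int:
    """Matches against every sub-cluster center (to rank them) plus every image
    in the first-ranked sub-cluster."""
    return sub_clusters_per_scene + images_per_sub_cluster

# Hypothetical sizes: 40 images per scene cluster, split into 8 sub-clusters of 5.
print(single_layer_matches(40))
print(multi_layer_matches(8, 5))
```

With these sizes the bound drops from 40 to 13 matching operations, and the gap widens as scene-level clusters grow while sub-cluster sizes stay roughly fixed.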

5. Experimental Results and Discussion

In this section, the hierarchical clustering-based image retrieval is implemented, and the computation performance of the retrieval algorithm is analyzed. In addition, the position accuracy of the visual localization is evaluated.

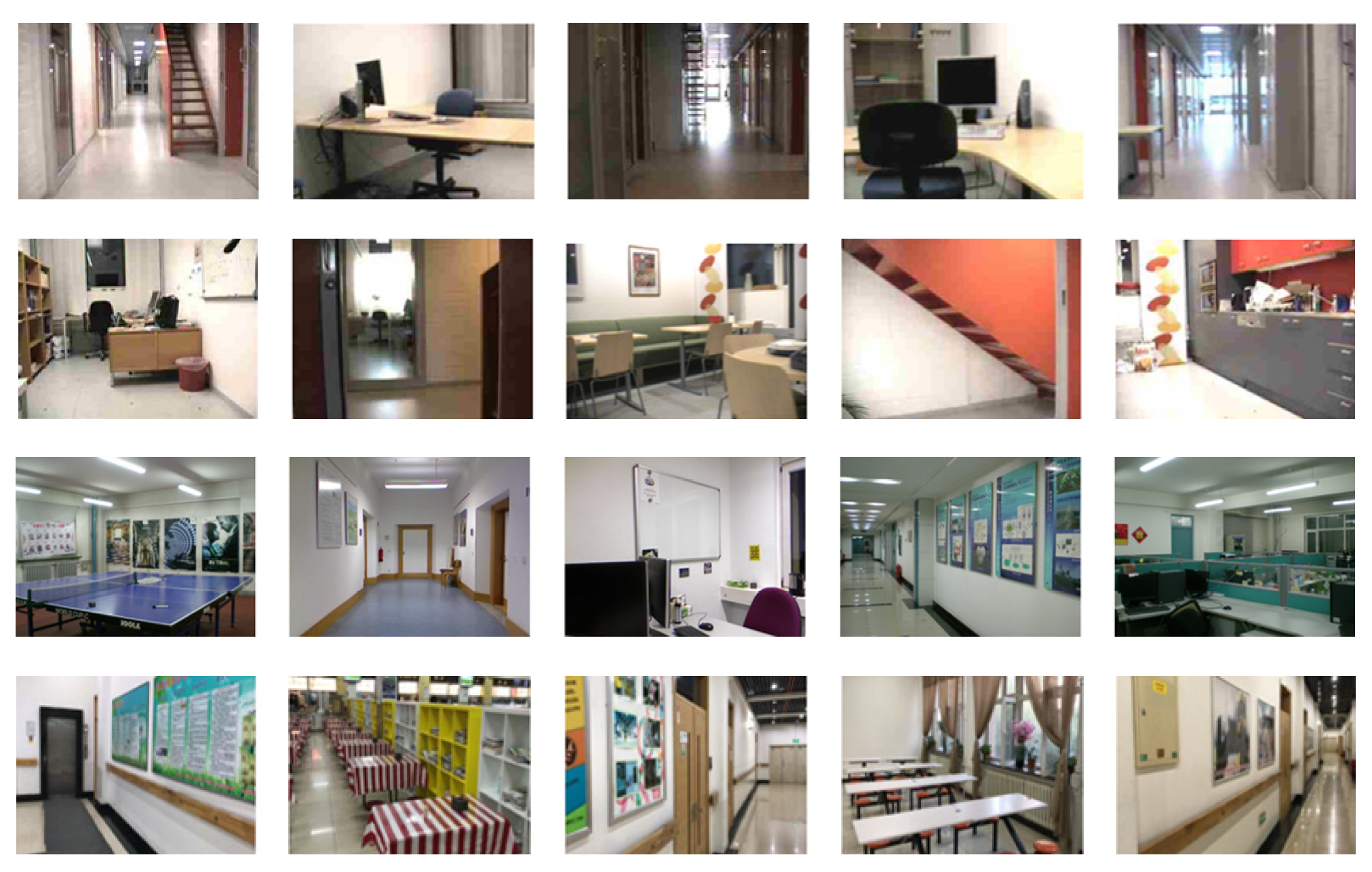

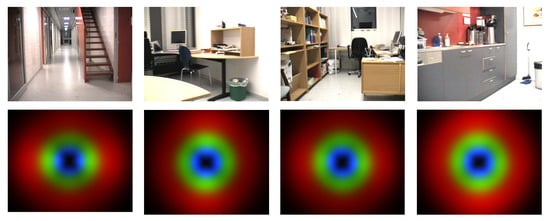

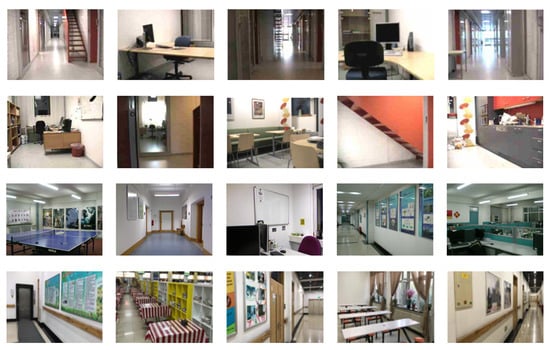

5.1. Experimental Results of Database Image-Clustering Algorithm

Two image databases (namely, the KTH image database [61] and the HIT-TUM image database) were used to evaluate the performance of the proposed algorithm. The images in the HIT-TUM database were acquired at the Harbin Institute of Technology and the Technical University of Munich. Each database contains 400 images captured in 10 different indoor scenes, such as an office, a corridor, and a restaurant. All data processing was run in MATLAB R2018a on a machine with an Intel Core i7 CPU and 8 GB of RAM. Randomly selected example images from the databases are shown in Figure 8. It is worth noting that the images in the databases are captured successively in indoor scenes, so the visual features extracted from images captured in the same indoor scene are highly correlated.

Figure 8.

Randomly selected example images of the KTH and HIT-TUM databases.

For scene-level image clustering, database images are grouped by their global features based on the CUSUM change-point detection. The results of the scene-level clustering guide the query image to retrieve the clusters that are similar to the query. Therefore, the performance of database image clustering affects the efficiency of the image retrieval system. For an image retrieval system, the efficiency of the retrieval algorithm is reflected in two aspects: the number of searched database images and the time consumption of the image retrieval. Generally, the fewer database images are searched, the less time retrieval takes.

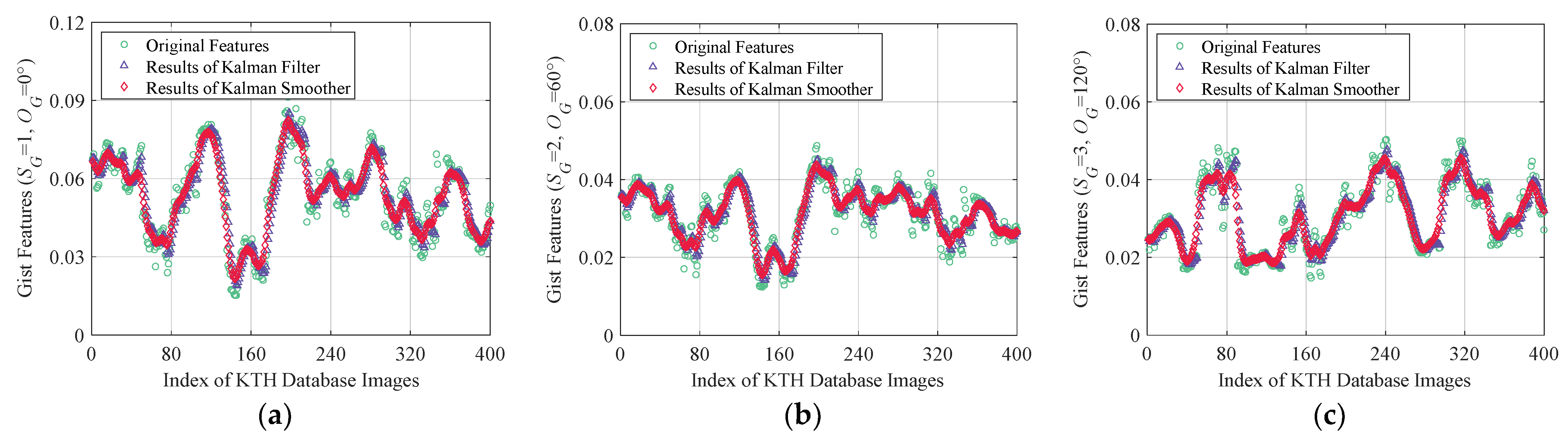

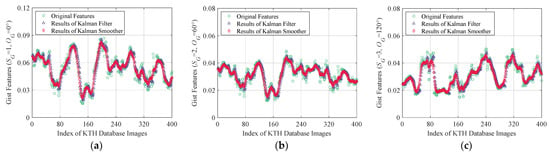

In the same indoor scene, as the database images are successively captured, the Gist features extracted from these images have high correlations. Taking advantage of the correlations, scene-level clustering of database images can be achieved. However, the noise generated in feature extraction affects the correlation of the features. Therefore, the original Gist features of database images need to be pre-processed (including Kalman filtering and Kalman smoothing) to restore the correlation of image features. For a database image, Gist features can be extracted according to different scales and directions. In this paper, Gist features are extracted at three scales and six directions, so 18 feature elements are extracted from each image. That is, the Gist feature vector of each image contains 18 feature elements. Figure 9 shows an example of the Gist feature pre-processing result of a database image, including the original Gist feature values, Kalman filtering results, and Kalman smoothing results. It can be found from Figure 9 that the correlation between original feature values is not evident due to the influence of noise. In contrast, by Kalman filtering, noise is effectively suppressed, and the correlations between features are restored. According to Kalman filtering results, more obvious correlations can be obtained by further Kalman smoothing of Gist features. The pre-processing of features recovers the correlations of global features of database images, which is beneficial to scene-level clustering.

Figure 9.

Examples of pre-processing results of Gist features of database images. (a) Pre-processing results of Gist features for KTH database images with and ; (b) Pre-processing results of Gist features for KTH database images with and ; (c) Pre-processing results of Gist features for KTH database images with and ; (d) Pre-processing results of Gist features for HIT-TUM database images with and ; (e) Pre-processing results of Gist features for HIT-TUM database images with and ; (f) Pre-processing results of Gist features for HIT-TUM database images with and .
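The filtering and smoothing steps can be sketched for a single Gist feature element as follows. The sketch assumes a scalar random-walk state model with process noise variance `q` and measurement noise variance `r`, followed by a Rauch–Tung–Striebel backward pass. The model and the parameter values are assumptions of this example and may differ from the pre-processing model used in the paper.

```python
import numpy as np

def kalman_filter_smooth(z, q: float, r: float):
    """Filter and smooth one feature-element sequence z (one value per image).

    Returns (filtered, smoothed) arrays of the same length as z.
    """
    z = np.asarray(z, dtype=float)
    n = len(z)
    x_pred, p_pred = np.zeros(n), np.zeros(n)   # one-step predictions
    x_filt, p_filt = np.zeros(n), np.zeros(n)   # filtered estimates
    x_filt[0], p_filt[0] = z[0], r              # initialize from the first measurement
    for t in range(1, n):
        x_pred[t] = x_filt[t - 1]               # random walk: state is carried over
        p_pred[t] = p_filt[t - 1] + q
        gain = p_pred[t] / (p_pred[t] + r)
        x_filt[t] = x_pred[t] + gain * (z[t] - x_pred[t])
        p_filt[t] = (1.0 - gain) * p_pred[t]
    x_smooth = x_filt.copy()
    for t in range(n - 2, -1, -1):              # backward (smoothing) pass
        c = p_filt[t] / p_pred[t + 1]
        x_smooth[t] = x_filt[t] + c * (x_smooth[t + 1] - x_pred[t + 1])
    return x_filt, x_smooth

# Hypothetical noisy sequence: a constant level of 0 with alternating noise.
z = [0.1 * (-1) ** t for t in range(20)]
filtered, smoothed = kalman_filter_smooth(z, q=1e-4, r=1e-2)
print(np.std(z), np.std(filtered), np.std(smoothed))
```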

Scene-level database image clustering is achieved by detecting the change points in Gist feature sequences. When image retrieval is executed on the results of scene-level clustering, the query image preferentially searches the database image clusters whose global features are more similar to its own. Therefore, if all database images in the same scene are grouped into one cluster, a query image captured in this scene can find its matched database images in this cluster. In contrast, if a database image captured in a certain scene is falsely grouped into another cluster, this database image cannot be retrieved when the query searches the right cluster. Based on the above analysis, the core requirement of the proposed algorithm is that database images from the same scene are grouped into one cluster as far as possible.

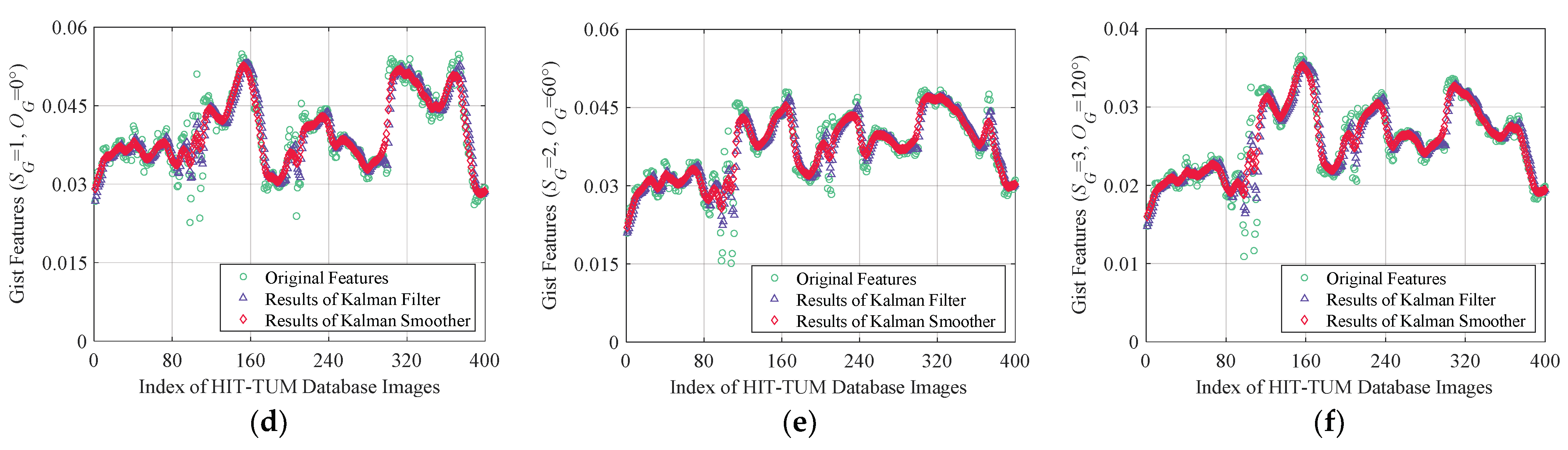

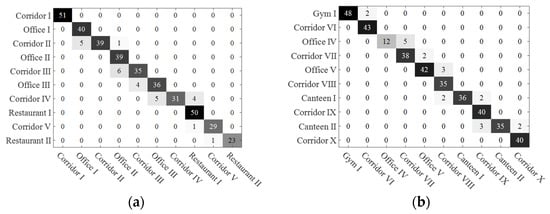

To analyze the performance of the proposed algorithm, scene-level image clustering is executed, and confusion matrices are employed to evaluate clustering accuracy. The confusion matrices of the database image-clustering results are shown in Figure 10. The confusion matrix used to evaluate clustering accuracy in this paper can also be regarded as a clustering error matrix. The row labels of the matrix are the correct cluster labels, and the column labels are the predicted cluster labels. For the matrix in Figure 10a, the values in the third, fourth, and fifth rows of the fourth column are 1, 39, and 6, respectively. This set of values shows that of the 46 (1 + 39 + 6) database images grouped into this cluster, 39 were truly captured in the Office II scene, one was captured in the Corridor II scene, and six were captured in the Corridor III scene. For a row of the confusion matrix, the sum of all values in that row is the actual number of images in the cluster. For a column of the confusion matrix, the sum of all values in that column is the predicted number of images in the cluster. The confusion matrix therefore reflects the performance of the proposed scene-level clustering algorithm. The confusion matrices show that incorrectly grouped images only occur between two adjacent image clusters, which is consistent with the clustering principle in this paper. Specifically, image clustering acts on the indoor database image sequence, and the change points are detected based on the global features of database images, so that the images between two change points are grouped into one cluster. Incorrect grouping is therefore caused by errors in change-point detection: if a change point is detected at the wrong position, some database images that should belong to a certain cluster are grouped into the preceding or the following cluster.

Figure 10.

Confusion matrices of database image clustering. (a) Confusion matrix of KTH database image clustering; (b) confusion matrix of HIT-TUM database image clustering.

In this paper, four criteria (i.e., recall rate, precision rate, accuracy rate, and F1 score) are used to evaluate the performance of the clustering algorithm. The recall rate of a cluster is the ratio of the number of correctly grouped images to the actual number of images in that cluster. The precision rate of a cluster is the ratio of the number of correctly grouped images to the number of images assigned to that cluster. The accuracy rate is the ratio of the number of correctly grouped images to the total number of images. The F1 score, which is used in statistics to measure the accuracy of a classification model, is the harmonic mean of the recall rate and the precision rate:

$$F_1 = \frac{2 \cdot \mathrm{precision} \cdot \mathrm{recall}}{\mathrm{precision} + \mathrm{recall}}$$
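As an illustration, the four criteria can be computed from a confusion matrix whose rows are the correct cluster labels and whose columns are the predicted cluster labels. The 3 × 3 matrix below is hypothetical. Only its middle column borrows the values 1, 39, and 6 quoted for Figure 10a.

```python
import numpy as np

def clustering_metrics(confusion):
    """Per-cluster recall, precision, and F1, plus the overall accuracy.

    confusion[i, j] = number of images from true cluster i assigned to cluster j.
    Assumes every row and every column contains at least one image.
    """
    confusion = np.asarray(confusion, dtype=float)
    correct = np.diag(confusion)
    recall = correct / confusion.sum(axis=1)      # row sums: actual cluster sizes
    precision = correct / confusion.sum(axis=0)   # column sums: predicted cluster sizes
    f1 = 2 * precision * recall / (precision + recall)
    accuracy = correct.sum() / confusion.sum()
    return recall, precision, f1, accuracy

# Hypothetical confusion matrix for three adjacent scenes of 40 images each.
matrix = [[39, 1, 0],
          [0, 39, 1],
          [0, 6, 34]]
recall, precision, f1, accuracy = clustering_metrics(matrix)
print(recall, precision, f1, accuracy)
```

For the middle cluster, the precision is 39/46 and the recall is 39/40, which shows how a single misplaced change point lowers the precision of one cluster and the recall of its neighbour.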

Global features of an image include color features (such as color histogram features and color moment features) and texture features (such as wavelet transform features and Gabor transform features). In simulation experiments, Gist features, color histogram features, color moments, wavelet transform features, and Gabor features are used to perform scene-level clustering on database images, and the experimental results are shown in Table 1. For the experimental results, , and denote the average recall rates, average precision rates, and average F1 scores, respectively. The results in Table 1 show that the color features (such as color histograms and color moments) perform poorly in scene-level clustering. The reason is that the color differences between indoor scenes are relatively small. Especially in an environment with white walls as the main background, it is not easy to distinguish scenes by color information. Compared with color features, texture features achieve better clustering performance, especially Gabor features and Gist features. Because multiple Gabor filters with different scales and directions are used in extracting Gist features, Gist features describe the textures of scenes more comprehensively, thereby achieving more accurate image-clustering results.

Table 1.

Performance comparison of scene-level clustering of global features.

To reveal the clustering performance of the proposed ICSCD algorithm, two typical change-point detection algorithms (i.e., the mean shift-based algorithm [36] and the Bayesian estimation-based algorithm [62]) are simulated for grouping database images at the scene level. The experimental results shown in Table 2 indicate that the proposed ICSCD algorithm significantly outperforms the Bayesian estimation-based algorithm in four metrics: the average recall rate, the average precision rate, the average F1 score, and the accuracy rate. The reason is that the Bayesian estimation-based algorithm utilizes local features in change-point detection, but local features are too sensitive to scene changes and tend to split database images belonging to the same scene into multiple image clusters or to group them into other clusters. This also shows that the local features of the images are more suitable for further dividing the scene-level clustering results, which is why local features are used for the second layer of clustering in this paper. Both the proposed ICSCD algorithm and the mean shift-based algorithm use global features for clustering; the difference is that the ICSCD algorithm detects change points with the change-point detection function, whereas the mean shift-based algorithm uses the mean shift function. Since the change-point detection function takes into account both the values at the detection position and the expected values of the parameter models (i.e., parameter model A and parameter model B in the hypothesis test) within the sliding window (as shown in Figure 4), a higher clustering accuracy can be obtained. Specifically, the average recall rate, the average precision rate, the average F1 score, and the accuracy rate of the proposed ICSCD algorithm are all greater than 0.92, which is significantly higher than those of the mean shift-based algorithm.

Table 2.

Performance comparison of scene-level image clustering algorithms.

5.2. Experimental Results of Hierarchical Image Retrieval and Visual Localization

In the proposed HCIR algorithm, the best-matched database image is determined by the maximum similarity method. Therefore, the validity of the method needs to be verified by experiments. In this part of the experiments, since the best-matched image is the database image that is most similar to the query image, the database image with the highest matching similarity to the query image is found by the global search, and the index of this database image is . In addition, another best-matched database image is determined by the proposed maximum similarity method, and the index of the database image is . The average error of the index positions of best-matched database images can be calculated by:

where is the index error of the best-matched database image in the i-th experiment, and is the total number of query images for experiments.

Based on the average error , the average distance error between the best-matched database images and can be further defined by , where is the fixed acquisition distance of database images. The average index error and the average distance error reflect the performance of retrieving the best-matched database images with the maximum similarity method. For the experimental results, the smaller values of and indicate that the matched database images are closer to the query image. The results of matched image retrieval are shown in Table 3 under the condition that is set to 10 cm. For the experimental results, the ratio is the percentage of the number of experimental results that satisfy . In addition, the average value of the matching similarity and the average value of matched feature points are also calculated in experiments.
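The two error measures can be computed as in the sketch below. It assumes that the index error of one experiment is the absolute difference between the index found by the global search and the index found by the maximum similarity method, and that the average distance error is the average index error multiplied by the acquisition distance. The function name and the sample indices are hypothetical.

```python
def index_and_distance_error(global_best: list[int], max_similarity_best: list[int],
                             spacing_cm: float) -> tuple[float, float]:
    """Return (average index error, average distance error in cm)."""
    errors = [abs(g - m) for g, m in zip(global_best, max_similarity_best)]
    mean_index_error = sum(errors) / len(errors)
    return mean_index_error, mean_index_error * spacing_cm

# Hypothetical results for four query images, with images acquired every 10 cm.
print(index_and_distance_error([10, 20, 30, 40], [10, 21, 30, 40], spacing_cm=10.0))
```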

Table 3.

Experimental results of matched database image retrieval.

The experimental results shown in Table 3 indicate that for image retrieval experiments with the maximum similarity method conducted separately on the KTH database and the HIT-TUM database, the probability of successfully retrieving a matched database image (i.e., the situation of ) exceeds 94%. In other cases, although the similarity between the matched image and the query image does not reach the maximum value, the index errors are less than 2, which indicates that the matched image is close to the query image and that there are enough matched features between the two. Therefore, the matched database images under the situation of , , and can be used for visual localization. For the two databases, the average error of the index positions is less than 0.1, and the average distance error is less than 1 cm, showing the effectiveness of the maximum similarity method in determining matched database images. Moreover, the experimental results also show that for both databases, the average matching similarity between the query image and the best-matched database image is greater than 0.5, and there are more than 120 pairs of matched feature points between the query image and the best-matched database image, which provides a fundamental guarantee for visual localization.

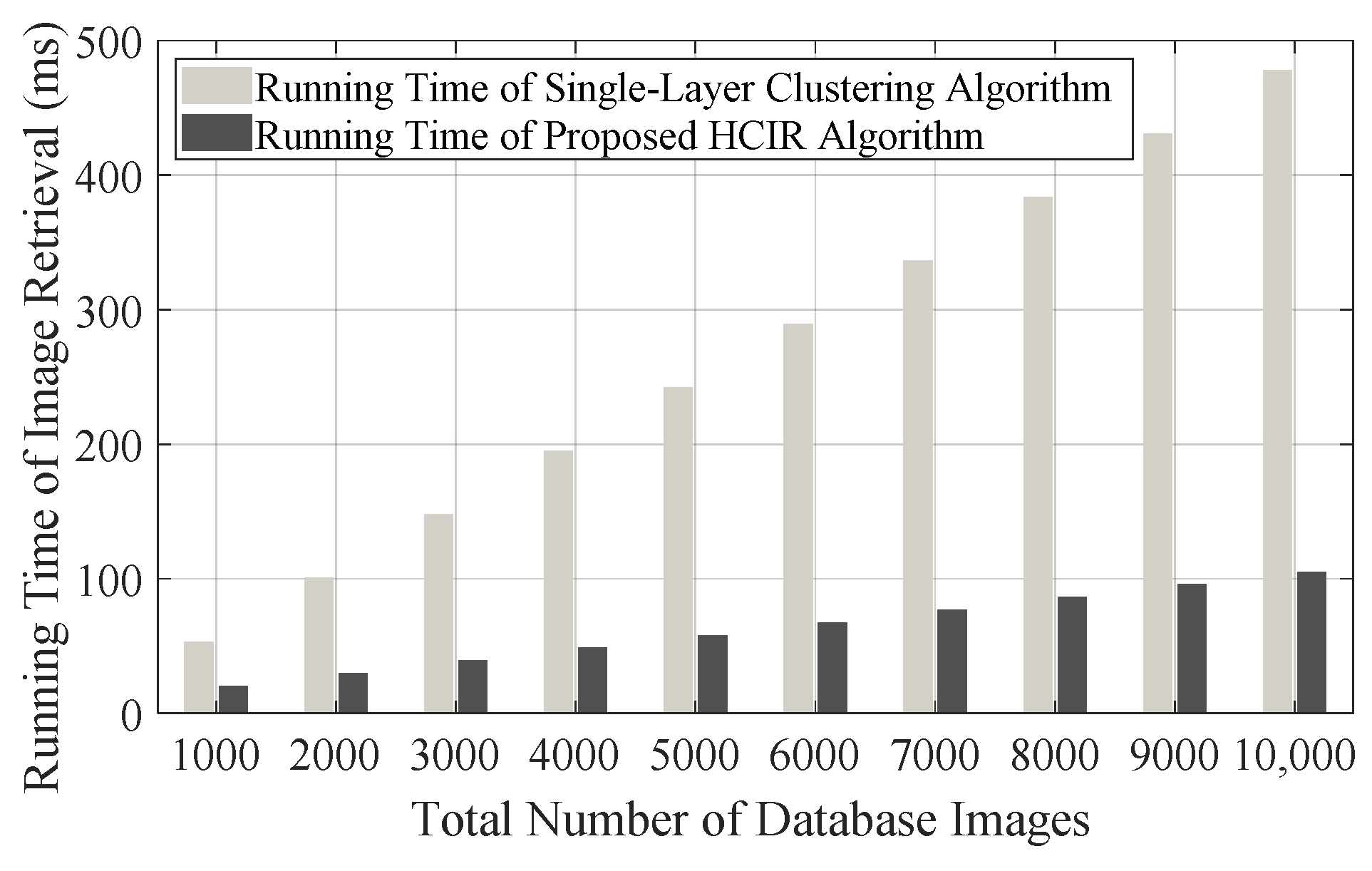

In the proposed HCIR algorithm, the scene-level clustering results are sorted based on the global feature similarity, and then the database images in the sub-scene clusters are sorted based on the local feature similarity. After the two-stage sorting, the query image is matched with database images according to the sorting result. It follows that when the scene-level clustering results are retrieved based on the global feature similarity, the best case is to obtain the matched image in the first cluster, and the worst case is to obtain the matched image only after all the clusters have been retrieved. Therefore, for the scene-level image retrieval, the success rate of image retrieval within the top-K clusters is proposed in this paper to evaluate the performance of the clustering algorithm in image retrieval. Specifically, after scene-level clustering of database images, more than one image cluster can be obtained. If the matched database image can be retrieved after searching K image clusters, image retrieval is considered to be achieved within K database image clusters. For a total of query images, if there are query images, and their matched database images are in the K-th cluster, the success rate of the top-K clusters is defined as . The success rate effectively reflects the impact of the scene-level clustering algorithm on the performance of image retrieval. The scene-level clustering algorithms of database images can be divided into two categories: one detects change points of visual features (such as the proposed HCIR algorithm in this paper, the mean shift-based algorithm, and the Bayesian estimation-based algorithm), and the other clusters a fixed number of database images (such as the C-GIST algorithm [37]). In the C-GIST algorithm, five consecutive database images are grouped into one cluster, and the cluster center is the feature vector of the image located at the center position of each cluster. In this paper, the two categories of image-clustering algorithms are simulated, and the success rate of the top-K clusters is calculated for each. The results are presented in Table 4.
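The top-K success rate can be illustrated with a short sketch. It assumes that, for each query image, the 1-based rank of the scene-level cluster containing its matched database image is already known after the clusters are sorted by global feature similarity. The function name `top_k_success_rate` and the sample ranks are hypothetical and are not taken from the experiments.

```python
from collections import Counter


def top_k_success_rate(match_ranks, k):
    """Fraction of queries whose matched image lies within the first k ranked clusters.

    match_ranks: for each query image, the 1-based rank of the cluster that
    holds its matched database image (hypothetical input format).
    """
    if not match_ranks:
        raise ValueError("match_ranks must not be empty")
    counts = Counter(match_ranks)  # number of queries matched at each cluster rank
    hits = sum(counts[rank] for rank in range(1, k + 1))
    return hits / len(match_ranks)


# Illustrative ranks only, not experimental data.
ranks = [1, 1, 2, 1, 3, 5, 1, 2]
for k in (1, 2, 5):
    print(f"top-{k} success rate: {top_k_success_rate(ranks, k):.2%}")
```

By construction the rate is non-decreasing in K, which is why the first-cluster rate and the top-five rate are reported together.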

Table 4.

Success rates of the top-K clusters for query image.

The results shown in Table 4 indicate that the proposed HCIR algorithm improves the success rate of the top-K clusters for a query image. For the two databases, the success rates of the top-five clusters achieved by the HCIR algorithm, the mean shift-based algorithm, and the Bayesian estimation-based algorithm are more than 90%, and the HCIR algorithm shows the clearest performance advantage. In the KTH database in particular, the success rate of the first cluster is 66.75%, and the success rate of the top-five clusters reaches 99.75%. For the HIT-TUM database and the KTH database, the best-matched database image can be retrieved within the top-five clusters by the HCIR algorithm. In addition, for the sub-scene-level image clustering, success rates of the top-K clusters are also calculated and recorded. Experimental results show that for the HCIR algorithm, the success rate of the first cluster is more than 88%, which indicates that in most cases, the best-matched database image can be found in the first sub-cluster.

To verify the image retrieval efficiency of the proposed HCIR algorithm, image retrieval experiments are performed with the HCIR algorithm and the comparison algorithms. In the experiments, the mean shift-based algorithm, the Bayesian estimation-based algorithm, and the C-GIST algorithm are single-layer clustering algorithms. In addition, two other multi-layer clustering algorithms are considered: the mean shift-KLT algorithm and the Bayesian estimation-KLT algorithm. For these two multi-layer clustering algorithms, database images are first grouped by the mean shift-based algorithm or the Bayesian estimation-based algorithm, and then the images are further grouped by the KLT algorithm. According to the average number of retrieved images shown in Table 5, multi-layer clustering algorithms have higher retrieval efficiency, and the number of similarity comparisons (i.e., the processes of feature matching) can be limited to 10% of the database size. The reason is that for the single-layer algorithms, database images are only grouped into scene-level clusters, and thus the query image needs to be matched with the database images in the scene-level cluster one by one. In contrast, in multi-layer algorithms, database images are further grouped within each scene-level cluster. The image clusters are then ranked according to visual similarity, and the query image is preferentially matched with the database images in the most similar cluster. Therefore, multi-layer algorithms retrieve fewer database images on average.
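The effect of ranking clusters before matching can be sketched on a toy database. This is a minimal illustration under stated assumptions, not the implementation evaluated in Table 5: cosine similarity on small vectors stands in for both the global and the local feature similarity, the dictionary layout (`center`, `subs`, `images`), the helper names, and the threshold are hypothetical, and only image-level comparisons are counted because they correspond to the costly feature-matching step.

```python
import numpy as np


def cosine(a, b):
    """Cosine similarity between two feature vectors."""
    return float(np.dot(a, b) / (np.linalg.norm(a) * np.linalg.norm(b)))


def ranked(items, query, key):
    """Sort items by descending similarity between the query and key(item)."""
    return sorted(items, key=lambda it: cosine(query, key(it)), reverse=True)


def hierarchical_retrieve(scenes, query, threshold):
    """Return (image_id, image_comparisons); image_id is None if nothing matches."""
    comparisons = 0
    for scene in ranked(scenes, query, lambda s: s["center"]):
        for sub in ranked(scene["subs"], query, lambda c: c["center"]):
            for image_id, feat in sub["images"]:
                comparisons += 1  # one image-level similarity comparison
                if cosine(query, feat) >= threshold:
                    return image_id, comparisons
    return None, comparisons


def make_sub(name, base, axis):
    """Toy sub-scene cluster: three images that differ along one feature axis."""
    images = []
    for i, w in enumerate((0.4, 0.5, 0.6)):
        feat = np.array(base, dtype=float)
        feat[axis] = w
        images.append((f"{name}_{i}", feat))
    center = np.mean([f for _, f in images], axis=0)
    return {"center": center, "images": images}


def make_scene(name, base):
    """Toy scene-level cluster made of two sub-scene clusters."""
    subs = [make_sub(f"{name}1", base, 2), make_sub(f"{name}2", base, 3)]
    center = np.mean([s["center"] for s in subs], axis=0)
    return {"center": center, "subs": subs}


scenes = [make_scene("A", [1, 0, 0, 0]), make_scene("B", [0, 1, 0, 0])]
query = np.array([1.0, 0.0, 0.0, 0.6])  # identical to the last image of sub-cluster A2
result = hierarchical_retrieve(scenes, query, threshold=0.999)
print(result)
```

Because the scene and sub-scene centers are compared first, the query is matched only against the images of the most similar sub-cluster before the match is found, rather than against the database in stored order.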

Table 5.

Average numbers of retrieved database images by different clustering algorithms.

It can be observed from the experimental results shown in Table 5 that fewer database images are retrieved in the HCIR algorithm compared with the other two multi-layer algorithms. The reason is that the ICSCD algorithm has a better performance at scene-level clustering (as shown in Table 2), so that the cluster center can better express the global features of the images in the cluster.

Table 6 shows the average running time of the image-retrieval system using different clustering algorithms. Comparing the number of retrieved images with the average running time of image retrieval shows that the more database images are retrieved, the longer image retrieval takes. Experimental results shown in Table 5 and Table 6 indicate that more database images are retrieved in single-layer clustering algorithms, leading to larger time overheads than in multi-layer clustering algorithms. The HCIR algorithm has advantages in terms of both the number of retrieved database images and the running time of image retrieval. The reason is that multi-layer clustering of database images is employed in the HCIR algorithm and, more importantly, that the ICSCD algorithm employed in the proposed retrieval algorithm achieves a better performance in scene-level database image clustering.

Table 6.

Average running time of image retrieval by different clustering algorithms (unit: ms).

Table 7 shows the average running time of different stages in image retrieval. In the practical implementation of image retrieval, hundreds of local features need to be matched between the query image and the database image, so this stage accounts for most of the time consumption.

Table 7.

Average running time of different stages in image retrieval (unit: ms).

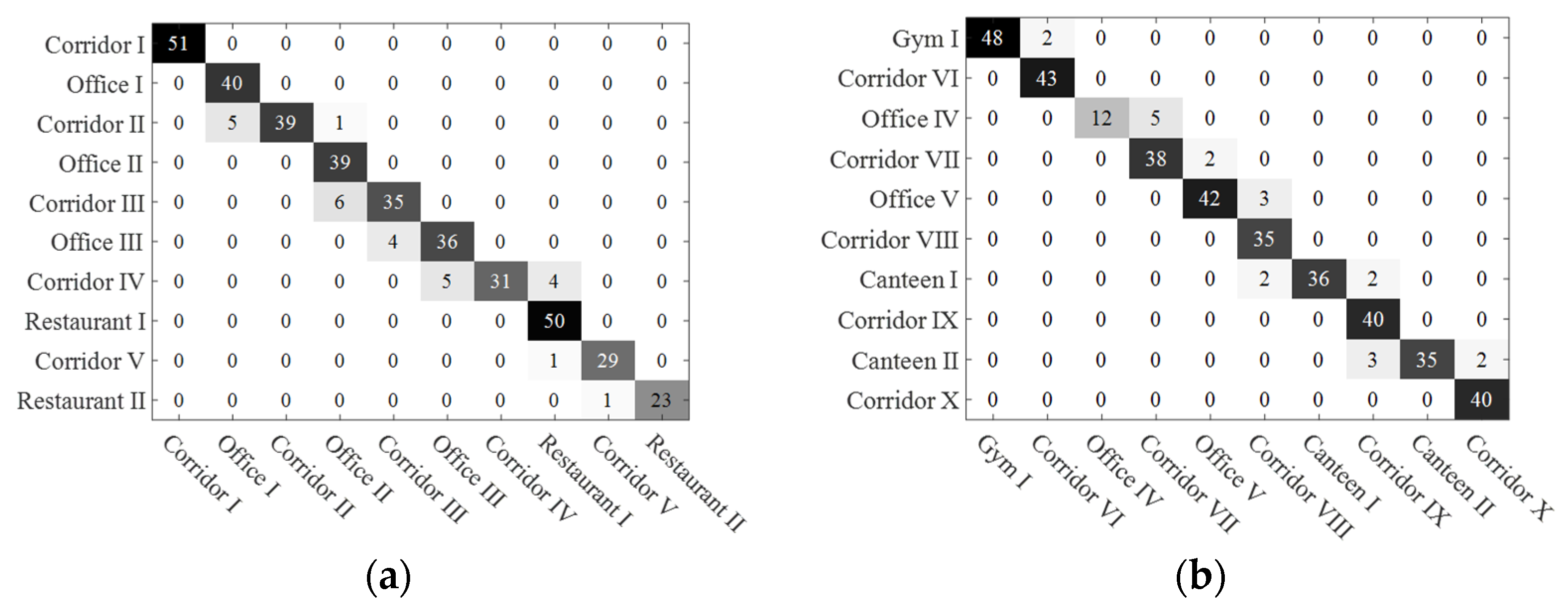

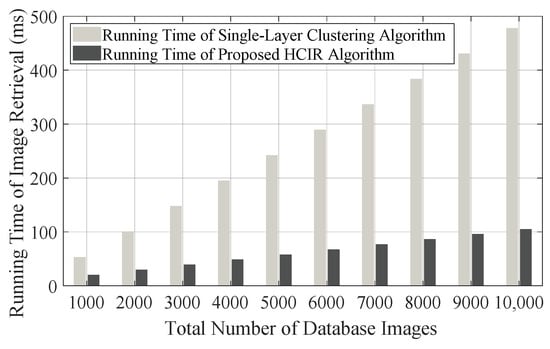

To reveal the performance difference between the single-layer clustering algorithm and the proposed HCIR algorithm, ten indoor scenes are used for simulation, and the number of database images in each scene is separately set as 100, 200, 400, 800, and 1000, so that the running time of image retrieval for different database sizes can be simulated, as shown in Figure 11. As a pre-condition of the simulation, the backtracking mechanism is never triggered. According to the simulation results, the advantage of the proposed algorithm is that the running time does not increase linearly with the database size. Even when the database size is increased to 10,000 images, the running time of image retrieval with the proposed algorithm is less than 110 ms. In this case, the running time of image retrieval with the single-layer clustering-based algorithm almost reaches 500 ms, which means that only two retrievals can be performed per second. In contrast, with the proposed HCIR algorithm, image retrieval can be executed nine times per second when there are 10,000 images in the database. The proposed algorithm spends less time on image retrieval because database images are reasonably grouped in the off-line stage. In other words, time for image clustering is spent in the off-line stage to reduce the time consumption of image retrieval in the on-line stage.
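The retrieval rates quoted above follow from simple arithmetic on the per-query running time. The sketch below treats the two reported bounds (110 ms and 500 ms) as exact running times, which is a simplifying assumption, and the function name `retrievals_per_second` is hypothetical.

```python
def retrievals_per_second(running_time_ms):
    """Number of complete sequential retrievals that fit into one second."""
    if running_time_ms <= 0:
        raise ValueError("running_time_ms must be positive")
    return int(1000 // running_time_ms)


# Running times taken from the bounds reported for a 10,000-image database.
hcir_rate = retrievals_per_second(110)          # proposed HCIR algorithm
single_layer_rate = retrievals_per_second(500)  # single-layer clustering
print(hcir_rate, single_layer_rate)
```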

Figure 11.

Average running time of the single-layer clustering algorithm and proposed algorithm.

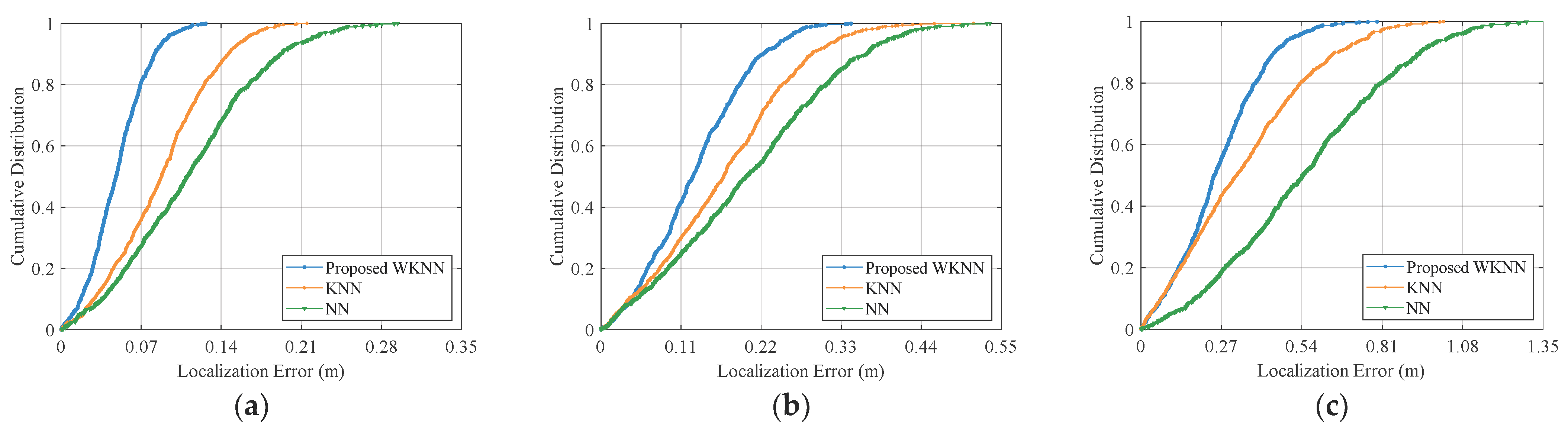

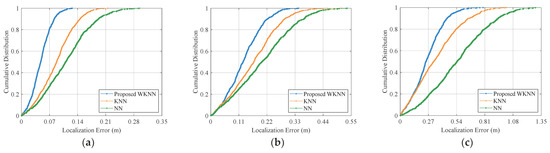

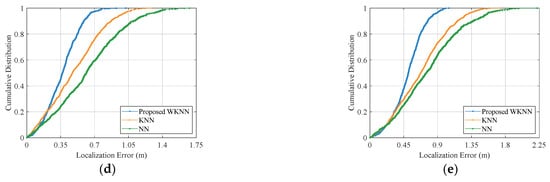

To demonstrate the performance of the proposed WKNN algorithm, two typical image retrieval-based localization methods (i.e., the NN method [49,54,55] and the KNN method [56,57]) are selected and implemented. Each image in the KTH database and the HIT-TUM database is employed as a query image for visual localization. For the proposed WKNN method and the typical KNN method, five nearest neighbors are selected to estimate the query position [57]. In order to reveal the impact of image acquisition intervals (din) on localization accuracy, database images with different acquisition intervals are set for experiments. Localization errors of query images are calculated, and the cumulative distributions of the errors are shown in Figure 12.

Figure 12.

Experimental results of visual localization. (a) Cumulative distribution of localization errors with din = 10 cm; (b) cumulative distribution of localization errors with din = 20 cm; (c) cumulative distribution of localization errors with din = 30 cm; (d) cumulative distribution of localization errors with din = 40 cm; (e) cumulative distribution of localization errors with din = 50 cm.

To quantitatively analyze the performance improvement of the WKNN method, an accuracy improvement rate rim is introduced and defined as:

where and are the average errors by the proposed WKNN method and the comparative method, respectively.
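As an illustration, an improvement rate of this kind can be computed as the relative reduction in average error. The exact form used here, (comparative error minus WKNN error) divided by the comparative error and expressed as a percentage, is an assumption, as are the function name `improvement_rate` and the sample error values, which are not taken from Table 8.

```python
def improvement_rate(err_wknn, err_other):
    """Relative reduction of the average error in percent (assumed definition).

    err_wknn:  average localization error of the WKNN method, in metres
    err_other: average localization error of the comparative method, in metres
    """
    if err_other <= 0:
        raise ValueError("err_other must be positive")
    return (err_other - err_wknn) / err_other * 100.0


# Hypothetical average errors, for illustration only.
rate = improvement_rate(err_wknn=0.06, err_other=0.08)
print(f"accuracy improvement rate: {rate:.1f}%")
```

Under this definition, a positive rate means the WKNN method has the smaller average error, and a rate of zero means the two methods perform equally.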

Compared with the NN and KNN methods, the proposed WKNN method achieves a better performance on localization accuracy, as shown in Table 8. In all experimental cases, the improvement of average localization accuracy is at least 22% compared with the KNN method and at least 34% compared with the NN method. The localization results also show that the more densely the database images are captured, the more obvious the advantage of the proposed method in localization accuracy becomes. The reason is that when the intervals between database images are large, the query image and the database images share few common visual features, which weakens the contributions of the weights in the WKNN method.

Table 8.

Localization performance of various localization methods.

As illustrated in Table 8, when the database image acquisition intervals are set to be 10 cm, 20 cm, 30 cm, 40 cm, and 50 cm, the average localization errors of the WKNN method are 0.0490 m, 0.1299 m, 0.2604 m, 0.3673 m, and 0.5048 m, respectively. The results indicate that the localization error increases, and thus the localization accuracy decreases, as the database image acquisition interval grows. Even if the acquisition interval is increased to 50 cm, the average localization error of the proposed method remains at the sub-meter level, which satisfies the requirements of most indoor location-based services. However, acquisition intervals that are too small lead to a large off-line image database and, in turn, to a high time overhead of image retrieval. Therefore, when designing a visual indoor localization system, a proper database image acquisition interval should be selected by striking a balance between localization accuracy and efficiency.

6. Discussion

In the visual localization system, an off-line database generally contains a large number of images for position estimation. For example, over 40,000 database images were captured over a distance of 4.5 km in the TUMindoor localization system, which means that database images were acquired at approximately 10 cm intervals [26]. With the traditional image retrieval strategy, the query image is exhaustively compared with each database image, which is not scalable for a large-scale database. Recently, clustering-based hierarchical image retrieval has been proposed and applied in large-scale image retrieval [63,64,65]. The main advantage of hierarchical image retrieval is creating an indexing strategy by grouping the images based on the visual cues before retrieval, so that only the relevant clusters are examined in the retrieval process. With this strategy, clustering-based hierarchical image retrieval significantly speeds up the search process at the expense of the time consumption of image clustering beforehand.

However, although the existing works on hierarchical image retrieval achieve high searching efficiency, they are unsuitable for visual localization. The reason is that geographic factors have not been taken into consideration in image clustering, and database images acquired in the same scene are not necessarily grouped into one cluster. In a visual localization system, the query image should be compared with the database images of the relevant scenes in order of visual similarity. Specifically, the query image should be compared with the most similar database images first. In this way, the query image need not be compared with all database images before the retrieval result is obtained.

Considering the particular requirements of image retrieval in visual localization, a hierarchical clustering-based image retrieval (i.e., HCIR) algorithm is proposed in this paper to organize database images and achieve image retrieval. The main contribution of the HCIR method is that database images are grouped into ordered clusters by visual cues in accordance with their geographical distribution characteristics. Since the database images for visual localization are successively captured by the mapping equipment in indoor scenes, visual features in the same scene are highly correlated. However, once the mapping equipment moves to another scene, the correlations decrease. Taking advantage of this characteristic of database images, an ICSCD algorithm is presented to group database images into clusters at the scene level. Moreover, an image clustering algorithm based on KLT feature tracking is proposed to group database images at the sub-scene level. With the ICSCD algorithm and the KLT feature tracking-based algorithm, the visual features of different scenes and sub-scenes can be described by the cluster centers. In the process of retrieval, the query image is first compared with the cluster centers, the clusters that have the largest similarity with the query are then selected, and the images in these clusters are compared with the query. In this way, the database images with high similarities to the query are preferentially retrieved, thus reducing the time consumption of image retrieval.
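The idea of splitting a sequentially captured database where the visual correlation drops can be sketched with a plain one-sided cumulative sum (CUSUM) detector. This is the textbook form of CUSUM, not the improved ICSCD algorithm proposed in this paper, and the function name `cusum_segments`, the parameters `target`, `drift`, and `threshold`, and the similarity values are all hypothetical.

```python
def cusum_segments(similarities, target=0.9, drift=0.05, threshold=0.3):
    """Group consecutive images into clusters with a one-sided CUSUM test.

    similarities[i] is the similarity between image i and image i + 1.
    A new cluster starts at image i + 1 when the accumulated shortfall of
    the similarity below (target - drift) exceeds the threshold.
    """
    clusters = [[0]]  # the first image always opens the first cluster
    g = 0.0           # cumulative sum statistic
    for i, s in enumerate(similarities):
        g = max(0.0, g + (target - s - drift))
        if g > threshold:
            clusters.append([i + 1])    # change point: open a new cluster
            g = 0.0                     # restart the statistic for the new scene
        else:
            clusters[-1].append(i + 1)  # same scene: extend the current cluster
    return clusters


# Illustrative similarities for nine consecutive images with two scene changes.
sims = [0.92, 0.91, 0.93, 0.40, 0.90, 0.92, 0.35, 0.91]
clusters = cusum_segments(sims)
print(clusters)
```

Accumulating the shortfall instead of thresholding a single similarity value makes the detector less sensitive to an isolated noisy frame, at the cost of two parameters that would need tuning for real image sequences.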