Tohjm-Trained Multiscale Spatial Temporal Graph Convolutional Neural Network for Semi-Supervised Skeletal Action Recognition

Abstract

1. Introduction

- (1)

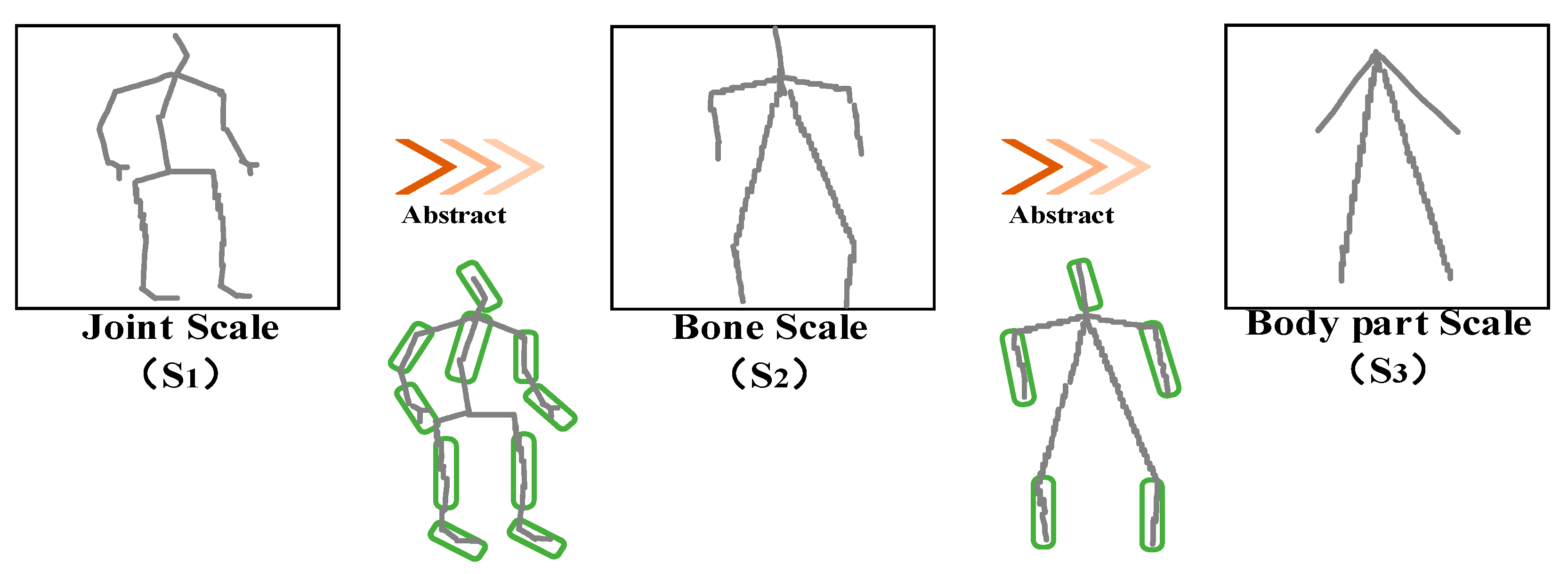

- The employment of only a single joint scale: Most methods consider joint information regardless of skeletal information or separately take joint and skeletal information into account. For example, Shi et al. [20] proposed a two-stream adaptive graph convolutional network where the topological graph of skeleton joints can be learned adaptively by the BP algorithm to increase the flexibility of the graph construction model. The two-stream network utilizes not only the 1st-order information of the skeleton data (joint information) but also the 2nd-order information of the skeleton (length and orientation of the bones), which improves the accuracy by about 7% on the NTU RGB+D dataset compared to the method of Yan et al. [13]. Although the two-stream network involves the skeleton scale in extracting action information, there is no information exchange in utilizing the joint and bone scales. Tu et al. [21] made improvements on this basis by fusing joint information and bone formation in a dual-stream network during the extraction of action features, which achieved some improved performance. However, the network fails to consider that the skeleton scale is instructive to the joint scale during human movement, resulting in the poor ability of the network to extract motion information. Considering the problem of fusing joint and skeletal scales, Zhang et al. [22] firstly proposed that the edges of the skeleton graph are also used for convolutional processing to extract information, and designed two hybrid networks. Both networks use skeletal and joint information, which improves the accuracy of action recognition but fails to consider the extraction of action information from the body-part scale. Due to the mutual actuation and limitation between the arm joint and elbow in action, lifting the elbow will change the position of the arm joint. This complex motor association between joints and bones constitutes a variety of human behaviors. 
Considering the potential functional correlation between joints and bones in action, we adopt a new representation of the human body: joint scale, bone scale, and body-part scale. The mutual directivity among joints, bones, and body parts in space-time is utilized to serve the presentation and recognition of movements better.

- (2) The equal treatment of all joint contributions in training: In human action recognition, factors such as imbalanced sample categories make some joints harder to learn than others during network training. We therefore propose a time-level online hard joint mining method, which builds on the online hard keypoint mining (OHKM) method [23,24] for mining difficult samples. The method associates each human joint with the loss value computed at that joint: the greater the loss, the harder the joint is for the network to learn. In this way, hard joints can be mined effectively, so that the network is trained specifically on the difficult joints and its recognition accuracy on them improves.

- (3)

- (1)

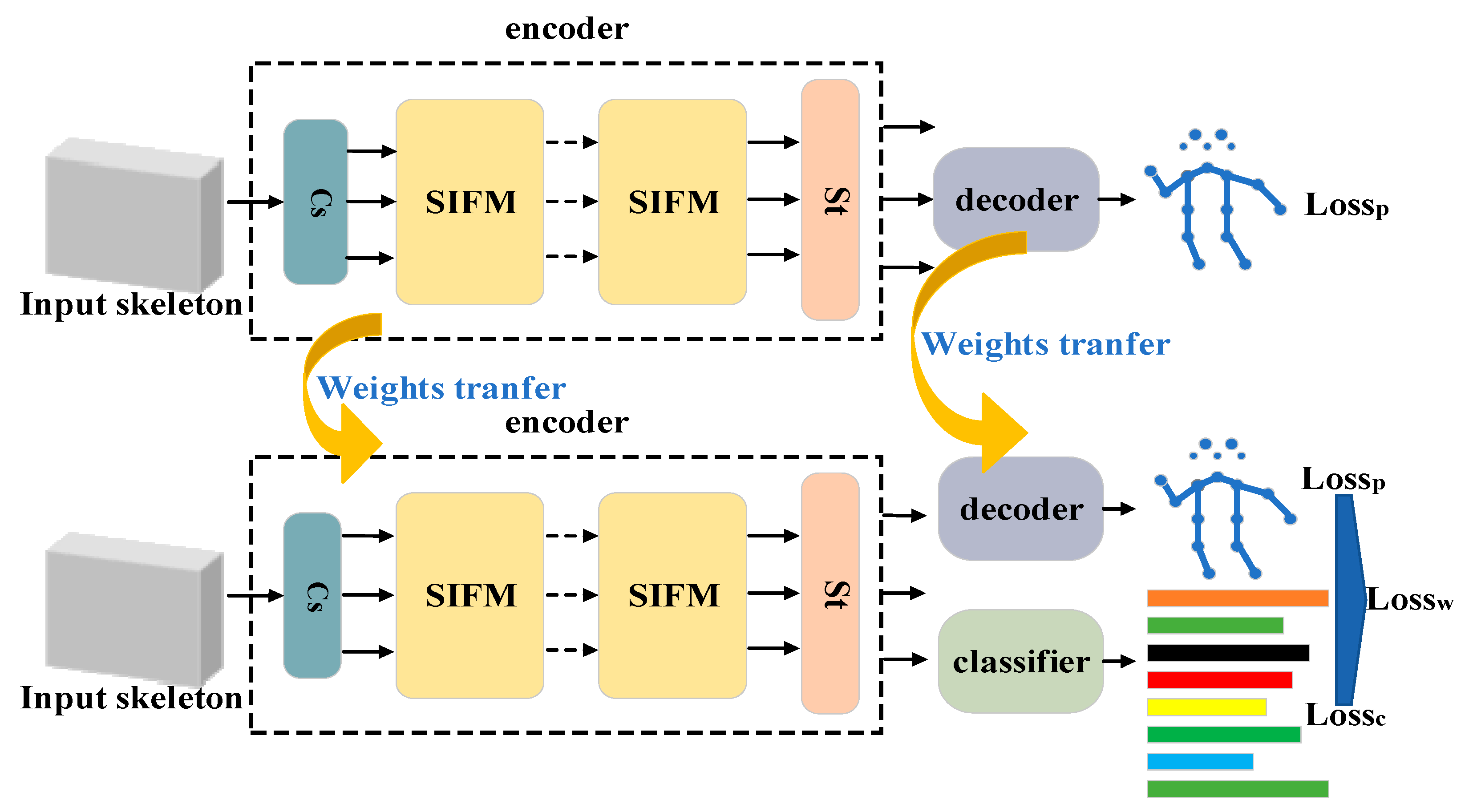

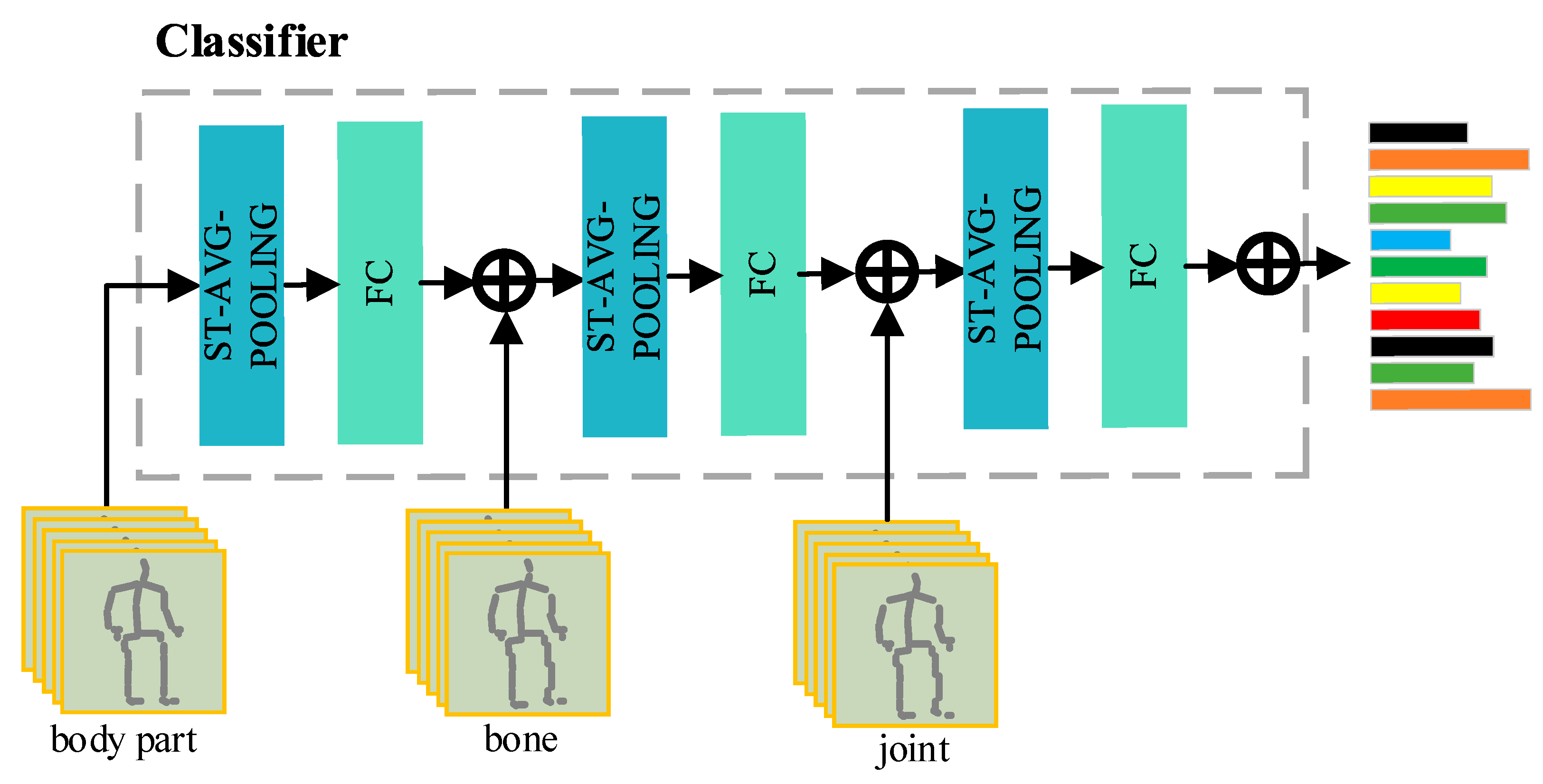

- We propose a Tohjm-trained multiscale spatial temporal graph convolutional neural network for semi-supervised action recognition (Tohjm-MSstgcn) to extract action features at different scales and achieve effective action classification. The encoder elaborately fuses joint coordinate subtraction to obtain multiscale graphs. It automatically converts low-scale graphs to large-scale with scale transformation (St). A scale-information interaction module constructed with a three-layer MLP is used to obtain cross-scale information.

- (2) We propose a time-level online hard joint mining (Tohjm) training strategy: during training, the network dynamically identifies the joints with the top-K losses and sets the loss of all other joints to 0. This strategy makes the model focus on hard samples, reduces prediction error, and improves training on unlabeled samples. The resulting loss function is also used to train the overall network.
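As a rough illustration of the joint, bone, and body-part scales mentioned above, the following minimal Python sketch derives bone vectors by joint coordinate subtraction and body-part features by averaging member joints. The 5-joint toy skeleton, the `parents` array, the `parts` grouping, and the mean pooling are all assumptions made for illustration; the scale transformation (St) used in the network may differ.

```python
from typing import List, Sequence

Point = Sequence[float]


def bone_vectors(joints: List[Point], parents: List[int]) -> List[List[float]]:
    """Bone j = joint j minus its parent joint; the root (parent == -1) gets a zero vector."""
    bones = []
    for j, p in enumerate(parents):
        if p < 0:
            bones.append([0.0] * len(joints[j]))
        else:
            bones.append([a - b for a, b in zip(joints[j], joints[p])])
    return bones


def part_centers(joints: List[Point], parts: List[List[int]]) -> List[List[float]]:
    """Body-part feature = mean of the member joint coordinates (assumed pooling)."""
    centers = []
    for members in parts:
        dim = len(joints[members[0]])
        centers.append(
            [sum(joints[m][d] for m in members) / len(members) for d in range(dim)]
        )
    return centers


# Hypothetical toy skeleton: 0 = torso (root), 1 = shoulder, 2 = elbow, 3 = hip, 4 = knee
joints = [
    [0.0, 0.0, 0.0],
    [0.0, 1.0, 0.0],
    [1.0, 1.0, 0.0],
    [0.0, -1.0, 0.0],
    [0.0, -2.0, 0.0],
]
parents = [-1, 0, 1, 0, 3]   # parent index of each joint (assumed topology)
parts = [[1, 2], [3, 4]]     # hypothetical "arm" and "leg" groups

bones = bone_vectors(joints, parents)    # bone scale
centers = part_centers(joints, parts)    # body-part scale
```

Each bone vector carries both the length and the orientation of the bone, which is why a single subtraction is enough to move from the joint scale to the bone scale in this sketch.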

2. Related Works

2.1. ST-GCN

2.2. OHKM

2.3. GRU Model

3. Methodology

3.1. Problem Definition

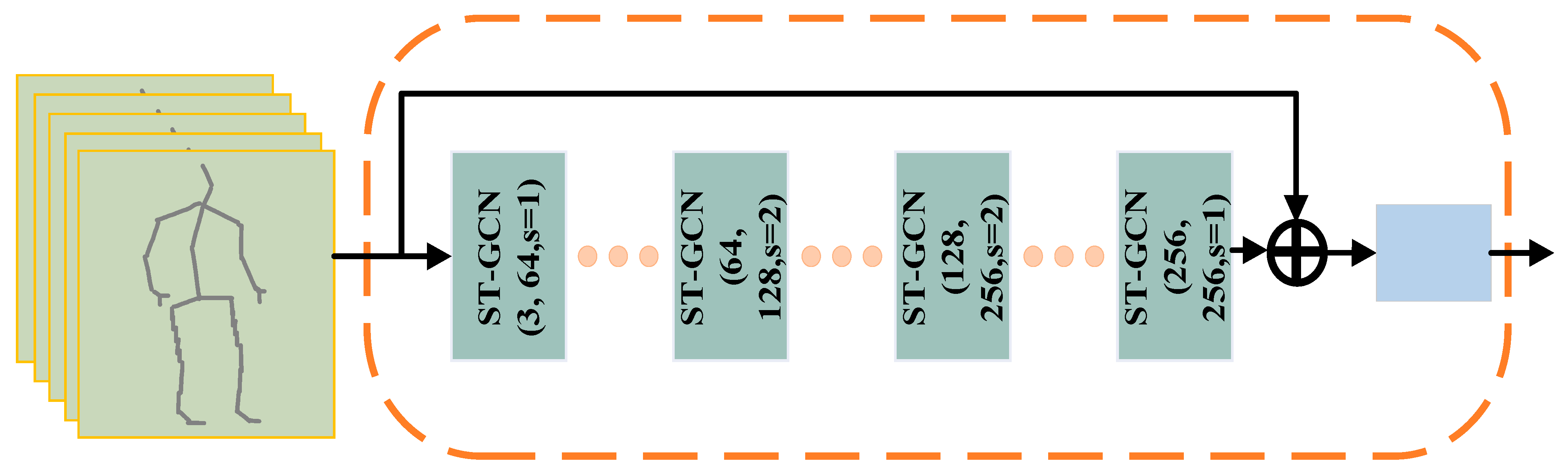

3.2. Tohjm-MSstgcn

| Algorithm 1: Time-level online hard joint mining |
|---|
| Input: M: number of frames in training time t; N: number of human joints in a single frame; the i-th frame (i-th image) among the M frames (M images); the j-th joint in the i-th frame; the position of joint j in frame m obtained from the training of the encoder; the true position of joint j in frame m; the loss of the j-th joint in the i-th frame. |
| Output: the loss value calculated by the time-level online hard joint mining algorithm. |
| Specific steps: |
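One possible reading of the time-level online hard joint mining loss in Algorithm 1 can be sketched in plain Python. The sketch assumes a squared Euclidean error per joint, selects the top-K joints within each frame, and averages over the M frames; these choices, and the function names `joint_losses` and `tohjm_loss`, are illustrative assumptions rather than the authors' exact formulation.

```python
from typing import List, Sequence

Frame = Sequence[Sequence[float]]  # N joints, each a coordinate vector


def joint_losses(pred: Sequence[Frame], target: Sequence[Frame]) -> List[List[float]]:
    """Squared Euclidean error per joint: result[i][j] for frame i, joint j."""
    return [
        [sum((a - b) ** 2 for a, b in zip(p, t)) for p, t in zip(pf, tf)]
        for pf, tf in zip(pred, target)
    ]


def tohjm_loss(losses: List[List[float]], k: int) -> float:
    """Keep the k largest joint losses in each frame, drop the rest, average over frames."""
    if not losses:
        raise ValueError("losses must contain at least one frame")
    total = 0.0
    for frame in losses:
        if not 1 <= k <= len(frame):
            raise ValueError("k must be between 1 and the number of joints")
        # Joints outside the top-k contribute zero loss (assumed per-frame selection).
        hardest = sorted(frame, reverse=True)[:k]
        total += sum(hardest) / k
    return total / len(losses)


# Toy example with M = 2 frames and N = 3 joints (made-up loss values)
losses = [[0.1, 0.9, 0.5], [0.4, 0.2, 0.8]]
hard_loss = tohjm_loss(losses, k=2)   # only the 2 hardest joints per frame count
full_loss = tohjm_loss(losses, k=3)   # k = N reduces to the plain mean loss
```

With K smaller than N, the easy joints are excluded, so the averaged loss is dominated by the hard joints; with K equal to N the sketch falls back to the ordinary mean over all joints.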

4. Results and Discussion

4.1. Experiment Datasets and Evaluation Metric

4.2. Implementation Details

4.3. Comparison with Other Models

4.3.1. Comparison Results of Semi-Supervised Methods on NTU-RGB + D Dataset

4.3.2. Comparison Results of Fully Supervised Methods on NTU-RGB + D Dataset and Kinetics-Skeleton Dataset

4.4. Ablation Study

4.4.1. The Effect of SIFM

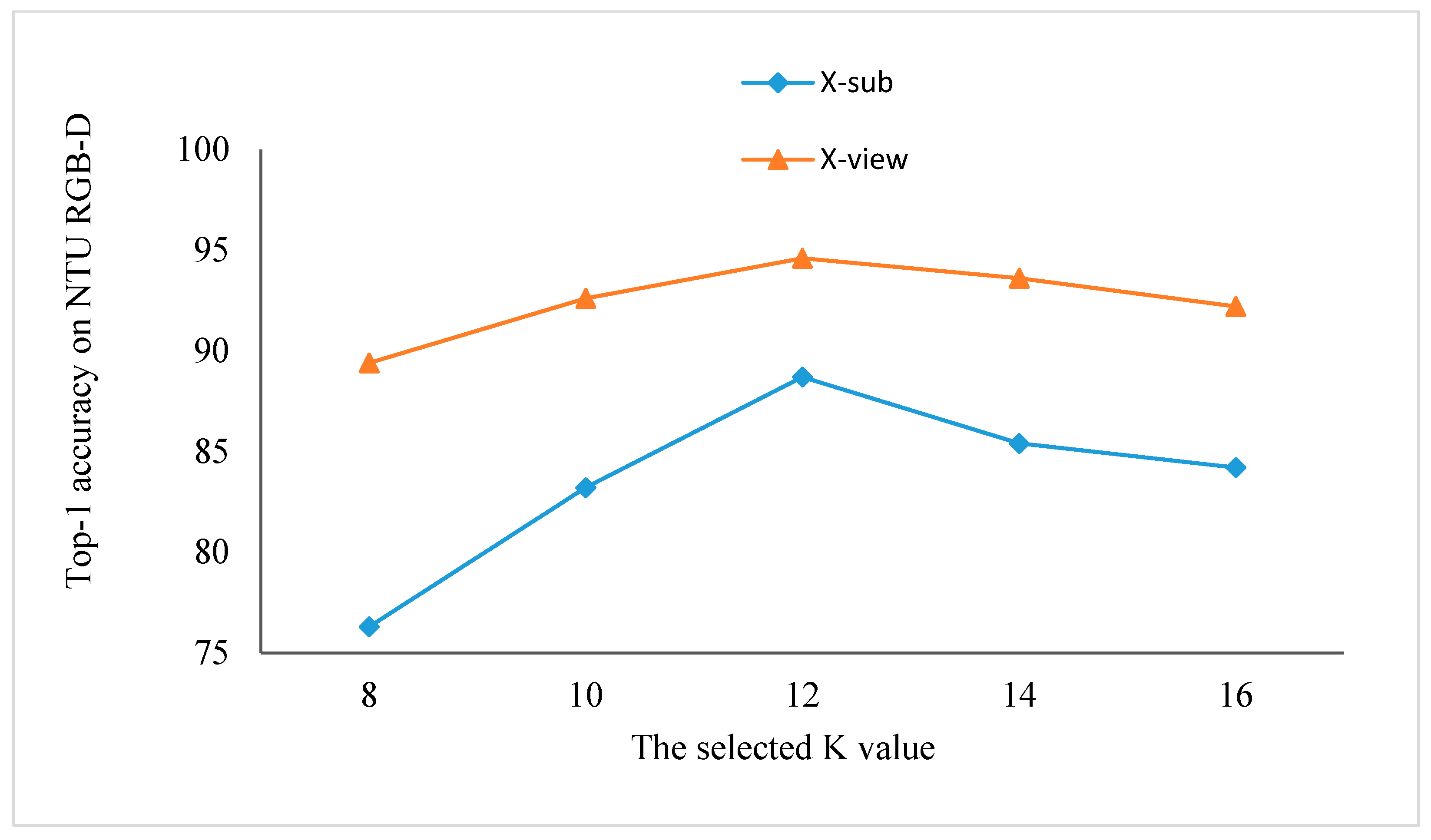

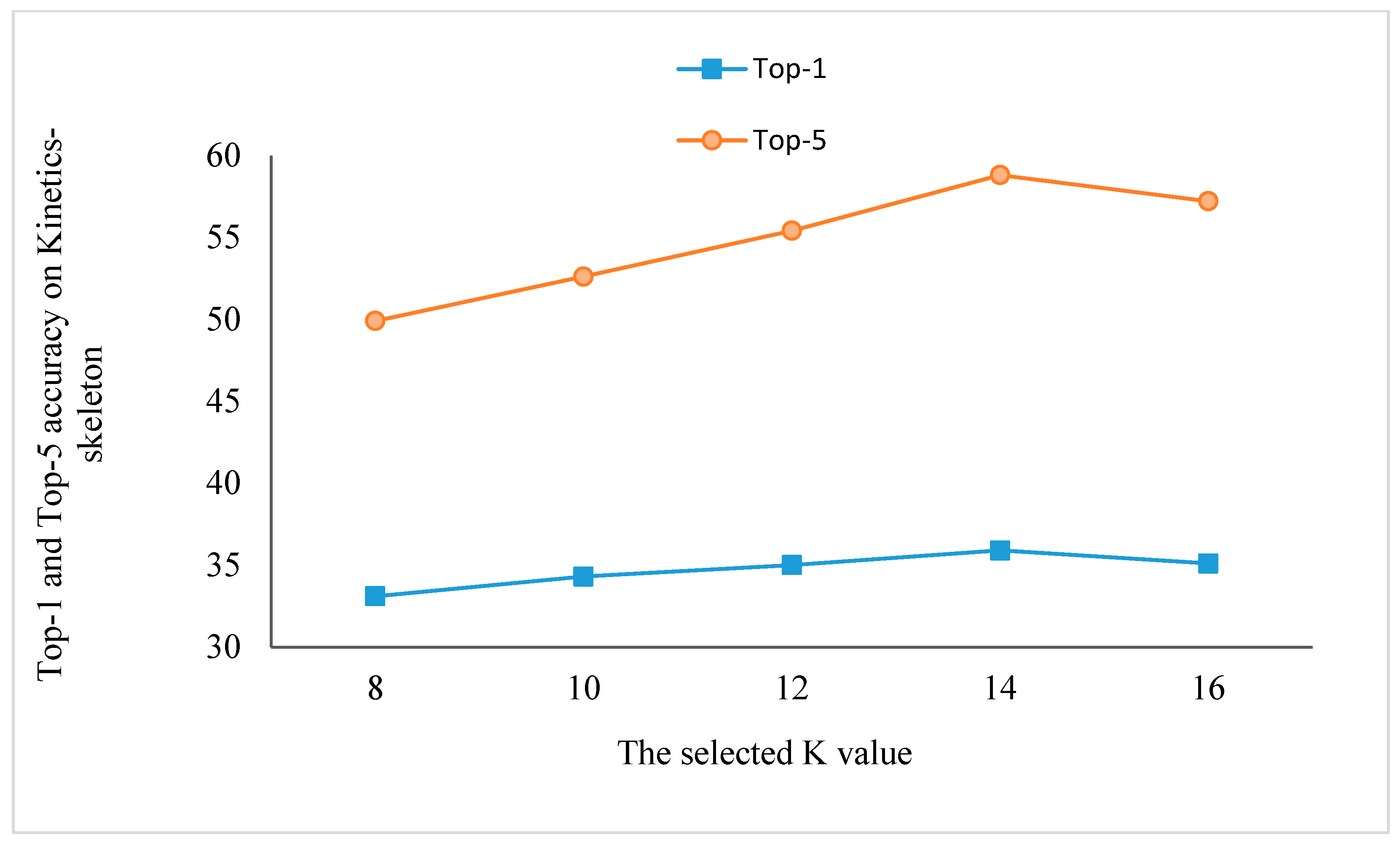

4.4.2. The Effect of K Value on Network Performance in Tohjm

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

- Cai, Q.; Deng, Y.; Li, H.S. Review of human behavior recognition methods based on deep learning. Comput. Sci. 2020, 47, 85–93. (In Chinese)

- Wang, Y.; Xiao, Y.; Xiong, F.; Jiang, W.; Cao, Z.; Zhou, J.T.; Yuan, J. 3DV: 3D dynamic voxel for action recognition in depth video. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 511–520.

- Sanchez-Caballero, A.; de López-Diz, S.; Fuentes-Jimenez, D.; Losada-Gutiérrez, C.; Marrón-Romera, M.; Casillas-Perez, D.; Sarker, M.I. 3dfcnn: Real-time action recognition using 3D deep neural networks with raw depth information. Multimed. Tools Appl. 2022, 81, 24119–24143.

- Munro, J.; Damen, D. Multi-modal domain adaptation for fine-grained action recognition. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seoul, Korea, 27–28 October 2019; pp. 122–132.

- Liu, Z.; Zhang, H.; Chen, Z.; Wang, Z.; Ouyang, W. Disentangling and unifying graph convolutions for skeleton-based action recognition. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 143–152.

- Peng, G.; Wang, S. Dual semi-supervised learning for facial action unit recognition. Proc. AAAI Conf. Artif. Intell. 2019, 33, 8827–8834. Available online: https://ojs.aaai.org//index.php/AAAI/article/view/4909 (accessed on 27 September 2022).

- Xu, Z.; Hu, R.; Chen, J.; Chen, C.; Jiang, J.; Li, J.; Li, H. Semi supervised discriminant multi manifold analysis for action recognition. IEEE Trans. Neural Netw. Learn. Syst. 2019, 30, 2951–2962.

- Zhang, X.Y.; Li, C.; Shi, H.; Zhu, X.; Li, P.; Dong, J. Adapnet: Adaptability decomposing encoder-decoder network for weakly supervised action recognition and localization. IEEE Trans. Neural Netw. Learn. Syst. 2020, 1–17.

- Zhang, J.; Han, Y.; Tang, J.; Hu, Q.; Jiang, J. Semi-supervised image-to-video adaptation for video action recognition. IEEE Trans. Cybern. 2016, 47, 960–973.

- Huang, S. Research on Human Action Recognition Based on Skeleton; Shanghai Jiaotong University: Shanghai, China, 2014. (In Chinese)

- Wang, L.; Tong, Z.; Ji, B.; Wu, G. Tdn: Temporal difference networks for efficient action recognition. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 16–18 June 2020; pp. 1895–1904.

- Su, K.; Liu, X.; Shlizerman, E. Predict & cluster: Unsupervised skeleton based action recognition. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 9631–9640.

- Yan, S.; Xiong, Y.; Lin, D. Spatial temporal graph convolutional networks for skeleton-based action recognition. In Proceedings of the Thirty-Second AAAI Conference on Artificial Intelligence; 2018. Available online: https://ojs.aaai.org/index.php/AAAI/article/view/12328 (accessed on 27 September 2022).

- Cheng, K.; Zhang, Y.; Cao, C.; Shi, L.; Cheng, J.; Lu, H. Decoupling gcn with dropgraph module for skeleton-based action recognition. In Proceedings of the European Conference on Computer Vision, Glasgow, UK, 23–28 August 2020; pp. 536–553.

- Li, M.; Chen, S.; Chen, X.; Zhang, Y.; Wang, Y.; Tian, Q. Actional-structural graph convolutional networks for skeleton-based action recognition. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 3595–3603.

- Si, C.; Jing, Y.; Wang, W.; Tan, T. Skeleton-based action recognition with spatial reasoning and temporal stack learning. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 103–118.

- Zhang, P.; Lan, C.; Zeng, W.; Xing, J.; Xue, J.; Zheng, N. Semantics-guided neural networks for efficient skeleton-based human action recognition. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 1112–1121.

- Zhang, X.; Xu, C.; Tao, D. Context aware graph convolution for skeleton-based action recognition. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 14333–14342.

- Zhao, R.; Wang, K.; Su, H.; Ji, Q. Bayesian graph convolution lstm for skeleton based action recognition. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Korea, 27 October–2 November 2019; pp. 6882–6892.

- Shi, L.; Zhang, Y.; Cheng, J.; Lu, H. Two-stream adaptive graph convolutional networks for skeleton-based action recognition. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 12026–12035.

- Tu, Z.; Zhang, J.; Li, H.; Chen, Y.; Yuan, J. Joint-bone fusion graph convolutional network for semi-supervised skeleton action recognition. IEEE Trans. Multimed. 2022, 1–13.

- Zhang, X.; Xu, C.; Tian, X.; Tao, D. Graph edge convolutional neural networks for skeleton-based action recognition. IEEE Trans. Neural Netw. Learn. Syst. 2019, 31, 3047–3060.

- Chen, Y.; Wang, Z.; Peng, Y.; Zhang, Z.; Yu, G.; Sun, J. Cascaded pyramid network for multi-person pose estimation. IEEE Conf. Comput. Vis. Pattern Recognit. 2018, 7103–7112.

- Wang, C.; Wang, Y.; Huang, Z.; Chen, Z. Simple baseline for single human motion forecasting. IEEE/CVF Int. Conf. Comput. Vis. 2021, 2260–2265.

- Si, C.; Nie, X.; Wang, W.; Wang, L.; Tan, T.; Feng, J. Adversarial self-supervised learning for semi-supervised 3D action recognition. In Proceedings of the European Conference on Computer Vision, Glasgow, UK, 23–28 August 2020; pp. 35–51.

- Zheng, N.; Wen, J.; Liu, R.; Long, L.; Dai, J.; Gong, Z. Unsupervised representation learning with long-term dynamics for skeleton based action recognition. In Proceedings of the AAAI Conference on Artificial Intelligence; 2018; pp. 1–8. Available online: https://ojs.aaai.org/index.php/AAAI/article/view/11853 (accessed on 27 September 2022).

- Li, M.; Chen, S.; Zhao, Y.; Zhang, Y.; Wang, Y.; Tian, Q. Dynamic multiscale graph neural networks for 3d skeleton based human motion prediction. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 214–223.

- Demisse, G.G.; Papadopoulos, K.; Aouada, D.; Ottersten, B. Pose encoding for robust skeleton-based action recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 188–194.

- Wang, H.; Wang, L. Beyond joints: Learning representations from primitive geometries for skeleton-based action recognition and detection. IEEE Trans. Image Process. 2018, 4382–4394.

- Zheng, W.; Li, L.; Zhang, Z.; Huang, Y.; Wang, L. Relational network for skeleton-based action recognition. In Proceedings of the 2019 IEEE International Conference on Multimedia and Expo (ICME), Shanghai, China, 8–12 July 2019; pp. 826–831.

- Dang, L.; Nie, Y.; Long, C.; Zhang, Q.; Li, G. MSR-GCN: Multi-scale residual graph convolution networks for human motion prediction. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 10–17 October 2021; pp. 11467–11476.

- Shahroudy, A.; Liu, J.; Ng, T.-T.; Wang, G. Ntu rgb+ d: A large scale dataset for 3D human activity analysis. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 1010–1019.

- Kay, W.; Carreira, J.; Simonyan, K.; Zhang, B.; Hillier, C.; Vijayanarasimhan, S.; Zisserman, A. The kinetics human action video dataset. arXiv 2017, arXiv:1705.06950.

- Li, C.; Zhong, Q.; Xie, D.; Pu, S. Co-occurrence feature learning from skeleton data for action recognition and detection with hierarchical aggregation. arXiv 2018, arXiv:1804.06055.

- Yang, H.; Gu, Y.; Zhu, J.; Hu, K.; Zhang, X. PGCN-TCA: Pseudo graph convolutional network with temporal and channel-wise attention for skeleton-based action recognition. IEEE Access 2020, 10040–10047.

- Wen, Y.H.; Gao, L.; Fu, H. Graph CNNs with motif and variable temporal block for skeleton-based action recognition. In Proceedings of the AAAI Conference on Artificial Intelligence, Honolulu, HI, USA, 27 January–1 February 2019; pp. 8989–8996.

- Thakkar, K.; Narayanan, P.J. Part-based graph convolutional network for action recognition. arXiv 2018, arXiv:1809.04983.

- Song, Y.F.; Zhang, Z.; Wang, L. Richly activated graph convolutional network for action recognition with incomplete skeletons. In Proceedings of the 2019 IEEE International Conference on Image Processing (ICIP), Taipei, Taiwan, 22–25 September 2019; pp. 1–5.

- Peng, W.; Hong, X.; Zhao, G. Tripool: Graph triplet pooling for 3D skeleton-based action recognition. Pattern Recognit. 2021, 115, 107921.

- Lin, L.; Song, S.; Yang, W.; Liu, J. Ms2l: Multi-task self-supervised learning for skeleton based action recognition. In Proceedings of the 28th ACM International Conference on Multimedia, Seattle, WA, USA, 12–16 October 2020; pp. 2490–2498.

- Miyato, T.; Maeda, S.; Koyama, M.; Ishii, S. Virtual adversarial training: A regularization method for supervised and semi-supervised learning. IEEE Trans. Pattern Anal. Mach. Intell. 2018, 1979–1993.

| Methods | 5% X-View (%) | 5% X-Sub (%) | 10% X-View (%) | 10% X-Sub (%) | 20% X-View (%) | 20% X-Sub (%) | 40% X-View (%) | 40% X-Sub (%) |
|---|---|---|---|---|---|---|---|---|
| VAT [41] | 57.9 | 51.3 | 66.3 | 60.3 | 72.6 | 65.6 | 78.6 | 70.4 |
| S4L [26] | 55.1 | 48.4 | 63.6 | 58.1 | 71.1 | 63.1 | 76.9 | 68.2 |
| ASSL [25] | 63.6 | 57.3 | 69.8 | 64.3 | 74.7 | 68.0 | 80.0 | 72.3 |
| MS2L [40] | - | - | - | 65.2 | - | - | - | - |
| Ours | 64.8 | 60.9 | 77.2 | 69.6 | 84.5 | 78.1 | 89.5 | 82.7 |

| Methods | X-Sub (%) | X-View (%) |
|---|---|---|
| ST-GCN [13] | 81.5 | 88.3 |
| HCN [31] | 86.5 | 91.1 |
| PB-GCN [37] | 87.5 | 93.2 |
| M-GCNs + VTDB [35] | 84.2 | 94.2 |
| AS-GCN [15] | 86.8 | 94.2 |
| 2s-AGCN [20] | 88.2 | 94.9 |
| BPLHM [22] | 84.5 | 91.1 |
| CA-GCN [18] | 83.5 | 91.4 |
| RA-GCN [38] | 85.9 | 93.5 |
| PGCN-TCA [35] | 88.0 | 93.6 |
| (Ours) | 88.7 | 94.6 |

| Methods | Top-1 (%) | Top-5 (%) |
|---|---|---|
| ST-GCN [13] | 30.7 | 52.8 |
| AS-GCN [15] | 34.8 | 56.5 |
| 2s-AGCN [20] | 35.9 | 58.6 |
| BPLHM [22] | 33.4 | 56.2 |
| CA-GCN [18] | 34.1 | 56.6 |
| Tripool [39] | 34.1 | 56.2 |
| (Ours) | 35.9 | 58.8 |

| Module Number | Top-1 (%) | Top-5 (%) |
|---|---|---|
| 1 | 30.7 | 52.8 |
| 2 | 34.8 | 56.5 |
| 3 | 35.7 | 58.6 |
| 4 | 35.9 | 58.8 |
| 5 | 33.4 | 56.2 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Gou, R.; Yang, W.; Luo, Z.; Yuan, Y.; Li, A. Tohjm-Trained Multiscale Spatial Temporal Graph Convolutional Neural Network for Semi-Supervised Skeletal Action Recognition. Electronics 2022, 11, 3498. https://doi.org/10.3390/electronics11213498
