Abstract

The massive number of users has brought severe challenges to the management of cloud data centers (CDCs), which are built from multi-core hosts and host the services of cloud service providers. Guaranteeing the quality of service (QoS) for many users while reducing the operating costs of CDCs are major problems that need to be solved. To address them, this paper establishes a cost model for CDCs built from multi-core hosts that jointly considers host energy costs, virtual machine (VM) migration costs, and service level agreement violation (SLAV) penalty costs. To optimize this objective, we design the following solution. We employ a filter based on a denoising autoencoder (DAE) to preprocess the historical VM workload and use a method based on simple recurrent units (SRU) to predict the computing resource usage of VMs in future periods. Based on the predicted results, we trigger VM migrations before hosts become overloaded, which reduces the occurrence of SLAV. A multi-core-aware heuristic algorithm is proposed to solve the placement problem. Simulations driven by a real VM workload dataset validate the effectiveness of the proposed method: compared with existing baseline methods, it reduces the total operating cost by 20.9–34.4%.

1. Introduction

The world is entering a post-coronavirus era. Since countries and multinational organizations have still not established a unified, reliable, and effective means of epidemic prevention, a local outbreak, which could occur at any time, carries a high risk of spreading worldwide. This situation has pushed people to embrace cloud computing further, moving much of their economic, social, and personal activity online. For example, about 82% of Hong Kong businesses plan to maintain remote work in the post-COVID-19 era [1]. This trend brings opportunities for cloud computing, as well as management pressure. According to estimates, the compound annual growth rate of the Hong Kong data center market is currently 12.6%, which means that its value will reach HKD 4.12 billion by 2026 [2]. This growth in market value implies that practitioners will need to invest more.

Increasing the resource utilization of cloud data centers (CDCs) is one of the most effective means of reducing management costs, but reducing costs conflicts with the performance that cloud service customers receive. To improve resource utilization, the virtual machines (VMs) or containers assigned to users must be densely packed onto physical hosts. However, a high degree of consolidation brings intense resource competition. When competition becomes too intense, the host may be overloaded, degrading VM performance and user experience. To protect user experience, service level agreements (SLAs) quantitatively describe the corresponding quality of service (QoS). If an SLA cannot be maintained, QoS suffers and an SLA violation (SLAV) occurs. When an SLAV occurs, cloud service providers (CSPs) must compensate users as a penalty for failing to meet their performance requirements. Currently, server consolidation is used to dynamically adjust the load balance between hosts in a CDC: it periodically checks the load of the hosts in a cluster and initiates VM migrations to balance the load, thereby maintaining a balance between resource utilization and performance.

Many existing server consolidation solutions assume that each physical host is equipped with a single-core CPU, even though multi-core processors have long been common in personal entertainment devices, scientific research, and data centers. A CPU package consists of multiple dies, and each die encapsulates multiple cores. Because of inter-core communication, inter-die communication, and other CPU components, the power consumption of a multi-core CPU is much higher than that predicted by a single-core CPU power model. Therefore, server consolidation models based on single-core processors cannot accurately describe the energy consumption of hosts. In addition, CSPs incur extra overhead for VM migrations during server consolidation and for possible SLAV compensation. In this paper, we establish a server consolidation cost model based on multi-core processor and memory resource usage, VM migration, and SLAV compensation, and we propose corresponding solutions that balance cost and performance. Our contributions are as follows:

- (A) We formally define a host power consumption model based on multi-core CPU and memory resource usage and, on this basis, describe the costs of VM migration and SLAV. After presenting the cost model, we formulate the corresponding optimization problem.

- (B) A denoising autoencoder (DAE)-based filter is used to denoise VM workload traces. We then use an RNN method based on simple recurrent units (SRU) to predict VM workloads. Based on the predicted results, we propose a host load detection strategy that considers both current and future load conditions.

- (C) To minimize the total cost of server consolidation, we propose a VM selection strategy and a VM placement algorithm. These methods account for the scheduling and placement of VMs across different cores of the same CPU and across the CPUs of different hosts, as well as the current and future demands of VMs for different resources.

- (D) We conduct simulations to evaluate the performance of our proposed solution, MMCC. The results indicate that, compared with the baseline methods, MMCC reduces host energy consumption by 10–43.9%, SLAV cost by 33.5–51.7%, and total cost by 20.9–34.4%.

The remainder of the paper is organized as follows. In Section 2, we survey related work. In Section 3, we formalize the cost model and define the corresponding optimization problem. In Section 4, we propose a heuristic algorithm to solve this problem. In Section 5, we evaluate the performance of our proposed method using trace-driven simulations based on real VM workloads. In Section 6, we conclude the paper and discuss future work.

2. Related Work

In this section, we survey CDC cost models related to server consolidation and the corresponding solutions.

2.1. Server Consolidation Cost Models

Based on single-core CPU usage or performance, many works on server consolidation have proposed host energy models [3,4,5,6,7,8,9,10,11,12]. Nagadevi et al. [13] proposed a VM placement algorithm for multi-core processors, but they did not consider dynamic consolidation, energy consumption, or cost over the whole data center life cycle. None of these works considered processor energy consumption at the die level or the chip level.

In addition, a host's energy consumption is not determined by the CPU alone. Therefore, several works have proposed host energy models oriented to multi-resource utilization [14,15,16,17,18,19]. However, these models treat each host as an independent object and do not consider the additional energy consumed by the VM migrations that are triggered when host load increases during server consolidation.

To ensure user performance and service quality, Buyya et al. [20] proposed a CPU-based SLAV calculation method, which has been widely adopted in subsequent works [21,22,23,24,25,26,27,28,29]. However, the QoS that users experience on VMs cannot be measured by CPU performance alone, so SLAV should account for the usage of multiple resources.

2.2. Server Consolidation Solutions

Buyya et al. [20] first proposed the classic four-step server consolidation solution. The first step is host load detection, which identifies the overloaded and underloaded hosts in the cluster. The second step is VM selection for overloaded hosts: to reduce host load and the occurrence of SLAV, suitable VMs are selected and added to a VM migration list. The third step is VM placement, which selects suitable destination hosts for all VMs in the migration list. Finally, underloaded hosts are handled: by migrating as many VMs as possible from each underloaded host to other suitable hosts and then shutting the host down or switching it to an energy-saving state, the host energy cost of the CDC can be further reduced.

At present, most concrete strategies for server consolidation are heuristic. Based on multiple resource constraints, Li et al. [30] proposed a server consolidation method that reduces energy consumption while ensuring QoS, but it only guarantees QoS in terms of CPU usage. Yadav et al. [25] focused mainly on network overhead and proposed an adaptive host overload detection method and a VM selection algorithm. Sayadnavard et al. [31] proposed a server consolidation method based on multiple resource constraints, but its optimization goal is to minimize the number of hosts used for VM placement, and it ignores other types of costs. Yuan et al. [32] used the culture multiple-ant-colony algorithm to solve the server consolidation problem without SLAV constraints.

None of the models proposed in the above works simultaneously considers the costs associated with multi-resource usage, multi-core processors, multi-resource SLAV, and VM migration.

3. Cost Model and Problem Description

In this section, we first formally describe the multi-core processor-based cost model for server consolidation in a CDC and then formulate the corresponding problem.

3.1. Cost Model

In CDC, the cost related to server consolidation mainly involves hosts, VM migrations, and SLAV compensation.

Before giving a specific cost model, we first describe the time and objects of the entire system. There are N heterogeneous hosts in the CDC, forming the host set $H = \{h_1, h_2, \ldots, h_N\}$. The total amount of resources that a host $h_i$ can provide is denoted by the tuple $R_i = (R_i^{c}, R_i^{m})$, where $R_i^{c}$ and $R_i^{m}$ are the total amounts of CPU and memory resources, respectively. The CPU is multi-core; hence $R_i^{c} = \sum_{k=1}^{K_i} R_{i,k}^{c}$, where $K_i$ is the number of cores in the processor of $h_i$. Generally, we set $R_{i,k}^{c} = r_i$ for every core k, where $r_i$ is the total amount of computing resources that each core can provide, so that $R_i^{c} = K_i r_i$. There are M VMs running on these hosts, forming the VM set $V = \{v_1, v_2, \ldots, v_M\}$. When a user requests a VM $v_j$, the submitted resource requirements are denoted by the tuple $D_j = (D_j^{c}, D_j^{m})$, where $D_j^{c}$ and $D_j^{m}$ are the total CPU and memory requirements of $v_j$, respectively. We assume that each VM is a single-core task; that is, a VM can use the computing resources of only a single core.

The life cycle of a CDC is divided into L short, equal-length, consecutive time segments $t_1, t_2, \ldots, t_L$, each of length T. In a time segment $t_l$, $\alpha_i(t_l) = 1$ if host $h_i$ is in the working state; otherwise, $\alpha_i(t_l) = 0$. At this time, the amount of each resource that the host can provide is $R_i(t_l) = (R_i^{c}(t_l), R_i^{m}(t_l))$, where $R_i^{c}(t_l) = \sum_{k=1}^{K_i} R_{i,k}^{c}(t_l)$ and $R_{i,k}^{c}(t_l)$ is the amount of resources that the k-th core can provide in $t_l$. In $t_l$, the amount of resources demanded by VM $v_j$ is denoted as $D_j(t_l) = (D_j^{c}(t_l), D_j^{m}(t_l))$.

We calculate the total cost of a CDC over a given lifetime by analyzing the behavior of each computing device in each time slice. In addition to the operating cost of the hosts, the cost of VM migration during each server consolidation and the penalties caused by SLAV must also be considered. We discuss them separately in the following subsections.

Host Cost Model

Given a host, its running cost mainly depends on the electricity price and the host's power at a given time t, namely:

It should be noted that if the host is powered off or in a power-saving state, its power consumption is negligible, so it incurs no electricity cost. An analysis [33] of VM traces from the Alibaba CDC shows that VMs' demand for CPU and memory resources far exceeds their demand for disk and network I/O. In this paper, we consider the power of a host to be related to its CPU, memory, and other basic components (motherboard, network card, disk, etc.). We treat the power consumption of the basic components as a fixed value, so only the power consumption of the CPU and memory is discussed below.

- CPU power model

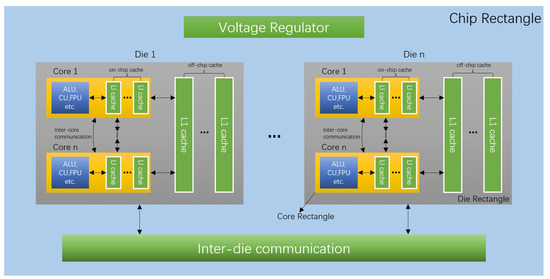

Buyya et al. [20] used a single-core host power model in server consolidation; that is, the power of the CPU depends only on its single core. Modern processors have multi-core architectures in which multiple cores are packaged on multiple dies. The general architecture of a multi-core CPU is shown in Figure 1.

Figure 1.

The general architecture of a multi-core CPU.

The total power consumption of the processor involves chip-level mandatory components, cores, die-level mandatory components, communication between cores, and communication between dies. In addition, modern processors employ energy-efficient mechanisms (such as Intel's SpeedStep) to optimize CPU power consumption, which means that the power consumption of the CPU is not linearly related to its usage. We describe the power of a given CPU at a given moment as:

where r is the energy-efficient factor, is the power consumption of chip-level mandatory components, is the power consumption of dies, and is the power consumption of inter-die communication. Next, we model each of these terms. Without an energy-efficient mechanism, the actual power of the processor when its n cores compute simultaneously is:

In addition, we denote the total power of all cores as:

where is the power consumption of the k-th core when it computes alone while all other cores are idle.

Basmadjian et al. [34] experimentally analyzed the power consumption of chip-level mandatory components, such as voltage regulators, and modeled it as:

where s is the capacitance function, v is the voltage, and f is the frequency.

where is the effective capacitance [35].
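The capacitance model above is the familiar dynamic-power relation for CMOS circuits. As a minimal sketch, assuming the standard form P = C_eff · V² · f with the capacitance term collapsed into a single constant effective capacitance (the function name and the example values are illustrative, not taken from [34] or [35]), it shows why voltage and frequency scaling saves so much power:

```python
def dynamic_power(c_eff: float, voltage: float, frequency: float) -> float:
    """Dynamic (switching) power in watts, assuming P = C_eff * V^2 * f.

    c_eff: effective switched capacitance in farads (assumed constant)
    voltage: supply voltage in volts
    frequency: clock frequency in hertz
    """
    if c_eff < 0 or voltage < 0 or frequency < 0:
        raise ValueError("capacitance, voltage and frequency must be non-negative")
    return c_eff * voltage ** 2 * frequency


# Full speed versus a scaled-down operating point (illustrative values only).
p_full = dynamic_power(1e-9, 1.2, 3.0e9)
p_dvfs = dynamic_power(1e-9, 1.0, 1.5e9)
```

Because power grows with the square of the voltage, lowering V from 1.2 V to 1.0 V alone cuts power by about 30%, and halving f halves what remains; this is the behavior that mechanisms such as SpeedStep exploit.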

Communication between dies occurs when cores on different dies access data at the same memory address. The power consumption of inter-die communication is:

where and are the voltage and frequency of the corresponding cores on , is the set of active cores on the j-th die, and D is the set of dies related to communication, and they are:

where is the i-th core on the j-th die, is the current utilization of , , and is the total number of cores on the j-th die. We also have:

Equations (10) and (11) show that when there is only one active core on the j-th die, the voltage and frequency of the j-th die equal those of that core.

The power of a single die can be described as:

where is the power consumption of die-level mandatory components, is the power consumption of constituent cores, and is the power consumption of off-chip caches. We leverage Equation (5) to model .

Inter-core communication occurs between multiple cores on a single die j. Therefore, the core-level power consumption model is:

where is the power consumption of all active cores on j-th die, and is the inter-core communication power consumption between the active cores.

The power consumption of a single core is described as:

where and are the power consumptions of exclusive components (e.g., ALU) and on-chip caches of , respectively. Based on the model in [20], we consider that is linearly related to the utilization of the core, therefore:

where is the power consumption of at the maximum utilization, which can be calculated by the model in Equation (5).

The power consumption of on-chip caches is:

where s is the number of the on-chip caches, is the power consumption of on-chip cache , which can be calculated by the model in Equation (5).

Hence, the power consumption of all active cores on the j-th die is:

By dynamically adjusting voltage and frequency and turning off temporarily unused components, the energy-efficient mechanism can effectively optimize processor power consumption. This part of the power consumption reduction is mainly affected by three factors: (1) components and communication between cores, (2) changes in the frequency of a single core, and (3) the number of cores. Here we define the three factors.

The first factor is

The second factor is

where f is the given frequency. For a multi-core processor, we have:

where m is the number of dies.

The third factor is

where is the total number of all active cores on the processor.

Based on the above analysis, the processor power consumption of a given host can be obtained. For the host , the power consumption of its processor at a certain time t is denoted as . It can be said that is a function of the current utilization of each core on the processor.
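The per-core, per-die, and per-processor terms above nest inside each other. The sketch below shows that aggregation under stated assumptions: core power is taken as the proportional form u · P_max for exclusive components plus the sum of on-chip cache powers, only cores with utilization above zero count as active, and the inter-core and inter-die communication terms are passed in as given numbers because their models are not reproduced here. The energy-efficient factor r is deliberately left out. All class and function names are hypothetical.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Core:
    utilization: float        # current utilization in [0, 1]
    p_exclusive_max: float    # exclusive components (e.g. ALU) at full utilization, in W
    cache_powers: List[float] = field(default_factory=list)  # one entry per on-chip cache, in W

    def power(self) -> float:
        # Exclusive components scale linearly with utilization (assumed proportional form);
        # on-chip caches contribute the sum of their individual powers.
        return self.utilization * self.p_exclusive_max + sum(self.cache_powers)


@dataclass
class Die:
    cores: List[Core]
    p_mandatory: float = 0.0        # die-level mandatory components, in W
    p_inter_core_comm: float = 0.0  # inter-core communication, given as an input here
    p_off_chip_cache: float = 0.0   # off-chip caches, in W

    def active_cores_power(self) -> float:
        # Only active cores (utilization > 0) are counted.
        return sum(c.power() for c in self.cores if c.utilization > 0)

    def power(self) -> float:
        # Core-level power = active cores + inter-core communication.
        core_level = self.active_cores_power() + self.p_inter_core_comm
        return self.p_mandatory + core_level + self.p_off_chip_cache


def processor_power_no_efficiency(dies: List[Die], p_chip_mandatory: float,
                                  p_inter_die_comm: float) -> float:
    # Chip-level components + all dies + inter-die communication, before the
    # energy-efficient factor r is applied (its exact form is not reproduced here).
    return p_chip_mandatory + sum(d.power() for d in dies) + p_inter_die_comm


example_die = Die(
    cores=[Core(0.5, 10.0, [1.0, 0.5]), Core(0.0, 10.0, [1.0, 0.5]), Core(1.0, 10.0, [1.0])],
    p_mandatory=2.0, p_inter_core_comm=1.0, p_off_chip_cache=0.5,
)
```

In the example, the idle second core contributes nothing, so the active cores draw 6.5 W + 11 W; this is how the model makes processor power a function of the current utilization of each core.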

- Memory power model

All current public VM workload traces of CDCs record the memory usage of the monitored objects over time. Therefore, we use the current memory footprint to estimate the memory power of the host at a given time t:

where is the memory power consumption when is idle, and is the memory power factor. According to the analysis by Esfandiarpoor et al. [27], when , the power consumption of a DDR memory system can be estimated more accurately.
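The memory term, the CPU term, and the fixed base power add up to the host's power, which in turn drives the electricity cost. A minimal sketch, assuming a linear memory model (idle power plus a factor beta per GB of footprint), power that is constant within each time segment, and a price per kWh; the value of the memory power factor recommended in [27] is not reproduced, so beta and every other number below are illustrative placeholders:

```python
from typing import Sequence

JOULES_PER_KWH = 3.6e6


def memory_power(p_idle: float, beta: float, used_gb: float) -> float:
    # Assumed linear model: idle power plus beta watts per GB of memory footprint.
    return p_idle + beta * used_gb


def host_power(working: bool, p_base: float, p_cpu: float, p_mem: float) -> float:
    # Powered-off or power-saving hosts are treated as drawing no power.
    if not working:
        return 0.0
    return p_base + p_cpu + p_mem


def host_energy_cost(powers_w: Sequence[float], segment_s: float, price_per_kwh: float) -> float:
    # Power is assumed constant within each time segment of length segment_s seconds.
    joules = sum(p * segment_s for p in powers_w)
    return joules / JOULES_PER_KWH * price_per_kwh


p_mem = memory_power(p_idle=2.0, beta=0.5, used_gb=8.0)          # 6.0 W
p_host = host_power(True, p_base=100.0, p_cpu=50.0, p_mem=p_mem)  # 156.0 W
hourly_cost = host_energy_cost([p_host] * 12, segment_s=300.0, price_per_kwh=1.0)
```

Twelve 5-minute segments at 156 W amount to 0.156 kWh, so at a price of 1 per kWh the host costs about 0.156 for that hour.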

In summary, we obtain the total power of host :

3.2. VM Migration Cost

We assume that at the beginning of each time segment, the CDC performs server consolidation to balance the CSP's cost against users' performance. VM migration is an important part of server consolidation. In a cluster of multi-core hosts, there are two types of VM migration: inter-core migration within the same host and inter-host migration between different hosts. Inter-core migration occurs when the core hosting a VM is overloaded while other cores of the same processor have sufficient computing resources; the VM then moves from one core to another within a very short time through inter-core or inter-die communication. Inter-core migration does not involve memory transfer, and its main impact is on the processor cache hit rate. Therefore, its energy overhead is negligible.

Next, we discuss the energy cost of inter-host migration. We use live migration to move VMs between hosts, during which the VM's memory data is transmitted. Although VMs generate dirty pages during live migration, research [28] indicates that the energy consumption of a live migration is positively correlated with the memory size of the VM. Therefore, we assume that the larger the VM's memory, the longer the migration time and the higher the energy consumption.

When migrating a VM from host to another host , we assume that reserves enough resources to support the migration of , and also reserves enough resources to run . Buyya et al. [20] assumed that a VM would consume an extra 10% CPU usage to maintain the migration. In this paper, we extend this assumption to the memory resource usage of VM migration. In addition, we assume that the CDC deploys an exclusive network for VM migrations. We denote the size of the dedicated migration bandwidth of as . The total cost of VM migrations in a given life cycle is denoted as . is described as:

where and are the migration costs caused by CPU and memory in , respectively.

is calculated as:

where is a 0-1 indicator, is the power consumption generated by migrating the memory data of , and is the time spent migrating . If VM needs to be migrated from the -th core of the processor of the host to the -th core of another host , then ; otherwise . Since VM memory is the main data transferred during migration, we have:

where is the migration bandwidth size assigned to . We consider that the migration bandwidth is evenly assigned to every migrated VM within on . Hence, for a given source host and a destination host , we obtain:

then we have

After this, we substitute Equation (30) into Equation (27). We let be the memory power of within , and the memory migration cost of is .
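The memory part of the migration cost reduces to simple arithmetic: transfer time is memory size over available bandwidth, and energy is memory power times that time. A minimal sketch, assuming the source host's dedicated migration bandwidth is split evenly among all VMs leaving it in the same segment and that dirty-page retransmission is ignored; units (MB, MB/s, W) and function names are hypothetical:

```python
def per_vm_bandwidth(host_bw: float, n_migrating: int) -> float:
    # Dedicated migration bandwidth of the source host, split evenly among outgoing VMs.
    if n_migrating <= 0:
        raise ValueError("at least one VM must be migrating")
    return host_bw / n_migrating


def migration_time(vm_mem_mb: float, host_bw_mb_s: float, n_migrating: int) -> float:
    # Memory is the dominant payload, so time = memory size / available bandwidth (seconds).
    return vm_mem_mb / per_vm_bandwidth(host_bw_mb_s, n_migrating)


def memory_migration_energy(p_mem_w: float, vm_mem_mb: float,
                            host_bw_mb_s: float, n_migrating: int) -> float:
    # Joules consumed by the VM's memory for the duration of the transfer.
    return p_mem_w * migration_time(vm_mem_mb, host_bw_mb_s, n_migrating)


# A 4 GB VM sharing a 1024 MB/s link with three other outgoing VMs.
t_mig = migration_time(4096.0, 1024.0, 4)                # 256 MB/s each -> 16 s
e_mig = memory_migration_energy(10.0, 4096.0, 1024.0, 4)  # 10 W for 16 s -> 160 J
```

Multiplying the energy by the electricity price gives the memory migration cost for one VM; summing over all migrated VMs and segments gives the memory share of the migration cost.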

Next, we discuss . We assume here that the power consumption generated by a host in a CDC is mainly used to keep the VM running. Since the processor power consumption is related to its respective core in the current utilization , it can be written as . For a given core on the processor , where , if a VM needs to be migrated to another host at this time, its CPU utilization is:

Hence, the power consumption of host during inter-host migration is:

Then, we combine Equation (32) into Equation (2). We denote the updated host energy consumption cost as .
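For the CPU part, the 10% overhead of [20] raises the utilization of the source core while a VM is being migrated away. A minimal sketch, assuming the overhead is charged as 10% of each migrating VM's current CPU demand on the source core and that utilization is capped at 100% (demand beyond the cap goes unmet and shows up as SLAV); names and numbers are illustrative:

```python
from typing import Iterable, Sequence

MIGRATION_CPU_OVERHEAD = 0.10  # extra CPU share per migrating VM, after the 10% assumption of [20]


def core_utilization_during_migration(core_capacity: float, vm_demands: Sequence[float],
                                      migrating: Iterable[int]) -> float:
    """Utilization of one core while some of its VMs are being live-migrated away."""
    base = sum(vm_demands)
    # Each migrating VM temporarily needs 10% more CPU to drive the transfer.
    extra = MIGRATION_CPU_OVERHEAD * sum(vm_demands[i] for i in migrating)
    # Utilization cannot exceed 100%; any demand beyond that goes unmet (a source of SLAV).
    return min(1.0, (base + extra) / core_capacity)


u_light = core_utilization_during_migration(1000.0, [300.0, 200.0], [0])  # about 0.53
u_heavy = core_utilization_during_migration(1000.0, [900.0, 100.0], [0])  # capped at 1.0
```

Feeding the raised utilization back into the per-core power model is what makes the host's power during inter-host migration higher than its steady-state power.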

SLAV Penalty Cost

In a CDC, to guarantee user QoS, CSPs must provide SLAV compensation to relevant users in some form. This part of the overhead needs to be included in the cost consideration of the CDC. In this paper, we extend the single-core CPU SLAV definition by Buyya et al. [20] to multi-core CPU and memory. They are denoted as and , respectively.

A processor is considered overloaded only if all of its cores are overloaded. Hence, we have

where is CPU SLAV duration caused by all cores overloaded on , is the total working duration of the host, and is the size of the unsatisfied CPU resource demand as a result of migration in .
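In the work of Buyya et al. [20] that this definition extends, SLAV is computed as the product of two averages: the fraction of working time a host spends overloaded (often called SLATAH) and the fraction of requested capacity left unsatisfied because of migration (often called PDM). A minimal sketch, assuming the multi-core CPU metric keeps that product form, with "overloaded time" meaning the time during which all cores are overloaded; the memory metric would reuse the same functions with memory quantities, and the two results are weighted by their compensation price indices:

```python
from typing import Sequence


def mean_ratio(numerators: Sequence[float], denominators: Sequence[float]) -> float:
    # Average of numerator/denominator over entries with a positive denominator.
    ratios = [n / d for n, d in zip(numerators, denominators) if d > 0]
    return sum(ratios) / len(ratios) if ratios else 0.0


def slav(overload_times: Sequence[float], active_times: Sequence[float],
         unsatisfied: Sequence[float], requested: Sequence[float]) -> float:
    # Time-based term (per host) multiplied by the migration-degradation term (per VM).
    return mean_ratio(overload_times, active_times) * mean_ratio(unsatisfied, requested)


def slav_penalty(slav_cpu: float, slav_mem: float, price_cpu: float, price_mem: float) -> float:
    # Compensation paid by the CSP, weighted by the CPU and memory price indices.
    return price_cpu * slav_cpu + price_mem * slav_mem


# Two hosts (one overloaded 10% of its working time) and three VMs.
slav_cpu = slav([10.0, 0.0], [100.0, 100.0], [5.0, 0.0, 15.0], [100.0, 100.0, 100.0])
```

Here the time term is 0.05 and the degradation term is 0.2 / 3, so the CPU SLAV is about 0.0033.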

Likewise, we propose the formal definition of :

We denote CPU and memory SLAV compensation price indices as and , respectively. Then, we have:

3.3. Problem Description

In Section 3.1, we analyzed the factors that make up the operating cost of a CDC: the host energy consumption cost, the VM migration cost, and the SLAV penalty cost. Our research goal is to minimize the total operating cost C of the CDC. Combining the above models, we obtain the problem of minimizing multi-core-host-based cost in server consolidation (MMCC):

A 0–1 indicator is used to mark whether the VM is running on the -th core of the host ’s processor at the beginning of the time period. If runs on the -th core of the host , then , otherwise . The constraints of the MMCC problem are:

Constraint (37) means that in any period, each VM runs on exactly one core of exactly one host. Constraint (38) means that in any period, a VM migrated from any host has exactly one destination host and one destination core. Constraints (39) and (40) mean that in any period, the CPU and memory resources that each host provides to its VMs cannot exceed its resource limits.
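The constraints can be checked mechanically for a candidate assignment, which is also how a heuristic typically filters placements. A minimal sketch, assuming CPU capacity is checked per core (each VM is single-core, so its CPU demand lands on one core) and memory per host, and assuming the objective is the unweighted sum of the three cost components; all names are hypothetical:

```python
from collections import defaultdict
from typing import Dict, Tuple

Slot = Tuple[str, int]  # (host id, core index)


def is_feasible(assignment: Dict[str, Slot], cpu_demand: Dict[str, float],
                mem_demand: Dict[str, float], core_capacity: Dict[Slot, float],
                host_memory: Dict[str, float]) -> bool:
    # Mapping each VM to a single (host, core) pair enforces "exactly one core on one host".
    core_load: Dict[Slot, float] = defaultdict(float)
    mem_load: Dict[str, float] = defaultdict(float)
    for vm, slot in assignment.items():
        if slot not in core_capacity:
            return False                     # unknown host or core
        core_load[slot] += cpu_demand[vm]    # a single-core VM loads exactly one core
        mem_load[slot[0]] += mem_demand[vm]  # memory is shared by the whole host
    cpu_ok = all(load <= core_capacity[s] for s, load in core_load.items())
    mem_ok = all(load <= host_memory.get(h, 0.0) for h, load in mem_load.items())
    return cpu_ok and mem_ok


def total_cost(host_cost: float, migration_cost: float, slav_cost: float) -> float:
    # Assumed MMCC objective: unweighted sum of the three cost components.
    return host_cost + migration_cost + slav_cost


capacity = {("h1", 0): 1000.0, ("h1", 1): 1000.0}
memory = {"h1": 16.0}
ok = is_feasible({"v1": ("h1", 0), "v2": ("h1", 0)},
                 {"v1": 600.0, "v2": 300.0}, {"v1": 4.0, "v2": 4.0}, capacity, memory)
```

Both VMs fit on core 0 (900 of 1000 units) and use 8 of 16 GB, so the assignment is feasible; raising the second VM's demand to 500 would overload the core and make it infeasible.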

In the following, we analyze the complexity of the MMCC problem by considering a special case. Suppose that the hosts in the CDC are homogeneous and that the resource requirements of every VM are fixed across all time segments and satisfy constraints (39) and (40). Then the VM migration cost and the SLAV penalty cost are both zero, and the objective function of the MMCC problem becomes:

In this special case, the MMCC problem is an instance of the bin-packing problem, with hosts as bins and VMs as items. Since bin packing is NP-hard and appears as a special case of MMCC, the MMCC problem is also NP-hard.

4. Solution for MMCC Problem

Since the MMCC problem is NP-hard, we propose a heuristic based on the traditional four-step method for server consolidation. The first step is host workload detection, the second step is VM selection, and the third and fourth steps are VM placement for the VMs from overloaded and underloaded hosts, respectively. Before performing host overload detection and VM selection, we first predict each VM's future workload trend from its workload history, so that hosts can be rebalanced before they become overloaded and trigger SLAV, thereby reducing costs as much as possible.

4.1. VM Workload Prediction

Before predicting the future workload of a VM, we first preprocess its workload trace. Because of the limited sampling frequency and precision, the historical records deviate somewhat from the VM's actual resource usage. To minimize the impact of these deviations on the final result, we treat the workload history as noisy and denoise it. In addition, we do not need to spend considerable computing power and time to obtain highly accurate predictions; we only need a rough estimate of the general trend of the VM's future resource usage.

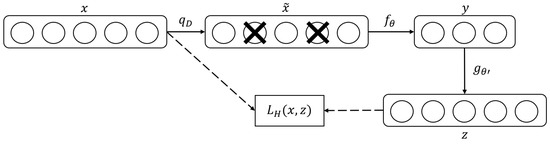

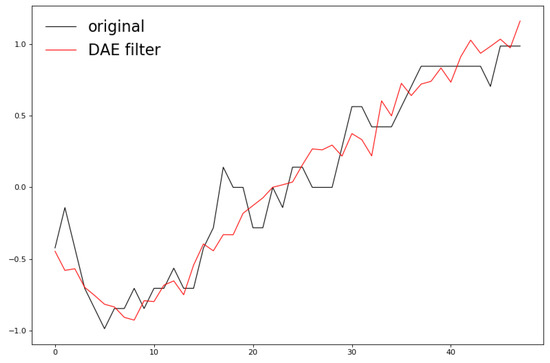

In this paper, we use a filter algorithm based on the classic denoising autoencoder (DAE) [36] to preprocess VM workloads. Figure 2 shows the general structure of the DAE mechanism.

Figure 2.

Denoising autoencoder.

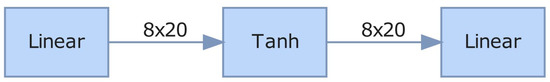

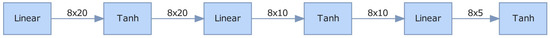

In Figure 2, x is the initial input, and $\tilde{x}$ is a corrupted version of x obtained by a stochastic mapping $\tilde{x} \sim q_D(\tilde{x} \mid x)$. The encoder $f_\theta$ then maps $\tilde{x}$ to y, and the decoder $g_{\theta'}$ generates the reconstruction z from y. The reconstruction error is measured by a loss function $L(x, z)$. In our DAE-based filter, three autoencoders and one compression decoder are stacked; their network structures are shown in Figure 3, Figure 4, Figure 5 and Figure 6. Figure 7 shows the result of applying the DAE-based filter to a segment of a VM's CPU usage records.
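What distinguishes a denoising autoencoder from a plain one is the corruption step: the network sees a corrupted input but is scored against the clean one. The text does not state which corruption the filter uses, so the sketch below assumes masking noise (each input zeroed independently with probability p, one of the corruptions used in [36]) and a squared-error loss; the encoder and decoder networks of Figures 3 to 6 are not reproduced, and the function names are hypothetical:

```python
import random
from typing import List, Optional


def mask_corrupt(x: List[float], drop_prob: float,
                 rng: Optional[random.Random] = None) -> List[float]:
    # Stochastic mapping x -> x_tilde: each value is zeroed independently with probability drop_prob.
    rng = rng or random.Random()
    return [0.0 if rng.random() < drop_prob else v for v in x]


def reconstruction_loss(x: List[float], z: List[float]) -> float:
    # Squared-error L(x, z); note that z is compared with the clean x, not with x_tilde.
    return sum((a - b) ** 2 for a, b in zip(x, z))


cpu_window = [0.42, 0.45, 0.40, 0.47, 0.44]                  # normalized CPU usage samples
x_tilde = mask_corrupt(cpu_window, 0.2, random.Random(7))   # input fed to the encoder
```

During training, x_tilde would go through the encoder and decoder, and the resulting z would be scored with reconstruction_loss(cpu_window, z), which forces the network to recover the underlying trend rather than memorize noise.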

Figure 3.

The network structure of the first autoencoder of the DAE-based filter.

Figure 4.

The network structure of the second autoencoder of the DAE-based filter.

Figure 5.

The network structure of the third autoencoder of the DAE-based filter.

Figure 6.

The network structure of the compression decoder of the DAE-based filter.

Figure 7.

Example of the DAE-based filter.

Traditional RNNs cannot be parallelized across time steps, which makes training slow. To address this issue, we employ an SRU-based approach to predict VM workloads. Simple recurrent units (SRU) [37] remove the time dependency from most operations, enabling parallel processing. Experiments in [37] show that SRU runs more than ten times faster than a traditional LSTM with similar accuracy. Since SRU is open source and is used in much the same way as an LSTM, we do not discuss its theoretical details in this article.
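The source of SRU's speed-up is easy to see in a single unit. A minimal sketch of a simplified, single-dimensional SRU cell, assuming the formulation without the extra v_f and v_r state terms and with tanh applied to the cell state (the weights are plain scalars here rather than trained matrices):

```python
import math
from typing import List


def sigmoid(a: float) -> float:
    return 1.0 / (1.0 + math.exp(-a))


def sru_forward(xs: List[float], w: float, w_f: float, b_f: float,
                w_r: float, b_r: float, c0: float = 0.0) -> List[float]:
    """Simplified single-unit SRU over a univariate series such as a VM's CPU usage."""
    # Step 1: every projection depends only on x_t, never on the previous state,
    # so all time steps can be computed at once (the parallel part of SRU).
    x_tilde = [w * x for x in xs]
    f = [sigmoid(w_f * x + b_f) for x in xs]  # forget gate
    r = [sigmoid(w_r * x + b_r) for x in xs]  # reset (highway) gate
    # Step 2: the only sequential work is a cheap element-wise recurrence on c_t.
    c, hs = c0, []
    for t, x in enumerate(xs):
        c = f[t] * c + (1.0 - f[t]) * x_tilde[t]
        hs.append(r[t] * math.tanh(c) + (1.0 - r[t]) * x)  # highway connection to the input
    return hs


hs = sru_forward([0.1, 0.2, 0.3], w=1.0, w_f=0.0, b_f=0.0, w_r=0.0, b_r=50.0)
```

In an LSTM the gates depend on the previous hidden state, so the matrix multiplications themselves must run step by step; in SRU only the last loop is sequential, and it involves no matrix multiplications.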

After predicting the resource usage of each VM at the next time segment, we can perform host load detection and VM selection.

4.2. Host Workload Detection

The purpose of host overload detection is to avoid and eliminate fierce competition among VMs for resources, thereby reducing the occurrence of SLAV. Common host overload detection methods fall into two categories: static threshold methods and dynamic threshold methods. In a static threshold method, the resource usage thresholds are fixed values. When usage exceeds the threshold, the host is overloaded and SLAV occurs, so VMs must be migrated to reduce the load. In a dynamic threshold method, CSPs analyze the use of computing resources with various statistical methods to determine whether resource competition is fierce and whether hosts are overloaded. The advantage of the static threshold method is that host resources are fully utilized; its disadvantage is that more overhead is required to reduce SLAV. The advantage of the dynamic threshold method is that it effectively reduces SLAV; its disadvantage is that host resources are sometimes underutilized. Therefore, we combine the advantages of the two and propose the double insurance-based fixed threshold overload detection method (DIFT).

In DIFT, the first insurance is that the host must not overload its CPU or memory resources in the current period; the second insurance is that it must not overload them in the next period. For a given host, DIFT first checks whether the usage of each resource exceeds its threshold at the beginning of the time period and then, based on the predictions of the SRU method, checks whether the usage of each resource will exceed its threshold in the next time period.

Since VM migrations are divided into inter-core and inter-host migrations, we correspondingly divide host CPU overload into two situations: processor-overloaded and cores-overloaded. A host is processor-overloaded when all cores on its processor are overloaded, and cores-overloaded when some, but not all, of its cores are overloaded.

Let the overloaded threshold be , where both and are in the interval . For any on , when the following inequality holds in , it is in the state of processor-overloaded:

When Inequalities (42) and (43) hold for some, but not all, cores of the host, the host is in the cores-overloaded state.

A host is in the memory-overloaded state when the following inequality holds:

When a host is memory-overloaded or processor-overloaded, it is in the host-overloaded state, and inter-host VM migration is required. A host that is only cores-overloaded is called semi-overloaded, and inter-core migration can be used preferentially.
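The DIFT rules combine two checks per resource (current and predicted usage) with a count of overloaded cores. A minimal sketch, assuming utilizations normalized to [0, 1], a strict "greater than" comparison against the upper thresholds, and a resource counted as overloaded if either the current or the predicted value exceeds its threshold; function names and return labels are hypothetical:

```python
from typing import List


def exceeds(now: float, predicted: float, threshold: float) -> bool:
    # Double insurance: overloaded now OR predicted (e.g. by the SRU model) to be overloaded next.
    return now > threshold or predicted > threshold


def classify_overload(cpu_now: List[float], cpu_next: List[float],
                      mem_now: float, mem_next: float,
                      cpu_upper: float, mem_upper: float) -> str:
    """Return 'host-overloaded', 'semi-overloaded' or 'not-overloaded'."""
    if not cpu_now or len(cpu_now) != len(cpu_next):
        raise ValueError("need one current and one predicted utilization per core")
    n_over = sum(exceeds(a, b, cpu_upper) for a, b in zip(cpu_now, cpu_next))
    processor_overloaded = n_over == len(cpu_now)  # every core overloaded
    cores_overloaded = 0 < n_over < len(cpu_now)   # some, but not all, cores
    memory_overloaded = exceeds(mem_now, mem_next, mem_upper)
    if processor_overloaded or memory_overloaded:
        return "host-overloaded"  # inter-host migration required
    if cores_overloaded:
        return "semi-overloaded"  # try inter-core migration first
    return "not-overloaded"
```

For example, with thresholds of 0.9, a two-core host at (0.5, 0.5) whose second core is predicted to reach 0.95 is classified as semi-overloaded before the overload actually happens, which is the point of the second insurance.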

For an underloaded host, all of its VMs are migrated to other suitable hosts through inter-host migration, so inter-core migration need not be considered. Let the underloaded threshold be , where both and are in the interval . For on , when the following inequalities hold in , it is in the host-underloaded state:

4.3. VM Selection

VM selection applies to overloaded hosts. We use DIFT to avoid host overload and SLAV as far as possible, rather than reacting passively after SLAV occurs; therefore, we can assume that only slight SLAV and host overload occur in the CDC within a time segment. In VM selection, our priority is to select, on each host, the VMs that may cause the host to become overloaded during the time segment and to add them to the list of VMs to be migrated. If the host is still overloaded after these VMs have been migrated, it is handled specifically. Below, we discuss the VM selection strategy for each overloaded state case by case.

- Case 1: Semi-overloaded host

In this case, we need to reduce the load on each overloaded core. Given the j-th overloaded core on host in , we denote the set of n VMs running on it as , its total resources are , and the current available resource is . For a VM , the amount of CPU resources it uses is denoted as . Then, each selection chooses the VM that has the minimum value of into the inter-core migration list. We select a VM at a time until .
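Case 1 (and, with memory in place of CPU, Case 2) is a greedy loop: pick the VM with the smallest value of some score, remove it, and stop once the resource is back under its threshold. The exact score is not recoverable from this text, so the sketch below takes it as a parameter and supplies a purely hypothetical stand-in (the VM whose usage is closest to the current excess); the stopping rule "load at or below threshold times capacity" is likewise an assumption:

```python
from typing import Callable, Dict, List


def select_vms(vm_usage: Dict[str, float], capacity: float, threshold: float,
               score: Callable[[float, float], float]) -> List[str]:
    """Greedily remove the VM with the minimum score until load <= threshold * capacity."""
    remaining = dict(vm_usage)
    selected: List[str] = []
    load = sum(remaining.values())
    limit = threshold * capacity
    while load > limit and remaining:
        excess = load - limit
        vm = min(remaining, key=lambda v: score(remaining[v], excess))
        selected.append(vm)
        load -= remaining.pop(vm)
    return selected


def closest_to_excess(usage: float, excess: float) -> float:
    # Hypothetical stand-in for the paper's (elided) selection score.
    return abs(usage - excess)


picked = select_vms({"a": 100.0, "b": 300.0, "c": 250.0}, capacity=700.0,
                    threshold=0.8, score=closest_to_excess)
```

With a limit of 560 units and a load of 650, the excess is 90, so VM "a" (100 units) is the closest match and removing it alone brings the core back under its threshold.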

- Case 2: Host with only memory overloaded

Given a memory-overloaded host in , we denote the set of n VMs running on it as , the total amount of memory resource it has is , and the currently available amount of resources is . For a VM , the amount of memory resources used by it is recorded as . Then, each selection chooses the VM that has the minimum value of into the inter-host migration list. We select a VM at a time until .

- Case 3: Host with only processor overloaded

We select VMs from each overloaded core using the same method as in Case 1. All selected VMs are added to the inter-host migration list.

- Case 4: Host with memory overloaded and processor or cores overloaded

We first use the method in Case 1 to select VMs from each overloaded core. Once the load of every core has dropped below the overload threshold, VM selection is complete if the memory load has also dropped below its threshold; otherwise, the method in Case 2 is used to select further VMs to reduce the memory load. All selected VMs are put into the inter-host migration list.

For a given overloaded host, the above VM selection strategies are executed at the beginning of the time segment according to its overload condition. If the host is still overloaded within the time segment, the strategies are executed again to reduce the current load.

4.4. VM Placement

To make full use of the resources of the hosts, we should fully consider the space and time competition of different VMs for different resources when placing VMs on hosts.

In the VM selection phase, we obtain the inter-core migration list and the inter-host migration list. For a semi-overloaded host, note that inter-core migration alone may not be able to reduce the load of its overloaded cores. Therefore, in the VM placement phase, we first process the inter-core migration list and then add the VMs that could not be placed to the inter-host migration list so that they are processed together.

There are two goals of VM placement: (1) to ensure that the resources of the target host are fully utilized during the period; and (2) to ensure that, after the VM is placed on the destination host , the host will not be overloaded during the period.

We address the inter-core migration list first. For a semi-overloaded host , we sort all non-overloaded cores in ascending order of load, where is the number of overloaded cores. We denote this ordered sequence as . We arrange the VMs in the inter-core migration list of in descending order of their current CPU demand to form the list . We take the first VM from and traverse in order to find the first core with enough CPU resources for it. If no suitable core can be found in for this VM, we add it to the inter-host migration list. The VM is then removed from . We repeat these operations until is empty. This placement algorithm is executed for the VMs in of each semi-overloaded host. Algorithm 1 shows the pseudocode of the inter-core VM placement algorithm.

Algorithm 1. Inter-Core VM Placement Algorithm

Input: a semi-overloaded host and its inter-core migration list. Output: allocation of the VMs to specific cores.
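The inter-core placement step described above is essentially first-fit decreasing over the host's non-overloaded cores. A minimal Python sketch follows. The function name, the `(vm_id, cpu_demand)` input format, and the reading of "enough CPU resources" as "the core's load stays within its capacity" are assumptions. The bookkeeping that lowers the source core's load is omitted.

```python
from typing import Dict, List, Sequence, Set, Tuple


def place_inter_core(
    core_load: Dict[int, float],
    core_capacity: Dict[int, float],
    overloaded: Set[int],
    migration_list: Sequence[Tuple[str, float]],
) -> Tuple[Dict[str, int], List[str]]:
    """First-fit-decreasing placement onto the non-overloaded cores of one
    semi-overloaded host.

    migration_list holds (vm_id, cpu_demand) pairs. Returns the VM-to-core
    assignment and the VMs deferred to the inter-host migration list.
    """
    # Non-overloaded cores, least-loaded first (sorted once, as in the text).
    cores = sorted(
        (c for c in core_load if c not in overloaded), key=lambda c: core_load[c]
    )
    load = dict(core_load)  # working copy updated as VMs are placed
    placement: Dict[str, int] = {}
    deferred: List[str] = []
    # VMs with the largest CPU demand are placed first.
    for vm_id, demand in sorted(migration_list, key=lambda p: p[1], reverse=True):
        for c in cores:
            # Assumption: "enough CPU resources" = core stays within capacity.
            if load[c] + demand <= core_capacity[c]:
                load[c] += demand
                placement[vm_id] = c
                break
        else:
            deferred.append(vm_id)  # no core fits: move to the inter-host list
    return placement, deferred
```

Placing the largest VMs first gives them the widest choice of cores. Smaller VMs can then fill the remaining gaps.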

Next, the inter-host migration list is processed. First, all the hosts are divided into two categories according to the intensity of CPU and memory usage: CPU-intensive hosts and memory-intensive hosts. The following calculation method is used to classify a given host . We take the workload trace of in 12 consecutive time segments (one hour), where the normalized CPU workload time series is , and the time series of normalized memory workload is . Since has a multi-core CPU, its normalized CPU workload at period is:

The reason why the denominator is is that CPUs with different performances can be compared with each other through normalization. The smaller the value of is, the lower the CPU load of in the time period .

At a certain time period , its normalized memory workload is:

The smaller the value of is, the lower the memory load of in the time period .

Based on Equation (50), we calculate the CPU score of :

where is the maximum value in the normalized CPU workload sequence, and is the minimum value in the normalized CPU workload sequence. We remove and from to minimize the impact of possible severe load fluctuations on the score.

Based on Equation (51), we calculate the memory score of :

where is the maximum value in the normalized memory workload sequence, and is the minimum value in the normalized memory workload sequence.
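The normalization and score formulas referenced above (Equations (50) and (51) and the two score definitions) did not survive extraction. The helpers below are therefore only a hedged reading of the surrounding text. They compute the normalized CPU workload as total core usage over total core capacity and the normalized memory workload as used over installed memory. The score is taken as the mean of the 12 samples after one maximum and one minimum are discarded. All three forms, and the function names, are assumptions.

```python
from typing import List, Sequence


def normalized_cpu(core_usage: Sequence[float], core_capacity: Sequence[float]) -> float:
    """ASSUMED form of Eq. (50): total core usage over total core capacity."""
    return sum(core_usage) / sum(core_capacity)


def normalized_mem(mem_used: float, mem_total: float) -> float:
    """ASSUMED form of Eq. (51): used memory over installed memory."""
    return mem_used / mem_total


def trimmed_score(series: Sequence[float]) -> float:
    """Drop one maximum and one minimum sample, then average the rest.

    ASSUMPTION: the score is the mean of the remaining samples; the text
    only states that the max and min are removed to damp load spikes.
    """
    if len(series) < 3:
        raise ValueError("need at least three samples")
    rest: List[float] = sorted(series)[1:-1]
    return sum(rest) / len(rest)
```

With 12 five-minute samples, `trimmed_score` averages the 10 middle values, so a single spike in either direction does not move the score.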

If , is a CPU-intensive host; otherwise, is a memory-intensive host. CPU-intensive hosts have more available memory, and memory-intensive hosts have more available CPU resources. Therefore, in the time period , the CPU-intensive hosts are sorted in ascending order of their values of , forming a list , and the memory-intensive hosts are sorted in ascending order of their values to form a list . The rationale for this ordering is that CPU-intensive hosts have enough remaining memory to accommodate VMs that require more memory, while memory-intensive hosts have enough remaining CPU resources to accommodate VMs that require more CPU.
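The classification and ordering rule can be sketched as follows. The comparison condition and the sort keys are elided in the extracted text, so the sketch makes three assumptions. A host is CPU-intensive when its CPU score exceeds its memory score. CPU-intensive hosts are ordered by ascending memory score, and memory-intensive hosts by ascending CPU score. These choices follow the rationale given above but are not confirmed by the source, and the function name is hypothetical.

```python
from typing import Dict, List, Tuple


def classify_and_sort_hosts(
    scores: Dict[str, Tuple[float, float]],
) -> Tuple[List[str], List[str]]:
    """Split hosts into CPU-intensive and memory-intensive lists.

    scores maps host_id -> (cpu_score, mem_score).
    ASSUMPTIONS: CPU-intensive means cpu_score > mem_score (ties count as
    memory-intensive); each list is ordered so that hosts with the most
    spare capacity in the complementary resource come first.
    """
    cpu_hosts = [h for h, (c, m) in scores.items() if c > m]
    mem_hosts = [h for h, (c, m) in scores.items() if c <= m]
    cpu_hosts.sort(key=lambda h: scores[h][1])  # lowest memory load first
    mem_hosts.sort(key=lambda h: scores[h][0])  # lowest CPU load first
    return cpu_hosts, mem_hosts
```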

In the following, we sort the VMs in the inter-host migration list by their resource usage requirements. The VMs to be migrated are also divided into CPU-intensive and memory-intensive types. CPU-intensive VMs should be placed on memory-intensive hosts whenever possible, and memory-intensive VMs on CPU-intensive hosts. We use the following method to classify a given VM . We take the workload trace of in 12 consecutive time segments (one hour), where the normalized CPU workload time series is , and the time series of normalized memory workload is . At a certain time period , the normalized CPU workload of is:

The smaller the value of , the lower the CPU demand of in the time period .

At a certain time period , the normalized memory workload of is:

Based on Equation (54), we calculate the CPU score of :

where is the maximum value in the normalized CPU workload sequence of , and is the minimum value in the normalized CPU workload sequence of .

Based on Equation (55), we calculate the memory score of :

where is the maximum value in the normalized memory workload sequence of , and is the minimum value in the normalized memory workload sequence of .

If , is a CPU-intensive type; otherwise, is a memory-intensive type.

In the time period , CPU-intensive VMs are sorted in descending order of their values, forming a list . We take the VMs in in turn, traverse , and find the first host that can meet the resource requirements of the current VM and will not be overloaded at either or the future .

Since the hosts have multi-core CPUs, we use the following rule to decide which core of the host will be used by the VM . We sort the cores of ’s processor in descending order of their available resources , forming the sequence . The VM is then preferentially placed on the frontmost core in that also meets the resource requirements of in the time period.
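The per-host core choice can be sketched as follows, with hedges. Cores are scanned in descending order of their currently available CPU, and the first core whose predicted availability covers the VM's predicted demand in every future period is chosen. Representing predictions as per-period lists, and the function name `choose_core`, are assumptions for illustration.

```python
from typing import Dict, Optional, Sequence


def choose_core(
    available_now: Dict[str, float],
    available_future: Dict[str, Sequence[float]],
    demand_future: Sequence[float],
) -> Optional[str]:
    """Pick a core for a VM on an already chosen destination host.

    Cores are tried in descending order of currently available CPU; the
    first whose predicted free CPU covers the VM's predicted demand in
    every future period wins. Returns None if no core qualifies.
    """
    for core in sorted(available_now, key=lambda c: available_now[c], reverse=True):
        # Assumption: both prediction lists are aligned period by period.
        if all(free >= need for free, need in zip(available_future[core], demand_future)):
            return core
    return None
```

A core with the most free CPU now can still be rejected if its predicted availability drops later. This is why the scan does not simply return the first core in the sorted order.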

In the time period , memory-intensive VMs are sorted in descending order of their values of , forming a list . We take the VMs in in turn, traverse , and find the first host that can meet the resource requirements of the current VM and will not be overloaded at either or the future . On that host, the same multi-core placement method is used to process .

Once the destination host of a given VM to be migrated is determined, the VM is removed from the inter-host migration list. Algorithm 2 shows the pseudocode of the inter-host VM placement algorithm. If VMs still remain to be migrated after and have been traversed, the first-fit method is used to find available hosts for them in the host list. If VMs still remain after that, hosts in the energy-saving state are powered on one by one until all the VMs to be migrated have been assigned destination hosts.

After the above process, we perform underloaded host detection on the hosts in the CDC. If any hosts are still underloaded at this point, the VMs on them are collected into a VM migration list, and Algorithm 2 is executed for it.

Algorithm 2. Inter-Host VM Placement Algorithm

Input: host list, inter-host migration list. Output: allocation of VMs.
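The inter-host procedure, including its two fallbacks, can be sketched as follows. This is a simplified illustration, not Algorithm 2 itself, and it makes four simplifications. The prediction-based check at the current and future periods is collapsed into one spare-capacity value per resource. VM demands stand in for the CPU and memory scores used for ordering. Per-core placement is omitted. All class and function names are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional, Sequence


@dataclass
class Host:
    name: str
    cpu_free: float  # spare CPU below the overload threshold (assumed)
    mem_free: float  # spare memory below the overload threshold (assumed)
    active: bool = True  # False means the host is in the energy-saving state


@dataclass
class MigVM:
    name: str
    cpu: float
    mem: float
    cpu_intensive: bool


def fits(host: Host, vm: MigVM) -> bool:
    return host.active and host.cpu_free >= vm.cpu and host.mem_free >= vm.mem


def first_fit(hosts: Sequence[Host], vm: MigVM) -> Optional[Host]:
    return next((h for h in hosts if fits(h, vm)), None)


def place_inter_host(
    vms: Sequence[MigVM],
    cpu_hosts: Sequence[Host],  # CPU-intensive hosts, pre-sorted
    mem_hosts: Sequence[Host],  # memory-intensive hosts, pre-sorted
    all_hosts: Sequence[Host],  # every host, including sleeping ones
) -> Dict[str, str]:
    placement: Dict[str, str] = {}

    def assign(vm: MigVM, host: Host) -> None:
        host.cpu_free -= vm.cpu
        host.mem_free -= vm.mem
        placement[vm.name] = host.name

    # CPU-intensive VMs (largest CPU demand first), then memory-intensive
    # VMs (largest memory demand first).
    cpu_vms = sorted((v for v in vms if v.cpu_intensive), key=lambda v: v.cpu, reverse=True)
    mem_vms = sorted((v for v in vms if not v.cpu_intensive), key=lambda v: v.mem, reverse=True)
    pending: List[MigVM] = []
    for vm in cpu_vms + mem_vms:
        # Complementary matching: CPU-heavy VMs go to memory-intensive hosts
        # (spare CPU), memory-heavy VMs to CPU-intensive hosts (spare memory).
        host = first_fit(mem_hosts if vm.cpu_intensive else cpu_hosts, vm)
        if host is None:
            pending.append(vm)
        else:
            assign(vm, host)

    still: List[MigVM] = []
    for vm in pending:  # fallback 1: first fit over all active hosts
        host = first_fit(all_hosts, vm)
        if host is None:
            still.append(vm)
        else:
            assign(vm, host)

    for vm in still:  # fallback 2: wake energy-saving hosts one by one
        host = first_fit(all_hosts, vm)
        while host is None:
            sleeper = next((h for h in all_hosts if not h.active), None)
            if sleeper is None:
                raise RuntimeError(f"no capacity left for {vm.name}")
            sleeper.active = True
            host = sleeper if fits(sleeper, vm) else None
        assign(vm, host)
    return placement
```

Waking hosts only in the last pass keeps the number of active hosts, and therefore the base power, as low as the remaining VMs allow.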

5. Performance Evaluation

In this section, we evaluate the performance of our proposed solution, named MMCC, with a real VM workload trace-driven simulation.

5.1. Experiment Setup

According to the energy consumption analysis and statistics of hosts by Basmadjian et al. [34], Minartz et al. [38], and Jin et al. [39], we simulated three types of hosts, , , and , respectively. Their resource parameters are shown in Table 1, their power parameters in Table 2 and Table 3, and the capacitances of the different components of the processor in Table 4. The simulated CDC contains 100 hosts of each type.

Table 1.

Resource parameters of the hosts.

Table 2.

Base power of the hosts.

Table 3.

Memory power parameters.

Table 4.

Capacitance of different components of the processor.

The VM workload trace dataset is from the Alibaba CDC [33]. The VM traces in the dataset are sampled every five minutes. We selected the traces of 1000 VMs over one day (a total of 288 five-minute time segments) to simulate consumers’ demands for cloud services. The simulation was implemented on CloudMatrix Lite [40]. The DAE-based filter and the SRU algorithm (the source code is available at https://github.com/asappresearch/sru accessed on 19 October 2022) were implemented with PyTorch [41].

We set the electricity price as kWh. The SLAV penalty is a static value [42]. Each host reserves an extra 10% of its resources for migrations; thereby, we set . We also set .

We combined three overload detection algorithms (MAD [20], IQR [20], and LR [20]), three VM selection algorithms (MMT [20,25,30], MC [20,43], and RS [20]), and one VM placement algorithm (PABFD [20]) into nine baseline methods, which we compare with our proposed solution MMCC. All the abovementioned overload detection and VM selection algorithms were originally designed for single-core hosts; hence, we modified them to work on multi-core hosts by treating the CPU capacity as the sum of the capacities of its cores. Moreover, the PABFD placement algorithm and its corresponding energy consumption model treat each host's CPU as a single core. Therefore, we modified it to suit our multi-core (by randomly selecting a core in the CPU for the VM) and multi-resource scenario. We call the modified algorithm PABFDM; its pseudocode is given in Algorithm 3.
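For reference, the MAD and IQR baselines from [20] set an adaptive upper CPU utilization threshold of the form 1 - s * MAD or 1 - s * IQR over the host's utilization history. The multi-core adaptation described above treats the CPU as one resource whose capacity is the sum of its cores' capacities. The sketch below illustrates both ideas. The default safety parameters (2.5 for MAD, 1.5 for IQR) are illustrative values, not settings reported in this paper, and the LR detector is not shown.

```python
import statistics
from typing import Callable, Sequence


def cpu_utilization(core_usage: Sequence[float], core_capacity: Sequence[float]) -> float:
    """Multi-core adaptation: one CPU whose capacity is the sum of its cores."""
    return sum(core_usage) / sum(core_capacity)


def mad_threshold(history: Sequence[float], s: float = 2.5) -> float:
    """Upper threshold 1 - s * MAD, where MAD is the median absolute deviation."""
    med = statistics.median(history)
    mad = statistics.median([abs(x - med) for x in history])
    return 1.0 - s * mad


def iqr_threshold(history: Sequence[float], s: float = 1.5) -> float:
    """Upper threshold 1 - s * IQR (third quartile minus first quartile)."""
    q1, _, q3 = statistics.quantiles(history, n=4)
    return 1.0 - s * (q3 - q1)


def is_overloaded(
    history: Sequence[float],
    current: float,
    threshold: Callable[[Sequence[float]], float],
) -> bool:
    """Flag the host when its current utilization exceeds the adaptive threshold."""
    return current > threshold(history)
```

The more volatile a host's recent utilization, the lower its threshold, so unstable hosts are flagged earlier.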

Algorithm 3. PABFDM Algorithm

Input: hostList, vmList. Output: allocation of the VMs.
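PABFD places VMs in decreasing order of CPU demand, each on the feasible host whose power consumption would increase the least. The sketch below adds the two modifications described for PABFDM: a memory check alongside the CPU check, and a random choice of core. It makes three assumptions. A hypothetical linear power model (idle power plus a term proportional to CPU utilization) replaces the paper's energy model. The random core is drawn from those that can hold the VM, because the text does not say whether infeasible cores are excluded. Unplaceable VMs are left out of the result.

```python
import random
from dataclasses import dataclass
from typing import Dict, List, Sequence, Tuple


@dataclass
class PHost:
    name: str
    core_free: List[float]  # free CPU capacity of each core
    mem_free: float
    cpu_capacity: float  # sum of the cores' capacities
    p_idle: float  # HYPOTHETICAL linear power model: idle power (W)
    p_max: float  # HYPOTHETICAL linear power model: full-load power (W)

    def power(self, extra_cpu: float = 0.0) -> float:
        """Power drawn if `extra_cpu` more CPU were in use (linear model)."""
        used = self.cpu_capacity - sum(self.core_free) + extra_cpu
        return self.p_idle + (self.p_max - self.p_idle) * used / self.cpu_capacity


def pabfdm(
    vms: Sequence[Tuple[str, float, float]],  # (vm_id, cpu_demand, mem_demand)
    hosts: Sequence[PHost],
    rng: random.Random,
) -> Dict[str, Tuple[str, int]]:
    """Return vm_id -> (host name, core index); unplaceable VMs are omitted."""
    allocation: Dict[str, Tuple[str, int]] = {}
    # Power-aware best fit decreasing: largest CPU demand first.
    for vm_id, cpu, mem in sorted(vms, key=lambda v: v[1], reverse=True):
        best, best_inc, best_cores = None, float("inf"), []
        for host in hosts:
            cores = [i for i, free in enumerate(host.core_free) if free >= cpu]
            if not cores or host.mem_free < mem:
                continue  # multi-resource check: CPU on some core and memory
            inc = host.power(cpu) - host.power()
            if inc < best_inc:
                best, best_inc, best_cores = host, inc, cores
        if best is None:
            continue
        core = rng.choice(best_cores)  # multi-core change: random feasible core
        best.core_free[core] -= cpu
        best.mem_free -= mem
        allocation[vm_id] = (best.name, core)
    return allocation
```

Under a linear model the power increase does not depend on the host's current load. The choice then reduces to the host with the smallest power slope per unit of CPU, whereas the original PABFD uses measured power curves.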

5.2. Evaluation

The metrics used in the evaluation are the host energy consumption cost, the SLAV penalty cost, and the number of VM migrations. Since the CPU energy consumed by VM migrations is already included in the hosts' energy consumption, we use the number of VM migrations as an indirect measure of the migration cost.

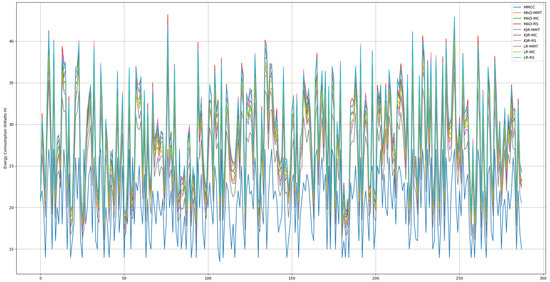

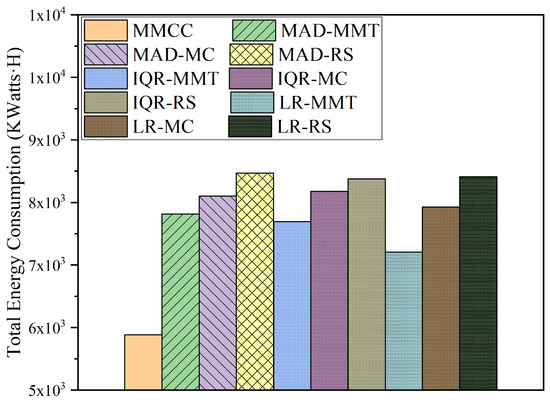

Figure 8 shows the host energy consumption for each time slice of the day when all the methods are used to perform server consolidation. Figure 9 compares the total host energy consumption over the day when all the methods are used to perform server consolidation. From Figure 8, it can be seen that the host energy consumption generated by MMCC is less than that of the baseline methods in most of the time periods. From Figure 9, it can be seen that the total host energy consumption generated by MMCC in a day is about 10% less than that of LR-MMT (the best in the baseline methods) and is about 43.9% less than that of MAD-RS (the worst in the baseline methods). In a cluster composed of multi-core processor hosts, MMCC can effectively schedule tasks among multiple cores to optimize energy consumption.

Figure 8.

Comparing the energy consumption of hosts by all methods in every time segment.

Figure 9.

Comparing the total energy consumption of hosts by all methods.

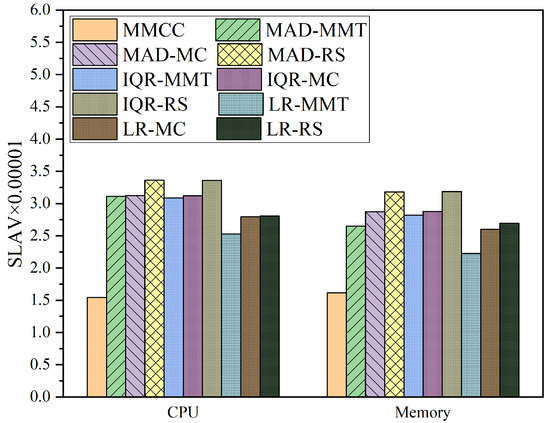

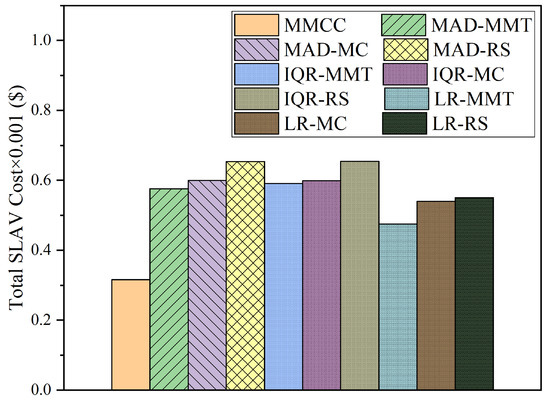

The comparison of the CPU and memory SLAV produced by all the methods in a day is shown in Figure 10. The CPU SLAV generated by MMCC is much smaller than that of the baseline methods; for example, MMCC produces about 54% less CPU SLAV than MAD-RS and about 39% less than LR-MMT. Likewise, the memory SLAV produced by MMCC is much smaller than that of the baseline methods. Figure 11 compares the total SLAV cost produced by all methods in one day, and MMCC again outperforms the baseline methods. For instance, the total SLAV cost generated by MMCC is about 51.7% less than that of IQR-RS (the worst of the baseline methods) and about 33.5% less than that of LR-MMT (the best of the baseline methods). These results indicate that the traditional server consolidation methods represented by the baselines do not perform well in a cluster of multi-core processor hosts, whereas MMCC handles this scenario better.

Figure 10.

Comparing the SLAV by all methods regarding CPU and memory.

Figure 11.

Comparing the total SLAV penalty cost by all methods.

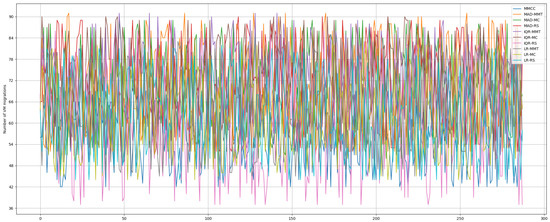

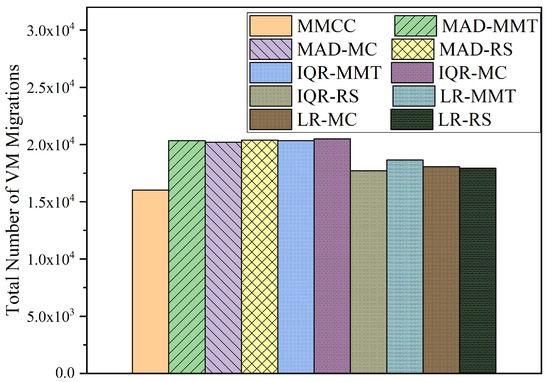

Figure 12 shows the number of VM migrations triggered in each time slice of the day when each method is used to perform server consolidation, and Figure 13 compares the total number of VM migrations triggered in a day. As Figure 13 shows, MMCC does not have a large advantage over the baseline methods in the number of migrations; for example, MMCC triggers about 9.5% fewer migrations than IQR-RS. However, note that the VM migrations triggered by MMCC are mainly proactive and deal with hosts that may become overloaded in the future, which is why the SLAV produced by MMCC is much smaller than that of the baseline methods. In addition, some of the migrations triggered by MMCC are inter-core migrations, which happen only inside a host and whose cost is negligible. The traditional baseline methods do not consider inter-core migration on multi-core processors.

Figure 12.

Comparing the number of VM migrations triggered by all methods in every time segment.

Figure 13.

Comparing the total number of VM migrations triggered by all methods.

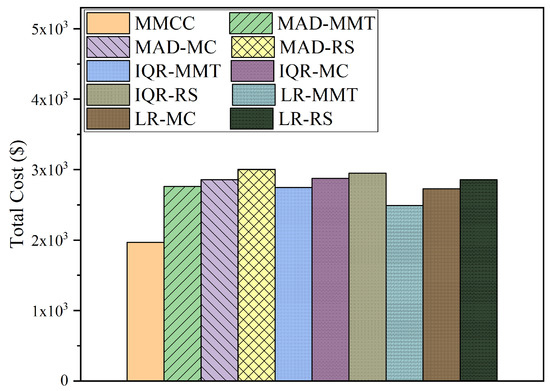

Figure 14 shows and compares the total cost generated by all the methods in one day. MMCC outperforms the baseline methods. For instance, the total cost generated by MMCC is about 20.9% less than that of LR-MMT (the best of the baseline methods) and about 34.4% less than that of MAD-RS (the worst of the baseline methods). MMCC can not only optimize the energy consumption in the environment of multi-core processor hosts, but also reduce the SLAV in server consolidation through the host load detection and VM selection strategies based on the prediction method.

Figure 14.

Comparing the total cost by all methods.

6. Conclusions

In this paper, we focus on reducing the total operating cost of server consolidation in a CDC composed of multi-core processor hosts while ensuring consumers’ QoS. We established a cost model based on multi-core and multi-resource usage in the CDC, taking into account the host energy cost, VM migration cost, and SLAV penalty cost. Based on this model, we defined the MMCC problem in server consolidation and designed a heuristic solution to it. We employ a DAE-based filter to preprocess the VM workload dataset and reduce noise in the workload traces. Subsequently, an SRU-based method is used to predict the usage of computing resources, allowing us to trigger inter-core or inter-host VM migrations before a host enters the overloaded state. We designed a multi-core-aware heuristic algorithm to solve the VM placement problem. Finally, simulations driven by real VM workload traces verify the effectiveness of our proposed method. Compared with the existing server consolidation methods, our proposed MMCC reduces host energy consumption by 10% to 43.9%, SLAV cost by 33.5% to 51.7%, and total cost by 20.9% to 34.4% in a cluster of multi-core hosts.

In future work, we will first consider a more comprehensive cost model, for example, one that takes into account the operational life span of the hosts, the network topology of the CDC, and the cooling system.

Author Contributions

Conceptualization, H.L. and Y.S.; methodology, H.L.; software, H.L.; validation, H.L., L.W. and Y.S.; formal analysis, H.L.; investigation, H.L. and Y.L.; resources, H.L. and Y.L.; data curation, H.L.; writing—original draft preparation, H.L., L.W. and Y.L.; writing—review and editing, H.L., L.W., Y.L. and Y.S.; visualization, H.L.; supervision, Y.S.; project administration, Y.S.; funding acquisition, H.L. and Y.S. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the National Natural Science Foundation of China (No.62002067), the Guangzhou Youth Talent Program (QT20220101174), the Department of Education of Guangdong Province (No.2020KTSCX039), Foundation of The Chinese Education Commission (22YJAZH091), and the SRP of Guangdong Education Dept (2019KZDZX1031).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Almost 82% Hong Kong Businesses Plan to Keep Remote Working Post-COVID-19. Available online: https://hongkongbusiness.hk/information-technology/more-news/almost-82-hong-kong-businesses-plan-keep-remote-working-post-covid- (accessed on 27 September 2022).

- Hong Kong Data Center Market—Growth, Trends, COVID-19 Impact, and Forecasts (2021–2026). Available online: https://www.reportlinker.com/p06187432/Hong-Kong-Data-Center-Market-Growth-Trends-COVID-19-Impact-and-Forecasts.html (accessed on 27 September 2022).

- Dhiman, G.; Mihic, K.; Rosing, T. A system for online power prediction in virtualized environments using gaussian mixture models. In Proceedings of the 47th Design Automation Conference, Anaheim, CA, USA, 13–18 June 2010; pp. 807–812.

- Ham, S.; Kim, M.; Choi, B.; Jeong, J. Simplified server model to simulate data center cooling energy consumption. Energy Build. 2015, 86, 328–339.

- Kavanagh, R.; Djemame, K. Rapid and accurate energy models through calibration with IPMI and RAPL. Concurr. Comput. Pract. Exp. 2019, 31, e5124.

- Gupta, V.; Nathuji, R.; Schwan, K. An analysis of power reduction in datacenters using heterogeneous chip multiprocessors. ACM Sigmetrics Perform. Eval. Rev. 2011, 39, 87–91.

- Lefurgy, C.; Wang, X.; Ware, M. Server-level power control. In Proceedings of the Fourth International Conference on Autonomic Computing (ICAC’07), Jacksonville, FL, USA, 11–15 June 2007; p. 4.

- Beloglazov, A.; Abawajy, J.; Buyya, R. Energy-aware resource allocation heuristics for efficient management of data centers for cloud computing. Future Gener. Comput. Syst. 2012, 28, 755–768.

- Rezaei-Mayahi, M.; Rezazad, M.; Sarbazi-Azad, H. Temperature-aware power consumption modeling in Hyperscale cloud data centers. Future Gener. Comput. Syst. 2019, 94, 130–139.

- Chen, Y.; Das, A.; Qin, W.; Sivasubramaniam, A.; Wang, Q.; Gautam, N. Managing server energy and operational costs in hosting centers. In Proceedings of the 2005 ACM SIGMETRICS International Conference on Measurement and Modeling of Computer Systems, Banff, AB, Canada, 6–10 June 2005; pp. 303–314.

- Wu, W.; Lin, W.; Peng, Z. An intelligent power consumption model for virtual machines under CPU-intensive workload in cloud environment. Soft Comput. 2017, 21, 5755–5764.

- Lien, C.; Bai, Y.; Lin, M. Estimation by software for the power consumption of streaming-media servers. IEEE Trans. Instrum. Meas. 2007, 56, 1859–1870.

- Raja, K. Multi-core Aware Virtual Machine Placement for Cloud Data Centers with Constraint Programming. In Intelligent Computing; Springer: Cham, Switzerland, 2022; pp. 439–457.

- Economou, D.; Rivoire, S.; Kozyrakis, C.; Ranganathan, P. Full-system power analysis and modeling for server environments. In Proceedings of the International Symposium on Computer Architecture, Ouro Preto, Brazil, 17–20 October 2006.

- Alan, I.; Arslan, E.; Kosar, T. Energy-aware data transfer tuning. In Proceedings of the 2014 14th IEEE/ACM International Symposium on Cluster, Cloud and Grid Computing, Chicago, IL, USA, 26–29 May 2014; pp. 626–634.

- Li, Y.; Wang, Y.; Yin, B.; Guan, L. An online power metering model for cloud environment. In Proceedings of the 2012 IEEE 11th International Symposium on Network Computing and Applications, Cambridge, MA, USA, 23–25 August 2012; pp. 175–180.

- Lent, R. A model for network server performance and power consumption. Sustain. Comput. Inform. Syst. 2013, 3, 80–93.

- Kansal, A.; Zhao, F.; Liu, J.; Kothari, N.; Bhattacharya, A. Virtual machine power metering and provisioning. In Proceedings of the 1st ACM Symposium on Cloud Computing, Indianapolis, IN, USA, 10–11 June 2010; pp. 39–50.

- Lin, W.; Wang, W.; Wu, W.; Pang, X.; Liu, B.; Zhang, Y. A heuristic task scheduling algorithm based on server power efficiency model in cloud environments. Sustain. Comput. Inform. Syst. 2018, 20, 56–65.

- Beloglazov, A.; Buyya, R. Optimal online deterministic algorithms and adaptive heuristics for energy and performance efficient dynamic consolidation of virtual machines in cloud data centers. Concurr. Comput. Pract. Exp. 2012, 24, 1397–1420.

- Li, H.; Li, W.; Wang, H.; Wang, J. An optimization of virtual machine selection and placement by using memory content similarity for server consolidation in cloud. Future Gener. Comput. Syst. 2018, 84, 98–107.

- Li, H.; Li, W.; Zhang, S.; Wang, H.; Pan, Y.; Wang, J. Page-sharing-based virtual machine packing with multi-resource constraints to reduce network traffic in migration for clouds. Future Gener. Comput. Syst. 2019, 96, 462–471.

- Li, H.; Li, W.; Feng, Q.; Zhang, S.; Wang, H.; Wang, J. Leveraging content similarity among vmi files to allocate virtual machines in cloud. Future Gener. Comput. Syst. 2018, 79, 528–542.

- Li, H.; Wang, S.; Ruan, C. A fast approach of provisioning virtual machines by using image content similarity in cloud. IEEE Access 2019, 7, 45099–45109.

- Yadav, R.; Zhang, W.; Kaiwartya, O.; Singh, P.; Elgendy, I.; Tian, Y. Adaptive energy-aware algorithms for minimizing energy consumption and SLA violation in cloud computing. IEEE Access 2018, 6, 55923–55936.

- Hieu, N.; Di Francesco, M.; Ylä-Jääski, A. Virtual machine consolidation with multiple usage prediction for energy-efficient cloud data centers. IEEE Trans. Serv. Comput. 2017, 13, 186–199.

- Esfandiarpoor, S.; Pahlavan, A.; Goudarzi, M. Structure-aware online virtual machine consolidation for datacenter energy improvement in cloud computing. Comput. Electr. Eng. 2015, 42, 74–89.

- Arianyan, E.; Taheri, H.; Sharifian, S. Novel energy and SLA efficient resource management heuristics for consolidation of virtual machines in cloud data centers. Comput. Electr. Eng. 2015, 47, 222–240.

- Rodero, I.; Viswanathan, H.; Lee, E.; Gamell, M.; Pompili, D.; Parashar, M. Energy-efficient thermal-aware autonomic management of virtualized HPC cloud infrastructure. J. Grid Comput. 2012, 10, 447–473.

- Li, Z.; Yan, C.; Yu, L.; Yu, X. Energy-aware and multi-resource overload probability constraint-based virtual machine dynamic consolidation method. Future Gener. Comput. Syst. 2018, 80, 139–156.

- Sayadnavard, M.; Toroghi Haghighat, A.; Rahmani, A. A reliable energy-aware approach for dynamic virtual machine consolidation in cloud data centers. J. Supercomput. 2019, 75, 2126–2147.

- Yuan, C.; Sun, X. Server consolidation based on culture multiple-ant-colony algorithm in cloud computing. Sensors 2019, 19, 2724.

- Lu, C.; Ye, K.; Xu, G.; Xu, C.; Bai, T. Imbalance in the cloud: An analysis on alibaba cluster trace. In Proceedings of the 2017 IEEE International Conference on Big Data (Big Data), Boston, MA, USA, 11–14 December 2017; pp. 2884–2892.

- Basmadjian, R.; De Meer, H. Evaluating and modeling power consumption of multi-core processors. In Proceedings of the 2012 Third International Conference On Future Systems: Where Energy, Computing and Communication Meet (e-Energy), Madrid, Spain, 9–11 May 2012; pp. 1–10.

- Brodersen, R. Minimizing Power Consumption in CMOS Circuits; Department of EECS University of California at Berkeley: Berkeley, CA, USA; Available online: https://sablok.tripod.com/verilog/paper.fm.pdf (accessed on 27 September 2022).

- Vincent, P.; Larochelle, H.; Lajoie, I.; Bengio, Y.; Manzagol, P.; Bottou, L. Stacked denoising autoencoders: Learning useful representations in a deep network with a local denoising criterion. J. Mach. Learn. Res. 2010, 11, 3371–3408.

- Lei, T.; Zhang, Y.; Wang, S.; Dai, H.; Artzi, Y. Simple recurrent units for highly parallelizable recurrence. arXiv 2017, arXiv:1709.02755.

- Minartz, T.; Kunkel, J.; Ludwig, T. Simulation of power consumption of energy efficient cluster hardware. Comput. Sci.-Res. Dev. 2010, 25, 165–175.

- Jin, Y.; Wen, Y.; Chen, Q.; Zhu, Z. An empirical investigation of the impact of server virtualization on energy efficiency for green data center. Comput. J. 2013, 56, 977–990.

- Li, H.; Xiao, Y. CloudMatrix Lite: A Real Trace Driven Lightweight Cloud Data Center Simulation Framework. In Proceedings of the 2020 2nd International Conference on Machine Learning, Big Data and Business Intelligence (MLBDBI), Taiyuan, China, 23–25 October 2020; pp. 424–429.

- Paszke, A.; Gross, S.; Massa, F.; Lerer, A.; Bradbury, J.; Chanan, G.; Killeen, T.; Lin, Z.; Gimelshein, N.; Antiga, L.; et al. Pytorch: An imperative style, high-performance deep learning library. In Proceedings of the Advances in Neural Information Processing Systems, Vancouver, BC, Canada, 8–14 December 2019; pp. 8024–8035.

- Aljoumah, E.; Al-Mousawi, F.; Ahmad, I.; Al-Shammri, M.; Al-Jady, Z. SLA in cloud computing architectures: A comprehensive study. Int. J. Grid Distrib. Comput. 2015, 8, 7–32.

- Cao, Z.; Dong, S. Dynamic VM consolidation for energy-aware and SLA violation reduction in cloud computing. In Proceedings of the 2012 13th International Conference on Parallel and Distributed Computing, Applications And Technologies, Beijing, China, 14–16 December 2012; pp. 363–369.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).