Semantically Annotated Cooking Procedures for an Intelligent Kitchen Environment

Abstract

1. Introduction

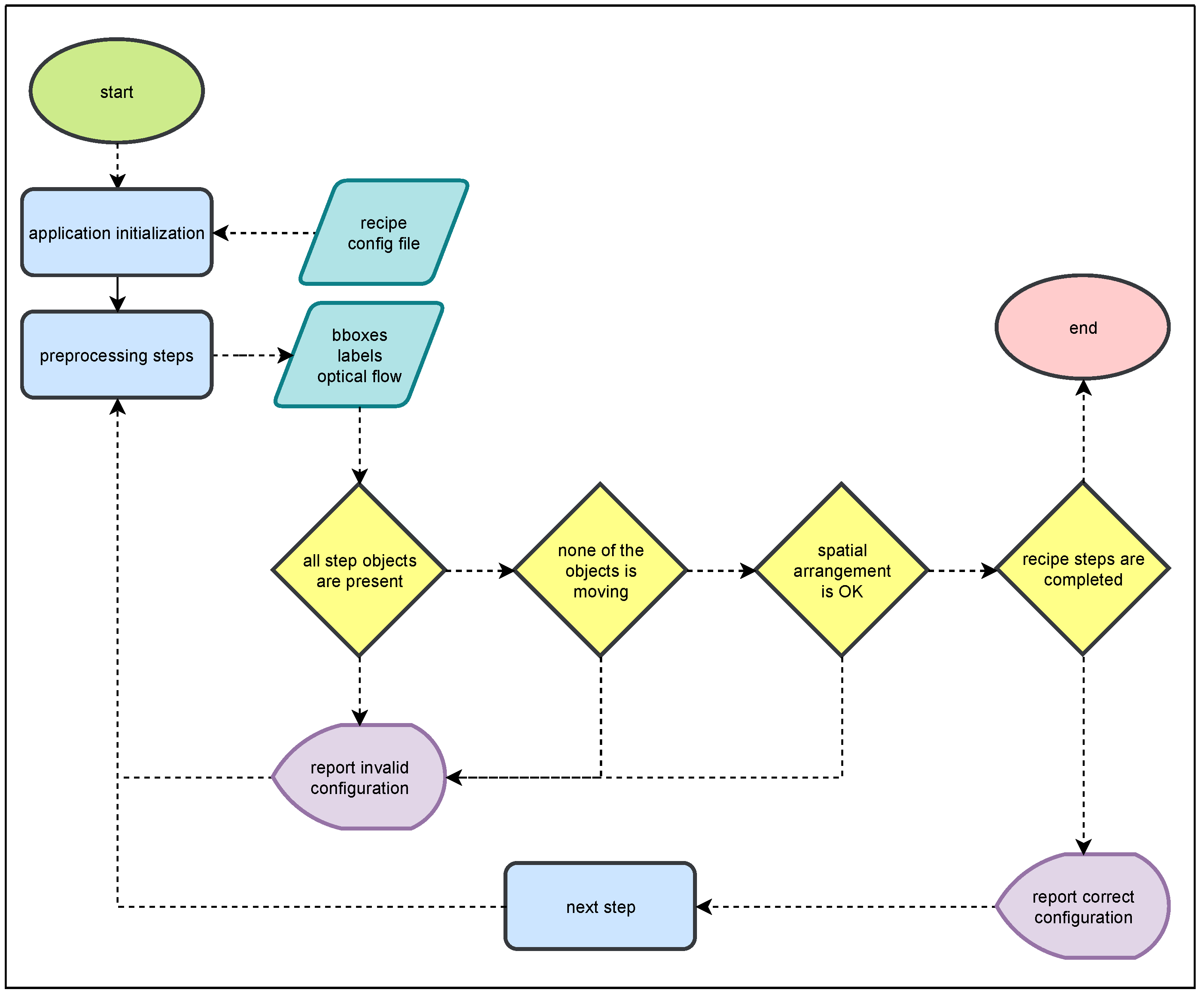

- The system supports the spatial arrangement of objects, such as utensils and food ingredients, by describing where each one is located relative to the others. This arrangement can be interpreted in various ways, depending on the needs of each user.

- The system assists the cooking activity by supporting recipes and their correct execution. Each recipe is imported into the system as a configuration file, which the system interprets and translates into human-readable knowledge. It thereby facilitates the cooking procedure while advising the user on how to carry out the recipe.

- The system primarily provides the algorithmic processing needed to transform a raw image into a description of the real-world scenario it depicts.

2. Related Work

2.1. Literature Review

2.1.1. Systems

2.1.2. Datasets and Tools

2.2. Discussion

- Computer vision techniques. This category covers research grounded in computer vision, ranging from vision-based tracking to object detection, which answers the fundamental question of what is in the scene during a food preparation activity. High intra-class variability often undermines the reliability of these techniques. Features such as pose representation and face recognition are considered reliable for this kind of research and are often deployed to interpret the intentions of a user.

- Creation and annotation of datasets. Annotating cooking videos requires special care because experimental data are difficult to gather. It is an extremely laborious process that nonetheless yields detailed records of every aspect of a food preparation activity. Annotating data and documenting the results in a dataset is among the most valuable and impartial ways of contributing to the progress of the field.

- Activity Recognition (AR). Activity recognition aims to recognize the actions and goals of the user in a food preparation activity such as a cooking recipe.

- Sensor fusion. Considerable research uses more than one sensor and combines the obtained data to support food preparation activities. The sensors can be cameras or accelerometers attached to the ingredients themselves.

- Machine learning. Machine learning methods are often deployed either to enhance computer-vision-based techniques or as standalone computational methods. Artificial Intelligence and Machine Learning have spread across many disciplines, and Computer Vision is no exception. Models such as YOLO [19] and Detectron [20], the latter developed by the Facebook AI Research team, have improved tasks such as object detection.

3. Method

3.1. Detection of the Objects

3.1.1. Object Detection Framework

3.1.2. The Need for a Custom Dataset

- A large dataset does not and cannot include all possible ingredients required for preparing special recipes such as traditional recipes.

- Annotations of utensils are stateless. For example, there is no distinction between an empty bowl and a bowl with some ingredients in it.

- For some objects, we may need finer-grained detection than a single class provides. For example, we may need to specify which bowl we are interested in: small vs. large, or plastic vs. glass.

- Utilize an existing detector as is, predict every class that it supports, and discard the objects that are not of interest;

- Train a detector with a subset of the classes of an existing dataset, either by (a) utilizing solely the existing dataset, or by (b) augmenting the dataset with images obtained from custom object instances;

- Train a detector solely with a new dataset.

3.1.3. Utilization of Public Datasets

- Using a subset of COCO, keeping only the classes of interest;

- Using images of the specific object instances that we want to further classify;

- Using a combination of the two options above.
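The first and last options above can be illustrated with a minimal sketch that works on COCO-style annotation dictionaries (the `images`, `annotations`, and `categories` keys follow the public COCO annotation layout). The function names `filter_coco` and `merge_datasets` are hypothetical helpers introduced here for illustration, and the id-remapping scheme is an assumption, not the procedure used by the authors.

```python
def filter_coco(coco, keep_names):
    """Return a COCO-style dict restricted to the categories in keep_names."""
    keep_ids = {c["id"] for c in coco["categories"] if c["name"] in keep_names}
    annotations = [a for a in coco["annotations"] if a["category_id"] in keep_ids]
    used_images = {a["image_id"] for a in annotations}
    return {
        "images": [im for im in coco["images"] if im["id"] in used_images],
        "annotations": annotations,
        "categories": [c for c in coco["categories"] if c["id"] in keep_ids],
    }


def merge_datasets(base, custom):
    """Append a custom COCO-style dataset to a base one.

    Assumes non-negative integer ids; image and annotation ids of the custom
    set are shifted past the largest base id so that they cannot clash.
    """
    merged = {k: list(base[k]) for k in ("images", "annotations", "categories")}
    name_to_id = {c["name"]: c["id"] for c in merged["categories"]}
    next_cat = max(name_to_id.values(), default=0) + 1
    cat_map = {}
    for c in custom["categories"]:
        if c["name"] not in name_to_id:  # new class, e.g. "glass bowl"
            name_to_id[c["name"]] = next_cat
            merged["categories"].append({"id": next_cat, "name": c["name"]})
            next_cat += 1
        cat_map[c["id"]] = name_to_id[c["name"]]
    img_offset = max((im["id"] for im in merged["images"]), default=-1) + 1
    ann_offset = max((a["id"] for a in merged["annotations"]), default=-1) + 1
    for im in custom["images"]:
        merged["images"].append({**im, "id": im["id"] + img_offset})
    for a in custom["annotations"]:
        merged["annotations"].append({
            **a,
            "id": a["id"] + ann_offset,
            "image_id": a["image_id"] + img_offset,
            "category_id": cat_map[a["category_id"]],
        })
    return merged
```

Matching categories by name keeps a shared class such as a generic bowl under one id, while instance-specific classes from the custom images are appended as new categories.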

3.1.4. Training with Custom Datasets

3.1.5. Model Deployment

3.2. Object Tracking

3.3. Optical Flow

3.4. Recipe Decision Logic

3.4.1. Recipe Specification

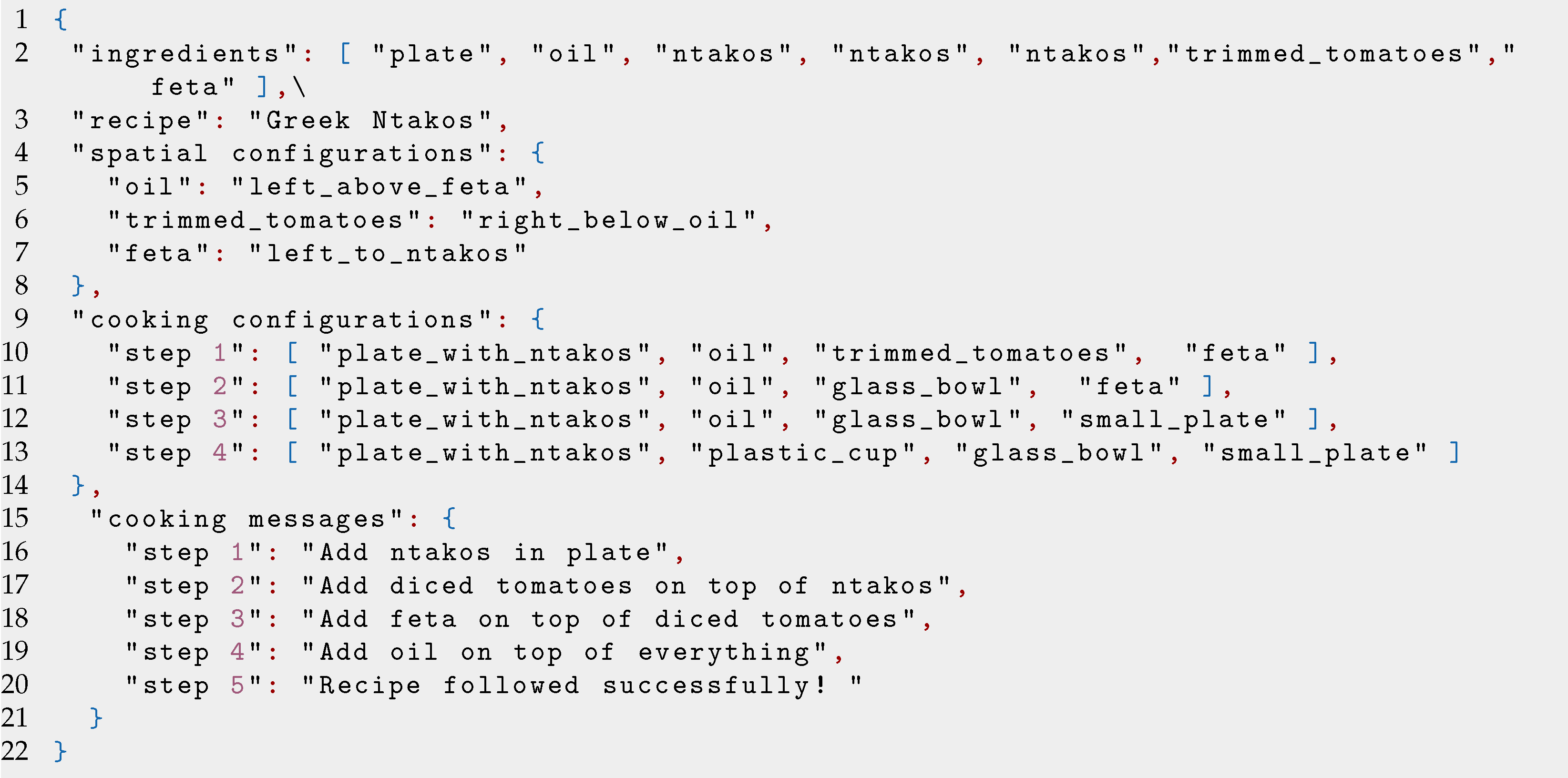

Listing 1. “Greek Ntakos” recipe specification.

Listing 2. “Fava” recipe specification.

- The name of the recipe in study;

- A list of the ingredients that are vital for completion of the recipe;

- The spatial configurations of the ingredients/utensils;

- The cooking configurations of each step of the recipe in terms of ingredients;

- The necessary messages, addressed to the user to assist them in completing the recipe successfully.
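To make the five parts of a specification concrete, the following is a minimal sketch of how they might be held in memory. It is not the format of Listings 1 and 2: the class and field names (`Recipe`, `Step`, `expects`, `spatial`), the relation names, the step contents, and the helper `undeclared_objects` are all hypothetical. Only the object names are taken from the class names used elsewhere in this work.

```python
from dataclasses import dataclass, field


@dataclass
class Step:
    expects: str   # detector class whose appearance marks the step as done (assumed)
    message: str   # guidance shown to the user for this step


@dataclass
class Recipe:
    name: str
    ingredients: list                             # ingredients and utensils
    spatial: list = field(default_factory=list)   # (object, relation, reference)
    steps: list = field(default_factory=list)     # ordered cooking steps


def undeclared_objects(recipe):
    """Names used in the spatial configuration but missing from the ingredients."""
    declared = set(recipe.ingredients)
    used = set()
    for obj, _relation, ref in recipe.spatial:
        used.update((obj, ref))
    return sorted(used - declared)


# Hypothetical example; the steps and relations are illustrative only.
ntakos = Recipe(
    name="Greek Ntakos",
    ingredients=["ntakos", "tomato", "feta", "oil", "salt", "plate"],
    spatial=[("oil", "left_of", "plate"), ("salt", "right_of", "plate")],
    steps=[
        Step(expects="plate with ntakos", message="Place the ntakos on the plate."),
        Step(expects="trimmed tomatoes", message="Trim the tomatoes."),
    ],
)
```

A consistency check such as `undeclared_objects(ntakos)` can catch a specification whose spatial configuration mentions an object that the ingredient list never declares.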

3.4.2. Spatial Arrangement

3.4.3. The Cooking Assistant

4. Experiments

4.1. Checking for Correct Ingredients

- Everything found in the scene matches the list of ingredients and utensils explained in Section 3.4.1;

- The first step of the recipe, as explained in Section 4.2, is finished successfully.
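The first condition above amounts to comparing the set of detected classes with the list in the recipe specification. A minimal sketch follows, assuming detections arrive as `(label, score)` pairs. The function name `check_ingredients` and the `min_score` threshold of 0.5 are assumptions made for illustration, and the second condition (completion of the first step) is not covered here.

```python
def check_ingredients(detections, required, min_score=0.5):
    """Compare confidently detected classes with the recipe's required list.

    detections: iterable of (label, score) pairs from an object detector.
    required:   iterable of class names listed in the recipe specification.
    min_score:  assumed confidence cut-off; weaker detections are ignored.
    """
    seen = {label for label, score in detections if score >= min_score}
    required = set(required)
    missing = sorted(required - seen)       # listed in the recipe, not in the scene
    unexpected = sorted(seen - required)    # in the scene, not listed in the recipe
    return {
        "ok": not missing and not unexpected,
        "missing": missing,
        "unexpected": unexpected,
    }
```

Reporting missing and unexpected objects separately lets an assistant tell the user what to fetch and what to remove, rather than only signalling a mismatch.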

4.2. Recipes

4.3. Spatial Arrangement of the Objects

4.4. Metrics

4.4.1. Optical Flow Metrics

4.4.2. Object Detection Metrics

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| AAL | Ambient Assisted Living |

| AR | Activity Recognition |

| CV | Computer Vision |

| CNN | Convolutional Neural Network |

| FAIR | Facebook AI Research |

| FPN | Feature Pyramid Network |

| ML | Machine Learning |

| ViT | Vision Transformer |

References

- Johnson, L.C. Browsing the modern kitchen—A feast of gender, place and culture (part 1). Gender Place Cult. 2006, 13, 123–132.

- Byrd, M.; Dunn, J.P. Cooking through History: A Worldwide Encyclopedia of Food with Menus and Recipes; ABC-CLIO: Santa Barbara, CA, USA, 2020; Volume 2.

- Berk, Z. Food Process Engineering and Technology; Academic Press: Cambridge, MA, USA, 2018.

- Stein, S.; McKenna, S.J. Towards recognizing food preparation activities in situational support systems. In Proceedings of the Digital Futures 2012 (3rd Annual Digital Economy All Hands Conference), Aberdeen, UK, 23–25 October 2012.

- Stein, S.; McKenna, S.J. Combining embedded accelerometers with computer vision for recognizing food preparation activities. In Proceedings of the 2013 ACM International Joint Conference on Pervasive and Ubiquitous Computing, Zurich, Switzerland, 8–12 September 2013; pp. 729–738.

- Urabe, S.; Inoue, K.; Yoshioka, M. Cooking activities recognition in egocentric videos using combining 2DCNN and 3DCNN. In Proceedings of the Joint Workshop on Multimedia for Cooking and Eating Activities and Multimedia Assisted Dietary Management, Mässvägen, Stockholm, Sweden, 15 July 2018; pp. 1–8.

- Surie, D.; Partonia, S.; Lindgren, H. Human sensing using computer vision for personalized smart spaces. In Proceedings of the 2013 IEEE 10th International Conference on Ubiquitous Intelligence and Computing and 2013 IEEE 10th International Conference on Autonomic and Trusted Computing, Vietri sul Mare, Italy, 18–21 December 2013; IEEE: Piscataway, NJ, USA, 2013; pp. 487–494.

- Zhang, Z. Microsoft kinect sensor and its effect. IEEE Multimed. 2012, 19, 4–10.

- Hashimoto, A.; Mori, N.; Funatomi, T.; Yamakata, Y.; Kakusho, K.; Minoh, M. Smart kitchen: A user centric cooking support system. In Proceedings of the IPMU 2008, Malaga, Spain, 22–27 June 2008; Volume 8, pp. 848–854.

- Tran, Q.; Mynatt, E. Cook’s collage: Two exploratory designs. In Proceedings of the Position Paper for the Technologies for Families Workshop at CHI 2002, Minneapolis, MN, USA, 22 April 2002.

- Neumann, A.; Elbrechter, C.; Pfeiffer-Leßmann, N.; Kõiva, R.; Carlmeyer, B.; Rüther, S.; Schade, M.; Ückermann, A.; Wachsmuth, S.; Ritter, H.J. “Kognichef”: A cognitive cooking assistant. KI-Künstliche Intell. 2017, 31, 273–281.

- Thuy, N.T.T.; Diep, N.N. Recognizing food preparation activities using bag of features. Southeast Asian J. Sci. 2016, 4, 73–83.

- An, Y.; Cao, Y.; Chen, J.; Ngo, C.W.; Jia, J.; Luan, H.; Chua, T.S. PIC2DISH: A customized cooking assistant system. In Proceedings of the 25th ACM International Conference on Multimedia, Mountain View, CA, USA, 23–27 October 2017; pp. 1269–1273.

- Neumann, N.; Wachsmuth, S. HanKA: Enriched Knowledge Used by an Adaptive Cooking Assistant. In Proceedings of the German Conference on Artificial Intelligence (Künstliche Intelligenz); Springer: Cham, Switzerland, 2022; pp. 173–186.

- Bosch. Cookit-Smart Food Processor. Available online: https://cookit.bosch-home.com (accessed on 28 August 2022).

- Thermomix. Thermomix® TM6®. Available online: https://www.thermomix.com/tm6 (accessed on 28 August 2022).

- Bianco, S.; Ciocca, G.; Napoletano, P.; Schettini, R.; Margherita, R.; Marini, G.; Pantaleo, G. Cooking action recognition with iVAT: An interactive video annotation tool. In Proceedings of the International Conference on Image Analysis and Processing; Springer: Berlin/Heidelberg, Germany, 2013; pp. 631–641.

- Rohrbach, M.; Amin, S.; Andriluka, M.; Schiele, B. A database for fine grained activity detection of cooking activities. In Proceedings of the 2012 IEEE Conference on Computer Vision and Pattern Recognition, Providence, RI, USA, 16–21 June 2012; IEEE: Piscataway, NJ, USA, 2012; pp. 1194–1201.

- Jiang, P.; Ergu, D.; Liu, F.; Cai, Y.; Ma, B. A Review of Yolo algorithm developments. Procedia Comput. Sci. 2022, 199, 1066–1073.

- Wu, Y.; Kirillov, A.; Massa, F.; Lo, W.Y.; Girshick, R. Detectron2. 2019. Available online: https://github.com/facebookresearch/detectron2 (accessed on 28 August 2022).

- Xiang, L.; Echtler, F.; Kerl, C.; Wiedemeyer, T.; Lars; hanyazou; Gordon, R.; Facioni, F.; laborer2008; Wareham, R.; et al. libfreenect2: Release 0.2. 2016. Available online: https://zenodo.org/record/50641#.Yzarf0xBxPY (accessed on 28 August 2022).

- Paszke, A.; Gross, S.; Massa, F.; Lerer, A.; Bradbury, J.; Chanan, G.; Killeen, T.; Lin, Z.; Gimelshein, N.; Antiga, L.; et al. PyTorch: An Imperative Style, High-Performance Deep Learning Library. In Advances in Neural Information Processing Systems 32; Wallach, H., Larochelle, H., Beygelzimer, A., d’Alché-Buc, F., Fox, E., Garnett, R., Eds.; Curran Associates, Inc.: Vancouver, Canada, 2019; pp. 8024–8035.

- Abadi, M.; Agarwal, A.; Barham, P.; Brevdo, E.; Chen, Z.; Citro, C.; Corrado, G.S.; Davis, A.; Dean, J.; Devin, M.; et al. TensorFlow: Large-Scale Machine Learning on Heterogeneous Systems. 2015. Software. Available online: https://storage.googleapis.com/pub-tools-public-publication-data/pdf/45166.pdf (accessed on 28 August 2022).

- Albawi, S.; Mohammed, T.A.; Al-Zawi, S. Understanding of a convolutional neural network. In Proceedings of the 2017 International Conference on Engineering and Technology (ICET), Antalya, Turkey, 21–23 August 2017; IEEE: Piscataway, NJ, USA, 2017; pp. 1–6.

- Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Gelly, S.; et al. An image is worth 16x16 words: Transformers for image recognition at scale. arXiv 2020, arXiv:2010.11929.

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards real-time object detection with region proposal networks. Adv. Neural Inf. Process. Syst. 2015, 91–99. Available online: https://www.bibsonomy.org/bibtex/2ceee12b1f8d61eed786dcdc15ffeb99f/nosebrain (accessed on 28 August 2022).

- He, K.; Gkioxari, G.; Dollár, P.; Girshick, R. Mask R-CNN. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2961–2969.

- Xie, S.; Girshick, R.; Dollár, P.; Tu, Z.; He, K. Aggregated residual transformations for deep neural networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 1492–1500.

- Lin, T.Y.; Maire, M.; Belongie, S.; Hays, J.; Perona, P.; Ramanan, D.; Dollár, P.; Zitnick, C.L. Microsoft COCO: Common objects in context. In Proceedings of the European Conference on Computer Vision, Zurich, Switzerland, 6–12 September 2014; Springer: Cham, Switzerland, 2014; pp. 740–755.

- Gupta, A.; Dollár, P.; Girshick, R. LVIS: A Dataset for Large Vocabulary Instance Segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019.

- Damen, D.; Doughty, H.; Farinella, G.M.; Fidler, S.; Furnari, A.; Kazakos, E.; Moltisanti, D.; Munro, J.; Perrett, T.; Price, W.; et al. The EPIC-KITCHENS Dataset: Collection, Challenges and Baselines. IEEE Trans. Pattern Anal. Mach. Intell. (TPAMI) 2021, 43, 4125–4141.

- Sekachev, B.; Manovich, N.; Zhiltsov, M.; Zhavoronkov, A.; Kalinin, D.; Hoff, B.; Tosmanov; Kruchinin, D.; Zankevich, A.; Sidnev, D.; et al. opencv/cvat: V1.1.0. 2020. Available online: https://github.com/opencv/cvat (accessed on 28 August 2022).

- Lukezic, A.; Vojir, T.; Čehovin Zajc, L.; Matas, J.; Kristan, M. Discriminative correlation filter with channel and spatial reliability. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 6309–6318.

- Bradski, G. The OpenCV Library. Dr. Dobb’s J. Softw. Tools 2000, 11, 120–123.

- Itseez. Open Source Computer Vision Library. 2015. Available online: https://github.com/itseez/opencv (accessed on 28 August 2022).

- Lucas, B.D.; Kanade, T. An Iterative Image Registration Technique with an Application to Stereo Vision. In Proceedings of the Image Understanding Workshop, Vancouver, BC, Canada, 24–28 August 1981; pp. 121–130.

- Farnebäck, G. Two-frame motion estimation based on polynomial expansion. In Proceedings of the Scandinavian Conference on Image Analysis, Halmstad, Sweden, 29 June–2 July 2003; Springer: Berlin/Heidelberg, Germany, 2003; pp. 363–370.

- Pedregosa, F.; Varoquaux, G.; Gramfort, A.; Michel, V.; Thirion, B.; Grisel, O.; Blondel, M.; Prettenhofer, P.; Weiss, R.; Dubourg, V.; et al. Scikit-learn: Machine Learning in Python. J. Mach. Learn. Res. 2011, 12, 2825–2830.

| Related Work | ML | AR | Spatial Config. | CV | Dataset Creation | Sensor Fusion |

|---|---|---|---|---|---|---|

| [5] | – | ✓ | – | – | – | ✓ |

| [7] | – | – | – | ✓ | – | – |

| [9] | – | ✓ | – | ✓ | – | – |

| [10] | – | – | – | – | – | – |

| [12] | ✓ | ✓ | – | – | – | ✓ |

| [4] | – | ✓ | – | ✓ | – | ✓ |

| [18] | – | ✓ | – | ✓ | ✓ | – |

| [11] | – | – | – | ✓ | – | – |

| [6] | ✓ | ✓ | – | ✓ | ✓ | – |

| [13] | ✓ | – | – | ✓ | – | – |

| [14] | ✓ | – | – | – | – | – |

| Present work | ✓ | – | ✓ | ✓ | ✓ | – |

| feta | glass bowl | ntakos | oil |

| plastic bowl | plastic cup | plate | plate with ntakos |

| salt | small plate | tomato | trimmed tomatoes |

| lemon | onion | onion bowl |

| fava | spoon | squished lemon |

| Class Name | #bboxes | Class Name | #bboxes |

|---|---|---|---|

| fava | 30 | plastic cup | 42 |

| feta | 46 | plate with ntakos | 18 |

| glass bowl | 30 | plate | 9 |

| lemon | 31 | salt | 30 |

| ntakos | 49 | small plate | 32 |

| oil | 52 | spoon | 30 |

| onion bowl | 30 | squished lemon | 30 |

| onion | 30 | tomato | 45 |

| plastic bowl | 30 | trimmed tomatoes | 31 |

| Method | Threshold 3.16 | Threshold 10 | Threshold 31.6 | Threshold 100 | Threshold 316.22 |

|---|---|---|---|---|---|

| Lucas–Kanade Sparse Optical Flow | 0.72 | 0.67 | 0.77 | 0.75 | 0.83 |

| Lucas–Kanade Dense Optical Flow | 0.0 | 0.72 | 0.8 | 0.81 | 0.82 |

| Farneback Dense Optical Flow | 0.8 | 0.86 | 1 | 1 | 0.0 |

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Kondylakis, G.; Galanakis, G.; Partarakis, N.; Zabulis, X. Semantically Annotated Cooking Procedures for an Intelligent Kitchen Environment. Electronics 2022, 11, 3148. https://doi.org/10.3390/electronics11193148