API Message-Driven Regression Testing Framework

Abstract

1. Introduction

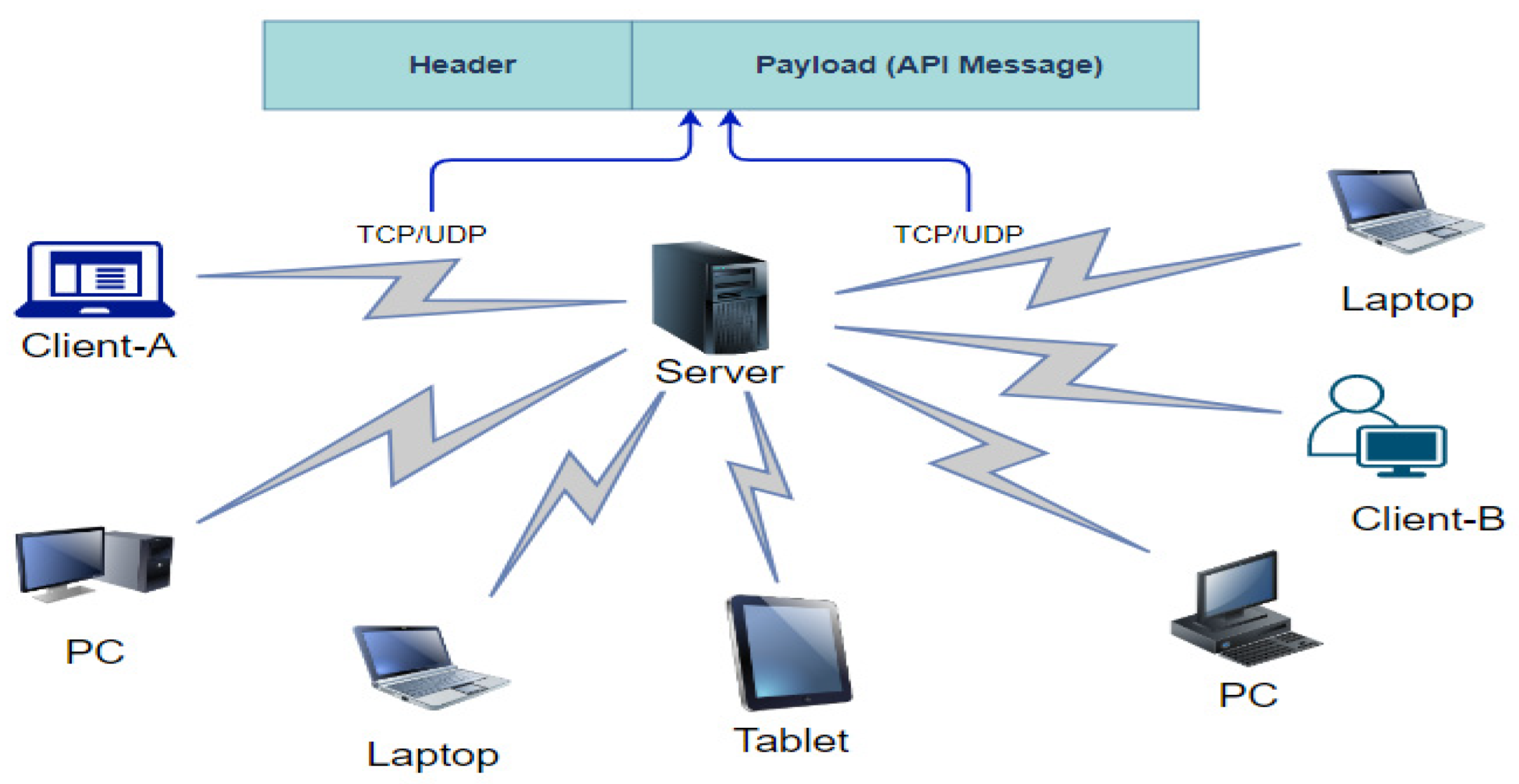

- This study aims to fill a gap in regression testing frameworks for a specific domain: client-server applications, such as financial applications and trading systems, that communicate using business domain-specific API messages.

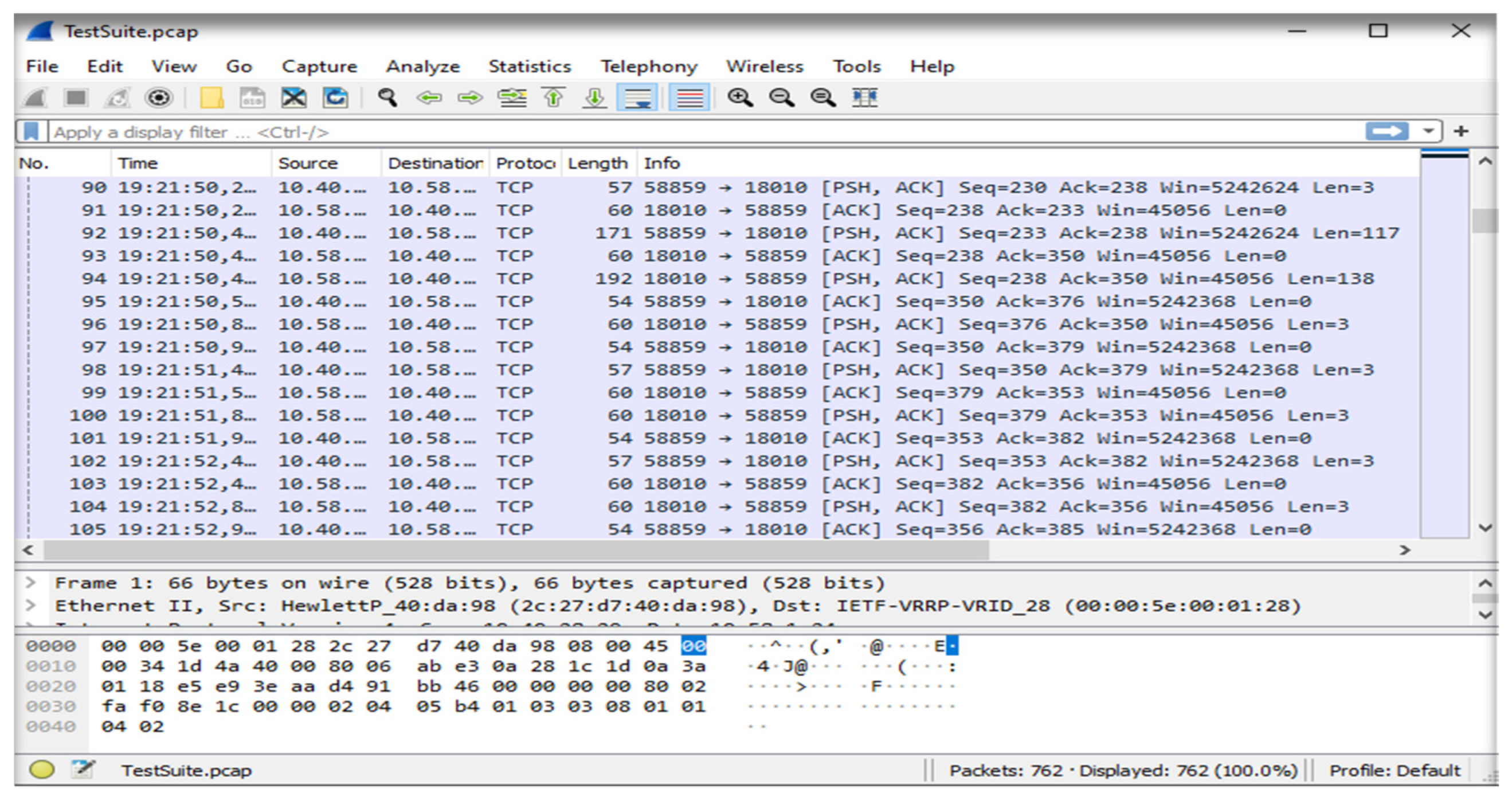

- Using network packets as input increases the generality of the framework. Capturing network packets is the simplest way to collect the different request messages sent by various clients, and it makes the framework more independent of the application under test. For this reason, this study uses network packets as its input. No previous research has attempted to implement and use network packets in regression testing. The network log file is a standard capture file (.PCAP) rather than a format specific to our study, and it contains the API request-response message pairs.

- By capturing real data packets in production, real client behavior can be reproduced in the test environment. Moreover, a bug that occurs in production but not in the test environment can be quickly reproduced in the test environment by replaying the network packets captured in production.

- We applied the proposed framework to a real-world financial application to validate its effectiveness and improve test automation outcomes, working with two different business domain-specific API protocols used in the financial domain. According to the extracted metrics, whose accuracy was confirmed by the test engineers, the framework showed a high reuse capacity, filling a gap in the software testing industry for business-critical systems that have business domain-specific APIs.
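The input side of the framework can be illustrated with a minimal reader for classic libpcap (.pcap) capture files, such as those written by tcpdump or Wireshark. This is a sketch under stated assumptions, not the authors' implementation: it handles only the classic format (not pcapng), does not check the link-layer type stored in the global header, and the names `iter_pcap_records` and `read_pcap_file` are hypothetical.

```python
import struct
from typing import Iterator, Tuple

# Magic numbers of the classic libpcap format as they appear on disk,
# mapped to (struct byte order, timestamp ticks per second).
_MAGIC = {
    b"\xd4\xc3\xb2\xa1": ("<", 1e6),  # little-endian, microsecond timestamps
    b"\xa1\xb2\xc3\xd4": (">", 1e6),  # big-endian, microsecond timestamps
    b"\x4d\x3c\xb2\xa1": ("<", 1e9),  # little-endian, nanosecond timestamps
    b"\xa1\xb2\x3c\x4d": (">", 1e9),  # big-endian, nanosecond timestamps
}


def iter_pcap_records(buf: bytes) -> Iterator[Tuple[float, bytes]]:
    """Yield (timestamp, captured frame) for every record in a classic .pcap buffer."""
    if len(buf) < 24 or buf[:4] not in _MAGIC:
        raise ValueError("not a classic pcap file (pcapng is not handled here)")
    endian, ticks = _MAGIC[buf[:4]]
    offset = 24  # skip the 24-byte global header (version, snaplen, link type, ...)
    while offset + 16 <= len(buf):
        # Each record header: seconds, sub-second part, captured length, original length.
        ts_sec, ts_frac, incl_len, _orig_len = struct.unpack_from(endian + "IIII", buf, offset)
        offset += 16
        frame = buf[offset:offset + incl_len]
        if len(frame) < incl_len:
            break  # truncated final record
        offset += incl_len
        yield ts_sec + ts_frac / ticks, frame


def read_pcap_file(path: str) -> Iterator[Tuple[float, bytes]]:
    """Read a whole capture file and iterate over its records."""
    with open(path, "rb") as f:
        data = f.read()
    yield from iter_pcap_records(data)
```

Each yielded frame still carries its link-layer, IP, and TCP headers; decoding those into API messages is a separate step.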

2. Background and Related Work

2.1. Case Description and Targeting System Structure

2.2. API Testing

2.3. Related Work

- Functional testing tools: Selenium, Ranorex, QTP (Quick Test Professional), JMeter, etc.

- Load testing tools: JMeter-BlazeMeter, WebLOAD, LoadRunner, etc.

- Regression testing tools: Selenium, JMeter, Postman, etc.

3. The Approach: API Message-Driven Regression Testing Framework and Its Implementation

- (1) Extraction of test suites from the network packets.

- (2) Execution of the test suite.

- (3) Validation and defect reporting.

| Algorithm 1 replay() |
|---|
| Input: file (.PCAP) |
| 1: testSuites ← generateTestSuites(file) |
| 2: for each test case τ in testSuites do |
| 3: τ.actualAPIMessage ← replay(τ) |
| 4: τ.testResult ← validate(τ.actualAPIMessage, τ.expectedAPIMessage) |
| 5: addToTestResultList(τ) |
| 6: end for |
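A minimal Python sketch of the loop in Algorithm 1, assuming the test cases have already been extracted and each holds one request and the expected response bytes. The names `TestCase`, `TcpSession`, and `replay_suite` are hypothetical. The sketch reuses one TCP connection for a whole suite (an assumption, since session-based protocols such as FIX expect a login before orders) and treats a short quiet period as the end of a response, which a real implementation would replace with protocol-aware message framing.

```python
import socket
from dataclasses import dataclass
from typing import Callable, List, Optional


@dataclass
class TestCase:
    dest_ip: str
    dest_port: int
    request: bytes                  # API request message captured in production
    expected: bytes                 # response captured in production
    actual: Optional[bytes] = None  # filled in by the replay step
    passed: Optional[bool] = None   # filled in by the validation step


class TcpSession:
    """One TCP connection reused for a whole suite, so requests keep their captured order."""

    def __init__(self, ip: str, port: int, quiet_period: float = 0.5) -> None:
        self.sock = socket.create_connection((ip, port))
        self.sock.settimeout(quiet_period)

    def exchange(self, request: bytes) -> bytes:
        self.sock.sendall(request)
        received = bytearray()
        try:
            while True:
                chunk = self.sock.recv(65536)
                if not chunk:       # server closed the connection
                    break
                received += chunk
        except socket.timeout:      # nothing more arrived within quiet_period
            pass
        return bytes(received)

    def close(self) -> None:
        self.sock.close()


def replay_suite(suite: List[TestCase],
                 send: Callable[[bytes], bytes],
                 validate: Callable[[bytes, bytes], bool]) -> List[TestCase]:
    results: List[TestCase] = []
    for tc in suite:                                  # 2: for each test case τ
        tc.actual = send(tc.request)                  # 3: τ.actualAPIMessage ← replay(τ)
        tc.passed = validate(tc.actual, tc.expected)  # 4: τ.testResult ← validate(...)
        results.append(tc)                            # 5: addToTestResultList(τ)
    return results
```

For a live run, `send` would be `TcpSession(ip, port).exchange`; passing the transport and the comparator as parameters keeps the loop testable without a server.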

3.1. Extraction of Test Suites from Network Packets

| Algorithm 2 generateTestSuites(file) |
|---|
| Input: file |
| 1: testSuites ← empty |
| 2: do |
| 3: packet ← nextPacket() |
| 4: packetDetails ← extractPacketDetails(packet) |
| 5: apiMessages ← buildAPIMessageInPacket(packet) |
| 6: putIntoTestSuite(testSuites, packetDetails, apiMessages) |
| 7: while not end of file |
| 8: return testSuites |
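Algorithm 2 can be sketched as follows, assuming Ethernet II framing, IPv4, TCP, and one application message per TCP segment (no stream reassembly, no handling of retransmissions). The helpers `extract_packet_details` and `generate_test_suites` are hypothetical counterparts of extractPacketDetails and putIntoTestSuite: they group test cases by destination IP and port, treat client-to-server payloads as requests, and attach the server's replies on the same connection as expected responses. The set of server ports (for example, 17090 and 8020 in the case study tables) is an input the caller must supply.

```python
import socket
import struct
from dataclasses import dataclass, field
from typing import Dict, Iterable, List, Optional, Set, Tuple


@dataclass
class PacketDetails:
    src_ip: str
    src_port: int
    dst_ip: str
    dst_port: int
    payload: bytes


@dataclass
class TestCase:
    dest_ip: str
    dest_port: int
    request: bytes
    expected: List[bytes] = field(default_factory=list)  # one entry per response segment


def extract_packet_details(frame: bytes) -> Optional[PacketDetails]:
    """Decode an Ethernet II / IPv4 / TCP frame; return None for any other traffic."""
    if len(frame) < 34 or frame[12:14] != b"\x08\x00":
        return None                   # not IPv4 (VLAN tags and IPv6 are not handled)
    ip = frame[14:]
    if ip[9] != 6:
        return None                   # IP protocol number 6 is TCP
    ihl = (ip[0] & 0x0F) * 4          # IPv4 header length in bytes
    total_len = struct.unpack_from("!H", ip, 2)[0]
    tcp = ip[ihl:total_len]           # total_len also drops Ethernet padding
    if len(tcp) < 20:
        return None
    src_port, dst_port = struct.unpack_from("!HH", tcp, 0)
    data_offset = (tcp[12] >> 4) * 4  # TCP header length in bytes
    return PacketDetails(socket.inet_ntoa(ip[12:16]), src_port,
                         socket.inet_ntoa(ip[16:20]), dst_port, tcp[data_offset:])


def generate_test_suites(frames: Iterable[bytes],
                         server_ports: Set[int]) -> Dict[Tuple[str, int], List[TestCase]]:
    """Build one test suite per (destination IP, destination port)."""
    suites: Dict[Tuple[str, int], List[TestCase]] = {}
    latest: Dict[Tuple[str, int, str, int], TestCase] = {}  # last request per connection
    for frame in frames:
        pkt = extract_packet_details(frame)
        if pkt is None or not pkt.payload:
            continue                  # skip non-TCP frames and bare SYN/ACK/FIN segments
        if pkt.dst_port in server_ports:    # client -> server: a new request
            case = TestCase(pkt.dst_ip, pkt.dst_port, pkt.payload)
            suites.setdefault((pkt.dst_ip, pkt.dst_port), []).append(case)
            latest[(pkt.src_ip, pkt.src_port, pkt.dst_ip, pkt.dst_port)] = case
        elif pkt.src_port in server_ports:  # server -> client: a response
            case = latest.get((pkt.dst_ip, pkt.dst_port, pkt.src_ip, pkt.src_port))
            if case is not None:
                case.expected.append(pkt.payload)
    return suites
```

Keeping a list of expected responses per request lets a single request map to several replies, as in the test cases where an order request is answered by both an acceptance and a cancellation.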

3.2. Execution of Test Suite

3.3. Validation and Defect Reporting

4. Industrial Case Study

4.1. Experimental Setup and Data Preparation

4.2. Execution and Analysis of the Results

4.3. Threats to Validity

4.4. Lessons Learned

5. Summary and Conclusions

Author Contributions

Funding

Conflicts of Interest

Appendix A. Sample JSON Files Created for a Financial Domain API Protocol

| OrderRejectedMessage.json |

|---|


References

- What Is an API? Available online: https://www.mulesoft.com/resources/api/what-is-an-api (accessed on 10 May 2022).

- Arcuri, A. RESTful API Automated Test Case Generation. In Proceedings of the 2017 IEEE International Conference on Software Quality, Reliability and Security (QRS), Prague, Czech Republic, 25–29 July 2017; pp. 9–20.

- Dalal, S.; Solanki, K. Challanges of Regression Testing: A Pragmatic Perspective. Int. J. Adv. Res. Comput. Sci. 2018, 9, 499–503.

- Minhas, N.M.; Petersen, K.; Börstler, J.; Wnuk, K. Regression testing for large-scale embedded software development–Exploring the state of practice. Inf. Softw. Technol. 2020, 120, 106254.

- Khari, M.; Kumar, P. An extensive evaluation of search-based software testing: A review. Soft Comput. 2019, 23, 1933–1946.

- Khari, M. Emprical Evaluation of Automated Test Suite Generation and Optimization. Arab. J. Sci. Eng. 2019, 45, 2407–2423.

- Jain, A.; Tayal, D.K.; Khari, M.; Vij, S. A novel method for test path prioritization using centrality measures. Int. J. Open Source Softw. Processes 2016, 7, 19–38.

- Taley, D.S.; Pathak, B. Comprehensive Study of Software Testing Techniques and Strategies: A Review. Int. J. Eng. Res. 2020, 9, IJERTV9IS080373.

- Gonçalves, W.F.; de Almeida, C.B.; de Araújo, L.L.; Ferraz, M.S.; Xandú, R.B.; de Farias, I. The influence of human factors on the software testing process: The impact of these factors on the software testing process. In Proceedings of the 2017 12th Iberian Conference on Information Systems and Technologies (CISTI), Lisbon, Portugal, 21–24 June 2017; pp. 1–6.

- Lam, W.; Shi, A.; Oei, R.; Zhang, S.; Ernst, M.D.; Xie, T. Dependent-test-aware regression testing techniques. In Proceedings of the International Symposium on Software Testing and Analysis (ISSTA), Virtual Event, USA, 18 July 2020; pp. 298–311.

- Arcuri, A. RESTful API Automated Test Case Generation with EvoMaster. ACM Trans. Softw. Eng. Methodol. 2019, 28, 1–37.

- Han, X.; Zhang, N.; He, W.; Zhang, K.; Tang, L. Automated Warship Software Testing System Based on LoadRunner Automation API. In Proceedings of the 2018 IEEE International Conference on Software Quality, Reliability and Security Companion (QRS-C), Lisbon, Portugal, 16–20 July 2018; pp. 51–55.

- Ed-douibi, H.; Izquierdo, J.L.C.; Cabot, J. Automatic Generation of Test Cases for REST APIs: A Specification-Based Approach. In Proceedings of the 2018 IEEE 22nd International Enterprise Distributed Object Computing Conference (EDOC), Stockholm, Sweden, 16–19 October 2018; pp. 181–190.

- Viglianisi, E.; Dallago, M.; Ceccato, M. Resttestgen: Automated Black-Box Testing of RESTful APIs. In Proceedings of the 2020 IEEE 13th International Conference on Software Testing, Validation and Verification (ICST), Porto, Portugal, 24–28 October 2020; pp. 142–152.

- Atlidakis, V.; Godefroid, P.; Polishchuk, M. RESTler: Stateful REST API Fuzzing. In Proceedings of the 41st International Conference on Software Engineering (ICSE), Montreal, QC, Canada, 25–31 May 2019; IEEE Press: Piscataway, NJ, USA, 2019; pp. 748–758.

- Postman|API Development Environment. Available online: https://www.getpostman.com/ (accessed on 21 August 2022).

- vREST–Automated REST API Testing Tool. Available online: https://vrest.io/ (accessed on 24 August 2022).

- The World’s Most Popular Testing Tool|SoapUI. Available online: https://www.soapui.org (accessed on 21 August 2022).

- Chen, Y.; Gao, Y.; Zhou, Y.; Chen, M.; Ma, X. Design of an Automated Test Tool Based on Interface Protocol. In Proceedings of the 2019 IEEE 19th International Conference on Software Quality, Reliability and Security Companion (QRS-C), Sofia, Bulgaria, 22–26 July 2019; pp. 57–61.

- Demircioğlu, E.D.; Kalıpsız, O. Test Case Generation Framework for Client-Server Apps: Reverse Engineering Approach. In Computational Science and Its Applications–ICCSA 2020; Gervasi, O., Murgante, B., Misra, S., Garau, C., Blečić, I., Taniar, D., Apduhan, B.O., Rocha, A.M.A.C., Tarantino, E., Torre, C.M., et al., Eds.; Lecture Notes in Computer Science; Springer: Cham, Switzerland, 2020; Volume 12253.

- TCP and UDP. Available online: https://www.cs.nmt.edu/~risk/TCP-UDP%20Pocket%20Guide.pdf (accessed on 20 June 2022).

- Wireshark Tool. Available online: https://www.wireshark.org/ (accessed on 1 May 2022).

- Tcpdump. Available online: https://www.tcpdump.org/ (accessed on 10 August 2022).

- Arkime. Available online: https://arkime.com/index#home (accessed on 10 August 2022).

- Isha; Sharma, A.; Revathi, M. Automated API Testing. In Proceedings of the 2018 3rd International Conference on Inventive Computation Technologies (ICICT), Coimbatore, India, 15–16 November 2018; pp. 788–791.

- Research and Markets. Available online: https://www.researchandmarkets.com/ (accessed on 5 April 2022).

- Garousi, V.; Tasli, S.; Sertel, O.; Tokgoz, M.; Herkiloglu, K.; Arkin, H.F.; Bilir, O. Automated Testing of Simulation Software in the Aviation Industry: An Experience Report. IEEE Softw. 2019, 36, 63–75.

- Wang, J.; Bai, X.; Li, L.; Ji, Z.; Ma, H. A Model-Based Framework for Cloud API Testing. In Proceedings of the 2017 IEEE 41st Annual Computer Software and Applications Conference (COMPSAC), Turin, Italy, 4–8 July 2017; pp. 60–65.

- Godefroid, P.; Lehmann, D.; Polishchuk, M. Differential regression testing for REST APIs. In Proceedings of the 29th ACM SIGSOFT International Symposium on Software Testing and Analysis, Virtual Event, USA, 18 July 2020; pp. 312–323.

- Xu, D.; Xu, W.; Kent, M.; Thomas, L.; Wang, L. An Automated Test Generation Technique for Software Quality Assurance. IEEE Trans. Reliab. 2015, 64, 247–268.

- Sneha, K.; Malle, G.M. Research on software testing techniques and software automation testing tools. In Proceedings of the 2017 International Conference on Energy, Communication, Data Analytics and Soft Computing (ICECDS), Chennai, India, 1–2 August 2017; pp. 77–81.

- Apache JMeter. Available online: https://jmeter.apache.org/ (accessed on 16 June 2022).

- GoReplay. Available online: https://github.com/buger/goreplay (accessed on 10 June 2022).

- Pollyjs. Available online: https://github.com/Netflix/pollyjs (accessed on 10 June 2022).

- Node Replay. Available online: https://www.npmjs.com/package/replay (accessed on 15 June 2022).

- Bhateja, N. A Study on Various Software Automation Testing Tools. Int. J. Adv. Res. Comput. Sci. Softw. Eng. 2015, 5, 1250–1252.

- Liu, Z.; Chen, Q.; Jiang, X. A Maintainability Spreadsheet-Driven Regression Test Automation Framework. In Proceedings of the 2013 IEEE 16th International Conference on Computational Science and Engineering, Sydney, Australia, 3–5 December 2013; pp. 1181–1184.

- Malhotra, R.; Khari, M. Heuristic search-based approach for automated test data generation: A survey. Int. J. Bio-Inspired Comput. 2013, 5, 1–18.

- Khari, M.; Sinha, A.; Verdu, E.; Crespo, R.G. Performance analysis of six meta-heuristic algorithms over automated test suite generation for path coverage-based optimization. Soft Comput. 2020, 24, 9143–9160.

- Khari, M.; Kumar, P. An effective meta-heuristic cuckoo search algorithm for test suite optimization. Informatica 2017, 41, 363–377.

- FIX API Protocol. Available online: https://www.borsaistanbul.com/files/genium-inet-fix-protocol-specification.pdf (accessed on 18 July 2022).

- Ouch-API Protocol. Available online: https://www.borsaistanbul.com/files/OUCH_ProtSpec_BIST_va2414.pdf (accessed on 18 July 2022).

- FIX Protocol. Available online: https://www.fixtrading.org/ (accessed on 10 August 2022).

- Wohlin, C.; Runeson, P.; Host, M.; Ohlsson, M.; Regnell, B.; Wesslen, A. Experimentation in Software Engineering; Springer: Berlin/Heidelberg, Germany, 2012.

| Features | Our Tool | [8,9,10,11] | Postman | JMeter |
|---|---|---|---|---|
| Supports API Testing | ✓ | ✓ | ✓ | ✓ |
| Supports Web-API Testing | ✓ | ✓ | ✓ | ✓ |
| Supports Business Domain-Specific APIs | ✓ | X | X | Limited (via TCP Sampler) |
| Simulates Real Client Behavior with Production Data | ✓ | X | X | X |
| Test Case Generation | ✓ | ✓ | X | X |

| Test Case | 1 |

|---|---|

| Dest IP | 10.40.x.x |

| Dest Port | 17090 |

| API Request Message | [8 = FIXT.1.1|35 = D|34 = 7|49 = BIA|50 = F91|52 = 14:16:54.058|56 = BI|1 = XYZ|11 = 22102|38 = 20|40 = 2|44 = 21.000|54 = 1|55 = ACS.E|59 = 0|60 = 14:16:54.057|70 = AAA|528 = A|10 = 048|] |

| Expected API Message | [8 = FIXT.1.1|35 = 8|34 = 7|49 = BI|52 = 14:16:54.335|56 = BIA|57 = FX91|1 = XYZ|6 = 0|11 = 22102|14 = 0|17 = 1|22 = M|37 = 6054100010C68|38 = 20.0000000|39 = 0|40 = 2|44 = 21.0000000|54 = 1|55 = ACS.E|59 = 0|60 = 14:16:54.297|70 = AAA|119 = 220.0000000|150 = 0|151 = 20.0000000|528 = A|10 = 188] |

| Test Case | 2 |

|---|---|

| Dest IP | 10.40.x.x |

| Dest Port | 17090 |

| API Request Message | [8 = FIXT.1.1|35 = G|34 = 9|49 = BIA|50 = F91|52 = 14:25:04.944|56 = BI|11 = 22103|37 = 6054100010C68|38 = 20|40 = 2|41 = NONE|44 = 21.00000|54 = 1|55 = ACS.E|60 = 14:25:04.944|70 = AAA|10 = 031|] |

| Expected API Message | [8 = FIXT.1.1|35 = 8|34 = 9|49 = BI|52 = 14:25:05.177|56 = BIA|57 = FX91|1 = ACC|6 = 0|11 = 22103|14 = 0|17 = 2|22 = M|37 = 6054100010C68|38 = 20.0000000|39 = 0|40 = 2|41 = 22102|44 = 21.0000000|48 = 70616|54 = 1|55 = ACS.E|59 = 0|60 = 14:25:05.170|70 = AAA|119 = 220.0000000|150 = 5|151 = 20.0000000|528 = A|10 = 081|] |
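The FIX messages above are shown in a display form, with `|` standing in for the SOH (0x01) field delimiter and spaces around `=`. The sketch below, whose function names are hypothetical, parses either form into (tag, value) pairs and computes the FIX CheckSum (tag 10), which the FIX specification defines as the sum of all bytes preceding the checksum field, modulo 256, written as three digits. Because the tables do not preserve the raw bytes, their tag-10 values cannot be rechecked from the text as printed.

```python
from typing import List, Tuple

SOH = "\x01"  # FIX field delimiter, shown as "|" in the tables


def parse_fix(text: str) -> List[Tuple[int, str]]:
    """Split a FIX message, raw or in the "[8 = ...|35 = ...|]" display form, into (tag, value) pairs."""
    pairs: List[Tuple[int, str]] = []
    for part in text.strip().strip("[]").replace(SOH, "|").split("|"):
        if not part.strip():
            continue  # empty segment after the trailing delimiter
        tag, _, value = part.partition("=")
        pairs.append((int(tag), value.strip()))
    return pairs


def fix_checksum(data: bytes) -> str:
    """CheckSum (tag 10): sum of the bytes modulo 256, formatted as three digits."""
    return f"{sum(data) % 256:03d}"


def has_valid_checksum(raw: bytes) -> bool:
    """Compare the declared tag 10 of a raw SOH-delimited message with the computed value."""
    pos = raw.rfind(b"\x0110=")
    if pos < 0:
        return False
    declared = raw[pos + 4:pos + 7].decode("ascii", errors="replace")
    # The checksum covers every byte up to and including the SOH before "10=".
    return declared == fix_checksum(raw[:pos + 1])
```

Working on (tag, value) pairs rather than raw strings makes it straightforward to compare expected and actual messages tag by tag.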

| JSON File Template | JSONDataType (allowed values) |
|---|---|
| { "description": "API Message Description", "properties": { "field": { "type": "JSONDataType", "required": true / false } } } | string, boolean, object, integer, number, file, array, null |
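One way such a template could be used during validation is to check a decoded message for required fields and declared types. This is a minimal sketch: the function `check_message` and the example field names are hypothetical and are not taken from the authors' JSON files, and mapping `file` to a string is an assumption because JSON itself has no file type.

```python
import json
from typing import Any, Dict, List, Tuple

# Type names listed in the template table, mapped to the Python types json.loads produces.
# JSON has no "file" type; treating it as a string (e.g. a path) is an assumption.
JSON_TYPES: Dict[str, Tuple[type, ...]] = {
    "string": (str,), "boolean": (bool,), "object": (dict,), "array": (list,),
    "integer": (int,), "number": (int, float), "file": (str,), "null": (type(None),),
}


def check_message(template: Dict[str, Any], message: Dict[str, Any]) -> List[str]:
    """List the problems found when checking a decoded message against a template."""
    problems: List[str] = []
    for name, spec in template.get("properties", {}).items():
        if name not in message:
            if spec.get("required", False):
                problems.append(f"missing required field '{name}'")
            continue
        value, allowed = message[name], JSON_TYPES[spec["type"].lower()]
        # bool is a subclass of int in Python, so True must not pass as an integer
        wrong_bool = isinstance(value, bool) and bool not in allowed
        if wrong_bool or not isinstance(value, allowed):
            problems.append(f"field '{name}' should be {spec['type']}")
    return problems


# Hypothetical template with illustrative field names.
order_rejected = json.loads("""{
  "description": "Order rejected message",
  "properties": {
    "orderToken": {"type": "string", "required": true},
    "rejectReason": {"type": "integer", "required": true},
    "text": {"type": "string", "required": false}
  }
}""")

print(check_message(order_rejected, {"orderToken": "1a", "rejectReason": "x"}))
# ["field 'rejectReason' should be integer"]
```

Returning a list of problems instead of a single boolean gives the defect report something concrete to show when a message does not conform.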

| Test ID | Dest IP | Dest Port | Request Message | Response Messages/Expected Messages |

|---|---|---|---|---|

| 1 | 10.40.X.X | 8020 | Login Request Message: [AEH1,XXXXXX,TR16092650,1] | Login Response Message: [TR16092650,0] |

| 2 | 10.40.X.X | 8020 | Enter Order Request Message: [O | 1a | 10769 | B | 30 | 4000 | 0 | 0 | GMR | REF | 12345 | 0 | 1 | 0] | Order Accepted Response Message: [A | 163586118732 | 1a | 10769 | B | 9427703165229781 | 30 | 4000 | 0 | 0 | GMR | 1 | REF | 12345 | 30 | 0 | 1 | 0] |

| 3 | 10.40.X.X | 8020 | Replace Order Request Message: [U | 1a | 3407884 | 25 | 0 | 0 | | | | 0 | 0] | Order Replaced Response Message: [U | 163586167732 | 3407884 | 1a | 10769 | B | 9427703165229781 | 25 | 4000 | 0 | 0 | GMR | 1 | REF | 12345 | 25 | 0 | 1] |

| 4 | 10.40.X.X | 8020 | Cancel Order Request Message: [X | 1a] | Order Canceled Response Message: [C | 163586177305 | 3407884 | 10769 | B | 9427703165229781 | 1] |

| 5 | 10.40.X.X | 8020 | Enter Order Request Message: [O | 3407888 | 7076 | B | 22 | 7000 | 3 | 0 | GRM | REF | 12345 | 0 | 1 | 0] | Order Accepted Response Message: [A | 1635862229859 | 3407888 | 7076 | B | 4277031652297829 | 22 | 7000 | 3 | 0 | GRM | 2 | REF | 12345 | 22 | 0 | 1 | 0] Order Cancelled Response Message: [C | 16358622298593 | 3407888 | 7076 | B | 4277031652297829 | 9] |

| Test ID | Dest IP | Dest Port | Request Message | Response Messages / Expected Messages |

|---|---|---|---|---|

| 1 | 10.40.X.X | 17090 | Login Request Message: [8 = FIXT.1.1|35 = A|34 = 1|49 = BIA|50 = FX91|52 = 13:49:33.730|56 = BI|98 = 0|108 = 300|141 = Y|553 = USER_FIX |554 = XXX|1137 = 9|10 = 190|] | Login Response Message: [8 = FIXT.1.1|35 = A|49 = BI|56 = BIA|34 = 1|52 = 13:49:33.969|98 = 0|108 = 300|141 = Y|1409 = 0|1137 = 9|10 = 219|] |

| 2 | 10.40.X.X | 17090 | Enter Order Request Message: [8 = FIXT.1.1|35 = D|34 = 7|49 = BIA|50 = FX91|52 = 14:16:54.058|56 = BI|1 = XYZ|11 = 22102|38 = 20|40 = 2|44 = 21.000|54 = 1|55 = ACS.E|59 = 0|60 = 14:16:54.057|70 = AAA|528 = A|10 = 048|] | Order Response Message: [8 = FIXT.1.1|35 = 8|34 = 7|49 = BI|52 = 14:16:54.335|56 = BIA|57 = FX91|1 = XYZ|6 = 0|11 = 22102|14 = 0|17 = 1|22 = M|37 = 6054100010C68|38 = 20.0000000|39 = 0|40 = 2|44 = 21.0000000|54 = 1|55 = ACS.E|59 = 0|60 = 14:16:54.297|70 = AAA|119 = 220.0000000|150 = 0|151 = 20.0000000|528 = A|10 = 188|] |

| 3 | 10.40.X.X | 17090 | Replace Order Request Message: [8 = FIXT.1.1|35 = G|34 = 9|49 = BIA|50 = FX91|52 = 14:25:04.944|56 = BI|11 = 22103|37 = 6054100010C68|38 = 20|40 = 2|41 = NONE|44 = 21.00000|54 = 1|55 = ACS.E|60 = 14:25:04.944|70 = AAA|10 = 031|] | Order Replaced Response Message: [8 = FIXT.1.1|35 = 8|34 = 9|49 = BI|52 = 14:25:05.177|56 = BIA|57 = FX91|1 = ACC|6 = 0|11 = 22103|14 = 0|17 = 2|22 = M|37 = 6054100010C68|38 = 20.0000000|39 = 0|40 = 2|41 = 22102|44 = 21.0000000|48 = 70616|54 = 1|55 = ACS.E|59 = 0|60 = 14:25:05.170|70 = AAA|119 = 220.0000000|150 = 5|151 = 20.0000000|528 = A|10 = 081|] |

| 4 | 10.40.X.X | 17090 | Cancel Order Request Message: [8 = FIXT.1.1|35 = F|34 = 12|49 = BIA|50 = FX91|52 = 14:38:28.668|56 = BI|11 = 22104|37 = 6054100010C68|38 = 20|41 = NONE|54 = 1|55 = ACS.E|60 = 14:38:28.668|10 = 252] | Order Canceled Response Message: [8 = FIXT.1.1|35 = 8|34 = 12|49 = BI|52 = 14:38:28.900|56 = BIA|57 = FX91|1 = ACC|6 = 0|11 = 22104|14 = 0|17 = 3|22 = M|37 = 6054100010C68|38 = 20.0000000|39 = 4|41 = 22103|54 = 1|55 = ACS.E|58 = Canceled by user|60 = 14:38:28.894|70 = AAA|150 = 4|151 = 0|528 = A|10 = 092|] |

| 5 | 10.40.X.X | 17090 | Enter Order Request Message: [8 = FIXT.1.1|35 = D|34 = 15|49 = BIA|50 = FX91|52 = 14:44:21.663|56 = BI|1 = XYZ|11 = 22106|38 = 99|40 = 2|44 = 22.000|54 = 1|55 = GAR.E|59 = 3|60 = 14:44:21.663|70 = AAA|528 = A|10 = 115|] | Order Accepted Response Message: [8 = FIXT.1.1|35 = 8|34 = 16|49 = BI|52 = 14:44:21.892|56 = BIA|57 = FX91|1 = XYZ|6 = 0|11 = 22106|14 = 0|17 = 7|22 = M|37 = 1054100010C75|38 = 99.0000000|39 = 0|40 = 2|44 = 22.0000000|48 = 74196|54 = 1|55 = GAR.E|59 = 3|60 = 14:44:21.888|70 = AAA|119 = 1089.0000000|150 = 0|151 = 99.0000000|528 = A|10 = 099|] Order Cancelled Response Message: [8 = FIXT.1.1|35 = 8|34 = 17|49 = BI|52 = 14:44:21.893|56 = BIA|57 = FX91|1 = XYZ|6 = 0|11 = 22106|14 = 0|17 = 9|22 = M|37 = 1054100010C75|38 = 99.0000000|39 = 4|40 = 2|44 = 22.0000000|48 = 74196|54 = 1|55 = GAR.E|58 = Unsolicited Cancel|59 = 3|60 = 14:44:21.888|70 = AAA|150 = 4|151 = 0|528 = A|10 = 022|] |

| Test ID | Report Field | Value |
|---|---|---|
| 1 | TestDate | 16 May 2022 |
| | TestResult | Passed |
| | RequestMsg | [AEH1,XXXXXX,TR16092650,1] |
| | ExpectedResponseMsg | [TR16092650,0] |
| | ActualResponseMsg | [TR16092650,0] |
| 2 | TestDate | 16 May 2022 |
| | TestResult | Passed |
| | RequestMsg | [O | 1a | 10769 | B | 30 | 4000 | 0 | 0 | GMR | REF | 12345 | 0 | 1 | 0] |
| | ExpectedResponseMsg | [A | 163586118732 | 1a | 10769 | B | 9427703165229781 | 30 | 4000 | 0 | 0 | GMR | 1 | REF | 12345 | 30 | 0 | 1 | 0] |
| | ActualResponseMsg | [A | 163586120732 | 20 | 10769 | B | 7899889898999997 | 30 | 4000 | 0 | 0 | GMR | 1 | REF | 12345 | 30 | 0 | 1 | 0] |
| 3 | TestDate | 16 May 2022 |
| | TestResult | Passed |
| | RequestMsg | [U | 1a | 3407884 | 25 | 0 | 0 | | | | 0 | 0] |
| | ExpectedResponseMsg | [U | 163586167732 | 3407884 | 1a | 10769 | B | 9427703165229781 | 25 | 4000 | 0 | 0 | GMR | 1 | REF | 12345 | 25 | 0 | 1] |
| | ActualResponseMsg | [U | 163586208910 | 3407884 | 1a | 10769 | B | 7899889898999997 | 25 | 4000 | 0 | 0 | GMR | 1 | REF | 12345 | 25 | 0 | 1] |
| 4 | TestDate | 16 May 2022 |
| | TestResult | Passed |
| | RequestMsg | [X | 1a] |
| | ExpectedResponseMsg | [C | 163586177305 | 3407884 | 10769 | B | 9427703165229781 | 1] |
| | ActualResponseMsg | [C | 163586228910 | 3407884 | 10769 | B | 7899889898999997 | 1] |
| 5 | TestDate | 16 May 2022 |
| | TestResult | Passed |
| | RequestMsg | [O | 3407888 | 7076 | B | 22 | 7000 | 3 | 0 | GRM | REF | 12345 | 0 | 1 | 0] |
| | ExpectedResponseMsg | [A | 1635862229859 | 3407888 | 7076 | B | 4277031652297829 | 22 | 7000 | 3 | 0 | GRM | 2 | REF | 12345 | 22 | 0 | 1 | 0] [C | 16358622298593 | 3407888 | 7076 | B | 4277031652297829 | 9] |
| | ActualResponseMsg | [A | 163586239910 | 3407888 | 7076 | B | 1278931652297829 | 22 | 7000 | 3 | 0 | GRM | 2 | REF | 12345 | 22 | 0 | 1 | 0] [C | 163586239910 | 3407888 | 7076 | B | 1278931652297829 | 9] |
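In this report, tests pass even though the expected and actual responses differ in some fields; in Test 4, for example, positions 1 and 5 (apparently a timestamp and an exchange-assigned order number) change between runs. This suggests that validation skips run-dependent fields. The source does not state how, so the following is a minimal sketch assuming positional comparison with a caller-supplied set of ignored positions; `parse_fields` and `messages_match` are hypothetical names.

```python
from typing import Iterable, List


def parse_fields(display: str) -> List[str]:
    """Split a pipe-delimited display message such as "[C | ... | 1]" into trimmed fields."""
    return [f.strip() for f in display.strip().strip("[]").split("|")]


def messages_match(expected: str, actual: str, ignored: Iterable[int] = ()) -> bool:
    """Compare two messages field by field, skipping positions that change on every run."""
    exp, act = parse_fields(expected), parse_fields(actual)
    if len(exp) != len(act):
        return False  # a different field count is always a mismatch
    skip = set(ignored)
    return all(e == a for i, (e, a) in enumerate(zip(exp, act)) if i not in skip)


# Test 4 from the report above.
expected = "[C | 163586177305 | 3407884 | 10769 | B | 9427703165229781 | 1]"
actual = "[C | 163586228910 | 3407884 | 10769 | B | 7899889898999997 | 1]"
print(messages_match(expected, actual))                  # False: timestamps differ
print(messages_match(expected, actual, ignored={1, 5}))  # True
```

In practice the ignored positions would differ per message type (in Test 3, the differing positions of the U message are 1 and 6), so a per-type table of ignored fields would be needed.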


© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Demircioğlu, E.D.; Kalipsiz, O. API Message-Driven Regression Testing Framework. Electronics 2022, 11, 2671. https://doi.org/10.3390/electronics11172671