Software Quality: How Much Does It Matter?

Abstract

1. Introduction

2. Materials and Methods

- Harvest the research publications concerning software quality. The corpus of retrieved publications is the content to be analysed and the output of Step 1.

- Identify codes in the corpus using text mining and cluster them into an authors' keyword landscape using bibliometric mapping. Authors' keywords were selected as codes because they represent the content of a publication most concisely. The authors' keyword landscape is the output of Step 2.

- Within each cluster, condense author keywords with similar meanings into codes, analyse the links between the codes, and map them into categories; these categories form the output of Step 3.

- Analyse the categories and name each cluster with an appropriate theme. The list of themes is the final output of the qualitative analysis.
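The keyword-processing part of Steps 2 and 3 can be sketched as follows. This is a minimal illustration under stated assumptions, not the author's actual pipeline: in studies of this kind the mapping and clustering themselves are done with a bibliometric mapping tool such as VOSviewer [23], and the code below only shows the preparatory counting. The thesaurus entries, the `min_occurrences` threshold, the sample keyword lists and the simple association-strength normalisation `c_ij / (s_i * s_j)` (with `s_i` taken as the occurrence count of keyword `i`) are all hypothetical choices made for illustration.

```python
from collections import Counter
from itertools import combinations

# Hypothetical thesaurus: maps keyword variants onto one code (the condensing in Step 3).
THESAURUS = {
    "sqa": "software quality assurance",
    "quality assurance": "software quality assurance",
    "code smell": "code smells",
    "bad smells": "code smells",
}


def normalise(keyword: str) -> str:
    """Lower-case and trim a raw author keyword, then map it onto its code."""
    k = keyword.strip().lower()
    return THESAURUS.get(k, k)


def keyword_landscape(papers, min_occurrences=2):
    """Count code occurrences and pairwise co-occurrences across publications.

    papers: one list of author keywords per publication.
    Only codes occurring at least `min_occurrences` times are retained.
    Returns (occurrences, cooccurrences, association_strength).
    """
    # Each code is counted at most once per publication; sorting keeps pair keys stable.
    codes_per_paper = [sorted({normalise(k) for k in kws}) for kws in papers]
    occurrences = Counter(c for codes in codes_per_paper for c in codes)
    kept = {c for c, n in occurrences.items() if n >= min_occurrences}

    cooc = Counter()
    for codes in codes_per_paper:
        # Every unordered pair of retained codes in one publication adds one link.
        for a, b in combinations([c for c in codes if c in kept], 2):
            cooc[(a, b)] += 1

    # Simplified association strength: links relative to how often both codes occur.
    strength = {
        pair: n / (occurrences[pair[0]] * occurrences[pair[1]])
        for pair, n in cooc.items()
    }
    return {c: occurrences[c] for c in sorted(kept)}, dict(cooc), strength


# Usage with invented keyword lists for three publications.
papers = [
    ["Software quality", "Refactoring", "Code smell"],
    ["software quality", "Bad smells"],
    ["Refactoring", "code smells", "Software maintenance"],
]
occ, cooc, strength = keyword_landscape(papers, min_occurrences=2)
print(occ)   # {'code smells': 3, 'refactoring': 2, 'software quality': 2}
print(cooc[("code smells", "refactoring")])  # 2
```

Here "Code smell", "Bad smells" and "code smells" collapse into a single code before counting, which is what makes the thesaurus step matter: without it, the three variants would appear as separate, weaker nodes in the keyword landscape.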

3. Results and Discussion

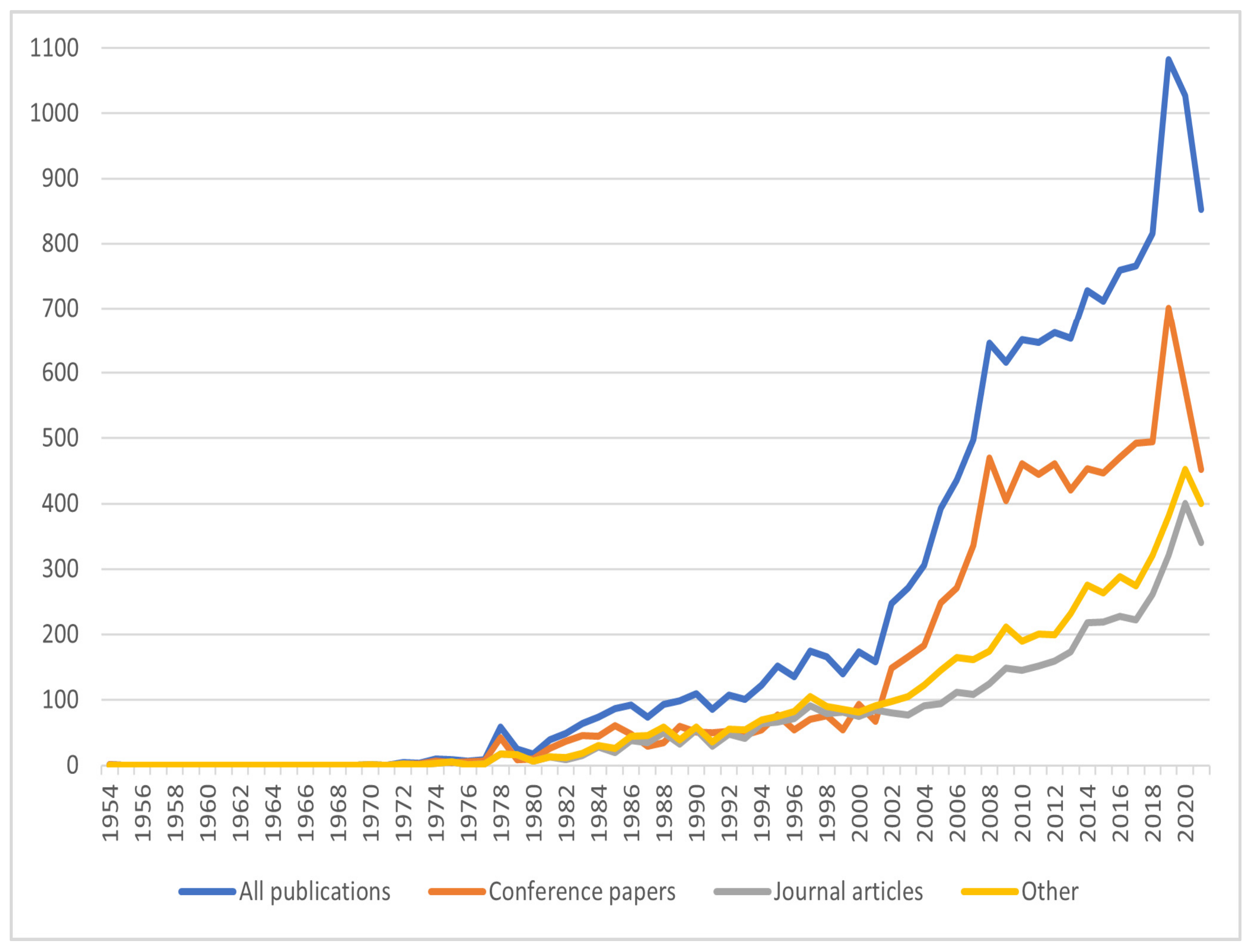

3.1. Descriptive Bibliometrics

Spatial Distribution and Productivity of Literature Production

3.2. Qualitative Synthetic Knowledge Synthesis

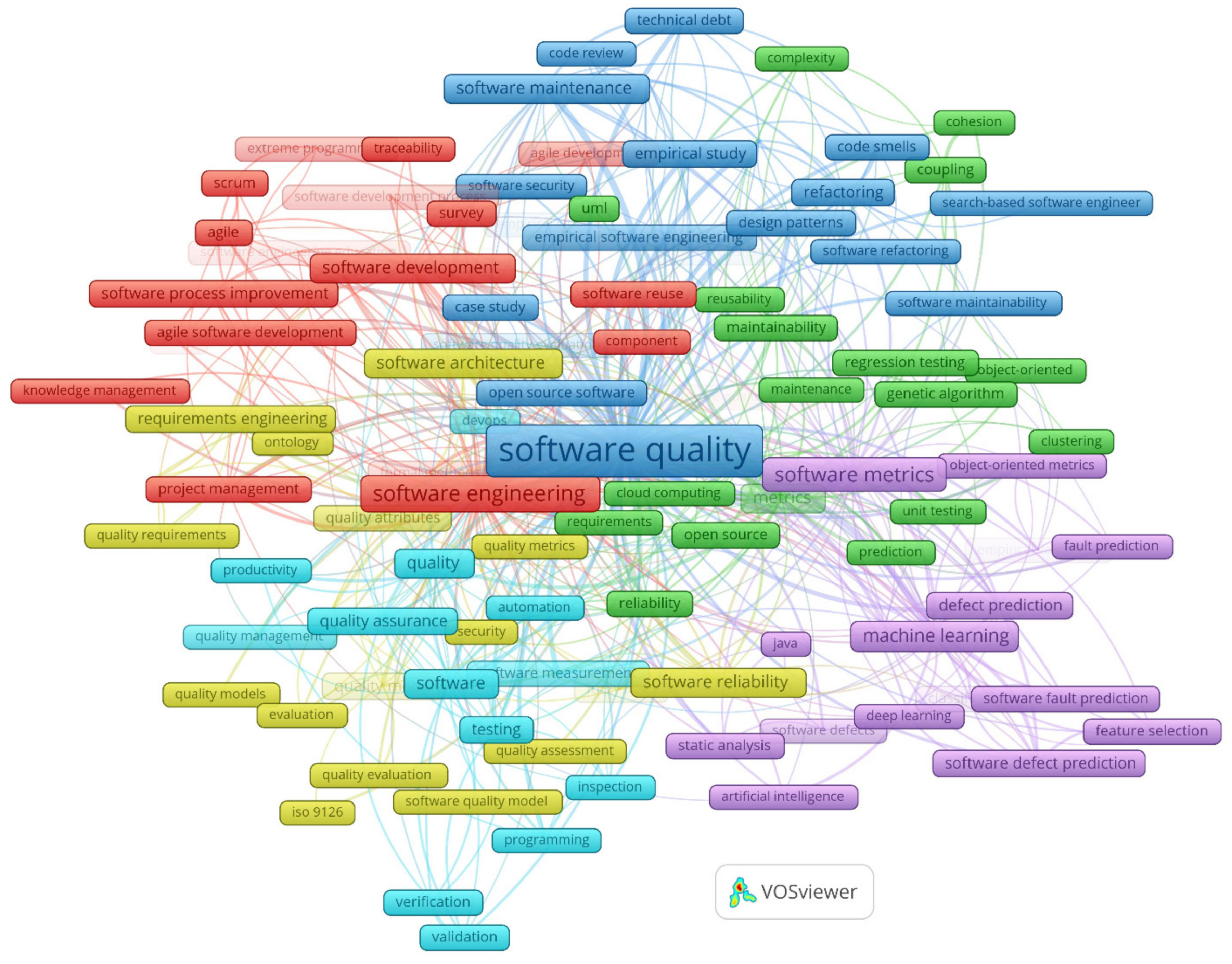

3.2.1. Text Mining and Bibliometric Mapping

3.2.2. Content Analysis

- Software process improvement: In terms of citation counts, the most impactful research was published around the turn of the millennium and addressed software process maturity [32,33] and the importance of top management leadership, management infrastructure and stakeholder participation [34]. Recent important research is still concerned with process maturity, but now combines CMM(I) with DevOps and agile approaches [35,36,37].

- Metric-based software maintenance: The most cited papers on this theme were published between 1993 and 2010 and deal with object-oriented metrics for predicting maintainability [38], metrics-based refactoring [39], fault prediction [40] and code readability metrics [41]. Recent impactful papers deal with technical debt [42], code smells and refactoring [43] and test smells [44].

- Software evolution and refactoring: The most influential research was published in the past decade and was concerned with the prediction of software evolution [45,46], the automatic detection of bad smells that can trigger refactoring [47,48,49] and the association between software defects and refactoring [50].

- Search-based software engineering for defect prediction and classification: Research on this theme has gained importance over the last 15 years. It has mainly used data mining and machine learning to predict software defects and failures from static code attributes and other software documents [56,57,58].

- Software quality management and assurance with testing and inspections: This is the most established theme, with influential papers dating back more than 40 years [59,60,61]. Recent research is concerned with regression testing [11], predictive mutation testing [62], metamorphic testing [63] and modern code reviews [64].
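The defect-prediction idea summarised in the themes above, learning which modules are likely to be defective from static code attributes [56,57,58], can be illustrated with a minimal sketch. Assumptions, all made for illustration only: a from-scratch Gaussian naive Bayes classifier stands in for the many learners used in that literature, the two features (lines of code and cyclomatic complexity) and the helper names `fit_gaussian_nb`, `predict` and `features` are hypothetical, and the training modules are invented toy values, not data or results from any cited study. The metrics are log-transformed because size and complexity metrics are typically heavily skewed.

```python
import math
from statistics import mean, pvariance


def fit_gaussian_nb(rows, labels):
    """Fit per-class priors, feature means and variances (Gaussian naive Bayes)."""
    model = {}
    n = len(rows)
    for cls in set(labels):
        cls_rows = [r for r, y in zip(rows, labels) if y == cls]
        columns = list(zip(*cls_rows))
        model[cls] = (
            len(cls_rows) / n,                           # class prior
            [mean(col) for col in columns],              # per-feature means
            [pvariance(col) + 1e-9 for col in columns],  # variances, smoothed to avoid zero
        )
    return model


def predict(model, row):
    """Return the class with the highest log-posterior for one module."""
    def log_posterior(cls):
        prior, means, variances = model[cls]
        score = math.log(prior)
        for x, m, v in zip(row, means, variances):
            # Log of the Gaussian density; features are assumed independent given the class.
            score += -0.5 * math.log(2 * math.pi * v) - (x - m) ** 2 / (2 * v)
        return score
    return max(model, key=log_posterior)


def features(loc, cyclomatic):
    """Log-transform two static code metrics (hypothetical feature choice)."""
    return [math.log1p(loc), math.log1p(cyclomatic)]


# Invented toy modules: (lines of code, cyclomatic complexity, defective?)
modules = [(40, 2, False), (55, 3, False), (80, 4, False), (60, 2, False),
           (900, 35, True), (1200, 48, True), (700, 30, True), (1500, 60, True)]
X = [features(loc, cc) for loc, cc, _ in modules]
y = [defective for _, _, defective in modules]
model = fit_gaussian_nb(X, y)

print(predict(model, features(50, 3)))     # small, simple module -> False
print(predict(model, features(1000, 40)))  # large, complex module -> True
```

Naive Bayes is used here only because it fits in a few lines without external libraries; the benchmarking literature compares many classifiers, and a realistic study would also need proper validation (e.g. cross-validation) rather than predictions on hand-picked points.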

3.3. Hot Topics

- A new theme: improving software development by integrating CMMI into agile approaches [66].

- New categories:

- Most cited categories in 2020/21:

3.4. How Much Does the Software Quality Matter?

3.5. Strengths and Limitations

4. Conclusions

Funding

Data Availability Statement

Conflicts of Interest

References

1. Kokol, P.; Vošner, H.B.; Kokol, M.; Završnik, J. The Quality of Digital Health Software: Should We Be Concerned? Digit. Health 2022, 8, 20552076221109055.
2. Tian, J. Software Quality Engineering: Testing, Quality Assurance and Quantifiable Improvement; Wiley India Pvt. Limited: New Delhi, India, 2009; ISBN 978-81-265-0805-1.
3. Winkler, D.; Biffl, S.; Mendez, D.; Wimmer, M.; Bergsmann, J. (Eds.) Software Quality: Future Perspectives on Software Engineering Quality: 13th International Conference, SWQD 2021, Vienna, Austria, January 19–21, 2021, Proceedings; Lecture Notes in Business Information Processing; Springer International Publishing: New York, NY, USA, 2021; ISBN 978-3-030-65853-3.
4. Gillies, A. Software Quality: Theory and Management, 3rd ed.; Lulu Press: Morrisville, NC, USA, 2011.
5. Suali, A.J.; Fauzi, S.S.M.; Nasir, M.H.N.M.; Sobri, W.A.W.M.; Raharjana, I.K. Software Quality Measurement in Software Engineering Project: A Systematic Literature Review. J. Theor. Appl. Inf. Technol. 2019, 97, 918–929.
6. Atoum, I. A Novel Framework for Measuring Software Quality-in-Use Based on Semantic Similarity and Sentiment Analysis of Software Reviews. J. King Saud Univ. Comput. Inf. Sci. 2020, 32, 113–125.
7. Lacerda, G.; Petrillo, F.; Pimenta, M.; Guéhéneuc, Y.G. Code Smells and Refactoring: A Tertiary Systematic Review of Challenges and Observations. J. Syst. Softw. 2020, 167, 110610.
8. Wedyan, F.; Abufakher, S. Impact of Design Patterns on Software Quality: A Systematic Literature Review. IET Softw. 2020, 14, 1–17.
9. Saini, G.L.; Panwar, D.; Kumar, S.; Singh, V. A Systematic Literature Review and Comparative Study of Different Software Quality Models. J. Discret. Math. Sci. Cryptogr. 2020, 23, 585–593.
10. Guveyi, E.; Aktas, M.S.; Kalipsiz, O. Human Factor on Software Quality: A Systematic Literature Review. In International Conference on Computational Science and Its Applications; Springer: Cham, Switzerland, 2020; pp. 918–930.
11. Khatibsyarbini, M.; Isa, M.A.; Jawawi, D.N.A.; Tumeng, R. Test Case Prioritization Approaches in Regression Testing: A Systematic Literature Review. Inf. Softw. Technol. 2018, 93, 74–93.
12. Rathore, S.S.; Kumar, S. A Study on Software Fault Prediction Techniques. Artif. Intell. Rev. 2019, 51, 255–327.
13. Cowlessur, S.K.; Pattnaik, S.; Pattanayak, B.K. A Review of Machine Learning Techniques for Software Quality Prediction. Adv. Intell. Syst. Comput. 2020, 1089, 537–549.
14. Kupiainen, E.; Mäntylä, M.V.; Itkonen, J. Using Metrics in Agile and Lean Software Development—A Systematic Literature Review of Industrial Studies. Inf. Softw. Technol. 2015, 62, 143–163.
15. Van Raan, A. Measuring Science: Basic Principles and Application of Advanced Bibliometrics. In Springer Handbook of Science and Technology Indicators; Glänzel, W., Moed, H.F., Schmoch, U., Thelwall, M., Eds.; Springer Handbooks; Springer International Publishing: Cham, Switzerland, 2019; pp. 237–280. ISBN 978-3-030-02511-3.
16. Pachouly, J.; Ahirrao, S.; Kotecha, K. A Bibliometric Survey on the Reliable Software Delivery Using Predictive Analysis. Libr. Philos. Pract. 2020, 2020, 1–27.
17. Kokol, P.; Kokol, M.; Zagoranski, S. Code Smells: A Synthetic Narrative Review. arXiv 2021, arXiv:2103.01088.
18. Chalmers, I.; Hedges, L.V.; Cooper, H. A Brief History of Research Synthesis. Eval. Health Prof. 2002, 25, 12–37.
19. Tricco, A.C.; Tetzlaff, J.; Moher, D. The Art and Science of Knowledge Synthesis. J. Clin. Epidemiol. 2011, 64, 11–20.
20. Whittemore, R.; Chao, A.; Jang, M.; Minges, K.E.; Park, C. Methods for Knowledge Synthesis: An Overview. Heart Lung 2014, 43, 453–461.
21. Kyngäs, H.; Mikkonen, K.; Kääriäinen, M. The Application of Content Analysis in Nursing Science Research; Springer Nature: Berlin/Heidelberg, Germany, 2020.
22. Kokol, P.; Kokol, M.; Zagoranski, S. Machine Learning on Small Size Samples: A Synthetic Knowledge Synthesis. Sci. Prog. 2022, 105, 00368504211029777.
23. Van Eck, N.J.; Waltman, L. Software Survey: VOSviewer, a Computer Program for Bibliometric Mapping. Scientometrics 2010, 84, 523–538.
24. Tomayko, J.E. Teaching Software Development in a Studio Environment. In Proceedings of the SIGCSE 1991, San Antonio, TX, USA, 7–8 March 1991; pp. 300–303.
25. Dijkstra, E.W. The Humble Programmer. Commun. ACM 1972, 15, 859–866.
26. Cavano, J.P.; McCall, J.A. A Framework for the Measurement of Software Quality. SIGMETRICS Perform. Eval. Rev. 1978, 7, 133–139.
27. Dingsøyr, T.; Nerur, S.; Balijepally, V.; Moe, N.B. A Decade of Agile Methodologies: Towards Explaining Agile Software Development. J. Syst. Softw. 2012, 85, 1213–1221.
28. 10 Best Countries to Outsource Software Development, Based on Data. 2019. Available online: https://www.codeinwp.com/blog/best-countries-to-outsource-software-development/ (accessed on 25 July 2022).
29. Qubit Labs. How Many Programmers Are There in the World and in the US? 2022. Available online: https://qubit-labs.com/how-many-programmers-in-the-world/#:~:text=As%20per%20the%20information%20above,are%20located%20in%20North%20America (accessed on 25 July 2022).
30. Journal Metrics in Scopus: Source Normalized Impact per Paper (SNIP) | Elsevier Scopus Blog. Available online: https://blog.scopus.com/posts/journal-metrics-in-scopus-source-normalized-impact-per-paper-snip (accessed on 25 July 2022).
31. Kokol, P. Funded and Non-Funded Research Literature in Software Engineering in Relation to Country Determinants. COLLNET J. Scientometr. Inf. Manag. 2019, 13, 103–109.
32. Harter, D.E.; Krishnan, M.S.; Slaughter, S.A. Effects of Process Maturity on Quality, Cycle Time, and Effort in Software Product Development. Manag. Sci. 2000, 46, 451–466.
33. Herbsleb, J.; Zubrow, D.; Goldenson, D.; Hayes, W.; Paulk, M. Software Quality and the Capability Maturity Model. Commun. ACM 1997, 40, 30–40.
34. Ravichandran, T.; Rai, A. Quality Management in Systems Development: An Organizational System Perspective. MIS Q. Manag. Inf. Syst. 2000, 24, 381–410.
35. Alqadri, Y.; Budiardjo, E.K.; Ferdinansyah, A.; Rokhman, M.F. The CMMI-Dev Implementation Factors for Software Quality Improvement: A Case of XYZ Corporation. In Proceedings of the 2020 2nd Asia Pacific Information Technology Conference, Bali Island, Indonesia, 17–19 January 2020; pp. 34–40.
36. Ferdinansyah, A.; Purwandari, B. Challenges in Combining Agile Development and CMMI: A Systematic Literature Review. In Proceedings of the ICSCA 2021: 2021 10th International Conference on Software and Computer Applications, Kuala Lumpur, Malaysia, 23–26 February 2021; pp. 63–69.
37. Rafi, S.; Yu, W.; Akbar, M.A.; Mahmood, S.; Alsanad, A.; Gumaei, A. Readiness Model for DevOps Implementation in Software Organizations. J. Softw. Evol. Process 2021, 33, e2323.
38. Li, W.; Henry, S. Object-Oriented Metrics That Predict Maintainability. J. Syst. Softw. 1993, 23, 111–122.
39. Simon, F.; Steinbrückner, F.; Lewerentz, C. Metrics Based Refactoring. In Proceedings of the Fifth European Conference on Software Maintenance and Reengineering, Lisbon, Portugal, 14–16 March 2001; pp. 30–38.
40. Olague, H.M.; Etzkorn, L.H.; Gholston, S.; Quattlebaum, S. Empirical Validation of Three Software Metrics Suites to Predict Fault-Proneness of Object-Oriented Classes Developed Using Highly Iterative or Agile Software Development Processes. IEEE Trans. Softw. Eng. 2007, 33, 402–419.
41. Buse, R.P.L.; Weimer, W.R. Learning a Metric for Code Readability. IEEE Trans. Softw. Eng. 2010, 36, 546–558.
42. Tsoukalas, D.; Kehagias, D.; Siavvas, M.; Chatzigeorgiou, A. Technical Debt Forecasting: An Empirical Study on Open-Source Repositories. J. Syst. Softw. 2020, 170, 110777.
43. Agnihotri, M.; Chug, A. A Systematic Literature Survey of Software Metrics, Code Smells and Refactoring Techniques. J. Inf. Processing Syst. 2020, 16, 915–934.
44. Kim, D.J.; Chen, T.-H.; Yang, J. The Secret Life of Test Smells—An Empirical Study on Test Smell Evolution and Maintenance. Empir. Softw. Eng. 2021, 26, 1–47.
45. Bhattacharya, P.; Iliofotou, M.; Neamtiu, I.; Faloutsos, M. Graph-Based Analysis and Prediction for Software Evolution. In Proceedings of the ICSE '12: 34th International Conference on Software Engineering, Zurich, Switzerland, 2–9 June 2012; pp. 419–429.
46. Wyrich, M.; Bogner, J. Towards an Autonomous Bot for Automatic Source Code Refactoring. In Proceedings of the 2019 IEEE/ACM 1st International Workshop on Bots in Software Engineering (BotSE), Montreal, QC, Canada, 28 May 2019; pp. 24–28.
47. Fontana, F.A.; Braione, P.; Zanoni, M. Automatic Detection of Bad Smells in Code: An Experimental Assessment. J. Object Technol. 2012, 11, 1–38.
48. Ouni, A.; Kessentini, M.; Sahraoui, H.; Inoue, K.; Deb, K. Multi-Criteria Code Refactoring Using Search-Based Software Engineering: An Industrial Case Study. ACM Trans. Softw. Eng. Methodol. 2016, 25, 1–53.
49. Bavota, G.; De Lucia, A.; Marcus, A.; Oliveto, R. Automating Extract Class Refactoring: An Improved Method and Its Evaluation. Empir. Softw. Eng. 2014, 19, 1617–1664.
50. Ratzinger, J.; Sigmund, T.; Gall, H.C. On the Relation of Refactoring and Software Defects. In Proceedings of the 2008 International Working Conference on Mining Software Repositories, MSR 2008, Leipzig, Germany, 10–11 May 2008; pp. 35–38.
51. Folmer, E.; Bosch, J. Architecting for Usability: A Survey. J. Syst. Softw. 2004, 70, 61–78.
52. Wang, W.-L.; Pan, D.; Chen, M.-H. Architecture-Based Software Reliability Modeling. J. Syst. Softw. 2006, 79, 132–146.
53. Mellado, D.; Fernández-Medina, E.; Piattini, M. A Common Criteria Based Security Requirements Engineering Process for the Development of Secure Information Systems. Comput. Stand. Interfaces 2007, 29, 244–253.
54. Sedeño, J.; Schön, E.-M.; Torrecilla-Salinas, C.; Thomaschewski, J.; Escalona, M.J.; Mejias, M. Modelling Agile Requirements Using Context-Based Persona Stories. In Proceedings of the WEBIST 2017: 13th International Conference on Web Information Systems and Technologies, Porto, Portugal, 25–27 April 2017; pp. 196–203.
55. Shull, F.; Rus, I.; Basili, V. How Perspective-Based Reading Can Improve Requirements Inspections. Computer 2000, 33, 73–79.
56. Menzies, T.; Greenwald, J.; Frank, A. Data Mining Static Code Attributes to Learn Defect Predictors. IEEE Trans. Softw. Eng. 2007, 33, 2–13.
57. Lessmann, S.; Baesens, B.; Mues, C.; Pietsch, S. Benchmarking Classification Models for Software Defect Prediction: A Proposed Framework and Novel Findings. IEEE Trans. Softw. Eng. 2008, 34, 485–496.
58. Song, Q.; Shepperd, M.; Cartwright, M.; Mair, C. Software Defect Association Mining and Defect Correction Effort Prediction. IEEE Trans. Softw. Eng. 2006, 32, 69–82.
59. Boehm, B.W.; Brown, J.R.; Lipow, M. Quantitative Evaluation of Software Quality. In Proceedings of the 2nd International Conference on Software Engineering, San Francisco, CA, USA, 13–15 October 1976; pp. 592–605.
60. Fagan, M.E. Advances in Software Inspections. IEEE Trans. Softw. Eng. 1986, SE-12, 744–751.
61. McCabe, T.J.; Butler, C.W. Design Complexity Measurement and Testing. Commun. ACM 1989, 32, 1415–1425.
62. Zhang, J.; Zhang, L.; Harman, M.; Hao, D.; Jia, Y.; Zhang, L. Predictive Mutation Testing. IEEE Trans. Softw. Eng. 2019, 45, 898–918.
63. Segura, S.; Towey, D.; Zhou, Z.Q.; Chen, T.Y. Metamorphic Testing: Testing the Untestable. IEEE Softw. 2020, 37, 46–53.
64. Uchoa, A.; Barbosa, C.; Oizumi, W.; Blenilio, P.; Lima, R.; Garcia, A.; Bezerra, C. How Does Modern Code Review Impact Software Design Degradation? An In-Depth Empirical Study. In Proceedings of the 2020 IEEE International Conference on Software Maintenance and Evolution (ICSME), Adelaide, Australia, 28 September–2 October 2020; pp. 511–522.
65. Kokol, P.; Završnik, J.; Blažun Vošner, H. Bibliographic-Based Identification of Hot Future Research Topics: An Opportunity for Hospital Librarianship. J. Hosp. Librariansh. 2018, 18, 1–8.
66. Amer, S.K.; Badr, N.; Hamad, A. Combining CMMI Specific Practices with Scrum Model to Address Shortcomings in Process Maturity. Adv. Intell. Syst. Comput. 2020, 921, 898–907.
67. Ahmad, A.; Feng, C.; Khan, M.; Khan, A.; Ullah, A.; Nazir, S.; Tahir, A. A Systematic Literature Review on Using Machine Learning Algorithms for Software Requirements Identification on Stack Overflow. Secur. Commun. Netw. 2020, 2020, 8830683.
68. Oriol, M.; Martínez-Fernández, S.; Behutiye, W.; Farré, C.; Kozik, R.; Seppänen, P.; Vollmer, A.M.; Rodríguez, P.; Franch, X.; Aaramaa, S.; et al. Data-Driven and Tool-Supported Elicitation of Quality Requirements in Agile Companies. Softw. Qual. J. 2020, 28, 931–963.
69. Minerva, R.; Lee, G.M.; Crespi, N. Digital Twin in the IoT Context: A Survey on Technical Features, Scenarios, and Architectural Models. Proc. IEEE 2020, 108, 1785–1824.
70. Liu, X.L.; Wang, W.M.; Guo, H.; Barenji, A.V.; Li, Z.; Huang, G.Q. Industrial Blockchain Based Framework for Product Lifecycle Management in Industry 4.0. Robot. Comput.-Integr. Manuf. 2020, 63, 101897.
71. Caram, F.L.; Rodrigues, B.R.D.O.; Campanelli, A.S.; Parreiras, F.S. Machine Learning Techniques for Code Smells Detection: A Systematic Mapping Study. Int. J. Softw. Eng. Knowl. Eng. 2019, 29, 285–316.
72. Winkle, T.; Erbsmehl, C.; Bengler, K. Area-Wide Real-World Test Scenarios of Poor Visibility for Safe Development of Automated Vehicles. Eur. Transp. Res. Rev. 2018, 10, 32.
73. Sun, G.; Guan, X.; Yi, X.; Zhou, Z. An Innovative TOPSIS Approach Based on Hesitant Fuzzy Correlation Coefficient and Its Applications. Appl. Soft Comput. J. 2018, 68, 249–267.

| Country | Number of Publications |
|---|---|
| United States | 2917 |
| China | 1684 |
| India | 1436 |
| Germany | 961 |
| Canada | 734 |
| Brazil | 698 |
| United Kingdom | 626 |
| Italy | 510 |
| Spain | 428 |
| Japan | 427 |

| Institution | Number of Publications |
|---|---|
| Florida Atlantic University | 227 |
| Beihang University | 127 |
| Amity University | 99 |
| Peking University | 95 |
| Carnegie Mellon University | 87 |
| Universidade de São Paulo | 86 |
| Technical University of Munich | 83 |
| École de Technologie Supérieure | 80 |
| Chinese Academy of Sciences | 79 |
| Fraunhofer Institute for Experimental Software Engineering IESE | 75 |

| Source Title | Number of Publications | SNIP 2020 |
|---|---|---|
| Lecture Notes in Computer Science including Subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics | 737 | 0.628 |
| ACM International Conference Proceeding Series | 394 | 0.296 |
| Proceedings International Conference on Software Engineering | 324 | 1.68 |
| Communications in Computer and Information Science | 226 | 0.32 |
| CEUR Workshop Proceedings | 221 | 0.345 |
| Information and Software Technology | 214 | 2.389 |
| Software Quality Journal | 191 | 1.388 |
| Advances in Intelligent Systems and Computing | 190 | 0.428 |
| Journal of Systems and Software | 186 | 2.16 |
| IEEE Software | 152 | 1.934 |

| Funding Agency | Number of Publications |
|---|---|
| National Natural Science Foundation of China | 318 |
| National Science Foundation (USA) | 182 |
| European Commission | 132 |
| Horizon 2020 Framework Programme (EU) | 97 |
| Conselho Nacional de Desenvolvimento Científico e Tecnológico (Brazil) | 89 |
| Natural Sciences and Engineering Research Council of Canada | 77 |
| Coordenação de Aperfeiçoamento de Pessoal de Nível Superior (Brazil) | 76 |
| National Key Research and Development Program of China | 66 |
| European Regional Development Fund | 65 |
| Japan Society for the Promotion of Science | 61 |

| Colour | Codes | Concepts/Categories | Themes |
|---|---|---|---|
| Red (26 keywords) | Software engineering (641), Software quality assurance and management (330), Agile software development (319), Software development (276), Software process improvement (203), Software process (109), Software reuse (91), Project management (90) | Software quality assurance with project and knowledge management; software process improvement with agile approaches and CMMI; software reuse with product lines based on software quality metrics | Software process improvement |
| Green (21 keywords) | Software testing (694), Metrics (578), Reliability (108), Maintainability (135), UML (80), Genetic algorithms (71) | Metrics-based software testing supported by genetic algorithms; using and predicting maintainability metrics such as reusability, complexity, cohesion and coupling | Metric-based software maintenance |
| Blue (20 keywords) | Software quality (2685), Empirical studies in software engineering (281), Refactoring (226), Software maintenance (259), Technical debt and code smells (183), Software evolution (130) | Mining software repositories to support empirical and search-based software engineering; software evolution with refactoring based on code smells; technical debt and code smells in association with software maintenance | Software evolution and refactoring |
| Yellow (19 keywords) | Software architecture (253), Software reliability (233), Requirements engineering (226), Software quality models (214), Usability (108), Quality metrics and attributes (136), Quality assessment and evaluation (99) | Quality attributes of software requirements and architecture; quality attributes of software quality models and standards; general quality metrics such as reliability, security and usability | Quality assurance in the initial phases of the software development life cycle |
| Violet (16 keywords) | Software metrics (568), Machine learning and data mining (479), Fault and defect prediction (228) | Use of software metrics and data mining in defect prediction and classification | Search-based software engineering for defect prediction and classification |
| Light blue (14 keywords) | Quality (221), Software (204), Quality management and assurance (164), Testing (148) | Testing and inspection, verification and validation; testing automation; programming productivity and quality | Software quality management and assurance with testing and inspections |


© 2022 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Kokol, P. Software Quality: How Much Does It Matter? Electronics 2022, 11, 2485. https://doi.org/10.3390/electronics11162485
