An Improved Multi-Objective Deep Reinforcement Learning Algorithm Based on Envelope Update

Abstract

1. Introduction

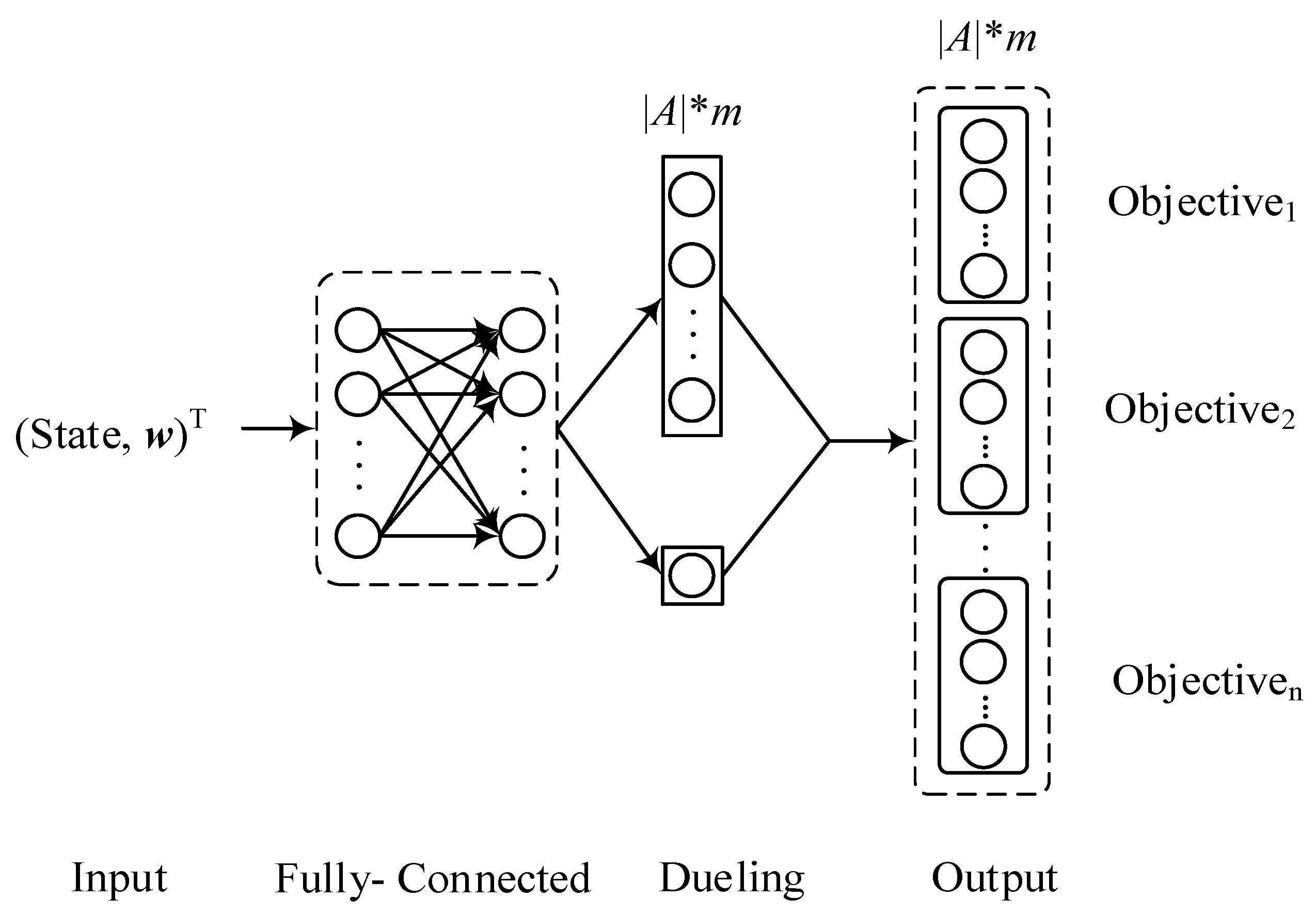

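- Replacing the traditional DQN structure with a dueling structure, which splits the action-value function into a state-value function and an advantage function. The value of a state can then be learned without evaluating the effect of every action, which avoids unnecessary value estimation and makes the model converge faster.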

- Adding exploration noise to the neural network parameters using the Noisynet method, which applies persistent random perturbations, sampled independently of the state, to the network weights. These perturbations induce complex changes in the policy and let the agent explore efficiently.

- Replacing the traditional DQN target update, which periodically copies the online network into the target network, with a soft parameter update, so that the target network is updated at every iteration. This improves the training speed and stabilizes the training procedure.

- Using a hindsight experience replay approach [12] to update the utility-based multi-objective Q-network, which lets the algorithm learn more efficiently by reusing experience under different sampled preferences.
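The dueling aggregation mentioned in the first item can be illustrated with a minimal sketch. It uses the mean-subtracted form of Wang et al. (2016), Q(s, a) = V(s) + A(s, a) − mean over a′ of A(s, a′), and assumes, since the exact head used here is not given, that the aggregation is applied separately to each objective of a vector-valued Q-function. The function name `dueling_q` is hypothetical and the network layers producing V and A are omitted.

```python
from typing import List

Vector = List[float]  # one entry per objective


def dueling_q(value: Vector, advantages: List[Vector]) -> List[Vector]:
    """Combine V(s) and A(s, a) into Q(s, a) for every action a.

    Mean-subtracted aggregation of Wang et al. (2016):
        Q(s, a) = V(s) + A(s, a) - mean over a' of A(s, a')
    Assumption: applied separately to each objective of a vector-valued Q.
    """
    n_actions = len(advantages)
    n_objectives = len(value)
    # Mean advantage over actions, computed per objective.
    mean_adv = [sum(adv[k] for adv in advantages) / n_actions
                for k in range(n_objectives)]
    return [[value[k] + adv[k] - mean_adv[k] for k in range(n_objectives)]
            for adv in advantages]


q = dueling_q(value=[10.0, 5.0], advantages=[[1.0, 0.0], [3.0, 2.0]])
print(q)  # [[9.0, 4.0], [11.0, 6.0]]
```

Subtracting the mean advantage makes the decomposition identifiable: averaged over actions, Q equals V for each objective (here (9 + 11) / 2 = 10 and (4 + 6) / 2 = 5).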
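The envelope update of Yang et al. (2019), combined with the preference reuse described in the last item, can be sketched as follows. The envelope target is y = r + γ · argQ max over (a, ω′) of ωᵀQ(s′, a, ω′): among all actions and all sampled preferences ω′, it keeps the Q-vector whose utility under the current preference ω is largest. This is a minimal sketch, not the authors' implementation. It assumes the Q-network is abstracted as a hypothetical callable `q_next(action, preference)`, that preferences are drawn uniformly from the probability simplex, and that one stored transition is relabelled with every sampled preference; `envelope_target`, `sample_preferences` and `hindsight_targets` are illustrative names. The default γ = 0.99 follows the discount factor in the parameter table.

```python
import math
import random
from typing import Callable, List, Optional, Sequence

Vector = List[float]
# Hypothetical interface: q_next(action, preference) -> Q-vector at the next state.
QFunction = Callable[[int, Vector], Vector]


def dot(u: Sequence[float], v: Sequence[float]) -> float:
    return sum(a * b for a, b in zip(u, v))


def sample_preferences(n: int, n_objectives: int, rng: random.Random) -> List[Vector]:
    """Assumed sampler: n preference vectors uniform on the probability simplex."""
    prefs = []
    for _ in range(n):
        # Normalised Exp(1) draws are uniformly distributed on the simplex.
        e = [rng.expovariate(1.0) for _ in range(n_objectives)]
        total = sum(e)
        prefs.append([v / total for v in e])
    return prefs


def envelope_target(reward: Vector, q_next: QFunction, n_actions: int,
                    omega: Vector, preferences: List[Vector],
                    gamma: float = 0.99, done: bool = False) -> Vector:
    """y = r + gamma * argQ max over (a, w') of omega . Q(s', a, w')."""
    if done:
        return list(reward)
    best_q: Optional[Vector] = None
    best_utility = -math.inf
    for w_prime in preferences:
        for a in range(n_actions):
            q = q_next(a, w_prime)
            utility = dot(omega, q)  # scalarise with the current preference omega
            if utility > best_utility:
                best_q, best_utility = q, utility
    if best_q is None:
        raise ValueError("need at least one action and one preference")
    return [r + gamma * qk for r, qk in zip(reward, best_q)]


def hindsight_targets(reward: Vector, q_next: QFunction, n_actions: int,
                      preferences: List[Vector], gamma: float = 0.99,
                      done: bool = False) -> List[Vector]:
    """Reuse one transition with every sampled preference (one target each)."""
    return [envelope_target(reward, q_next, n_actions, w, preferences, gamma, done)
            for w in preferences]
```

For example, if the next-state Q-vector does not depend on the action and equals 2ω′, then with ω = (1, 0), sampled preferences (0.5, 0.5) and (1, 0), zero reward and γ = 0.5, the envelope picks Q = (2, 0) from ω′ = (1, 0) and returns the target (1.0, 0.0): the maximisation over ω′ lets one preference benefit from Q-estimates learned under another.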

2. Related Work

3. Background

3.1. Multi-Objective Optimization

3.2. DQN

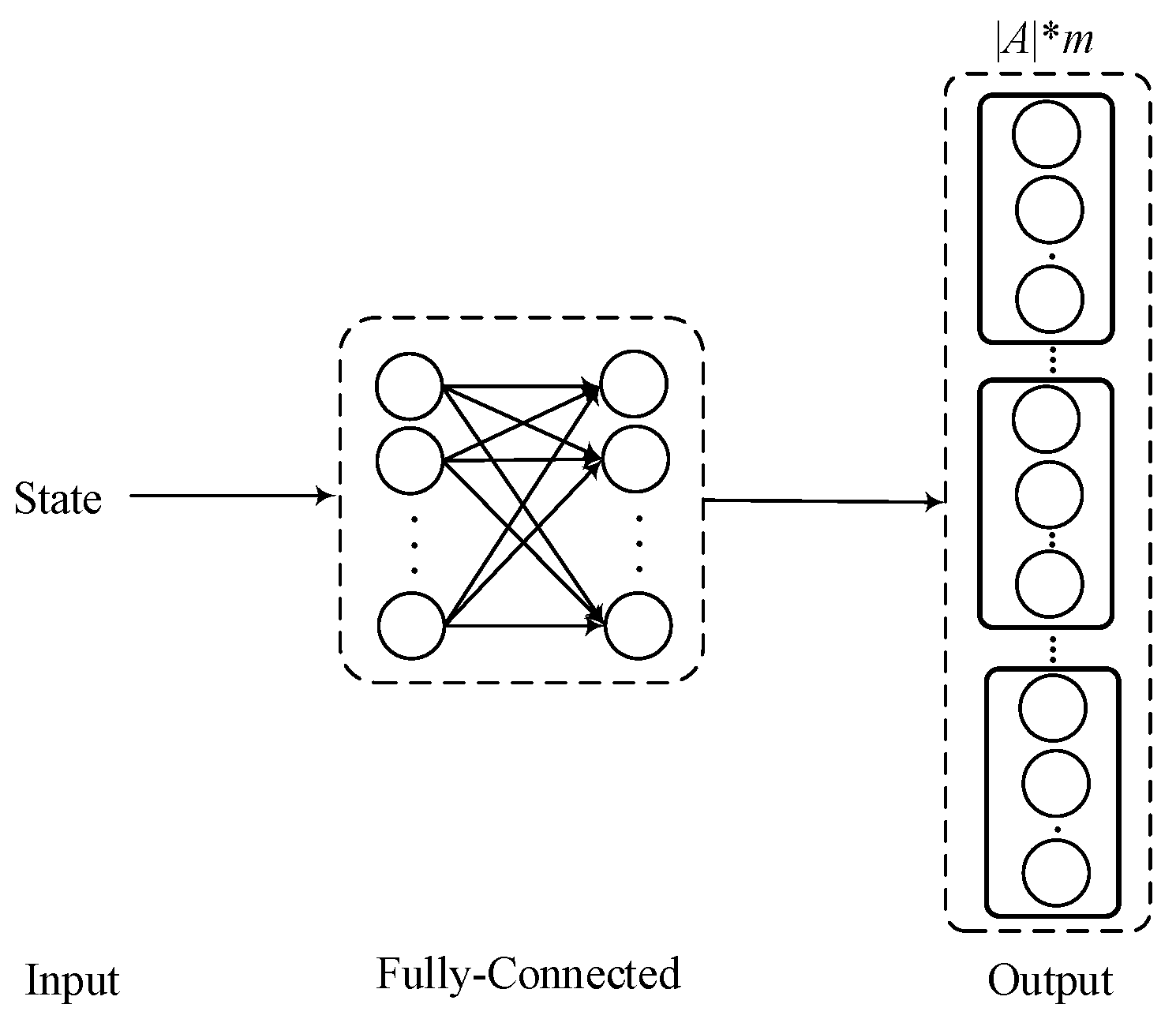

3.3. Multi-Objective Deep Reinforcement Learning

3.4. Envelope Update Method

4. EDNs Algorithm

4.1. Dueling Network

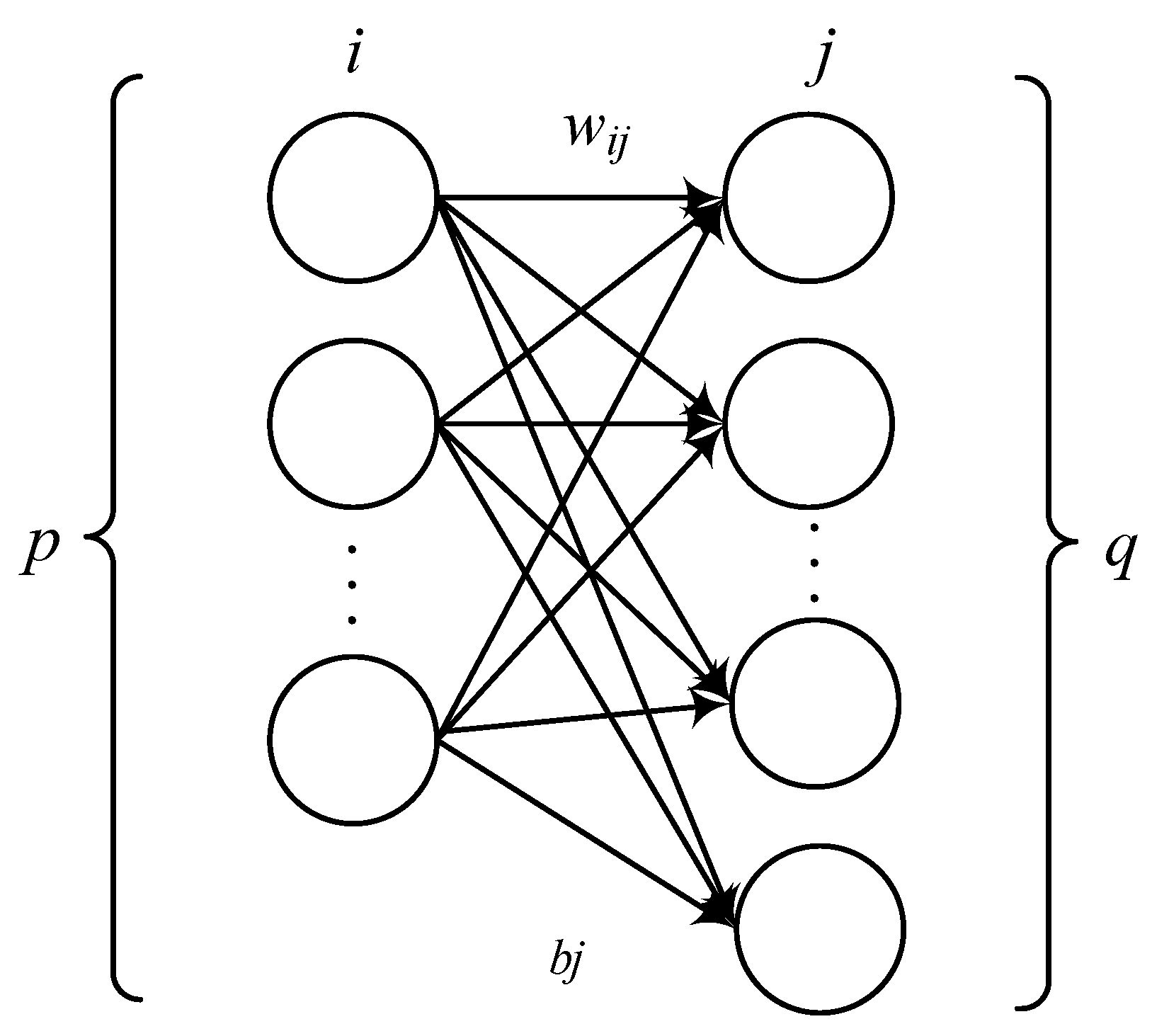

4.2. Exploring Noise

4.3. Soft Update of the Target Network

4.4. Algorithm Description

Algorithm 1. Envelope with dueling structure, Noisynet, and soft update.

5. Experiment Design and Result Analysis

5.1. Environment Description and Parameter Setting

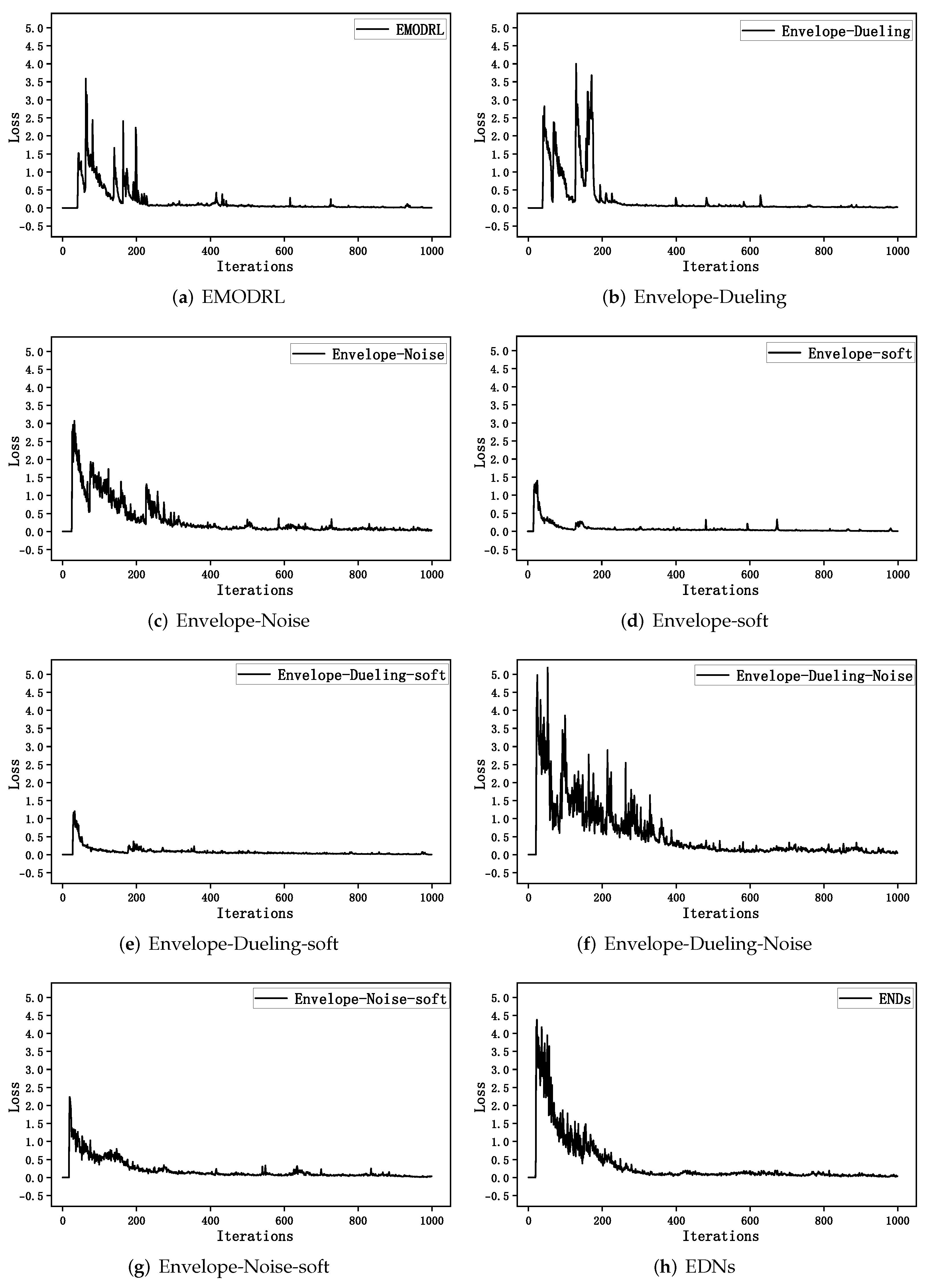

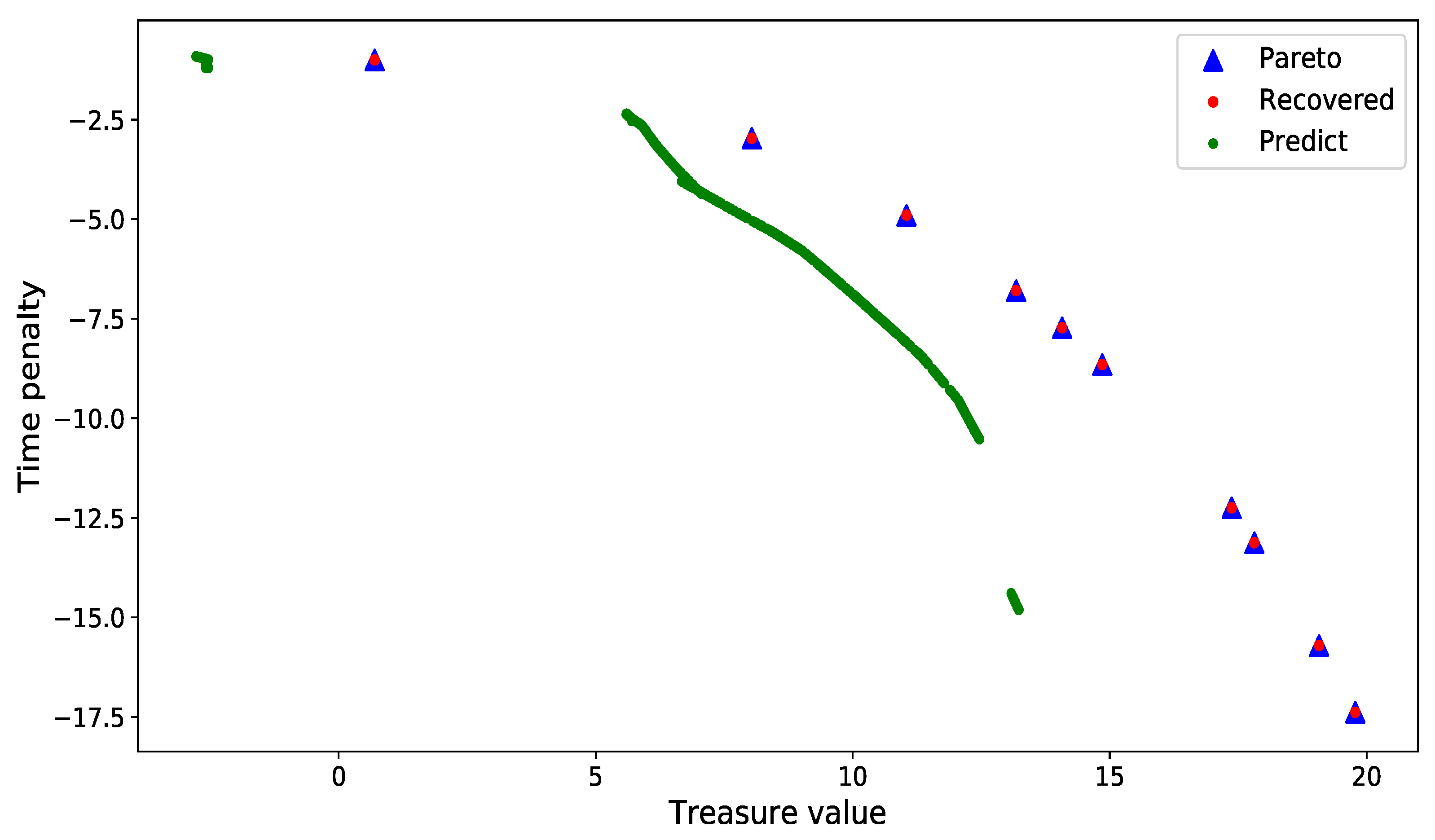

5.2. Ablation Study

6. Conclusions and Future Work

Author Contributions

Funding

Conflicts of Interest

References

- Gronauer, S.; Diepold, K. Multi-agent deep reinforcement learning: A survey. Artif. Intell. Rev. 2022, 55, 895–943.

- Pateria, S.; Subagdja, B.; Tan, A.H.; Quek, C. Hierarchical reinforcement learning: A comprehensive survey. ACM Comput. Surv. (CSUR) 2021, 54, 1–35.

- Chen, W.; Qiu, X.; Cai, T.; Dai, H.N.; Zheng, Z.; Zhang, Y. Deep reinforcement learning for Internet of Things: A comprehensive survey. IEEE Commun. Surv. Tutor. 2021, 23, 1659–1692.

- Czech, J. Distributed methods for reinforcement learning survey. In Reinforcement Learning Algorithms: Analysis and Applications; Springer: Berlin/Heidelberg, Germany, 2021; pp. 151–161.

- Schneider, S.; Khalili, R.; Manzoor, A.; Qarawlus, H.; Schellenberg, R.; Karl, H.; Hecker, A. Self-learning multi-objective service coordination using deep reinforcement learning. IEEE Trans. Netw. Serv. Manag. 2021, 18, 3829–3842.

- Hayes, C.F.; Rădulescu, R.; Bargiacchi, E.; Källström, J.; Macfarlane, M.; Reymond, M.; Verstraeten, T.; Zintgraf, L.M.; Dazeley, R.; Heintz, F.; et al. A practical guide to multi-objective reinforcement learning and planning. Auton. Agents Multi-Agent Syst. 2022, 36, 26.

- Nakayama, H.; Yun, Y.; Yoon, M. Sequential Approximate Multiobjective Optimization Using Computational Intelligence; Springer: Berlin/Heidelberg, Germany, 2010.

- Konak, A.; Coit, D.W.; Smith, A.E. Multi-objective optimization using genetic algorithms: A tutorial. Reliab. Eng. Syst. Saf. 2006, 91, 992–1007.

- Friedman, E.; Fontaine, F. Generalizing across multi-objective reward functions in deep reinforcement learning. arXiv 2018, arXiv:1809.06364.

- Roijers, D.M.; Vamplew, P.; Whiteson, S.; Dazeley, R. A survey of multi-objective sequential decision-making. J. Artif. Intell. Res. 2013, 48, 67–113.

- Dornheim, J. gTLO: A Generalized and Non-linear Multi-Objective Deep Reinforcement Learning Approach. arXiv 2022, arXiv:2204.04988.

- Andrychowicz, M.; Wolski, F.; Ray, A.; Schneider, J.; Fong, R.; Welinder, P.; McGrew, B.; Tobin, J.; Abbeel, P.; Zaremba, W. Hindsight experience replay. In Proceedings of the Advances in Neural Information Processing Systems 30: Annual Conference on Neural Information Processing Systems, Long Beach, CA, USA, 4–9 December 2017.

- Dubey, R.; Mishra, V.N. Higher-order symmetric duality in nondifferentiable multiobjective fractional programming problem over cone contraints. Stat. Optim. Inf. Comput. 2020, 8, 187–205.

- Vandana, D.R.; Deepmala, M.L.; Mishra, V. Duality relations for a class of a multiobjective fractional programming problem involving support functions. Am. J. Oper. Res. 2018, 8, 294–311.

- Vamplew, P.; Dazeley, R.; Berry, A.; Issabekov, R.; Dekker, E. Empirical evaluation methods for multiobjective reinforcement learning algorithms. Mach. Learn. 2011, 84, 51–80.

- Van Moffaert, K.; Drugan, M.M.; Nowé, A. Scalarized multi-objective reinforcement learning: Novel design techniques. In Proceedings of the 2013 IEEE Symposium on Adaptive Dynamic Programming and Reinforcement Learning (ADPRL), Singapore, 16–19 April 2013; pp. 191–199.

- Vamplew, P.; Dazeley, R.; Foale, C. Softmax exploration strategies for multiobjective reinforcement learning. Neurocomputing 2017, 263, 74–86.

- Abels, A.; Roijers, D.; Lenaerts, T.; Nowé, A.; Steckelmacher, D. Dynamic weights in multi-objective deep reinforcement learning. In Proceedings of the International Conference on Machine Learning (PMLR), Long Beach, CA, USA, 9–15 June 2019; pp. 11–20.

- Xu, J.; Tian, Y.; Ma, P.; Rus, D.; Sueda, S.; Matusik, W. Prediction-guided multi-objective reinforcement learning for continuous robot control. In Proceedings of the International Conference on Machine Learning (PMLR), Virtual, 13–18 July 2020; pp. 10607–10616.

- de Oliveira, T.H.F.; de Souza Medeiros, L.P.; Neto, A.D.D.; Melo, J.D. Q-Managed: A new algorithm for a multiobjective reinforcement learning. Expert Syst. Appl. 2021, 168, 114228.

- Tajmajer, T. Modular multi-objective deep reinforcement learning with decision values. In Proceedings of the 2018 Federated Conference on Computer Science and Information Systems (FedCSIS), Poznan, Poland, 9–12 September 2018; pp. 85–93.

- Nguyen, T.T.; Nguyen, N.D.; Vamplew, P.; Nahavandi, S.; Dazeley, R.; Lim, C.P. A multi-objective deep reinforcement learning framework. Eng. Appl. Artif. Intell. 2020, 96, 103915.

- Nguyen, N.D.; Nguyen, T.T.; Vamplew, P.; Dazeley, R.; Nahavandi, S. A Prioritized objective actor–critic method for deep reinforcement learning. Neural Comput. Appl. 2021, 33, 10335–10349.

- Guo, K.; Zhang, L. Multi-objective optimization for improved project management: Current status and future directions. Autom. Constr. 2022, 139, 104256.

- Monfared, M.S.; Monabbati, S.E.; Kafshgar, A.R. Pareto-optimal equilibrium points in non-cooperative multi-objective optimization problems. Expert Syst. Appl. 2021, 178, 114995.

- Peer, O.; Tessler, C.; Merlis, N.; Meir, R. Ensemble bootstrapping for Q-Learning. In Proceedings of the International Conference on Machine Learning (PMLR), Virtual, 18–24 July 2021; pp. 8454–8463.

- Yang, R.; Sun, X.; Narasimhan, K. A generalized algorithm for multi-objective reinforcement learning and policy adaptation. In Proceedings of the Advances in Neural Information Processing Systems 32: Annual Conference on Neural Information Processing Systems, Vancouver, BC, Canada, 8–14 December 2019.

- Wang, Z.; Schaul, T.; Hessel, M.; Hasselt, H.; Lanctot, M.; Freitas, N. Dueling network architectures for deep reinforcement learning. In Proceedings of the International Conference on Machine Learning (PMLR), New York, NY, USA, 20–22 June 2016; pp. 1995–2003.

- Zhu, Z.; Hu, C.; Zhu, C.; Zhu, Y.; Sheng, Y. An improved dueling deep double-q network based on prioritized experience replay for path planning of unmanned surface vehicles. J. Mar. Sci. Eng. 2021, 9, 1267.

- Treesatayapun, C. Output Feedback Controller for a Class of Unknown Nonlinear Discrete Time Systems Using Fuzzy Rules Emulated Networks and Reinforcement Learning. Fuzzy Inf. Eng. 2021, 13, 368–390.

- Xu, H.; Zhang, C.; Wang, J.; Ouyang, D.; Zheng, Y.; Shao, J. Exploring parameter space with structured noise for meta-reinforcement learning. In Proceedings of the Twenty-Ninth International Conference on International Joint Conferences on Artificial Intelligence, Yokohama, Japan, 7–15 January 2021; pp. 3153–3159.

- Yang, T.; Tang, H.; Bai, C.; Liu, J.; Hao, J.; Meng, Z.; Liu, P. Exploration in deep reinforcement learning: A comprehensive survey. arXiv 2021, arXiv:2109.06668.

- Fortunato, M.; Azar, M.G.; Piot, B.; Menick, J.; Osband, I.; Graves, A.; Mnih, V.; Munos, R.; Hassabis, D.; Pietquin, O.; et al. Noisy networks for exploration. arXiv 2017, arXiv:1706.10295.

- Sokar, G.; Mocanu, E.; Mocanu, D.C.; Pechenizkiy, M.; Stone, P. Dynamic sparse training for deep reinforcement learning. arXiv 2021, arXiv:2106.04217.

| Algorithm | Learning Rate | Discount Factor | Soft Update Factor |
|---|---|---|---|
| EMODRL | 0.001 | 0.99 | / |
| Envelope–dueling | 0.001 | 0.99 | / |
| Envelope–noise | 0.001 | 0.99 | / |
| Envelope–soft | 0.001 | 0.99 | 0.01 |
| Envelope–dueling–soft | 0.001 | 0.99 | 0.01 |
| Envelope–dueling–noise | 0.001 | 0.99 | / |
| Envelope–noise–soft | 0.001 | 0.99 | 0.01 |
| EDNs | 0.001 | 0.99 | 0.01 |
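In the configurations with a soft update factor τ = 0.01, the target parameters θ′ track the online parameters θ by θ′ ← τθ + (1 − τ)θ′ at every iteration instead of being overwritten periodically; configurations marked "/" do not use this update. A minimal sketch, assuming parameters are stored as plain lists keyed by a hypothetical layer name (`fc.weight`); `soft_update` is an illustrative name, not taken from the paper.

```python
from typing import Dict, List

# Hypothetical parameter store: layer name -> flat list of weights.
Params = Dict[str, List[float]]


def soft_update(target: Params, online: Params, tau: float = 0.01) -> None:
    """In place: theta_target <- tau * theta_online + (1 - tau) * theta_target."""
    for name, online_values in online.items():
        target_values = target[name]
        for i, w in enumerate(online_values):
            target_values[i] = tau * w + (1.0 - tau) * target_values[i]


online = {"fc.weight": [1.0, -2.0]}
target = {"fc.weight": [0.0, 0.0]}
for _ in range(100):
    soft_update(target, online, tau=0.01)
print(target["fc.weight"])  # approximately (1 - 0.99**100) times the online values
```

With a fixed online network, the target closes only a fraction 1 − 0.99ⁿ of the gap after n updates (about 63% after 100), which is why the target values change smoothly rather than jumping at each hard copy.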

| Algorithm | Coverage Ratio | Adaptation Error |
|---|---|---|
| EMODRL | 94.7% | 0.198 |
| Envelope–dueling | 95.8% | 0.338 |
| Envelope–noise | 97.1% | 0.275 |
| Envelope–soft | 91.4% | 0.132 |
| Envelope–dueling–soft | 95.1% | 0.177 |
| Envelope–dueling–noise | 98.2% | 0.414 |
| Envelope–noise–soft | 96.3% | 0.157 |
| EDNs | 99.8% | 0.125 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Hu, C.; Zhu, Z.; Wang, L.; Zhu, C.; Yang, Y. An Improved Multi-Objective Deep Reinforcement Learning Algorithm Based on Envelope Update. Electronics 2022, 11, 2479. https://doi.org/10.3390/electronics11162479
