TMCS-ENN: A Temporal Feature-Correlation Cuckoo Search-Elman Neural Network for Sugar Raw Materials Demands Prediction

Abstract

1. Introduction

2. Related Work

3. Background Knowledge

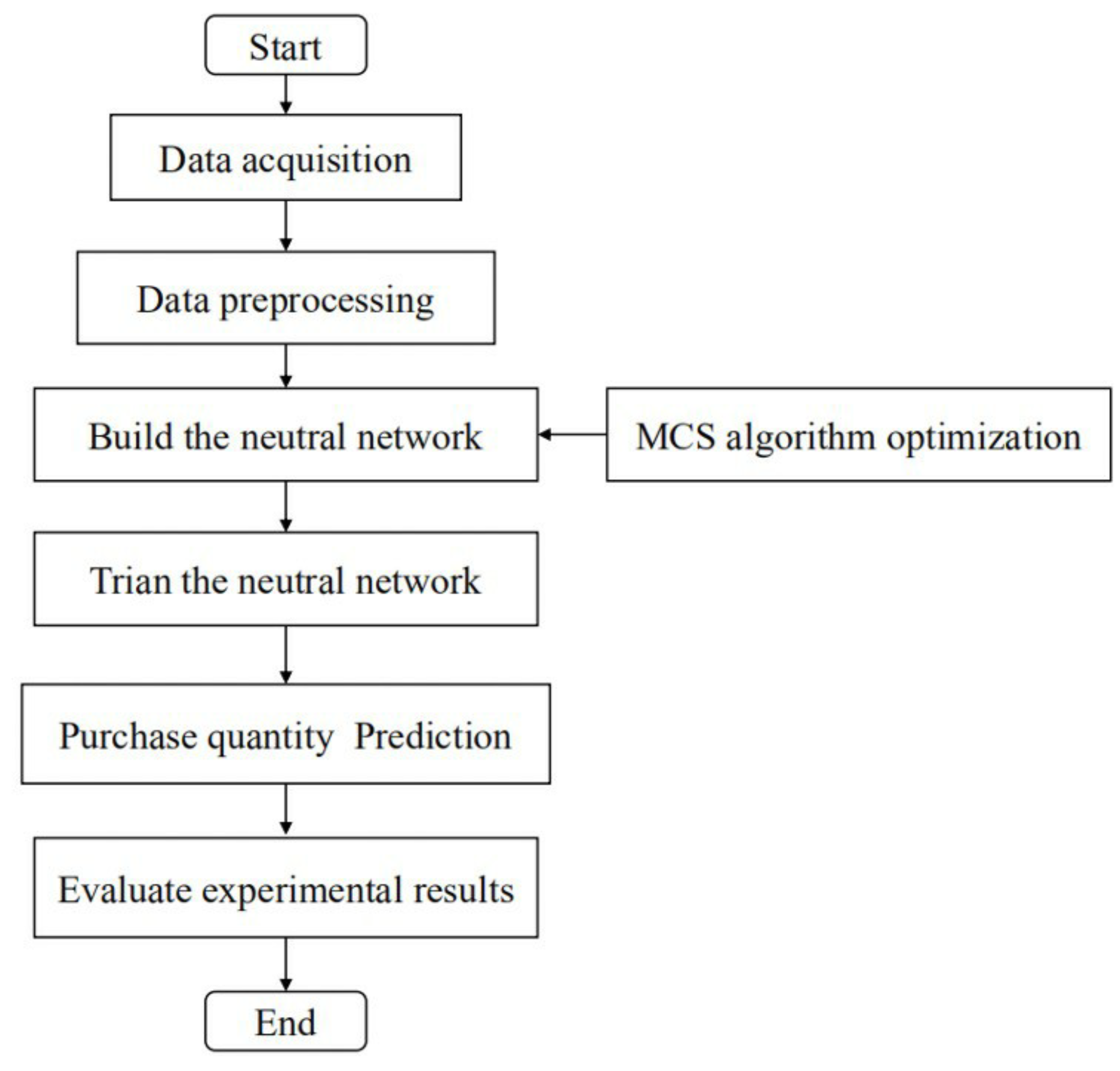

3.1. Research Ideas

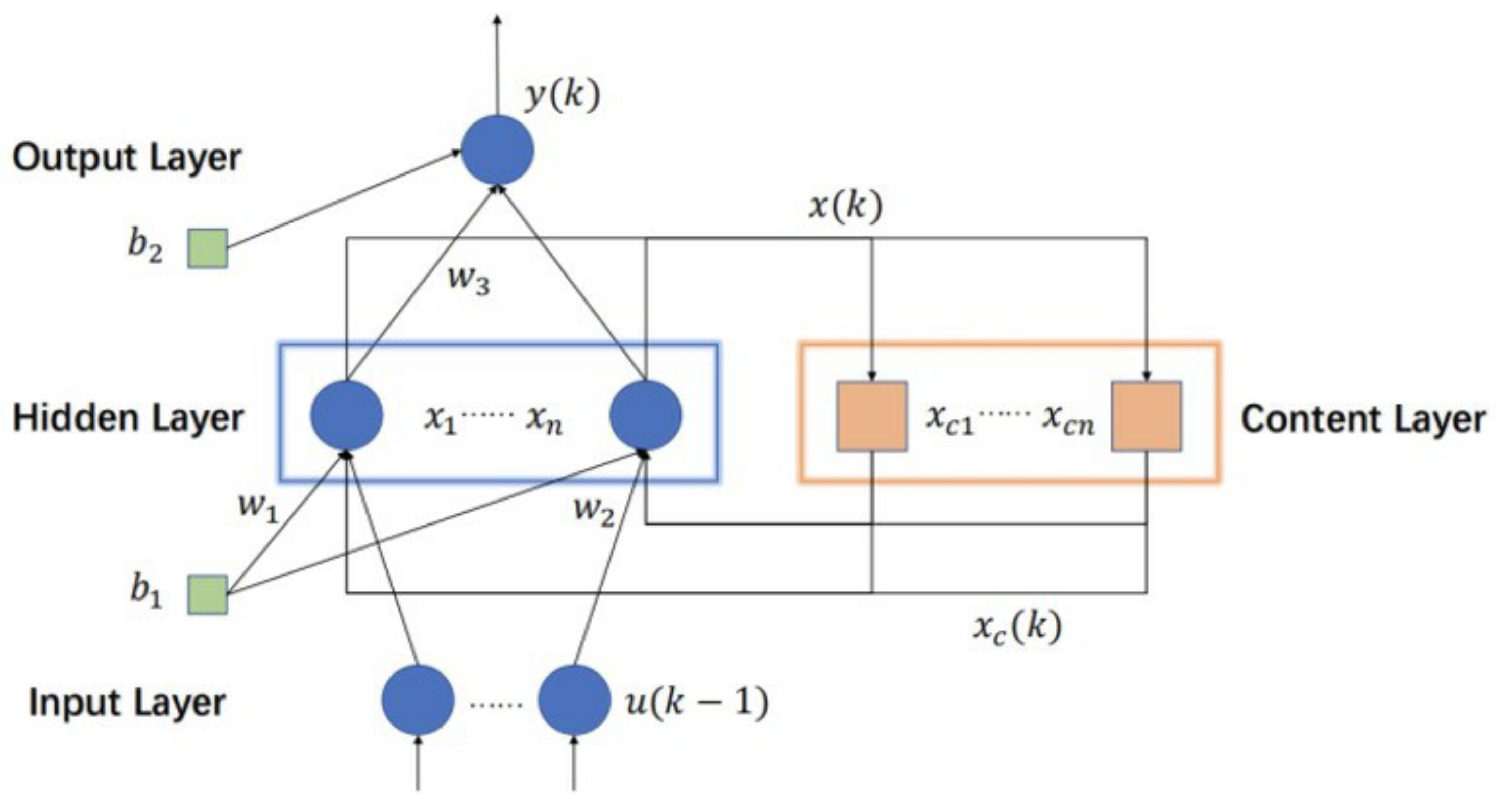

3.2. Elman Neural Network

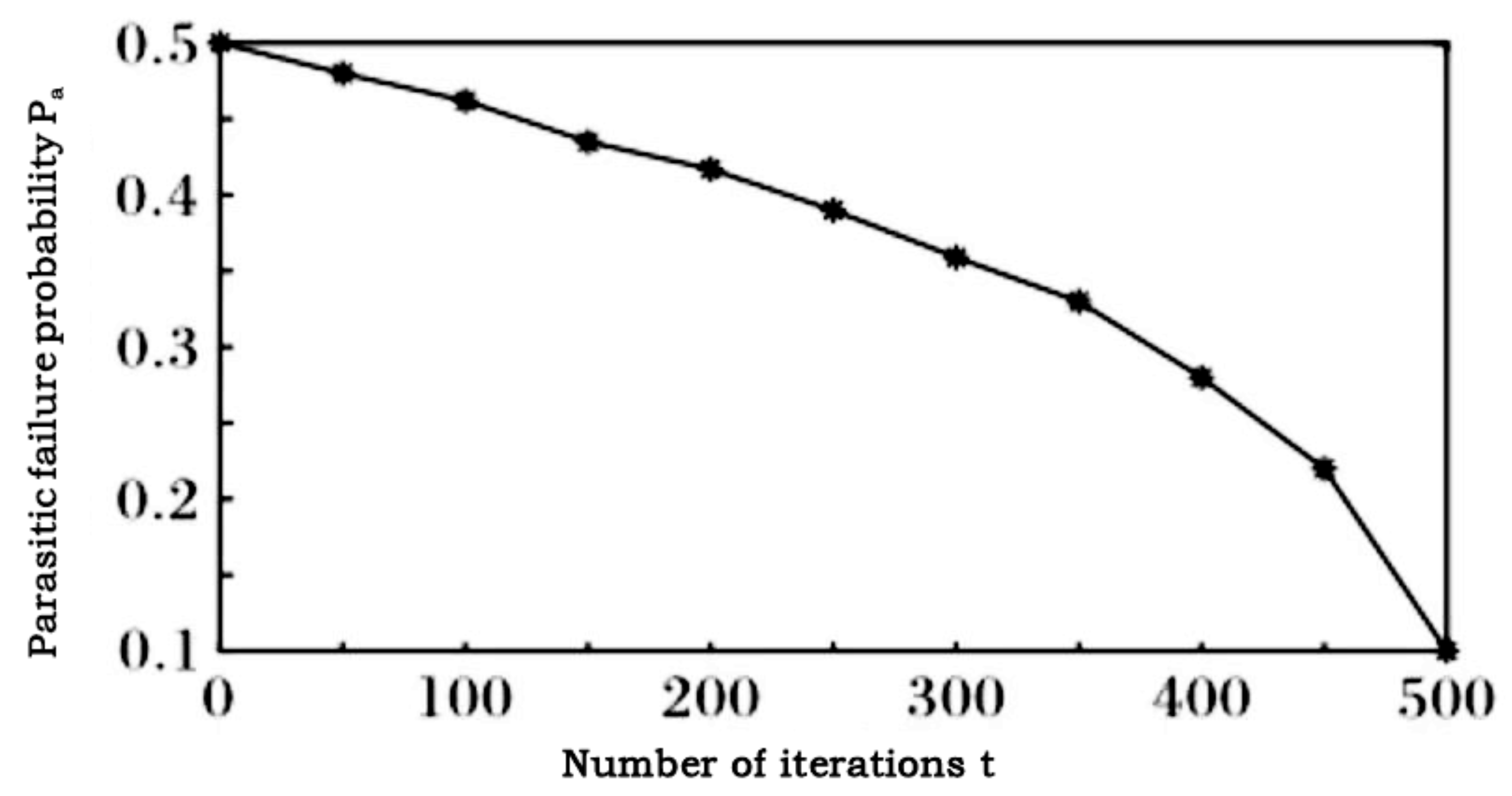

3.3. Cuckoo Search Algorithm

3.4. Variables and Acronyms Definition

| Type | Abbreviation | Full Name |
|---|---|---|
| Acronyms | CS algorithm | Cuckoo search algorithm |
| Acronyms | MCS algorithm | Improved cuckoo search algorithm |
| Acronyms | ENN | Elman neural network |
| Acronyms | TMCS-ENN | MCS-Elman neural network |
| Acronyms | RMSE | Root-mean-square error |
| Acronyms | MAPE | Mean absolute percentage error |
| Variables | | The updated position of a nest in the CS algorithm |
| Variables | Pa | Parasitic failure probability |
| Variables | t | Number of iterations |
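The two error metrics named in the table can be sketched as follows. This is a minimal illustration using the conventional textbook definitions of RMSE and MAPE; the function names `rmse` and `mape` are hypothetical, and the choice to return MAPE as a fraction rather than a percentage is an assumption made here for convenience.

```python
import math


def rmse(actual, predicted):
    """Root-mean-square error between two equal-length sequences."""
    if len(actual) != len(predicted) or not actual:
        raise ValueError("inputs must be non-empty and of equal length")
    squared = sum((a - p) ** 2 for a, p in zip(actual, predicted))
    return math.sqrt(squared / len(actual))


def mape(actual, predicted):
    """Mean absolute percentage error as a fraction (0.05 means 5%)."""
    if len(actual) != len(predicted) or not actual:
        raise ValueError("inputs must be non-empty and of equal length")
    if any(a == 0 for a in actual):
        # The percentage error divides by the actual value.
        raise ValueError("MAPE is undefined when an actual value is zero")
    return sum(abs((a - p) / a) for a, p in zip(actual, predicted)) / len(actual)
```

Both functions take plain Python sequences, so they can be applied directly to a list of observed demand values and the corresponding model predictions.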

4. The Temporal Feature-Correlation Cuckoo Search-Elman Neural Model

4.1. Research and Analysis

4.2. TMCS-Elman Neural Network (TMCS-ENN) Structure

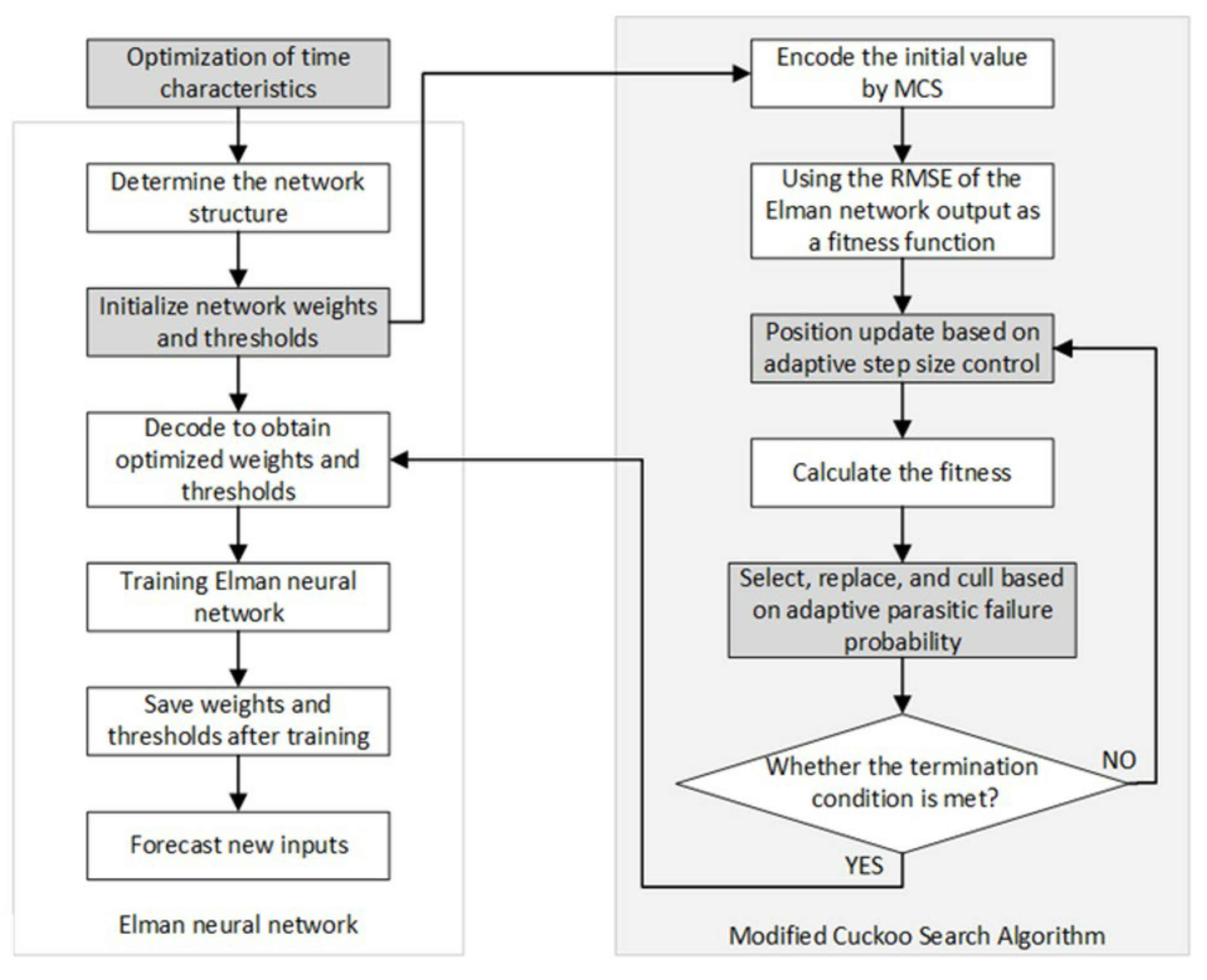

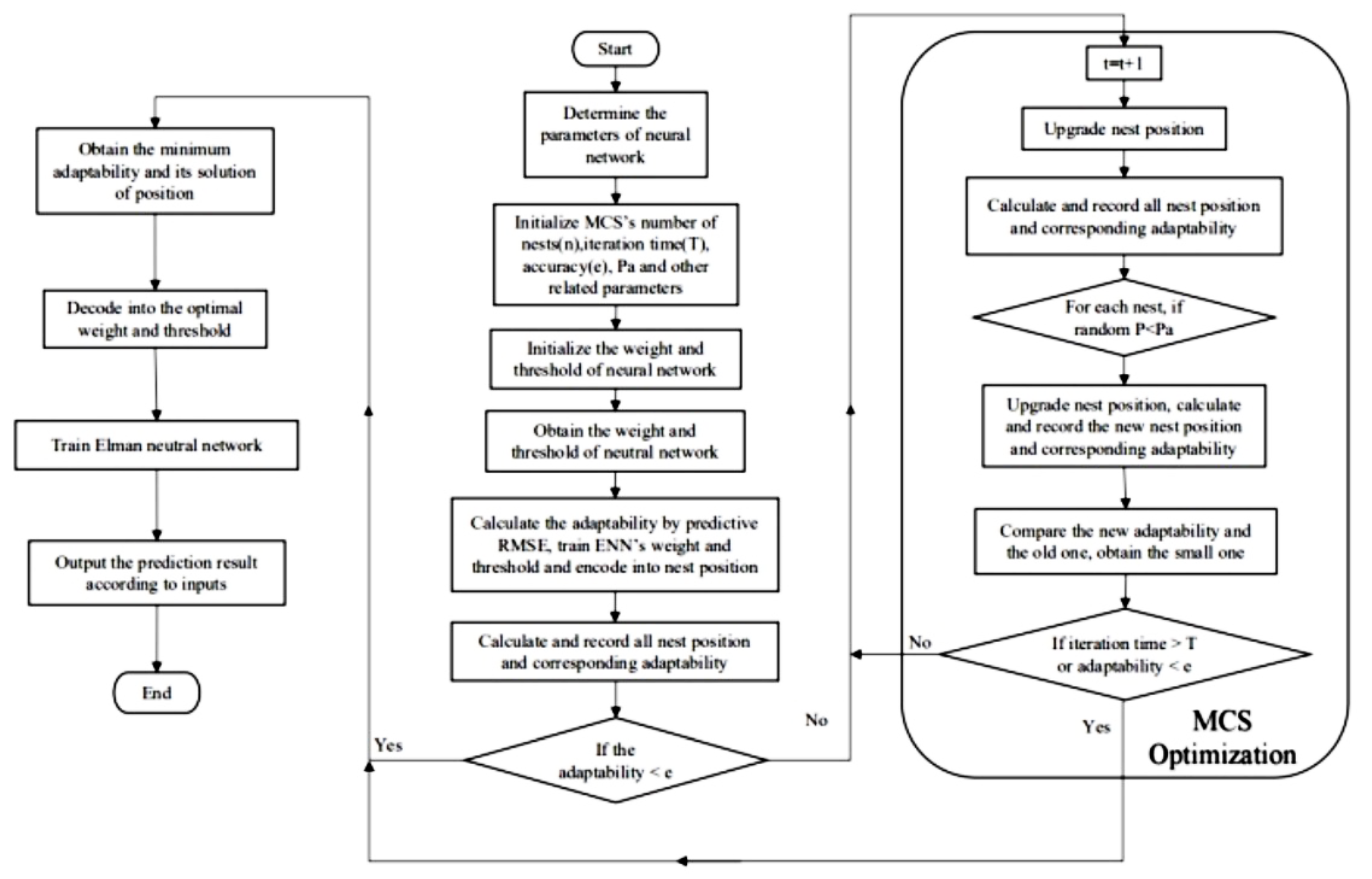

4.3. The Weight and Threshold Optimization of TMCS-ENN Model

- Elucidate the Elman neural network’s structure, weight, and threshold number according to the dimensions of the data source.

- Optimize the Elman neural network’s weight and threshold by using the MCS algorithm. Each individual is optimized according to the value of its fitness during optimization.
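The two steps above can be sketched in code. This is a rough illustration under stated assumptions, not the authors' implementation: it uses a single-hidden-layer Elman network with a tanh hidden layer and a linear output, an arbitrary flat parameter layout, and the plain cuckoo search with Lévy flights (Mantegna's method) rather than the paper's MCS variant, whose details are not given here. All names (`param_count`, `elman_predict`, `levy_step`, `cuckoo_search`) and the toy series are hypothetical.

```python
import math
import random


def param_count(n_in, n_hidden, n_out):
    """Number of weights plus thresholds in a single-hidden-layer Elman network."""
    weights = n_in * n_hidden + n_hidden * n_hidden + n_hidden * n_out
    thresholds = n_hidden + n_out
    return weights + thresholds


def elman_predict(params, inputs, n_in, n_hidden, n_out):
    """Decode a flat parameter vector and run it over a sequence of input vectors."""
    it = iter(params)

    def take(rows, cols):
        return [[next(it) for _ in range(cols)] for _ in range(rows)]

    w_in, w_ctx, w_out = take(n_hidden, n_in), take(n_hidden, n_hidden), take(n_out, n_hidden)
    b_hid = [next(it) for _ in range(n_hidden)]
    b_out = [next(it) for _ in range(n_out)]
    context = [0.0] * n_hidden  # context layer starts at zero
    outputs = []
    for x in inputs:
        hidden = [
            math.tanh(
                sum(w * v for w, v in zip(w_in[j], x))
                + sum(w * c for w, c in zip(w_ctx[j], context))
                + b_hid[j]
            )
            for j in range(n_hidden)
        ]
        outputs.append([sum(w * h for w, h in zip(w_out[k], hidden)) + b_out[k]
                        for k in range(n_out)])
        context = hidden  # feed the hidden state back at the next time step
    return outputs


def levy_step(rng, beta=1.5):
    """One Levy-distributed step via Mantegna's algorithm."""
    num = math.gamma(1 + beta) * math.sin(math.pi * beta / 2)
    den = math.gamma((1 + beta) / 2) * beta * 2 ** ((beta - 1) / 2)
    sigma = (num / den) ** (1 / beta)
    u, v = rng.gauss(0, sigma), rng.gauss(0, 1)
    return u / max(abs(v), 1e-12) ** (1 / beta)


def cuckoo_search(fit, dim, n_nests=15, pa=0.25, iters=100, alpha=0.05, seed=0):
    """Plain cuckoo search (not the MCS variant); minimizes fit over dim values."""
    rng = random.Random(seed)
    nests = [[rng.uniform(-1, 1) for _ in range(dim)] for _ in range(n_nests)]
    scores = [fit(n) for n in nests]
    for _ in range(iters):
        for i in range(n_nests):
            # Levy flight from nest i; replace a random nest if the candidate is fitter.
            cand = [x + alpha * levy_step(rng) for x in nests[i]]
            cand_score = fit(cand)
            j = rng.randrange(n_nests)
            if cand_score < scores[j]:
                nests[j], scores[j] = cand, cand_score
        best = scores.index(min(scores))
        for i in range(n_nests):
            # Abandon each non-best nest with probability pa.
            if i != best and rng.random() < pa:
                nests[i] = [rng.uniform(-1, 1) for _ in range(dim)]
                scores[i] = fit(nests[i])
    best = scores.index(min(scores))
    return nests[best], scores[best]


# Toy usage: fit a 1-3-1 network to a short made-up series (illustrative data only).
series = [0.1, 0.3, 0.2, 0.4, 0.3, 0.5, 0.4, 0.6]
inputs, targets = [[v] for v in series[:-1]], series[1:]
shape = (1, 3, 1)


def rmse_fitness(params):
    preds = elman_predict(params, inputs, *shape)
    return math.sqrt(sum((p[0] - t) ** 2 for p, t in zip(preds, targets)) / len(targets))


best_params, best_rmse = cuckoo_search(rmse_fitness, param_count(*shape), iters=30)
```

Encoding every weight and threshold in one flat vector lets each nest represent a complete network, so the fitness of a nest is simply the prediction error of the network it decodes to.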

4.4. The Temporal Feature Optimization of TMCS-ENN

Algorithm 1: The pseudocode of the TMCS-ENN prediction model.

5. Experiments

5.1. Datasets and Analysis

5.2. Experimental Data Preprocessing

5.3. Experiment Design and Analysis

- (1)

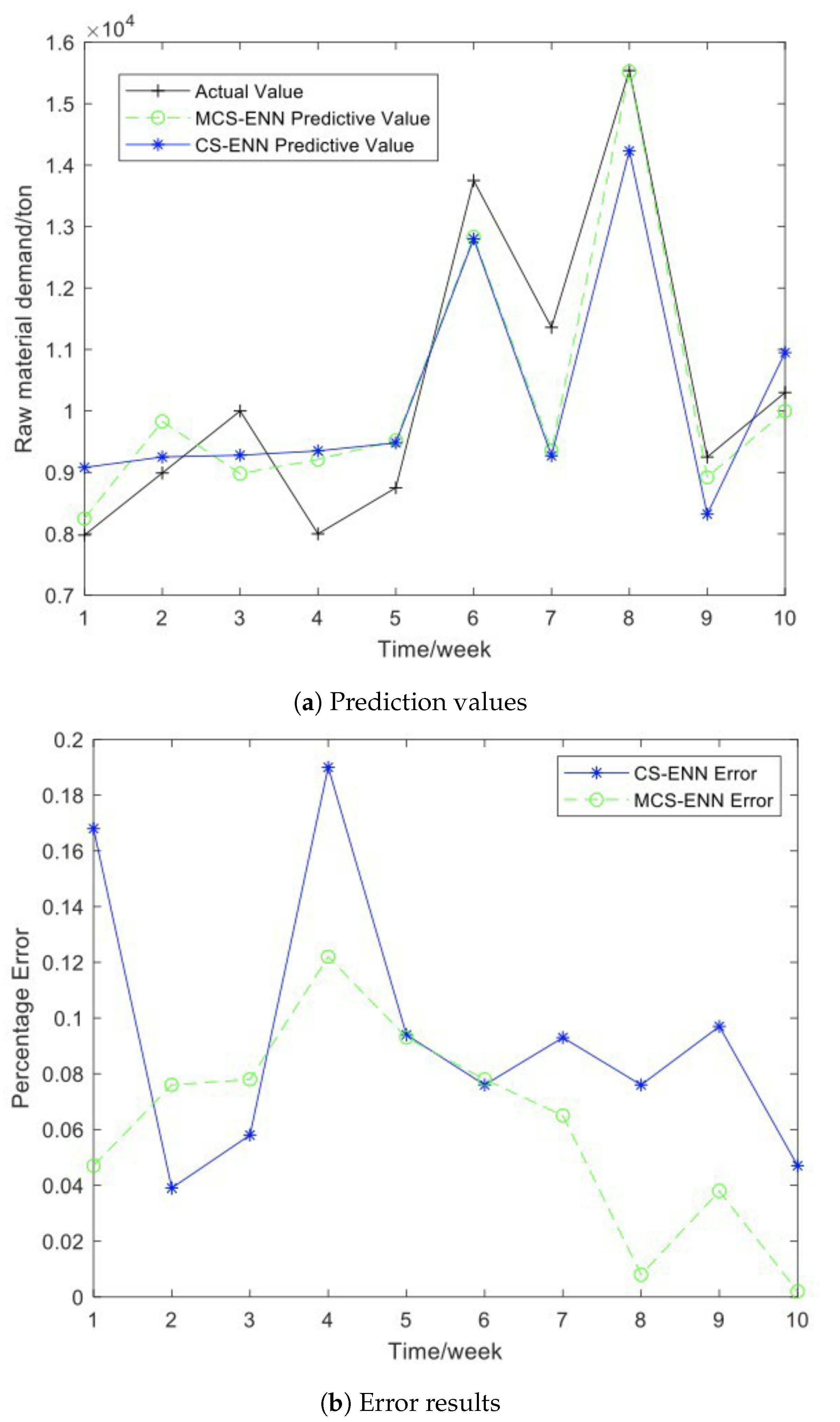

- The effect of weight and threshold optimization

- (2)

- The effect of MCS algorithm on predictive model

- (3)

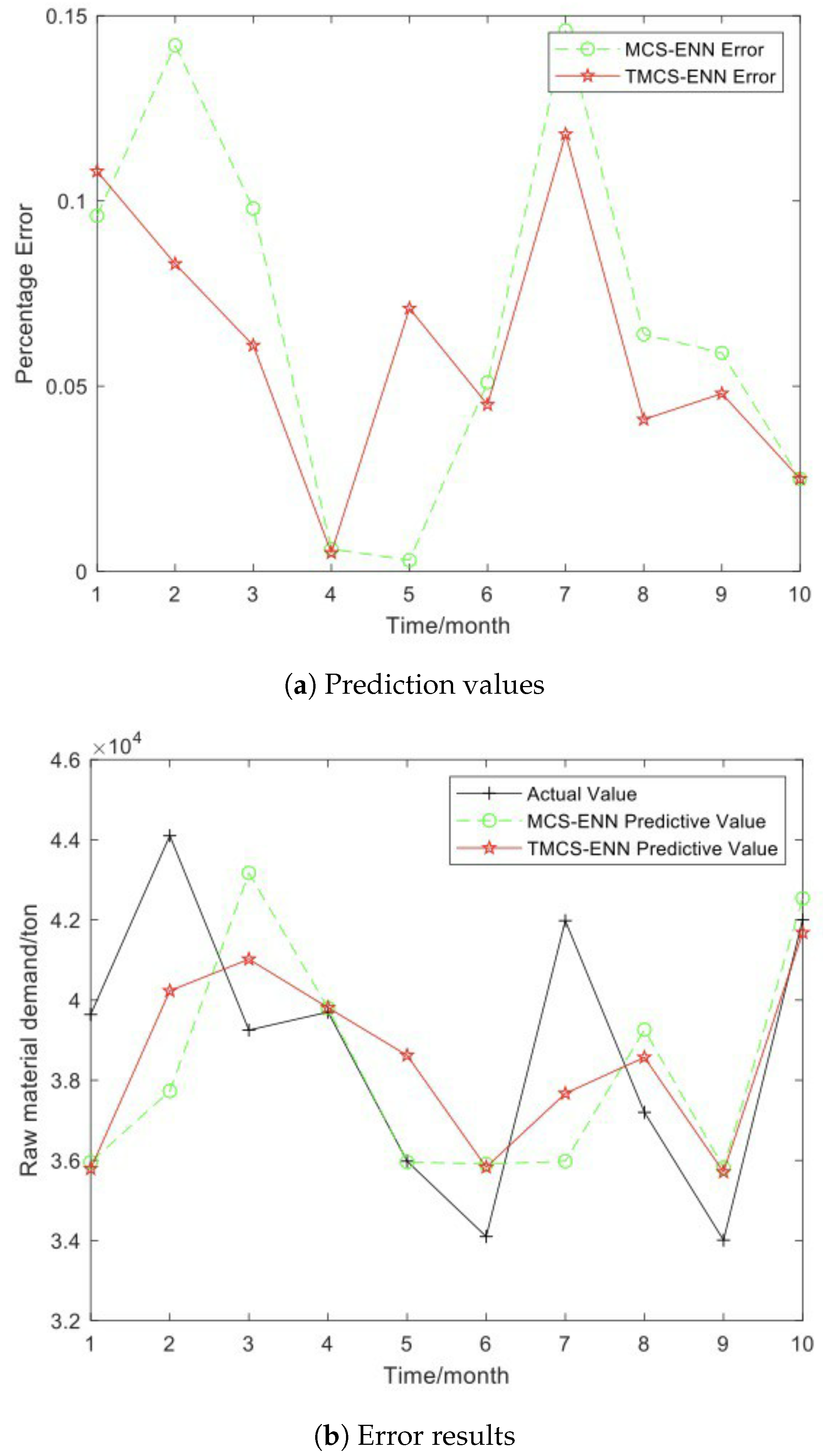

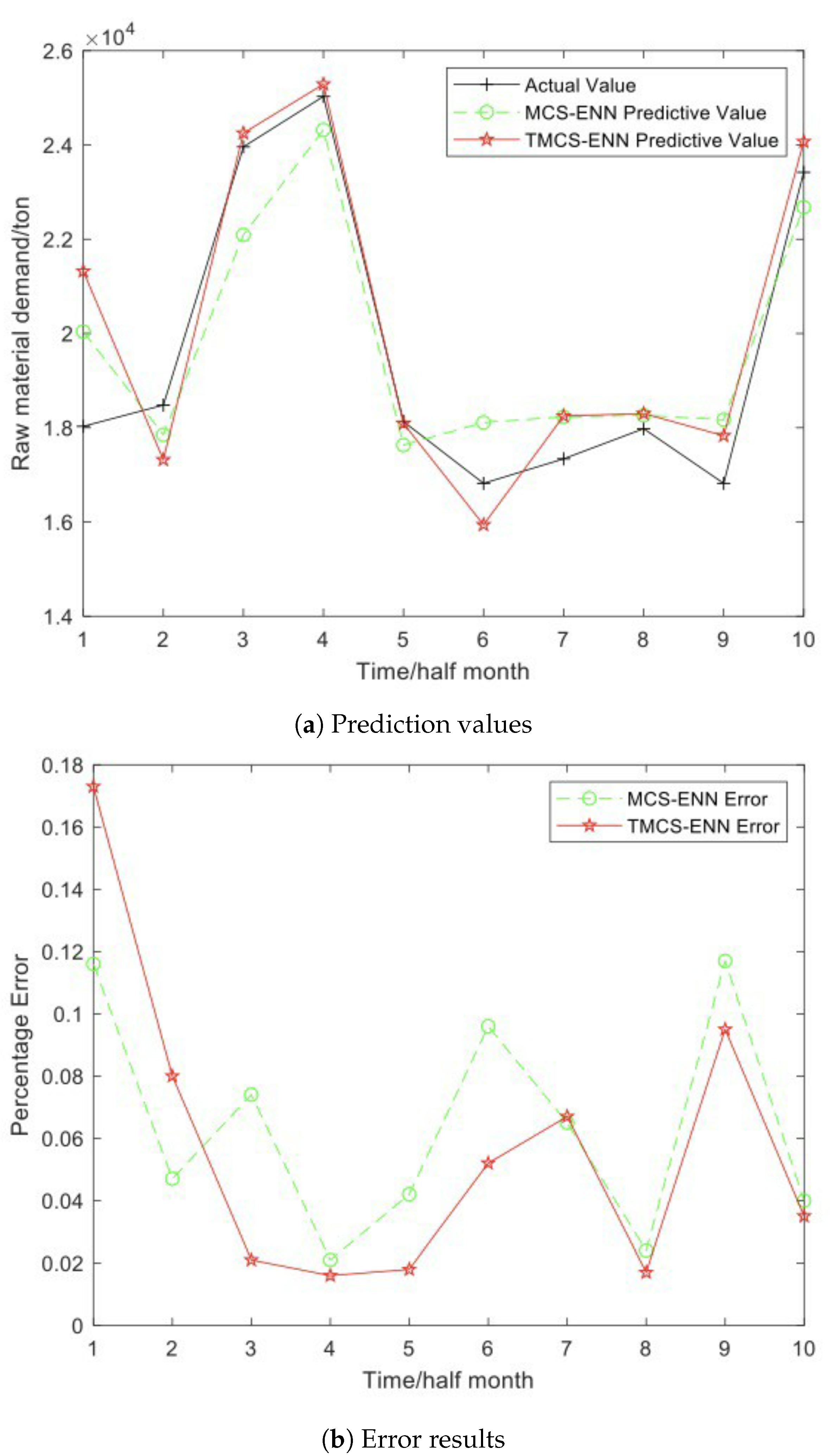

- The effect of temporal feature on predictive model

- (4)

- The effect of time granularity on predictive model

6. Conclusions and Future Work

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

| Data Item | Description |
|---|---|
| Type of raw materials | Raw sugar, including Brazilian sugar, Australian raw sugar, Thai raw sugar, etc. |
| Purchase quantity | The quantity of raw materials purchased, in tons |
| Buyer name | Company |
| Place of origin | Raw material origin |
| Unit price | The price of raw materials, in yuan/ton |
| Purchase date | Time of purchase, precise to the day |

| Data Item | Description |
|---|---|
| Type of raw materials | Usually raw sugar, including Brazilian sugar, Australian raw sugar, Thai raw sugar, etc. |
| Consumption quantity | The amount of raw material consumed, in tons |
| Factory area | Production plant |
| Date of use | Time of raw material use, precise to the day |

| Data Item | Description |
|---|---|
| Product name | Generally finished sugar, including white sugar, soft white sugar, caster sugar, etc. |
| Sales volume | Total sales of finished sugar, in tons |
| Client's name | Purchaser |
| Unit price | The price of finished sugar, in yuan/ton |
| Sales date | Finished sugar sales time, precise to the day |

| Time Granularity | RMSE (TMCS-ENN) | RMSE (MCS-Elman) | MAPE (TMCS-ENN) | MAPE (MCS-Elman) | Prediction Accuracy (TMCS-ENN) | Prediction Accuracy (MCS-Elman) |
|---|---|---|---|---|---|---|
| Month | 0.245 | 0.307 | 5.81% | 6.87% | 94.19% | 87.85% |
| Half month | 0.117 | 0.118 | 5.62% | 6.38% | 94.38% | 93.61% |
| Week | 0.058 | 0.092 | 5.36% | 6.73% | 94.64% | 93.27% |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Cui, H.; Zhou, Y.; Liu, W.; Li, Y.; Chen, Z.; He, D. TMCS-ENN: A Temporal Feature-Correlation Cuckoo Search-Elman Neural Network for Sugar Raw Materials Demands Prediction. Electronics 2022, 11, 2370. https://doi.org/10.3390/electronics11152370
