Real-Time 3D Mapping in Isolated Industrial Terrain with Use of Mobile Robotic Vehicle

Abstract

1. Introduction

2. SLAM Mapping Overview

The SLAM formulation uses the following sequences:

- a sequence of the mobile vehicle’s poses (the traversed path) over the sampling time interval [0, t];

- a sequence of control signals;

- a sequence of relative observations.
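The sketch below is a minimal illustration of how the three sequences relate. It keeps them in one Python container and extends the pose sequence from the control sequence by dead reckoning. It assumes a planar pose (x, y, θ), controls given as linear and angular velocity, relative observations given as range-bearing pairs, a unicycle motion model and a fixed sampling step. None of these choices come from the paper. The class and method names (`SlamHistory`, `step`, `to_world`) are hypothetical. The poses it produces are plain odometry, and a SLAM estimator would correct them using the observations.

```python
import math
from dataclasses import dataclass, field
from typing import List, Tuple

# Hypothetical types; the paper's own state and sensor representations may differ.
Pose = Tuple[float, float, float]   # (x [m], y [m], heading theta [rad])
Control = Tuple[float, float]       # (linear velocity v [m/s], angular velocity omega [rad/s])
Observation = Tuple[float, float]   # (range [m], bearing [rad]) relative to the vehicle


@dataclass
class SlamHistory:
    """Hypothetical container for the pose, control and observation sequences on [0, t]."""
    poses: List[Pose] = field(default_factory=lambda: [(0.0, 0.0, 0.0)])  # initial pose at time 0
    controls: List[Control] = field(default_factory=list)                 # one control per step 1..t
    observations: List[List[Observation]] = field(default_factory=list)   # one scan per step 1..t

    def step(self, u: Control, z: List[Observation], dt: float) -> Pose:
        """Dead-reckon the next pose with a unicycle model and log the control and scan.

        This is odometry integration only; a SLAM filter would correct these poses
        using the observations.
        """
        x, y, theta = self.poses[-1]
        v, omega = u
        new_pose = (
            x + v * dt * math.cos(theta),
            y + v * dt * math.sin(theta),
            (theta + omega * dt + math.pi) % (2 * math.pi) - math.pi,  # wrap to [-pi, pi)
        )
        self.poses.append(new_pose)
        self.controls.append(u)
        self.observations.append(z)  # observations[k - 1] was taken at poses[k]
        return new_pose

    def to_world(self, k: int, obs: Observation) -> Tuple[float, float]:
        """Project a relative range-bearing observation taken at pose k into the world frame."""
        x, y, theta = self.poses[k]
        r, bearing = obs
        return (x + r * math.cos(theta + bearing), y + r * math.sin(theta + bearing))


if __name__ == "__main__":
    h = SlamHistory()
    h.step((1.0, 0.0), [(2.0, 0.0)], dt=1.0)   # drive 1 m straight ahead, see a point 2 m ahead
    h.step((0.0, math.pi / 2), [], dt=1.0)      # turn 90 degrees in place, no scan
    print(len(h.poses), len(h.controls))        # 3 2
    print(h.to_world(1, h.observations[0][0]))  # (3.0, 0.0)
```

The pose sequence always holds one more element than the control sequence. This is because the initial pose at time 0 comes before the first control, which matches the interval [0, t] used in the definitions.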

3. Hybrid Mapping Concept for Inspection Purposes

4. Environment Description and Experimental Results

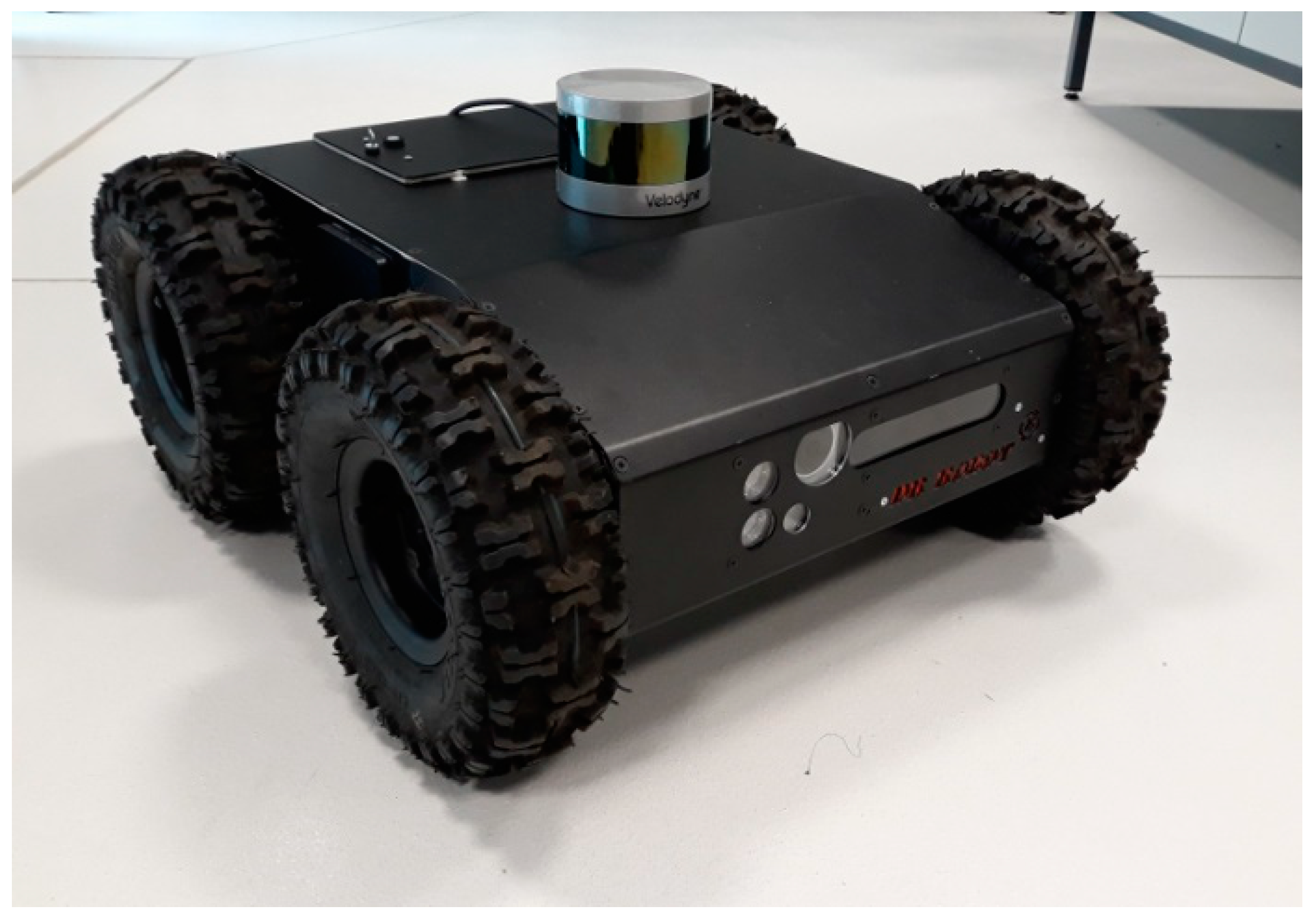

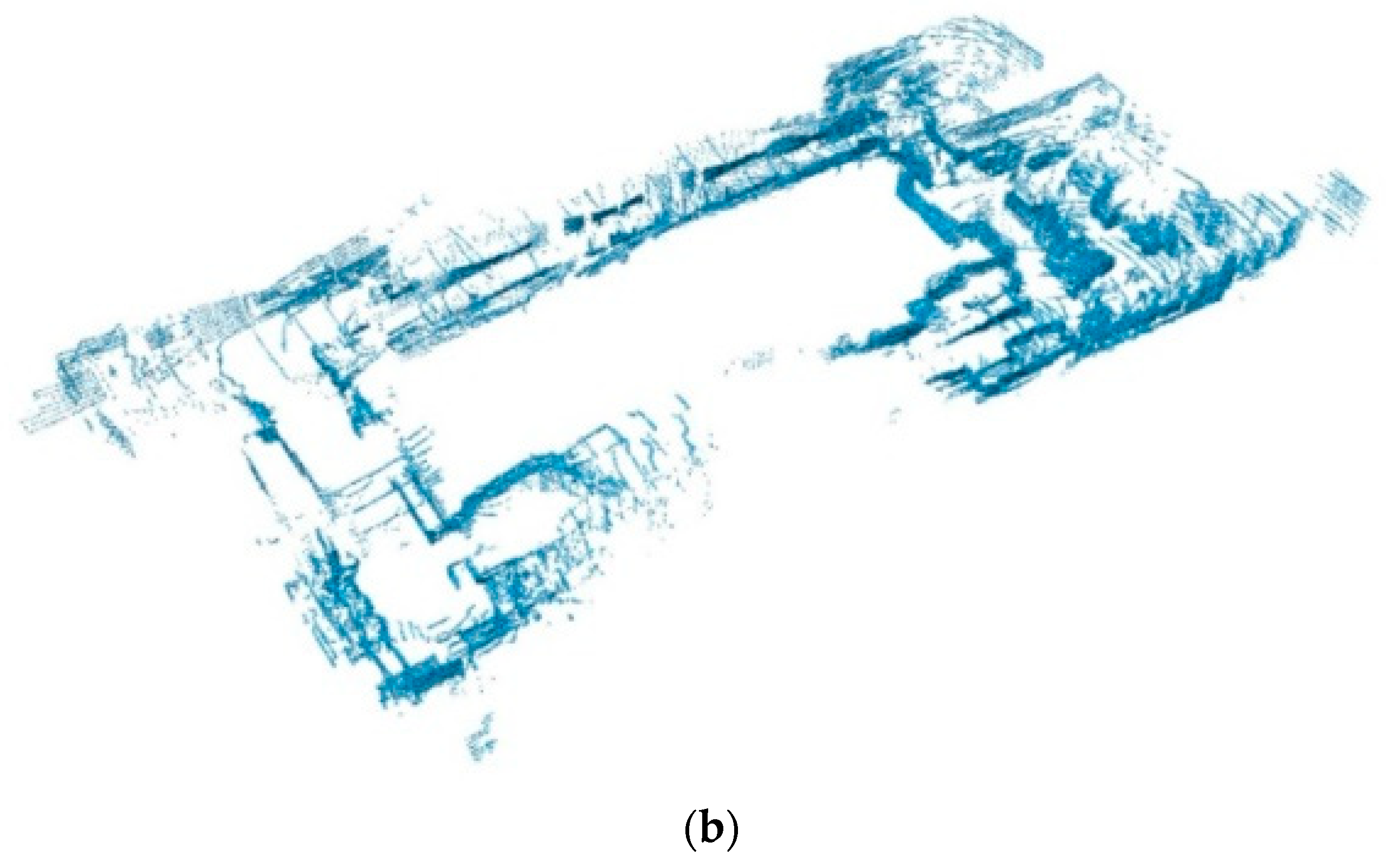

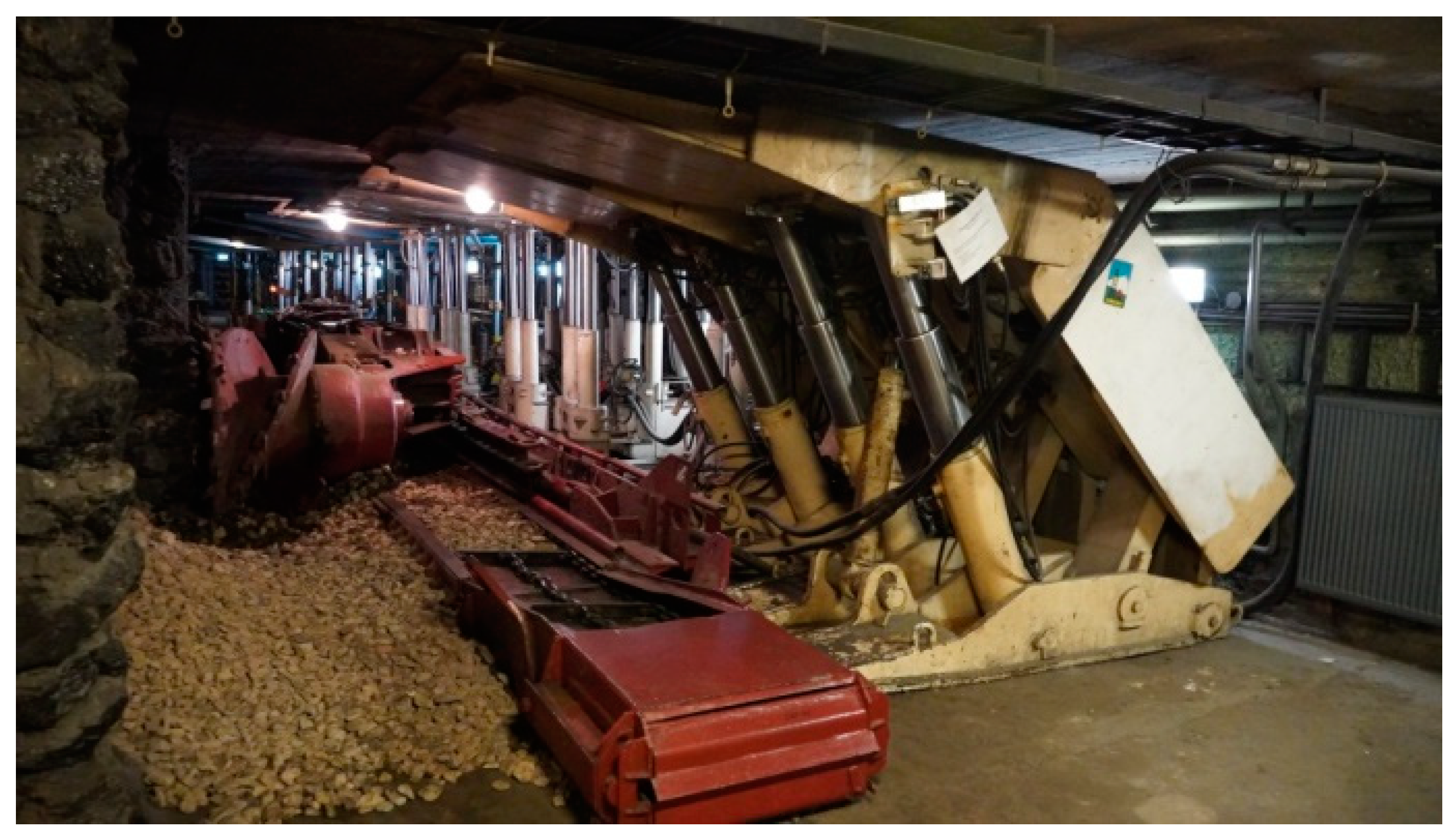

4.1. Robotic Vehicle and Environment

4.2. Experiments

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest


Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Buratowski, T.; Garus, J.; Giergiel, M.; Kudriashov, A. Real-Time 3D Mapping in Isolated Industrial Terrain with Use of Mobile Robotic Vehicle. Electronics 2022, 11, 2086. https://doi.org/10.3390/electronics11132086
