Abstract

Person re-identification (Re-ID) aims to identify the same pedestrian across surveillance videos captured in various scenarios. When the pedestrian training set contains many background variations, existing Re-ID models are biased toward learning background appearance. Because pedestrians with the same identity appear against different backgrounds, this bias degrades Re-ID performance. This paper proposes a Swin Transformer based on a two-fold loss (TL-TransNet) that pays more attention to the semantic information of a pedestrian's body while preserving valuable background information, thereby reducing the interference of background appearance. TL-TransNet is supervised by two types of losses (i.e., circle loss and instance loss) during the training phase. In the retrieval phase, DeepLabV3+ is applied as a pedestrian background segmentation model to generate body masks for the query and gallery sets. Background-removed images are generated from these masks and used to filter out interfering background information. Subsequently, a background adaptation re-ranking is designed to combine the original information with the background-removed information, which retrieves more positive samples with large background deviations. Extensive experiments on two public person Re-ID datasets show that the proposed method achieves competitive robustness to background variations.

1. Introduction

Person Re-ID [1,2,3], a branch of image retrieval, is widely used in urban surveillance systems. Its aim is to find a target pedestrian in surveillance videos across multiple cameras. In general, given a probe pedestrian, a Re-ID system retrieves the pedestrians with the same identity from the gallery set. Recently, convolutional neural networks (CNNs) [4,5] and transformers have been increasingly introduced into the field of Re-ID and have achieved competitive performance.

Person Re-ID mainly consists of two parts, i.e., a training stage and a retrieval stage. The training stage extracts pedestrian features through representation learning to obtain a robust Re-ID model. The retrieval stage uses the Re-ID model to extract pedestrian features from the test set and then measures the similarity between the extracted feature vectors to obtain the final ranking result. Prior work mainly focuses on representation learning that describes pedestrian appearance differences in the training stage. Existing studies have proposed various network models to optimize the feature extraction of pedestrian images, for example, the special dense convolutional neural network (SD-CNN) [6], the mask-guided contrastive attention model (MGCAM) [7], the feature fusion sub-net [8], and the vision transformer (ViT) [9]. However, most of these solutions ignore the challenge posed by the same pedestrian's background variations across different cameras. Some state-of-the-art methods [10,11,12] have addressed this problem. For example, Song et al. [7] designed MGCAM to learn features separately from the body and background regions. MGCAM introduced a region-level triplet loss that pulls the features of the full image and the body region closer together while pushing background features away.

To deal with background variations, previous methods chose to completely remove the background information for training. Because such methods do not learn any background information during the training phase, they cannot extract valuable background features to compute the similarity metric in the retrieval stage. In contrast, the proposed method focuses on learning the pedestrian body during the training phase without ignoring valuable background information. Thus, valuable background features and pedestrian body features can both be extracted and fused to compute the final distance metric. For pedestrians with the same identity against different backgrounds, how the similarity metric handles background differences is key to recognizing the identity. A model trained without background information can distinguish identities only through the extracted pedestrian body information, which can lead to poor Re-ID performance. For pedestrians with different identities against the same background, the key to distinguishing them is to rely more on pedestrian body information when measuring similarity. In summary, background information can either interfere with or assist identity recognition, depending on the case. Therefore, the proposed Re-ID network should not completely ignore background information, even though it pays more attention to the pedestrian's body information.

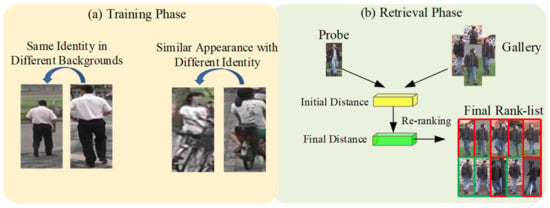

Improving the performance of person Re-ID depends not only on feature extraction in the training stage but also on the similarity metric in the retrieval stage. Re-ranking is designed to improve the quality of the retrieval stage: after the initial distances are computed, re-ranking recomputes the distances between the probe and gallery pedestrian sets. The more positive samples re-ranking moves toward the top of the list, the more robust the final Re-ID result is to background interference. However, several re-ranking methods consider only the distance metric of the original features and do not fuse the original and background-removed features to recompute a fused distance metric. Thus, the final rank-list is still affected by background variations after the distances are recomputed. As shown in Figure 1a,b, background variations affect person Re-ID mainly in two aspects:

- In the training phase, many background removal methods completely remove the background information, which prevents the Re-ID model from using even a small amount of valuable background information to distinguish different identities.

- In the retrieval phase, some re-ranking algorithms consider only the original features, without computing a mixed metric of original and background-removed features, so many positive samples still rank low after the distances are recomputed because of background interference.

Figure 1.

The challenges of background variations for person Re-ID.

To handle the aforementioned issues, this paper makes the Re-ID model focus more on pedestrian body information while retaining a small amount of background information. Our motivation is to enable the Re-ID model to distinguish between pedestrians with the same identity and similar-looking pedestrians with different identities. We also aim to design a novel re-ranking method based on a mixed metric of original and background-removed features. Thus, we propose TL-TransNet and a background adaptation re-ranking method for person Re-ID to mitigate the background variation problem.

The contributions of this paper can be summarized as follows:

- (1) Methods that completely remove the background cannot learn any valuable background information. In contrast, TL-TransNet is proposed to force the Re-ID model to preserve some background-related information while paying more attention to pedestrian body information. The parameters of TL-TransNet are optimized by minimizing a two-fold loss.

- (2) A background adaptation re-ranking method is developed to improve the ranks of positive samples that rank low because of background interference in the retrieval phase. First, DeepLabV3+ [13] is used as a pedestrian background segmentation model to generate masks from which the pedestrian body regions in the probe and gallery sets are extracted. Then, the proposed re-ranking based on mixed metrics (i.e., Euclidean and Jaccard metrics) combines the original features with the background-removed features to obtain a more reliable rank-list that is robust to background interference.

- (3) Comprehensive experiments show that the proposed method improves generalization ability under background variations.

2. Related Work

Person Re-ID research has made considerable strides since the advent of deep learning, whose methods outperform hand-crafted feature extraction algorithms [14,15]. Most Re-ID challenges are now studied with deep learning. We therefore give a brief overview of Re-ID research in three areas, namely feature representation learning, deep metric learning, and ranking optimization, followed by semantic segmentation, on which our method relies.

2.1. Feature Representation Learning for Person Re-ID

Recently, researchers have proposed various methods to optimize feature extraction for person Re-ID, and several works [16,17,18,19,20] have sought more efficient solutions to problems that arise during pedestrian feature extraction. Li et al. [16] propose part-guided representation (PGR), which consists of a pose-invariant feature (PIF) and a local descriptive feature (LDF), to handle significant pose variations and misalignment errors in person images. Zhang et al. [17] present view confusion feature learning (VCFL), an end-to-end trainable system that learns view-invariant features by removing view-specific information. Han et al. [18] present a fine- and coarse-grained unsupervised (FCU) feature-learning framework with global and local branches, which accounts for both the spatial integrity of a person's attributes and the discriminative features of distinct patches. Wei et al. [19] introduce a self-inspirited feature learning (SIF) technique, which uses a basic adversarial learning strategy to encourage the network to learn more discriminative human representations. However, these representation learning methods capture a lot of background interference during training, so they do not handle pedestrian background variations well. This paper aims to overcome this bottleneck of representation learning under background variations: the proposed TL-TransNet captures pedestrian body features while retaining valuable background information.

2.2. Metric Learning for Person Re-ID

Several scholars have achieved significant advances in metric learning [21,22,23,24,25,26]. Chen et al. [21] introduce the deep top-rank counter measure, a metric based on a generalized logistic function that is readily applicable in deep learning, to approximately maximize the number of correct top-rank matches. Nguyen and De Baets [23] propose a kernel method that results in a nonlinear transformation; it can also be used to learn distance metrics for structured objects that have no vectorial representation. Despite these encouraging advances, person Re-ID remains difficult because of the complex changes in human appearance across camera viewpoints. To tackle this problem, Yang et al. [24] introduce a logistic discriminant metric learning approach that uses both original and auxiliary data during training, motivated by the learning-using-privileged-information paradigm. Yu et al. [25] propose an unsupervised asymmetric distance metric based on cross-view clustering, which learns a specific feature transformation for each camera view to handle view-specific feature distortions. The proposed TL-TransNet uses a two-fold loss for metric learning in the training phase, which can effectively distinguish similar-looking pedestrians with different identities from pedestrians with the same identity under different backgrounds.

2.3. Re-Ranking for Person Re-ID

The primary idea behind re-ranking [27,28,29,30,31,32,33,34] is to refine the initial ranking list by exploiting gallery-to-gallery similarities, boosting retrieval performance as a post-processing step. Guo et al. [27] introduce inverse density-adaptive kernel-based re-ranking (inv-DAKR) and bidirectional density-adaptive kernel-based re-ranking (bi-DAKR), two simple yet efficient re-ranking algorithms based on a smooth kernel function with a density-adaptive parameter. Xu et al. [28] present a feature-relation-map-based similarity evaluation (FRM-SE) model that uses convolution operations to automatically mine the latent relations among k-neighbors, reducing queries and memory use. Wu et al. [29] explore RANkinG Ensembles (RANGE), which learn probe-specific matching information against the gallery set encoded in ranking lists and tackle the problem of a breach of deployment. In contrast with the above methods, this paper fuses background-removed features in the retrieval phase: the proposed re-ranking method combines two types of distance metrics to improve rank-lists affected by background interference.

2.4. Semantic Segmentation

In recent years, semantic segmentation has achieved remarkable success. Wang et al. [35] proposed a one-pixel comparison algorithm for semantic segmentation in fully supervised settings, focusing on the global context of the training data. Moreover, Zhou et al. [36] proposed regional semantic contrast and aggregation (RCA), which is equipped with a regional memory bank that stores a large number of diverse object patterns from the training data, providing strong support for exploring dataset-level semantic structure. Later, [37] revealed the limitations of semantic segmentation solutions based on FCN or pixel queries and proposed a non-parametric segmentation method based on non-learnable prototypes that directly shapes the pixel embedding space. Some person Re-ID methods use semantic segmentation in the training phase to completely remove background information. Compared with these methods, this paper uses semantic segmentation to obtain background-removed features in the retrieval phase and improves ranking quality through the mixed metric of the proposed re-ranking method.

3. Proposed Method

3.1. Pipeline and Overview

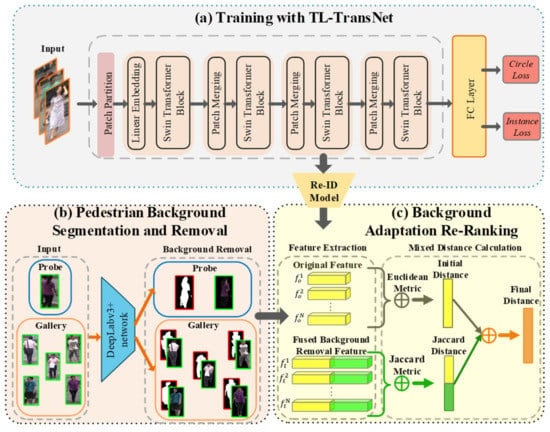

Our motivation is mainly to address the Re-ID challenge of background variations. To alleviate the interference of background variations in both the training and retrieval phases, we propose TL-TransNet and background adaptation re-ranking for person re-identification. As shown in Figure 2, the pipeline of our framework consists of three parts (i.e., training with TL-TransNet, pedestrian background segmentation and removal, and background adaptation re-ranking).

Figure 2.

Framework of the proposed method for person Re-ID.

First, TL-TransNet, a Swin Transformer supervised by two types of losses, is trained on the input data; it concentrates on pedestrian body information while preserving a small amount of valuable background information. Then, DeepLabV3+ is applied to remove background interference from the probe and gallery sets. Finally, a background adaptation re-ranking method based on a mixed similarity metric combines the original and background-removed features to obtain a ranking list that is more robust to background interference.

3.2. Training with TL-TransNet

Architecture. The key to improving model training on pedestrian samples is to reduce background noise interference. At the same time, some valuable background information should be retained to handle similar-looking pedestrians with different identities. The Swin Transformer [38] is adopted as the Re-ID baseline model in this paper. On this basis, this section designs TL-TransNet, supervised by a two-fold loss, to pay more attention to pedestrian identity embeddings during the training phase.

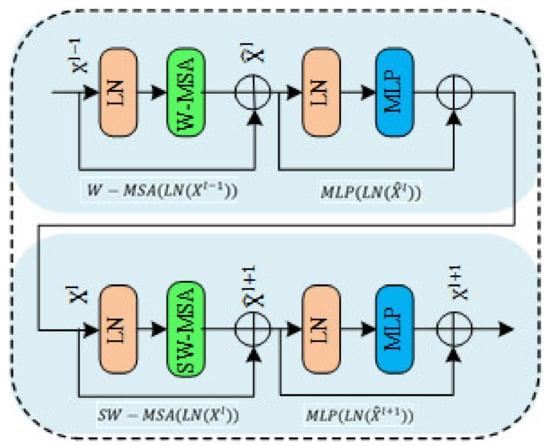

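The Swin Transformer block builds multi-head self-attention on shifted windows. As shown in Figure 3, two Swin Transformer blocks are applied in succession. Each block consists of LayerNorm (LN) layers, a multi-head self-attention module, residual connections, and a multilayer perceptron (MLP) with two fully connected layers and a GELU non-linearity. The two successive blocks use the window-based multi-head self-attention (W-MSA) module and the shifted window-based multi-head self-attention (SW-MSA) module, respectively. With this window partitioning scheme, consecutive Swin Transformer blocks are computed as follows:

$$
\begin{aligned}
\hat{z}^{l} &= \text{W-MSA}\big(\mathrm{LN}(z^{l-1})\big) + z^{l-1},\\
z^{l} &= \mathrm{MLP}\big(\mathrm{LN}(\hat{z}^{l})\big) + \hat{z}^{l},\\
\hat{z}^{l+1} &= \text{SW-MSA}\big(\mathrm{LN}(z^{l})\big) + z^{l},\\
z^{l+1} &= \mathrm{MLP}\big(\mathrm{LN}(\hat{z}^{l+1})\big) + \hat{z}^{l+1},
\end{aligned}
$$

in which $\hat{z}^{l}$ and $z^{l}$ denote the output features of the W-MSA (or SW-MSA) module and the MLP module of block $l$, respectively.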

Figure 3.

Two successive swin transformer blocks.
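To make the alternation between regular and shifted windows concrete, the following minimal sketch partitions a toy grid of patch indices into $M \times M$ windows before and after a cyclic shift. It is an illustration under our own assumptions, not the TL-TransNet code: the helpers `window_partition` and `cyclic_shift` are hypothetical, the shift size $\lfloor M/2 \rfloor$ follows the original Swin Transformer design, and the attention mask that Swin applies to wrapped-around regions is omitted.

```python
from typing import List

Grid = List[List[int]]


def window_partition(grid: Grid, m: int) -> List[List[int]]:
    """Split an H x W grid into non-overlapping m x m windows (row-major)."""
    h, w = len(grid), len(grid[0])
    if h % m or w % m:
        raise ValueError("H and W must be divisible by the window size m")
    windows = []
    for top in range(0, h, m):
        for left in range(0, w, m):
            # Collect the m*m patches of this window in row-major order.
            windows.append([grid[top + i][left + j] for i in range(m) for j in range(m)])
    return windows  # (H*W)/(m*m) windows in total


def cyclic_shift(grid: Grid, s: int) -> Grid:
    """Roll the grid up and to the left by s positions, wrapping around."""
    h, w = len(grid), len(grid[0])
    return [[grid[(r + s) % h][(c + s) % w] for c in range(w)] for r in range(h)]


if __name__ == "__main__":
    H = W = 4
    M = 2
    patches = [[r * W + c for c in range(W)] for r in range(H)]   # patch indices 0..15
    regular = window_partition(patches, M)                        # windows for W-MSA
    shifted = window_partition(cyclic_shift(patches, M // 2), M)  # windows for SW-MSA
    print(len(regular), regular[0], shifted[0])  # 4 [0, 1, 4, 5] [5, 6, 9, 10]
```

With $M = 2$, the first shifted window contains patches 5, 6, 9, and 10, which come from four different regular windows; this is how the SW-MSA block lets information cross the window boundaries of the preceding W-MSA block.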

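Given a pedestrian input of size $H \times W \times C$ and a window size of $M$, the Swin Transformer first reshapes the input into a $\frac{HW}{M^2} \times M^2 \times C$ feature, so that each window contains $M \times M$ patches, for a total of $\frac{HW}{M^2}$ windows. Self-attention is then computed among the patches of each window. For a patch feature $X \in \mathbb{R}^{M^2 \times C}$, the query, key, and value matrices $Q$, $K$, and $V$ are computed as:

$$
Q = XP_Q, \qquad K = XP_K, \qquad V = XP_V,
$$

where $P_Q$, $P_K$, and $P_V$ are projection matrices that are shared across different windows. Self-attention is computed as follows:

$$
\mathrm{Attention}(Q, K, V) = \mathrm{SoftMax}\!\left(\frac{QK^{\top}}{\sqrt{d}} + B\right)V,
$$

where $Q, K, V \in \mathbb{R}^{M^2 \times d}$ and $d$ represents the dimension of the query or key. The values in the relative position bias $B \in \mathbb{R}^{M^2 \times M^2}$ are taken from a smaller bias matrix $\hat{B} \in \mathbb{R}^{(2M-1) \times (2M-1)}$. Because attention is restricted to local windows, the computational cost grows linearly with the number of patches instead of quadratically, as it does for global self-attention.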
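The windowed attention with a relative position bias can be sketched in plain Python for a single head and a single window. This is a minimal sketch, assuming row-major patch order inside the window; all function names are hypothetical, and a practical implementation would use batched tensor operations and multiple heads.

```python
import math
from typing import List

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    return [[sum(a[i][t] * b[t][j] for t in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def transpose(a: Matrix) -> Matrix:
    return [list(col) for col in zip(*a)]


def softmax_rows(a: Matrix) -> Matrix:
    out = []
    for row in a:
        top = max(row)  # subtract the row maximum for numerical stability
        exps = [math.exp(v - top) for v in row]
        total = sum(exps)
        out.append([e / total for e in exps])
    return out


def relative_bias(table: Matrix, m: int) -> Matrix:
    """Expand a (2m-1) x (2m-1) table B_hat into the (m^2 x m^2) bias B.

    Each relative offset (dy, dx) lies in [-(m-1), m-1] and is shifted by m-1
    to index the table.
    """
    coords = [(idx // m, idx % m) for idx in range(m * m)]
    return [[table[yi - yj + m - 1][xi - xj + m - 1] for (yj, xj) in coords]
            for (yi, xi) in coords]


def window_attention(x: Matrix, p_q: Matrix, p_k: Matrix, p_v: Matrix,
                     table: Matrix, m: int) -> Matrix:
    """SoftMax(Q K^T / sqrt(d) + B) V for the m^2 patches of one window."""
    q, k, v = matmul(x, p_q), matmul(x, p_k), matmul(x, p_v)
    d = len(q[0])
    bias = relative_bias(table, m)
    scores = [[s / math.sqrt(d) + b for s, b in zip(s_row, b_row)]
              for s_row, b_row in zip(matmul(q, transpose(k)), bias)]
    return matmul(softmax_rows(scores), v)
```

The bias lookup shows why $\hat{B}$ needs only $(2M-1) \times (2M-1)$ entries: the relative offset between two patches in an $M \times M$ window ranges from $-(M-1)$ to $M-1$ along each axis.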

Loss Function. In this work, a two-fold loss, consisting of circle loss [39] and instance loss [40], is designed to supervise the entire training process of TL-TransNet.

- Circle loss is introduced to strengthen the identity discrimination of pedestrian features. As an improvement on the triplet loss, it assigns each similarity score its own weight, which controls how strongly positive and negative samples contribute to the gradient. Given $L$ classes in a person Re-ID dataset, a triplet input is composed of three samples $x_a$, $x_p$, and $x_n$. $x_a$ is an anchor sample belonging to class $a$, where $a \in \{1, 2, \ldots, L\}$; $x_p$ is a positive sample from the same person class as $x_a$, while $x_n$ is a negative sample taken from a different person class. The circle loss is computed as follows:

$$
\mathcal{L}_{circle} = \log\left[1 + \sum_{j=1}^{L}\sum_{i=1}^{K} \exp\Big(\gamma\big(\alpha_n^{j} s_n^{j} - \alpha_p^{i} s_p^{i}\big)\Big)\right]
$$

Assume that there are $K$ samples in the same class as the anchor $x_a$, and that $L$ is the number of classes in the whole dataset. That is, there are $K$ within-class similarity scores and $L$ between-class similarity scores associated with $x_a$, denoted as $\{s_p^{i}\}\ (i = 1, 2, \ldots, K)$ and $\{s_n^{j}\}\ (j = 1, 2, \ldots, L)$, respectively. $\alpha_p^{i}$ and $\alpha_n^{j}$ are non-negative weighting factors, and $\gamma$ is a scale factor for better within-class similarity separation.

During training, the gradient with respect to $(\alpha_n^{j} s_n^{j} - \alpha_p^{i} s_p^{i})$ is multiplied by $\alpha_n^{j}$ ($\alpha_p^{i}$) when back-propagated to $s_n^{j}$ ($s_p^{i}$). When a similarity score deviates far from its optimum (i.e., $O_n$ for $s_n^{j}$ and $O_p$ for $s_p^{i}$), it should obtain a large weighting factor so as to receive an effective update with a large gradient. To this end, $\alpha_p^{i}$ and $\alpha_n^{j}$ are defined in a self-paced manner:

$$
\alpha_p^{i} = \big[O_p - s_p^{i}\big]_+, \qquad \alpha_n^{j} = \big[s_n^{j} - O_n\big]_+,
$$

where $[\cdot]_+$ is the "cut-off at zero" operation that ensures $\alpha_p^{i}$ and $\alpha_n^{j}$ are non-negative.

- Instance loss is added to provide better weight initialization for TL-TransNet and to encourage the Re-ID model to find fine-grained details that distinguish different pedestrians. Because instance loss explicitly considers the inter-class distance, it can reduce the feature correlation between two pedestrian images. The instance loss is formulated as follows:

$$
P = \mathrm{softmax}\big(W^{\top} f\big), \qquad \mathcal{L}_{instance} = -\log P(c),
$$

where $f$ is a feature vector extracted by TL-TransNet, $f = \mathcal{F}(I)$, in which $I$ denotes the input image and $\mathcal{F}(\cdot)$ is the forward propagation of TL-TransNet. $W$ is the parameter of the final fully connected layer; it can be viewed as the concatenated weights $W = [w_1, w_2, \ldots, w_p]$, where $p$ is the total number of categories during training. Every weight $w_i$ in $W$ is a 2048-dim vector: since the total number of identity classes in the two person Re-ID datasets lies between 1024 and 2048, this dimension is used to unify the network hyperparameters across datasets. $\mathcal{L}_{instance}$ denotes the loss, $P$ denotes the probability over all classes, and $P(c)$ is the predicted probability of the correct class $c$.

The final loss function of TL-TransNet combines circle loss and instance loss and is defined as follows:

$$
\mathcal{L} = \mathcal{L}_{circle} + \lambda\,\mathcal{L}_{instance} \tag{11}
$$

where $\lambda$ is a predefined weight for the instance loss. By integrating the complementary advantages of the two losses (identity discrimination from circle loss and fine-grained features from instance loss), the two-fold loss improves the generalization ability of the Re-ID model.
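The two-fold objective can be sketched for a single anchor as follows. This is a minimal, framework-free sketch of the loss values only, assuming cosine-style similarity scores in $[-1, 1]$ and the combination $\mathcal{L}_{circle} + \lambda\,\mathcal{L}_{instance}$. The defaults $\gamma = 64$, $O_p = 1.25$, and $O_n = -0.25$ (i.e., $O_p = 1 + m$ and $O_n = -m$ with a relaxation margin $m = 0.25$, as in the original circle loss formulation) are illustrative assumptions rather than settings reported in this paper, and all function names are hypothetical.

```python
import math
from typing import Sequence


def circle_loss(s_p: Sequence[float], s_n: Sequence[float], gamma: float = 64.0,
                o_p: float = 1.25, o_n: float = -0.25) -> float:
    """log[1 + sum_j sum_i exp(gamma * (a_n^j * s_n^j - a_p^i * s_p^i))]."""
    a_p = [max(o_p - s, 0.0) for s in s_p]  # alpha_p^i = [O_p - s_p^i]_+
    a_n = [max(s - o_n, 0.0) for s in s_n]  # alpha_n^j = [s_n^j - O_n]_+
    # The double sum factorises into (sum over negatives) * (sum over positives).
    neg = sum(math.exp(gamma * a * s) for a, s in zip(a_n, s_n))
    pos = sum(math.exp(-gamma * a * s) for a, s in zip(a_p, s_p))
    return math.log1p(neg * pos)


def instance_loss(f: Sequence[float], w: Sequence[Sequence[float]], c: int) -> float:
    """-log P(c) with P = softmax(W^T f); w[i] is the weight vector w_i of class i."""
    logits = [sum(wi * fi for wi, fi in zip(row, f)) for row in w]
    top = max(logits)
    log_z = top + math.log(sum(math.exp(z - top) for z in logits))  # log-sum-exp
    return log_z - logits[c]


def two_fold_loss(l_circle: float, l_instance: float, lam: float) -> float:
    """Circle loss plus instance loss weighted by lam (the weight lambda)."""
    return l_circle + lam * l_instance
```

In an autograd framework, $\alpha_p^{i}$ and $\alpha_n^{j}$ would be detached from the computation graph so that they only rescale the gradients flowing to $s_p^{i}$ and $s_n^{j}$, as described for circle loss above.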

3.3. Pedestrian Background Segmentation and Removal

A pedestrian captured by multiple cameras appears against multiple backgrounds, which introduces background interference. This causes the Re-ID model to encode too much background information during feature extraction, which reduces retrieval accuracy. In other words, the abundant background information in each pedestrian image reduces the relative weight of the pedestrian's own features during the retrieval stage. Some existing approaches incorporate an attention mechanism so that the network focuses on the salient parts of pedestrians. However, attention mechanisms are not always robust in complex and variable scenes. Thus, our purpose is to extract robust pedestrian body representations after filtering out the background with image segmentation during feature extraction.

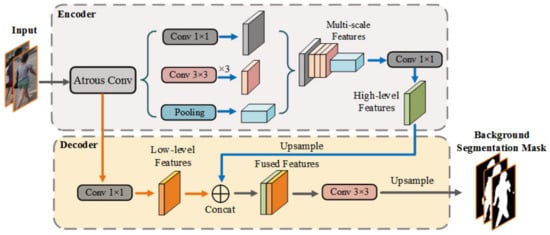

Segmentation results obtained with the original semantic segmentation method are not sufficient for pedestrians. Using such background segmentation results to train the model directly would considerably reduce Re-ID accuracy, both because of distractors such as roadblocks and buildings and because of incomplete pedestrian features. As shown in Figure 4, this paper employs the DeepLabV3+ network as a segmentation tool to segment pedestrians from the original scene. Therefore, the background of the whole image can be removed more thoroughly, and the foreground (pedestrian) can be extracted precisely while the background is cut away.

Figure 4.

The process of background segmentation with DeepLabv3+.

The background segmentation process based on DeepLabV3+ in Figure 4 is divided into two parts, i.e., an encoder and a decoder. In the encoder, the pedestrian images are passed through atrous convolutions to extract multi-scale features, and a 1 × 1 convolution then changes the number of channels to produce high-level features. In the decoder, the high-level features are first upsampled and then concatenated with the corresponding low-level features from the network backbone. The concatenated features are refined with a 3 × 3 convolution and upsampled to obtain the background segmentation mask.

The DeepLabV3+ model used in this paper is evaluated on the PASCAL VOC 2012 semantic segmentation benchmark, which contains 20 foreground object classes and one background class. The original dataset contains 1464 (train), 1449 (val), and 1456 (test) pixel-level annotated images.

Before the test phase of person Re-ID, all pedestrian images in the probe and gallery sets are segmented to limit the influence of background interference on pedestrians as much as possible. Redundant information is erased according to the mask: all background pixel values are set to zero (black), leaving only the colors of essential content such as the pedestrian. The background removal is given in Formula (12):

$$
I = I_o \odot M \tag{12}
$$

where $I_o$ denotes the original image in the probe or gallery set and $M$ is the mask produced by segmenting $I_o$ with the DeepLabV3+ model. All mask pixels except the pedestrian foreground are black (zero). $I$ denotes the background-removed pedestrian image, obtained by applying the mask to the original image pixel by pixel, so that every background pixel becomes zero.
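Formula (12) amounts to keeping only the pixels labelled as person. The sketch below assumes that the segmentation output is an $H \times W$ map of PASCAL VOC class indices (for example, the per-pixel argmax of the DeepLabV3+ logits), in which index 15 is the person class. The helper names are hypothetical, and images are nested lists of RGB tuples to keep the example dependency-free.

```python
from typing import List, Tuple

Pixel = Tuple[int, int, int]
PERSON_CLASS = 15  # "person" index in the 21-class PASCAL VOC label set


def person_mask(label_map: List[List[int]], person_id: int = PERSON_CLASS) -> List[List[int]]:
    """Binary mask M: 1 where the pixel is labelled as person, 0 elsewhere."""
    return [[1 if label == person_id else 0 for label in row] for row in label_map]


def remove_background(image: List[List[Pixel]], mask: List[List[int]]) -> List[List[Pixel]]:
    """I = I_o * M per pixel: background pixels become (0, 0, 0), i.e. black."""
    return [[tuple(ch * m for ch in px) for px, m in zip(img_row, mask_row)]
            for img_row, mask_row in zip(image, mask)]
```

Multiplying by a binary mask is equivalent to subtracting the masked-out part of the image, so either view of Formula (12) yields the same black background.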

Figure 5 shows the whole background removal process. The pedestrians in Figure 5 are in complex and changing public spaces, which prompts the Re-ID model to extract redundant environmental information during the testing phase. For example, the pedestrian's legs are partially occluded by the box in Figure 5, so the Re-ID model may mistakenly extract features of the occluding box as part of the pedestrian's body.

Figure 5.

Some examples of the pedestrian background removal results.

3.4. Background Adaptation Re-Ranking

To improve the final rank-list, a background adaptation re-ranking method is designed to retrieve more of the positive samples that are ranked low because of background variations. In other words, background adaptation re-ranking fuses the original results and the background-removed results to raise the ranks of positive samples affected by background variations. The proposed re-ranking method mainly consists of two steps.

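In the first step, given a probe pedestrian image $p$ and a gallery set $G = \{g_i \mid i = 1, 2, \ldots, N\}$ with $N$ images, the initial ranking is obtained by sorting the pairwise distances between the probe and the gallery images in ascending order. The pairwise distance is the Euclidean distance between the features output by TL-TransNet. The k-nearest neighbors of the probe $p$ (i.e., the top-$k$ samples of the initial ranking list) are defined as follows:

$$
N(p, k) = \{g_1^0, g_2^0, \ldots, g_k^0\}, \qquad |N(p, k)| = k,
$$

where $|\cdot|$ denotes the number of candidates in the set.

Following [41], the k-reciprocal nearest neighbor set $R(p, k)$ is defined as:

$$
R(p, k) = \{g_i \mid (g_i \in N(p, k)) \wedge (p \in N(g_i, k))\}
$$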

Due to background variations, some positive samples tend to rank low in the initial rank-list. In the second step, the background adaptation set of probe $p$ is designed on the basis of $R(p, k)$. Its similarity distances are calculated from a fusion of the original and background-removed features, obtained by concatenation (dimension splicing):

$$
f = [\,f_o ; f_b\,]
$$

where $f_o$ and $f_b$ denote the original and background-removed feature vectors, respectively, both extracted by TL-TransNet. The background adaptation set can then be defined as:

$$
R_{BA}(p, k) = R(p, k) \cup N_f(p, k)
$$

where $N_f(p, k)$ represents the k-nearest neighbor set obtained with the fused features.
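The neighbor sets of the first step and the feature fusion of the second step can be sketched as follows. This is a simplified illustration, not the paper's implementation: features are small Python lists, probe and gallery samples share one index space (index 0 is the probe), a sample is not counted as its own neighbor, and the background adaptation set is assumed to be the union of $R(p, k)$ on the original features and $N_f(p, k)$ on the fused features. All function names are hypothetical.

```python
import math
from typing import List, Sequence, Set

Vec = Sequence[float]


def euclidean(a: Vec, b: Vec) -> float:
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def knn(i: int, feats: List[Vec], k: int) -> List[int]:
    """N(i, k): indices of the k samples closest to sample i (i itself excluded)."""
    others = [j for j in range(len(feats)) if j != i]
    return sorted(others, key=lambda j: euclidean(feats[i], feats[j]))[:k]


def k_reciprocal(i: int, feats: List[Vec], k: int) -> Set[int]:
    """R(i, k) = {j in N(i, k) | i in N(j, k)}."""
    return {j for j in knn(i, feats, k) if i in knn(j, feats, k)}


def fuse(orig: List[Vec], bg_removed: List[Vec]) -> List[List[float]]:
    """Concatenate ("dimension splicing") original and background-removed features."""
    return [list(a) + list(b) for a, b in zip(orig, bg_removed)]


def background_adaptation_set(i: int, orig: List[Vec], bg_removed: List[Vec],
                              k: int) -> Set[int]:
    """Assumed form: R(i, k) on original features united with N_f(i, k) on fused ones."""
    return k_reciprocal(i, orig, k) | set(knn(i, fuse(orig, bg_removed), k))


if __name__ == "__main__":
    # Sample 2 is far from the probe in the original space but close after background removal.
    orig = [[0.0, 0.0], [0.1, 0.0], [3.0, 0.0], [1.0, 0.0]]
    bg = [[0.0, 0.0], [0.0, 0.1], [0.05, 0.0], [5.0, 5.0]]
    print(k_reciprocal(0, orig, 2), background_adaptation_set(0, orig, bg, 2))
```

In this toy example, gallery sample 2 is far from the probe in the original feature space (as with a different background) but close after background removal, so it enters the candidate set only through the fused features.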

The Jaccard distance measures the dissimilarity between two samples through the overlap of their neighbor sets: if two images belong to the same pedestrian but have different backgrounds, their k-reciprocal nearest neighbor sets are expected to overlap. To add more positive candidates, the proposed re-ranking recalculates the Jaccard distance using the background adaptation set.

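The final distance of our re-ranking combines the original (Euclidean) distance and the Jaccard distance, as follows:

$$
d^{*}(p, g_i) = (1 - \mu)\, d_J(p, g_i) + \mu\, d_E(p, g_i)
$$

where $\mu \in [0, 1]$ balances the two terms, and $d_J(p, g_i)$ and $d_E(p, g_i)$ denote the Jaccard distance and the Euclidean distance between $p$ and $g_i$, respectively.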
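The final distance can be sketched with plain Python sets. This is a simplified, set-based Jaccard distance; the k-reciprocal re-ranking of [41] instead encodes neighbor sets as Gaussian-weighted vectors. The weight name `mu` and both helper names are hypothetical.

```python
from typing import Set


def jaccard_distance(a: Set[int], b: Set[int]) -> float:
    """d_J = 1 - |A & B| / |A | B|; defined as 0 when both sets are empty."""
    union = a | b
    if not union:
        return 0.0
    return 1.0 - len(a & b) / len(union)


def final_distance(d_jaccard: float, d_euclid: float, mu: float) -> float:
    """d* = (1 - mu) * d_J + mu * d_E with mu in [0, 1]."""
    if not 0.0 <= mu <= 1.0:
        raise ValueError("mu must lie in [0, 1]")
    return (1.0 - mu) * d_jaccard + mu * d_euclid
```

For example, two neighbor sets {1, 2, 3} and {2, 3, 4} share two of four candidates, giving a Jaccard distance of 0.5; a larger `mu` shifts the final ranking back toward the original Euclidean distance.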

4. Experimental Section

4.1. Datasets and Evaluation Metric

Datasets: As shown in Table 1, we used two benchmark Re-ID datasets including Market-1501 and DukeMTMC-reID. Brief descriptions of these datasets are given below:

- Market-1501 is a dataset of 32,668 pedestrian images collected from 6 cameras on a campus. It is divided into two subsets: the training set consists of 12,936 images of 751 identities, and the test set consists of 19,281 images of 750 identities.

- DukeMTMC-reID is a large-scale person re-identification dataset captured by eight different cameras in the real world. Its training set contains 16,522 images of 702 IDs, and its test set covers 702 IDs with 2228 query images and 17,661 gallery images.

Table 1.

Person Re-ID datasets introduction.


| Benchmark | Item | Total | Train | Test |
|---|---|---|---|---|
| Market-1501 | ID | 1501 | 751 | 750 |
| Market-1501 | Image | 32,668 | 12,936 | 19,281 |
| DukeMTMC-ReID | ID | 1404 | 702 | 702 |
| DukeMTMC-ReID | Image | 36,411 | 16,522 | 17,661 |

Evaluation Metrics: Two widely used evaluation metrics are employed to evaluate person Re-ID predictions: mean average precision (mAP) and the cumulative matching characteristic (CMC). Rank-1 (top-1) accuracy is the fraction of queries whose top-ranked gallery image has the same identity as the query. Rank-5 (top-5) accuracy is the fraction of queries for which at least one of the five top-ranked gallery images matches the query identity.
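Both metrics can be computed per query from the ranked gallery list. The following is a minimal sketch, assuming that junk images (e.g., gallery images of the query identity captured by the same camera, which the standard Market-1501 and DukeMTMC-reID protocols discard) have already been removed; the function name is hypothetical.

```python
from typing import List, Sequence, Tuple


def cmc_and_ap(ranked_ids: Sequence[int], query_id: int,
               max_rank: int = 5) -> Tuple[List[int], float]:
    """Return (CMC curve up to max_rank, average precision) for a single query.

    ranked_ids: gallery identities sorted by ascending distance to the query,
    with junk images already removed.
    """
    matches = [1 if g == query_id else 0 for g in ranked_ids]
    if not any(matches):
        raise ValueError("the query identity does not occur in the gallery")
    first_hit = matches.index(1)  # 0-based position of the first correct match
    cmc = [1 if r >= first_hit else 0 for r in range(max_rank)]
    hits, precisions = 0, []
    for rank, is_match in enumerate(matches, start=1):
        if is_match:
            hits += 1
            precisions.append(hits / rank)  # precision at each correct match
    return cmc, sum(precisions) / hits
```

Rank-1 and Rank-5 are then the averages of `cmc[0]` and `cmc[4]` over all queries, and mAP is the average of the per-query AP values.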

4.2. Experimental Settings

The experiments run on Ubuntu 20.04.1 LTS with an 11th Gen Intel Core i7-11700K processor, 32 GB of memory, and an Nvidia RTX A1000 (24 GB) graphics card, using CUDA 11.6, Python 3.6, and PyTorch 1.10.4. The network is trained with stochastic gradient descent with momentum (SGDM), and the Swin Transformer serves as the backbone of our model. We train the model with a batch size of 80, and each image is resized to 224 × 224. All models are trained for 100 epochs, with the learning rate initialized to 0.05 and the dropout rate to 0.5. To improve performance, the Re-ID model is trained jointly with circle loss and instance loss.

4.3. Ablation Studies

To further justify the contribution of TL-TransNet, we conduct ablation analyses in Table 2. We first evaluate the effect of adding TL-TransNet: it improves Re-ID performance by 13.35% in mAP and 5.79% in Rank-1 accuracy on Market-1501. We also evaluate on DukeMTMC-ReID, where TL-TransNet outperforms ResNet-50 by a larger margin. This validates the design of TL-TransNet, which pays more attention to the pedestrian body than to the surrounding environment. TL-TransNet with background adaptation re-ranking (BAR for short) significantly outperforms ResNet-50, by 6.98% Rank-1 and 19.95% mAP on Market-1501 and by 22.44% Rank-1 and 38.26% mAP on DukeMTMC-ReID. This demonstrates the superiority of TL-TransNet + BAR over ResNet-50 and suggests that adapting the ranking to background variations is more effective for person Re-ID than treating pedestrians in all scenes in the same way during retrieval.

Table 2.

Ablation studies of the proposed method on individual components.

To verify whether our two-fold loss is the optimal choice, we further conduct ablation experiments with different types of losses in Table 3, evaluating different combinations of two losses on TL-TransNet. Our two-fold loss improves Re-ID performance by up to 1.28% in mAP and 6.96% in Rank-1 accuracy on Market-1501. Similarly, on DukeMTMC-ReID, it improves Re-ID performance by up to 3.81% in mAP and 12.37% in Rank-1 accuracy. These results show that the proposed two-fold loss is superior to contrastive loss.

Table 3.

Ablation studies of TL-TransNet with other loss.

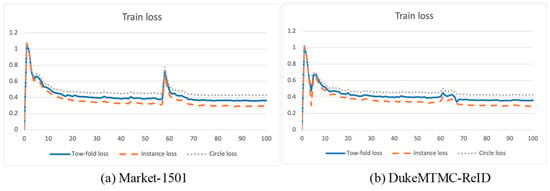

To verify the effect of the two losses (i.e., circle loss and instance loss), we conduct ablation experiments with different loss selections, as shown in Table 4 and Figure 6. The Re-ID performance of our two-fold loss is superior to that of instance loss or circle loss alone. We also observe that the circle loss value is larger than the instance loss value throughout the training phase. So that the two losses supervise and penalize the model equally during training, our two-fold loss fuses circle loss for identity discrimination and instance loss for fine-grained feature extraction with equal contribution.

Table 4.

The effectiveness of loss selections on TL-TransNet.

Figure 6.

Loss function curves when training on Person Re-ID datasets.

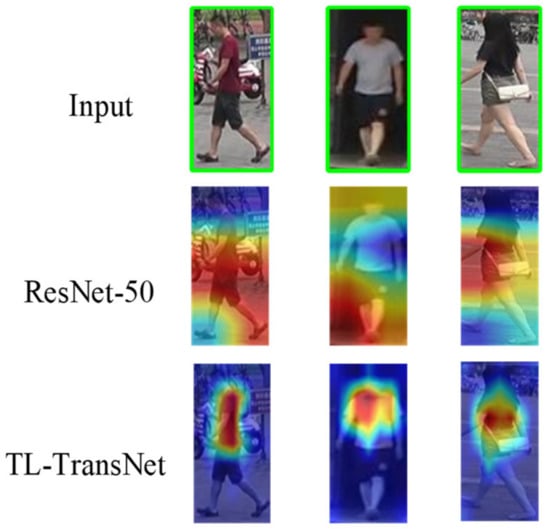

4.4. Visualized Attention Maps of the TL-TransNet

To better understand the effect of the proposed network, we visualize the final output feature maps of TL-TransNet and ResNet-50 in Figure 7. ResNet-50 pays little attention to regional features, whereas TL-TransNet focuses on regional features more effectively, as shown in the first and second rows of Figure 7. Furthermore, TL-TransNet strikes a better balance: it focuses on more local parts of the person's body while still separating the person from the surroundings. Compared with ResNet-50, the multi-scale attention of TL-TransNet is better at extracting discriminative Re-ID features from raw images, whereas ResNet-50 tends to capture global features and ignore significant local features. The results show that TL-TransNet enables the Re-ID model to capture pedestrian body features more precisely.

Figure 7.

Visualization of feature maps of different Re-ID models (i.e., ResNet-50 and TL-TransNet).

4.5. The Impact of the Weighting Parameter on the Two-Fold Loss

To verify the effectiveness of the proposed two-fold loss function in Formula (11), its key weighting parameter, which takes values between 0 and 1, is varied to find the optimal choice. Table 5 compares Rank-1 and mAP of the two-fold loss under different values of this parameter. Both Rank-1 and mAP are best when the parameter is set to 0.7. Overall, the parameter has only a weak effect on the final Re-ID performance, which indicates that the two-fold loss is insensitive to this parameter and robust across different person Re-ID datasets.
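The parameter study can be organized as a simple grid sweep. The sketch below assumes a convex combination of the two loss terms (the exact form and term order of Formula (11) may differ) and uses a hypothetical `evaluate` callback standing in for a full train-and-test run that returns a metric such as mAP; the toy callback in the usage line is not real data.

```python
def weighted_two_fold_loss(l_circle, l_instance, lam):
    """Convex combination of the two loss terms (assumed form of Formula (11))."""
    if not 0.0 <= lam <= 1.0:
        raise ValueError("lam must lie in [0, 1]")
    return lam * l_circle + (1.0 - lam) * l_instance


def sweep(evaluate, values):
    """Return (best_value, score) for the value with the highest evaluate() score.

    evaluate: hypothetical callback that trains and tests with a given weight
    and returns a scalar metric such as mAP.
    """
    scores = {v: evaluate(v) for v in values}
    best = max(scores, key=scores.get)
    return best, scores[best]


# Candidate weights 0.1, 0.2, ..., 0.9 with a toy scoring function.
values = [round(0.1 * k, 1) for k in range(1, 10)]
print(sweep(lambda v: -(v - 0.7) ** 2, values))
```

A flat score profile across `values` would correspond to the weak sensitivity reported in Table 5.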

Table 5.

Effect of the weighting parameter on TL-TransNet.

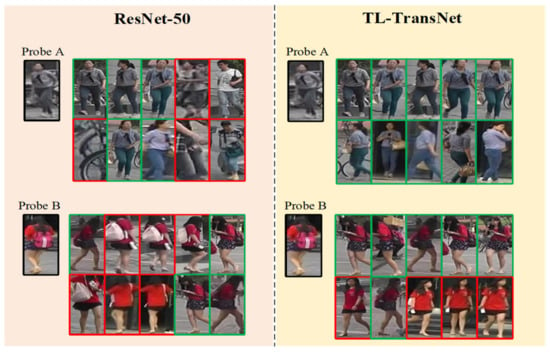

4.6. Rank-List Visualization Analysis

For each probe pedestrian, the top-10 retrieval results of TL-TransNet and ResNet-50 are shown in Figure 8. Green boxes indicate correct matches and red boxes indicate incorrect matches. Numbers 1–4 denote the query results of four different test samples.

Figure 8.

Top 10 visualization results of the rank-list on Market-1501.

In the inference step, given a query image, TL-TransNet can quickly find images with the same ID in the gallery. The gallery can be a cluttered collection of images or a series of video sequences with serious background noise. As shown in Figure 8, we test several samples on TL-TransNet and ResNet-50. For both simple samples (e.g., 1) and difficult samples (e.g., 2), TL-TransNet returns the correct matches (provided the query identity is present in the gallery). Moreover, TL-TransNet outperforms ResNet-50 on every query shown.
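A rank list such as those in Figure 8 can be produced by sorting gallery features by distance to the query and flagging each of the top-k entries as a correct (green) or incorrect (red) match. The sketch below assumes cosine distance and plain Python lists; the standard Market-1501 protocol additionally discards gallery images of the same identity taken by the same camera as the query, which this simplified version omits. Function names are illustrative.

```python
import math


def cosine_distance(a, b):
    """1 - cosine similarity between two feature vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return 1.0 - dot / (na * nb)


def top_k_rank_list(query_feat, query_id, gallery, k=10):
    """Rank gallery entries by cosine distance to the query.

    gallery: list of (feature, person_id) tuples.
    Returns [(gallery_index, is_correct_match), ...] for the k nearest entries;
    True would be drawn as a green box and False as a red box.
    """
    order = sorted(range(len(gallery)),
                   key=lambda i: cosine_distance(query_feat, gallery[i][0]))
    return [(i, gallery[i][1] == query_id) for i in order[:k]]


gallery = [([1.0, 0.0], 5), ([0.0, 1.0], 7), ([0.9, 0.1], 5)]
print(top_k_rank_list([1.0, 0.0], 5, gallery, k=3))
```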

4.7. Comparison with State-of-the-Art Methods

TL-TransNet with BAR is applied to Market-1501 and DukeMTMC-ReID, respectively. To balance the contribution of the two-fold loss, is used in the following experiments. As shown in Table 6, we have the following observations:

Table 6.

Comparison with the state-of-the-art methods.

On Market-1501, the proposed method achieves 92.60% mAP and 95.34% Rank-1 accuracy, improving the state-of-the-art performance by 3.07% and 0.91%, respectively. Compared with DDC, the Rank-1 and mAP of our method increase by 8.44% and 23.2%, respectively. We also compare against Improved ResNet, a strong person Re-ID network that optimizes the structure of ResNet-50; our TL-TransNet + BAR outperforms it by a large margin. Although SORN achieves a high Rank-1 accuracy of 94.8%, only 0.54% below ours, its mAP is clearly inferior to that of TL-TransNet + BAR.
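The comparison relies on two metrics: Rank-1 (whether the top-ranked gallery image is a true match) and mAP (the mean over queries of average precision along the ranked list). The sketch below computes both from per-query match flags using the standard non-interpolated definition of average precision; official evaluation scripts may differ in details such as interpolation and junk-image filtering, and the function names are illustrative.

```python
def rank1_and_ap(ranked_is_match):
    """Rank-1 hit and average precision for one query.

    ranked_is_match: booleans over the full ranked gallery, True where the
    gallery image shares the query identity.
    """
    rank1 = 1.0 if ranked_is_match and ranked_is_match[0] else 0.0
    hits, precision_sum = 0, 0.0
    for position, is_match in enumerate(ranked_is_match, start=1):
        if is_match:
            hits += 1
            precision_sum += hits / position  # precision at this recall point
    ap = precision_sum / hits if hits else 0.0
    return rank1, ap


def mean_metrics(per_query):
    """Average (Rank-1, AP) pairs over queries; returns percentages."""
    n = len(per_query)
    r1 = sum(r for r, _ in per_query) / n
    m_ap = sum(a for _, a in per_query) / n
    return 100.0 * r1, 100.0 * m_ap


queries = [rank1_and_ap([True, False, True]), rank1_and_ap([False, True])]
print(mean_metrics(queries))
```

Because AP rewards every true match in the list, a method can have a high Rank-1 but a lower mAP when its later matches are ranked poorly, which is the pattern described for SORN above.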

On DukeMTMC-ReID, our TL-TransNet + BAR achieves the best Rank-1 accuracy (88.91%) and the third-best mAP (84.83%). While SORN and BiFeNet + re-ranking + Circle loss perform better in terms of Rank-1 and mAP, respectively, their ranking optimization methods are inferior to ours, making them less accurate than TL-TransNet + BAR. In contrast, TL-TransNet + BAR uses background-filtering re-ranking to achieve very competitive performance. Compared with AOPS, which extracts features from images of occluded pedestrians, TL-TransNet + BAR significantly improves the performance on this dataset. These results indicate that our method copes well with background variations in person Re-ID.
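The core idea of background-filtering re-ranking, combining distances computed on original images with distances computed on background-removed images, can be illustrated with a simple weighted distance fusion. This is a stand-in, not the paper's BAR formulation: the linear blend and the weight `alpha` are hypothetical choices for illustration only.

```python
def fused_distances(dist_original, dist_bg_removed, alpha=0.5):
    """Blend per-gallery distances from original and background-removed images.

    alpha: hypothetical weight on the background-removed distances.
    """
    return [(1.0 - alpha) * d_o + alpha * d_b
            for d_o, d_b in zip(dist_original, dist_bg_removed)]


def rerank(dist_original, dist_bg_removed, alpha=0.5):
    """Return gallery indices sorted by the fused distance (ascending)."""
    fused = fused_distances(dist_original, dist_bg_removed, alpha)
    return sorted(range(len(fused)), key=fused.__getitem__)


# Gallery image 1 looks far from the query with its background,
# but close once the background is removed.
d_orig = [0.2, 0.9, 0.5]
d_bg = [0.3, 0.1, 0.6]
print(rerank(d_orig, d_bg, alpha=0.0), rerank(d_orig, d_bg, alpha=0.5))
```

In this toy case the image with a large background deviation moves from the last position to the second, which is the kind of positive sample the re-ranking step aims to recover.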

5. Conclusions

In this work, we proposed a Swin Transformer based on two-fold loss (TL-TransNet) and background adaptation re-ranking (BAR) for person Re-ID. During the training phase, TL-TransNet, supervised by circle loss and instance loss, focuses on the pedestrian body while preserving valuable background information. In the retrieval phase, DeepLabV3+ is used as a background segmentation model on the query and gallery sets to generate mask images, and the background removal results are obtained according to the masks. Then, background adaptation re-ranking is designed to dig out more positive samples with large background deviation, which improves the quality of the rank list. Extensive experimental results show that the proposed method outperforms or is competitive with other state-of-the-art methods in alleviating background variation issues.
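The background removal step amounts to keeping the pixels that the segmentation model labels as person and blanking the rest. The sketch below assumes the segmentation output has already been converted to a per-pixel class map (e.g., by an argmax over class scores) and that the person class has index 15, as in the PASCAL VOC label set; both are assumptions about the DeepLabV3+ configuration, and the function name is illustrative.

```python
def remove_background(image, label_map, person_label=15, fill=(0, 0, 0)):
    """Keep pixels labelled as person and replace all others with `fill`.

    image: H x W nested list of RGB tuples.
    label_map: H x W nested list of per-pixel class indices from a
        segmentation model (person_label=15 assumes PASCAL VOC labels).
    """
    return [[pixel if label == person_label else fill
             for pixel, label in zip(img_row, lbl_row)]
            for img_row, lbl_row in zip(image, label_map)]


image = [[(255, 0, 0), (0, 255, 0)], [(10, 20, 30), (40, 50, 60)]]
labels = [[15, 0], [15, 15]]
print(remove_background(image, labels))
```

Features extracted from these background-removed images would then supply the second set of distances used during re-ranking.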

Author Contributions

Conceptualization, Q.W., H.H., Y.Z. and W.M.; methodology, Q.W., H.H., Y.Z. and W.M.; software, Q.W., H.H., Y.Z. and D.X.; formal analysis, Q.W., H.H., Y.Z. and Q.H.; writing—original draft preparation, Q.W., H.H., Y.Z., Q.H., D.X. and C.X.; writing—review and editing, Q.W., Q.H., D.X. and C.X.; supervision, W.M. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the National Natural Science Foundation of China (Grant No. 62076117 and No. 62166026) and Jiangxi Key Laboratory of Smart City (Grant No. 20192BCD40002).

Conflicts of Interest

The authors declare no conflict of interest.

References

- Wang, X.; Liu, M.; Raychaudhuri, D.S.; Paul, S.; Wang, Y.; Roy-Chowdhury, A.K. Learning person re-identification models from videos with weak supervision. IEEE Trans. Image Process. 2021, 30, 3017–3028.

- Yang, Q.; Wu, A.; Zheng, W. Person re-identification by contour sketch under moderate clothing change. IEEE Trans. Pattern Anal. Mach. Intell. 2021, 43, 2029–2046.

- Lin, S.; Li, C.-T.; Kot, A.C. Multi-domain adversarial feature generalization for person re-identification. IEEE Trans. Image Process. 2021, 30, 1596–1607.

- Zhao, H.; Min, W.; Xu, J.; Han, Q.; Wang, Q.; Yang, Z.; Zhou, L. SPACE: Finding key-speaker in complex multi-person scenes. IEEE Trans. Emerg. Topics Comput. 2021.

- Wang, Q.; Min, W.; Han, Q.; Liu, Q.; Zha, C.; Zhao, H.; Wei, Z. Inter-domain adaptation label for data augmentation in vehicle re-identification. IEEE Trans. Multimed. 2022, 24, 1031–1041.

- Wang, S.; Duan, L.; Yang, N.; Dong, J. Person re-identification with deep dense feature representation and Joint Bayesian. In Proceedings of the 2017 IEEE International Conference on Image Processing (ICIP), Beijing, China, 17–20 September 2017; pp. 3560–3564.

- Song, C.; Huang, Y.; Ouyang, W.; Wang, L. Mask-guided contrastive attention model for person re-identification. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–23 June 2018; pp. 1179–1188.

- Yang, C.; Qi, F.; Jia, H. Part-weighted deep representation learning for person re-identification. In Proceedings of the 2020 International Conference on Computing and Data Science (CDS), Stanford, CA, USA, 1–2 August 2020; pp. 36–39.

- Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Gelly, S.; et al. An image is worth 16x16 words: Transformers for image recognition at scale. In Proceedings of the International Conference on Learning Representations (ICLR), Virtual, 3–7 May 2021.

- Tian, M.; Yi, S.; Li, H.; Li, S.; Zhang, X.; Shi, J.; Yan, J.; Wang, X. Eliminating background-bias for robust person re-identification. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 5794–5803.

- Huang, Y.; Wu, Q.; Xu, J.; Zhong, Y. SBSGAN: Suppression of inter-domain background shift for person re-identification. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Seoul, Korea, 27 October–2 November 2019; pp. 9526–9535.

- Mansouri, N.; Ammar, S.; Kessentini, Y. Improving person re-identification by combining Siamese convolutional neural network and re-ranking process. In Proceedings of the 2019 16th IEEE International Conference on Advanced Video and Signal Based Surveillance (AVSS), Taipei, Taiwan, 18–21 September 2019; pp. 1–8.

- Chen, L.C.; Zhu, Y.; Papandreou, G.; Schroff, F.; Adam, H. Encoder-decoder with atrous separable convolution for semantic image segmentation. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 801–818.

- Li, Y.; Zhou, L.; Hu, X.; Zhang, J. A combined feature representation of deep feature and hand-crafted features for person re-identification. In Proceedings of the 2016 International Conference on Progress in Informatics and Computing (PIC), Shanghai, China, 23–25 December 2016; pp. 224–227.

- Zheng, S.; Li, X.; Men, A.; Guo, X.; Yang, B. Integration of deep features and hand-crafted features for person re-identification. In Proceedings of the 2017 IEEE International Conference on Multimedia & Expo Workshops (ICMEW), Hong Kong, China, 10–14 July 2017; pp. 674–679.

- Li, J.; Zhang, S.; Tian, Q.; Wang, M.; Gao, W. Pose-guided representation learning for person re-identification. IEEE Trans. Pattern Anal. Mach. Intell. 2022, 44, 622–635.

- Zhang, L.; Liu, F.; Zhang, D. Adversarial view confusion feature learning for person re-identification. IEEE Trans. Circ. Syst. Video Technol. 2022, 31, 1490–1502.

- Han, H.; Tang, J.; Huang, L.; Zhang, Y. Fine and coarse-grained feature learning for unsupervised person re-identification. In Proceedings of the 2021 International Conference on Computer Engineering and Artificial Intelligence (ICCEAI), Shanghai, China, 27–29 August 2021; pp. 314–318.

- Wei, L.; Wei, Z.; Jin, Z.; Yu, Z.; Huang, J.; Cai, D.; He, X.; Hua, X.-S. SIF: Self-inspirited feature learning for person re-identification. IEEE Trans. Image Process. 2020, 29, 4942–4951.

- Zhao, C.; Lv, X.; Zhang, Z.; Zuo, W.; Wu, J.; Miao, D. Deep fusion feature representation learning with hard mining center-triplet loss for person re-identification. IEEE Trans. Multimed. 2020, 22, 3180–3195.

- Chen, C.; Dou, H.; Hu, X.; Peng, S. Deep top-rank counter metric for person re-identification. In Proceedings of the 2020 25th International Conference on Pattern Recognition (ICPR), Milan, Italy, 10–15 January 2021; pp. 2732–2739.

- Fernando, D.N.; del Carmen, D.J.; Cajote, R. Descriptor extraction and distance metric learning for a robust person re-identification system. In Proceedings of the TENCON 2018—2018 IEEE Region 10 Conference, Jeju, Korea, 28–31 October 2018; pp. 477–482.

- Nguyen, B.; De Baets, B. Kernel distance metric learning using pairwise constraints for person re-identification. IEEE Trans. Image Process. 2019, 28, 589–600.

- Yang, X.; Wang, M.; Tao, D. Person re-identification with metric learning using privileged information. IEEE Trans. Image Process. 2018, 27, 791–805.

- Yu, H.-X.; Wu, A.; Zheng, W.-S. Unsupervised person re-identification by deep asymmetric metric embedding. IEEE Trans. Pattern Anal. Mach. Intell. 2020, 42, 956–973.

- Xu, R.; Shen, F.; Wu, H.; Zhu, J.; Zeng, H. Dual modal meta metric learning for attribute-image person re-identification. In Proceedings of the 2021 IEEE International Conference on Networking, Sensing and Control (ICNSC), Xiamen, China, 3–5 December 2021; pp. 1–6.

- Guo, R.-P.; Li, C.-G.; Li, Y.; Lin, J. Density-adaptive kernel based re-ranking for person re-identification. In Proceedings of the 2018 24th International Conference on Pattern Recognition (ICPR), Beijing, China, 20–24 August 2018; pp. 982–987.

- Xu, T.; Zhao, X.; Hou, J.; Zhang, J.; Hao, X.; Yin, J. A general re-ranking method based on metric learning for person re-identification. In Proceedings of the 2020 IEEE International Conference on Multimedia and Expo (ICME), London, UK, 6–10 July 2020; pp. 1–6.

- Wu, G.; Zhu, X.; Gong, S. Person re-identification by ranking ensemble representations. In Proceedings of the 2019 IEEE International Conference on Image Processing (ICIP), Taipei, Taiwan, 22–25 September 2019; pp. 2259–2263.

- Bai, S.; Tang, P.; Torr, P.H.; Latecki, L.J. Re-ranking via metric fusion for object retrieval and person re-identification. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 740–749.

- Jiang, L.; Liang, C.; Xu, D.; Huang, W. Multi-similarity re-ranking for person re-identification. In Proceedings of the 2019 IEEE International Conference on Image Processing (ICIP), Taipei, Taiwan, 22–25 September 2019; pp. 1212–1216.

- Chen, S.; Guo, C.; Lai, J. Deep ranking for person re-identification via joint representation learning. IEEE Trans. Image Process. 2016, 25, 2353–2367.

- Mortezaie, Z.; Hassanpour, H.; Beghdadi, A. A color-based re-ranking process for people re-identification: Paper ID 21. In Proceedings of the 2021 European Workshop on Visual Information Processing (EUVIP), Paris, France, 23–25 June 2021; pp. 1–5.

- Zhang, X.; Li, N.; Zhang, R.; Li, G. Pedestrian re-identification method based on bilateral feature extraction network and re-ranking. In Proceedings of the 2021 International Conference on Artificial Intelligence, Big Data and Algorithms (CAIBDA), Xi’an, China, 28–30 May 2021; pp. 191–197.

- Wang, W.; Zhou, T.; Yu, F.; Dai, J.; Konukoglu, E.; Gool, L.V. Exploring cross-image pixel contrast for semantic segmentation. In Proceedings of the 2021 IEEE/CVF International Conference on Computer Vision (ICCV), Montreal, QC, Canada, 10–17 October 2021; pp. 7283–7293.

- Zhou, T.; Zhang, M.; Zhao, F.; Li, J. Regional semantic contrast and aggregation for weakly supervised semantic segmentation. arXiv 2022, arXiv:2203.09653.

- Zhou, T.; Wang, W.; Konukoglu, E.; Gool, L.V. Rethinking semantic segmentation: A prototype view. arXiv 2022, arXiv:2203.15102.

- Liu, Z.; Lin, Y.; Cao, Y.; Hu, H.; Wei, Y.; Zhang, Z.; Lin, S.; Guo, B. Swin transformer: Hierarchical vision transformer using shifted windows. In Proceedings of the 2021 IEEE/CVF International Conference on Computer Vision (ICCV), Montreal, QC, Canada, 10–17 October 2021; pp. 9992–10002.

- Sun, Y.; Cheng, C.; Zhang, Y.; Zhang, C.; Zheng, L.; Wang, Z.; Wei, Y. Circle loss: A unified perspective of pair similarity optimization. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020; pp. 6398–6407.

- Zheng, Z.; Zheng, L.; Garrett, M.; Yang, Y.; Xu, M.; Shen, Y.D. Dual-path convolutional image-text embeddings with instance loss. ACM Trans. Multimed. Comput. 2020, 16, 1–23.

- Zhong, Z.; Zheng, L.; Cao, D.; Li, S. Re-ranking person re-identification with k-reciprocal encoding. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 3652–3661.

- Wang, Y.; Wang, L.; You, Y.; Zou, X.; Chen, V.; Li, S.; Huang, G.; Hariharan, B.; Weinberger, K.Q. Resource aware person re-identification across multiple resolutions. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 8042–8051.

- Wu, L.; Wang, Y.; Gao, J.; Wang, M.; Zha, Z.-J.; Tao, D. Deep coattention-based comparator for relative representation learning in person re-identification. IEEE Trans. Neural Netw. Learn. Syst. 2021, 32, 722–735.

- Chang, Y.; Shi, Y.; Wang, Y.; Tian, Y. Bi-directional re-ranking for person re-identification. In Proceedings of the 2019 IEEE Conference on Multimedia Information Processing and Retrieval (MIPR), San Jose, CA, USA, 28–30 March 2019; pp. 48–53.

- Sun, L.; Liu, J.; Zhu, Y.; Jiang, Z. Local to global with multi-scale attention network for person re-identification. In Proceedings of the 2019 IEEE International Conference on Image Processing (ICIP), Taipei, Taiwan, 22–25 September 2019; pp. 2254–2258.

- Shi, X. Person re-identification based on improved residual neural networks. In Proceedings of the 2021 5th International Conference on Communication and Information Systems (ICCIS), Chongqing, China, 15–17 October 2021; pp. 170–174.

- Zhang, X.; Yan, Y.; Xue, J.-H.; Hua, Y.; Wang, H. Semantic-aware occlusion-robust network for occluded person re-identification. IEEE Trans. Circ. Syst. Video Technol. 2020, 31, 2764–2778.

- Munir, A.; Martinel, N.; Micheloni, C. Multi branch siamese network for person re-identification. In Proceedings of the 2020 IEEE International Conference on Image Processing (ICIP), Abu Dhabi, United Arab Emirates, 25–28 October 2020; pp. 2351–2355.

- Jin, H.; Lai, S.; Qian, X. Occlusion-sensitive person re-identification via attribute-based shift attention. IEEE Trans. Circ. Syst. Video Technol. 2022, 32, 2170–2185.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).