A Hybrid Ensemble Stacking Model for Gender Voice Recognition Approach

Abstract

1. Introduction

2. Related Work

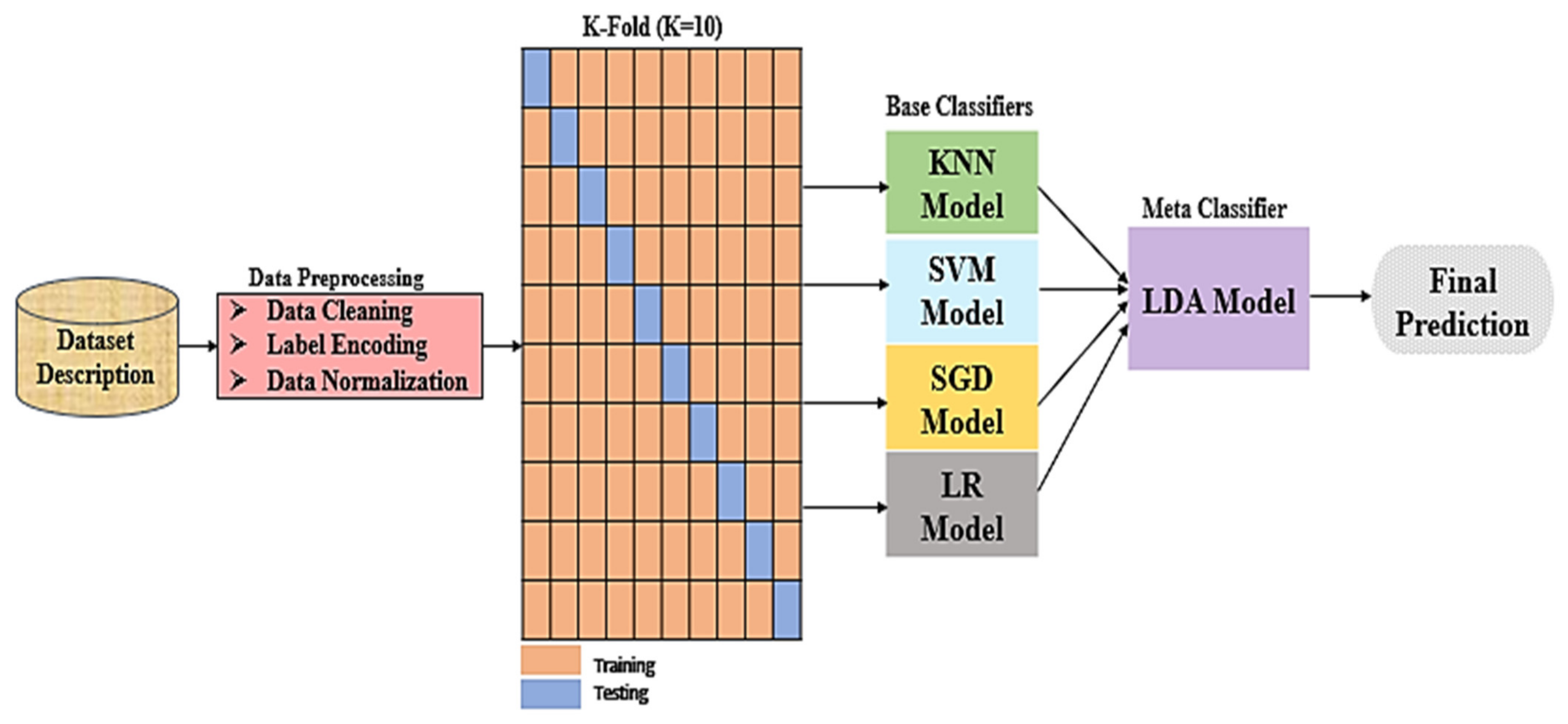

3. Materials and Methods

3.1. Dataset Description

3.2. Data Preprocessing

3.2.1. Data Cleaning

3.2.2. Label Encoding
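The section names this step without spelling it out. The sketch below assumes the Kaggle dataset's `label` column holds the strings `male` and `female`. It numbers the sorted class names, so `female` becomes 0 and `male` becomes 1, which mirrors how scikit-learn's `LabelEncoder` orders classes. The authors' actual mapping is not stated, and the helper name `label_encode` is hypothetical.

```python
def label_encode(labels):
    """Map each distinct string label to an integer index.

    Classes are sorted before numbering, mirroring scikit-learn's LabelEncoder.
    """
    classes = sorted(set(labels))
    mapping = {cls: idx for idx, cls in enumerate(classes)}
    return [mapping[lbl] for lbl in labels], mapping


encoded, mapping = label_encode(["male", "female", "female", "male"])
print(mapping)   # {'female': 0, 'male': 1}
print(encoded)   # [1, 0, 0, 1]
```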

3.2.3. Data Normalization
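This section does not say which scaling was used. The sketch below assumes min-max scaling of each feature to [0, 1], and the helper names `fit_min_max` and `transform_min_max` are hypothetical. The bounds are fitted on training rows only and then reused on test rows, which keeps test-fold statistics out of training during cross-validation.

```python
def fit_min_max(rows):
    """Compute per-column minimum and maximum from training rows."""
    columns = list(zip(*rows))
    return [min(c) for c in columns], [max(c) for c in columns]


def transform_min_max(rows, mins, maxs):
    """Rescale each column to [0, 1] using previously fitted bounds.

    Constant columns (max == min) are mapped to 0.0 to avoid dividing by zero.
    """
    return [
        [(v - lo) / (hi - lo) if hi > lo else 0.0 for v, lo, hi in zip(row, mins, maxs)]
        for row in rows
    ]


train = [[0.10, 120.0], [0.20, 180.0], [0.15, 150.0]]
mins, maxs = fit_min_max(train)
scaled = transform_min_max(train, mins, maxs)
print(scaled)
```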

3.3. K-Fold Cross-Validation

- Randomize (shuffle) the dataset.

- Divide the dataset into k groups (folds) of approximately equal size.

- For every group:

  - (a) Use that group as the test set.

  - (b) Use the remaining groups as the training set.

  - (c) Fit a machine learning model on the training set and evaluate it on the test set.

  - (d) Save the evaluation score and discard the model.

- Summarize the effectiveness of the model from the k saved evaluation scores (for example, their mean).
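The steps above map onto a short loop. This is a dependency-free sketch. `train_fn` and `score_fn` are hypothetical caller-supplied callables. Fold assignment here is round-robin over shuffled indices, which is one of several valid ways to form the groups.

```python
import random
from statistics import mean


def k_fold_evaluate(X, y, k, train_fn, score_fn, seed=0):
    """Estimate model skill with k-fold cross-validation.

    train_fn(X_train, y_train) -> model
    score_fn(model, X_test, y_test) -> float
    """
    indices = list(range(len(X)))
    random.Random(seed).shuffle(indices)          # randomize the dataset
    folds = [indices[i::k] for i in range(k)]     # divide into k groups
    scores = []
    for i, test_idx in enumerate(folds):          # for every group
        # (a) group i is the test set; (b) all other groups form the training set
        train_idx = [j for f, fold in enumerate(folds) if f != i for j in fold]
        # (c) fit on the training set, evaluate on the test set
        model = train_fn([X[j] for j in train_idx], [y[j] for j in train_idx])
        scores.append(score_fn(model, [X[j] for j in test_idx], [y[j] for j in test_idx]))
        del model                                 # (d) keep the score, discard the model
    return mean(scores), scores                   # summarize the saved scores


# Usage with a trivial majority-class "model".
def train_majority(X_train, y_train):
    return max(set(y_train), key=y_train.count)


def accuracy(model, X_test, y_test):
    return sum(1 for t in y_test if t == model) / len(y_test)


avg, per_fold = k_fold_evaluate([[i] for i in range(10)], [1] * 10, 5, train_majority, accuracy)
print(avg)   # 1.0
```

With scikit-learn, `KFold(n_splits=k, shuffle=True)` passed as `cv` to `cross_val_score` performs the same loop.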

4. Machine Learning Models

4.1. K-Nearest Neighbor

4.2. Support Vector Machine

4.3. Stochastic Gradient Descent
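The parameter table later in the article configures SGD with hinge loss and an L2 penalty. That combination fits a linear SVM objective one sample at a time. The sketch below shows that objective. The constant learning rate `lr`, the `epochs` count, and the ±1 label coding are all assumptions. scikit-learn's `SGDClassifier` uses a different default learning-rate schedule.

```python
import random


def sgd_hinge_l2(X, y, alpha=1e-4, epochs=50, lr=0.1, seed=0):
    """Train a linear classifier with hinge loss and an L2 penalty by SGD.

    Labels must be -1 or +1. Per-sample objective:
        alpha/2 * ||w||^2 + max(0, 1 - y * (w.x + b))
    A constant learning rate is used for simplicity (an assumption).
    """
    rng = random.Random(seed)
    w, b = [0.0] * len(X[0]), 0.0
    order = list(range(len(X)))
    for _ in range(epochs):
        rng.shuffle(order)
        for i in order:
            xi, yi = X[i], y[i]
            margin = yi * (sum(wj * xj for wj, xj in zip(w, xi)) + b)
            # The L2 term's gradient always shrinks the weights toward zero.
            w = [wj - lr * alpha * wj for wj in w]
            if margin < 1:
                # Inside the margin: the hinge term's gradient is -y * x (and -y for b).
                w = [wj + lr * yi * xj for wj, xj in zip(w, xi)]
                b += lr * yi
    return w, b


def predict(w, b, x):
    return 1 if sum(wj * xj for wj, xj in zip(w, x)) + b >= 0 else -1


X = [[2, 2], [3, 3], [2, 3], [-2, -2], [-3, -3], [-2, -3]]
y = [1, 1, 1, -1, -1, -1]
w, b = sgd_hinge_l2(X, y)
print([predict(w, b, x) for x in X])   # [1, 1, 1, -1, -1, -1]
```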

4.4. Logistic Regression

4.5. Linear Discriminant Analysis

4.6. Stacking Learning

5. Results and Discussion

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Pahwa, A.; Aggarwal, G. Speech feature extraction for gender recognition. Int. J. Image Graph. Signal Process. 2016, 8, 17.

2. Ericsdotter, C.; Ericsson, A.M. Gender differences in vowel duration in read Swedish: Preliminary results. Work. Pap. Lund Univ. Dep. Linguist. Phon. 2001, 49, 34–37.

3. Gamit, M.R.; Dhameliya, K.; Bhatt, N.S. Classification techniques for speech recognition: A review. Int. J. Emerg. Technol. Adv. Eng. 2015, 5, 58–63.

4. Yasmin, G.; Dutta, S.; Ghosal, A. Discrimination of male and female voice using occurrence pattern of spectral flux. In Proceedings of the 2017 International Conference on Intelligent Computing, Instrumentation and Control Technologies (ICICICT), Kerala, India, 6–7 July 2017; pp. 576–581.

5. Hautamäki, R.G.; Sahidullah, M.; Hautamäki, V.; Kinnunen, T. Acoustical and perceptual study of voice disguise by age modification in speaker verification. Speech Commun. 2017, 95, 5.

6. Bisio, I.; Lavagetto, F.; Marchese, M.; Sciarrone, A.; Fra, C.; Valla, M. Spectra: A speech processing platform as smartphone application. In Proceedings of the 2015 IEEE International Conference on Communications (ICC), London, UK, 8–12 June 2015; pp. 7030–7035.

7. Wang, W.C.; Pestana, M.H.; Moutinho, L. The effect of emotions on brand recall by gender using voice emotion response with optimal data analysis. In Innovative Research Methodologies in Management; Springer: Berlin/Heidelberg, Germany, 2018; pp. 103–133.

8. Holzinger, A. Introduction to Machine Learning & Knowledge Extraction (MAKE). Mach. Learn. Knowl. Extr. 2019, 1, 20.

9. Ferri, M. Why topology for machine learning and knowledge extraction? Mach. Learn. Knowl. Extr. 2019, 1, 115–120.

10. Buyukyilmaz, M.; Cibikdiken, A.O. Voice gender recognition using deep learning. Adv. Comput. Sci. Res. 2016, 58, 409–411.

11. Maka, T.; Dziurzanski, P. An analysis of the influence of acoustical adverse conditions on speaker gender identification. In Proceedings of the XXII Annual Pacific Voice Conference (PVC), Krakow, Poland, 11–13 April 2014; pp. 1–4.

12. Clarke, B.; Ernes, F.; Ha, H.Z. Principles and Theory for Data Mining and Machine Learning; Springer: New York, NY, USA, 2009.

13. Livieris, I.E.; Kanavos, A.; Tampakas, V.; Pintelas, P. An ensemble SSL algorithm for efficient chest x-ray image classification. J. Imaging 2018, 4, 95.

14. Livieris, I.E.; Kiriakidou, N.; Kanavos, A.; Tampakas, V.; Pintelas, P. On ensemble SSL algorithms for credit scoring problem. Informatics 2018, 5, 40.

15. Pławiak, P.; Acharya, U.R. Novel deep genetic ensemble of classifiers for arrhythmia detection using ECG signals. Neural Comput. Appl. 2020, 32, 11137–11161.

16. Pławiak, P. Novel genetic ensembles of classifiers applied to myocardium dysfunction recognition based on ECG signals. Swarm Evol. Comput. 2018, 39, 192–208.

17. Wang, G.; Hao, J.; Ma, J.; Jiang, H. A comparative assessment of ensemble learning for credit scoring. Expert Syst. Appl. 2011, 38, 223–230.

18. Ramadhan, M.M.; Sitanggang, I.S.; Nasution, F.R.; Ghifari, A. Parameter tuning in random forest based on grid search method for gender classification based on voice frequency. DEStech Trans. Comput. Sci. Eng. 2017, 10.

19. Přibil, J.; Přibilová, A.; Matoušek, J. GMM-based speaker gender and age classification after voice conversion. In Proceedings of the 2016 First International Workshop on Sensing, Processing and Learning for Intelligent Machines (SPLINE), Aalborg, Denmark, 6–8 July 2016; pp. 1–5.

20. Zvarevashe, K.; Olugbara, O.O. Gender voice recognition using random forest recursive feature elimination with gradient boosting machines. In Proceedings of the 2018 International Conference on Advances in Big Data, Computing and Data Communication Systems (icABCD), Durban, South Africa, 6–7 August 2018; pp. 1–6.

21. Livieris, I.E.; Pintelas, E.; Pintelas, P. Gender recognition by voice using an improved self-labeled algorithm. Mach. Learn. Knowl. Extr. 2019, 1, 492–503.

22. Ertam, F. An effective gender recognition approach using voice data via deeper LSTM networks. Appl. Acoust. 2019, 156, 351–358.

23. Prasad, B.S. Gender classification through voice and performance analysis by using machine learning algorithms. Int. J. Res. Comput. Appl. Robot. 2019, 7, 1–11.

24. Gender Recognition by Voice. Available online: https://www.kaggle.com/primaryobjects/voicegender (accessed on 17 February 2022).

25. Kotsiantis, S.B.; Kanellopoulos, D.; Pintelas, P.E. Data preprocessing for supervised leaning. Int. J. Comput. Sci. 2006, 1, 111–117.

26. García, S.; Luengo, J.; Herrera, F. Data Preprocessing in Data Mining; Springer International Publishing: Cham, Switzerland, 2015.

27. Chu, X.; Ilyas, I.F.; Krishnan, S.; Wang, J. Data cleaning: Overview and emerging challenges. In Proceedings of the 2016 International Conference on Management of Data, San Francisco, CA, USA, 26 June–1 July 2016; pp. 2201–2206.

28. Gudivada, V.; Apon, A.; Ding, J. Data quality considerations for big data and machine learning: Going beyond data cleaning and transformations. Int. J. Adv. Softw. 2017, 10, 20.

29. Mottini, A.; Acuna-Agost, R. Relative label encoding for the prediction of airline passenger nationality. In Proceedings of the 2016 IEEE 16th International Conference on Data Mining Workshops (ICDMW), Barcelona, Spain, 12–15 December 2016; pp. 671–676.

30. Zhuang, F.; Cheng, X.; Luo, P.; Pan, S.J.; He, Q. Supervised representation learning: Transfer learning with deep autoencoders. In Proceedings of the Twenty-Fourth International Joint Conference on Artificial Intelligence, Buenos Aires, Argentina, 25–31 July 2015.

31. Rodríguez, C.K. A Computational Environment for Data Preprocessing in Supervised Classification; University of Puerto Rico: Mayaguez, Puerto Rico, 2004.

32. Anguita, D.; Ghelardoni, L.; Ghio, A.; Oneto, L.; Ridella, S. The ‘K’ in K-fold cross validation. In Proceedings of the 20th European Symposium on Artificial Neural Networks, Computational Intelligence and Machine Learning (ESANN), Bruges, Belgium, 25–27 April 2012; pp. 441–446.

33. Fushiki, T. Estimation of prediction error by using K-fold cross-validation. Stat. Comput. 2011, 21, 137–146.

34. Cover, T.; Hart, P. Nearest neighbor pattern classification. IEEE Trans. Inf. Theory 1967, 13, 21–27.

35. Jamjoom, M.; Alabdulkreem, E.; Hadjouni, M.; Karim, F.; Qarh, M. Early Prediction for At-Risk Students in an Introductory Programming Course Based on Student Self-Efficacy. Informatica 2021, 45, 6.

36. Paul, B.; Dey, T.; Adhikary, D.D.; Guchhai, S.; Bera, S. A Novel Approach of Audio-Visual Color Recognition Using KNN. In Computational Intelligence in Pattern Recognition; Springer: Singapore, 2022; pp. 231–244.

37. Zhang, C.; Zhong, P.; Liu, M.; Song, Q.; Liang, Z.; Wang, X. Hybrid Metric K-Nearest Neighbor Algorithm and Applications. Math. Probl. Eng. 2022, 2022, 8212546.

38. Szabo, F. The Linear Algebra Survival Guide: Illustrated with Mathematica; Academic Press: Cambridge, MA, USA, 2015.

39. Drucker, H.; Burges, C.J.; Kaufman, L.; Smola, A.; Vapnik, V. Support vector regression machines. In Advances in Neural Information Processing Systems; MIT Press: Cambridge, MA, USA, 1996.

40. Ukil, A. Intelligent Systems and Signal Processing in Power Engineering; Springer Science & Business Media: Berlin/Heidelberg, Germany, 2007.

41. Hsu, C.W.; Lin, C.J. A comparison of methods for multiclass support vector machines. IEEE Trans. Neural Netw. 2002, 13, 415–425.

42. Yue, S.; Li, P.; Hao, P. SVM classification: Its contents and challenges. Appl. Math. A J. Chin. Univ. 2003, 18, 332–342.

43. Ketkar, N. Stochastic gradient descent. In Deep Learning with Python; Apress: Berkeley, CA, USA, 2017; pp. 113–132.

44. Bottou, L. Stochastic gradient descent tricks. In Neural Networks: Tricks of the Trade; Springer: Berlin/Heidelberg, Germany, 2012; pp. 421–436.

45. Sammut, C.; Webb, G.I. Logistic Regression. In Encyclopedia of Machine Learning; Springer: Berlin/Heidelberg, Germany, 2010; p. 631.

46. Stoltzfus, J.C. Logistic regression: A brief primer. Acad. Emerg. Med. 2011, 18, 1099–1104.

47. Fisher, R.A. The use of multiple measurements in taxonomic problems. Ann. Eugen. 1936, 7, 179–188.

48. Xanthopoulos, P.; Pardalos, P.M.; Trafalis, T.B. Linear discriminant analysis. In Robust Data Mining; Springer: Berlin/Heidelberg, Germany, 2013; pp. 27–33.

49. Nie, F.; Wang, Z.; Wang, R.; Wang, Z.; Li, X. Adaptive local linear discriminant analysis. ACM Trans. Knowl. Discov. Data (TKDD) 2020, 14, 9.

50. Tang, Y.; Gu, L.; Wang, L. Deep Stacking Network for Intrusion Detection. Sensors 2022, 22, 25.

51. Wolpert, D.H. Stacked generalization. Neural Netw. 1992, 5, 241–259.

52. Hossin, M.; Sulaiman, M.N. A review on evaluation metrics for data classification evaluations. Int. J. Data Min. Knowl. Manag. Process 2015, 5, 1.

53. Hoo, Z.H.; Candlish, J.; Teare, D. What is an ROC curve? Emerg. Med. J. 2017, 34, 357–359.

54. Safavian, S.R.; Landgrebe, D. A survey of decision tree classifier methodology. IEEE Trans. Syst. Man Cybern. 1991, 21, 660–674.

55. Song, Y.Y.; Ying, L.U. Decision tree methods: Applications for classification and prediction. Shanghai Arch. Psychiatry 2015, 27, 130.

56. Azar, A.T.; Elshazly, H.I.; Hassanien, A.E.; Elkorany, A.M. A random forest classifier for lymph diseases. Comput. Methods Programs Biomed. 2014, 113, 465–473.

57. Schapire, R.E. Explaining adaboost. In Empirical Inference: Festschrift in Honor of Vladimir N. Vapnik; Springer: Berlin/Heidelberg, Germany, 2013; pp. 37–52.

| Models | Parameters |
|---|---|
| KNN | N_neighbors = 2, distance = Manhattan. |
| SVM | Kernel = linear, regularization parameter (C) = 0.1. |
| SGD | Loss = hinge, penalty = l2. |
| LR | Penalty = l2, fit_intercept = true. |
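The KNN row uses two neighbours and the Manhattan (L1) distance. The sketch below shows the prediction rule under those settings. With k = 2, the two neighbours can split the vote evenly. Breaking such ties in favour of the closer neighbour is an assumption of this sketch and may differ from the library the authors used. In scikit-learn the same settings are `KNeighborsClassifier(n_neighbors=2, metric="manhattan")`.

```python
from collections import Counter


def manhattan(a, b):
    """L1 (Manhattan) distance between two feature vectors."""
    return sum(abs(p - q) for p, q in zip(a, b))


def knn_predict(X_train, y_train, x, k=2):
    """Classify x by majority vote among its k nearest training points."""
    ranked = sorted(zip(X_train, y_train), key=lambda pair: manhattan(pair[0], x))
    neighbours = [label for _, label in ranked[:k]]
    counts = Counter(neighbours)
    best = max(counts.values())
    tied = {label for label, c in counts.items() if c == best}
    # Tie-break (assumption): the tied label whose neighbour is closest wins.
    return next(label for label in neighbours if label in tied)


X_train = [[0, 0], [1, 1], [5, 5], [6, 6]]
y_train = ["female", "female", "male", "male"]
print(knn_predict(X_train, y_train, [0.5, 0.5]))   # female
print(knn_predict(X_train, y_train, [5.2, 5.2]))   # male
```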

| Models | Accuracy | F1 Score | Recall | Precision | AUC |
|---|---|---|---|---|---|
| KNN | 97.78% | 97.78% | 98.73% | 96.89% | 0.998298 |
| SVM | 99.61% | 99.42% | 99.50% | 99.60% | 0.999479 |
| SGD | 96.20% | 96.20% | 96.83% | 95.62% | 0.997797 |
| LR | 99.05% | 99.05% | 99.50% | 98.74% | 0.999439 |
| Stacked model | 99.64% | 99.42% | 99.50% | 99.60% | 0.999639 |
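The table reports standard binary-classification metrics but does not restate their definitions. The sketch below gives those definitions for a single positive class:

- precision = TP / (TP + FP)
- recall = TP / (TP + FN)
- F1 is their harmonic mean
- AUC is the probability that a randomly chosen positive sample is scored above a randomly chosen negative one

Which class was treated as positive, and whether scores were averaged over classes, is not stated. Treat this as illustrative only.

```python
def binary_metrics(y_true, y_pred, positive=1):
    """Accuracy, precision, recall and F1 for a binary task."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    accuracy = sum(1 for t, p in pairs if t == p) / len(pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return accuracy, precision, recall, f1


def roc_auc(y_true, scores, positive=1):
    """AUC as the probability that a random positive outranks a random negative.

    Ties count as half a win. This O(P*N) form favours clarity over speed.
    """
    pos = [s for t, s in zip(y_true, scores) if t == positive]
    neg = [s for t, s in zip(y_true, scores) if t != positive]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


print(binary_metrics([1, 1, 0, 0], [1, 0, 0, 0]))   # (0.75, 1.0, 0.5, 0.6666666666666666)
print(roc_auc([1, 1, 0, 0], [0.9, 0.3, 0.5, 0.1]))  # 0.75
```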

| Models | Number of Samples | Accuracy | Time (s) |
|---|---|---|---|
| KNN | 3168 | 97.78% | 0.00842 |
| SVM | 3168 | 99.61% | 3.304537 |
| SGD | 3168 | 96.20% | 0.018447 |
| LR | 3168 | 99.05% | 0.115368 |
| Stacked model | 3168 | 99.64% | 14.960304 |

| Models | Parameters |
|---|---|
| DT | Max_depth = 2, criterion = entropy. |
| RF | N_estimators = 100. |
| Adaboost | N_estimators = 100. |
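The decision tree is configured with criterion = entropy and max_depth = 2. Each split is therefore chosen to maximize the reduction in label entropy (information gain), and the tree makes at most three splits: the root and its two children. The sketch below shows that split score. The helper names `entropy` and `information_gain` are illustrative.

```python
from collections import Counter
from math import log2


def entropy(labels):
    """Shannon entropy (in bits) of a list of class labels."""
    n = len(labels)
    return -sum((c / n) * log2(c / n) for c in Counter(labels).values())


def information_gain(parent, left, right):
    """Entropy reduction achieved by splitting parent into left and right."""
    n = len(parent)
    weighted = len(left) / n * entropy(left) + len(right) / n * entropy(right)
    return entropy(parent) - weighted


print(entropy(["male", "female"]))   # 1.0
print(information_gain(["m", "m", "f", "f"], ["m", "m"], ["f", "f"]))   # 1.0
```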

| Models | Accuracy | F1 Score | Recall | Precision | AUC |
|---|---|---|---|---|---|
| DT | 95.72% | 95.41% | 95.53% | 95.66% | 0.975841 |
| RF | 98.79% | 98.57% | 98.61% | 98.71% | 0.998697 |
| Adaboost | 97.48% | 97.27% | 97.34% | 97.42% | 0.998182 |
| Stacked model | 99.64% | 99.42% | 99.50% | 99.60% | 0.999639 |

| Studies | Model | Accuracy |
|---|---|---|
| Ref. [10] | MLP with deep learning | 96.74% |
| Ref. [18] | Grid search optimization | 96.90% |
| Ref. [21] | iCST-Voting | 98.4% |
| Ref. [22] | Deeper LSTM | 98.4% |
| Ref. [23] | ANN | 98.35% |
| Proposed stacked model | KNN, SVM, SGD, and LR as base classifiers and LDA as meta classifier | 99.64% |
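The table states the stacked architecture: KNN, SVM, SGD, and LR as base classifiers, with LDA as the meta classifier. It does not say how the meta-features were produced. The dependency-free sketch below assumes the common out-of-fold scheme of stacked generalization:

1. Each base learner predicts on folds it was not trained on.
2. A two-class Fisher LDA is fitted on those predictions.
3. The base learners are refitted on all data.

Several details are assumptions: hard 0/1 base predictions as meta-features, the small `ridge` term, and the `threshold_on` base learners, which are hypothetical stand-ins for the real classifiers. In scikit-learn, a comparable setup is roughly `StackingClassifier(estimators=..., final_estimator=LinearDiscriminantAnalysis())`. Note that by default it stacks probabilities or decision scores rather than hard labels.

```python
import random
from math import log


def out_of_fold_features(X, y, base_learners, k=5, seed=0):
    """Meta-features: each base learner's prediction on folds it never saw.

    A base learner is a function fit(X_train, y_train) returning predict(x).
    """
    idx = list(range(len(X)))
    random.Random(seed).shuffle(idx)
    folds = [idx[i::k] for i in range(k)]
    meta = [[0.0] * len(base_learners) for _ in X]
    for i, test_idx in enumerate(folds):
        train_idx = [j for f, fold in enumerate(folds) if f != i for j in fold]
        X_tr, y_tr = [X[j] for j in train_idx], [y[j] for j in train_idx]
        for m, fit in enumerate(base_learners):
            predict = fit(X_tr, y_tr)
            for j in test_idx:
                meta[j][m] = float(predict(X[j]))
    return meta


def solve(A, b):
    """Solve A x = b by Gauss-Jordan elimination with partial pivoting."""
    n = len(A)
    M = [row[:] + [rhs] for row, rhs in zip(A, b)]
    for c in range(n):
        p = max(range(c, n), key=lambda r: abs(M[r][c]))
        M[c], M[p] = M[p], M[c]
        for r in range(n):
            if r != c:
                factor = M[r][c] / M[c][c]
                M[r] = [a - factor * pc for a, pc in zip(M[r], M[c])]
    return [M[r][n] / M[r][r] for r in range(n)]


def fit_lda(F, y, ridge=1e-6):
    """Two-class Fisher LDA (labels 0/1) with pooled covariance plus a small ridge."""
    d = len(F[0])
    groups = {c: [f for f, t in zip(F, y) if t == c] for c in (0, 1)}
    mu = {c: [sum(col) / len(g) for col in zip(*g)] for c, g in groups.items()}
    S = [[ridge if a == b else 0.0 for b in range(d)] for a in range(d)]
    for c, g in groups.items():
        for f in g:
            diff = [fi - mi for fi, mi in zip(f, mu[c])]
            for a in range(d):
                for b in range(d):
                    S[a][b] += diff[a] * diff[b] / (len(F) - 2)
    w = solve(S, [m1 - m0 for m0, m1 in zip(mu[0], mu[1])])
    # Predict class 1 when w.f exceeds the midpoint projection minus the log prior ratio.
    threshold = sum(wi * (m0 + m1) / 2 for wi, m0, m1 in zip(w, mu[0], mu[1]))
    threshold -= log(len(groups[1]) / len(groups[0]))
    return lambda f: 1 if sum(wi * fi for wi, fi in zip(w, f)) > threshold else 0


def fit_stacking(X, y, base_learners, k=5):
    """LDA meta-learner on out-of-fold predictions; base learners refit on all data."""
    meta_model = fit_lda(out_of_fold_features(X, y, base_learners, k), y)
    final_bases = [fit(X, y) for fit in base_learners]
    return lambda x: meta_model([float(predict(x)) for predict in final_bases])


def threshold_on(feature):
    """Hypothetical stand-in base learner: split one feature at the class-mean midpoint."""
    def fit(X_tr, y_tr):
        m0 = sum(x[feature] for x, t in zip(X_tr, y_tr) if t == 0) / y_tr.count(0)
        m1 = sum(x[feature] for x, t in zip(X_tr, y_tr) if t == 1) / y_tr.count(1)
        return lambda x: int((x[feature] > (m0 + m1) / 2) == (m1 > m0))
    return fit


X = [[0, 1], [1, 0], [1, 1], [0, 0], [2, 1], [1, 2],
     [5, 6], [6, 5], [6, 6], [5, 5], [7, 6], [6, 7]]
y = [0] * 6 + [1] * 6
model = fit_stacking(X, y, [threshold_on(0), threshold_on(1)], k=3)
print(model([0.5, 0.5]), model([6.5, 6.5]))   # 0 1
```

Fitting the meta classifier only on out-of-fold predictions matters. If the base learners were scored on their own training data, the meta classifier would learn to trust overfitted outputs.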

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Alkhammash, E.H.; Hadjouni, M.; Elshewey, A.M. A Hybrid Ensemble Stacking Model for Gender Voice Recognition Approach. Electronics 2022, 11, 1750. https://doi.org/10.3390/electronics11111750
