A 3D Image Registration Method for Laparoscopic Liver Surgery Navigation

Abstract

1. Introduction

- (1)

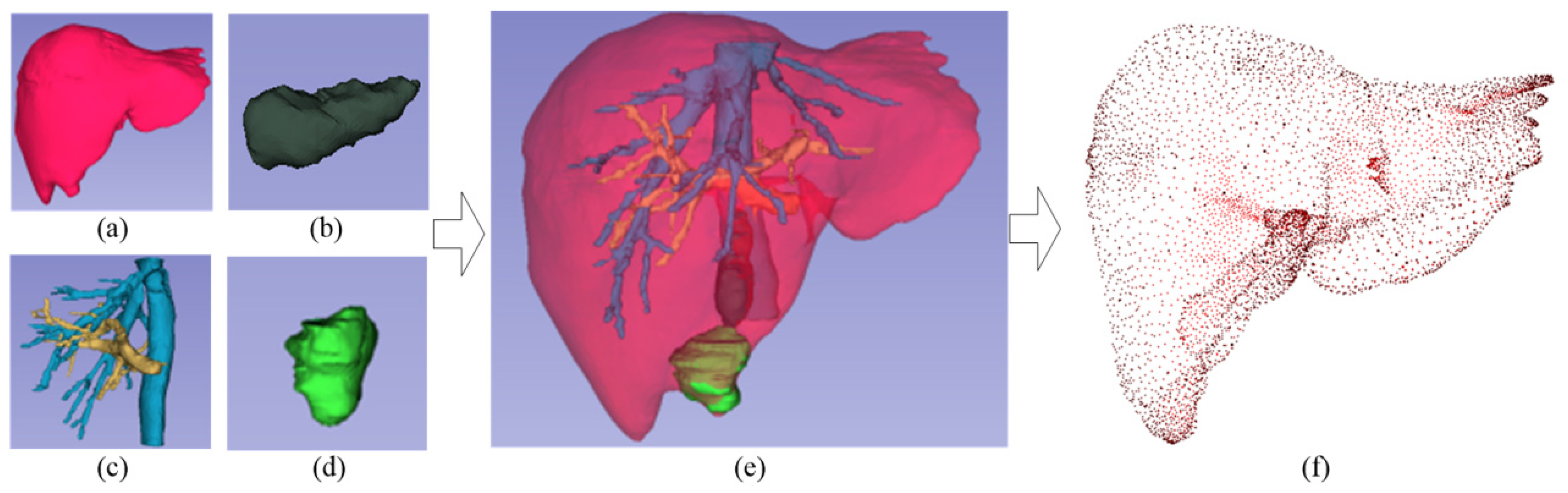

- A 3D reconstruction of the segmented preoperative CT images using the Marching Cubes algorithm on the VTK platform, and the 3D point cloud was generated after obtaining the 3D model of the liver;

- (2) The laparoscopic (binocular vision camera) images were processed, and the 3D point cloud of the intraoperative liver was generated;

- (3)

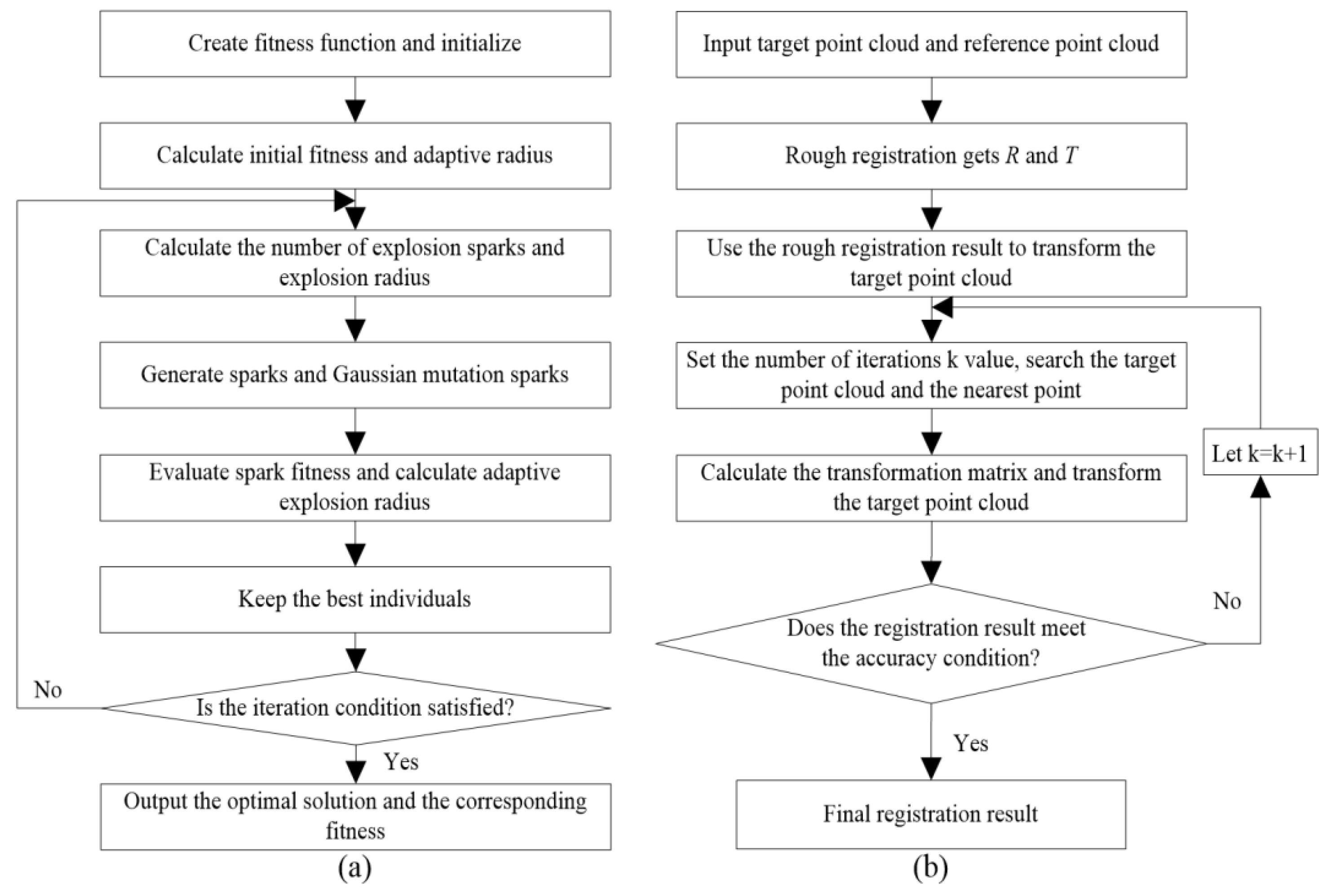

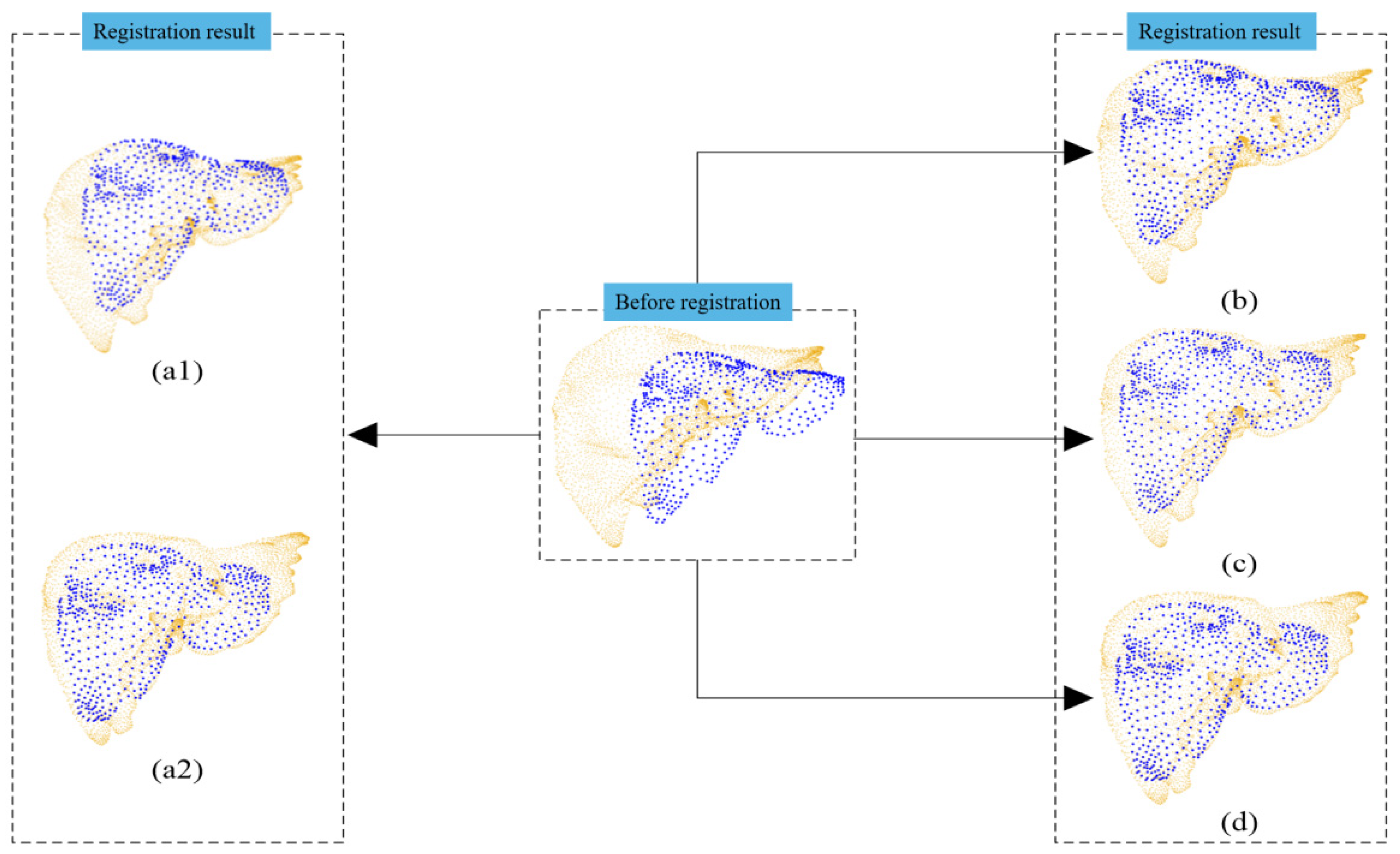

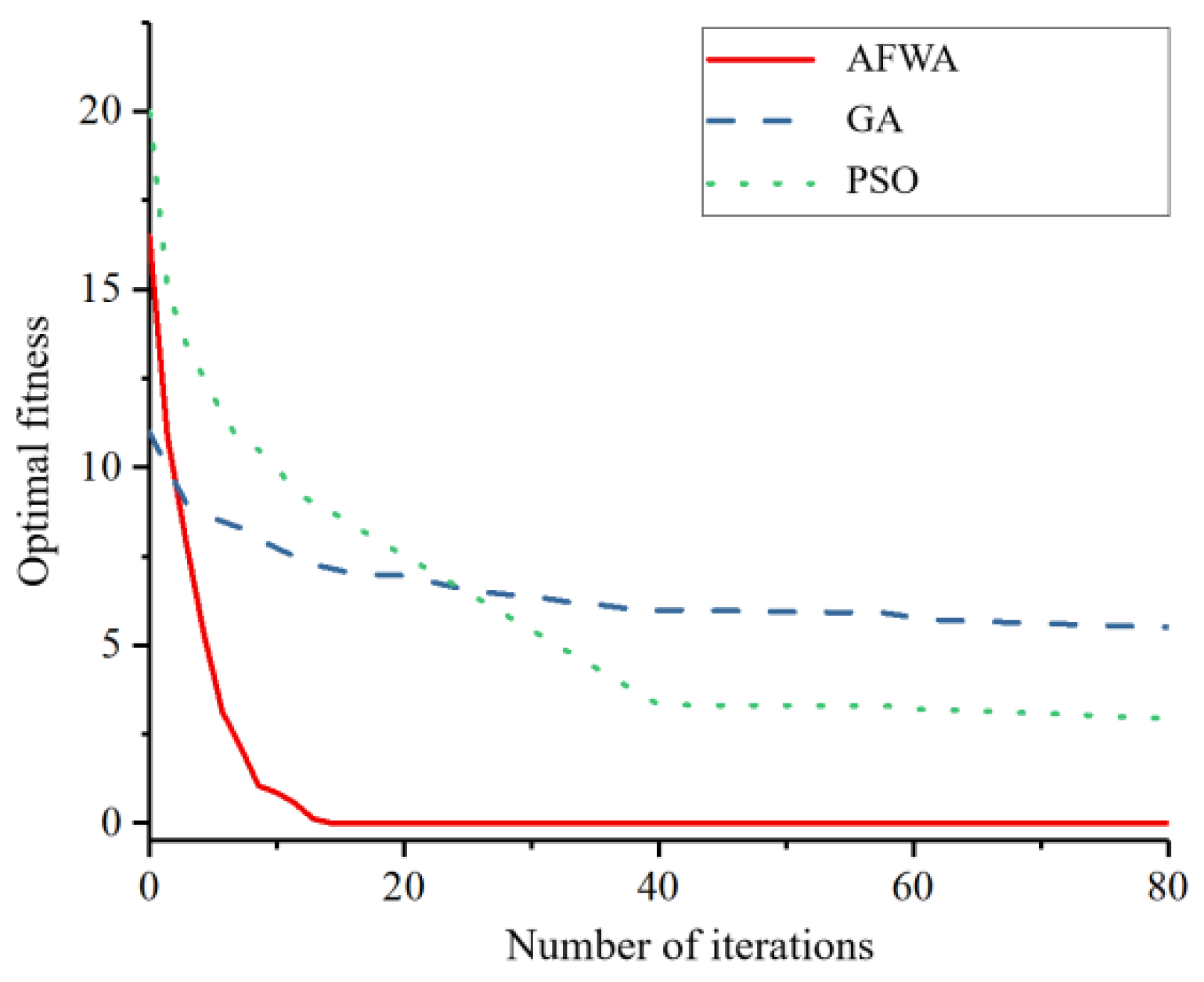

- A two-step combined registration method through rough registration and fine registration is introduced. First, AFWA is applied to rough registration, and then the optimized ICP is applied to fine registration, which solves the problem that the ICP algorithm will fall into local extreme values during the iterative process;

- (4) The proposed registration method and other registration methods based on stochastic optimization algorithms were tested together in experiments. The point cloud registration results show that our method is better in terms of computation time and registration accuracy.
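
The coarse stage described in item (3) can be illustrated with a minimal sketch. The code below is not AFWA: it is a simplified fireworks-style random search (fixed spark count, geometrically shrinking explosion amplitude) used as a stand-in, and every function name, search bound, and parameter value in it is an assumption chosen for illustration. It searches six rigid-transform parameters for a pose with a small mean closest-point distance, which could then serve as the starting pose for ICP.

```python
import numpy as np

def rotation_from_euler(rx, ry, rz):
    """Rotation matrix R = Rz @ Ry @ Rx from angles in radians."""
    cx, sx = np.cos(rx), np.sin(rx)
    cy, sy = np.cos(ry), np.sin(ry)
    cz, sz = np.cos(rz), np.sin(rz)
    Rx = np.array([[1, 0, 0], [0, cx, -sx], [0, sx, cx]])
    Ry = np.array([[cy, 0, sy], [0, 1, 0], [-sy, 0, cy]])
    Rz = np.array([[cz, -sz, 0], [sz, cz, 0], [0, 0, 1]])
    return Rz @ Ry @ Rx

def alignment_cost(params, source, target):
    """Mean distance from each transformed source point to its nearest target point."""
    R = rotation_from_euler(*params[:3])
    moved = source @ R.T + params[3:]
    d = np.linalg.norm(moved[:, None, :] - target[None, :, :], axis=2)
    return d.min(axis=1).mean()

def coarse_search(source, target, n_fireworks=5, n_sparks=20, n_iter=30, seed=0):
    """Simplified fireworks-style search over (rx, ry, rz, tx, ty, tz)."""
    rng = np.random.default_rng(seed)
    # Assumed search bounds: any rotation angle, translations within +/-1 unit.
    lo = np.array([-np.pi, -np.pi, -np.pi, -1.0, -1.0, -1.0])
    hi = -lo
    fireworks = rng.uniform(lo, hi, size=(n_fireworks, 6))
    fireworks[0] = 0.0  # always keep the identity transform as a candidate
    amplitude = 0.5 * (hi - lo)
    for _ in range(n_iter):
        # Each firework "explodes" into sparks scattered around it.
        sparks = [fw + rng.uniform(-1, 1, size=(n_sparks, 6)) * amplitude
                  for fw in fireworks]
        candidates = np.clip(np.vstack([fireworks] + sparks), lo, hi)
        costs = np.array([alignment_cost(c, source, target) for c in candidates])
        # Elitist selection: the best candidates become the next fireworks.
        fireworks = candidates[np.argsort(costs)[:n_fireworks]]
        amplitude = amplitude * 0.85  # fixed decay, not the adaptive rule of AFWA
    best = fireworks[0]
    return best, alignment_cost(best, source, target)

# Synthetic example: the source is a rigidly displaced copy of the target.
rng = np.random.default_rng(1)
target = rng.uniform(-0.5, 0.5, size=(40, 3))
true_R = rotation_from_euler(0.4, -0.3, 0.8)
source = (target - np.array([0.2, -0.1, 0.3])) @ true_R
identity_cost = alignment_cost(np.zeros(6), source, target)
best_params, best_cost = coarse_search(source, target)
print(f"cost at identity: {identity_cost:.4f}, cost after coarse search: {best_cost:.4f}")
```

Because the search keeps its best candidates from one iteration to the next, the returned cost can never be worse than that of the identity pose. How close it gets to the true pose depends on the random seed and the assumed bounds.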

2. Background

3. Related Work

4. Materials and Methods

4.1. CT Data Preprocess

4.2. Preoperative Liver Point Cloud Generation

4.3. Intraoperative Liver Point Cloud Generation

4.3.1. Calibration of Binocular Vision Camera

4.3.2. Image Acquisition and Image Processing

4.3.3. Point Cloud Generation

4.4. Two-Step Combined Registration Method through Rough Registration and Fine Registration

4.4.1. Rough Registration Process Based on AFWA

4.4.2. Fine Registration Process

- (1) Input the calculated target point set together with the original point set Q, which is stored in a KD-tree structure. Search for the closest-neighbor point set of the target point set using the nearest neighbor algorithm, and set the iteration number k (the initial value is k = 1).

- (2) Calculate the rotation variable and translation variable from the target point set to its closest-neighbor point set. The quaternion calculation method is used here, and the value of Equation (3) should be minimized.

- (3) Calculate the average distance between the two point sets.

- (4) According to the obtained rotation variable and translation variable, transform the p point set; the final registration result is then obtained together with the reference point cloud Q.
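
The steps above can be sketched as a short ICP loop. This is a minimal illustration under stated assumptions, not the authors' implementation: the nearest-neighbor search is brute force rather than a KD-tree, the rigid fit uses the standard unit-quaternion (Horn) solution, and the stopping rule (`max_iter`, `tol`) as well as all function names are hypothetical.

```python
import numpy as np

def nearest_neighbors(src, ref):
    """Closest point in ref for each point in src (brute force, stand-in for a KD-tree)."""
    d2 = ((src[:, None, :] - ref[None, :, :]) ** 2).sum(axis=2)
    return ref[d2.argmin(axis=1)]

def quaternion_rigid_fit(P, Q):
    """Least-squares R, t with R @ p + t ~ q for paired rows, via the unit-quaternion method."""
    mu_p, mu_q = P.mean(axis=0), Q.mean(axis=0)
    S = (P - mu_p).T @ (Q - mu_q)  # 3x3 cross-covariance (unnormalized)
    A = S - S.T
    delta = np.array([A[1, 2], A[2, 0], A[0, 1]])
    N = np.empty((4, 4))
    N[0, 0] = np.trace(S)
    N[0, 1:] = delta
    N[1:, 0] = delta
    N[1:, 1:] = S + S.T - np.trace(S) * np.eye(3)
    # The eigenvector of the largest eigenvalue is the optimal unit quaternion.
    _, vecs = np.linalg.eigh(N)
    q0, q1, q2, q3 = vecs[:, -1]
    R = np.array([
        [q0*q0 + q1*q1 - q2*q2 - q3*q3, 2*(q1*q2 - q0*q3), 2*(q1*q3 + q0*q2)],
        [2*(q1*q2 + q0*q3), q0*q0 - q1*q1 + q2*q2 - q3*q3, 2*(q2*q3 - q0*q1)],
        [2*(q1*q3 - q0*q2), 2*(q2*q3 + q0*q1), q0*q0 - q1*q1 - q2*q2 + q3*q3],
    ])
    return R, mu_q - R @ mu_p

def icp(P, Q, max_iter=50, tol=1e-8):
    """Align point set P to reference Q; returns accumulated R, t and the mean distance."""
    R_total, t_total = np.eye(3), np.zeros(3)
    moved, prev_err = P.copy(), np.inf
    for _ in range(max_iter):
        matches = nearest_neighbors(moved, Q)        # step (1): closest points
        R, t = quaternion_rigid_fit(moved, matches)  # step (2): rotation, translation
        moved = moved @ R.T + t                      # step (4): transform the points
        R_total, t_total = R @ R_total, R @ t_total + t
        err = np.linalg.norm(moved - matches, axis=1).mean()  # step (3): mean distance
        if abs(prev_err - err) < tol:                # assumed stopping rule
            break
        prev_err = err
    return R_total, t_total, err

# Synthetic check: a 4x4x4 grid displaced by a small known rigid motion.
g = np.linspace(-0.5, 0.5, 4)
Q = np.array(np.meshgrid(g, g, g)).reshape(3, -1).T
theta = np.deg2rad(1.0)
R_true = np.array([[np.cos(theta), -np.sin(theta), 0.0],
                   [np.sin(theta), np.cos(theta), 0.0],
                   [0.0, 0.0, 1.0]])
t_true = np.array([0.005, -0.003, 0.004])
P = (Q - t_true) @ R_true  # chosen so that R_true @ p + t_true = q
R_est, t_est, err = icp(P, Q)
```

The displacement in this example is much smaller than the grid spacing, so the first set of closest-point matches is already correct and the loop recovers the motion almost immediately. With a poor starting pose the matches can be wrong and the loop can settle in a local minimum, which is the situation the rough registration stage is meant to avoid.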

5. Experiments and Validation

6. Conclusions and Discussion

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

| Dataset | Metric | GA + Improved ICP | PSO + Improved ICP | AFWA + ICP | Ours |
|---|---|---|---|---|---|
| Dataset 1 | Registration time (s) | 0.709 | 0.814 | 16.186 | 0.606 |
| Dataset 1 | Accuracy (mm) | 0.0208 | 0.0019 | 0.0018 | 0.0018 |
| Dataset 2 | Registration time (s) | 0.768 | 0.861 | 17.548 | 0.657 |
| Dataset 2 | Accuracy (mm) | 0.0346 | 0.0027 | 0.0022 | 0.0022 |
| Dataset 3 | Registration time (s) | 0.849 | 0.953 | 18.658 | 0.726 |
| Dataset 3 | Accuracy (mm) | 0.0253 | 0.0023 | 0.0019 | 0.0019 |
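
To make the timing comparison easier to read, the registration times in the table can be converted into relative reductions. The short script below only restates the tabulated values; the dictionary layout and the helper name `time_reduction_percent` are assumptions made for this illustration.

```python
# Registration times in seconds, copied from the table above.
times = {
    "Dataset 1": {"GA + Improved ICP": 0.709, "PSO + Improved ICP": 0.814,
                  "AFWA + ICP": 16.186, "Ours": 0.606},
    "Dataset 2": {"GA + Improved ICP": 0.768, "PSO + Improved ICP": 0.861,
                  "AFWA + ICP": 17.548, "Ours": 0.657},
    "Dataset 3": {"GA + Improved ICP": 0.849, "PSO + Improved ICP": 0.953,
                  "AFWA + ICP": 18.658, "Ours": 0.726},
}

def time_reduction_percent(baseline, ours):
    """Percentage by which `ours` is faster than `baseline`."""
    return 100.0 * (baseline - ours) / baseline

reductions = {}
for dataset, row in times.items():
    ours = row["Ours"]
    for method, t in row.items():
        if method == "Ours":
            continue
        reductions[(dataset, method)] = time_reduction_percent(t, ours)
        print(f"{dataset}, vs {method}: {reductions[(dataset, method)]:.1f}% less time "
              f"({t / ours:.1f}x)")
```

The accuracy rows can be compared the same way. In the table, the proposed method and AFWA + ICP report the same accuracy on all three datasets, so the difference between those two lies in registration time.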

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Li, D.; Wang, M. A 3D Image Registration Method for Laparoscopic Liver Surgery Navigation. Electronics 2022, 11, 1670. https://doi.org/10.3390/electronics11111670
