An Autonomous Grape-Harvester Robot: Integrated System Architecture

Abstract

1. Introduction

2. Related Work

3. Materials and Methods

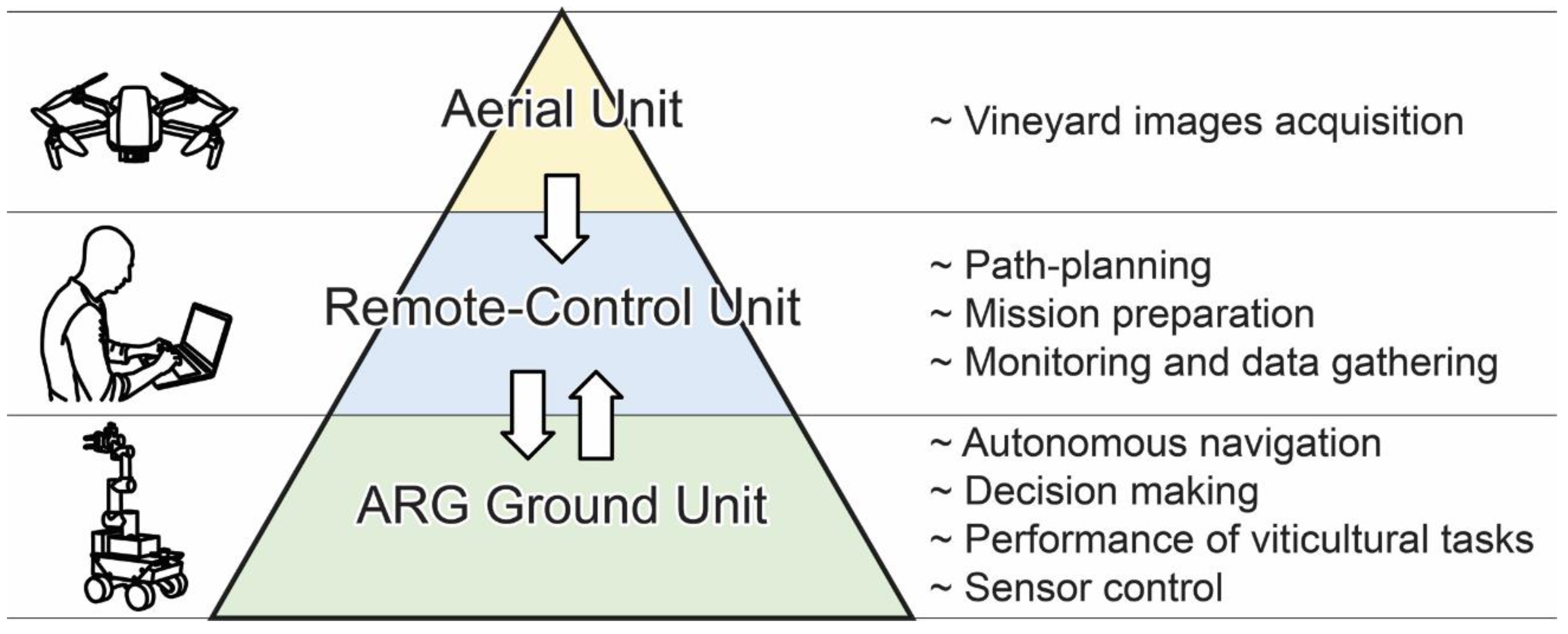

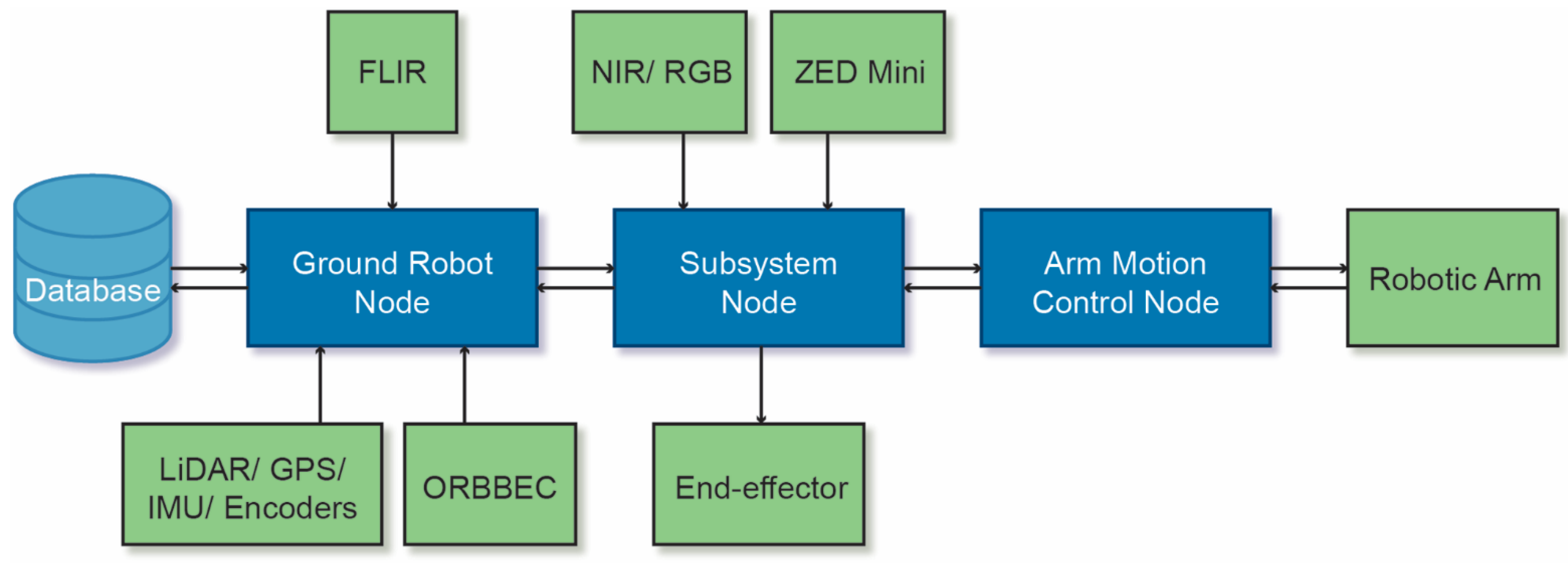

3.1. System Overview

3.2. The ARG Ground Unit

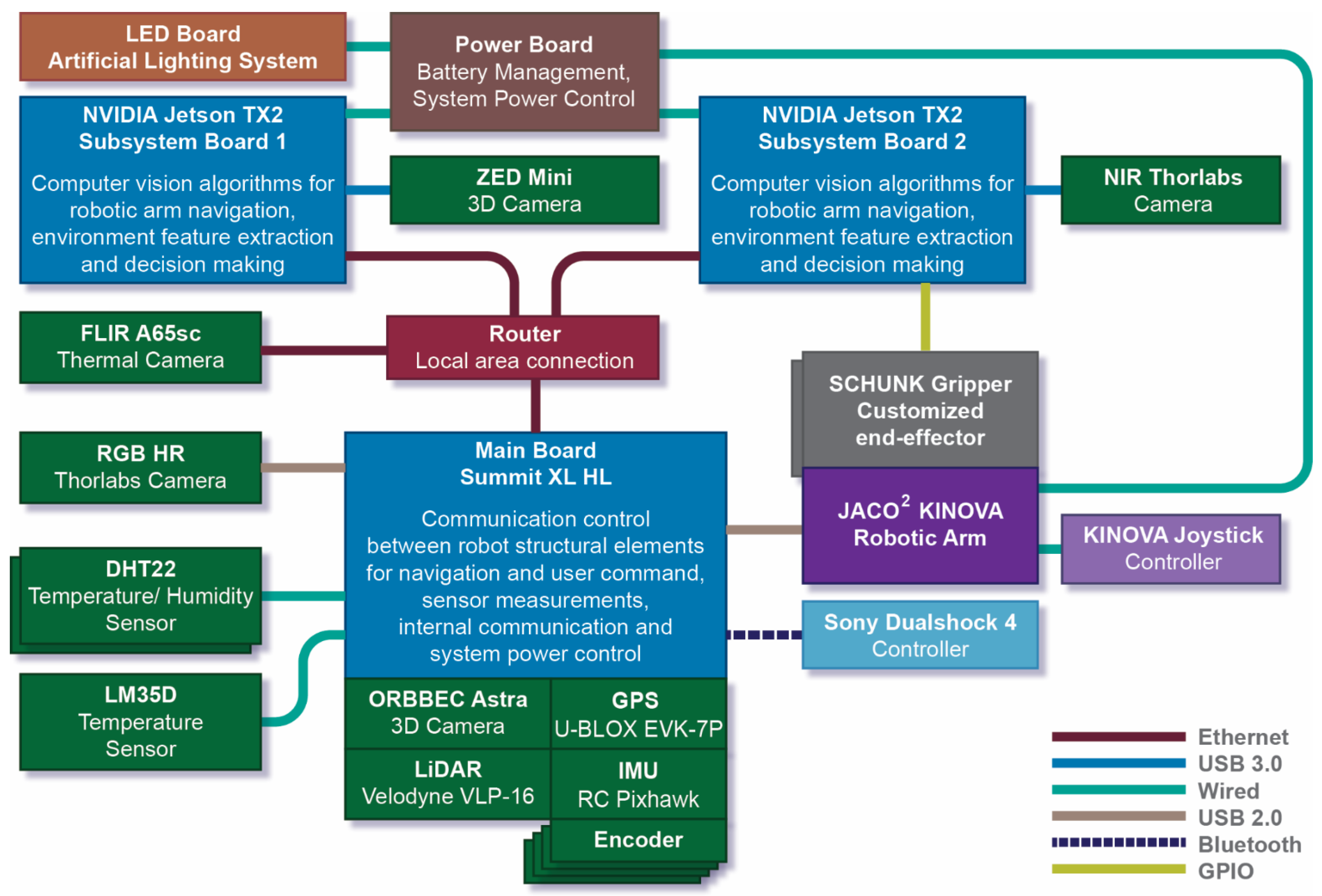

- Environmental sensors: two DHT22 temperature and humidity sensors and one LM35D temperature sensor. One DHT22 is placed inside the box that houses the electronic circuits, batteries and connections, to monitor for overheating caused by malfunctions, whereas the other DHT22 is mounted externally on the robot vehicle for environmental measurements. A DHT22 sensor measures relative humidity from 0% to 100% with 2–5% accuracy and temperature from −40 to +80 °C with ±0.5 °C accuracy. The LM35D sensor improves the accuracy of the external temperature measurements.
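The sketch below shows one way the LM35D reading could be converted and combined with the external DHT22 reading. It is a minimal illustration under stated assumptions, not the authors' firmware. It relies only on the LM35 family's linear 10 mV/°C output. It assumes a hypothetical 10-bit ADC with a 5 V reference, and it fuses the two readings by inverse-variance weighting, treating each sensor's accuracy as a standard deviation. All function names, the sigma values and the 60 °C overheating limit are hypothetical.

```python
"""Hypothetical helpers for the ARG environmental sensors (sketch only)."""

LM35_MV_PER_DEG_C = 10.0  # LM35 family: linear output of 10 mV per degree Celsius


def lm35_celsius(adc_counts: int, adc_bits: int = 10, vref_volts: float = 5.0) -> float:
    """Convert a raw ADC count sampled from the LM35D output to degrees Celsius.

    adc_bits and vref_volts describe an assumed ADC, not the robot's actual hardware.
    """
    full_scale = (1 << adc_bits) - 1
    millivolts = adc_counts / full_scale * vref_volts * 1000.0
    return millivolts / LM35_MV_PER_DEG_C


def fuse_temperatures(readings: list[tuple[float, float]]) -> float:
    """Inverse-variance weighted mean of (temperature_c, sigma_c) pairs.

    A sensor with a smaller sigma (better accuracy) receives a larger weight.
    """
    if not readings:
        raise ValueError("at least one reading is required")
    weights = [1.0 / sigma ** 2 for _, sigma in readings]
    weighted_sum = sum(w * temp for w, (temp, _) in zip(weights, readings))
    return weighted_sum / sum(weights)


def box_overheating(internal_temp_c: float, limit_c: float = 60.0) -> bool:
    """Flag the electronics box as overheating (hypothetical 60 degC limit)."""
    return internal_temp_c > limit_c


if __name__ == "__main__":
    lm35 = lm35_celsius(51)  # about 24.9 degC with the assumed 10-bit, 5 V ADC
    # DHT22 sigma taken from its stated +/-0.5 degC accuracy; LM35D sigma is a placeholder.
    external = fuse_temperatures([(25.3, 0.5), (lm35, 0.25)])
    print(f"LM35D: {lm35:.2f} degC, fused external: {external:.2f} degC")
    print("box overheating:", box_overheating(41.0))
```

Inverse-variance weighting is only one simple fusion rule. A filter that tracks the readings over time would also smooth sensor noise.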

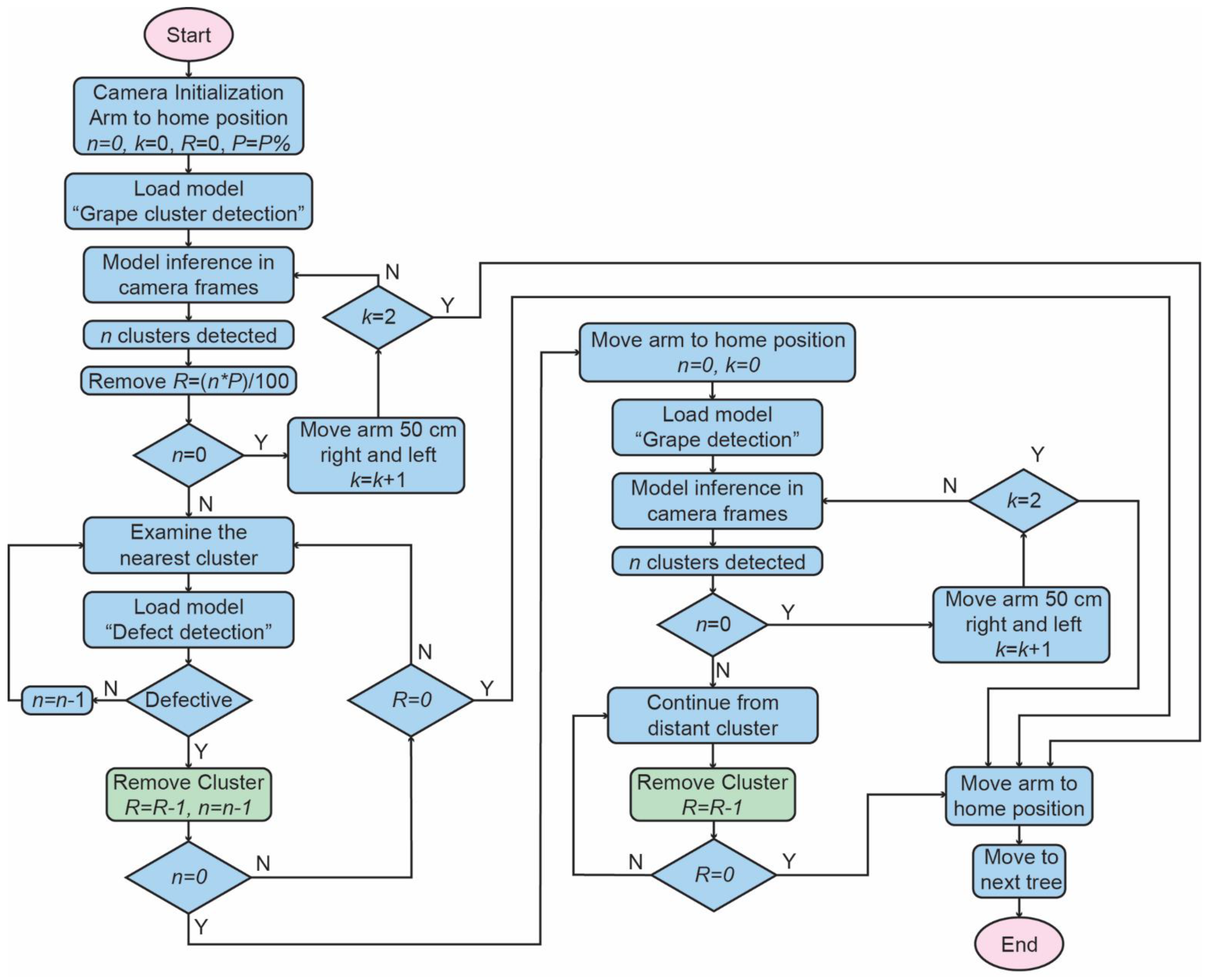

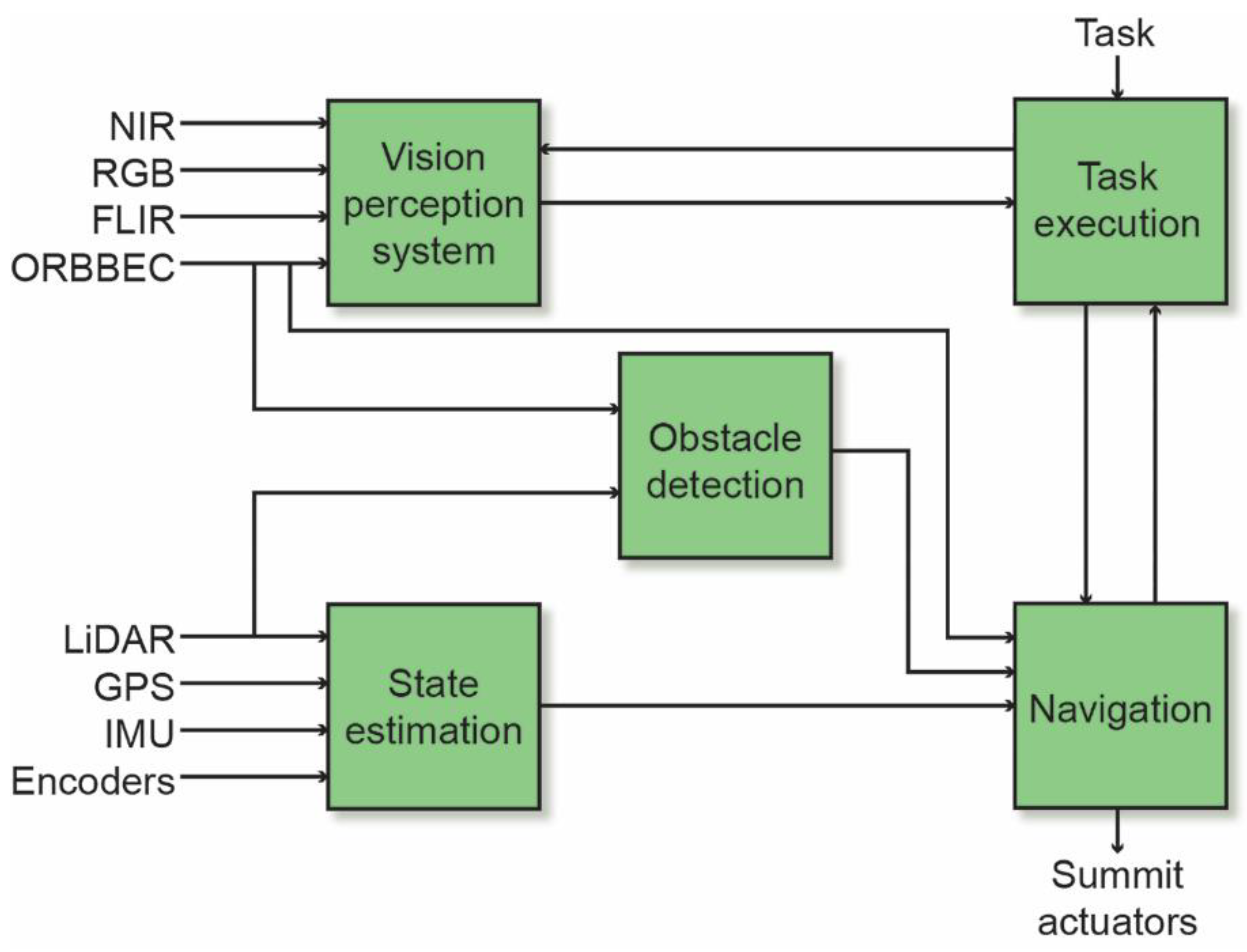

- A ZED Mini 3D camera mounted on the robotic arm, which is the main visual sensor of the system. The ZED Mini provides a video stream that can be monitored from the remote-control unit. Its frames are used for (1) grape cluster and leaf detection [33], (2) grape stem detection [34], (3) harvest crate detection [35], (4) grapevine trunk detection [36], (5) ripeness estimation and harvest time prediction [37] and (6) grape defect detection.

- Three auxiliary cameras are mounted on a fixed base on the left side of the wheeled mobile robot. The high-resolution RGB and NIR cameras are placed on one side, and the FLIR camera on the other. The two synchronized NIR and RGB cameras are placed 3.5 cm apart. These cameras capture images of the vineyard rows, from which vegetation and temperature indices are calculated. Vegetation indices characterize areas in terms of vegetation density, giving the user an overview of the vineyard from which possible working areas can be located. More specifically, the FLIR camera provides thermal images, whereas the NIR camera provides spectral images used to determine the density of green vegetation. FLIR cameras are high-cost equipment and, therefore, remain underexplored; however, studies have revealed a correlation between FLIR thermal images and vegetation indices [38]. All measurements are displayed on the vineyard maps of the remote-control unit [29]. The user can consult equipotential maps of these measurements in order to drive the robot to areas of his/her choice, according to the values of indicators related to ripeness and/or vegetation [39].
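The following sketch illustrates how a vegetation index might be derived from the NIR and RGB pair. It computes the Normalized Difference Vegetation Index, NDVI = (NIR − Red)/(NIR + Red), per pixel. The text does not name the indices ARG computes, so NDVI serves only as a representative choice. The sketch also assumes that the NIR image and the red channel of the RGB image are already co-registered. Without registration, the 3.5 cm baseline between the two cameras would introduce parallax. The function names and the 0.4 density threshold are hypothetical.

```python
import numpy as np


def ndvi(nir: np.ndarray, red: np.ndarray, eps: float = 1e-9) -> np.ndarray:
    """Per-pixel NDVI = (NIR - Red) / (NIR + Red), with values in [-1, 1].

    nir and red must be co-registered arrays of the same shape; eps avoids
    division by zero on pixels where both bands are dark.
    """
    if nir.shape != red.shape:
        raise ValueError("NIR and red images must have the same shape")
    # Convert to float first: adding two uint8 images would overflow.
    nir = nir.astype(np.float64)
    red = red.astype(np.float64)
    return (nir - red) / (nir + red + eps)


def vegetation_density(ndvi_map: np.ndarray, threshold: float = 0.4) -> float:
    """Fraction of pixels whose NDVI exceeds a (hypothetical) vegetation threshold."""
    return float(np.mean(ndvi_map > threshold))


if __name__ == "__main__":
    nir = np.array([[200, 180], [60, 90]], dtype=np.uint8)
    red = np.array([[50, 40], [55, 85]], dtype=np.uint8)
    index = ndvi(nir, red)
    print(index.round(3))
    print("vegetation density:", vegetation_density(index))
```

A per-area density value of this kind is the sort of quantity that could be mapped and shown to the user when selecting working areas.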

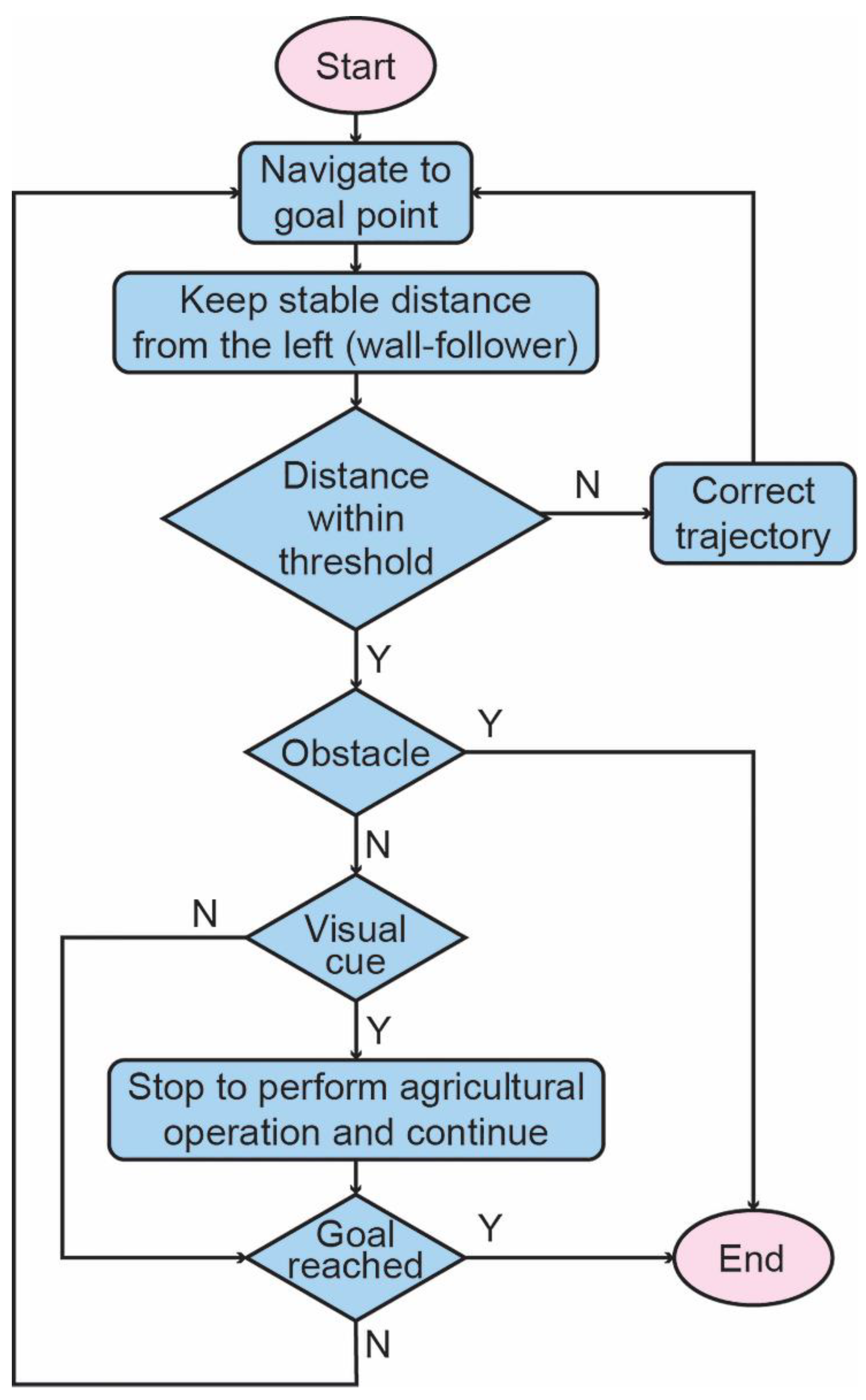

- An ORBBEC Astra 3D camera, mounted on the front of the wheeled mobile robot and used for navigation. The camera recognizes harvest crates [35] and vine trunks [36] and uses them as markers: ARG pauses in front of either a harvest crate or a vine trunk and carries out a specific agricultural task. In addition, the camera provides an RGB-D map of the field, which is used for obstacle detection. The ARG ground unit is 65 cm wide and standard vineyard corridors are 220 cm wide. In such corridors, obstacle avoidance maneuvers would be difficult for the robot and dangerous for the crops and fruits. Therefore, when the robot senses an obstacle, it stops and reports its exact location and status to the user through the remote-control unit. In general, vineyards are considered semi-structured environments. The challenge for ARG is to move dynamically along the pre-defined vine corridors on uneven, heterogeneous or muddy soil, at a fixed safe distance from the crop line. Obstacles inside the vineyard corridors are considered rare and, therefore, were not addressed in the context of this work.

- A GPS sensor, mounted on the top rear of the wheeled mobile robot, which locates ARG and displays its position in real time on the vineyard maps of the remote-control unit. Because GPS alone cannot guarantee an accurate location, it is not used for localization, but only for the approximate visualization of ARG on the computer interface. To ensure the safe operation of ARG and minimize the risk of damage to both ARG and the crops, additional sensory information is used for ARG localization, as explained next.

- A fusion of four wheel encoders (odometry), an inertial measurement unit (IMU) and a LiDAR for ARG localization [40]. Fusing encoder data with IMU data yields an initial state estimate for ARG, which is then refined using the LiDAR. Multi-modal systems that combine several sensors are well known to provide more accurate and robust state estimation. The LiDAR data are processed by two algorithms. The first is the iterative closest point (ICP) algorithm [41]. It registers the 3D point clouds of the 16 laser beam layers, building a map while tracking the robot pose in full six degrees of freedom (6-DoF), i.e., performing simultaneous localization and mapping [42]. The second is a wall-following algorithm based on the readings of a single LiDAR laser beam, which keeps the robot at a fixed distance from the working side. To maximize its viewing angle and scanning area, the LiDAR is mounted on an elevated aluminum base on the wheeled robot, which also prevents interference between the LiDAR and the robotic arm or the box. The IMU sensor is located inside the wheeled mobile robot.

- Main Board: The main board (motherboard) controls the wheeled robot with respect to navigation and user commands. It handles internal communication between the robot's built-in devices (IMU, GPS, LiDAR, encoders, ORBBEC Astra) and all additional devices. These are connected either through the three available USB 2.0 ports (JACO2 and RGB camera) or by wire (DHT22 and LM35D sensors). The main board collects all data from the linked sensors and runs the algorithms listed in Table 3. The board has its own power supply (LiFePO4 15 Ah @ 48 V DC battery), which powers all connected devices apart from JACO2. JACO2 is powered by an additional power board (LiFePO4 50 Ah @ 24 V DC battery).

- NVIDIA Jetson TX2: The two NVIDIA Jetson TX2 processing boards provide the high-level autonomy of the system. They also handle communication tasks and run the machine vision algorithms, along with feature extraction for decision making, as shown in Table 3. Each board provides one USB 3.0 port. Board 1 is connected to the ZED Mini, whereas board 2 is connected to the NIR camera and also controls the end-effector gripper. Both boards are connected to the remote-control unit via a Wi-Fi network, as described in [31]. Data are exchanged in both directions between ARG and the remote-control unit through a MongoDB database running on the host computer of the remote-control unit. All information is transmitted in JavaScript Object Notation (JSON) packets, as JSON arrays. Both boards and all linked devices are powered by the power board (LiFePO4 50 Ah @ 24 V DC battery).
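The stop-on-obstacle policy and the single-beam wall-following behavior described above can be combined into one control step. The sketch below uses a minimal proportional controller under stated assumptions and is not the authors' controller. It assumes that the lateral range to the vine row comes from one LiDAR beam and that the nearest depth in the front region of the RGB-D map is already available. It follows the ROS REP 103 convention, in which a positive yaw rate turns the robot to the left. The gains, speeds and distances are hypothetical. The helper also reproduces the clearance arithmetic: a 65 cm wide robot centered in a 220 cm corridor has (220 − 65)/2 = 77.5 cm free on each side.

```python
from dataclasses import dataclass


@dataclass
class Command:
    """Velocity command for the mobile base plus a stop flag for the user interface."""
    linear: float   # forward speed, m/s
    angular: float  # yaw rate, rad/s (positive = turn left)
    stop: bool = False


def corridor_clearance_m(corridor_width_m: float = 2.20, robot_width_m: float = 0.65) -> float:
    """Free space on each side of a robot centered in the corridor."""
    return (corridor_width_m - robot_width_m) / 2.0


def navigation_step(side_range_m: float, min_front_depth_m: float, target_distance_m: float,
                    working_side_left: bool = True, kp: float = 1.5,
                    cruise_speed: float = 0.3, stop_distance_m: float = 0.8,
                    max_angular: float = 0.5) -> Command:
    """One control cycle: stop on an obstacle, otherwise hold the distance to the row."""
    if min_front_depth_m < stop_distance_m:
        # No avoidance maneuver: halt so the operator can be informed and decide.
        return Command(0.0, 0.0, stop=True)
    error = side_range_m - target_distance_m  # > 0 means the robot is too far from the row
    side_sign = 1.0 if working_side_left else -1.0  # steer toward the working side
    angular = max(-max_angular, min(max_angular, side_sign * kp * error))
    return Command(cruise_speed, angular)
```

A single proportional term ignores the robot's heading relative to the row. A field controller would likely add a heading or derivative term to avoid oscillating along the corridor.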
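Since all information exchanged through the MongoDB database travels as JSON arrays, a status update from ARG might be serialized as in the sketch below. The sketch uses only Python's standard `json` module. The field names and the packet schema are hypothetical because the article does not specify them, and the database insertion step is omitted.

```python
import json
from datetime import datetime, timezone


def make_status_packet(latitude: float, longitude: float, box_temp_c: float,
                       task: str, state: str) -> str:
    """Serialize one ARG status record as a JSON array (hypothetical schema)."""
    record = {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "gps": {"lat": latitude, "lon": longitude},  # approximate position, display only
        "box_temperature_c": box_temp_c,
        "task": task,    # e.g. "harvest", "green_harvest", "defoliation"
        "state": state,  # e.g. "navigating", "stopped_obstacle"
    }
    return json.dumps([record])


def parse_packet(text: str) -> list[dict]:
    """Decode a packet and check that it is a JSON array of objects."""
    records = json.loads(text)
    if not isinstance(records, list) or not all(isinstance(r, dict) for r in records):
        raise ValueError("expected a JSON array of objects")
    return records
```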

3.2.1. Manipulator

| Computing Device | Machine Vision Algorithm | Description (Method, Model) | Related Task | Performance |
|---|---|---|---|---|
| NVIDIA Jetson TX2 Board 1 | Grape cluster detection [33] | Semantic segmentation model (Convolutional Neural Networks, ResNet50_FRRN) | Harvest, Green harvest | 87.89% IU |
| | Ripeness estimation [37] | Regression model (Lattice Computing Modeling, Intervals' Numbers (INs) technique) | Harvest | 5.36% average error |
| | Defect detection [45] | Classification model (Random Forest (RF) classifier) | Harvest, Green harvest | 87% classification accuracy |
| | Leaves detection [33] | Semantic segmentation model (Convolutional Neural Networks, MobileNetV2_PSPNet) | Defoliation | 83.45% IU |
| NVIDIA Jetson TX2 Board 2 | Stem detection [34] | Semantic segmentation model with regression (Convolutional Neural Networks, UNET_MobileNetV2) | Harvest, Green harvest | 98.90% IU |
| Main Board (Summit XL HL) | Harvest crate detection [35] | Object detection model (You Only Look Once version 3, YOLOv3) | Harvest, Green harvest | 99.74% mAP |
| | Vine trunk detection [36] | Object detection model (You Only Look Once version 5, YOLOv5) | Defoliation | 73.2% mAP |

3.2.2. Wheeled Mobile Robot

4. System Integration

5. Discussion

6. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

- Rose, D.C.; Wheeler, R.; Winter, M.; Lobley, M.; Chivers, C.-A. Agriculture 4.0: Making it work for people, production, and the planet. Land Use Policy 2021, 100, 104933. [Google Scholar] [CrossRef]

- Duckett, T.; Pearson, S.; Blackmore, S.; Grieve, B.; Chen, W.-H.; Cielniak, G.; Cleaversmith, J.; Dai, J.; Davis, S.; Fox, C.; et al. Agricultural Robotics: The Future of Robotic Agriculture. arXiv 2018, arXiv:1806.06762. [Google Scholar]

- Sparrow, R.; Howard, M. Robots in agriculture: Prospects, impacts, ethics, and policy. Precis. Agric. 2020, 1, 1–16. [Google Scholar] [CrossRef]

- Bechar, A.; Vigneault, C. Agricultural robots for field operations. Part 2: Operations and systems. Biosyst. Eng. 2017, 153, 110–128. [Google Scholar] [CrossRef]

- Bechar, A.; Vigneault, C. Agricultural robots for field operations: Concepts and components. Biosyst. Eng. 2016, 149, 94–111. [Google Scholar] [CrossRef]

- Feng, Q.; Zou, W.; Fan, P.; Zhang, C.; Wang, X. Design and test of robotic harvesting system for cherry tomato. Int. J. Agric. Biol. Eng. 2018, 11, 96–100. [Google Scholar] [CrossRef]

- Scarfe, A.J.; Flemmer, R.C.; Bakker, H.H.; Flemmer, C.L. Development of an autonomous kiwifruit picking robot. In Proceedings of the 2009 4th International Conference on Autonomous Robots and Agents, Wellington, New Zealand, 10–12 February 2009; pp. 380–384. [Google Scholar]

- Søgaard, H.T.; Lund, I. Application Accuracy of a Machine Vision-controlled Robotic Micro-dosing System. Biosyst. Eng. 2007, 96, 315–322. [Google Scholar] [CrossRef]

- He, B.; Liu, G.; Ji, Y.; Si, Y.; Gao, R. Auto Recognition of Navigation Path for Harvest Robot Based on Machine Vision. In IFIP Advances in Information and Communication Technology; Springer: Berlin/Heidelberg, Germany, 2011; pp. 138–148. ISBN 9783642183324. [Google Scholar]

- Xiong, Y.; Ge, Y.; Grimstad, L.; From, P.J. An autonomous strawberry-harvesting robot: Design, development, integration, and field evaluation. J. Field Robot. 2020, 37, 202–224. [Google Scholar] [CrossRef]

- McCool, C.S.; Beattie, J.; Firn, J.; Lehnert, C.; Kulk, J.; Bawden, O.; Russell, R.; Perez, T. Efficacy of Mechanical Weeding Tools: A study into alternative weed management strategies enabled by robotics. IEEE Robot. Autom. Lett. 2018, 3, 1184–1190. [Google Scholar] [CrossRef]

- Harvest CROO. Robotics. Available online: https://harvestcroo.com/ (accessed on 30 January 2021).

- GUSS. Autonomous Orchard Sprayers. Available online: https://gussag.com/ (accessed on 30 January 2021).

- Naïo Technologies. Automated Robots and Farming Tools. Available online: https://www.naio-technologies.com/en/agricultural-equipment/ (accessed on 30 January 2021).

- AGERRIS. The Digital Farmhand. Available online: https://agerris.com/the-digital-farmhand/ (accessed on 30 January 2021).

- FARMDROID. Available online: http://farmdroid.dk/ (accessed on 30 January 2021).

- CLEARPATH Robotics. Husky. Available online: https://clearpathrobotics.com/husky-unmanned-ground-vehicle-robot/ (accessed on 30 January 2021).

- Drenjančević, M.; Jukić, V.; Zmaić, K.; Kujundžić, T.; Rastija, V. Effects of early leaf removal on grape yield, chemical characteristics, and antioxidant activity of grape variety Cabernet Sauvignon and wine from eastern Croatia. Acta Agric. Scand. Sect. B Soil Plant Sci. 2017, 67, 705–711. [Google Scholar] [CrossRef]

- Martin, D.; Grose, C.; Fedrizzi, B.; Stuart, L.; Albright, A.; McLachlan, A. Grape cluster microclimate influences the aroma composition of Sauvignon blanc wine. Food Chem. 2016, 210, 640–647. [Google Scholar] [CrossRef] [PubMed]

- Roure, F.; Moreno, G.; Soler, M.; Faconti, D.; Serrano, D.; Astolfi, P.; Bardaro, G.; Gabrielli, A.; Bascetta, L.; Matteucci, M. GRAPE: Ground Robot for vineyArd Monitoring and ProtEction. In Advances in Intelligent Systems and Computing; Springer: Cham, Switzerland, 2018; pp. 249–260. [Google Scholar]

- Lopez-Castro, A.; Marroquin-Jacobo, A.; Soto-Amador, A.; Padilla-Davila, E.; Lopez-Leyva, J.A.; Castaneda-Ramos, M.O. Design of a Vineyard Terrestrial Robot for Multiple Applications as Part of the Innovation of Process and Product: Preliminary Results. In Proceedings of the 2020 IEEE International Conference on Engineering Veracruz (ICEV), Boca del Rio, Mexico, 26–29 October 2020; pp. 1–4. [Google Scholar]

- Monta, M.; Kondo, N.; Shibano, Y. Agricultural robot in grape production system. In Proceedings of the 1995 IEEE International Conference on Robotics and Automation, Nagoya, Japan, 21–27 May 1995; Volume 3, pp. 2504–2509. [Google Scholar]

- Neves Dos Santos, F.; Sobreira, H.M.P.; Campos, D.F.B.; Morais, R.; Moreira, A.P.G.M.; Contente, O.M.S. Towards a Reliable Monitoring Robot for Mountain Vineyards. In Proceedings of the 2015 IEEE International Conference on Autonomous Robot Systems and Competitions, Vila Real, Portugal, 8–10 April 2015; pp. 37–43. [Google Scholar]

- dos Santos, F.N.; Sobreira, H.; Campos, D.; Morais, R.; Paulo Moreira, A.; Contente, O. Towards a Reliable Robot for Steep Slope Vineyards Monitoring. J. Intell. Robot. Syst. 2016, 83, 429–444. [Google Scholar] [CrossRef]

- Adamides, G.; Katsanos, C.; Constantinou, I.; Christou, G.; Xenos, M.; Hadzilacos, T.; Edan, Y. Design and development of a semi-autonomous agricultural vineyard sprayer: Human-robot interaction aspects. J. Field Robot. 2017, 34, 1407–1426. [Google Scholar] [CrossRef]

- Botterill, T.; Paulin, S.; Green, R.; Williams, S.; Lin, J.; Saxton, V.; Mills, S.; Chen, X.; Corbett-Davies, S. A Robot System for Pruning Grape Vines. J. Field Robot. 2017, 34, 1100–1122. [Google Scholar] [CrossRef]

- Lopes, C.; Torres, A.; Guzman, R.; Graca, J.; Reyes, M.; Victorino, G.; Braga, R.; Monteiro, A.; Barriguinha, A. Using an unmanned ground vehicle to scout vineyards for non-intrusive estimation of canopy features and grape yield. In Proceedings of the GiESCO International Meeting, 20th, Sustainable Viticulture and Wine Making in Climate Change Scenarios, Mendoza, Argentina, 5–10 November 2017. [Google Scholar]

- VineRobot. Available online: http://www.vinerobot.eu/ (accessed on 31 January 2021).

- Personalized Optimal Grape Harvest by Autonomous Robot (POGHAR). Available online: http://evtar.eu/ (accessed on 18 April 2020).

- Badeka, E.; Vrochidou, E.; Tziridis, K.; Nicolaou, A.; Papakostas, G.A.; Pachidis, T.; Kaburlasos, V.G. Navigation Route Mapping for Harvesting Robots in Vineyards Using UAV-based Remote Sensing. In Proceedings of the 2020 IEEE 10th International Conference on Intelligent Systems (IS), Varna, Bulgaria, 28–30 August 2020; pp. 171–177. [Google Scholar]

- Tziridis, K.; Nikolaou, A.; Kalampokas, T.; Vrochidou, E.; Pachidis, T.; Papakostas, G.A.; Kaburlasos, V.G. Information management and monitoring system for a grapes harvesting robot. IOP Conf. Ser. Mater. Sci. Eng. 2021, 1032, 012051. [Google Scholar] [CrossRef]

- Vrochidou, E.; Pachidis, T.; Manios, M.; Papakostas, G.A.; Kaburlasos, V.G.; Theocharis, S.; Koundouras, S.; Karabatea, K.; Bouloumpasi, E.; Pavlidis, S.; et al. Identifying the technological needs for developing a grapes harvesting robot: Operations and systems. In Proceedings of the CEUR Workshop Proceedings, Thessaloniki, Greece, 24–27 September 2020; pp. 105–113. [Google Scholar]

- Kalampokas, T.; Tziridis, K.; Nikolaou, A.; Vrochidou, E.; Papakostas, G.A.; Pachidis, T.; Kaburlasos, V.G. Semantic Segmentation of Vineyard Images Using Convolutional Neural Networks. In 21st International Conference on Engineering Applications of Neural Networks (EANN 2020); Springer: Berlin, Germany, 2020; pp. 292–303. [Google Scholar]

- Kalampokas, T.; Vrochidou, E.; Papakostas, G.; Pachidis, T.; Kaburlasos, V.G. Grape Stem Detection Using Regression Convolutional Neural Networks. Submitted.

- Badeka, E.; Vrochidou, E.; Papakostas, G.A.; Pachidis, T.; Kaburlasos, V.G. Harvest Crate Detection for Grapes Harvesting Robot Based on YOLOv3 Model. In Proceedings of the 2020 Fourth International Conference on Intelligent Computing in Data Sciences (ICDS), Fez, Morocco, 21–23 October 2020; pp. 1–5. [Google Scholar]

- Badeka, E.; Kalampokas, T.; Vrochidou, E.; Tziridis, K.; Papakostas, G.; Pachidis, T.; Kaburlasos, V. Real-time vineyard trunk detection for a grapes harvesting robot via deep learning. In Proceedings of the Thirteenth International Conference on Machine Vision, Rome, Italy, 4 January 2021; Osten, W., Zhou, J., Nikolaev, D.P., Eds.; SPIE: Rome, Italy, 2021; p. 5. [Google Scholar]

- Kaburlasos, V.G.; Vrochidou, E.; Lytridis, C.; Papakostas, G.A.; Pachidis, T.; Manios, M.; Mamalis, S.; Merou, T.; Koundouras, S.; Theocharis, S.; et al. Toward Big Data Manipulation for Grape Harvest Time Prediction by Intervals’ Numbers Techniques. In Proceedings of the 2020 International Joint Conference on Neural Networks (IJCNN), Glasgow, UK, 19–24 July 2020; pp. 1–6. [Google Scholar]

- Espinoza, C.Z.; Khot, L.R.; Sankaran, S.; Jacoby, P.W. High resolution multispectral and thermal remote sensing-based water stress assessment in subsurface irrigated grapevines. Remote Sens. 2017, 9, 961. [Google Scholar] [CrossRef]

- Bourgeon, M.A.; Gée, C.; Debuisson, S.; Villette, S.; Jones, G.; Paoli, J.N. «On-the-go» multispectral imaging system to characterize the development of vineyard foliage with quantitative and qualitative vegetation indices. Precis. Agric. 2017, 18, 293–308. [Google Scholar] [CrossRef]

- Moore, T.; Stouch, D. A Generalized Extended Kalman Filter Implementation for the Robot Operating System. In Advances in Intelligent Systems and Computing; Springer: Cham, Switzerland, 2016; pp. 335–348. ISBN 9783319083377. [Google Scholar]

- Wang, Y.-T.; Peng, C.-C.; Ravankar, A.; Ravankar, A. A Single LiDAR-Based Feature Fusion Indoor Localization Algorithm. Sensors 2018, 18, 1294. [Google Scholar] [CrossRef] [PubMed]

- Zhang, J.; Singh, S. LOAM: Lidar Odometry and Mapping in Real-time. In Proceedings of the Robotics: Science and Systems; Robotics: Science and Systems Foundation: Berkeley, CA, USA, 2014; Volume 2, pp. 1–9. [Google Scholar]

- Pachidis, T.; Sgouros, C.; Kaburlasos, V.G.; Vrochidou, E.; Kalampokas, T.; Tziridis, K.; Nikolaou, A.; Papakostas, G.A. Forward Kinematic Analysis of JACO 2 Robotic Arm Towards Implementing a Grapes Harvesting Robot. In Proceedings of the 2020 International Conference on Software, Telecommunications and Computer Networks (SoftCOM), Split, Croatia, 17–19 September 2020; pp. 1–6. [Google Scholar]

- Fernandes, A.M.; Franco, C.; Mendes-Ferreira, A.; Mendes-Faia, A.; da Costa, P.L.; Melo-Pinto, P. Brix, pH and anthocyanin content determination in whole Port wine grape berries by hyperspectral imaging and neural networks. Comput. Electron. Agric. 2015, 115, 88–96. [Google Scholar] [CrossRef]

- Knauer, U.; Matros, A.; Petrovic, T.; Zanker, T.; Scott, E.S.; Seiffert, U. Improved classification accuracy of powdery mildew infection levels of wine grapes by spatial-spectral analysis of hyperspectral images. Plant Methods 2017, 13, 47. [Google Scholar] [CrossRef] [PubMed]

- Pellegrino, A.; Clingeleffer, P.; Cooley, N.; Walker, R. Management practices impact vine carbohydrate status to a greater extent than vine productivity. Front. Plant Sci. 2014, 5, 283. [Google Scholar] [CrossRef] [PubMed]

- Poni, S.; Casalini, L.; Bernizzoni, F.; Civardi, S.; Intrieri, C. Effects of early defoliation on shoot photosynthesis, yield components, and grape composition. Am. J. Enol. Vitic. 2006, 57, 397–407. [Google Scholar]

- Sivilotti, P.; Falchi, R.; Herrera, J.C.; Škvarč, B.; Butinar, L.; Sternad Lemut, M.; Bubola, M.; Sabbatini, P.; Lisjak, K.; Vanzo, A. Combined Effects of Early Season Leaf Removal and Climatic Conditions on Aroma Precursors in Sauvignon Blanc Grapes. J. Agric. Food Chem. 2017, 65, 8426–8434. [Google Scholar] [CrossRef] [PubMed]

- Patel, R.V.; Shadpey, F.; Ranjbaran, F.; Angeles, J. A collision-avoidance scheme for redundant manipulators: Theory and experiments. J. Robot. Syst. 2005, 22, 737–757. [Google Scholar] [CrossRef]

- Lin, F.; Lin, Z.; Qiu, X. LQR controller for car-like robot. In Proceedings of the 2016 35th Chinese Control Conference (CCC), Chengdu, China, 27–29 July 2016; pp. 2515–2518. [Google Scholar]

- Saiful Azimi Mahmud, M.; Shukri Zainal Abidin, M.; Abiodun Emmanuel, A.; Sahib Hasan, H. Robotics and Automation in Agriculture: Present and Future Applications. Appl. Model. Simul. 2020, 4, 130–140. [Google Scholar]

- Kaburlasos, V.G. The Lattice Computing (LC) Paradigm. In Proceedings of the 15th International Conference on Concept Lattices and Their Applications, Tallinn, Estonia, 29 June–1 July 2020; CLA: Tallinn, Estonia, 2020; pp. 1–8. [Google Scholar]

Viniculture agrobots [20]–[28] compared with ARG (✓: present; x: absent).

| Category | Characteristic | [20] | [21] | [22] | [23] | [24] | [25] | [26] | [27] | [28] | ARG |
|---|---|---|---|---|---|---|---|---|---|---|---|
| General | Crop-scale application | ✓ | x | ✓ | x | x | ✓ | ✓ | x | x | ✓ |
| | Multi-modal | ✓ | ✓ | x | x | x | ✓ | x | ✓ | ✓ | ✓ |
| | ◦ Aerial | x | x | x | x | x | x | x | x | x | ✓ |
| | ◦ Remote-control | ✓ | ✓ | x | x | x | ✓ | x | ✓ | ✓ | ✓ |
| | ◦ Ground robot | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ |
| | Multi-purpose | ✓ | ✓ | ✓ | x | x | x | x | x | x | ✓ |
| Functionalities | Harvest | x | x | ✓ | x | x | x | x | x | x | ✓ |
| | Green Harvest | x | x | x | x | x | x | x | x | x | ✓ |
| | Defoliation | x | x | x | x | x | x | x | x | x | ✓ |
| | Spraying | ✓ | ✓ | ✓ | x | x | ✓ | x | x | x | x |
| | Berry thinning | x | x | ✓ | x | x | x | x | x | x | x |
| | Bagging | x | x | ✓ | x | x | x | x | x | x | x |
| | Pruning | x | x | x | x | x | x | ✓ | x | x | x |
| | Monitoring | ✓ | ✓ | x | x | x | ✓ | x | ✓ | ✓ | ✓ |
| | Navigation | ✓ | ✓ | x | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ |
| | Personalization ability | x | x | x | x | x | x | x | x | x | ✓ |
| Hardware specifications | Bill of materials | ✓ | x | x | ✓ | ✓ | ✓ | x | x | x | ✓ |
| | System design | x | x | x | ✓ | ✓ | x | x | x | x | ✓ |
| | Interoperability | x | x | x | ✓ | ✓ | x | x | x | x | ✓ |
| | Integration | x | x | x | ✓ | ✓ | x | x | x | x | ✓ |
| Software specifications | Procedural flows | x | x | x | x | x | x | x | x | x | ✓ |
| | Machine-vision algorithms | x | x | x | ✓ | ✓ | x | ✓ | ✓ | x | ✓ |
| | Simulation results | ✓ | x | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | x | ✓ |
| Main hardware components | Mobile robot | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ |
| | ◦ Summit XL HL | x | x | x | x | x | ✓ | x | ✓ | x | ✓ |
| | ◦ Husky | ✓ | x | x | x | x | x | x | x | x | x |
| | ◦ Traxxas E-Maxx | x | x | x | ✓ | ✓ | x | x | x | x | x |
| | ◦ Customized | x | ✓ | ✓ | x | x | x | ✓ | x | ✓ | x |
| | Robotic arm | ✓ | x | ✓ | x | x | ✓ | ✓ | x | x | ✓ |
| | ◦ JACO2 7-DoF | x | x | x | x | x | x | x | x | x | ✓ |
| | ◦ JACO2 6-DoF | ✓ | x | x | x | x | x | x | x | x | x |
| | ◦ OUR-1 | x | x | x | x | x | ✓ | x | x | x | x |
| | ◦ UR5 | x | x | x | x | x | x | ✓ | x | x | x |
| | ◦ Customized | x | x | ✓ | x | x | x | x | x | x | x |
| | Customized end-effectors | x | x | ✓ | x | x | ✓ | ✓ | x | x | ✓ |

| Unit | Subsystem | Hardware Component | Quantity |
|---|---|---|---|
| Aerial Unit | | Octocopter SkyHawk, 3Dsurvey | 1 |
| | | RGB Samsung NX500 Mirrorless Camera | 1 |
| Remote-Control Unit | | Laptop (Windows 10, 64-bit operating system, 8 GB RAM, CPU i5-2540M, Wi-Fi) | 1 |
| ARG Ground Unit | Manipulator JACO2 KINOVA Robotic Arm 7-DoF | Two-finger SCHUNK Gripper, Customized End-Effectors | 2 |
| | | ZED Mini 3D IMU Camera | 1 |
| | | LED Board Artificial Lighting | 1 |
| | | KINOVA Joystick controller | 1 |
| | Wheeled Mobile Robot Summit XL HL Robotnik | Sony Dualshock 4 controller | 1 |
| | | ORBBEC Astra 3D Camera | 1 |
| | | LiDAR Velodyne VLP-16 | 1 |
| | | GPS U-BLOX EVK-7P | 1 |
| | | IMU RC Pixhawk | 1 |
| | | Encoder | 4 |
| | | LM35D Temperature Sensor | 1 |
| | | DHT22 Temperature and Humidity Sensor | 2 |
| | | NVIDIA Jetson TX2 | 2 |
| | | FLIR A65sc Thermal Camera | 1 |
| | | RGB USB 3.0 Thorlabs Camera | 1 |
| | | NIR USB 3.0 Thorlabs Camera | 1 |
| | | Battery LiFePO4 15 Ah @ 48 V DC | 1 |
| | | Battery LiFePO4 50 Ah @ 24 V DC | 1 |

| Harvest | Green Harvest | Defoliation |
|---|---|---|
| Ripeness level (define a threshold) | Define percentage of grape clusters to be removed | Define percentage of leaf area to be removed |
| Grape clusters with ripeness level above/below threshold (define whether they are cut and thrown on the ground or left on the vine) | Removed grape clusters (define whether they are collected in crates or thrown on the ground) | Define working side (East-West) |
| Define working area (path(s) selection) | Define working area (path(s) selection) | Define working area (path(s) selection) |
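The user-defined parameters in the table above could be captured in small configuration objects, as sketched below. The sketch is hypothetical: the class and field names do not come from the article. It assumes that clusters at or above the ripeness threshold are harvested into crates. The user then decides whether the remaining clusters are cut and dropped or left on the vine.

```python
from dataclasses import dataclass, field
from enum import Enum


class BelowThresholdAction(Enum):
    """User choice for harvest-task clusters that fall below the ripeness threshold."""
    CUT_AND_DROP = "cut and thrown on the ground"
    LEAVE_ON_VINE = "left on the vine"


def _check_pct(value: float, name: str) -> None:
    """Reject values outside the 0-100 percentage range."""
    if not 0.0 <= value <= 100.0:
        raise ValueError(f"{name} must be a percentage in [0, 100], got {value}")


@dataclass
class HarvestConfig:
    ripeness_threshold: float  # on the scale of the ripeness estimator output (assumed)
    below_threshold: BelowThresholdAction
    paths: list[str] = field(default_factory=list)  # selected working path(s)


@dataclass
class GreenHarvestConfig:
    clusters_to_remove_pct: float
    collect_in_crates: bool  # False: removed clusters are thrown on the ground
    paths: list[str] = field(default_factory=list)

    def __post_init__(self) -> None:
        _check_pct(self.clusters_to_remove_pct, "clusters_to_remove_pct")


@dataclass
class DefoliationConfig:
    leaf_area_to_remove_pct: float
    working_side: str  # "East" or "West"
    paths: list[str] = field(default_factory=list)

    def __post_init__(self) -> None:
        _check_pct(self.leaf_area_to_remove_pct, "leaf_area_to_remove_pct")
        if self.working_side not in ("East", "West"):
            raise ValueError("working_side must be 'East' or 'West'")


def plan_harvest(ripeness_by_cluster: dict[str, float], cfg: HarvestConfig) -> dict[str, str]:
    """Assign an action to each detected cluster under the assumed harvest policy."""
    plan = {}
    for cluster_id, ripeness in ripeness_by_cluster.items():
        if ripeness >= cfg.ripeness_threshold:
            plan[cluster_id] = "harvest into crate"
        elif cfg.below_threshold is BelowThresholdAction.CUT_AND_DROP:
            plan[cluster_id] = "cut and drop"
        else:
            plan[cluster_id] = "leave on vine"
    return plan
```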


© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Vrochidou, E.; Tziridis, K.; Nikolaou, A.; Kalampokas, T.; Papakostas, G.A.; Pachidis, T.P.; Mamalis, S.; Koundouras, S.; Kaburlasos, V.G. An Autonomous Grape-Harvester Robot: Integrated System Architecture. Electronics 2021, 10, 1056. https://doi.org/10.3390/electronics10091056
