An Efficient Stereo Matching Network Using Sequential Feature Fusion

Abstract

1. Introduction

2. Related Work

2.1. Classical Stereo Matching

2.2. Deep Stereo Matching

2.2.1. 2D Convolution-Based Methods

2.2.2. 3D Convolution-Based Methods

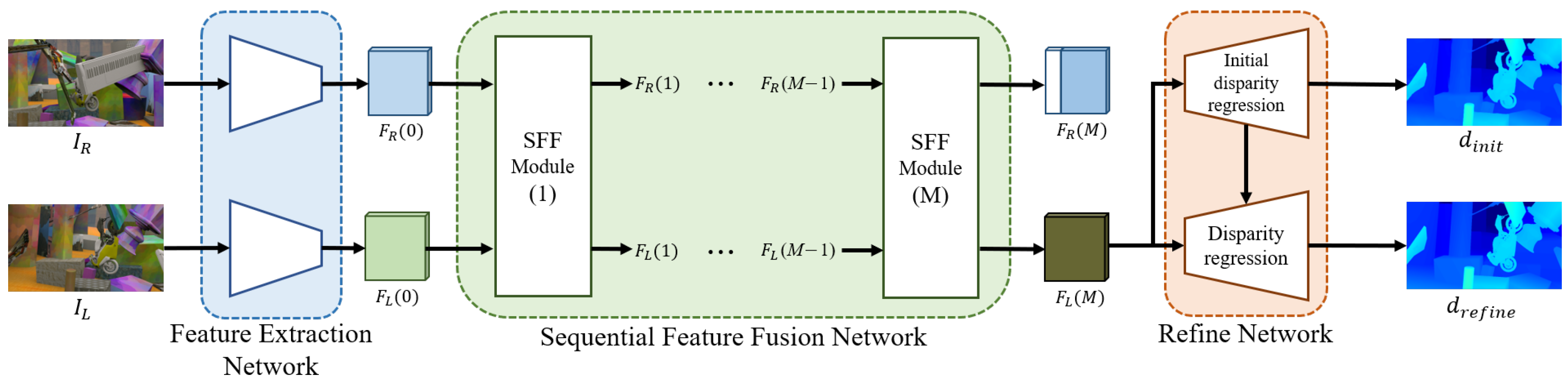

3. Method

3.1. Feature Extraction Network

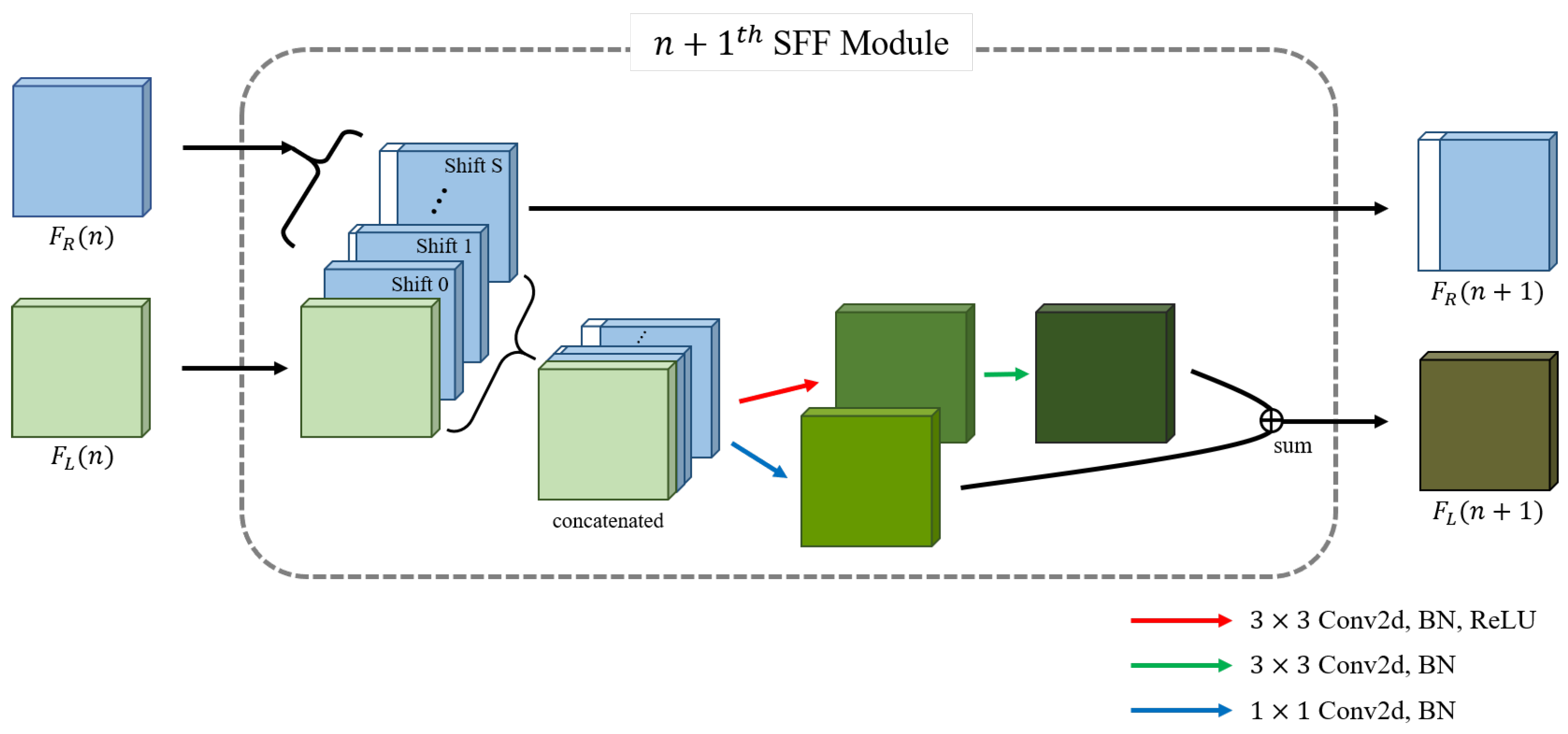

3.2. Sequential Feature Fusion Network (SFFNet)

3.3. Refine Network and Loss Function

4. Experimental Results

4.1. Datasets

- Scene Flow [10]: A synthetic stereo dataset, rendered with computer graphics, that includes ground-truth disparity for each viewpoint. It contains 35,454 training and 4370 testing image pairs with and . The evaluation metric is the end-point error (EPE), defined as the average absolute difference between the predicted disparities and the ground-truth disparities.

- KITTI [41,42]: A dataset of real (not synthesized) images. The KITTI-2015 version contains a training set and a test set of 200 image pairs each. The KITTI-2012 version contains 194 image pairs for training and 195 image pairs for testing. The image size is and for both versions. We trained and tested using only the training sets, which include ground-truth disparity information: 354 image pairs randomly selected from the 394 pairs of the 2015 and 2012 training sets were used as the training dataset. As the evaluation metric, we used the 3-pixel error (3PE) provided by the benchmark [41,42], which is the percentage of pixels for which the difference between the predicted disparity and the true one is more than 3 pixels.
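The two metrics described above can be sketched as follows. This is a minimal illustration in plain Python, not the benchmark's evaluation code: the function names, the flat-list representation of a disparity map, and the optional `valid` mask (for pixels without ground truth) are assumptions made for this example. The 3PE here follows only the definition given in the text (error of more than 3 pixels).

```python
from typing import List, Optional, Sequence


def _valid_errors(pred: Sequence[float], truth: Sequence[float],
                  valid: Optional[Sequence[bool]]) -> List[float]:
    """Absolute disparity errors for the pixels flagged as valid."""
    if len(pred) != len(truth):
        raise ValueError("pred and truth must have the same length")
    if valid is None:
        # Assumption: every pixel has a ground-truth disparity.
        valid = [True] * len(pred)
    elif len(valid) != len(pred):
        raise ValueError("valid mask must match the disparity length")
    errors = [abs(p - t) for p, t, v in zip(pred, truth, valid) if v]
    if not errors:
        raise ValueError("no valid pixels to evaluate")
    return errors


def end_point_error(pred: Sequence[float], truth: Sequence[float],
                    valid: Optional[Sequence[bool]] = None) -> float:
    """EPE: mean absolute difference between predicted and true disparity."""
    errors = _valid_errors(pred, truth, valid)
    return sum(errors) / len(errors)


def three_pixel_error(pred: Sequence[float], truth: Sequence[float],
                      valid: Optional[Sequence[bool]] = None,
                      threshold: float = 3.0) -> float:
    """3PE: percentage of valid pixels whose error exceeds `threshold`."""
    errors = _valid_errors(pred, truth, valid)
    bad = sum(1 for e in errors if e > threshold)
    return 100.0 * bad / len(errors)


# Toy disparity maps flattened to 1D (hypothetical values).
pred = [10.0, 20.5, 33.0, 47.0, 55.0]
truth = [10.0, 20.0, 30.0, 40.0, 50.0]
valid = [True, True, True, True, False]  # last pixel has no ground truth

print(end_point_error(pred, truth, valid))    # 2.625
print(three_pixel_error(pred, truth, valid))  # 25.0
```

In the toy example, the pixel with an error of exactly 3.0 is not counted as bad, because the definition requires the error to be more than 3 pixels.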

4.2. Implementation Details

4.3. Results and Analysis on the Scene Flow Dataset

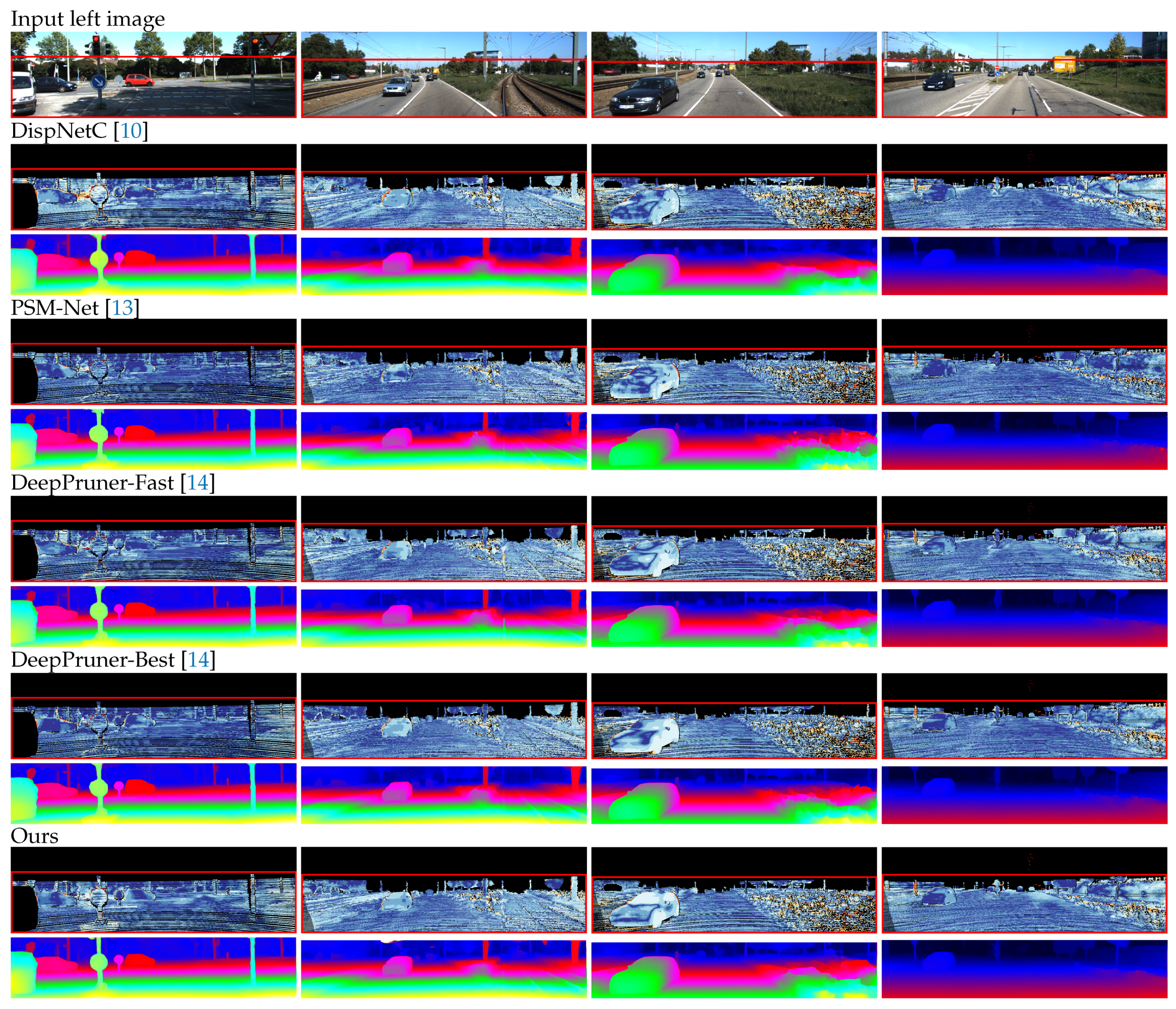

4.4. Results and Analysis on the KITTI-2015 Dataset

4.5. Effect of the Number of Shift S and the Number of Modules M

5. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

1. Sansoni, G.; Trebeschi, M.; Docchio, F. State-of-the-art and applications of 3D imaging sensors in industry, cultural heritage, medicine, and criminal investigation. Sensors 2009, 9, 568–601.

2. Chen, C.; Seff, A.; Kornhauser, A.; Xiao, J. Deepdriving: Learning affordance for direct perception in autonomous driving. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015; pp. 2722–2730.

3. Zenati, N.; Zerhouni, N. Dense stereo matching with application to augmented reality. In Proceedings of the IEEE International Conference on Signal Processing and Communications, Dubai, United Arab Emirates, 24–27 November 2007; pp. 1503–1506.

4. El Jamiy, F.; Marsh, R. Distance estimation in virtual reality and augmented reality: A survey. In Proceedings of the IEEE International Conference on Electro Information Technology, Brookings, SD, USA, 20–22 May 2019; pp. 063–068.

5. Huang, J.; Tang, S.; Liu, Q.; Tong, M. Stereo matching algorithm for autonomous positioning of underground mine robots. In Proceedings of the International Conference on Robots & Intelligent System, Changsha, China, 26–27 May 2018; pp. 40–43.

6. Scharstein, D.; Szeliski, R. A taxonomy and evaluation of dense two-frame stereo correspondence algorithms. Int. J. Comput. Vis. 2002, 47, 7–42.

7. Zbontar, J.; LeCun, Y. Stereo Matching by Training a Convolutional Neural Network to Compare Image Patches. J. Mach. Learn. Res. 2016, 17, 2287–2318.

8. Zagoruyko, S.; Komodakis, N. Learning to compare image patches via convolutional neural networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 4353–4361.

9. Luo, W.; Schwing, A.G.; Urtasun, R. Efficient deep learning for stereo matching. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 5695–5703.

10. Mayer, N.; Ilg, E.; Hausser, P.; Fischer, P.; Cremers, D.; Dosovitskiy, A.; Brox, T. A large dataset to train convolutional networks for disparity, optical flow, and scene flow estimation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 4040–4048.

11. Pang, J.; Sun, W.; Ren, J.S.; Yang, C.; Yan, Q. Cascade Residual Learning: A Two-Stage Convolutional Neural Network for Stereo Matching. In Proceedings of the IEEE International Conference on Computer Vision Workshops, Venice, Italy, 22–29 October 2017; pp. 887–895.

12. Kendall, A.; Martirosyan, H.; Dasgupta, S.; Henry, P.; Kennedy, R.; Bachrach, A.; Bry, A. End-to-end learning of geometry and context for deep stereo regression. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 66–75.

13. Chang, J.R.; Chen, Y.S. Pyramid Stereo Matching Network. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 5410–5418.

14. Duggal, S.; Wang, S.; Ma, W.C.; Hu, R.; Urtasun, R. Deeppruner: Learning efficient stereo matching via differentiable patchmatch. In Proceedings of the IEEE International Conference on Computer Vision, Seoul, Korea, 27 October–2 November 2019; pp. 4384–4393.

15. Wang, Y.; Lai, Z.; Huang, G.; Wang, B.H.; Van Der Maaten, L.; Campbell, M.; Weinberger, K.Q. Anytime stereo image depth estimation on mobile devices. In Proceedings of the 2019 International Conference on Robotics and Automation (ICRA), Montreal, QC, Canada, 20–24 May 2019; pp. 5893–5900.

16. Hirschmuller, H. Stereo processing by semiglobal matching and mutual information. IEEE Trans. Pattern Anal. Mach. Intell. 2007, 30, 328–341.

17. Birchfield, S.; Tomasi, C. Depth discontinuities by pixel-to-pixel stereo. Int. J. Comput. Vis. 1999, 35, 269–293.

18. Hamzah, R.A.; Abd Rahim, R.; Noh, Z.M. Sum of absolute differences algorithm in stereo correspondence problem for stereo matching in computer vision application. In Proceedings of the International Conference on Computer Science and Information Technology, Chengdu, China, 9–11 July 2010; Volume 1, pp. 652–657.

19. Hirschmuller, H.; Scharstein, D. Evaluation of cost functions for stereo matching. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Minneapolis, MN, USA, 17–22 June 2007; pp. 1–8.

20. Yoo, J.C.; Han, T.H. Fast normalized cross-correlation. Circuits Syst. Signal Process. 2009, 28, 819–843.

21. Zabih, R.; Woodfill, J. Non-parametric local transforms for computing visual correspondence. In Proceedings of the European Conference on Computer Vision, Stockholm, Sweden, 2–6 May 1994; pp. 151–158.

22. Geng, N.; Gou, Q. Adaptive color stereo matching based on rank transform. In Proceedings of the International Conference on Industrial Control and Electronics Engineering, Xi’an, China, 23–25 August 2012; pp. 1701–1704.

23. Lu, H.; Meng, H.; Du, K.; Sun, Y.; Xu, Y.; Zhang, Z. Post processing for dense stereo matching by iterative local plane fitting. In Proceedings of the IEEE International Conference on Software Engineering, Artificial Intelligence, Networking and Parallel/Distributed Computing, Las Vegas, NV, USA, 30 June–2 July 2014; pp. 1–6.

24. Xu, L.; Jia, J. Stereo matching: An outlier confidence approach. In Proceedings of the European Conference on Computer Vision, Marseille, France, 12–18 October 2008; pp. 775–787.

25. Aboali, M.; Abd Manap, N.; Yusof, Z.M.; Darsono, A.M. A Multistage Hybrid Median Filter Design of Stereo Matching Algorithms on Image Processing. J. Telecommun. Electron. Comput. Eng. 2018, 10, 133–141.

26. Ma, Z.; He, K.; Wei, Y.; Sun, J.; Wu, E. Constant time weighted median filtering for stereo matching and beyond. In Proceedings of the IEEE International Conference on Computer Vision, Sydney, Australia, 1–8 December 2013; pp. 49–56.

27. Sun, X.; Mei, X.; Jiao, S.; Zhou, M.; Wang, H. Stereo matching with reliable disparity propagation. In Proceedings of the 2011 International Conference on 3D Imaging, Modeling, Processing, Visualization and Transmission, Hangzhou, China, 16–19 May 2011; pp. 132–139.

28. Wu, W.; Zhu, H.; Yu, S.; Shi, J. Stereo matching with fusing adaptive support weights. IEEE Access 2019, 7, 61960–61974.

29. Zhang, K.; Lu, J.; Lafruit, G. Cross-based local stereo matching using orthogonal integral images. IEEE Trans. Circuits Syst. Video Technol. 2009, 19, 1073–1079.

30. Yang, G.; Zhao, H.; Shi, J.; Deng, Z.; Jia, J. Segstereo: Exploiting semantic information for disparity estimation. In Proceedings of the European Conference on Computer Vision, Munich, Germany, 8–14 September 2018; pp. 636–651.

31. Yin, Z.; Darrell, T.; Yu, F. Hierarchical discrete distribution decomposition for match density estimation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 6044–6053.

32. Tonioni, A.; Tosi, F.; Poggi, M.; Mattoccia, S.; Stefano, L.D. Real-time self-adaptive deep stereo. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 195–204.

33. Lu, C.; Uchiyama, H.; Thomas, D.; Shimada, A.; Taniguchi, R.i. Sparse cost volume for efficient stereo matching. Remote Sens. 2018, 10, 1844.

34. Tulyakov, S.; Ivanov, A.; Fleuret, F. Practical Deep Stereo (PDS): Toward applications-friendly deep stereo matching. In Proceedings of the International Conference on Neural Information Processing Systems, Montreal, QC, Canada, 3–8 December 2018; pp. 5875–5885.

35. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

36. Mehta, S.; Rastegari, M.; Caspi, A.; Shapiro, L.; Hajishirzi, H. Espnet: Efficient spatial pyramid of dilated convolutions for semantic segmentation. In Proceedings of the European Conference on Computer Vision, Munich, Germany, 8–14 September 2018; pp. 552–568.

37. He, K.; Zhang, X.; Ren, S.; Sun, J. Spatial pyramid pooling in deep convolutional networks for visual recognition. IEEE Trans. Pattern Anal. Mach. Intell. 2015, 37, 1904–1916.

38. Zhao, H.; Shi, J.; Qi, X.; Wang, X.; Jia, J. Pyramid scene parsing network. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 2881–2890.

39. Lin, M.; Chen, Q.; Yan, S. Network in network. arXiv 2013, arXiv:1312.4400.

40. Girshick, R. Fast r-cnn. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015; pp. 1440–1448.

41. Geiger, A.; Lenz, P.; Urtasun, R. Are we ready for autonomous driving? the kitti vision benchmark suite. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Providence, RI, USA, 16–21 June 2012; pp. 3354–3361.

42. Menze, M.; Geiger, A. Object scene flow for autonomous vehicles. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 3061–3070.

43. Russakovsky, O.; Deng, J.; Su, H.; Krause, J.; Satheesh, S.; Ma, S.; Huang, Z.; Karpathy, A.; Khosla, A.; Bernstein, M.; et al. Imagenet large scale visual recognition challenge. Int. J. Comput. Vis. 2015, 115, 211–252.

44. Kingma, D.P.; Ba, J. Adam: A method for stochastic optimization. In Proceedings of the International Conference on Learning Representations (Poster), San Diego, CA, USA, 7–9 May 2015.

45. Paszke, A.; Gross, S.; Massa, F.; Lerer, A.; Bradbury, J.; Chanan, G.; Killeen, T.; Lin, Z.; Gimelshein, N.; Antiga, L.; et al. PyTorch: An Imperative Style, High-Performance Deep Learning Library. Adv. Neural Inf. Process. Syst. 2019, 32, 8026–8037.

46. Song, X.; Zhao, X.; Hu, H.; Fang, L. Edgestereo: A context integrated residual pyramid network for stereo matching. In Proceedings of the Asian Conference on Computer Vision, Perth, Australia, 2–6 December 2018; pp. 20–35.

47. Bianco, S.; Cadene, R.; Celona, L.; Napoletano, P. Benchmark Analysis of Representative Deep Neural Network Architectures. IEEE Access 2018, 6, 64270–64277.

| Name | Layer Definition | Input Dimension | Output Dimension |
|---|---|---|---|
| / | | | H × W × 3 |
| Feature Extraction Network | | | |
| / | Input of Network | | |
| / | Output of Network | | × × 32 |
| SFF Network (SFF Module × M) | | | |
| / | Input of 1st SFF Module | | |
| Cost Volume | concat [, …, ] | × × 32, × × 32 | × × 128 |
| branch_1 | | × × 128 | × × 32 |
| branch_2 | [1 × 1, 128, 32] | × × 128 | × × 32 |
| | sum(branch_1, branch_2) | | × × 32 |
| / | Output of 1st SFF Module | | |
| Repeat M times. | | | |
| / | Output of SFF Module | | |
| Refine Network | | | |
| Initial Disparity Regression | | | |
| | Input of Initial Disparity Regression | | |
| Init_refine1 | | × × 32 | × × 1 |
| Init_refine2 | bilinear interpolation, [5 × 5, 1, 1] | × × 1 | × × 1 |
| Init_refine3 | bilinear interpolation, [5 × 5, 1, 1] | × × 1 | H × W × 1 |
| | Output of Initial Disparity Regression | | H × W × 1 |
| Disparity regression | | | |
| | Input of Disparity Regression | | |
| Init_refine2 | | | |
| Disp_refine1 | bilinear interpolation, [5 × 5, 32, 32] | × × 32 | × × 32 |
| | concat [Disp_refine1, Init_refine2] | | × × 33 |
| refinement | [3 × 3, 33, 32], [3 × 3, 32, 32] × 2, [3 × 3, 32, 16], [3 × 3, 16, 16] × 2, [1 × 1, 16, 1] | × × 33 | × × 1 |
| | sum(refinement, Init_refine2) | | × × 1 |
| Disp_refine2 | bilinear interpolation, [5 × 5, 1, 1] | × × 1 | H × W × 1 |
| | Output of Disparity Regression | | H × W × 1 |

| Method | GC-Net [12] | SegStereo [30] | CRL [11] | PDS-Net [34] | PSM-Net [13] | DeepPruner-Best [14] | DeepPruner-Fast [14] | DispNetC [10] | Ours (Initial) | Ours |
|---|---|---|---|---|---|---|---|---|---|---|
| EPE | 2.51 | 1.45 | 1.32 | 1.12 | 1.09 | 0.86 | 0.97 | 1.68 | 1.19 | 1.04 |

| Model | Feature Extraction | Network Component | Runtime | EPE |
|---|---|---|---|---|
| PSMNet [13] | CNNs with SPP | Stacked Hourglass | 379 ms | 1.09 |
| DeepPruner-Best [14] | | PM-1, CRP, PM-2, CA, RefineNet | 128 ms | 0.858 |
| Ours | | SFFNet - RefineNet | 45 ms | 1.04 |

| Method | Network | Runtime | Noc bg (%) | Noc fg (%) | Noc all (%) | All bg (%) | All fg (%) | All all (%) | Params | FLOPs |
|---|---|---|---|---|---|---|---|---|---|---|
| 3D conv. method | Content-CNN [9] | 1000 ms | 3.32 | 7.44 | 4.00 | 3.73 | 8.58 | 4.54 | 0.70 M | 978.19 G |
| 3D conv. method | MC-CNN [7] | 67,000 ms | 2.48 | 7.64 | 3.33 | 2.89 | 8.88 | 3.89 | 0.15 M | 526.28 G |
| 3D conv. method | GC-Net [12] | 900 ms | 2.02 | 3.12 | 2.45 | 2.21 | 6.16 | 2.87 | 2.86 M | 2510.96 G |
| 3D conv. method | CRL [11] | 470 ms | 2.32 | 3.68 | 2.36 | 2.48 | 3.59 | 2.67 | 78.21 M | 185.85 G |
| 3D conv. method | PDS-Net [34] | 500 ms | 2.09 | 3.68 | 2.36 | 2.29 | 4.05 | 2.58 | 2.22 M | 436.46 G |
| 3D conv. method | PSM-Net [13] | 410 ms | 1.71 | 4.31 | 2.14 | 1.86 | 4.62 | 2.32 | 5.36 M | 761.57 G |
| 3D conv. method | SegStereo [30] | 600 ms | 1.76 | 3.70 | 2.08 | 1.88 | 4.07 | 2.25 | 28.12 M | 30.50 G |
| 3D conv. method | EdgeStereo [46] | 700 ms | 1.72 | 3.41 | 2.00 | 1.87 | 3.61 | 2.16 | - | - |
| 3D conv. method | DeepPruner-Best [14] | 182 ms | 1.71 | 3.18 | 1.95 | 1.87 | 3.56 | 2.15 | 7.39 M | 383.49 G |
| 3D conv. method | DeepPruner-Fast [14] | 64 ms | 2.13 | 3.43 | 2.35 | 2.32 | 3.91 | 2.59 | 7.47 M | 153.77 G |
| 2D conv. method | MAD-Net [32] | 20 ms | 3.45 | 8.41 | 4.27 | 3.75 | 9.2 | 4.66 | 3.83 M | 55.66 G |
| 2D conv. method | DispNetC [10] | 60 ms | 4.11 | 3.72 | 4.05 | 4.32 | 4.41 | 4.34 | 42.43 M | 93.46 G |
| 2D conv. method | SCV-Net [33] | 360 ms | 2.04 | 4.28 | 2.41 | 2.22 | 4.53 | 2.61 | 2.32 M | 726.48 G |
| 2D conv. method | Ours | 76 ms | 2.50 | 5.44 | 2.99 | 2.69 | 6.23 | 3.28 | 4.61 M | 208.21 G |

| Params | Runtime | EPE |
|---|---|---|
| 4.6 M | 76 ms | 1.372 |
| 4.2 M | 69 ms | 1.431 |
| 3.9 M | 64 ms | 1.47 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Jeong, J.; Jeon, S.; Heo, Y.S. An Efficient Stereo Matching Network Using Sequential Feature Fusion. Electronics 2021, 10, 1045. https://doi.org/10.3390/electronics10091045
