CIRO: The Effects of Visually Diminished Real Objects on Human Perception in Handheld Augmented Reality

Abstract

1. Introduction

2. Related Work

2.1. Perceptual Issues in AR

2.2. Visual Stimuli Altering Our Perception

2.3. Dynamic Object Removal

2.4. Impacts of Diminishing Intensity on User Perception

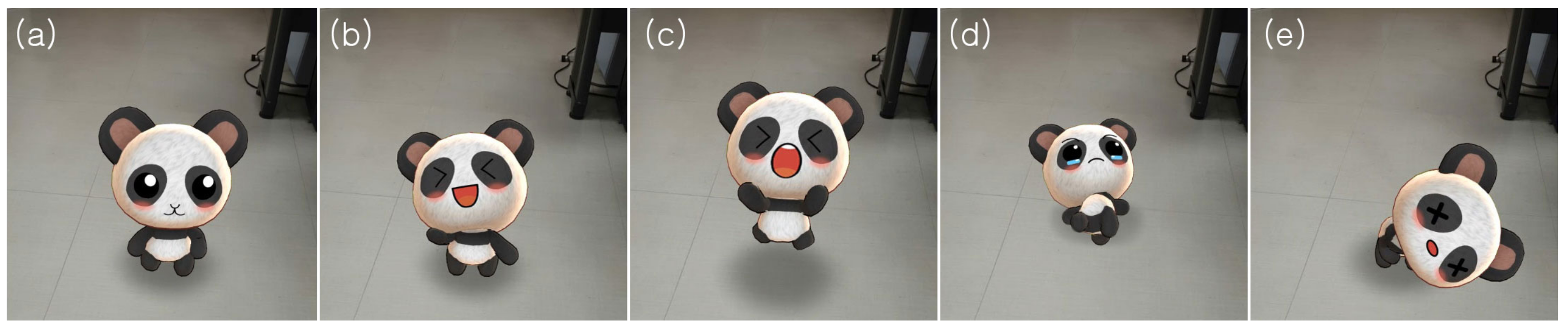

3. Basic Experimental Set-Up

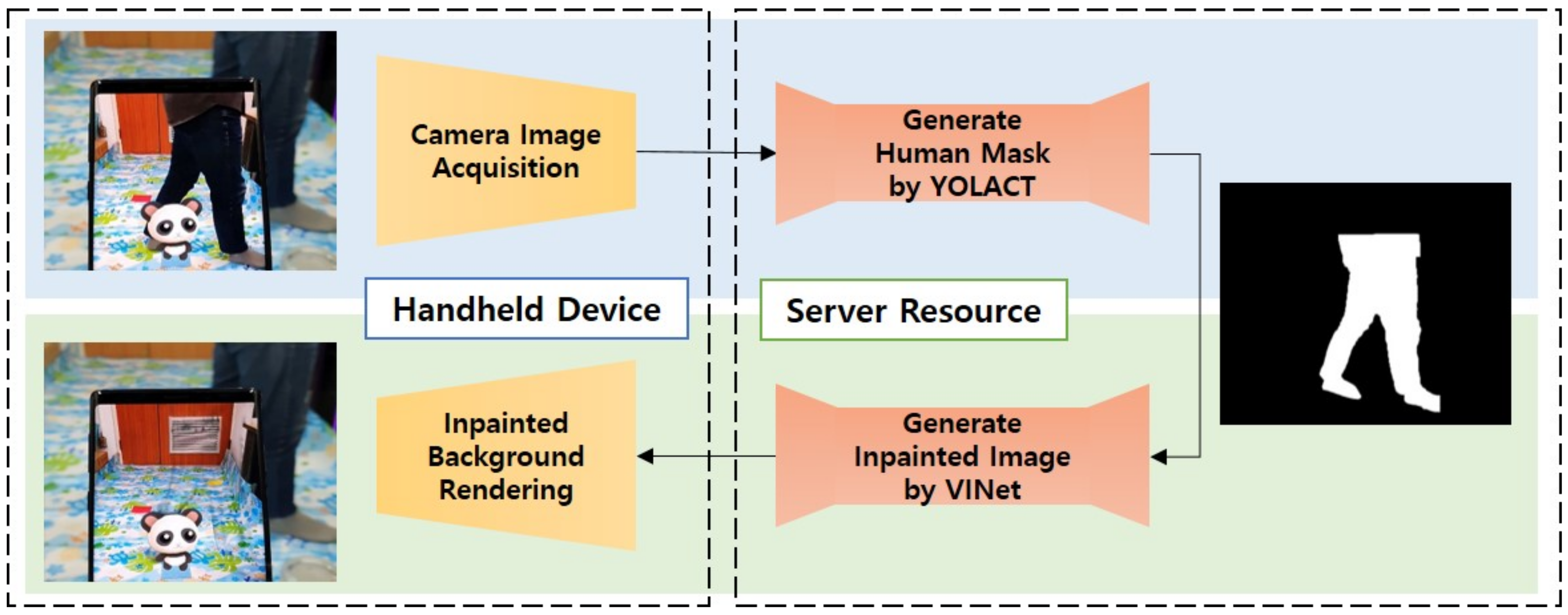

4. CIRO Diminishing System

4.1. System Design

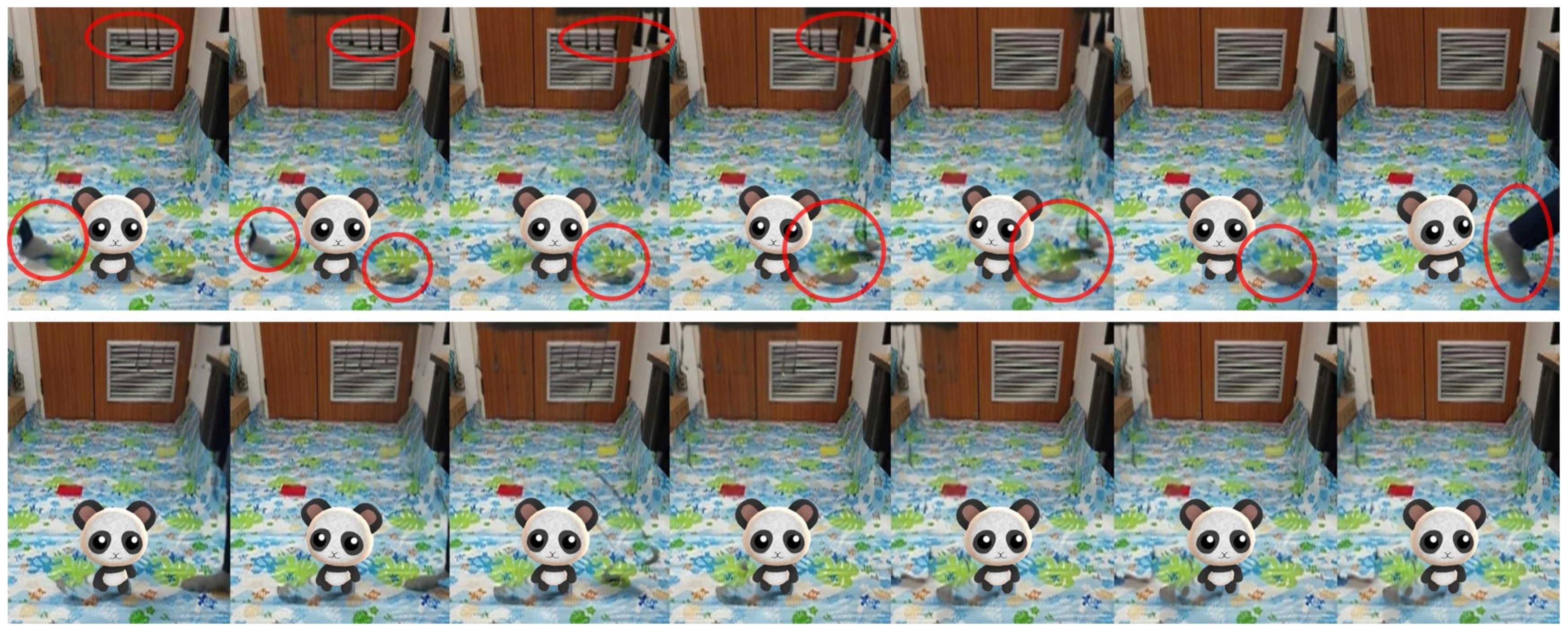

4.2. Inpainting Performance Evaluation

5. Experiment: Impacts of the Inpainting Method on User Perception

5.1. Study Design

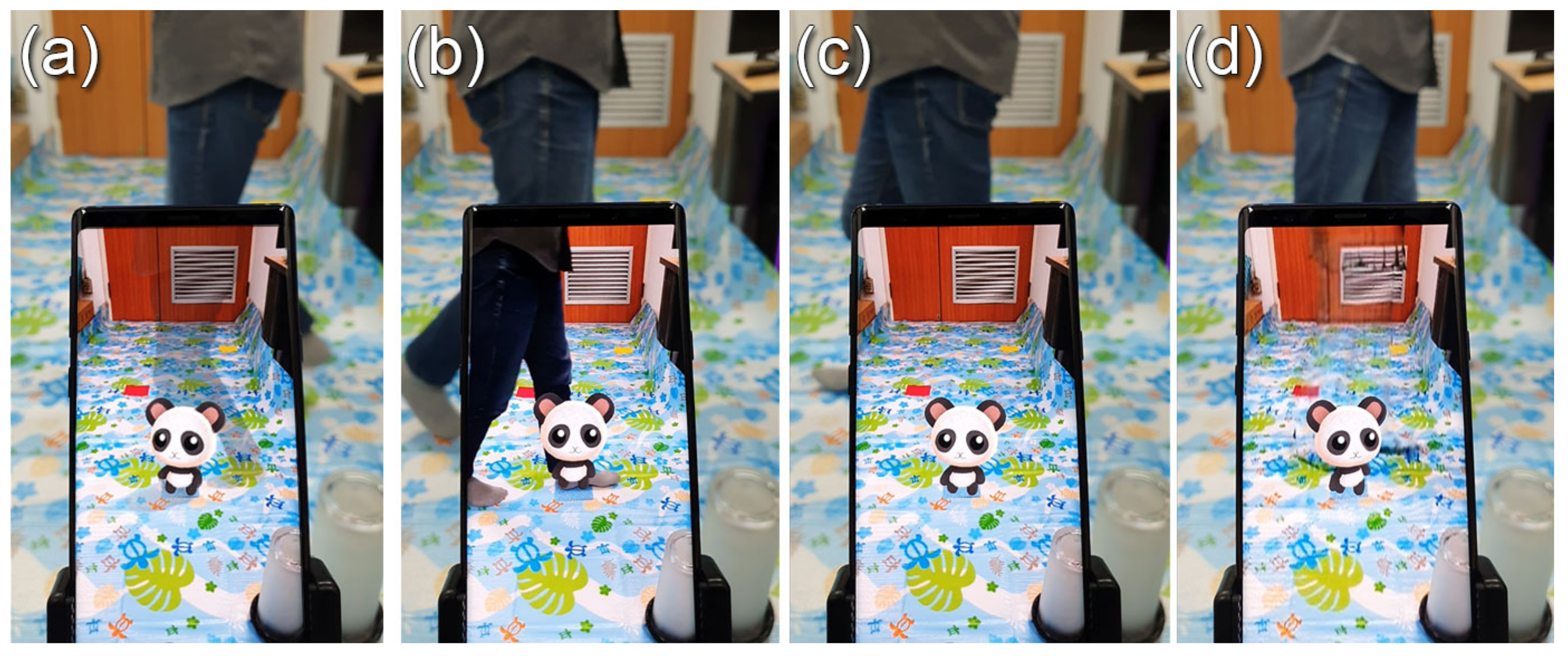

- Default/as-is (DF): The participant sees the pedestrian pass by both through the AR screen and with the naked eye in the real environment. No diminishing is applied (see Figure 1b). This condition serves as the baseline, in which many perceptual problems (e.g., depth perception and double view) [8] are likely to arise, as also demonstrated in our prior study [7].

- Transparent (TP): The CIRO (the pedestrian) is completely and perfectly removed from the AR scene and the vacated region is filled in, but the pedestrian remains visible in the real world to the naked eye (see Figure 1c). The removal is staged: the imagery is prepared offline using video editing tools. Note that, as in the prior study, this experiment was not conducted in situ but used offline video review (for more details, see Section 5.2). This condition serves as the ground truth of perfect CIRO removal.

- Inpainted (IP): The CIRO (the pedestrian) is removed from the AR scene and filled in using the system implementation described in the previous section, possibly with occasional visual artifacts (e.g., due to large, fast-moving pedestrians). The pedestrian is still visible in the real world to the naked eye (see Figure 1d).
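The three conditions differ only in which pixels reach the AR screen, and that difference can be sketched in a few lines. This is a minimal illustration, not the system described in Section 4: the `Condition` enum, the `compose_frame` helper, and the list-of-lists image representation are all hypothetical, and the segmentation mask and the inpainted estimate are assumed to come from some upstream step.

```python
from enum import Enum


class Condition(Enum):
    DF = "default"      # no diminishing applied
    TP = "transparent"  # staged, perfect removal prepared offline
    IP = "inpainted"    # removal by an inpainting system, may show artifacts


def compose_frame(condition, camera, staged_clean, mask, inpainted):
    """Return the frame shown on the AR screen for one condition.

    All images are equally sized 2D lists of pixel values. `mask` holds
    True where the pedestrian (the CIRO) was segmented. Hypothetical helper.
    """
    if condition is Condition.DF:
        # Baseline: the camera frame is shown unchanged.
        return [row[:] for row in camera]
    if condition is Condition.TP:
        # Ground truth: a clean frame prepared offline replaces the camera frame.
        return [row[:] for row in staged_clean]
    # IP: keep camera pixels outside the mask, use the inpainted estimate inside.
    return [
        [inp if m else cam for cam, m, inp in zip(cam_row, mask_row, inp_row)]
        for cam_row, mask_row, inp_row in zip(camera, mask, inpainted)
    ]
```

In this sketch the IP condition can only be as good as the mask and the inpainted estimate it is given, which is where the occasional artifacts mentioned above would enter.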

5.2. Video-Based Online Survey

5.3. Subjective Measures

5.4. Participants and Procedure

5.5. Hypotheses

5.6. Results

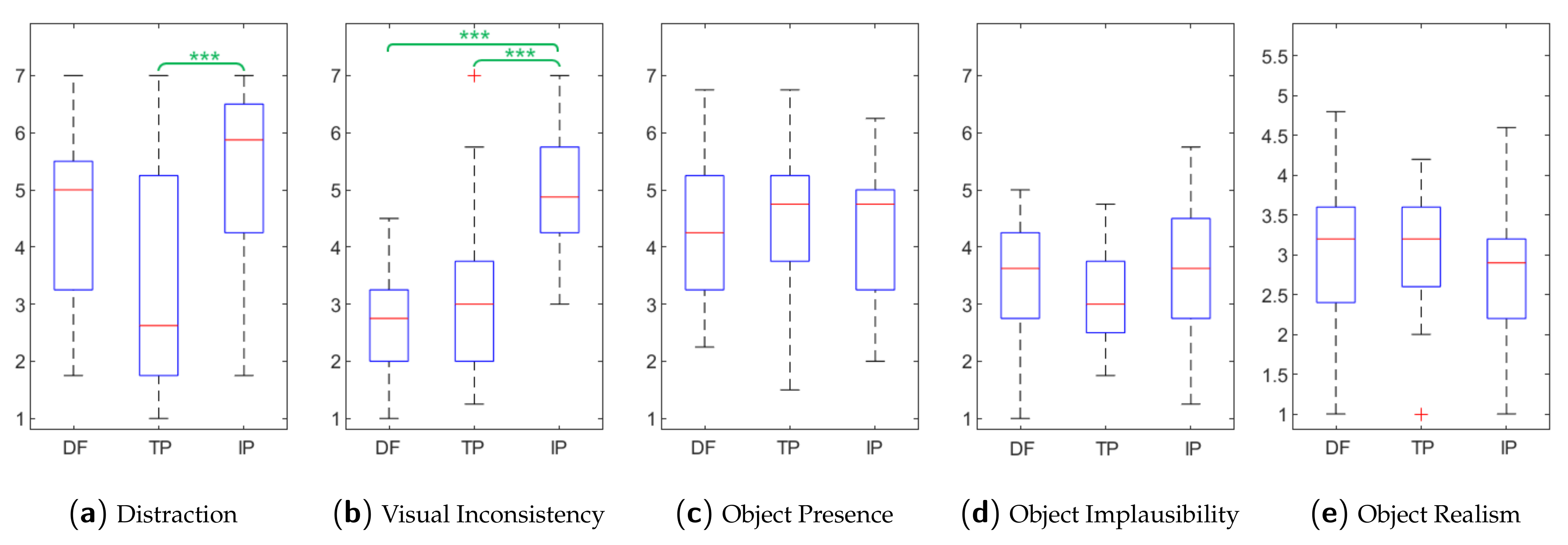

6. Discussion

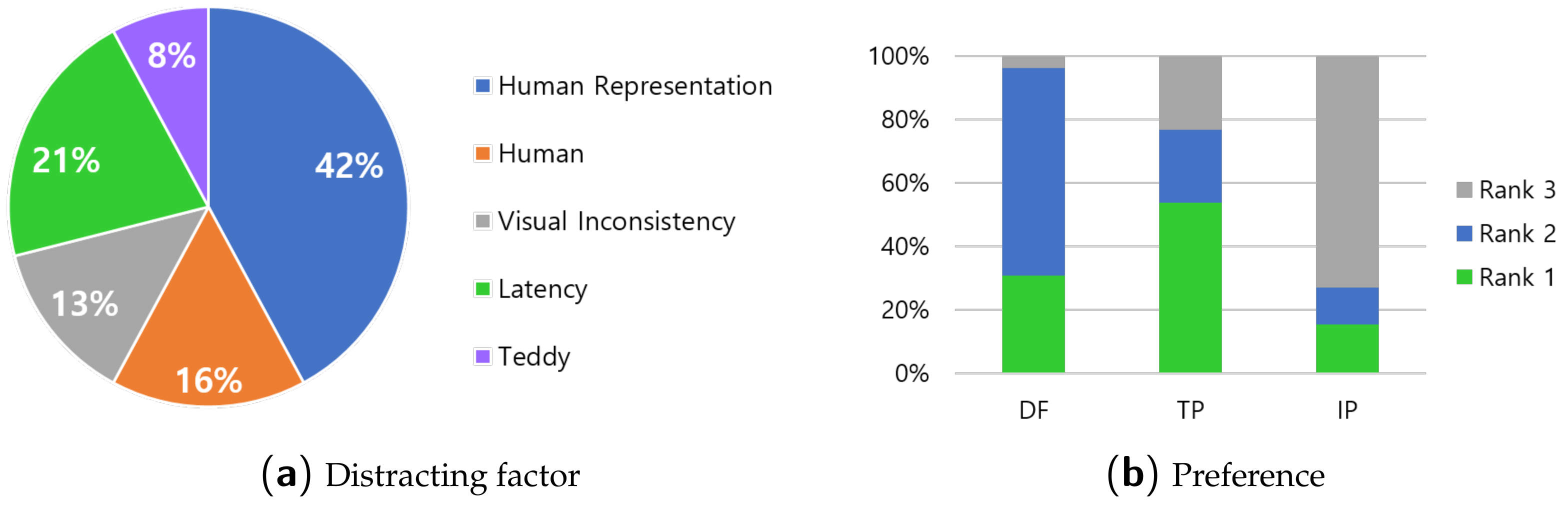

6.1. H1: Subjects Are Most Distracted by the CIRO among Various Factors

6.2. H2/H3: The More Diminished the CIRO Is, the Less Distracted the Subjects Will Feel, and This Is Their Preference

6.3. H4: Diminishing CIROs May Not Worsen the Visual Inconsistency

6.4. H5: CIRO Diminishments May Have Positive Effects on User Experience

6.5. Limitations

7. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Azuma, R.T. A Survey of Augmented Reality. Presence Teleoperators Virtual Environ. 1997, 6, 355–385.

2. Kim, H.; Ali, G.; Pastor, A.; Lee, M.; Kim, G.J.; Hwang, J.I. Silhouettes from Real Objects Enable Realistic Interactions with a Virtual Human in Mobile Augmented Reality. Appl. Sci. 2021, 11, 2763.

3. Taylor, A.V.; Matsumoto, A.; Carter, E.J.; Plopski, A.; Admoni, H. Diminished Reality for Close Quarters Robotic Telemanipulation. In Proceedings of the 2020 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Las Vegas, NV, USA, 25–29 October 2020; pp. 11531–11538.

4. Norouzi, N.; Kim, K.; Lee, M.; Schubert, R.; Erickson, A.; Bailenson, J.; Bruder, G.; Welch, G. Walking your virtual dog: Analysis of awareness and proxemics with simulated support animals in augmented reality. In Proceedings of the 2019 IEEE International Symposium on Mixed and Augmented Reality (ISMAR), Beijing, China, 14–18 October 2019; pp. 157–168.

5. Kang, H.; Lee, G.; Han, J. Obstacle detection and alert system for smartphone AR users. In Proceedings of the 25th ACM Symposium on Virtual Reality Software and Technology, Parramatta, Australia, 12–15 November 2019; pp. 1–11.

6. Kim, H.; Lee, M.; Kim, G.J.; Hwang, J.I. The Impacts of Visual Effects on User Perception with a Virtual Human in Augmented Reality Conflict Situations. IEEE Access 2021, 9, 35300–35312.

7. Kim, H.; Kim, T.; Lee, M.; Kim, G.J.; Hwang, J.I. Don’t Bother Me: How to Handle Content-Irrelevant Objects in Handheld Augmented Reality. In Proceedings of the 26th ACM Symposium on Virtual Reality Software and Technology, Virtual Event, 1–4 November 2020; ACM: New York, NY, USA, 2020; pp. 1–5.

8. Kruijff, E.; Swan, J.E.; Feiner, S. Perceptual issues in augmented reality revisited. In Proceedings of the 9th IEEE International Symposium on Mixed and Augmented Reality 2010: Science and Technology, ISMAR 2010, Seoul, Korea, 13–16 October 2010; pp. 3–12.

9. Berning, M.; Kleinert, D.; Riedel, T.; Beigl, M. A study of depth perception in hand-held augmented reality using autostereoscopic displays. In Proceedings of the 2014 IEEE International Symposium on Mixed and Augmented Reality (ISMAR), Munich, Germany, 10–12 September 2014; pp. 93–98.

10. Diaz, C.; Walker, M.; Szafir, D.A.; Szafir, D. Designing for depth perceptions in augmented reality. In Proceedings of the 2017 IEEE International Symposium on Mixed and Augmented Reality (ISMAR), Nantes, France, 9–13 October 2017; pp. 111–122.

11. Kim, K.; Bruder, G.; Welch, G. Exploring the effects of observed physicality conflicts on real-virtual human interaction in augmented reality. In Proceedings of the 23rd ACM Symposium on Virtual Reality Software and Technology (VRST ’17), Gothenburg, Sweden, 8–10 November 2017; ACM Press: New York, NY, USA, 2017; pp. 1–7.

12. Kim, K.; Boelling, L.; Haesler, S.; Bailenson, J.; Bruder, G.; Welch, G.F. Does a digital assistant need a body? The influence of visual embodiment and social behavior on the perception of intelligent virtual agents in AR. In Proceedings of the 2018 IEEE International Symposium on Mixed and Augmented Reality (ISMAR), Munich, Germany, 16–20 October 2018; pp. 105–114.

13. Lee, M.; Norouzi, N.; Bruder, G.; Wisniewski, P.; Welch, G. Mixed Reality Tabletop Gameplay: Social Interaction with a Virtual Human Capable of Physical Influence. IEEE Trans. Vis. Comput. Graph. 2019, 24.

14. Mori, S.; Ikeda, S.; Saito, H. A survey of diminished reality: Techniques for visually concealing, eliminating, and seeing through real objects. IPSJ Trans. Comput. Vis. Appl. 2017, 9, 1–14.

15. Čopič Pucihar, K.; Coulton, P.; Alexander, J. The use of surrounding visual context in handheld AR: Device vs. user perspective rendering. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems, Toronto, ON, Canada, 26 April–1 May 2014; pp. 197–206.

16. Sanches, S.R.R.; Tokunaga, D.M.; Silva, V.F.; Sementille, A.C.; Tori, R. Mutual occlusion between real and virtual elements in Augmented Reality based on fiducial markers. In Proceedings of the 2012 IEEE Workshop on the Applications of Computer Vision (WACV), Breckenridge, CO, USA, 9–11 January 2012; pp. 49–54.

17. Zhou, Y.; Ma, J.T.; Hao, Q.; Wang, H.; Liu, X.P. A novel optical see-through head-mounted display with occlusion and intensity matching support. In Proceedings of the International Conference on Technologies for E-Learning and Digital Entertainment, Hong Kong, China, 11–13 June 2007; pp. 56–62.

18. Cakmakci, O.; Ha, Y.; Rolland, J.P. A compact optical see-through head-worn display with occlusion support. In Proceedings of the Third IEEE and ACM International Symposium on Mixed and Augmented Reality, Arlington, VA, USA, 5 November 2004; pp. 16–25.

19. Kim, K.; Maloney, D.; Bruder, G.; Bailenson, J.N.; Welch, G.F. The effects of virtual human’s spatial and behavioral coherence with physical objects on social presence in AR. Comput. Animat. Virtual Worlds 2017, 28, 1–9.

20. Klein, G.; Drummond, T. Sensor fusion and occlusion refinement for tablet-based AR. In Proceedings of the Third IEEE and ACM International Symposium on Mixed and Augmented Reality, Arlington, VA, USA, 5 November 2004; pp. 38–47.

21. Tang, X.; Hu, X.; Fu, C.W.; Cohen-Or, D. GrabAR: Occlusion-aware Grabbing Virtual Objects in AR. In Proceedings of the 33rd Annual ACM Symposium on User Interface Software and Technology, Virtual Event, 20–23 October 2020; pp. 697–708.

22. Holynski, A.; Kopf, J. Fast Depth Densification for Occlusion-Aware Augmented Reality. ACM Trans. Graph. 2018, 37.

23. Runz, M.; Buffier, M.; Agapito, L. MaskFusion: Real-Time Recognition, Tracking and Reconstruction of Multiple Moving Objects. In Proceedings of the 2018 IEEE International Symposium on Mixed and Augmented Reality (ISMAR), Munich, Germany, 16–20 October 2018; pp. 10–20.

24. Godard, C.; Mac Aodha, O.; Firman, M.; Brostow, G.J. Digging into self-supervised monocular depth estimation. In Proceedings of the IEEE International Conference on Computer Vision, Seoul, Korea, 27–28 October 2019; pp. 3828–3838.

25. Luo, X.; Huang, J.B.; Szeliski, R.; Matzen, K.; Kopf, J. Consistent video depth estimation. ACM Trans. Graph. 2020, 39, 71:1–71:13.

26. Kim, K.; Bruder, G.; Welch, G.F. Blowing in the Wind: Increasing Copresence with a Virtual Human via Airflow Influence in Augmented Reality. In Proceedings of the International Conference on Artificial Reality and Telexistence Eurographics Symposium on Virtual Environments, Limassol, Cyprus, 7–9 November 2018; pp. 183–190.

27. Mohr, P.; Tatzgern, M.; Grubert, J.; Schmalstieg, D.; Kalkofen, D. Adaptive user perspective rendering for handheld augmented reality. In Proceedings of the 2017 IEEE Symposium on 3D User Interfaces (3DUI), Los Angeles, CA, USA, 18–19 March 2017; pp. 176–181.

28. Baričević, D.; Höllerer, T.; Sen, P.; Turk, M. User-perspective augmented reality magic lens from gradients. In Proceedings of the 20th ACM Symposium on Virtual Reality Software and Technology (VRST ’14), Edinburgh, UK, 11–13 November 2014; ACM Press: New York, NY, USA, 2014; pp. 87–96.

29. Capó-Aponte, J.E.; Temme, L.A.; Task, H.L.; Pinkus, A.R.; Kalich, M.E.; Pantle, A.J.; Rash, C.E.; Russo, M.; Letowski, T.; Schmeisser, E. Visual perception and cognitive performance. In Helmet-Mounted Displays: Sensation, Perception and Cognitive Issues; U.S. Army Aeromedical Research Laboratory: Fort Rucker, AL, USA, 2009; pp. 335–390.

30. Spence, C. Explaining the Colavita visual dominance effect. In Progress in Brain Research; Elsevier: Amsterdam, The Netherlands, 2009; Volume 176, pp. 245–258.

31. Weir, P.; Sandor, C.; Swoboda, M.; Nguyen, T.; Eck, U.; Reitmayr, G.; Day, A. BurnAR: Involuntary heat sensations in augmented reality. In Proceedings of the 2013 IEEE Virtual Reality (VR), Lake Buena Vista, FL, USA, 18–20 March 2013; pp. 43–46.

32. Erickson, A.; Bruder, G.; Wisniewski, P.J.; Welch, G.F. Examining Whether Secondary Effects of Temperature-Associated Virtual Stimuli Influence Subjective Perception of Duration. In Proceedings of the 2020 IEEE Conference on Virtual Reality and 3D User Interfaces (VR), Atlanta, GA, USA, 22–26 March 2020; pp. 493–499.

33. Blaga, A.D.; Frutos-Pascual, M.; Creed, C.; Williams, I. Too Hot to Handle: An Evaluation of the Effect of Thermal Visual Representation on User Grasping Interaction in Virtual Reality. In Proceedings of the 2020 CHI Conference on Human Factors in Computing Systems, Honolulu, HI, USA, 25–30 April 2020; pp. 1–16.

34. Von Castell, C.; Hecht, H.; Oberfeld, D. Measuring Perceived Ceiling Height in a Visual Comparison Task. Q. J. Exp. Psychol. 2017, 70, 516–532.

35. Punpongsanon, P.; Iwai, D.; Sato, K. SoftAR: Visually manipulating haptic softness perception in spatial augmented reality. IEEE Trans. Vis. Comput. Graph. 2015, 21, 1279–1288.

36. Kawai, N.; Sato, T.; Nakashima, Y.; Yokoya, N. Augmented reality marker hiding with texture deformation. IEEE Trans. Vis. Comput. Graph. 2016, 23, 2288–2300.

37. Korkalo, O.; Aittala, M.; Siltanen, S. Light-weight marker hiding for augmented reality. In Proceedings of the 2010 IEEE International Symposium on Mixed and Augmented Reality, Seoul, Korea, 13–16 October 2010; pp. 247–248.

38. Siltanen, S. Diminished reality for augmented reality interior design. Vis. Comput. 2017, 33, 193–208.

39. Guida, J.; Sra, M. Augmented Reality World Editor. In Proceedings of the 26th ACM Symposium on Virtual Reality Software and Technology; Association for Computing Machinery VRST’20, New York, NY, USA, 2–4 November 2020.

40. Queguiner, G.; Fradet, M.; Rouhani, M. Towards mobile diminished reality. In Proceedings of the 2018 IEEE International Symposium on Mixed and Augmented Reality Adjunct (ISMAR-Adjunct), Munich, Germany, 16–20 October 2018; pp. 226–231.

41. Buchmann, V.; Nilsen, T.; Billinghurst, M. Interaction with partially transparent hands and objects. In Proceedings of the 6th Australasian Conference on User Interface, Newcastle, Australia, 30 January–3 February 2005; Volume 40, pp. 17–20.

42. Okumoto, H.; Yoshida, M.; Umemura, K. Realizing Half-Diminished Reality from video stream of manipulating objects. In Proceedings of the 4th IGNITE Conference and 2016 International Conference on Advanced Informatics: Concepts, Theory and Application, ICAICTA 2016, Penang, Malaysia, 16–19 August 2016.

43. Enomoto, A.; Saito, H. Diminished reality using multiple handheld cameras. ACCV’07 Workshop on Multi-dimensional and Multi-view Image Processing. Citeseer 2007, 7, 130–135.

44. Hasegawa, K.; Saito, H. Diminished reality for hiding a pedestrian using hand-held camera. In Proceedings of the 2015 IEEE International Symposium on Mixed and Augmented Reality Workshops, Fukuoka, Japan, 29 September–3 October 2015; pp. 47–52.

45. Yagi, K.; Hasegawa, K.; Saito, H. Diminished reality for privacy protection by hiding pedestrians in motion image sequences using structure from motion. In Proceedings of the 2017 IEEE International Symposium on Mixed and Augmented Reality (ISMAR-Adjunct), Nantes, France, 9–13 October 2017; pp. 334–337.

46. Comaniciu, D.; Meer, P. Mean shift: A robust approach toward feature space analysis. IEEE Trans. Pattern Anal. Mach. Intell. 2002, 24, 603–619.

47. Chen, L.C.; Zhu, Y.; Papandreou, G.; Schroff, F.; Adam, H. Encoder-decoder with atrous separable convolution for semantic image segmentation. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 801–818.

48. Bolya, D.; Zhou, C.; Xiao, F.; Lee, Y.J. YOLACT: Real-time Instance Segmentation. In Proceedings of the 2019 IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Korea, 27–28 October 2019; pp. 9156–9165.

49. Huang, J.B.; Kang, S.B.; Ahuja, N.; Kopf, J. Temporally coherent completion of dynamic video. ACM Trans. Graph. (TOG) 2016, 35, 1–11.

50. Xu, R.; Li, X.; Zhou, B.; Loy, C.C. Deep flow-guided video inpainting. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 16–20 June 2019; pp. 3718–3727.

51. Newson, A.; Almansa, A.; Fradet, M.; Gousseau, Y.; Pérez, P. Video inpainting of complex scenes. SIAM J. Imaging Sci. 2014, 7, 1993–2019.

52. Kim, D.; Woo, S.; Lee, J.Y.; So Kweon, I. Deep Video Inpainting. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 16–20 June 2019; pp. 5792–5801.

53. Google. ARCore. Available online: https://developers.google.com/ar (accessed on 6 April 2021).

54. Lee, M.; Norouzi, N.; Bruder, G.; Wisniewski, P.J.; Welch, G.F. The physical-virtual table: Exploring the effects of a virtual human’s physical influence on social interaction. In Proceedings of the 24th ACM Symposium on Virtual Reality Software and Technology, Tokyo, Japan, 28 November–1 December 2018; pp. 1–11.

55. Wang, Z.; Bovik, A.C.; Sheikh, H.R.; Simoncelli, E.P. Image quality assessment: From error visibility to structural similarity. IEEE Trans. Image Process. 2004, 13, 600–612.

56. Oh, S.W.; Lee, S.; Lee, J.Y.; Kim, S.J. Onion-peel networks for deep video completion. In Proceedings of the IEEE International Conference on Computer Vision, Seoul, Korea, 27–28 October 2019; pp. 4403–4412.

57. Perazzi, F.; Pont-Tuset, J.; McWilliams, B.; Van Gool, L.; Gross, M.; Sorkine-Hornung, A. A benchmark dataset and evaluation methodology for video object segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 724–732.

58. Vorderer, P.; Wirth, W.; Gouveia, F.R.; Biocca, F.; Saari, T.; Jäncke, F.; Böcking, S.; Schramm, H.; Gysbers, A.; Hartmann, T.; et al. MEC Spatial Presence Questionnaire (MEC-SPQ): Short Documentation and Instructions for Application. Available online: https://www.researchgate.net/publication/318531435_MEC_spatial_presence_questionnaire_MEC-SPQ_Short_documentation_and_instructions_for_application (accessed on 9 April 2021).

59. Bartneck, C.; Kulić, D.; Croft, E.; Zoghbi, S. Measurement Instruments for the Anthropomorphism, Animacy, Likeability, Perceived Intelligence, and Perceived Safety of Robots. Int. J. Soc. Robot. 2009, 1, 71–81.

60. Sandor, C.; Cunningham, A.; Dey, A.; Mattila, V.V. An augmented reality X-ray system based on visual saliency. In Proceedings of the 2010 IEEE International Symposium on Mixed and Augmented Reality, Seoul, Korea, 13–16 October 2010; pp. 27–36.

61. Enns, J.T.; Di Lollo, V. What’s new in visual masking? Trends Cogn. Sci. 2000, 4, 345–352.

62. Blau, Y.; Michaeli, T. The Perception-Distortion Tradeoff. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 6228–6237.

| Measure | Cronbach | Friedman | Post-Hoc |
|---|---|---|---|
| Distraction | | | DF > TP ***, DF > STP *** |
| Visual Inconsistency | | | |
| Object Presence | | | DF < TP * |
| Object Implausibility | | | DF > TP ** |
| Object Realism | | | DF < TP *, DF < STP * |

| | Ours: SSIM | Ours: PSNR | VINet [52]: SSIM | VINet [52]: PSNR |
|---|---|---|---|---|
| DAVIS [57] | 0.87 | 23.88 | 0.86 | 23.37 |
| YOLACT [48] | 0.84 | 23.75 | 0.82 | 22.89 |
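For readers unfamiliar with the two metrics in this table, the sketch below shows how PSNR and a simplified SSIM are computed for a pair of images. It rests on several assumptions: the images are 8-bit grayscale and flattened to plain lists, the function names are illustrative, and `global_ssim` evaluates the SSIM formula of [55] once over the whole image rather than averaging it over local windows. It will therefore not reproduce the values reported above.

```python
import math


def psnr(reference, test, max_value=255.0):
    """Peak signal-to-noise ratio in dB between two equally sized pixel lists."""
    mse = sum((r - t) ** 2 for r, t in zip(reference, test)) / len(reference)
    if mse == 0:
        return math.inf  # identical images
    return 10.0 * math.log10(max_value ** 2 / mse)


def global_ssim(x, y, max_value=255.0):
    """SSIM formula applied once to the whole image (single-window simplification)."""
    n = len(x)
    mu_x = sum(x) / n
    mu_y = sum(y) / n
    var_x = sum((a - mu_x) ** 2 for a in x) / (n - 1)
    var_y = sum((b - mu_y) ** 2 for b in y) / (n - 1)
    cov = sum((a - mu_x) * (b - mu_y) for a, b in zip(x, y)) / (n - 1)
    # Stabilizing constants with the commonly used K1 = 0.01 and K2 = 0.03.
    c1 = (0.01 * max_value) ** 2
    c2 = (0.03 * max_value) ** 2
    numerator = (2 * mu_x * mu_y + c1) * (2 * cov + c2)
    denominator = (mu_x ** 2 + mu_y ** 2 + c1) * (var_x + var_y + c2)
    return numerator / denominator
```

Higher is better for both metrics: PSNR grows as the mean squared error shrinks, and SSIM approaches 1 as the two images become structurally identical.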

| Item | Statement or scale |
|---|---|
| Distraction | |
| DS1 | I was not able to entirely concentrate on the AR scene because of the person roaming around in the background. |
| DS2 | The passerby’s existence bothered me when observing and interacting with the virtual pet. |
| DS3 | To what extent were you aware of the person passing in the AR scene (or real environment)? |
| DS4 | I did not pay attention to the passerby. |
| Visual Inconsistency | |
| VI1 | The visual mismatch between outside and inside the screen of the passerby was obvious to me. |
| VI2 | The different visual representations of the passerby’s leg in the AR scene felt awkward. |
| VI3 | I did not notice the visual inconsistency between the AR scene and the real scene. |
| VI4 | The passerby’s leg (or body parts) in the AR scene was not felt awkward at all. |
| Object Presence | |
| OP1 | I felt like Teddy was a part of the environment. |
| OP2 | I felt like Teddy was actually there in the environment. |
| OP3 | It seemed as though Teddy was present in the environment. |
| OP4 | I felt as though Teddy was physically present in the environment. |
| Object Implausibility | |
| OI1 | Teddy’s movements/behavior in real space looked awkward. |
| OI2 | Teddy’s appearance was out of harmony with the background space. |
| OI3 | Teddy seemed to be in a different space than the background. |
| OI4 | I felt Teddy turned on the lamp. |
| Object Realism; Please rate your impression of the Teddy on these scales. | |
| OR1 | Fake (1) to Natural (5) |
| OR2 | Machine (1) to Animal (5) |
| OR3 | Unconscious (1) to Conscious (5) |
| OR4 | Artificial (1) to Lifelike (5) |
| OR5 | Moving rigidly (1) to Moving elegantly (5) |
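The internal consistency of multi-item scales such as these is commonly summarized with Cronbach's alpha. The sketch below is one way to compute it, under stated assumptions: the function names are illustrative, the response data would be one row per participant with one score per item, and negatively worded items (DS4, VI3, and VI4 read that way) are assumed to be reverse-coded first. The 1 to 5 range is confirmed only for the Object Realism items, so `low` and `high` are parameters rather than fixed values.

```python
from statistics import variance


def reverse_code(score, low=1, high=5):
    """Flip a score on a low..high scale, e.g. 1 <-> 5 and 2 <-> 4."""
    return low + high - score


def cronbach_alpha(responses):
    """Cronbach's alpha for `responses`: one row per participant, one score per item.

    alpha = k / (k - 1) * (1 - sum of item variances / variance of row totals)
    """
    k = len(responses[0])
    item_variances = [variance([row[i] for row in responses]) for i in range(k)]
    total_variance = variance([sum(row) for row in responses])
    return k / (k - 1) * (1 - sum(item_variances) / total_variance)
```

Alpha reaches 1 when all items rise and fall together across participants and drops as items diverge. The function needs at least two items and two participants, and a nonzero variance in the row totals.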

| Measure | Cronbach | Friedman | Post-Hoc |
|---|---|---|---|
| Distraction | | | TP < IP *** |
| Visual Inconsistency | | | TP < IP ***, DF < IP *** |
| Object Presence | | | |
| Object Implausibility | | | |
| Object Realism | | | |
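The Friedman column refers to a rank-based test for repeated measures, which fits a design where every participant rates all three conditions (DF, TP, IP). The sketch below computes the Friedman chi-square statistic from scratch so the mechanics are visible. The function names and data layout are illustrative, and the statistic is given without the tie correction that statistical packages usually apply, so results can differ slightly from library output when a participant gives tied ratings.

```python
def average_ranks(values):
    """Rank values from 1 (smallest), giving tied values their mean rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    pos = 0
    while pos < len(order):
        end = pos
        # Extend the run while the next value ties with the current one.
        while end + 1 < len(order) and values[order[end + 1]] == values[order[pos]]:
            end += 1
        mean_rank = (pos + end) / 2 + 1
        for j in range(pos, end + 1):
            ranks[order[j]] = mean_rank
        pos = end + 1
    return ranks


def friedman_statistic(scores):
    """Friedman chi-square for `scores`: one row per participant, one column per condition."""
    n = len(scores)
    k = len(scores[0])
    rank_sums = [0.0] * k
    for row in scores:
        for j, rank in enumerate(average_ranks(row)):
            rank_sums[j] += rank
    return 12.0 / (n * k * (k + 1)) * sum(r * r for r in rank_sums) - 3.0 * n * (k + 1)
```

The statistic is compared against a chi-square distribution with k - 1 degrees of freedom. In practice a library routine such as `scipy.stats.friedmanchisquare` would typically be used, followed by pairwise post-hoc comparisons when the omnibus test is significant.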

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Kim, H.; Kim, T.; Lee, M.; Kim, G.J.; Hwang, J.-I. CIRO: The Effects of Visually Diminished Real Objects on Human Perception in Handheld Augmented Reality. Electronics 2021, 10, 900. https://doi.org/10.3390/electronics10080900
