Movement Tube Detection Network Integrating 3D CNN and Object Detection Framework to Detect Fall

Abstract

1. Introduction

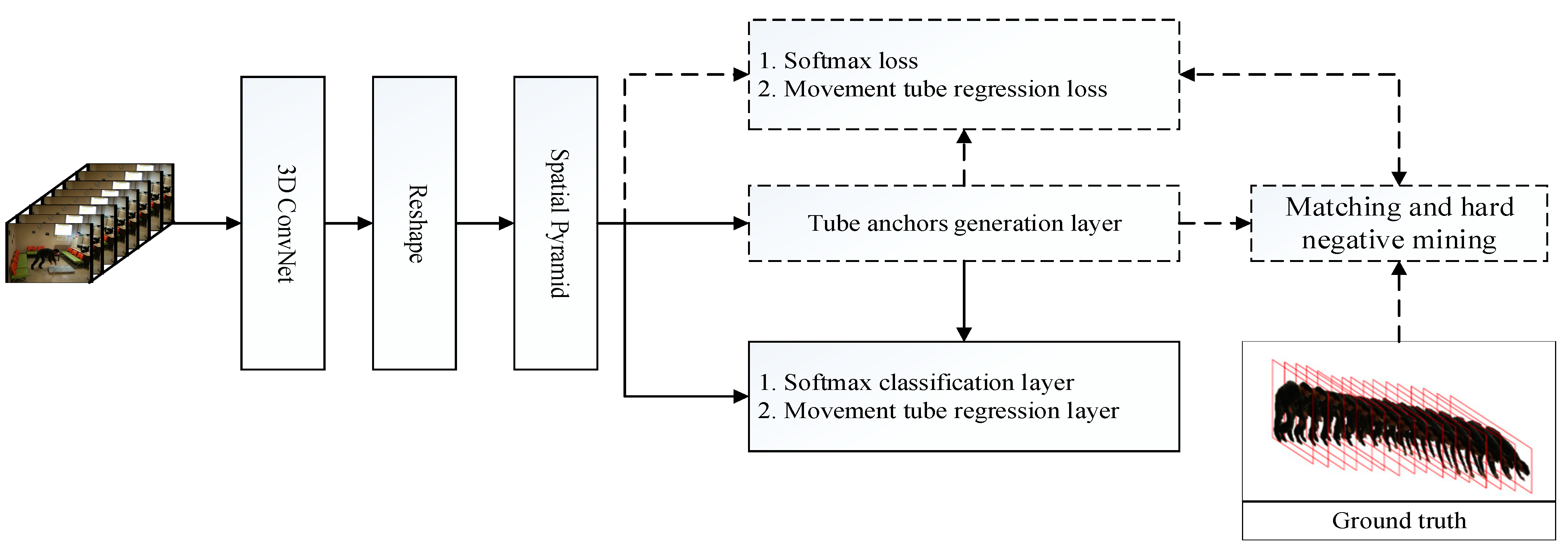

- A movement tube detection network is proposed to detect a human fall in the spatial and temporal dimensions simultaneously. Specifically, the network consists of a 3D convolutional neural network integrated with a tube anchor generation layer, a softmax classification layer, and a movement tube regression layer. Tested on the Le2i fall detection dataset with 3DIOU-0.25 and 3DIOU-0.5, the proposed algorithm outperforms the state-of-the-art fall detection methods.

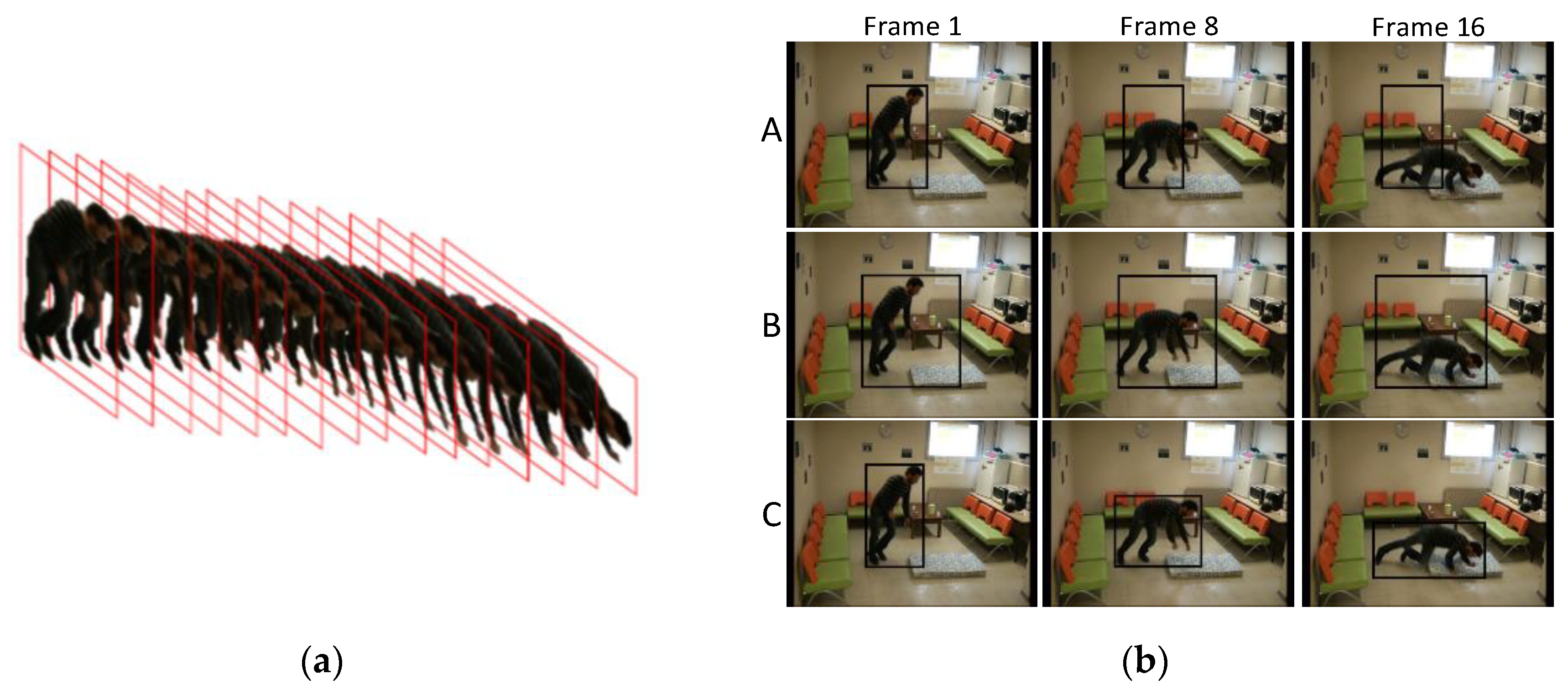

- To reduce the impact of irrelevant information during a human fall, the constrained movement tube is used to encapsulate the person closely. The movement tube detection network can detect a fall even under interpersonal interference and partial occlusion because the constrained movement tube avoids peripheral interference.

- A large-scale spatio-temporal (LSST) fall detection dataset is collected. The dataset has three main characteristics: large scale, annotation, and diversity of posture and viewpoint. It covers diverse postures during the fall process as well as diverse relative postures and distances between the falling person and the camera. The LSST fall detection dataset aims to provide a benchmark that encourages further research into human fall detection in both spatial and temporal dimensions.

2. Related Work

3. The Overview of Proposed Method

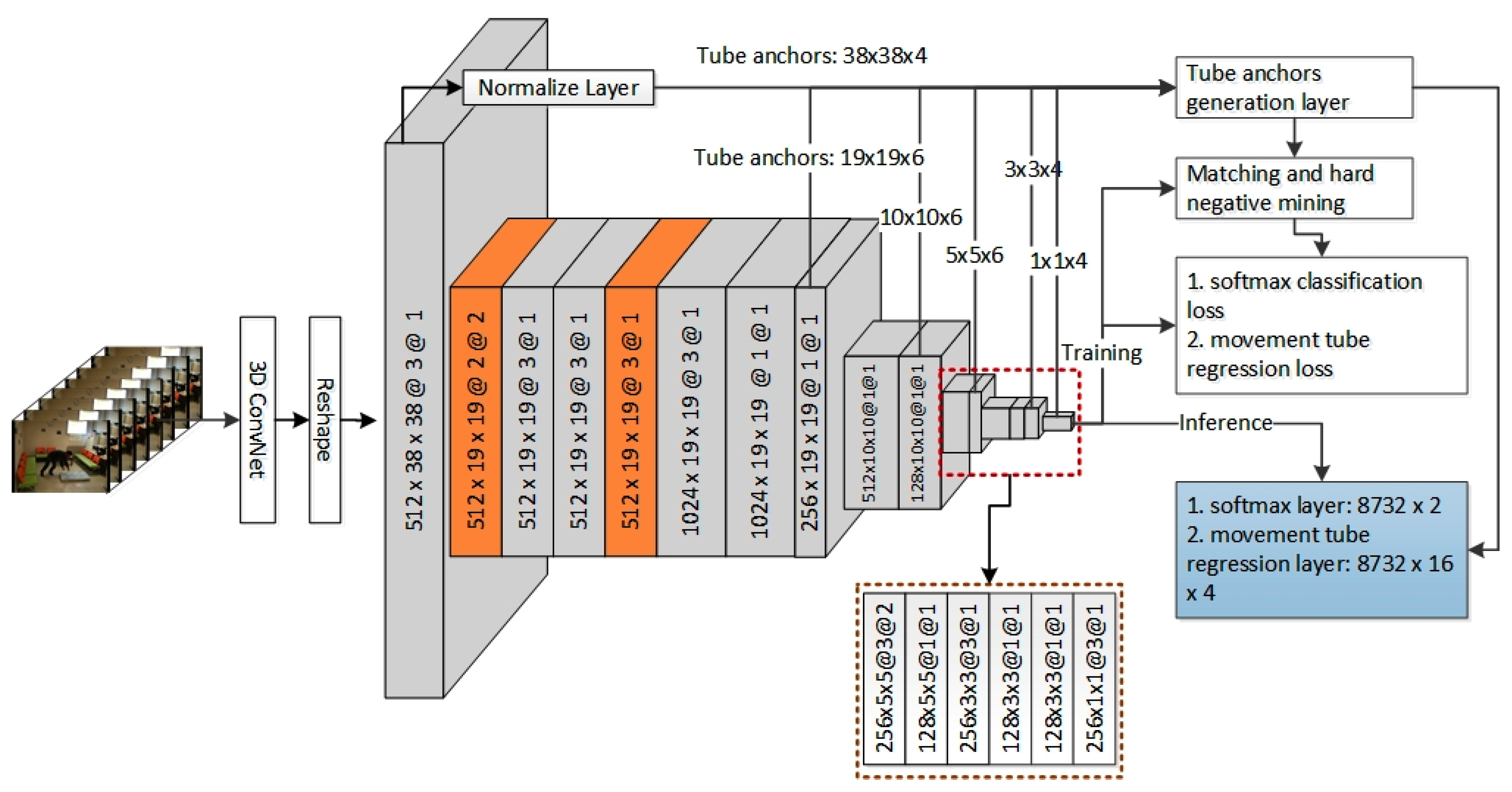

4. The Movement Tube Detection Network

4.1. Constrained Movement Tube

4.2. The Structure of the Proposed Neural Network

4.3. Loss Function

4.4. Data Augmentation

5. Post-Processing and Evaluation Metrics

5.1. Post-Processing

| Algorithm 1. The adjacent movement tube linking algorithm. |
|---|
| 1: Input: all sequences of movement tubes of every frame of the video, in which B is the j-th sequence of movement tubes of the i-th frame and L is the length of the video. |
| 2: Output: Tube_list // The output Tube_list is a list of the complete constrained movement tubes. |
| 3: List<List> CTs // CTs are the current unfinished tubes. |
| 4: for (i = 1; i <= L; i++) |
| 5: for (j = 1; j <= number of sequences of the i-th frame; j++) |
| 6: Compute the 3DIOU between B and all current unfinished CTs; |
| 7: Search for the pair <B, CT> corresponding to the max 3DIOU; |
| 8: If the max 3DIOU is beyond a threshold |
| 9: B is added to CT; |
| 10: Else |
| 11: CT is added to the complete movement tube list Tube_list; |
| 12: B is a new unfinished tube, add B to CTs; |
| 13: return Tube_list; |
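The linking procedure of Algorithm 1 can be sketched in Python as follows. This is a minimal sketch under stated assumptions, not the authors' implementation: each movement tube segment is a mapping from frame index to a box `(x1, y1, x2, y2)`, adjacent clips are assumed to share some frames, the 3DIOU is taken as the volume IoU over those shared frames and is measured against the most recent segment of each unfinished tube, and tubes still open after the last frame are flushed into the result. The names `overlap_iou_3d` and `link_tubes` are hypothetical.

```python
from typing import Dict, List, Optional, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2), assumed layout
Segment = Dict[int, Box]                 # frame index -> box for one clip


def _area(b: Box) -> float:
    return max(0.0, b[2] - b[0]) * max(0.0, b[3] - b[1])


def _intersection(a: Box, b: Box) -> float:
    w = min(a[2], b[2]) - max(a[0], b[0])
    h = min(a[3], b[3]) - max(a[1], b[1])
    return max(0.0, w) * max(0.0, h)


def overlap_iou_3d(a: Segment, b: Segment) -> float:
    """Volume IoU over the frames both segments cover (an assumed definition)."""
    shared = a.keys() & b.keys()
    inter = sum(_intersection(a[f], b[f]) for f in shared)
    union = sum(_area(a[f]) + _area(b[f]) for f in shared) - inter
    return inter / union if union > 0 else 0.0


def link_tubes(steps: List[List[Segment]], threshold: float) -> List[List[Segment]]:
    tube_list: List[List[Segment]] = []  # complete tubes (step 2)
    cts: List[List[Segment]] = []        # current unfinished tubes (step 3)
    for candidates in steps:             # step 4
        for b in candidates:             # step 5
            # Step 6: score B against the latest segment of every open tube.
            scores = [overlap_iou_3d(b, ct[-1]) for ct in cts]
            # Step 7: index of the best-matching open tube, if any exists.
            best: Optional[int] = max(
                range(len(scores)), key=scores.__getitem__, default=None
            )
            if best is not None and scores[best] > threshold:  # step 8
                cts[best].append(b)                            # step 9
            else:
                if best is not None:
                    tube_list.append(cts.pop(best))            # step 11
                cts.append([b])                                # step 12
    tube_list.extend(cts)  # assumption: flush tubes still open at the end
    return tube_list


# Toy input: two overlapping clips of one person, then a distant detection.
same = (0.0, 0.0, 10.0, 10.0)
shifted = (1.0, 0.0, 11.0, 10.0)
far = (100.0, 100.0, 110.0, 110.0)
steps = [
    [{f: same for f in range(0, 4)}],
    [{f: shifted for f in range(2, 6)}],
    [{f: far for f in range(4, 8)}],
]
tubes = link_tubes(steps, threshold=0.5)
```

In this toy input the first two segments are linked into one tube and the distant detection starts a second one. Step 11 is implemented literally, so a failed match closes the best-scoring open tube; a multi-person setting might call for a different closing rule.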

5.2. Evaluation Metrics

6. Dataset

6.1. Existing Fall Detection Datasets

6.2. LSST

7. Experiments and Discussion

7.1. Implementation Details

7.2. Ablation Study

7.3. Comparison to the State of the Art

7.4. The Result of the Proposed Method

8. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. Yao, C.; Hu, J.; Min, W.; Deng, Z.; Zou, S.; Min, W. A novel real-time fall detection method based on head segmentation and convolutional neural network. J. Real-Time Image Process. 2020, 17, 1939–1949.

2. Ren, L.; Peng, Y. Research of Fall Detection and Fall Prevention Technologies: A Systematic Review. IEEE Access 2019, 7, 77702–77722.

3. World Health Organization. WHO Global Report on Falls Prevention in Older Age; World Health Organization: Geneva, Switzerland, 2008; ISBN 978-92-4-156353-6.

4. Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 1137–1149.

5. Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.-Y.; Berg, A.C. SSD: Single Shot MultiBox Detector. In Proceedings of the Computer Vision—ECCV 2016, Amsterdam, The Netherlands, 11–14 October 2016; Leibe, B., Matas, J., Sebe, N., Welling, M., Eds.; Springer International Publishing: Berlin/Heidelberg, Germany, 2016; pp. 21–37.

6. Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You Only Look Once: Unified, Real-Time Object Detection. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; IEEE: Piscataway, NJ, USA, 2016; pp. 779–788.

7. Ji, S.; Xu, W.; Yang, M.; Yu, K. 3D Convolutional Neural Networks for Human Action Recognition. IEEE Trans. Pattern Anal. Mach. Intell. 2013, 35, 221–231.

8. Singh, G.; Saha, S.; Sapienza, M.; Torr, P.; Cuzzolin, F. Online Real-Time Multiple Spatiotemporal Action Localisation and Prediction. In Proceedings of the 2017 IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; IEEE: Piscataway, NJ, USA, 2017; pp. 3657–3666.

9. Kalogeiton, V.; Weinzaepfel, P.; Ferrari, V.; Schmid, C. Action Tubelet Detector for Spatio-Temporal Action Localization. In Proceedings of the 2017 IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; pp. 4415–4423.

10. Yang, H.; Liu, L.; Min, W.; Yang, X.; Xiong, X. Driver Yawning Detection Based on Subtle Facial Action Recognition. IEEE Trans. Multimed. 2021, 23, 572–583.

11. Soomro, K.; Zamir, A.R.; Shah, M. UCF101: A Dataset of 101 Human Actions Classes From Videos in The Wild. arXiv 2012, arXiv:1212.0402.

12. Dhiman, C.; Vishwakarma, D.K. A review of state-of-the-art techniques for abnormal human activity recognition. Eng. Appl. Artif. Intell. 2019, 77, 21–45.

13. Yu, X. Approaches and principles of fall detection for elderly and patient. In Proceedings of the HealthCom 2008—10th International Conference on e-Health Networking, Applications and Services, Singapore, 7–9 July 2008; IEEE: Piscataway, NJ, USA, 2008; pp. 42–47.

14. Wang, Z.; Ramamoorthy, V.; Gal, U.; Guez, A. Possible Life Saver: A Review on Human Fall Detection Technology. Robotics 2020, 9, 55.

15. Augustyniak, P.; Smoleń, M.; Mikrut, Z.; Kańtoch, E. Seamless Tracing of Human Behavior Using Complementary Wearable and House-Embedded Sensors. Sensors 2014, 14, 7831–7856.

16. Medrano, C.; Plaza, I.; Igual, R.; Sánchez, Á.; Castro, M. The Effect of Personalization on Smartphone-Based Fall Detectors. Sensors 2016, 16, 117.

17. Luque, R.; Casilari, E.; Morón, M.-J.; Redondo, G. Comparison and Characterization of Android-Based Fall Detection Systems. Sensors 2014, 14, 18543–18574.

18. Mubashir, M.; Shao, L.; Seed, L. A survey on fall detection: Principles and approaches. Neurocomputing 2013, 100, 144–152.

19. Min, W.; Zou, S.; Li, J. Human fall detection using normalized shape aspect ratio. Multimed. Tools Appl. 2018, 78, 14331–14353.

20. Alhimale, L.; Zedan, H.; Al-Bayatti, A. The implementation of an intelligent and video-based fall detection system using a neural network. Appl. Soft Comput. 2014, 18, 59–69.

21. Núñez-Marcos, A.; Azkune, G.; Arganda-Carreras, I. Vision-Based Fall Detection with Convolutional Neural Networks. Wirel. Commun. Mob. Comput. 2017, 2017, 9474806.

22. Charfi, I.; Miteran, J.; Dubois, J.; Atri, M.; Tourki, R. Definition and Performance Evaluation of a Robust SVM Based Fall Detection Solution. In Proceedings of the 2012 Eighth International Conference on Signal Image Technology and Internet Based Systems, Naples, Italy, 25–29 November 2012; IEEE: Piscataway, NJ, USA, 2012; pp. 218–224.

23. Zerrouki, N.; Houacine, A. Combined curvelets and hidden Markov models for human fall detection. Multimed. Tools Appl. 2018, 77, 6405–6424.

24. Russakovsky, O.; Deng, J.; Su, H.; Krause, J.; Satheesh, S.; Ma, S.; Huang, Z.; Karpathy, A.; Khosla, A.; Bernstein, M.; et al. ImageNet Large Scale Visual Recognition Challenge. Int. J. Comput. Vis. 2015, 115, 211–252.

25. Krizhevsky, A.; Sutskever, I.; Hinton, G.E. ImageNet classification with deep convolutional neural networks. Commun. ACM 2017, 60, 84–90.

26. Simonyan, K.; Zisserman, A. Very Deep Convolutional Networks for Large-Scale Image Recognition. arXiv 2014, arXiv:1409.1556.

27. Sanchez, J.; Perronnin, F. High-dimensional signature compression for large-scale image classification. In Proceedings of the CVPR 2011, Colorado Springs, CO, USA, 20–25 June 2011; IEEE: Piscataway, NJ, USA, 2011; pp. 1665–1672.

28. Simonyan, K.; Zisserman, A. Two-Stream Convolutional Networks for Action Recognition in Videos. In Advances in Neural Information Processing Systems 27; Ghahramani, Z., Welling, M., Cortes, C., Lawrence, N.D., Weinberger, K.Q., Eds.; Curran Associates, Inc.: Red Hook, NY, USA, 2014; pp. 568–576.

29. Donahue, J.; Hendricks, L.A.; Rohrbach, M.; Venugopalan, S.; Guadarrama, S.; Saenko, K.; Darrell, T. Long-Term Recurrent Convolutional Networks for Visual Recognition and Description. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 677–691.

30. Shi, X.; Chen, Z.; Wang, H.; Yeung, D.-Y.; Wong, W.; Woo, W. Convolutional LSTM Network: A Machine Learning Approach for Precipitation Nowcasting. In Proceedings of the 28th International Conference on Neural Information Processing Systems—Volume 1, Montreal, QC, Canada, 8–13 December 2014; MIT Press: Cambridge, MA, USA, 2015; pp. 802–810.

31. Tran, D.; Bourdev, L.; Fergus, R.; Torresani, L.; Paluri, M. Learning Spatiotemporal Features with 3D Convolutional Networks; IEEE: Piscataway, NJ, USA, 2015; pp. 4489–4497.

32. Asif, U.; Mashford, B.; Cavallar, S.V.; Yohanandan, S.; Roy, S.; Tang, J.; Harrer, S. Privacy Preserving Human Fall Detection using Video Data. Proceedings of the Machine Learning for Health Workshop. 2020. Available online: http://proceedings.mlr.press/v116/asif20a.html (accessed on 21 November 2020).

33. Fan, Y.; Levine, M.D.; Wen, G.; Qiu, S. A deep neural network for real-time detection of falling humans in naturally occurring scenes. Neurocomputing 2017, 260, 43–58.

34. Kong, Y.; Huang, J.; Huang, S.; Wei, Z.; Wang, S. Learning spatiotemporal representations for human fall detection in surveillance video. J. Vis. Commun. Image Represent. 2019, 59, 215–230.

35. Lu, N.; Wu, Y.; Feng, L.; Song, J. Deep Learning for Fall Detection: Three-Dimensional CNN Combined With LSTM on Video Kinematic Data. IEEE J. Biomed. Health Inform. 2019, 23, 314–323.

36. Girshick, R. Fast R-CNN. In Proceedings of the 2015 IEEE International Conference on Computer Vision (ICCV), Santiago, Chile, 7–13 December 2015; IEEE: Santiago, Chile, 2015; pp. 1440–1448.

37. Neubeck, A.; Van Gool, L. Efficient Non-Maximum Suppression. In Proceedings of the 18th International Conference on Pattern Recognition (ICPR’06), Hong Kong, China, 20–24 August 2006; Volume 3, pp. 850–855.

38. Charfi, I.; Miteran, J.; Dubois, J.; Atri, M.; Tourki, R. Optimised spatio-temporal descriptors for real-time fall detection: Comparison of SVM and Adaboost based classification. J. Electron. Imaging 2013, 22, 17.

39. Auvinet, E.; Rougier, C.; Meunier, J.; St-Arnaud, A.; Rousseau, J. Multiple Cameras Fall Data Set; Technical Report; DIRO-Université de Montréal: Montreal, QC, Canada, 2010; Volume 24.

40. Jia, Y.; Shelhamer, E.; Donahue, J.; Karayev, S.; Long, J.; Girshick, R.; Guadarrama, S.; Darrell, T. Caffe: Convolutional Architecture for Fast Feature Embedding. arXiv 2014, arXiv:1408.5093.

| Name | Input | Conv1a | Pool1 | Conv2a | Pool2 | Conv3a | Pool3 | Conv4a | Conv4b | Pool4 |
|---|---|---|---|---|---|---|---|---|---|---|
| Stride | - | 1 × 1 × 1 | 2 × 1 × 1 | 1 × 1 × 1 | 2 × 2 × 2 | 1 × 1 × 1 | 2 × 2 × 2 | 1 × 1 × 1 | 1 × 1 × 1 | 2 × 1 × 1 |
| F-size | 3 | 64 | 64 | 128 | 128 | 256 | 256 | 512 | 512 | 512 |
| T-size | 16 | 16 | 8 | 8 | 4 | 4 | 2 | 2 | 2 | 1 |
| S-size | 300 × 300 | 300 × 300 | 300 × 300 | 300 × 300 | 150 × 150 | 150 × 150 | 75 × 75 | 75 × 75 | 75 × 75 | 38 × 38 |
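The T-size and S-size rows of the table above can be traced layer by layer from the strides. The sketch below assumes that each stride is ordered temporal × height × width, that padding preserves size so only the stride changes it, and that sizes are rounded up; none of these are stated in the table, and the function name `output_sizes` is hypothetical. Under these assumptions the listed Pool4 stride of 2 × 1 × 1 yields a 75 × 75 map, whereas a spatial stride of 2 would reproduce the listed 38 × 38, so the final column may deserve a second look.

```python
import math

# (name, (temporal, height, width) stride, output channels) as listed in the table
LAYERS = [
    ("Conv1a", (1, 1, 1), 64),
    ("Pool1", (2, 1, 1), 64),
    ("Conv2a", (1, 1, 1), 128),
    ("Pool2", (2, 2, 2), 128),
    ("Conv3a", (1, 1, 1), 256),
    ("Pool3", (2, 2, 2), 256),
    ("Conv4a", (1, 1, 1), 512),
    ("Conv4b", (1, 1, 1), 512),
    ("Pool4", (2, 1, 1), 512),
]


def output_sizes(t_size, s_size, layers):
    """Propagate temporal and (square) spatial sizes through the layers."""
    sizes = {}
    for name, (t_stride, h_stride, _w_stride), channels in layers:
        t_size = math.ceil(t_size / t_stride)  # assumed ceil rounding
        s_size = math.ceil(s_size / h_stride)
        sizes[name] = (channels, t_size, s_size)
    return sizes


# Sizes implied by the strides exactly as listed (input: 16 frames of 300 x 300).
listed = output_sizes(16, 300, LAYERS)

# Hypothetical variant: Pool4 with stride 2 x 2 x 2 instead of 2 x 1 x 1.
variant = output_sizes(16, 300, LAYERS[:-1] + [("Pool4", (2, 2, 2), 512)])

for name, (channels, t, s) in listed.items():
    print(f"{name}: F={channels}, T={t}, S={s} x {s}")
```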

| Dataset | Falls/# | Total Frames/# | Fall Frames/# | No Fall Frames/# |
|---|---|---|---|---|
| Le2i | 192/118 | 108,476/29,237 | 3825/3540 | 104,651/25,697 |
| Multicams | 184/0 | 261,137/0 | 7880/0 | 253,257/0 |
| LSST | 928/928 | 331,755/331,755 | 27,840/27,840 | 303,915/303,915 |
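The frame counts in the dataset table can be cross-checked with a few lines of arithmetic. The sketch below uses only the first number of each cell and makes no assumption about what the second number denotes; the function name `summarize` is hypothetical. It confirms that fall and no-fall frames add up to the totals, and it shows that fall frames make up roughly 3.5% of Le2i, 3.0% of Multicams, and 8.4% of LSST, with LSST averaging exactly 30 fall frames per fall.

```python
# First number of each cell: (falls, total frames, fall frames, no-fall frames)
DATASETS = {
    "Le2i": (192, 108_476, 3_825, 104_651),
    "Multicams": (184, 261_137, 7_880, 253_257),
    "LSST": (928, 331_755, 27_840, 303_915),
}


def summarize(falls, total, fall_frames, no_fall_frames):
    """Consistency check plus two ratios derived from one table row."""
    return {
        "consistent": fall_frames + no_fall_frames == total,
        "fall_ratio": fall_frames / total,
        "frames_per_fall": fall_frames / falls,
    }


summary = {name: summarize(*row) for name, row in DATASETS.items()}

for name, stats in summary.items():
    print(
        f"{name}: consistent={stats['consistent']}, "
        f"fall ratio={stats['fall_ratio']:.1%}, "
        f"frames per fall={stats['frames_per_fall']:.1f}"
    )
```

The low fall ratios illustrate the class imbalance that a frame-level fall detector has to cope with.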

| Minibatch Size | Type | Base_Lr | Max_Iter | Lr_Policy | Stepsize | Gamma | Momentum | Weight_Decay |
|---|---|---|---|---|---|---|---|---|
| 8 | SGD | 0.000005 | 40,000 | step | 10,000 | 0.1 | 0.9 | 0.00005 |
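The column names in this table match the solver settings of Caffe (Jia et al., listed in the references), so the `step` policy presumably follows Caffe's definition, in which the learning rate at a given iteration is `base_lr * gamma ^ floor(iter / stepsize)`. The sketch below evaluates that schedule for the listed values; the function name `step_lr` is hypothetical, and the use of Caffe's definition is an assumption.

```python
def step_lr(iteration, base_lr=5e-6, gamma=0.1, stepsize=10_000):
    """Learning rate under a Caffe-style "step" policy (assumed definition)."""
    return base_lr * gamma ** (iteration // stepsize)


MAX_ITER = 40_000

# Learning rate at the start of each 10,000-iteration stage.
schedule = {it: step_lr(it) for it in range(0, MAX_ITER, 10_000)}

for it, lr in schedule.items():
    print(f"iteration {it:>6}: lr = {lr:.1e}")
```

With these settings the learning rate drops by a factor of ten every 10,000 iterations, so the last stage before `Max_Iter` runs at one thousandth of the base rate.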

| Method | Sensitivity | Specificity | Accuracy | mAP |
|---|---|---|---|---|
| Núñez-Marcos [21] | 99.00% | 97.00% | 97.00% | - |
| Zerrouki [23] | - | - | 97.02% | - |
| Asif [32] | 92.45% | 92.44% | - | - |
| Fan [33] | 100.00% | 98.43% | - | - |
| The method in this paper | 100.00% | 97.04% | 97.23% | 78.94% |
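Sensitivity, specificity, and accuracy in this comparison are conventionally derived from the counts of true and false positives and negatives. The sketch below uses the standard definitions; the function name `fall_metrics` and the counts passed to it are hypothetical and are not taken from the paper's experiments.

```python
def fall_metrics(tp, fp, tn, fn):
    """Standard binary-classification metrics from confusion-matrix counts."""
    positives = tp + fn   # samples that really contain a fall
    negatives = tn + fp   # samples that really contain no fall
    total = positives + negatives
    return {
        # Share of real falls that were detected.
        "sensitivity": tp / positives if positives else 0.0,
        # Share of non-fall samples correctly left unflagged.
        "specificity": tn / negatives if negatives else 0.0,
        # Share of all samples classified correctly.
        "accuracy": (tp + tn) / total if total else 0.0,
    }


# Hypothetical counts, for illustration only.
metrics = fall_metrics(tp=19, fp=2, tn=78, fn=1)

for name, value in metrics.items():
    print(f"{name}: {value:.2%}")
```

Because non-fall samples usually far outnumber falls, accuracy tends to track specificity closely, which is why sensitivity is reported separately.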

| Dataset | Sensitivity (3DIOU-0.25) | Specificity (3DIOU-0.25) | Accuracy (3DIOU-0.25) | mAP (3DIOU-0.25) | Sensitivity (3DIOU-0.5) | Specificity (3DIOU-0.5) | Accuracy (3DIOU-0.5) | mAP (3DIOU-0.5) |
|---|---|---|---|---|---|---|---|---|
| Le2i | 100.00% | 97.04% | 97.23% | 78.94% | 94.74% | 96.30% | 95.85% | 74.85% |
| LSST | 100.00% | 98.18% | 98.03% | 68.69% | 98.32% | 98.07% | 98.09% | 63.10% |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Zou, S.; Min, W.; Liu, L.; Wang, Q.; Zhou, X. Movement Tube Detection Network Integrating 3D CNN and Object Detection Framework to Detect Fall. Electronics 2021, 10, 898. https://doi.org/10.3390/electronics10080898
