EREBOTS: Privacy-Compliant Agent-Based Platform for Multi-Scenario Personalized Health-Assistant Chatbots

Abstract

1. Introduction

- Multi-scenario agent-based chatbot framework: EREBOTS can combine several context-dependent behaviors encapsulated in dedicated storylines, which can be modeled as isolated or interconnected scenarios. These behaviors are enacted by a network of user agents and doctor agents, orchestrated through gateway agents.

- User personalization: User agents build a model of the user’s profile, preferences, history, goals, and aggregated information. With this model, the user agents can tailor their behaviors and provide a personalized experience.

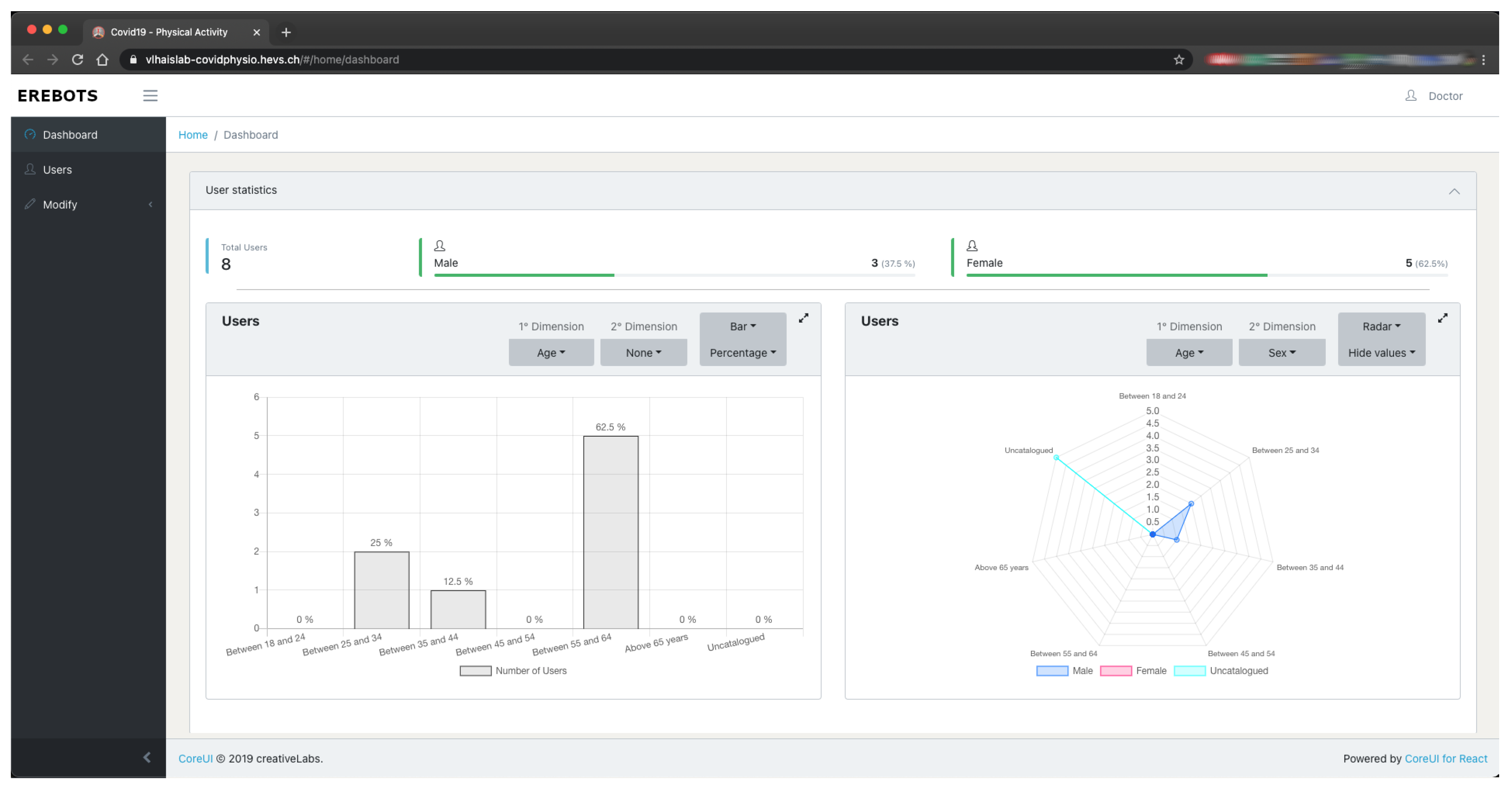

- Healthcare personnel control and monitoring: Medical doctors and healthcare providers can define possible goals, configure self-assessment interactions, and customize the types of activities proposed to patients/participants. Moreover, they can monitor users’ profiles through detailed analytics describing their behaviors and aggregated trends.

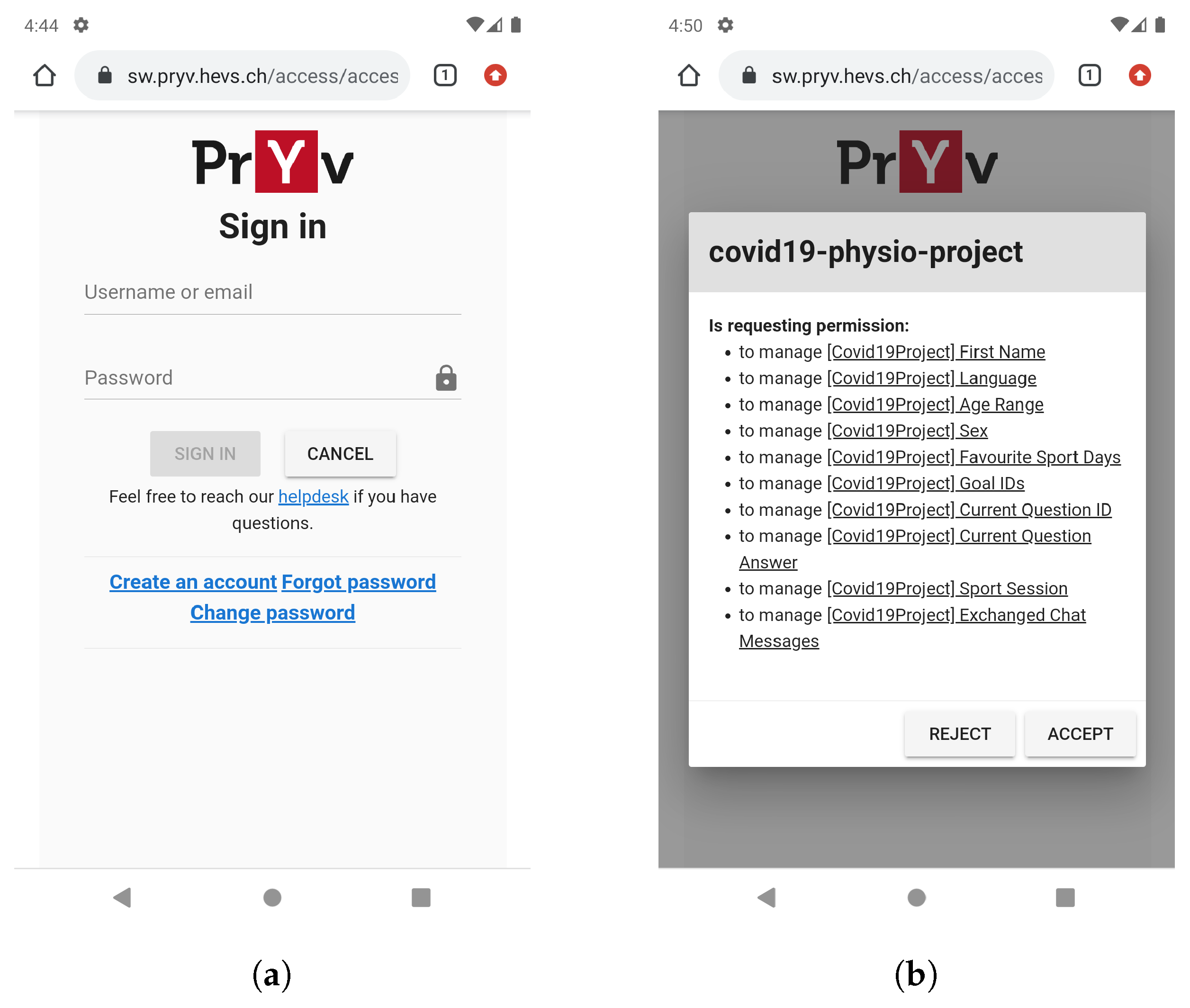

- Privacy and ethics compliance: In EREBOTS, all sensitive/personal information is solely under the control of the user, who can make any decision concerning the storage and sharing of her information. Through the Pryv platform [12] integrated into EREBOTS, users may configure fine-grained access control or even remove their data entirely if they so decide.

- Multi-campaign implementation and testing: EREBOTS has been employed and tested in scenarios such as smoking cessation and balance enhancement exercises (physical rehabilitation) for older adults during social confinement (due to COVID-19 restrictions).

2. State of the Art

2.1. HMI and Chatbots

- Amazon Lex: it supports the development of chatbots providing natural language understanding and automatic speech recognition [13].

- Dialogflow: it provides a framework aiming at understanding human conversations relying on Google’s machine learning techniques [14].

- Microsoft Bot Framework: it is a tool-set including APIs for text and speech analysis [15].

- SAP Conversational AI: based on SAP’s technology platform, it enables users to build and monitor intelligent chatbots, as well as to automate tasks and workflow [16].

- Rasa Open Source: it is a machine learning framework that allows the automation of text and voice-based chatbot assistants [17].

2.2. Quality of Experience

- (i) human factors such as user personality, expertise, health condition (visual acuity, auditory capacity, etc.);

- (ii) context factors such as the context in which a user is consuming a given service (e.g., alone, with friends, on the way to work, etc.); and

- (iii) system factors such as a system’s features characterizing the service provided (e.g., video resolution, sound quality, response rate, natural language processing quality, etc.).

2.3. Multi-Agent Systems & Chatbots

2.4. Chatbots in Assistive and eHealth Scenarios

2.5. Opportunities and Open Challenges

- C1

- Social A2A (Agent-to-Agent): While chatbots have been mainly employed in social campaigns, the social capabilities among the bots (i.e., to relate/extend/complete information) have yet to be fully exploited.

- C2

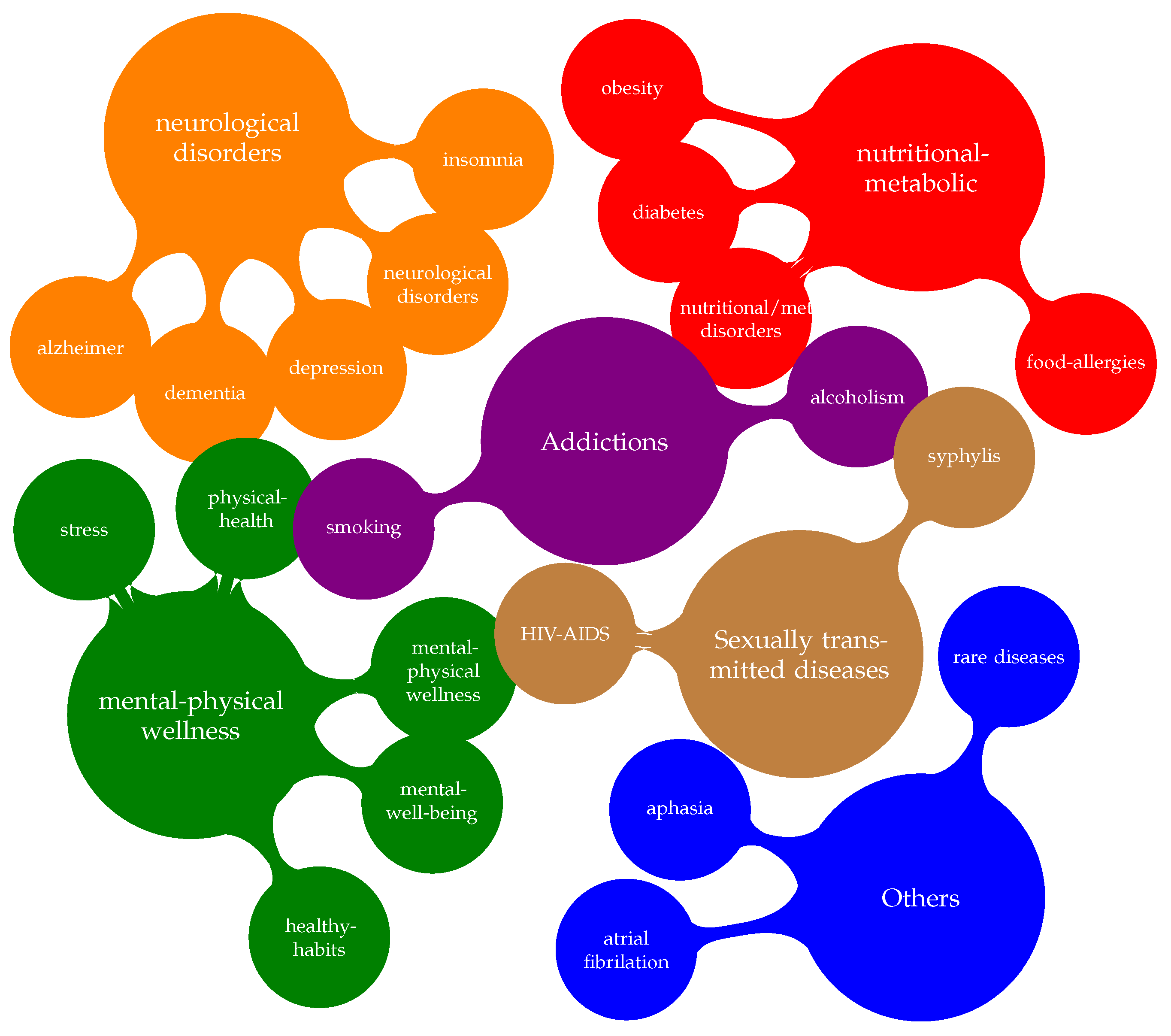

- Run-time healthcare supervision: Mental and physical wellness and nutritional and metabolic disorders are areas that can vastly benefit from employing chatbots to attain behavior change. Nevertheless, physicians consider unsafe to release unsupervised autonomous chatbots operating in safety-critical scenarios [61].

- C3

- Evolving models and behaviors: Chatbots can model the users quite comprehensively. However, the sociological dynamics and implications can quickly change, and current solutions cannot model nor properly embed evolving behaviors in the complex dynamics of current frameworks.

- C4

- Multi-stakeholder personalization: Chatbots are pervading increasingly complex healthcare applications. However, current solutions do not provide sufficient personalization for the diverse stakeholders’ roles (i.e., caregivers, physicians, or relatives [37]).

- C5

- Users’ QoE: The user is central in chatbot applications. Nevertheless, mechanisms to periodically collect, elaborate, and understand users’ feedback on their experience are missing [62].

- C6

- Dynamic update mechanisms: The repetitiveness of the solutions and/or functionalities suggested by the chatbots (usually due to static state machines and the lack of run-time updating mechanisms) can cause users to relapse and abandon the application.

- C7

- Semantics and Terminology: Often, the messages sent by the chatbot are predefined. However, due to the diversity of the stakeholders in healthcare scenarios, the terminology and related sentence formulation should be formulated dynamically (i.e., standardization vs. explanation).

- C8

- Delegation: Chatbots can replace humans in dealing with automated and repetitive tasks. However, the criteria for delegating a task (computation- and interaction-wise) to a chatbot need to be defined [63].

- C9

- Privacy compliance: While the chatbots’ interactions are mostly visible to the user, what occurs in the back-end is usually not as clear/transparent. In the best-case scenario, data management and visibility are described in human-made informative documentation, where the actual match with the system dynamics cannot be verified.

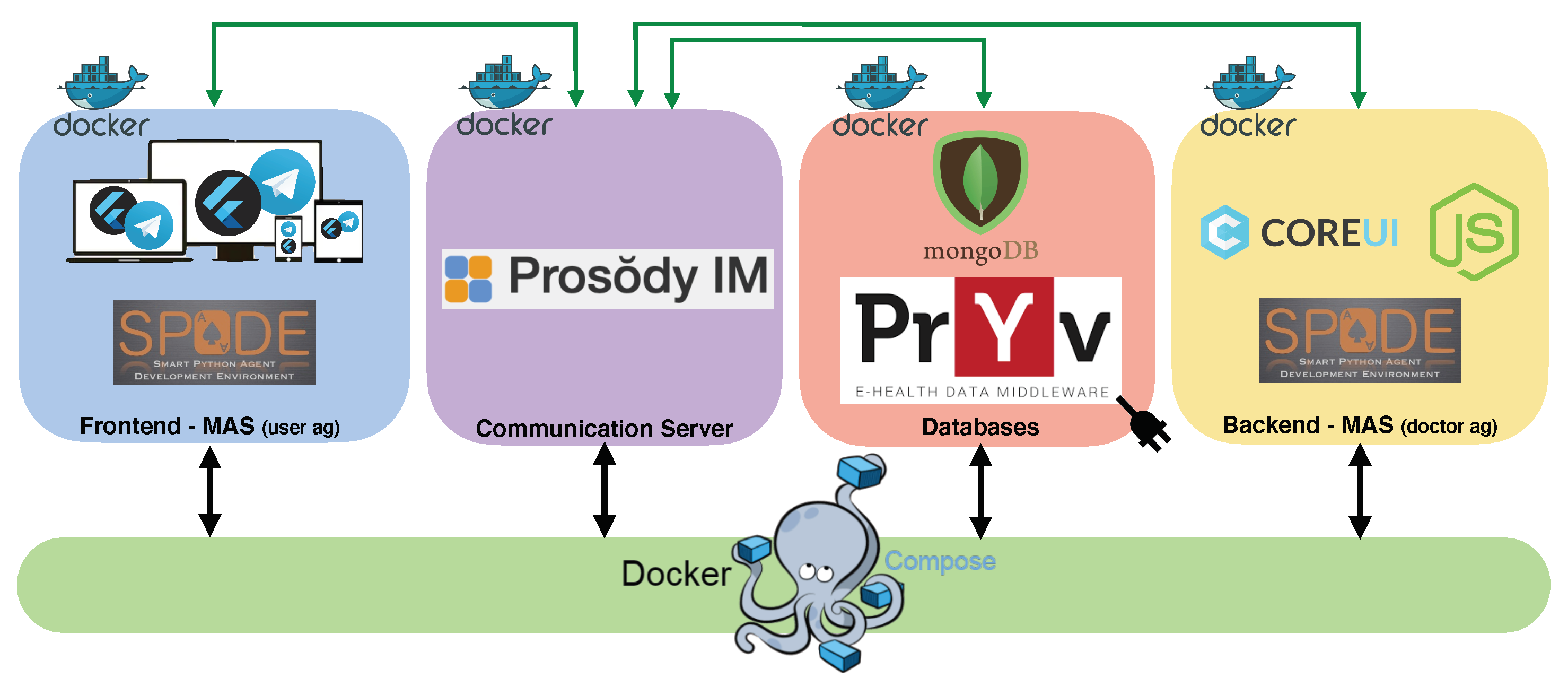

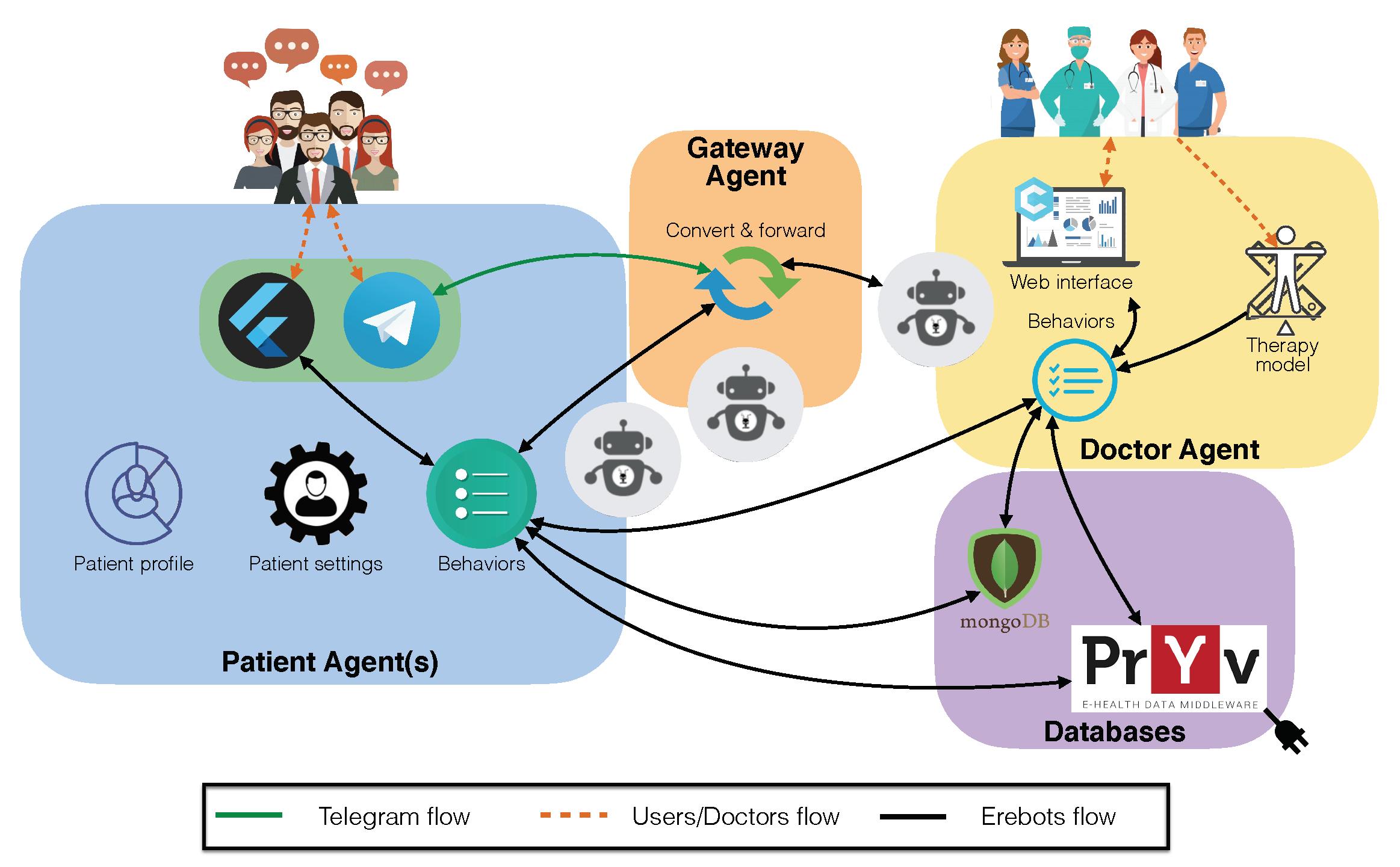

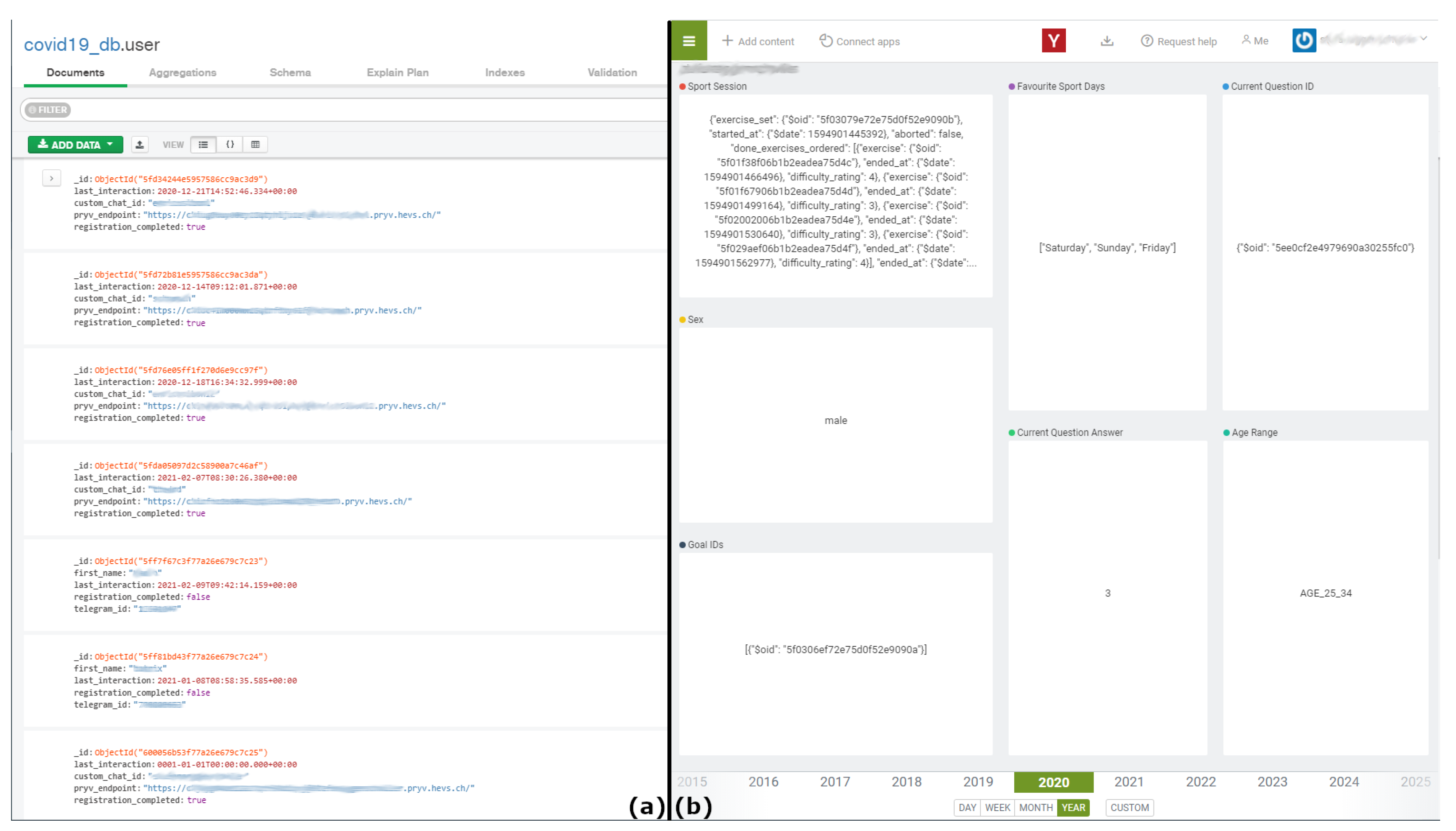

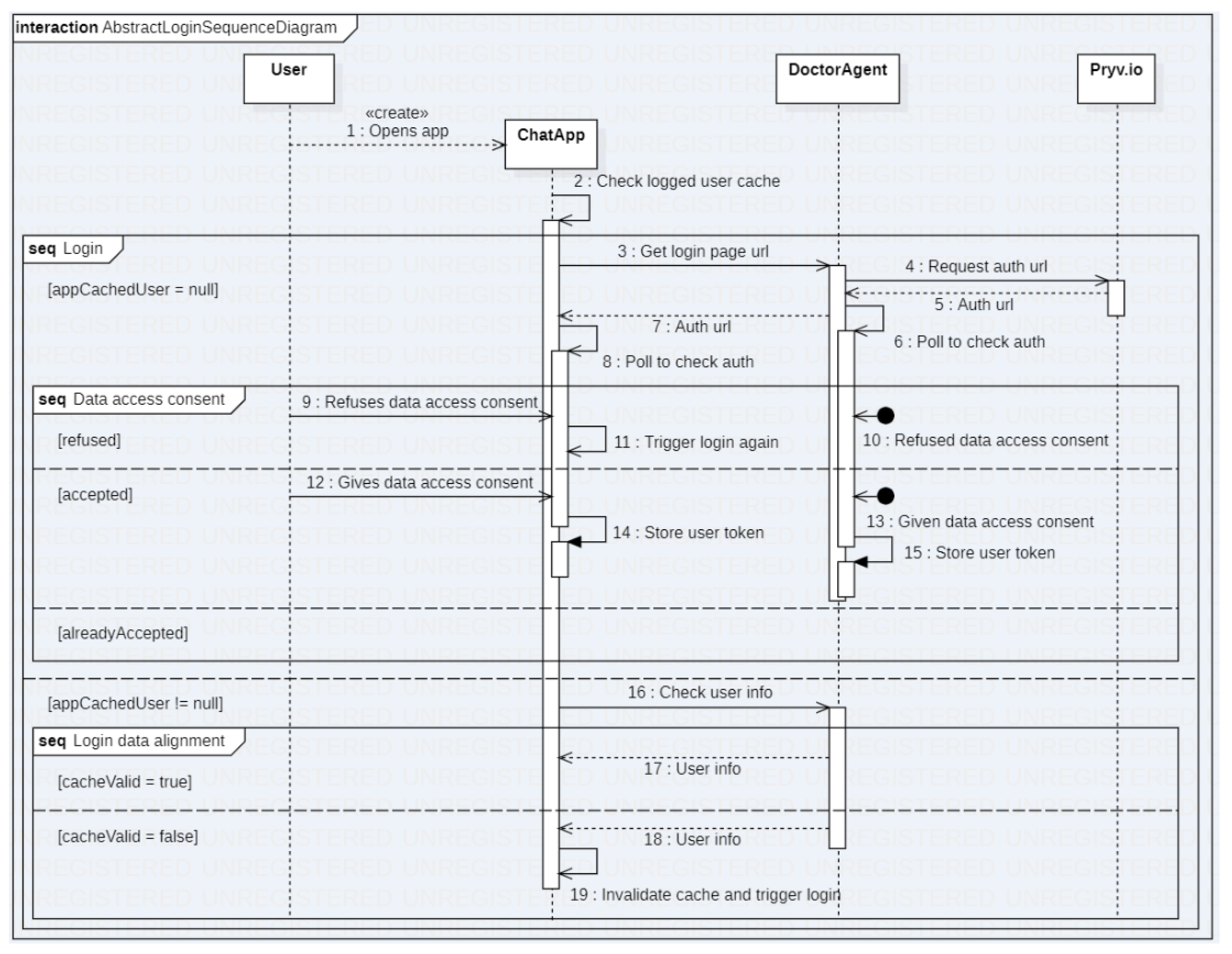

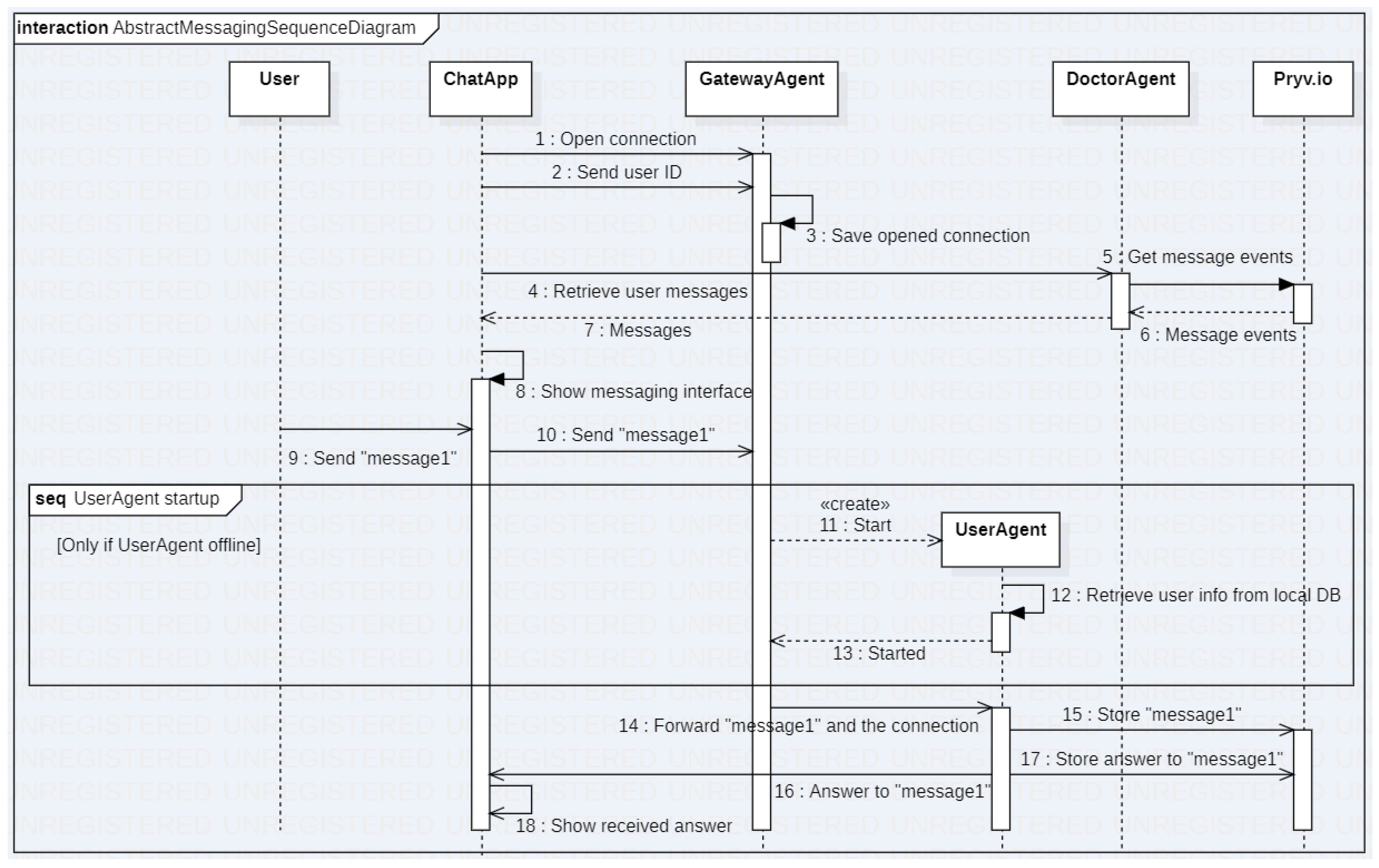

3. The EREBOTS Framework

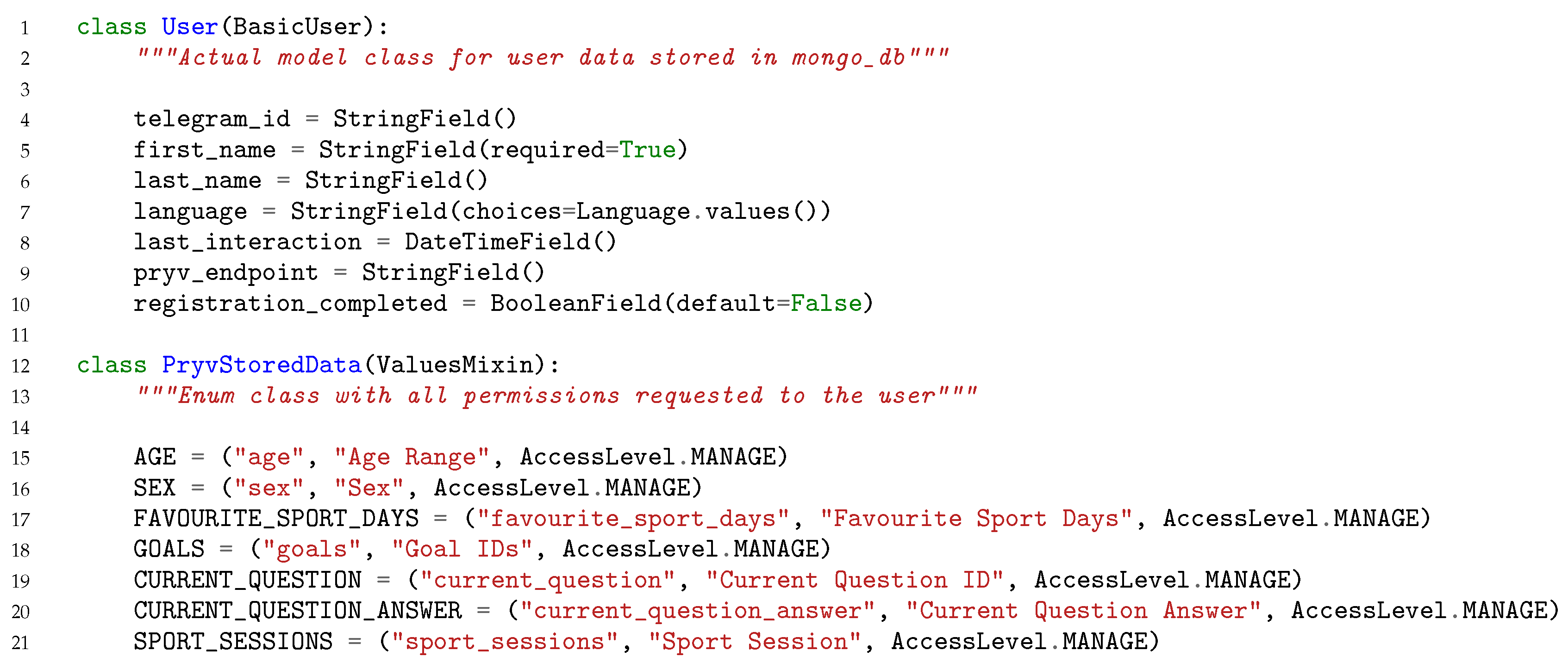

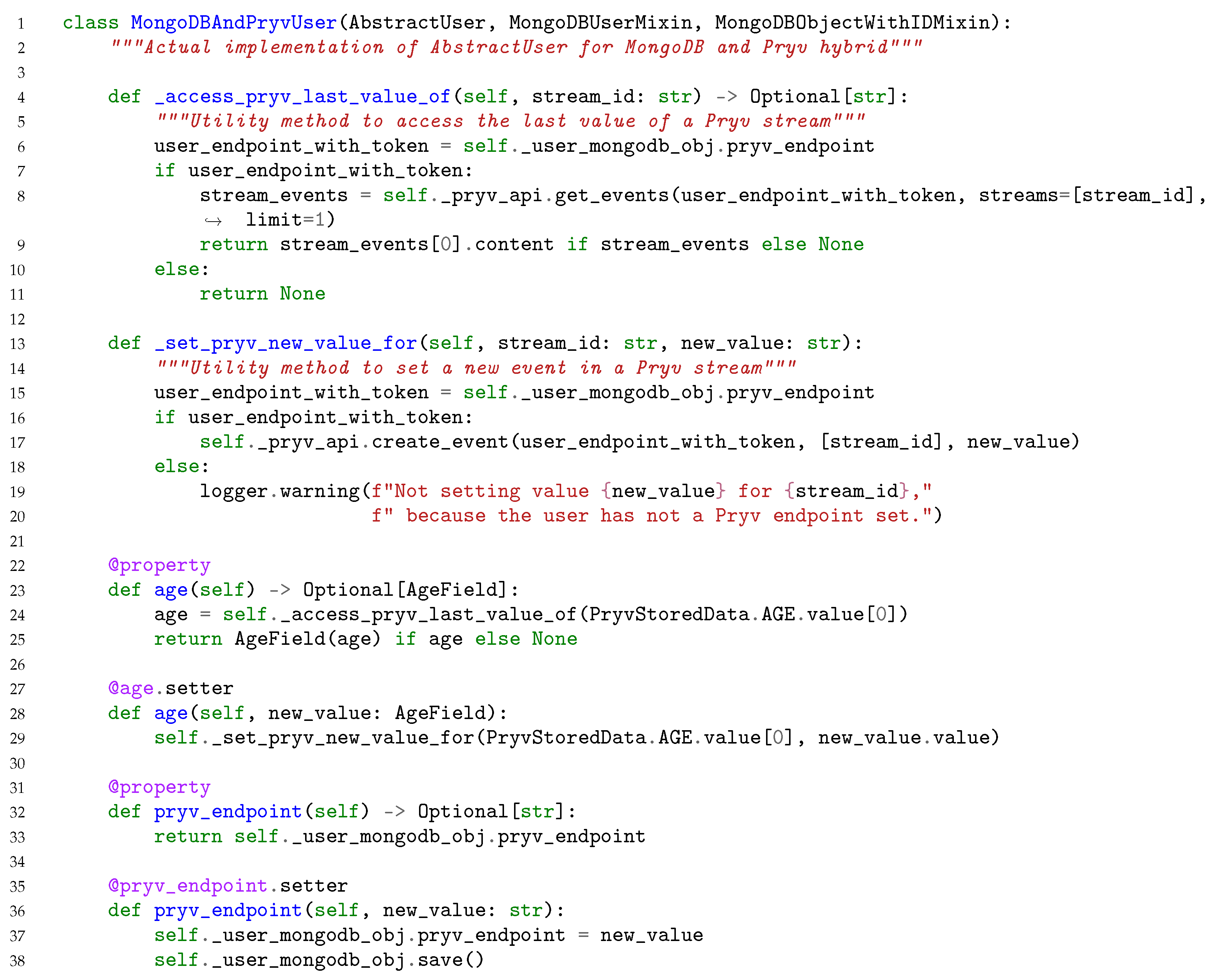

- The Database component comprises two databases. (i) MongoDB, used as centralized storage for non-personal data only. In particular, it stores the user’s messenger service chat ID (e.g., Telegram) and the user-specific endpoint token for the personal data store. (ii) Pryv (Available online: https://www.pryv.com/ (accessed on 5 March 2021)), a platform enabling privacy-regulation-compliant, stream-based personal data collection and privacy management. Once a user has registered an account, the user can provide consent to external applications, which can then access and store the specified data. EREBOTS uses an instance of Pryv to persist the user’s chat history and all personal data (e.g., age, name, and scenario-specific data). By employing Pryv, users gain exclusive control of their data: they can revoke the consent at any point, disabling EREBOTS’ access to it, and, if necessary, fully remove any stored piece of information.

- The Communication server acts as the message space for inter-agent communication within the MAS. It uses a Prosody (Available online: https://prosody.im/ (accessed on 5 March 2021)) XMPP server instance on which each agent is a registered user. An agent can broadcast messages to all agents (in the form of a multi-user chat) or directly message a specific agent (in the form of peer-to-peer sessions).

- The Back-end relies on the SPADE framework [65] to instantiate and interconnect virtual agents. In particular, it hosts the doctor agent, which serves the campaign-related functionalities and bridges them with the underlying system’s dynamics. Moreover, the doctor agent exposes a web application that allows the medical personnel in charge of the campaign to manage storylines (general or personalized therapies) and review users’ treatment adherence and results.

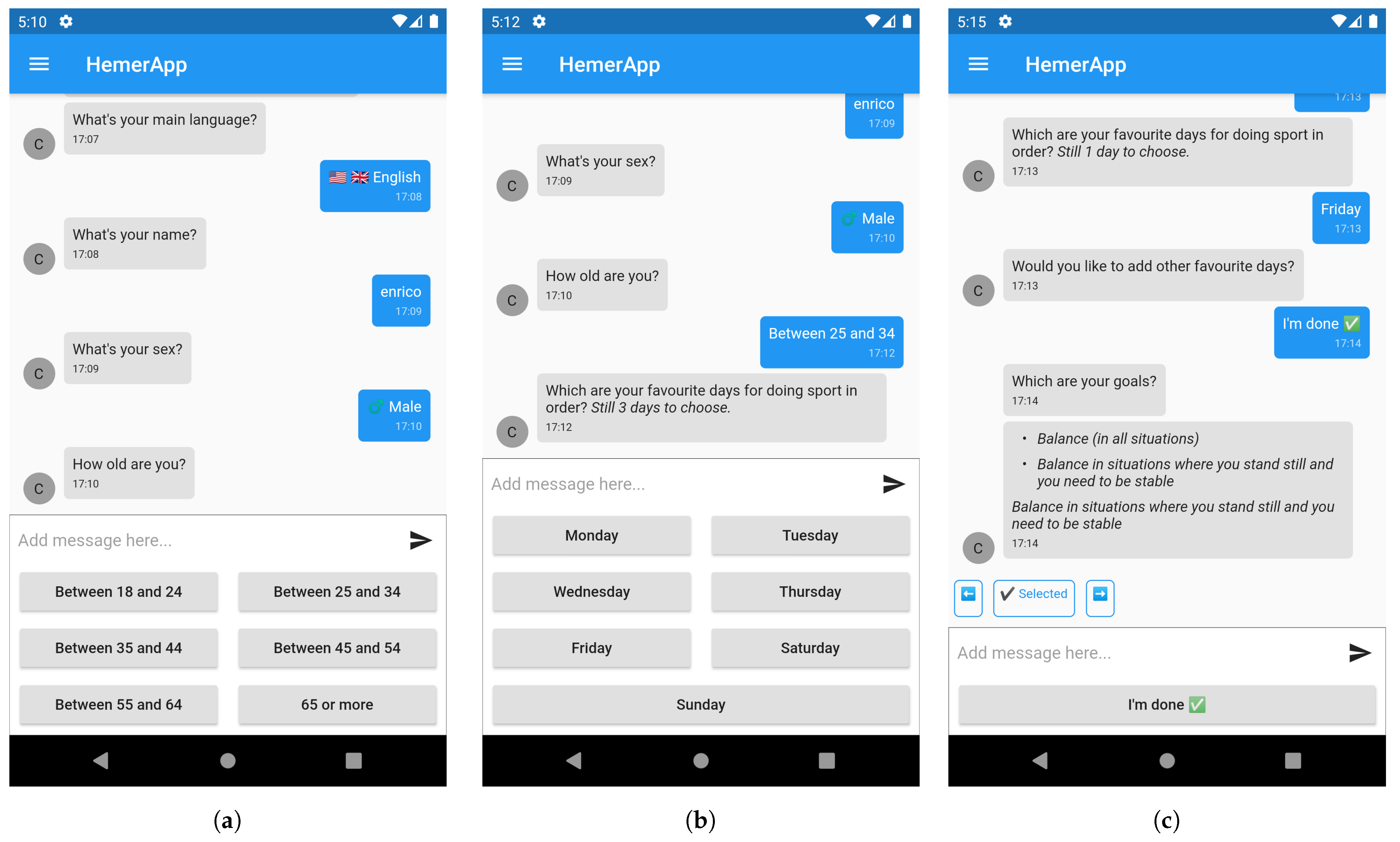

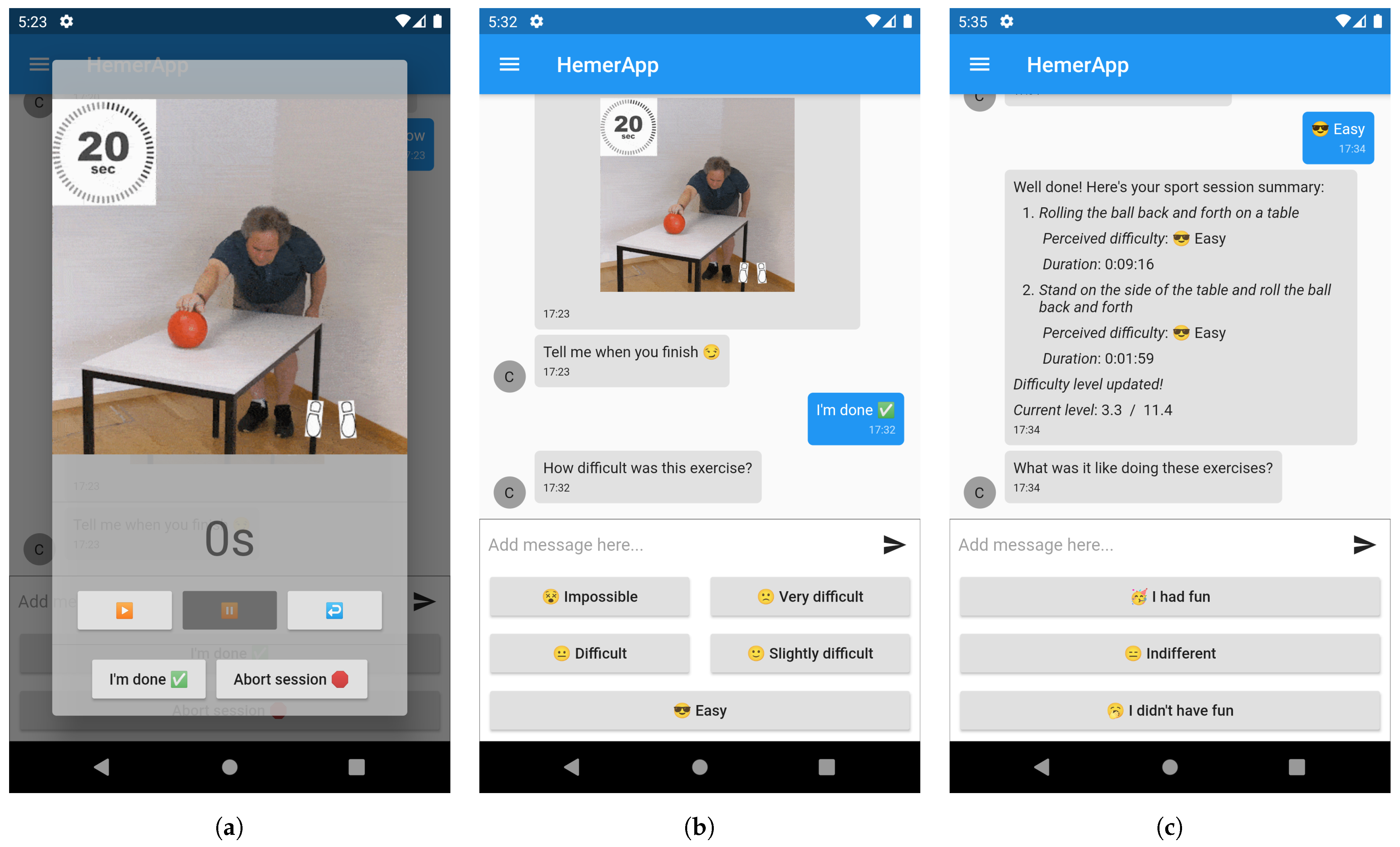

- The Front-end component is in charge of managing the users’ connections and their messages from the chat platform(s). Although extensible to other messaging systems, the framework currently supports the following communication interfaces. (i) Telegram (Available online: https://telegram.org/ (accessed on 5 March 2021)): a widely used free messaging application for mobile phones released in 2013, offering desktop applications for PC, Mac, and Linux. Since 2015, Telegram has enabled the development of chatbots with a dedicated bot API. (ii) HemerApp: a dedicated front-end based on Flutter (Available online: https://flutter.dev/ (accessed on 5 March 2021)), a framework for native multi-platform development. Therefore, HemerApp can be used on iOS, Android, or the web. While HemerApp allows a direct connection with the MAS (i.e., SPADE), all messages using Telegram have to pass through the dedicated Telegram APIs. This requires a gateway agent. Moreover, this agent handles the initial user communication (i.e., registration and user agent creation) for both interfaces. As of today, the two interfaces can coexist, although only one is allowed within a given campaign.
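The storage split and the gateway's role described in the list above can be illustrated with a minimal sketch. It uses only the Python standard library and is not the EREBOTS implementation: the classes `Registry`, `PersonalStore`, and `Gateway`, together with all of their methods, are hypothetical stand-ins for MongoDB, the Pryv instance, and the gateway agent, and no real MongoDB, Pryv, SPADE, or Telegram API is called.

```python
from dataclasses import dataclass, field


class ConsentRevoked(Exception):
    """Raised when the platform uses a token the user has revoked or never granted."""


@dataclass
class PersonalStore:
    """Hypothetical stand-in for the Pryv instance: holds all personal data."""
    _streams: dict = field(default_factory=dict)  # token -> list of events
    _revoked: set = field(default_factory=set)

    def grant(self, token: str) -> None:
        # The user consents: the platform may now read and write via this token.
        self._streams.setdefault(token, [])
        self._revoked.discard(token)

    def append(self, token: str, event: dict) -> None:
        self._check(token)
        self._streams[token].append(event)

    def read(self, token: str) -> list:
        self._check(token)
        return list(self._streams[token])

    def revoke(self, token: str, erase: bool = False) -> None:
        # The user withdraws consent and, optionally, removes the stored data.
        self._revoked.add(token)
        if erase:
            self._streams.pop(token, None)

    def _check(self, token: str) -> None:
        if token in self._revoked or token not in self._streams:
            raise ConsentRevoked(token)


@dataclass
class Registry:
    """Hypothetical stand-in for MongoDB: maps chat ID -> endpoint token only."""
    tokens: dict = field(default_factory=dict)


@dataclass
class Gateway:
    """Hypothetical gateway: registers users and routes their chat messages."""
    registry: Registry
    store: PersonalStore

    def register(self, chat_id: str, token: str) -> None:
        self.store.grant(token)
        self.registry.tokens[chat_id] = token

    def handle_message(self, chat_id: str, text: str) -> None:
        # The message content goes to the personal store, never to the registry.
        token = self.registry.tokens[chat_id]
        self.store.append(token, {"type": "chat", "content": text})
```

In this sketch the registry never sees message content, so revoking the token in the personal store is enough to cut the platform off from the user's data, mirroring the control the text attributes to Pryv users.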

3.1. Scenario, Functionalities, Dynamics, and Behaviors

- SC1. Preventive physical conditioning: it profiles the user according to a basic motor-balance assessment and his/her preferences, and provides tailored exercises according to the user’s experience/profile, both reactively and proactively.

- SC2. Smoking cessation: it consists of a 2-phase campaign. In phase 1, the bot determines the severity of the addiction (i.e., daily consumption, nicotine dependency) while recording the user’s smoking habits. In phase 2, the bot assists the user during craving episodes by providing personalized mood boosters, health tips, behavioral tracking, feedback/reporting support, and adherence/efficacy evaluation.

- SC3. Breast cancer survivors: The bot provides informational content and advice according to the type of cancer, demographics, stage, physical condition, etc. The bot may recommend exercise sets aimed at regaining/maintaining muscular strength and minimum physical activity levels.

3.1.1. Scenario SC1

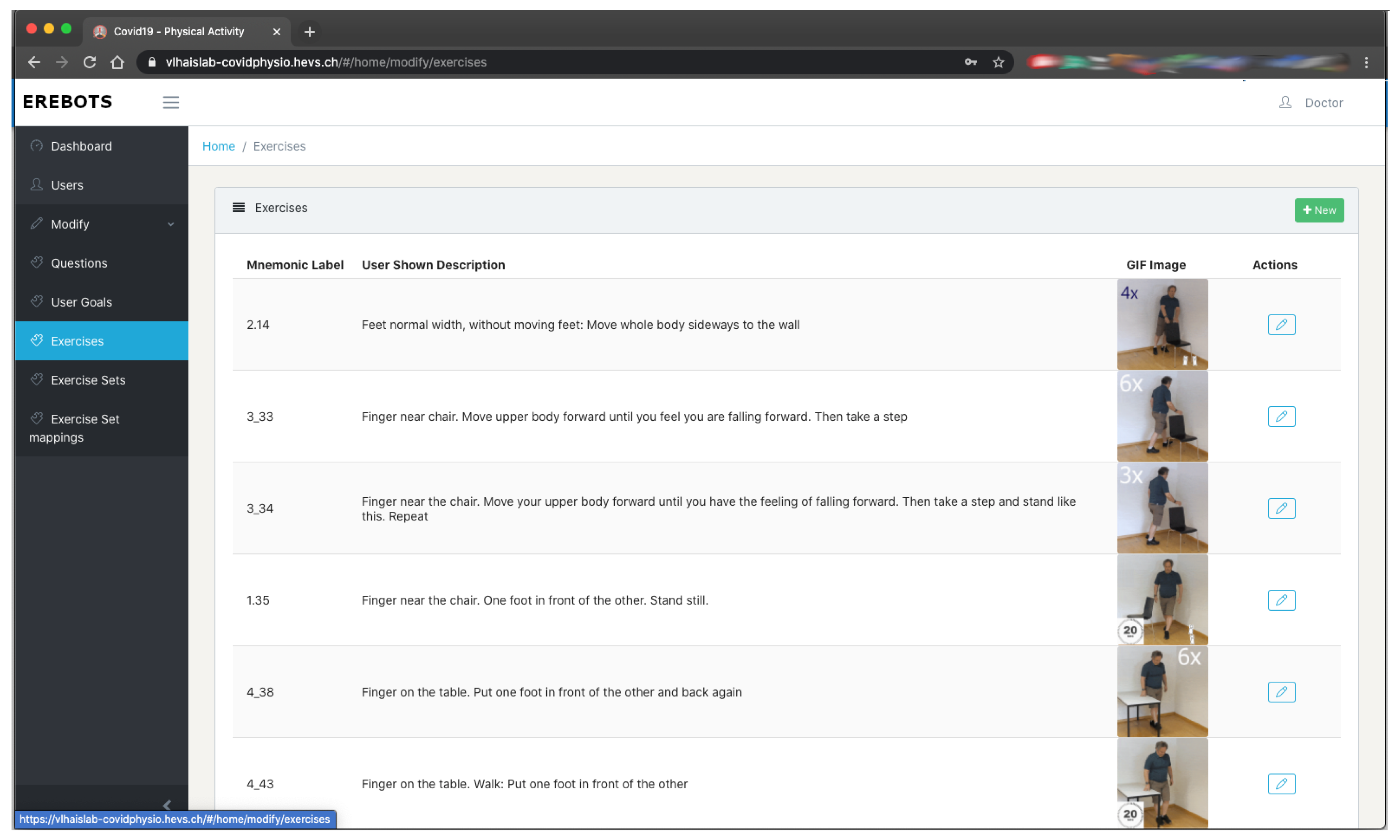

3.1.2. Functionalities

- DF1:

- Create, modify, and delete objectives, exercises, and relationships among them.

- DF2:

- Visualize a single user and her aggregated information.

- UF1:

- Register a new profile.

- UF2:

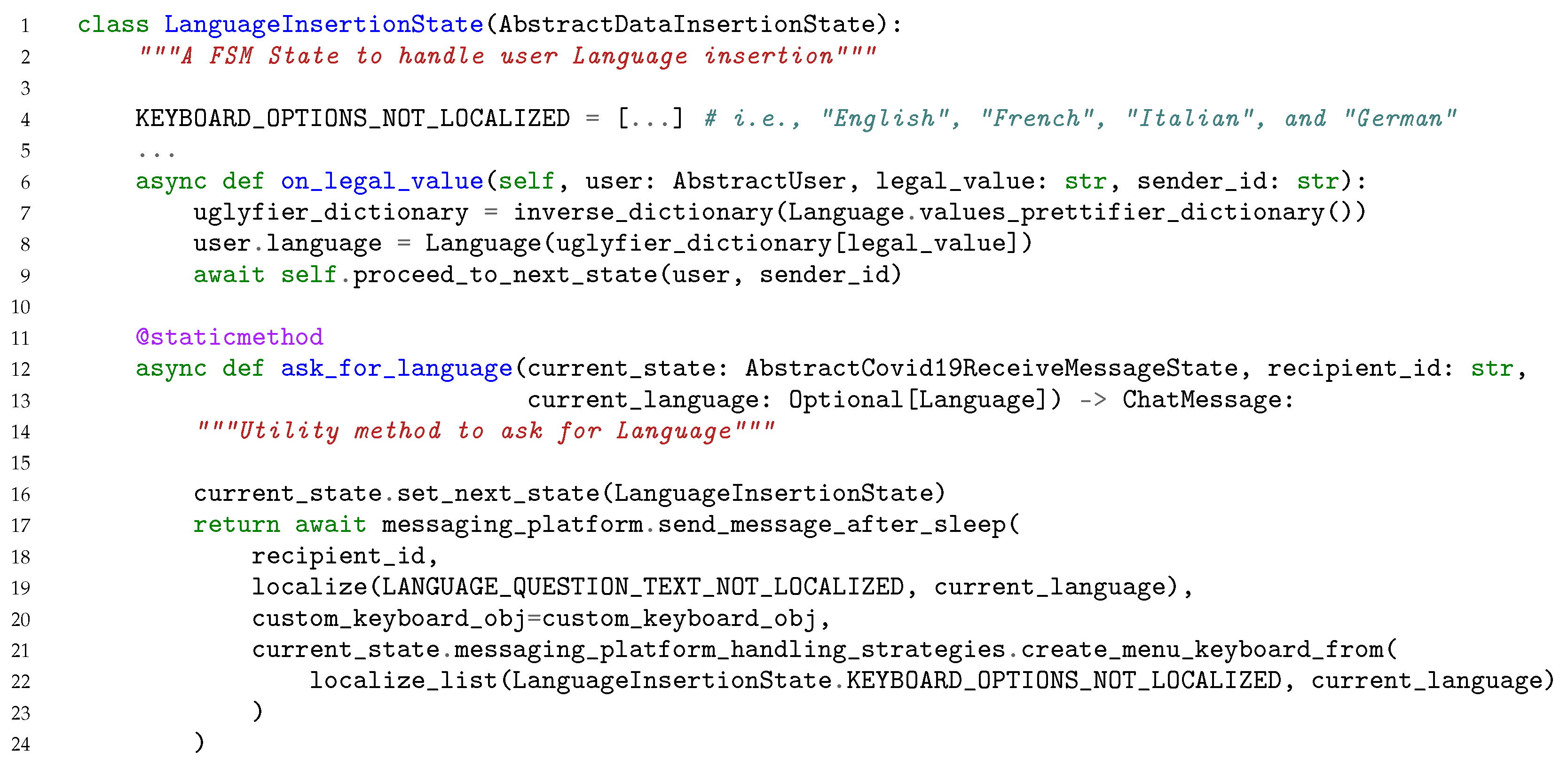

- Manage his/her profile and settings (i.e., language (As of today, SC1 supports English, Italian, French, and German), user goals, and ability re-evaluation).

- UF3:

- Ask for exercises (matching the user’s level).

- UF4:

- Visualize personal statistics and performance.

- UF5:

- Get detailed information about the system functionalities and data usage, visibility, and storage.

- (i) Define the user goals, such as the desired level of balance to be attained.

- (ii) Define the self-assessment questions, i.e., the set of questions to be asked to the user to determine her current situation with respect to the desired goals.

- (iii) Associate the questions to a specific difficulty level.

- (iv) Relate the questions to each other, defining the overall physical activity plan.

- (v) Define the exercises to be suggested, including their instructions, and related multimedia (see Figure 5).

- (vi) Assign the exercises to each difficulty level.
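The configuration steps above suggest a simple data model, sketched below under stated assumptions. The class names `Question`, `Exercise`, and `Plan`, their fields, and the rule that questions are related by ascending difficulty level are all hypothetical; the source does not specify how EREBOTS represents a plan internally.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Question:
    """A self-assessment question tied to one difficulty level (steps ii-iii)."""
    text: str
    level: int


@dataclass(frozen=True)
class Exercise:
    """An exercise with instructions and optional multimedia (steps v-vi)."""
    name: str
    instructions: str
    level: int
    media_url: str = ""  # assumption: multimedia is referenced by a URL


@dataclass
class Plan:
    """A doctor-defined plan: one goal plus its questions and exercises (step i)."""
    goal: str
    questions: list = field(default_factory=list)
    exercises: list = field(default_factory=list)

    def ordered_questions(self) -> list:
        # Assumption: questions are related to each other by ascending level (step iv).
        return sorted(self.questions, key=lambda q: q.level)

    def exercises_for(self, level: int) -> list:
        # Exercises whose assigned level matches the user's level.
        return [e for e in self.exercises if e.level == level]


# Illustrative data only: the goal, levels, and exercises below are invented.
plan = Plan(goal="Improve balance at home")
plan.questions.append(Question("Keep balance when you step down a stair?", 2))
plan.questions.append(Question("Keep balance when you stand still?", 1))
plan.exercises.append(Exercise("Supported standing", "Stand next to a chair.", 1))
plan.exercises.append(Exercise("Step practice", "Step down a low step.", 2))

matching = [e.name for e in plan.exercises_for(2)]
```

Keeping the level on both questions and exercises means that a user's self-assessed level can be used directly as the lookup key when the user asks for exercises.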

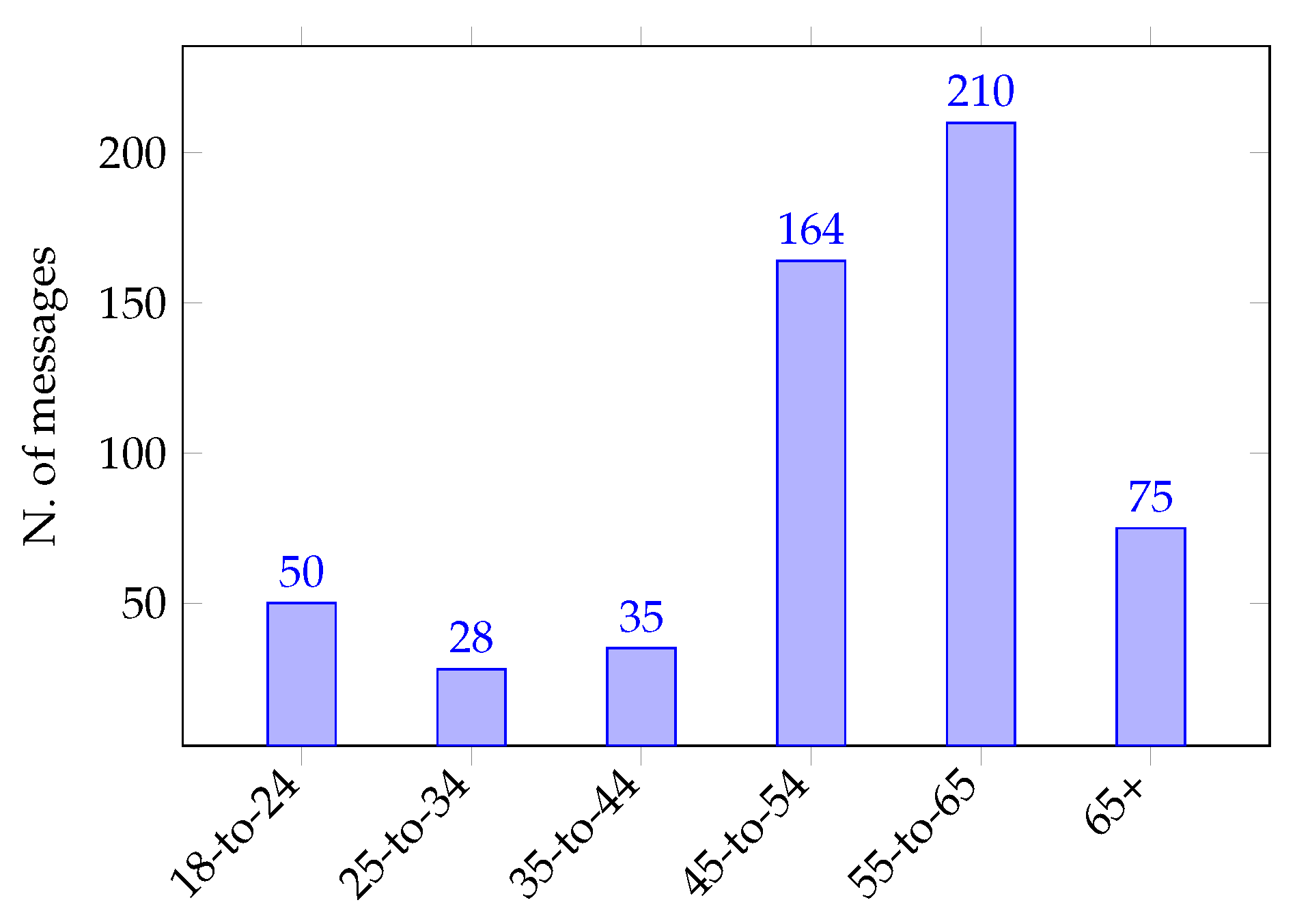

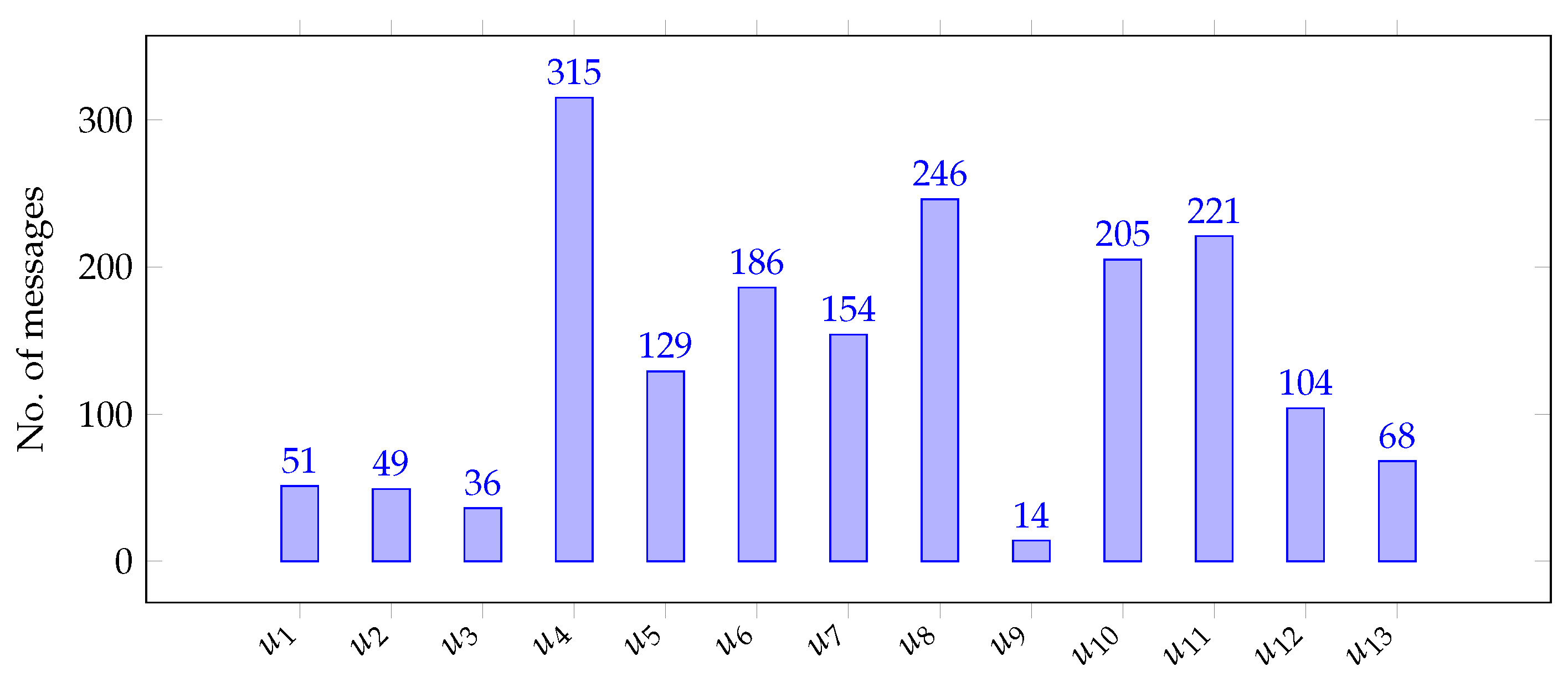

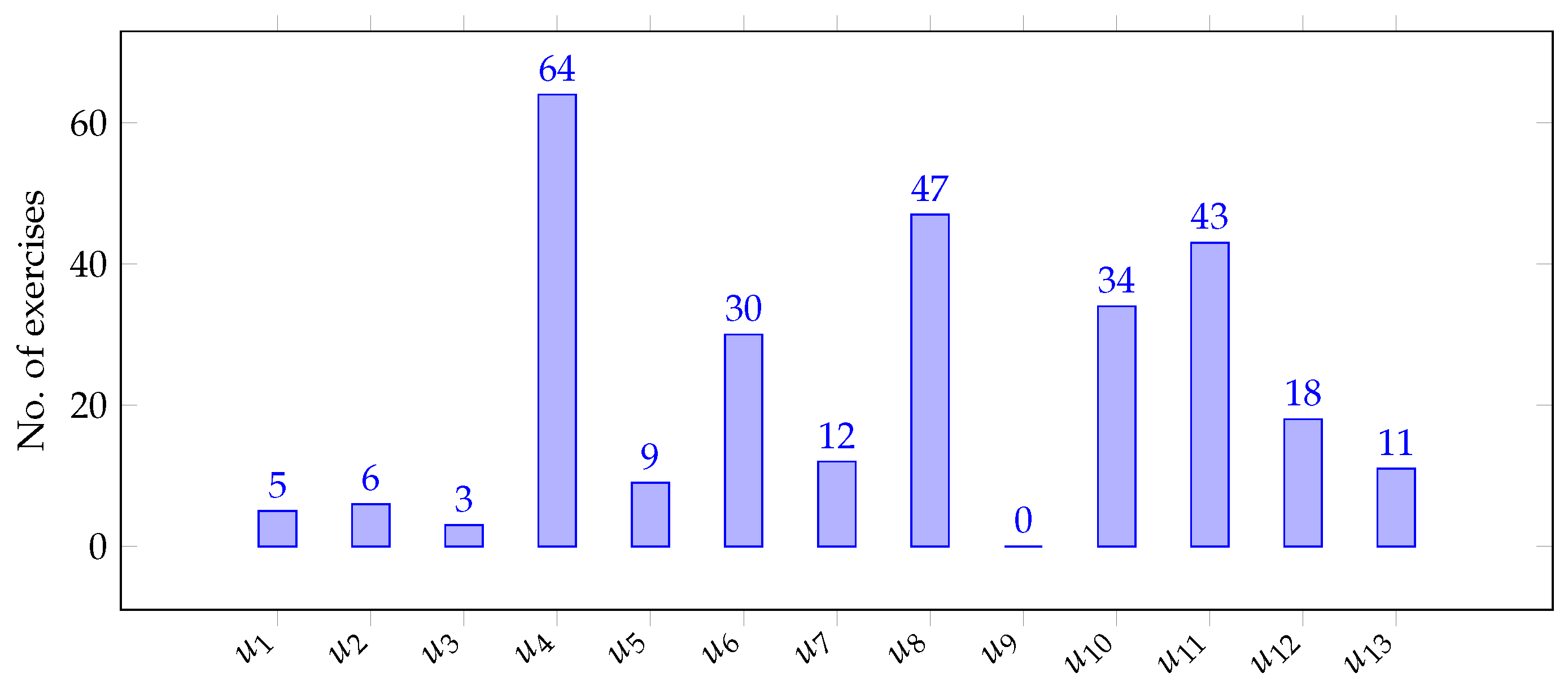

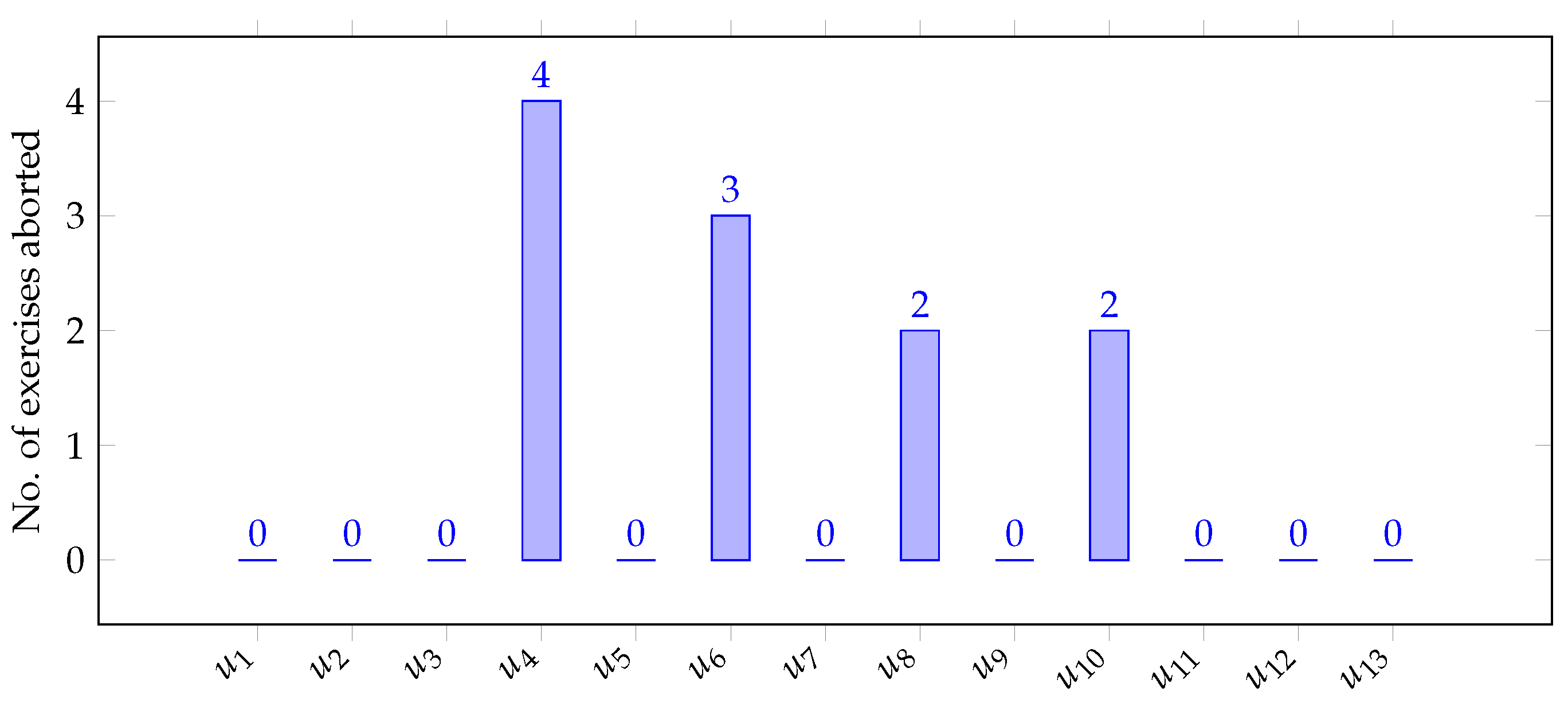

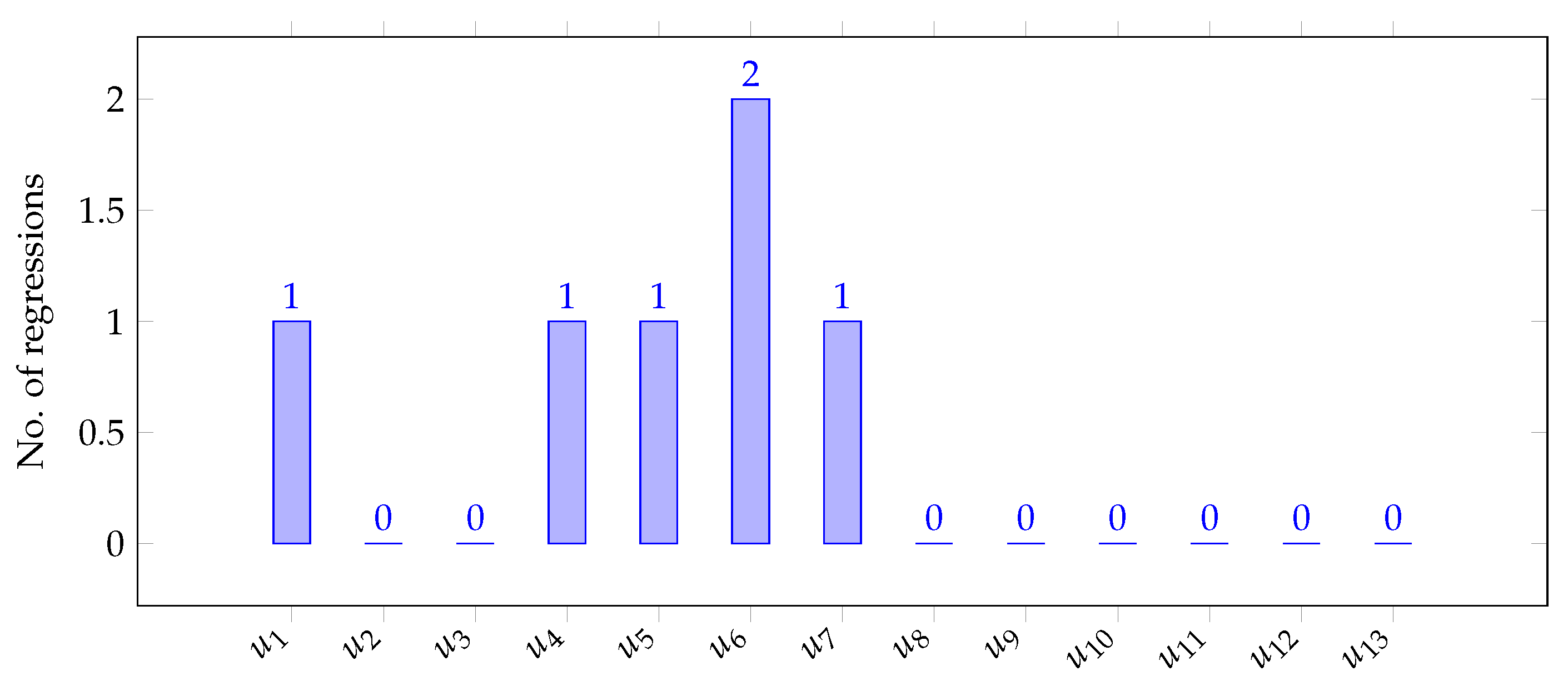

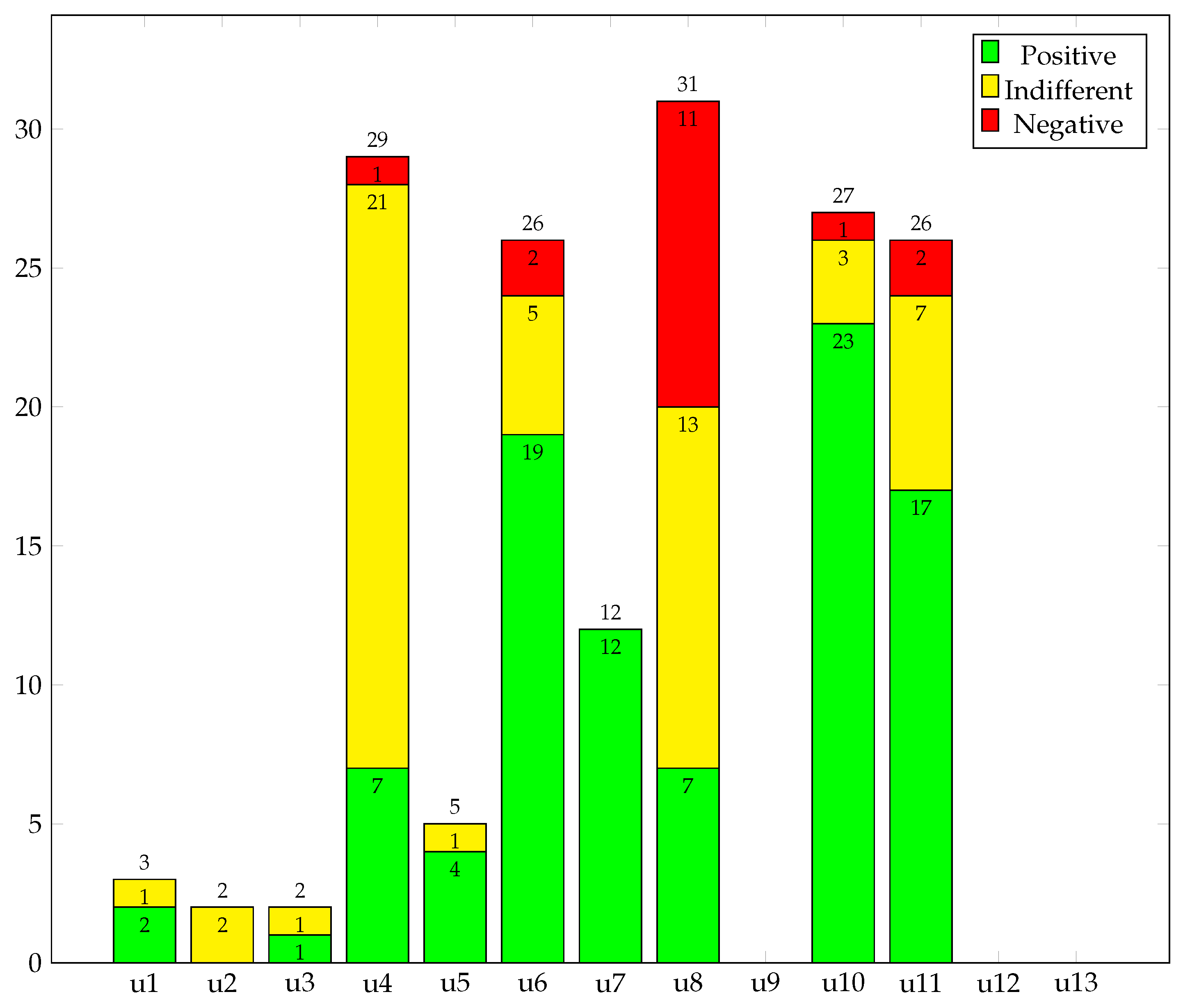

4. Experimentation

5. Discussion

6. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

- [1] López, G.; Quesada, L.; Guerrero, L.A. Alexa vs. Siri vs. Cortana vs. Google Assistant: A comparison of speech-based natural user interfaces. In Proceedings of the International Conference on Applied Human Factors and Ergonomics, Los Angeles, CA, USA, 17–21 July 2017; Springer: Berlin, Germany, 2017; pp. 241–250.

- [2] Johannsen, G. Human-machine interaction. Control Syst. Robot. Autom. 2009, 21, 132–162.

- [3] Weizenbaum, J. ELIZA—A computer program for the study of natural language communication between man and machine. Commun. ACM 1966, 9, 36–45.

- [4] Calvaresi, D.; Calbimonte, J.P.; Dubosson, F.; Najjar, A.; Schumacher, M. Social Network Chatbots for Smoking Cessation: Agent and Multi-Agent Frameworks. In Proceedings of the 2019 IEEE/WIC/ACM International Conference on Web Intelligence (WI), Thessaloniki, Greece, 14–17 October 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 286–292.

- [5] Xu, A.; Liu, Z.; Guo, Y.; Sinha, V.; Akkiraju, R. A new chatbot for customer service on social media. In Proceedings of the 2017 CHI Conference on Human Factors in Computing Systems, Denver, CO, USA, 6–11 May 2017; ACM: New York, NY, USA, 2017; pp. 3506–3510.

- [6] Calbimonte, J.P.; Calvaresi, D.; Dubosson, F.; Schumacher, M. Towards Profile and Domain Modelling in Agent-Based Applications for Behavior Change. In Proceedings of the International Conference on Practical Applications of Agents and Multi-Agent Systems, Ávila, Spain, 26–28 June 2019; Springer: Berlin, Germany, 2019; pp. 16–28.

- [7] Fadhil, A.; Gabrielli, S. Addressing challenges in promoting healthy lifestyles: The al-chatbot approach. In Proceedings of the 11th EAI International Conference on Pervasive Computing Technologies for Healthcare, Barcelona, Spain, 23–26 May 2017; ACM: New York, NY, USA, 2017; pp. 261–265.

- [8] Graham, A.L.; Papandonatos, G.D.; Erar, B.; Stanton, C.A. Use of an online smoking cessation community promotes abstinence: Results of propensity score weighting. Health Psychol. 2015, 34, 1286.

- [9] Roca, S.; Sancho, J.; García, J.; Alesanco, Á. Microservice chatbot architecture for chronic patient support. J. Biomed. Inform. 2020, 102, 103305.

- [10] Ni, L.; Lu, C.; Liu, N.; Liu, J. Mandy: Towards a smart primary care chatbot application. In International Symposium on Knowledge and Systems Sciences; Springer: Berlin, Germany, 2017; pp. 38–52.

- [11] Voigt, P.; Von dem Bussche, A. The EU General Data Protection Regulation (GDPR). In A Practical Guide, 1st ed.; Springer: Cham, Switzerland, 2017.

- [12] Calbimonte, J.P.; Dubosson, F.; Kebets, I.; Legris, P.M.; Schumacher, M.I. Semi-automatic Semantic Enrichment of Personal Data Streams. In SEMANTICS Posters & Demos; 2019; Available online: http://ceur-ws.org/Vol-2451/paper-08.pdf (accessed on 5 March 2021).

- [13] AALex. Available online: https://aws.amazon.com/lex/ (accessed on 29 September 2018).

- [14] DialogFlow. 2018. Available online: https://dialogflow.com (accessed on 29 December 2020).

- [15] Microsoft. Microsoft Bot Framework. Available online: https://dev.botframework.com (accessed on 29 December 2020).

- [16] SAP. SAP Conversational AI. 2020. Available online: https://www.sap.com/products/conversational-ai.html (accessed on 20 January 2021).

- [17] Rasa. Rasa Open Source. 2020. Available online: https://rasa.com/ (accessed on 20 January 2021).

- [18] Chaves, A.P.; Gerosa, M.A. How Should My Chatbot Interact? A Survey on Social Characteristics in Human–Chatbot Interaction Design. Int. J. Hum. Comput. Interact. 2020, 1–30.

- [19] Lee, M.; Lucas, G.; Mell, J.; Johnson, E.; Gratch, J. What’s on Your Virtual Mind? Mind Perception in Human-Agent Negotiations. In Proceedings of the 19th ACM International Conference on Intelligent Virtual Agents, Paris, France, 2–5 July 2019; pp. 38–45.

- [20] Meany, M.M.; Clark, T. Humour Theory and Conversational Agents: An Application in the Development of Computer-based Agents. Int. J. Humanit. 2010, 8, 129–140.

- [21] Maroengsit, W.; Piyakulpinyo, T.; Phonyiam, K.; Pongnumkul, S.; Chaovalit, P.; Theeramunkong, T. A survey on evaluation methods for chatbots. In Proceedings of the 2019 7th International Conference on Information and Education Technology, Aizu-Wakamatsu, Japan, 29–31 March 2019; pp. 111–119.

- [22] Goddard, M. The EU General Data Protection Regulation (GDPR): European regulation that has a global impact. Int. J. Mark. Res. 2017, 59, 703–705.

- [23] Huckvale, K.; Prieto, J.T.; Tilney, M.; Benghozi, P.J.; Car, J. Unaddressed privacy risks in accredited health and wellness apps: A cross-sectional systematic assessment. BMC Med. 2015, 13, 1–13.

- [24] Möller, S.; Raake, A. Quality of Experience: Advanced Concepts, Applications and Methods; Springer: Cham, Switzerland, 2014.

- [25] Reiter, U.; Brunnström, K.; De Moor, K.; Larabi, M.C.; Pereira, M.; Pinheiro, A.; You, J.; Zgank, A. Factors influencing quality of experience. In Quality of Experience; Springer International Publishing: Cham, Switzerland, 2014; pp. 55–72.

- [26] Najjar, A.; Gravier, C.; Serpaggi, X.; Boissier, O. Modeling User Expectations & Satisfaction for SaaS Applications Using Multi-agent Negotiation. In Proceedings of the 2016 IEEE/WIC/ACM International Conference on Web Intelligence (WI), Omaha, NE, USA, 13–16 October 2016; pp. 399–406.

- [27] Najjar, A.; Serpaggi, X.; Gravier, C.; Boissier, O. Multi-agent systems for personalized QoE-management. In Proceedings of the 2016 28th International Teletraffic Congress (ITC 28), Würzburg, Germany, 12–16 September 2016; Volume 3, pp. 1–6.

- [28] Walgama, M.; Hettige, B. Chatbots: The Next Generation in Computer Interfacing—A Review. 2017. Available online: http://ir.kdu.ac.lk/bitstream/handle/345/1669/003.pdf?sequence=1&isAllowed=y (accessed on 5 March 2021).

- [29] Cahn, J. CHATBOT: Architecture, Design, & Development; University of Pennsylvania School of Engineering and Applied Science Department of Computer and Information Science: Philadelphia, PA, USA, 2017.

- [30] Bentivoglio, C.; Bonura, D.; Cannella, V.; Carletti, S.; Pipitone, A.; Pirrone, R.; Rossi, P.; Russo, G. Intelligent Agents supporting user interactions within self regulated learning processes. J. E-Learn. Knowl. Soc. 2010, 6, 27–36.

- [31] Haddadi, A. Communication and Cooperation in Agent Systems: A Pragmatic Theory; Springer: Cham, Switzerland, 1996; Volume 1056.

- [32] Chung, M.; Ko, E.; Joung, H.; Kim, S.J. Chatbot e-service and customer satisfaction regarding luxury brands. J. Bus. Res. 2018, 117, 587–595.

- [33] Calvaresi, D.; Calbimonte, A.I.J.P.; Schegg, R.; Fragniere, E.; Schumacher, M. The Evolution of Chatbots in Tourism: A Systematic Literature Review; Springer: Berlin, Germany, 2021.

- [34] Żytniewski, M. Integration of knowledge management systems and business processes using multi-agent systems. Int. J. Comput. Intell. Stud. 2016, 5, 180–196.

- [35] Alencar, M.; Netto, J.M. Improving cooperation in Virtual Learning Environments using multi-agent systems and AIML. In Proceedings of the Frontiers in Education Conference (FIE), Rapid City, South Dakota, 12–15 October 2011.

- [36] Hettige, B.; Karunananda, A. Octopus: A Multi Agent Chatbot. 2015. Available online: https://www.researchgate.net/publication/311652831_Octopus_A_Multi_Agent_Chatbot (accessed on 5 March 2021).

- [37] Calvaresi, D.; Cesarini, D.; Sernani, P.; Marinoni, M.; Dragoni, A.F.; Sturm, A. Exploring the ambient assisted living domain: A systematic review. J. Ambient. Intell. Humaniz. Comput. 2016, 8, 239–257.

- [38] Pereira, J.; Díaz, Ó. Using health chatbots for behavior change: A mapping study. J. Med. Syst. 2019, 43, 135.

- [39] Brinkman, W.P. Virtual health agents for behavior change: Research perspectives and directions. In Proceedings of the Workshop on Graphical and Robotic Embodied Agents for Therapeutic Systems, Los Angeles, CA, USA, 20–23 September 2016.

- [40] Teixeira, A.R. Social Media and Chatbots Use for Chronic Disease Patients Support: Case Study from an Online Community Regarding Therapeutic Use of Cannabis. 2019. Available online: https://repositorio-aberto.up.pt/bitstream/10216/121907/2/346390.pdf (accessed on 5 March 2021).

- [41] Lisetti, C.; Amini, R.; Yasavur, U. Now all together: Overview of virtual health assistants emulating face-to-face health interview experience. KI-Künstliche Intell. 2015, 29, 161–172.

- [42] Schueller, S.M.; Tomasino, K.N.; Mohr, D.C. Integrating human support into behavioral intervention technologies: The efficiency model of support. Clin. Psychol. Sci. Pract. 2017, 24, 27–45.

- [43] Oh, K.J.; Lee, D.; Ko, B.; Choi, H.J. A chatbot for psychiatric counseling in mental healthcare service based on emotional dialogue analysis and sentence generation. In Proceedings of the 2017 18th IEEE International Conference on Mobile Data Management (MDM), Daejeon, Korea, 29 May–1 June 2017; IEEE: Piscataway, NJ, USA, 2017; pp. 371–375.

- [44] van Heerden, A.; Ntinga, X.; Vilakazi, K. The potential of conversational agents to provide a rapid HIV counseling and testing services. In Proceedings of the 2017 International Conference on the Frontiers and Advances in Data Science (FADS), Xi’an, China, 23–25 October 2017; IEEE: Piscataway, NJ, USA, 2017; pp. 80–85.

- [45] Cheng, A.; Raghavaraju, V.; Kanugo, J.; Handrianto, Y.P.; Shang, Y. Development and evaluation of a healthy coping voice interface application using the Google home for elderly patients with type 2 diabetes. In Proceedings of the 2018 15th IEEE Annual Consumer Communications & Networking Conference (CCNC), Las Vegas, NV, USA, 12–15 January 2018; IEEE: Piscataway, NJ, USA, 2018; pp. 1–5.

- [46] Richards, D.; Caldwell, P. Improving health outcomes sooner rather than later via an interactive website and virtual specialist. IEEE J. Biomed. Health Inform. 2017, 22, 1699–1706.

- [47] Richards, D.; Caldwell, P. Gamification to improve adherence to clinical treatment advice. In Health Literacy: Breakthroughs in Research and Practice: Breakthroughs in Research and Practice; IGI Global: Pennsylvania, PA, USA, 2017; Volume 80.

- [48] Bickmore, T.W.; Puskar, K.; Schlenk, E.A.; Pfeifer, L.M.; Sereika, S.M. Maintaining reality: Relational agents for antipsychotic medication adherence. Interact. Comput. 2010, 22, 276–288.

- [49] Tanner, A.E.; Mann, L.; Song, E.; Alonzo, J.; Schafer, K.; Arellano, E.; Garcia, J.M.; Rhodes, S.D. weCARE: A social media–based intervention designed to increase HIV care linkage, retention, and health outcomes for racially and ethnically diverse young MSM. AIDS Educ. Prev. 2016, 28, 216–230.

- [50] Schmaltz, R.M.; Jansen, E.; Wenckowski, N. Redefining critical thinking: Teaching students to think like scientists. Front. Psychol. 2017, 8, 459.

- [51] Ramo, D.E.; Thrul, J.; Chavez, K.; Delucchi, K.L.; Prochaska, J.J. Feasibility and quit rates of the Tobacco Status Project: A Facebook smoking cessation intervention for young adults. J. Med. Internet Res. 2015, 17, e291.

- [52] Cole-Lewis, H.; Perotte, A.; Galica, K.; Dreyer, L.; Griffith, C.; Schwarz, M.; Yun, C.; Patrick, H.; Coa, K.; Augustson, E. Social network behavior and engagement within a smoking cessation Facebook page. J. Med. Internet Res. 2016, 18, e205.

- [53] Cheung, Y.T.D.; Chan, C.H.H.; Lai, C.K.J.; Chan, W.F.V.; Wang, M.P.; Li, H.C.W.; Chan, S.S.C.; Lam, T.H. Using WhatsApp and Facebook online social groups for smoking relapse prevention for recent quitters: A pilot pragmatic cluster randomized controlled trial. J. Med. Internet Res. 2015, 17, e238.

- [54] Pechmann, C.; Pan, L.; Delucchi, K.; Lakon, C.M.; Prochaska, J.J. Development of a Twitter-based intervention for smoking cessation that encourages high-quality social media interactions via automessages. J. Med. Internet Res. 2015, 17, e50.

- [55] Brixey, J.; Hoegen, R.; Lan, W.; Rusow, J.; Singla, K.; Yin, X.; Artstein, R.; Leuski, A. SHIHbot: A Facebook chatbot for sexual health information on HIV/AIDS. In Proceedings of the 18th Annual SIGdial Meeting on Discourse and Dialogue, Saarbrücken, Germany, 15–17 August 2017; pp. 370–373.

- [56] Vita, S.; Marocco, R.; Pozzetto, I.; Morlino, G.; Vigilante, E.; Palmacci, V.; Fondaco, L.; Kertusha, B.; Renzelli, M.; Mercurio, V.; et al. The ’doctor apollo’ chatbot: A digital health tool to improve engagement of people living with HIV. J. Int. AIDS Soc. 2018, 21, e25187.

- [57] Angara, P.; Jiménez, M.; Agarwal, K.; Jain, H.; Jain, R.; Stege, U.; Ganti, S.; Müller, H.A.; Ng, J.W. Foodie fooderson a conversational agent for the smart kitchen. In Proceedings of the 27th Annual International Conference on Computer Science and Software Engineering, Markham, ON, Canada, 6–8 November 2017; pp. 247–253.

- [58] Hsu, P.; Zhao, J.; Liao, K.; Liu, T.; Wang, C. AllergyBot: A Chatbot technology intervention for young adults with food allergies dining out. In Proceedings of the 2017 CHI Conference Extended Abstracts on Human Factors in Computing Systems, Denver, CO, USA, 6–11 May 2017; pp. 74–79.

- [59] Ghandeharioun, A.; McDuff, D.; Czerwinski, M.; Rowan, K. Towards understanding emotional intelligence for behavior change chatbots. In Proceedings of the 2019 8th International Conference on Affective Computing and Intelligent Interaction (ACII), Cambridge, UK, 3–6 September 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 8–14.

- [60] Ziebarth, S.; Kizina, A.; Hoppe, H.U.; Dini, L. A serious game for training patient-centered medical interviews. In Proceedings of the 2014 IEEE 14th International Conference on Advanced Learning Technologies (ICALT), Athens, Greece, 7–10 July 2014; pp. 213–217.

- [61] Palanica, A.; Flaschner, P.; Thommandram, A.; Li, M.; Fossat, Y. Physicians’ perceptions of chatbots in health care: Cross-sectional web-based survey. J. Med. Internet Res. 2019, 21, e12887.

- [62] Eysenbach, G.; Group, C.E. CONSORT-EHEALTH: Improving and standardizing evaluation reports of Web-based and mobile health interventions. J. Med. Internet Res. 2011, 13, e126.

- [63] Kieffer, S.; Ko, A.; Ko, G.; Gruson, D. Six case-based recommendations for designing mobile health applications and chatbots. Clin. Chim. Acta 2019, 493, S29–S30.

- [64] Calvaresi, D.; Marinoni, M.; Dragoni, A.F.; Hilfiker, R.; Schumacher, M. Real-time multi-agent systems for telerehabilitation scenarios. Artif. Intell. Med. 2019, 96, 217–231.

- [65] Palanca, J.; Terrasa, A.; Julian, V.; Carrascosa, C. SPADE 3: Supporting the New Generation of Multi-Agent Systems. IEEE Access 2020, 8, 182537–182549.

- [66] Füzéki, E.; Groneberg, D.A.; Banzer, W. Physical activity during COVID-19 induced lockdown: Recommendations. J. Occup. Med. Toxicol. 2020, 15, 1–5.

| # | Question |
|---|---|
| 1 | How difficult is it for you to keep your balance when you stand in a quiet environment? |
| 2 | How difficult is it for you to keep your balance when you walk around in the apartment? |
| 3 | How difficult is it for you to keep your balance when you climb up a stair? |
| 4 | How difficult is it for you to keep your balance when you reach for an object that is on the table far in front of you? |
| 5 | How difficult is it for you to keep your balance when you pick something up off the ground? |
| 6 | How difficult is it for you to keep your balance when you stand on tiptoe to get a cup from the cupboard? |
| 7 | How difficult is it for you to keep your balance when you are being pushed by your pet or by someone or when you stumble over something? |
| 8 | How difficult is it for you to keep your balance when you carry a package to the apartment? |
| 9 | How difficult is it for you to keep your balance when you step down a stair? |
| 10 | How difficult is it for you to keep your balance when you walk and look back? |
| 11 | How difficult is it for you to keep your balance when you walk across the wet bathroom floor? |

| Individual | Difficulty Entry-Level | Description |
|---|---|---|
| | 7 | Difficult to keep balance being pushed. |
| | 4 | Difficult to reach objects far on a table. |
| | 5 | Difficult to pick something from the ground. |
| | 8 | Difficult to keep the balance while carrying a medium/big package. |
| | 8 | Difficult to keep the balance while carrying a medium/big package. |
| | 5 | Difficult to pick something from the ground. |
| | 9 | Difficult to keep balance stepping downstairs. |
| | 2 | Difficult to constantly keep balance when walking. |
| | 7 | Difficult to keep balance being pushed. |
| | 3 | Difficult to keep balance when climbing stairs. |
| | 4 | Difficult to reach objects far on a table. |
| | 10 | Difficult to keep balance when walking while looking back. |
| | 10 | Difficult to keep balance when walking while looking back. |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Calvaresi, D.; Calbimonte, J.-P.; Siboni, E.; Eggenschwiler, S.; Manzo, G.; Hilfiker, R.; Schumacher, M. EREBOTS: Privacy-Compliant Agent-Based Platform for Multi-Scenario Personalized Health-Assistant Chatbots. Electronics 2021, 10, 666. https://doi.org/10.3390/electronics10060666
