Abstract

Low-cost, high-performance embedded devices are proliferating and a plethora of new platforms are available on the market. Some of them either have embedded GPUs or can be connected to external Machine Learning (ML) hardware accelerators. These enhanced hardware features enable new applications in which AI-powered smart objects can effectively and pervasively run distributed ML algorithms in real time, shifting part of the raw data analysis and processing from cloud or edge to the device itself. In such a context, Artificial Intelligence (AI) can be considered the backbone of the next generation of Internet of Things (IoT) devices, which will no longer merely be data collectors and forwarders, but truly “smart” devices with built-in data wrangling and data analysis features that leverage lightweight machine learning algorithms to make autonomous decisions in the field. This work thoroughly reviews and analyses the most popular ML algorithms, with particular emphasis on those that are more suitable to run on resource-constrained embedded devices. In addition, several machine learning algorithms have been built on top of a custom multi-dimensional array library. The designed framework has been evaluated and its performance stressed on Raspberry Pi III and IV embedded computers.

1. Introduction

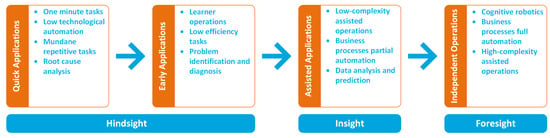

The Internet of Things (IoT) and Artificial Intelligence (AI) are probably two of the most popular research topics at present, driving the interest of both the academic and industrial sectors. The reason for this is their transversality, which makes them suitable for almost every existing application. IoT and AI have been successfully applied to several research and industrial fields, including health [1], life science [2], smart cities [3,4], environmental monitoring [5,6], precision agriculture [7,8], and education [4,9]. Figure 1 depicts the Machine Learning (ML) adoption process, used to target several kinds of problem or application. Machine learning can be effectively used to:

Figure 1.

Machine learning (ML) technology adoption process (adapted from [10]).

- Perform an a posteriori analysis of a given problem in order to detect business process issues and perform optimisation tasks;

- Gain more insight into a given problem or improve business processes by automating simple and repetitive tasks and assisting the end user in the decision-making process;

- Perform long-term optimisation by fully automating all the business processes and applying complex predictive techniques.

Both IoT and AI can be considered empowering technologies and, as such, they can converge into a unique framework that leverages their symbiotic relationship to improve, optimise and automate almost every business process.

Moreover, the rapid development of new hardware, software and communication technologies experienced in recent years has fostered the proliferation of low-cost devices and asynchronous communication protocols based on publish/subscribe mechanisms such as Message Queue Telemetry Transport (MQTT) and Constrained Application Protocol (CoAP), which are particularly suitable for IoT applications.

The new sensory boards available on the market now have enough computing power to run complex tasks locally, thus extending the dew computing paradigm to smart objects [11] and allowing for local processing and storage of the measured data before making them available to edge or cloud infrastructure for further processing and analysis.

According to a recent forecast (Source International Data Corporation (IDC), report available on-line at https://www.idc.com/getdoc.jsp?containerId=prUS45213219 (accessed on 10 February 2021)), by 2025, there will be 41.6 billion connected IoT devices generating 79.4 zettabytes (ZB) of data. Thus, IoT will be among the most significant data sources in the upcoming years. However, the effective management of data generated by IoT is not the only concern; besides data volume, IoT is also characterised by the extremely dynamic nature of its data. More specifically, IoT generates time-dependent geolocalised data with a variety of formats, modalities and amounts. Extracting knowledge from such a large amount of time-dependent and heterogeneous data is a challenging task. In this new, data-centric context, data science and AI will play a crucial role.

Data science is the combination of several scientific fields relying on data mining, big data, machine learning and statistical techniques, aimed at making raw data meaningful through pattern matching.

Data analysis is the process of inspecting, cleansing and modelling data, and involves several steps, from data wrangling (namely, remapping raw data into another format more suitable for analysis), identification of the data attributes (either categorical or quantitative) and choice of a suitable data model (such as, for example, classification, clustering or neural networks), to applying efficient algorithms to match data characteristics.

Machine learning (ML) and IoT are converging [12,13] and the availability of high-performance embedded computing boards on the market is fostering the development of the embedded AI concept. In this new paradigm, data analysis and ML algorithms run in a distributed fashion across the complete IoT stack from device to cloud. In such a context, edge devices are not only a data source, since they must also perform simple data analysis on the raw data, provided they have enough computing power to carry out this task.

Some possible approaches to enabling embedded AI on edge devices consist of either developing dedicated hardware [14,15] or leveraging low-, mid- and high-end embedded computing boards to execute ad hoc frameworks that implement a classification or a prediction algorithm targeting a specific application [16,17,18,19]. Other solutions rely either on architectures where the edge/fog layer is in charge of running ML and sensor fusion algorithms on the data collected from the edge devices [20,21,22,23,24], or on cloud services that automatically compile pre-trained ML inference models into representations which are more suitable for running on resource-constrained edge devices [25].

There are several open-source ML frameworks available, with Tensorflow (https://www.tensorflow.org (accessed on 10 February 2021)) being the one with the largest community and user base [26]. The Tensorflow suite also includes a lite version called Tensorflow Lite (TfLite). However, unlike a full-fledged ML framework such as Tensorflow, TfLite is rather a strongly opinionated ML platform that includes a runtime environment and a set of tools to convert complex Tensorflow models into simplified ones that can run on edge devices using the platform’s built-in algorithms [23]. A flexible approach to embedding AI into edge devices requires the features of a full-fledged framework; unfortunately, the available ML frameworks cannot run on constrained devices, and the complexity can be managed either at the cloud layer by leveraging a model compilation process to match the hardware requirements of the target platform [25], or at the edge layer by running the heavy tasks of an ML framework on an edge server [23].

To the authors’ knowledge, no attempt has been made to date to design and implement a lightweight and general purpose cross-platform ML framework suitable for running on edge devices. The implementation presented in this work leverages Node.js (https://nodejs.org/ (accessed on 10 February 2021)) and JavaScript to run seamlessly on a wide range of hardware platforms and operating systems, including extremely resource-constrained boards such as Tessel 2 (https://tessel.io (accessed on 10 February 2021)) and Neonious One (https://www.neonious.com/neoniousOne (accessed on 10 February 2021)). The framework architecture is loosely coupled and comprises several independent modules that implement core features for tensor and matrix manipulation, data wrangling, data analysis, etc., allowing the execution of the ML flow depicted in Figure 2 on the device. Each module exposes its own APIs and can operate either alone or jointly with the other modules, wrapped by a common middleware layer. This approach allows the implementation of scenarios in which each module can be containerized, orchestrated and scaled independently as a microservice using Kubernetes distributions, such as MicroK8s (https://microk8s.io (accessed on 10 February 2021)) or K3s (https://k3s.io (accessed on 10 February 2021)), suitable for running on edge devices [27,28].

Figure 2.

Typical ML workflow.

Data science offers a rich set of algorithms to deal with classification, prediction and analysis; however, not all of them are suitable to run in constrained devices, as is required in IoT scenarios. In such a context, the objectives of this work are:

- Evaluate the features and requirements that are desirable in a modern ML framework targeted to IoT applications;

- Evaluate the impact of the ML software infrastructure on embedded hardware commonly used for IoT applications;

- Identify the constraints imposed by the underlying hardware on the ML algorithms used in the proposed framework.

The rest of the paper is organised as follows. Section 2 reviews the most common machine learning techniques and algorithms, putting particular emphasis on those that are more suitable for IoT and embedded applications, and that should be implemented as a library in the framework described in this work. Section 3 deals with the requirements and the features that are desirable in a modern ML framework targeted to embedded devices and introduces the software architecture of a novel ML engine targeted to IoT and embedded applications. Section 4 describes the test setup deployed to evaluate the performance of the ML framework introduced in this work and briefly reviews the hardware characteristics of the embedded microcontroller boards used in the experiments. Section 5 delves into the analysis of the experimental results, evaluating the pros and cons of the proposed solution and proposing possible improvements to be targeted in future research. Finally, Section 6 summarises the key aspects of this work.

2. A Review of Machine Learning Algorithms and Applications

The term Machine Learning (ML) refers to algorithms with the ability to learn and autonomously perform a specific task, relying on pattern-matching and data inference. More specifically, an ML algorithm is designed to build a mathematical and statistical model based on a training dataset. This model is then used either to perform autonomous predictions or to make decisions without human intervention. Machine learning algorithms are used in a plethora of applications including spam detection, fraud detection, precision agriculture, weather forecasting, banking, computer vision, handwriting recognition, speech recognition, etc. Although machine learning is not a new research field, it is now experiencing a resurgence of interest in the scientific community and can be considered one of the fastest-growing fields in computer science. Often, the terms “artificial intelligence” and “machine learning” are used interchangeably; however, they are not the same thing. Machine learning can be considered a sub-field of artificial intelligence, in which the machines have the ability to learn from the experience they acquire by using statistical methods to analyse a dataset and infer a given behaviour for a certain problem.

Figure 2 depicts the typical workflow followed when defining a machine learning problem and proposing a solution.

The ML algorithm generates a mathematical model from the ingested data. The learning process is iterative. At the first iteration, the model is trained using historic data; however, when the model is deployed in a production environment, new data are ingested and used to train the model on a regular basis. The training operation depends on the size of the dataset and can be very time-consuming. For this reason, especially in resource-constrained environments, it is not advisable to start the training process from scratch at each iteration. Luckily, there are ML algorithms that are able to train the model incrementally, significantly reducing the training time [29]. The quality of predictions can be improved with a feedback data ingestion loop, in which real and predicted data can be used to improve the reliability and efficiency of the prediction model in the next iteration.

When all the steps of the process depicted in Figure 2 can be completely automated, the machine is fast enough, and the system feedback is available and ingested before a new prediction is performed, it is possible to train the ML algorithm at each new iteration. A machine learning system using this method is called an online learning machine.
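The incremental training loop described above can be sketched as follows. This is only an illustration under stated assumptions: a one-feature linear model updated by stochastic gradient descent, with a hypothetical class name (`OnlineLinearRegressor`), method name (`partialFit`) and learning rate that are not part of the framework presented in this work.

```javascript
// Minimal sketch of incremental (online) training, NOT the framework's API.
// Assumption: a one-feature linear model y = w * x + b trained with
// stochastic gradient descent, one example at a time.
class OnlineLinearRegressor {
  constructor(learningRate = 0.05) {
    this.w = 0;
    this.b = 0;
    this.learningRate = learningRate;
  }

  predict(x) {
    return this.w * x + this.b;
  }

  // Update the model with a single new observation instead of
  // retraining from scratch on the whole history.
  partialFit(x, y) {
    const error = this.predict(x) - y;
    this.w -= this.learningRate * error * x;
    this.b -= this.learningRate * error;
    return error;
  }
}

// Feedback loop: predict, observe the real value, then update the model.
const model = new OnlineLinearRegressor();
for (let epoch = 0; epoch < 2000; epoch++) {
  for (const x of [0, 1, 2, 3]) {
    const observed = 2 * x + 1; // synthetic data following y = 2x + 1
    model.partialFit(x, observed);
  }
}
console.log(model.w.toFixed(2), model.b.toFixed(2));
```

Each call to `partialFit` costs a constant amount of work regardless of how many examples were seen before, which is the property that makes this style of training attractive on constrained devices.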

Two major issues must be addressed properly when defining an ML model: overfitting, and data preparation. Overfitting [30] occurs when the ML model matches the training data too closely, and hence lacks generality and has a negative impact on performance when analysing new data. The interested reader may refer to Appendix A for a brief discussion on overfitting and possible solutions. Data preparation and feature engineering [31] are of paramount importance in the machine learning pipeline. ML algorithms generate mathematical models that fit the training data in order to perform predictions. Features are the inputs of these mathematical models. A feature is a measurable property of the observed phenomenon; thus, feature engineering is the process of extracting measurable properties from the raw data and transforming them into a format that is more suitable for the ML model.

Constructing a valid dataset is a complex process that must go through several steps: first of all, collected raw data must be analysed and key features identified; if the data amount is too large, an adequate sampling strategy must be chosen to extract meaningful samples from the selected features. Sampling must be carefully performed and account for possible data imbalances that could lead to skewed datasets and, in turn, bias the prediction. Finally, sampled data must be randomized and split into training, validation and testing sets for the ML model. The selected data must also undergo a mandatory transformation process (e.g., converting a non-numeric feature into a numeric one and resizing inputs to a fixed size). Optionally, quality transformations can also be applied to the dataset. Quality transformations are not mandatory but, in some cases, can help to improve the quality of a prediction, and may include tokenization, normalization of the numeric features, and introducing non-linearities into the feature space.
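The randomisation, splitting and normalisation steps can be illustrated with a short sketch. The helper names (`createRng`, `shuffle`, `split`, `minMaxScale`), the 60/20/20 split and the choice of min-max scaling are assumptions made for the example; they do not describe the Dataframe module of the framework.

```javascript
// Minimal sketch of dataset preparation, NOT the framework's Dataframe API.
// Assumptions: a seeded linear congruential generator for reproducible
// shuffling, a 60/20/20 split, and min-max scaling as quality transformation.
function createRng(seed) {
  let state = seed >>> 0;
  return () => {
    state = (state * 1664525 + 1013904223) % 4294967296;
    return state / 4294967296;
  };
}

// Fisher-Yates shuffle performed on a copy of the input rows.
function shuffle(rows, rng) {
  const out = rows.slice();
  for (let i = out.length - 1; i > 0; i--) {
    const j = Math.floor(rng() * (i + 1));
    [out[i], out[j]] = [out[j], out[i]];
  }
  return out;
}

// Split the rows into training, validation and testing sets.
function split(rows, trainRatio = 0.6, validationRatio = 0.2) {
  const nTrain = Math.round(rows.length * trainRatio);
  const nValidation = Math.round(rows.length * validationRatio);
  return {
    train: rows.slice(0, nTrain),
    validation: rows.slice(nTrain, nTrain + nValidation),
    test: rows.slice(nTrain + nValidation),
  };
}

// Min-max normalisation of a numeric feature to the range [0, 1].
function minMaxScale(values) {
  const min = Math.min(...values);
  const max = Math.max(...values);
  const range = max - min || 1; // avoid division by zero for constant features
  return values.map((v) => (v - min) / range);
}

// Hypothetical raw readings used only for illustration.
const raw = Array.from({ length: 10 }, (_, i) => ({ id: i, temperature: 15 + i }));
const sets = split(shuffle(raw, createRng(42)));
const scaled = minMaxScale(raw.map((r) => r.temperature));
```

Seeding the generator keeps the split reproducible between runs, which simplifies the comparison of different models trained on the same data.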

There are four main categories of machine learning algorithms: supervised learning, unsupervised learning, semi-supervised learning, and reinforcement learning. All of them will be thoroughly reviewed in the following sections.

2.1. Supervised Learning

In supervised learning [32], the dataset $D$ is a finite set of $N$ feature vectors $\mathbf{x}_i$; namely

$$D = \{\mathbf{x}_i\}_{i=1}^{N} \qquad (1)$$

Each feature vector $\mathbf{x}_i$ contains a set of properties $x_i^{(j)}$ (with $j = 1, \ldots, M$) that describes an element of the dataset. For example, if each element of the dataset represents a house, the $x_i^{(j)}$’s could represent the location, the number of rooms, the square meters, etc. A label $y_i$ is assigned to each element of the dataset, which leads to a set of examples formed by pairs of feature vectors and labels, namely

$$\{(\mathbf{x}_i, y_i)\}_{i=1}^{N} \qquad (2)$$

The label can be considered as the output associated with a given example. The output can be either numerical or categorical. In the former case, the learning process is called a regression; in the latter case, it is called a classification.

The objective of a regression problem is to predict the value of the label $y$ (also called a target), given an unlabelled example $\mathbf{x}$. Conversely, the objective of a classification problem is to automatically assign a class to a label $y$, given an unlabelled example $\mathbf{x}$. In this case, $y \in \{c_1, \ldots, c_K\}$, where each $c_k$ represents a class or category to which an example may belong.
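As a concrete instance of classification from labelled examples, the sketch below applies a k-nearest-neighbour rule to a toy house dataset. The dataset, the function names (`euclideanDistance`, `knnClassify`) and the value of k are hypothetical and chosen only for illustration; the code is not the implementation provided by the framework.

```javascript
// Minimal k-nearest-neighbour sketch, NOT the framework's implementation.
// Each example is a pair { x: featureVector, y: label }.
function euclideanDistance(a, b) {
  return Math.sqrt(a.reduce((sum, ai, i) => sum + (ai - b[i]) ** 2, 0));
}

function knnClassify(examples, query, k = 3) {
  const neighbours = examples
    .map((e) => ({ label: e.y, distance: euclideanDistance(e.x, query) }))
    .sort((p, q) => p.distance - q.distance)
    .slice(0, k);

  // Majority vote among the k closest labelled examples.
  const votes = new Map();
  for (const n of neighbours) {
    votes.set(n.label, (votes.get(n.label) || 0) + 1);
  }
  return [...votes.entries()].sort((p, q) => q[1] - p[1])[0][0];
}

// Hypothetical house dataset: [number of rooms, surface in square meters].
const houses = [
  { x: [2, 45], y: "small" },
  { x: [2, 50], y: "small" },
  { x: [3, 60], y: "small" },
  { x: [5, 120], y: "large" },
  { x: [6, 150], y: "large" },
  { x: [5, 130], y: "large" },
];

// Assign a class to an unlabelled example.
const predicted = knnClassify(houses, [4, 125]);
console.log(predicted);
```

In practice the features would be normalised first, since the surface value dominates the distance when the two features have very different scales.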

2.2. Unsupervised Learning

In supervised learning, a training dataset (i.e., a set of examples used for learning, whose correct outputs are known) is used to train a mathematical model that infers the relationship between system inputs and outputs. Conversely, in unsupervised learning, a set of unlabelled examples, $\{\mathbf{x}_i\}_{i=1}^{N}$, is used to gain a deeper understanding of the inherent data distribution and properties. In general, unsupervised learning is used for exploratory data analysis and dimensionality reduction. Dimensionality reduction refers to the methods used to reduce the number of features of a given example in order to ease further data processing (generally to pre-train supervised algorithms).

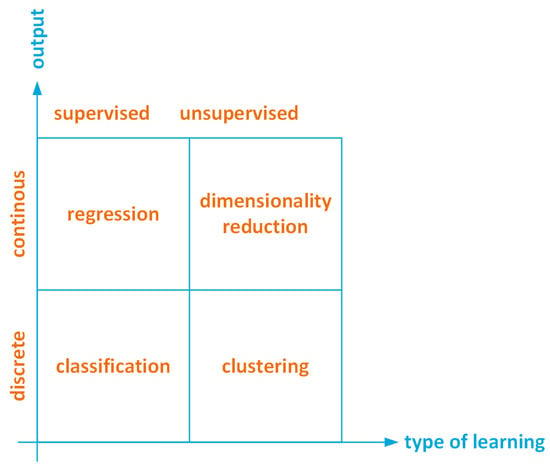

Figure 3 summarizes the four major classes of ML algorithms. The algorithms are classified according to the type of output (either continuous or discrete) and the type of learning (either supervised or unsupervised).

Figure 3.

Types of learning and ML classes of algorithms.
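To illustrate how structure can be extracted from unlabelled examples, the following sketch runs k-means clustering on a handful of one-dimensional readings. The function name `kMeans`, the sample values and the caller-supplied initial centroids are assumptions made to keep the example short and deterministic; this is not the framework's implementation.

```javascript
// Minimal k-means sketch on unlabelled 1-D examples, NOT the framework's code.
// Assumption: initial centroids are supplied by the caller so that the run
// is deterministic.
function kMeans(points, initialCentroids, iterations = 10) {
  let centroids = initialCentroids.slice();
  let assignment = [];
  for (let it = 0; it < iterations; it++) {
    // Assignment step: attach each point to its nearest centroid.
    assignment = points.map((p) => {
      let best = 0;
      for (let c = 1; c < centroids.length; c++) {
        if (Math.abs(p - centroids[c]) < Math.abs(p - centroids[best])) best = c;
      }
      return best;
    });
    // Update step: move each centroid to the mean of its points.
    centroids = centroids.map((old, c) => {
      const members = points.filter((_, i) => assignment[i] === c);
      return members.length
        ? members.reduce((s, v) => s + v, 0) / members.length
        : old; // an empty cluster keeps its previous centroid
    });
  }
  return { centroids, assignment };
}

// Hypothetical sensor readings forming two groups.
const readings = [1.0, 1.2, 0.8, 9.0, 9.5, 10.5];
const clusters = kMeans(readings, [0, 5]);
console.log(clusters.assignment, clusters.centroids);
```

No label is used at any point: the two groups emerge only from the distances between the readings.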

2.3. Semi-Supervised Learning

Semi-supervised learning algorithms [33] are a class of algorithms able to learn from a partially labelled dataset. This approach is particularly beneficial when manipulating large datasets, since labelling is an expensive operation. Semi-supervised algorithms can be used when it is necessary to categorize large amounts of data, relying only on a few labelled examples. Typical applications include object segmentation, similarity detection and automatic labelling.

2.4. Reinforcement Learning

Reinforcement learning algorithms [34,35] rely on a decision-making agent that supervises the learning process. The learning algorithm samples the environment state (i.e., a vector of unlabelled features) and makes a decision according to the sampled data in a trial-and-error fashion. The decision leads to an action, and to a reward from the environment. The better the action, the higher the reward. The action to take is decided by a policy function $\pi$ of the feature vector $\mathbf{x}$. The policy function is a mapping from the feature space $\mathcal{X}$ to a finite set of actions $\mathcal{A}$, namely

$$\pi : \mathcal{X} \rightarrow \mathcal{A} \qquad (3)$$

The reinforcement learning algorithm can either be model-based [36,37] or model-free [38]. Model-free algorithms are more popular at present, as they are easier to implement and able to converge faster to an optimal solution for large-scale Markov Decision Processes (MDPs).
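A model-free approach can be illustrated with tabular Q-learning, in which the policy is derived from a table of action values that is refined after every observed reward. The sketch below is hedged accordingly: the state and action spaces are tiny and discrete, the function names (`createQTable`, `policy`, `update`) and the values of the learning rate and discount factor are hypothetical, and the exploration strategy is omitted.

```javascript
// Minimal tabular Q-learning sketch (model-free), NOT the framework's code.
// Assumptions: small discrete state/action spaces, learning rate alpha and
// discount factor gamma chosen arbitrarily; exploration is left out.
function createQTable(nStates, nActions) {
  return Array.from({ length: nStates }, () => new Array(nActions).fill(0));
}

// Greedy policy: map a state to the action with the highest estimated value.
function policy(qTable, state) {
  const values = qTable[state];
  return values.indexOf(Math.max(...values));
}

// Q-learning update:
// Q(s, a) += alpha * (reward + gamma * max over a' of Q(s', a') - Q(s, a)).
function update(qTable, state, action, reward, nextState, alpha = 0.5, gamma = 0.9) {
  const bestNext = Math.max(...qTable[nextState]);
  qTable[state][action] += alpha * (reward + gamma * bestNext - qTable[state][action]);
}

// Hypothetical two-state environment: action 1 in state 0 yields a reward of 1
// and leads to state 1; action 0 yields no reward and stays in state 0.
const q = createQTable(2, 2);
update(q, 0, 1, 1, 1);
update(q, 0, 0, 0, 0);
console.log(q[0], policy(q, 0));
```

The update needs only the observed transition, not a model of the environment, which is why this family of algorithms is comparatively easy to implement.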

2.5. Machine Learning for IoT

IoT technology can leverage machine learning algorithms as the enabling technology for a wide number of applications [15,39,40,41,42,43]. The choice of the right machine learning (ML) algorithm basically depends on the use one wants to make of the collected data, and on the design requirements in terms of accuracy, linearity, number of parameters and hyperparameters, number of features and training time. These requirements can be even more stringent if the algorithm is supposed to run in hardware-constrained embedded devices or smart objects.

ML algorithms are often categorized either by learning style (supervised, unsupervised, etc.) or by similarities (regression algorithms, instance-based algorithms, regularization algorithms, etc.); however, in the context of IoT, a classification based on the application domain and the ML technique used can help to gain a much deeper insight into the role of ML in the IoT ecosystem as an empowering technology.

Table 1 categorizes several ML algorithms that are suitable for execution on embedded devices according to their application domain and the ML technique (i.e., classification, prediction or learning agent). This classification does not claim to be exhaustive; for a more comprehensive treatment, the interested reader may refer to [43,44,45]. In particular, Ref. [45] is a comprehensive survey that explores the applications of ML algorithms to the whole IoT stack, including Cloud and Edge layers. To date, ML algorithms have mainly been used in the IoT device context for classification, prediction and device management purposes. Classification and prediction tasks rely, almost exclusively, on supervised and deep learning algorithms, whereas device management tasks rely on reinforcement learning techniques (both model-free and model-based). The supervised algorithms most used at the device level are: Support Vector Machine (SVM), K-Nearest Neighbour (KNN), Decision Tree (DT), Naive Bayes (NB), Hierarchical Mixture of Naive Bayes (HMNB), Regression Tree (RT) and Multiple Linear Regression (MLR). Unsupervised techniques such as Hidden Markov Model (HMM) and K-Means have also been reported, as well as ensemble learning techniques such as Random Forest (RF).

Table 1.

ML algorithms used in internet of things (IoT) applications.

The deep learning techniques used in the IoT devices rely on Artificial Neural Networks (ANN) and Recurrent Neural Networks (RNN). Finally, the reinforcement learning techniques rely on Q-learning [38], Upper Confidence Bound (UCB) [61], Post-Decision State (PDS) [62], and Dyna [36,37].

ANNs can also be used to improve the quality of a prediction by tackling the problem of missing data. Techniques based on General Regression Neural Networks (GRNN) and Successive Geometric Transformation Models (SGTM) [63,64] have been proven to be very effective when dealing with the missing data problem.

The data collected can be used to predict a value or an outcome, to choose between two or more categories, to classify data or images, to analyse a text, to generate recommendations or find unusual occurrences such as in fraud detection, and to analyse and discover data structure. However, regardless of the target application, a trade-off must be found among the design constraints in order to match the expected results. Key requirements for ML models are reviewed in Appendix B.

3. Software Architecture

Machine learning is a very active field of research in both industrial and academic environments; consequently, there is a plethora of tools and libraries for ML and deep learning available [65]. However, all of them have been designed to manage huge amounts of data and rely on expensive hardware infrastructure to reduce the computation time by either increasing the parallelism (using, for example, computational models based on MapReduce [66]) or using GPUs as hardware accelerators.

The number of interconnected smart devices in the new IoT paradigm is increasing rapidly and there is a serious risk of saturating the network connection with the traffic between smart objects and the cloud infrastructure that has to process and analyse the sensed data. Consequently, part of the computational complexity must be shifted from the cloud to edge infrastructure and end devices, opening the path to a new computational paradigm for IoT. In the new computational model envisaged, raw data processing is mainly performed locally and in a hierarchical fashion from device to cloud, offloading the computation to the next level only when necessary [24,67]. However, to the authors’ knowledge, too little effort has been made to date to develop ML frameworks suitable for embedded devices.

Solutions based on Tensorflow Lite are still not fully implementable in resource-constrained embedded devices [24]. In addition, Tensorflow Lite is not a framework but a runtime environment that allows the running of pre-trained deep-learning models on mobile devices and microcontroller boards; thus, it lacks the flexibility of a full-fledged development framework and forces the programmers to develop applications only in Python or C/C++.

In recent years, Node.js has become a de facto industrial standard for the development of real-time distributed embedded systems and IoT applications [68,69,70,71] due to its suitability for running in embedded devices, its ability to implement both server and client applications, its low memory footprint and its asynchronous nature, which all make it very appealing for real-time applications. Despite all these advantages, there is still a widespread misconception that JavaScript (and hence Node.js) is not suitable for computation-intensive applications such as machine and deep learning, and Python is still the preferred language. Tensorflow.js (https://www.tensorflow.org/js (accessed on 10 February 2021)) is a first attempt to provide the JavaScript programming community with an industrial-grade tool to develop ML-based applications in JavaScript. Nonetheless, Tensorflow.js suffers from the same limitations as its Python counterpart; namely, it is not a framework targeted towards embedded and resource-constrained devices.

The dominant position of Node.js and JavaScript in the IoT panorama and the necessity of shifting the computation from cloud to edge devices require the development of a lightweight JavaScript ML framework that is able to run on embedded devices.

This section addresses the requirements and the architecture of such a framework. The rest of the section describes the modules that make up the ML engine.

3.1. Overall Architecture

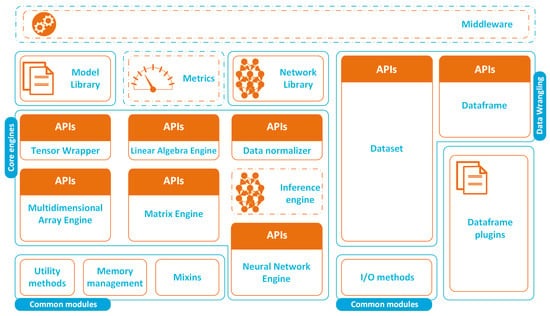

Figure 4 depicts the architecture of the ML framework described in this work. The software comprises several independent modules that expose Application Programming Interfaces (APIs). The core engine implements methods to manipulate multidimensional arrays, tensors and matrices, as well as first- and second-order Artificial Neural Networks [72].

Figure 4.

Software architecture of the ML framework (dotted lines denote modules still under development).

The framework also provides a small library of common ML algorithms and a neural network. This library is an abstraction layer that sits on top of the core engines and allows the programmer to develop applications without directly accessing the core APIs.

A typical ML pipeline, like the one depicted in Figure 2, also includes a data preparation step prior to the execution of the machine learning algorithm. Data preparation comprises several tasks that have to be performed on raw data in order to extract meaningful information. These include data normalization, data structuring and data aggregation. The framework provides APIs to manage datasets (basically, import and export data) and to manipulate data in tabular form through the Dataframe module.

3.2. Multidimensional Array Engine

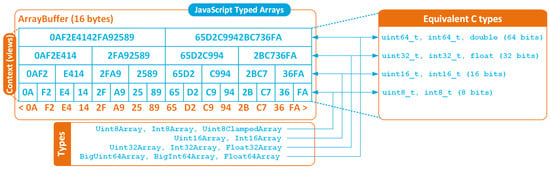

The multidimensional array engine is inspired by the NumPy package and provides primitives to efficiently manipulate large multidimensional arrays of any size. The engine provides getter and setter methods as well as methods for array slicing. In order to maximise the efficiency and the flexibility of the implementation, the engine has been built on top of JavaScript typed arrays. Typed arrays provide high-level views on top of a binary raw-data buffer of fixed size, and allow JavaScript to manage data in multiple integer (both signed and unsigned) and floating point (both single- and double-precision) formats, as depicted in Figure 5.

Figure 5.

JavaScript Typed Arrays: physical data structure, context and equivalence with C types.
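The relationship between a raw binary buffer and its typed views can be shown with standard JavaScript alone. The snippet below uses only built-in objects (`ArrayBuffer`, `Float64Array`, `Uint8Array`, `Int32Array`); the variable names are arbitrary and the buffer size is chosen for illustration.

```javascript
// Typed-array views sharing the same fixed-size binary buffer.
const buffer = new ArrayBuffer(16);         // 16 raw bytes
const asFloat64 = new Float64Array(buffer); // 2 elements of 8 bytes each
const asUint8 = new Uint8Array(buffer);     // 16 elements of 1 byte each

// Writing through one view is visible through every other view.
asFloat64[0] = 1.0;

// A view can also start at a byte offset and cover only part of the buffer:
// here bytes 8..15 are interpreted as two signed 32-bit integers.
const secondHalf = new Int32Array(buffer, 8, 2);
secondHalf[0] = -1;

console.log(asFloat64.length, asUint8.length, Float64Array.BYTES_PER_ELEMENT);
console.log(asUint8[8], asUint8[9], asUint8[10], asUint8[11]); // 255 255 255 255
```

No data are copied when a view is created; each view only changes how the same bytes are interpreted, which is the property the array engine builds upon.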

A multidimensional array of $k$ dimensions is characterised by its shape $s = (s_0, s_1, \ldots, s_{k-1})$; namely, a tuple with the number of array elements along a given axis (or dimension). More specifically, $s_i$ (with $s_i \in \mathbb{N}$ and $0 \le i \le k-1$) represents the number of elements along the axis $i$.

Array data are stored internally in row-major format. The smaller indices represent the higher dimensions; thus, referring to the previous array shape definition, the pair $(s_0, s_1)$ represents, respectively, the number of rows and columns of a matrix.

The engine performs a mapping $f$ from a multidimensional array with a given shape to a one-dimensional strided array, namely

$$f : (n_0, n_1, \ldots, n_{k-1}) \mapsto j \qquad (4)$$

where $0 \le n_i < s_i$ (with $0 \le i \le k-1$) and the one-dimensional array holds $\prod_{i=0}^{k-1} s_i$ elements. In other words, the multidimensional array and its 1-D mapping have different shapes but the same number of elements.

The stride property is a tuple $\sigma = (\sigma_0, \sigma_1, \ldots, \sigma_{k-1})$ that shows how many elements must be skipped in memory to move to the next position along each axis when traversing the array. The number of bytes to move in memory is automatically inferred by the engine from the stride and the type of array.

The index $j$ of the mapping described by Equation (4) is a function of the stride $\sigma$, the indices $(n_0, n_1, \ldots, n_{k-1})$ of the element of the multidimensional array, and of an offset $x$ that denotes the position of the first element of the array within the ArrayBuffer data structure, namely

$$j = x + \sum_{i=0}^{k-1} \sigma_i n_i \qquad (5)$$

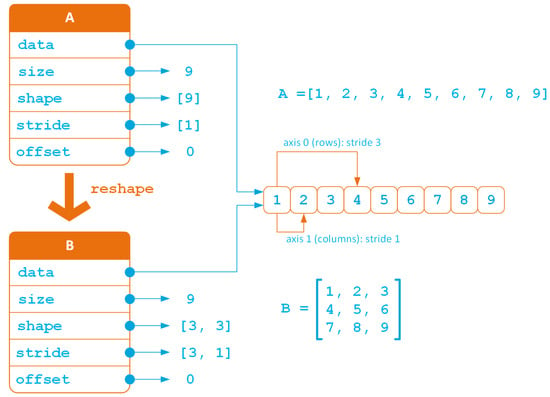

Defining an array through its shape, stride and offset leads to a more efficient internal representation, in which every transformation carried out on a given array does not imply either duplication or reordering of the array elements, as shown in Figure 6, in the case of a reshape operation.

Figure 6.

Memory organisation after array reshaping.

The reshape operation depicted in Figure 6 transforms a 1D array of nine elements into a three-by-three matrix. This operation implies a recomputation of the array strides. Since sizes and strides are expressed as numbers of array items, the engine automatically translates them into the right number of bytes according to the type of array element.
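A strided representation of this kind can be sketched in a few lines. In the example, the position of an element in the underlying buffer is computed as the offset plus the sum, over all axes, of the stride multiplied by the index along that axis. The class `StridedArray` and the helper `rowMajorStrides` are hypothetical names introduced for illustration; the sketch assumes a contiguous row-major layout and does not reproduce the actual engine.

```javascript
// Minimal strided-array sketch, NOT the framework's actual engine.
// Assumption: row-major layout, strides expressed in elements (not bytes).
function rowMajorStrides(shape) {
  const strides = new Array(shape.length);
  let step = 1;
  for (let i = shape.length - 1; i >= 0; i--) {
    strides[i] = step;
    step *= shape[i];
  }
  return strides;
}

class StridedArray {
  constructor(data, shape, strides = rowMajorStrides(shape), offset = 0) {
    this.data = data; // typed array shared between views
    this.shape = shape;
    this.strides = strides;
    this.offset = offset;
  }

  // Flat index: offset + sum over the axes of stride[i] * index[i].
  flatIndex(indices) {
    return indices.reduce((j, n, i) => j + this.strides[i] * n, this.offset);
  }

  get(...indices) {
    return this.data[this.flatIndex(indices)];
  }

  // Strides translated into bytes according to the element type.
  byteStrides() {
    return this.strides.map((s) => s * this.data.BYTES_PER_ELEMENT);
  }

  // Reshape returns a new view over the same buffer: only shape and strides
  // are recomputed, no element is copied or moved. This sketch assumes the
  // source view is contiguous in row-major order.
  reshape(shape) {
    const size = (s) => s.reduce((a, b) => a * b, 1);
    if (size(shape) !== size(this.shape)) {
      throw new Error("reshape must preserve the number of elements");
    }
    return new StridedArray(this.data, shape, rowMajorStrides(shape), this.offset);
  }
}

const flat = new StridedArray(Float32Array.from([1, 2, 3, 4, 5, 6, 7, 8, 9]), [9]);
const matrix = flat.reshape([3, 3]);
console.log(matrix.strides, matrix.get(1, 2)); // strides [3, 1], element 6
```

Both `flat` and `matrix` reference the same `Float32Array`, so the reshape costs only the recomputation of two small tuples.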

The multidimensional array engine allows for the representation of tensors with rank $r \ge 1$. In order to also represent scalar types (i.e., rank 0 tensors), the core engine is wrapped into a Tensor class that also provides a rich set of APIs to perform arithmetic operations on tensors. The Tensor wrapper is compatible at the API level with the Tensorflow.js engine; thus, an application relying on the Tensorflow.js engine can be ported to our framework with very little effort, considering some slight differences that exist between the two frameworks. Table 2 summarises the API compatibility with Tensorflow.js. The implemented subset limits the compatibility only to ML algorithms, since Tensorflow.js wrappers for deep learning APIs relying on neural networks are still under development. In order to ensure software compatibility, coherent data types must be used. The multidimensional array engine supports all the JavaScript typed arrays (8, 16, 32 and 64 bits), whereas the Tensor wrapper also adds support for multidimensional arrays of Booleans and Strings. Conversely, Tensorflow.js only supports 32-bit data types, complex numbers encoded over 64 bits, Booleans and Strings.

Table 2.

Tensorflow.js API compatibility table.

Finally, the framework also supports broadcasting. Broadcasting consists of applying a given function to multidimensional arrays of different shapes, provided the shapes are compatible. For example, broadcasting allows for the addition of a multidimensional array to a scalar: the sum operation is broadcast to all the individual elements of the array, adding them to the scalar term.
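The idea can be sketched on plain nested arrays. The function `broadcastAdd` is a hypothetical helper that covers only two cases, a matrix plus a scalar and a matrix plus a row vector, and is not the Tensor API of the framework.

```javascript
// Minimal broadcasting sketch on nested arrays, NOT the framework's Tensor API.
// Supported cases (an assumption made to keep the example short):
// matrix + scalar, and matrix + row vector with one entry per matrix column.
function broadcastAdd(matrix, other) {
  const cols = matrix[0].length;
  if (Array.isArray(other) && other.length !== cols) {
    throw new Error("incompatible shapes");
  }
  return matrix.map((row) =>
    row.map((value, c) => value + (Array.isArray(other) ? other[c] : other))
  );
}

const m = [
  [1, 2, 3],
  [4, 5, 6],
];
const plusScalar = broadcastAdd(m, 10);           // scalar applied to every element
const plusRow = broadcastAdd(m, [100, 200, 300]); // row repeated along axis 0
console.log(plusScalar, plusRow);
```

A row vector with two entries would be rejected here, since its shape cannot be stretched to match a matrix with three columns.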

The multidimensional array engine is the core module used to build an ML model library, as depicted in Figure 4. The model library implements a set of prediction and classification algorithms that have been proven to be effective for IoT applications, as reported in Table 1. Table 3 reports the classification and prediction algorithms that were implemented in the framework model library.

Table 3.

Framework model library.

3.3. Linear Algebra Engine

Linear algebra is intensively used in machine learning [73] when working with datasets and data preparation (for example, for one-hot encoding and dimensionality reduction). Linear algebra is also used, for example, to solve linear regression problems and in Principal Component Analysis (PCA). Principal Component Analysis is an analysis method of multidimensional datasets that, generally, simplifies data analysis by projecting the high-dimensional data space onto a two-dimensional plane using the first two principal components. When the first two components fail to capture enough data variance, a 3D representation using the first three principal components should be considered in order to avoid losing information. The structure of the projected data depends on the projection axis. Finding the information associated with a given projection is an eigenvalue problem. The eigenvectors of the covariance matrix represent the principal components, whereas the corresponding eigenvalues give an estimation of how much information is contained in each individual component. For this reason, the ML framework presented in this work also includes a set of advanced linear algebra features that can be used for data analysis and manipulation.
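The link between the covariance matrix and the principal components can be illustrated with a short sketch. Here the first principal component is estimated with power iteration, a simple technique used in place of a full eigenvalue decomposition; the function names (`covarianceMatrix`, `firstPrincipalComponent`) and the sample data are hypothetical and do not reflect the Linear Algebra Engine of the framework.

```javascript
// Minimal PCA sketch, NOT the framework's Linear Algebra Engine.
// Assumption: the first principal component is estimated with power
// iteration instead of a full eigenvalue decomposition.
function covarianceMatrix(rows) {
  const n = rows.length;
  const d = rows[0].length;
  const mean = Array.from({ length: d }, (_, j) =>
    rows.reduce((s, r) => s + r[j], 0) / n
  );
  const cov = Array.from({ length: d }, () => new Array(d).fill(0));
  for (const r of rows) {
    for (let i = 0; i < d; i++) {
      for (let j = 0; j < d; j++) {
        cov[i][j] += ((r[i] - mean[i]) * (r[j] - mean[j])) / (n - 1);
      }
    }
  }
  return cov;
}

function firstPrincipalComponent(cov, iterations = 100) {
  let v = new Array(cov.length).fill(1);
  let eigenvalue = 0;
  for (let it = 0; it < iterations; it++) {
    // Multiply the current estimate by the covariance matrix...
    const w = cov.map((row) => row.reduce((s, c, j) => s + c * v[j], 0));
    // ...and renormalise; the norm converges to the largest eigenvalue.
    eigenvalue = Math.hypot(...w);
    v = w.map((x) => x / eigenvalue);
  }
  return { direction: v, eigenvalue };
}

// Hypothetical samples lying close to the line y = x.
const samples = [[1, 1.1], [2, 1.9], [3, 3.2], [4, 3.8], [5, 5.0]];
const cov = covarianceMatrix(samples);
const pc1 = firstPrincipalComponent(cov);

// Share of the total variance captured by the first component.
const explained = pc1.eigenvalue / (cov[0][0] + cov[1][1]);
console.log(pc1.direction, explained);
```

For data this close to a line, almost all the variance falls on the first component, so a one-dimensional projection would lose very little information.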

The Linear Algebra Engine (LAE) has been built on top of a Matrix Engine that provides all the basic matrix manipulation features (matrix transposition, basic matrix, row and column operations, Kronecker product, fast multiplication of large matrices using the Strassen algorithm, etc.) using the algorithms described in [73]. More specifically, the LAE implements the following features:

- Eigenvalue decomposition (EVD);

- LU decomposition;

- QR decomposition;

- Singular value decomposition (SVD);

- Cholesky decomposition;

- NIPALS algorithm;

- Pseudo-inverse matrix computation;

- Covariance matrix computation;

- Determinant computation;

- Linear dependencies computation.

Eigenvalue decomposition, the Nonlinear Iterative PArtial Least Squares (NIPALS) algorithm [74] and the covariance matrix are used in machine learning for Principal Component Analysis. LU decomposition can be used to solve linear systems, compute determinants, invert a matrix and find a basis set of a vector space (i.e., finding linearly independent rows of a matrix). The pseudo-inverse matrix can be used to compute a least-squares solution of a linear system.
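To illustrate how NIPALS relates to PCA, the following sketch extracts the first principal component of a small dataset. It is a textbook formulation written for this example, not the LAE implementation; the function names, the convergence tolerance and the choice of the first column as the initial score vector (assumed not to be constant) are all assumptions.

```javascript
// Subtract the column means (rows are samples, columns are features).
function centreColumns(X) {
  const n = X.length;
  const means = X[0].map((_, j) => X.reduce((sum, row) => sum + row[j], 0) / n);
  return X.map((row) => row.map((value, j) => value - means[j]));
}

// First principal component via the NIPALS iteration (illustrative sketch).
function nipalsFirstComponent(X, maxIter = 500, tol = 1e-12) {
  const Xc = centreColumns(X);
  let t = Xc.map((row) => row[0]); // initial scores: first centred column
  let p = [];
  for (let iter = 0; iter < maxIter; iter++) {
    const tt = t.reduce((sum, v) => sum + v * v, 0);
    // Loadings: p = X^T t / (t^T t), then normalised to unit length.
    p = Xc[0].map((_, j) => Xc.reduce((sum, row, i) => sum + row[j] * t[i], 0) / tt);
    const norm = Math.sqrt(p.reduce((sum, v) => sum + v * v, 0));
    p = p.map((v) => v / norm);
    // Scores: t = X p.
    const tNew = Xc.map((row) => row.reduce((sum, v, j) => sum + v * p[j], 0));
    const change = tNew.reduce((sum, v, i) => sum + (v - t[i]) ** 2, 0);
    t = tNew;
    if (change < tol) break;
  }
  // Variance captured by the component (eigenvalue of the covariance matrix).
  const eigenvalue = t.reduce((sum, v) => sum + v * v, 0) / (Xc.length - 1);
  return { loadings: p, scores: t, eigenvalue };
}

// Points lying on the line y = 2x: all the variance is along (1, 2) / sqrt(5).
const X = [[1, 2], [2, 4], [3, 6], [4, 8]];
const pc1 = nipalsFirstComponent(X);
console.log(pc1.loadings); // approximately [0.447, 0.894]
console.log(pc1.eigenvalue); // approximately 8.333, i.e., the total variance 25/3
```

The loadings play the role of the leading eigenvector of the covariance matrix, and the returned eigenvalue estimates how much variance that component carries.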

Singular Value Decomposition (SVD) is a technique that is extensively used in machine learning and statistics for feature engineering, outlier detection, dimensionality reduction and noise removal. Cholesky decomposition is a special case of LU decomposition that can be applied to symmetric and positive-definite matrices.
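As an illustration of the last point, the sketch below factorises a symmetric positive-definite matrix A into a lower-triangular matrix L such that A = L Lᵀ. It is a plain textbook version with a hypothetical function name, not the LAE code.

```javascript
// Cholesky factorisation: returns the lower-triangular L with A = L * L^T.
// Assumes A is symmetric; throws if A is not positive-definite.
function cholesky(A) {
  const n = A.length;
  const L = Array.from({ length: n }, () => new Array(n).fill(0));
  for (let i = 0; i < n; i++) {
    for (let j = 0; j <= i; j++) {
      let sum = A[i][j];
      for (let k = 0; k < j; k++) {
        sum -= L[i][k] * L[j][k];
      }
      if (i === j) {
        if (sum <= 0) {
          throw new RangeError('Matrix is not positive-definite');
        }
        L[i][i] = Math.sqrt(sum);
      } else {
        L[i][j] = sum / L[j][j];
      }
    }
  }
  return L;
}

const A = [
  [4, 12, -16],
  [12, 37, -43],
  [-16, -43, 98],
];
console.log(cholesky(A)); // [[2, 0, 0], [6, 1, 0], [-8, 5, 3]]
```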

3.4. Artificial Neural Network Engine

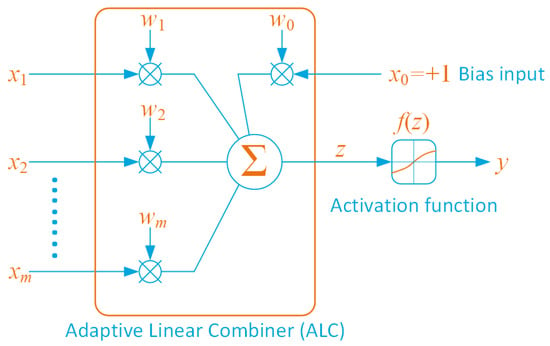

The Artificial Neural Network (ANN) engine provides primitives to connect neurons in order to implement first- and second-order networks [72]. A Neural Network [75,76] is a collection of interconnected simple processing units, the neurons. The processing ability of the network lies in the weights associated with the neurons’ interconnections. The weights are obtained from a process of adaptation or learning in which the network is trained using specific data patterns. Figure 7 schematically depicts the operations performed by a neuron.

Figure 7.

An artificial neuron.

A neuron, i, of the network is formed by an Adaptive Linear Combiner (ALC) and by an activation function (or squashing function). The ALC performs the sum of the weighted inputs $x_j$, namely

$$z_i = \sum_{j=1}^{n} w_{ij}\,x_j + b_i,$$

with $b_i$ being the bias and $w_{ij}$ being the weight of the j-th input of the i-th neuron of the network. The activation function $f$ is a function of the neuron activation potential $z_i$ and performs an output normalisation by returning a value $y_i = f(z_i)$. Several activation functions are available [77,78]; however, the ANN engine only implements the small subset reported in Table 4, which comprises threshold, linear and non-linear activation functions.

Table 4.

Supported activation functions.
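The operations performed by a single neuron can be sketched as follows. The set of activation functions listed here is an assumption chosen for illustration (it is not taken from Table 4), and `neuronOutput` is a hypothetical name rather than a primitive of the ANN engine.

```javascript
// Illustrative activation functions (assumed set, see the note above).
const activations = {
  step: (z) => (z > 0 ? 1 : 0), // threshold (hard limiter)
  linear: (z) => z,
  sigmoid: (z) => 1 / (1 + Math.exp(-z)),
  tanh: (z) => Math.tanh(z),
  relu: (z) => Math.max(0, z),
};

// Adaptive Linear Combiner followed by the activation function:
// z = sum_j(w_j * x_j) + b, y = f(z).
function neuronOutput(inputs, weights, bias, activation) {
  const z = inputs.reduce((sum, x, j) => sum + weights[j] * x, bias);
  return activation(z);
}

const inputs = [1, 2];
const weights = [0.5, -0.25];

console.log(neuronOutput(inputs, weights, 0, activations.sigmoid)); // 0.5 (z = 0)
console.log(neuronOutput(inputs, weights, 1, activations.relu)); // 1 (z = 1)
```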

The neural network learning process is implemented by combining gradient descent and backpropagation algorithms, as described in [76], on a training dataset whose true outcomes are known.

Several neural network topologies have been developed to date [79,80]; some of them have been implemented in the ANN engine and are available as library components that expose a simple API which provides primitives for creating and training the network. The following subsections briefly review the implemented networks.

3.4.1. Perceptron

The perceptron is a linear binary classifier that implements the neuron depicted in Figure 7. Since it is used for binary classification, the activation function is a simple hard limiter; thus, for a vector of inputs $\mathbf{x}$, the result is

$$y = \begin{cases} 1 & \text{if } \mathbf{w} \cdot \mathbf{x} + b > 0 \\ 0 & \text{otherwise} \end{cases}$$

where $\mathbf{w}$ is the vector of weights and $\mathbf{w} \cdot \mathbf{x}$ is the dot product. The bias b is used to shift the decision boundary away from the origin and does not depend on the input values.
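A minimal sketch of a perceptron trained with the classical perceptron learning rule is given below. The function names, the 0/1 output convention of the hard limiter and the learning rate are assumptions made for this example; they do not reproduce the ANN engine API.

```javascript
// Hard limiter applied to the dot product of weights and inputs plus bias.
function predict(weights, bias, x) {
  const z = x.reduce((sum, xi, j) => sum + weights[j] * xi, bias);
  return z > 0 ? 1 : 0;
}

// Perceptron learning rule: weights and bias are corrected only when the
// prediction differs from the known label.
function trainPerceptron(samples, labels, learningRate = 1, epochs = 20) {
  let weights = new Array(samples[0].length).fill(0);
  let bias = 0;
  for (let epoch = 0; epoch < epochs; epoch++) {
    samples.forEach((x, n) => {
      const error = labels[n] - predict(weights, bias, x);
      weights = weights.map((w, j) => w + learningRate * error * x[j]);
      bias += learningRate * error;
    });
  }
  return { weights, bias };
}

// Logical AND is linearly separable, so the perceptron can learn it.
const samples = [[0, 0], [0, 1], [1, 0], [1, 1]];
const labels = [0, 0, 0, 1];
const model = trainPerceptron(samples, labels);

console.log(samples.map((x) => predict(model.weights, model.bias, x))); // [0, 0, 0, 1]
```

Because the classifier is linear, the same procedure does not converge for problems that are not linearly separable, such as XOR.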

3.4.2. Long Short-Term Memory Network

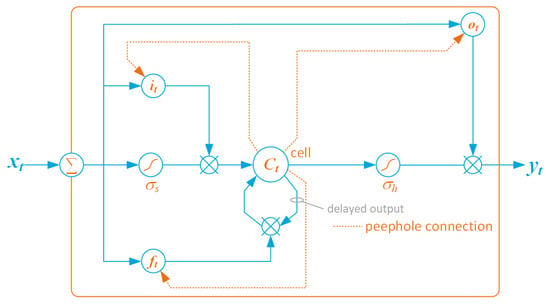

Long short-term memory (LSTM) is an artificial recurrent neural network (RNN) architecture, suitable for performing regression and classification based on a time series. It deals with the vanishing gradient problem (namely, an excessively low gradient that can prevent weights from changing during the training process and that, in the worst case, can completely stop the network training) that can occur when training recurrent neural networks. Several variants have been proposed [81]; however, the framework implements the most commonly used set-up, depicted in Figure 8.

Figure 8.

Detailed schematic of a basic LSTM cell (dotted lines denote peephole connections).

In its basic implementation, the LSTM is formed by three gates (the input gate $i$, the forget gate $f$, and the output gate $o$), a block input activation function $g$, an output activation function $h$ and a single cell $c$ (the Constant Error Carousel (CEC)). Function $\sigma$ is a sigmoid, whereas $g$ is usually a hyperbolic tangent. Moreover, cell $c$ has a hyperbolic tangent activation function denoted with $h$.

Subindex t denotes the time-step. At $t = 0$, the initial cell values are $c_0 = 0$ and $y_0 = 0$, respectively. Referring to Figure 8, $x_t$ is the input vector, $c_t$ is the cell state vector, $i_t$ the input gate activation vector, $f_t$ the forget gate activation vector, and $o_t$ the output gate activation vector.

The LSTM network implements the following functions:

$$\begin{aligned}
i_t &= \sigma\left(W_i\,x_t + U_i\,c_{t-1} + b_i\right)\\
f_t &= \sigma\left(W_f\,x_t + U_f\,c_{t-1} + b_f\right)\\
o_t &= \sigma\left(W_o\,x_t + U_o\,c_{t-1} + b_o\right)\\
c_t &= f_t \times c_{t-1} + i_t \times g\left(W_c\,x_t + b_c\right)\\
y_t &= o_t \times h\left(c_t\right)
\end{aligned}$$

where $W$ is the weight matrix for the CEC, input, forget and output cells; $U$ is the weight matrix for the peephole connections of the input, forget and output cells. Vector $b$ is the bias vector of the CEC, input, forget and output cells. Finally, operator × denotes matrix element-wise multiplication (i.e., the Hadamard product).
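The following sketch shows one time-step of a single-unit (scalar) LSTM cell with peephole connections. It assumes the commonly used peephole formulation in which the three gates observe the previous cell state; the parameter names (`Wi`, `Ui`, `bi`, and so on) and the function `lstmStep` are hypothetical and may differ from the framework implementation.

```javascript
const sigmoid = (z) => 1 / (1 + Math.exp(-z));

// One time-step of a scalar LSTM cell with peephole connections.
// x: current input; state: { c, y } from the previous step; p: parameters.
function lstmStep(x, state, p) {
  const cPrev = state.c;
  const i = sigmoid(p.Wi * x + p.Ui * cPrev + p.bi); // input gate
  const f = sigmoid(p.Wf * x + p.Uf * cPrev + p.bf); // forget gate
  const o = sigmoid(p.Wo * x + p.Uo * cPrev + p.bo); // output gate
  const c = f * cPrev + i * Math.tanh(p.Wc * x + p.bc); // new cell state
  const y = o * Math.tanh(c); // cell output
  return { c, y };
}

// Assumed parameters: only the block input weight is non-zero.
const params = {
  Wi: 0, Ui: 0, bi: 0,
  Wf: 0, Uf: 0, bf: 0,
  Wo: 0, Uo: 0, bo: 0,
  Wc: 1, bc: 0,
};

// Process a short time series starting from the zero initial state.
let state = { c: 0, y: 0 };
for (const x of [1, 0.5, -1]) {
  state = lstmStep(x, state, params);
  console.log(state);
}
```

With all gate parameters set to zero, every gate evaluates to 0.5, so each step halves the previous cell state and adds half of the squashed input.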

3.5. Data Frame and Data Wrangling

The framework presented in this work also provides APIs for data acquisition, exploration, cleaning and manipulation, as depicted in Figure 4. Data wrangling is essential to transform raw data into a format that can be processed more efficiently by the machine learning engine.

The framework allows for the importation and exportation of large data files in several text formats (namely, CSV, TSV, PSV, JSON) and mapping the data onto a dataframe. A dataframe is a simple, two-dimensional schema of heterogeneous data with labelled axes. Primitives are available to manipulate rows and columns of a dataframe, which include filtering, selection, and joining. The core functionalities can be easily extended through a plugin system. Plugins that allow for the performance of statistical and matrix operations on a dataframe are available. Table 5 summarises the core features implemented by the data-wrangling engine of the framework.

Table 5.

Framework support for data analysis and data wrangling.
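The kind of row and column primitives mentioned above can be sketched as follows. The helpers `parseCsv`, `filterRows` and `selectColumns` are hypothetical names introduced for this example and do not correspond to the framework API; the parser is deliberately simplified and assumes no quoted fields.

```javascript
// Parse simple delimited text (no quoted fields) into an array of row objects.
// Cells that look numeric are converted to numbers.
function parseCsv(text, separator = ',') {
  const [headerLine, ...lines] = text.trim().split('\n');
  const columns = headerLine.split(separator);
  return lines.map((line) => {
    const cells = line.split(separator);
    return Object.fromEntries(
      columns.map((name, j) => {
        const cell = cells[j];
        const isNumeric = cell !== '' && !Number.isNaN(Number(cell));
        return [name, isNumeric ? Number(cell) : cell];
      })
    );
  });
}

// Keep only the rows that satisfy a predicate.
const filterRows = (rows, predicate) => rows.filter(predicate);

// Keep only the named columns.
const selectColumns = (rows, names) =>
  rows.map((row) => Object.fromEntries(names.map((name) => [name, row[name]])));

const csv = 'sensor,temperature,humidity\nA,21.5,40\nB,19.0,55\nA,22.1,38';
const rows = parseCsv(csv);
const warm = selectColumns(
  filterRows(rows, (row) => row.temperature > 20),
  ['sensor', 'temperature']
);

console.log(warm);
// [ { sensor: 'A', temperature: 21.5 }, { sensor: 'A', temperature: 22.1 } ]
```

Passing `'\t'` or `'|'` as the separator would cover the TSV and PSV cases in the same way.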

4. Hardware Platforms and Framework Performance

A plethora of embedded computing platforms are available on the market [82,83]. Some new high-end embedded boards, such as Google Coral and NVIDIA Jetson Nano, also offer GPU acceleration. However, while an embedded GPU may be appealing for the acceleration of many machine learning algorithms, the increased cost of these platforms is a potential barrier for their adoption in many IoT applications, where the design is mainly cost-driven. In addition, server-side JavaScript GPU support is still at a very early stage, which limits the adoption of these boards to Python and C/C++ applications.

Among all the boards available on the market, Raspberry Pis are the ones that probably offer the best trade-off between cost and performance. For this reason, the performance of the framework described in this work is stressed on Raspberry Pi III (model B+) and Raspberry Pi IV boards. Table 6 summarises the main features of the embedded computing boards used to evaluate the performance of the developed ML framework.

Table 6.

Raspberry Pi boards’ main characteristics.

Observe that, although both boards rely on a 64-bit ARMv8 architecture, Raspberry Pi III B+ runs a 32-bit operating system (Raspbian v10), since it has only 1 GB memory and running a 64-bit operating system would not lead to any significant performance improvement. Conversely, in the proposed test set-up, Raspberry Pi IV runs a 64-bit operating system (Ubuntu 20.04).

4.1. Set-Up of the Benchmarking Environment

The performance of the embedded development boards has been stressed using a set of ad-hoc benchmarks to determine their suitability to run embedded machine learning algorithms. The main goal is stressing the performance of the underlying mathematical core rather than the execution speed of an ML algorithm; thus, the benchmarks are focused on analysing the performance of those operations that are more likely to be used in an ML algorithm.

The benchmark suite used to evaluate the performance of the ML framework runs on Node.js v14.4 and is formed of 29 typical operations applied to rank 1 and rank 2 tensors: vectors and matrices. Table 7 reports the operations that form the benchmark suite. Appendix C describes the architecture and the implementation of the benchmarking tool used to evaluate the performances of the ML framework in detail.

Table 7.

Characteristics of the benchmark suite.

4.2. Performance Evaluation

Each benchmark operation is executed a variable number of times during a maximum timeframe of 5 s. The delay between consecutive test cycles is 5 ms, and the minimum number of samples measured during each cycle is 5. Test times and statistics are generated for each benchmark of the test suite. More specifically, the following timing metrics are calculated:

- The time (in seconds) taken to complete the last cycle (cycle);

- The elapsed time (in seconds) taken to complete the benchmark;

- The time (in seconds) taken to execute a test once (period).

Moreover, the following test statistics are computed:

- The number of samples acquired during the test;

- The sample standard deviation $s$;

- The sample variance $s^2$;

- The sample arithmetic mean m (i.e., the average execution time of the benchmark in seconds);

- The standard error of the mean (SEM) computed as $\mathrm{SEM} = s/\sqrt{n}$, where n denotes the number of samples;

- The Margin of Error (MOE) computed over a confidence interval of 95%; namely, $\mathrm{MOE} = t_{\alpha/2} \cdot \mathrm{SEM}$, where $t_{\alpha/2}$ denotes the quantile computed for a confidence level $1 - \alpha = 0.95$;

- The Relative Margin of Error (RME) expressed as percentage of the mean, namely, $\mathrm{RME} = 100 \cdot \mathrm{MOE}/m$.
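The test statistics listed above can be computed as in the following sketch. The function name `benchmarkStats` is hypothetical, and the critical value is passed in by the caller; the value 2.776 used in the example is the two-sided 95% Student's t quantile for four degrees of freedom, which is an assumption about how the quantile would be chosen for five samples.

```javascript
// Compute the benchmark statistics for an array of execution times.
// criticalValue is the quantile used for the margin of error (assumption:
// supplied by the caller, e.g., from a Student's t table).
function benchmarkStats(samples, criticalValue) {
  const n = samples.length;
  const mean = samples.reduce((sum, v) => sum + v, 0) / n;
  const variance =
    samples.reduce((sum, v) => sum + (v - mean) ** 2, 0) / (n - 1);
  const deviation = Math.sqrt(variance);
  const sem = deviation / Math.sqrt(n); // standard error of the mean
  const moe = sem * criticalValue; // margin of error
  const rme = (moe / mean) * 100; // relative margin of error (%)
  return { n, mean, variance, deviation, sem, moe, rme };
}

// Five illustrative execution times in ms (made-up values).
const stats = benchmarkStats([2, 4, 4, 4, 6], 2.776);
console.log(stats.mean); // 4
console.log(stats.variance); // 2
console.log(stats.rme.toFixed(1) + '%');
```

A large RME signals that the mean execution time is poorly determined and that more samples per cycle would be needed.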

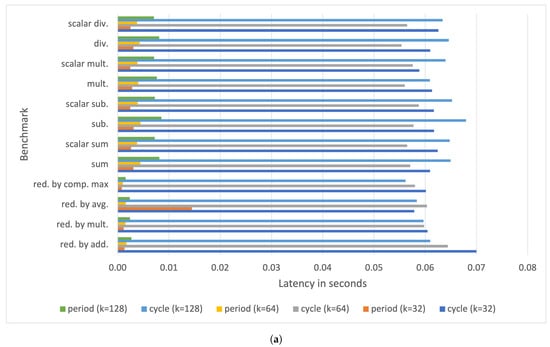

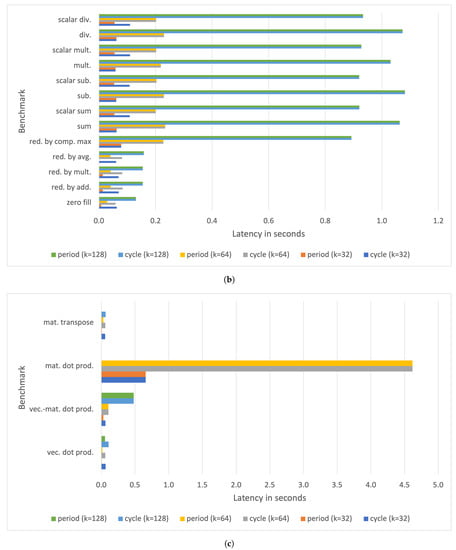

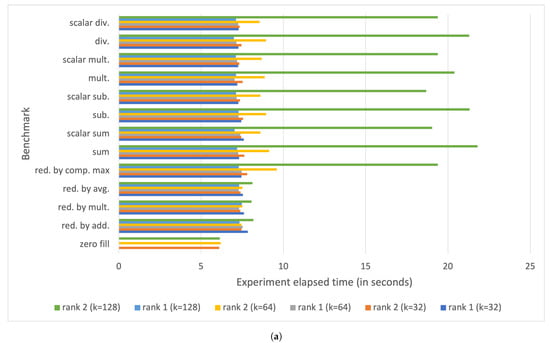

Performances have been evaluated for rank 1 tensors and vectors of dimension k and rank 2 tensors and matrices of dimension $k \times k$, where . Figure 9 shows the cycle and period latencies for a Raspberry Pi III B+ board measured after running the benchmarking tool. The delay of rank 1 tensor operations ranges from a few ms (for ) to roughly 70 ms (for ). The latency increases up to s for some rank 2 tensor operations (see Figure 9b).

Figure 9.

Measured period and cycle parameters with on a Raspberry Pi III B+ for (a) rank 1 tensor operations, (b) rank 2 tensor operations, and (c) typical vector and matrix operations.

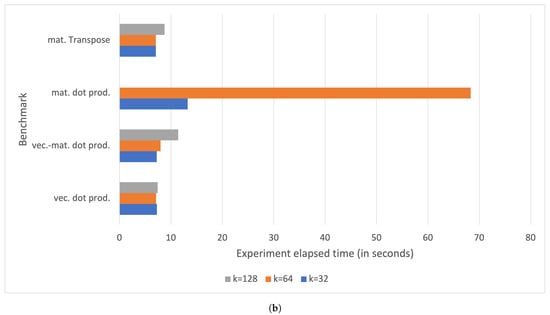

The most time-consuming operation is the matrix dot product, whose latency exceeds s for square matrices with . Observe that the delay for a square matrix dot product with is not reported since it exceeds 5 s, namely, the maximum time frame allocated for execution by the benchmarking tool.

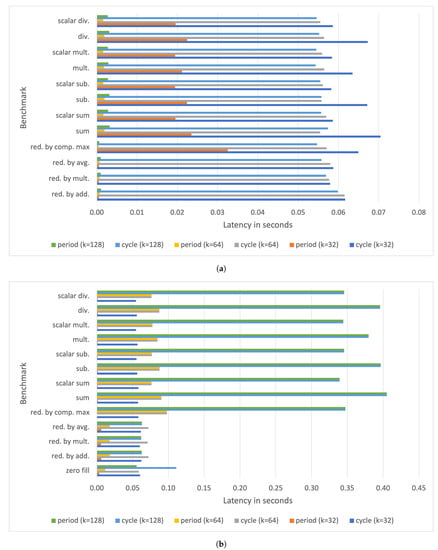

Figure 10 shows the cycle and period latencies for a Raspberry Pi IV board. Observe that for rank 1 tensor operations, the speedup with respect to the execution latencies measured for a Raspberry Pi III B+ board is roughly 2.

Figure 10.

Measured period and cycle parameters with on a Raspberry Pi IV for (a) rank 1 tensor operations, (b) rank 2 tensor operations, and (c) typical vector and matrix operations.

However, the improvement is even more significant for higher-order tensor and matrix operations, with speed-ups of up to with respect to the benchmark execution times on a Raspberry Pi III B+. Nonetheless, for the Raspberry Pi IV, the latency of the square matrix dot product with exceeds 5 s (the average delay is roughly s), and it is not reported in the plots.

4.2.1. Summary of Raspberry Pi III Measurements

Tables 8–16 report the detailed benchmark statistics for rank 1 and rank 2 tensor operations, and for vector and matrix operations.

Table 8.

Raspberry Pi III B+ benchmark statistics for rank 1 tensor operations with (times in ms).

Table 9.

Raspberry Pi III B+ benchmark statistics for rank 1 tensor operations with (times in ms).

Table 10.

Raspberry Pi III B+ benchmark statistics for rank 1 tensor operations with (times in ms).

Table 11.

Raspberry Pi III B+ benchmark statistics for rank 2 tensor operations with (times in ms).

Table 12.

Raspberry Pi III B+ benchmark statistics for rank 2 tensor operations with (times in ms).

Table 13.

Raspberry Pi III B+ benchmark statistics for rank 2 tensor operations with (times in ms).

Table 14.

Raspberry Pi III B+ benchmark statistics for vector and matrix operations with (times in ms).

Table 15.

Raspberry Pi III B+ benchmark statistics for vector and matrix operations with (times in ms).

Table 16.

Raspberry Pi III B+ benchmark statistics for vector and matrix operations with (times in ms).

Observe that the latency of matrix dot product for is not reported, since it exceeds the maximum time allocated for the execution of a benchmark (namely, 5 s).

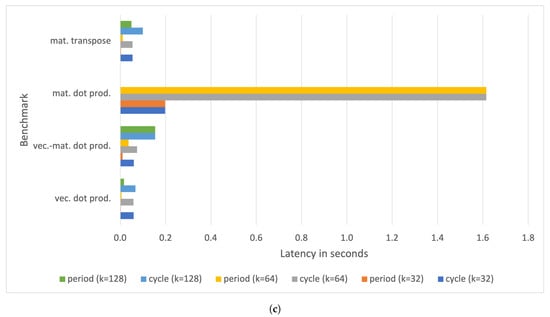

Finally, Figure 11 shows the elapsed times to compute the statistics for each benchmark on a Raspberry Pi III B+ board.

Figure 11.

Elapsed time for running all the measurements on a Raspberry Pi III B+ for (a) tensor operation benchmarks, and (b) vector and matrix operation benchmarks.

The overall time spent by the benchmarking tool performing all the measurements for a given benchmark ranges from a few seconds, in the case of rank 1 tensor operations with , up to approximately 180 s, in the case of the square matrix dot product with .

4.2.2. Summary of Raspberry Pi IV Measurements

Tables 17–25 report the detailed benchmark statistics for rank 1 and rank 2 tensor operations, and for vector and matrix operations executed on a Raspberry Pi IV embedded computer.

Table 17.

Raspberry Pi IV benchmark statistics for rank 1 tensor operations with (times in ms).

Table 18.

Raspberry Pi IV benchmark statistics for rank 1 tensor operations with (times in ms).

Table 19.

Raspberry Pi IV benchmark statistics for rank 1 tensor operations with (times in ms).

Table 20.

Raspberry Pi IV benchmark statistics for rank 2 tensor operations with (times in ms).

Table 21.

Raspberry Pi IV benchmark statistics for rank 2 tensor operations with (times in ms).

Table 22.

Raspberry Pi IV benchmark statistics for rank 2 tensor operations with (times in ms).

Table 23.

Raspberry Pi IV benchmark statistics for vector and matrix operations with (times in ms).

Table 24.

Raspberry Pi IV benchmark statistics for vector and matrix operations with (times in ms).

Table 25.

Raspberry Pi IV benchmark statistics for vector and matrix operations with (times in ms).

Observe that the latency of matrix dot product for is not reported, since it exceeds the maximum time allocated for the execution of a benchmark (namely, 5 s).

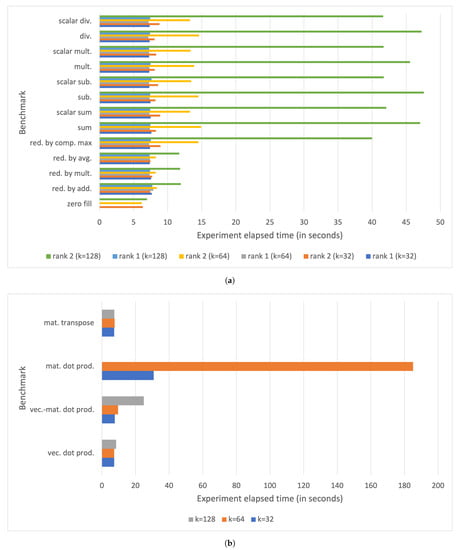

Finally, Figure 12 shows the elapsed times to compute the statistics for each benchmark on a Raspberry Pi IV board. The overall time spent by the benchmarking tool to perform all the measurements for a given benchmark ranges from a few seconds, in the case of rank 1 tensor operations with , up to approximately 68 s in the case of the square matrix dot product with .

Figure 12.

Elapsed time for running all the measurements on a Raspberry Pi IV for (a) tensor operation benchmarks, and (b) vector and matrix operation benchmarks.

5. Discussion

In the previous section, the performance of a new ML framework targeted to IoT and embedded devices was stressed on Raspberry Pi III and IV boards. The collected results showed that these devices have enough computing power to perform tensor and matrix arithmetic with acceptable execution times for datasets of reasonable size (namely, up to matrices of single-precision floating point numbers).

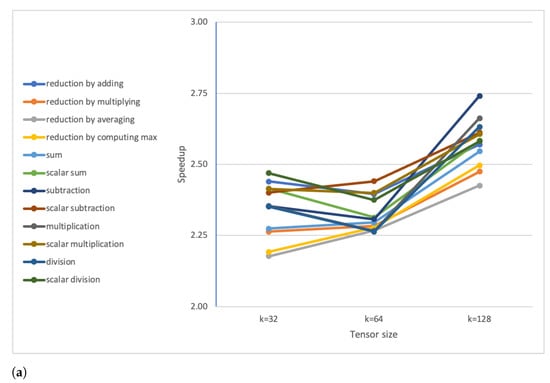

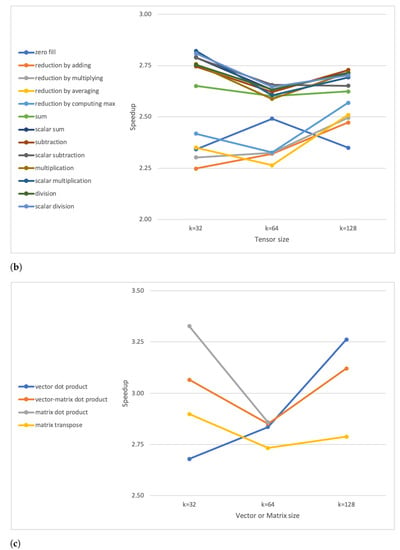

A substantial performance improvement was observed when executing the benchmark functions on a Raspberry Pi IV board, as also summarised in Figure 13.

Figure 13.

Computed average speedups for (a) rank 1 tensor operations, (b) rank 2 tensor operations, and (c) vector or matrix operations.

The speed-ups were computed using the measured benchmark average execution values on Raspberry Pi III and IV boards for , with k being the size of either a tensor or an array or a square matrix. The speed-ups obtained by executing the framework on a Raspberry Pi IV, with respect to a Raspberry Pi III B+ board, range from roughly to . However, this improvement is exclusively due to the better hardware characteristics of the Raspberry Pi IV board, which is particularly suitable for computation- and memory-intensive operations.

Framework latency could be further decreased by optimising the code to increase the execution parallelism. Node.js is a single-threaded event-driven JavaScript runtime; however, by leveraging asynchronous constructions, it is possible to execute loop iterations in parallel as if they were executed by a multithreaded system.

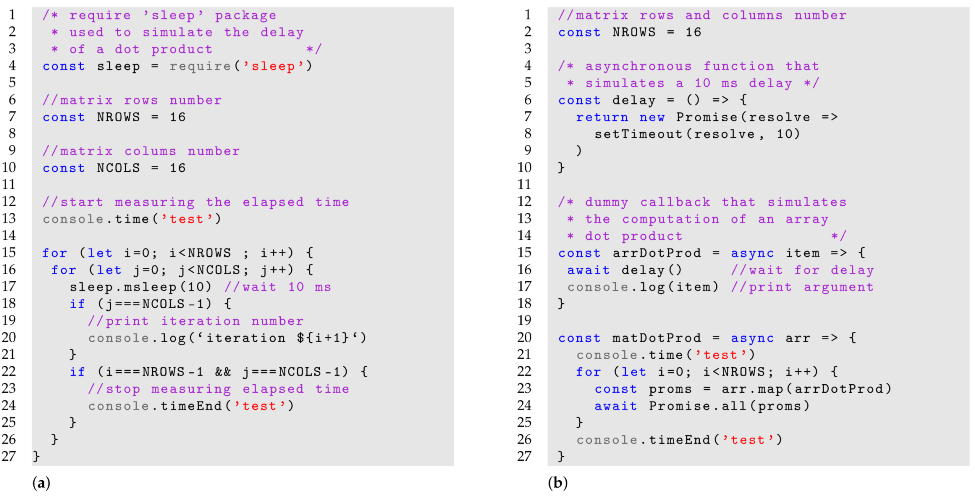

Consider the code snippets depicted in Listing 1. Snippet (a) simulates a matrix dot product using a synchronous programming style, whereas snippet (b) simulates the same operation using an asynchronous programming style with Promises.

Listing 1.

JavaScript code snippets that simulate a matrix dot product using (a) a synchronous programming style, and (b) an asynchronous programming style.

It is assumed that the code operates on square matrices and that the array dot product has a delay of 10 ms. The execution latency is measured using the console.time() and console.timeEnd() functions provided by the JavaScript console object.

After executing the two snippets on a Raspberry Pi III B+, the elapsed times are, respectively, s for snippet (a) and ms for snippet (b). Thus, writing code in an asynchronous fashion could lead to a theoretical speedup of with respect to the sequential code. The performance increase is due to the fact that lines 23 and 24 of snippet (b) will run the arrDotProd task in parallel.
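The experiment can be approximated with the sketch below, which is not the original Listing 1 (its line numbers therefore do not match the ones cited above). It assumes that the 10 ms array dot product is simulated with a busy wait in the synchronous version and with a timer wrapped in a Promise in the asynchronous version; apart from `arrDotProd`, all names are hypothetical.

```javascript
const DELAY_MS = 10;

// Synchronous stand-in for the array dot product: blocks for about 10 ms.
function arrDotProdSync() {
  const end = Date.now() + DELAY_MS;
  while (Date.now() < end) {
    // busy wait simulating the computation
  }
}

// Asynchronous stand-in: resolves after about 10 ms without blocking.
function arrDotProd() {
  return new Promise((resolve) => setTimeout(resolve, DELAY_MS));
}

// (a) Synchronous style: k * k array dot products executed one after another.
function matDotProdSync(k) {
  let count = 0;
  for (let i = 0; i < k; i++) {
    for (let j = 0; j < k; j++) {
      arrDotProdSync();
      count++;
    }
  }
  return count;
}

// (b) Asynchronous style: all k * k tasks are started, then awaited together.
function matDotProdAsync(k) {
  const tasks = [];
  for (let i = 0; i < k; i++) {
    for (let j = 0; j < k; j++) {
      tasks.push(arrDotProd());
    }
  }
  return Promise.all(tasks);
}

const k = 4;
console.time('sync');
matDotProdSync(k);
console.timeEnd('sync'); // roughly k * k * 10 ms

console.time('async');
matDotProdAsync(k).then(() => console.timeEnd('async')); // roughly 10 ms
```

In this sketch, the gain comes from overlapping the simulated timer delays; the measured ratio therefore represents an upper bound, which is consistent with the speedup being described as theoretical.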

Refactoring the code in an asynchronous fashion by either “promisifying” the functions, as depicted in snippet (b) of Listing 1, or relying on third-party libraries, such as Async.js (https://caolan.github.io/async/v3/ (accessed on 10 February 2021)), is an appealing design choice since this will lead to a drastic reduction in the execution times reported in Section 4.

However, the framework mathematical core was designed and implemented according to the following main guidelines:

- Implementation from scratch, without relying on any third-party package;

- Use of a synchronous programming style for the core mathematical functions.

Implementing the core from scratch with zero dependencies avoids the code malfunctioning due to either broken dependencies or changes in the APIs of the third-party libraries. On the other hand, using a synchronous programming style, although detrimental from a performance perspective, could significantly ease the porting of the mathematical core to other programming languages.

The main limitation of languages based on garbage collection, such as Java, JavaScript or Python, is their lack of performance when compared to low-level languages such as C or C++. This is why well-established ML frameworks such as TensorFlow.js come with a core written in C/C++ in order to achieve high performance.

In the specific case of Node.js, the garbage collector relies on the Mark and Sweep algorithm [84]. Mark and Sweep solves the problem of cross referencing objects; however, it is relatively slow when compared to other garbage-collection algorithms such as Automatic Reference Counting (ARC), and this could be a problem when striving for better performance. A possible way to improve performance is writing a native Node.js module in C/C++ and providing JavaScript bindings. However, there is a better solution, which could potentially lead to much better results. In recent years, Rust (https://www.rust-lang.org (accessed on 10 February 2021)) has attracted increasing attention from the programmer community. Rust is a low-level language designed for high speed, memory safety and thread safety, and it is particularly appealing for embedded applications. Rust does not rely on a garbage collector; instead, memory is managed through an ownership model enforced at compile time, and the allocated memory is immediately released when its owner falls out of scope. In addition, according to [85], Rust outperforms C and C++ in most cases (especially when using concurrency and multithreading) and provides seamless integration with JavaScript and Node.js [86].

From this perspective, optimising JavaScript code makes no sense, since a much better performance can be achieved by porting computation- and memory-intensive operations to Rust and providing bindings for the JavaScript application. For this reason, the framework ML core is in the process of being ported to Rust. Combining the power and speed of Rust and the flexibility and the large ecosystem of Node.js has the potential to enable new ML applications in which part of the data analysis and processing is shifted to the embedded device itself.

The framework presented in this work is suitable for all those IoT applications that rely on batch-processing of the sampled data [87,88,89]; however, it is envisaged that the performance boost achievable by rewriting the mathematical core in Rust will make this framework suitable for that class of applications that requires stream and real-time processing.

A general-purpose and embeddable ML framework may potentially enable new architectures, in which some of the computational tasks are shifted to the edge devices. In such a context, the software presented in this work is now being tested in a staging environment for metabolomics applications [90]. Metabolomics requires batch processing of huge amounts of data; thus, the target requirements in terms of data-throughput perfectly match the framework current characteristics. The goals of this ongoing research are:

- Automating the whole ML workflow, as depicted in Figure 2, by leveraging the data-wrangling features and the ML algorithms bundled with the framework;

- Integrating data versioning systems such as DVC (https://dvc.org/ (accessed on 10 February 2021)) into the ML pipeline;

- Stressing and analysing the performances of the classification algorithms which are more suitable for metabolomic problems;

- Digitalising the existing metabolomics lab infrastructure, as envisaged in [91], using an evolution of the IoT architecture previously proposed in [9], in which each lab machine is provided with software wrappers that allow autonomous data analysis on the sampled data;

- Leveraging the API-level compatibility with TensorFlow.js to implement load-balancing algorithms that seamlessly and dynamically shift ML algorithms’ execution from edge devices to edge servers running TensorFlow.js;

- Deploying and stressing novel IoT architectures that rely on intra-lab sensor meshing and a distributed MapReduce with federated execution [92].

The framework also provides Artificial Neural Network (ANN) support; however, it suffers from the same limitations as other implementations, such as TensorFlow Lite, when dealing with Deep Neural Networks. Deep neural models are computationally and memory-expensive, and require a compilation step to simplify the network model and make it suitable for execution in a resource-constrained edge device. A possible way of simplifying Deep Neural Networks consists of using pruning algorithms to reduce the number of neurons and synapses [93]. As outlined in Section 3.1, the ANN inference engine is still under development, which limits the application field to algorithms that rely on shallow neural networks. This means that, at this stage of development, the framework cannot efficiently deal with some kinds of application, such as efficient power management in networked microgrids [94,95,96], since they require algorithms that can only be efficiently implemented using deep convolutional neural networks [97].

6. Conclusions

This work deals with the design and implementation of an ML framework suitable for embedded devices and IoT applications. The framework also provides APIs for artificial neural networks and data-wrangling and analysis. The following stages took place during the design process:

- First, a set of ML algorithms suitable for IoT applications were identified through a careful literature review;

- Then, the core supporting software infrastructure was developed, privileging, by design, portability to other programming languages;

- Finally, the core infrastructure was stressed using an ad-hoc benchmarking tool in order to gain a better insight into the limitations imposed by the software architecture and the underlying hardware.

Although development is still at an early stage, the results are encouraging, and the measurements demonstrate that it is possible to embed a fully fledged ML framework into a resource-constrained computing board.

The framework was demonstrated on Raspberry Pi III and IV boards, and its performance was evaluated for several load conditions with rank 1 and rank 2 tensors and matrices and for several values of the dimension k. The delay in rank 1 tensor operations on a Raspberry Pi III board ranges from a few ms (for ) to roughly 70 ms (for ). The latency increases up to s for some rank 2 tensor operations. The most time-consuming operation is the matrix dot product, measured for a matrix with , whose latency is approximately s.

Matrix and tensor operations are both computation- and memory-intensive; thus, migrating to a Raspberry Pi IV board leads to a significant speed-up of up to with respect to the performances measured for the Raspberry Pi III set-up. Detailed measurements are reported in Tables 8–25.

The framework is suitable for all applications that rely on batch-data-processing and is now being tested in a set-up suitable for metabolomics applications, focusing on ML pipeline automation and algorithm performance and optimisation.

A further performance boost can be achieved by migrating the mathematical core to a high-performance language suitable for embedded applications like Rust, and implementing the bindings for Node.js to connect the new optimised core to the rest of the framework.

It must be highlighted that the proposed solution differs from other solutions, such as TensorFlow Lite. While the latter is a runtime environment that allows a pre-trained model to be run on a small device, the solution described in this work is a full-fledged and modular framework that offers the possibility of implementing the complete ML workflow on an embedded device.

Hardware and software technologies are mature enough to allow the implementation of a lightweight, yet high-performance ML core, capable of running in embedded devices, thus offering a path to a new pervasive computing paradigm in which the IoT devices are not merely data collectors and forwarders, but real “smart” devices with enough computing power. In such a context, Node.js and Rust are really appealing technologies and seem to guarantee enough performance and flexibility to achieve this goal in the medium-term.

Author Contributions

Conceptualization, G.C. and A.T.; methodology, G.C. and A.T.; software, G.C.; validation, G.C. and A.T.; investigation, G.C. and A.T.; writing—original draft preparation, G.C.; writing—review and editing, G.C. and A.T.; supervision, A.T. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Conflicts of Interest

The authors declare no conflict of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| AI | Artificial Intelligence |

| ALC | Adaptive Linear Combiner |

| ANN | Artificial Neural Network |

| API | Application Programming Interface |

| ARC | Automatic Reference Counting |

| BLE | Bluetooth Low Energy |

| CEC | Constant Error Carousel |

| CoAP | Constrained Application Protocol |

| CSV | Comma Separated Value |

| CPU | Central Processing Unit |

| DT | Decision Tree |

| EVD | Eigenvalue Decomposition |

| GPIO | General Purpose Input Output |

| GPU | Graphics Processing Unit |

| HMM | Hidden Markov Model |

| HMNB | Hierarchical Mixture of Naive Bayes |

| IoT | Internet of Things |

| JSON | JavaScript Object Notation |

| KNN | K-Nearest Neighbour |

| LAE | Linear Algebra Engine |

| LSTM | Long Short-Term Memory |

| LPDDR | Low-Power Double Data Rate |

| LTS | Long-Term Support |

| MDP | Markov Decision Process |

| ML | Machine Learning |

| MLR | Multiple Linear Regression |

| MOE | Margin of Error |

| MQTT | Message Queuing Telemetry Transport |

| NB | Naive Bayes |

| NIPALS | Nonlinear Iterative PArtial Least Squares |

| OS | Operating System |

| PCA | Principal Component Analysis |

| PDS | Post-Decision State |

| PSV | Pipe Separated Value |

| RAM | Random Access Memory |

| RNN | Recurrent Neural Network |

| ReLU | Rectified Linear Unit |

| RF | Random Forest |

| RME | Relative Margin of Error |

| RT | Regression Tree |

| SEM | Standard Error of Mean |

| SDRAM | Synchronous Dynamic RAM |

| SVD | Singular Value Decomposition |

| SVM | Support Vector Machine |

| TSV | Tab Separated Value |

| UCB | Upper Confidence Bound |

| USB | Universal Serial Bus |

| WiFi | Wireless Fidelity |

Appendix A. Optimal Data Fitting

Overfitting and underfitting are causes of poor performance in machine learning models. More specifically:

- Overfitting is a lack of generalization of the ML model caused by fitting the training data too closely. Namely, the model is too specific to the training data and fails when it is applied to data collected in the future, generating erroneous outcomes;

- Underfitting happens when the learning model is not accurate enough to capture the underlying relationship in the data. Namely, the model fails to learn the problem from the training dataset.

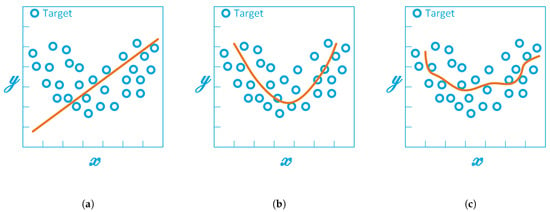

The approximation with a simple straight line of Figure A1a is not a good approximation because it does not render the nonlinear relationship between the model variables x and y. This model is underfit to the data and it does not perform well, even with the training data. Conversely, the model of Figure A1c is an example of overfitting. This model learns all the details and noise of the training data; namely, the model captures the random fluctuations in the training data and incorporates them as part of the model. The problem is that irrelevant details and noise do not apply to new data and can negatively impact the model’s ability to generalise. Finally, Figure A1b depicts a model with optimal fit. Optimal fit lies between underfitting and overfitting. The optimal fit can be found by analysing the model’s behaviour for the training and test datasets. During the training process, the error on both training and test datasets decreases; however, when the model is overtrained, the error on the training dataset keeps decreasing (i.e., the model is overfitting), whereas the error on the test dataset starts to increase. The optimal fit is the point just before the error on the test dataset starts increasing.

Figure A1. Different model approximations: (a) model with underfitting, (b) model with optimal fit, and (c) model with overfitting.

Underfitting is rarely a serious problem, because it can be easily detected by selecting a good performance metric. Conversely, overfitting is very common in nonparametric and nonlinear models, and can be tackled by using resampling techniques or by suitably tuning the model’s hyperparameters to limit or constrain the amount of detail learned by the model.
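As a minimal sketch of the stopping criterion described above, the following Python snippet locates the point just before the test error starts increasing. The helper name `optimal_fit_epoch` and the error values are assumptions made for illustration only; they are not part of the framework presented in this work.

```python
def optimal_fit_epoch(test_errors):
    """Return the index of the last epoch before the test error starts increasing.

    Hypothetical helper: `test_errors` holds one test-set error per training
    epoch (or per model-complexity step), in chronological order.
    """
    if not test_errors:
        raise ValueError("test_errors must not be empty")
    for epoch in range(len(test_errors) - 1):
        # The first rise in test error marks the onset of overfitting.
        if test_errors[epoch + 1] > test_errors[epoch]:
            return epoch
    # The test error never increased: the last epoch is the best one seen.
    return len(test_errors) - 1


# Made-up error curves, for illustration only.
train_errors = [0.90, 0.60, 0.40, 0.25, 0.15, 0.08, 0.03]
test_errors = [0.95, 0.70, 0.50, 0.42, 0.45, 0.55, 0.70]

best = optimal_fit_epoch(test_errors)
print(f"optimal fit at epoch {best}: "
      f"train error {train_errors[best]}, test error {test_errors[best]}")
```

With these made-up curves the training error keeps decreasing after epoch 3, while the test error starts to rise, so epoch 3 is selected. Real error curves are usually noisy, so a practical implementation would likely tolerate a few non-improving epochs before stopping.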

Appendix B. Key Requirements for ML Models

Appendix B.1. Accuracy

In machine learning, accuracy is the measure of the effectiveness of an ML model in terms of correct predictions or classifications. Accuracy can be measured using classification accuracy. Classification accuracy (or simply Accuracy) is defined as the ratio of the number of correct predictions to the number of total predictions performed on the dataset, namely

$$\text{Accuracy} = \frac{\text{number of correct predictions}}{\text{total number of predictions}} \qquad \text{(A1)}$$

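However, relying only on this metric to evaluate the effectiveness of an ML algorithm can be misleading, especially in the case of heavily unbalanced datasets. For example, if 99% of the samples belong to one class, a model that always predicts that class reaches 99% accuracy while never detecting the minority class. This is unacceptable when the cost of a misclassification is high (e.g., failing to detect a rare disease).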

Several metrics can be used to suitably evaluate the performance of an ML algorithm; the interested reader may refer to [98,99] for a detailed treatment.
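The following Python sketch illustrates why classification accuracy alone can be misleading on an unbalanced dataset. The labels are made up, and recall is used here only as one example of a complementary metric; the function names are assumptions chosen for illustration.

```python
def accuracy(y_true, y_pred):
    """Ratio of correct predictions to the total number of predictions."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("inputs must be non-empty and of equal length")
    correct = sum(1 for t, p in zip(y_true, y_pred) if t == p)
    return correct / len(y_true)


def recall(y_true, y_pred, positive=1):
    """Fraction of the actual positive samples that were predicted as positive."""
    predictions_for_positives = [p for t, p in zip(y_true, y_pred) if t == positive]
    if not predictions_for_positives:
        raise ValueError("no positive samples in y_true")
    hits = sum(1 for p in predictions_for_positives if p == positive)
    return hits / len(predictions_for_positives)


# Made-up, heavily unbalanced dataset: 95 healthy (0) and 5 diseased (1) samples.
y_true = [0] * 95 + [1] * 5
# A trivial "model" that always predicts the majority class.
y_pred = [0] * 100

print(accuracy(y_true, y_pred))  # 0.95
print(recall(y_true, y_pred))    # 0.0
```

The trivial model scores 95% accuracy although it never detects a single positive case, which the recall value of 0 makes evident.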

Appendix B.2. Training Time

In supervised learning, training time is the time spent fitting the ML model to historical data in order to minimise the prediction errors. The training time is closely related to accuracy and to the type of ML algorithm used.

Appendix B.3. Linearity

A prediction function can be either linear or non-linear. Given a feature vector $x = (x_1, x_2, \ldots, x_n)$, a linear prediction function can be represented as

$$y = \theta_0 + \theta_1 x_1 + \theta_2 x_2 + \cdots + \theta_n x_n \qquad \text{(A2)}$$

where $x_i$ denotes the $i$-th property of the feature vector $x$, $y$ is the predicted target (or label), and the coefficients $\theta_i$ are the parameters of the model. Examples of linear ML algorithms are linear regression, logistic regression, and support vector machine (with a linear kernel). Conversely, a non-linear ML algorithm is characterized by a non-linear prediction function. Examples of non-linear ML algorithms are naive Bayes, Gaussian naive Bayes, and K-nearest neighbours.
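A linear prediction function is simply an intercept plus a weighted sum of the feature values. The Python sketch below shows this computation; the function name `linear_predict` and the coefficient values are hypothetical and serve only as an illustration.

```python
def linear_predict(features, coefficients, intercept=0.0):
    """Intercept plus the weighted sum of the feature values."""
    if len(features) != len(coefficients):
        raise ValueError("features and coefficients must have the same length")
    return intercept + sum(c * x for c, x in zip(coefficients, features))


# Made-up model with two features: y = 1.0 + 2.0 * x1 - 0.5 * x2
coefficients = [2.0, -0.5]
intercept = 1.0

print(linear_predict([3.0, 4.0], coefficients, intercept))  # 5.0
```

Training a linear model amounts to estimating the intercept and the coefficients from data; the prediction step itself stays this cheap, which is one reason linear models suit resource-constrained devices.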

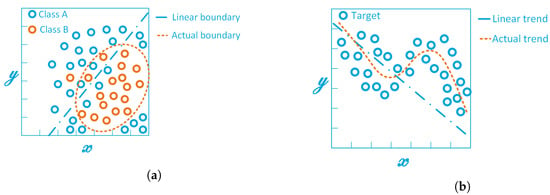

Usually, linear algorithms are a good starting point, since they tend to be algorithmically simpler and faster to train than their non-linear counterparts. However, they are not always the best choice, as shown in the examples of Figure A2.

Figure A2. Linear approximation of the prediction function for (a) a binary classifier with a non-linear boundary, and (b) a dataset with non-linear trend.

Appendix B.4. Number of Parameters and Hyperparameters

The terms parameter and hyperparameter are sometimes used interchangeably in machine learning; however, they are not the same thing. If we refer to the simple regression model of Equation (A2), the coefficients of the linear combination represent the model parameters. Thus, parameters are internal to the model: they are not set manually but are learned or estimated from the data during model training. An ML model can be either parametric or nonparametric, depending on whether it has a fixed number of parameters. Examples of model parameters are the coefficients in a linear or logistic regression, or the weights in an artificial neural network. Conversely, a hyperparameter is a configuration external to the model that cannot be learnt from the data and must be set manually and properly tuned to a specific problem. Hyperparameters are necessary for the correct set-up of the estimation process of the learning parameters. Examples of model hyperparameters are the k of the K-nearest neighbour algorithm, C and the kernel parameter of the support vector machine algorithm, and the number and size of the hidden layers of a neural network.

The number of parameters and hyperparameters of an ML model affects the algorithm's performance, since the training time is sensitive to their number.
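The distinction can be illustrated with a short Python sketch, given here under simplifying assumptions (one-dimensional data, made-up values, hypothetical function names). The slope and intercept of a simple linear regression are parameters estimated from the data, whereas the k of a K-nearest neighbour regressor is a hyperparameter supplied by the user.

```python
def fit_simple_linear_regression(xs, ys):
    """Estimate slope and intercept (the model parameters) by least squares."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("xs must contain at least two distinct values")
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


def knn_predict(xs, ys, query, k):
    """Average the targets of the k training points closest to `query`.

    k is a hyperparameter: it is chosen by the user, not learned from the data.
    """
    nearest = sorted(zip(xs, ys), key=lambda pair: abs(pair[0] - query))[:k]
    return sum(y for _, y in nearest) / len(nearest)


# Made-up training data lying exactly on y = 2x + 1.
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.0, 3.0, 5.0, 7.0, 9.0]

slope, intercept = fit_simple_linear_regression(xs, ys)
print(slope, intercept)               # 2.0 1.0 (learned from the data)
print(knn_predict(xs, ys, 2.2, k=1))  # 5.0
print(knn_predict(xs, ys, 2.2, k=2))  # 6.0
```

The same training data yield different K-nearest neighbour predictions for different values of k, which is why hyperparameters have to be tuned to the specific problem.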

Appendix B.5. Number of Features

A feature is a measurable property or characteristic of the phenomenon under observation. A feature is the basic building block of a dataset. More specifically, one row of the dataset represents an observation or experiment, whereas each column of the dataset represents a feature. The number of features depends on the type of phenomenon observed; for example, genetic and textual data are characterized by a large number of features.

The performance of a machine-learning algorithm heavily depends on the number of features to be analysed, since a large number of features can make the training time extremely long.

The quality of a dataset can be improved with processes like feature selection and feature engineering. Feature selection is aimed at removing properties that are either redundant or not relevant from the dataset, without sacrificing the accuracy of the ML algorithm. The benefits of good feature selection are:

- Reducing the chance of overfitting the model;

- Reducing the ML algorithm training time;

- Improving the model’s interpretability by highlighting only those properties that are more relevant to performing a good prediction.

Feature engineering is the process of combining the existing features of a model to create new ones that can provide a deeper understanding of the problem, improving the accuracy of the model and helping it to converge faster to an optimal solution.
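Both processes can be sketched in a few lines of Python. The example below assumes a very simple selection criterion (dropping near-constant columns) and a made-up engineered feature (an area obtained from width and height); the column names, the threshold, and the helper names are all hypothetical, and many other selection criteria exist.

```python
from statistics import pvariance


def select_features(rows, names, min_variance=1e-12):
    """Keep only the columns whose variance exceeds `min_variance`.

    A (near-)constant column carries no information for prediction, so
    dropping it is one simple, illustrative feature-selection criterion.
    """
    columns = list(zip(*rows))
    keep = [i for i, col in enumerate(columns) if pvariance(col) > min_variance]
    selected_rows = [[row[i] for i in keep] for row in rows]
    return selected_rows, [names[i] for i in keep]


def add_area_feature(rows, names):
    """Feature engineering: derive `area` from the `width` and `height` columns."""
    w, h = names.index("width"), names.index("height")
    return [row + [row[w] * row[h]] for row in rows], names + ["area"]


# Made-up dataset: one row per observation, one column per feature.
names = ["width", "height", "sensor_id"]
rows = [
    [2.0, 3.0, 7],
    [4.0, 1.0, 7],
    [5.0, 2.0, 7],
]

rows, names = select_features(rows, names)   # drops the constant sensor_id column
rows, names = add_area_feature(rows, names)  # appends width * height
print(names)  # ['width', 'height', 'area']
print(rows)   # [[2.0, 3.0, 6.0], [4.0, 1.0, 4.0], [5.0, 2.0, 10.0]]
```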

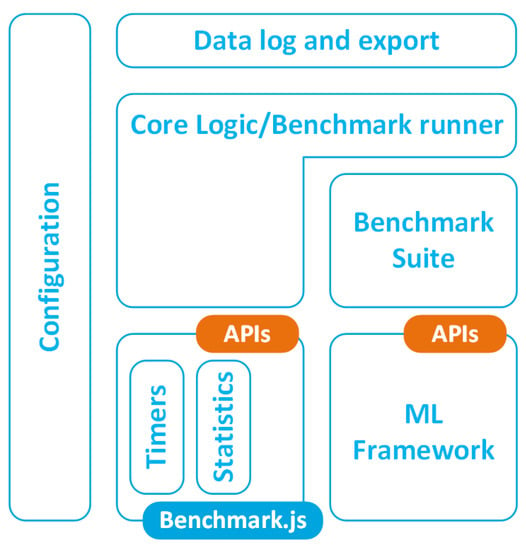

Appendix C. Benchmarking Tool Architecture and Implementation

Figure A3 depicts the architecture of the benchmark tool used to evaluate the performance of the ML framework presented in this work. The tool is built on top of the Benchmark.js (https://benchmarkjs.com (accessed on 10 February 2021)) library, which provides low-level APIs to control system timers and perform statistical operations on the measured data.

The benchmark tool comprises the following modules:

- A benchmark suite; namely, a module that invokes the primitives of the ML framework that must be evaluated;

- A benchmark runner, in charge of executing the measurements on both synchronous and asynchronous modules of the ML framework and collecting the measured data by leveraging the primitives provided by Benchmark.js;

- A data log and export module in charge of logging results and preparing and formatting the data in several text-exporting formats (CSV, TSV, etc.) that are suitable for import into a spreadsheet;

- A configuration module that parses a configuration file with the general benchmark set-up (number of simulations, duration, data output format, etc.) and configures all the benchmark modules accordingly.
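The interplay of the four modules listed above can be sketched as follows. This is a simplified Python analogue written only to illustrate the architecture; it does not use Benchmark.js and is not the tool used in this work. All names, the configuration values, and the two benchmarked functions are assumptions, and only synchronous functions are covered.

```python
import csv
import io
import statistics
import time


def run_benchmark(name, func, runs=20):
    """Benchmark runner: time `func` over `runs` calls and summarise the samples."""
    samples = []
    for _ in range(runs):
        start = time.perf_counter()
        func()
        samples.append(time.perf_counter() - start)
    return {
        "name": name,
        "runs": runs,
        "mean_s": statistics.mean(samples),
        "stdev_s": statistics.stdev(samples) if runs > 1 else 0.0,
    }


def export_results(results, delimiter=","):
    """Data export: format the results as CSV (comma) or TSV (tab) text."""
    buffer = io.StringIO()
    writer = csv.DictWriter(
        buffer,
        fieldnames=["name", "runs", "mean_s", "stdev_s"],
        delimiter=delimiter,
        lineterminator="\n",
    )
    writer.writeheader()
    writer.writerows(results)
    return buffer.getvalue()


# Configuration: a real tool would parse these values from a configuration file.
config = {"runs": 10, "delimiter": "\t"}

# Benchmark suite: hypothetical stand-ins for the primitives under evaluation.
suite = {
    "sum_of_squares": lambda: sum(i * i for i in range(10_000)),
    "sort_reversed": lambda: sorted(range(10_000, 0, -1)),
}

results = [run_benchmark(name, func, config["runs"]) for name, func in suite.items()]
print(export_results(results, config["delimiter"]))
```

The measured times depend on the machine, so no expected output is given. The tab-separated text can be imported directly into a spreadsheet.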

Figure A3. Architecture of the benchmark tool.

References

- Ahamed, F.; Farid, F. Applying Internet of Things and Machine-Learning for Personalized Healthcare: Issues and Challenges. In Proceedings of the 2018 International Conference on Machine Learning and Data Engineering (iCMLDE), Sydney, Australia, 3–7 December 2018; pp. 19–21.

- Sen, S.; Datta, L.; Mytra, S. (Eds.) Machine Learning and IoT: A Biological Perspective; CRC Press: Boca Raton, FL, USA, 2019; ISBN 978-1-13-849269-1.

- Walter, K.-D. AI-based sensor platforms for the IoT in smart cities. In Big Data Analytics for Cyber-Physical Systems. Machine Learning for the Internet of Things; Dartmann, G., Song, H., Schmeink, A., Eds.; Elsevier: Amsterdam, The Netherlands, 2019; pp. 145–166. ISBN 978-0-12-816637-6.

- Cornetta, G.; Touhafi, A.; Muntean, G.-M. (Eds.) Social, Legal, and Ethical Implications of IoT, Cloud, and Edge Computing Technologies; IGI Global: Hershey, PA, USA, 2020; ISBN 978-1-79-983817-3.

- Kumar Koditala, N.; Shekar Pandey, P. Water Quality Monitoring System Using IoT and Machine Learning. In Proceedings of the 2018 International Conference on Research in Intelligent and Computing in Engineering (RICE), San Salvador, El Salvador, 22–24 August 2018; pp. 1–5.

- Ullo, S.L.; Sinha, G.R. Advances in Smart Environment Monitoring Systems Using IoT and Sensors. Sensors 2020, 20, 3113.

- Hossam, M.; Kamal, M.; Moawad, M.; Maher, M.; Salah, M.; Abady, Y.; Hesham, A.; Khattab, A. PLANTAE: An IoT-Based Predictive Platform for Precision Agriculture. In Proceedings of the 2018 International Japan-Africa Conference on Electronics, Communications and Computations (JAC-ECC), Alexandria, Egypt, 17–19 December 2018; pp. 87–90.

- Araby, A.A.; Abd Elhameed, M.M.; Magdy, N.M.; Said, L.A.; Abdelaal, N.; Abd Allah, Y.T.; Darweesh, M.S.; Fahim, M.A.; Mostafa, H. Smart IoT Monitoring System for Agriculture with Predictive Analysis. In Proceedings of the 2019 8th International Conference on Modern Circuits and Systems Technologies (MOCAST), Thessaloniki, Greece, 13–15 May 2019; pp. 1–4.

- Cornetta, G.; Touhafi, A.; Togou, M.A.; Muntean, G.-M. Fabrication-as-a-Service: A Web-Based Solution for STEM Education Using Internet of Things. IEEE Internet Things J. 2020, 7, 1519–1530.

- Mathur, P. Machine Learning Applications Using Python Cases Studies from Healthcare, Retail, and Finance; Springer Science + Business Media: New York, NY, USA, 2019; ISBN 978-1-4842-3787-8.

- Ray, P.P. An Introduction to Dew Computing: Definition, Concept and Implications. IEEE Access 2018, 6, 723–737.

- Reddy, R.R.; Mamatha, C.; Reddy, R.G. A Review on Machine Learning Trends, Application and Challenges in Internet of Things. In Proceedings of the 2018 International Conference on Advances in Computing, Communications and Informatics (ICACCI), Bangalore, India, 19–22 September 2018; pp. 2389–2397.

- Sharma, K.; Nandal, R. A Literature Study On Machine Learning Fusion with IOT. In Proceedings of the 2019 3rd International Conference on Trends in Electronics and Informatics (ICOEI), Tirunelveli, India, 23–25 April 2019; pp. 1440–1445.

- Zou, Z.; Jin, Y.; Nevalainen, P.; Huan, Y.; Heikkonen, J.; Westerlund, T. Edge and Fog Computing Enabled AI for IoT-An Overview. In Proceedings of the IEEE International Conference on Artificial Intelligence Circuits and Systems (AICAS), Hsinchu, Taiwan, 18–20 March 2019; pp. 51–56.

- Shafique, M.; Theocharides, T.; Bouganis, C.-S.; Hanif, M.A.; Khalid, F.; Hafiz, R.; Rehman, S. An Overview of Next-Generation Architectures for Machine Learning: Roadmap, Opportunities and Challenges in the IoT Era. In Proceedings of the Design, Automation and Test in Europe Conference (DATE), Dresden, Germany, 19–23 March 2018; pp. 827–832.

- Lee, J.; Stanley, M.; Spanias, A.; Tepedelenlioglu, C. Integrating machine learning in embedded sensor systems for Internet-of-Things applications. In Proceedings of the 2016 IEEE International Symposium on Signal Processing and Information Technology (ISSPIT), Limassol, Cyprus, 12–14 December 2016; pp. 290–294.

- Suresh, V.M.; Sidhu, R.; Karkare, P.; Patil, A.; Lei, Z.; Basu, A. Powering the IoT through embedded machine learning and LoRa. In Proceedings of the IEEE 4th World Forum on Internet of Things (WF-IoT), Singapore, 5–8 February 2018; pp. 349–354.

- Han, T.; Muhammad, K.; Hussain, T.; Lloret, J.; Baik, S.W. An Efficient Deep Learning Framework for Intelligent Energy Management in IoT Networks. IEEE Internet Things J. 2020, 8, 3170–3179.

- Barba-Guaman, L.; Eugenio Naranjo, J.; Ortiz, A. Deep Learning Framework for Vehicle and Pedestrian Detection in Rural Roads on an Embedded GPU. Electronics 2020, 9, 589.

- Li, H.; Ota, K.; Dong, M. Learning IoT in Edge: Deep Learning for the Internet of Things with Edge Computing. IEEE Netw. 2018, 32, 96–101.

- Lyu, L.; Bezdek, J.C.; He, X.; Jin, J. Fog-Embedded Deep Learning for the Internet of Things. IEEE Trans. Ind. Inform. 2019, 15, 4206–4215.

- El-Rashidy, N.; El-Sappagh, S.; Islam, S.M.R.; El-Bakry, H.M.; Abdelrazek, S. End-To-End Deep Learning Framework for Coronavirus (COVID-19) Detection and Monitoring. Electronics 2020, 9, 1439.

- Sakr, F.; Bellotti, F.; Berta, R.; De Gloria, A. Machine Learning on Mainstream Microcontrollers. Sensors 2020, 20, 2638.

- Merenda, M.; Porcaro, C.; Iero, D. Edge Machine Learning for AI-Enabled IoT Devices: A Review. Sensors 2020, 20, 2533.

- Doyu, H.; Morabito, R.; Höller, J. Bringing Machine Learning to the Deepest IoT Edge with TinyML as-a-Service. IEEE IoT Newsletter. 2020. Available online: https://iot.ieee.org/newsletter/march-2020 (accessed on 10 February 2021).

- Khan, A.I.; Al-Badi, A. Open Source Machine Learning Frameworks for Industrial Internet of Things. Procedia Comput. Sci. 2020, 170, 571–577.

- Dingee, D. k3OS Takes Kubernetes to the Edge. Available online: https://containerjournal.com/topics/container-ecosystems/k3os-takes-kubernetes-to-the-edge/ (accessed on 10 February 2021).

- Melendez, C. Architecture Patterns for Kubernetes at the Edge. Available online: https://blog.equinix.com/blog/2020/12/14/architecture-patterns-for-kubernetes-at-the-edge/ (accessed on 10 February 2021).

- Gepperth, A.; Hammer, B. Incremental learning algorithms and applications. In Proceedings of the European Symposium on Artificial Neural Networks (ESANN), Bruges, Belgium, 27–29 April 2016; pp. 357–368.

- Ying, X. An overview of overfitting and its solutions. J. Phys. Conf. Ser. 2019, 1168, 022022.

- Zheng, A.; Casari, A. Feature Engineering for Machine Learning. Principles and Techniques for Data Scientists; O’Reilly Media: Sebastopol, CA, USA, 2018; ISBN 978-1-491-95324-2.