1. Introduction and Related Works

Gesture recognition is an attractive research direction because of its wide application in virtual reality, human–computer interaction, and sign recognition. However, it is also a big challenge for the research of continuous gesture recognition, because the number, order, and boundaries of gestures were unclear in a continuous gesture sequence [

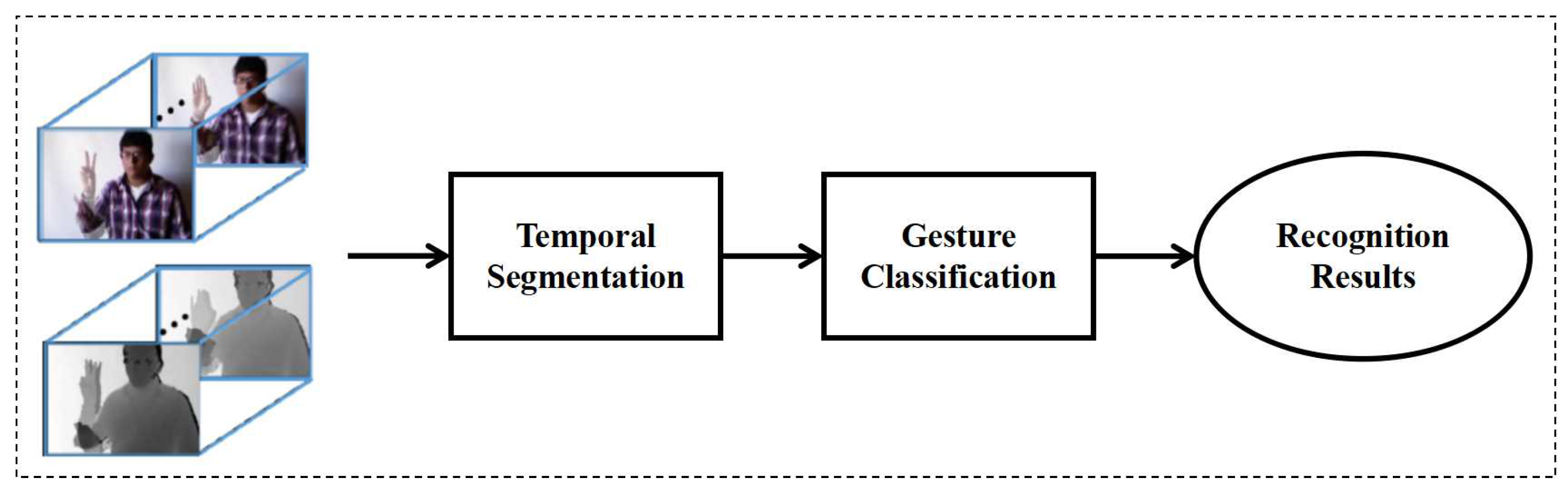

1]. Both the temporal segmentation and the recognition problems need to be solved in continuous gesture recognition. In fact, temporal segmentation and gesture recognition can be solved separately.

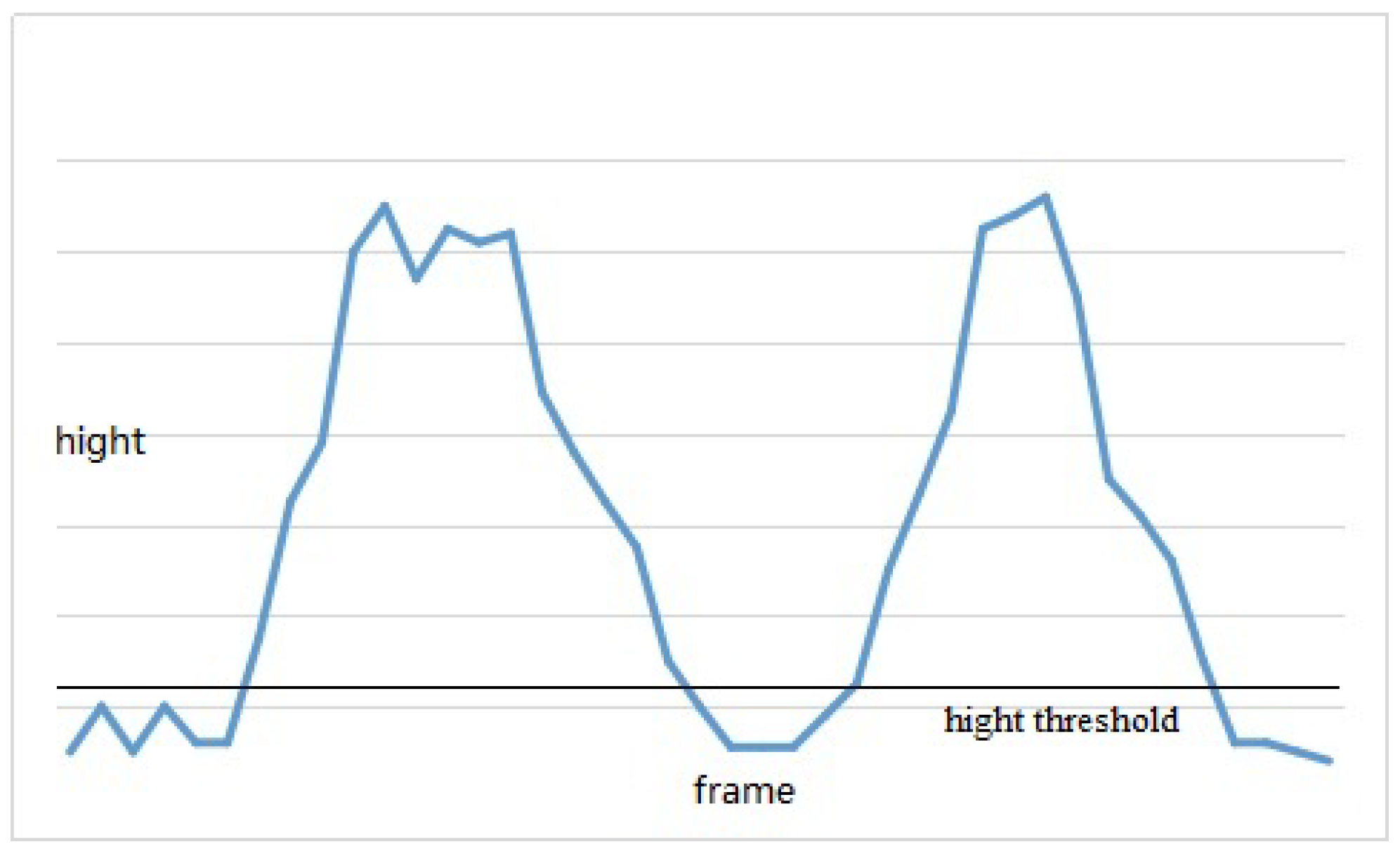

One typical challenge in continuous gesture recognition is temporal segmentation. The position and motion of hands were often employed for temporal segmentation [

2,

3]. However, these methods were sensitive to the complex background and built upon accurate hand detection. Sliding window is also a promising skill to obtain gesture instances with 3D convolutional neural networks (3DCNN) [

4]. Therefore, the computation of 3DCNN is expensive and the length of the sliding volume is fixed. To overcome the drawbacks of these works, a binary classification is proposed for temporal segmentation. As shown in

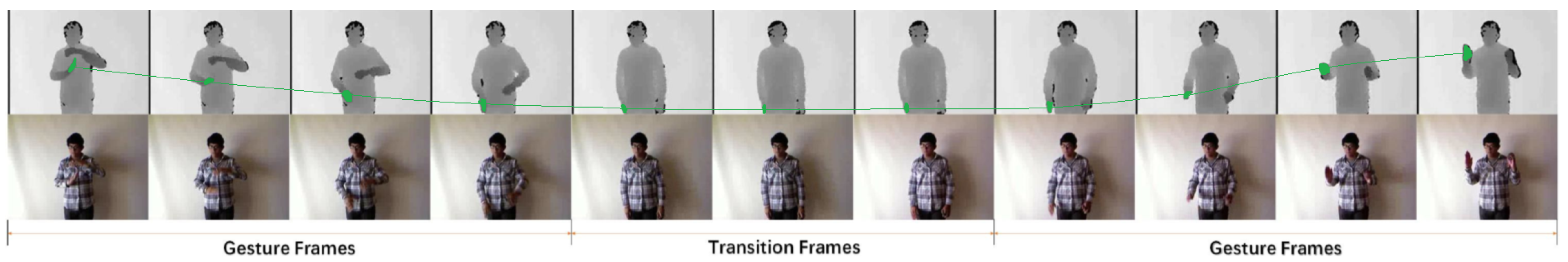

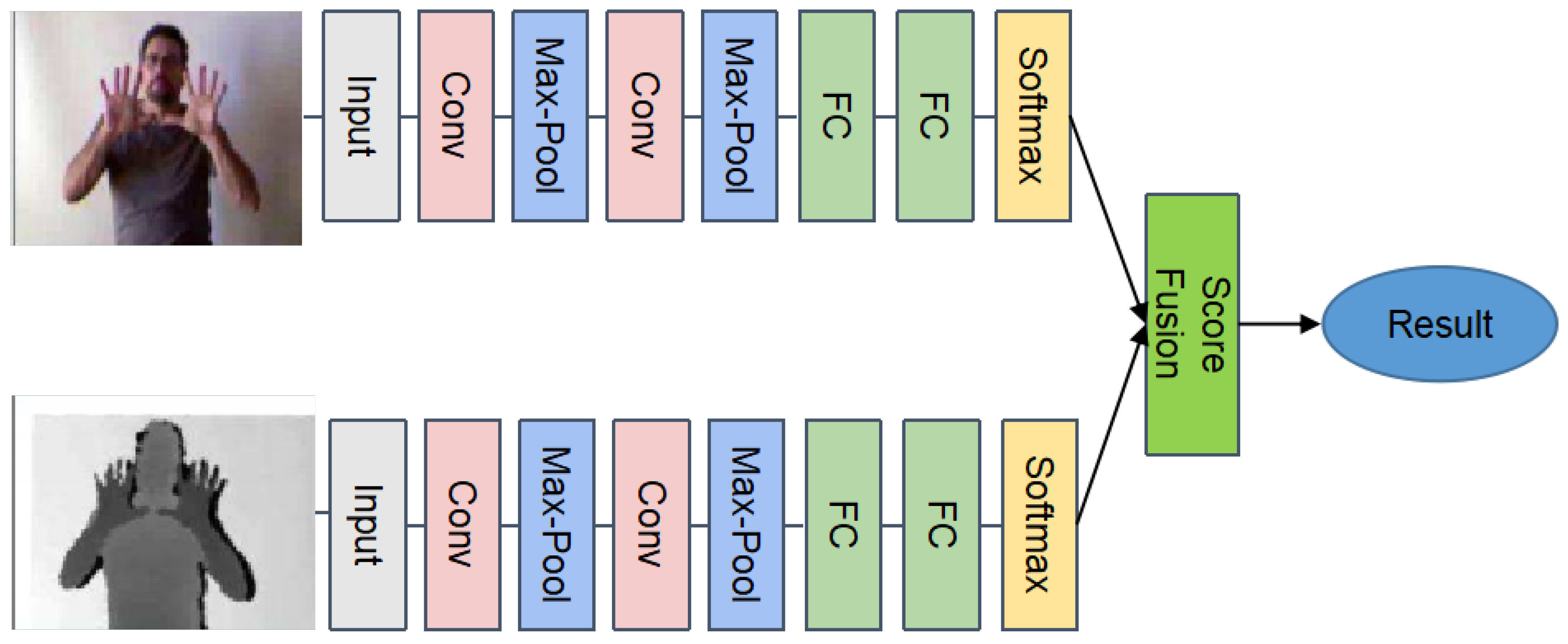

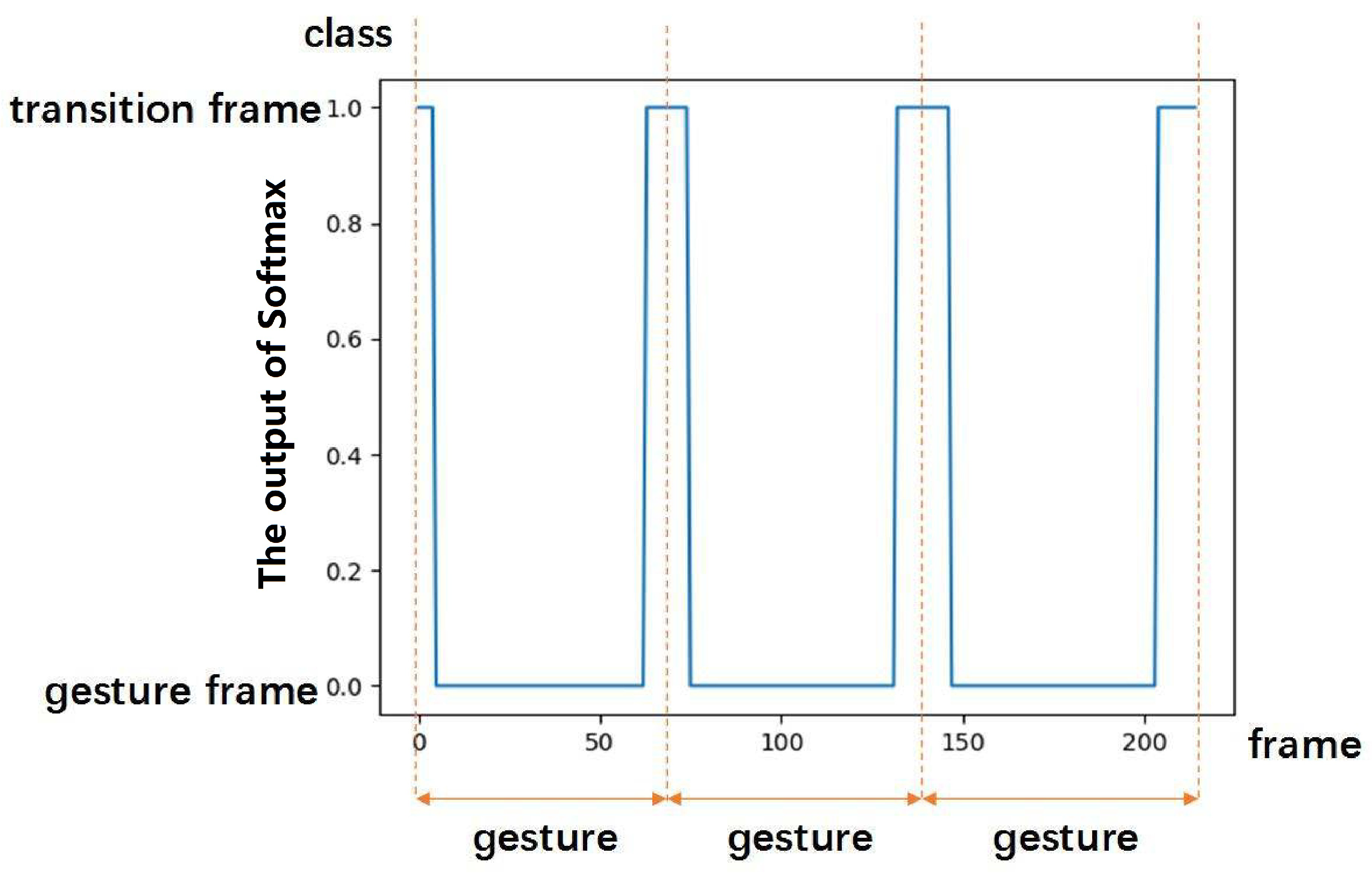

Figure 1, video frames can be classified into gesture frames that cover useful hand movement and transitional frames between adjacent gestures. We believe that appearance information and hand motion information are complementary in temporal segmentation. Therefore, a novel temporal segmentation method was proposed to distinguish between gesture frames and transitional frames by combining both appearance information and hand motion information.

After temporal segmentation, a continuous gesture sequence can be divided into several isolated gesture sequences. Therefore, isolated gesture recognition methods can be employed for the final recognition. Several attempts have been made to recognize gestures from RGB-D sequences with deep learning, including ConvNets combined with an RNN [

5,

6,

7,

8,

9,

10], 3D CNN [

11,

12,

13,

14,

15,

16,

17,

18,

19], Two-stream CNNs [

20,

21,

22,

23,

24,

25,

26], and Dynamic Image (DI)-based methods [

27,

28,

29,

30,

31,

32]. However, we argue that appropriate gesture recognition methods need to be selected according to the difference characteristics of RGB modality and depth modality. Therefore, we propose a novel gesture recognition network, which deals with RGB and deep modality in different ways, respectively. For the RGB modality, the proposed method adopts 3D ConvLSTM [

9] to learn spatiotemporal features from video frames of a RGB sequence and its saliency sequence. An example of a RGB sequence and its saliency sequence was shown in

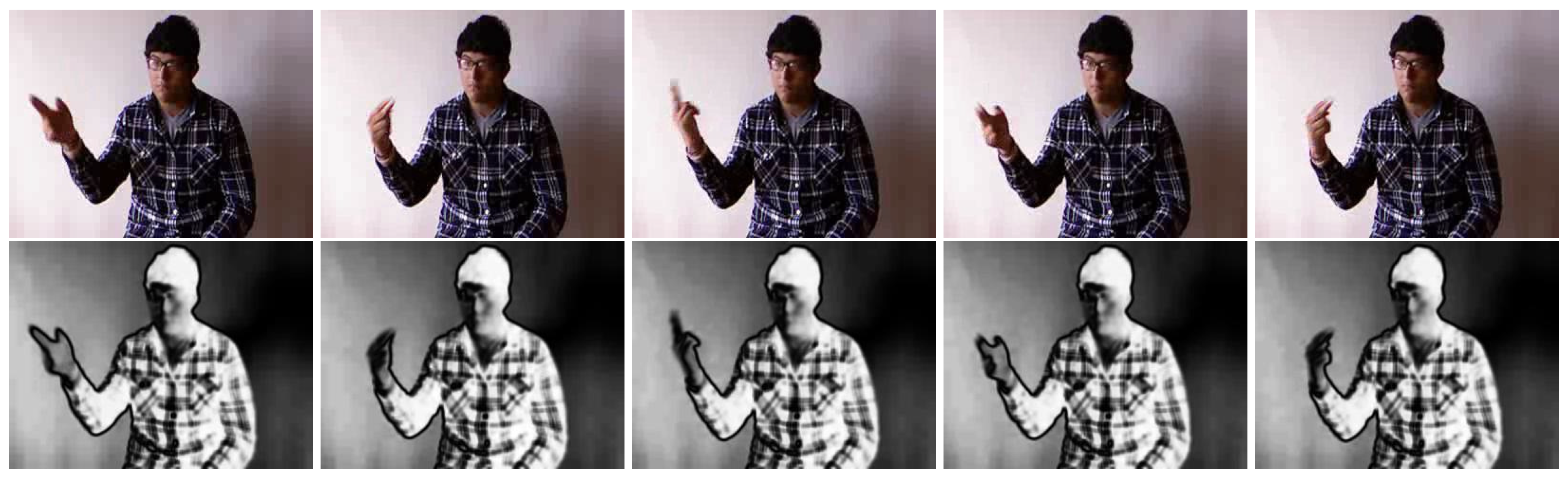

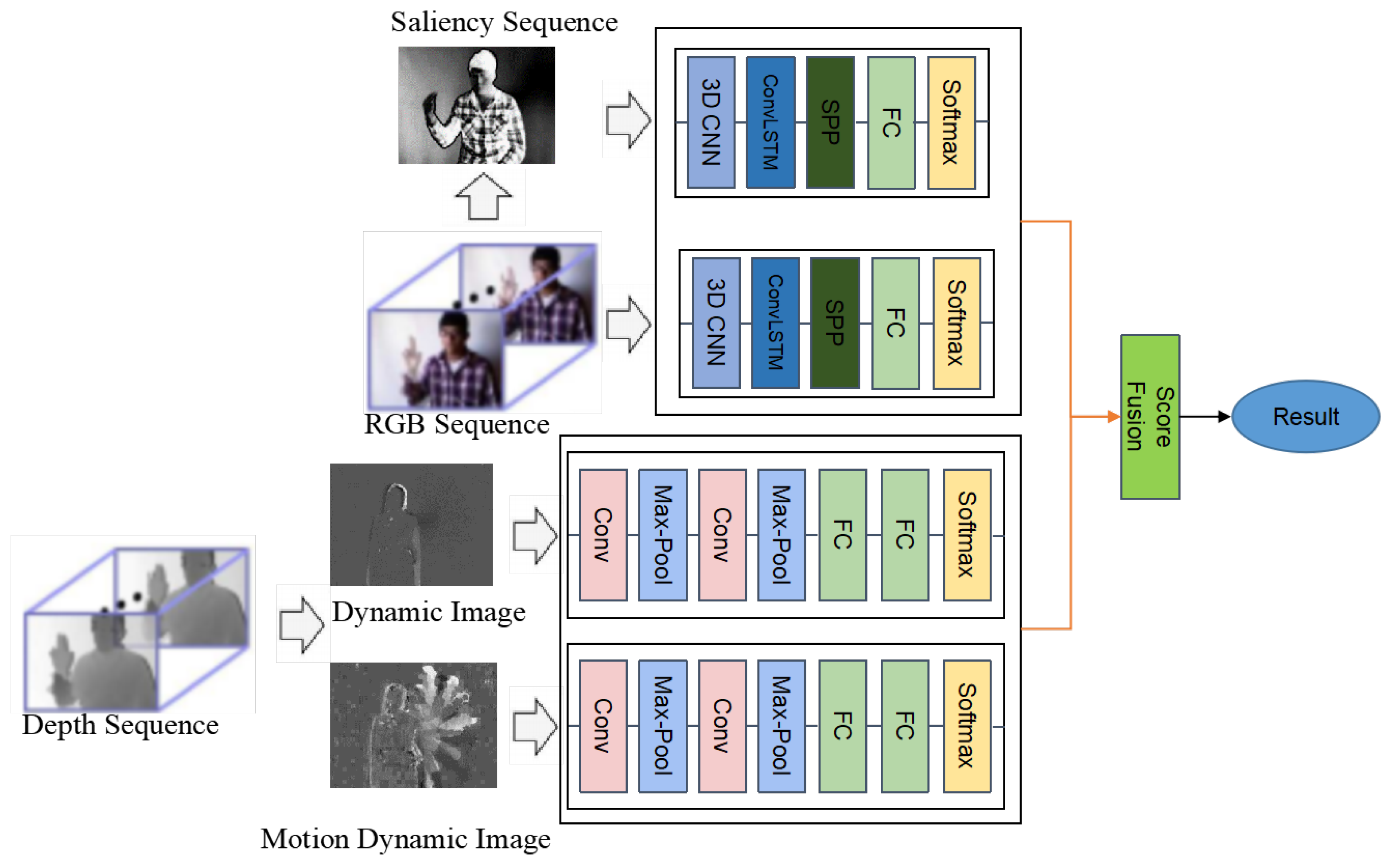

Figure 2. For depth modality, inspired by the outstanding performance of rank pooling [

27,

28,

30,

31,

33,

34,

35], this paper employs weighted rank pooling [

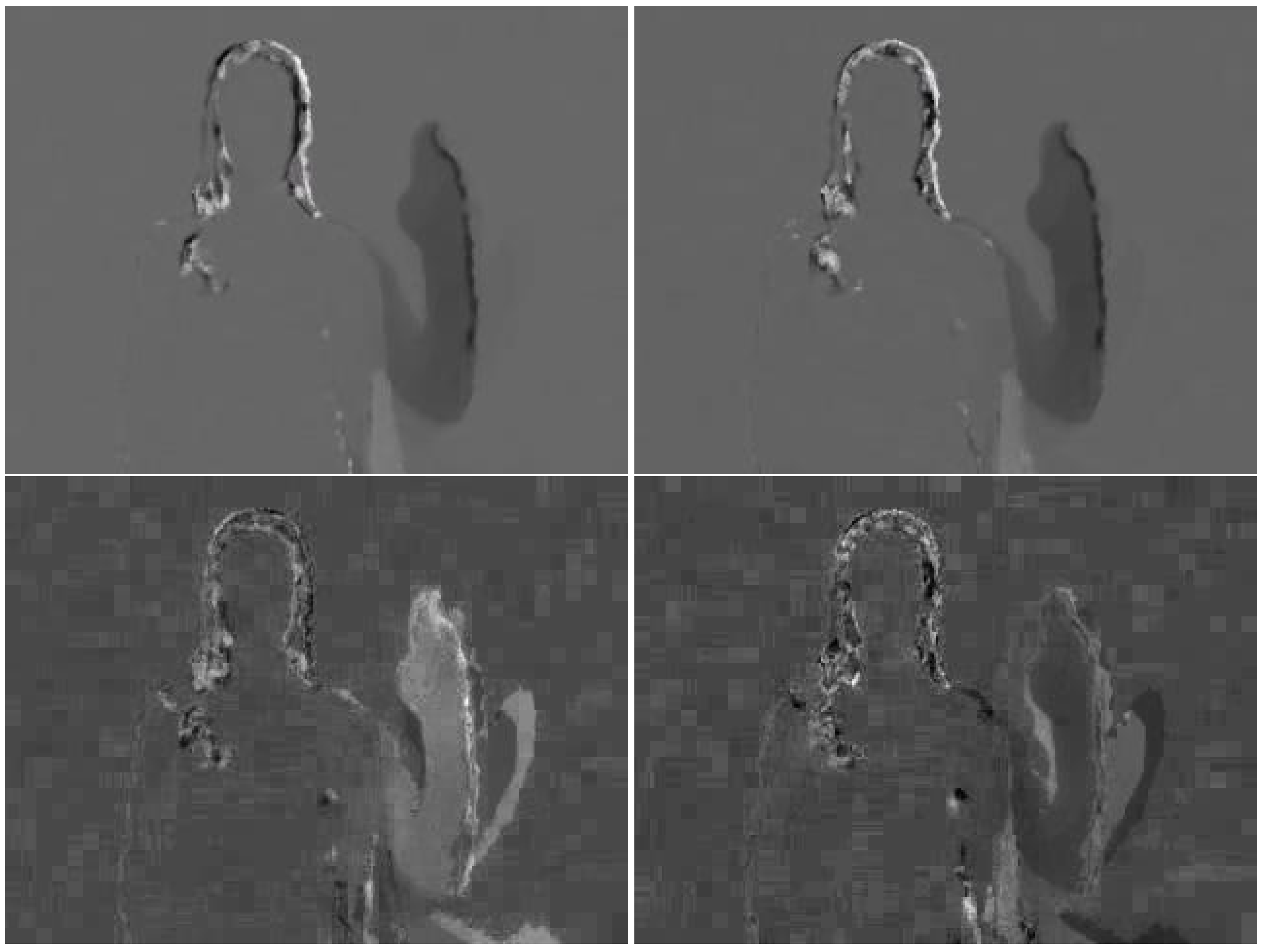

36] to encode depth sequences into Depth Dynamic Images (DDIs). To overcome temporal information loss, DMDI is also extracted from the absolute differences (motion energy) between consecutive frames of a depth sequence with weighted rank pooling. Then, both DDIs and DMDIs are fed into ConvNets for final recognition. Finally, multiple 3D ConvLSTMs and ConvNet are fused together by late fusion.

The proposed method achieved state-of-the-art performance on the ChaLearn LAP ConGD Datasets [

37] and Montalbano Gesture Recognition Dataset [

38]. Part of the work [

39] was reported in Chalearn Challenges on Action, Gesture, and Emotion Recognition: Large Scale Multimodal Gesture Recognition and Real versus Fake expressed emotions @ICCV17 [

40]. The key contribution of this paper is to segment the continuous gesture with both the appearance information and the hand motion information, and to encode the geometric, motion and structural information based on the different characteristics of the RGB modality and depth modality. Compared with the conference paper [

39], the extension includes:

Temporal segmentation with both the appearance information and the hand motion information;

The bidirectional rank pooling in [

39] is replaced with the weighted rank pooling [

36] to capture sequence-wide temporal evolution;

The method is also evaluated on Montalbano Gesture Dataset in addition to the ChaLearn LAP ConGD Datasets and state-of-the-art results are achieved;

More analysis and discussion are presented in this paper.

The remainder of this paper is organised as follows.

Section 2 gives the details of the proposed temporal segmentation and gesture recognition method.

Section 3 presents the experiments to verify the effectiveness of the proposed method and the discussions. The paper is concluded in

Section 4.

3. Experiments

The proposed method was evaluated on ChaLearn LAP ConGD Dataset [

37] and Montalbano Dataset [

44]. The evaluation protocols of continuous gesture recognition is Jaccard index (the higher the better). The network training and experimental results of the proposed methods on the dataset were reported.

3.1. Network Training

3.1.1. Network Training for Temporal Segmentation

To train the ConvNets for temporal segmentation, a dataset was collected for the binary classification. In the dataset, training samples of the class “transitional frames” were collected from eight frames around the bounary of two gestures, and training samples of the class “gesture frames” were picked from the rest frames. VGG-16 [

45] was fine-tuned for temporal segmentation from the pre-trained models on ImageNet [

29]. Both networks were trained using mini-batch SGD with the momentum and weight decay being set to 0.9 and 0.0001, respectively. The batch-size was 64. The activation functions in all hidden layers were RELU. To fit the input size of VGG-16, the input images were resized into

. The initial learning rate was

and decreased to

its every 40 K iterations. The training underwent 90 K iterations. The VGG-16 was implemented with Tensorflow and trained on one TITAN X Pascal GPU.

3.1.2. Network Training for Depth Modality

Four ConvNets were trained on the DDIs and DMDIs individually. In this paper, the ResNet-50 [

46] was adopted as the ConvNet model. For ChaLearn LAP ConGD Dataset, We fine-tuned the ConvNets for DDIs and DMDIs with pre-training models on ImageNet [

29]. The networks were fine-tuned for Montalbano Gesture Dataset based on the models trained on ChaLearn LAP ConGD Dataset. The data augmentation such as horizontal flip and standard color augmentation was used. We adopted batch normalization right after each convolution and before activation function. All hidden weight layers used the RELU. The network weights were learned using mini-batch SGD with the momentum and weight decay being set to 0.9 and 0.0001, respectively. The batch-size was set to 16. To fit the input size of ResNet-50, all inputs were resized to

. The learning rate was initially set to

and then dropped to its

every 40 K iterations. The total training iterations was 90 K and early stopping was also used to reduce the overfitting. The optical flow was extracted by the TVL1 optical flow algorithm implemented in OpenCV with CUDA. The ResNet-50 was implemented with Tensorflow and trained on one TITAN X Pascal GPU.

3.1.3. Network Training for RGB Modality

The 3D ConvLSTM was trained separately on RGB sequences and saliency sequences. For ChaLearn LAP ConGD Dataset, the network was fine-tuned on RGB modality from the pre-training model on SKIG [

47] provided by Zhu et al. [

9] and then this model was fine-tuned on saliency sequences. The network was fine-tuned for the Montalbano Gesture Dataset based on the models trained on ChaLearn LAP ConGD Dataset. Batch normalization was introduced to accelerate the training processes. The learning rate was set to 0.1 and then dropped to its

every 15 K iterations. The weight decay was initially

. At most 60 K iterations are needed for training. The batch-size was set to 13, the number of frames in each clip was 32, and each image was cropped into

. The 3D ConvLSTM was implemented based on the Tensorflow and Tensorlayer platforms and trained on one TITAN X Pascal GPU.

3.2. Evaluation of Different Settings and Comparision

3.2.1. Temporal Segmentation Evaluation

To evaluate the effectiveness of the proposed temporal segmentation method, we compared the performance of the proposed temporal segmentation method with the one of only using the hand motion information and the one of only using the appearance information on ChaLearn LAP ConGD Dataset. The continuous gesture sequence was divided into isolated gesture sequences with different segmentation methods, and then the isolated gesture sequences were recognized with our proposed gesture recognition network. The comparison on the validation set of Chalearn LAP ConGD Dataset is shown in

Table 1. Our proposed temporal segmentation method outperforms the method with only the hand motion information used and only the appearance information used. These results also demonstrated that the hand motion information and the appearance information were complementary in temporal segmentation.

3.2.2. Rank Pooling vs. Weighted Rank Pooling

Table 2 compares the performance using rank pooling and weighted rank pooling on the validation set of ChaLearn LAP ConGD Dataset. The results of three groups rank pooling are listed, including a convenient rank pooling, different spatial weight estimation methods, and different temporal weight estimation methods. In the second group, flow-guided aggregation is better than background-foreground segmentation and salient region detection. The foreground area was segmented by the most reliable background model (MRBM) [

48], and the salient region was extracted by global contrast-based salient region detection [

49]. The spatial weight of the pixel in the foreground area/the salient region is assigned to 1. Otherwise, the spatial weight is assigned to 0. In the third group, the flow-guided frame weight is better than the selection key frames. The key frames were selected by an unsupervised learning method [

50]. The temporal weight of key frames is assigned to 1, and the temporal weight of other frames is assigned to 0. These results show that flow-guided aggregation method outperforms rank pooling 0.0401 and flow-guided frame weight method outperforms rank pooling 0.0378. This verifies that weighted rank pooling are more robust and more discriminative in gesture recognition.

3.2.3. Different Features Evaluation

In this section, the features extracted from the RGB component and depth component were evaluated. The performance using features extracted by the DDIs + ConvNet, DMDIs + ConvNet, RGB + 3D ConvLSTM, Saliency + 3D ConvLSTM, and their combination was evaluated respectively. Average score fusion is used for the combination in this experiment. The evaluation result was listed in

Table 3, the symbol • denotes that the corresponding feature is selected for gesture recognition, and the symbol × denotes that the corresponding feature is not included for gesture recognition.

The ConvNet features from DDIs and DMDIs were compared on the validation set of ChaLearn LAP ConGD Dataset in

Table 3. Although the performance of DMDI was slightly lower than the one of DDI, the fusion of the ConvNet features extracted from DDIs and DMDIs achieved 0.1196 improvement (i.e., 0.6414 vs. 0.5218). The result demonstrated that variations in the background, shadows, or sudden changed variations in lighting conditions can have substantial impact on the performance and the ConvNet features extracted from DDIs and DMDIs are complementary.

Then the 3D ConvLSTM features extracted from RGB and Saliency were compared on the validation set of ChaLearn LAP ConGD Dataset. From

Table 3, we can see the performance of Saliency outperformed the one of RGB, which proved that the background can reduce the performance. The fusion of the 3D ConvLSTM features extracted from RGB and Saliency achieve 0.1302 improvement (i.e., 0.6127 VS. 0.4825). The results have also demonstrated that the 3D ConvLSTM features extracted from RGB and Saliency are complementary.

The Mean Jaccard Index achieved 0.6127 based on RGB modality, and the Mean Jaccard Index was 0.6414 based on depth modality. In addition, the fusion of all features offered 0.1686 improvement (i.e., 0.6904 vs. 0.5218) on the validation set of ChaLearn LAP ConGD Dataset. These results have demonstrated that all features from ConvNet and 3D ConvLSTM are complementary and different discriminative. The result also verified the effectiveness of our proposed network.

3.2.4. Score Fusion Evalution

In this paper, score fusion was employed to fuse the classification obtained from the ConvNets and 3D ConvLSTMs. The common score fusion methods are average, maximum, and multiply score function. The comparisons among the three score fusion methods were shown in

Table 4. These results showed that the average score fusion method achieved the best result.

3.3. Evaluation on ChaLearn LAP ConGD Dataset

3.3.1. Description

The ChaLearn Gesture Dataset (CGD) includes color and depth video sequences recorded by Microsoft Kinect [

51]. There are 22,535 RGB-D gesture videos and 47,933 RGB-D gesture instances in the ChaLearn LAP ConGD Dataset. A total of 249 gestures are included and performed by 21 different individuals. Detailed information is shown in

Table 5.

3.3.2. Experimental Results

Table 6 compared the performance of the proposed method and that of exiting methods on validation set. MFSK [

37] and MFSK + DeepID [

37] segmented the continuous gesture sequence to isolated gesture firstly and recognized the isolated gesture with the hand-craft features. Wang et al. [

52] employed the QOM method to segment the continuous gesture sequence and then extracted an improved depth motion map using color coding method over the segmented sequence, and CNN was adopted to train and classify the segmented gesture. Chai et al. [

3] first adopted Faster R-CNN to extract the hand for the temporal segmentation, and then two-stream RNNs were adopted to fuse multi-modality features for the recognition. Camgoz et al. [

4] applied 3D convolutional networks to RGB video and jointly learned the features and classifier. It can be seen that our proposed method achieved state-of-the-art results compared with existing methods.

The proposed method was also compared with the methods in ChaLearn LAP Large-scale Continuous Gesture Recognition Challenge [

40] in

Table 7. The mean Jaccard Index of our proposed method achieved

in the test set. Our proposed method achieved state-of-the-art results.

3.4. Evaluation on Montalbano Gesture Dataset

3.4.1. Description

Montalbano gesture dataset [

38] was also recorded by Microsoft Kinect Sensor. It contains 20 Italian cultural/anthropological. Four modalities, including RGB, depth, mask, and skeleton, can be found in this dataset. It is labeled frame-by-frame. The characteristics of Montalbano Gesture Dataset were:

the duration of each gesture varied greatly and there was no self-occlusion;

there was no information on the number or order of gestures;

the intra-class variability of gesture samples was high, while the inter-class variability of some gesture categories was low.

These characteristics brought lots of challenges. The detail of Montalbano Gesture Dataset was shown in

Table 8.

3.4.2. Experimental Results

Table 9 showed the result on Montalbano Gesture Dataset. Our proposed method achieved state-of-the-art performance. Left and right hand regions are treated as independent streams to improve the performance [

53] and skeleton information is used in [

54] to crop the specific area in videos. However, only RGB and depth modalities were used in our proposed method. The promising performance demonstrated the effectiveness of our proposed method.

3.5. Discussion

Temporal segmentation is crucial for continuous gesture recognition. The temporal segmentation and gesture recognition in continuous gesture recognition were performed separately in this paper. We assume that there are some transition frames between two consecutive gestures and one will puts hands down after performing a gesture. Although our proposed method has achieved good performance on both ChaLearn LAP ConGD Dataset and Montalbano Gesture Dataset, these assumptions limited the wider application of the proposed method. In our future work, we will explore a more general approach to address the problem of continuous gesture recognition.

In addition, current continuous gesture recognition methods can not address the problem of online gesture recognition. In actuality, important real-time applications including sign language interpreter and driver assistance systems require identifying gestures as soon as each video frame comes. How to improve the proposed method for online gesture recognition will be a good research direction.