The Impact of State-of-the-Art Techniques for Lossless Still Image Compression

Abstract

1. Introduction

- RI1:

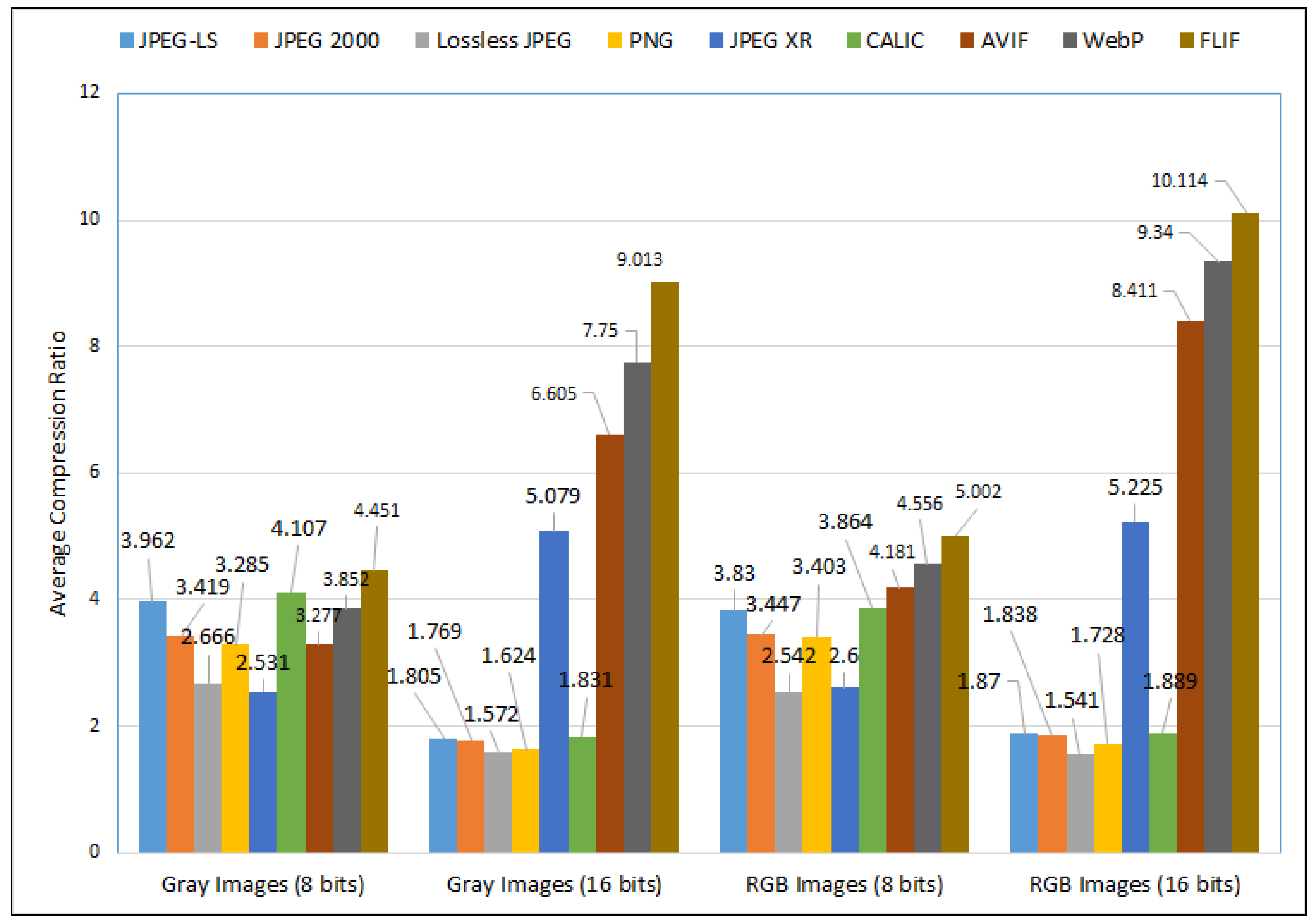

- How good is each algorithm in terms of the CR for 8-bit and 16-bit greyscale and RGB images?

- RI2:

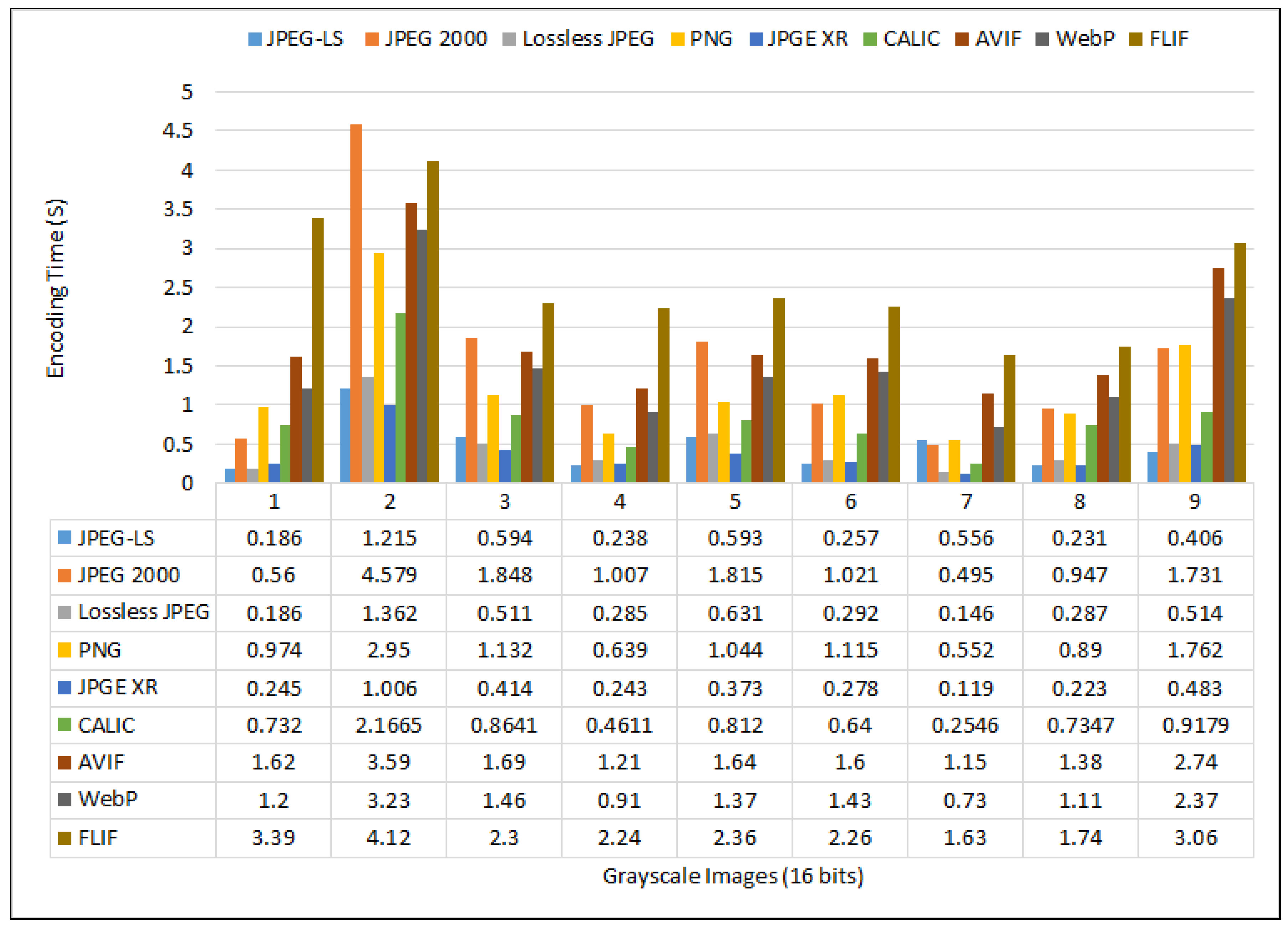

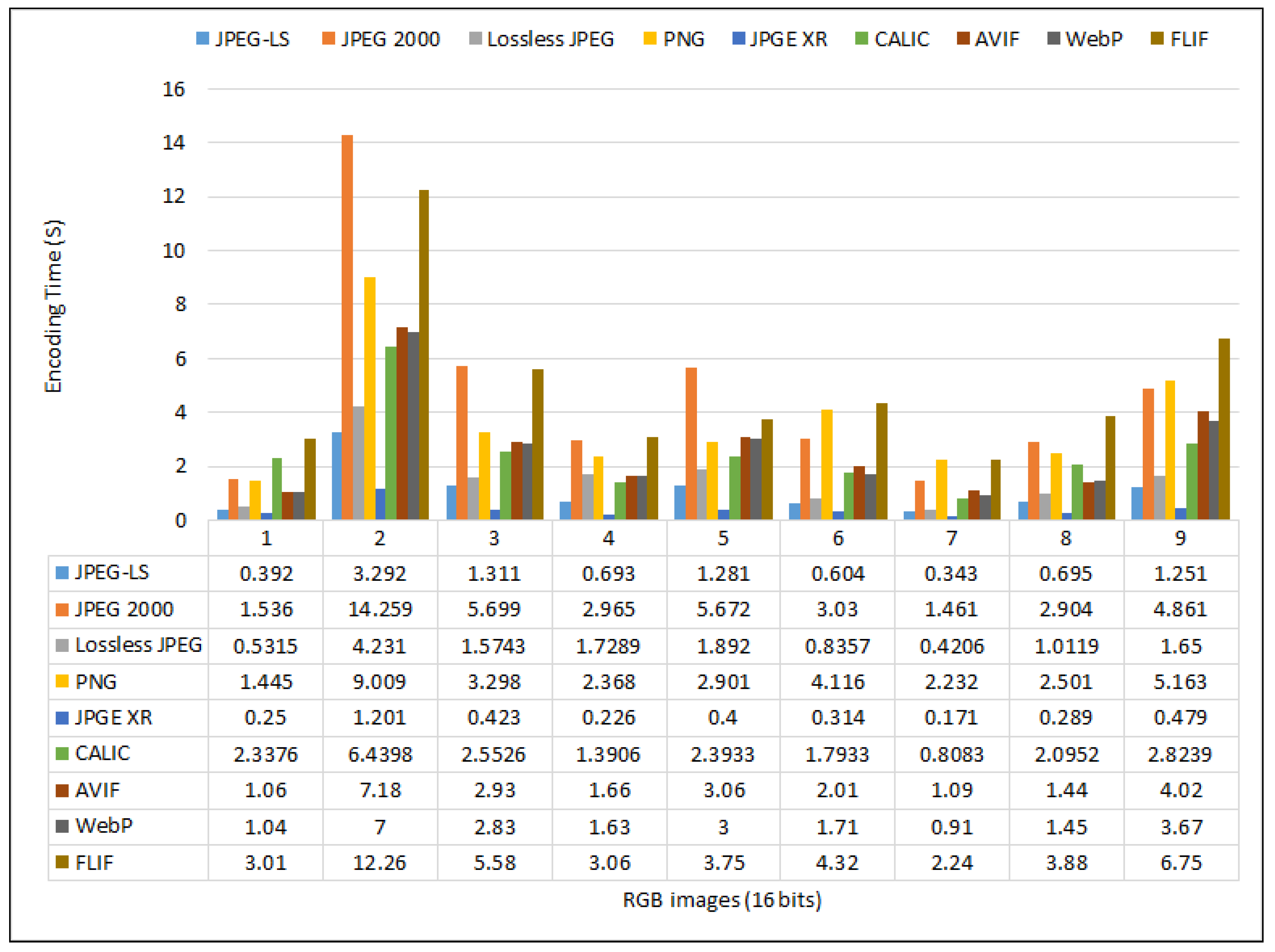

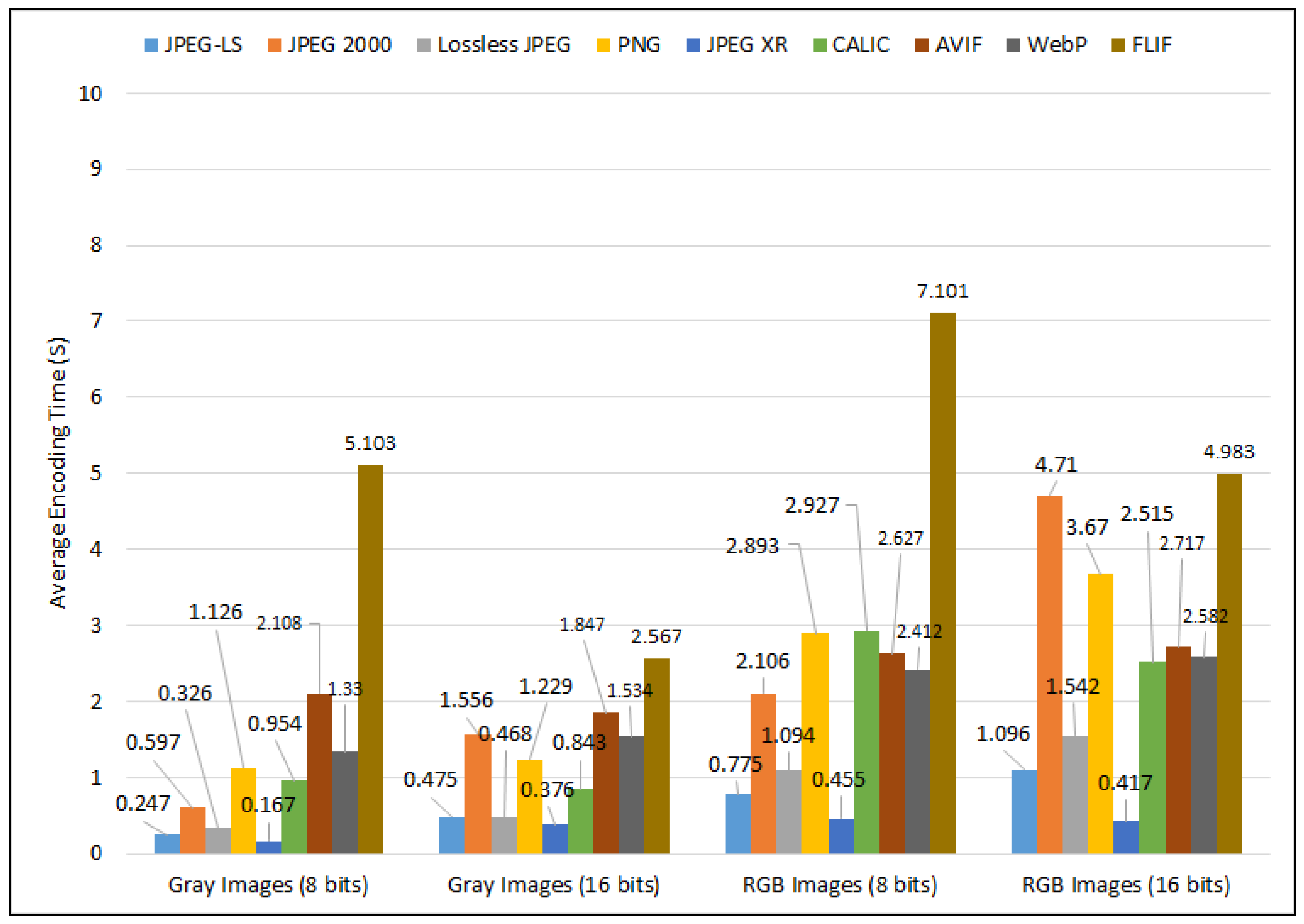

- How good is each algorithm in terms of the ET for 8-bit and 16-bit greyscale and RGB images?

- RI3:

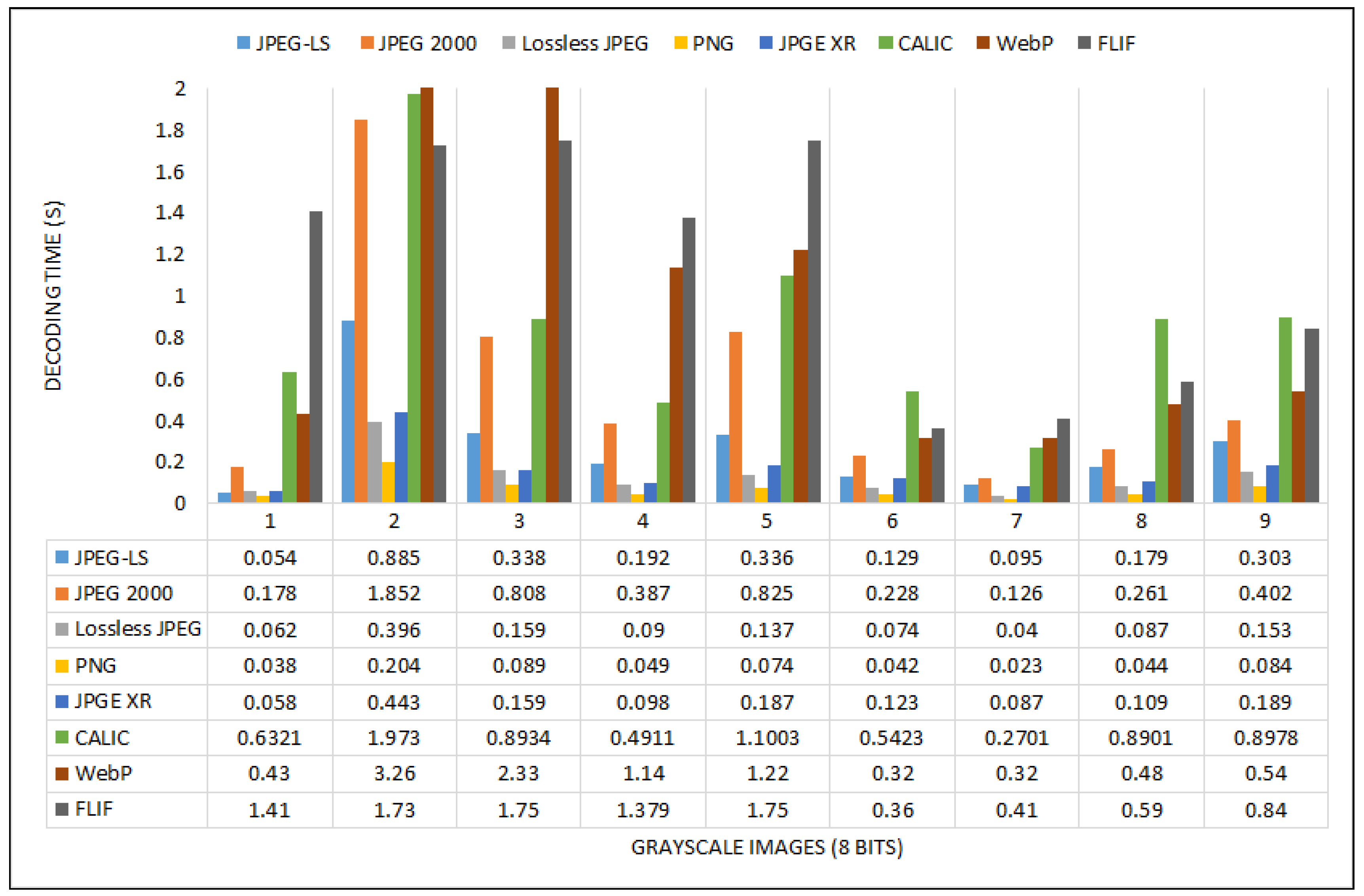

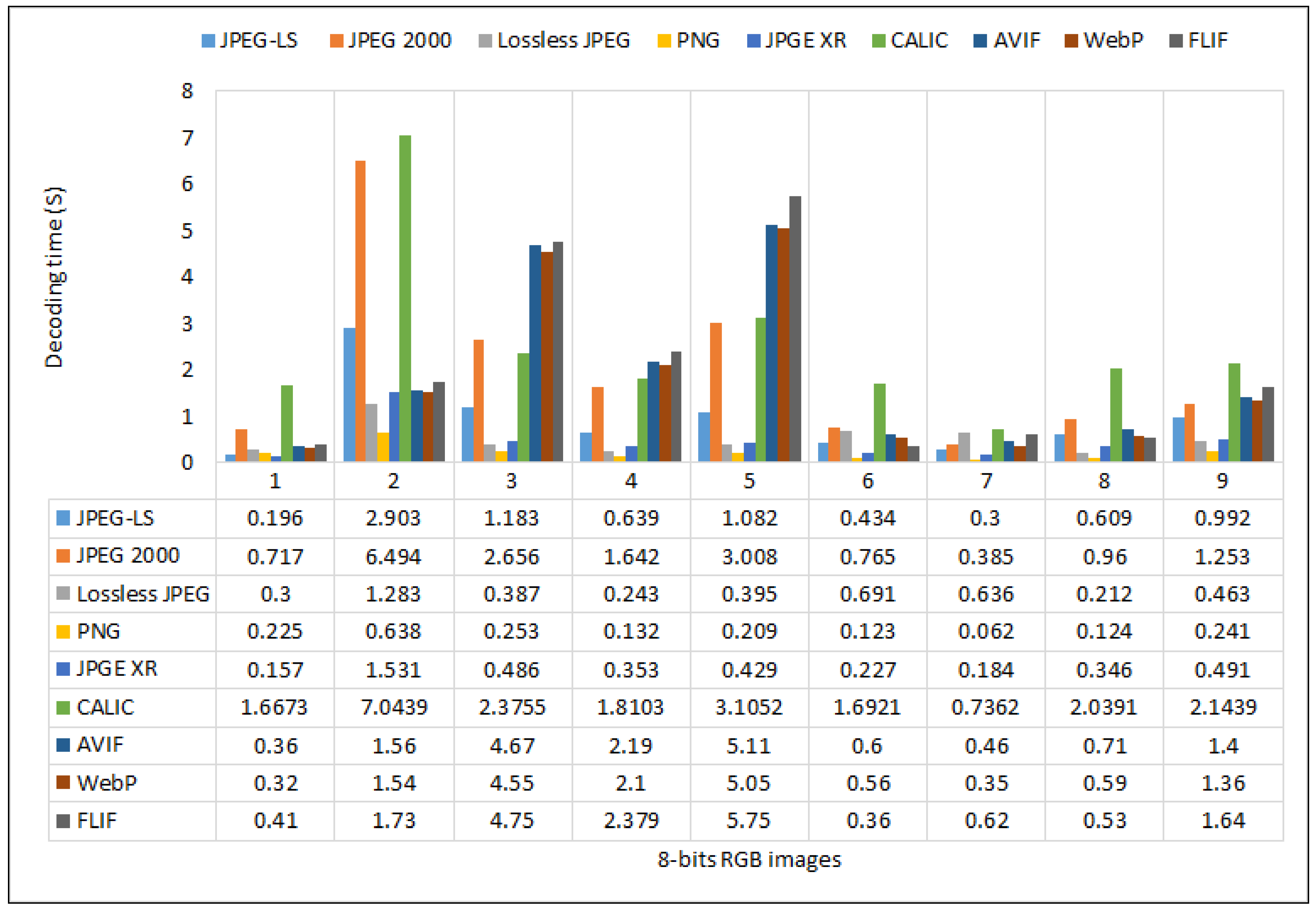

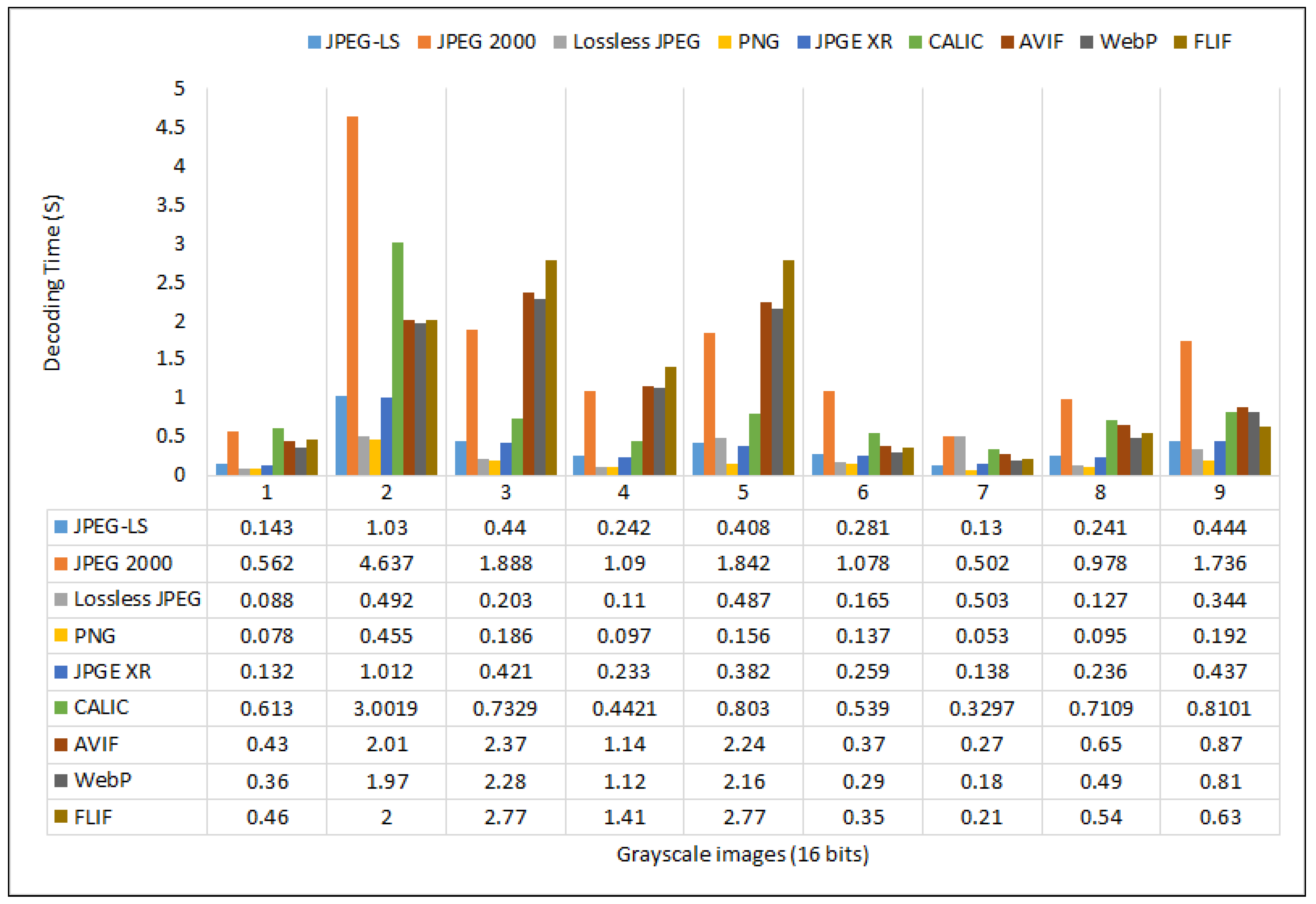

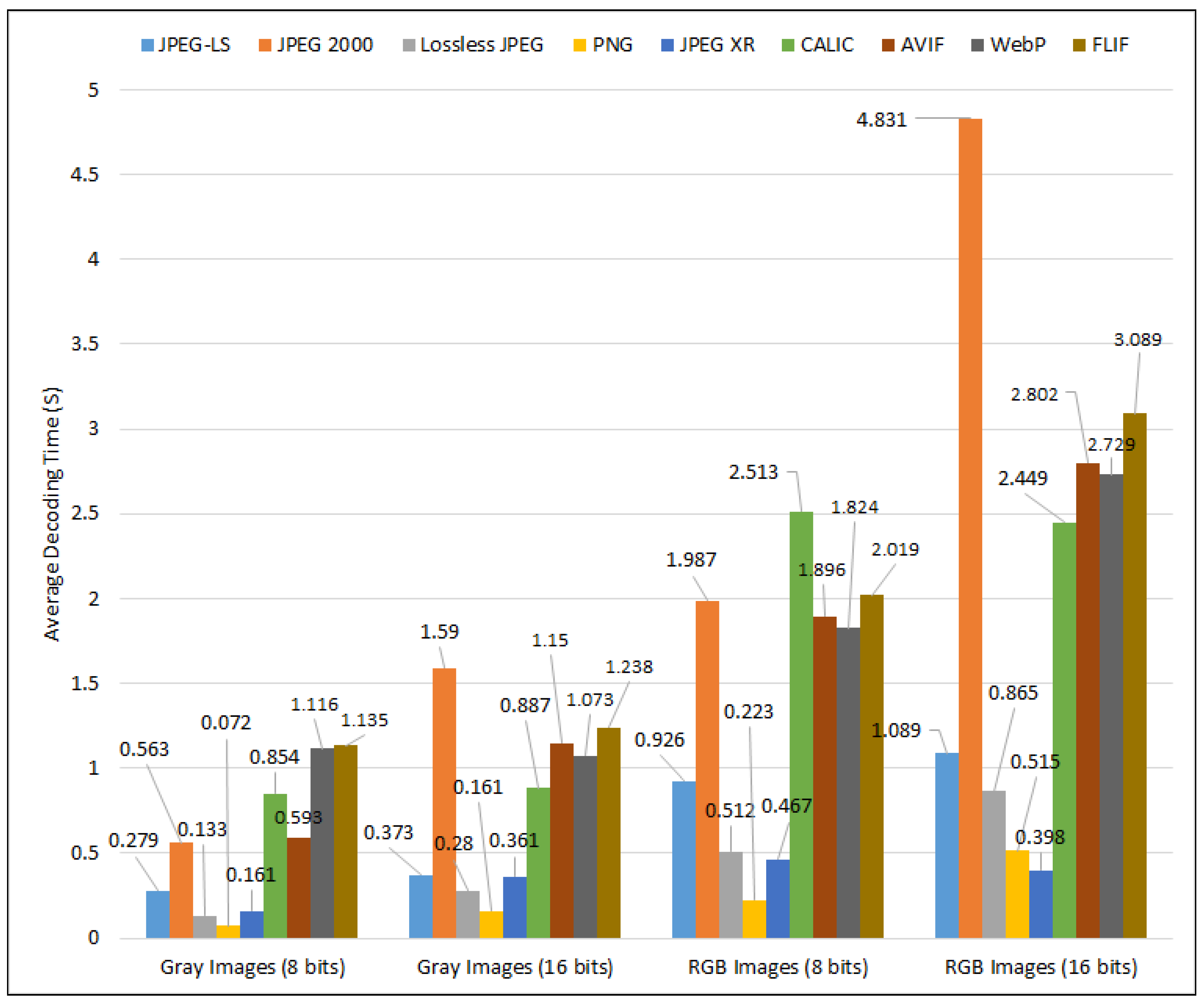

- How good is each algorithm in terms of the DT for 8-bit and 16-bit greyscale and RGB images?

- RI4:

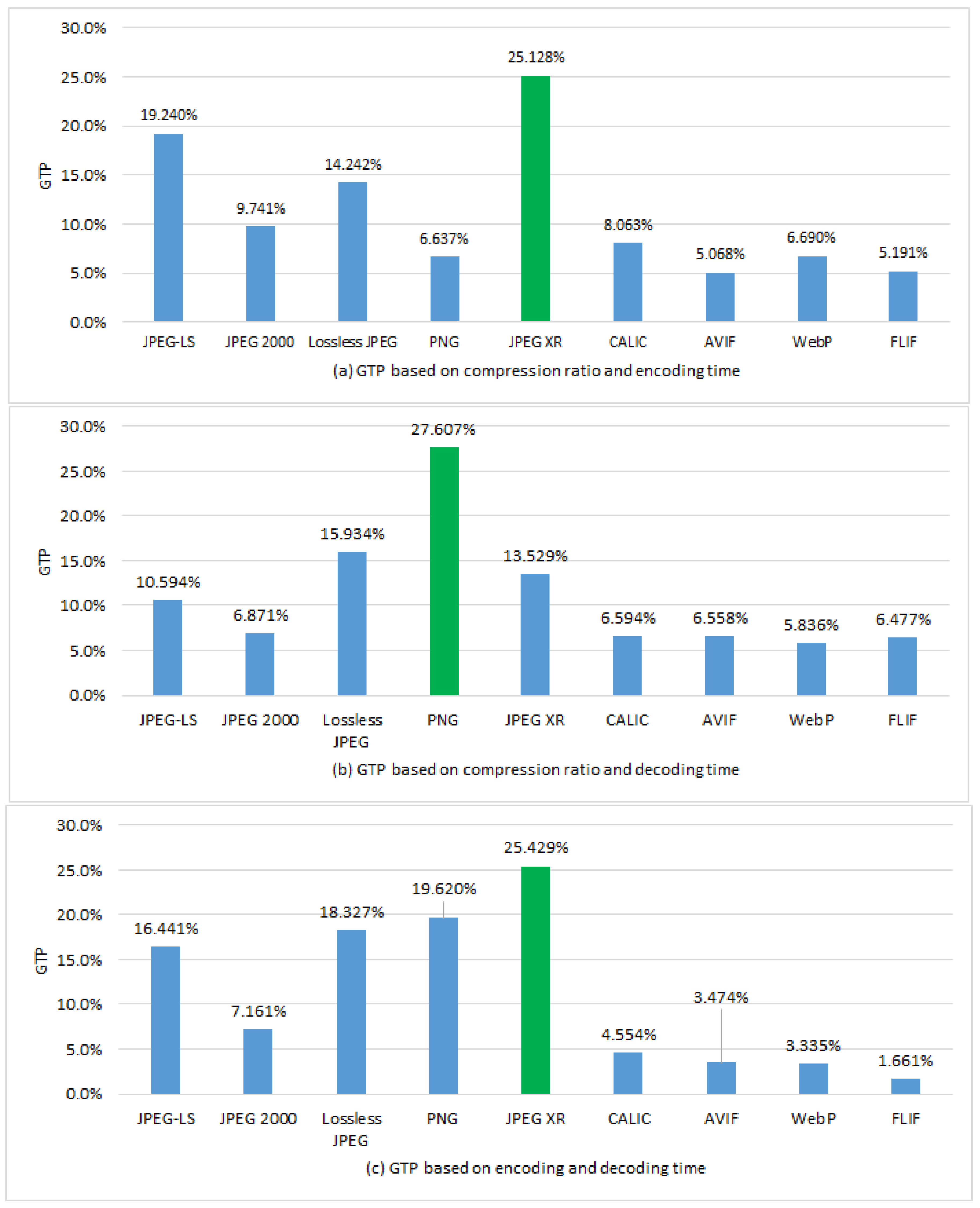

- How good is each algorithm in terms of the CR and ET for 8-bit and 16-bit greyscale and RGB images?

- RI5:

- How good is each algorithm in terms of the CR and DT for 8-bit and 16-bit greyscale and RGB images?

- RI6:

- How good is each algorithm in terms of the ET and DT for 8-bit and 16-bit greyscale and RGB images?

- RI7:

- How good is each algorithm when all parameters are equally important for 8-bit and 16-bit greyscale and RGB images?

- RI8:

- Which algorithms should be used for each kind of image?

2. Background

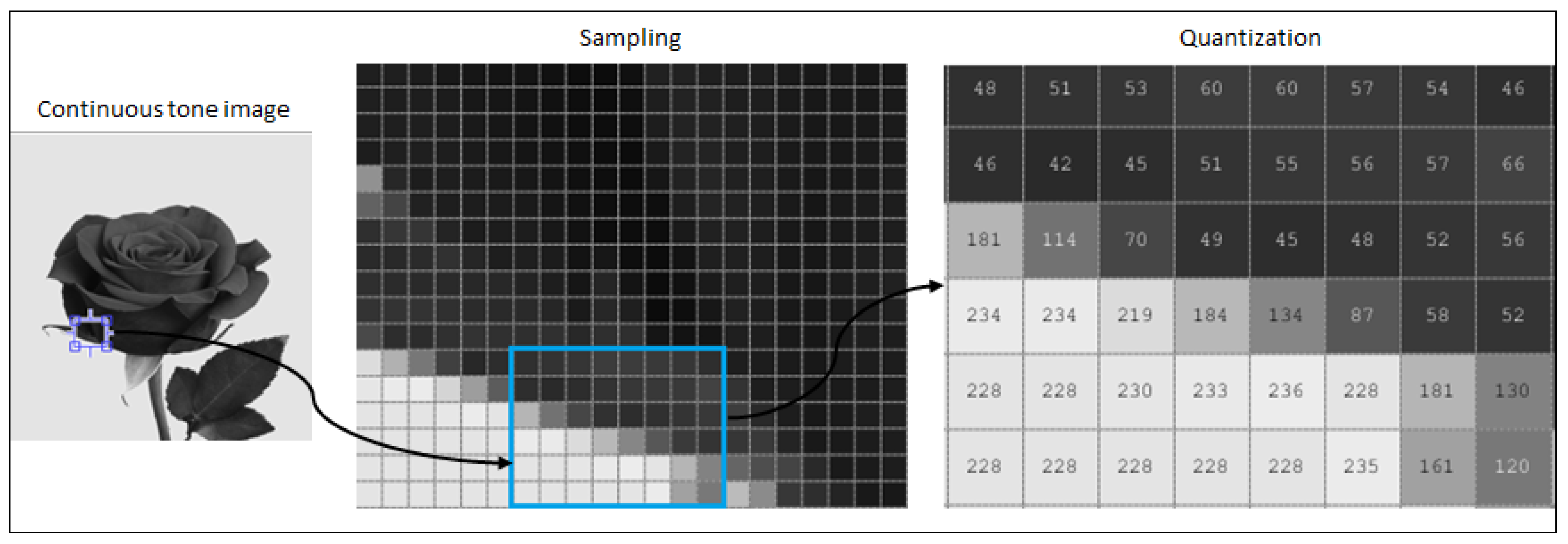

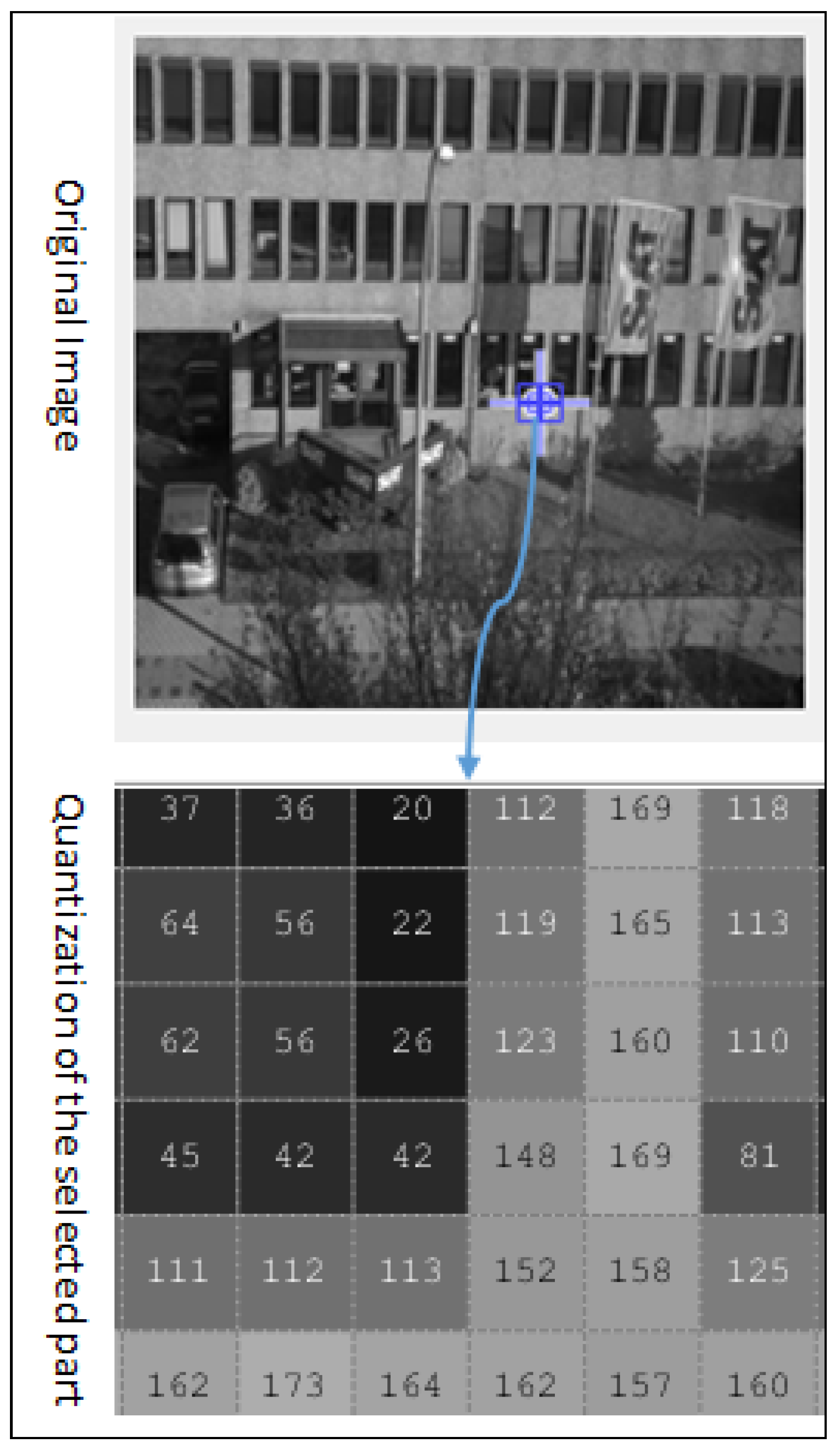

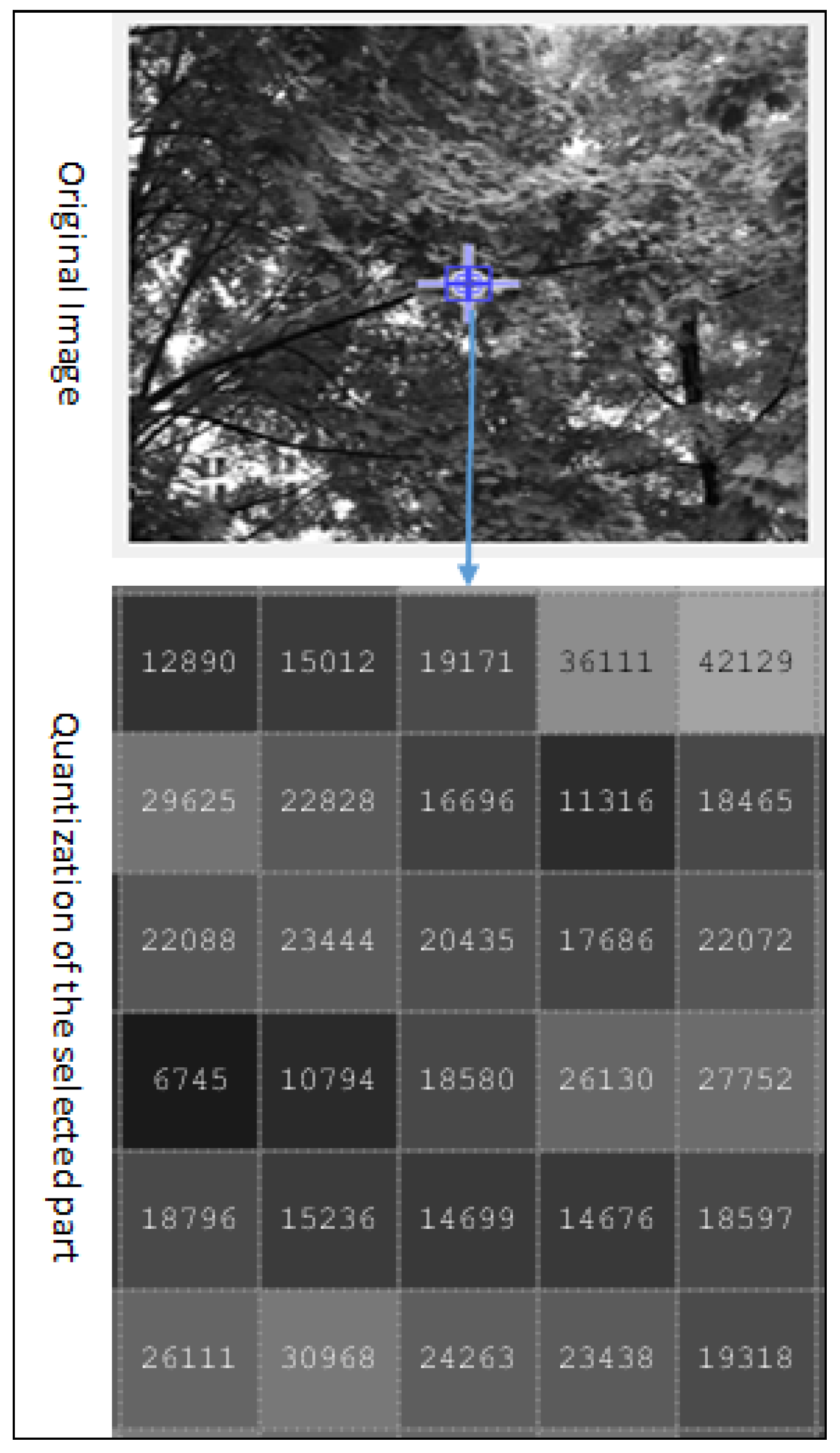

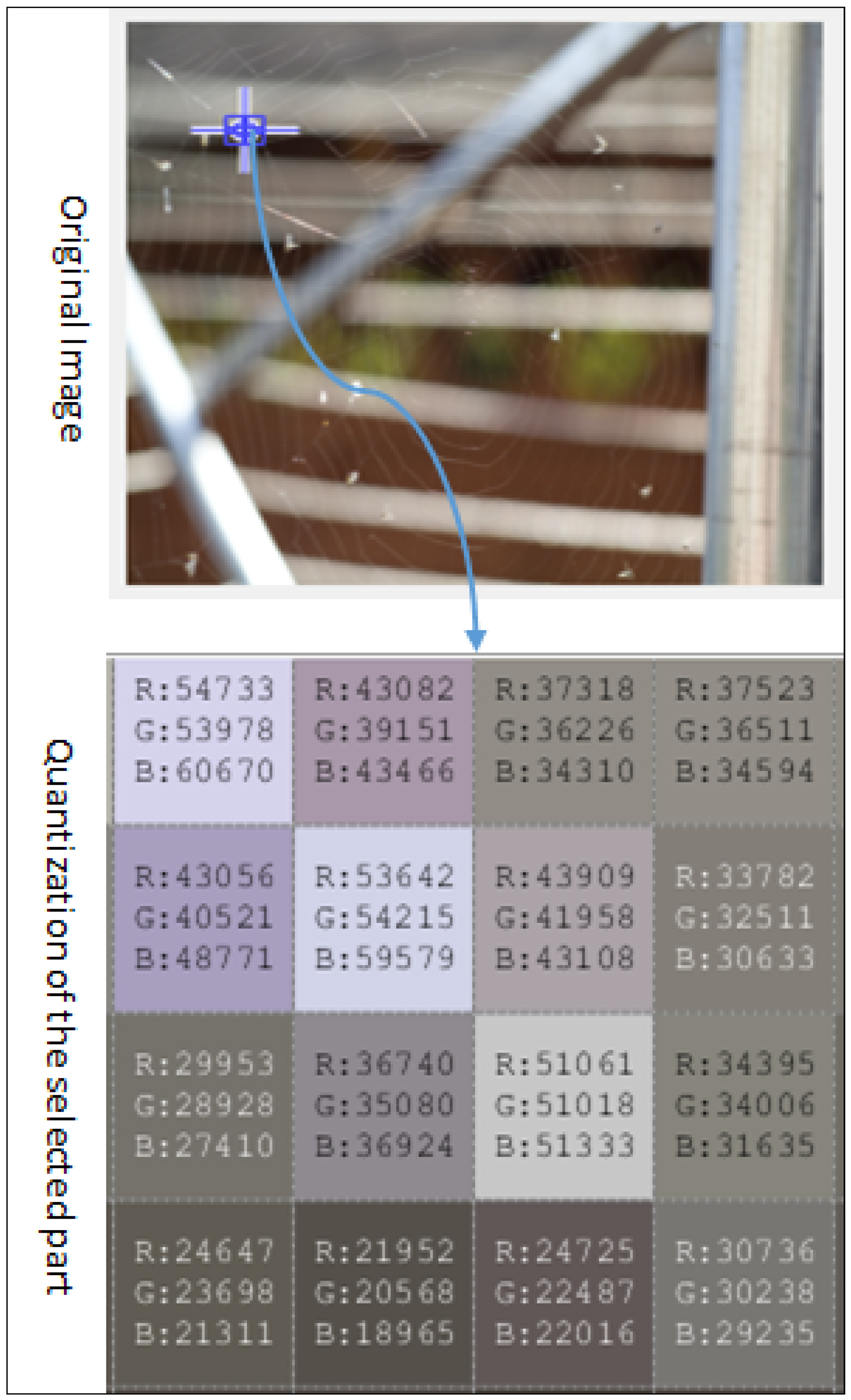

3. Data Compression

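Here, A is the size of the original dataset and B the size of the compressed dataset, so the compression ratio is CR = A/B.

- When A = B, CR = 1: there is no redundancy and, hence, no compression.

- When B ≪ A, CR → ∞: dataset A contains the highest redundancy, and the greatest compression is achieved.

- When B ≫ A, CR → 0: dataset B occupies more memory than the original dataset A, so the data have been expanded rather than compressed.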
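The three cases follow directly from CR = A/B. The minimal Python sketch below measures CR for two inputs, using the standard-library `zlib` (DEFLATE) compressor purely as a stand-in codec; it is not one of the image codecs compared in this paper, and the helper names `compression_ratio` and `interpret` are hypothetical. A single repeated byte value lands in the B ≪ A case, while random bytes cannot be compressed and come out slightly larger than the input (CR < 1).

```python
import os
import zlib


def compression_ratio(original_bytes: int, compressed_bytes: int) -> float:
    """CR = A / B, where A is the original size and B the compressed size."""
    if original_bytes <= 0 or compressed_bytes <= 0:
        raise ValueError("sizes must be positive")
    return original_bytes / compressed_bytes


def interpret(cr: float) -> str:
    """Map a CR value onto the three cases listed above."""
    if cr > 1:
        return "compressed"      # B < A
    if cr == 1:
        return "no compression"  # A = B
    return "expanded"            # B > A


redundant = bytes([118]) * 10_000  # one value repeated: very high redundancy
random_data = os.urandom(10_000)   # random bytes: practically no redundancy

cr_redundant = compression_ratio(len(redundant), len(zlib.compress(redundant, 9)))
cr_random = compression_ratio(len(random_data), len(zlib.compress(random_data, 9)))
print(f"redundant: CR = {cr_redundant:.1f} ({interpret(cr_redundant)})")
print(f"random:    CR = {cr_random:.4f} ({interpret(cr_random)})")
```

The random case shows why CR can drop below 1: a lossless coder must still emit headers and framing even when it finds nothing to remove.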

4. Measurement Standards

5. State-of-the-Art Lossless Image Compression Techniques

5.1. Lossless JPEG and JPEG-LS

5.2. Portable Network Graphics

5.3. Context-Based, Adaptive, Lossless Image Codec

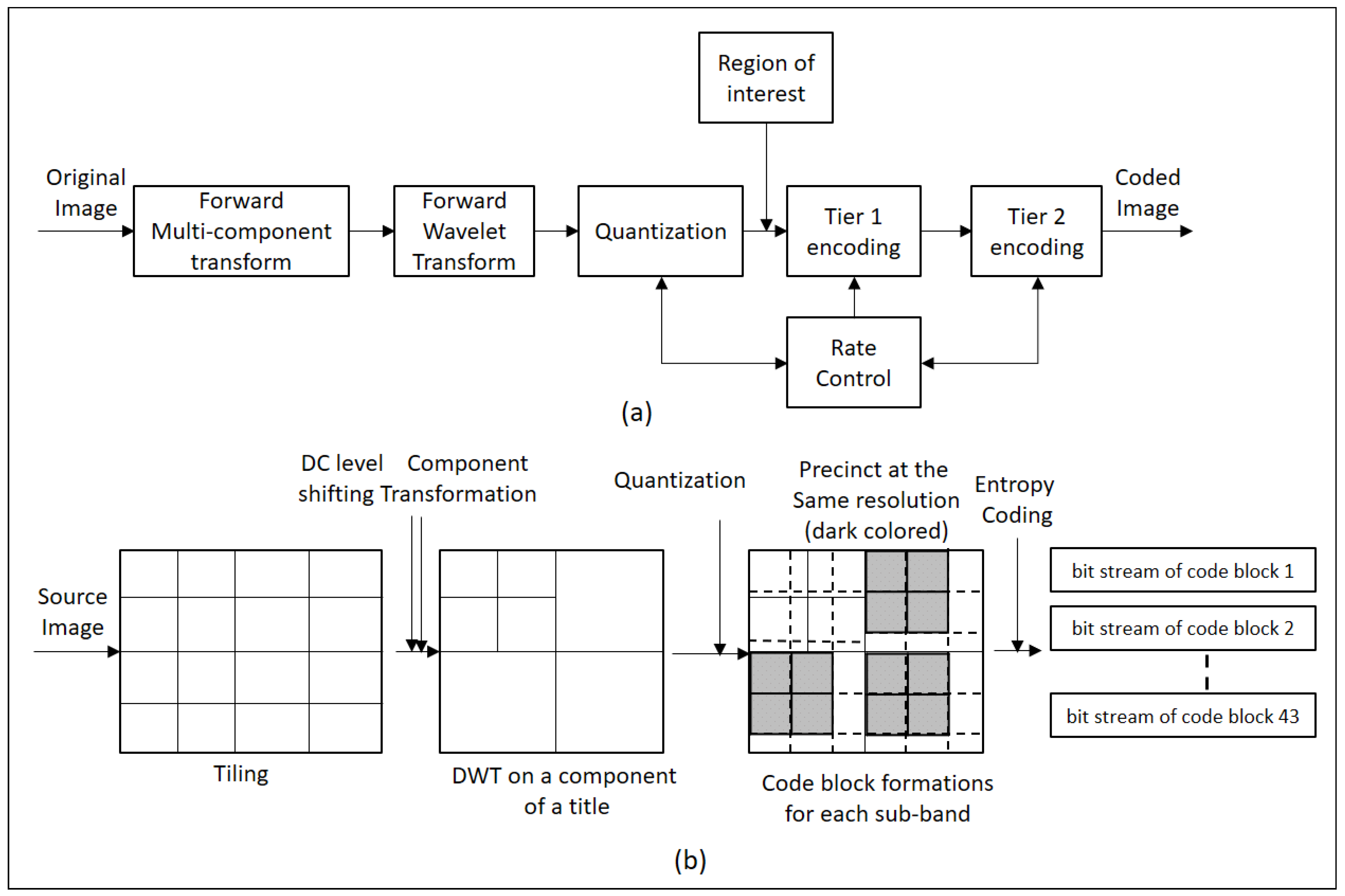

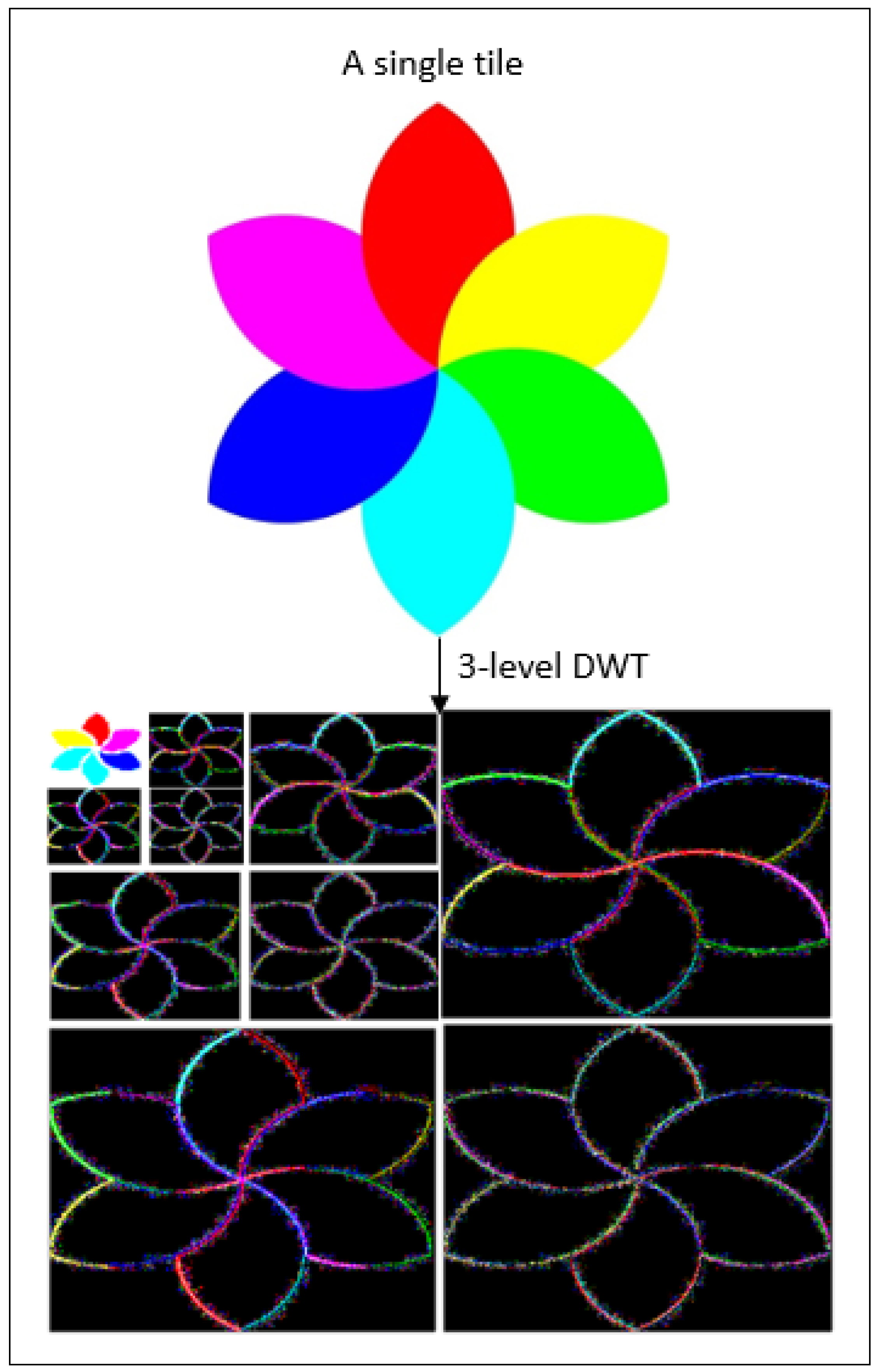

5.4. JPEG 2000

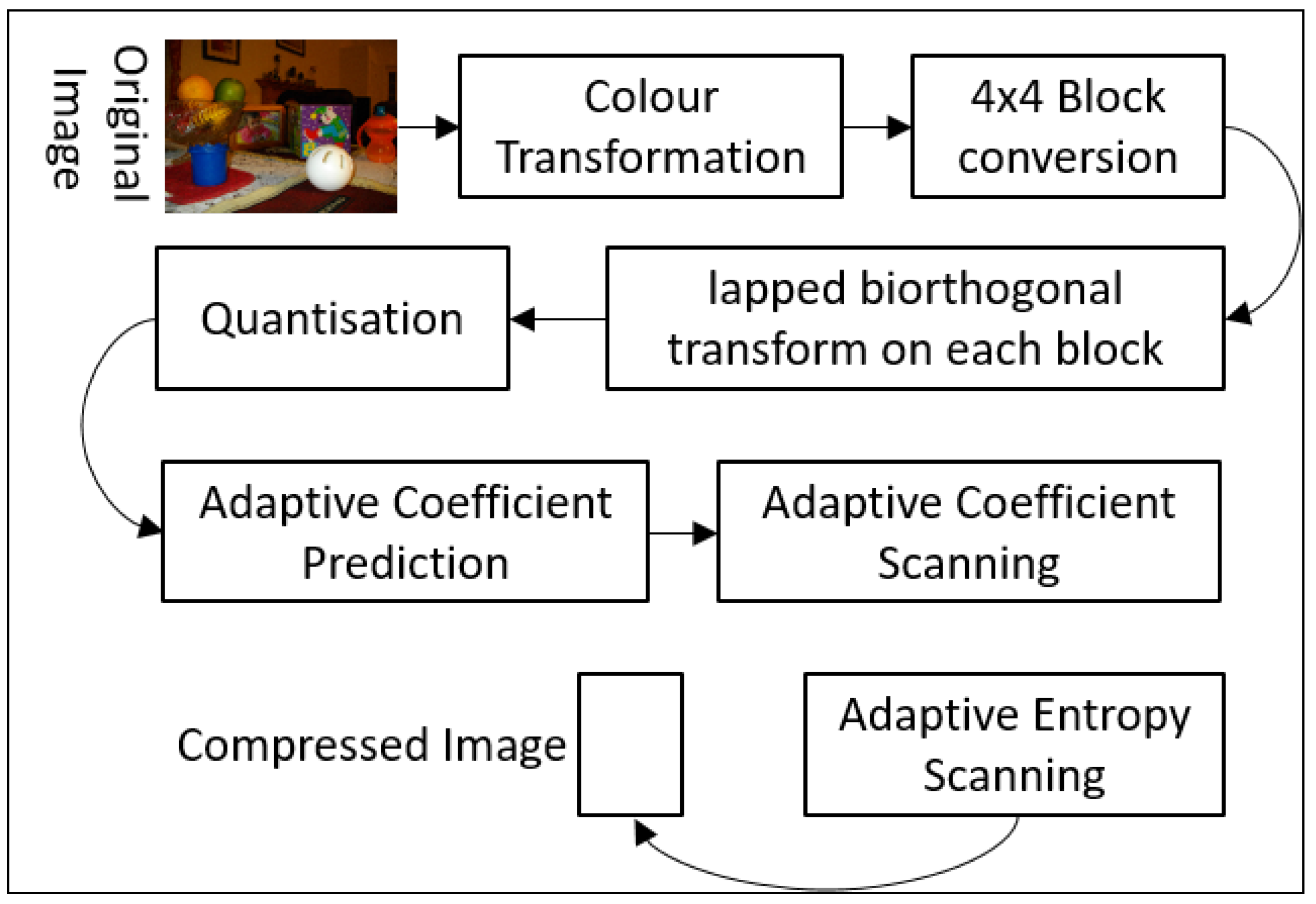

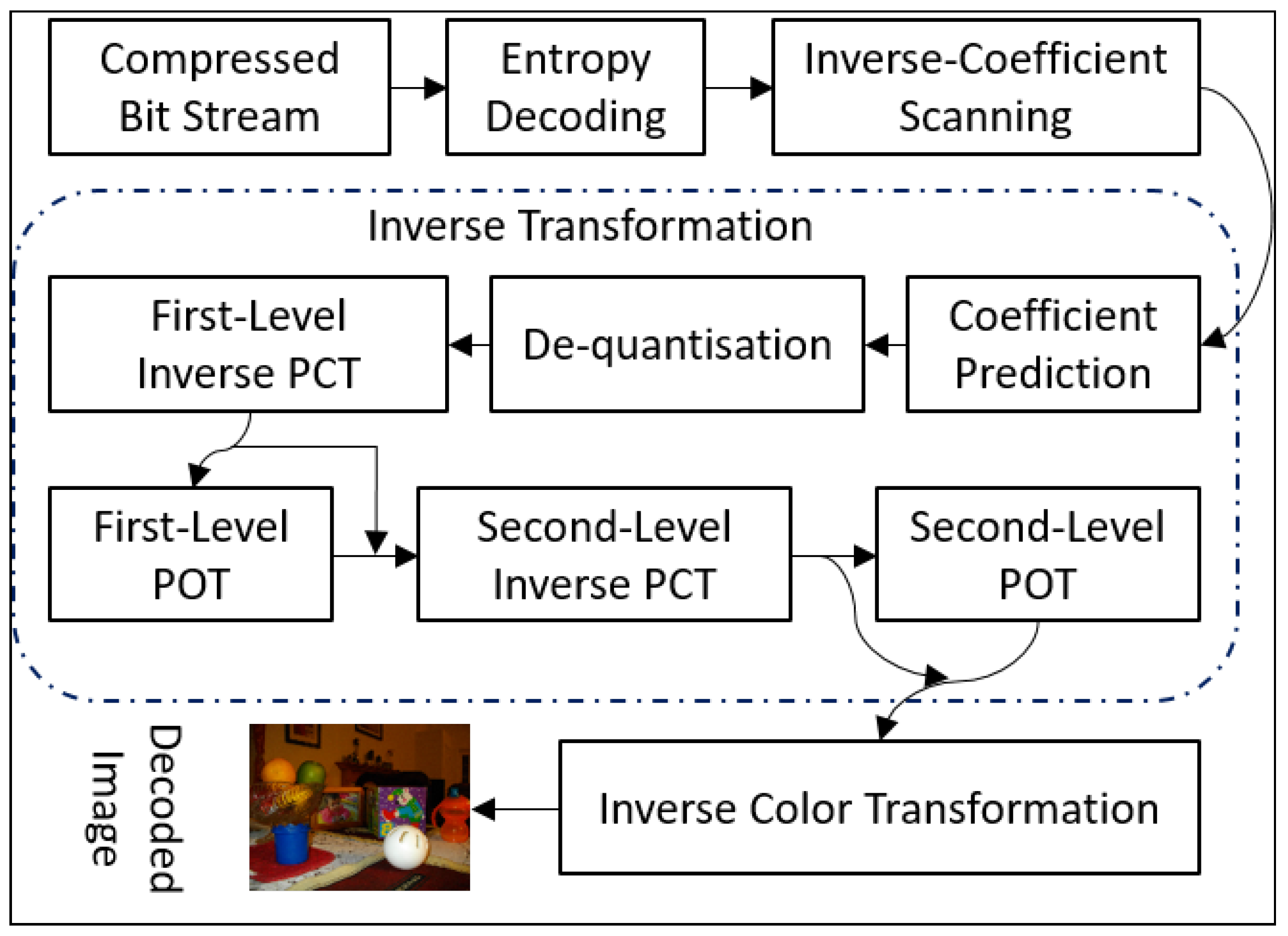

5.5. JPEG XR (JPEG Extended Range)

5.6. AV1 Image File Format (AVIF), WebP and Free Lossless Image Format (FLIF)

6. Experimental Results and Analysis

6.1. Analysis Based on Usual Parameters

6.2. Analysis Based on Our Developed Technique

7. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

- Domo.com. 2020. Becoming a Data-Driven CEO | Domo. Available online: https://www.domo.com/solution/data-never-sleeps-6 (accessed on 15 September 2020).

- Pan, W.; Li, Z.; Zhang, Y.; Weng, C. The new hardware development trend and the challenges in data management and analysis. Data Sci. Eng. 2018, 3, 266–276.

- Rahman, M.; Hamada, M. Lossless image compression techniques: A state-of-the-art survey. Symmetry 2019, 11, 1274.

- Rahman, M.; Hamada, M. Burrows–Wheeler transform based lossless text compression using keys and Huffman coding. Symmetry 2020, 12, 1654.

- Rahman, M.A.; Shin, J.; Saha, A.K.; Islam, M.R. A novel lossless coding technique for image compression. In Proceedings of the IEEE 2018 Joint 7th International Conference on Informatics, Electronics & Vision (ICIEV) and 2018 2nd International Conference on Imaging, Vision & Pattern Recognition (icIVPR), Kitakyushu, Japan, 25–29 June 2018; pp. 82–86.

- Rahman, M.A.; Rabbi, M.F.; Rahman, M.M.; Islam, M.M.; Islam, M.R. Histogram modification based lossy image compression scheme using Huffman coding. In Proceedings of the 2018 4th International Conference on Electrical Engineering and Information & Communication Technology (iCEEiCT), Dhaka, Bangladesh, 13–15 September 2018; pp. 279–284.

- Liu, F.; Hernandez-Cabronero, M.; Sanchez, V.; Marcellin, M.W.; Bilgin, A. The current role of image compression standards in medical imaging. Information 2017, 8, 131.

- Rahman, M.A.; Hamada, M. A semi-lossless image compression procedure using a lossless mode of JPEG. In Proceedings of the 2019 IEEE 13th International Symposium on Embedded Multicore/Many-Core Systems-on-Chip (MCSoC), Singapore, 1–4 October 2019; pp. 143–148.

- Bovik, A.C. (Ed.) The Essential Guide to Image Processing; Academic Press: Cambridge, MA, USA, 2009.

- Syahrul, E. Lossless and Nearly-Lossless Image Compression Based on Combinatorial Transforms. Ph.D. Thesis, Université de Bourgogne, Dijon, France, 12 November 2011.

- Gonzalez, R.C.; Woods, R.E.; Eddins, S.L. Digital Image Processing Using MATLAB; Pearson Education: Chennai, Tamil Nadu, India, 2004.

- Deigant, Y.; Akshat, V.; Raunak, H.; Pranjal, P.; Avi, J. A proposed method for lossless image compression in nano-satellite systems. In Proceedings of the 2017 IEEE Aerospace Conference, Big Sky, MT, USA, 4–11 March 2017; pp. 1–11.

- Rusyn, B.; Lutsyk, O.; Lysak, Y.; Lukenyuk, A.; Pohreliuk, L. Lossless image compression in the remote sensing applications. In Proceedings of the 2016 IEEE First International Conference on Data Stream Mining & Processing (DSMP), Lviv, Ukraine, 23–27 August 2016; pp. 195–198.

- Miaou, S.G.; Ke, F.S.; Chen, S.C. A lossless compression method for medical image sequences using JPEG-LS and interframe coding. IEEE Trans. Inf. Technol. Biomed. 2009, 13, 818–821.

- Taquet, J.; Labit, C. Hierarchical oriented predictions for resolution scalable lossless and near-lossless compression of CT and MRI biomedical images. IEEE Trans. Image Process. 2012, 21, 2641–2652.

- Parikh, S.S.; Ruiz, D.; Kalva, H.; Fernández-Escribano, G.; Adzic, V. High bit-depth medical image compression with HEVC. IEEE J. Biomed. Health Inform. 2017, 22, 552–560.

- Lee, J.; Yun, J.; Lee, J.; Hwang, I.; Hong, D.; Kim, Y.; Kim, C.G.; Park, W.C. An effective algorithm and architecture for the high-throughput lossless compression of high-resolution images. IEEE Access 2019, 7, 138803–138815.

- Blanchet, G.; Charbit, M. Digital Signal and Image Processing Using MATLAB (Vol. 4); ISTE: London, UK, 2006.

- Dougherty, E.R. Digital Image Processing Methods; CRC Press: Boca Raton, FL, USA, 2020.

- Kitamura, M.; Shirai, D.; Kaneko, K.; Murooka, T.; Sawabe, T.; Fujii, T.; Takahara, A. Beyond 4K: 8K 60p live video streaming to multiple sites. Future Gener. Comput. Syst. 2011, 27, 952–959.

- Yamashita, T.; Mitani, K. 8K extremely-high-resolution camera systems. Proc. IEEE 2012, 101, 74–88.

- Usatoday.com. 2020. Available online: https://www.usatoday.com/story/tech/columnist/komando/2012/11/30/komando-computer-storage/1726835/ (accessed on 14 September 2020).

- Statista. 2020. Seagate Average HDD Capacity Worldwide 2015–2020 | Statista. Available online: https://www.statista.com/statistics/795748/worldwide-seagate-average-hard-disk-drive-capacity/ (accessed on 14 September 2020).

- Cunningham, D.; Lane, B.; Lane, W. Gigabit Ethernet Networking; Macmillan Publishing Co., Inc.: London, UK, 1999.

- Lockie, D.; Peck, D. High-data-rate millimeter-wave radios. IEEE Microw. Mag. 2009, 10, 75–83.

- Ramasubramanian, V.; Malkhi, D.; Kuhn, F.; Balakrishnan, M.; Gupta, A.; Akella, A. On the treeness of internet latency and bandwidth. In Proceedings of the 11th International Joint Conference on Measurement and Modeling of Computer Systems, Seattle, WA, USA, 15–19 June 2009; pp. 61–72.

- Rabbani, M.; Jones, P.W. Digital Image Compression Techniques; SPIE Press: Bellingham, WA, USA, 1991; Volume 7.

- Nelson, M.; Gailly, J.L. The Data Compression Book, 2nd ed.; M & T Books: New York, NY, USA, 1995.

- Padmaja, G.M.; Nirupama, P. Analysis of various image compression techniques. ARPN J. Sci. Technol. 2012, 2, 371–376.

- Barni, M. (Ed.) Document and Image Compression; CRC Press: Boca Raton, FL, USA, 2018.

- Dhawan, S. A review of image compression and comparison of its algorithms. Int. J. Electron. Commun. Technol. 2011, 2, 22–26.

- Umbaugh, S.E. Computer Imaging: Digital Image Analysis and Processing; CRC Press: Boca Raton, FL, USA, 2005.

- Sayood, K. Introduction to data compression. Morgan Kaufmann. 2017, 7, 301–305.

- Lu, X.; Wang, H.; Dong, W.; Wu, F.; Zheng, Z.; Shi, G. Learning a deep vector quantization network for image compression. IEEE Access 2019, 7, 118815–118825.

- Zhang, Y.; Cao, H.; Jiang, H.; Li, B. Visual distortion sensitivity modeling for spatially adaptive quantization in remote sensing image compression. IEEE Geosci. Remote Sens. Lett. 2013, 11, 723–727.

- Cai, C.; Chen, L.; Zhang, X.; Gao, Z. End-to-end optimized ROI image compression. IEEE Trans. Image Process. 2019, 29, 3442–3457.

- Liu, S.; Bai, W.; Zeng, N.; Wang, S. A fast fractal based compression for MRI images. IEEE Access 2019, 7, 62412–62420.

- Wang, Z.; Bovik, A.C. A universal image quality index. IEEE Signal Process. Lett. 2002, 9, 81–84.

- Ohm, J.R.; Sullivan, G.J.; Schwarz, H.; Tan, T.K.; Wiegand, T. Comparison of the coding efficiency of video coding standards, including high efficiency video coding (HEVC). IEEE Trans. Circuits Syst. Video Technol. 2012, 22, 1669–1684.

- Pennebaker, W.B.; Mitchell, J.L. JPEG: Still Image Data Compression Standard; Springer Science & Business Media: Berlin/Heidelberg, Germany, 1992.

- Jayant, N.S.; Noll, P. Digital Coding of Waveforms: Principles and Applications to Speech and Video; Prentice Hall Professional Technical Reference: Upper Saddle River, NJ, USA, 1984; pp. 115–251.

- Weinberger, M.J.; Seroussi, G.; Sapiro, G. LOCO-I: A low complexity, context-based, lossless image compression algorithm. In Proceedings of the Data Compression Conference-DCC’96, Snowbird, UT, USA, 31 March–3 April 1996; pp. 140–149.

- Weinberger, M.J.; Seroussi, G.; Sapiro, G. The LOCO-I lossless image compression algorithm: Principles and standardization into JPEG-LS. IEEE Trans. Image Process. 2000, 9, 1309–1324.

- Ueno, I.; Ono, F. Proposed Modification of LOCO-I for Its Improvement of the Performance; ISO/IEC JTC1/SC29/WG1 document N297. 1996. Available online: https://scholar.google.com/scholar?hl=en&as_sdt=0%2C31&q=Proposed+Modification+of+LOCO-I+for+Its+Improvement+of+the+Performance&btnG= (accessed on 1 February 2021).

- Weinberger, M.J.; Seroussi, G.; Sapiro, G. Fine-Tuning the Baseline; IEC JTC1/SC29/WG1 Document; ISO: Geneva, Switzerland, 1996; Volume 341.

- Weinberger, M.J.; Seroussi, G.; Sapiro, G. Palettes and Sample Mapping in JPEG-LS; IEC JTC1/SC29/WG1 Document; ISO: Geneva, Switzerland, 1996; Volume 412.

- Weinberger, M.J.; Seroussi, G.; Sapiro, G.; Ordentlich, E. JPEG-LS with Limited-Length Code Words; IEC JTC1/SC29/WG1 Document; ISO: Geneva, Switzerland, 1997; Volume 538.

- Rissanen, J.J. Generalized Kraft inequality and arithmetic coding. IBM J. Res. Dev. 1976, 20, 198–203.

- Rissanen, J.; Langdon, G. Universal modeling and coding. IEEE Trans. Inf. Theory 1981, 27, 12–23.

- Weinberger, M.J.; Seroussi, G.; Sapiro, G. From LOCO-I to the JPEG-LS standard. In Proceedings of the 1999 International Conference on Image Processing (Cat. 99CH36348), Piscataway, NJ, USA, 24–28 October 1999; Volume 4, pp. 68–72.

- Merhav, N.; Seroussi, G.; Weinberger, M.J. Lossless Compression for Sources with Two Sided Geometric Distributions. IEEE Trans. Inf. Theory 1998, 46, 121–135.

- Memon, N.D.; Wu, X.; Sippy, V.; Miller, G. Interband coding extension of the new lossless JPEG standard. In Proceedings of the Visual Communications and Image Processing’97, International Society for Optics and Photonics, San Jose, CA, USA, 12–14 February 1997; Volume 3024, pp. 47–58.

- Roelofs, G.; Koman, R. PNG: The Definitive Guide; O’Reilly & Associates, Inc.: Sebastopol, CA, USA, 1999.

- Wilbur, C. PNG: The definitive guide. J. Comput. High. Educ. 2001, 12, 94–97.

- Paeth, A.W. Image file compression made easy. In Graphics Gems II; Morgan Kaufmann: Burlington, MA, USA; NeuralWare, Inc.: Pittsburgh, PA, USA, 1991; pp. 93–100.

- Libpng.org. PNG Specification: Filter Algorithms. 2020. Available online: http://www.libpng.org/pub/png/spec/1.2/PNG-Filters.html (accessed on 5 October 2020).

- Wu, X. An algorithmic study on lossless image compression. In Proceedings of the Data Compression Conference-DCC’96, Snowbird, UT, USA, 31 March–3 April 1996; pp. 150–159.

- Wu, X.; Memon, N. CALIC—A context based adaptive lossless image codec. In Proceedings of the 1996 IEEE International Conference on Acoustics, Speech, and Signal Processing, Atlanta, GA, USA, 9 May 1996; Volume 4, pp. 1890–1893.

- Boliek, M. JPEG 2000, Part I: Final Draft International Standard; (ISO/IEC FDIS15444-1), ISO/IEC JTC1/SC29/WG1 N1855; ISO: Geneva, Switzerland, 2000.

- Christopoulos, C.; Skodras, A.; Ebrahimi, T. The JPEG 2000 still image coding system: An overview. IEEE Trans. Consum. Electron. 2000, 46, 1103–1127.

- Schelkens, P.; Skodras, A.; Ebrahimi, T. (Eds.) The JPEG 2000 Suite; John Wiley & Sons: Hoboken, NJ, USA, 2009; Volume 15.

- Santa-Cruz, D.; Ebrahimi, T.; Askelof, J.; Larsson, M.; Christopoulos, C.A. JPEG 2000 still image coding versus other standards. In Proceedings of the Applications of Digital Image Processing XXIII. International Society for Optics and Photonics, San Diego, CA, USA, 31 July–3 August 2000; Volume 4115, pp. 446–454.

- Sheikh, H.R.; Bovik, A.C.; Cormack, L. No-reference quality assessment using natural scene statistics: JPEG 2000. IEEE Trans. Image Process. 2005, 14, 1918–1927.

- Sazzad, Z.P.; Kawayoke, Y.; Horita, Y. No reference image quality assessment for JPEG 2000 based on spatial features. Signal Process. Image Commun. 2008, 23, 257–268.

- Swartz, C.S. Understanding Digital Cinema: A Professional Handbook; Taylor & Francis: Oxfordshire, UK, 2005.

- Rabbani, M. JPEG 2000: Image compression fundamentals, standards and practice. J. Electron. Imaging 2002, 11, 286.

- Skodras, A.; Christopoulos, C.; Ebrahimi, T. The JPEG 2000 still image compression standard. IEEE Signal Process. Mag. 2001, 18, 36–58.

- Kim, T.; Kim, H.M.; Tsai, P.S.; Acharya, T. Memory efficient progressive rate-distortion algorithm for JPEG 2000. IEEE Trans. Circuits Syst. Video Technol. 2005, 15, 181–187.

- Liu, Z.; Karam, L.J.; Watson, A.B. JPEG 2000 encoding with perceptual distortion control. IEEE Trans. Image Process. 2006, 15, 1763–1778.

- Zhang, J.; Le, T.M. A new no-reference quality metric for JPEG 2000 images. IEEE Trans. Consum. Electron. 2010, 56, 743–750.

- Bovik, A.C. The Essential Guide to Video Processing; Academic Press: Cambridge, MA, USA, 2009.

- Unser, M.; Blu, T. Mathematical properties of the JPEG 2000 wavelet filters. IEEE Trans. Image Process. 2003, 12, 1080–1090.

- Crow, B. Bill Crow’s Digital Imaging & Photography Blog. Docs.microsoft.com. 2020. Available online: https://docs.microsoft.com/en-us/archive/blogs/billcrow/ (accessed on 8 October 2020).

- Dufaux, F.; Sullivan, G.J.; Ebrahimi, T. The JPEG XR image coding standard [Standards in a Nutshell]. IEEE Signal Process. Mag. 2009, 26, 195–204.

- De Simone, F.; Goldmann, L.; Baroncini, V.; Ebrahimi, T. Subjective evaluation of JPEG XR image compression. In Proceedings of the Applications of Digital Image Processing XXXII. International Society for Optics and Photonics, San Diego, CA, USA, 3–5 August 2009; Volume 7443, p. 74430L.

- Tu, C.; Srinivasan, S.; Sullivan, G.J.; Regunathan, S.; Malvar, H.S. Low-complexity hierarchical lapped transform for lossy-to-lossless image coding in JPEG XR/HD photo. In Proceedings of the Applications of Digital Image Processing XXXI. International Society for Optics and Photonics, San Diego, CA, USA, 11–14 August 2008; Volume 7073, p. 70730C.

- Tran, T.D.; Liang, J.; Tu, C. Lapped transform via time-domain pre-and post-filtering. IEEE Trans. Signal Process. 2003, 51, 1557–1571.

- International Telecommunication Union Telecommunication Standardization Sector. XR Image Coding System—Image Coding Specification. ITU-T Recommendation, 832. 2009. Available online: https://www.itu.int/rec/T-REC-T.832 (accessed on 1 February 2021).

- Si, Z.; Shen, K. Research on the WebP image format. In Advanced Graphic Communications, Packaging Technology and Materials; Springer: Singapore, 2016; pp. 271–277.

- Ginesu, G.; Pintus, M.; Giusto, D.D. Objective assessment of the WebP image coding algorithm. Signal Process. Image Commun. 2012, 27, 867–874.

- Singh, H. Introduction to Image Processing. In Practical Machine Learning and Image Processing; Apress: Berkeley, CA, USA, 2019; pp. 7–27.

- Flif.info. FLIF—Example. 2021. Available online: https://flif.info/example.html (accessed on 2 January 2021).

- Google Developers. Compression Techniques | WebP | Google Developers. 2021. Available online: https://developers.google.com/speed/webp/docs/compression (accessed on 19 January 2021).

- Zimmerman, S. A Look At AV1 And The Future Of Video Codecs: Google’s Answer To HEVC. xda-Developers. 2021. Available online: https://www.xda-developers.com/av1-future-video-codecs-google-hevc/ (accessed on 2 January 2021).

- Ozer, J. What Is VP9? Streaming Media Magazine. 2021. Available online: https://www.streamingmedia.com/Articles/Editorial/-111334.aspx (accessed on 2 January 2021).

- Wiegand, T.; Sullivan, G.J.; Bjontegaard, G.; Luthra, A. Overview of the H.264/AVC video coding standard. IEEE Trans. Circuits Syst. Video Technol. 2003, 13, 560–576.

- Chen, Y.; Mukherjee, D.; Han, J.; Grange, A.; Xu, Y.; Parker, S.; Chen, C.; Su, H.; Joshi, U.; Chiang, C.H.; et al. An Overview of Coding Tools in AV1: The First Video Codec from the Alliance for Open Media. APSIPA Trans. Signal Inf. Process. 2020, 9, e6.

- Chen, Y.; Mukherjee, D.; Han, J.; Grange, A.; Xu, Y.; Liu, Z.; Parker, S.; Chen, C.; Su, H.; Joshi, U.; et al. An overview of core coding tools in the AV1 video codec. In Proceedings of the 2018 Picture Coding Symposium (PCS), San Francisco, CA, USA, 24–27 June 2018; pp. 41–45.

- LambdaTest. AVIF Image Format—The Next-Gen Compression Codec. 2021. Available online: https://www.lambdatest.com/blog/avif-image-format/ (accessed on 2 January 2021).

- En.wikipedia.org. 2021. AV1. Available online: https://en.wikipedia.org/wiki/AV1 (accessed on 19 January 2021).

- Sneyers, J.; Wuille, P. FLIF: Free lossless image format based on MANIAC compression. In Proceedings of the 2016 IEEE International Conference on Image Processing (ICIP), Phoenix, AZ, USA, 25–28 September 2016; pp. 66–70.

- Soferman, N. FLIF, The New Lossless Image Format That Outperforms PNG, Webp And BPG. Cloudinary. 2021. Available online: https://cloudinary.com/blog/flif_the_new_lossless_image_format_that_outperforms_png_webp_and_bpg (accessed on 2 January 2021).

- Flif.info. 2021. FLIF—Free Lossless Image Format. Available online: https://flif.info/ (accessed on 2 January 2021).

- Flif.info. 2021. FLIF—Software. Available online: https://flif.info/software.html (accessed on 2 January 2021).

- Hussain, A.J.; Al-Fayadh, A.; Radi, N. Image compression techniques: A survey in lossless and lossy algorithms. Neurocomputing 2018, 300, 44–69.

- Shukla, J.; Alwani, M.; Tiwari, A.K. A survey on lossless image compression methods. In Proceedings of the 2010 2nd International Conference on Computer Engineering and Technology, Chengdu, China, 16–18 April 2010; Volume 6, p. V6-136.

- Zhang, C.; Chen, T. A survey on image-based rendering: Representation, sampling and compression. Signal Process. Image Commun. 2004, 19, 1–28.

- Blanes, I.; Magli, E.; Serra-Sagrista, J. A tutorial on image compression for optical space imaging systems. IEEE Geosci. Remote Sens. Mag. 2014, 2, 8–26.

- Imagecompression.info. The New Test Images—IMAGE Compression Benchmark. 2020. Available online: https://imagecompression.info/test_images/ (accessed on 10 October 2020).

- Imagecompression.info. The New Test Images—IMAGE Compression Benchmark. 2020. Available online: https://imagecompression.info/test_images/ (accessed on 10 October 2020).

| Symbol (S) | Probability (P(k)) | Code-Word | Code-Word Length (bits) | P(k) × Code-Word Length |
|---|---|---|---|---|
| 118 | 0.12 | 100 | 3 | 0.36 |
| 119 | 0.16 | 001 | 3 | 0.48 |
| 120 | 0.12 | 011 | 3 | 0.36 |
| 139 | 0.01 | 1111 | 4 | 0.04 |
| 140 | 0.17 | 000 | 3 | 0.51 |
| 141 | 0.12 | 010 | 3 | 0.36 |
| 169 | 0.11 | 101 | 3 | 0.33 |
| 170 | 0.09 | 1110 | 4 | 0.36 |
| 171 | 0.1 | 110 | 3 | 0.3 |
| Average code-word length | | | | 3.1 bits |
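The average code-word length in the table is the probability-weighted sum of the lengths. As a cross-check, the Python sketch below builds Huffman code-word lengths for the same nine symbols with a generic heap-based construction; this is an assumption on our part, since the source does not state how its code-words were generated, and `huffman_code_lengths` is a hypothetical helper. The 0/1 labels of the code-words depend on tie-breaking, so only the lengths are compared; they reproduce the table's lengths and its 3.1-bit average. The entropy is printed alongside because no uniquely decodable code can have a shorter average length.

```python
import heapq
import math
from itertools import count


def huffman_code_lengths(probs: dict[int, float]) -> dict[int, int]:
    """Return the Huffman code-word length of each symbol."""
    if len(probs) == 1:
        return {sym: 1 for sym in probs}
    tie = count()  # tie-breaker so equal probabilities never compare the lists
    heap = [(p, next(tie), [sym]) for sym, p in probs.items()]
    heapq.heapify(heap)
    lengths = {sym: 0 for sym in probs}
    while len(heap) > 1:
        p1, _, group1 = heapq.heappop(heap)
        p2, _, group2 = heapq.heappop(heap)
        # Merging two subtrees pushes every symbol in them one level deeper.
        for sym in group1 + group2:
            lengths[sym] += 1
        heapq.heappush(heap, (p1 + p2, next(tie), group1 + group2))
    return lengths


# Symbol probabilities taken from the table above.
probs = {118: 0.12, 119: 0.16, 120: 0.12, 139: 0.01, 140: 0.17,
         141: 0.12, 169: 0.11, 170: 0.09, 171: 0.10}

lengths = huffman_code_lengths(probs)
avg_len = sum(probs[s] * lengths[s] for s in probs)
entropy = -sum(p * math.log2(p) for p in probs.values())
print(lengths)
print(f"average length = {avg_len:.2f} bits, entropy = {entropy:.3f} bits")
```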

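| | | | |
|---|---|---|---|
| E | F | G | |
| H | A | B | C |
| K | D | P | |

P is the current pixel; E, F, G, H, A, B, C, K, and D are past pixels (already coded in raster-scan order), and pixels that follow P in scan order are future pixels.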

| Mode | Predictor |
|---|---|
| 0 | No prediction |
| 1 | D |
| 2 | B |
| 3 | A |
| 4 | D + B − A |
| 5 | D + (B − A)/2 |
| 6 | B + (D − A)/2 |
| 7 | (D + B)/2 |
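With D the left neighbour, B the upper neighbour, and A the upper-left neighbour of the current pixel P, the seven predictors can be sketched in Python as follows; the encoder then codes only the residual P minus the prediction. Two details are our assumptions about ITU-T T.81 rather than statements from this paper: the divisions by 2 are implemented as arithmetic right shifts, and border pixels fall back to the left neighbour on the first row, the upper neighbour in the first column, and 2^(bit depth − 1) for the very first pixel. The names `predict` and `residuals` are hypothetical.

```python
def predict(mode: int, d: int, b: int, a: int) -> int:
    """Prediction for P from left (D), above (B) and upper-left (A) neighbours."""
    if mode == 0:
        return 0  # no prediction: the pixel value itself is coded
    if mode == 1:
        return d
    if mode == 2:
        return b
    if mode == 3:
        return a
    if mode == 4:
        return d + b - a
    if mode == 5:
        return d + ((b - a) >> 1)
    if mode == 6:
        return b + ((d - a) >> 1)
    if mode == 7:
        return (d + b) >> 1
    raise ValueError(f"unknown predictor mode {mode}")


def residuals(img: list[list[int]], mode: int, bit_depth: int = 8) -> list[list[int]]:
    """Return P - prediction for every pixel, scanning rows top to bottom."""
    h, w = len(img), len(img[0])
    out = [[0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            if mode == 0:
                pred = 0
            elif y == 0 and x == 0:
                pred = 1 << (bit_depth - 1)  # no neighbours available yet
            elif y == 0:
                pred = img[y][x - 1]         # first row: left neighbour
            elif x == 0:
                pred = img[y - 1][x]         # first column: upper neighbour
            else:
                pred = predict(mode, img[y][x - 1], img[y - 1][x], img[y - 1][x - 1])
            out[y][x] = img[y][x] - pred
    return out


ramp = [[10, 20, 30],
        [20, 30, 40],
        [30, 40, 50]]
print(residuals(ramp, mode=4))  # -> [[-118, 10, 10], [10, 0, 0], [10, 0, 0]]
```

On a smooth ramp, mode 4 (D + B − A) predicts every interior pixel exactly, which is why small, low-entropy residuals are what the entropy coder ends up seeing.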

| Type Byte | Filter Name | Prediction |
|---|---|---|
| 0 | None | Zero |
| 1 | Sub | D |
| 2 | Up | B |
| 3 | Average | The mean of D and B, rounded down: floor((D + B)/2) |
| 4 | Paeth [56] | Whichever of D, B, or A is closest to the estimate D + B − A (ties resolved in the order D, B, A) |
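The five filter types can be sketched in Python as below, written from our reading of the PNG filter definitions: filters operate on bytes rather than pixels, D is the byte `bpp` positions to the left (`bpp` being the number of bytes per complete pixel), B is the byte directly above, A is the byte above and to the left, neighbours outside the image count as 0, and the stored difference is taken modulo 256. The function names `paeth_predictor` and `filter_row` are hypothetical.

```python
def paeth_predictor(d: int, b: int, a: int) -> int:
    """Pick whichever of D (left), B (above), A (upper-left) is closest to D + B - A."""
    estimate = d + b - a
    dist_d, dist_b, dist_a = abs(estimate - d), abs(estimate - b), abs(estimate - a)
    if dist_d <= dist_b and dist_d <= dist_a:
        return d  # ties are resolved in the order D, B, A
    if dist_b <= dist_a:
        return b
    return a


def filter_row(filter_type: int, row: bytes, prev: bytes, bpp: int) -> bytes:
    """Filter one scanline; prev is the previous unfiltered scanline (zeros for the first)."""
    out = bytearray(len(row))
    for x, value in enumerate(row):
        d = row[x - bpp] if x >= bpp else 0
        b = prev[x]
        a = prev[x - bpp] if x >= bpp else 0
        if filter_type == 0:
            pred = 0
        elif filter_type == 1:
            pred = d
        elif filter_type == 2:
            pred = b
        elif filter_type == 3:
            pred = (d + b) // 2
        elif filter_type == 4:
            pred = paeth_predictor(d, b, a)
        else:
            raise ValueError(f"unknown filter type {filter_type}")
        out[x] = (value - pred) % 256
    return bytes(out)


prev = bytes([4, 4, 4])
row = bytes([10, 20, 30])
for t in range(5):
    print(t, list(filter_row(t, row, prev, bpp=1)))
```

An encoder may choose a different filter type for each scanline; a common heuristic is to try all five and keep the one whose filtered bytes compress best with DEFLATE.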

| Image Name (Dimensions) | JPEG-LS | JPEG 2000 | Lossless JPEG | PNG | JPEG XR | CALIC | AVIF | WebP | FLIF |
|---|---|---|---|---|---|---|---|---|---|
| artificial.pgm () | 10.030 | 6.720 | 4.884 | 8.678 | 3.196 | 10.898 | 4.145 | 10.370 | 13.260 |
| big_tree.pgm () | 2.144 | 2.106 | 1.806 | 1.973 | 2.011 | 2.159 | 2.175 | 2.145 | 2.263 |
| bridge.pgm () | 1.929 | 1.910 | 1.644 | 1.811 | 1.846 | 1.929 | 1.973 | 1.943 | 1.979 |
| cathedral.pgm () | 2.241 | 2.160 | 1.813 | 2.015 | 2.038 | 2.254 | 2.222 | 2.217 | 2.365 |
| deer.pgm () | 1.717 | 1.748 | 1.583 | 1.713 | 1.685 | 1.727 | 1.872 | 1.794 | 1.775 |
| fireworks.pgm () | 5.460 | 4.853 | 3.355 | 4.095 | 3.054 | 5.516 | 4.486 | 4.858 | 5.794 |
| flower_foveon.pgm () | 3.925 | 3.650 | 2.970 | 3.054 | 2.932 | 3.935 | 4.115 | 3.749 | 3.975 |
| hdr.pgm () | 3.678 | 3.421 | 2.795 | 2.857 | 2.847 | 3.718 | 3.825 | 3.456 | 3.77 |
| spider_web.pgm () | 4.531 | 4.202 | 3.145 | 3.366 | 3.167 | 4.824 | 4.682 | 4.133 | 4.876 |
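One simple way to condense a table like the one above is to average each codec's column and rank the results. The Python sketch below does this for the nine images in the table just above, using the arithmetic mean as an assumed summary statistic; it is not necessarily the technique the authors developed in Section 6.2, and the mean is sensitive to outliers such as artificial.pgm. The names `rank_by_mean` and `best_per_image` are hypothetical.

```python
from statistics import mean

CODECS = ["JPEG-LS", "JPEG 2000", "Lossless JPEG", "PNG", "JPEG XR",
          "CALIC", "AVIF", "WebP", "FLIF"]

# Rows copied from the table above (one value per codec, in CODECS order).
TABLE = {
    "artificial.pgm": [10.030, 6.720, 4.884, 8.678, 3.196, 10.898, 4.145, 10.370, 13.260],
    "big_tree.pgm": [2.144, 2.106, 1.806, 1.973, 2.011, 2.159, 2.175, 2.145, 2.263],
    "bridge.pgm": [1.929, 1.910, 1.644, 1.811, 1.846, 1.929, 1.973, 1.943, 1.979],
    "cathedral.pgm": [2.241, 2.160, 1.813, 2.015, 2.038, 2.254, 2.222, 2.217, 2.365],
    "deer.pgm": [1.717, 1.748, 1.583, 1.713, 1.685, 1.727, 1.872, 1.794, 1.775],
    "fireworks.pgm": [5.460, 4.853, 3.355, 4.095, 3.054, 5.516, 4.486, 4.858, 5.794],
    "flower_foveon.pgm": [3.925, 3.650, 2.970, 3.054, 2.932, 3.935, 4.115, 3.749, 3.975],
    "hdr.pgm": [3.678, 3.421, 2.795, 2.857, 2.847, 3.718, 3.825, 3.456, 3.77],
    "spider_web.pgm": [4.531, 4.202, 3.145, 3.366, 3.167, 4.824, 4.682, 4.133, 4.876],
}


def rank_by_mean(table: dict[str, list[float]]) -> tuple[list[str], dict[str, float]]:
    """Rank codecs by their mean value over all images (higher is better for CR)."""
    means = {c: mean(row[i] for row in table.values()) for i, c in enumerate(CODECS)}
    return sorted(means, key=means.get, reverse=True), means


def best_per_image(table: dict[str, list[float]]) -> dict[str, str]:
    """Name the codec with the highest value for each image."""
    return {img: CODECS[max(range(len(CODECS)), key=row.__getitem__)]
            for img, row in table.items()}


ranking, means = rank_by_mean(TABLE)
for codec in ranking:
    print(f"{codec:14s} {means[codec]:.3f}")
print(best_per_image(TABLE))
```

With these values FLIF has the highest mean and CALIC the second highest, which agrees with the CR-only row for 8-bit greyscale images in the recommendation table; per image, AVIF comes out ahead on deer.pgm, flower_foveon.pgm, and hdr.pgm.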

| Image Name (Dimensions) | JPEG-LS | JPEG 2000 | Lossless JPEG | PNG | JPEG XR | CALIC | AVIF | WebP | FLIF |
|---|---|---|---|---|---|---|---|---|---|
| artificial.pgm () | 10.333 | 8.183 | 4.924 | 10.866 | 4.316 | 10.41 | 6.881 | 17.383 | 18.446 |
| big_tree.pgm () | 1.856 | 1.823 | 1.585 | 1.721 | 1.719 | 1.886 | 2.254 | 1.835 | 1.922 |
| bridge.pgm () | 1.767 | 1.765 | 1.553 | 1.686 | 1.735 | 1.784 | 2.21 | 1.748 | 1.866 |
| cathedral.pgm () | 2.120 | 2.135 | 1.734 | 1.922 | 2.015 | 2.126 | 2.697 | 2.162 | 2.406 |
| deer.pgm () | 1.532 | 1.504 | 1.407 | 1.507 | 1.480 | 1.537 | 1.915 | 1.567 | 1.588 |
| fireworks.pgm () | 5.262 | 4.496 | 3.279 | 3.762 | 3.121 | 5.279 | 5.432 | 4.624 | 5.742 |
| flower_foveon.pgm () | 3.938 | 3.746 | 2.806 | 3.149 | 3.060 | 3.927 | 5.611 | 4.158 | 4.744 |
| hdr.pgm () | 3.255 | 3.161 | 2.561 | 2.653 | 2.716 | 3.269 | 4.614 | 3.213 | 3.406 |
| spider_web.pgm () | 4.411 | 4.209 | 3.029 | 3.365 | 3.234 | 4.555 | 6.017 | 4.317 | 4.895 |

| Image Name (Dimensions) | JPEG-LS | JPEG 2000 | Lossless JPEG | PNG | JPEG XR | CALIC | AVIF | WebP | FLIF |
|---|---|---|---|---|---|---|---|---|---|
| artificial.pgm () | 4.073 | 4.007 | 2.791 | 4.381 | 6.393 | 4.174 | 8.282 | 20.846 | 26.677 |
| big_tree.pgm () | 1.355 | 1.325 | 1.287 | 1.181 | 4.028 | 1.324 | 4.35 | 4.229 | 4.537 |
| bridge.pgm () | 1.309 | 1.279 | 1.244 | 1.147 | 3.696 | 1.373 | 3.944 | 3.843 | 3.971 |
| cathedral.pgm () | 1.373 | 1.337 | 1.293 | 1.191 | 4.084 | 1.342 | 4.450 | 4.398 | 4.749 |
| deer.pgm () | 1.252 | 1.241 | 1.225 | 1.132 | 3.371 | 1.339 | 3.742 | 3.549 | 3.557 |
| fireworks.pgm () | 1.946 | 1.809 | 1.740 | 1.604 | 6.116 | 1.955 | 8.967 | 9.773 | 11.647 |
| flower_foveon.pgm () | 1.610 | 1.591 | 1.523 | 1.316 | 5.884 | 1.663 | 8.273 | 7.626 | 8.029 |
| hdr.pgm () | 1.595 | 1.563 | 1.491 | 1.297 | 5.755 | 1.569 | 7.779 | 7.040 | 7.754 |
| spider_web.pgm () | 1.736 | 1.771 | 1.554 | 1.367 | 6.386 | 1.742 | 9.658 | 8.442 | 10.196 |

| Image Name (Dimensions) | JPEG-LS | JPEG 2000 | Lossless JPEG | PNG | JPEG XR | CALIC | AVIF | WebP | FLIF |
|---|---|---|---|---|---|---|---|---|---|
| artificial.pgm () | 4.335 | 4.734 | 2.695 | 4.896 | 8.644 | 4.404 | 13.783 | 36.292 | 37.021 |
| big_tree.pgm () | 1.302 | 1.261 | 1.249 | 1.150 | 3.441 | 1.284 | 4.504 | 3.614 | 3.841 |
| bridge.pgm () | 1.274 | 1.243 | 1.240 | 1.132 | 3.470 | 1.283 | 4.414 | 3.445 | 3.739 |
| cathedral.pgm () | 1.379 | 1.333 | 1.267 | 1.218 | 4.036 | 1.383 | 5.397 | 4.292 | 4.851 |
| deer.pgm () | 1.197 | 1.173 | 1.236 | 1.100 | 2.961 | 1.196 | 3.826 | 3.096 | 3.176 |
| fireworks.pgm () | 2.378 | 1.832 | 1.688 | 2.053 | 6.453 | 2.384 | 11.318 | 10.048 | 12.283 |
| flower_foveon.pgm () | 1.724 | 1.664 | 1.494 | 1.371 | 6.121 | 1.765 | 11.217 | 8.305 | 9.513 |
| hdr.pgm () | 1.535 | 1.518 | 1.473 | 1.275 | 5.432 | 1.586 | 9.219 | 6.391 | 6.819 |
| spider_web.pgm () | 1.702 | 1.784 | 1.531 | 1.360 | 6.466 | 1.718 | 12.018 | 8.576 | 9.787 |

| Compression Ratio | Encoding Time | Decoding Time | First Choice | Second Choice |
|---|---|---|---|---|
| Grayscale Images (8 bits) | | | | |
| ✓ | ✓ | ✓ | JPEG XR | PNG |
| ✓ | ✓ | - | JPEG XR | JPEG-LS |
| ✓ | - | ✓ | PNG | Lossless JPEG |
| - | ✓ | ✓ | JPEG XR | PNG |
| ✓ | - | - | FLIF | CALIC |
| - | - | ✓ | PNG | Lossless JPEG |
| - | ✓ | - | JPEG XR | JPEG-LS |
| Grayscale Images (16 bits) | | | | |
| ✓ | ✓ | ✓ | JPEG XR | PNG |
| ✓ | ✓ | - | JPEG XR | FLIF |
| ✓ | - | ✓ | PNG | JPEG XR |
| - | ✓ | ✓ | PNG | Lossless JPEG |
| ✓ | - | - | FLIF | WebP |
| - | - | ✓ | PNG | Lossless JPEG |
| - | ✓ | - | JPEG XR | Lossless JPEG |
| RGB Images (8 bits) | | | | |
| ✓ | ✓ | ✓ | JPEG XR | PNG |
| ✓ | ✓ | - | JPEG XR | JPEG-LS |
| ✓ | - | ✓ | PNG | JPEG XR |
| - | ✓ | ✓ | JPEG XR | PNG |
| ✓ | - | - | FLIF | WebP |
| - | - | ✓ | PNG | JPEG XR |
| - | ✓ | - | JPEG XR | JPEG-LS |
| RGB Images (16 bits) | | | | |
| ✓ | ✓ | ✓ | JPEG XR | PNG |
| ✓ | ✓ | - | JPEG XR | WebP |
| ✓ | - | ✓ | JPEG XR | PNG |
| - | ✓ | ✓ | JPEG XR | PNG |
| ✓ | - | - | FLIF | WebP |
| - | - | ✓ | JPEG XR | PNG |
| - | ✓ | - | JPEG XR | JPEG-LS |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Rahman, M.A.; Hamada, M.; Shin, J. The Impact of State-of-the-Art Techniques for Lossless Still Image Compression. Electronics 2021, 10, 360. https://doi.org/10.3390/electronics10030360


Chicago/Turabian Style: Rahman, Md. Atiqur, Mohamed Hamada, and Jungpil Shin. 2021. "The Impact of State-of-the-Art Techniques for Lossless Still Image Compression" Electronics 10, no. 3: 360. https://doi.org/10.3390/electronics10030360
