Exploring the Role of Trust and Expectations in CRI Using In-the-Wild Studies

Abstract

1. Introduction

1.1. Distinguishing Trust and Expectations in CRI

1.2. Cognitive States

1.3. Existing Research on Developing Trust and Expectations

1.4. The Methodological Gap

1.5. Our Approach

- It allows interaction and collaboration among robots and children to unfold naturally in an environment that is familiar to the children, for example their classroom, rather than in an HRI lab. This facilitates intuitive and natural expressions by children, which provide useful data for assessing factors that promote or hinder trust and expectations in CRI.

- It allows unstructured, playful interactions between the children and the robot. The activity of playing is crucial for children’s development [50]: they find it easier to express their needs and emotions during free play, rather than in situations where they have to do structured tasks by following some rules [51].

- It allows us to study safety issues in robots when children interact with them in complex social contexts. This is crucial for designing robots that can be deployed in real-world settings.

- It helps to study the ethical aspects of CRI by observing children interacting with the robot in semi-supervised or unsupervised situations, thereby reducing the moderator’s observer-expectancy effect [52].

- It allows conducting long-term, longitudinal studies in CRI.

2. Motivations

3. Methodology

3.1. Participants

Children as Participants—A Methodological Challenge

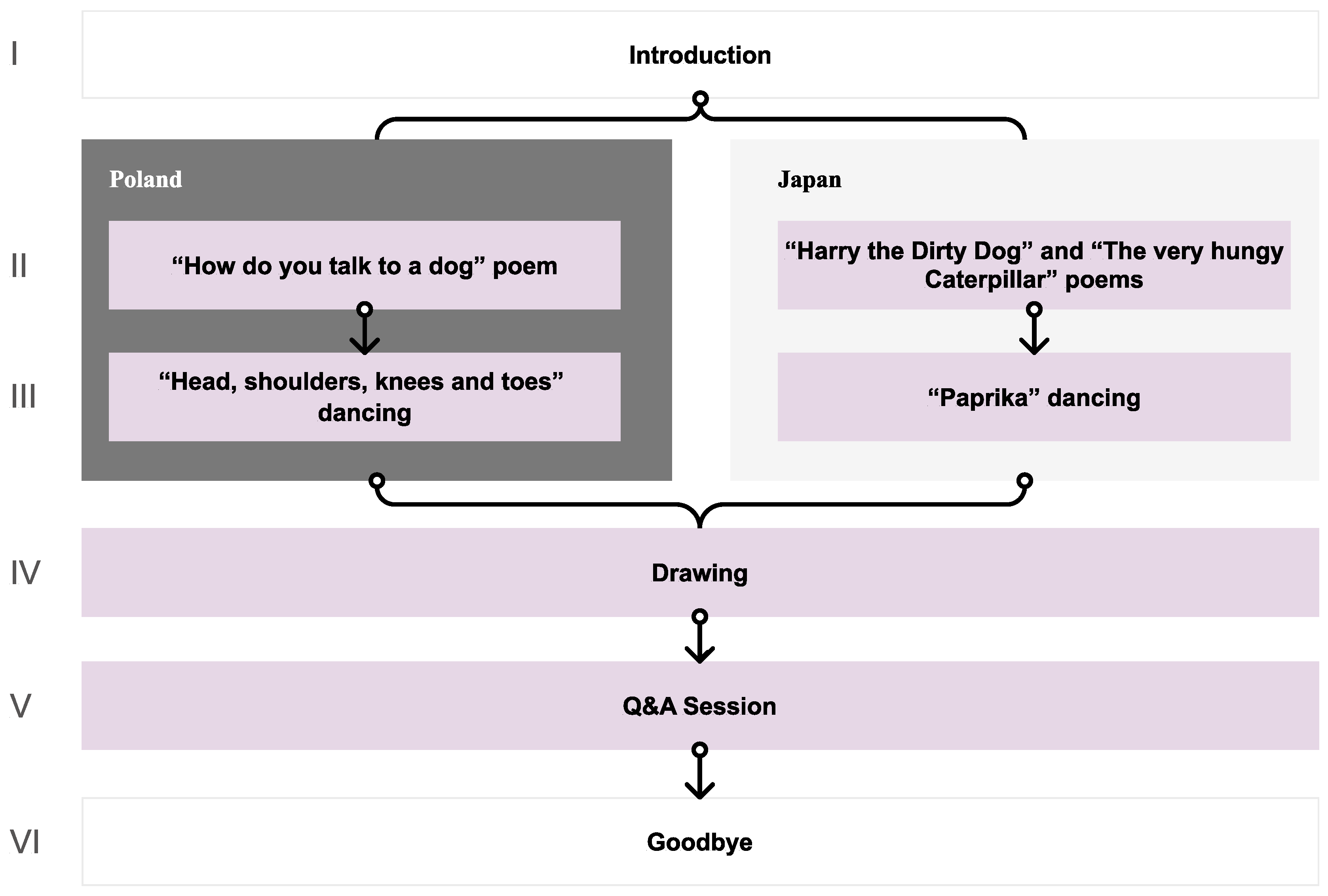

3.2. Procedure

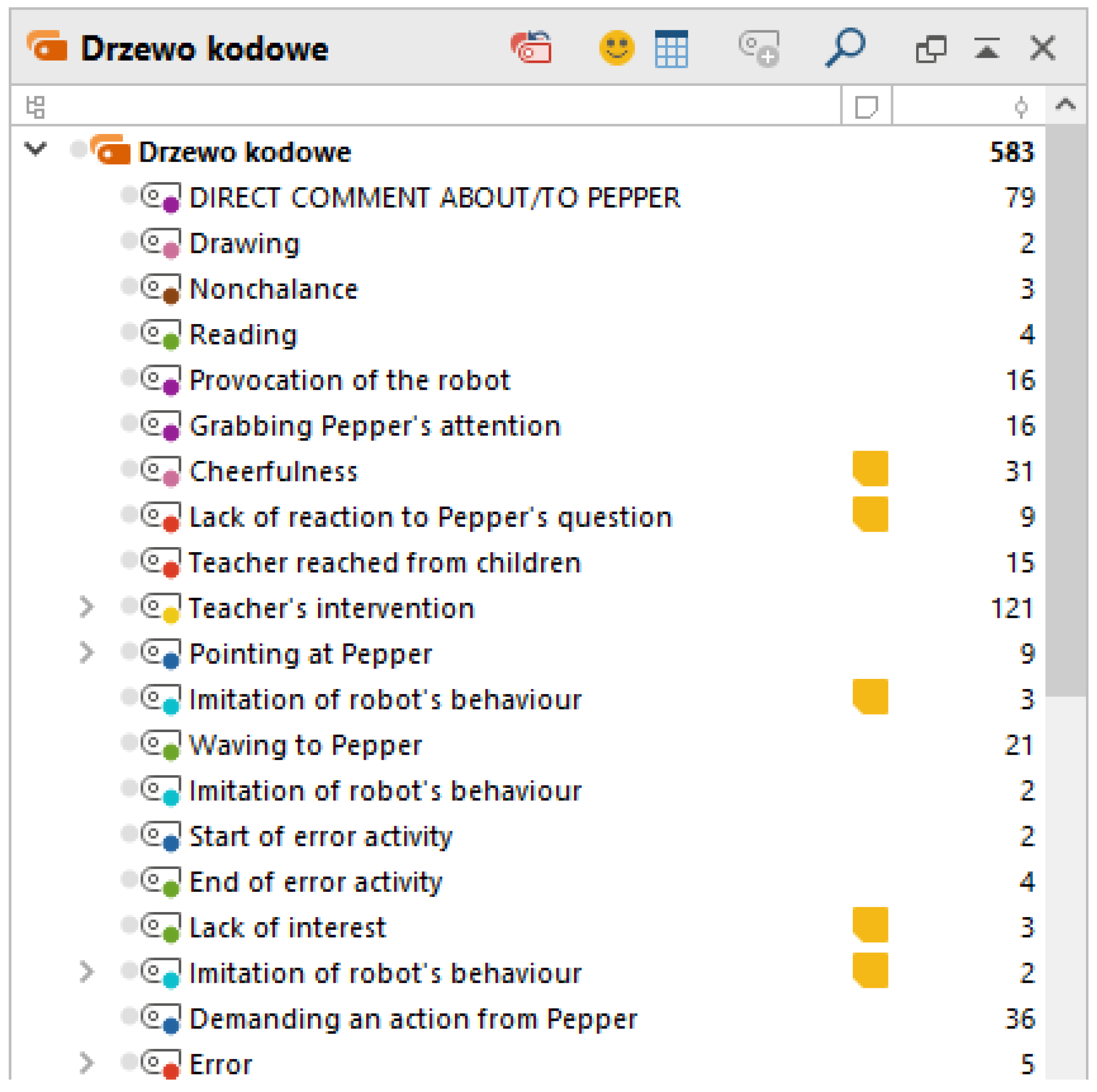

3.3. Data Collection and Analysis

3.4. Distinguishing Trust and Expectation towards the Robot

3.5. Environment

- Consideration of capacity and capability

- Developing ethical protocols and processes

- Developing trust and relationships

- Selecting appropriate methods

- Identifying appropriate forms of communication

- Consideration of context

3.6. Setting

4. Results: Two Significant Factors in CRI In-the-Wild

4.1. Trust

- The increasing number of social robots interacting with children (e.g., [76,81]) demands analysis of how trust is built in CRI. Factors affecting trust have already been studied for adults [30]. For children, the qualitative study by Bethel et al. [82] indicates that preschool children not only applied the same social rules to a humanoid robot as to adults but were also equally inclined to share with either a secret they were supposed to keep to themselves.

- More research needs to be done on how different factors affect trust. Some studies on secret sharing behavior [82] have shown that children are inclined to trust robots as willingly as they trust adults. Other complementary studies showcase the importance of cognitive development and children’s personal attachment history for building trust [13]. However, Di Dio et al. [13] compared trust towards a NAO robot with that towards a human during play by testing children individually.

- Researchers need to know how to build trust in CRI and keep participants feeling that they are in a safe and engaging environment throughout the whole interaction, without being overwhelmed or made anxious by unfamiliar conditions such as interacting with robots. Preliminary studies (e.g., [13]) found that, for preschool and elementary school children, a robot can be perceived as a solid, trusted partner in the interaction, which can also contribute to building better human–human relationships.

4.1.1. Possible Signs of Trust towards Robots

- (a) Challenging behavior towards the robot to determine its capabilities: This refers to when the children challenge the robot to do some specific activity.

  Observation 1. “So you can’t walk?!”

  Observation 2. “But it can’t even say ‘Good Morning!’”

  Our observations led us to the assumption that one of the most interesting ways to show trust in a robot is to provoke it into specific actions. By challenging behavior, we mean the child demanding an answer or an action from the robot. These contentious exchanges give us an insight into the child’s image of the robot’s performance. Challenging behaviors are also signs of direct, voluntary perceptions and assumptions about the robot based on the child’s mental model.

  Observation 3. “For sure you cannot do it!”

  Challenging behaviors also signal the level of trust in the child–robot relationship. For example, in our studies children challenged Pepper about its physical abilities (e.g., asking it to perform a very specific movement or to breakdance, and teasing it).

- (b) Challenging behavior as an indicator of the level of trust: This refers to when the children challenge the robot for some specific information or experience.

  Observation 4.
  Child 1: “Have you been to the cinema?” [children repeat this question a couple of times]
  Pepper: “No, I haven’t been to the cinema.”
  Child 2: “Do you know what cinema is at all?”
  Pepper: “I know.”
  Child 2: “So, tell us, what is that?”
  Child 3: “And do you know what McDonald’s is?”
  Child 4: “Do you know what McDonald’s is?”
  [Pepper replies after a delay]
  Pepper: “You can watch movies there.”
  [children laugh]
  Child 5: [amused] “At McDonald’s!”
  [Children laugh. Due to the delayed answer, they interpret Pepper’s last answer as a response to the questions about McDonald’s]

  Observation 5. “Do you know what France is?”

  Observation 6. “Can you even do anything?”

  Moreover, the children asked general knowledge questions, such as whether Pepper knows what a cinema is. If it said yes, they dug deeper by asking it to describe one. The level of trust can be affected by the relevance of the robot’s answers (irrelevant answers lead to laughter), the pace of answering questions, and the number of questions answered (some are skipped).

  Observation 7.
  Child 1: “How did you like Paris? And do you like to play?”
  Teacher: “Wait, only one question at a time.”
  Child 2: “Do you like to play?”
  Pepper: “I really enjoyed Paris!”
  Child 2: [confused] “Paris...?”

  Observation 8.
  Child 1: “Do you have parents?”
  [The robot doesn’t answer immediately]
  Child 1: [urging] “You have parents, well, Pepper?”
  Child 2: “...it has to think about it.”

  As we followed the WOZ paradigm, the robot’s answers to the children’s questions were typed in real time by a researcher in the room. Due to the dynamics of the meeting (a large number of children and many questions asked simultaneously), the robot sometimes answered a question that was no longer relevant or interesting from the children’s perspective, or its answer was incomprehensible, which affected the relevance of the robot’s answers. Sometimes, when there was a delay in the robot’s response (because the researcher was thinking about what to say), the children may have felt the need to rush the robot.

- (c) Curiosity and the demonstration of desired actions as a sign of trust: This focuses on the children’s questions about the robot’s capabilities and competences.

  Observation 9. “Can you do that?”

  Observation 10.
  [Children are sitting in front of the robot on the carpet]
  Pepper: “Hello!” [waves]
  [Children are amused by this action and most of them wave to the robot]

  In our studies, we observed that children tried to challenge the robot by asking about its abilities while making a specific gesture towards the robot (e.g., waving or moving a fist). Some of them seemed curious about the robot’s abilities, whereas others took a nonchalant approach and showed off their own abilities.

  Observation 11.
  Child 1: “You have a tablet, can you display a movie?”
  Pepper: “But now I have anything [any movie] close at hand.”
  Child 1: “You always have armpits at hand.”
  Child 2: “Simple!” [unintelligible]
  [A few children start laughing]

  A demonstration of the desired actions from the robot is also an interesting issue. For example, we observed that when the robot reveals a lack of competence, children question it or make fun of it. Potentially, a violation of social norms by a robot, e.g., knowing too much about a child, evokes negative affect (and thus decreases trust) in children [86], whereas the failure to complete a task decreases competency trust [14].

- (d) Physical interaction (catching attention in brave ways and approaching the robot): This refers to physical interactions and proactive behaviors to gain the attention of the robot.

  Observation 12.
  [The start of the malfunction activity. The children are screeching and shouting: ‘What’s this?!’]
  Child 1: “Anything else?”
  [The children come closer to the robot. After a while, the teacher pulls the children back, while they keep asking questions: “Do you know anything else?”]

  Children are also prone to attract attention in aggressive ways, such as waving in front of the robot’s eyes, repeating or reformulating questions, or inviting the robot to play with them (demanding action from Pepper). This indicates that the children felt comfortable enough to decrease their physical distance to the robot and were willing to interact with it. Many children approached the robot right after entering the room where the interaction took place, eager to explore and touch it, and had to be restrained by the teachers. During the encounter, pre-schoolers gradually came closer to the robot, disregarding the limit set by the teachers and the researchers.

  Observation 13.
  Child: “Can we pet him?”
  Researcher: “I don’t know. Probably not. But guess what, guess what, we don’t touch Pepper because he has very delicate skin. Okay?”
  Child 1: “It’s not skin.”
  Child 2: “This is metal!”
  Child 3: “Metal!”

  Many children were intent on a physical exploration of the robot: petting it, grabbing its hands, looking into its eyes or touching its tablet. Thus, another sign of trust is based on physical interaction. Our observations revealed that children came closer to the robot when they did not get a response immediately, especially when they were in the first row; children at the back stood up to see the robot and leaned towards it.

- (e) Social rules awareness and tool-like perspective: These observations refer to treating the robot as an object owned by someone and applying corresponding social rules.

  Observation 14.
  Child: “Who’s steering you?”
  Pepper: “[...] and IT specialists programmed me.”
  Child: “Do you have batteries in you?”

  An intriguing observation was that the children asked the teachers for permission. This suggests two possible interpretations. One is that children are aware of social rules (in this case, “do not touch an object without asking its owner” or “always ask the teacher if you want to do something unusual”) and apply them to the interaction with the robot. The other is that children perceive the robot as a tool or an object incapable of autonomy or preferences of its own, which is not helpful for researchers wanting to create a companion-like interaction with the robot. In that case, they are building trust towards a dependent agent, which is not the same as believing in a robot’s autonomy of agency [87]. Further discussion is needed on the role of the teacher and on how children would perceive the robot’s agency without the teacher in the room.

- (f) Playing as a sign of trust: This refers to the verbal expressions of the children to encourage the robot to engage with them in fun activities.

  Observation 15. “Pepper, let’s play together!”

  Observation 16. “Will you play with us?”

  Observation 17. “Will you show us a story on the tablet?”

  Observation 18. “Please do some magic trick!”

  Some research [88] suggests that children have the most fun when playing with a friend, less when playing with a robot, and the least when playing alone. Play provides children with an opportunity to practice and learn basic social skills, including conflict management. It also requires a certain amount of trust in the relationship: children who dislike each other rarely start to play together [51]. In our observations, similar patterns of wanting to play with the robot could be noticed, as expressed by children’s questions. These questions to Pepper suggest trust towards the robot’s skills and abilities, and children’s willingness to engage in joint play. As previous studies [89] point out, higher transparency of the robot’s lack of psychological capabilities (e.g., a lack of preferences or knowledge of social rules) negatively affects the formation of the child–robot relationship in terms of trust, whereas the children’s feelings of closeness remain unaffected. For this reason, an analysis of closeness-related behaviors and actions of children during CRI that indicate children’s trust towards the robot is a key factor in the interaction design.

- (g) Anthropomorphization: This focuses on children’s questions that reveal their beliefs that the robot is like a human.

  Observation 19. “Do you like me?”

  Observation 20. “Who gave birth to you?”

  Children tend to anthropomorphize social robots [90]. As a consequence, they expect a more unconstrained, substantive and useful interaction with the robot than is possible with the current state of the art; their expectations towards robots are addressed more directly in Section 4.2. This tendency to anthropomorphize the robot, natural as it is, has a significant impact on the trust that the child will place in the robot. If the expectations the child has built are not met (the expectations gap), the child’s confidence in the robot may decrease.

4.1.2. General Remarks on Trust

4.2. Expectations

- Research on expectations assumes that children have a basic concept of the robot, and various studies aim to elicit features of this concept through observations and experiments. An in-the-wild methodology is ideal in this regard as it facilitates natural, spontaneous and voluntary interactions with the robot. This elicits behavior patterns that cannot be observed through laboratory experiments, surveys or individual interviews. By analyzing recordings and transcripts of such free CRIs, we can understand children’s mental models of a robot and their expectations towards it. Below in this section, we present some such observations.

- Another important reason for analyzing expectations is the low number of studies on this topic focusing on younger children. Fewer measures (such as questionnaires and interviews) are available for such young participants than for older subjects.

4.2.1. Possible Signs of Expectations towards the Robot

- (a) Verifying expectations as a causal process and agency: This refers to the indicators of the children validating their initial beliefs about the robot.

  Observation 21.
  [A boy is selected to ask a question]
  Child 1: “Pepper-kun… Pepper-kun. Can you run fast?”
  Pepper: “I am not good at running.”
  [All the children are surprised]

  Observation 22. “It’s kind of nervous.”

  Observation 23. “It’s a robot.”

  Observation 24. “It can’t even say ‘Good morning’!”

  During the interaction, children commented on Pepper, performed different actions towards it and asked it different questions. The comments included voluntary remarks on the robot’s state of mind (Observation 22) and direct assumptions regarding Pepper’s identity (Observation 23) and “body” (“It’s not skin” from Observation 13). They also commented on its ability to use speech communication (Observation 24). These comments show children’s assumptions that Pepper must belong to some race or kind, being a non-human robot with no communication abilities but with some cognitive abilities. Children provoked the robot by claiming different things about it and checking how it would react. This in itself shows that they assumed a robot can react to things happening in its environment and can understand their words. This challenging behavior, following the simple conclusion that Pepper can speak the children’s language, may be an example of causal learning. Moreover, the distress connected to not knowing what to expect from the robot was compounded by the error behavior the robot was designed to perform. The children interpreted a temporary lack of movement in two ways: as something that happened to Pepper or as its decision to “freeze”. This again shows different assumptions about Pepper as a being.

- (b) Asking about origins: This focuses on the children’s questions about how the robot was created.

  Observation 25. “Where were you created?”

  Observation 26. “How were you made?”

  Observation 27. “Do you have parents, Pepper?”

  Observation 28. “Do you have mum and dad?”

  Children also made assumptions and tested them by asking about Pepper’s origins (created vs. born). This information seemed important to them, as the question appeared many times in different groups. Interestingly, children asked about Pepper’s parents more often than about its creators.

- (c) Children’s questions as a source of insights: This focuses on the children’s hypotheses regarding their ideas and knowledge of the robot.

  Observation 29.
  [Pepper encourages children to ask it questions. After a few general questions about its appearance:]
  Child 1: “Why do you have this tablet attached?”
  Children: “Right, why?”
  Child 2: “So you don’t get sick!”
  Child 3: “Why don’t you have hair?”
  [A few other children also repeat the last question, demanding the answer]

  Observation 30.
  Child: “Pepper, can you play with a ball?”

  Observation 31.
  [A Q&A session is in progress. Children speak over one another, often without giving the robot time to answer]
  Child 1: “Do you write something down?”
  Child 2: “Why do you have such a small mouth?”
  Child 3: “Where are your cameras?”
  Child 4: “How do you look inside?”
  Child 5: “In the forehead?” [laughter]
  Child 6: “Do you have a camera in your mouth too?”
  Child 7: “Can you fly?”

  Children asking questions is the most natural activity to observe for learning about their hypotheses. The ability to ask questions is a powerful tool that allows children to gather the information they need to learn about the world and solve problems in it [91]. In their questions, children focused on a set of human-like features which helped them to anthropomorphize the robot. Past research has shown that humans subconsciously anthropomorphize (ascribe human attributes to) and zoomorphize (ascribe animal attributes to) robots [94]. Some children, who lean towards transparency by asking de-anthropomorphizing questions, try to situate robots at the lower end of the human-likeness scale. Previous research has shown that transparency decreased the children’s humanlike perception of the robot in terms of animacy, anthropomorphism, social presence and perceived similarity [89]. In our research, children made assumptions regarding the robot’s movement abilities, mechanical equipment, gender, experience, knowledge, etc.

- (d) Robots can have preferences and relationships, and children hypothesize about the robot’s emotional life: This focuses on the children’s expectations towards the robot’s preferences and possible relationships with others.

  Observation 32.
  [After a series of questions about the robot’s mobility (in particular, whether it will ride around the room), a girl asks a question]
  Child: “Do you like me?”

  Observation 33.
  [A group of girls are standing together. One of them addresses the question to Pepper]
  Girl: “Whom of us do you like the most?”

  Observation 34. “Do you like to play?”

  Testing the robot’s ability to have preferences includes asking Pepper about a varied set of abstract and non-abstract concepts such as travelling, food or music. Children assume the robot can have preferences and ask it to share them; we suspect they want to know its preferences in order to build a better relationship and seek common experiences. They are curious whether a robot can engage in social relationships. For this, they ask if the robot likes playing, music, reading, travelling or walking around, and about its favorite food. They also ask if the robot likes the child who is asking and which child the robot likes the most.

  Observation 35.
  Child 1: “Why don’t you have a wife?”
  Child 2: “Or at least a friend!”

  Children expected Pepper to have some social relationships. They asked about its parents to understand its origins, and about its friends to understand its willingness to build new relationships. They even asked about its wife, which shows they assumed Pepper can build more sophisticated relationships. Research shows that social robots, which are meant to be perceived by children as social agents worth engaging in play [95], are more likely to be labeled a ‘friend’ than a ‘brother or sister’ or a ‘classmate’. In our research, the children may have assumed that they themselves were in some kind of relationship with Pepper. This is especially interesting as younger children are learning to regulate their emotions for building relationships [96]. As emotions provide the basic scaffolding for building human relationships, this behavior of children towards the robot shows that they presume Pepper can control and express emotions.

- (e) Human and non-human abilities: This focuses on the children’s comments related to the robot’s human and non-human capabilities.

  Observation 36. Child: [observing Pepper dancing] “This robot totally has an AI”

  Observation 37. Boy: “There is an AI in this robot probably.”

  Observation 38. Child: “It knows everything! More than human!”

  Observation 39. Child: “Can you help?”

  Observation 40. Child: “Who are you working with?”

  Observation 41. Child: “Are you a boy or a girl?”

  Human abilities refer to learning, experience, travelling and doing things that are close to us such as playing instruments, reading books, hiking, singing and playing. Children assume that the robot engages in similar activities such as eating sweets, attending kindergarten/school, peeing, cleaning, sleeping, daily activities, brushing teeth and having vacations. Importantly, they assume that the robot can help them (Observation 39); this quality of giving or being ready to give help is considered a human asset. They also make assumptions about the robot’s age, as they think Pepper should not be able to speak if it is two years old, and even ask it to say tongue twisters, which is one way children challenge one another. This shows that the children try to find the limit up to which the robot can be treated as a human regarding its abilities. Children assume Pepper should be able to button its clothes and are surprised when it turns out that it cannot. Children also explore the robot’s relationships, such as Pepper’s colleagues (Observation 40), which shows that children assume the robot to be the age of a working adult. In addition, simple questions about having hair, sweating, its birthday and not getting ill show expectations towards Pepper as a human being. They were, however, not sure what to expect (Observation 41).

  Non-human abilities attributed to the robot by the children include being able to change shape and to fly. Children assume that a robot can move but are not sure what to expect in terms of its range of moving abilities compared to humans, for example its ability to dance. It is worth mentioning that a question about the robot’s capability is followed by a question about how this ability might be achieved or executed.

  Questions about the mechanical aspects of the robot reveal expectations towards it as a non-human being. Children assume that the robot has an engine or batteries, that is, an external source of power. They ask what Pepper looks like inside, which suggests they think of it as a toy. In general, they are interested in what Pepper is built from. When it comes to appearance, expectations towards the robot include being made of metal, having a nose (or a bigger one), being a color other than white, or having wheels or two legs. They are also surprised by Pepper’s tablet and want to know its function. Autonomy is another characteristic of the robot that interested the children. They asked if someone was controlling or steering it, which clearly shows that they suspected Pepper to be a device or a toy rather than a human. This was further confirmed by the children asking the robot if it had any games on it (as on a mobile phone) and petting it.

- (f) Shifting approaches to the robot’s abilities: This focuses on how, in certain circumstances, children changed their perception of the robot or its behavior.

  Observation 42.
  Girl: “What kind of battery do you have?”
  [Children keep asking questions after the robot says ‘bye’ as if they didn’t notice the end of the interaction. Pepper is silent for a while]
  Boy 1: “It didn’t relax today.”
  Boy 2: “No, it went to bed!”

  Children’s approach towards Pepper as a human or a non-human was constantly changing as they were physically exploring it. For them, the robot might be perceived as a toy, a device, a machine, or a human in the same interaction. They asked in general about its skills, regardless of whether they were human characteristics or not.

- (g) Surprise: This focuses on the observations where children were astonished at the robot’s behavior when it was different from their original assumptions.

  Observation 43.
  [Throughout the interaction, children express an interest in the robot’s mobility. Finally, one of the surprised children addresses the issue directly to Pepper]
  Child: “You can’t walk?!”

  Surprise as an emotional reaction to a certain situation may be considered an indicator of an unmet expectation. Additionally, children express it freely by a loud tone of voice and by mimicking. A verification of their assumptions comes with an eye-opening experience of redefining their hypothesis and renewed attempts at exploration. For example, during our observation children strongly and repeatedly expressed their surprise about Pepper’s inability to walk (Observation 43). For researchers, surprise may serve as a sign that is followed by either disappointment or relief; this should be observed and used in future studies and robot design.

- (h) Challenging the robot: This focuses on those situations where the children asked for specific information or experience, trying to confront their expectations with reality.

  Observation 44.
  [One boy is showing the flexibility of his wrist. Another one is watching him, repeating the movement and shouting to Pepper]
  Boy 1: “Can you do it?”
  Pepper: “I think I cannot do it.”
  [After the robot’s statement, children keep demanding the action]
  Pepper: “I don’t have a ready program for that.”
  Boy 2: “Damn, what does it say?”

  Observation 45.
  Boy: [impatiently] “Can you even fight?”
  [Some children immediately decline vehemently]

  Challenging behaviors can be interpreted as a negative reaction to the robot’s lack of ability to do something. In our observations, children provoked the robot by laughing at it, calling it by its name or asking it to perform a specific movement. We consider such provocative behaviors to be expressions of expectations.

4.2.2. General Remarks on Expectations towards Robots

4.3. Interplay of Expectations and Trust

5. Conclusions

- In our study, children were able to express their expectations and beliefs freely. They asked questions and shared their insights voluntarily, directly and frequently, which resulted in a better understanding of the dynamics of interactions with the robot and provided a natural environment for establishing social relationships between the robot and the children.

- We gathered a variety of qualitative data on children’s interaction with the robot, thanks to the children being able to naturally express their needs and emotions during free play episodes.

- In contrast to dyadic settings (one child with one robot), our group setting allowed us to observe real-life CRI at multiple levels; for example, the role of the facilitating teacher and the impact of group dynamics on CRI.

- By conducting observations in the natural settings of pre-schoolers, we can learn about potential undesirable behaviors from the children (e.g., a lack of interest or engagement) and about potential safety hazards of the robot (e.g., a child being accidentally hurt by the robot after approaching it too closely). This allows us to design more congenial and safe CRI scenarios.

- We need to focus on children’s behaviors, including comments, challenging behaviors, actions performed towards the robot and the wide range of questions they ask the robot. These provide direct expressions of children’s trust and expectations towards the robot.

- We need to prepare appropriate scripts for Q&A sessions with the robot. Our study shows that the children are interested in the robot’s likes and dislikes, bodily functions and physical abilities. Thus, appropriate answers need to be provided for them in the conversation scripts given to the robot. Simply giving the robot some preferences regarding abstract concepts (e.g., assessing children’s ideas, cultural aspects, mathematics and art) and non-abstract concepts (e.g., favorite movies, countries, environment for interaction and the robot’s body parts) would help it connect with the children.

- It is helpful to choose a robot that can move around and naturally interact with children using physical gestures. We need to help children develop expectations towards the robot that will help them understand how it functions, become familiar with its capabilities and become aware of its limitations even before the interaction.

- During CRI, it is helpful to use only those activities with which the child is familiar, or that can be easily learned within the group. We should avoid overwhelming children with the situation, and it is crucial to have a trusted adult (a parent or a teacher) available to facilitate the interaction and comfort the child if needed.

6. Limitations

7. Future Research

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

- Su, H.; Qi, W.; Hu, Y.; Karimi, H.R.; Ferrigno, G.; De Momi, E. An Incremental Learning Framework for Human-like Redundancy Optimization of Anthropomorphic Manipulators. IEEE Trans. Ind. Inform. 2020.

- Su, H.; Hu, Y.; Karimi, H.R.; Knoll, A.; Ferrigno, G.; De Momi, E. Improved recurrent neural network-based manipulator control with remote center of motion constraints: Experimental results. Neural Netw. 2020, 131, 291–299.

- Loghmani, M.R.; Rovetta, S.; Venture, G. Emotional intelligence in robots: Recognizing human emotions from daily-life gestures. In Proceedings of the 2017 IEEE International Conference on Robotics and Automation (ICRA), Singapore, 29 May–3 June 2017; pp. 1677–1684.

- Fiorini, L.; Mancioppi, G.; Semeraro, F.; Fujita, H.; Cavallo, F. Unsupervised emotional state classification through physiological parameters for social robotics applications. Knowl. Based Syst. 2020, 190, 105217.

- Pattar, S.P.; Coronado, E.; Ardila, L.R.; Venture, G. Intention and Engagement Recognition for Personalized Human-Robot Interaction, an integrated and Deep Learning approach. In Proceedings of the 2019 IEEE 4th International Conference on Advanced Robotics and Mechatronics (ICARM), Osaka, Japan, 3–8 July 2019; pp. 93–98.

- Sünderhauf, N.; Brock, O.; Scheirer, W.; Hadsell, R.; Fox, D.; Leitner, J.; Upcroft, B.; Abbeel, P.; Burgard, W.; Milford, M.; et al. The limits and potentials of deep learning for robotics. Int. J. Robot. Res. 2018, 37, 405–420.

- Károly, A.I.; Galambos, P.; Kuti, J.; Rudas, I.J. Deep Learning in Robotics: Survey on Model Structures and Training Strategies. IEEE Trans. Syst. Man Cybern. Syst. 2021, 51, 266–279.

- Wagner, A.R. The Role of Trust and Relationships in Human-Robot Social Interaction. Ph.D. Thesis, Georgia Institute of Technology, Atlanta, GA, USA, 2009.

- Schaefer, K. The Perception and Measurement of Human-Robot Trust; University of Central Florida: Orlando, FL, USA, 2013.

- Spence, P.R.; Westerman, D.; Edwards, C.; Edwards, A. Welcoming our robot overlords: Initial expectations about interaction with a robot. Commun. Res. Rep. 2014, 31, 272–280.

- Kwon, M.; Jung, M.F.; Knepper, R.A. Human expectations of social robots. In Proceedings of the 2016 11th ACM/IEEE International Conference on Human-Robot Interaction (HRI), Christchurch, New Zealand, 7–10 March 2016; pp. 463–464. [Google Scholar]

- Jokinen, K.; Wilcock, G. Expectations and first experience with a social robot. In Proceedings of the 5th International Conference on Human Agent Interaction, Bielefeld, Germany, 17–20 October 2017; pp. 511–515. [Google Scholar]

- Di Dio, C.; Manzi, F.; Peretti, G.; Cangelosi, A.; Harris, P.L.; Massaro, D.; Marchetti, A. Shall I Trust You? From Child–Robot Interaction to Trusting Relationships. Front. Psychol. 2020, 11, 469. [Google Scholar] [CrossRef]

- van Straten, C.L.; Peter, J.; Kühne, R.; de Jong, C.; Barco, A. Technological and interpersonal trust in child-robot interaction: An exploratory study. In Proceedings of the 6th International Conference on Human-Agent Interaction, Southampton, UK, 15–18 December 2018; pp. 253–259. [Google Scholar]

- Ligthart, M.; Henkemans, O.B.; Hindriks, K.; Neerincx, M.A. Expectation management in child-robot interaction. In Proceedings of the 2017 26th IEEE International Symposium on Robot and Human Interactive Communication (RO-MAN), Lisbon, Portugal, 28–31 August 2017; pp. 916–921. [Google Scholar]

- Yadollahi, E.; Johal, W.; Dias, J.; Dillenbourg, P.; Paiva, A. Studying the Effect of Robot Frustration on Children’s Change of Perspective. In Proceedings of the 2019 8th International Conference on Affective Computing and Intelligent Interaction Workshops and Demos (ACIIW), Cambridge, UK, 3–6 September 2019; pp. 381–387. [Google Scholar]

- Charisi, V.; Davison, D.; Reidsma, D.; Evers, V. Evaluation methods for user-centered child-robot interaction. In Proceedings of the 2016 25th IEEE International Symposium on Robot and Human Interactive Communication (RO-MAN), New York, NY, USA, 22–27 August 2016; pp. 545–550. [Google Scholar] [CrossRef]

- Fior, M.; Nugent, S.; Beran, T.N.; Ramirez-Serrano, A.; Kuzyk, R. Children’s Relationships with Robots: Robot Is Child’s New Friend. J. Phys. Agents 2010, 4, 9–17. [Google Scholar] [CrossRef]

- Cameron, D.; Fernando, S.; Millings, A.; Moore, R.; Sharkey, A.; Prescott, T. Children’s age influences their perceptions of a humanoid robot as being like a person or machine. In Conference on Biomimetic and Biohybrid Systems; Springer: Barcelona, Spain, 2015; pp. 348–353. [Google Scholar]

- Sullivan, A.; Bers, M.U. Robotics in the early childhood classroom: Learning outcomes from an 8-week robotics curriculum in pre-kindergarten through second grade. Int. J. Technol. Des. Educ. 2016, 26, 3–20. [Google Scholar] [CrossRef]

- van Straten, C.L.; Peter, J.; Kühne, R. Child–robot relationship formation: A narrative review of empirical research. Int. J. Soc. Robot. 2020, 12, 325–344. [Google Scholar] [CrossRef]

- Tonkin, M.; Vitale, J.; Herse, S.; Williams, M.A.; Judge, W.; Wang, X. Design Methodology for the UX of HRI: A Field Study of a Commercial Social Robot at an Airport. In Proceedings of the 2018 ACM/IEEE International Conference on Human-Robot Interaction (HRI), Chicago, IL, USA, 5–8 March 2018; HRI ’18. Association for Computing Machinery: New York, NY, USA, 2018; pp. 407–415. [Google Scholar] [CrossRef]

- de Graaf, M.M.A.; Allouch, S.B. Expectation Setting and Personality Attribution in HRI. In Proceedings of the 2014 9th ACM/IEEE International Conference on Human-Robot Interaction (HRI), Bielefeld, Germany, 3–6 March 2014; pp. 144–145. [Google Scholar]

- Walters, M.L.; Syrdal, D.S.; Dautenhahn, K.; Te Boekhorst, R.; Koay, K.L. Avoiding the uncanny valley: Robot appearance, personality and consistency of behavior in an attention-seeking home scenario for a robot companion. Auton. Robot. 2008, 24, 159–178. [Google Scholar] [CrossRef]

- Hancock, P.A.; Billings, D.R.; Schaefer, K.E. Can you trust your robot? Ergon. Des. 2011, 19, 24–29. [Google Scholar] [CrossRef]

- Szcześniak, M.; Colaço, M.; Rondón, G. Development of interpersonal trust among children and adolescents. Pol. Psychol. Bull. 2012, 43, 50–58. [Google Scholar] [CrossRef]

- Ullman, D.; Malle, B.F. What does it mean to trust a robot? Steps toward a multidimensional measure of trust. In Proceedings of the Companion of the 2018 ACM/IEEE International Conference on Human-Robot Interaction (HRI), Chicago, IL, USA, 5–8 March 2018; pp. 263–264. [Google Scholar]

- Kok, B.C.; Soh, H. Trust in robots: Challenges and opportunities. Curr. Robot. Rep. 2020, 1, 297–309. [Google Scholar] [CrossRef]

- Baker, A.L.; Phillips, E.K.; Ullman, D.; Keebler, J.R. Toward an understanding of trust repair in human-robot interaction: Current research and future directions. ACM Trans. Interact. Intell. Syst. 2018, 8, 1–30. [Google Scholar] [CrossRef]

- Lewis, M.; Sycara, K.; Walker, P. The role of trust in human-robot interaction. In Foundations of Trusted Autonomy; Springer: Cham, Switzerland, 2018; pp. 135–159. [Google Scholar]

- Vinanzi, S.; Patacchiola, M.; Chella, A.; Cangelosi, A. Would a robot trust you? Developmental robotics model of trust and theory of mind. Philos. Trans. R. Soc. B 2019, 374, 20180032. [Google Scholar] [CrossRef]

- Azevedo, C.R.; Raizer, K.; Souza, R. A vision for human-machine mutual understanding, trust establishment, and collaboration. In Proceedings of the 2017 IEEE Conference on Cognitive and Computational Aspects of Situation Management (CogSIMA), Savannah, GA, USA, 27–31 March 2017; pp. 1–3. [Google Scholar]

- Jian, J.Y.; Bisantz, A.M.; Drury, C.G. Foundations for an empirically determined scale of trust in automated systems. Int. J. Cogn. Ergon. 2000, 4, 53–71. [Google Scholar] [CrossRef]

- Möllering, G. The nature of trust: From Georg Simmel to a theory of expectation, interpretation and suspension. Sociology 2001, 35, 403–420. [Google Scholar]

- Bakała, E.; Visca, J.; Tejera, G.; Seré, A.; Amorin, G.; Gómez-Sena, L. Designing child-robot interaction with Robotito. In Proceedings of the 2019 28th IEEE International Conference on Robot and Human Interactive Communication (RO-MAN), New Delhi, India, 14–18 October 2019; pp. 1–6. [Google Scholar] [CrossRef]

- Zguda, P.; Kołota, A.; Jarosz, M.; Sondej, F.; Izui, T.; Dziok, M.; Belowska, A.; Jędras, W.; Venture, G.; Śnieżynski, B.; et al. On the Role of Trust in Child-Robot Interaction. In Proceedings of the 2019 28th IEEE International Conference on Robot and Human Interactive Communication (RO-MAN), New Delhi, India, 14–18 October 2019; pp. 1–6. [Google Scholar] [CrossRef]

- De Graaf, M.M.; Allouch, S.B. Exploring influencing variables for the acceptance of social robots. Robot. Auton. Syst. 2013, 61, 1476–1486. [Google Scholar] [CrossRef]

- Sanders, E.B.N.; Stappers, P.J. Co-creation and the new landscapes of design. Co-Design 2008, 4, 5–18. [Google Scholar] [CrossRef]

- Woods, S. Exploring the design space of robots: Children’s perspectives. Interact. Comput. 2006, 18, 1390–1418. [Google Scholar]

- Geiskkovitch, D.Y.; Thiessen, R.; Young, J.E.; Glenwright, M.R. What? That’s Not a Chair!: How Robot Informational Errors Affect Children’s Trust Towards Robots. In Proceedings of the 2019 14th ACM/IEEE International Conference on Human-Robot Interaction (HRI), Daegu, Korea, 11–14 March 2019; pp. 48–56. [Google Scholar] [CrossRef]

- Punch, S. Research with children: The same or different from research with adults? Childhood 2002, 9, 321–341. [Google Scholar] [CrossRef]

- Salem, M.; Lakatos, G.; Amirabdollahian, F.; Dautenhahn, K. Would You Trust a (Faulty) Robot? Effects of Error, Task Type and Personality on Human-Robot Cooperation and Trust. In Proceedings of the 2015 10th ACM/IEEE International Conference on Human-Robot Interaction (HRI), Portland, OR, USA, 2–5 March 2015; pp. 1–8. [Google Scholar]

- Stower, R. The Role of Trust and Social Behaviours in Children’s Learning from Social Robots. In Proceedings of the 2019 8th International Conference on Affective Computing and Intelligent Interaction Workshops and Demos (ACIIW), Cambridge, UK, 3–6 September 2019; pp. 1–5. [Google Scholar] [CrossRef]

- de Jong, C.; Kühne, R.; Peter, J.; Straten, C.L.V.; Barco, A. What Do Children Want from a Social Robot? Toward Gratifications Measures for Child-Robot Interaction. In Proceedings of the 2019 28th IEEE International Conference on Robot and Human Interactive Communication (RO-MAN), New Delhi, India, 14–18 October 2019; pp. 1–8. [Google Scholar] [CrossRef]

- Paepcke, S.; Takayama, L. Judging a bot by its cover: An experiment on expectation setting for personal robots. In Proceedings of the 2010 5th ACM/IEEE International Conference on Human-Robot Interaction (HRI), Osaka, Japan, 2–5 March 2010; pp. 45–52. [Google Scholar] [CrossRef]

- Jung, M.; Hinds, P. Robots in the Wild: A Time for More Robust Theories of Human-Robot Interaction. ACM Trans. Hum. Robot. Interact. (THRI) 2018, 7, 2–5. [Google Scholar] [CrossRef]

- Jacobs, A.; Elprama, S.A.; Jewell, C.I. Evaluating Human-Robot Interaction with Ethnography. In Human-Robot Interaction; Springer: Berlin/Heidelberg, Germany, 2020; pp. 269–286. [Google Scholar]

- Hansen, A.K.; Nilsson, J.; Jochum, E.A.; Herath, D. On the Importance of Posture and the Interaction Environment: Exploring Agency, Animacy and Presence in the Lab vs. Wild using Mixed-Methods. In Proceedings of the Companion of the 2020 ACM/IEEE International Conference on Human-Robot Interaction, Cambridge, UK, 24–26 March 2020; pp. 227–229. [Google Scholar]

- Savery, R.; Rose, R.; Weinberg, G. Establishing Human-Robot Trust through Music-Driven Robotic Emotion Prosody and Gesture. arXiv 2020, arXiv:cs.HC/2001.05863. [Google Scholar]

- Yogman, M.; Garner, A.; Hutchinson, J.; Hirsh-Pasek, K.; Golinkoff, R.M.; Committee on Psychosocial Aspects of Child and Family Health; AAP Council on Communications and Media. The power of play: A pediatric role in enhancing development in young children. Pediatrics 2018, 142, e20182058. [Google Scholar] [CrossRef]

- Cordoni, G.; Demuru, E.; Ceccarelli, E.; Palagi, E. Play, aggressive conflict and reconciliation in pre-school children: What matters? Behaviour 2016, 153, 1075–1102. [Google Scholar] [CrossRef]

- Balph, D.F.; Balph, M.H. On the psychology of watching birds: The problem of observer-expectancy bias. Auk 1983, 100, 755–757. [Google Scholar] [CrossRef]

- Coeckelbergh, M.; Pop, C.; Simut, R.; Peca, A.; Pintea, S.; David, D.; Vanderborght, B. A survey of expectations about the role of robots in robot-assisted therapy for children with ASD: Ethical acceptability, trust, sociability, appearance, and attachment. Sci. Eng. Ethics 2016, 22, 47–65. [Google Scholar] [CrossRef]

- Rossi, A.; Holthaus, P.; Dautenhahn, K.; Koay, K.L.; Walters, M.L. Getting to Know Pepper: Effects of People’s Awareness of a Robot’s Capabilities on Their Trust in the Robot. In Proceedings of the 6th International Conference on Human-Agent Interaction, Southampton, UK, 15–18 December 2018; HAI ’18. Association for Computing Machinery: New York, NY, USA, 2018; pp. 246–252. [Google Scholar] [CrossRef]

- Pandey, A.K.; Gelin, R. A mass-produced sociable humanoid robot: Pepper: The first machine of its kind. IEEE Robot. Autom. Mag. 2018, 25, 40–48. [Google Scholar] [CrossRef]

- Lee, H.R.; Cheon, E.; de Graaf, M.; Alves-Oliveira, P.; Zaga, C.; Young, J. Robots for Social Good: Exploring Critical Design for HRI. In Proceedings of the 2019 14th ACM/IEEE International Conference on Human-Robot Interaction (HRI), Daegu, Korea, 11–14 March 2019; pp. 681–682. [Google Scholar] [CrossRef]

- Sabanovic, S.; Michalowski, M.P.; Simmons, R. Robots in the wild: Observing human-robot social interaction outside the lab. In Proceedings of the 9th IEEE International Workshop on Advanced Motion Control, Istanbul, Turkey, 27–29 March 2006; pp. 596–601. [Google Scholar]

- Venture, G.; Indurkhya, B.; Izui, T. Dance with me! Child-robot interaction in the wild. In International Conference on Social Robotics; Springer: Berlin/Heidelberg, Germany, 2017; pp. 375–382. [Google Scholar]

- Salter, T.; Michaud, F.; Larouche, H. How wild is wild? A taxonomy to characterize the ‘wildness’ of child-robot interaction. Int. J. Soc. Robot. 2010, 2, 405–415. [Google Scholar]

- Shiomi, M.; Kanda, T.; Howley, I.; Hayashi, K.; Hagita, N. Can a social robot stimulate science curiosity in classrooms? Int. J. Soc. Robot. 2015, 7, 641–652. [Google Scholar] [CrossRef]

- Brain Balance. Normal Attention Span Expectations by Age. Available online: https://blog.brainbalancecenters.com/normal-attention-span-expectations-by-age (accessed on 31 January 2021).

- Ruff, H.A.; Lawson, K.R. Development of sustained, focused attention in young children during free play. Dev. Psychol. 1990, 26, 85. [Google Scholar] [CrossRef]

- Lemaignan, S.; Edmunds, C.E.; Senft, E.; Belpaeme, T. The PInSoRo dataset: Supporting the data-driven study of child-child and child-robot social dynamics. PLoS ONE 2018, 13, e0205999. [Google Scholar] [CrossRef]

- de Jong, C.; van Straten, C.; Peter, J.; Kühne, R.; Barco, A. Children and social robots: Inventory of measures for CRI research. In Proceedings of the 2018 IEEE Workshop on Advanced Robotics and its Social Impacts (ARSO), Genova, Italy, 27–28 September 2018; pp. 44–45. [Google Scholar]

- Tanevska, A.; Ackovska, N. Advantages of using the Wizard-of-Oz approach in assistive robotics for autistic children. In Proceedings of the 13th International Conference for Informatics and Information Technology (CiiT), Bitola, Macedonia, 22–24 April 2016. [Google Scholar]

- Charisi, V.; Gomez, E.; Mier, G.; Merino, L.; Gomez, R. Child-Robot Collaborative Problem-Solving and the Importance of Child’s Voluntary Interaction: A Developmental Perspective. Front. Robot. AI 2020, 7, 15. [Google Scholar] [CrossRef]

- Ahmad, M.; Mubin, O.; Orlando, J. A systematic review of adaptivity in human-robot interaction. Multimodal Technol. Interact. 2017, 1, 14. [Google Scholar] [CrossRef]

- Bartneck, C.; Nomura, T.; Kanda, T.; Suzuki, T.; Kato, K. Cultural Differences in Attitudes towards Robots; AISB: Budapest, Hungary, 2005. [Google Scholar]

- Wang, N.; Pynadath, D.V.; Rovira, E.; Barnes, M.J.; Hill, S.G. Is it my looks? Or something I said? The impact of explanations, embodiment, and expectations on trust and performance in human-robot teams. In International Conference on Persuasive Technology; Springer: Berlin/Heidelberg, Germany, 2018; pp. 56–69. [Google Scholar]

- Gompei, T.; Umemuro, H. Factors and development of cognitive and affective trust on social robots. In International Conference on Social Robotics; Springer: Berlin/Heidelberg, Germany, 2018; pp. 45–54. [Google Scholar]

- Van Straten, C.L.; Kühne, R.; Peter, J.; de Jong, C.; Barco, A. Closeness, trust, and perceived social support in child-robot relationship formation: Development and validation of three self-report scales. Interact. Stud. 2020, 21, 57–84. [Google Scholar] [CrossRef]

- Haring, K.S.; Watanabe, K.; Silvera-Tawil, D.; Velonaki, M. Expectations towards two robots with different interactive abilities. In Proceedings of the 2016 11th ACM/IEEE International Conference on Human-Robot Interaction (HRI), Christchurch, New Zealand, 7–10 March 2016; pp. 433–434. [Google Scholar]

- Johnson, V.; Hart, R.; Colwell, J. Steps for Engaging Young Children in Research: The Toolkit; Education Research Centre, University of Brighton: Brighton, UK, 2014. [Google Scholar]

- Andriella, A.; Torras, C.; Alenya, G. Short-term human-robot interaction adaptability in real-world environments. Int. J. Soc. Robot. 2019, 12, 639–657. [Google Scholar] [CrossRef]

- Björling, E.A.; Rose, E.; Ren, R. Teen-robot interaction: A pilot study of engagement with a low-fidelity prototype. In Proceedings of the Companion of the 2018 ACM/IEEE International Conference on Human-Robot Interaction, Chicago, IL, USA, 5–8 March 2018; pp. 69–70. [Google Scholar]

- Coninx, A.; Baxter, P.; Oleari, E.; Bellini, S.; Bierman, B.; Henkemans, O.; Cañamero, L.; Cosi, P.; Enescu, V.; Espinoza, R.; et al. Towards Long-Term Social Child-Robot Interaction: Using Multi-Activity Switching to Engage Young Users. J. Hum. Robot. Interact. 2016, 5, 32–67. [Google Scholar] [CrossRef]

- Leite, I.; McCoy, M.; Lohani, M.; Ullman, D.; Salomons, N.; Stokes, C.; Rivers, S.; Scassellati, B. Emotional storytelling in the classroom: Individual versus group interaction between children and robots. In Proceedings of the 2015 10th ACM/IEEE International Conference on Human-Robot Interaction (HRI), Portland, OR, USA, 2–5 March 2015; pp. 75–82. [Google Scholar]

- Green, A. Education robots offer leg-up to disadvantaged students. Financial Times 2020. Available online: https://www.ft.com/content/d8b3e518-3e0a-11ea-b84f-a62c46f39bc2 (accessed on 31 January 2021).

- Simpson, J.A. Psychological foundations of trust. Curr. Dir. Psychol. Sci. 2007, 16, 264–268. [Google Scholar] [CrossRef]

- Meghdari, A.; Alemi, M. Recent Advances in Social & Cognitive Robotics and Imminent Ethical Challenges (August 22, 2018). In Proceedings of the 10th International RAIS Conference on Social Sciences and Humanities, Princeton, NJ, USA, 22–23 August 2018. [Google Scholar]

- Kirstein, F.; Risager, R.V. Social robots in educational institutions they came to stay: Introducing, evaluating, and securing social robots in daily education. In Proceedings of the 2016 11th ACM/IEEE International Conference on Human-Robot Interaction (HRI), Christchurch, New Zealand, 7–10 March 2016; pp. 453–454. [Google Scholar]

- Bethel, C.L.; Stevenson, M.R.; Scassellati, B. Secret-sharing: Interactions between a child, robot, and adult. In Proceedings of the 2011 IEEE International Conference on Systems, Man, and Cybernetics, Anchorage, AK, USA, 9–12 October 2011; pp. 2489–2494. [Google Scholar]

- Zacharaki, A.; Kostavelis, I.; Gasteratos, A.; Dokas, I. Safety bounds in human robot interaction: A survey. Saf. Sci. 2020, 127, 104667. [Google Scholar] [CrossRef]

- Lasota, P.A.; Fong, T.; Shah, J.A. A Survey of Methods for Safe Human-Robot Interaction; Now Publishers: Breda, The Netherlands, 2017. [Google Scholar]

- Backman, K.; Kyngäs, H.A. Challenges of the grounded theory approach to a novice researcher. Nurs. Health Sci. 1999, 1, 147–153. [Google Scholar] [CrossRef] [PubMed]

- Leite, I.; Lehman, J.F. The robot who knew too much: Toward understanding the privacy/personalization trade-off in child-robot conversation. In Proceedings of the 15th International Conference on Interaction Design and Children, Manchester, UK, 21–24 June 2016; pp. 379–387. [Google Scholar]

- Brink, K.A.; Wellman, H.M. Robot teachers for children? Young children trust robots depending on their perceived accuracy and agency. Dev. Psychol. 2020, 56, 1268. [Google Scholar] [CrossRef] [PubMed]

- Shahid, S.; Krahmer, E.; Swerts, M. Child-robot interaction: Playing alone or together? In CHI’11 Extended Abstracts on Human Factors in Computing Systems; Association for Computing Machinery: New York, NY, USA, 2011; pp. 1399–1404. [Google Scholar]

- Straten, C.L.v.; Peter, J.; Kühne, R.; Barco, A. Transparency about a robot’s lack of human psychological capacities: Effects on child-robot perception and relationship formation. ACM Trans. Hum. Robot Interact. 2020, 9, 1–22. [Google Scholar] [CrossRef]

- Damiano, L.; Dumouchel, P. Anthropomorphism in human–robot co-evolution. Front. Psychol. 2018, 9, 468. [Google Scholar] [CrossRef]

- Chouinard, M.M.; Harris, P.L.; Maratsos, M.P. Children’s questions: A mechanism for cognitive development. Monogr. Soc. Res. Child Dev. 2007, 72, i-129. [Google Scholar]

- Veríssimo, M.; Torres, N.; Silva, F.; Fernandes, C.; Vaughn, B.E.; Santos, A.J. Children’s representations of attachment and positive teacher–child relationships. Front. Psychol. 2017, 8, 2270. [Google Scholar] [CrossRef]

- Legare, C.H. Exploring explanation: Explaining inconsistent evidence informs exploratory, hypothesis-testing behavior in young children. Child Dev. 2012, 83, 173–185. [Google Scholar] [CrossRef]

- Fong, T.; Nourbakhsh, I.; Dautenhahn, K. A survey of socially interactive robots. Robot. Auton. Syst. 2003, 42, 143–166. [Google Scholar] [CrossRef]

- Belpaeme, T.; Baxter, P.; Read, R.; Wood, R.; Cuayáhuitl, H.; Kiefer, B.; Racioppa, S.; Kruijff-Korbayová, I.; Athanasopoulos, G.; Enescu, V.; et al. Multimodal child-robot interaction: Building social bonds. J. Hum. Robot Interact. 2012, 1, 33–53. [Google Scholar] [CrossRef]

- Denham, S.A. Dealing with feelings: How children negotiate the worlds of emotions and social relationships. Cogn. Brain Behav. 2007, 11, 1. [Google Scholar]

- Tatlow-Golden, M.; Guerin, S. ‘My favourite things to do’ and ‘my favourite people’: Exploring salient aspects of children’s self-concept. Childhood 2010, 17, 545–562. [Google Scholar] [CrossRef]

- Shutts, K.; Roben, C.K.P.; Spelke, E.S. Children’s use of social categories in thinking about people and social relationships. J. Cogn. Dev. 2013, 14, 35–62. [Google Scholar] [CrossRef] [PubMed]

- Kushniruk, A.W.; Borycki, E.M. Development of a Video Coding Scheme for Analyzing the Usability and Usefulness of Health Information Systems; CSHI: Boston, MA, USA, 2015; pp. 68–73. [Google Scholar]

- Guneysu, A.; Karatas, I.; Asık, O.; Indurkhya, B. Attitudes of children towards dancing robot nao: A kindergarden observation. In Proceedings of the International Conference on Social Robotics (ICSR 2013): Workshop on Taking Care of Each Other: Synchronisation and Reciprocity for Social Companion Robots, Bristol, UK, 27–29 October 2013. [Google Scholar]

- Darling, K. Children Beating Up Robot Inspires New Escape Maneuver System. IEEE Spectrum, August 2015. Available online: https://spectrum.ieee.org/automaton/robotics/artificial-intelligence/children-beating-up-robot (accessed on 31 January 2021).

- Aaltonen, I.; Arvola, A.; Heikkilä, P.; Lammi, H. Hello Pepper, may I tickle you? Children’s and adults’ responses to an entertainment robot at a shopping mall. In Proceedings of the Companion of the 2017 ACM/IEEE International Conference on Human-Robot Interaction, Vienna, Austria, 6–9 March 2017; pp. 53–54. [Google Scholar]

- Tanaka, F.; Movellan, J.R.; Fortenberry, B.; Aisaka, K. Daily HRI evaluation at a classroom environment: Reports from dance interaction experiments. In Proceedings of the 1st ACM SIGCHI/SIGART Conference on Human-Robot Interaction, Salt Lake City, UT, USA, 2–3 March 2006; pp. 3–9. [Google Scholar]

- Dondrup, C.; Baillie, L.; Broz, F.; Lohan, K. How can we transition from lab to the real world with our HCI and HRI setups? In Proceedings of the 4th Workshop on Public Space Human-Robot Interaction (PubRob), Barcelona, Spain, 3 September 2018. [Google Scholar]

- Tolksdorf, N.F.; Siebert, S.; Zorn, I.; Horwath, I.; Rohlfing, K.J. Ethical Considerations of Applying Robots in Kindergarten Settings: Towards an Approach from a Macroperspective. Int. J. Soc. Robot. 2020, 1–12. [Google Scholar] [CrossRef]

Polish Participants

| Group | Size of the Group | Gender (F/M) | Age of Participants (years) |
|---|---|---|---|
| 1 | 20 | 8/12 | 4–6 |
| 2 | 20 | 10/10 | 5–6 |
| 3 | 22 | 11/11 | 5–6 |
| 4 | 20 | 8/12 | 5–7 |
| 5 | 23 | 11/12 | 5–7 |

Japanese Participants

| Group | Size of the Group | Gender (F/M) | Age of Participants (years) |
|---|---|---|---|
| 1 | 20 | 7/13 | 4–5 |
| 2 | 19 | 5/14 | 5–6 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Zguda, P.; Kołota, A.; Venture, G.; Sniezynski, B.; Indurkhya, B. Exploring the Role of Trust and Expectations in CRI Using In-the-Wild Studies. Electronics 2021, 10, 347. https://doi.org/10.3390/electronics10030347
