Abstract

A set of efficient algorithmic solutions suitable for the fully parallel hardware implementation of short-length circular convolution cores is proposed. The advantage of the presented algorithms is that they require significantly fewer multiplications than the naive method of implementing this operation. During the synthesis of the presented algorithms, the matrix notation of the cyclic convolution operation was used, which made it possible to represent this operation as a matrix–vector product. The fact that the matrix multiplicand is a circulant matrix allows its effective factorization, which reduces the number of multiplications needed to calculate such a product. The proposed algorithms are oriented towards a completely parallel hardware implementation, but compared with a naive completely parallel implementation, they require significantly fewer hardwired multipliers. Since a hardwired multiplier occupies a much larger area on a VLSI chip and consumes more power than a hardwired adder, the proposed solutions are resource efficient and energy efficient in terms of their hardware implementation. We considered circular convolutions for sequences of lengths 2, 3, 4, 5, 6, 7, 8, and 9.

1. Introduction

Digital convolution is used in various applications of digital signal and image processing. Its most interesting areas of application are wireless communication and artificial neural networks [1,2,3,4,5]. The general principles of developing convolution algorithms were described in [6,7,8,9,10,11,12]. Various algorithmic solutions have been proposed to speed up the computation of circular convolution [7,8,9,10,11,13,14,15,16]. The most common approach to efficiently computing the circular convolution is to use the Fast Fourier Transform (FFT) algorithm or one of a number of other discrete orthogonal transformations [17,18,19,20]. There are also known methods for implementing discrete orthogonal transformations using circular convolution [20,21,22]. FFT-based convolution relies on the fact that convolution can be performed as simple multiplication in the frequency domain [23]. The FFT-based approach to computing circular convolution is traditionally used for long sequences. However, in many practical applications, both convolved sequences are relatively short. Examples include algorithms for calculating short linear convolutions, as well as the overlap-save and overlap-add methods [24,25,26]. These methods split a long data sequence into small segments, calculate short cyclic convolutions of these segments with the impulse response coefficients of a Finite Impulse Response (FIR) filter, and then combine the short convolutions into a single whole.

To date, many algorithmic solutions have been developed that compute the cyclic convolution in the time domain [7,8,9,10,21,27,28,29]. In the cited publications, methods for calculating short convolutions were presented either as a set of arithmetic relations or as a set of matrix–vector products. Such descriptions give little insight into how the processor cores intended to implement the convolution operation should be organized. The solutions presented in the literature do not give a complete picture of the structural organization of such cores, if only because they (except for the cases N = 2 and N = 3) do not show the corresponding signal flow graphs. The absence of signal flow graphs also makes it difficult to assess how well the known solutions lend themselves to parallel implementation.

Therefore, in this paper, we propose a set of algorithmic solutions for the circular convolution of short sequences of length N = 2 to 9.

2. Preliminary Remarks

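Let $\{h_m\}$ and $\{x_m\}$, $m = 0, 1, \ldots, N-1$, be two N-point sequences. Their circular convolution is the sequence $\{y_n\}$, $n = 0, 1, \ldots, N-1$, defined by [6]:

$$y_n = \sum_{m=0}^{N-1} h_{(n-m) \bmod N}\, x_m, \qquad n = 0, 1, \ldots, N-1. \qquad (1)$$

Usually, the elements of one of the convolved sequences are constants. For definiteness, we assume that these are the elements of the sequence $\{h_m\}$.

Because the sequences $\{x_m\}$ and $\{h_m\}$ are finite in length, their circular convolution (1) can also be represented as a matrix–vector product:

$$\mathbf{Y}_{N\times1} = \mathbf{H}_N \mathbf{X}_{N\times1}, \qquad (2)$$

where:

$$\mathbf{H}_N = \begin{bmatrix} h_0 & h_{N-1} & \cdots & h_1 \\ h_1 & h_0 & \cdots & h_2 \\ \vdots & \vdots & \ddots & \vdots \\ h_{N-1} & h_{N-2} & \cdots & h_0 \end{bmatrix}, \quad \mathbf{X}_{N\times1} = [x_0, x_1, \ldots, x_{N-1}]^{T}, \quad \mathbf{Y}_{N\times1} = [y_0, y_1, \ldots, y_{N-1}]^{T}. \qquad (3)$$

In the following, we assume that $\mathbf{X}_{N\times1}$ is the input data vector, $\mathbf{Y}_{N\times1}$ is the output data vector, and $\mathbf{H}_{N\times1} = [h_0, h_1, \ldots, h_{N-1}]^{T}$ is the vector containing constants.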

Calculating (2) directly requires $N^2$ multiplications and $N(N-1)$ additions. Consequently, a completely parallel hardware implementation of the circular convolution requires $N^2$ multipliers and N N-input adders. Since the multiplier is a very cumbersome device and, when implemented in hardware, requires much more hardware resources than the adder, minimizing the number of multipliers required for the fully parallel implementation of algorithms is an important task.
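The direct evaluation described above can be sketched in a few lines of Python. This is only an illustrative model, not a hardware description: the function names `circulant` and `circular_convolution_naive` are hypothetical, and the circulant layout (first column equal to the constant sequence) is an assumption consistent with the usual definition of circular convolution. The operation counters confirm the quadratic growth in multiplications.

```python
def circulant(h):
    """Return the N x N circulant matrix whose first column is h (assumed layout)."""
    n = len(h)
    return [[h[(row - col) % n] for col in range(n)] for row in range(n)]


def circular_convolution_naive(h, x):
    """Evaluate y = H x directly and count the arithmetic operations used."""
    n = len(h)
    if len(x) != n:
        raise ValueError("h and x must have the same length")
    y, multiplications, additions = [], 0, 0
    for row in circulant(h):
        products = [c * v for c, v in zip(row, x)]  # N multiplications per output
        multiplications += n
        acc = products[0]
        for p in products[1:]:                      # N - 1 additions per output
            acc += p
            additions += 1
        y.append(acc)
    return y, multiplications, additions


y, mults, adds = circular_convolution_naive([1, 2, 3, 4], [5, 6, 7, 8])
print(y, mults, adds)  # [66, 68, 66, 60] 16 12
```

For N = 4 the sketch uses 16 multiplications and 12 additions, that is, N² and N(N − 1).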

Thus, taking into account the above, the purpose of this article is to develop and describe fully parallel resource-efficient algorithms for N = 2, 3, 4, 5, 6, 7, 8, and 9.

3. Algorithms for Short-Length Circular Convolution

3.1. Circular Convolution for N = 2

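Let $\mathbf{X}_{2\times1} = [x_0, x_1]^{T}$ and $\mathbf{H}_{2\times1} = [h_0, h_1]^{T}$ be two-dimensional data vectors being convolved and $\mathbf{Y}_{2\times1} = [y_0, y_1]^{T}$ be an output vector representing a circular convolution. The task is reduced to calculating the following product:

$$\mathbf{Y}_{2\times1} = \mathbf{H}_2 \mathbf{X}_{2\times1}, \qquad (4)$$

where:

$$\mathbf{H}_2 = \begin{bmatrix} h_0 & h_1 \\ h_1 & h_0 \end{bmatrix}.$$

Calculating (4) directly requires four multiplications and two additions. It is easy to see that the matrix $\mathbf{H}_2$ has a specific structure: it is circulant. Exploiting this structure makes it possible to reduce the number of multiplications in the calculation of the two-point circular convolution [7].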

The optimized computational procedure for computing the two-point circular convolution is as follows:

where:

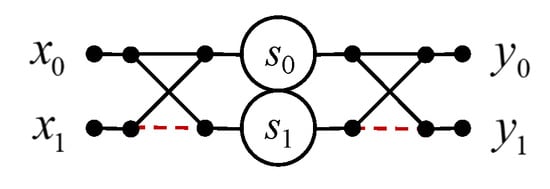

Figure 1 shows a signal flow graph for the proposed algorithm, which also provides a simplified algorithmic structure of a fully parallel processing core for resource-efficient implementation of the two-point circular convolution. All signal flow graphs are oriented from left to right. Straight lines denote the data circuits. Circles denote hardwired multipliers by the constant inscribed in the circle. Points where lines converge denote adders, and dotted lines indicate the sign-change data circuits (datapaths with multiplication by −1).

Figure 1.

Algorithmic structure of the processing core for the computation of the 2-point circular convolution.

Therefore, it only takes two multiplications and four additions to compute the two-point circular convolution. As for the arithmetic blocks, for a completely parallel hardware implementation of the processor core to compute the two-point convolution, you need two multipliers and four two-input adders, instead of four multipliers and two two-input adders in the case of a completely parallel implementation (4).
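As an illustration of the count quoted above, the following Python sketch implements the well-known two-multiplication scheme for the two-point circular convolution. It is a minimal sketch under stated assumptions: the names `precompute_2` and `circular_convolution_2` are hypothetical, the constants `s0` and `s1` are assumed to be computed offline because the sequence of constants is fixed, and the exact matrix factorization used in the article is not reproduced here.

```python
def precompute_2(h):
    """Offline step: fold the fixed coefficients into two multiplier constants."""
    h0, h1 = h
    return (h0 + h1) / 2, (h0 - h1) / 2


def circular_convolution_2(s, x):
    """Two-point circular convolution with 2 multiplications and 4 additions."""
    s0, s1 = s
    x0, x1 = x
    a0 = x0 + x1   # addition 1
    a1 = x0 - x1   # addition 2
    m0 = s0 * a0   # multiplication 1
    m1 = s1 * a1   # multiplication 2
    return [m0 + m1, m0 - m1]  # additions 3 and 4


s = precompute_2([3, 5])
print(circular_convolution_2(s, [2, 7]))  # [41.0, 31.0]
```

The result agrees with the direct evaluation, since 3·2 + 5·7 = 41 and 5·2 + 3·7 = 31.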

3.2. Circular Convolution for N = 3

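Let $\mathbf{X}_{3\times1} = [x_0, x_1, x_2]^{T}$ and $\mathbf{H}_{3\times1} = [h_0, h_1, h_2]^{T}$ be three-dimensional data vectors being convolved and $\mathbf{Y}_{3\times1} = [y_0, y_1, y_2]^{T}$ be an output vector representing circular convolution for N = 3. The task is reduced to calculating the following product:

$$\mathbf{Y}_{3\times1} = \mathbf{H}_3 \mathbf{X}_{3\times1}, \qquad (6)$$

where:

$$\mathbf{H}_3 = \begin{bmatrix} h_0 & h_2 & h_1 \\ h_1 & h_0 & h_2 \\ h_2 & h_1 & h_0 \end{bmatrix}.$$

Calculating (6) directly requires nine multiplications and six additions. It is easy to see that the matrix $\mathbf{H}_3$ has a specific structure: it is circulant. Exploiting this structure makes it possible to reduce the number of multiplications in the calculation of the three-point circular convolution [7,8,11,27].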

Therefore, the optimized computational procedure for computing the three-point circular convolution is as follows:

where:

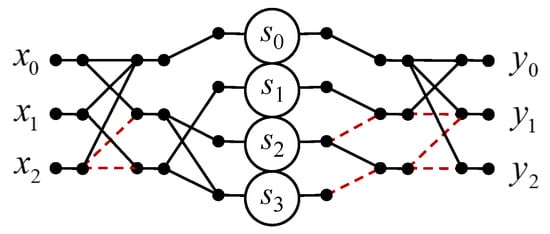

Figure 2 shows a signal flow graph of the proposed algorithm for the implementation of the three-point circular convolution.

Figure 2.

Algorithmic structure of the processing core for the computation of the 3-point circular convolution.

As for the arithmetic blocks, for a completely parallel hardware implementation of the processor core to compute the three-point convolution (7), you need four multipliers and eleven two-input adders, instead of nine multipliers and six two-input adders in the case of a completely parallel implementation (6). Therefore, we have exchanged five multipliers for five two-input adders.
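To make the four-multiplication figure concrete, here is a hedged Python sketch of a textbook-style construction based on the Chinese remainder theorem for polynomials, using the factorization z³ − 1 = (z − 1)(z² + z + 1). It is an assumption-laden illustration rather than the factorization used in the article: the function names are hypothetical, the constants are assumed to be precomputed offline, and no attempt is made to reach the eleven additions quoted above (this variant uses more).

```python
def precompute_3(h):
    """Offline step: four multiplier constants derived from the fixed sequence h."""
    h0, h1, h2 = h
    b0 = h0 - h2          # residue of H(z) modulo z^2 + z + 1, constant term
    b1 = h1 - h2          # residue of H(z) modulo z^2 + z + 1, linear term
    return (h0 + h1 + h2) / 3, b0 / 3, b1 / 3, (b0 - b1) / 3


def circular_convolution_3(s, x):
    """Three-point circular convolution using exactly 4 multiplications."""
    s0, s1, s2, s3 = s
    x0, x1, x2 = x
    a0 = x0 - x2
    a1 = x1 - x2
    m0 = s0 * (x0 + x1 + x2)   # product modulo (z - 1)
    m1 = s1 * a0               # three products for the modulo (z^2 + z + 1) part
    m2 = s2 * a1
    m3 = s3 * (a0 - a1)
    c0 = m1 - m2
    c1 = m1 - m3
    # Reconstruction step; doubling is written as an addition, not a multiplication.
    return [m0 + c0 + c0 - c1, m0 + c1 + c1 - c0, m0 - c0 - c1]


out = circular_convolution_3(precompute_3([1, 2, 3]), [4, 5, 6])
print(out)  # approximately [31, 31, 28], up to floating-point rounding
```

The direct product gives y = [31, 31, 28] for the same inputs, so the sketch can be checked against the naive method with a small tolerance.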

3.3. Circular Convolution for N = 4

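Let $\mathbf{X}_{4\times1} = [x_0, x_1, x_2, x_3]^{T}$ and $\mathbf{H}_{4\times1} = [h_0, h_1, h_2, h_3]^{T}$ be four-dimensional data vectors being convolved and $\mathbf{Y}_{4\times1} = [y_0, y_1, y_2, y_3]^{T}$ be an output vector representing circular convolution for N = 4.

The task is reduced to calculating the following product:

$$\mathbf{Y}_{4\times1} = \mathbf{H}_4 \mathbf{X}_{4\times1}, \qquad (8)$$

where:

$$\mathbf{H}_4 = \begin{bmatrix} h_0 & h_3 & h_2 & h_1 \\ h_1 & h_0 & h_3 & h_2 \\ h_2 & h_1 & h_0 & h_3 \\ h_3 & h_2 & h_1 & h_0 \end{bmatrix}.$$

Calculating (8) directly requires 16 multiplications and 12 additions. It is easy to see that the matrix $\mathbf{H}_4$ has a specific structure: it is circulant. Exploiting this structure makes it possible to reduce the number of multiplications in the calculation of the four-point circular convolution.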

Therefore, the optimized computational procedure for computing the four-point circular convolution is as follows:

where:

where is an identity N matrix, is the 2 × 2 Hadamard matrix, and signs “⊗” and “⊕” denote the Kronecker product and direct sum of two matrices, respectively [30,31].

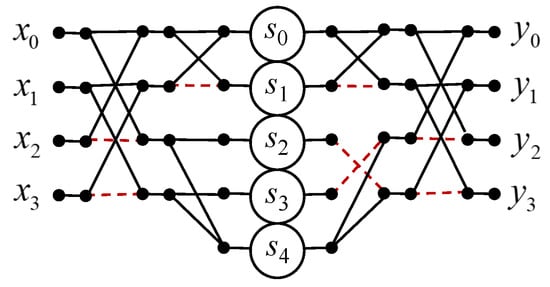

Figure 3 shows a signal flow graph of the proposed algorithm for the implementation of the four-point circular convolution.

Figure 3.

Algorithmic structure of the processing core for the computation of the 4-point circular convolution.

As for the arithmetic blocks, to compute the four-point convolution (9), you need five multipliers and fifteen two-input adders, instead of sixteen multipliers and twelve two-input adders in the case of a completely parallel implementation (8). The proposed algorithm saves eleven multiplications at the cost of three extra additions compared to the ordinary matrix–vector multiplication method.
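The counts above (five multiplications, fifteen additions) can be reproduced with a short Python sketch. It follows a standard construction based on z⁴ − 1 = (z − 1)(z + 1)(z² + 1), in which the z² + 1 part is a complex-like product computed with three multiplications. This is offered as a sketch under assumptions, not as the article's factorization: the function names are hypothetical and the five constants are assumed to be precomputed offline.

```python
def precompute_4(h):
    """Offline step: five multiplier constants derived from the fixed sequence h."""
    h0, h1, h2, h3 = h
    q0 = h0 - h2
    q1 = h1 - h3
    return (
        (h0 + h1 + h2 + h3) / 4,  # constant for the (z - 1) residue
        (h0 - h1 + h2 - h3) / 4,  # constant for the (z + 1) residue
        q0 / 2,                   # three constants for the (z^2 + 1) residue
        (q0 + q1) / 2,
        (q1 - q0) / 2,
    )


def circular_convolution_4(s, x):
    """Four-point circular convolution with 5 multiplications and 15 additions."""
    s0, s1, s2, s3, s4 = s
    x0, x1, x2, x3 = x
    u0, u1 = x0 + x2, x1 + x3          # 4 pre-additions
    p0, p1 = x0 - x2, x1 - x3
    m0 = s0 * (u0 + u1)                # 3 further pre-additions inside the products
    m1 = s1 * (u0 - u1)
    m2 = s2 * (p0 + p1)
    m3 = s3 * p1
    m4 = s4 * p0
    c0 = m2 - m3                       # 8 post-additions
    c1 = m2 + m4
    even = m0 + m1
    odd = m0 - m1
    return [even + c0, odd + c1, even - c0, odd - c1]


print(circular_convolution_4(precompute_4([1, 2, 3, 4]), [5, 6, 7, 8]))
# [66.0, 68.0, 66.0, 60.0]
```

The output matches the direct matrix–vector product for the same inputs.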

3.4. Circular Convolution for N = 5

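Let $\mathbf{X}_{5\times1} = [x_0, x_1, \ldots, x_4]^{T}$ and $\mathbf{H}_{5\times1} = [h_0, h_1, \ldots, h_4]^{T}$ be five-dimensional data vectors being convolved and $\mathbf{Y}_{5\times1} = [y_0, y_1, \ldots, y_4]^{T}$ be an output vector representing a circular convolution for N = 5.

The task is reduced to calculating the following product:

$$\mathbf{Y}_{5\times1} = \mathbf{H}_5 \mathbf{X}_{5\times1}, \qquad (10)$$

where:

$$\mathbf{H}_5 = \begin{bmatrix} h_0 & h_4 & h_3 & h_2 & h_1 \\ h_1 & h_0 & h_4 & h_3 & h_2 \\ h_2 & h_1 & h_0 & h_4 & h_3 \\ h_3 & h_2 & h_1 & h_0 & h_4 \\ h_4 & h_3 & h_2 & h_1 & h_0 \end{bmatrix}.$$

Calculating (10) directly requires 25 multiplications and 20 additions. It is easy to see that the matrix $\mathbf{H}_5$ has a specific structure: it is circulant. Exploiting this structure makes it possible to reduce the number of multiplications in the calculation of the five-point circular convolution.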

Therefore, an efficient algorithm for computing the five-point circular convolution can be represented using the following matrix–vector procedure:

where:

Figure 4 shows a data flow graph of the proposed algorithm for the implementation of the five-point circular convolution.

Figure 4.

Algorithmic structure of the processing core for the computation of the 5-point circular convolution.

As for the arithmetic blocks, to compute the five-point convolution (11), you need ten multipliers and thirty two-input adders, instead of twenty-five multipliers and twenty two-input adders in the case of a completely parallel implementation (10). The proposed algorithm saves 15 multiplications at the cost of 11 extra additions compared to the ordinary matrix–vector multiplication method.

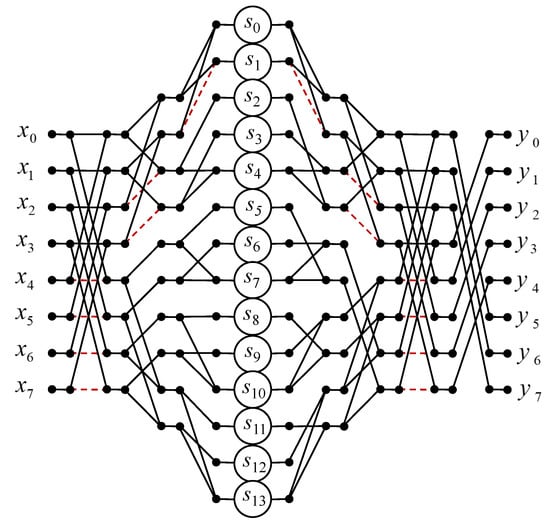

3.5. Circular Convolution for N = 6

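Let $\mathbf{X}_{6\times1} = [x_0, x_1, \ldots, x_5]^{T}$ and $\mathbf{H}_{6\times1} = [h_0, h_1, \ldots, h_5]^{T}$ be six-dimensional data vectors being convolved and $\mathbf{Y}_{6\times1} = [y_0, y_1, \ldots, y_5]^{T}$ be an output vector representing a circular convolution for N = 6.

The task is reduced to calculating the following product:

$$\mathbf{Y}_{6\times1} = \mathbf{H}_6 \mathbf{X}_{6\times1}, \qquad (12)$$

where:

$$\mathbf{H}_6 = \begin{bmatrix} h_0 & h_5 & h_4 & h_3 & h_2 & h_1 \\ h_1 & h_0 & h_5 & h_4 & h_3 & h_2 \\ h_2 & h_1 & h_0 & h_5 & h_4 & h_3 \\ h_3 & h_2 & h_1 & h_0 & h_5 & h_4 \\ h_4 & h_3 & h_2 & h_1 & h_0 & h_5 \\ h_5 & h_4 & h_3 & h_2 & h_1 & h_0 \end{bmatrix}.$$

Calculating (12) directly requires 36 multiplications and 30 additions. It is easy to see that the matrix $\mathbf{H}_6$ has a specific structure: it is circulant. Exploiting this structure makes it possible to reduce the number of multiplications in the calculation of the six-point circular convolution.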

Therefore, an efficient algorithm for computing the six-point circular convolution can be represented using the following matrix–vector procedure:

where:

Figure 5 shows a data flow graph of the proposed algorithm for the implementation of the six-point circular convolution.

Figure 5.

Algorithmic structure of the processing core for the computation of the 6-point circular convolution.

As far as arithmetic blocks are concerned, eight multipliers and thirty-four two-input adders are needed for the completely parallel hardware implementation of the processor core to compute the six-point convolution (13), instead of thirty-six multipliers and thirty two-input adders in the case of a completely parallel implementation (12). The proposed algorithm saves twenty-eight multiplications at the cost of four extra additions compared to the ordinary matrix–vector multiplication method.
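One standard way to arrive at eight multiplications for N = 6 is to nest a two-point algorithm around a three-point one, since 6 = 2 · 3 with coprime factors. The Python sketch below illustrates this idea under explicit assumptions: it uses the index mapping n → (n mod 2, n mod 3), the helper names `cyclic3`, `cyclic6`, and `cyclic_direct` are hypothetical, and the constants are recomputed on every call for brevity although in hardware they would be fixed in advance. It is not claimed to be the factorization (13) of the article, and its addition count is not optimized.

```python
def cyclic_direct(h, x):
    """Reference: direct circular convolution, used only for checking."""
    n = len(h)
    return [sum(h[(i - j) % n] * x[j] for j in range(n)) for i in range(n)]


def cyclic3(h, x):
    """Three-point circular convolution with 4 data multiplications."""
    h0, h1, h2 = h
    x0, x1, x2 = x
    b0, b1 = h0 - h2, h1 - h2
    a0, a1 = x0 - x2, x1 - x2
    m0 = ((h0 + h1 + h2) / 3) * (x0 + x1 + x2)
    m1 = (b0 / 3) * a0
    m2 = (b1 / 3) * a1
    m3 = ((b0 - b1) / 3) * (a0 - a1)
    c0, c1 = m1 - m2, m1 - m3
    return [m0 + c0 + c0 - c1, m0 + c1 + c1 - c0, m0 - c0 - c1]


def split_2x3(v):
    """Map index n to the pair (n mod 2, n mod 3); valid because gcd(2, 3) = 1."""
    rows = [[0, 0, 0], [0, 0, 0]]
    for n, value in enumerate(v):
        rows[n % 2][n % 3] = value
    return rows


def cyclic6(h, x):
    """Six-point circular convolution as a 2 x 3 nested scheme (2 * 4 = 8 products)."""
    h_rows, x_rows = split_2x3(h), split_2x3(x)
    # Two-point algorithm applied to whole rows instead of scalars.
    s0 = [(a + b) / 2 for a, b in zip(*h_rows)]
    s1 = [(a - b) / 2 for a, b in zip(*h_rows)]
    u0 = [a + b for a, b in zip(*x_rows)]
    u1 = [a - b for a, b in zip(*x_rows)]
    v0 = cyclic3(s0, u0)   # each "multiplication" is a 3-point convolution
    v1 = cyclic3(s1, u1)
    y_rows = [[a + b for a, b in zip(v0, v1)],
              [a - b for a, b in zip(v0, v1)]]
    return [y_rows[n % 2][n % 3] for n in range(6)]


h = [1, 2, 3, 4, 5, 6]
x = [6, 0, -1, 3, 2, 5]
print(cyclic6(h, x))
print(cyclic_direct(h, x))
```

The two printed vectors should agree up to floating-point rounding.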

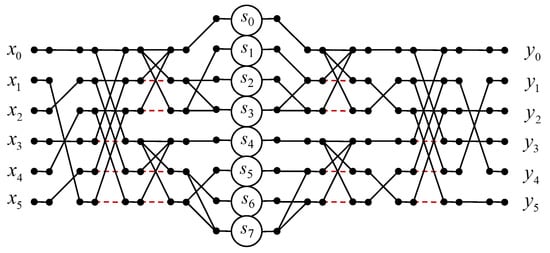

3.6. Circular Convolution for N = 7

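Let $\mathbf{X}_{7\times1} = [x_0, x_1, \ldots, x_6]^{T}$ and $\mathbf{H}_{7\times1} = [h_0, h_1, \ldots, h_6]^{T}$ be seven-dimensional data vectors being convolved and $\mathbf{Y}_{7\times1} = [y_0, y_1, \ldots, y_6]^{T}$ be an output vector representing a circular convolution for N = 7.

The task is reduced to calculating the following product:

$$\mathbf{Y}_{7\times1} = \mathbf{H}_7 \mathbf{X}_{7\times1}, \qquad (14)$$

where $\mathbf{H}_7$ is the 7 × 7 circulant matrix with entries $(\mathbf{H}_7)_{n,m} = h_{(n-m) \bmod 7}$, $n, m = 0, 1, \ldots, 6$.

Calculating (14) directly requires 49 multiplications and 42 additions. It is easy to see that the matrix $\mathbf{H}_7$ has a specific structure: it is circulant. Exploiting this structure makes it possible to reduce the number of multiplications in the calculation of the seven-point circular convolution.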

Therefore, an efficient algorithm for computing the seven-point circular convolution can be represented using the following matrix–vector procedure:

where:

Figure 6 shows a data flow graph of the proposed algorithm for the implementation of the seven-point circular convolution.

Figure 6.

Algorithmic structure of the processing core for the computation of the 7-point circular convolution.

As far as arithmetic blocks are concerned, sixteen multipliers and sixty-eight two-input adders are needed for the completely parallel hardware implementation of the processor core to compute the seven-point convolution (15), instead of forty-nine multipliers and forty-two two-input adders in the case of a completely parallel implementation (14). The proposed algorithm saves 33 multiplications at the cost of 26 extra additions compared to the ordinary matrix–vector multiplication method.

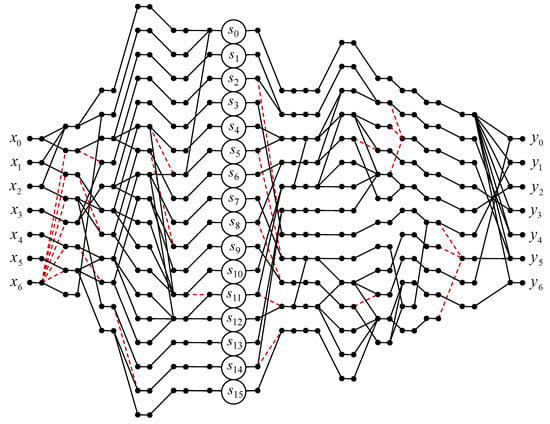

3.7. Circular Convolution for N = 8

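Let $\mathbf{X}_{8\times1} = [x_0, x_1, \ldots, x_7]^{T}$ and $\mathbf{H}_{8\times1} = [h_0, h_1, \ldots, h_7]^{T}$ be eight-dimensional data vectors being convolved and $\mathbf{Y}_{8\times1} = [y_0, y_1, \ldots, y_7]^{T}$ be an output vector representing a circular convolution for N = 8.

The task is reduced to calculating the following product:

$$\mathbf{Y}_{8\times1} = \mathbf{H}_8 \mathbf{X}_{8\times1}, \qquad (16)$$

where $\mathbf{H}_8$ is the 8 × 8 circulant matrix with entries $(\mathbf{H}_8)_{n,m} = h_{(n-m) \bmod 8}$, $n, m = 0, 1, \ldots, 7$.

Calculating (16) directly requires 64 multiplications and 56 additions. It is easy to see that the matrix $\mathbf{H}_8$ has a specific structure: it is circulant. Exploiting this structure makes it possible to reduce the number of multiplications in the calculation of the eight-point circular convolution.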

Therefore, an efficient algorithm for computing the eight-point circular convolution can be represented using the following matrix–vector procedure:

where:

Figure 7 shows a data flow graph of the proposed algorithm for the implementation of the eight-point circular convolution.

Figure 7.

Algorithmic structure of the processing core for the computation of the 8-point circular convolution.

As far as arithmetic blocks are concerned, fourteen multipliers and forty-six two-input adders are needed for the completely parallel hardware implementation of the processor core to compute the eight-point convolution (17), instead of sixty-four multipliers and fifty-six two-input adders in the case of a completely parallel implementation (16). The proposed algorithm saves 50 multiplications and 10 additions compared to the ordinary matrix–vector multiplication method.

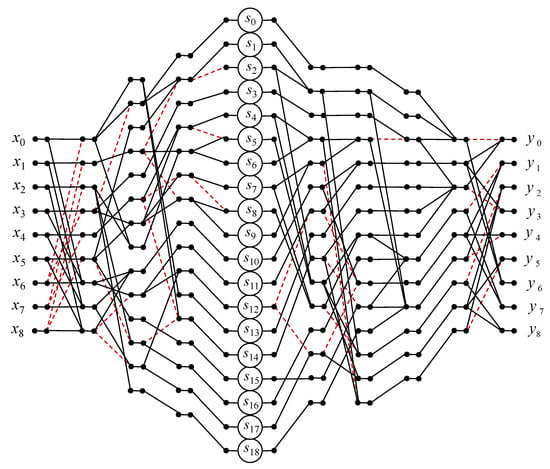

3.8. Circular Convolution for N = 9

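Let $\mathbf{X}_{9\times1} = [x_0, x_1, \ldots, x_8]^{T}$ and $\mathbf{H}_{9\times1} = [h_0, h_1, \ldots, h_8]^{T}$ be nine-dimensional data vectors being convolved and $\mathbf{Y}_{9\times1} = [y_0, y_1, \ldots, y_8]^{T}$ be an output vector representing a circular convolution for N = 9.

The task is reduced to calculating the following product:

$$\mathbf{Y}_{9\times1} = \mathbf{H}_9 \mathbf{X}_{9\times1}, \qquad (18)$$

where $\mathbf{H}_9$ is the 9 × 9 circulant matrix with entries $(\mathbf{H}_9)_{n,m} = h_{(n-m) \bmod 9}$, $n, m = 0, 1, \ldots, 8$.

Calculating (18) directly requires 81 multiplications and 72 additions. It is easy to see that the matrix $\mathbf{H}_9$ has a specific structure: it is circulant. Exploiting this structure makes it possible to reduce the number of multiplications in the calculation of the nine-point circular convolution.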

Therefore, an efficient algorithm for computing the nine-point circular convolution can be represented using the following matrix–vector procedure:

where:

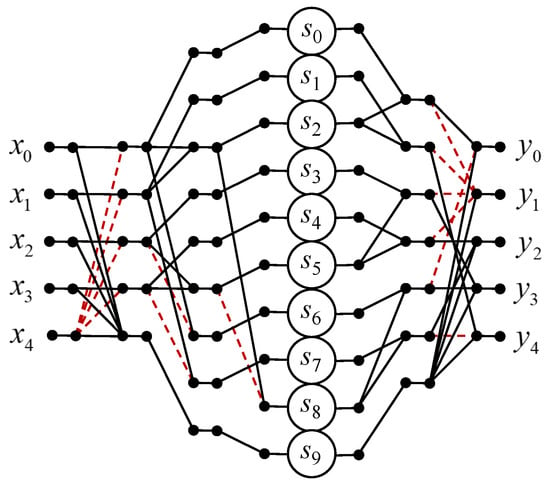

Figure 8 shows a data flow graph of the proposed algorithm for the implementation of the nine-point circular convolution.

Figure 8.

Algorithmic structure of the processing core for the computation of the 9-point circular convolution.

As far as arithmetic blocks are concerned, nineteen multipliers and seventy-four two-input adders are needed for the completely parallel hardware implementation of the processor core to compute the nine-point convolution (19), instead of eighty-one multipliers and seventy-two two-input adders in the case of a completely parallel implementation (18). The proposed algorithm saves sixty-two multiplications at the cost of two extra additions compared to the ordinary matrix–vector multiplication method.

4. Implementation Complexity

We now estimate the hardware implementation costs of each solution. We assume that the hardware implementation cost of the hardwired multiplier is $C_{\times}$ and the hardware implementation cost of the two-input adder is $C_{+}$. By the hardware implementation cost of the estimated solution, we mean a generalized assessment of the hardware complexity of implementing specific solutions, considering the area within the VLSI, the dissipation power, and therefore, the consumed energy. We also took into account that the N-input adder consists of N − 1 two-input adders. In this way, we treated the implementation cost of an N-input adder as the sum of the implementation costs of N − 1 two-input adders. Then, the total hardware implementation cost C of each solution is equal to:

$$C = M\,C_{\times} + A\,C_{+},$$

where M and A denote, respectively, the number of multipliers and the number of two-input adders required for the fully parallel implementation of a particular solution. We can normalize the above equation with respect to the cost of the adder, obtaining the normalized cost:

$$\frac{C}{C_{+}} = M k + A,$$

where $k = C_{\times}/C_{+}$ is the relative cost coefficient of the multiplier.

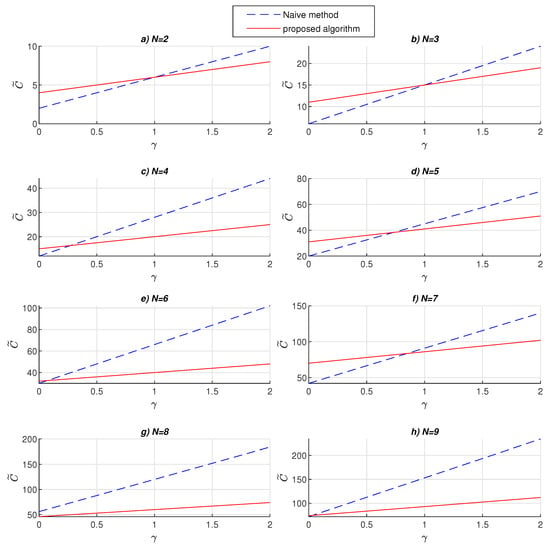

Table 1 shows estimates of the number of arithmetic blocks for the fully parallel implementation of the short-length circular convolution algorithms. The last two columns of the table show the unified hardware costs for the implementation of the corresponding solutions, expressed in terms of the implementation cost of one two-input adder. The charts presented in Figure 9 illustrate the normalized hardware implementation costs of the proposed solution and the naive method for various values of the relative cost coefficient and N.

Table 1.

Comparative estimates of the number of hardwired multipliers and adders for the case of completely parallel implementations of the naive-method-based solutions and of the proposed solutions.

Figure 9.

The normalized hardware implementation costs of the proposed solution and naive method, as a function of the relative cost coefficient of the multiplier, for various N.

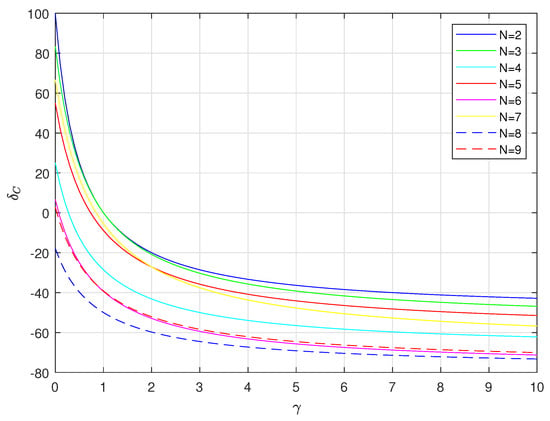

Cost comparisons can also be made using percentage changes:

where and are the normalized cost of proposed algorithm and naive method, respectively.

Figure 10 shows the value of percentage changes as a function of the relative cost coefficient for various values of N. Assuming that the cost of the multiplier is always at least equal to, and most often greater than, the cost of the adder, the cost of the proposed algorithm is never greater than that of the naive method, and in some cases, cost savings of over 70% are obtained.

Figure 10.

The value of percentage changes as a function of the relative cost coefficient for various values of N.
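The cost comparison in this section can be reproduced numerically with a small Python sketch. The names `normalized_cost` and `saving_percent`, the symbol `k` for the relative cost coefficient of the multiplier, and the sign convention (a positive value means the proposed solution is cheaper) are assumptions made for illustration. The operation counts are a subset of those quoted in Section 3.

```python
def normalized_cost(multipliers, adders, k):
    """Cost in units of one two-input adder; k is the multiplier-to-adder cost ratio."""
    return multipliers * k + adders


def saving_percent(proposed, naive, k):
    """Relative saving of the proposed solution over the naive one, in percent.

    The sign convention (positive = proposed is cheaper) is an assumption.
    """
    c_proposed = normalized_cost(*proposed, k)
    c_naive = normalized_cost(*naive, k)
    return 100.0 * (c_naive - c_proposed) / c_naive


# N: ((multipliers, adders) proposed, (multipliers, adders) naive), from Section 3.
COUNTS = {
    2: ((2, 4), (4, 2)),
    3: ((4, 11), (9, 6)),
    4: ((5, 15), (16, 12)),
    7: ((16, 68), (49, 42)),
    8: ((14, 46), (64, 56)),
    9: ((19, 74), (81, 72)),
}

for k in (1, 5, 20):
    row = {n: round(saving_percent(p, q, k), 1) for n, (p, q) in COUNTS.items()}
    print(k, row)
```

For k = 1 the two-point and three-point solutions break even with the naive method, and the saving grows with both k and N, which is consistent with the trend described above.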

5. Conclusions

In this article, we analyzed the possibilities of reducing the multiplicative complexity of computing circular convolutions for input sequences of small lengths. We synthesized new hardware-efficient, fully parallel algorithms to implement these operations for N = 3, 4, 5, 6, 7, 8, and 9. The reduced multiplicative complexity of the proposed algorithms is especially important when developing specialized fully parallel VLSI processors, since it minimizes the number of necessary hardware multipliers and reduces the power dissipation, as well as the total cost of the implementation of the entire system being introduced [30,31,32]. Thus, a decrease in the number of multipliers, even at the expense of a moderate increase in the number of adders, plays an important role in the hardware implementation of the proposed algorithms. Consequently, the use of the proposed solutions makes it possible to reduce the complexity of the hardware implementation of the cyclic convolution kernels. In addition, as can be seen from Figures 1–8, the algorithms presented in the article have a pronounced regular and modular structure. This facilitates the mapping of these algorithms to the ASIC structure and unifies their implementation in FPGAs. Thus, the acceleration of computations in the implementation of these algorithms can also be achieved by parallelizing the computations.

Author Contributions

Conceptualization, A.C. and J.P.P.; methodology, A.C. and J.P.P.; software, J.P.P.; validation, A.C. and J.P.P.; formal analysis, A.C. and J.P.P.; investigation, A.C. and J.P.P.; resources, A.C.; data curation, J.P.P.; writing—original draft preparation, A.C.; writing—review and editing, J.P.P.; visualization, A.C. and J.P.P.; supervision, A.C.; project administration, J.P.P.; funding acquisition, A.C. and J.P.P. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Loulou, A.; Yli-Kaakinen, J.; Renfors, M. Efficient fast-convolution based implementation of 5G waveform processing using circular convolution decomposition. In Proceedings of the 2017 IEEE International Conference on Communications (ICC), Paris, France, 21–25 May 2017; pp. 1–7.

2. Loulou, A.; Yli-Kaakinen, J.; Renfors, M. Advanced low-complexity multicarrier schemes using fast-convolution processing and circular convolution decomposition. IEEE Trans. Signal Process. 2019, 67, 2304–2319.

3. Mathieu, M.; Henaff, M.; LeCun, Y. Fast training of convolutional networks through FFTs. arXiv 2013, arXiv:1312.5851.

4. Lin, S.; Liu, N.; Nazemi, M.; Li, H.; Ding, C.; Wang, Y.; Pedram, M. FFT-based deep learning deployment in embedded systems. In Proceedings of the 2018 Design, Automation & Test in Europe Conference & Exhibition (DATE), Dresden, Germany, 19–23 March 2018; pp. 1045–1050.

5. Abtahi, T.; Shea, C.; Kulkarni, A.; Mohsenin, T. Accelerating convolutional neural network with FFT on embedded hardware. IEEE Trans. Very Large Scale Integr. (VLSI) Syst. 2018, 26, 1737–1749.

6. Burrus, C.S.; Parks, T.W. DFT/FFT and Convolution Algorithms; John Wiley & Sons: Hoboken, NJ, USA, 1985.

7. Blahut, R.E. Fast Algorithms for Signal Processing; Cambridge University Press: Cambridge, UK, 2010.

8. McClellan, J.H.; Rader, C.M. Number Theory in Digital Signal Processing; Professional Technical Reference; Prentice Hall: Hoboken, NJ, USA, 1979.

9. Tolimieri, R.; An, M.; Lu, C. Algorithms for Discrete Fourier Transform and Convolution; Springer: Berlin/Heidelberg, Germany, 1989.

10. Berg, L.; Nussbaumer, H. Fast Fourier Transform and Convolution Algorithms. Z. Angew. Math. Mech. 1982, 62, 282.

11. Garg, H.K. Digital Signal Processing Algorithms: Number Theory, Convolution, Fast Fourier Transforms, and Applications; Routledge: London, UK, 2017.

12. Bi, G.; Zeng, Y. Transforms and Fast Algorithms for Signal Analysis and Representations; Springer Science & Business Media: Berlin/Heidelberg, Germany, 2003.

13. Selesnick, I.W.; Burrus, C.S. Extending Winograd’s small convolution algorithm to longer lengths. In Proceedings of the IEEE International Symposium on Circuits and Systems-ISCAS’94, London, UK, 30 May–2 June 1994; Volume 2, pp. 449–452.

14. Stasinski, R. Extending sizes of effective convolution algorithms. Electron. Lett. 1990, 26, 1602–1604.

15. Karas, P.; Svoboda, D. Algorithms for efficient computation of convolution. In Design and Architectures for Digital Signal Processing; IntechOpen: London, UK, 2013; pp. 179–208.

16. Mohammad, K.; Agaian, S. Efficient FPGA implementation of convolution. In Proceedings of the 2009 IEEE International Conference on Systems, Man and Cybernetics, San Antonio, TX, USA, 11–14 October 2009; pp. 3478–3483.

17. Duhamel, P.; Vetterli, M. Cyclic convolution of real sequences: Hartley versus Fourier and new schemes. In Proceedings of the ICASSP’86. IEEE International Conference on Acoustics, Speech, and Signal Processing, Tokyo, Japan, 7–11 April 1986; Volume 11, pp. 229–232.

18. Duhamel, P.; Vetterli, M. Improved Fourier and Hartley transform algorithms: Application to cyclic convolution of real data. IEEE Trans. Acoust. Speech Signal Process. 1987, 35, 818–824.

19. Meher, P.; Panda, G. Fast Computation of Circular Convolution of Real Valued Data using Prime Factor Fast Hartley Transform Algorithm. IETE J. Res. 1995, 41, 261–264.

20. Reju, V.G.; Koh, S.N.; Soon, Y. Convolution using discrete sine and cosine transforms. IEEE Signal Process. Lett. 2007, 14, 445–448.

21. Cheng, C.; Parhi, K.K. Hardware efficient fast DCT based on novel cyclic convolution structures. IEEE Trans. Signal Process. 2006, 54, 4419–4434.

22. Chan, Y.H.; Siu, W.C. General approach for the realization of DCT/IDCT using convolutions. Signal Process. 1994, 37, 357–363.

23. Hunt, B. A matrix theory proof of the discrete convolution theorem. IEEE Trans. Audio Electroacoust. 1971, 19, 285–288.

24. Cariow, A.; Paplinski, J.P. Some algorithms for computing short-length linear convolution. Electronics 2020, 9, 2115.

25. Adámek, K.; Dimoudi, S.; Giles, M.; Armour, W. GPU fast convolution via the overlap-and-save method in shared memory. ACM Trans. Archit. Code Optim. (TACO) 2020, 17, 1–20.

26. Narasimha, M.J. Modified overlap-add and overlap-save convolution algorithms for real signals. IEEE Signal Process. Lett. 2006, 13, 669–671.

27. Huang, T.S. Two-dimensional digital signal processing II. Transforms and median filters. In Two-Dimensional Digital Signal Processing II. Transforms and Median Filters; Topics in Applied Physics; Springer: Berlin/Heidelberg, Germany, 1981; Volume 43.

28. Parhi, K.K. VLSI Digital Signal Processing Systems: Design and Implementation; John Wiley & Sons: Hoboken, NJ, USA, 2007.

29. Ju, C.; Solomonik, E. Derivation and Analysis of Fast Bilinear Algorithms for Convolution. arXiv 2019, arXiv:1910.13367.

30. Regalia, P.A.; Mitra, S.K. Kronecker products, unitary matrices and signal processing applications. SIAM Rev. 1989, 31, 586–613.

31. Granata, J.; Conner, M.; Tolimieri, R. The tensor product: A mathematical programming language for FFTs and other fast DSP operations. IEEE Signal Process. Mag. 1992, 9, 40–48.

32. Saha, P.; Banerjee, A.; Dandapat, A.; Bhattacharyya, P. ASIC implementation of high speed processor for calculating discrete Fourier transformation using circular convolution technique. WSEAS Trans. Circuits Syst. 2011, 10, 278–288.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).