Internet of Drones Intrusion Detection Using Deep Learning

Abstract

1. Introduction

- A real-time data analytics framework for investigating FANET intrusion detection threats is proposed.

- Deep learning algorithms are utilized for intrusion detection in drone networks.

- An extensive set of experiments is conducted to examine the efficiency of the proposed framework.

- The proposed framework is examined on various datasets.

2. Related Work

2.1. Intrusion Detection System

2.2. Machine Learning Methods

2.3. Hybridization of IDSs

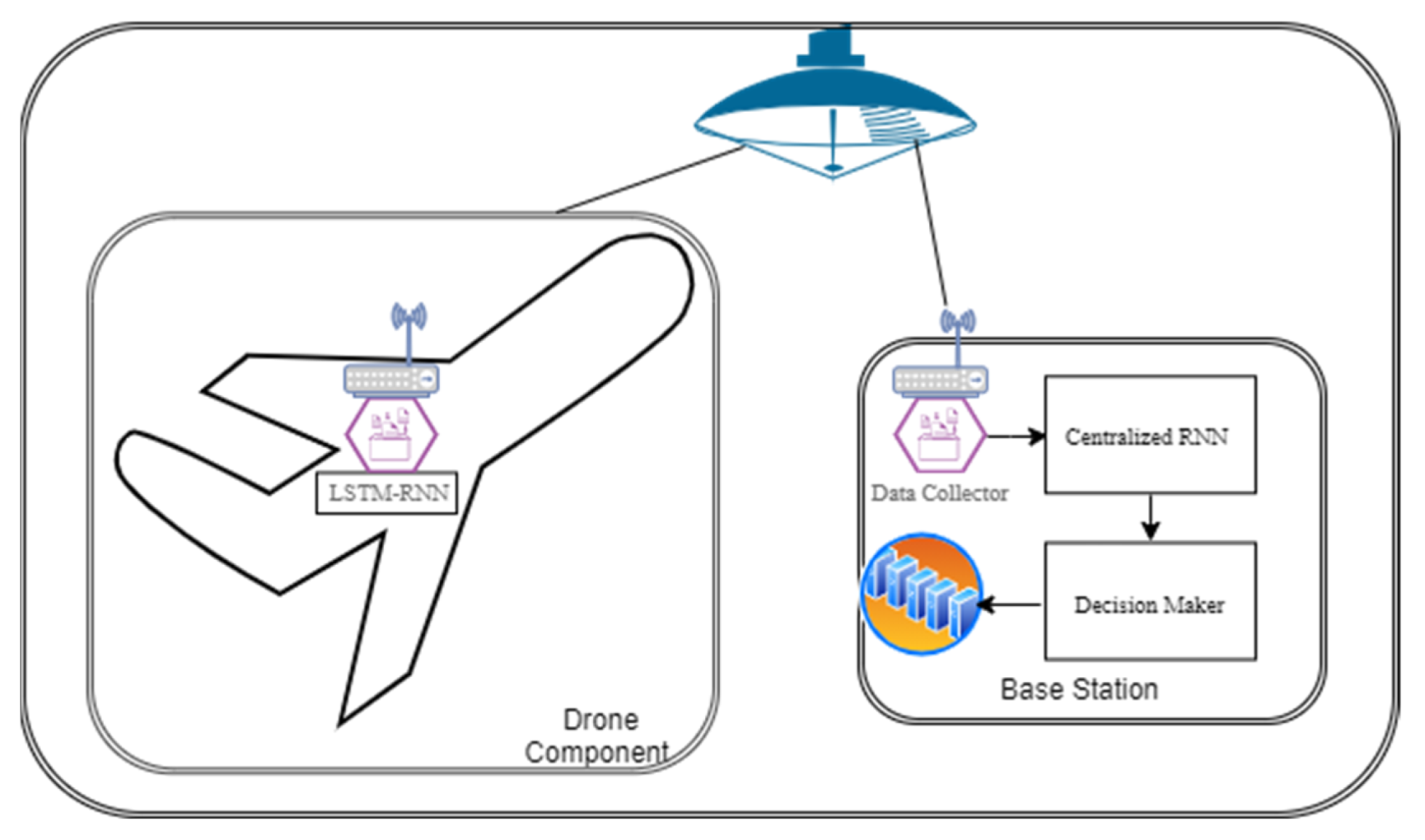

3. The Proposed Drone Intrusion Detection Framework

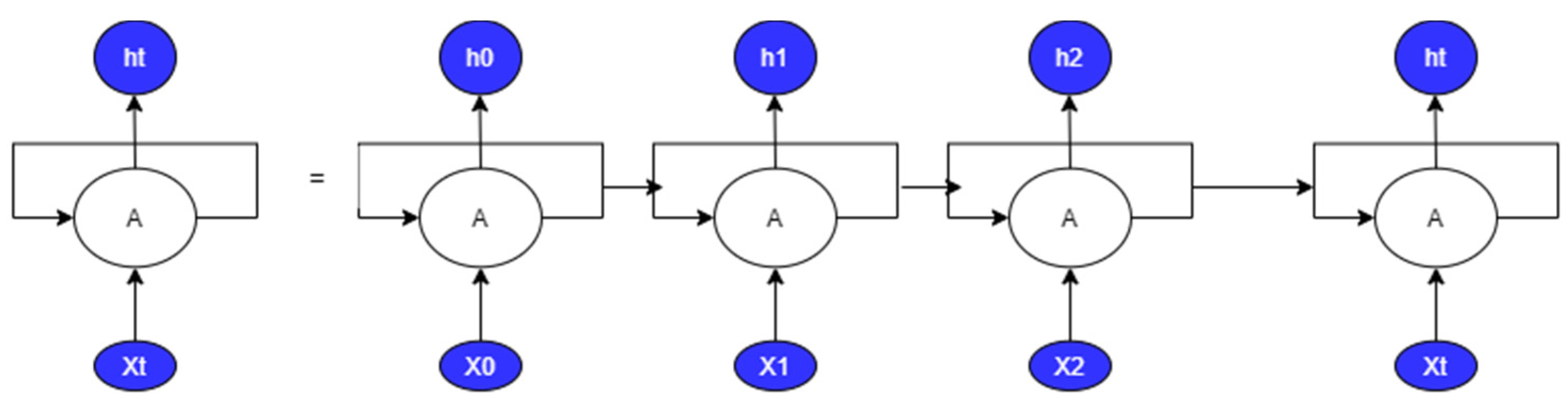

3.1. Drone RNN

3.2. Data Collector

3.3. Centralized RNN

3.4. Decision Maker

- Red: The red alarm means that the intrusion is certain and is identified by the two RNN modules. In this case, all of the networks are notified, and the exposed drone is isolated.

- Yellow: The yellow alarm means that only one of the two modules identified the intrusion, while the other module did not recognize it. Received messages are then only allowed to be forwarded to the centralized RNN module, and the overall network is notified to stop dealing with the exposed drone for a certain period of time. This situation continues until further notification comes from the decision-making module.

- Green: The green state means that no alarm is needed and the drones are considered safe. In this case, both RNN modules confirm that there is no intrusion on any of the drones.
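The three alarm levels above amount to a small decision rule over the two RNN verdicts. The following is a minimal sketch of that rule, not the authors' implementation; the names `Alarm`, `decide_alarm`, and `response`, as well as the action strings, are hypothetical and only summarize the behavior described in the list.

```python
from enum import Enum


class Alarm(Enum):
    GREEN = "green"    # neither module reports an intrusion
    YELLOW = "yellow"  # exactly one module reports an intrusion
    RED = "red"        # both modules report an intrusion


def decide_alarm(drone_rnn_flag: bool, central_rnn_flag: bool) -> Alarm:
    """Combine the verdicts of the drone RNN and the centralized RNN."""
    if drone_rnn_flag and central_rnn_flag:
        return Alarm.RED
    if drone_rnn_flag or central_rnn_flag:
        return Alarm.YELLOW
    return Alarm.GREEN


def response(alarm: Alarm) -> str:
    """Hypothetical action strings paraphrasing the responses listed above."""
    actions = {
        Alarm.RED: "notify all networks and isolate the exposed drone",
        Alarm.YELLOW: "notify the network to suspend dealings with the drone "
                      "until further notification",
        Alarm.GREEN: "no action required",
    }
    return actions[alarm]


if __name__ == "__main__":
    for flags in [(True, True), (True, False), (False, False)]:
        level = decide_alarm(*flags)
        print(flags, level.name, "->", response(level))
```

Treating the two verdicts symmetrically is an assumption; the text does not say whether a yellow alarm is handled differently depending on which module raised it.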

4. Results

4.1. The Used Datasets

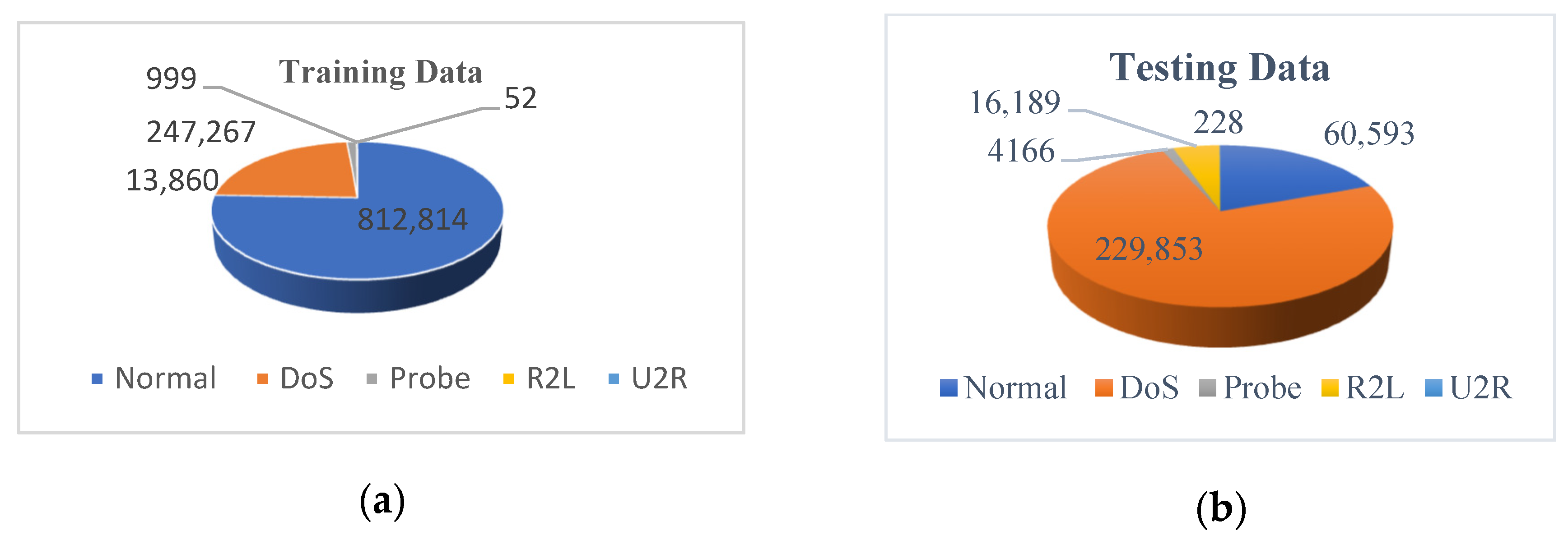

- KDDCup 99: This dataset is based on tcpdump data from the 1998 DARPA intrusion detection challenge. The data were extracted at MIT Lincoln Laboratory through a setup of thousands of UNIX machines and hundreds of users accessing those machines. The data were collected for 10 weeks; the first 7 weeks’ data were used for training, and the rest were used for testing. The full 10 weeks’ data are available, but they are too large to be used for algorithm testing; a 10% subset of the overall data has been made available for researchers and is used in our experiments. The data, as shown in Figure 5, have 41 features and contain 5 classes: ‘Normal’ and four attack categories (‘DoS’, ‘Probe’, ‘R2L’, ‘U2R’). The detailed statistics of KDDCup 99, including the training and testing data, are shown in Figure 2.

- NSL-KDD: NSL-KDD is a distilled variant of KDDCup 99 in which filters are used to eliminate duplicate records that are not required during data gathering. The discarded records were 136,489 and 136,497. The NSL-KDD dataset thereby helps protect machine learning systems from overfitting. Using NSL-KDD in our implementation gave us further results beyond those obtained on the KDDCup 99 dataset. However, the dataset does not correctly reflect real-time traffic characteristics. The NSL-KDD statistical details, including the training and testing data per type of attack, are shown in Figure 6.

- UNSW-NB15: This is a newer dataset that addresses the problems of the KDDCup 99 and NSL-KDD datasets. It was created by the Computer Security Research Team of the Australian Centre for Cyber Security (ACCS). It contains normal and attack activities extracted by the IXIA PerfectStorm tool from a live network. Two servers were used to generate normal activities on a network, while another server was used to generate malicious activities. Tcpdump was used to collect network packet traces, compiling the network data from 100 GB into 1000 MB pcap files. The pcap files were evaluated using Argus and Bro-IDS under Linux Ubuntu 14.0.3. In addition to the aforementioned methods, twelve algorithms were used to analyze the data in depth. The dataset comes in two forms: (i) full records with 2 million entries, and (ii) a version partitioned into training and testing data. The training data consist of 82,332 records, whereas the testing data comprise 175,341 records. The partitioned dataset includes 42 features, and its records are labeled as Normal or one of 9 different attacks, giving 10 classes in total. The detailed dataset statistics are given in Figure 7.

- WSN-DS: This is an IDS dataset for WSNs which includes four types of DoS attacks: Blackhole, Grayhole, Flooding, and Scheduling. The data were collected using the LEACH technique, a low-energy adaptive clustering hierarchy protocol, then processed to generate 23 features. The dataset statistics are presented in Figure 8.

- CICIDS2017: This dataset contains details of real-time network traffic that includes attacks. The key interest is real-time monitoring of the background traffic. The B-profile system is used to collect benign background traffic. This traffic includes 26 user characteristics focused on HTTP, HTTPS, FTP, SSH, and email protocols. Attacks such as Brute Force FTP, Brute Force SSH, DoS, Heartbleed, Botnet, and DDoS were injected into the data. The statistics of CICIDS2017 are given in Figure 9.

- TON_IoT [46]: TON_IoT uses a unique coordinated architecture that connects edge, fog, and cloud layers. The datasets include data from four sources, including operating systems, IoT/IIoT services, and network systems. The statistics were gathered via a realistic and large-scale testbed network built at UNSW Canberra’s IoT Lab, part of the School of Engineering and Information Technology (SEIT). Table 3 lists the attack categories of the TON_IoT dataset.

4.2. Performance Measures

- True Positive (TP): the number of correctly classified records toward the Normal class.

- True Negative (TN): the number of correctly classified records toward the Attack class.

- False Positive (FP): the number of Normal records wrongly classified toward the Attack class.

- False Negative (FN): the number of Attack records wrongly classified toward the Normal class.

- Accuracy: As given in Equation (1), the accuracy is the ratio of the correctly identified records to all of the records in the dataset. The higher the accuracy, the better the model is considered to perform (Accuracy ∈ [0, 1]). Accuracy is most informative when the classes in the data are balanced.

- Precision: This is the ratio of the number of correctly classified attack records to the number of all records identified as attacks. Again, the higher the precision, the better the model is considered to perform (Precision ∈ [0, 1]). The precision can be computed by Equation (2).

- True Positive Rate (TPR), Recall, or Sensitivity: This is the probability that an actual positive record is classified as positive. Again, the higher the TPR, the higher the performance (TPR ∈ [0, 1]). The TPR can be computed using Equation (3).

- F1-Score: This is another valuable performance measure, also called the F1-measure. The F1-score is the harmonic mean of the precision and recall measures. The higher the F1-score, the better the proposed model is considered to perform (F1-Score ∈ [0, 1]). The F1-score is defined as given in Equation (4).
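The four measures above can be computed directly from the confusion counts. The sketch below uses the standard textbook definitions, which Equations (1) to (4) are assumed to follow since the equations themselves are not reproduced here; the function name `classification_metrics` is hypothetical. The function is agnostic about which class is treated as "positive": it simply takes the four counts as defined in the list.

```python
def classification_metrics(tp: int, tn: int, fp: int, fn: int) -> dict:
    """Return accuracy, precision, recall (TPR), and F1 from confusion counts.

    Standard definitions (assumed to match Equations (1)-(4)):
      accuracy  = (TP + TN) / (TP + TN + FP + FN)
      precision = TP / (TP + FP)
      recall    = TP / (TP + FN)
      F1        = 2 * precision * recall / (precision + recall)
    Any ratio with a zero denominator is reported as 0.0.
    """
    total = tp + tn + fp + fn
    accuracy = (tp + tn) / total if total else 0.0
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    return {
        "accuracy": accuracy,
        "precision": precision,
        "recall": recall,
        "f1": f1,
    }


if __name__ == "__main__":
    # Made-up counts, for illustration only.
    for name, value in classification_metrics(tp=90, tn=80, fp=10, fn=20).items():
        print(f"{name}: {value:.4f}")
```

All four values lie in [0, 1], matching the ranges stated in the list. The counts in the usage example are invented and are not results from the paper.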

5. Simulation Results

5.1. Simulation Environment

5.2. Performance Analysis

5.2.1. LSTM_RNN Performance over UNSW-NB15 Dataset

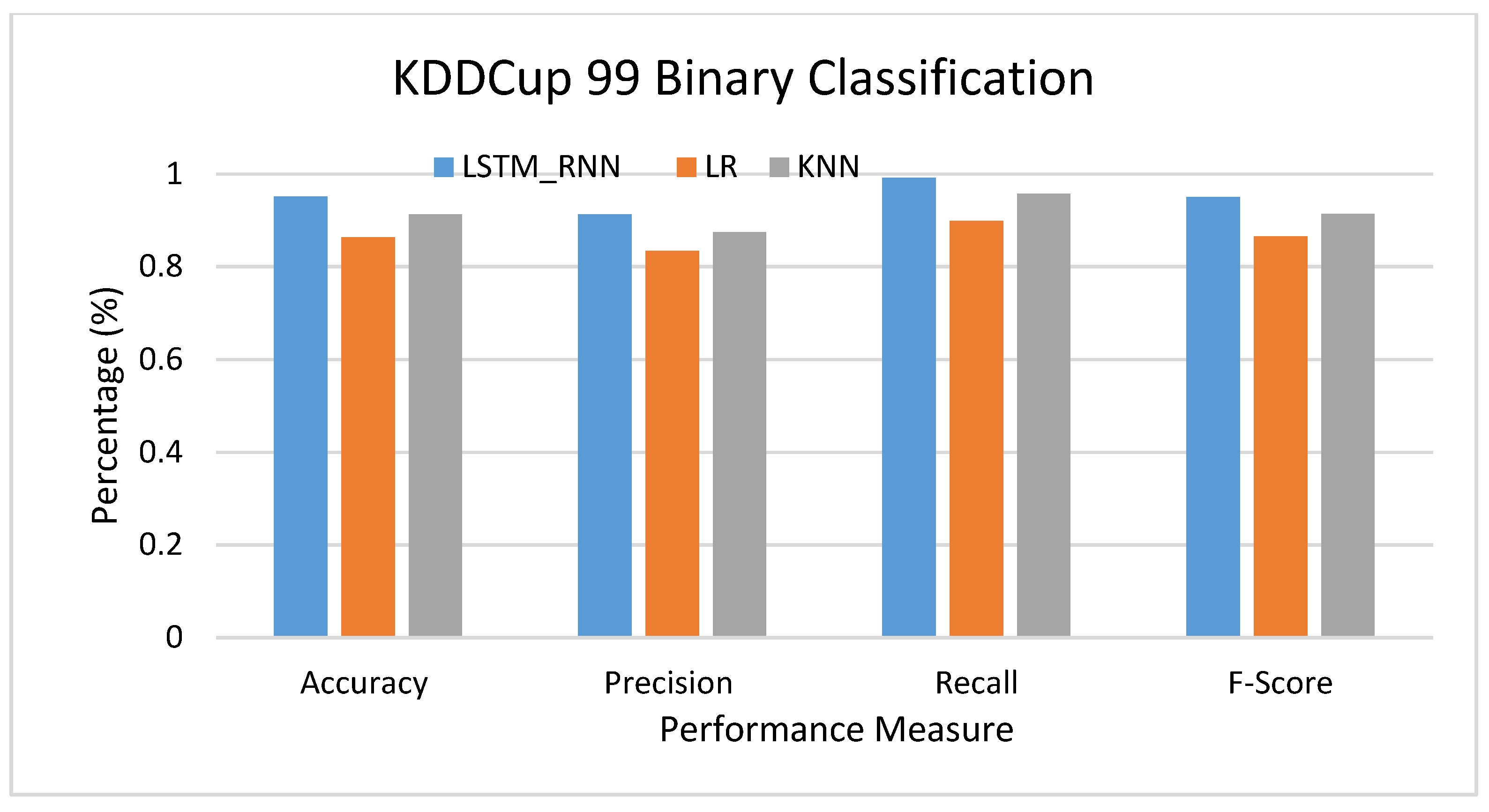

5.2.2. LSTM_RNN Performance over KDDCup 99 Dataset

5.2.3. LSTM_RNN Performance over NSL-KDD Dataset

5.2.4. LSTM_RNN Performance over WSN-DS Dataset

5.2.5. LSTM_RNN Performance over CICIDS2017 Dataset

5.2.6. LSTM_RNN Performance over TON_IoT Dataset

5.2.7. Signature-Based Approach vs. LSTM_RNN

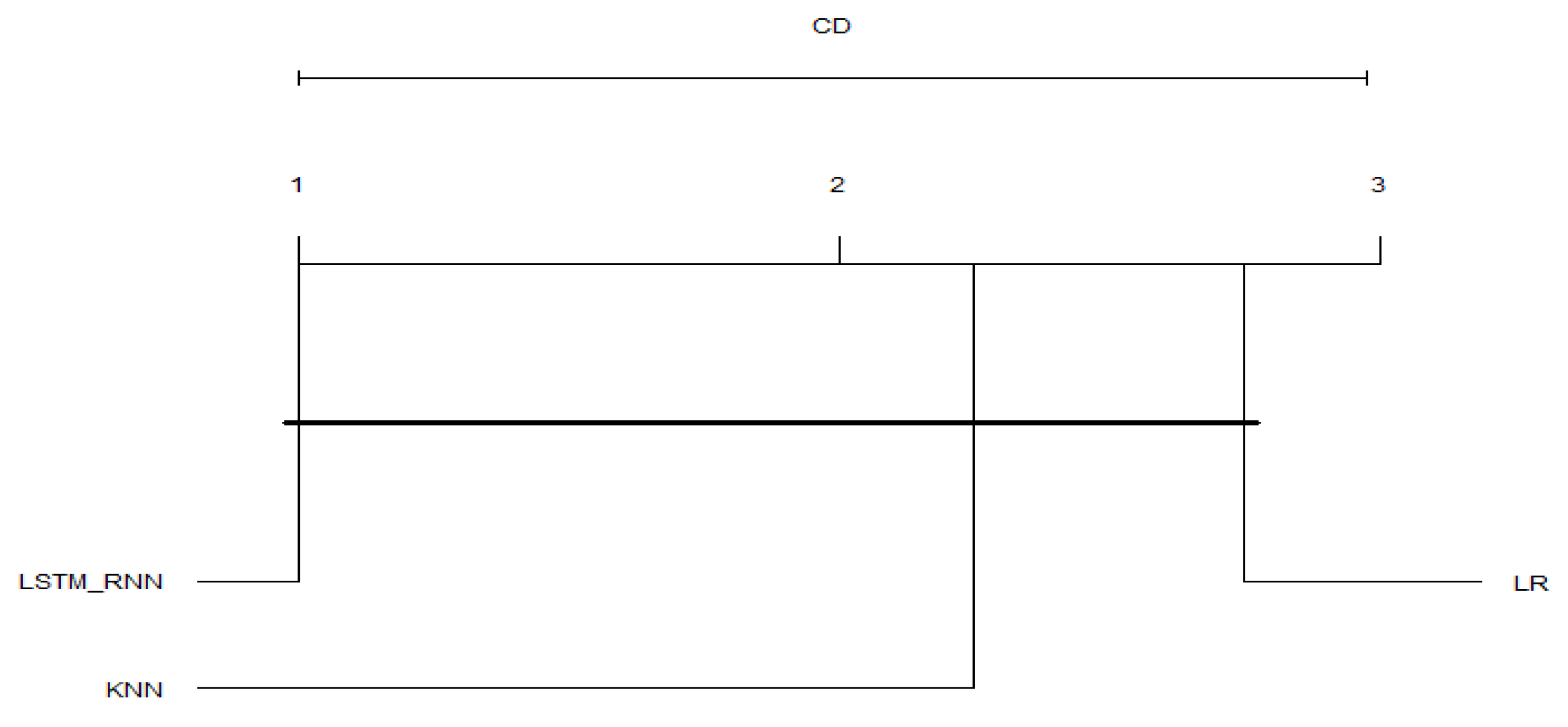

5.2.8. Average Results and Discussion

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Roopak, M.; Tian, G.Y.; Chambers, J. Deep Learning Models for Cyber Security in IoT Networks. In Proceedings of the 2019 IEEE 9th Annual Computing and Communication Workshop and Conference (CCWC), Las Vegas, NV, USA, 7–9 January 2019; pp. 452–457. [Google Scholar]

- Liang, C.; Shanmugam, B.; Azam, S.; Karim, A.; Islam, A.; Zamani, M.; Kavianpour, S.; Idris, N.B. Intrusion detection system for the internet of things based on blockchain and multi-agent systems. Electronics 2020, 9, 1120. [Google Scholar] [CrossRef]

- Statista Research Department. IoT: Number of Connected Devices Worldwide 2012–2025. Available online: https://www.statista.com/statistics/471264/iot-number-of-connected-devices-worldwide/ (accessed on 20 May 2020).

- Evans, D. The Internet of Things: How the Next Evolution of the Internet Is Changing Everything; Cisco Internet Business Solutions Group (IBSG): San Jose, CA, USA, 2011. [Google Scholar]

- Yuan, X.; Li, C.; Li, X. DeepDefense: Identifying DDoS Attack via Deep Learning. In Proceedings of the 2017 IEEE International Conference on Smart Computing (SMARTCOMP), Hong Kong, China, 29–31 May 2017; pp. 1–8. [Google Scholar]

- Gharibi, M.; Boutaba, R.; Waslander, S.L. Internet of Drones. IEEE Access 2016, 4, 1148–1162. [Google Scholar] [CrossRef]

- Tarter, A. Importance of cyber security. In Community Policing-A European Perspective: Strategies, Best Practices and Guidelines; Springer: New York, NY, USA, 2017; pp. 213–230. [Google Scholar]

- Li, J.; Qu, Y.; Chao, F.; Shum, H.P.; Ho, E.S.; Yang, L. Machine learning algorithms for network intrusion detection. In AI in Cybersecurity; Springer: New York, NY, USA, 2019; pp. 151–179. [Google Scholar]

- Lunt, T.F. A survey of intrusion detection techniques. Comput. Secur. 1993, 12, 405–418. [Google Scholar] [CrossRef]

- Ahmad, Z.; Khan, A.S.; Shiang, C.W.; Abdullah, J.; Ahmad, F. Network intrusion detection system: A systematic study of machine learning and deep learning approaches. Trans. Emerg. Telecommun. Technol. 2021, 32, e4150. [Google Scholar] [CrossRef]

- Debar, H.; Dacier, M.; Wespi, A. Towards a taxonomy of intrusion-detection systems. Comput. Netw. 1999, 31, 805–822. [Google Scholar] [CrossRef]

- Humphreys, T. Statement on the Vulnerability of Civil Unmanned Aerial Vehicles and Other Systems to Civil GPS Spoofing; University of Texas at Austin: Austin, TX, USA, 2012; pp. 1–16. [Google Scholar]

- He, D.; Chan, S.; Guizani, M. Drone-assisted public safety networks: The security aspect. IEEE Commun. Mag. 2017, 55, 218–223. [Google Scholar] [CrossRef]

- Gudla, C.; Rana, M.S.; Sung, A.H. Defense techniques against cyber attacks on unmanned aerial vehicles. In Proceedings of the International Conference on Embedded Systems, Cyber-Physical Systems, and Applications (ESCS), Athens, Greece, 30 July–2 August 2018; pp. 110–116. [Google Scholar]

- Shashok, N. Analysis of vulnerabilities in modern unmanned aircraft systems. Tufts Univ. 2017, 1–10. Available online: http://www.cs.tufts.edu/comp/116/archive/fall2017/nshashok.pdf (accessed on 1 May 2021).

- Hoque, M.S.; Mukit, M.; Bikas, M.; Naser, A. An implementation of intrusion detection system using genetic algorithm. Int. J. Netw. Secur. Its Appl. (IJNSA) 2012, 4. [Google Scholar] [CrossRef]

- Prasad, R.; Rohokale, V. Artificial intelligence and machine learning in cyber security. In Cyber Security: The Lifeline of Information and Communication Technology; Springer: New York, NY, USA, 2020; pp. 231–247. [Google Scholar]

- Lew, J.; Shah, D.A.; Pati, S.; Cattell, S.; Zhang, M.; Sandhupatla, A.; Ng, C.; Goli, N.; Sinclair, M.D.; Rogers, T.G.; et al. Analyzing machine learning workloads using a detailed GPU simulator. In Proceedings of the 2019 IEEE International Symposium on Performance Analysis of Systems and Software (ISPASS), Madison, WI, USA, 24–26 March 2019; pp. 151–152. [Google Scholar]

- Najafabadi, M.M.; Villanustre, F.; Khoshgoftaar, T.M.; Seliya, N.; Wald, R.; Muharemagic, E. Deep learning applications and challenges in big data analytics. J. Big Data 2015, 2, 1. [Google Scholar] [CrossRef] [Green Version]

- Dong, B.; Xue, W. Comparison deep learning method to traditional methods using for network intrusion detection. In Proceedings of the 2016 8th IEEE International Conference on Communication Software and Networks (ICCSN), Beijing, China, 4–6 June 2016. [Google Scholar] [CrossRef]

- Alnaghes, M.S.; Fayez, G. A Survey on Some Currently Existing Intrusion Detection Systems for Mobile Ad Hoc Networks. In Proceedings of the Second International Conference on Electrical and Electronics Engineering, Clean Energy and Green Computing (EEECEGC2015), Antalya, Turkey, 26–28 May 2015; Volume 12. [Google Scholar]

- Sedjelmaci, H.; Senouci, S.M. An accurate and efficient collaborative intrusion detection framework to secure vehicular networks. Comput. Electr. Eng. 2015, 43, 33–47. [Google Scholar] [CrossRef]

- Daeinabi, A.; Rahbar, A.G.P.; Khademzadeh, A. VWCA: An efficient clustering algorithm in vehicular ad hoc networks. J. Netw. Comput. Appl. 2011, 34, 207–222. [Google Scholar] [CrossRef]

- Kumar, N.; Chilamkurti, N. Collaborative trust aware intelligent intrusion detection in VANETs. Comput. Electr. Eng. 2014, 40, 1981–1996. [Google Scholar] [CrossRef]

- Khraisat, A.; Gondal, I.; Vamplew, P.; Kamruzzaman, J.; Alazab, A. Hybrid intrusion detection system based on the stacking ensemble of c5 decision tree classifier and one class support vector machine. Electronics 2020, 9, 173. [Google Scholar] [CrossRef] [Green Version]

- Zhang, H.; Dai, S.; Li, Y.; Zhang, W. Real-time distributed-random-forest-based network intrusion detection system using Apache spark. In Proceedings of the IEEE 37th International Performance Computing and Communications Conference (IPCCC), Orlando, FL, USA, 17–19 November 2018; pp. 1–7. [Google Scholar]

- Maglaras, L.A. A novel distributed intrusion detection system for vehicular ad hoc networks. Int. J. Adv. Comput. Sci. Appl. 2015, 6, 101–106. [Google Scholar]

- Parameshwarappa, P.; Chen, Z.; Gangopadhyay, A. Analyzing attack strategies against rule-based intrusion detection systems. In Proceedings of the Workshop Program of the 19th International Conference on Distributed Computing and Networking, Varanasi, India, 4–7 January 2018; pp. 1–4. [Google Scholar]

- Patel, S.K.; Sonker, A. Rule-based network intrusion detection system for port scanning with efficient port scan detection rules using snort. Int. J. Future Gener. Commun. Netw. 2016, 9, 339–350. [Google Scholar] [CrossRef]

- Cam, H.; Ozdemir, S.; Nair, P.; Muthuavinashiappan, D.; Sanli, H.O. Energy-efficient secure pattern based data aggregation for wireless sensor networks. Comput. Commun. 2006, 29, 446–455. [Google Scholar] [CrossRef]

- Zhang, J.; Zulkernine, M.; Haque, A. Random-forests-based network intrusion detection systems. IEEE Trans. Syst. Man Cybern. C Appl. Rev. 2008, 38, 649–659. [Google Scholar] [CrossRef]

- Al-Jarrah, O.Y.; Siddiqui, A.; Elsalamouny, M.; Yoo, P.D.; Muhaidat, S.; Kim, K. Machine-learning-based feature selection techniques for large-scale network intrusion detection. In Proceedings of the 2014 IEEE 34th International Conference on Distributed Computing Systems Workshops, Madrid, Spain, 30 June 2014; pp. 177–181. [Google Scholar]

- Su, M.Y. Real-time anomaly detection systems for Denial-of-Service attacks by weighted k-nearest-neighbor classifiers. Expert Syst. Appl. 2011, 38, 3492–3498. [Google Scholar] [CrossRef]

- Rani, M.S.; Xavier, S.B. A hybrid intrusion detection system based on C5.0 decision tree and one-class SVM. Int. J. Curr. Eng. Technol. 2015, 5, 2001–2007. [Google Scholar]

- Yi, Y.; Wu, J.; Xu, W. Incremental SVM based on reserved set for network intrusion detection. Expert Syst. Appl. 2011, 38, 7698–7707. [Google Scholar] [CrossRef]

- Amor, N.B.; Benferhat, S.; Elouedi, Z. Naive bayes vs decision trees in intrusion detection systems. In Proceedings of the 2004 ACM symposium on Applied computing, Nicosia, Cyprus, 14–17 March 2004; pp. 420–424. [Google Scholar]

- Musafer, H.; Abuzneid, A.; Faezipour, M.; Mahmood, A. An enhanced design of sparse autoencoder for latent features extraction based on trigonometric simplexes for network intrusion detection systems. Electronics 2020, 9, 259. [Google Scholar] [CrossRef] [Green Version]

- Abdulhammed, R.; Musafer, H.; Alessa, A.; Faezipour, M.; Abuzneid, A. Features Dimensionality Reduction Approaches for Machine Learning Based Network Intrusion Detection. Electronics 2019, 8, 322. [Google Scholar] [CrossRef] [Green Version]

- Khraisat, A.; Gondal, I.; Vamplew, P.; Kamruzzaman, J. Survey of intrusion detection systems: Techniques, datasets and challenges. Cybersecurity 2019, 2, 20. [Google Scholar] [CrossRef]

- Salo, F.; Injadat, M.; Nassif, A.B.; Shami, A.; Essex, A. Data mining techniques in intrusion detection systems: A systematic literature review. IEEE Access 2018, 6, 56046–56058. [Google Scholar] [CrossRef]

- Thaseen, I.S.; Kumar, C.A. Intrusion detection model using fusion of chi-square feature selection and multi class SVM. J. King Saud Univ. Comput. Inf. Sci. 2017, 29, 462–472. [Google Scholar]

- Kim, G.; Lee, S.; Kim, S. A novel hybrid intrusion detection method integrating anomaly detection with misuse detection. Expert Syst. Appl. 2014, 41, 1690–1700. [Google Scholar] [CrossRef]

- Al-Yaseen, W.L.; Othman, Z.A.; Nazri, M.Z.A. Multi-level hybrid support vector machine and extreme learning machine based on modified K-means for intrusion detection system. Expert Syst. Appl. 2017, 67, 296–303. [Google Scholar] [CrossRef]

- Muniyandi, A.P.; Rajeswari, R.; Rajaram, R. Network anomaly detection by cascading k-Means clustering and C4.5 decision tree algorithm. Procedia Eng. 2012, 30, 174–182. [Google Scholar] [CrossRef] [Green Version]

- Jabbar, M.A.; Aluvalu, R. RFAODE: A novel ensemble intrusion detection system. Procedia Comput. Sci. 2017, 115, 226–234. [Google Scholar] [CrossRef]

- Moustafa, N. A new distributed architecture for evaluating AI-based security systems at the edge: Network TON_IoT datasets. Sustain. Cities Soc. 2021, 72, 102994. [Google Scholar] [CrossRef]

- Besharati, E.; Naderan, M.; Namjoo, E. LR-HIDS: Logistic regression host-based intrusion detection system for cloud environments. J. Ambient. Intell. Humaniz. Comput. 2019, 10, 3669–3692. [Google Scholar] [CrossRef]

- Awad, N.A. Enhancing Network Intrusion Detection Model Using Machine Learning Algorithms. CMC-Comput. Mater. Contin. 2021, 67, 979–990. [Google Scholar] [CrossRef]

- Snort Tool. Available online: https://www.snort.org/faq/what-is-snort (accessed on 23 September 2021).

- Calvo, B.; Santafé, R.G. scmamp: Statistical comparison of multiple algorithms in multiple problems. R J. 2016, 8/1, 248–256. [Google Scholar] [CrossRef] [Green Version]

| Paper | Findings | Settings/Environment | Feature Set | Technique Applied | Dataset |
|---|---|---|---|---|---|
| [31] | Detection rate 94.7%, false positive rate 2% | Down-sampling/oversampling; 66% training set/34% testing set; WEKA | 41 features from the TCP dump data | Random Forest | KDD’99 |
| [26] | Execution time of the proposed method is less than that of other classifiers; GBDT achieved (98.2 Pre., 97.4 Recall, 97.8 F1) | 70% training set/30% testing set; Spark 2.2.0, Kafka 2.11 | 13 features | Distributed Random Forest, gradient boosting decision tree (GBDT), multiclass SVM, and AdaBoost | CICIDS2017 |
| [32] | RF-FSR: accuracy 99.9% | Normalization/discretization/downsampling; balancing; 10-fold cross validation; WEKA | 41 features as in the original KDD’99 | RF-FSR/RF-BER | Filtered version of KDD’99 |
| [33] | Of 35 features, 28 features yielded 78% accuracy for unknown attacks; 19 features yielded 97.42% accuracy for known attacks | Normalization/30 chromosomes/Microsoft Visual C++ | 35 features derived from packet headers | K-nearest Neighbour/Genetic Algorithm | Handmade dataset |
| [34] | Detection rate: 88.5%, false alarm ratio: 11.5%; best achieved accuracy 96% | LIBSVM (MATLAB) | - | C4.5/one-class SVM | NSL-KDD |
| [35] | Detection rate: (91.83–97.731), false alarm ratio: (0.0375–0.188) | Resampling/normalization; Visual C++ and MATLAB | All features of the KDD’99 dataset | Improved incremental SVM (RS-ISVM) | KDD’99 |
| [36] | Decision trees are slightly better than Naïve Bayes; accuracy of DT: 93.02%, accuracy of NB: 91.45% | Dataset granularities | All features of the KDD’99 dataset | Naïve Bayes/Decision Trees | KDD’99 |

| Paper | Machine Learning Classifier: Ensemble | Machine Learning Classifier: Hybrid | Intrusion Detection System Techniques: Hybrid IDS | Intrusion Detection System Techniques: AIDS | Intrusion Detection System Techniques: SIDS | Technique Applied |
|---|---|---|---|---|---|---|
| [41] | x | √ | x | x | √ | Chi-square/multiclass SVM |
| [42] | x | √ | √ | x | x | C4.5/One-class SVM |
| [44] | x | √ | √ | √ | x | K-means/C4.5 |
| [25] | √ | √ | √ | √ | √ | Stacking Ensemble of C5.0/One-class SVM |
| [31] | √ | x | x | √ | √ | Random Forest |
| [45] | x | √ | x | x | √ | Random Forest/Average One-Dependence Estimator |
| [32] | √ | x | x | √ | x | Random Forest with Feature Selection |

| Attack Type | TON_IoT No. of Records |
|---|---|
| Backdoor | 508,116 |
| DDoS | 6,165,008 |
| DoS | 3,375,328 |
| Injection | 452,659 |
| MITM | 1052 |
| Password | 1,718,568 |
| Ransomware | 72,805 |
| Scanning | 7,140,161 |
| XSS | 2,108,944 |
| Benign | 796,380 |

| Attack | Training Set | Testing Set |
|---|---|---|
| Backdoor | 12,000 | 8000 |
| DDoS | 12,000 | 8000 |
| DoS | 12,000 | 8000 |
| Injection | 12,000 | 8000 |
| MITM | 625 | 418 |
| Password | 12,000 | 8000 |
| Ransomware | 12,000 | 8000 |
| Scanning | 12,000 | 8000 |
| XSS | 12,000 | 8000 |
| Benign | 180,000 | 120,000 |
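The per-class counts in the table above are consistent with a roughly 60/40 train/test partition within each class (for example, 12,000 training versus 8000 testing records). The following is a minimal sketch of such a per-class (stratified) split, assuming records are `(features, label)` pairs; the function name `stratified_split`, the `train_fraction` default, and the fixed seed are illustrative assumptions, not the authors' procedure.

```python
import random
from collections import defaultdict


def stratified_split(records, train_fraction=0.6, seed=0):
    """Split (features, label) records into train/test sets per class.

    Each class is shuffled independently and cut at train_fraction,
    so every class keeps approximately the same train/test ratio.
    """
    by_label = defaultdict(list)
    for record in records:
        by_label[record[1]].append(record)

    rng = random.Random(seed)  # fixed seed keeps the split reproducible
    train, test = [], []
    for label in sorted(by_label):
        group = by_label[label]
        rng.shuffle(group)
        cut = round(len(group) * train_fraction)
        train.extend(group[:cut])
        test.extend(group[cut:])
    return train, test


if __name__ == "__main__":
    # Toy data: 10 "DoS" records and 5 "XSS" records.
    toy = [((i,), "DoS") for i in range(10)] + [((i,), "XSS") for i in range(5)]
    train, test = stratified_split(toy)
    print(len(train), len(test))
```

Rounding means small classes may deviate slightly from an exact 60/40 ratio, so the sketch will not necessarily reproduce the exact MITM counts in the table.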

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Ramadan, R.A.; Emara, A.-H.; Al-Sarem, M.; Elhamahmy, M. Internet of Drones Intrusion Detection Using Deep Learning. Electronics 2021, 10, 2633. https://doi.org/10.3390/electronics10212633
