Abstract

The paper presents a new memetic, cluster-based methodology for image registration under a geometric perturbation model involving translation, rotation and scaling. The methodology consists of two stages. First, the boundaries of the search space are computed from the sets of object pixels belonging to the target image and to the sensed image, respectively. Next, the registration mechanism is applied; it consists of a hybridization between a version of the firefly population-based search procedure and the two-membered evolutionary strategy computed on clustered data. In addition, a procedure designed to deal with the premature convergence problem is embedded. The fitness to be maximized by the memetic algorithm is defined by the Dice coefficient, a function that evaluates the similarity between pairs of binary images. The proposed methodology is applied to both binary and monochrome images. In the case of monochrome images, a preprocessing step that binarizes the inputs is performed before the registration. The quality of the proposed approach is measured in terms of accuracy and efficiency. The success rate based on the Dice coefficient, normalized mutual information measures, and signal-to-noise ratio are used to establish the accuracy of the obtained algorithm, while the efficiency is evaluated by the run time function.

1. Introduction

Inspiration often comes from nature, and this extends into the field of computer science. Many algorithms mimic the behavior of biological organisms to solve problems that are difficult or impossible to solve in any other way. Such algorithms are increasingly used to approach various problems. They are continually adapted, modified, combined and developed, and seem to have a bright future [1].

With the advancement of technology came an avalanche of images that are used in various sectors. More often than not, they are images of the same object that are not identical, having been recorded by different sensors, at different times, from different angles, under different lighting, or with other variations. These images must be processed before they can be used, and their sheer number makes this task a perfect candidate for automation. Image registration has attracted a lot of attention in the last two decades, as reflected in the numerous papers published. Due to the wide range of variations, many authors turn to bio-inspired evolutionary algorithms. Advancements are regularly surveyed and reported in scientific publications such as [2,3,4,5,6].

Image registration can be applied to both deformable images and rigid transformations and both types are studied through the use of bio-inspired evolutionary algorithms. In [7], an evolutionary algorithm (EA) with multiple objective optimization is used to find the best way to automate registration of deformable images in the medical field. The results indicate the algorithm is suitable for solving problems with limited deformations and can create better images to be used by experts, also freeing their time by automating image processing.

Registration of images with rigid transformations is advanced in [8], where the authors propose a genetic algorithm (GA) that computes the best parameters (for translation, rotation and scale) based on matching shapes of molecules. The results have been validated by applying the algorithm to the registration of various medical image types: magnetic resonance imaging (MRI), computed tomography (CT) and positron emission tomography (PET). The images used for testing were obtained from the retrospective image registration evaluation (RIRE) project. The accuracy of the registration is enhanced by using a better fitness function for the genetic algorithm. The authors highlight the fact that the most commonly used similarity function (the mutual information of two images) has local optima, which bring the risk of the algorithm becoming stuck at one such point. Improvements are needed to ensure that the global optimum is found, and for this purpose the authors combine the widely used mutual information function with an interaction energy function.

All kinds of bio-inspired algorithms are used in reported works, from GA [8,9] and evolutionary algorithms (EA) [7] to newer approaches that employ hybridizations and metaheuristics.

Various articles report on the use of swarm intelligence and derived algorithms for image registration. An in-depth study of particle swarm optimization (PSO), with its shortcomings and numerous developments and hybridizations, is reported in [10]. In [11], the authors also review such algorithms and their hybridization with evolutionary strategies (ES) for biological and medical image registration, indicating promising results for future developments.

The GA approach is compared to the artificial bee colony (ABC) approach in [9], highlighting the advantages of each algorithm: while GA is faster, ABC gives a better quality of image registration. Another comparison between GA and a swarm approach, using the correlation function of two images to estimate the quality of the registration process, is reported in [12], with the conclusion that the PSO approach provides superior results.

Particle swarm optimization sample consensus (PSOSAC) is used in [13] to optimize registration efficiency. The results are compared against those of the random sample consensus (RANSAC) algorithm and are shown to be better.

In [14], the authors use an adaptation of the coral reef optimization algorithm with substrate layers (CRO-SL), with real numbers for encoding the information. Both feature-based and intensity-based variants for registration are attempted. This approach is compared with others and yields very good results.

The bacterial foraging optimization (BFO) algorithm is applied to image registration in [15,16]. The results are compared with those of other recent algorithms and prove to be competitive.

The intensive calculations required for the convergence of bio-inspired algorithms might lead to unfeasible computation times, which is why one of the main concerns is speeding up the algorithms. The use of multiple clusters of data to speed up registration algorithms is an idea presented in several articles. In [17], multiple swarms of ABC are used for this purpose, with very good reported results regarding the computation time. In [18], the authors compare PSO with multi-swarm optimization (MSO) and the cuckoo search algorithm (CSA) for image registration. For the dataset used, PSO offers the best precision, while PSO and MSO offer the best speed and CSA and MSO offer the least scatter of results. As such, no algorithm prevails on all criteria, but the authors mention that these results might be particular to the problem solved and may be different in other cases.

The Firefly paradigm is also used to approach the problem of image registration. As mentioned before, the many local optima are a trap for algorithms that use mutual information as the fitness indicator for image registration. In [19], Firefly is used to overcome this problem by combining lower and higher resolution variants of an image with the Powell algorithm: Firefly produces an imprecise result using the lower resolution images, then the Powell algorithm is applied to the higher resolution images.

A hybrid firefly algorithm (HFA) is used in [20] to solve the problem of slow convergence and for a better coverage of the entire solution space during the search process.

This paper presents a new memetic, cluster-based methodology for image registration. The working assumption is that the sensed images are variants of the targets perturbed by a geometric transformation consisting of rotation, translation and scaling. The proposed approach is applied to align either binary or monochrome images. In both cases, the first step consists of computing the boundaries of the search space based on the object pixels of the processed images. Then, the memetic registration procedure is applied. The alignment of pairs of monochrome images is performed on binarized and resized images. The scaling step is meant to speed up the processing of pairs of images and is used in the case of gray scale images only. The quality of the resulting algorithms is measured in terms of accuracy and efficiency. The success rate based on the Dice coefficient, the normalized Shannon/Tsallis mutual information measures and the signal-to-noise ratio are used to evaluate the accuracy, while the efficiency is established by the run time function. A comparative analysis against two of the most commonly used methods to align images in the case of rigid/affine perturbation, namely the one plus one evolutionary optimizer [21] and the principal axes transform (PAT) [22], experimentally proves the quality of the proposed methodology.

The rest of the paper is organized as follows. The similarity measures used both to define the fitness function and to evaluate the accuracy of the alignment are supplied in Section 2. The proposed methodology is presented in Section 3. We describe the accuracy and efficiency indices in Section 4. A series of experimental results and the comparative analysis concerning the accuracy and the efficiency of the resulting algorithms are presented in Section 5. The final part of the paper includes conclusions and suggestions for further developments regarding bio-inspired methods for image registration.

2. Similarity Measures

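Let X and Y be two binary sets. The Dice coefficient measures the similarity between X and Y by:

$$\mathrm{Dice}(X, Y) = \frac{2\,|X \cap Y|}{|X| + |Y|} \qquad (1)$$

where |A| stands for the cardinality of the set A. Obviously, 0 ≤ Dice(X, Y) ≤ 1, and Dice(X, Y) = 1 if and only if X = Y. The Dice coefficient can be directly applied to pairs of binary images.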
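The computation of the Dice coefficient on binary images can be illustrated by a short Python sketch. It assumes that images are stored as nested lists of 0/1 values, and it treats two empty images as identical; both choices, as well as the name `dice_coefficient`, are illustrative assumptions rather than part of the method.

```python
def dice_coefficient(x, y):
    """Dice coefficient of two equally sized binary images (nested lists of 0/1)."""
    if len(x) != len(y) or any(len(rx) != len(ry) for rx, ry in zip(x, y)):
        raise ValueError("images must have the same shape")
    size_x = sum(map(sum, x))   # number of object pixels in x
    size_y = sum(map(sum, y))   # number of object pixels in y
    # Object pixels shared by both images (cardinality of the intersection).
    overlap = sum(px & py for rx, ry in zip(x, y) for px, py in zip(rx, ry))
    if size_x + size_y == 0:
        return 1.0              # assumed convention: two empty images coincide
    return 2.0 * overlap / (size_x + size_y)


target = [[0, 1, 1],
          [0, 1, 0]]
sensed = [[0, 1, 0],
          [1, 1, 0]]
print(dice_coefficient(target, sensed))   # two shared pixels out of 3 + 3
print(dice_coefficient(target, target))   # identical images
```

Because the value depends only on pixel counts, it can be evaluated in a single pass over the images, which is convenient for a fitness function that is called many times.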

In general cases of monochrome and colored images, more complex functions should be considered instead, one of the most commonly used being the normalized mutual information computed using entropic measures.

Let X and Y be monochrome images with distributions p_X and p_Y, respectively. Note that p_X(i) is the probability of intensity i appearing in image X. We denote by p_XY(i, j) the joint probability, that is, the probability that corresponding pixels in X and Y have intensities i and j, respectively. The joint probability distribution of the images X and Y reflects the relationship between intensities in X and Y. Assuming that L is the number of grey levels of the images, the Shannon entropy of X is defined by:

$$H_S(X) = -\sum_{i=0}^{L-1} p_X(i)\,\log p_X(i) \qquad (2)$$

The joint Shannon entropy is given by:

$$H_S(X, Y) = -\sum_{i=0}^{L-1}\sum_{j=0}^{L-1} p_{XY}(i, j)\,\log p_{XY}(i, j) \qquad (3)$$

Shannon normalized mutual information is defined by [23]:

where the Shannon mutual information is the sum of the two marginal entropies minus the joint entropy. The maximum value of Shannon normalized mutual information is one and it is reached when the two images are identical.
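The entropic quantities above can be estimated from intensity histograms. The following Python sketch computes the marginal entropies, the joint entropy and the mutual information of two images given as flat lists of grey levels. The normalization used in `normalized_mutual_information`, namely twice the mutual information divided by the sum of the marginal entropies, is one common choice that reaches its maximum of one for identical images; it is an assumption made for illustration and is not claimed to be the exact formula of [23]. All function names are hypothetical.

```python
import math
from collections import Counter


def entropy(counts, total):
    """Shannon entropy (in bits) of a histogram given as an iterable of counts."""
    return -sum((c / total) * math.log2(c / total) for c in counts if c > 0)


def shannon_measures(image_a, image_b):
    """Return (H(A), H(B), H(A,B), I(A,B)) for two equally long flat pixel lists."""
    if len(image_a) != len(image_b):
        raise ValueError("images must contain the same number of pixels")
    n = len(image_a)
    h_a = entropy(Counter(image_a).values(), n)
    h_b = entropy(Counter(image_b).values(), n)
    # Joint histogram: counts of intensity pairs at corresponding pixels.
    h_ab = entropy(Counter(zip(image_a, image_b)).values(), n)
    return h_a, h_b, h_ab, h_a + h_b - h_ab


def normalized_mutual_information(image_a, image_b):
    """Assumed normalization 2*I/(H(A)+H(B)); not necessarily the one in [23]."""
    h_a, h_b, _, mi = shannon_measures(image_a, image_b)
    if h_a + h_b == 0:
        return 1.0  # assumed convention for two constant images
    return 2.0 * mi / (h_a + h_b)


pixels = [0, 0, 1, 1, 2, 2, 3, 3]
print(shannon_measures(pixels, pixels))
print(normalized_mutual_information(pixels, pixels))
print(normalized_mutual_information([0, 0, 1, 1], [0, 1, 0, 1]))
```

The last call uses two images whose intensities are statistically independent, for which the mutual information vanishes.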

Shannon mutual information is widely used in image registration, but it is sensitive to noise. To reduce the influence of outliers, one can use similarity measures based on Tsallis entropy instead [22,24].

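The Tsallis entropy of order α of an image X with intensity distribution p_X is defined by [24]

$$H_\alpha(X) = \frac{1}{\alpha - 1}\left(1 - \sum_{i=0}^{L-1} p_X(i)^{\alpha}\right)$$

The joint Tsallis entropy of order α of two images X and Y with joint intensity distribution p_XY is given by

$$H_\alpha(X, Y) = \frac{1}{\alpha - 1}\left(1 - \sum_{i=0}^{L-1}\sum_{j=0}^{L-1} p_{XY}(i, j)^{\alpha}\right)$$

Note that when α approaches 1, the Tsallis entropy approaches the Shannon entropy (computed with the natural logarithm).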
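The limit behavior mentioned above can be checked numerically. The sketch below uses the standard definition of the Tsallis entropy of order α and compares it, for α close to 1, with the Shannon entropy computed with the natural logarithm. The function name and the sample distribution are illustrative assumptions.

```python
import math


def tsallis_entropy(probs, alpha):
    """Tsallis entropy of order alpha of a discrete distribution.

    For alpha == 1 the Shannon entropy (natural logarithm) is returned,
    which is the limit of the Tsallis entropy as alpha tends to 1.
    """
    if alpha == 1.0:
        return -sum(p * math.log(p) for p in probs if p > 0)
    return (1.0 - sum(p ** alpha for p in probs if p > 0)) / (alpha - 1.0)


distribution = [0.5, 0.25, 0.25]
shannon = tsallis_entropy(distribution, 1.0)
for alpha in (2.0, 1.1, 1.001, 1.000001):
    print(alpha, tsallis_entropy(distribution, alpha), shannon)
```

For α = 2 the entropy reduces to one minus the sum of squared probabilities, which makes it less sensitive to low-probability intensities than the Shannon entropy.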

For α > 1, Tsallis mutual information is expressed as:

and Tsallis normalized mutual information

For α > 1, the following properties hold [25]

- if X and Y are independent

- if .

In our work the fitness function is defined in terms of the Dice coefficient, while the Shannon and Tsallis normalized mutual information measures, the latter with α > 1, are used to evaluate the accuracy of the registration procedure.

3. The Proposed Methodology for Binary Image Alignment

The proposed methodology used to align two binary images is developed to deal with perturbations involving rotation, translation and scaling. Note that in the image registration literature, a rigid transformation involves either rotation and translation [4,22], or rotation, translation and scale changes [26]. The first case corresponds to rigid geometric transformations that preserve distances and is given by three parameters: the translation [a, b] and the rotation angle θ. In the second approach, objects in the images retain their relative shape and position, and the rigid transformation is defined by four parameters: the translation [a, b], the rotation angle θ and the scale factor s. From the geometrical point of view, the transformation corresponds to a similarity with stretching factor s. In our work, we used the second version of rigid transformations.

Let T be the target image of size . The sensed image S results as a geometric transformation of T, defined in terms of rotation, translation and scale changes. Let be the rotation angle defining the rotation matrix , s the scale factor and the translation vector. For each pixel , the output is given by:

where

The computation of the transformation parameters can be carried out by an evolutionary algorithm, where the boundaries of the search space are established using the sets of object pixels belonging to the target image and to the sensed image respectively.
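As an illustration of the perturbation model described above, the following Python sketch applies a four-parameter similarity transform (translation, rotation angle, scale factor) to pixel coordinates and inverts it. It is a minimal sketch under assumptions not fixed by the text: the rotation is counterclockwise about the coordinate origin, the rotated point is scaled before the translation is added, and the function names are hypothetical.

```python
import math


def similarity_transform(point, a, b, theta, s):
    """Map (x, y) to s * R(theta) * (x, y) + (a, b).

    Assumed convention: R(theta) = [[cos, -sin], [sin, cos]] is the
    counterclockwise rotation about the origin; theta is in radians.
    """
    x, y = point
    c, sn = math.cos(theta), math.sin(theta)
    return (s * (c * x - sn * y) + a, s * (sn * x + c * y) + b)


def inverse_similarity_transform(point, a, b, theta, s):
    """Undo similarity_transform: remove the translation, rotate back, rescale."""
    x, y = point[0] - a, point[1] - b
    c, sn = math.cos(theta), math.sin(theta)
    return ((c * x + sn * y) / s, (-sn * x + c * y) / s)


params = dict(a=1.0, b=1.0, theta=math.pi / 2, s=2.0)
moved = similarity_transform((1.0, 0.0), **params)
restored = inverse_similarity_transform(moved, **params)
print(moved, restored)
```

Registration amounts to estimating the four parameters so that applying the inverse transform to the sensed image reproduces the target; the inverse exists whenever the scale factor is non-zero.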

The components of our methodology are described below.

3.1. The Search Space Boundaries

The first stage of our method consists in defining the search space. Based on the assumption that the deformation is reversible, that is each object in T corresponds to a certain object in S, namely its perturbed version, the computation of the search space boundaries is performed taking into account only the object pixels.

We consider that the initial image was rotated to the left, that is and the scale factor . Obviously, similar results can be obtained in case of right-side rotations. We denote by the set of object pixels belonging to S, let be the set of object pixels of T and

Using (11) we obtain

Using straightforward computation, since , we obtain

and

We obtain the following definition domain of parameter a

In the same way, since

we obtain

and consequently, the definition domain of b is given by

The proposed image registration method aims to compute the parameter such that the relations (10) and (11) hold, where:

3.2. Metaheuristics for Image Registration

The binary image registration procedure can be developed using evolutionary approaches. The proposed methodology uses a specially tailored version of the Firefly algorithm and the standard two-membered evolutionary strategy (2MES) to compute a solution of (10). In this section, we briefly describe the versions of the Firefly algorithm and 2MES specially tailored to binary image registration [27,28].

From the evolutionary algorithms point of view, solving the problem (10) involves defining a search space and a fitness function, and applying an iterative procedure to compute an individual that maximizes the fitness. In our approach, the search space is defined by (19) and, for each candidate solution , the fitness function measures the similarity between the target image T and the image ,

where .

Evolutionary Strategies (ES) are self-adaptive methods for continuous parameter optimization. The simplest algorithm belonging to the ES class is 2MES, a local search procedure that computes a sequence of candidate solutions based on Gaussian mutation with adaptive step size. Briefly, the search starts with a randomly generated/input vector , an initial step size and the values and implementing the self-adaptive Rechenberg rule [29]. At each iteration t, the algorithm computes:

where is randomly generated from the distribution . The dispersion is updated every steps according to Rechenberg rule:

where is the number of distinct vectors computed by the last updates. The search ends either when the fitness is good enough, i.e., the maximum value exceeds a threshold , or when a maximum number of iterations MAX has been reached. Let us denote by 2MES(x,,,,, MAX, S, T) the 2MES procedure with the initial input vector . The procedure computes the improved version of x, , using the termination condition defined by the parameters and MAX, respectively.

Note that the 2MES algorithm usually computes local optima; in hybrid or memetic approaches it is used to locally improve candidate solutions computed by global search procedures.
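The local search described above can be sketched as a two-membered evolution strategy with Gaussian mutation and the classical one-fifth success rule of Rechenberg. The sketch is illustrative only: the fitness function is a toy example, and the parameter names and default values (`theta`, `window`, `threshold`, `max_iter`) are assumptions, not the settings used in the experiments.

```python
import random


def two_membered_es(fitness, x0, sigma0, theta=0.85, window=20,
                    threshold=0.999, max_iter=2000, rng=None):
    """Maximize `fitness` starting from x0 with a (1+1) evolution strategy.

    Every `window` iterations the step size is adapted by the 1/5 success
    rule: enlarged if more than 1/5 of the mutations succeeded, reduced if
    fewer did. `theta` in (0, 1) is the assumed adaptation constant.
    """
    rng = rng or random.Random()
    x, fx, sigma = list(x0), fitness(x0), sigma0
    successes = 0
    for t in range(1, max_iter + 1):
        if fx >= threshold:              # fitness is good enough
            break
        candidate = [xi + rng.gauss(0.0, sigma) for xi in x]
        fc = fitness(candidate)
        if fc > fx:                      # keep the offspring only if it is better
            x, fx = candidate, fc
            successes += 1
        if t % window == 0:              # Rechenberg one-fifth rule
            rate = successes / window
            if rate > 0.2:
                sigma /= theta
            elif rate < 0.2:
                sigma *= theta
            successes = 0
    return x, fx


# Toy fitness with maximum 1 at (3, -2); it stands in for the Dice-based fitness.
goal = [3.0, -2.0]
toy_fitness = lambda v: 1.0 / (1.0 + sum((vi - gi) ** 2 for vi, gi in zip(v, goal)))
start = [0.0, 0.0]
best, best_fit = two_membered_es(toy_fitness, start, sigma0=1.0, rng=random.Random(1))
print(best, best_fit)
```

Since an offspring replaces the parent only when it is strictly better, the returned fitness is never lower than that of the starting vector, which is what makes the procedure safe to use as a local improvement step.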

The firefly algorithm (FA) is a nature-inspired optimization procedure introduced in [30]. FA belongs to the class of swarm intelligence methods and mimics the behavior of fireflies and their bioluminescent communication. The ideas underlying FA are that each firefly is attracted by the flashes emitted by all other fireflies, and that the attractiveness of an individual is linked to the brightness of its flashes and influenced by light absorption and the law of light variation with distance.

In terms of image registration problem (10), the position of a firefly i corresponds to a candidate solution , its light intensity being given by . For each pair of fireflies i and j, if j is brighter than i, that is , then i is attracted by j, its position being updated based on the following equation:

where controls the randomness, is randomly drawn from , is the attractiveness of j seen by i defined by:

where is the Euclidian distance between i and j. The constant is the brightness of any firefly at and represents the light absorption coefficient.

Usually, the update rule (25) is applied if the attractiveness of i in the new location is higher than the attractiveness corresponding to the old position . The termination criterion of the FA is formulated in terms of number of iterations. Obviously, if the maximum value of the brightness function is known, the FA ends when the current best individual is good enough.
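The attraction step can be sketched as follows, using the formulation that is standard in the FA literature: the attractiveness decays exponentially with the squared distance, and the attracted firefly moves toward the brighter one with an added random perturbation. The uniform perturbation on [-0.5, 0.5], the default parameter values and the function names are assumptions made for illustration; the tailored update rule of [27] differs from this basic form.

```python
import math
import random


def attractiveness(beta0, gamma, distance):
    """Attractiveness beta0 * exp(-gamma * r^2) of a firefly seen from distance r."""
    return beta0 * math.exp(-gamma * distance ** 2)


def move_towards(x_i, x_j, beta0=1.0, gamma=1.0, alpha=0.1, rng=None):
    """Move firefly i toward the brighter firefly j (standard FA step).

    alpha scales a uniform random perturbation on [-0.5, 0.5] (assumed).
    """
    rng = rng or random.Random()
    beta = attractiveness(beta0, gamma, math.dist(x_i, x_j))
    return [xi + beta * (xj - xi) + alpha * (rng.random() - 0.5)
            for xi, xj in zip(x_i, x_j)]


# With no absorption and no randomness, firefly i jumps onto firefly j.
print(move_towards([0.0, 0.0], [2.0, 4.0], beta0=1.0, gamma=0.0, alpha=0.0))
# With absorption, distant fireflies attract each other only weakly.
print(attractiveness(1.0, 1.0, 0.0), attractiveness(1.0, 1.0, 3.0))
```

The absorption coefficient therefore controls how local the search is: large values make each firefly respond mainly to nearby brighter individuals.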

The image registration problem (10) has been solved using the fixed-size model of FA, where the population at time t, , has individuals, , and [27]. Note that the search space used in the cited work is predefined, it does not change or adapt in any way depending on the image properties.

The initial population, , is randomly generated according to the uniform probability distribution . We denote by a constant scale factor and let be a draw from the uniform distribution on the interval [a, b]. The update rule introduced in [27] is given by:

In addition, a border reflection rule has been proposed to deal with unfeasibility:

where and represents a draw from uniform distribution on [a, b].

Note that in the update rule (27) the quality of the attractor affects the randomness parameter. For an attractor with high luminous intensity, less randomness is added; if the flashes emitted by the attracting firefly are weak, the perturbation grows.

3.3. Cluster-Based Memetic Registration

The proposed methodology is based on a core cluster-based memetic algorithm developed to register pairs of binary images. The global search procedure is directed by the variant of FA described in Section 3.2, where the positions of fireflies belong to the domain defined by (19). The fitness function defined by (22) implements Dice coefficient. Note that the maximum value of the fitness function is one.

The initial population is randomly generated and a small number of individuals are locally improved (a fixed percentage of population size). The local optimization is implemented by 2MES method provided in Section 3.2. We denote by nr the number of individuals to be initially processed by 2MES.

The population-based optimization is an iterative process: at each iteration t, the algorithm selects pairs of distinct individuals and, if , applies the update rule (27). If the brightness of the best individual in does not exceed the highest fitness value in , then the local optimization procedure 2MES is used. The individuals selected for further improvement are computed based on clustered data. The candidate solutions in are grouped into k clusters using the Euclidean distance metric, and one individual per cluster is chosen to be locally improved. The selection can be random or deterministic; for instance, one may choose either the centroid or the best candidate solution of each cluster.
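The cluster-based selection can be sketched in a few lines of Python. The sketch groups the population with a basic k-means procedure (Lloyd iterations with the Euclidean distance) and returns the fittest individual of each cluster, which is one of the deterministic selection options mentioned above. The fixed number of Lloyd iterations, the random initialization and the function names are illustrative assumptions.

```python
import random


def sq_dist(p, q):
    """Squared Euclidean distance between two parameter vectors."""
    return sum((pi - qi) ** 2 for pi, qi in zip(p, q))


def kmeans_labels(points, k, iters=20, rng=None):
    """Basic k-means (Lloyd) clustering; returns one cluster label per point."""
    rng = rng or random.Random(0)
    if not 1 <= k <= len(points):
        raise ValueError("k must be between 1 and the population size")
    centroids = [list(p) for p in rng.sample(points, k)]
    labels = [0] * len(points)
    for _ in range(iters):
        labels = [min(range(k), key=lambda c: sq_dist(p, centroids[c]))
                  for p in points]
        for c in range(k):
            members = [p for p, lab in zip(points, labels) if lab == c]
            if members:  # an empty cluster keeps its previous centroid
                centroids[c] = [sum(col) / len(members) for col in zip(*members)]
    return labels


def best_per_cluster(population, fitness, k, rng=None):
    """Return the fittest individual of each non-empty cluster."""
    labels = kmeans_labels(population, k, rng=rng)
    best = {}
    for individual, lab in zip(population, labels):
        if lab not in best or fitness(individual) > fitness(best[lab]):
            best[lab] = individual
    return list(best.values())


population = [(0.0, 0.0), (0.1, 0.0), (0.0, 0.1),
              (10.0, 10.0), (10.1, 10.0), (10.0, 10.1)]
toy_fitness = lambda v: -sq_dist(v, (10.0, 10.0))
chosen = best_per_cluster(population, toy_fitness, k=2, rng=random.Random(0))
print(chosen)
```

Choosing one representative per cluster spreads the costly local optimization over different regions of the search space instead of spending it on several nearly identical candidates.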

In addition, the proposed hybridization between the population-based search and the local optimization procedure is designed to reduce the risk of premature convergence. Basically, two mechanisms are developed to deal with premature convergence. On the one hand, at each iteration t, the number of clusters is set inversely proportional to the fitness value of the best individual in , denoted by . Let be the initial number of clusters, set as a small percentage of the population size. We propose the computation rule:

Moreover, the initial step size of 2MES procedure increases in case its consecutive iterations do not lead to quality improvement. The proposed update rule is given by:

Note that .

On the other hand, if the fitness is not improved over it2, it2 > it1, consecutive iterations, some new individuals are created to replace a set of randomly selected old ones. The newly created individuals are randomly generated using the uniform probability distribution, each one of them being improved next by 2MES procedure. We denote by NEW(ind) the procedure that refreshes by adding individuals, as we explained above.

The search is over after NMAX iterations or when the best computed fitness value is above a threshold .

The detailed description of the proposed algorithm is provided below. We denote by S and T the sensed image and the target image, respectively. The parameters ,,, and MAX correspond to the 2MES procedure applied to improve the initial population and we denote by , , , , MAX′ the parameters of the local optimizer applied on clustered data. The parameters and are specific to FA, according to Section 3.2 and let us denote by the current population at the tth iteration. The variable counter counts the number of consecutive populations having the same best fitness value.

Algorithm 1. Cluster-based memetic algorithm

Algorithm 2. Computation of the initial population

Algorithm 3. FA iteration

3.4. Monochrome Image Registration

The method described by Algorithm 1 can also be applied, after a preprocessing stage, when monochrome images are to be registered. The main idea is to binarize the images by representing them using only the boundaries of their objects. However, depending on the complexity and quality of the analyzed images, further specific image processing techniques may be needed, for instance image enhancement, de-blurring and noise removal. Alternatively, one can use edge detectors that are insensitive to noise and variations in illumination. Examples of such filters are reported in [31,32,33].

In the following we assume that the input images have already been processed such that a contour detection mechanism can be applied.

Let S and T be the sized sensed and target image respectively and we assume that the rigid transformation is given by the parameters . The proposed registration procedure consists of the following steps. First, a contraction mechanism, for example a scale transformation with supra-unitary factor, is applied. If we denote by the contraction factor, the images S and T are transformed according to:

where and

The main aim of the transforms (31) and (32) is to reduce the size of objects in the processed images and hence the complexity of search.

The next step is to represent and using only the contour of the objects belonging to the images. We denote by and the results of applying an edge detector to and , respectively. Obviously, is a perturbed version of . We denote by the parameters of the corresponding perturbation. Using straightforward computation, we obtain:

Consequently, to compute the parameters we first apply the Algorithm 1 to obtain an approximation of , and then use Equation (34).
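The effect of the contraction on the transformation parameters can be checked numerically. The sketch below assumes that the contraction divides pixel coordinates by the factor `cf` about the coordinate origin and that the perturbation is a similarity transform with counterclockwise rotation. Under these assumptions the rotation angle and the scale factor are unchanged by the contraction, while the translation is divided by `cf`, so the full-scale translation is recovered by multiplying by `cf`. The function names are hypothetical and the relation is derived here for illustration only.

```python
import math


def similarity(point, a, b, theta, s):
    """Similarity transform s * R(theta) * (x, y) + (a, b), theta in radians."""
    x, y = point
    c, sn = math.cos(theta), math.sin(theta)
    return (s * (c * x - sn * y) + a, s * (sn * x + c * y) + b)


def contract(point, cf):
    """Assumed contraction: divide both coordinates by the factor cf."""
    return (point[0] / cf, point[1] / cf)


def full_scale_parameters(a_c, b_c, theta_c, s_c, cf):
    """Map parameters estimated on contracted images back to the original size."""
    return (cf * a_c, cf * b_c, theta_c, s_c)


cf = 2.0
a, b, theta, s = 12.0, -6.0, -0.3, 1.2
pixel = (40.0, 25.0)

# Transform first and contract afterwards ...
lhs = contract(similarity(pixel, a, b, theta, s), cf)
# ... or contract first and transform with the translation divided by cf.
rhs = similarity(contract(pixel, cf), a / cf, b / cf, theta, s)
print(lhs, rhs)
print(full_scale_parameters(a / cf, b / cf, theta, s, cf))
```

Both orders of operations give the same point, which is why the registration can be carried out on the smaller images and the result transferred to the original ones.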

3.5. Monochrome Image Registration in Case of Scaling on Multiple Dimensions

The proposed methodology can be extended to the case of more general perturbation models, in which each dimension is scaled with a specific stretching factor. The transformation is given by:

where

for each . The scale matrix is such that .

The search space boundaries can be computed similarly to (10) and (11). If and , by straightforward computation we obtain,

Consequently, the proposed image alignment method aims to compute the parameters such that the relations (37) and (38) hold, where

For each candidate solution , the fitness function measures the similarity between the target image T and the image ,

where .

The methodology described in Section 3.4 can be applied to align images perturbed by (38) using the fitness function defined by (44).

4. Efficiency Measures

Let S be the sensed image and we denote by T the target. The images have the same size, . The accuracy of the registration method is measured through the success rate and using the similarity between T and the result of applying the alignment process on S, denoted by . We evaluate the similarity between T and using two metrics commonly used in image processing, signal-to-noise-ratio (SNR) and peak-signal-to-noise ratio (PSNR), and two entropic measures, Shannon normalized mutual information defined by (4) and Tsallis normalized mutual information given by (9). The values and are given by

Note that the fitness function used to align pairs of images is also a similarity measure computed between binary images, Dice coefficient (1).
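For reference, the two signal-based measures can be computed as in the following Python sketch. It uses definitions that are common in image processing: SNR as the ratio between the energy of the target and the energy of the difference image, and PSNR as the ratio between the squared peak value and the mean squared error, both expressed in decibels. These definitions, the default peak value of 255 and the function names are assumptions; the exact expressions used in the experiments may differ.

```python
import math


def snr_db(target, restored):
    """Signal-to-noise ratio in dB between a target and a restored image."""
    signal = sum(t * t for row in target for t in row)
    noise = sum((t - r) ** 2
                for row_t, row_r in zip(target, restored)
                for t, r in zip(row_t, row_r))
    return math.inf if noise == 0 else 10.0 * math.log10(signal / noise)


def psnr_db(target, restored, peak=255):
    """Peak signal-to-noise ratio in dB; `peak` is the assumed maximum grey level."""
    n = sum(len(row) for row in target)
    mse = sum((t - r) ** 2
              for row_t, row_r in zip(target, restored)
              for t, r in zip(row_t, row_r)) / n
    return math.inf if mse == 0 else 10.0 * math.log10(peak * peak / mse)


target_img = [[10, 20], [30, 40]]
restored_img = [[10, 20], [30, 42]]
print(snr_db(target_img, restored_img), psnr_db(target_img, restored_img))
print(snr_db(target_img, target_img))   # identical images: infinite SNR
```

Both measures grow as the restored image approaches the target, and both are infinite for a perfect restoration, which is why finite thresholds or mean values over several runs are used in practice.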

Due to the fact that the proposed methodology is of stochastic type, one way to evaluate its effectiveness is to compute the success rate, that is the percentage of runs that led to the correct registration. From technical point of view, the result of applying Algorithm 1 to the pair (S, T) is correct if the obtained image corresponds to an individual such that . Consequently, the success rate of the algorithm is given by:

where NS is the number of successful runs and NR is the total number of algorithm executions.

To evaluate the accuracy of the proposed method, we compute the mean values of the above-mentioned similarities. If we denote by the registered versions computed by the algorithm and , we obtain

The efficiency of the proposed method is experimentally evaluated by the runtime function. If we denote by the execution times consumed by Algorithm 1 to register the pair (S, T), the mean runtime value is computed by

From a theoretical point of view, computational complexity may be evaluated in several ways. In our analysis we use the size of the population (n) and the maximum number of iterations corresponding to FA (NMAX) and 2MES (MAX, MAX′, where MAX and MAX′ have the same magnitude) to estimate the worst-case scenario. Since 2MES performs at most MAX iterations, the initial population generation algorithm (Algorithm 2) has a complexity of O(n*MAX). The complexity of Algorithm 1 is influenced by the k-means clustering algorithm. Since in our method the number of clusters depends linearly on the population size and the complexity of k-means is O(k*n*chromosome size), the resulting complexity of the clustering algorithm is O(n²). The 2MES procedure performs at most MAX′ iterations and is called inside a loop depending on k, which means the complexity of this loop is O(n*MAX′). An iteration of the FA algorithm (Algorithm 3) has a complexity of O(n²). Each of the NMAX iterations of Algorithm 1 therefore costs O(n² + n*MAX′), which reduces to O(n*MAX) because in most cases n << MAX. Consequently, the complexity of the proposed method is O(NMAX*n*MAX). Since NMAX and MAX have a similar magnitude, this leads to a complexity that is quadratic in the number of iterations.

5. Experimental Results and Discussion

To derive conclusions regarding the quality of the proposed approach, a long series of tests has been conducted on both binary and monochrome images. The results were obtained using the following configuration: processor Intel Core i7-10870H up to 5.0 GHz, 16 GB RAM DDR4, SSD 512 GB, NVIDIA GeForce GTX 1650Ti 4 GB GDDR6.

5.1. Binary Image Registration

Our tests have been conducted on a set of 16 binary images representing signatures, all having the same size pixels. The images, denoted by , are perturbed by the rigid transformation (10) and (11) with various perturbation parameters. The rotation angle is between and 0, while the scale factor was set in . The translation parameters are and . The rigid transformation parameters correspond to the working assumption that the perturbation process is totally reversible, that is the object pixels are completely encoded in the sensed images.

The search space is computed using (19). Note that the intervals and are significantly larger than and . For instance, in case of , and , while and .

Since the perturbation process is totally reversible, the fitness threshold is set close to the maximum value, one. In our test . The rest of the input parameters are set as follows: , , , , , , cf = 2, , , , , , , and .

The experimentally established results regarding the accuracy and the efficiency of Algorithm 1 are provided in Table 1. Note that the success rate is 100% for all pairs of images, and the SNR values are computed for images having the gray levels in . The computation is over when the maximum fitness value is at least 0.9.

Table 1. The results of applying Algorithm 1 in the case of pairs of binary images.

5.2. Monochrome Image Registration

In the case of more complex, monochrome images, the assumption that the perturbation process is completely reversible is rather unrealistic. From the technical point of view, it means that the search procedure cannot compute an individual with fitness 1, even when the rigid transformation parameters are correctly determined. Obviously, in such cases the threshold should be set to lower values, and the evaluation of accuracy should take into account the similarity between the aligned version of S and the initial image with the missing parts instead of the target T.

Our tests were conducted on images belonging to the well-known Yale Face Database [34,35], which contains 165 greyscale images of 15 individuals, 11 images per subject/class. The results reported in Tables 2 to 4 refer to 30 images, two for each person, while the images displayed in Figures 1 to 10 correspond to two classes.

Table 2. The numerical results obtained by applying the proposed method.

Table 3. The numerical results obtained by applying the PAT method.

Table 4. The numerical results obtained by applying the EO method.

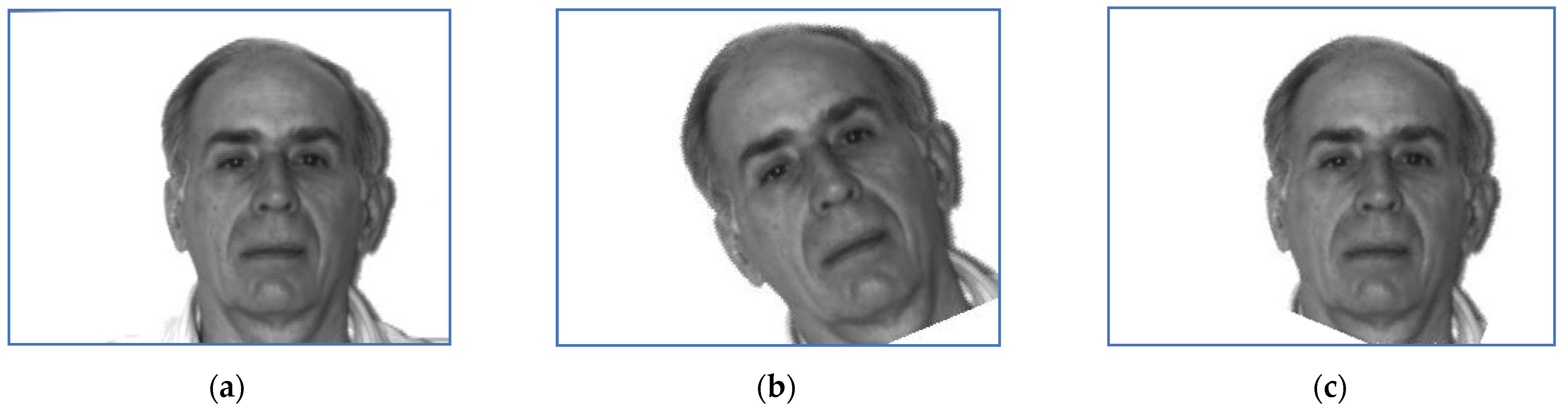

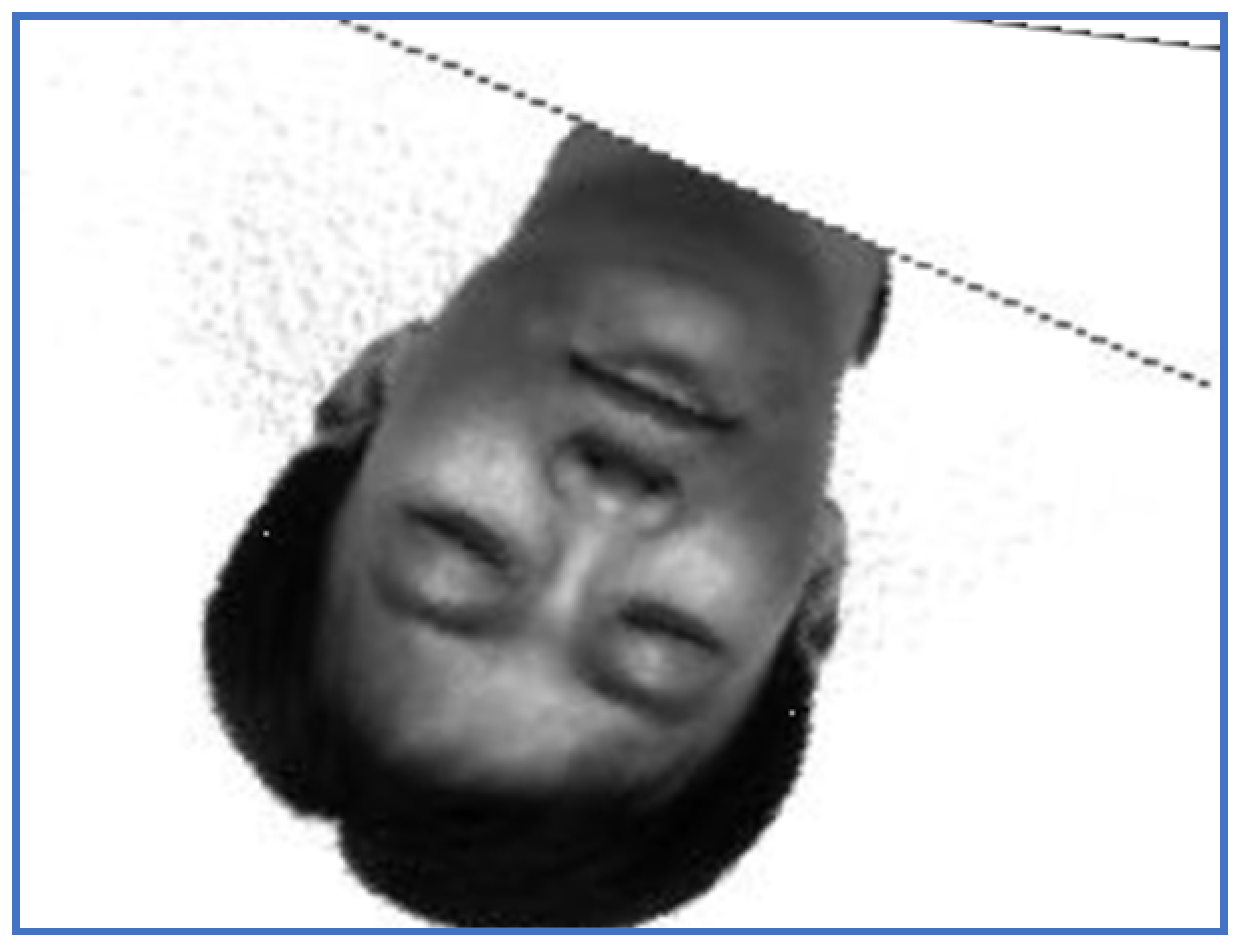

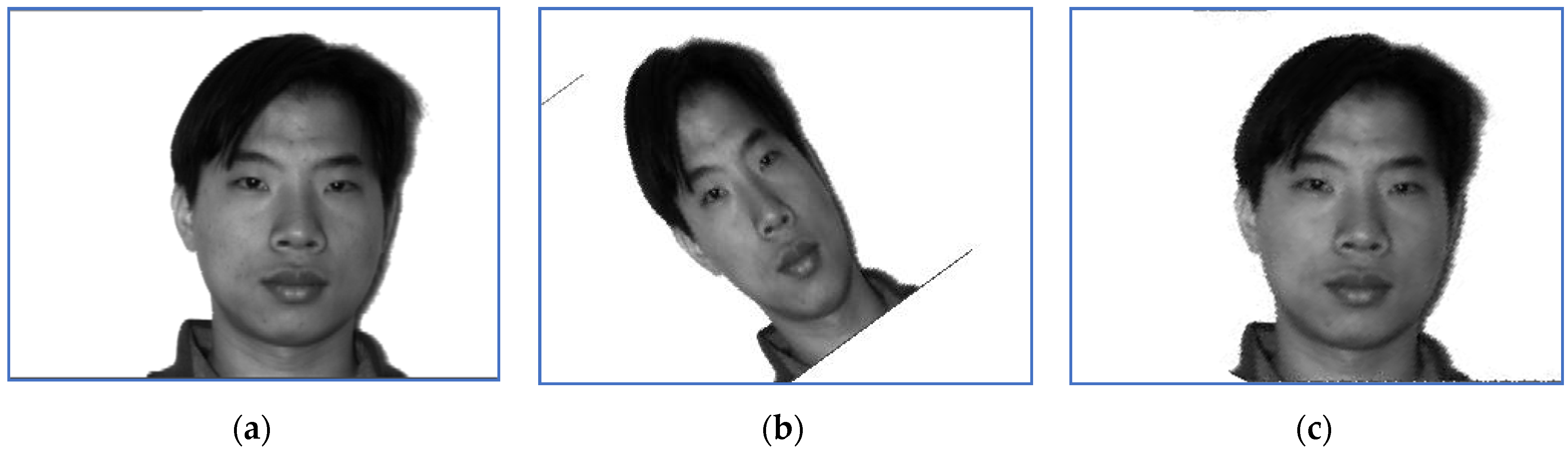

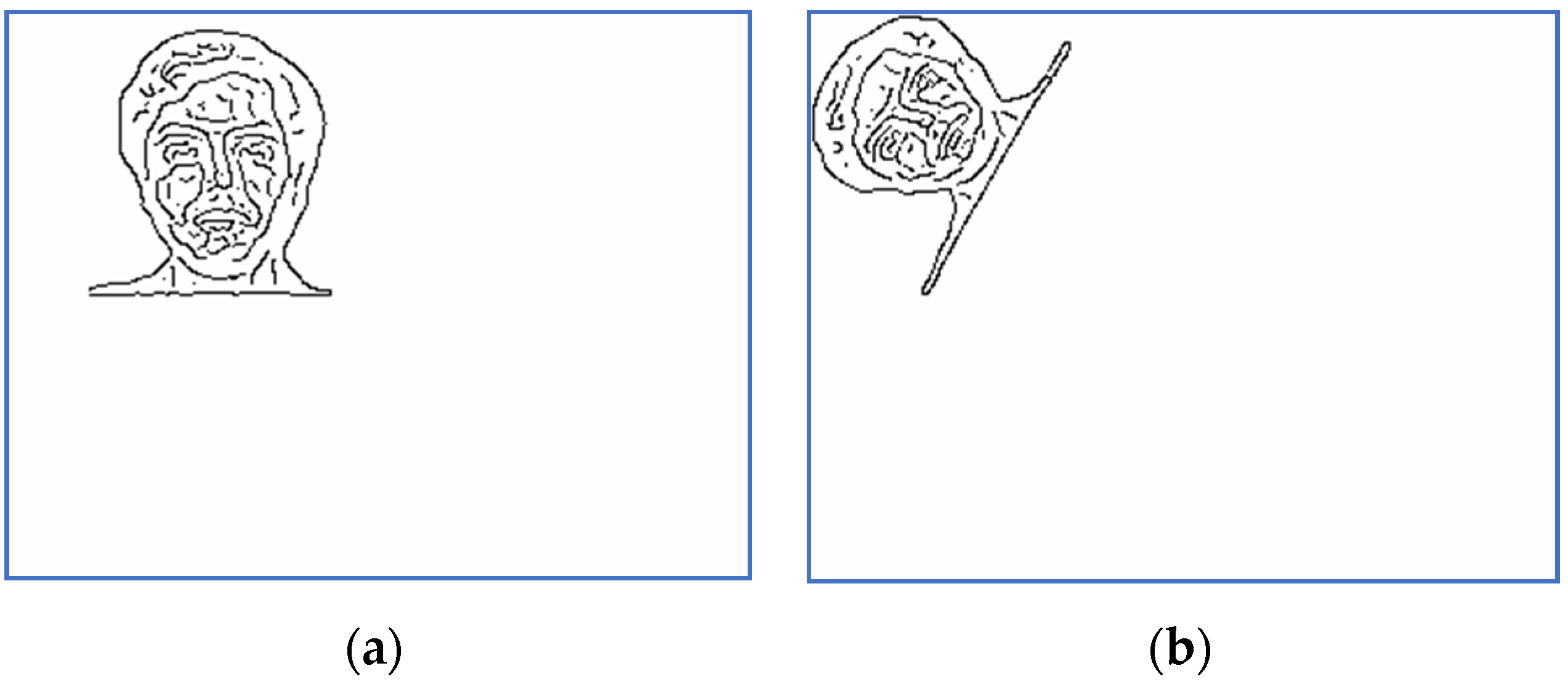

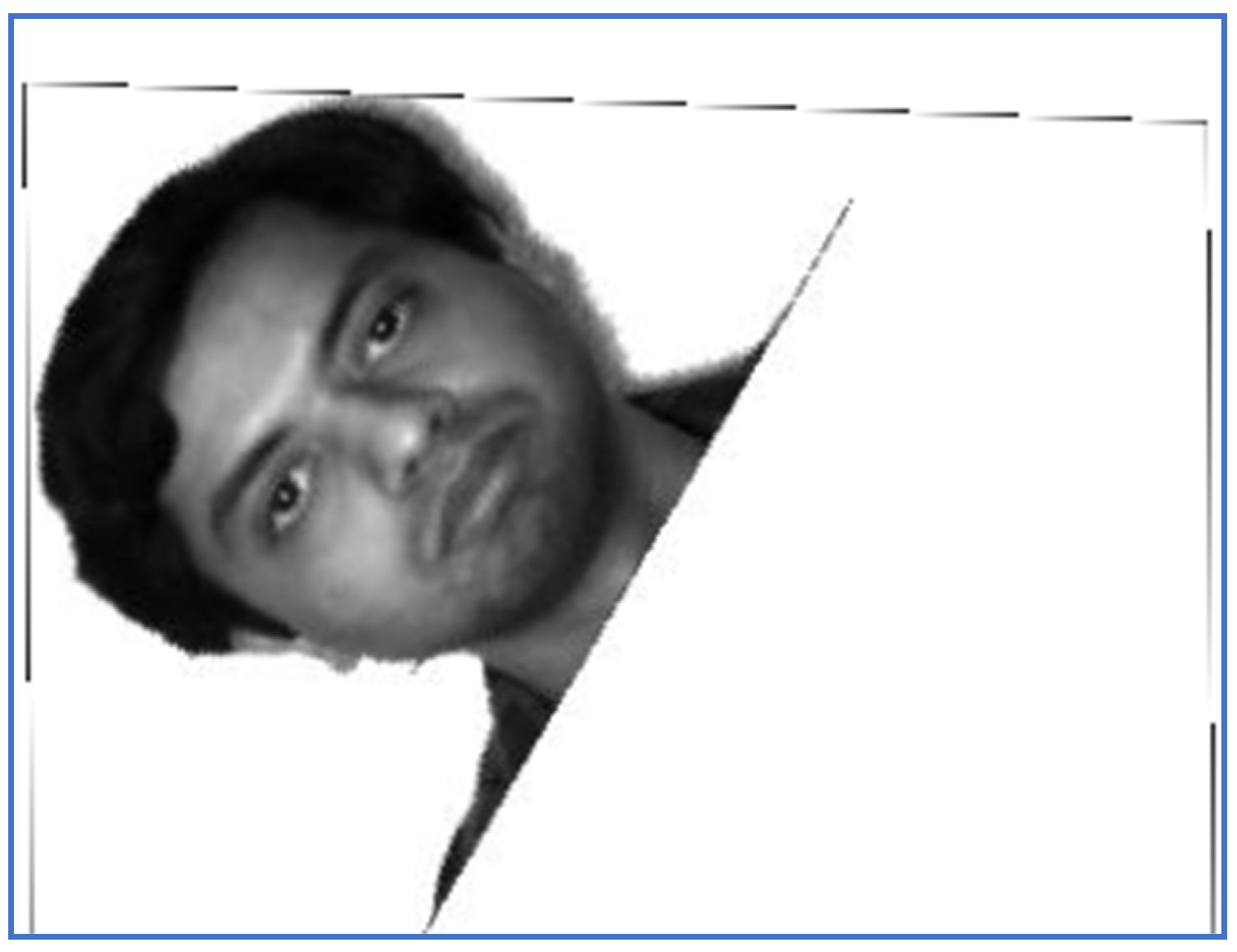

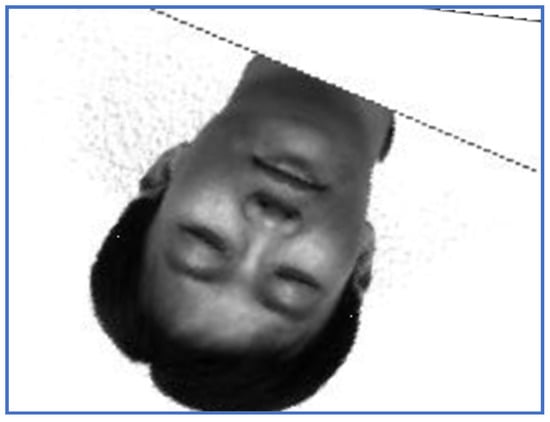

Figure 1. An image of Subject 5. (a) Target image, (b) sensed image, (c) correctly restored image.

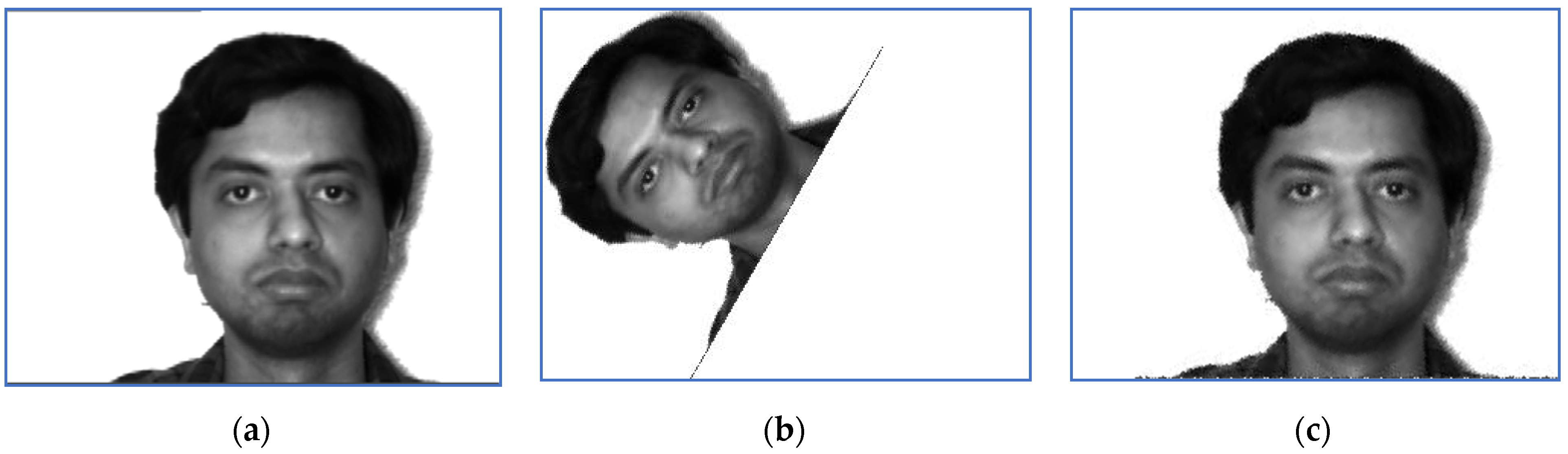

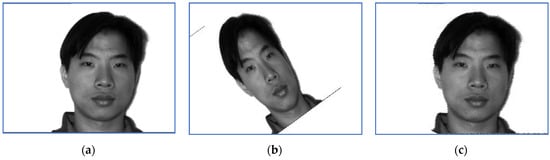

Figure 2.

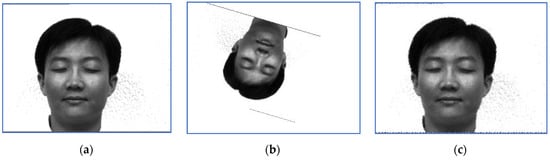

An image of Subject 14. (a) Target image, (b) sensed image, (c) correctly restored image.

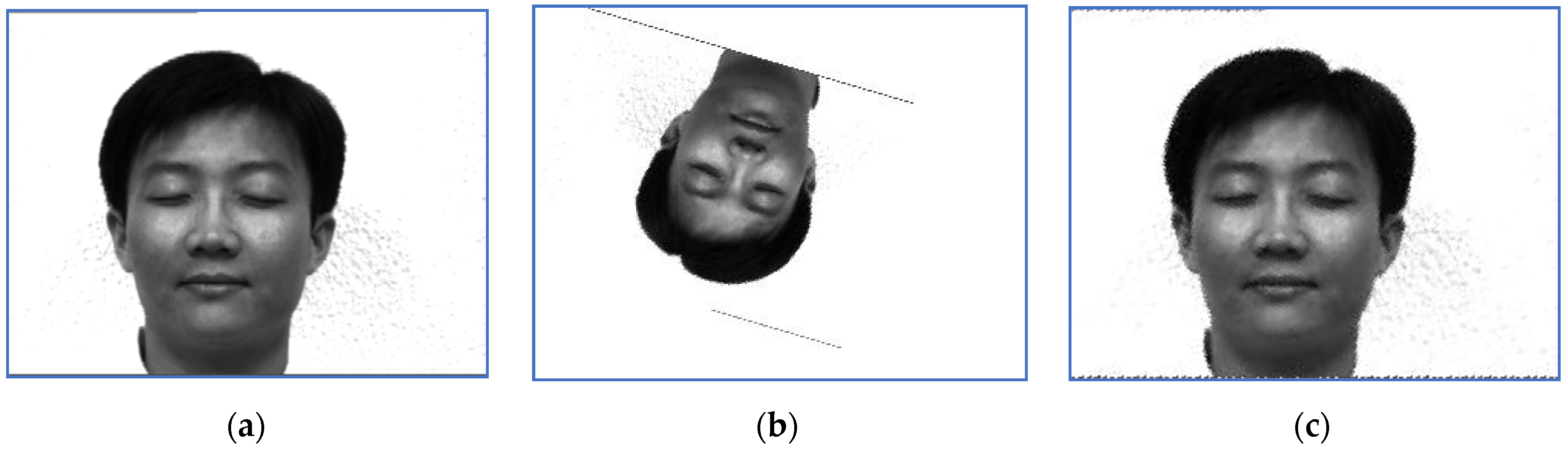

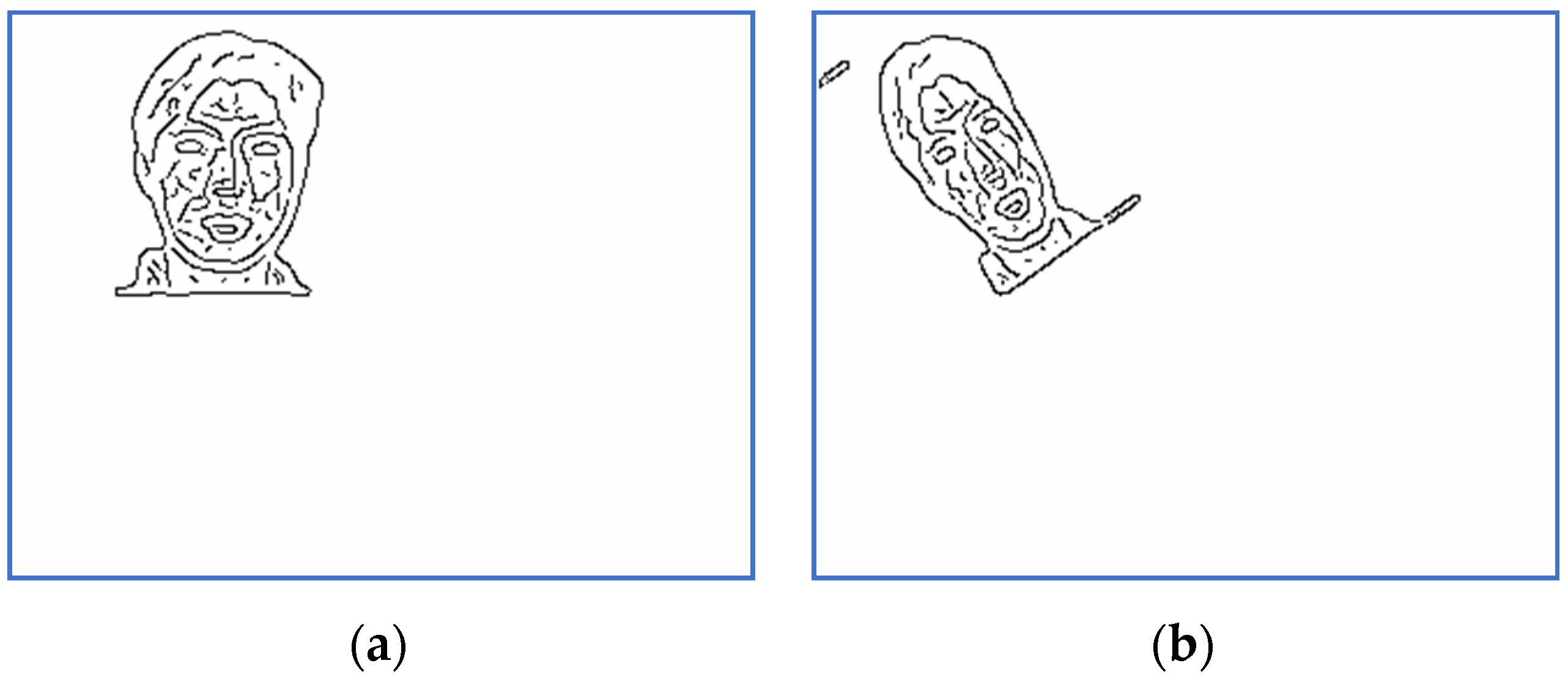

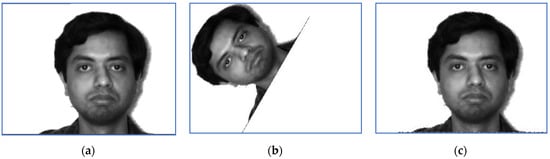

Figure 3. Scaled and binarized images for Subject 5: (a) target; (b) sensed.

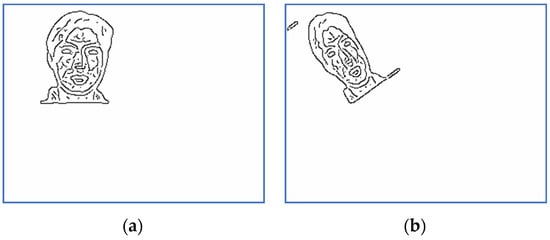

Figure 4. Scaled and binarized images for Subject 14: (a) target; (b) sensed.

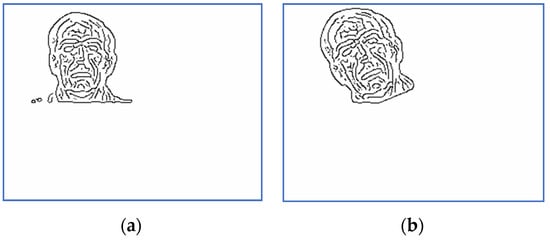

Figure 5. The restored image (Subject 5).

Figure 6. The restored image (Subject 14).

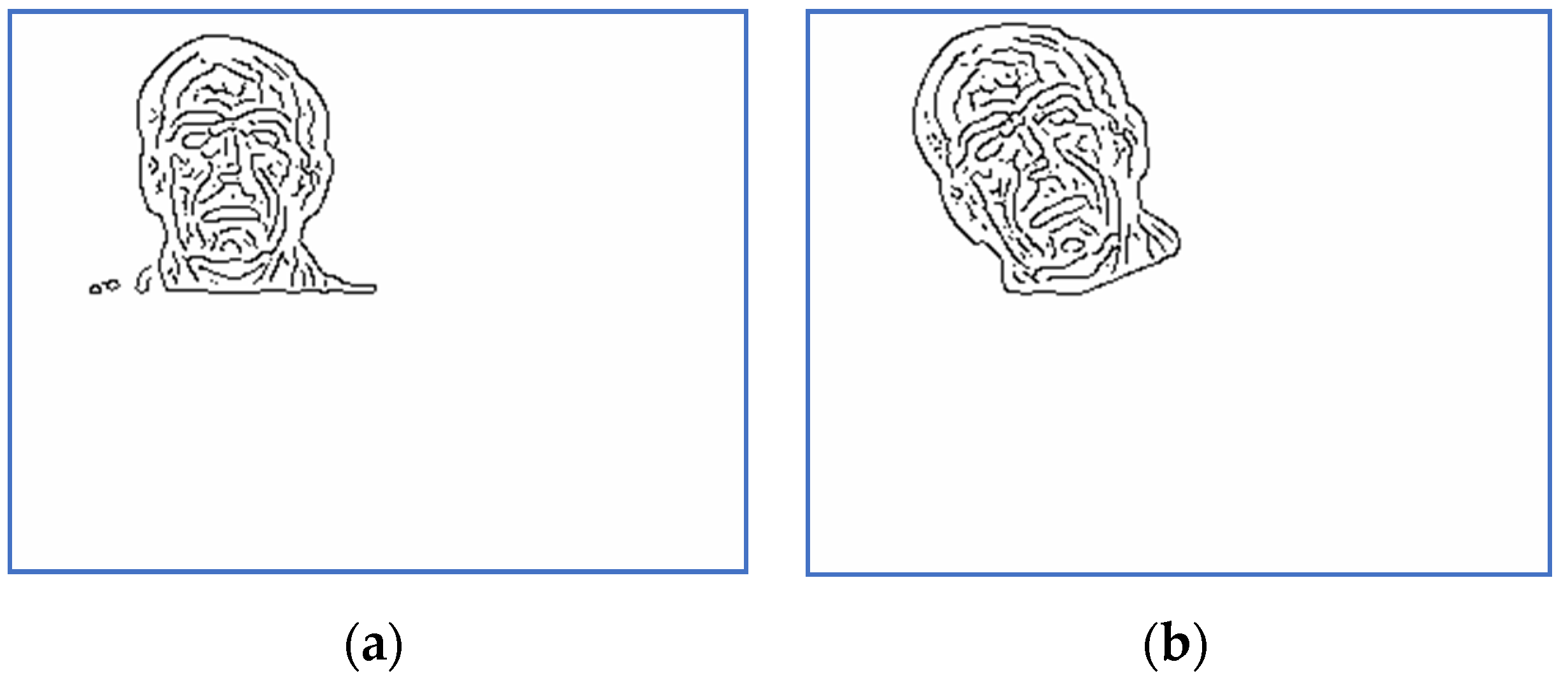

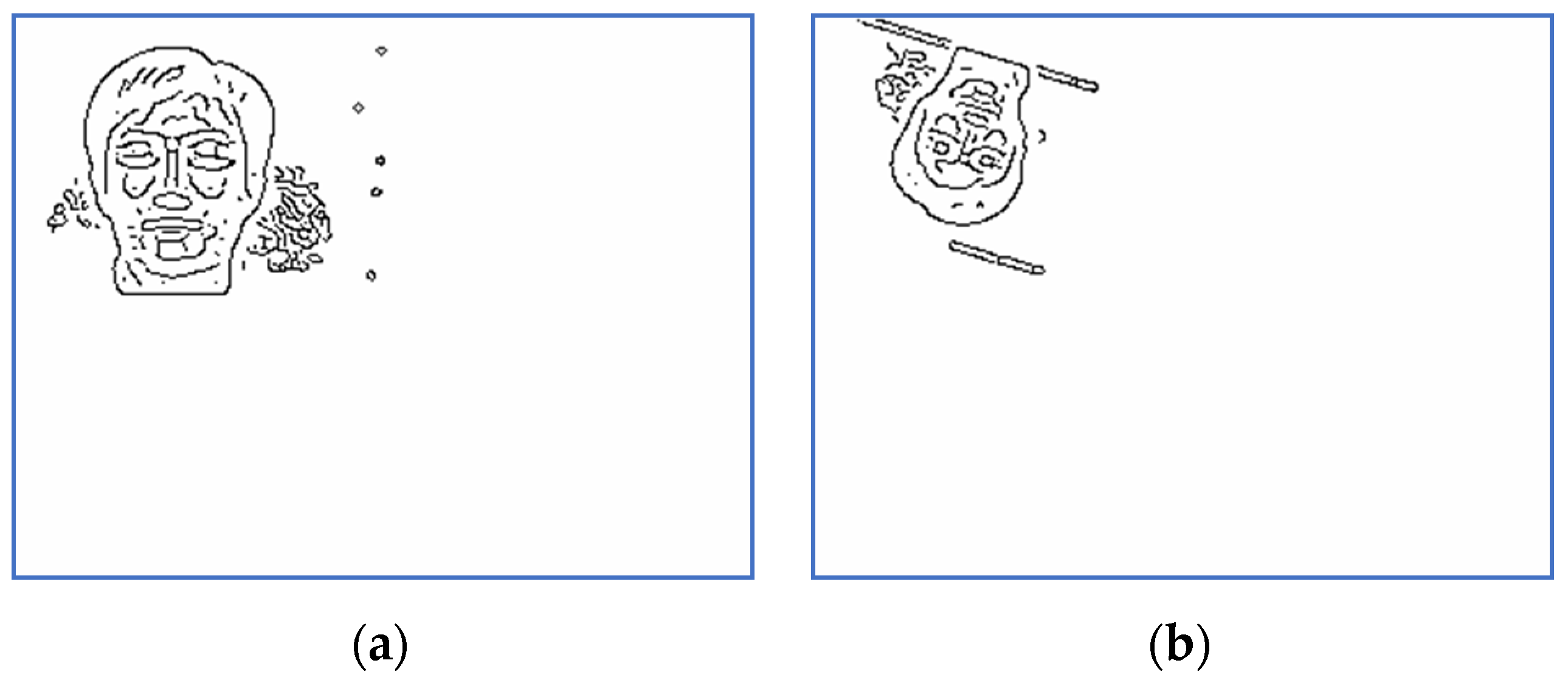

Figure 7.

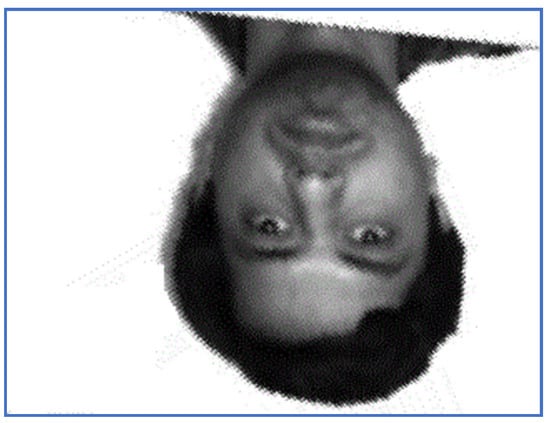

The restored image, PAT—Subject 5.

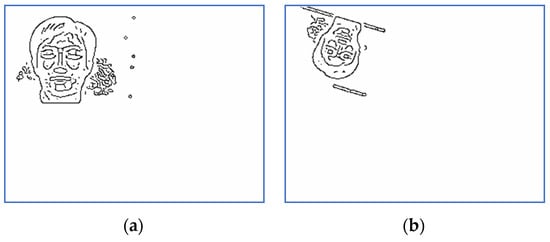

Figure 8. The restored image, PAT (Subject 14).

Figure 9. The restored image, EO (Subject 5).

Figure 10. The restored image, EO (Subject 14).

First, we report on the new method proposed in Section 3.4. Examples of target images, sensed images and images obtained when the correct inverse rigid transformation is applied are presented in Figure 1 (Subject 5, the first image, corresponding to Row 5 in Tables 2 to 4) and Figure 2 (Subject 14, the second image, corresponding to Row 29 in Tables 2 to 4).

The perturbation parameters are set as follows: the rotation angle is between and 0, the scale factor was set in , and the translation parameters are and . For instance, the target image displayed in Figure 1 suffered a perturbation with the parameter vector , while the one provided in Figure 2 was perturbed by the rigid transformation with .

Scaled and binarized versions of the pairs (S, T) are computed in the preprocessing step. The versions corresponding to the images displayed in Figure 1 and Figure 2 are depicted in Figure 3 and Figure 4, respectively. The scaling factor used for this step is 2.
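As an illustration of what such a preprocessing step might look like, the sketch below reduces a gray-level image by a factor of 2 and then binarizes it. Both choices are assumptions: the reduction is done by block averaging and the binarization by a fixed global threshold, whereas the preprocessing defined earlier in the paper may rely on a different resampling scheme or on edge detection. The helper names and the threshold value are hypothetical.

```python
def downscale(image, factor=2):
    """Shrink a gray-level image (list of rows) by averaging factor x factor blocks."""
    rows = len(image) // factor
    cols = len(image[0]) // factor
    out = []
    for r in range(rows):
        row = []
        for c in range(cols):
            block = [image[r * factor + i][c * factor + j]
                     for i in range(factor) for j in range(factor)]
            row.append(sum(block) / len(block))
        out.append(row)
    return out

def binarize(image, threshold=128):
    """Mark pixels at or above the threshold as object pixels (1), others as 0."""
    return [[1 if v >= threshold else 0 for v in row] for row in image]

# Tiny hypothetical 4 x 4 gray-level image.
gray = [
    [0, 0, 200, 220],
    [0, 0, 210, 230],
    [255, 255, 10, 20],
    [255, 255, 30, 40],
]
small = downscale(gray, factor=2)   # 2 x 2 image of block means
binary = binarize(small)            # 2 x 2 binary image
```

Working on the reduced binary pair keeps the number of object pixels small, which matters because the fitness is evaluated many times during the search.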

Next, Algorithm 1 is applied to the images computed by the preprocessing step. The search space is computed using (19). In our test . The rest of the input parameters are set as follows: , , , , , , , , , , , , and .

The results obtained by the proposed registration methodology are depicted in Figure 5 and Figure 6.

The accuracy and efficiency analyses are provided in Table 2. Note that the results concerning the similarity measures are computed by

where T is the target, is the restored version computed by the proposed methodology, and T’ is the version obtained when the correct inverse rigid transformation is applied. In our tests, Obviously, the ideal value of the ratios defined by (50) is 1. However, slightly larger values may be obtained because of calculation and rounding errors.
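The idea of a similarity ratio can be sketched as follows, using the Dice coefficient as the similarity measure. This is only an illustration under an assumption: the ratio is taken to be the similarity between the target and the computed restoration divided by the similarity between the target and the correctly restored image, which is consistent with an ideal value of 1. The function names and the small binary images are hypothetical.

```python
def dice(a, b):
    """Dice coefficient 2|A ∩ B| / (|A| + |B|) for two equal-size binary images."""
    inter = sum(pa & pb for ra, rb in zip(a, b) for pa, pb in zip(ra, rb))
    total = sum(map(sum, a)) + sum(map(sum, b))
    return 2.0 * inter / total if total else 1.0

def similarity_ratio(target, restored, reference, measure=dice):
    """Assumed ratio: measure(target, restored) / measure(target, reference)."""
    return measure(target, restored) / measure(target, reference)

target = [[0, 1, 1],
          [0, 1, 1],
          [0, 0, 0]]
# Correct inverse transform, with one pixel lost to resampling (hypothetical).
reference = [[0, 1, 1],
             [0, 1, 0],
             [0, 0, 0]]
# A restoration that matches the reference, and one that is misaligned.
good = [[0, 1, 1],
        [0, 1, 0],
        [0, 0, 0]]
bad = [[0, 0, 0],
       [1, 1, 0],
       [1, 1, 0]]

ratio_good = similarity_ratio(target, good, reference)
ratio_bad = similarity_ratio(target, bad, reference)
```

A ratio close to 1 indicates that the computed restoration is as similar to the target as the correctly restored image is, while a misaligned result yields a ratio well below 1.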

The success rate of the proposed method is 100% for all the tested images, and the SNR values are computed for images having the gray levels in .

To assess the registration capabilities of the proposed method, we experimentally compared it against two of the most commonly used alignment procedures for rigid transformations, namely the one plus one evolutionary optimizer (EO) [21] and the principal axes transform (PAT) [22]. Note that EO was tested with 100 different parameter settings per pair of images to establish the best alignment from the point of view of the similarity ratio (48), where . The images registered using the PAT method are displayed in Figure 7 and Figure 8, while the results produced by EO are depicted in Figure 9 and Figure 10.
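For readers unfamiliar with the one plus one scheme, the sketch below shows its core loop: a single parent produces one mutated child per iteration, and the better of the two survives. It is not the EO implementation used in the tests. The step-size factors (roughly following the 1/5 success rule), the toy error function, the hypothetical true parameter vector and the seed are all assumptions, and the sketch minimizes an error rather than maximizing a similarity measure.

```python
import random

def one_plus_one_es(objective, start, sigma=1.0, grow=1.5, shrink=0.9,
                    iterations=500, seed=0):
    """Minimize objective with a (1+1) evolution strategy (illustrative)."""
    rng = random.Random(seed)
    parent = list(start)
    best = objective(parent)
    for _ in range(iterations):
        # One Gaussian-mutated child per iteration.
        child = [x + rng.gauss(0.0, sigma) for x in parent]
        value = objective(child)
        if value < best:
            parent, best = child, value   # child replaces parent
            sigma *= grow                 # widen the search after a success
        else:
            sigma *= shrink               # narrow it after a failure
    return parent, best

# Hypothetical "true" parameters (angle, scale, tx, ty) and a toy error function.
TRUE_PARAMS = (-0.5, 1.2, 4.0, -3.0)

def error(p):
    return sum((a - b) ** 2 for a, b in zip(p, TRUE_PARAMS))

start = [0.0, 1.0, 0.0, 0.0]
solution, best = one_plus_one_es(error, start)
```

Because the outcome depends on the initial step size and the growth and shrink factors, such an optimizer typically has to be run under several settings, which is in line with the 100 parameter settings per image pair mentioned above.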

Note that the PAT image alignment method has a widely known problem: in some cases it produces results rotated by 180 degrees along the principal axes. In practice, some results come out upside-down. PAT stops once the aligned image is computed and does not check whether the result is visually rotated. Some research [36] aims to correct such results by automatically assessing which of the two possible rotations represents the correct image. For images rotated to the left by large angles, PAT and EO may fail to provide the correct alignment, and the ratio values are then significantly smaller than one. For PAT registration, the run time varies between 4 and 6 s, while the EO method consumes significantly more time because the appropriate input parameters must first be established.
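The 180-degree ambiguity can be seen directly from the second-order moments on which principal axes methods rely. The sketch below is an illustration, not the PAT implementation used in the tests: it computes the orientation of the main principal axis of a set of object pixels and shows that the set and its copy rotated by 180 degrees yield the same orientation. The function names and the sample shape are hypothetical.

```python
import math

def principal_axis_angle(points):
    """Orientation of the main principal axis from second-order central moments."""
    n = len(points)
    mx = sum(x for x, _ in points) / n
    my = sum(y for _, y in points) / n
    cxx = sum((x - mx) ** 2 for x, _ in points) / n
    cyy = sum((y - my) ** 2 for _, y in points) / n
    cxy = sum((x - mx) * (y - my) for x, y in points) / n
    return 0.5 * math.atan2(2.0 * cxy, cxx - cyy)

def rotate_180(points):
    """Rotate a point set by 180 degrees about the origin."""
    return [(-x, -y) for x, y in points]

shape = [(0, 0), (1, 0), (2, 0), (3, 0), (3, 1)]   # asymmetric "L" shape
flipped = rotate_180(shape)

angle_a = principal_axis_angle(shape)
angle_b = principal_axis_angle(flipped)   # same value as angle_a
```

Since the central moments are unchanged when every centered coordinate changes sign, the axes alone cannot tell the two orientations apart, and an additional criterion is needed to choose between them.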

The results derived experimentally from a long series of tests lead to the conclusion that the proposed method outperforms PAT and EO in terms of accuracy, from both the informational and the quantitative points of view.

5.3. Monochrome Image Registration in Case of Scaling on Multiple Dimensions

The methodology introduced in Section 3.5 has been applied to images from the Yale Face Database perturbed according to (36). The results are as follows.

Examples of target images, sensed images, and images obtained by applying the correct inverse rigid transformation are presented in Figure 11 (Subject 4, corresponding to Row 4 in Table 5, Table 6 and Table 7) and Figure 12 (Subject 10, corresponding to Row 10 in Table 5, Table 6 and Table 7).

Figure 11.

An image of Subject 4: (a) target image; (b) sensed image and (c) correctly restored image.

Table 5.

The numerical results obtained by applying the proposed method.

Table 6.

The numerical results obtained by applying the PAT method.

Table 7.

The numerical results obtained by applying the EO method.

Figure 12.

An image of Subject 10: (a) target image; (b) sensed image and (c) correctly restored image.

The perturbation parameters are set as follows: the rotation angle is between and 0, the scale factors were set in , and the translation parameters are and . The target image displayed in Figure 11 suffered a perturbation with the parameter vector , while the one provided in Figure 12 was perturbed by the rigid transformation with .

The scaled and binarized versions of the images displayed in Figure 11 and Figure 12 are depicted in Figure 13 and Figure 14, respectively. The scaling factor used for this step is 2.

Figure 13.

Scaled and binarized images for Subject 4: (a) target; (b) sensed.

Figure 14.

Scaled and binarized images for Subject 10: (a) target; (b) sensed.

Next, Algorithm 1 is applied, with the same input parameters as those reported in Section 5.2. The results obtained by the proposed registration methodology are depicted in Figure 15 and Figure 16.

Figure 15.

The restored image—Subject 4.

Figure 16.

The restored image—Subject 10.

The accuracy and efficiency analyses are provided in Table 5. The results concerning the similarity measures are computed by (40). The success rate of the proposed method is 100% for all the tested images, and the SNR values are computed for images having the gray levels in .

Note that the PAT and EO algorithms lead to results similar to those reported in Section 5.2 (Figure 17, Figure 18, Figure 19 and Figure 20). They fail to correctly recover the rotation when the rotation angle is close to −180°. PAT may also misalign images in case of large-magnitude distortions, mainly because the computation of the PAT solution involves arbitrary unitary matrices. Moreover, there are images with the same set of principal directions, and PAT cannot distinguish between them [37].

Figure 17.

The restored image using PAT—Subject 4.

Figure 18.

The restored image using PAT—Subject 10.

Figure 19.

The restored image using EO—Subject 4.

Figure 20.

The restored image using EO—Subject 10.

6. Conclusions

The work reported in this paper focuses on developing a novel and comprehensive methodology for image registration. The primary algorithm introduced in Section 3.3 addresses the problem of binary images, while its extended version deals with more complex, monochrome images. Note that the proposed approach could be further extended to color images; the problem of representing the objects by their contours can be solved either directly, using contour-following algorithms or edge detectors, or after an additional procedure that computes gray-level versions of the inputs.

The main advantages of the proposed methodology are its comprehensiveness, due to the way the search space is computed, and its effectiveness, mainly due to the properties of the memetic approach combining the firefly algorithm and ES, the embedded clustering procedure, and the two mechanisms implemented to alleviate the risk of premature convergence.

The experimental results were derived from a very large number of tests, using various accuracy and efficiency measures. Both information-based similarity functions and quantitative measures, such as SNR and PSNR, were used to evaluate the effectiveness of our method and to compare it against two of the most commonly used alignment procedures for rigid transformations, the EO algorithm and the PAT method.

In case of binary images, the success rate is 100%, i.e., the target images are always recovered by applying the inverse rigid transform to their corresponding sensed images, where the parameter vectors are computed by Algorithm 1. The recorded run time values show that the method is also efficient, especially given its stochastic nature. The general methodology dealing with monochrome images also proved effective and efficient. Moreover, unlike PAT registration or EO, the proposed approach correctly reverses the perturbation for all tested pairs of images.

We conclude that the results are encouraging and entail future work toward extending this approach to more complex perturbation models as well as more advanced bio-inspired optimizations and evolutionary algorithms.

Author Contributions

Conceptualization, C.-L.C. and C.R.U.; formal analysis, C.-L.C. and C.R.U.; methodology, C.-L.C.; software, C.-L.C. and C.R.U.; supervision, C.-L.C.; validation, C.-L.C. and C.R.U.; writing—original draft, C.-L.C.; writing—review and editing, C.-L.C. and C.R.U. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Ong, Y.S.; Lim, M.H.; Chen, X. Memetic Computation—Past, Present & Future [Research Frontier]. IEEE Comput. Intell. Mag. 2010, 5, 24–31.

2. Rundo, L.; Militello, C.; Vitabile, S.; Russo, G.; Sala, E.; Gilardi, M.C. A Survey on Nature-Inspired Medical Image Analysis: A Step Further in Biomedical Data Integration. Fundam. Inform. 2020, 171, 345–365.

3. Sarvamangala, D.R.; Kulkarni, R.V. A Comparative Study of Bio-inspired Algorithms for Medical Image Registration. In Advances in Intelligent Computing. Studies in Computational Intelligence; Mandal, J., Dutta, P., Mukhopadhyay, S., Eds.; Springer: Singapore, 2019; Volume 687.

4. Santamaría, J.; Rivero-Cejudo, M.L.; Martos-Fernández, M.A.; Roca, F. An Overview on the Latest Nature-Inspired and Metaheuristics-Based Image Registration Algorithms. Appl. Sci. 2020, 10, 1928.

5. Shaw, K.; Pandey, P.; Das, S.; Ghosh, D.; Malakar, P.; Dhabal, S. Image Registration using Bio-inspired Algorithms. In Proceedings of the 2020 IEEE 1st International Conference for Convergence in Engineering (ICCE), Kolkata, India, 5–6 September 2020; pp. 330–334.

6. Valsecchi, A.; Bermejo, E.; Damas, S.; Cordón, O. Metaheuristics for Medical Image Registration. In Handbook of Heuristics; Martí, R., Panos, P., Resende, M., Eds.; Springer: Cham, Switzerland, 2016.

7. Pirpinia, K.; Bosman, P.A.; Sonke, J.J.; van Herk, M.; Alderliesten, T. Evolutionary Machine Learning for Multi-Objective Class Solutions in Medical Deformable Image Registration. Algorithms 2019, 12, 99.

8. Panda, R.; Agrawal, S.; Sahoo, M.; Nayak, R. A novel evolutionary rigid body docking algorithm for medical image registration. Swarm Evol. Comput. 2017, 33, 108–118.

9. Sarvamangala, D.R.; Kulkarni, R.V. Swarm Intelligence Algorithms for Medical Image Registration: A Comparative Study. In Computational Intelligence, Communications, and Business Analytics, Proceedings of the CICBA 2017, Communications in Computer and Information Science, Kolkata, India, 24–25 March 2017; Mandal, J., Dutta, P., Mukhopadhyay, S., Eds.; Springer: Singapore, 2017; Volume 776.

10. Freitas, D.; Lopes, L.G.; Morgado-Dias, F. Particle Swarm Optimisation: A Historical Review Up to the Current Developments. Entropy 2020, 22, 362.

11. Rundo, L.; Tangherloni, A.; Militello, C.; Gilardi, M.C.; Mauri, G. Multimodal medical image registration using Particle Swarm Optimization: A review. In Proceedings of the 2016 IEEE Symposium Series on Computational Intelligence (SSCI), Athens, Greece, 6–9 December 2016; pp. 1–8.

12. Das, A.; Bhattacharya, M. Affine-based registration of CT and MR modality images of human brain using multiresolution approaches: Comparative study on genetic algorithm and particle swarm optimization. Neural Comput. Applic. 2011, 20, 223–237.

13. Wu, Y.; Miao, Q.; Ma, W.; Gong, M.; Wang, S. PSOSAC: Particle Swarm Optimization Sample Consensus Algorithm for Remote Sensing Image Registration. IEEE Geosci. Remote Sens. Lett. 2018, 15, 242–246.

14. Bermejo, E.; Chica, M.; Damas, S.; Salcedo-Sanz, S.; Cordón, O. Coral Reef Optimization with substrate layers for medical Image Registration. Swarm Evol. Comput. 2018, 42, 138–159.

15. Bermejo, E.; Valsecchi, A.; Damas, S.; Cordón, O. Bacterial Foraging Optimization for intensity-based medical image registration. In Proceedings of the 2015 IEEE Congress on Evolutionary Computation (CEC), Sendai, Japan, 25–28 May 2015; pp. 2436–2443.

16. Costin, H.; Bejinariu, S.; Costin, D. Biomedical Image Registration by means of Bacterial Foraging Paradigm. Int. J. Comput. Commun. Control 2016, 11, 331–347.

17. Wen, T.; Liu, H.; Lin, L.; Wang, B.; Hou, J.; Huang, C.; Pan, T.; Du, Y. Multiswarm Artificial Bee Colony algorithm based on spark cloud computing platform for medical image registration. Comput. Methods Programs Biomed. 2020, 192, 105432.

18. Bejinariu, S.I.; Costin, H. A Comparison of Some Nature-Inspired Optimization Metaheuristics Applied in Biomedical Image Registration. Methods Inf. Med. 2018, 57, 280–286.

19. Xiaogang, D.; Jianwu, D.; Yangping, W.; Xinguo, L.; Sha, L. An Algorithm Multi-Resolution Medical Image Registration Based on Firefly Algorithm and Powell. In Proceedings of the 2013 Third International Conference on Intelligent System Design and Engineering Applications, Hong Kong, China, 16–18 January 2013; pp. 274–277.

20. Wang, J.; Zhang, M.; Song, H.; Cheng, Z.; Chang, T.; Bi, Y.; Sun, K. Improvement and Application of Hybrid Firefly Algorithm. IEEE Access 2019, 7, 165458–165477.

21. Styner, M.; Brechbuehler, C.; Székely, G.; Gerig, G. Parametric estimate of intensity inhomogeneities, applied to MRI. IEEE Trans. Med. Imaging 2000, 19, 153–165.

22. Goshtasby, A.A. Image Registration: Principles, Tools and Methods; Springer Science & Business Media: London, UK, 2012.

23. Kvålseth, T. On Normalized Mutual Information: Measure Derivations and Properties. Entropy 2017, 19, 631.

24. Vila, M.; Bardera, A.; Feixas, M.; Sbert, M. Tsallis Mutual Information for Document Classification. Entropy 2011, 13, 1694–1707.

25. Amigó, J.M.; Balogh, S.G.; Hernández, S. A Brief Review of Generalized Entropies. Entropy 2018, 20, 813.

26. Brown, L.G. A Survey of Image Registration Techniques. ACM Comput. Surv. 1992, 24, 325–376.

27. Cocianu, C.L.; Stan, A. A New Evolutionary-Based Techniques for Image Registration. Appl. Sci. 2019, 9, 176.

28. Cocianu, C.L.; Stan, A.D.; Avramescu, M. Firefly-Based Approaches of Image Recognition. Symmetry 2020, 12, 881.

29. Eiben, A.; Smith, J. Introduction to Evolutionary Computing; Springer: Berlin, Germany, 2015.

30. Yang, X.S. Nature-Inspired Metaheuristic Algorithms; Luniver Press: Frome, UK, 2008.

31. Arandiga, F.; Cohen, A.; Donat, R.; Matei, B. Edge detection insensitive to changes of illumination in the image. Image Vis. Comput. 2010, 28, 553–562.

32. Song, F.; Jutamulia, S. New wavelet transforms for noise insensitive edge detection. Opt. Eng. 2002, 41, 50–54.

33. Wu, K.C. Heuristic edge detector for noisy range images. In Intelligent Robots and Computer Vision XIII: 3D Vision, Product Inspection, and Active Vision; International Society for Optics and Photonics: Bellingham, WA, USA, 1994; Volume 2354, pp. 292–299.

34. Yale. Yale Face Database. Available online: http://cvc.yale.edu/projects/yalefaces/yalefaces.html (accessed on 14 December 2020).

35. Belhumeur, P.; Hespanha, J.; Kriegman, D. Eigenfaces vs. Fisherfaces: Recognition Using Class Specific Linear Projection. IEEE Trans. Pattern Anal. Mach. Intell. 1997, 19, 711–720.

36. Rehman, H.Z.U.; Lee, S. Automatic Image Alignment Using Principal Component Analysis. IEEE Access 2018, 6, 72063–72072.

37. Modersitzki, J. Numerical Methods for Image Registration; Oxford University Press: Oxford, UK, 2004.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).