Estimation of Azimuth and Elevation for Multiple Acoustic Sources Using Tetrahedral Microphone Arrays and Convolutional Neural Networks

Abstract

1. Introduction

2. Materials and Methods

2.1. Justification of Tetrahedral Array Geometry

2.2. The Role of the CNN in DoA Estimation

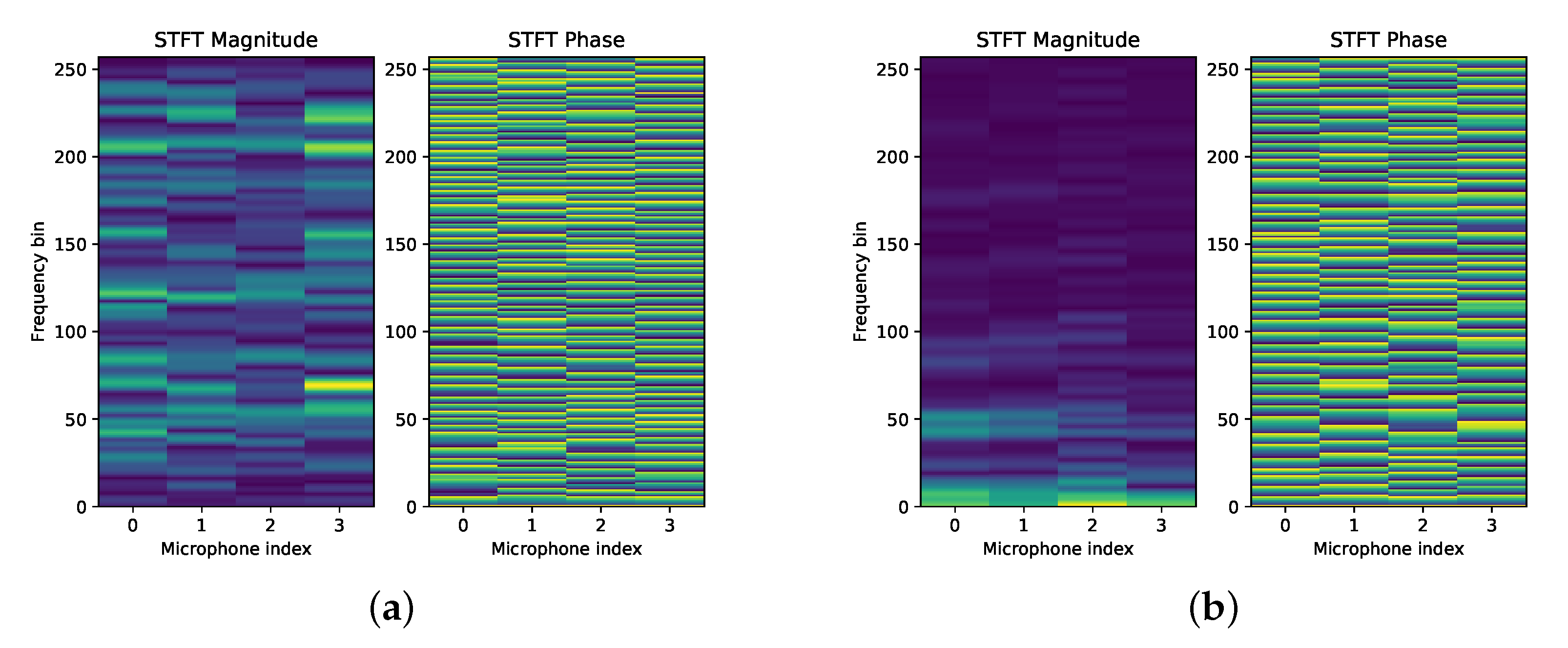

2.3. Estimation of Input Features

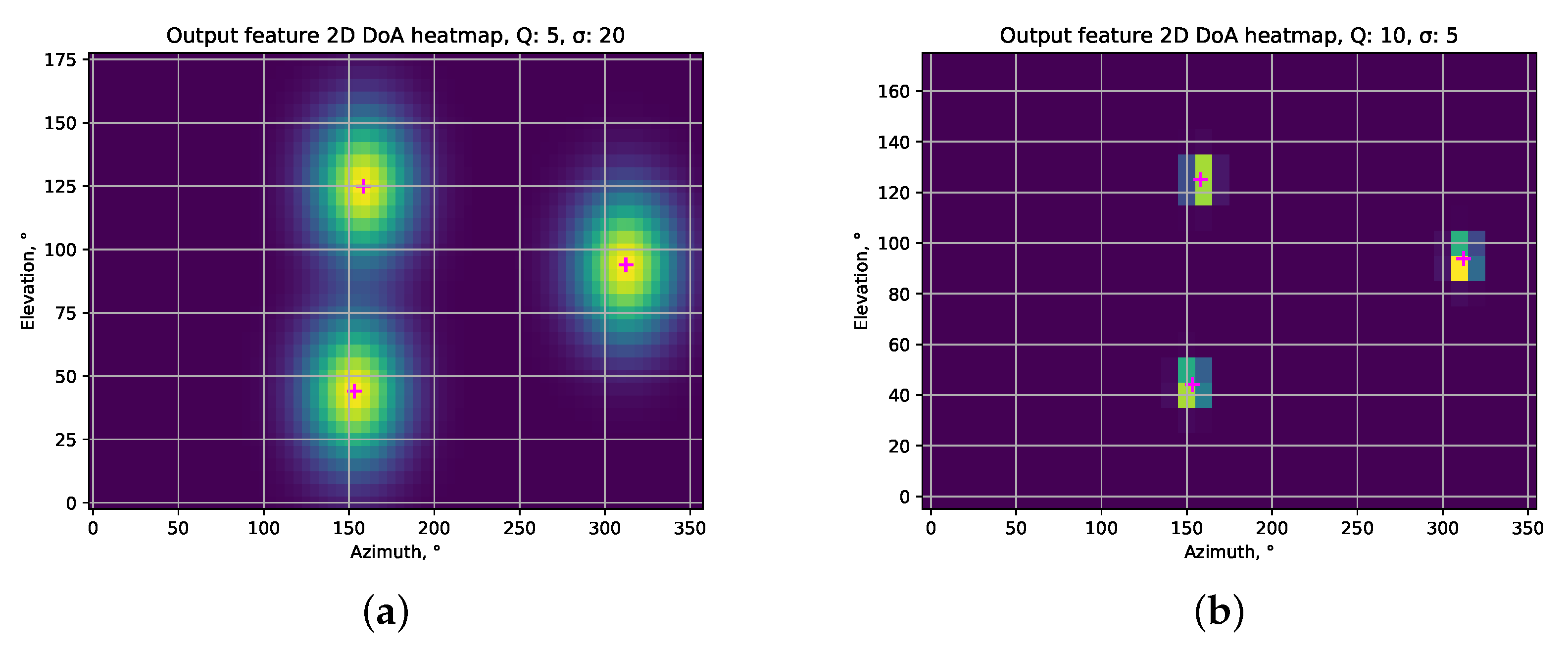

2.4. Desired Outputs

2.5. Post-Processing of the Outputs

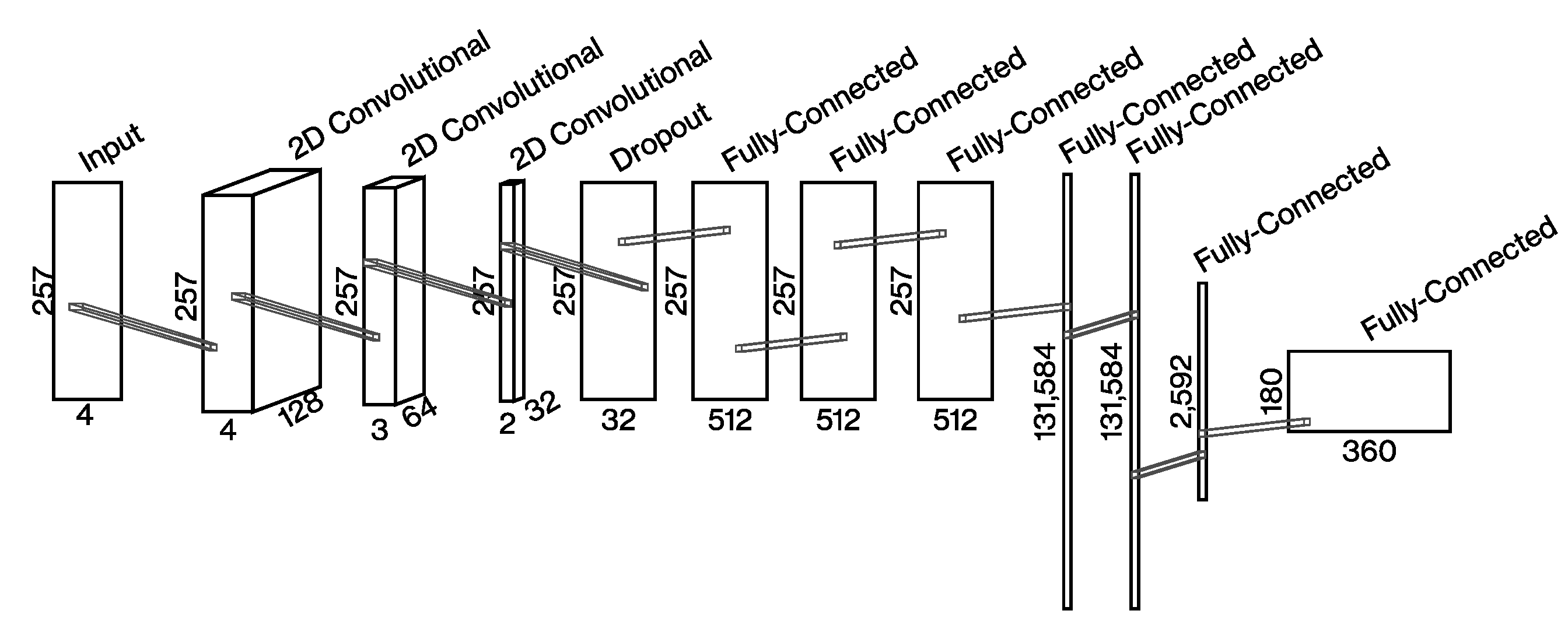

2.6. CNN Architecture

2.7. Preparation of Training and Testing Dataset

2.8. Evaluation of the Proposed Method Performance

- Euclidean distances between all pairs of ground truth and estimated DoAs were calculated;

- The NS smallest errors were selected as the DoA prediction errors for the NS sources.
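The two evaluation steps listed above can be sketched in a few lines of NumPy. This is only an illustrative sketch, not the authors' implementation: the function name `doa_prediction_errors`, the array layout (one row per DoA, columns holding azimuth and elevation), and the use of degrees are assumptions made for the example. It follows the description literally and does not handle azimuth wrap-around or enforce a one-to-one pairing between ground truth and estimates.

```python
import numpy as np


def doa_prediction_errors(true_doas, est_doas):
    """Return the N_S smallest pairwise Euclidean distances.

    true_doas: array-like of shape (N_S, 2), ground-truth DoAs.
    est_doas:  array-like of shape (M, 2), estimated DoAs.
    Columns are assumed to be (azimuth, elevation) in degrees.
    """
    true_doas = np.asarray(true_doas, dtype=float)
    est_doas = np.asarray(est_doas, dtype=float)

    # Step 1: Euclidean distance between every ground-truth/estimate pair.
    # Broadcasting gives differences of shape (N_S, M, 2).
    diff = true_doas[:, None, :] - est_doas[None, :, :]
    dist = np.linalg.norm(diff, axis=-1)  # shape (N_S, M)

    # Step 2: keep the N_S smallest distances over all pairs.
    n_sources = true_doas.shape[0]
    return np.sort(dist.ravel())[:n_sources]


# Hypothetical example with two sources (values chosen for illustration).
true_doas = [[0.0, 0.0], [90.0, 30.0]]
est_doas = [[93.0, 34.0], [0.0, 1.0]]
errors = doa_prediction_errors(true_doas, est_doas)
print(errors)
```

In this hypothetical example, the two smallest distances come from pairing each ground-truth DoA with its nearest estimate, so the selected errors correspond to the two sources regardless of the order in which the estimates are listed.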

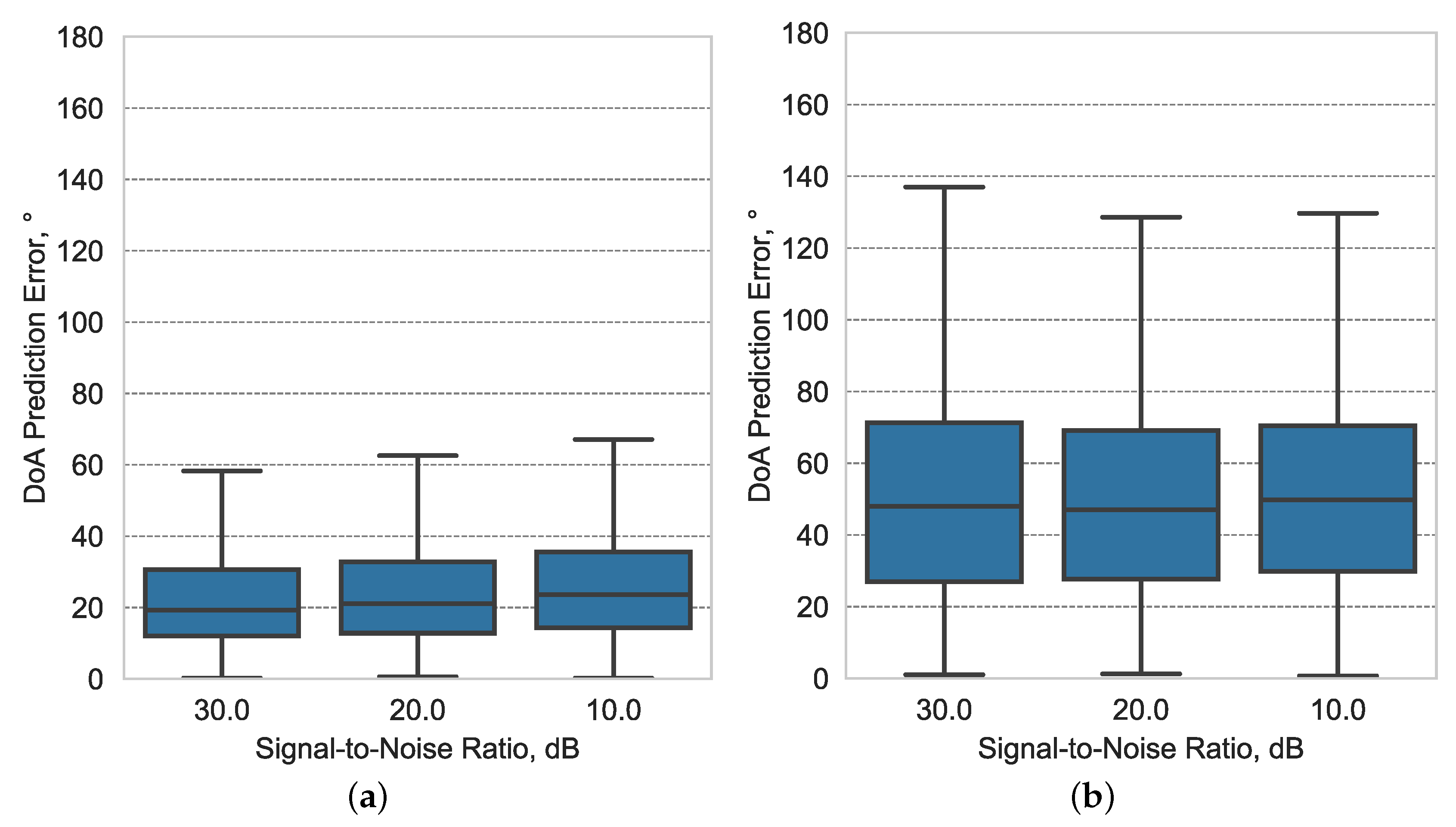

3. Results

4. Discussion

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Meaning |
| --- | --- |
| DoA | Direction of Arrival |
| ANN | Artificial Neural Network |
| CNN | Convolutional Neural Network |
| SRP | Steered Response Power |
| PHAT | Phase Transform |
| MAE | Mean Average Error |

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Sakavičius, S.; Serackis, A. Estimation of Azimuth and Elevation for Multiple Acoustic Sources Using Tetrahedral Microphone Arrays and Convolutional Neural Networks. Electronics 2021, 10, 2585. https://doi.org/10.3390/electronics10212585
