Lung Segmentation and Characterization in COVID-19 Patients for Assessing Pulmonary Thromboembolism: An Approach Based on Deep Learning and Radiomics

Abstract

1. Introduction

2. Materials

3. Methods

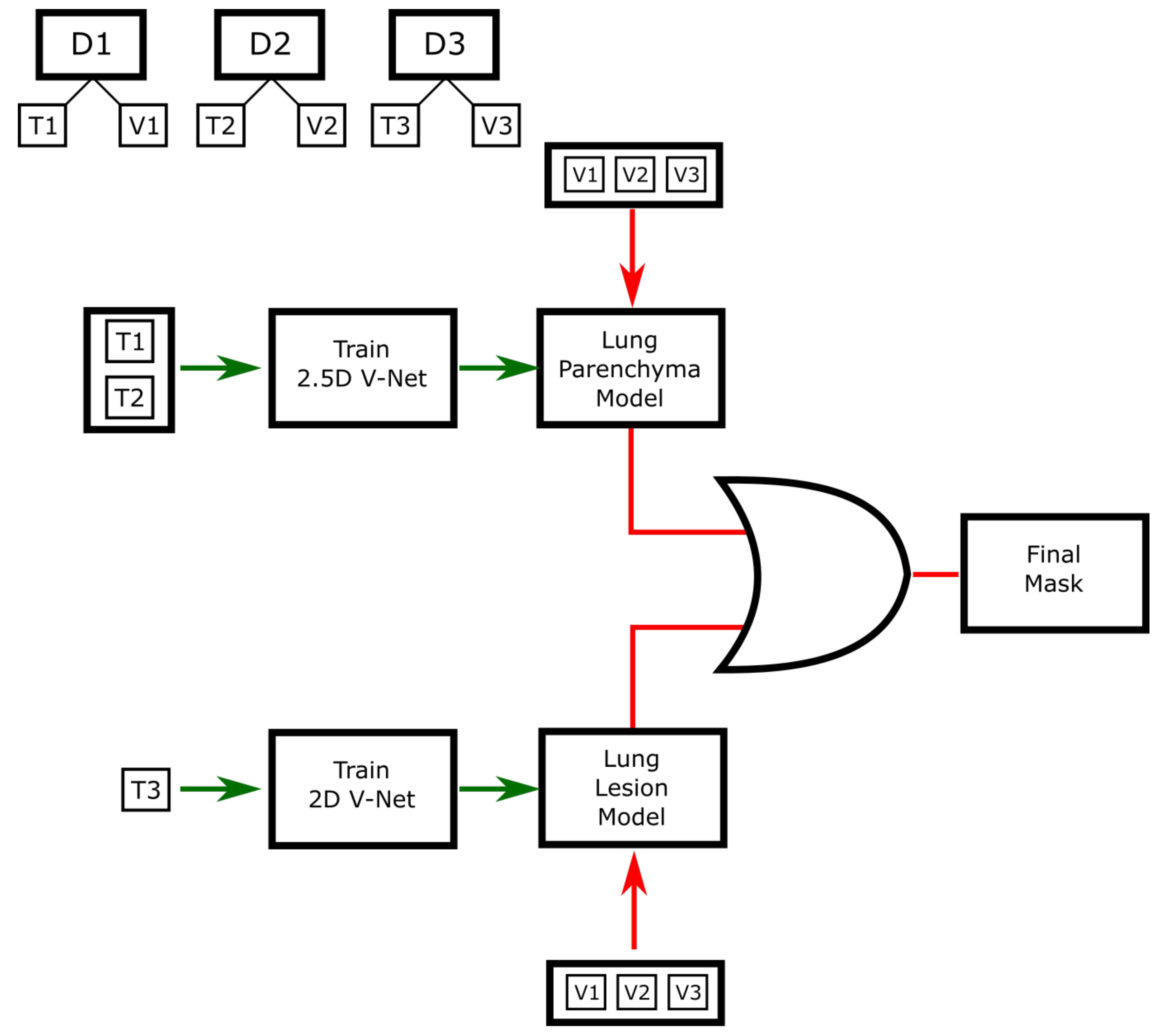

3.1. Semantic Segmentation

3.2. Radiomic Features

4. Results

4.1. Segmentation Evaluation Metrics

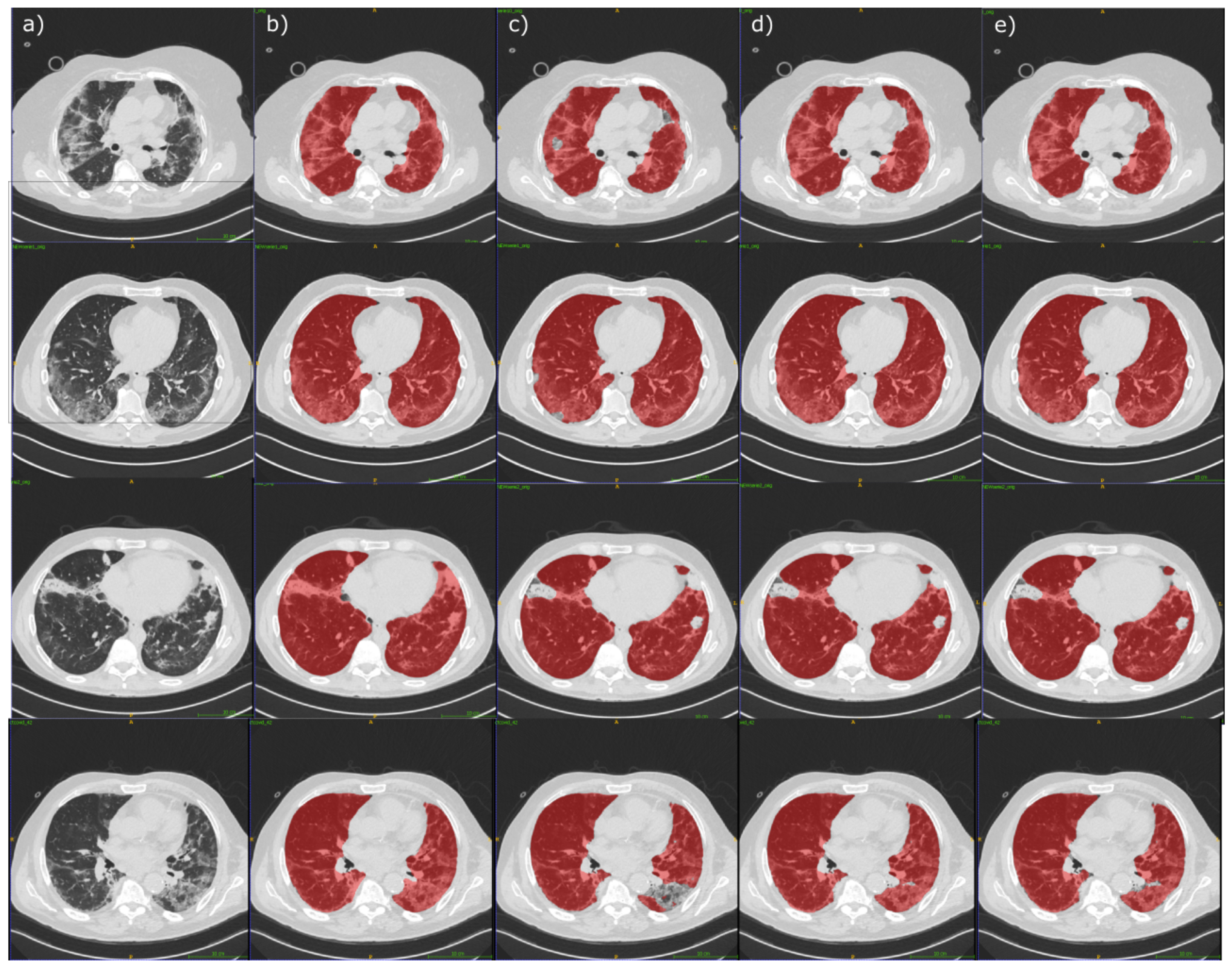
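The segmentation results table reports Precision, Recall, Dice and RVD in percent. The sketch below computes these four overlap scores from two binary masks using the usual true-positive, false-positive and false-negative definitions. It is an illustration under assumptions, not the authors' evaluation code. The function names are hypothetical, masks are assumed to be nested Python lists of 0/1 values (a 2D slice or a 3D volume), and RVD is assumed to use the signed convention (|S| - |R|) / |R|, with R the reference. Some works report its absolute value instead.

```python
from typing import Dict, Iterator, List


def _flatten(x) -> Iterator[int]:
    """Yield the voxel values of an arbitrarily nested list/tuple mask (2D slice or 3D volume)."""
    if isinstance(x, (list, tuple)):
        for item in x:
            yield from _flatten(item)
    else:
        yield x


def _ratio(num: float, den: float) -> float:
    # Undefined ratios (e.g. an empty prediction) are reported as NaN rather than raising.
    return num / den if den else float("nan")


def overlap_metrics(seg, ref) -> Dict[str, float]:
    """Precision, recall, Dice and signed RVD of a predicted mask against a reference, as fractions."""
    s_vox: List[int] = list(_flatten(seg))
    r_vox: List[int] = list(_flatten(ref))
    if len(s_vox) != len(r_vox):
        raise ValueError("masks must contain the same number of voxels")
    tp = fp = fn = 0
    for s, r in zip(s_vox, r_vox):
        if s and r:
            tp += 1
        elif s:
            fp += 1
        elif r:
            fn += 1
    return {
        "precision": _ratio(tp, tp + fp),
        "recall": _ratio(tp, tp + fn),
        "dice": _ratio(2 * tp, 2 * tp + fp + fn),
        # RVD = (|S| - |R|) / |R| with |S| = tp + fp predicted and |R| = tp + fn reference voxels
        "rvd": _ratio((tp + fp) - (tp + fn), tp + fn),
    }


seg = [[1, 1, 0], [0, 1, 0]]
ref = [[1, 0, 0], [0, 1, 1]]
scores = overlap_metrics(seg, ref)
# Percentages, as in the results table: precision, recall and Dice 66.67, RVD 0.0
print({name: round(100 * v, 2) for name, v in scores.items()})
```

Counting the confusion terms once and deriving every score from them keeps the four metrics mutually consistent. Multiplying by 100 gives the percentage units used in the table.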

4.2. Segmentation Experimental Results

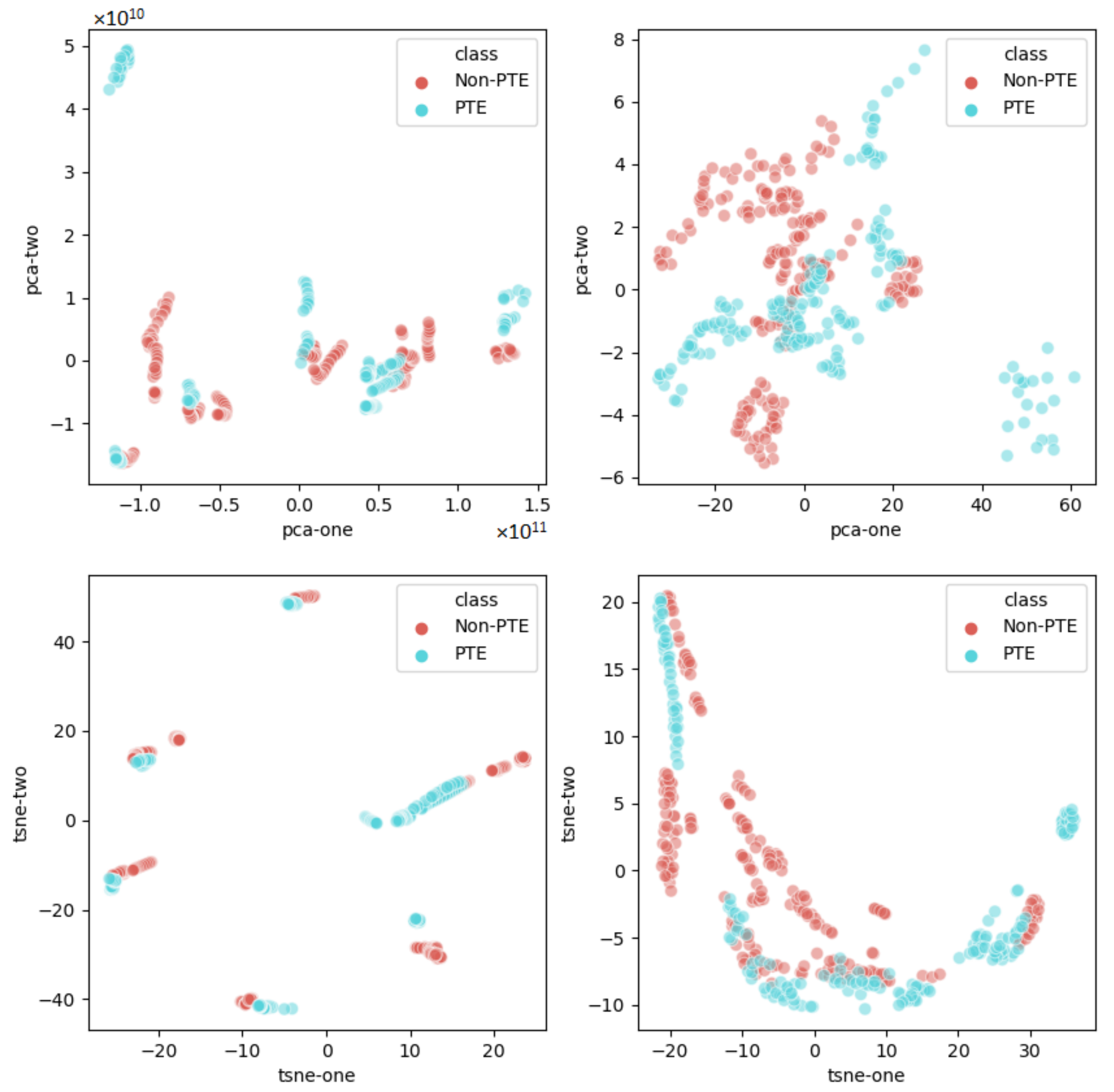

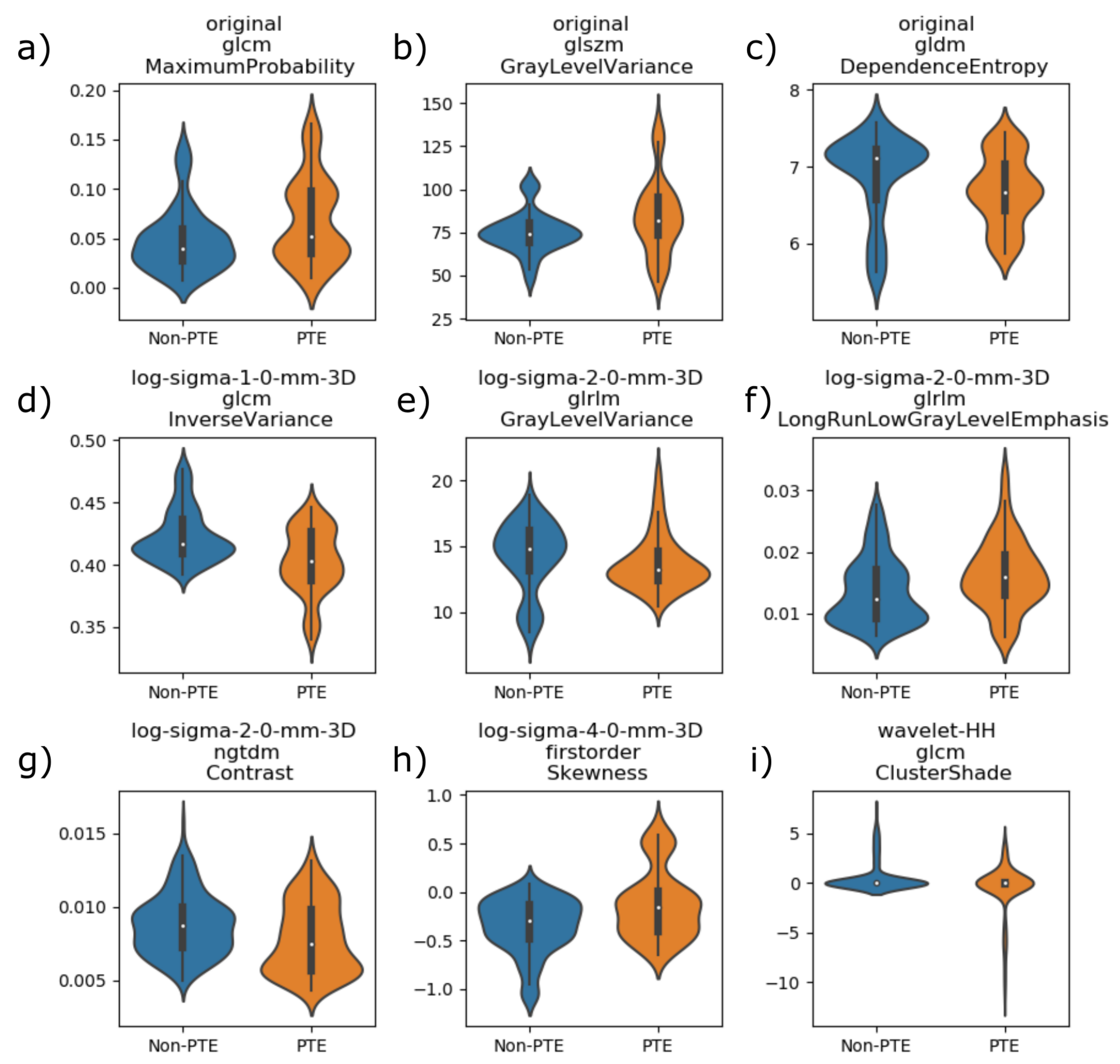

4.3. Radiomics Lung Characterization

- original_glcm_MaximumProbability, defined in Equation (A2);

- original_glszm_GrayLevelVariance, defined in Equation (A11);

- original_gldm_DependenceEntropy, defined in Equation (A14);

- log-sigma-1-0-mm-3D_glcm_InverseVariance, defined in Equation (A4);

- log-sigma-2-0-mm-3D_glrlm_GrayLevelVariance, defined in Equation (A16);

- log-sigma-2-0-mm-3D_glrlm_LongRunLowGrayLevelEmphasis, defined in Equation (A18);

- log-sigma-2-0-mm-3D_ngtdm_Contrast, defined in Equation (A20);

- log-sigma-4-0-mm-3D_firstorder_Skewness, defined in Equation (A21);

- wavelet-HH_glcm_ClusterShade, defined in Equation (A5).
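Three of the nine selected features come from the gray-level co-occurrence matrix (GLCM): MaximumProbability (A2), InverseVariance (A4) and ClusterShade (A5). The appendix equations are not reproduced in this section. The sketch below therefore uses the common Haralick-style definitions, which as far as can be told match those documented for PyRadiomics. The following choices are simplifying assumptions that differ from a full radiomics pipeline:

- a 2D image and a single horizontal offset;
- a symmetric GLCM;
- no gray-level binning;
- no LoG or wavelet pre-filtering, so no equivalent of the `log-sigma-…` or `wavelet-HH` prefixes.

Toolkits typically also average over several directions in 3D. The function names are hypothetical.

```python
from collections import Counter
from typing import Dict, List, Tuple

Prob = Dict[Tuple[int, int], float]


def glcm(image: List[List[int]], offset: Tuple[int, int] = (0, 1)) -> Prob:
    """Symmetric, normalised co-occurrence probabilities p(i, j) for one pixel offset."""
    rows, cols = len(image), len(image[0])
    dr, dc = offset
    counts: Counter = Counter()
    for r in range(rows):
        for c in range(cols):
            rr, cc = r + dr, c + dc
            if 0 <= rr < rows and 0 <= cc < cols:
                i, j = image[r][c], image[rr][cc]
                counts[(i, j)] += 1
                counts[(j, i)] += 1  # symmetric GLCM: also count the reverse pair
    total = sum(counts.values())
    if total == 0:
        raise ValueError("offset produces no pixel pairs for this image")
    return {pair: n / total for pair, n in counts.items()}


def maximum_probability(p: Prob) -> float:
    # Probability of the most frequent gray-level pair.
    return max(p.values())


def inverse_variance(p: Prob) -> float:
    # Sum of p(i, j) / (i - j)^2 over off-diagonal pairs.
    return sum(v / (i - j) ** 2 for (i, j), v in p.items() if i != j)


def cluster_shade(p: Prob) -> float:
    # Third moment of (i + j) around mu_x + mu_y; measures asymmetry of the GLCM.
    mu_x = sum(i * v for (i, _), v in p.items())
    mu_y = sum(j * v for (_, j), v in p.items())
    return sum((i + j - mu_x - mu_y) ** 3 * v for (i, j), v in p.items())


image = [[1, 1, 2],
         [1, 2, 2],
         [3, 3, 3]]
p = glcm(image)
print(maximum_probability(p), inverse_variance(p), cluster_shade(p))  # roughly 1/3, 1/3 and 1.0
```

In the toy image, the pair (3, 3) dominates, which gives MaximumProbability = 4/12. The bright pairs above the mean gray level also push ClusterShade positive.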

Algorithm 1: Correlated Features Removal.
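The body of Algorithm 1 did not survive extraction, so its exact steps are unknown. One common way to remove correlated radiomic features is a greedy pass: walk through the features in a fixed order and keep a feature only if its absolute correlation with every feature already kept stays at or below a threshold. The sketch below shows that generic technique. It is not a reconstruction of Algorithm 1. The use of the Pearson coefficient, the 0.9 threshold, the keep-first ordering and the function names are all assumptions.

```python
import math
from typing import Dict, List, Sequence


def pearson(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson correlation coefficient of two equally long samples."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    vx = sum((a - mx) ** 2 for a in x)
    vy = sum((b - my) ** 2 for b in y)
    if vx == 0 or vy == 0:
        return 0.0  # a constant feature has undefined correlation; treated as uncorrelated here
    return cov / math.sqrt(vx * vy)


def remove_correlated(features: Dict[str, Sequence[float]],
                      threshold: float = 0.9) -> List[str]:
    """Greedy pass: keep a feature only if |r| <= threshold against every feature kept so far."""
    kept: List[str] = []
    for name, values in features.items():  # dict order decides which of two correlated features survives
        if all(abs(pearson(values, features[k])) <= threshold for k in kept):
            kept.append(name)
    return kept


features = {
    "f1": [1.0, 2.0, 3.0, 4.0],
    "f2": [2.0, 4.0, 6.0, 8.0],   # perfectly correlated with f1, so it is dropped
    "f3": [1.0, 0.0, 1.0, 0.0],   # |r| with f1 is about 0.45, so it is kept
}
print(remove_correlated(features))  # ['f1', 'f3']
```

Because the pass is order-dependent, the feature listed first wins whenever two features are highly correlated. A rank-based coefficient such as Spearman's can be swapped in for `pearson` without changing the loop.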

5. Discussion

6. Conclusions and Future Works

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Appendix A. Radiomic Features Definitions

References

- Grillet, F.; Behr, J.; Calame, P.; Aubry, S.; Delabrousse, E. Acute Pulmonary Embolism Associated with COVID-19 Pneumonia Detected with Pulmonary CT Angiography. Radiology 2020, 296, E186–E188.

- Léonard-Lorant, I.; Delabranche, X.; Séverac, F.; Helms, J.; Pauzet, C.; Collange, O.; Schneider, F.; Labani, A.; Bilbault, P.; Molière, S.; et al. Acute Pulmonary Embolism in Patients with COVID-19 at CT Angiography and Relationship to d-Dimer Levels. Radiology 2020, 296, E189–E191.

- Scardapane, A.; Villani, L.; Bavaro, D.F.; Passerini, F.; Ianora, A.A.S.; Lucarelli, N.M.; Angarano, G.; Portincasa, P.; Palmieri, V.O.; Saracino, A. Pulmonary Artery Filling Defects in COVID-19 Patients Revealed Using CT Pulmonary Angiography: A Predictable Complication? BioMed Res. Int. 2021, 2021.

- Bavaro, D.F.; Poliseno, M.; Scardapane, A.; Belati, A.; De Gennaro, N.; Stabile Ianora, A.A.; Angarano, G.; Saracino, A. Occurrence of Acute Pulmonary Embolism in COVID-19-A case series. Int. J. Infect. Dis. IJID Off. Publ. Int. Soc. Infect. Dis. 2020, 98, 225–226.

- Mukherjee, H.; Ghosh, S.; Dhar, A.; Obaidullah, S.M.; Santosh, K.C.; Roy, K. Shallow Convolutional Neural Network for COVID-19 Outbreak Screening using Chest X-rays. Cogn. Comput. 2021.

- Cobelli, R.; Zompatori, M.; De Luca, G.; Chiari, G.; Bresciani, P.; Marcato, C. Clinical usefulness of computed tomography study without contrast injection in the evaluation of acute pulmonary embolism. J. Comput. Assist. Tomogr. 2005, 29, 6–12.

- Remy-Jardin, M.; Faivre, J.B.; Kaergel, R.; Hutt, A.; Felloni, P.; Khung, S.; Lejeune, A.L.; Giordano, J.; Remy, J. Machine Learning and Deep Neural Network Applications in the Thorax: Pulmonary Embolism, Chronic Thromboembolic Pulmonary Hypertension, Aorta, and Chronic Obstructive Pulmonary Disease. J. Thorac. Imaging 2020, 35, S40–S48.

- Yousef, H.A.Z. The accuracy of non-contrast chest computed tomographic Scan in the detection of pulmonary thromboembolism. J. Curr. Med. Res. Pract. 2019, 61–66.

- Sun, S.; Semionov, A.; Xie, X.; Kosiuk, J.; Mesurolle, B. Detection of central pulmonary embolism on non-contrast computed tomography: A case control study. Int. J. Cardiovasc. Imaging 2014, 30, 639–646.

- Tourassi, G.D.; Floyd, C.E.; Sostman, H.D.; Coleman, R.E. Acute pulmonary embolism: Artificial neural network approach for diagnosis. Radiology 1993, 189, 555–558.

- Jimenez-del Toro, O.; Dicente Cid, Y.; Platon, A.; Hachulla, A.L.; Lador, F.; Poletti, P.A.; Müller, H. A lung graph model for the radiological assessment of chronic thromboembolic pulmonary hypertension in CT. Comput. Biol. Med. 2020, 125, 103962.

- Wu, Y.H.; Gao, S.H.; Mei, J.; Xu, J.; Fan, D.P.; Zhao, C.W.; Cheng, M.M. JCS: An explainable COVID-19 diagnosis system by joint classification and segmentation. arXiv 2020, arXiv:2004.07054.

- Akbari, Y.; Hassen, H.; Al-maadeed, S.; Zughaier, S. COVID-19 Lesion Segmentation using Lung CT Scan Images: Comparative Study based on Active Contour Models. Appl. Sci. 2021, 11, 8039.

- Cao, Y.; Xu, Z.; Feng, J.; Jin, C.; Han, X.; Wu, H.; Shi, H. Longitudinal Assessment of COVID-19 Using a Deep Learning–based Quantitative CT Pipeline: Illustration of Two Cases. Radiol. Cardiothorac. Imaging 2020, 2, e200082.

- Rajinikanth, V.; Kadry, S.; Thanaraj, K.P.; Kamalanand, K.; Seo, S. Firefly-algorithm supported scheme to detect COVID-19 lesion in lung CT scan images using Shannon entropy and Markov-random-field. arXiv 2020, arXiv:2004.09239.

- Rajinikanth, V.; Dey, N.; Raj, A.N.J.; Hassanien, A.E.; Santosh, K.C.; Raja, N.S.M. Harmony-Search and Otsu based System for Coronavirus Disease (COVID-19) Detection using Lung CT Scan Images. arXiv 2020, arXiv:2004.03431.

- Ter-Sarkisov, A. One Shot Model For The Prediction of COVID-19 and Lesions Segmentation In Chest CT Scans Through The Affinity Among Lesion Mask Features. medRxiv 2021.

- Zhao, J.; He, X.; Yang, X.; Zhang, Y.; Zhang, S.; Xie, P. COVID-CT-Dataset: A CT image dataset about COVID-19. arXiv 2020, arXiv:2003.13865.

- Wang, B.; Jin, S.; Yan, Q.; Xu, H.; Luo, C.; Wei, L.; Zhao, W.; Hou, X.; Ma, W.; Xu, Z.; et al. AI-assisted CT imaging analysis for COVID-19 screening: Building and deploying a medical AI system. Appl. Soft Comput. 2021, 98, 106897.

- Oulefki, A.; Agaian, S.; Trongtirakul, T.; Kassah Laouar, A. Automatic COVID-19 lung infected region segmentation and measurement using CT-scans images. Pattern Recognit. 2020, 107747.

- Zheng, C.; Deng, X.; Fu, Q.; Zhou, Q.; Feng, J.; Ma, H.; Liu, W.; Wang, X. Deep Learning-based Detection for COVID-19 from Chest CT using Weak Label. medRxiv 2020, 1–13.

- Wang, X.; Deng, X.; Fu, Q.; Zhou, Q.; Feng, J.; Ma, H.; Liu, W.; Zheng, C. A Weakly-Supervised Framework for COVID-19 Classification and Lesion Localization from Chest CT. IEEE Trans. Med. Imaging 2020, 39, 2615–2625.

- He, K.; Gkioxari, G.; Dollár, P.; Girshick, R. Mask R-CNN. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2980–2988.

- Ma, J.; Ge, C.; Wang, Y.; An, X.; Gao, J.; Yu, Z.; Zhang, M.; Liu, X.; Deng, X.; Cao, S.; et al. COVID-19 CT Lung and Infection Segmentation Dataset. Zenodo 2020.

- Zaffino, P.; Marzullo, A.; Moccia, S.; Calimeri, F.; De Momi, E.; Bertucci, B.; Arcuri, P.P.; Spadea, M.F. An open-source COVID-19 CT dataset with automatic lung tissue classification for radiomics. Bioengineering 2021, 8, 26.

- LeCun, Y.; Bengio, Y.; Hinton, G. Deep learning. Nature 2015, 521, 436–444.

- Altini, N.; Cascarano, G.D.; Brunetti, A.; De Feudis, D.I.; Buongiorno, D.; Rossini, M.; Pesce, F.; Gesualdo, L.; Bevilacqua, V. A Deep Learning Instance Segmentation Approach for Global Glomerulosclerosis Assessment in Donor Kidney Biopsies. Electronics 2020, 9, 1768.

- Brunetti, A.; Carnimeo, L.; Trotta, G.F.; Bevilacqua, V. Computer-assisted frameworks for classification of liver, breast and blood neoplasias via neural networks: A survey based on medical images. Neurocomputing 2019, 335, 274–298.

- Lateef, F.; Ruichek, Y. Survey on semantic segmentation using deep learning techniques. Neurocomputing 2019, 338, 321–348.

- Altini, N.; Cascarano, G.D.; Brunetti, A.; Marino, F.; Rocchetti, M.T.; Matino, S.; Venere, U.; Rossini, M.; Pesce, F.; Gesualdo, L.; et al. Semantic Segmentation Framework for Glomeruli Detection and Classification in Kidney Histological Sections. Electronics 2020, 9, 503.

- Bevilacqua, V.; Brunetti, A.; Cascarano, G.D.; Guerriero, A.; Pesce, F.; Moschetta, M.; Gesualdo, L. A comparison between two semantic deep learning frameworks for the autosomal dominant polycystic kidney disease segmentation based on magnetic resonance images. BMC Med. Inform. Decis. Mak. 2019, 19, 244.

- Liu, L.; Cheng, J.; Quan, Q.; Wu, F.X.; Wang, Y.P.; Wang, J. A survey on U-shaped networks in medical image segmentations. Neurocomputing 2020, 409, 244–258.

- Ronneberger, O.; Fischer, P.; Brox, T. U-net: Convolutional networks for biomedical image segmentation. Lect. Notes Comput. Sci. (Incl. Subser. Lect. Notes Artif. Intell. Lect. Notes Bioinform.) 2015, 9351, 234–241.

- Cicek, O.; Abdulkadir, A.; Lienkamp, S.S.; Brox, T.; Ronneberger, O. 3D U-Net: Learning Dense Volumetric Segmentation from Sparse Annotation. Lect. Notes Comput. Sci. (Incl. Subser. Lect. Notes Artif. Intell. Lect. Notes Bioinform.) 2016, 9901, 424–432.

- Milletari, F.; Navab, N.; Ahmadi, S.A.A. V-Net: Fully Convolutional Neural Networks for Volumetric Medical Image Segmentation. In Proceedings of the 2016 Fourth International Conference on 3D Vision (3DV), Stanford, CA, USA, 25–28 October 2016; pp. 565–571.

- Altini, N.; Prencipe, B.; Brunetti, A.; Brunetti, G.; Triggiani, V.; Carnimeo, L.; Marino, F.; Guerriero, A.; Villani, L.; Scardapane, A.; et al. A Tversky Loss-Based Convolutional Neural Network for Liver Vessels Segmentation; Springer: Cham, Switzerland, 2020; Volume 12463.

- Altini, N.; De Giosa, G.; Fragasso, N.; Coscia, C.; Sibilano, E.; Prencipe, B.; Hussain, S.M.; Brunetti, A.; Buongiorno, D.; Guerriero, A.; et al. Segmentation and Identification of Vertebrae in CT Scans Using CNN, k-Means Clustering and k-NN. Informatics 2021, 8, 40.

- Lambin, P.; Rios-Velazquez, E.; Leijenaar, R.; Carvalho, S.; Van Stiphout, R.G.; Granton, P.; Zegers, C.M.; Gillies, R.; Boellard, R.; Dekker, A.; et al. Radiomics: Extracting more information from medical images using advanced feature analysis. Eur. J. Cancer 2012, 48, 441–446.

- Le, N.Q.K.; Kha, Q.H.; Nguyen, V.H.; Chen, Y.C.; Cheng, S.J.; Chen, C.Y. Machine Learning-Based Radiomics Signatures for EGFR and KRAS Mutations Prediction in Non-Small-Cell Lung Cancer. Int. J. Mol. Sci. 2021, 22, 9254.

- Aerts, H.J.; Velazquez, E.R.; Leijenaar, R.T.; Parmar, C.; Grossmann, P.; Cavalho, S.; Bussink, J.; Monshouwer, R.; Haibe-Kains, B.; Rietveld, D.; et al. Decoding tumour phenotype by noninvasive imaging using a quantitative radiomics approach. Nat. Commun. 2014, 5.

- Le, N.Q.K.; Hung, T.N.K.; Do, D.T.; Lam, L.H.T.; Dang, L.H.; Huynh, T.T. Radiomics-based machine learning model for efficiently classifying transcriptome subtypes in glioblastoma patients from MRI. Comput. Biol. Med. 2021, 132, 104320.

- Bevilacqua, V.; Brunetti, A.; Trotta, G.F.; Dimauro, G.; Elez, K.; Alberotanza, V.; Scardapane, A. A novel approach for Hepatocellular Carcinoma detection and classification based on triphasic CT Protocol. In Proceedings of the 2017 IEEE Congress on Evolutionary Computation (CEC), Donostia, Spain, 5–8 June 2017; pp. 1856–1863.

- Pinker, K.; Chin, J.; Melsaether, A.N.; Morris, E.A.; Moy, L. Precision medicine and radiogenomics in breast cancer: New approaches toward diagnosis and treatment. Radiology 2018, 287, 732–747.

- Van Griethuysen, J.J.; Fedorov, A.; Parmar, C.; Hosny, A.; Aucoin, N.; Narayan, V.; Beets-Tan, R.G.; Fillion-Robin, J.C.; Pieper, S.; Aerts, H.J. Computational radiomics system to decode the radiographic phenotype. Cancer Res. 2017, 77, e104–e107.

- Zwanenburg, A.; Leger, S.; Vallières, M.; Löck, S. Image biomarker standardisation initiative. arXiv 2016, arXiv:1612.07003.

- Gillies, R.J.; Kinahan, P.E.; Hricak, H. Radiomics: Images are more than pictures, they are data. Radiology 2016, 278, 563–577.

- Kumar, V.; Gu, Y.; Basu, S.; Berglund, A.; Eschrich, S.A.; Schabath, M.B.; Forster, K.; Aerts, H.J.; Dekker, A.; Fenstermacher, D.; et al. Radiomics: The process and the challenges. Magn. Reson. Imaging 2012, 30, 1234–1248.

- Rizzo, S.; Botta, F.; Raimondi, S.; Origgi, D.; Fanciullo, C.; Morganti, A.G.; Bellomi, M. Radiomics: The facts and the challenges of image analysis. Eur. Radiol. Exp. 2018, 2.

- Heimann, T.; van Ginneken, B.; Styner, M.M.A.; Arzhaeva, Y.; Aurich, V.; Bauer, C.; Beck, A.; Becker, C.; Beichel, R.; Bekes, G.; et al. Comparison and Evaluation of Methods for Liver Segmentation From CT Datasets. IEEE Trans. Med. Imaging 2009, 28, 1251–1265.

- Bilic, P.; Christ, P.F.; Vorontsov, E.; Chlebus, G.; Chen, H.; Dou, Q.; Fu, C.W.; Han, X.; Heng, P.A.; Hesser, J.; et al. The Liver Tumor Segmentation Benchmark (LiTS). arXiv 2019, arXiv:1901.04056.

- Prencipe, B.; Altini, N.; Cascarano, G.D.; Guerriero, A.; Brunetti, A. A Novel Approach Based on Region Growing Algorithm for Liver and Spleen Segmentation from CT Scans. In Intelligent Computing Theories and Application; Huang, D.S., Bevilacqua, V., Hussain, A., Eds.; Springer International Publishing: Cham, Switzerland, 2020; pp. 398–410.

- Haralick, R.M.; Dinstein, I.; Shanmugam, K. Textural Features for Image Classification. IEEE Trans. Syst. Man Cybern. 1973, SMC-3, 610–621.

- Bevilacqua, V.; Pietroleonardo, N.; Triggiani, V.; Brunetti, A.; Di Palma, A.M.; Rossini, M.; Gesualdo, L. An innovative neural network framework to classify blood vessels and tubules based on Haralick features evaluated in histological images of kidney biopsy. Neurocomputing 2017, 228, 143–153.

- Altini, N.; Marvulli, T.M.; Caputo, M.; Mattioli, E.; Prencipe, B.; Cascarano, G.D.; Brunetti, A.; Tommasi, S.; Bevilacqua, V.; De Summa, S.; et al. Multi-class Tissue Classification in Colorectal Cancer with Handcrafted and Deep Features. In Intelligent Computing Theories and Application; Huang, D.S., Jo, K.H., Li, J., Gribova, V., Bevilacqua, V., Eds.; Springer International Publishing: Cham, Switzerland, 2021; pp. 512–525.

- Galloway, M.M. Texture analysis using gray level run lengths. Comput. Graph. Image Process. 1975, 4, 172–179.

- Chu, A.; Sehgal, C.M.; Greenleaf, J.F. Use of gray value distribution of run lengths for texture analysis. Pattern Recognit. Lett. 1990, 11, 415–419.

- Thibault, G.; Fertil, B.; Navarro, C.; Pereira, S.; Cau, P.; Levy, N.; Sequeira, J.; Mari, J.-L. Texture Indexes and Gray Level Size Zone Matrix Application to Cell Nuclei Classification. In Proceedings of the 10th International Conference on Pattern Recognition and Information Processing, Minsk, Belarus, 19–21 May 2009; pp. 140–145.

- Sun, C.; Wee, W.G. Neighboring gray level dependence matrix for texture classification. Comput. Vis. Graph. Image Process. 1983, 23, 341–352.

- Amadasun, M.; King, R. Textural features corresponding to textural properties. IEEE Trans. Syst. Man Cybern. 1989, 19, 1264–1274.

- Song, F.; Guo, Z.; Mei, D. Feature Selection Using Principal Component Analysis. In Proceedings of the 2010 International Conference on System Science, Engineering Design and Manufacturing Informatization, Yichang, China, 12–14 November 2010; Volume 1, pp. 27–30.

- van der Maaten, L.; Hinton, G. Visualizing data using t-SNE. J. Mach. Learn. Res. 2008, 9, 2579–2605.

- Šidák, Z. Rectangular Confidence Regions for the Means of Multivariate Normal Distributions. J. Am. Stat. Assoc. 1967, 62, 626–633.

- Weiß, M.; Göker, M. Chapter 12 - Molecular Phylogenetic Reconstruction. In The Yeasts, 5th ed.; Kurtzman, C.P., Fell, J.W., Boekhout, T., Eds.; Elsevier: London, UK, 2011; pp. 159–174.

- Beylkin, G.; Coifman, R.; Rokhlin, V. Fast wavelet transforms and numerical algorithms. In Fundamental Papers in Wavelet Theory; Princeton University Press: Princeton, NJ, USA, 2009; pp. 741–783.

- Daubechies, I. Ten Lectures on Wavelets; SIAM: Philadelphia, PA, USA, 1992.

| Dataset | Acronym | Samples | Disease | Annotation |
|---|---|---|---|---|
| Ma et al. [24] | D1 | 20 CT | COVID-19 | LP, LL |
| VESSEL12 | D2 | 20 CT | Other lung diseases | LP |
| Ours | D3 | 20 CT | COVID-19 | LP, GGO, LC |
| Zaffino et al. [25] | D4 | 50 CT | COVID-19 | A, LP, GGO, LC, DT |

| Model | Precision [%] | Recall [%] | Dice [%] | RVD [%] | ASSD [mm] | MSSD [mm] |
|---|---|---|---|---|---|---|
| LM | | | | | | |
| LLM | | | | | | |
| LLMPP | | | | | | |
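The same table reports two surface-based metrics in millimetres, ASSD and MSSD. These are commonly defined as in the liver-segmentation comparison by Heimann et al. in the reference list. For each boundary point of one mask, take the distance to the nearest boundary point of the other mask, in both directions. ASSD is the mean of all these distances and MSSD is their maximum. The brute-force sketch below follows those definitions under several assumptions:

- 2D masks;
- boundary pixels defined by 4-connectivity, with the image edge counted as background;
- pixel spacing given as (row, column) in millimetres;
- hypothetical function names.

A 3D version would use 6-neighbours and three spacing values. Real tools usually rely on distance transforms rather than all-pairs search.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[int, int]


def surface_points(mask: Sequence[Sequence[int]]) -> List[Point]:
    """Foreground pixels with at least one 4-neighbour that is background or outside the image."""
    rows, cols = len(mask), len(mask[0])
    points: List[Point] = []
    for r in range(rows):
        for c in range(cols):
            if not mask[r][c]:
                continue
            for dr, dc in ((-1, 0), (1, 0), (0, -1), (0, 1)):
                rr, cc = r + dr, c + dc
                if not (0 <= rr < rows and 0 <= cc < cols) or not mask[rr][cc]:
                    points.append((r, c))
                    break
    return points


def _nearest(p: Point, others: List[Point], spacing: Tuple[float, float]) -> float:
    # Euclidean distance in physical units (e.g. mm) to the closest point of the other surface.
    return min(math.hypot((p[0] - q[0]) * spacing[0], (p[1] - q[1]) * spacing[1])
               for q in others)


def assd_mssd(seg, ref, spacing: Tuple[float, float] = (1.0, 1.0)) -> Tuple[float, float]:
    """Average and maximum symmetric surface distance between two binary masks (brute force)."""
    s_seg, s_ref = surface_points(seg), surface_points(ref)
    if not s_seg or not s_ref:
        raise ValueError("both masks need at least one foreground pixel")
    distances = [_nearest(p, s_ref, spacing) for p in s_seg]
    distances += [_nearest(q, s_seg, spacing) for q in s_ref]
    return sum(distances) / len(distances), max(distances)


seg = [[1, 0, 0]]
ref = [[0, 0, 1]]
print(assd_mssd(seg, ref))                      # (2.0, 2.0) with 1 mm pixels
print(assd_mssd(seg, ref, spacing=(1.0, 0.5)))  # (1.0, 1.0) with 0.5 mm column spacing
```

Pooling the distances from both directions before averaging makes ASSD symmetric in the two masks. Taking the maximum of the same pool gives MSSD, the surface Hausdorff distance.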

| Feature | Mean (Non-PTE) | Mean (PTE) | Median (Non-PTE) | Median (PTE) | Std (Non-PTE) | Std (PTE) | IQR (Non-PTE) | IQR (PTE) | | |
|---|---|---|---|---|---|---|---|---|---|---|
| 1 | 0.0485 | 0.0683 | 0.0390 | 0.0519 | 0.0322 | 0.0430 | 0.0325 | 0.0644 | 24,999 | 14,601 |
| 2 | 749.736 | 843.911 | 742.459 | 821.026 | 119.081 | 218.129 | 110.752 | 218.576 | 25,849 | 13,751 |
| 3 | 68.824 | 66.891 | 71.056 | 66.667 | 0.5538 | 0.4505 | 0.6482 | 0.5939 | 14,637 | 24,963 |
| 4 | 0.4233 | 0.4029 | 0.4162 | 0.4032 | 0.0210 | 0.0258 | 0.0269 | 0.0389 | 11,459 | 28,141 |
| 5 | 142.651 | 137.572 | 148.364 | 132.101 | 26.378 | 20.881 | 30.981 | 22.403 | 14,731 | 24,869 |
| 6 | 0.0137 | 0.0164 | 0.0123 | 0.0160 | 0.0053 | 0.0057 | 0.0079 | 0.0065 | 25,470 | 14,130 |
| 7 | 0.0088 | 0.0078 | 0.0087 | 0.0074 | 0.0021 | 0.0024 | 0.0027 | 0.0041 | 14,680 | 24,920 |
| 8 | −0.3484 | −0.1261 | −0.2963 | −0.1591 | 0.2789 | 0.3489 | 0.3635 | 0.4178 | 25,838 | 13,762 |
| 9 | 0.6364 | −0.4777 | −0.0010 | −0.0092 | 15.061 | 24.794 | 0.0544 | 0.0322 | 14,646 | 24,954 |
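The feature table reports, for each of the nine features, the mean, median, standard deviation and IQR in the Non-PTE and PTE groups, plus two unlabeled columns. In every row those two values add up to 39,600 (for example, 24,999 + 14,601). This would be expected of the pair of Mann-Whitney U statistics for two groups whose sizes multiply to 39,600, since U1 + U2 = n1 * n2. The table does not say so, so this is an inference. The sketch below computes both kinds of quantity in pure Python. The following are assumptions:

- the sample (n - 1) standard deviation;
- linear-interpolation quartiles;
- the function names.

The toy inputs are not study data.

```python
import statistics
from typing import Dict, List, Sequence, Tuple


def describe(values: Sequence[float]) -> Dict[str, float]:
    """Mean, median, sample standard deviation and IQR (linear-interpolation quartiles)."""
    q1, _, q3 = statistics.quantiles(values, n=4, method="inclusive")
    return {
        "mean": statistics.fmean(values),
        "median": statistics.median(values),
        "std": statistics.stdev(values),
        "iqr": q3 - q1,
    }


def mann_whitney_u(a: Sequence[float], b: Sequence[float]) -> Tuple[float, float]:
    """Return (U_a, U_b) from pooled mid-ranks; U_a + U_b == len(a) * len(b)."""
    pooled = sorted([(v, 0) for v in a] + [(v, 1) for v in b])
    ranks: List[float] = [0.0] * len(pooled)
    i = 0
    while i < len(pooled):
        j = i
        while j + 1 < len(pooled) and pooled[j + 1][0] == pooled[i][0]:
            j += 1
        mid_rank = (i + j) / 2 + 1  # tied values share the average of their 1-based ranks
        for k in range(i, j + 1):
            ranks[k] = mid_rank
        i = j + 1
    rank_sum_a = sum(r for r, (_, group) in zip(ranks, pooled) if group == 0)
    u_a = rank_sum_a - len(a) * (len(a) + 1) / 2
    return u_a, len(a) * len(b) - u_a


non_pte = [1.0, 2.0, 3.0, 4.0]   # toy values, not data from the study
pte = [2.0, 5.0, 6.0]
print(describe(non_pte))
print(mann_whitney_u(non_pte, pte))  # (2.5, 9.5); the two values add up to 4 * 3 = 12
```

The IQR and the U statistic depend only on ranks and order, not on distributional assumptions. This makes them a natural companion to the mean and standard deviation for skewed radiomic features.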

| Author | Method | Materials | Task | Results |
|---|---|---|---|---|
| Wu et al. [12] | Joint classification and segmentation (JCS) | 144,167 CT images (3855 annotated) | Real-time and explainable COVID-19 classification | Classification: sensitivity = 0.95, specificity = 0.93; segmentation: Dice = 0.78 |
| Akbari et al. [13] | Active contour | 100 CT images | COVID-19 lesion segmentation | FRAGL: Dice = 0.96, Jaccard = 0.93, F1 = 0.66, precision = 0.91, recall = 0.53 |
| Cao et al. [14] | U-Net-like CNN | 2 CT scans | Objective assessment of pulmonary involvement and therapy response in COVID-19 | Qualitative |
| Rajinikanth et al. [15] | Firefly algorithm and multi-thresholding based on Shannon entropy + Markov random field segmentation | 100 CT images | COVID-19 lesion segmentation | Jaccard = 0.84, Dice = 0.89, accuracy = 0.92, precision = 0.92, sensitivity = 0.95, specificity = 0.94, NPV = 0.93 |
| Rajinikanth et al. [16] | Harmony search optimization and Otsu thresholding | 90 CT coronal slices + 20 CT axial slices | COVID-19 lesion segmentation | Infection rate |
| Ter-Sarkisov [17] | One-shot model based on Mask R-CNN | 750 CT images for segmentation + 1492 for classification | COVID-19 classification and lesion segmentation | Segmentation: AP@0.5 IoU = 0.614; classification: accuracy = 0.91 |
| Zhao et al. [18] | Multi-task learning and self-supervised learning | 349 COVID-19 CT images + 463 non-COVID-19 CT images | COVID-19 classification | F1 = 0.90, AUC = 0.98, accuracy = 0.89 |
| Wang et al. [19] | Segmentation: FCN, U-Net, V-Net, 3D U-Net++; classification: ResNet-50, Inception, DPN-92, attention ResNet-50 | 1136 CT images | COVID-19 pneumonia detection | Sensitivity = 0.97, specificity = 0.92, AUC = 0.991 |
| Oulefki et al. [20] | Improved Kapur entropy-based multilevel thresholding procedure | 275 CT images from COVID-CT-dataset + 22 CT images (local) | COVID-19 lesion segmentation | Accuracy = 0.98, sensitivity = 0.73, F1 = 0.71, precision = 0.73, MCC = 0.71, Dice = 0.71, Jaccard = 0.57, specificity = 0.99 |
| Zheng, Wang et al. [21,22] | U-Net + 3D deep neural network | 499 CT volumes (train) + 131 CT volumes (test) | Lung segmentation + COVID-19 classification | ROC AUC = 0.96, PR AUC = 0.98, sensitivity = 0.91, specificity = 0.91 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Bevilacqua, V.; Altini, N.; Prencipe, B.; Brunetti, A.; Villani, L.; Sacco, A.; Morelli, C.; Ciaccia, M.; Scardapane, A. Lung Segmentation and Characterization in COVID-19 Patients for Assessing Pulmonary Thromboembolism: An Approach Based on Deep Learning and Radiomics. Electronics 2021, 10, 2475. https://doi.org/10.3390/electronics10202475
