Non-Linear Chaotic Features-Based Human Activity Recognition

Abstract

1. Introduction

- We present a novel method for human activity recognition based on non-linear chaotic features. Because a time series with chaotic characteristics can be reconstructed as a nonlinear dynamical system, the reconstructed system can be used to study qualitative and quantitative changes across different human motion states.

- Acceleration sensor data of human activity can be described as a chaotic time series. We therefore reconstruct each activity time series in a phase space using time-delay embedding. During the reconstruction of the motion phase space, we use the C-C method and the Grassberger–Procaccia (G-P) algorithm to estimate the optimal delay time and the embedding dimension, respectively.

- We construct a two-dimensional chaotic feature matrix whose features are the correlation dimension and the largest Lyapunov exponent (LLE) of the attractor trajectory in the reconstructed phase space. Unlike conventional time-domain and frequency-domain features, these chaotic features capture the latent dynamical information of human activity.

2. Non-Linear Chaotic Features-Based Human Activity Recognition

2.1. Chaotic Analysis of Motion Time Series

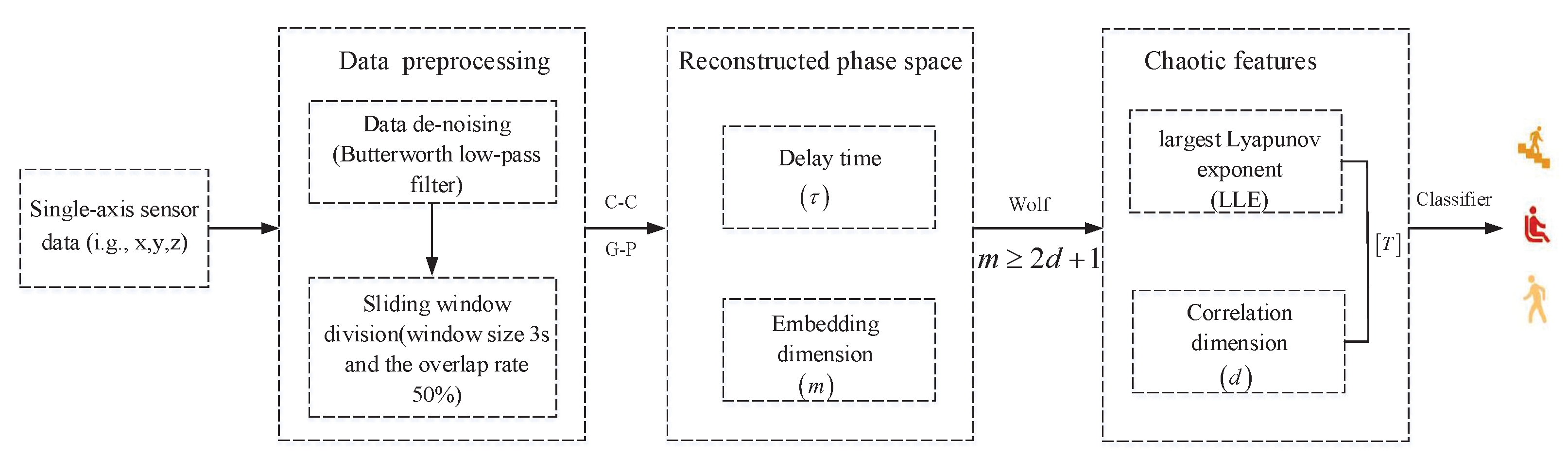

2.2. System Model

2.3. Reconstructed Phase Space

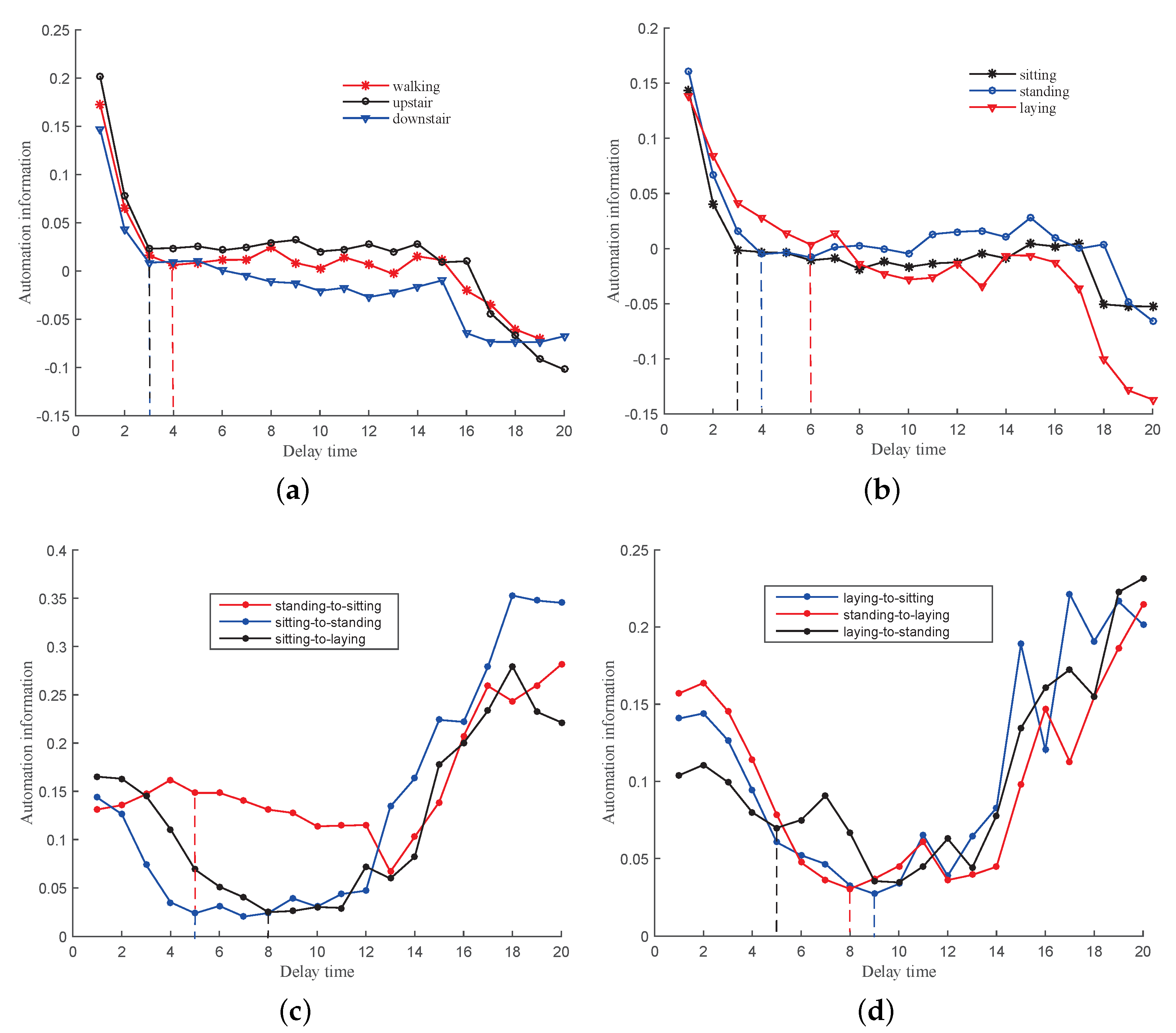
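Equation (4) is not reproduced in this text. The sketch below assumes the standard time-delay (Takens) embedding, in which a series $x_1, \dots, x_n$ is mapped to the vectors $Y_i = [x_i, x_{i+\tau}, \dots, x_{i+(m-1)\tau}]$ for $i = 1, \dots, n-(m-1)\tau$. The helper name `delay_embed` is hypothetical.

```python
import numpy as np


def delay_embed(x, tau, m):
    """Time-delay embedding: row i is [x[i], x[i+tau], ..., x[i+(m-1)*tau]]
    (0-based), giving an array of shape (n - (m-1)*tau, m)."""
    x = np.asarray(x, dtype=float)
    n_vectors = len(x) - (m - 1) * tau
    if tau < 1 or m < 1 or n_vectors < 1:
        raise ValueError("series too short for this (tau, m)")
    # Column j is the series shifted by j*tau samples.
    return np.column_stack([x[j * tau : j * tau + n_vectors] for j in range(m)])


Y = delay_embed(np.arange(10), tau=2, m=3)
print(Y.shape)  # (6, 3)
print(Y[0])     # [0. 2. 4.]
```

Each row of `Y` is one point of the reconstructed trajectory; the later sketches in this section take such a matrix as input.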

2.3.1. Delay Time

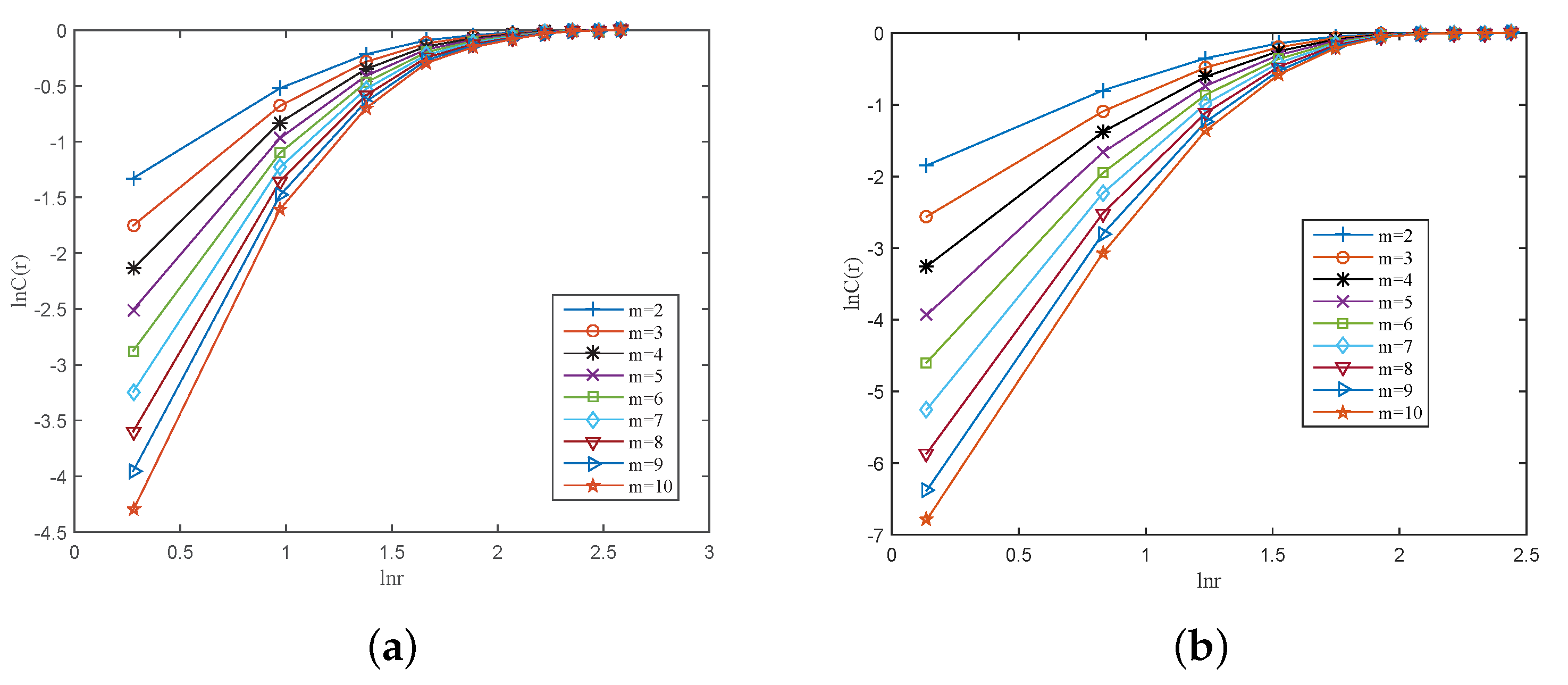
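The C-C method of Kim, Eykholt and Salas is not spelled out in this text. The following is a minimal sketch that assumes the settings proposed in that paper: sup-norm correlation integrals, $m = 2, \dots, 5$, and radii $r_j = j\sigma/2$ for $j = 1, \dots, 4$, where $\sigma$ is the standard deviation of the series. For each candidate delay $t$, the series is split into $t$ disjoint subseries and the sketch computes the averaged statistic $\bar S(t)$, its spread $\Delta \bar S(t)$, and $S_{cor}(t) = \Delta \bar S(t) + |\bar S(t)|$. The delay is taken at the first local minimum of $\Delta \bar S(t)$, which is one of the two criteria Kim et al. propose. The delay window $\tau_w = (m-1)\tau$ is taken at the global minimum of $S_{cor}(t)$. The function names and the `max_t` search range are hypothetical. Because the pairwise distances cost $O(n^2)$, the sketch is only practical for short segments.

```python
import numpy as np


def _sup_dists(series, m):
    """Sup-norm distances between all pairs of m-dimensional lag-1 delay vectors."""
    n = len(series) - (m - 1)
    Y = np.column_stack([series[j : j + n] for j in range(m)])
    d = np.max(np.abs(Y[:, None, :] - Y[None, :, :]), axis=-1)
    return d[np.triu_indices(n, k=1)]


def cc_method(x, max_t=20, ms=(2, 3, 4, 5)):
    """C-C method: returns (tau, tau_w, S_bar, dS_bar) with S_bar[t-1] = S_bar(t)."""
    x = np.asarray(x, dtype=float)
    if len(x) // max_t < max(ms) + 2:
        raise ValueError("series too short for this max_t")
    radii = np.std(x) / 2 * np.arange(1, 5)          # r_j = j * sigma / 2, j = 1..4
    s_bar, ds_bar = np.zeros(max_t), np.zeros(max_t)
    for t in range(1, max_t + 1):
        S = np.zeros((len(ms), len(radii)))
        for s in range(t):
            # s-th of the t disjoint subseries; its consecutive samples are t apart,
            # so lag-1 vectors of the subseries are lag-t vectors of x.
            sub = x[s::t]
            d1 = _sup_dists(sub, 1)
            c1 = np.array([np.mean(d1 < r) for r in radii])
            for a, m in enumerate(ms):
                dm = _sup_dists(sub, m)
                cm = np.array([np.mean(dm < r) for r in radii])
                S[a] += cm - c1 ** m                   # C_s(m, r) - C_s(1, r)^m
        S /= t                                         # average over the subseries
        s_bar[t - 1] = S.mean()
        # Mean over m of (max over r - min over r).
        ds_bar[t - 1] = (S.max(axis=1) - S.min(axis=1)).mean()
    s_cor = ds_bar + np.abs(s_bar)
    minima = [i for i in range(1, max_t - 1)
              if ds_bar[i] < ds_bar[i - 1] and ds_bar[i] <= ds_bar[i + 1]]
    tau = (minima[0] if minima else int(np.argmin(ds_bar))) + 1
    tau_w = int(np.argmin(s_cor)) + 1
    return tau, tau_w, s_bar, ds_bar
```

If no local minimum of $\Delta \bar S(t)$ falls inside the search range, the sketch falls back to its global minimum. That fallback is a simplification, not part of the published method.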

2.3.2. Embedding Dimension

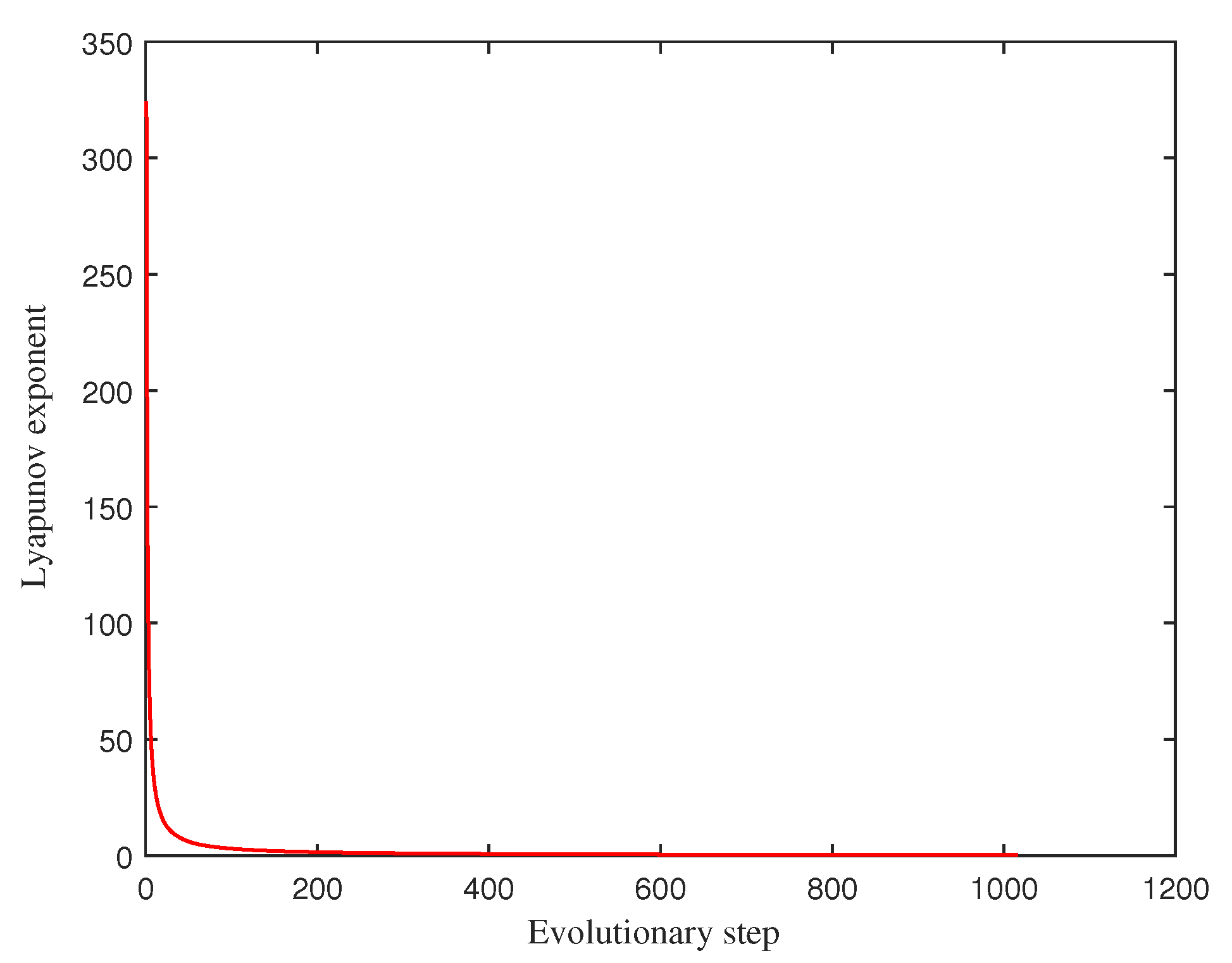

2.3.3. Lyapunov Exponent

- For a motion time series $\{x_i\}_{i=1}^{n}$, the optimal delay time $\tau$ and embedding dimension $m$ are first determined, and the corresponding reconstructed phase space $Y$ is then obtained from Equation (4).

- Take an initial point $Y(t_0)$ in $Y$ and find its nearest neighbor $Y_0(t_0)$, at distance $L_0 = \lVert Y(t_0) - Y_0(t_0) \rVert$. Both points are then evolved forward in time.

- At time $t_1$, the distance between the evolved points is $L_0' = \lVert Y(t_1) - Y_0(t_1) \rVert$. If $L_0' > \varepsilon$, where $\varepsilon$ is a threshold, the reference point $Y(t_1)$ is retained and a replacement neighbor is sought for it; otherwise the current neighbor is kept.

- The replacement $Y_1(t_1)$ is chosen among the points adjacent to $Y(t_1)$ such that its distance $L_1 = \lVert Y(t_1) - Y_1(t_1) \rVert < \varepsilon$ and the angle between $L_1$ and $L_0'$ is as small as possible.

- Repeat the above steps until $Y(t)$ reaches the end of the time series of length $n$.

- If the evolution is tracked over a total of $M$ iterations, the LLE is obtained from Equation (12).
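The steps above follow the algorithm of Wolf et al. Equation (12) is not reproduced in this text, so the sketch assumes Wolf's estimate $\lambda_1 = \frac{1}{t_M - t_0}\sum_{k=1}^{M}\ln\frac{L'_k}{L_{k-1}}$. Here $L'_k$ is the evolved distance and $L_{k-1}$ is the distance at the start of the $k$-th interval. The sketch adds the logarithm at every evolution step; between replacements these terms telescope to the same sum. The parameter names `evolve`, `eps_max` (the threshold $\varepsilon$) and `theiler` (a temporal exclusion window for neighbors) are hypothetical, and `eps_max` must be chosen relative to the size of the attractor. Two simplifications apply: when no point lies within `eps_max`, the current neighbor is kept, and a neighbor that reaches the end of the data is also replaced.

```python
import numpy as np


def wolf_lle(Y, dt=1.0, evolve=1, eps_max=0.05, theiler=1):
    """Largest Lyapunov exponent of a reconstructed trajectory Y (one point per
    row), using the fixed-evolution-time replacement scheme of Wolf et al."""
    Y = np.asarray(Y, dtype=float)
    N = len(Y)
    idx = np.arange(N)

    def neighbours(i):
        # Distances from Y[i]; usable neighbours are temporally separated from i,
        # distinct from Y[i], and can still be evolved `evolve` steps.
        d = np.linalg.norm(Y - Y[i], axis=1)
        ok = (np.abs(idx - i) > theiler) & (idx + evolve < N) & (d > 0)
        return d, ok

    i = 0
    d, ok = neighbours(i)
    if not ok.any():
        raise ValueError("no usable neighbour for the initial point")
    j = int(np.argmin(np.where(ok, d, np.inf)))      # nearest neighbour of Y[0]
    L0 = d[j]
    log_sum, steps = 0.0, 0
    while i + evolve < N:
        i, j = i + evolve, j + evolve
        L1 = max(np.linalg.norm(Y[i] - Y[j]), 1e-12)  # evolved distance L'
        log_sum += np.log(L1 / L0)
        steps += 1
        if L1 > eps_max or j + evolve >= N:
            # Replacement: keep Y[i] and pick, among points closer than eps_max,
            # the one whose direction from Y[i] is best aligned with Y[j] - Y[i].
            d, ok = neighbours(i)
            ok &= d < eps_max
            if ok.any():
                cos = (Y[ok] - Y[i]) @ (Y[j] - Y[i]) / (d[ok] * L1)
                j = int(idx[ok][np.argmax(cos)])
                L1 = d[j]
            elif j + evolve >= N:
                break                                  # neighbour cannot be evolved further
        L0 = L1
    return log_sum / (steps * evolve * dt)
```

As a sanity check, consider the logistic map $x_{k+1} = 4x_k(1-x_k)$, whose exact exponent is $\ln 2 \approx 0.693$ per iteration. Applied to that map's two-dimensional delay embedding, the estimate should come out positive and of that order. A uniform rotation on a circle should give an estimate close to zero.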

2.4. Non-Linear Chaotic Features-Based Human Activity Recognition

- Data preprocessing. A Butterworth low-pass filter is used to separate the gravity component from the single-axis acceleration data. The data are then segmented with a sliding window of 3 s with 50% overlap.

- Reconstructed phase space. The C-C method and the G-P algorithm are used to estimate the delay time τ and the embedding dimension m, respectively, and the phase space of each activity is then reconstructed using Equation (4).

- Feature matrix construction. A two-dimensional chaotic feature matrix is constructed from two features: the correlation dimension d and the LLE of the attractor trajectory in the reconstructed phase space Y.

- Classification and recognition. Two groups of activities, basic activities and transition activities, are classified and recognized with machine learning classifiers (SVM, NB, DT, KNN, and RF are compared in the experiments).
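The following is a minimal NumPy sketch of the preprocessing step. The paper does not give the filter order, the cutoff frequency or the sampling rate, so the sketch assumes a second-order Butterworth filter, a 0.3 Hz cutoff (the value used in the UCI HAR dataset description) and a 50 Hz sampling rate. The filter coefficients are derived with the bilinear transform and applied forward and backward for zero phase. In practice, `scipy.signal.butter` and `scipy.signal.filtfilt` would normally be used for this, with more careful edge handling. All function names are hypothetical.

```python
import math
import numpy as np


def butter2_lowpass(fc, fs):
    """(b, a) of a 2nd-order Butterworth low-pass, via the bilinear transform."""
    k = math.tan(math.pi * fc / fs)            # pre-warped cutoff
    norm = 1.0 / (1.0 + math.sqrt(2.0) * k + k * k)
    b0 = k * k * norm
    b = (b0, 2.0 * b0, b0)
    a = (1.0, 2.0 * (k * k - 1.0) * norm, (1.0 - math.sqrt(2.0) * k + k * k) * norm)
    return b, a


def _biquad(x, b, a):
    """Direct-form I filter. The history starts at x[0], so a constant input
    passes through unchanged (the DC gain is 1)."""
    y = np.empty_like(x)
    x1 = x2 = y1 = y2 = x[0]
    for n, xn in enumerate(x):
        yn = b[0] * xn + b[1] * x1 + b[2] * x2 - a[1] * y1 - a[2] * y2
        x2, x1, y2, y1 = x1, xn, y1, yn
        y[n] = yn
    return y


def split_gravity(acc, fs=50.0, fc=0.3):
    """Forward-backward (zero-phase) low-pass gives gravity; the rest is body motion."""
    acc = np.asarray(acc, dtype=float)
    b, a = butter2_lowpass(fc, fs)
    gravity = _biquad(_biquad(acc, b, a)[::-1], b, a)[::-1]
    return gravity, acc - gravity


def sliding_windows(x, fs=50.0, win_s=3.0, overlap=0.5):
    """Rows are windows of win_s seconds; consecutive windows overlap by `overlap`."""
    x = np.asarray(x, dtype=float)
    win = int(round(win_s * fs))
    step = int(round(win * (1.0 - overlap)))
    if len(x) < win:
        return np.empty((0, win))
    n_win = 1 + (len(x) - win) // step
    return np.stack([x[k * step : k * step + win] for k in range(n_win)])
```

At 50 Hz, a 3 s window holds 150 samples, and the 50% overlap gives a step of 75 samples.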

Algorithm 1. Pseudo-code of non-linear chaotic features-based human activity recognition.

3. Experimental Results and Analysis

3.1. Experimental Materials and Methods

3.2. Experimental Results and Analysis

3.2.1. Reconstructed Phase Space

3.2.2. Experimental Results and Analysis

4. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

1. Zhang, Y.; Tian, G.; Zhang, S.; Li, C. A Knowledge-Based Approach for Multiagent Collaboration in Smart Home: From Activity Recognition to Guidance Service. IEEE Trans. Instrum. Meas. 2019, 69, 1–13.

2. Torres, C.; Alvarez, A. Accelerometer-Based Human Activity Recognition in Smartphones for Healthcare Services. Mob. Health 2015, 5, 147–169.

3. Md, G.; Taskina, F.; Richard, J.; Roger, S.; Muhammad, A.; Ahmed, J.; Sheikh, A. A light weight smartphone based human activity recognition system with high accuracy. J. Netw. Comput. Appl. 2019, 147, 59–72.

4. Terry, U.; Vahid, B.; Dana, K. Exercise Motion Classification from Large-Scale Wearable Sensor Data Using Convolutional Neural Networks. In Proceedings of the IEEE International Workshop on Intelligent Robots and Systems (IROS), Vancouver, BC, Canada, 24–28 September 2017; pp. 2385–2390.

5. Wang, A.; Chen, G.; Yang, J.; Zhao, S.; Chang, C. A Comparative Study on Human Activity Recognition Using Inertial Sensors in a Smartphone. IEEE Sens. J. 2016, 19, 4566–4578.

6. Chen, Y.; Shen, C. Performance Analysis of Smartphone-Sensor Behavior for Human Activity Recognition. IEEE Access 2017, 5, 3095–3110.

7. Qin, Z.; Zhang, Y.; Meng, S.; Qin, Z.; Kim, C. Imaging and fusing time series for wearable sensor-based human activity recognition. Inf. Fusion 2020, 53, 80–87.

8. Guan, Y.; Thomas, P. Ensembles of deep LSTM learners for activity recognition using wearables. ACM 2017, 1.

9. Quaid, M.; Jalal, A. Wearable sensors based human behavioral pattern recognition using statistical features and reweighted genetic algorithm. Multimed. Tools Appl. 2019, 79, 1–23.

10. Ahmad, J.; Majid, A.; Abdul, S. Wearable Sensor-Based Human Behavior Understanding and Recognition in Daily Life for Smart Environments. In Proceedings of the 2018 International Conference on Frontiers of Information Technology (FIT), Islamabad, Pakistan, 17–19 December 2018; pp. 105–110.

11. Zhu, Q.; Chen, Z.; Soh, Y. A Novel Semisupervised Deep Learning Method for Human Activity Recognition. IEEE Trans. Ind. Inform. 2019, 15, 3821–3830.

12. Zhang, H.; Xiao, Z.; Wang, J.; Li, F.; Szczerbicki, E. A Novel IoT-Perceptive Human Activity Recognition (HAR) Approach Using Multihead Convolutional Attention. IEEE Internet Things J. 2020, 7, 1072–1080.

13. Nguyen, T.; Dong, S. Utilization of Postural Transitions in Sensor-based Human Activity Recognition. In Proceedings of the International Conference on Artificial Intelligence in Information and Communication (ICAIIC), Fukuoka, Japan, 19–21 February 2020; pp. 177–181.

14. Ignatov, A. Real-time human activity recognition from accelerometer data using Convolutional Neural Networks. Appl. Soft Comput. 2018, 61, 915–922.

15. Saad, S.; Arslan, B. Chaotic invariants for human action recognition. In Proceedings of the IEEE 11th International Conference on Computer Vision, Rio de Janeiro, Brazil, 14–21 October 2007.

16. Jordan, F.; Shie, M.; Doina, P. Activity and Gait recognition with time-delay embeddings. In Proceedings of the Thirty-Fourth AAAI Conference on Artificial Intelligence, New York, NY, USA, 7–11 February 2020; pp. 407–408.

17. Kawsar, F.; Hasan, M.K.; Love, R.; Ahamed, S.I. A Novel Activity Detection System Using Plantar Pressure Sensors and Smartphone. In Proceedings of the IEEE Computer Software and Applications Conference, Taichung, Taiwan, 1–5 July 2015; pp. 44–49.

18. Bao, J.; Ye, M.; Dou, Y. Mobile phone-based internet of things human action recognition for E-health. In Proceedings of the IEEE 13th International Conference on Signal Processing (ICSP), Chengdu, China, 6–10 November 2016; pp. 957–962.

19. Kim, D.H.; Park, J.S.; Kim, I.Y.; Kim, S.I.; Lee, J.S. Personal recognition using geometric features in the phase space of electrocardiogram. In Proceedings of the IEEE Life Sciences Conference (LSC), Sydney, NSW, Australia, 13–15 December 2017; pp. 198–201.

20. Li, Y.; Song, Y.; Li, C. Selection of parameters for phase space reconstruction of chaotic time series. In Proceedings of the IEEE Fifth International Conference on Bio-Inspired Computing: Theories & Applications, Changsha, China, 23–26 September 2010.

21. Takens, F. Detecting strange attractors in turbulence. In Dynamical Systems and Turbulence, Warwick; Springer: Berlin, Germany, 1981; pp. 366–381.

22. Fang, S.; Chan, H. QRS detection-free electrocardiogram biometrics in the reconstructed phase. Pattern Recognit. Lett. 2013, 34, 595–602.

23. Michael, T.; James, J.; Carlo, T. A practical method for calculating largest Lyapunov exponents from small data sets. Phys. D Nonlinear Phenom. 1993, 65, 117–134.

24. Kim, H.; Eykholt, R.; Salas, J. Nonlinear dynamics, delay times, and embedding windows. Phys. D Nonlinear Phenom. 1999, 127, 48–60.

25. Grassberger, P.; Procaccia, I. Measuring the strangeness of strange attractors. Phys. D Nonlinear Phenom. 1983, 9, 189–208.

26. Leng, X.; Chen, H.; Li, P.; Liu, X. Research of chaotic characteristics of low-voltage air arc. J. Eng. 2019, 16, 2484–2487.

27. Wolf, A.; Swift, B.; Swinney, L.; Vastano, A. Determining Lyapunov exponents from a time series. Phys. D Nonlinear Phenom. 1985, 16, 285–317.

28. HAPT. Available online: http://archive.ics.uci.edu/ml/datasets/Smartphone-Based+Recoginition+of+Human+Activities+and+Postural+Transitions (accessed on 19 August 2020).

29. UCI. Available online: https://archive.ics.uci.edu/ml/datasets/Human+Activity+Recoginition+Using+Smartphones (accessed on 19 August 2020).

| Activity Type | Correlation Dimension d | Embedding Dimension m |
|---|---|---|
| A1 | 1.7756 | 5 |
| A2 | 1.9543 | 5 |
| A3 | 2.1152 | 6 |
| A4 | 0.6508 | 3 |
| A5 | 0.5624 | 3 |
| A6 | 0.2754 | 2 |
| A7 | 1.1577 | 4 |
| A8 | 1.4746 | 4 |
| A9 | 1.9683 | 5 |
| A10 | 1.3771 | 4 |
| A11 | 1.7147 | 5 |
| A12 | 1.5681 | 5 |
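Every embedding dimension in this table equals $\lceil 2d + 1 \rceil$. That is the smallest integer satisfying the criterion $m \geq 2d + 1$, which is commonly associated with Takens' embedding theorem. The text does not say whether m was chosen this way. The short check below only recomputes the criterion from the tabulated d; the function name is hypothetical.

```python
import math

# (correlation dimension d, embedding dimension m) for A1..A12, copied from the table above.
TABLE = {
    "A1": (1.7756, 5), "A2": (1.9543, 5), "A3": (2.1152, 6), "A4": (0.6508, 3),
    "A5": (0.5624, 3), "A6": (0.2754, 2), "A7": (1.1577, 4), "A8": (1.4746, 4),
    "A9": (1.9683, 5), "A10": (1.3771, 4), "A11": (1.7147, 5), "A12": (1.5681, 5),
}


def takens_min_dimension(d):
    """Smallest integer m with m >= 2d + 1."""
    return math.ceil(2 * d + 1)


# Activities whose tabulated m differs from ceil(2d + 1): (tabulated, recomputed).
mismatches = {k: (m, takens_min_dimension(d))
              for k, (d, m) in TABLE.items() if m != takens_min_dimension(d)}
print(mismatches)  # {}
```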

| Activity Type | A1 | A2 | A3 | A4 | A5 | A6 | A7 | A8 | A9 | A10 | A11 | A12 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| LLE | 2.180 | 2.080 | 2.125 | 1.991 | 2.014 | 1.980 | 1.955 | 2.025 | 2.013 | 2.012 | 2.023 | 2.014 |

| Activity Type | SVM | NB | DT | KNN | RF | Average Accuracy |
|---|---|---|---|---|---|---|
| A1 | 98.34% | 97.26% | 93.84% | 94.48% | 97.48% | 96.28% |
| A2 | 100% | 97.77% | 95.65% | 96.57% | 96.37% | 97.27% |
| A3 | 98.42% | 98% | 97.48% | 95.79% | 96.47% | 97.23% |
| A4 | 98.46% | 98.35% | 95.36% | 97.46% | 95.47% | 97.02% |
| A5 | 99.63% | 96.65% | 96.64% | 94.64% | 94.90% | 96.49% |
| A6 | 98.95% | 95.29% | 98.10% | 95.28% | 94.89% | 96.50% |
| A7 | 92.30% | 89.00% | 87.60% | 89.00% | 89.10% | 89.40% |
| A8 | 91.90% | 88.90% | 89.00% | 88.30% | 90.00% | 89.62% |
| A9 | 90.50% | 90.40% | 87.70% | 90.00% | 90.40% | 89.80% |
| A10 | 92.20% | 88.80% | 89.60% | 88.20% | 91.60% | 90.08% |
| A11 | 92.70% | 89.90% | 90.70% | 87.10% | 91.00% | 90.28% |
| A12 | 93.00% | 87.60% | 92.60% | 90.80% | 92.90% | 91.38% |
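The table does not list the classifier settings. As a hypothetical sketch of one of the five methods, the k-nearest-neighbor classifier below works on a feature matrix whose rows are windows and whose two columns are the chaotic features, the correlation dimension d and the LLE. The choice k = 3, the z-scoring of each column and the name `knn_predict` are assumptions. The training points in the example are toy values, not data from the paper.

```python
import numpy as np


def knn_predict(F_train, y_train, F_test, k=3):
    """Majority vote among the k nearest training rows (Euclidean distance
    on z-scored features)."""
    F_train = np.asarray(F_train, dtype=float)
    F_test = np.asarray(F_test, dtype=float)
    y_train = np.asarray(y_train)
    mu, sd = F_train.mean(axis=0), F_train.std(axis=0)
    sd[sd == 0] = 1.0                       # avoid division by zero for constant columns
    A, B = (F_train - mu) / sd, (F_test - mu) / sd
    dist = np.linalg.norm(B[:, None, :] - A[None, :, :], axis=-1)
    nearest = np.argsort(dist, axis=1)[:, :k]
    preds = []
    for row in y_train[nearest]:
        labels, counts = np.unique(row, return_counts=True)
        preds.append(labels[np.argmax(counts)])   # ties -> smallest label
    return np.array(preds)


F_train = np.array([[0.0, 0.0], [0.1, 0.1], [1.0, 1.0], [0.9, 1.1]])  # toy [d, LLE] rows
y_train = np.array([0, 0, 1, 1])
pred = knn_predict(F_train, y_train, [[0.05, 0.05], [0.95, 1.05]], k=3)
print(pred)  # [0 1]
```

Z-scoring matters here because the two features need not share a scale, and unscaled Euclidean distance would let the wider-ranging feature dominate the vote.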

| True / Predicted | A1 | A2 | A3 | A4 | A5 | A6 | A7 | A8 | A9 | A10 | A11 | A12 | Recall |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| A1 | 1150 | 0 | 7 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 99.3% |
| A2 | 17 | 1108 | 5 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 98.0% |
| A3 | 6 | 0 | 1022 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 99.4% |
| A4 | 0 | 0 | 0 | 941 | 4 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 99.5% |
| A5 | 0 | 0 | 0 | 14 | 946 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 98.5% |
| A6 | 0 | 0 | 3 | 0 | 0 | 993 | 0 | 0 | 0 | 0 | 0 | 0 | 99.6% |
| A7 | 0 | 0 | 0 | 1 | 0 | 0 | 95 | 5 | 0 | 0 | 1 | 0 | 93.1% |
| A8 | 0 | 0 | 0 | 0 | 0 | 3 | 3 | 72 | 0 | 0 | 0 | 0 | 92.3% |
| A9 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 100 | 0 | 4 | 1 | 95.2% |
| A10 | 0 | 0 | 0 | 0 | 0 | 5 | 0 | 2 | 0 | 97 | 2 | 4 | 88.1% |
| A11 | 0 | 0 | 0 | 0 | 0 | 0 | 5 | 0 | 9 | 0 | 121 | 1 | 88.9% |
| A12 | 0 | 0 | 1 | 0 | 0 | 3 | 0 | 0 | 1 | 8 | 3 | 84 | 84.0% |
| Precision | 98.3% | 100% | 98.4% | 98.5% | 99.6% | 98.9% | 92.3% | 91.9% | 90.5% | 92.2% | 92.7% | 93.0% | |
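Read the rows as true classes and the columns as predicted classes. Under that reading, the Recall column is the diagonal count divided by the row sum, truncated rather than rounded to one decimal place, and the script below recomputes it from the counts. Computing precision column-wise from the same counts does not reproduce every entry of the printed Precision row. For A1, for example, it gives 1150/1173 ≈ 98.0% rather than 98.3%, so that row may have been obtained differently. The helper `pct` is hypothetical.

```python
import numpy as np

# Rows: true class A1..A12; columns: predicted class A1..A12 (counts from the table above).
CM = np.array([
    [1150, 0, 7, 0, 0, 0, 0, 0, 0, 0, 0, 0],
    [17, 1108, 5, 0, 0, 0, 0, 0, 0, 0, 0, 0],
    [6, 0, 1022, 0, 0, 0, 0, 0, 0, 0, 0, 0],
    [0, 0, 0, 941, 4, 0, 0, 0, 0, 0, 0, 0],
    [0, 0, 0, 14, 946, 0, 0, 0, 0, 0, 0, 0],
    [0, 0, 3, 0, 0, 993, 0, 0, 0, 0, 0, 0],
    [0, 0, 0, 1, 0, 0, 95, 5, 0, 0, 1, 0],
    [0, 0, 0, 0, 0, 3, 3, 72, 0, 0, 0, 0],
    [0, 0, 0, 0, 0, 0, 0, 0, 100, 0, 4, 1],
    [0, 0, 0, 0, 0, 5, 0, 2, 0, 97, 2, 4],
    [0, 0, 0, 0, 0, 0, 5, 0, 9, 0, 121, 1],
    [0, 0, 1, 0, 0, 3, 0, 0, 1, 8, 3, 84],
])

tp = np.diag(CM)
recall = tp / CM.sum(axis=1)     # per true class (row-wise)
precision = tp / CM.sum(axis=0)  # per predicted class (column-wise)


def pct(v):
    """Percentages truncated (not rounded) to one decimal place."""
    return np.floor(v * 1000) / 10


print(pct(recall))
print(pct(precision))
```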

| Related Method | Feature | Activity Class | Recognition Rate |
|---|---|---|---|
| Literature [3] | embedding dimension, delay time | A1–A6 | 90% |
| Our work | correlation dimension, Lyapunov exponent | A1–A6 | 96.80% |
| Our work | correlation dimension, Lyapunov exponent | A7–A12 | 90.08% |
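The recognition rates reported for this work appear to be means of the per-activity Average Accuracy values in the five-classifier table. The script below recomputes both levels of averaging. It reproduces 96.80% for A1–A6. For A7–A12, the same calculation gives about 90.09%, marginally above the 90.08% listed.

```python
# Accuracies (%) per activity for SVM, NB, DT, KNN and RF, copied from the five-classifier table.
ACC = {
    "A1": [98.34, 97.26, 93.84, 94.48, 97.48],
    "A2": [100.00, 97.77, 95.65, 96.57, 96.37],
    "A3": [98.42, 98.00, 97.48, 95.79, 96.47],
    "A4": [98.46, 98.35, 95.36, 97.46, 95.47],
    "A5": [99.63, 96.65, 96.64, 94.64, 94.90],
    "A6": [98.95, 95.29, 98.10, 95.28, 94.89],
    "A7": [92.30, 89.00, 87.60, 89.00, 89.10],
    "A8": [91.90, 88.90, 89.00, 88.30, 90.00],
    "A9": [90.50, 90.40, 87.70, 90.00, 90.40],
    "A10": [92.20, 88.80, 89.60, 88.20, 91.60],
    "A11": [92.70, 89.90, 90.70, 87.10, 91.00],
    "A12": [93.00, 87.60, 92.60, 90.80, 92.90],
}

avg = {k: sum(v) / len(v) for k, v in ACC.items()}   # "Average Accuracy" column
group_a1_a6 = sum(avg[f"A{i}"] for i in range(1, 7)) / 6
group_a7_a12 = sum(avg[f"A{i}"] for i in range(7, 13)) / 6
print(f"{group_a1_a6:.2f} {group_a7_a12:.2f}")  # 96.80 90.09
```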

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Tu, P.; Li, J.; Wang, H.; Cao, T.; Wang, K. Non-Linear Chaotic Features-Based Human Activity Recognition. Electronics 2021, 10, 111. https://doi.org/10.3390/electronics10020111
