Implementation of an Award-Winning Invasive Fish Recognition and Separation System

Abstract

1. Introduction

- Threaten endangered species.

- Affect commercial or recreational navigation.

- Prevent water flow and wastewater or storm discharge.

- Have negative effects on resident fish, plants, or insects.

- Limit commercial and sport fishing.

- Fail to maintain state and federal standards for water quality.

- Introduce another potentially invasive species.

2. Materials and Methods

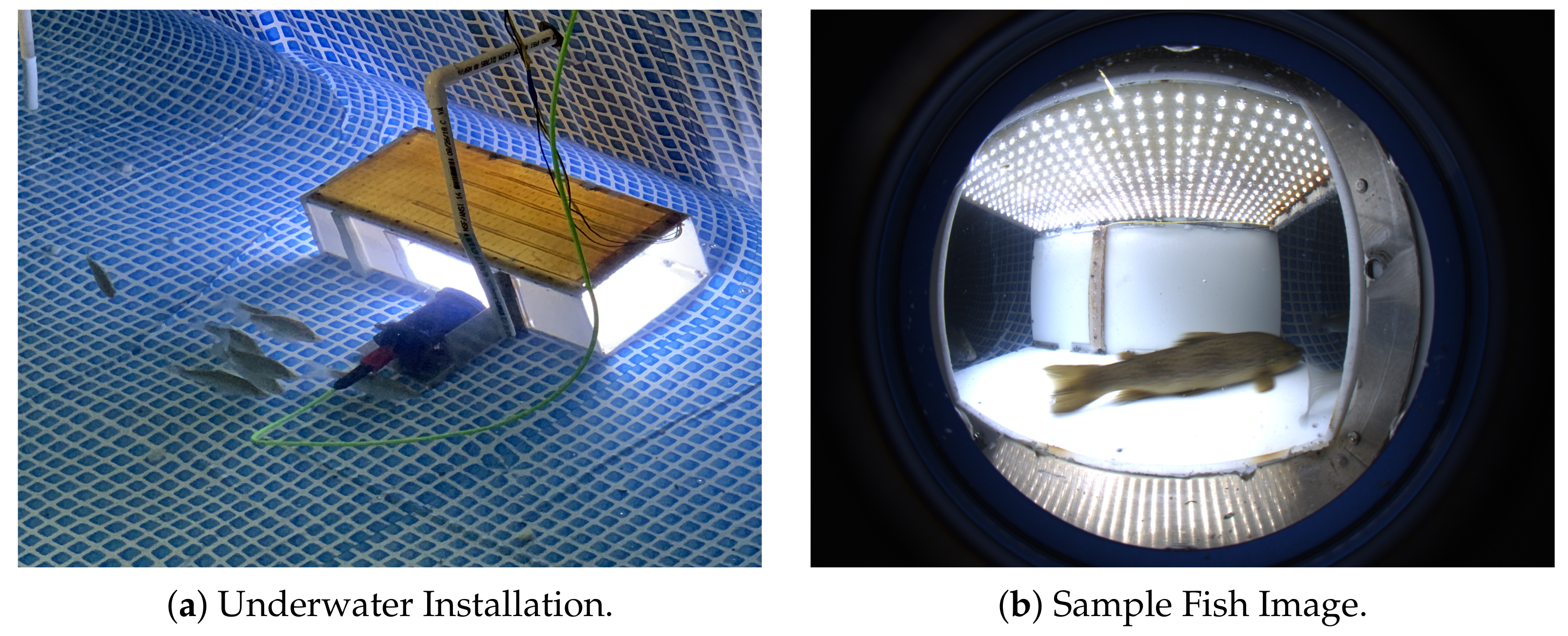

2.1. Underwater Imaging System

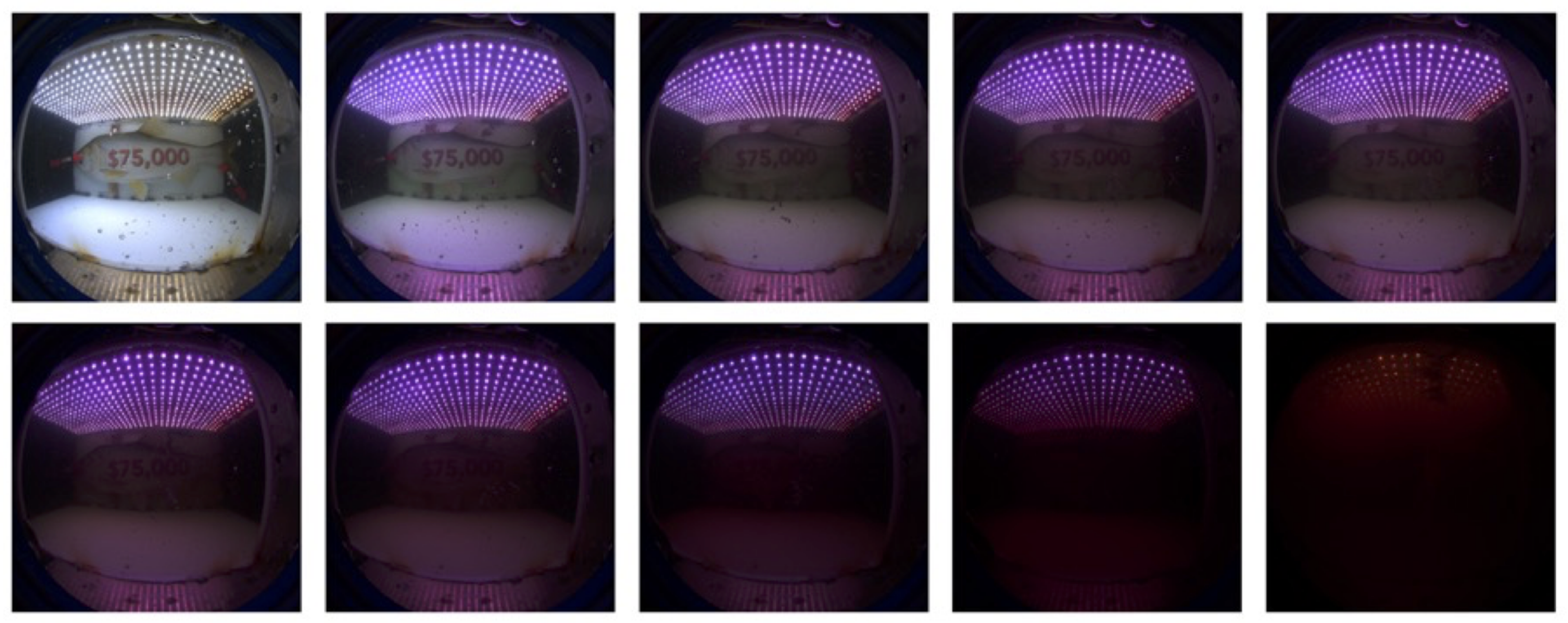

2.2. Water Turbidity

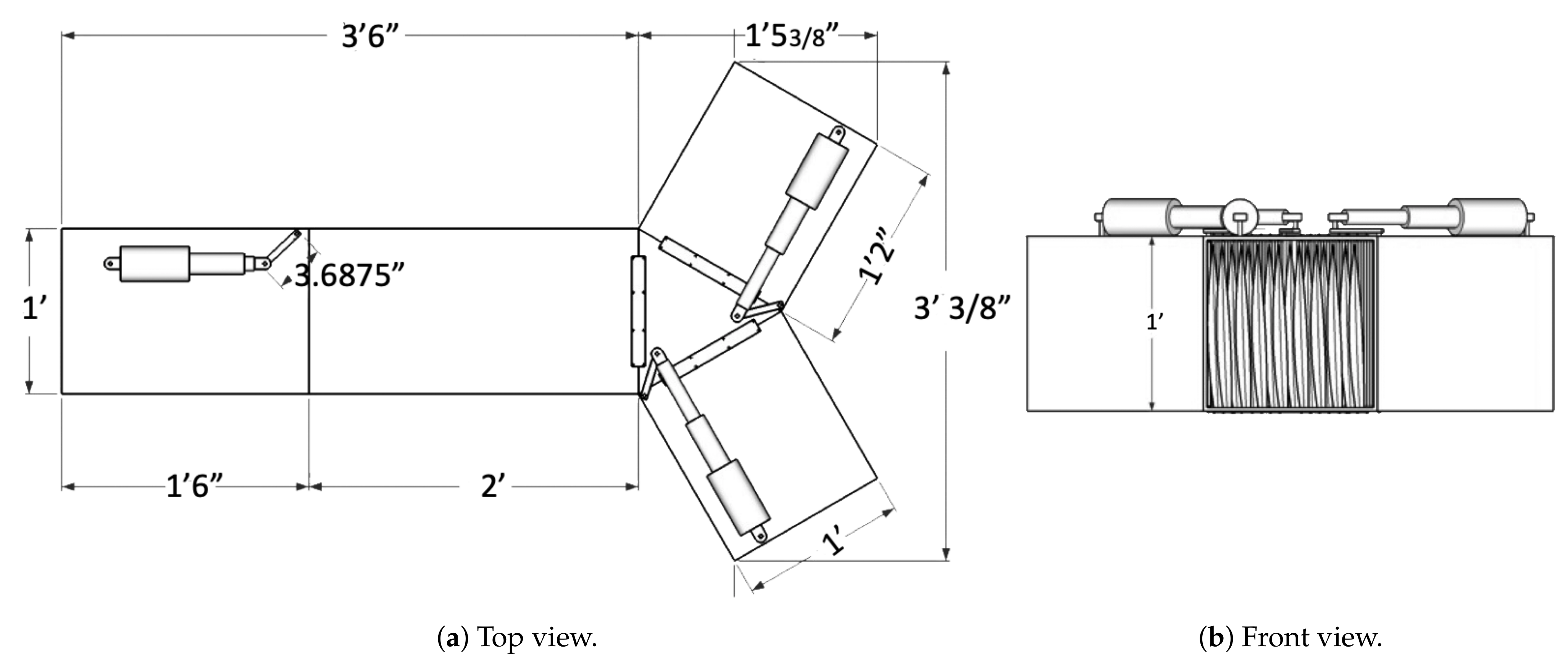

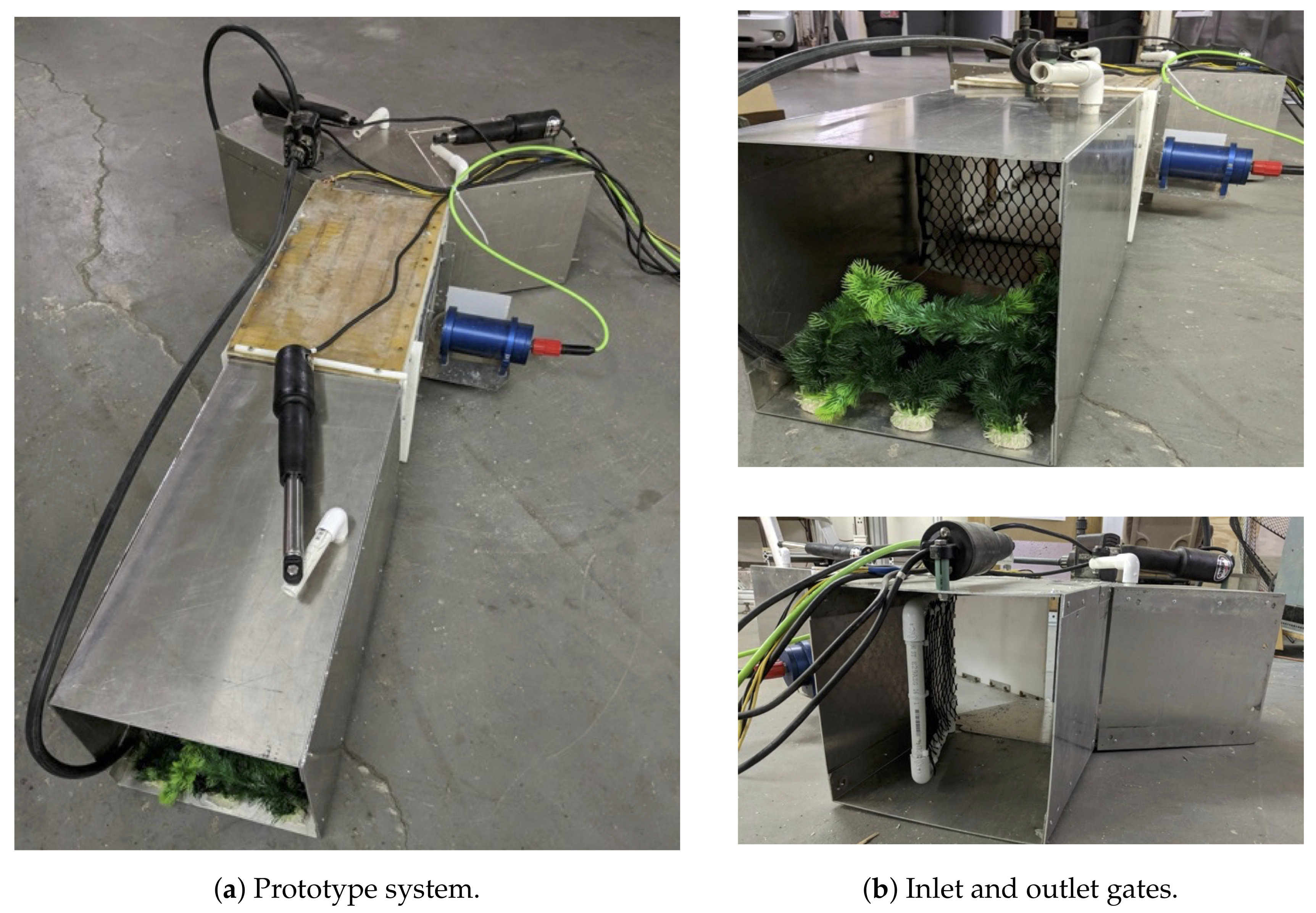

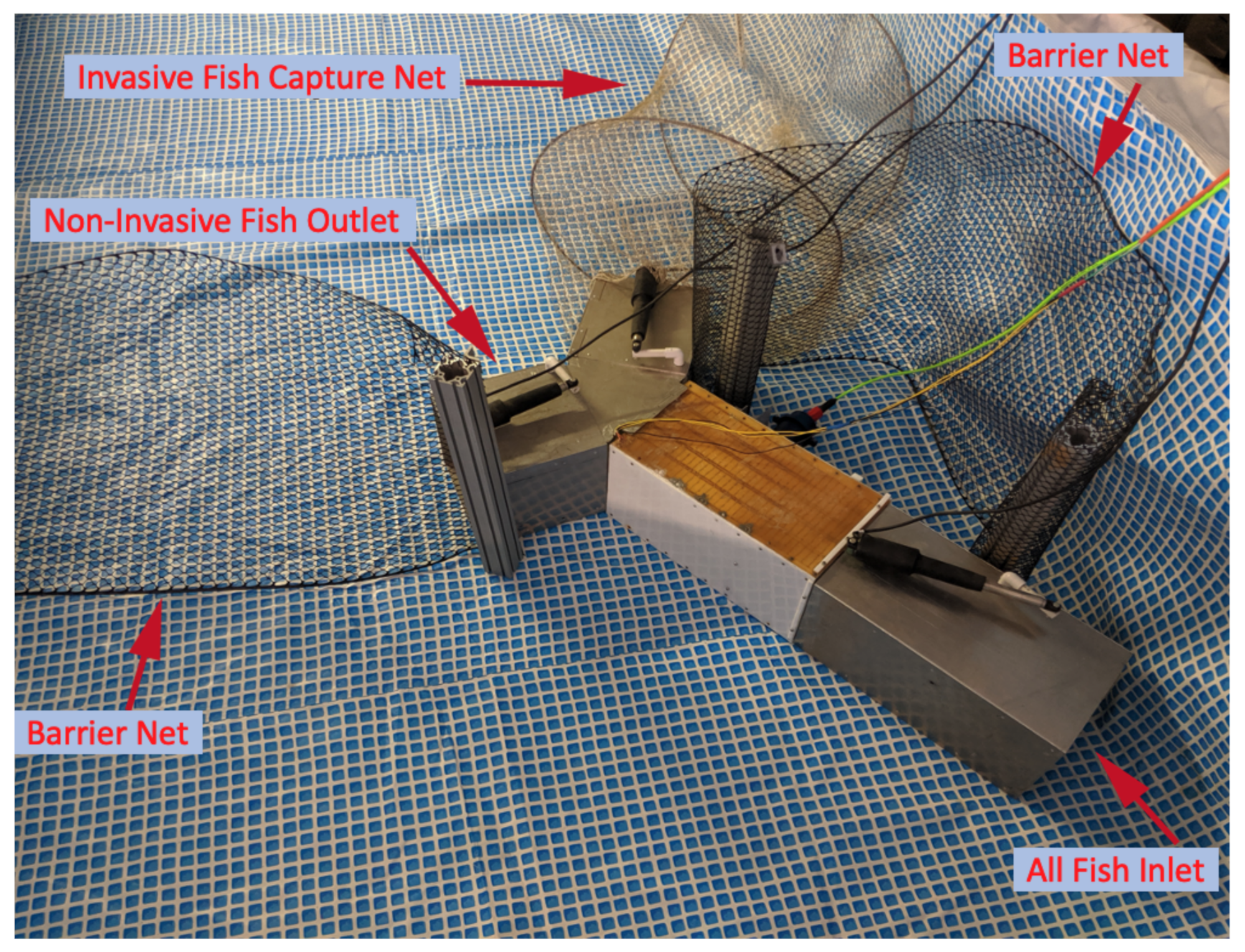

2.3. Fish Separation Mechanism

2.4. Control and Functionality

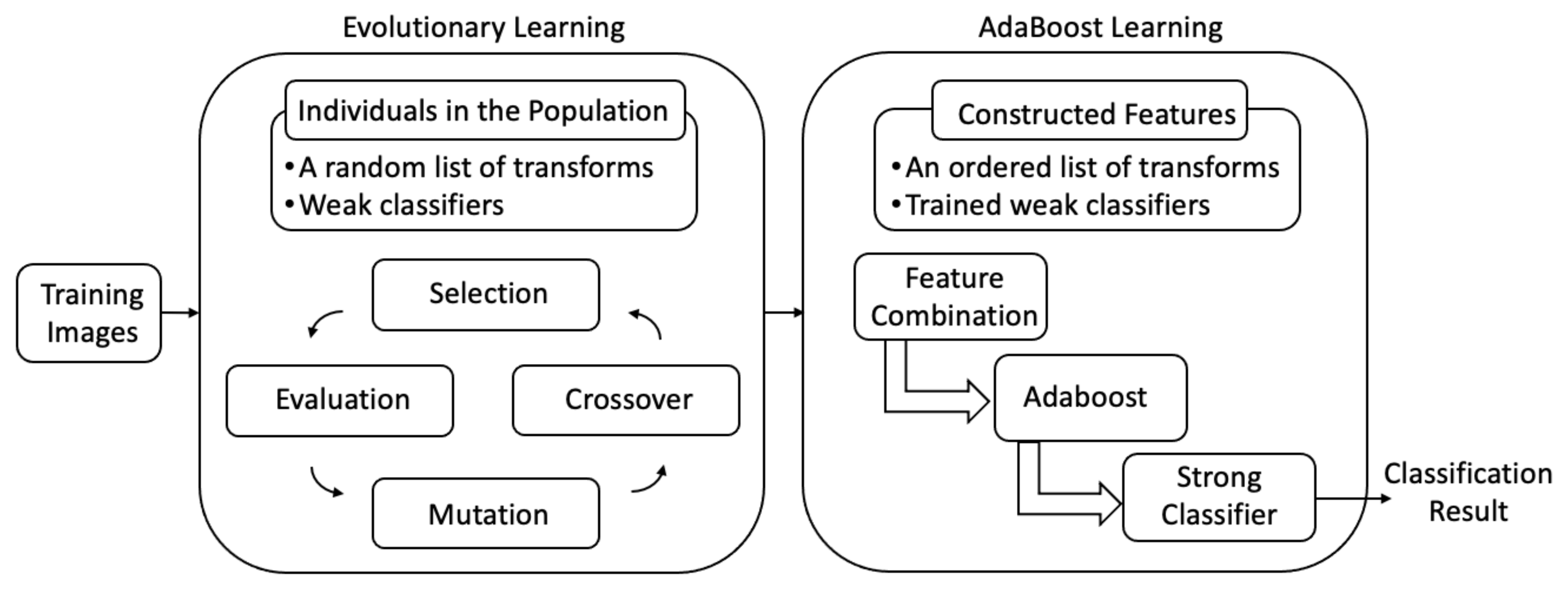

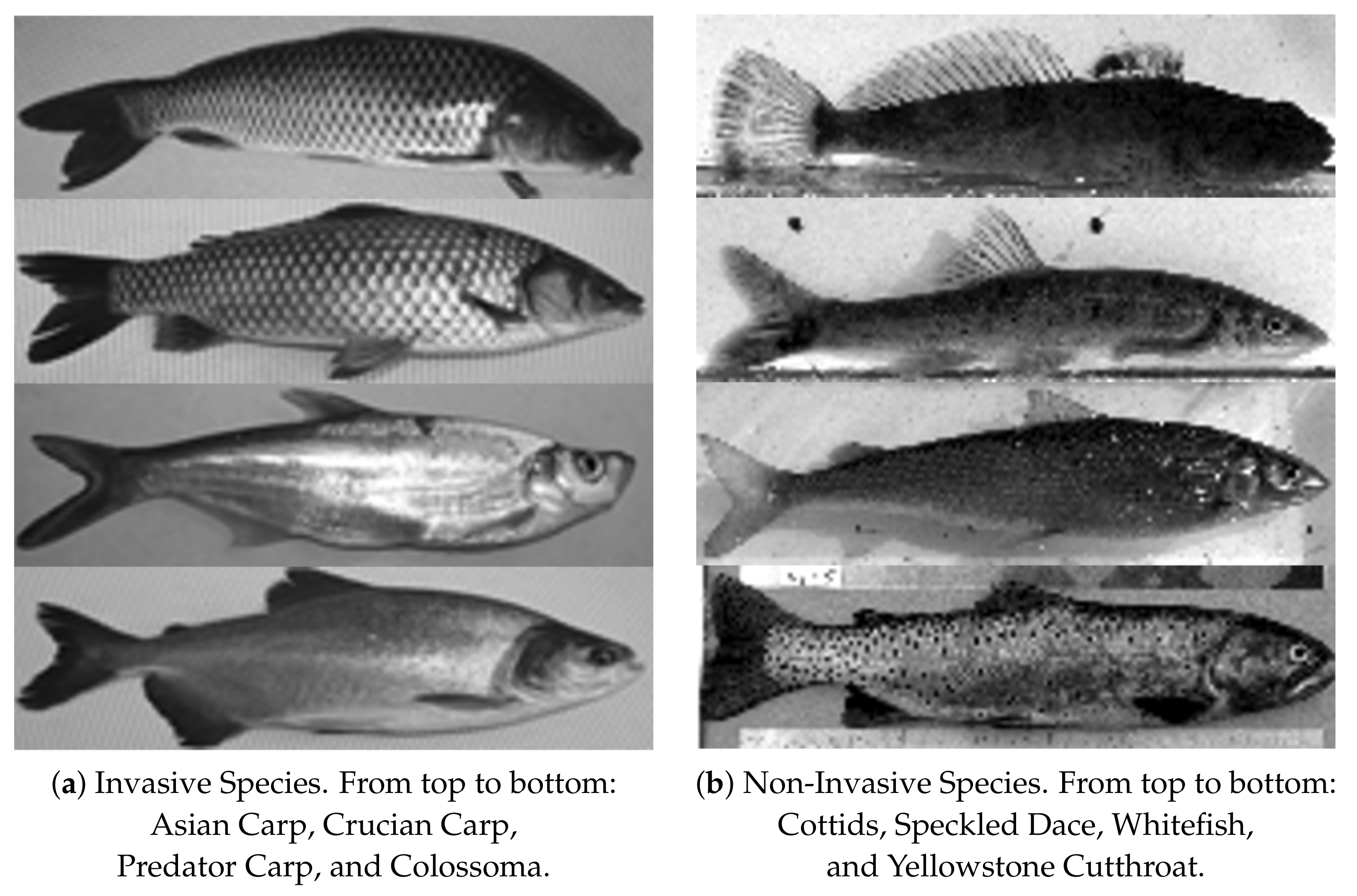

2.5. Fish Species Recognition

3. Results

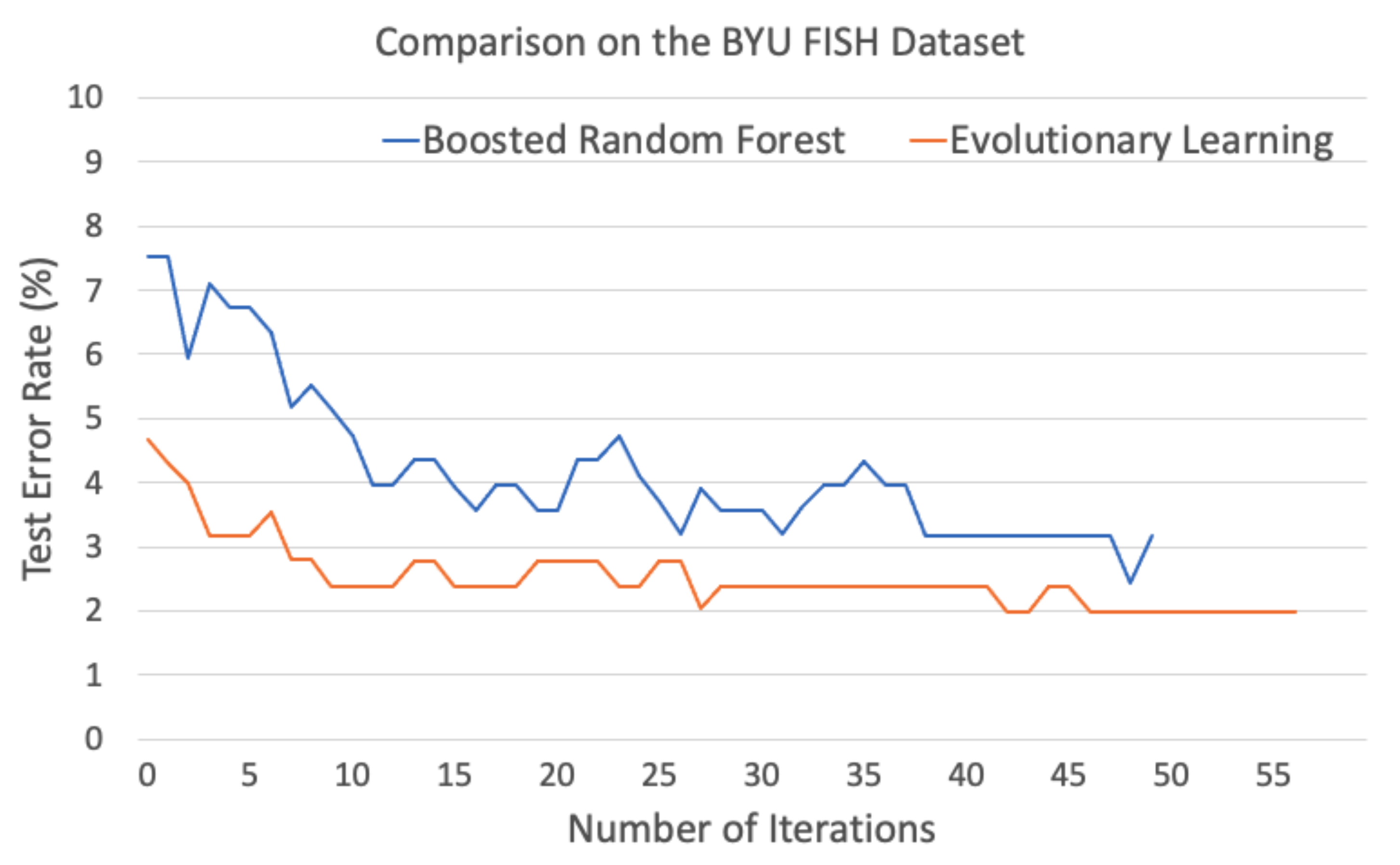

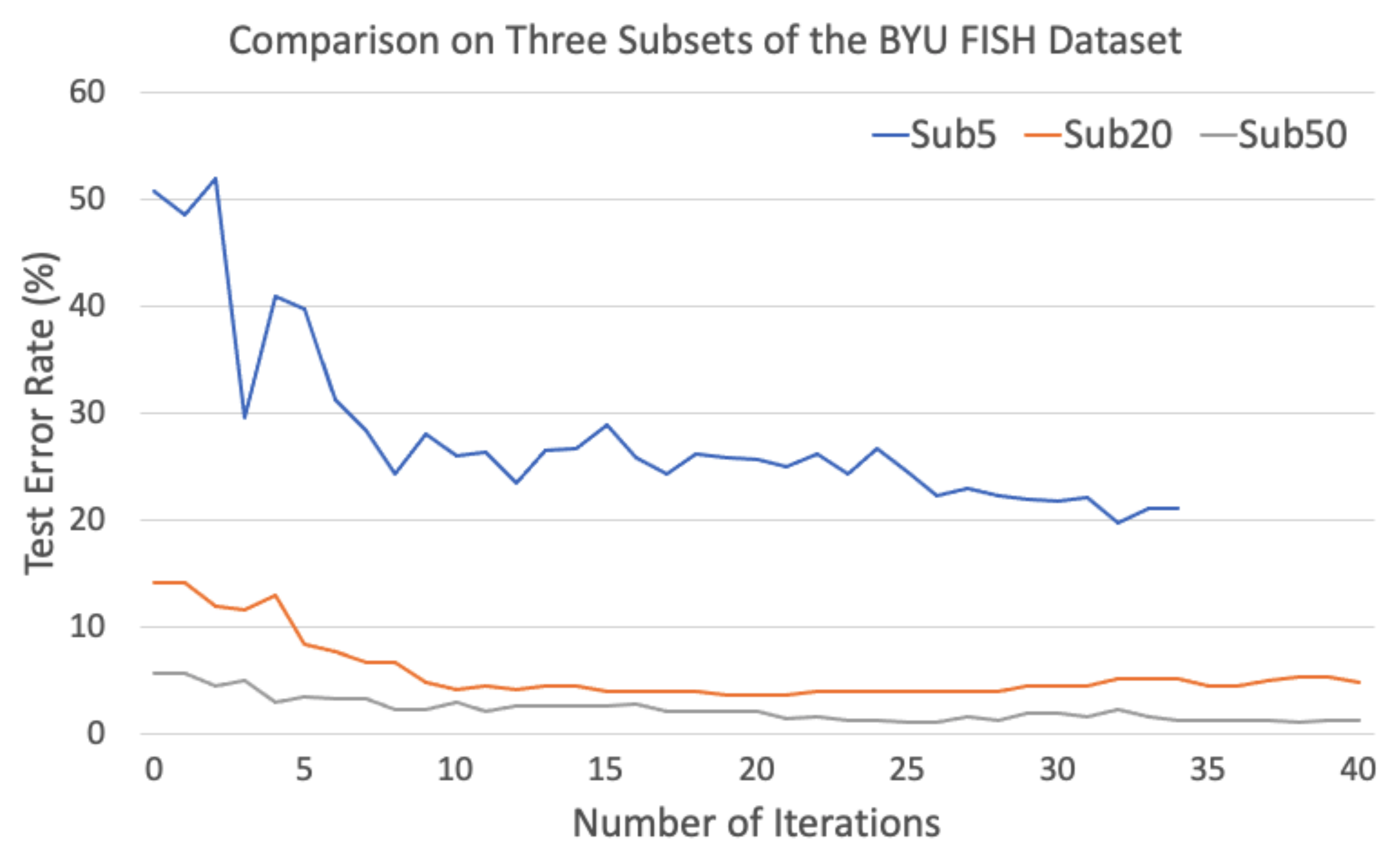

3.1. Error Rate

3.2. Population and Training Data

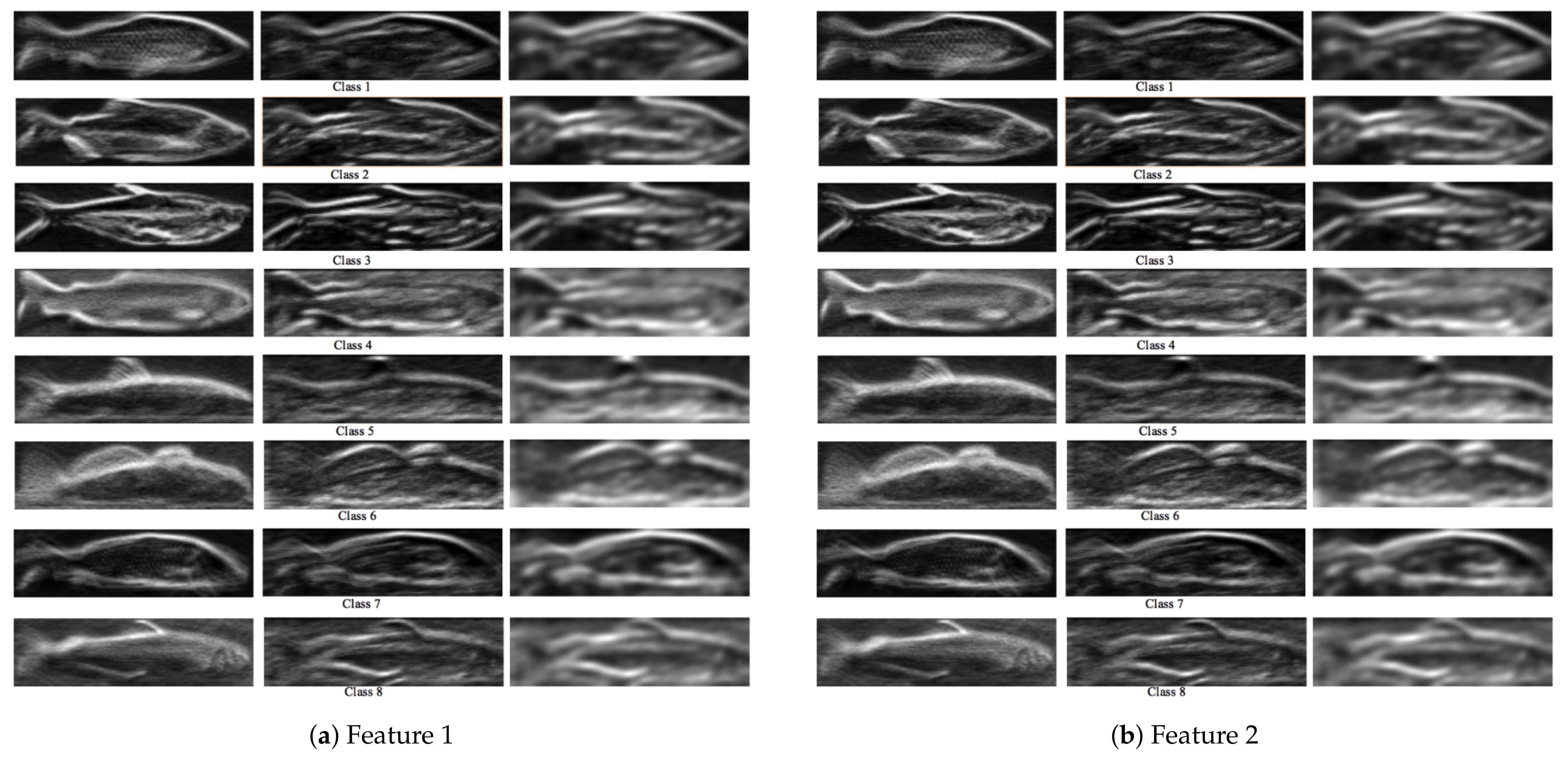

3.3. Visualization of Features

4. Conclusions

Author Contributions

Funding

Conflicts of Interest

| Image Transform | Number of Parameters |
|---|---|
| Gabor | 6 |
| Gaussian | 1 |
| Laplacian | 1 |
| Median Blur | 1 |
| Sobel | 4 |
| Gradient | 1 |
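The table of image transforms and their parameter counts can be captured in a small lookup structure. The following is a minimal Python sketch, not the authors' implementation: it assumes that a feature is built by chaining several of the listed transforms, and the names `TRANSFORM_PARAMS`, `random_chain`, and `chain_param_count` are hypothetical helpers introduced only for illustration. The parameter counts themselves are copied from the table.

```python
import random

# Parameter counts copied from the table of image transforms.
TRANSFORM_PARAMS = {
    "Gabor": 6,
    "Gaussian": 1,
    "Laplacian": 1,
    "Median Blur": 1,
    "Sobel": 4,
    "Gradient": 1,
}


def random_chain(length, rng):
    """Pick `length` transforms (repeats allowed) to form one candidate chain.

    Assumption: a candidate feature is an ordered chain of transforms.
    """
    names = list(TRANSFORM_PARAMS)
    return [rng.choice(names) for _ in range(length)]


def chain_param_count(chain):
    """Total number of tunable parameters across every transform in the chain."""
    return sum(TRANSFORM_PARAMS[name] for name in chain)


if __name__ == "__main__":
    rng = random.Random(0)  # fixed seed so repeated runs pick the same chain
    chain = random_chain(3, rng)
    print(chain, chain_param_count(chain))
```

Under these assumptions, the parameter count of a chain is the size of the search space dimension that any tuning procedure would have to cover for that chain, so a chain containing Gabor or Sobel is more expensive to tune than one built only from single-parameter transforms.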

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Chai, J.; Lee, D.-J.; Tippetts, B.; Lillywhite, K. Implementation of an Award-Winning Invasive Fish Recognition and Separation System. Electronics 2021, 10, 2182. https://doi.org/10.3390/electronics10172182
