Secure Mobile Edge Server Placement Using Multi-Agent Reinforcement Learning

Abstract

1. Introduction

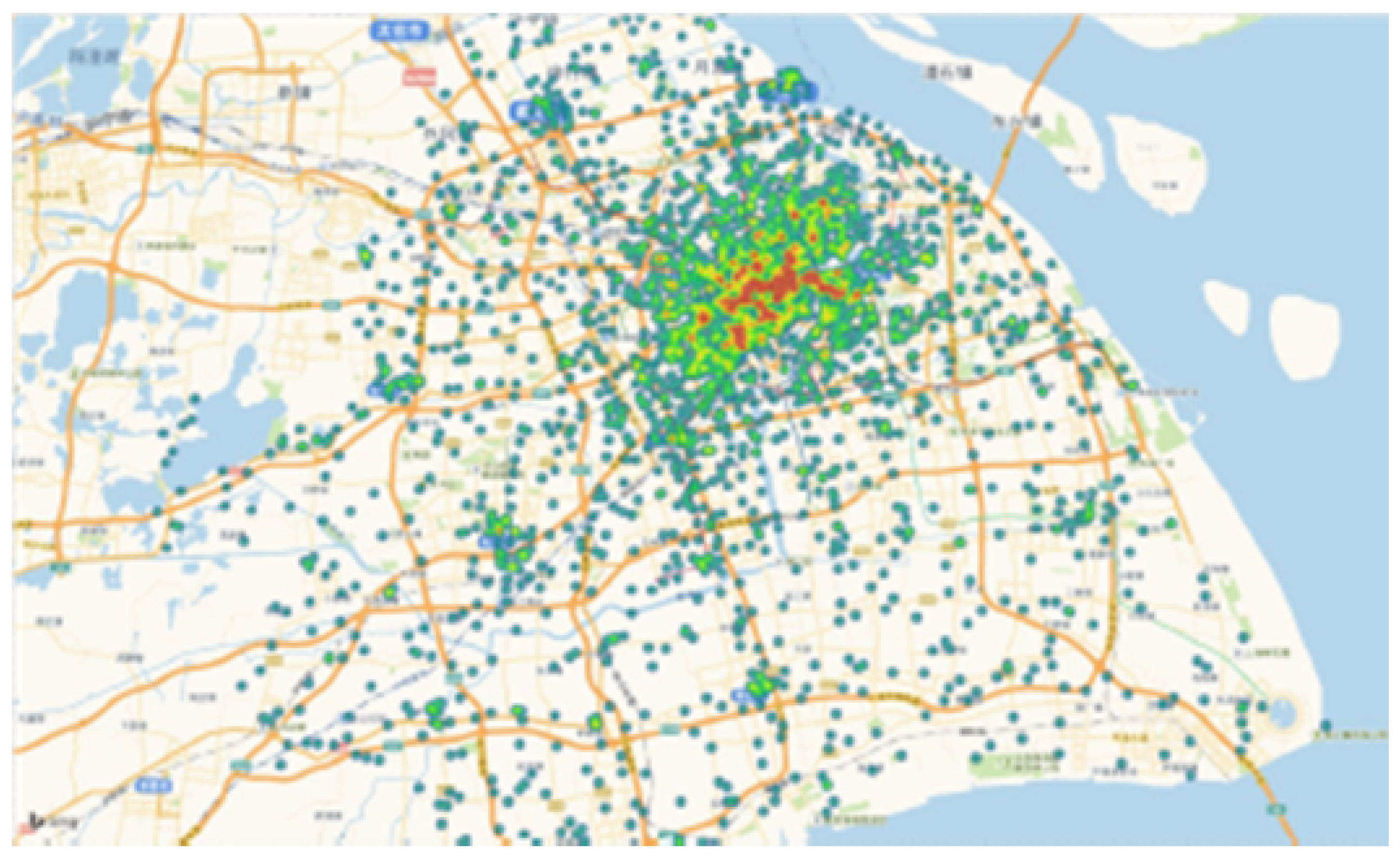

- The mobile edge server placement problem is formulated as a multi-objective optimization problem, which is then solved using a multi-agent RL approach. The objectives of the proposed solution are to reduce access delay and to balance the workload of the edge servers. Experimental results are obtained by applying the proposed solution to a base station dataset provided by Shanghai Telecom, in order to analyze the performance of the proposed technique;

- We discuss different scenarios in which the security of the proposed architecture can be breached if the data exchanged between RL agents is altered. We then discuss countermeasures to tackle the resulting security issues.

2. Literature Review

3. Reinforcement Learning

3.1. One-Agent RL

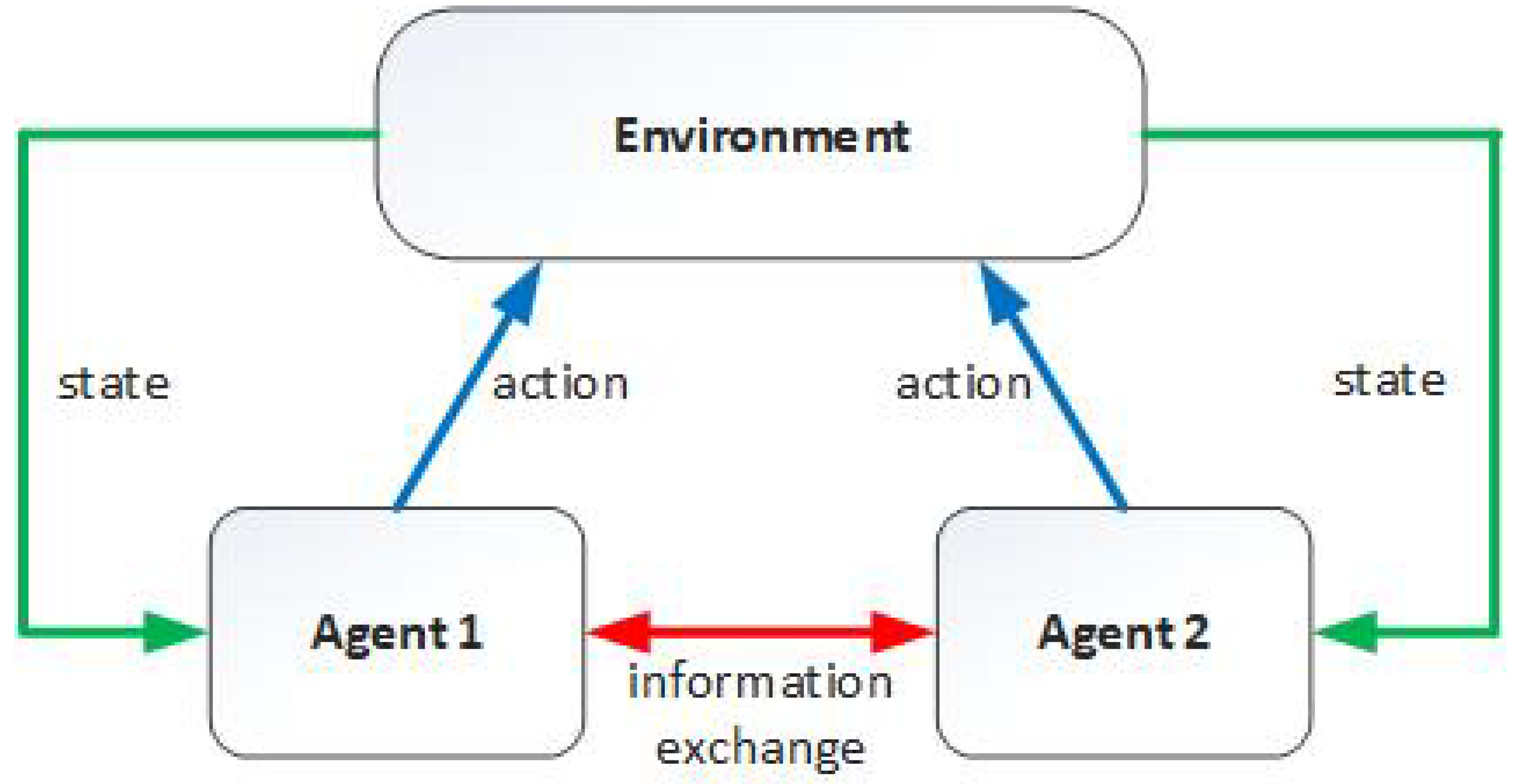

3.2. Multi-Agent RL

3.2.1. Independent Agents

3.2.2. Indirect-Coordinating Agents

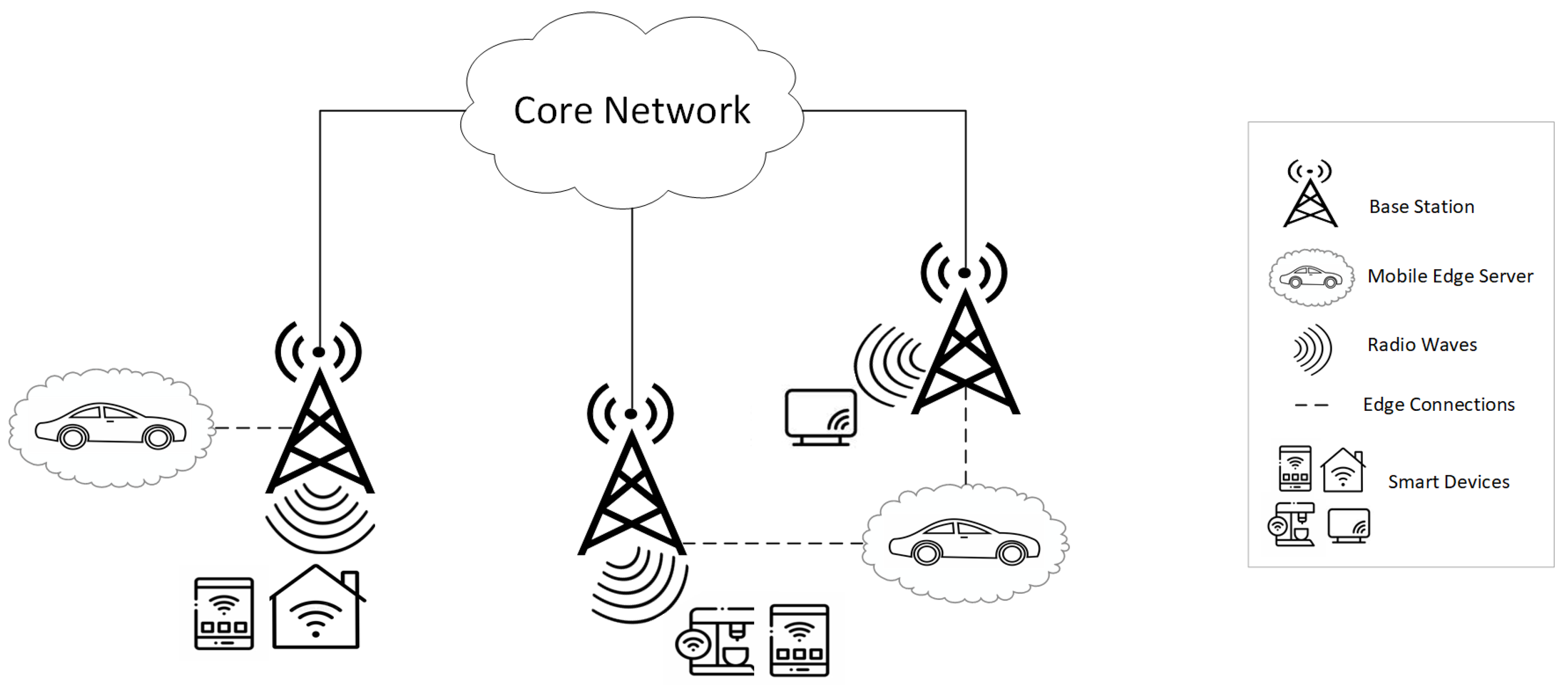

4. Multi-Objective Problem Formulation

- A mobile edge server can offload processing and storage requests from more than one base station;

- A base station can offload processing and storage requests to one or more mobile edge servers. If a base station offloads requests to more than one mobile edge server, the workload of incoming mobile call and flow requests that edge devices send to that base station is shared among the connected mobile edge servers;

- A mobile edge server is hosted at, and collocated with, a base station that already exists in the network infrastructure.

- Find mobile edge server positions such that network access delay is minimized; and,

- Find the edge connections between base stations and mobile edge servers for which the mobile edge servers' workload is balanced. Each connection is described by an indicator function that equals 1 if a base station is connected to the s-th mobile edge server and 0 otherwise.
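The two objectives can be made concrete by scoring a candidate placement. The sketch below rests on several assumptions that do not come from the paper's own formulas. Access delay is approximated by the Euclidean distance between a base station and each server it connects to. A base station's workload is split equally among its connected servers. Workload balance is measured by the population standard deviation of server workloads. Finally, the two objectives are combined linearly using the delay weight of 0.5 from the parameter table. The function names are hypothetical.

```python
import math
import statistics


def server_workloads(x, bs_load):
    """Workload per server; x[b][s] == 1 if base station b is connected to server s.

    Assumption: a base station's load is split equally among its connected servers.
    """
    loads = [0.0] * len(x[0])
    for b, row in enumerate(x):
        n_links = sum(row)
        if n_links == 0:
            raise ValueError(f"base station {b} is not connected to any server")
        for s, connected in enumerate(row):
            if connected:
                loads[s] += bs_load[b] / n_links
    return loads


def mean_access_delay(x, bs_xy, server_xy):
    """Mean distance over all base-station/server links (distance is a delay proxy)."""
    dists = [
        math.dist(bs_xy[b], server_xy[s])
        for b, row in enumerate(x)
        for s, connected in enumerate(row)
        if connected
    ]
    return sum(dists) / len(dists)


def placement_cost(x, bs_xy, server_xy, bs_load, w_delay=0.5):
    """Hypothetical weighted cost; lower means less delay and a more balanced load."""
    imbalance = statistics.pstdev(server_workloads(x, bs_load))
    return w_delay * mean_access_delay(x, bs_xy, server_xy) + (1 - w_delay) * imbalance


# Three base stations on a line; the two servers are co-located with stations 0 and 2.
bs_xy = [(0, 0), (2, 0), (4, 0)]
server_xy = [(0, 0), (4, 0)]
x = [[1, 0], [1, 1], [0, 1]]  # the middle station is shared by both servers
print(placement_cost(x, bs_xy, server_xy, bs_load=[10, 20, 30]))  # 5.5
```

Delay and workload have different units, so a practical cost would normalize both terms before weighting them. The raw sum above only illustrates the trade-off.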

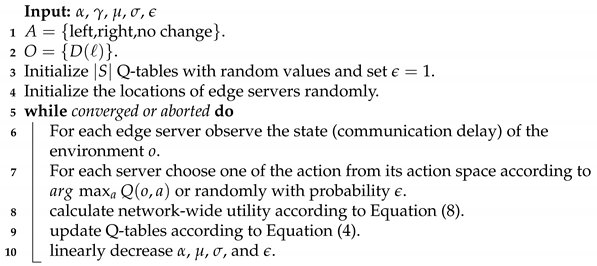

5. Proposed Solution

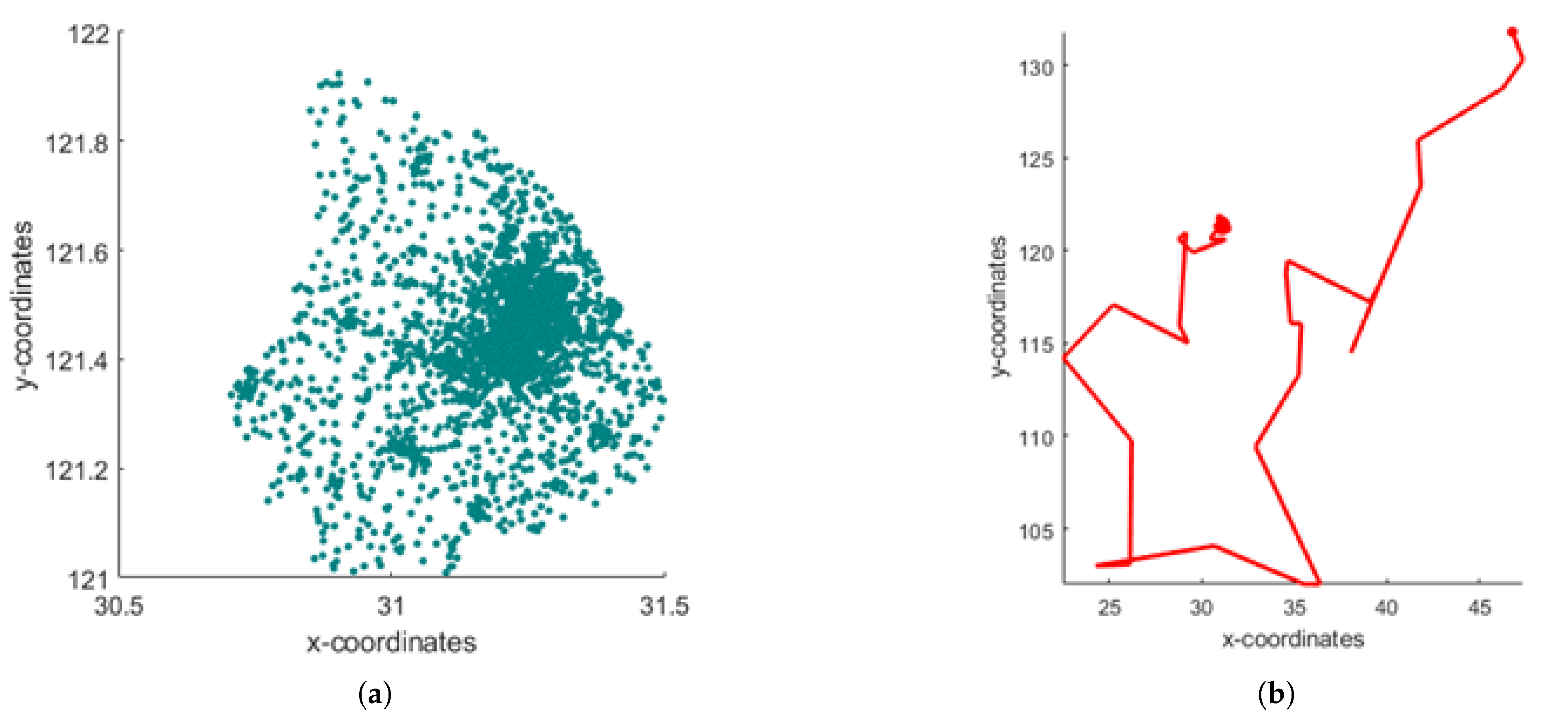

5.1. Environment Design

- Base stations nearest to each other are connected by a line, allowing the mobile edge servers to move between neighboring base station locations in search of an optimal placement strategy;

- The search space of mobile edge server placement becomes scalable: even if the number of base stations in the MEC network increases, the solution space does not explode, because each mobile edge server only moves between neighboring base station locations.
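A minimal sketch of this environment graph follows. The text does not state how many neighbors each base station is linked to, so the sketch assumes each station is joined to its k nearest stations by Euclidean distance on planar coordinates. The edges are then made undirected. The helper names are hypothetical. The set of moves available to a server depends only on the local neighborhood of its current station and not on the total number of base stations, which is the scalability property claimed above.

```python
import math


def build_neighbor_graph(coords, k=3):
    """Link each base station to its k nearest stations; edges are undirected.

    Because edges are symmetrized, a station's degree can exceed k, but it depends
    only on local geometry, not on how many stations exist overall.
    """
    adj = {i: set() for i in range(len(coords))}
    for i, ci in enumerate(coords):
        others = sorted(
            (j for j in range(len(coords)) if j != i),
            key=lambda j: math.dist(ci, coords[j]),
        )
        for j in others[:k]:
            adj[i].add(j)
            adj[j].add(i)
    return adj


def candidate_moves(adj, position):
    """A server at `position` may stay put or hop to an adjacent base station."""
    return [position] + sorted(adj[position])


coords = [(0, 0), (1, 0), (2, 0), (10, 0)]
graph = build_neighbor_graph(coords, k=1)
print(candidate_moves(graph, 1))  # [1, 0, 2]
```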

5.2. RL Agent Design

5.2.1. Action Space

Algorithm 1: Multi-agent RL assisted mobile edge computing.

5.2.2. State Space

5.2.3. Reward

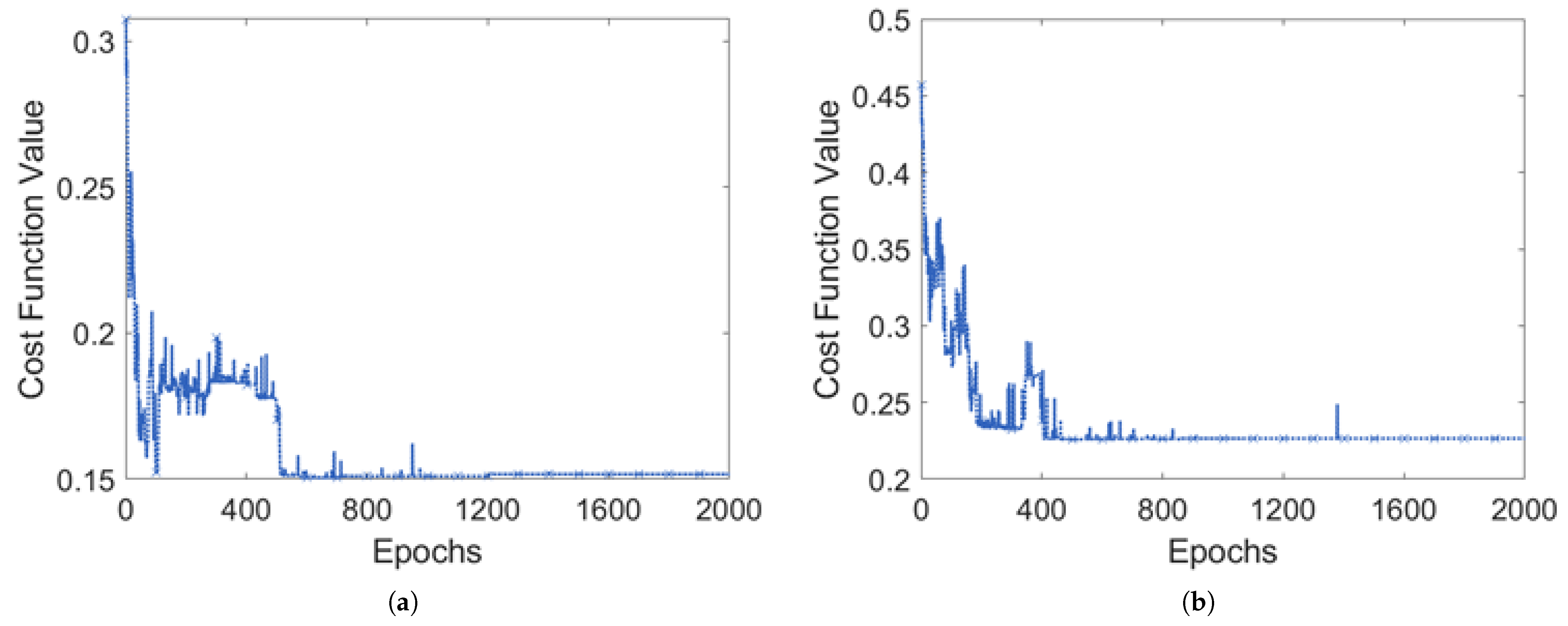

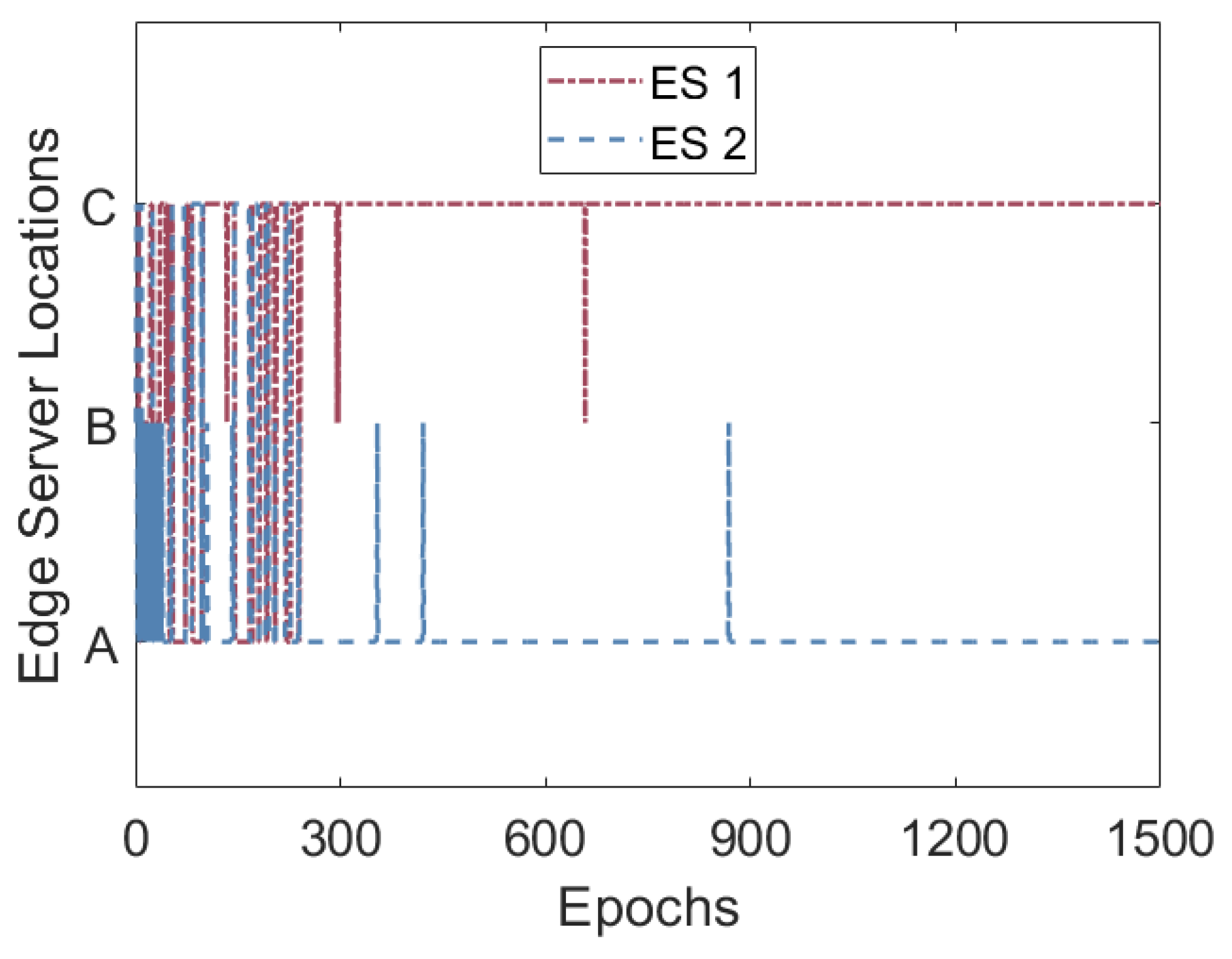

6. Results

- Does the proposed solution generalize across the different random initializations used in the experiments?

- Is the proposed solution effective in finding the best placement for mobile edge servers when different numbers of base stations are present in the network?

- Is the proposed solution effective in finding the best placement for mobile edge servers when the number of mobile edge servers present in the network is varied?

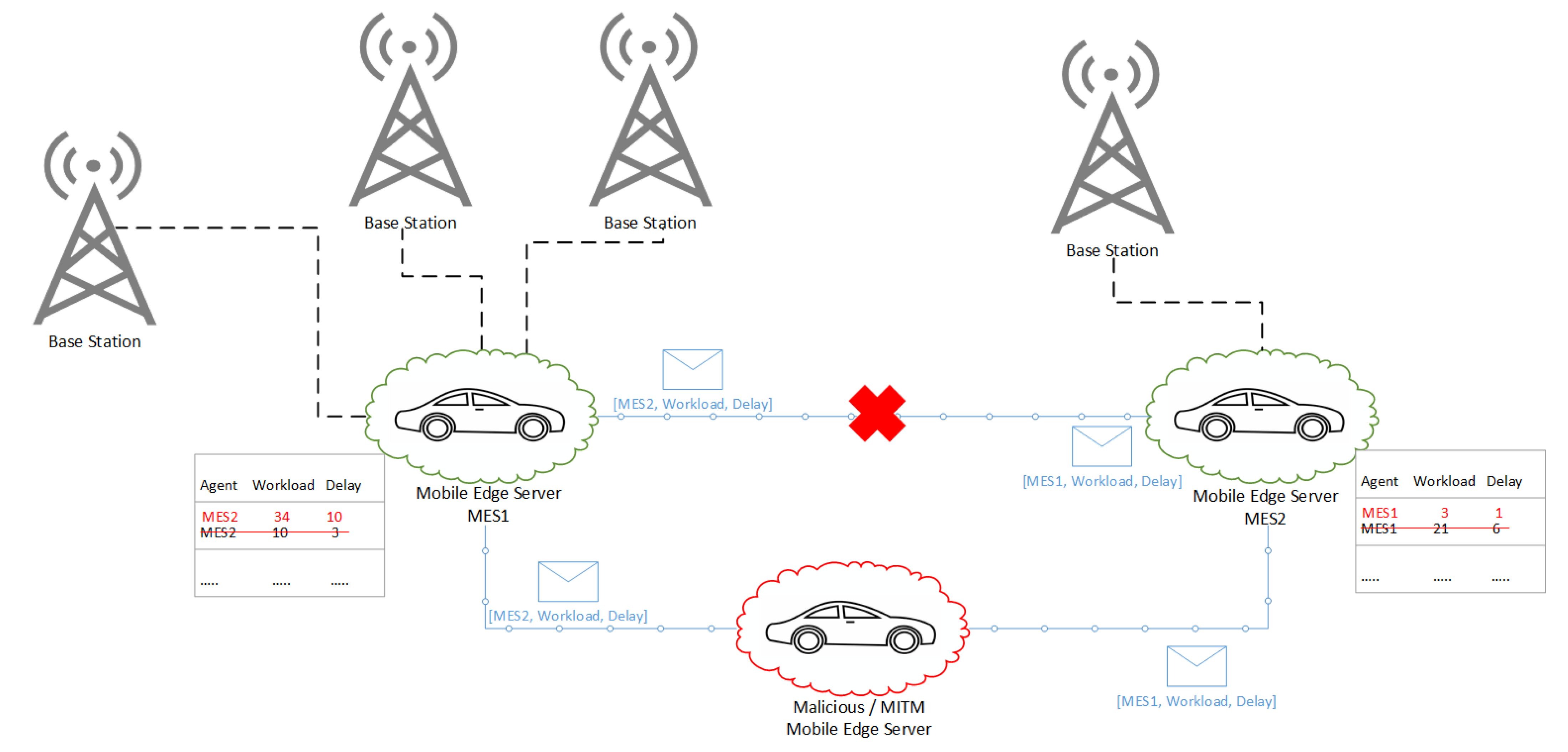

7. Security Perspective

7.1. Scenarios

7.2. Countermeasures

7.2.1. Countermeasure to First Security Scenario

- Stage 1 Mutual Authentication Phase: We assume that both parties are already registered with, and trust, the same certificate authority (CA), and that each possesses its own public key, its own private key, its own implicit certificate, and the CA's public key. The base station and the mobile edge server perform a handshake in which they exchange their digital certificates to verify each other's authenticity.

- Stage 2 Key Agreement: After both parties are authenticated, they agree on a shared master key. Our protocol uses the elliptic curve Diffie–Hellman (ECDH) protocol. ECDH suits constrained environments because elliptic curve keys are much shorter than finite-field Diffie–Hellman keys of comparable security. Elliptic curve cryptosystems implement the Diffie–Hellman key exchange scheme [26] as follows:

- A particular rational base point $P$ on an agreed elliptic curve is published in the public domain.

- The base station and the mobile edge server choose random integers $k_{BS}$ and $k_{ES}$, respectively, which they use as private keys.

- The base station computes $k_{BS}P$.

- The mobile edge server computes $k_{ES}P$, and then both entities exchange these values over an insecure network.

- Using the values received from each other and their own private keys, the base station computes $k_{BS}(k_{ES}P)$ and the mobile edge server computes $k_{ES}(k_{BS}P)$. Both are equal to $k_{BS}k_{ES}P$, which serves as the shared master key that only the base station and the mobile edge server possess.

- Stage 3 Key Derivation: To reduce the computational cost for both parties, we assume that mutual authentication is performed only periodically. In each session, only a session secret key is derived from the shared master key, and it is used by an integrity protection algorithm such as a message authentication code (MAC). The protocol should use a key derivation function (KDF) that ensures randomness. We follow the KDF recommendations in [27], which incorporate randomness through random numbers (nonces) and key expansion. Each peer computes the session key PK from the shared master key $MK$ and a nonce via the chosen KDF, as $PK = \mathrm{KDF}(MK, \mathrm{Nonce})$.

- Stage 4 Message Exchange: The exchanged data, such as the workload W(ℓ) and the delay D(ℓ), must be protected against unauthorized modification, so HMAC is used to ensure their integrity. The base station calculates $D = \mathrm{HMAC}_{PK}\big(W(\ell), D(\ell)\big)$ and sends W(ℓ), D(ℓ), and D to the mobile edge server. The mobile edge server uses the agreed session key PK to recompute the HMAC of W(ℓ) and D(ℓ), and it verifies their integrity by comparing the result with D.
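Stages 2 to 4 can be sketched end to end in pure Python. The sketch relies on assumptions that the text does not fix:

- The curve is secp256k1. The source names no curve.
- The master key is the x-coordinate of the shared point.
- The KDF is HKDF-SHA256 from RFC 5869, substituted for the scheme recommended in [27], with the nonce used as the HKDF salt.
- W(ℓ) and D(ℓ) are encoded as two big-endian IEEE 754 doubles before HMAC-SHA256 is applied.

All function names are hypothetical. The point arithmetic is textbook double-and-add, which is not constant time and does not validate peer public keys. A deployment would use a vetted cryptographic library instead.

```python
import hashlib
import hmac
import secrets
import struct

# Stage 2 (ECDH). Assumed curve: secp256k1, y^2 = x^3 + 7 (mod FIELD_PRIME).
FIELD_PRIME = 2**256 - 2**32 - 977
CURVE_A, CURVE_B = 0, 7
BASE_POINT = (  # the published base point P
    0x79BE667E_F9DCBBAC_55A06295_CE870B07_029BFCDB_2DCE28D9_59F2815B_16F81798,
    0x483ADA77_26A3C465_5DA4FBFC_0E1108A8_FD17B448_A6855419_9C47D08F_FB10D4B8,
)
ORDER = 0xFFFFFFFF_FFFFFFFF_FFFFFFFF_FFFFFFFE_BAAEDCE6_AF48A03B_BFD25E8C_D0364141


def point_add(p1, p2):
    """Add two affine points; None represents the point at infinity."""
    if p1 is None:
        return p2
    if p2 is None:
        return p1
    (x1, y1), (x2, y2) = p1, p2
    if x1 == x2 and (y1 + y2) % FIELD_PRIME == 0:
        return None  # Q + (-Q) is the point at infinity
    if p1 == p2:  # tangent slope for doubling
        lam = (3 * x1 * x1 + CURVE_A) * pow(2 * y1, -1, FIELD_PRIME)
    else:  # chord slope for two distinct points
        lam = (y2 - y1) * pow((x2 - x1) % FIELD_PRIME, -1, FIELD_PRIME)
    lam %= FIELD_PRIME
    x3 = (lam * lam - x1 - x2) % FIELD_PRIME
    return x3, (lam * (x1 - x3) - y1) % FIELD_PRIME


def scalar_mult(k, point):
    """Compute k * point by double-and-add (not constant time)."""
    result, addend = None, point
    while k:
        if k & 1:
            result = point_add(result, addend)
        addend = point_add(addend, addend)
        k >>= 1
    return result


# Stage 3 (key derivation), HKDF-SHA256 as in RFC 5869.
def hkdf_sha256(master_key, nonce, info=b"mec-session", length=32):
    prk = hmac.new(nonce, master_key, hashlib.sha256).digest()  # extract: salt = nonce
    okm, block, counter = b"", b"", 1
    while len(okm) < length:  # expand: T(i) = HMAC(PRK, T(i-1) | info | i)
        block = hmac.new(prk, block + info + bytes([counter]), hashlib.sha256).digest()
        okm += block
        counter += 1
    return okm[:length]


# Stage 4 (message exchange): tag and verify the workload/delay report.
def tag_report(session_key, workload, delay):
    return hmac.new(session_key, struct.pack(">dd", workload, delay), hashlib.sha256).digest()


def verify_report(session_key, workload, delay, tag):
    return hmac.compare_digest(tag_report(session_key, workload, delay), tag)


def run_handshake():
    k_bs = secrets.randbelow(ORDER - 1) + 1  # base station private key
    k_es = secrets.randbelow(ORDER - 1) + 1  # mobile edge server private key
    pub_bs = scalar_mult(k_bs, BASE_POINT)   # sent over the insecure network
    pub_es = scalar_mult(k_es, BASE_POINT)
    shared_bs = scalar_mult(k_bs, pub_es)    # k_bs * (k_es * P)
    shared_es = scalar_mult(k_es, pub_bs)    # k_es * (k_bs * P)
    nonce = secrets.token_bytes(16)          # assumed to be exchanged per session
    key_bs = hkdf_sha256(shared_bs[0].to_bytes(32, "big"), nonce)
    key_es = hkdf_sha256(shared_es[0].to_bytes(32, "big"), nonce)
    return k_bs, k_es, shared_bs, shared_es, key_bs, key_es
```

Calling `run_handshake()` should yield identical session keys on both sides. A tag produced with `tag_report(key_bs, 42.0, 3.5)` should then pass `verify_report(key_es, 42.0, 3.5, tag)`, and the same check should fail if the workload is altered, for example to 99.0.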

7.2.2. Countermeasure to Second Security Scenario

7.2.3. Countermeasure to Third Security Scenario

7.2.4. Countermeasure to Fourth Security Scenario

8. Conclusions and Future Work

Author Contributions

Funding

Conflicts of Interest

References

1. Lee, J.; Kim, D.; Lee, J. Zone-based multi-access edge computing scheme for user device mobility management. Appl. Sci. 2019, 9, 2308.

2. Bilal, K.; Khalid, O.; Erbad, A.; Khan, S.U. Potentials, trends, and prospects in edge technologies: Fog, cloudlet, mobile edge, and micro data centers. Comput. Netw. 2018, 130, 94–120.

3. Porambage, P.; Okwuibe, J.; Liyanage, M.; Ylianttila, M.; Taleb, T. Survey on multi-access edge computing for internet of things realization. IEEE Commun. Surv. Tutor. 2018, 20, 2961–2991.

4. Lähderanta, T.; Leppänen, T.; Ruha, L.; Lovén, L.; Harjula, E.; Ylianttila, M.; Riekki, J.; Sillanpää, M.J. Edge server placement with capacitated location allocation. arXiv 2019, arXiv:1907.07349.

5. Kasi, S.K.; Kasi, M.K.; Ali, K.; Raza, M.; Afzal, H.; Lasebae, A.; Naeem, B.; ul Islam, S.; Rodrigues, J.J. Heuristic edge server placement in Industrial Internet of Things and cellular networks. IEEE Internet Things J. 2020, 8, 10308–10317.

6. Xu, X.; Shen, B.; Yin, X.; Khosravi, M.R.; Wu, H.; Qi, L.; Wan, S. Edge Server Quantification and Placement for Offloading Social Media Services in Industrial Cognitive IoV. IEEE Trans. Ind. Inform. 2020, 17, 2910–2918.

7. Yin, H.; Zhang, X.; Liu, H.H.; Luo, Y.; Tian, C.; Zhao, S.; Li, F. Edge provisioning with flexible server placement. IEEE Trans. Parallel Distrib. Syst. 2016, 28, 1031–1045.

8. Guo, Y.; Wang, S.; Zhou, A.; Xu, J.; Yuan, J.; Hsu, C.H. User allocation-aware edge cloud placement in mobile edge computing. Softw. Pract. Exp. 2019, 50, 489–502.

9. Jia, M.; Cao, J.; Liang, W. Optimal cloudlet placement and user to cloudlet allocation in wireless metropolitan area networks. IEEE Trans. Cloud Comput. 2015, 5, 725–737.

10. Wang, S.; Zhao, Y.; Xu, J.; Yuan, J.; Hsu, C.H. Edge server placement in mobile edge computing. J. Parallel Distrib. Comput. 2019, 127, 160–168.

11. Bouet, M.; Conan, V. Mobile edge computing resources optimization: A geo-clustering approach. IEEE Trans. Netw. Serv. Manag. 2018, 15, 787–796.

12. Zeng, D.; Gu, L.; Pan, S.; Cai, J.; Guo, S. Resource Management at the Network Edge: A Deep Reinforcement Learning Approach. IEEE Netw. 2019, 33, 26–33.

13. Wang, J.; Zhao, L.; Liu, J.; Kato, N. Smart Resource Allocation for Mobile Edge Computing: A Deep Reinforcement Learning Approach. IEEE Trans. Emerg. Top. Comput. 2019, 6750, 1.

14. Huang, L.; Bi, S.; Zhang, Y.J. Deep Reinforcement Learning for Online Computation Offloading in Wireless Powered Mobile-Edge Computing Networks. IEEE Trans. Mob. Comput. 2019, 1233, 1.

15. Zhai, Y.; Bao, T.; Zhu, L.; Shen, M.; Du, X.; Guizani, M. Toward Reinforcement-Learning-Based Service Deployment of 5G Mobile Edge Computing with Request-Aware Scheduling. IEEE Wirel. Commun. 2020, 27, 84–91.

16. Li, B.; Hou, P.; Wu, H.; Hou, F. Optimal edge server deployment and allocation strategy in 5G ultra-dense networking environments. Pervasive Mob. Comput. 2021, 72, 101312.

17. Zeng, F.; Ren, Y.; Deng, X.; Li, W. Cost-effective edge server placement in wireless metropolitan area networks. Sensors 2019, 19, 32.

18. Cao, K.; Li, L.; Cui, Y.; Wei, T.; Hu, S. Exploring placement of heterogeneous edge servers for response time minimization in mobile edge-cloud computing. IEEE Trans. Ind. Inform. 2020, 17, 494–503.

19. Watkins, C.J.; Dayan, P. Q-learning. Mach. Learn. 1992, 8, 279–292.

20. Littman, M.L. Value-function reinforcement learning in Markov games. Cogn. Syst. Res. 2001, 2, 55–66.

21. Busoniu, L.; Babuska, R.; De Schutter, B. Multi-agent reinforcement learning: A survey. In Proceedings of the 2006 9th International Conference on Control, Automation, Robotics and Vision, Singapore, 5–8 December 2006; pp. 1–6.

22. Matignon, L.; Laurent, G.J.; Le Fort-Piat, N. Hysteretic q-learning: An algorithm for decentralized reinforcement learning in cooperative multi-agent teams. In Proceedings of the 2007 IEEE/RSJ International Conference on Intelligent Robots and Systems, San Diego, CA, USA, 29 October–2 November 2007; pp. 64–69.

23. Wang, S.; Guo, Y.; Zhang, N.; Yang, P.; Zhou, A.; Shen, X.S. Delay-aware Microservice Coordination in Mobile Edge Computing: A Reinforcement Learning Approach. IEEE Trans. Mob. Comput. 2019, 20, 939–951.

24. Xu, J.; Wang, S.; Bhargava, B.K.; Yang, F. A blockchain-enabled trustless crowd-intelligence ecosystem on mobile edge computing. IEEE Trans. Ind. Inform. 2019, 15, 3538–3547.

25. Hajiyev, A. Optimal Choice of Server’s Number and the Various Control Rules for Systems with Moving Servers. In International Conference on Management Science and Engineering Management; Springer: Berlin/Heidelberg, Germany, 2021; pp. 379–399.

26. Haakegaard, R.; Lang, J. The Elliptic Curve Diffie-Hellman (Ecdh). 2015. Available online: https://koclab.cs.ucsb.edu/teaching/ecc/project/2015Projects/Haakegaard+Lang.pdf (accessed on 10 June 2020).

27. Charan, K.S.; Nakkina, H.V.; Chandavarkar, B.R. Generation of Symmetric Key Using Randomness of Hash Function. In Proceedings of the 11th International Conference on Computing, Communication and Networking Technologies (ICCCNT), Kharagpur, India, 1–3 July 2020; pp. 1–7.

| Parameter | Description | Value |
|---|---|---|
| BS | Number of base stations (BSs) sampled from the Shanghai Telecom dataset | 120, 240, 360 |
| ES | Number of mobile edge servers controlled by RL agents | 20, 30, 40 |
| | Learning rate | 0.35 |
| | Learning rate for hysteretic Q-learning | 0.30 |
| | Weight of delay relative to workload in the utility function | 0.5 |
| | Discount factor | 0.9 |
| | Random exploration rate | 0.15 |
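The learning parameters above fit a tabular hysteretic Q-learning agent [22]. In that method, one learning rate is applied when the temporal-difference (TD) error is non-negative and a smaller one when it is negative, so that an agent is not over-penalized by teammates' exploratory moves. The following is a minimal sketch of such an agent. It assumes that the 0.35 rate applies to non-negative TD errors and that the 0.30 hysteretic rate applies to negative ones. It also assumes epsilon-greedy exploration with the listed 0.15 rate. Neither assumption is confirmed by the source. The class name and action labels are hypothetical.

```python
import random
from collections import defaultdict

# Values from the parameter table; which TD-error sign each rate applies to is assumed.
LEARNING_RATE = 0.35    # alpha: used when the TD error is non-negative
HYSTERETIC_RATE = 0.30  # beta: used when the TD error is negative
DISCOUNT = 0.9          # gamma
EXPLORATION = 0.15      # epsilon for epsilon-greedy action selection


class HystereticQAgent:
    """Tabular hysteretic Q-learning agent, e.g. one per mobile edge server."""

    def __init__(self, actions, alpha=LEARNING_RATE, beta=HYSTERETIC_RATE,
                 gamma=DISCOUNT, epsilon=EXPLORATION, seed=None):
        self.actions = list(actions)
        self.alpha, self.beta = alpha, beta
        self.gamma, self.epsilon = gamma, epsilon
        self.q = defaultdict(float)  # (state, action) -> value, defaulting to 0.0
        self.rng = random.Random(seed)

    def act(self, state):
        """Epsilon-greedy choice; ties go to the earliest action in self.actions."""
        if self.rng.random() < self.epsilon:
            return self.rng.choice(self.actions)
        return max(self.actions, key=lambda a: self.q[(state, a)])

    def update(self, state, action, reward, next_state):
        """Apply one hysteretic update and return the TD error."""
        best_next = max(self.q[(next_state, a)] for a in self.actions)
        td_error = reward + self.gamma * best_next - self.q[(state, action)]
        rate = self.alpha if td_error >= 0 else self.beta
        self.q[(state, action)] += rate * td_error
        return td_error


# Hypothetical actions: stay at the current base station or hop to one of two neighbors.
agent = HystereticQAgent(actions=["stay", "move_1", "move_2"], epsilon=0.0)
agent.update("s0", "move_1", reward=1.0, next_state="s1")
print(agent.q[("s0", "move_1")], agent.act("s0"))  # 0.35 move_1
```

Each agent updates only its own table from its own reward. The hysteresis, which reacts more slowly to bad outcomes than to good ones, is what lets independent learners coordinate indirectly.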


© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Kasi, M.K.; Abu Ghazalah, S.; Akram, R.N.; Sauveron, D. Secure Mobile Edge Server Placement Using Multi-Agent Reinforcement Learning. Electronics 2021, 10, 2098. https://doi.org/10.3390/electronics10172098


Chicago/Turabian Style: Kasi, Mumraiz Khan, Sarah Abu Ghazalah, Raja Naeem Akram, and Damien Sauveron. 2021. "Secure Mobile Edge Server Placement Using Multi-Agent Reinforcement Learning" Electronics 10, no. 17: 2098. https://doi.org/10.3390/electronics10172098
