Prospects of Robots in Assisted Living Environment

Abstract

1. Introduction

- Section 3 gives a general perspective on the research opportunities and challenges in the field of robots in assisted living environments (ALEs). It shows that combining such robots with emerging technologies opens a broad spectrum of research opportunities and challenges. This section also includes a subsection on the applicability of embedded systems to the combination of AI/ML and robots, motivated by the need to execute computation-intensive algorithms on the robot itself.

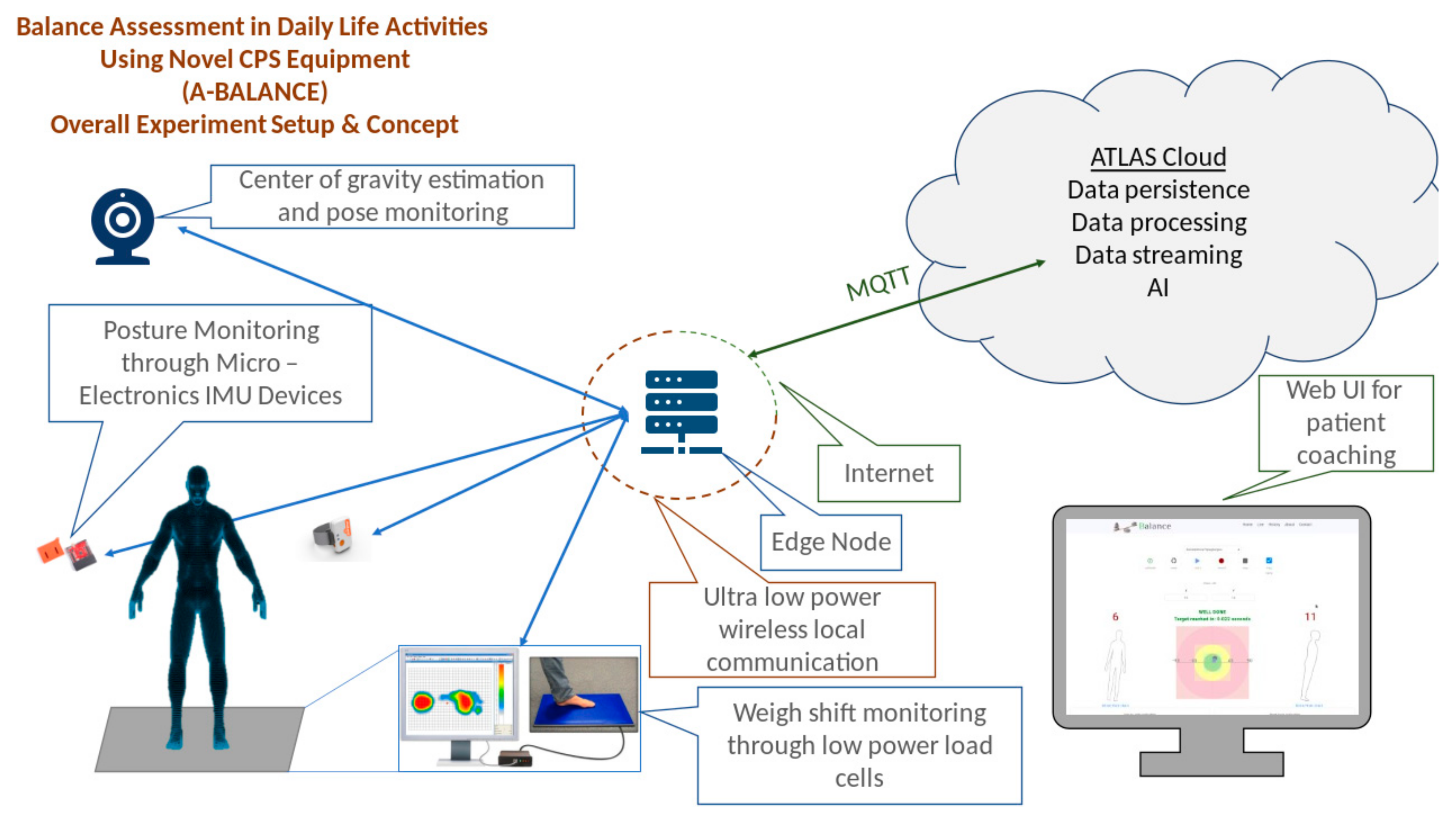

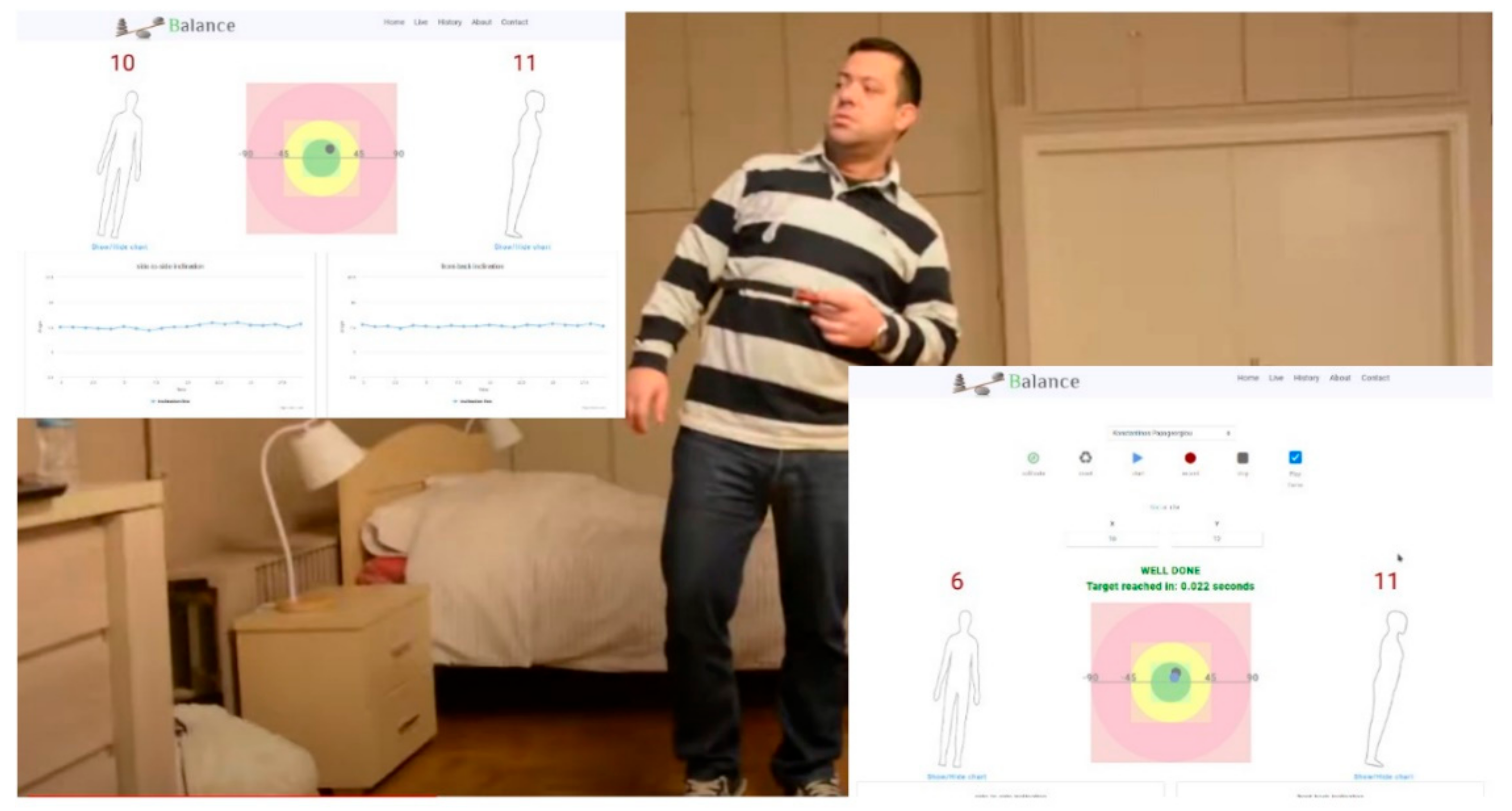

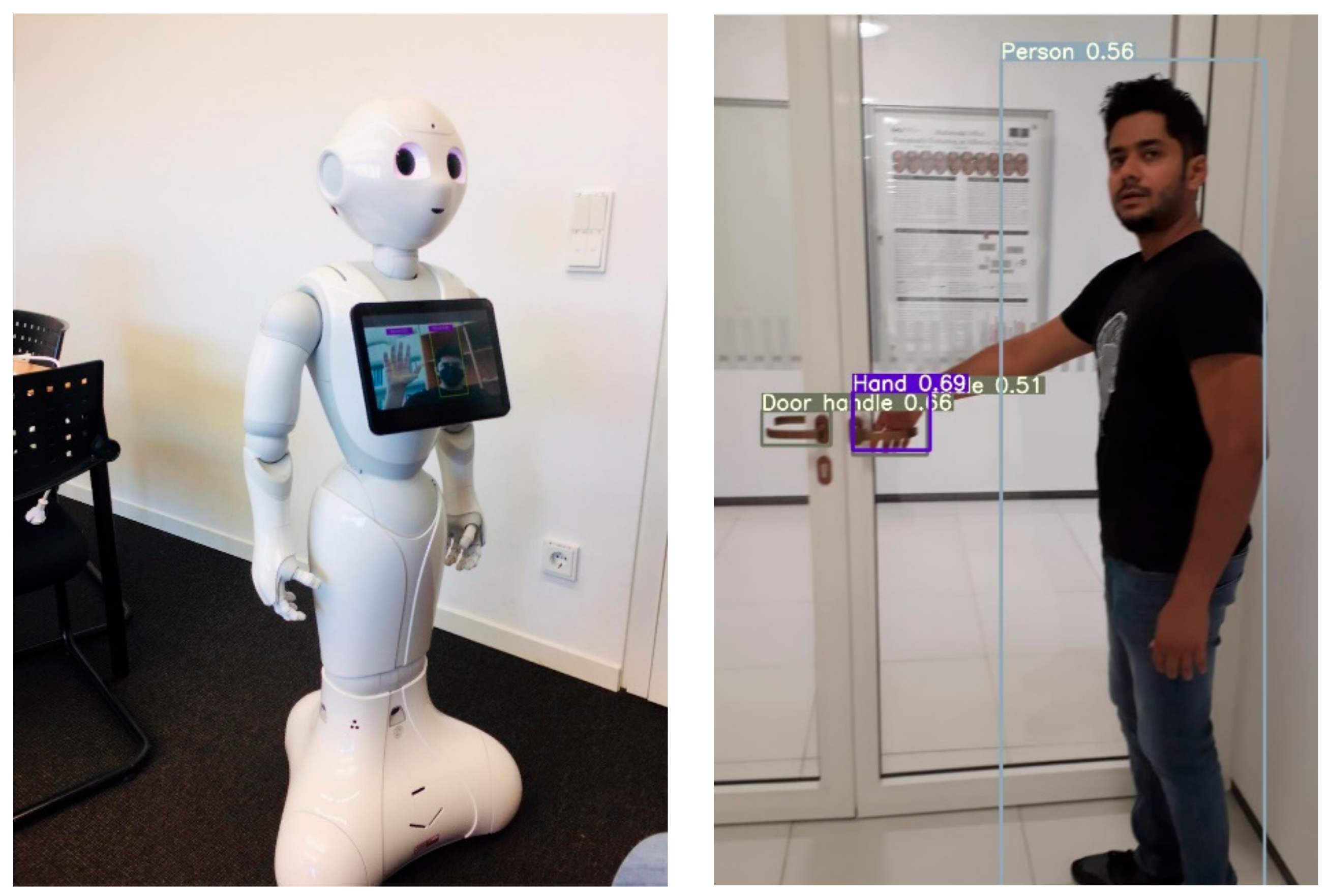

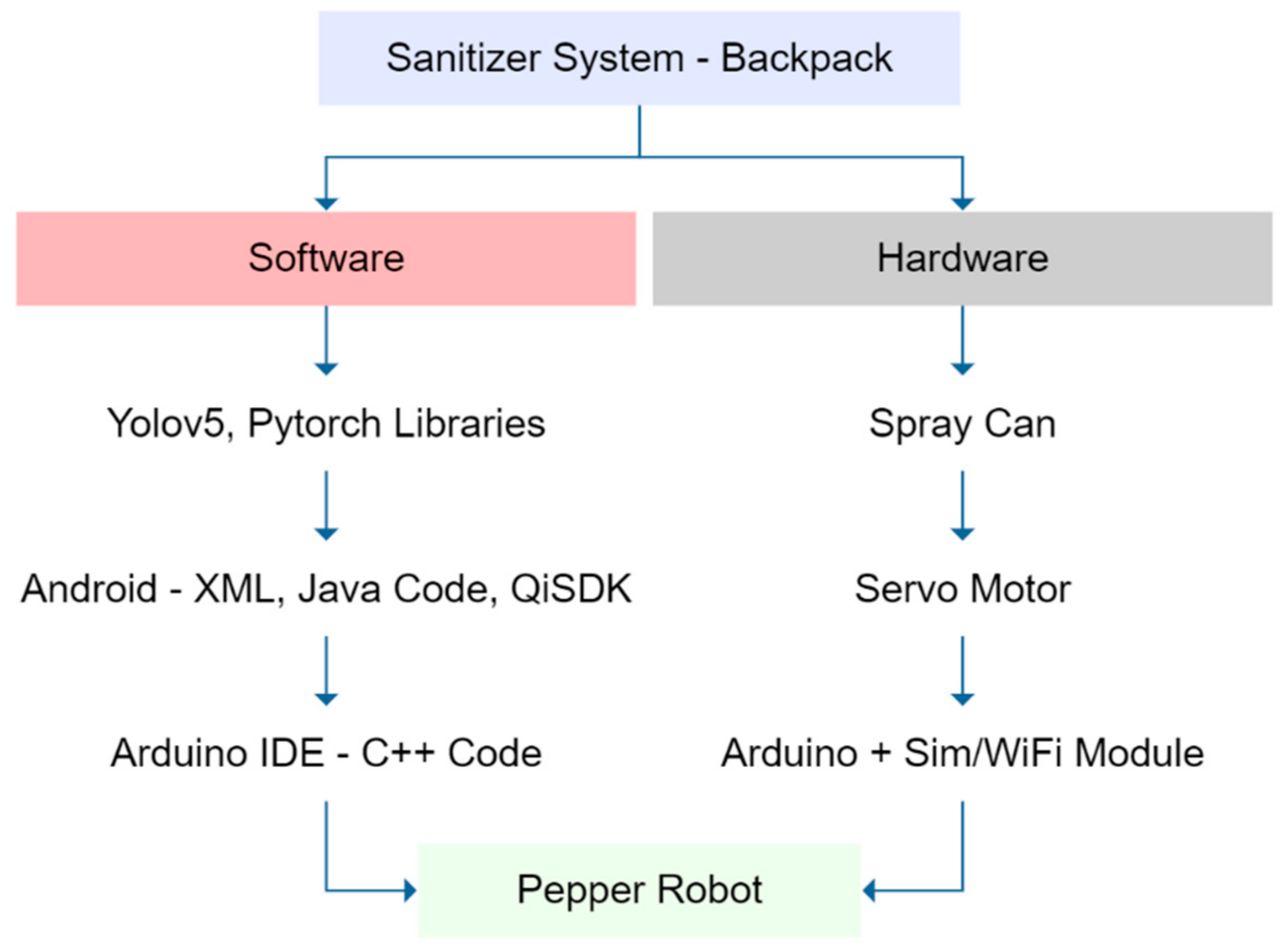

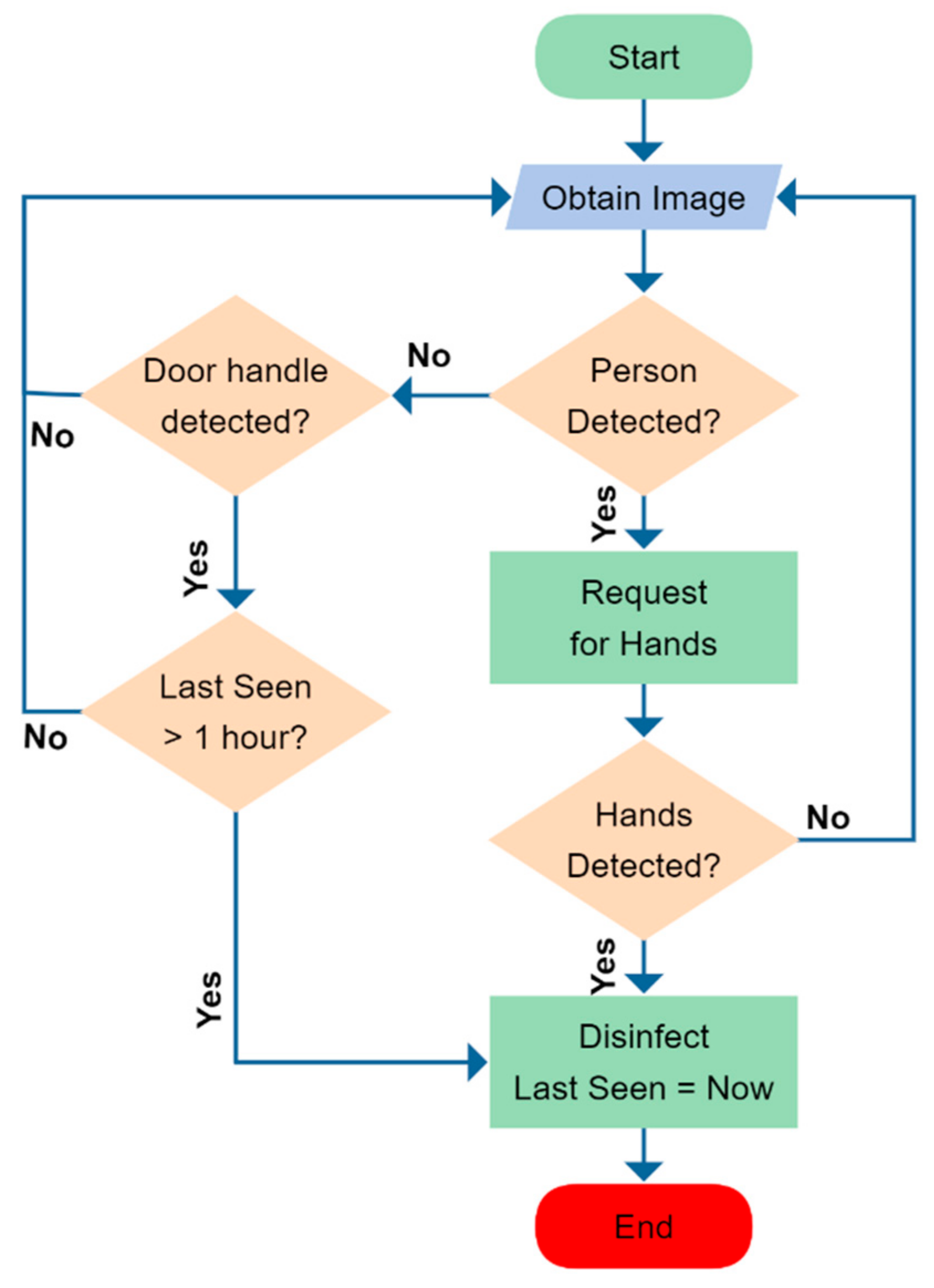

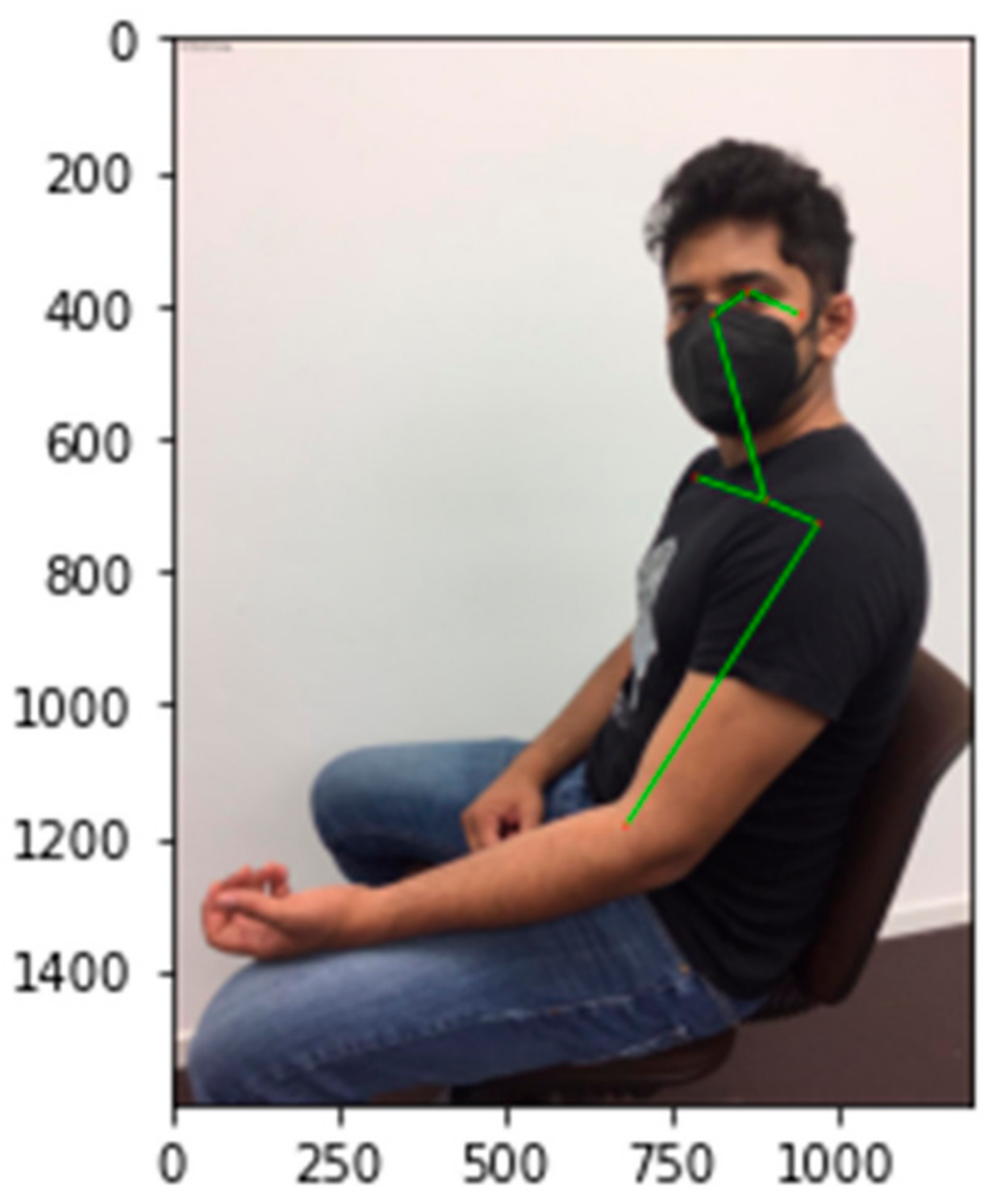

- Section 4 gives detailed insight into our research contributions under two scenarios: (1) robots in rehabilitation and (2) robots in the hospital environment and pandemics. For each scenario, we propose methodologies that address the use cases discussed in the corresponding subsections. In particular, the systems discussed under robots in rehabilitation, i.e., the A-balance system and indoor localization, extend approaches developed under the roadmap of the RADIO project [14,15,16], which used the TurtleBot [17] as its base robot platform. Under robots in hospital environments, we propose methodologies that use machine learning and computer vision algorithms implemented on the Pepper robot [18] to address sanitization and social distancing.

- Section 5 concludes the work.

2. Background: Existing Research Work and Projects

2.1. Related Work in the Field of Robots in Assisted Living Environment

2.2. Research Projects on Assisted Living

3. Robots in Assisted Living Environment: General Perspective, Research Opportunities and Challenges

3.1. Research Opportunities in Conjunction with Embedded Systems

1. Modeling: Abstraction in the design process simplifies programmability. The models have to take into account how hardware architectures for robotics can comply with the requirements dictated by pre-existing software models and specifications. Dynamic partial reconfiguration (DPR) techniques need to be studied to determine how models should express them. The end goal is to obtain hardware models from software specifications.

2. Automation techniques: Because the workflow for obtaining a register-transfer level (RTL) design can become cumbersome, code generation and automation techniques that leverage the models need to be explored and developed to simplify deployment. Further research has to focus on how robotic architectures and applications can be modeled and on which modeling techniques are useful for FPGA designs.

3. Adaptivity and reusability: One of the key features of a ROS implementation is the reusability of its components. The hardware-based components that result from automation techniques should therefore be reusable as well. This could be ensured by relying on model-driven engineering and automation tools, which would also make these components easier to adapt to multiple platforms, whether robots or FPGAs.
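To make the modeling and automation items above more concrete, the following minimal sketch generates a VHDL entity declaration from a small software-side message specification. It is an illustration only: the type-to-width table `TYPE_WIDTHS`, the function `generate_entity`, and the example field names are assumptions introduced here, not part of ROS or of any tool discussed in this work.

```python
from typing import Dict, List, Tuple

# Hypothetical mapping from message primitive types to bit widths.
TYPE_WIDTHS: Dict[str, int] = {
    "bool": 1,
    "uint8": 8,
    "int16": 16,
    "int32": 32,
    "float32": 32,
}


def generate_entity(name: str, fields: List[Tuple[str, str]]) -> str:
    """Return a VHDL entity declaration with one input port per message field.

    Raises KeyError if a field uses a type missing from TYPE_WIDTHS.
    """
    ports = ["clk : in std_logic"]
    for field_name, field_type in fields:
        width = TYPE_WIDTHS[field_type]
        if width == 1:
            ports.append(f"{field_name} : in std_logic")
        else:
            ports.append(
                f"{field_name} : in std_logic_vector({width - 1} downto 0)"
            )
    # VHDL separates port declarations with ';' and has none after the last.
    body = ";\n".join(f"    {port}" for port in ports)
    header = "library ieee;\nuse ieee.std_logic_1164.all;\n\n"
    return f"{header}entity {name} is\n  port (\n{body}\n  );\nend entity {name};"


if __name__ == "__main__":
    print(generate_entity("range_msg", [("valid", "bool"), ("distance", "float32")]))
```

Calling `generate_entity("range_msg", [("valid", "bool"), ("distance", "float32")])` yields an entity with a clock input, a single-bit `valid` port, and a 32-bit `distance` port. A real flow would also have to generate the architecture body and the interface to the rest of the system, which this sketch leaves out.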

3.2. Challenges

4. Robots in Assisted-Living Environment: Application Scenarios and Use Cases

4.1. Robots in Rehabilitation

4.1.1. System Description

- Layer 1: Layer of the physical devices (accelerometers, pressure sensors, cameras, etc.);

- Layer 2: Layer of network integration and cloud interconnection (gateway);

- Layer 3: Atlas [49] cloud.
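The three layers listed above can be pictured as a simple data path from devices through a gateway to the cloud. The sketch below is a hypothetical, in-memory illustration of that flow; the class names `Reading`, `Gateway`, and `Cloud`, the batching behaviour, and the sample values are assumptions made for this example and do not reflect the actual Atlas platform interface.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Reading:
    """Layer 1: a single measurement produced by a physical device."""
    sensor: str   # e.g. "accelerometer" or "pressure"
    value: float


@dataclass
class Cloud:
    """Layer 3: in-memory stand-in for the cloud store, keyed by sensor name."""
    store: Dict[str, List[float]] = field(default_factory=dict)

    def ingest(self, batch: List[Reading]) -> None:
        for reading in batch:
            self.store.setdefault(reading.sensor, []).append(reading.value)


@dataclass
class Gateway:
    """Layer 2: buffers device readings and forwards them to the cloud in batches."""
    cloud: Cloud
    batch_size: int = 2
    buffer: List[Reading] = field(default_factory=list)

    def receive(self, reading: Reading) -> None:
        self.buffer.append(reading)
        if len(self.buffer) >= self.batch_size:
            self.flush()

    def flush(self) -> None:
        # Forward whatever is buffered, then start a fresh buffer.
        if self.buffer:
            self.cloud.ingest(self.buffer)
            self.buffer = []


if __name__ == "__main__":
    cloud = Cloud()
    gateway = Gateway(cloud)
    for r in [Reading("accelerometer", 0.1), Reading("pressure", 101.3),
              Reading("accelerometer", 0.2)]:
        gateway.receive(r)
    gateway.flush()
    print(cloud.store)
```

Batching at the gateway is one possible design choice: it keeps the end devices simple and reduces the number of uplink transfers, at the cost of some latency before data reaches the cloud.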

4.1.2. End Layer

- Multiple low power wireless interface support (BLE, ZigBee);

- Based on ARM® Cortex®-M3 CC2650 wireless MCU;

- Ultra-low power operation;

- Small form factor;

- Support for 10 low-power sensors, including ambient light, digital microphone, magnetic sensor, humidity, pressure, accelerometer, gyroscope, magnetometer, object temperature and ambient temperature;

- Mainly ambient/kinetic sensor oriented;

- Low cost;

- Highly configurable.

4.1.3. Network Integration

4.1.4. Cloud Layer

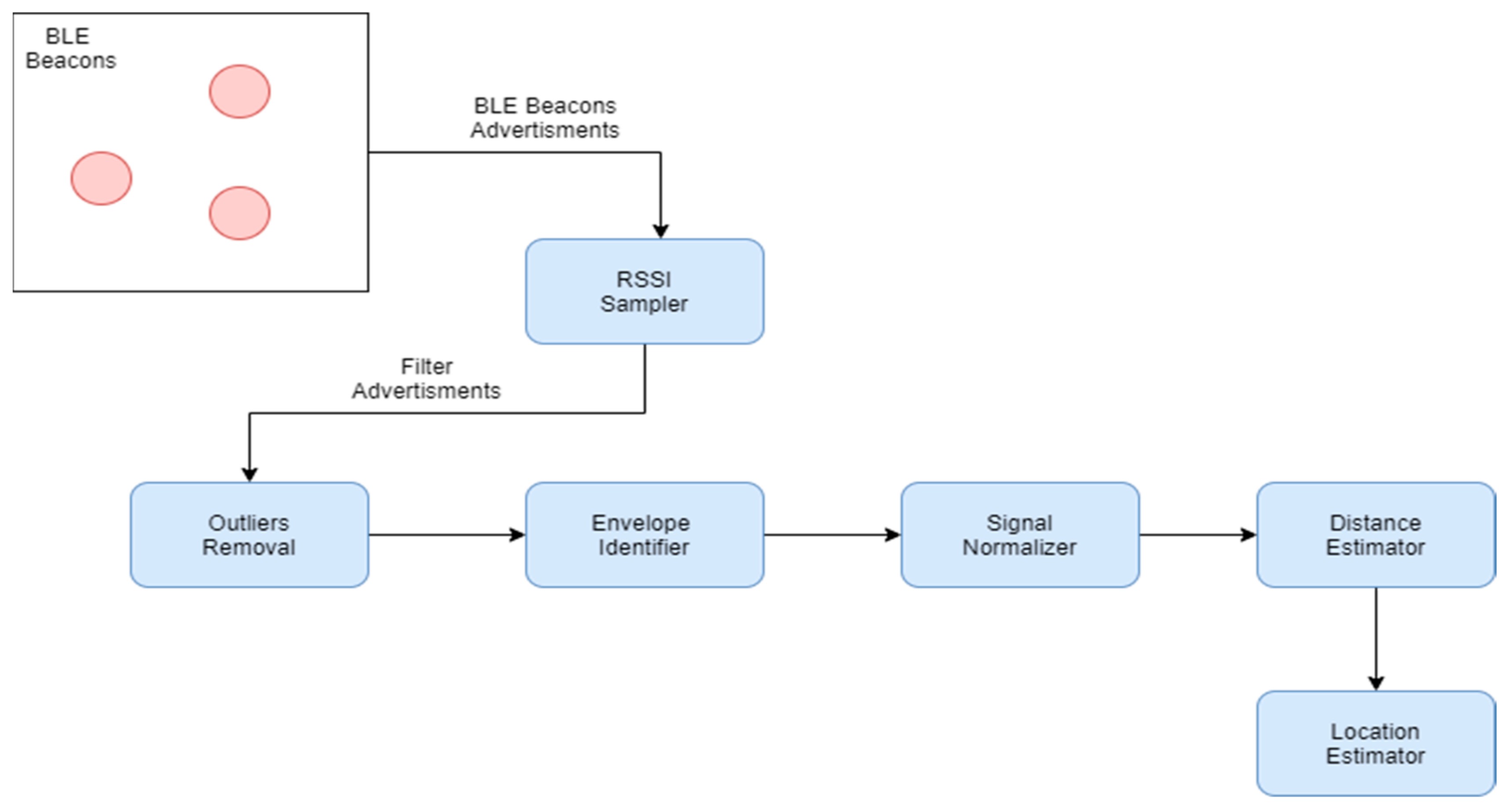

4.1.5. Indoor Localization

4.1.6. Results

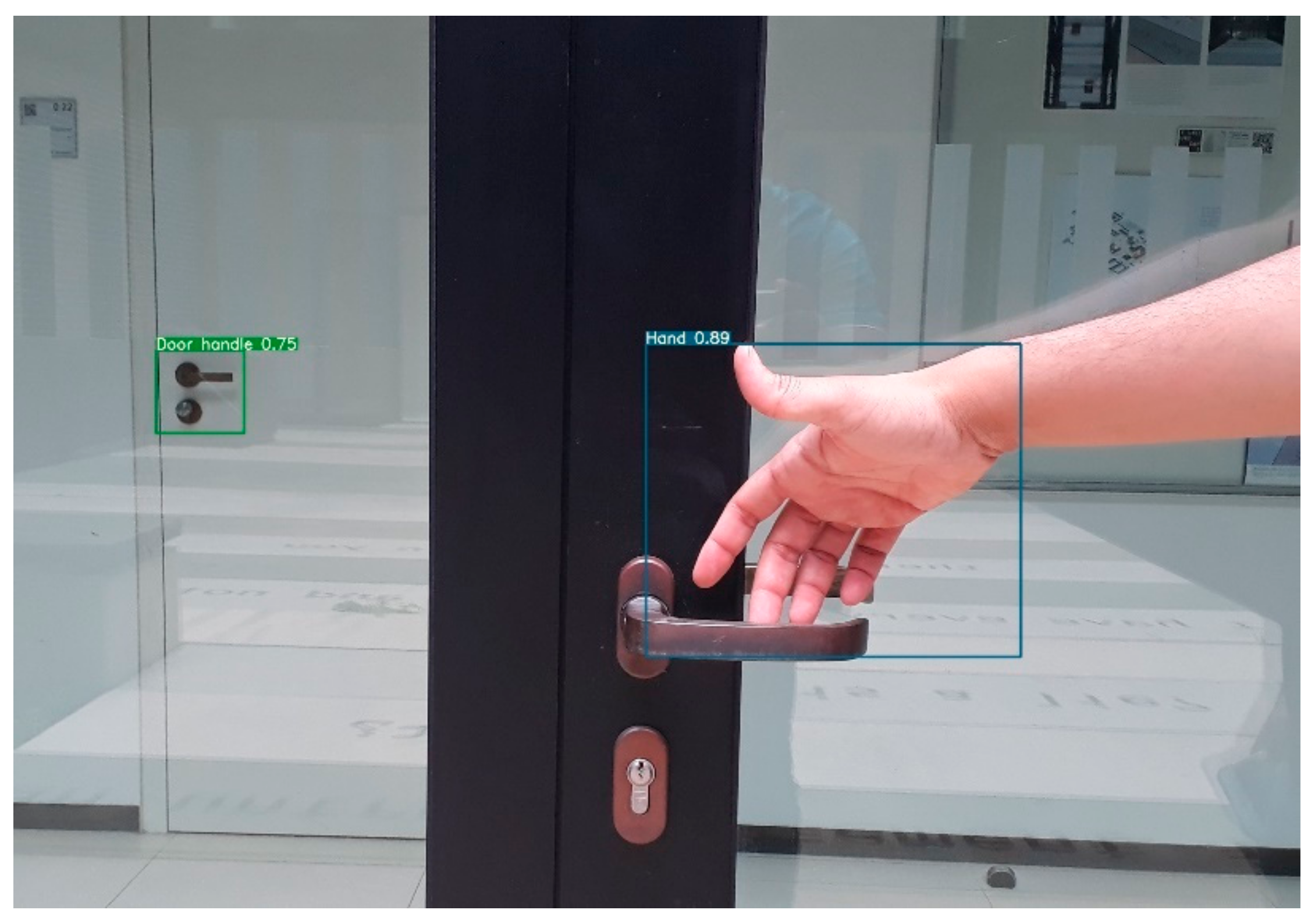

4.2. Assistive Robots in a Hospital Environment

4.2.1. Pandemic and Robotics

4.2.2. Implementation

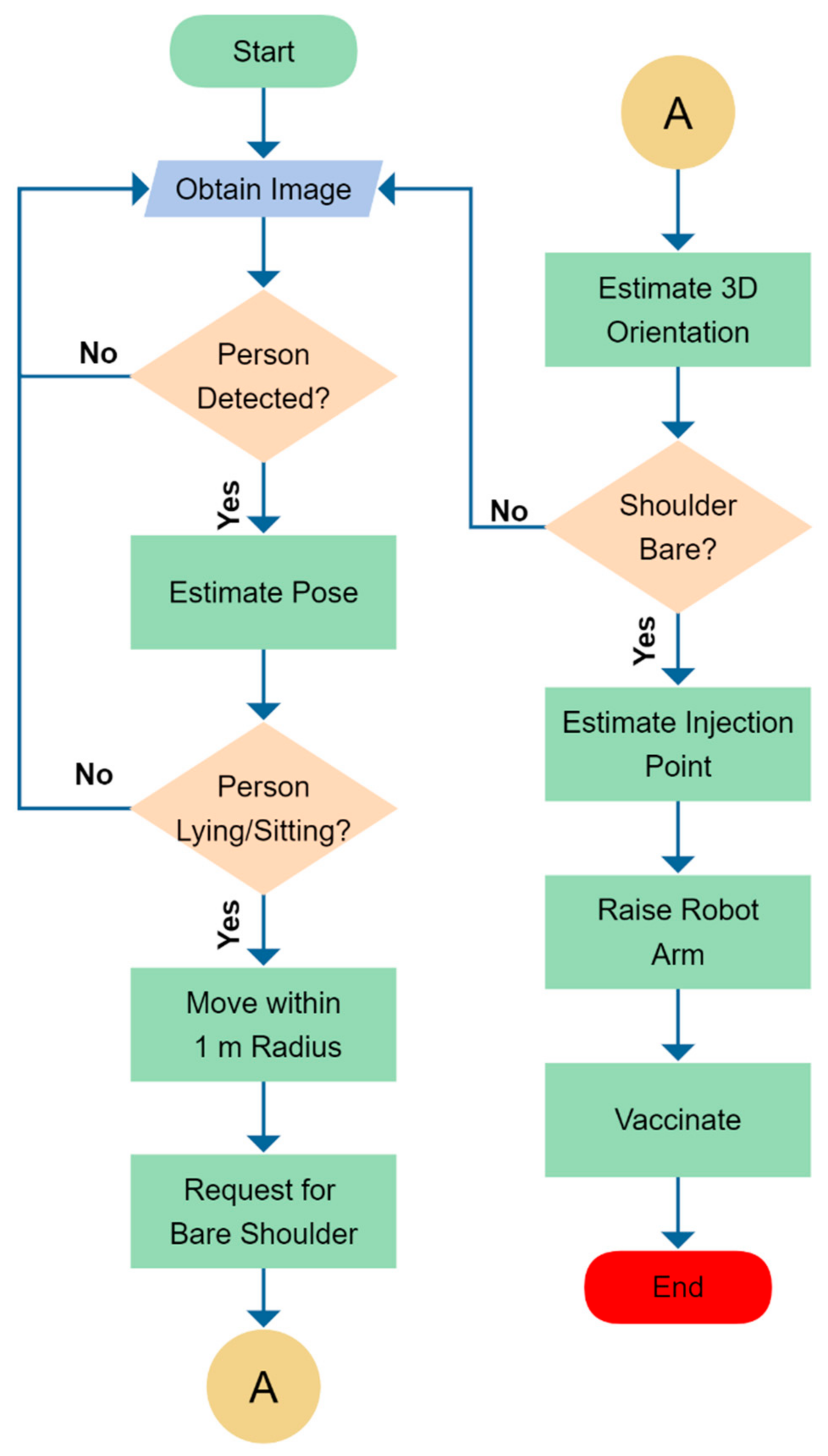

4.2.3. Equipping Robots with Cognitive Skills

5. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

1. Dimitrievski, A.; Zdravevski, E.; Lameski, P.; Trajkovik, V. A survey of Ambient Assisted Living systems: Challenges and opportunities. In Proceedings of the 2016 IEEE 12th International Conference on Intelligent Computer Communication and Processing (ICCP), Cluj-Napoca, Romania, 8–10 September 2016.

2. Pineau, J.; Montemerlo, M.; Pollack, M.; Roy, N. Towards Robotic Assistants in Nursing Homes: Challenges and Results. Rob. Auton. Syst. 2002, 42, 271–281.

3. Tavakoli, M.; Carriere, J.; Torabi, A. Robotics For COVID-19: How Can Robots Help Health Care in the Fight against Coronavirus. 2020. Available online: https://archive.ph/N4Hlf (accessed on 26 August 2021).

4. Khan, Z.H.; Siddique, A.; Lee, C.W. Robotics Utilization for Healthcare Digitization in Global COVID-19 Management. Int. J. Environ. Res. Public Health 2020, 17, 3819.

5. Nagano, A.; Wakabayashi, H.; Maeda, K.; Kokura, Y.; Miyazaki, S.; Mori, T.; Fujiwara, D. Respiratory Sarcopenia and Sarcopenic Respiratory Disability: Concepts, Diagnosis, and Treatment. J. Nutr. Health Aging 2021, 25, 1–9.

6. Larsson, L.; Degens, H.; Li, M.; Salviati, L.; Lee, Y.I.; Thompson, W.; Kirkland, J.L.; Sandri, M. Sarcopenia: Aging-Related Loss of Muscle Mass and Function. Physiol. Rev. 2019, 99, 427–511.

7. NEW Balance System™ SD—Balance—Physical Medicine | Biodex 2021. Available online: https://archive.ph/nMJoQ (accessed on 24 August 2021).

8. Kanasi, E.; Ayilavarapu, S.; Jones, J. The aging population: Demographics and the biology of aging. Periodontology 2000 2016, 72, 13–18.

9. Rashidi, P.; Mihailidis, A. A Survey on Ambient-Assisted Living Tools for Older Adults. IEEE J. Biomed. Health Inform. 2013, 17, 579–590.

10. Chiarini, G.; Ray, P.; Akter, S.; Masella, C.; Ganz, A. mHealth Technologies for Chronic Diseases and Elders: A Systematic Review. IEEE J. Sel. Areas Commun. 2013, 31, 6–18.

11. Bloom, D.E.; Cadarette, D. Infectious Disease Threats in the Twenty-First Century: Strengthening the Global Response. Front. Immunol. 2019, 10, 549.

12. Nande, A.; Adlam, B.; Sheen, J.; Levy, M.Z.; Hill, A.L. Dynamics of COVID-19 under social distancing measures are driven by transmission network structure. PLOS Comput. Biol. 2021, 17, e1008684.

13. Horizon 2020 Sections. Horiz. 2020 Eur. Comm. 2021. Available online: https://ec.europa.eu/programmes/horizon2020/en/home (accessed on 24 August 2021).

14. RADIO | Unobtrusive, Efficient, Reliable and Modular Solutions for Independent Ageing; Springer: Berlin/Heidelberg, Germany, 2021.

15. Antonopoulos, C.; Keramidas, G.; Voros, N.; Huebner, M.; Schwiegelshohn, F.; Goehringer, D.; Dagioglou, M.; Stavrinos, G.; Konstantopoulos, S.; Karkaletsis, V. Robots in Assisted Living Environments as an Unobtrusive, Efficient, Reliable and Modular Solution for Independent Ageing: The RADIO Experience. In Proceedings of the International Symposium on Applied Reconfigurable Computing, Darmstadt, Germany, 9–11 April 2018; ISBN 978-3-319-78889-0.

16. Schwiegelshohn, F.; Hubner, M.; Wehner, P.; Gohringer, D. Tackling the New Health-Care Paradigm Through Service Robotics: Unobtrusive, efficient, reliable, and modular solutions for assisted-living environments. IEEE Consum. Electron. Mag. 2017, 6, 34–41.

17. TurtleBot2 2021. Available online: https://www.turtlebot.com/turtlebot2/ (accessed on 24 August 2021).

18. Inc, R. Business Robots—Pepper Humanoid Robot 2021. Available online: https://business.robotlab.com/pepper-use-cases/ (accessed on 24 August 2021).

19. Iglesias, A.; José, R.V.; Perez-Lorenzo, M.; Ting, K.L.H.; Tudela, A.; Marfil, R.; Dueñas, Á.; Bandera, J.P. Towards long term acceptance of Socially Assistive Robots in retirement houses: Use case definition. In Proceedings of the 2020 IEEE International Conference on Autonomous Robot Systems and Competitions (ICARSC), Ponta Delgada, Portugal, 15–17 April 2020; pp. 134–139.

20. Bui, H.; Chong, N.Y. An Integrated Approach to Human-Robot-Smart Environment Interaction Interface for Ambient Assisted Living. In Proceedings of the 2018 IEEE Workshop on Advanced Robotics and its Social Impacts (ARSO), Genova, Italy, 27–29 September 2018; pp. 32–37.

21. Elmer, A.; Matusiewicz, D.; Sulzberger, C.; Göttelmann, P.V.; Codourey, M. Die Digitale Transformation der Pflege | Wandel. Innovation. In Smart Services; MeadWestvaco: Richmond, VA, USA, 2019; pp. 280–281. ISBN 978-3-95466-404-7.

22. Achirei, S.D.; Zvoristeanu, O.; Alexandrescu, A.; Botezatu, N.A.; Stan, A.; Rotariu, C.; Lupu, R.G.; Caraiman, S. SMARTCARE: On the Design of an IoT Based Solution for Assisted Living. In Proceedings of the 2020 International Conference on e-Health and Bioengineering (EHB), Iasi, Romania, 29–30 October 2020; pp. 1–4.

23. Call for Proposals for Personalising Health and Care. Available online: https://euroalert.net/call/2868/call-for-proposals-for-personalising-health-and-care (accessed on 24 August 2021).

24. RAMCIP. RAMCIP 2015. Available online: https://ramcip-project.eu/system/files/ramcip_1st_newsletter_v1.0.pdf (accessed on 24 August 2021).

25. GrowMeUp Project: An Innovative Service Robot for Ambient Assisted Living Environments; European Commission: Brussels, Belgium, 2015.

26. EnrichMe—PAL Robotics: Leading Service Robotics. PAL Robot: Barcelona, Spain. Available online: https://pal-robotics.com/collaborative-projects/enrichme/ (accessed on 24 August 2021).

27. De Gauquier, L.; Brengman, M.; Willems, K. The Rise of Service Robots in Retailing: Literature Review on Success Factors and Pitfalls. In Retail Futures; Emerald Insight: Bingley, UK, 2020; ISBN 978-1-83867-664-3.

28. Delfanti, A.; Frey, B. Humanly Extended Automation or the Future of Work Seen through Amazon Patents. Sci. Technol. Hum. Values 2020, 46, 655–682.

29. Pires, G.; Nunes, U. A Wheelchair Steered through Voice Commands and Assisted by a Reactive Fuzzy-Logic Controller. J. Intell. Robot. Syst. 2002, 34, 301–314.

30. Chen, Y.; Song, K. Voice control design of a mobile robot using shared-control approach. In Proceedings of the 2017 IEEE International Conference on Systems, Man, and Cybernetics (SMC), Banff, AB, Canada, 5–8 October 2017; pp. 105–110.

31. Sinyukov, D.A.; Li, R.; Otero, N.W.; Gao, R.; Padir, T. Augmenting a voice and facial expression control of a robotic wheelchair with assistive navigation. In Proceedings of the 2014 IEEE International Conference on Systems, Man, and Cybernetics (SMC), San Diego, CA, USA, 5–8 October 2014; pp. 1088–1094.

32. Silva, J.R.; Simão, M.; Mendes, N.; Neto, P. Navigation and obstacle avoidance: A case study using Pepper robot. In Proceedings of the IECON 2019—45th Annual Conference of the IEEE Industrial Electronics Society, Lisbon, Portugal, 14–17 October 2019; Volume 1, pp. 5263–5268.

33. Goto, K.; Nishino, H.; Yatsuda, A.; Tsutsumi, H.; Haramaki, T. A method for driving humanoid robot based on human gesture. Int. J. Mech. Eng. Robot. Res. 2020, 9, 447–452.

34. Haseeb, M.A.; Kyrarini, M.; Jiang, S.; Ristic-Durrant, D.; Gräser, A. Head gesture-based control for assistive robots. In Proceedings of the 11th PErvasive Technologies Related to Assistive Environments Conference, Corfu, Greece, 26–29 June 2018; pp. 379–383.

35. Zhang, L.; Jiang, M.; Farid, D.; Hossain, M.A. Intelligent facial emotion recognition and semantic-based topic detection for a humanoid robot. Expert Syst. Appl. 2013, 40, 5160–5168.

36. Kuon, I.; Rose, J. Measuring the Gap Between FPGAs and ASICs. IEEE Trans. Comput. Des. Integr. Circuits Syst. 2007, 26, 203–215.

37. Kamaleldin, A.; Hosny, S.; Mohamed, K.; Gamal, M.; Hussien, A.; Elnader, E.; Shalash, A.; Obeid, A.M.; Ismail, Y.; Mostafa, H. A reconfigurable hardware platform implementation for software defined radio using dynamic partial reconfiguration on Xilinx Zynq FPGA. In Proceedings of the 2017 IEEE 60th International Midwest Symposium on Circuits and Systems (MWSCAS), Boston, MA, USA, 6–9 August 2017; pp. 1540–1543.

38. Pepper the humanoid and programmable robot | SoftBank Robotics 2021. Available online: https://www.softbankrobotics.com/emea/en/pepper (accessed on 14 October 2019).

39. Podlubne, A.; Göhringer, D. FPGA-ROS: Methodology to Augment the Robot Operating System with FPGA Designs. In Proceedings of the 2019 International Conference on ReConFigurable Computing and FPGAs (ReConFig), Cancun, Mexico, 9–11 December 2019; pp. 1–5.

40. Cresswell, K.; Sheikh, A. Can Disinfection Robots Reduce the Risk of Transmission of SARS-CoV-2 in Health Care and Educational Settings? J. Med. Internet Res. 2020, 22, e20896.

41. Shi, Z. Cognitive Machine Learning. Int. J. Intell. Sci. 2019, 09, 111–121.

42. Guo, K.; Zeng, S.; Yu, J.; Wang, Y.; Yang, H. A Survey of FPGA-Based Neural Network Accelerator. arXiv 2018, arXiv:1712.08934 [cs].

43. World Health Organization Spinal Cord Injury. Available online: https://www.who.int/news-room/fact-sheets/detail/spinal-cord-injury (accessed on 24 August 2021).

44. Korebalance19 2021. Available online: https://archive.ph/CxThq (accessed on 26 August 2021).

45. Rodríguez-Martín, D.; Samà Monsonís, A.; Pérez, C.; Català, A. Posture Transitions Identification Based on a Triaxial Accelerometer and a Barometer Sensor. In International Work-Conference on Artificial Neural Networks; Springer: Cham, Switzerland, 2017; ISBN 978-3-319-59147-6.

46. Pitt, W.; Chou, L.-S. Reliability and practical clinical application of an accelerometer-based dual-task gait balance control assessment. Gait & Posture 2019, 71, 279–283.

47. Hou, Y.-R.; Chiu, Y.-L.; Chiang, S.-L.; Chen, H.-Y.; Sung, W.-H. Development of a Smartphone-Based Balance Assessment System for Subjects with Stroke. Sensors 2019, 20, 88.

48. Hou, Y.-R.; Chiu, Y.-L.; Chiang, S.-L.; Chen, H.-Y.; Sung, W.-H. Feasibility of a Smartphone-Based Balance Assessment System for Subjects with Chronic Stroke. Comput. Methods Programs Biomed. 2018, 161.

49. Antonopoulos, C.P.; Antonopoulos, K.; Panagiotou, C.; Voros, N.S. Tackling Critical Challenges towards Efficient CyberPhysical Components & Services Interconnection: The ATLAS CPS Platform Approach. J. Signal Process. Syst. 2019, 91, 1273–1281.

50. CC2650 Data Sheet, Product Information and Support | TI.com 2016. Available online: https://www.ti.com/product/CC2650 (accessed on 24 August 2021).

51. Singh, S.P.; Sharma, S.C. Range Free Localization Techniques in Wireless Sensor Networks: A Review. Procedia Comput. Sci. 2015, 57, 7–16.

52. Saadat, S.; Rawtani, D.; Hussain, C.M. Environmental perspective of COVID-19. Sci. Total Environ. 2020, 728, 138870.

53. Facilitate a Smooth Connection between People with Pepper’s Telepresence Capabilities! | SoftBank Robotics 2021. Available online: https://www.softbankrobotics.com (accessed on 24 August 2021).

54. Pepper Telepresence Toolkit. Available online: https://github.com/softbankrobotics-labs/pepper-telepresence-toolkit (accessed on 24 August 2021).

55. Provide New Services | SoftBank Robotics EMEA 2021. Available online: https://archive.ph/pgfJF (accessed on 24 August 2021).

56. Cure, L.; Van Enk, R. Effect of hand sanitizer location on hand hygiene compliance. Am. J. Infect. Control 2015, 43, 917–921.

57. Pradhan, D.; Biswasroy, P.; Kumar Naik, P.; Ghosh, G.; Rath, G. A Review of Current Interventions for COVID-19 Prevention. Arch. Med. Res. 2020, 51, 363–374.

58. Pandey, A.K.; Gelin, R. A Mass-Produced Sociable Humanoid Robot: Pepper: The First Machine of Its Kind. IEEE Robot. Autom. Mag. 2018, 25, 40–48.

59. Ding, Z.; Qian, H.; Xu, B.; Huang, Y.; Miao, T.; Yen, H.-L.; Xiao, S.; Cui, L.; Wu, X.; Shao, W.; et al. Toilets dominate environmental detection of severe acute respiratory syndrome coronavirus 2 in a hospital. Sci. Total Environ. 2021, 753, 141710.

60. ultralytics/yolov5: v5.0—YOLOv5-P6 1280 Models, AWS, Supervise.ly and YouTube Integrations 2021. Available online: https://archive.ph/HJqRN (accessed on 24 August 2021).

61. Sato, M.; Yasuhara, Y.; Osaka, K.; Ito, H.; Dino, M.J.S.; Ong, I.L.; Zhao, Y.; Tanioka, T. Rehabilitation care with Pepper humanoid robot: A qualitative case study of older patients with schizophrenia and/or dementia in Japan. Enfermería Clínica 2020, 30, 32–36.

62. Beyer-Wunsch, P.; Reichstein, C. Effects of a Humanoid Robot on the Well-being for Hospitalized Children in the Pediatric Clinic—An Experimental Study. Procedia Comput. Sci. 2020, 176, 2077–2087.

63. Pepper SDK for Android—QiSDK 2021. Available online: https://qisdk.softbankrobotics.com/sdk/doc/pepper-sdk/index.html (accessed on 24 August 2021).

64. Kursumovic, E.; Lennane, S.; Cook, T.M. Deaths in healthcare workers due to COVID-19: The need for robust data and analysis. Anaesthesia 2020, 75, 989–992.

65. Cao, Z.; Simon, T.; Wei, S.-E.; Sheikh, Y. Realtime Multi-person 2D Pose Estimation Using Part Affinity Fields. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; IEEE: New York, NY, USA, 2017; pp. 1302–1310.

66. Ramalingam, B.; Yin, J.; Rajesh Elara, M.; Tamilselvam, Y.K.; Mohan Rayguru, M.; Muthugala, M.A.V.J.; Félix Gómez, B. A Human Support Robot for the Cleaning and Maintenance of Door Handles Using a Deep-Learning Framework. Sensors 2020, 20, 3543.

67. Yin, J.; Koppaka, G.S.A.; Tamilselvam, Y.; Mohan, R.E.; Ramalingam, B.; Anh Vu, L. Table Cleaning Task by Human Support Robot Using Deep Learning Technique. Sensors 2020, 20, 1698.

68. Bačík, J.; Tkáč, P.; Hric, L.; Alexovič, S.; Kyslan, K.; Olexa, R.; Perduková, D. Phollower—The Universal Autonomous Mobile Robot for Industry and Civil Environments with COVID-19 Germicide Addon Meeting Safety Requirements. Appl. Sci. 2020, 10, 7682.

69. Sundar raju, G.; Sivakumar, K.; Ramakrishnan, A.; Selvamuthukumaran, D.; Sakthivel Murugan, E. Design and fabrication of sanitizer sprinkler robot for COVID-19 hospitals. In IOP Conference Series: Materials Science and Engineering; IOP Publishing: Bristol, UK, 2021; Volume 1059, p. 12070.

| Actual Distance (m) | Estimated Distance (m) |
|---|---|
| 0.5 | 0.35 |
| 1 | 1.20 |
| 1.5 | 1.35 |
| 2 | 2.15 |
| 2.5 | 2.65 |
| 3 | 3.10 |
| 3.5 | 3.30 |
| 4 | 4.10 |
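The accuracy implied by the distance table above can be summarized with simple error metrics. The sketch below computes the mean absolute error (MAE), the root-mean-square error (RMSE), and the largest single error from the tabulated values. The choice of these metrics and the function name `distance_errors` are assumptions made here for illustration; the source reports only the raw values.

```python
import math
from typing import List, Tuple

# Values copied from the distance table (metres).
actual = [0.5, 1.0, 1.5, 2.0, 2.5, 3.0, 3.5, 4.0]
estimated = [0.35, 1.20, 1.35, 2.15, 2.65, 3.10, 3.30, 4.10]


def distance_errors(actual: List[float],
                    estimated: List[float]) -> Tuple[float, float, float]:
    """Return (MAE, RMSE, maximum absolute error) for paired measurements."""
    abs_err = [abs(e - a) for a, e in zip(actual, estimated)]
    mae = sum(abs_err) / len(abs_err)
    rmse = math.sqrt(sum(d * d for d in abs_err) / len(abs_err))
    return mae, rmse, max(abs_err)


mae, rmse, worst = distance_errors(actual, estimated)
print(f"MAE = {mae:.3f} m, RMSE = {rmse:.3f} m, max error = {worst:.2f} m")
```

For the tabulated values this gives an MAE of about 0.15 m, an RMSE of about 0.154 m, and a largest deviation of 0.20 m.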

| Actual X (m) | Actual Y (m) | Estimated X (m) | Estimated Y (m) |
|---|---|---|---|
| 1.5 | 1 | 1.7 | 1.2 |
| 2 | 0.5 | 2.2 | 0.6 |
| 1 | 1 | 1 | 0.7 |
| 1.8 | 1 | 1.95 | 0.9 |
| 2.5 | 1 | 2.5 | 0.5 |
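For the position table above, a natural summary is the Euclidean distance between each actual and estimated point. The sketch below computes that per-point error and its mean. The use of Euclidean distance as the error measure and the function name `position_errors` are assumptions introduced for this example, since the source lists only the coordinates.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]

# Coordinates copied from the position table (metres).
actual: List[Point] = [(1.5, 1.0), (2.0, 0.5), (1.0, 1.0), (1.8, 1.0), (2.5, 1.0)]
estimated: List[Point] = [(1.7, 1.2), (2.2, 0.6), (1.0, 0.7), (1.95, 0.9), (2.5, 0.5)]


def position_errors(actual: List[Point], estimated: List[Point]) -> List[float]:
    """Return the Euclidean distance between each actual/estimated pair."""
    return [
        math.hypot(ex - ax, ey - ay)
        for (ax, ay), (ex, ey) in zip(actual, estimated)
    ]


errors = position_errors(actual, estimated)
mean_error = sum(errors) / len(errors)
for (ax, ay), err in zip(actual, errors):
    print(f"({ax}, {ay}): error = {err:.2f} m")
print(f"mean error = {mean_error:.2f} m")
```

With these five points the per-point error ranges from roughly 0.18 m to 0.50 m, with a mean of about 0.30 m.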

| Research Work | Cleans Human Hands | Cleans Door Handles | Scalable Cleaning Agent | Overcomes Vaccination Challenges | Human Emotion Support | Adaptable to COVID-19 Operations |
|---|---|---|---|---|---|---|
| [a] [66] | ≈ | ≈ | | | | |
| [b] [67] | ≈ | | | | | |
| [c] [68] | ≈ | ≈ | | | | |
| [d] [69] | ≈ | ≈ | | | | |
| CleanMeAI | ≈ | ≈ | ≈ | ≈ | ≈ | |
| InjectMeAI | ≈ | ≈ | ≈ | | | |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Mahmood, S.; Ampadu, K.O.; Antonopoulos, K.; Panagiotou, C.; Mendez, S.A.P.; Podlubne, A.; Antonopoulos, C.; Keramidas, G.; Hübner, M.; Goehringer, D.; et al. Prospects of Robots in Assisted Living Environment. Electronics 2021, 10, 2062. https://doi.org/10.3390/electronics10172062

APA Style: Mahmood, S., Ampadu, K. O., Antonopoulos, K., Panagiotou, C., Mendez, S. A. P., Podlubne, A., Antonopoulos, C., Keramidas, G., Hübner, M., Goehringer, D., & Voros, N. (2021). Prospects of Robots in Assisted Living Environment. Electronics, 10(17), 2062. https://doi.org/10.3390/electronics10172062