A Lightweight Dense Connected Approach with Attention on Single Image Super-Resolution

Abstract

1. Introduction

- We introduce the attention mechanism to the dense connection structure as well as the reconstruction layer, which helps to suppress the less beneficial information during model training. Extensive experiments verify the effectiveness of this attention-based structure.

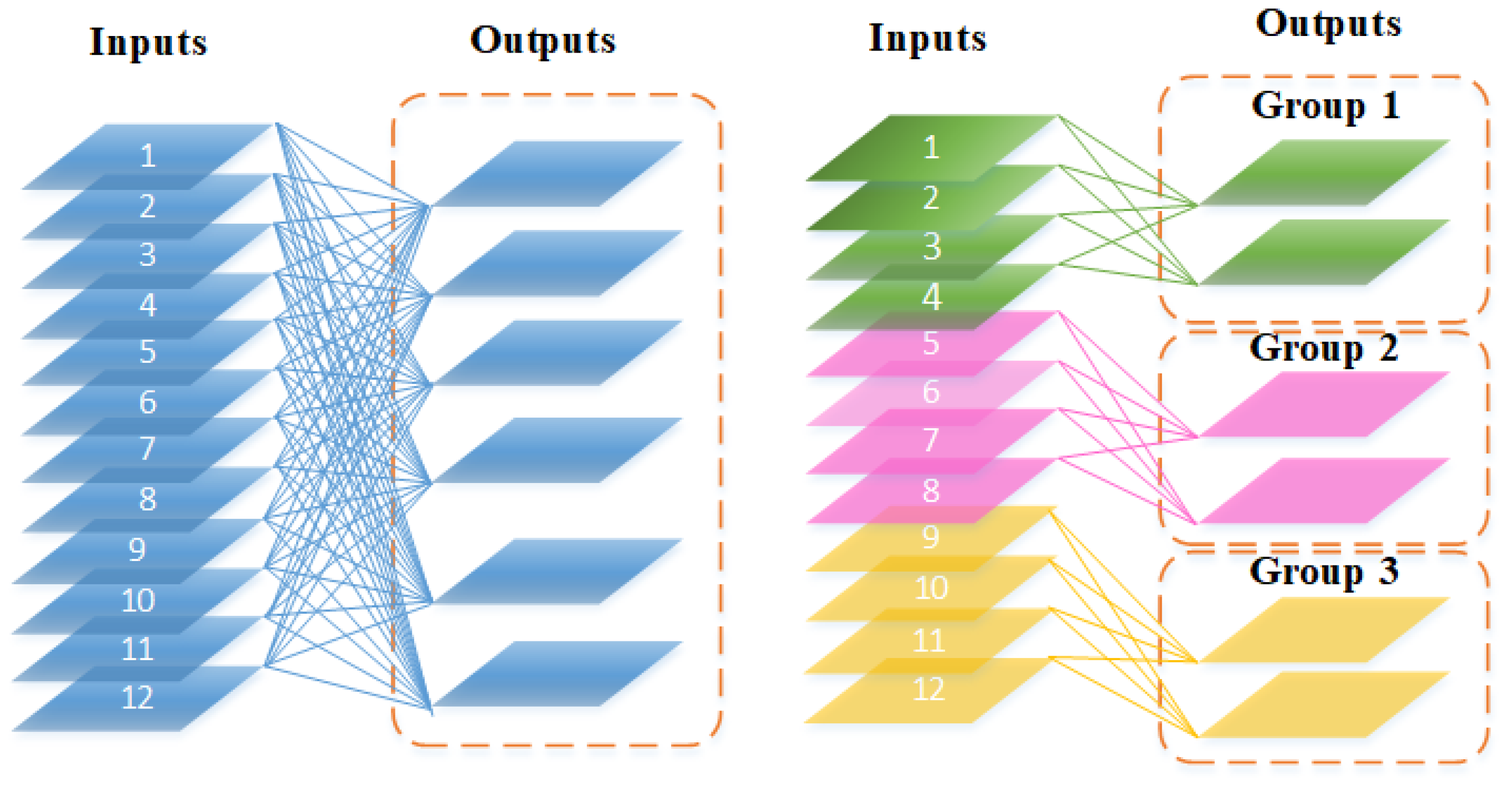

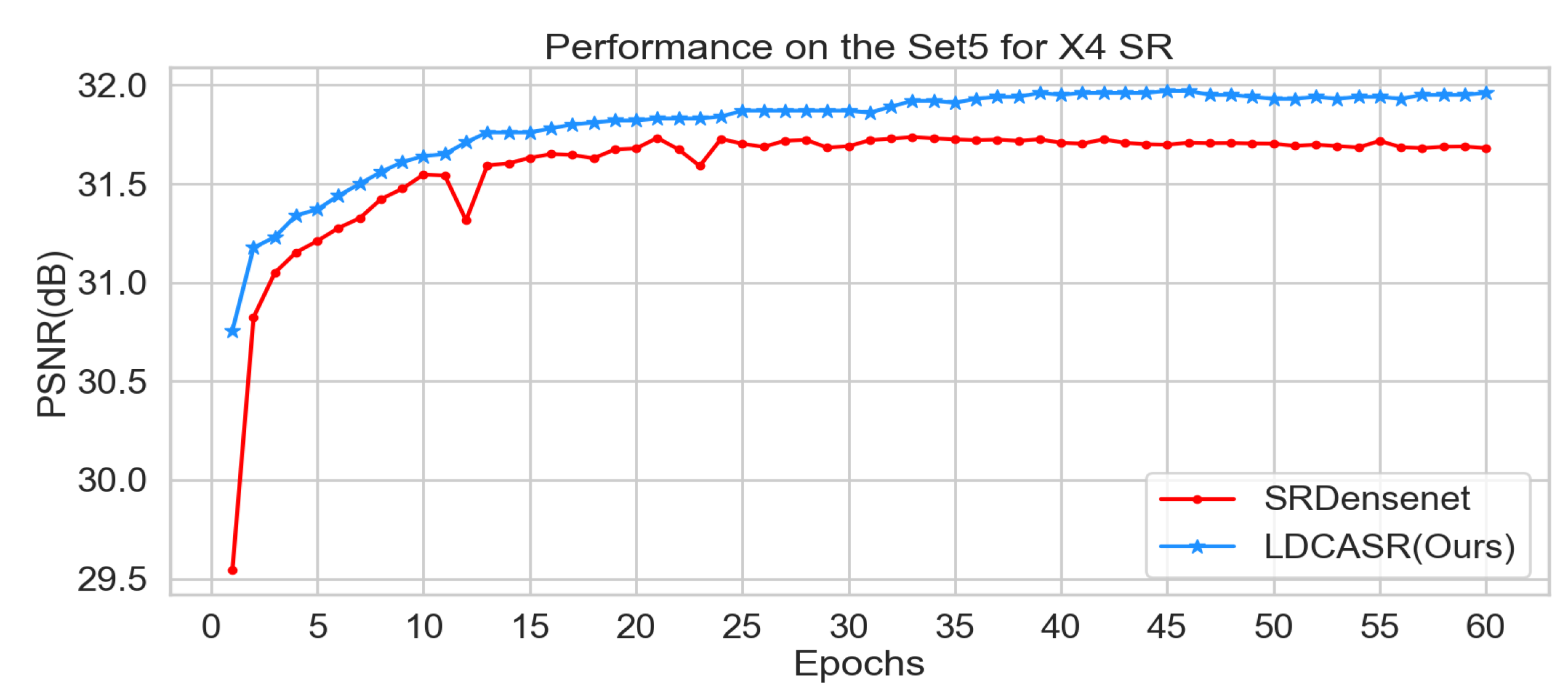

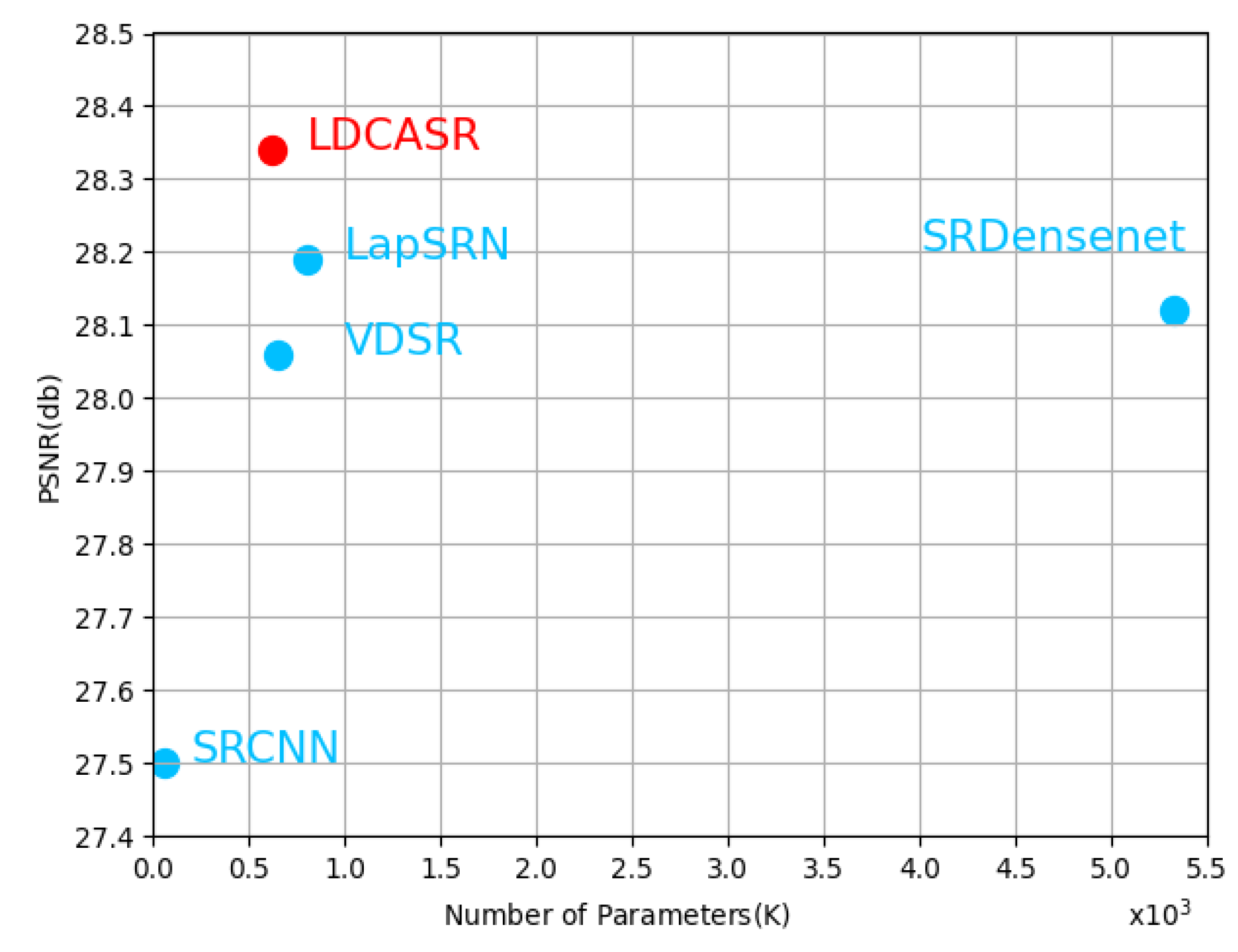

- Our model can extract the important features by using a lightweight approach. By introducing the group convolution, we reduce the number of parameters to 0.6 M, which is around 1/9 of the original SRDensenet.
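The parameter saving attributed to group convolution in the contributions above can be illustrated with a small calculation. The sketch below is not the authors' code: the helper `conv2d_params` and the layer sizes (128 input channels, 128 output channels, 3×3 kernel, 4 groups) are hypothetical values chosen only to show the effect. Note also that the stated figures (0.6 M parameters, about 1/9 of SRDensenet) imply roughly 5.4 M parameters for the original SRDensenet.

```python
def conv2d_params(c_in, c_out, kernel, groups=1, bias=True):
    """Count the learnable parameters of a 2-D convolution layer.

    With `groups` > 1, each output channel only sees c_in / groups
    input channels, so the weight count shrinks by a factor of `groups`.
    """
    if c_in % groups or c_out % groups:
        raise ValueError("channel counts must be divisible by groups")
    weights = (c_in // groups) * c_out * kernel * kernel
    return weights + (c_out if bias else 0)


# Hypothetical layer sizes, chosen for illustration only
# (they are not taken from the paper).
C_IN, C_OUT, K = 128, 128, 3

standard = conv2d_params(C_IN, C_OUT, K, groups=1)
grouped = conv2d_params(C_IN, C_OUT, K, groups=4)

print(standard)  # 147584
print(grouped)   # 36992
```

In this example the weight tensor shrinks by exactly the number of groups, while the bias term is unchanged, so the overall reduction is slightly below a factor of 4.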

2. Related Work

2.1. CNN-Based SISR

2.2. Dense Skip Connections in SISR

2.3. Attention Mechanism

3. Our Model

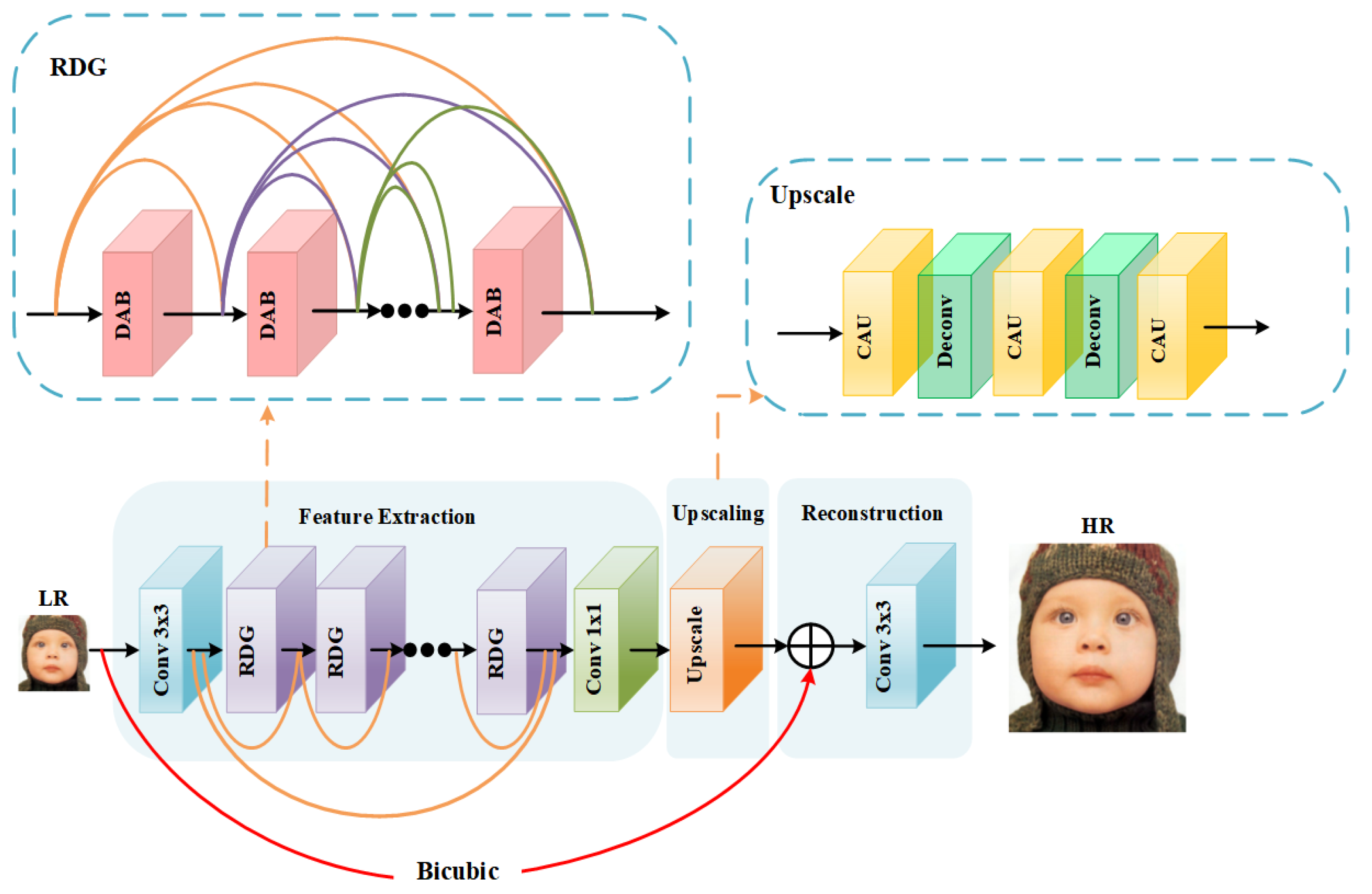

3.1. Network Architecture

3.1.1. Feature Extraction Module

3.1.2. Upscale Module

3.1.3. Reconstruction Module

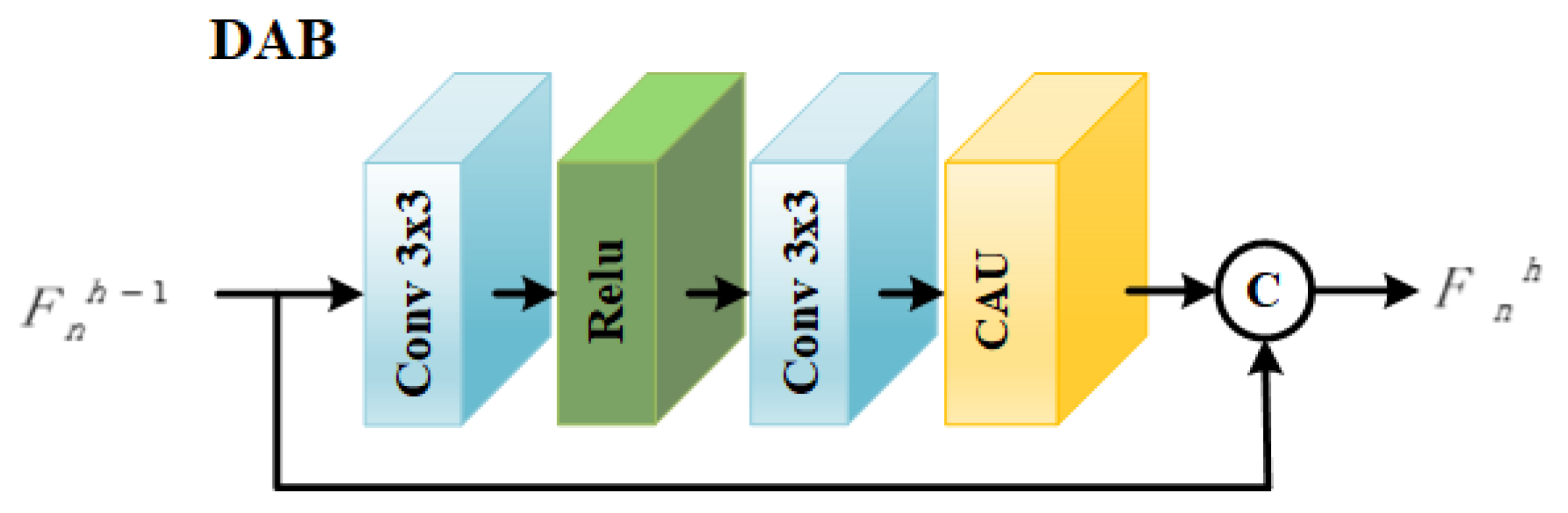

3.2. Dense Attention Block (DAB) with Channel Attention Unit (CAU)

3.3. Group Convolution

3.4. Loss Functions

4. Experiments and Analysis

4.1. Training and Testing Datasets

4.2. Implementation Details

4.3. Different Loss Function Analysis

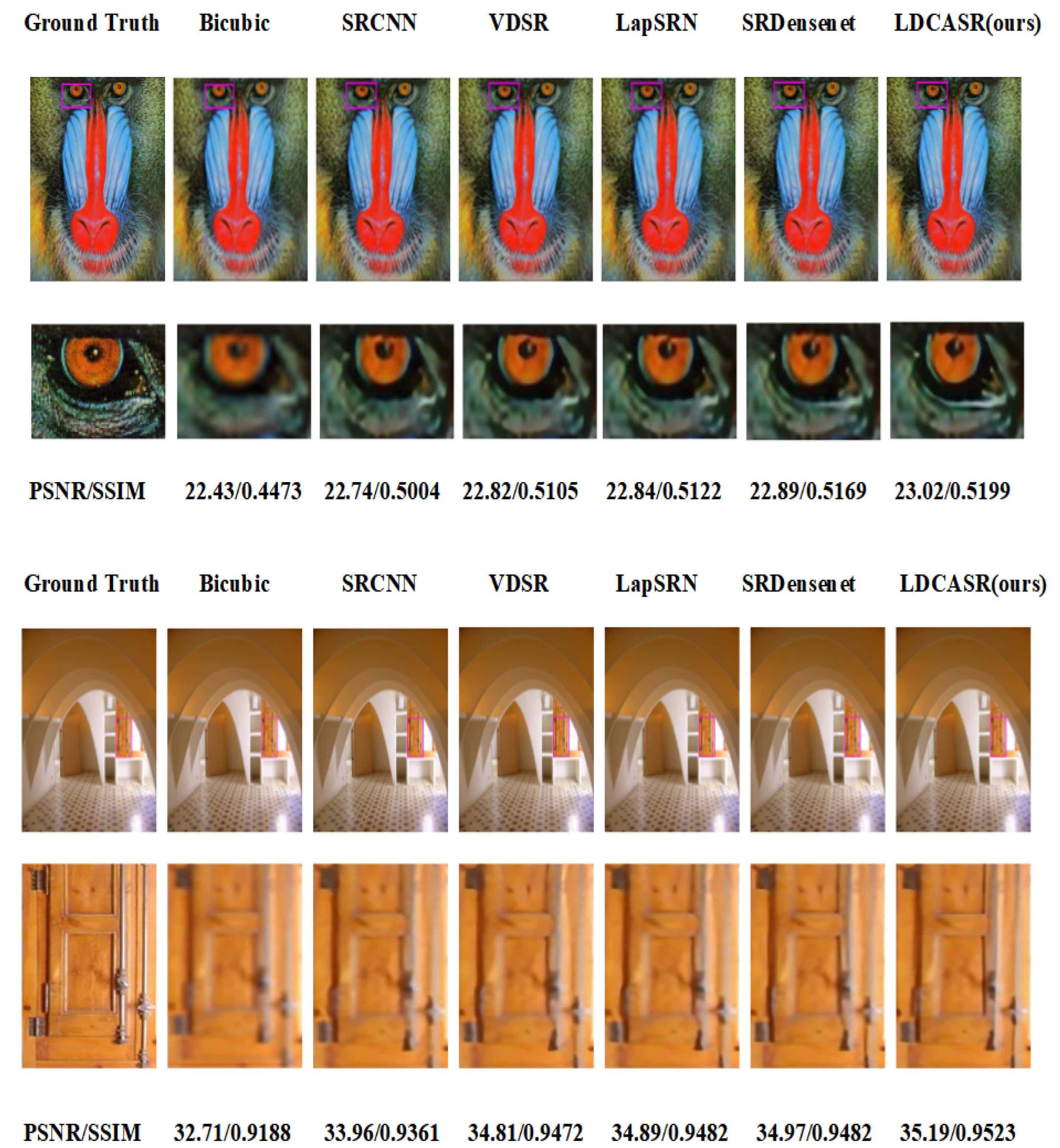

4.4. SISR Performance Comparison

4.5. Model Size Comparison

5. Conclusions

Author Contributions

Funding

Conflicts of Interest


| Method | Scale | Set5 (PSNR/SSIM) | Set14 (PSNR/SSIM) | BSD100 (PSNR/SSIM) | Urban100 (PSNR/SSIM) |
|---|---|---|---|---|---|
| Bicubic | | 33.69/0.9309 | 30.33/0.8702 | 29.56/0.8451 | 26.88/0.8419 |
| SRCNN | | 36.65/0.9542 | 32.45/0.9067 | 31.40/0.8902 | 29.54/0.8956 |
| VDSR | | 37.53/0.9590 | 33.05/0.9136 | 31.95/0.8960 | 30.77/0.9140 |
| LapSRN | | 37.52/0.9591 | 33.08/0.9130 | 31.08/0.8950 | 30.41/0.9103 |
| SRDensenet | | 37.83/0.9604 | 33.26/0.9160 | 32.08/0.8994 | 31.58/0.9235 |
| LDCASR (Ours) | | 38.12/0.9618 | 33.56/0.9186 | 32.21/0.8998 | 32.07/0.9288 |
| Bicubic | | 28.42/0.8104 | 26.00/0.7027 | 25.96/0.6675 | 23.14/0.6603 |
| SRCNN | | 30.48/0.8628 | 27.50/0.7513 | 26.90/0.7101 | 24.52/0.7221 |
| VDSR | | 31.42/0.8824 | 28.06/0.7689 | 27.26/0.7265 | 25.21/0.7757 |
| LapSRN | | 31.54/0.8850 | 28.19/0.7720 | 27.32/0.7270 | 25.21/0.7560 |
| SRDensenet | | 31.54/0.8834 | 28.12/0.7712 | 27.32/0.7296 | 25.36/0.7640 |
| LDCASR (Ours) | | 31.94/0.8898 | 28.36/0.7758 | 27.44/0.7328 | 25.77/0.7781 |
| Bicubic | | 24.39/0.6582 | 23.10/0.5660 | 23.67/0.5480 | 20.75/0.5160 |
| SRCNN | | 25.34/0.6900 | 23.76/0.5926 | 24.15/0.5665 | 20.73/0.5540 |
| VDSR | | 25.93/0.7246 | 24.26/0.6150 | 24.48/0.5836 | 21.72/0.5713 |
| LapSRN | | 26.15/0.7380 | 23.38/0.6208 | 24.55/0.5865 | 21.83/0.5816 |
| SRDensenet | | 25.92/0.7284 | 24.19/0.6178 | 24.43/0.5859 | 21.73/0.5843 |
| LDCASR (Ours) | | 26.21/0.7383 | 24.43/0.6238 | 24.57/0.5886 | 21.89/0.5895 |
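For readers less familiar with the PSNR figures in the table above, the following is a minimal sketch of the standard PSNR definition, 10·log10(peak² / MSE), in decibels. It is not the authors' evaluation script: the function name `psnr`, the 8-bit peak value of 255, and the toy 3×3 images are assumptions made here, and details such as which colour channel is evaluated or how image borders are cropped are not specified in this excerpt. SSIM is omitted for brevity.

```python
import math


def psnr(reference, estimate, peak=255.0):
    """Peak signal-to-noise ratio (dB) between two equally sized 2-D images.

    Images are given as nested lists of pixel intensities.
    """
    ref = [p for row in reference for p in row]
    est = [p for row in estimate for p in row]
    if not ref or len(ref) != len(est):
        raise ValueError("images must be non-empty and the same size")
    # Mean squared error over all pixels.
    mse = sum((a - b) ** 2 for a, b in zip(ref, est)) / len(ref)
    if mse == 0:
        return math.inf  # identical images
    return 10.0 * math.log10(peak ** 2 / mse)


# Toy ground-truth and reconstructed patches (hypothetical values).
hr = [[52, 55, 61], [59, 79, 61], [76, 62, 59]]
sr = [[54, 55, 60], [59, 77, 61], [75, 62, 60]]

print(round(psnr(hr, sr), 2))
```

Higher values indicate a reconstruction closer to the ground truth; because the scale is logarithmic, halving the mean squared error raises PSNR by about 3 dB.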

| Dataset | Scale | SRCNN | VDSR | LapSRN | SRDensenet | LDCASR (Ours) |
|---|---|---|---|---|---|---|
| Set5 | | 0.15036 | 0.33625 | 0.27346 | 1.47526 | 0.22986 |
| Set5 | | 0.16908 | 0.31396 | 0.91862 | 1.09084 | 0.26533 |
| Set5 | | 0.10912 | 0.87221 | 0.56385 | 0.90353 | 0.33260 |
| Set14 | | 0.23497 | 0.65412 | 0.47673 | 2.75815 | 0.43869 |
| Set14 | | 0.25417 | 0.62675 | 1.03996 | 2.16803 | 0.46524 |
| Set14 | | 0.21497 | 1.75756 | 0.54369 | 1.13913 | 0.48765 |
| BSD100 | | 0.04776 | 0.43824 | 0.32861 | 1.49976 | 0.26857 |
| BSD100 | | 0.05159 | 0.44911 | 0.71945 | 1.46824 | 0.28653 |
| BSD100 | | 0.15037 | 1.16250 | 0.35662 | 0.76287 | 0.28375 |
| Urban100 | | 0.56531 | 2.54659 | 2.88541 | 3.57819 | 1.98290 |
| Urban100 | | 0.54750 | 2.05804 | 3.67770 | 3.98965 | 1.65246 |
| Urban100 | | 0.71415 | 3.70353 | 1.79873 | 3.80090 | 1.40827 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Zha, L.; Yang, Y.; Lai, Z.; Zhang, Z.; Wen, J. A Lightweight Dense Connected Approach with Attention on Single Image Super-Resolution. Electronics 2021, 10, 1234. https://doi.org/10.3390/electronics10111234
