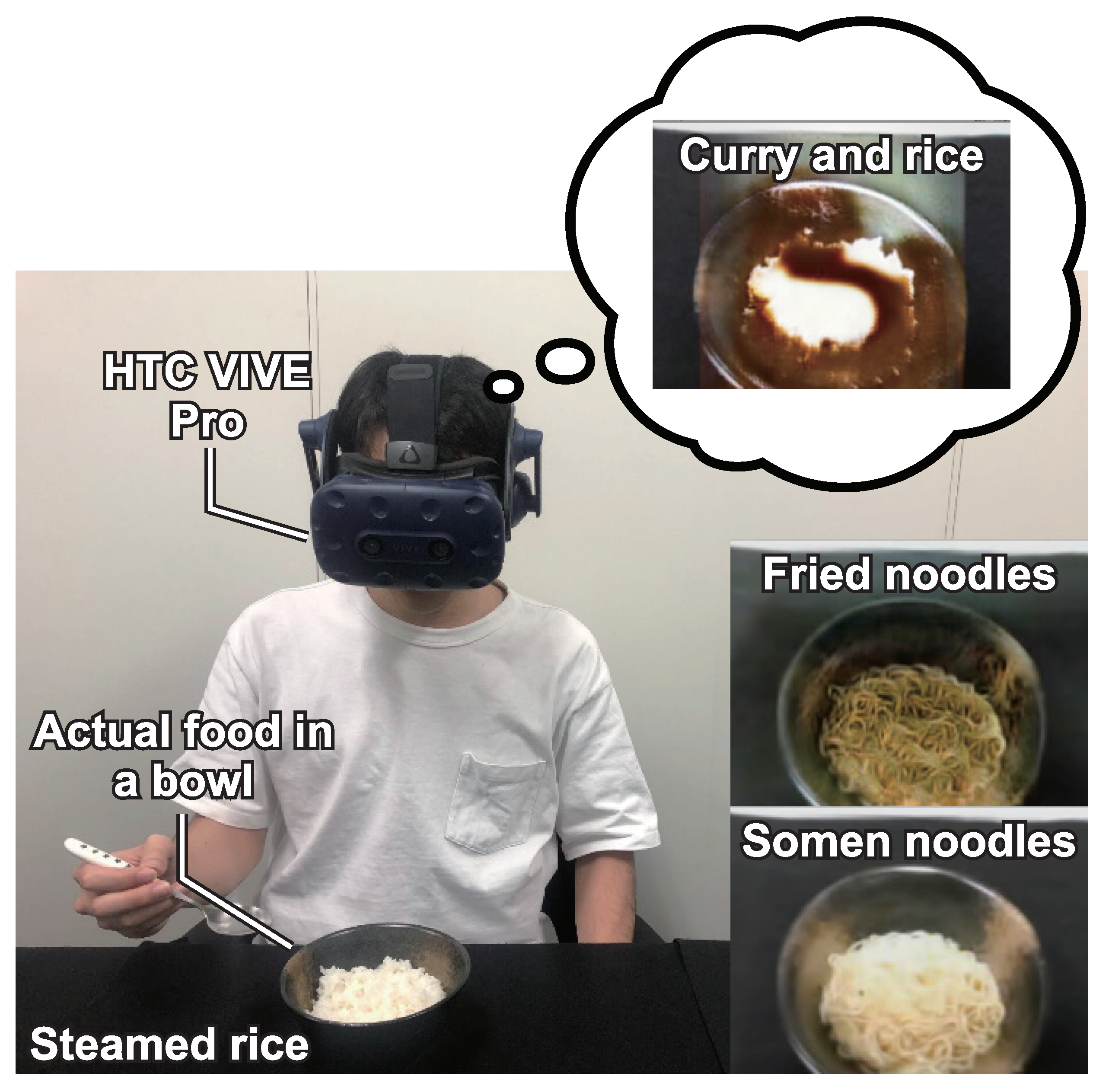

Figure 1.

Our GAN-based real-time food-to-food translation system in action. (left) User with a video see-through HMD experiencing vision-induced gustatory manipulation of Rice Conditions. (right bottom) Examples of Noodle Conditions.

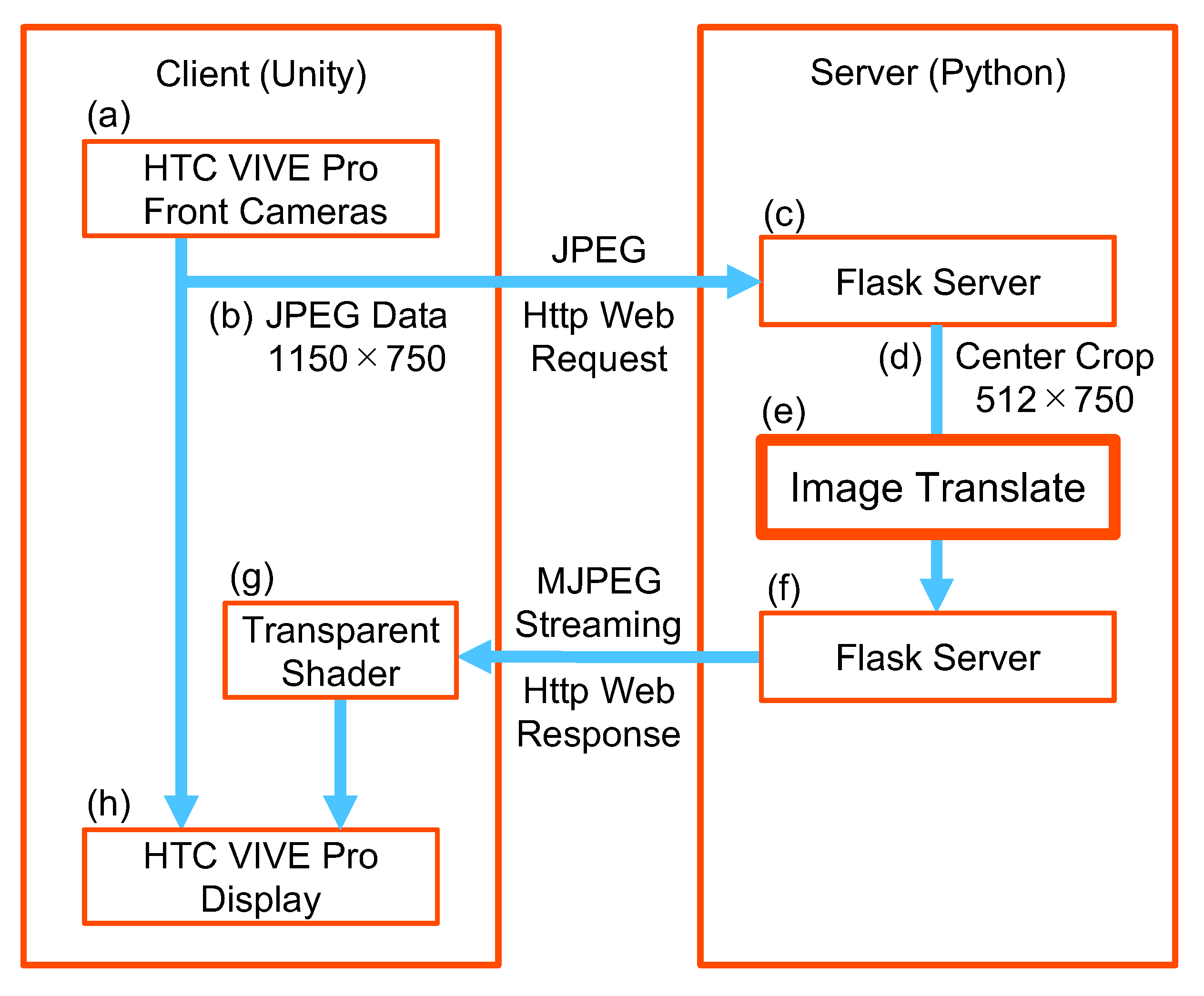

Figure 2.

System configuration of the GAN-based food-to-food translation system. The client module acquires an RGB image from the camera (a), sends it to the server (b), overlays the processed image on the video background (g), and presents the scene to the user (h). The server module receives the sent image (c), crops the center (d), translates it to another food image (e), and sends it back (f).
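As a rough illustration of steps (d), (e), and (g) in this configuration, the following Python sketch crops the center of a frame, passes the crop through a translation function, and pastes the result back onto the original frame. The function names, the nested-list image representation, and the `translate` callback are assumptions made for illustration; the caption does not specify the crop size, the image format, or the interface to the GAN.

```python
def center_crop(image, size):
    """Return the size x size square at the center of a row-major image."""
    height, width = len(image), len(image[0])
    if size > height or size > width:
        raise ValueError("crop size exceeds image dimensions")
    top = (height - size) // 2
    left = (width - size) // 2
    return [row[left:left + size] for row in image[top:top + size]]


def overlay_center(background, patch):
    """Return a copy of background with a square patch pasted over its center."""
    size = len(patch)
    top = (len(background) - size) // 2
    left = (len(background[0]) - size) // 2
    result = [list(row) for row in background]  # copy so the input is untouched
    for r, patch_row in enumerate(patch):
        result[top + r][left:left + size] = patch_row
    return result


def process_frame(frame, size, translate):
    """Crop (d), translate (e), and overlay (g) a single frame."""
    patch = center_crop(frame, size)
    translated = translate(patch)  # stand-in for the GAN translation
    return overlay_center(frame, translated)


# Hypothetical 4x4 single-channel frame and a placeholder "translation"
# that simply negates pixel values.
frame = [[r * 4 + c for c in range(4)] for r in range(4)]
invert = lambda patch: [[-v for v in row] for row in patch]
output = process_frame(frame, 2, invert)
```

In this sketch only the central region changes, so the surrounding video background stays as captured, which mirrors the overlay described in step (g).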

Figure 3.

A participant in a noodle condition. Participants ate food placed on a black table surrounded by a white wall while wearing the HMD.

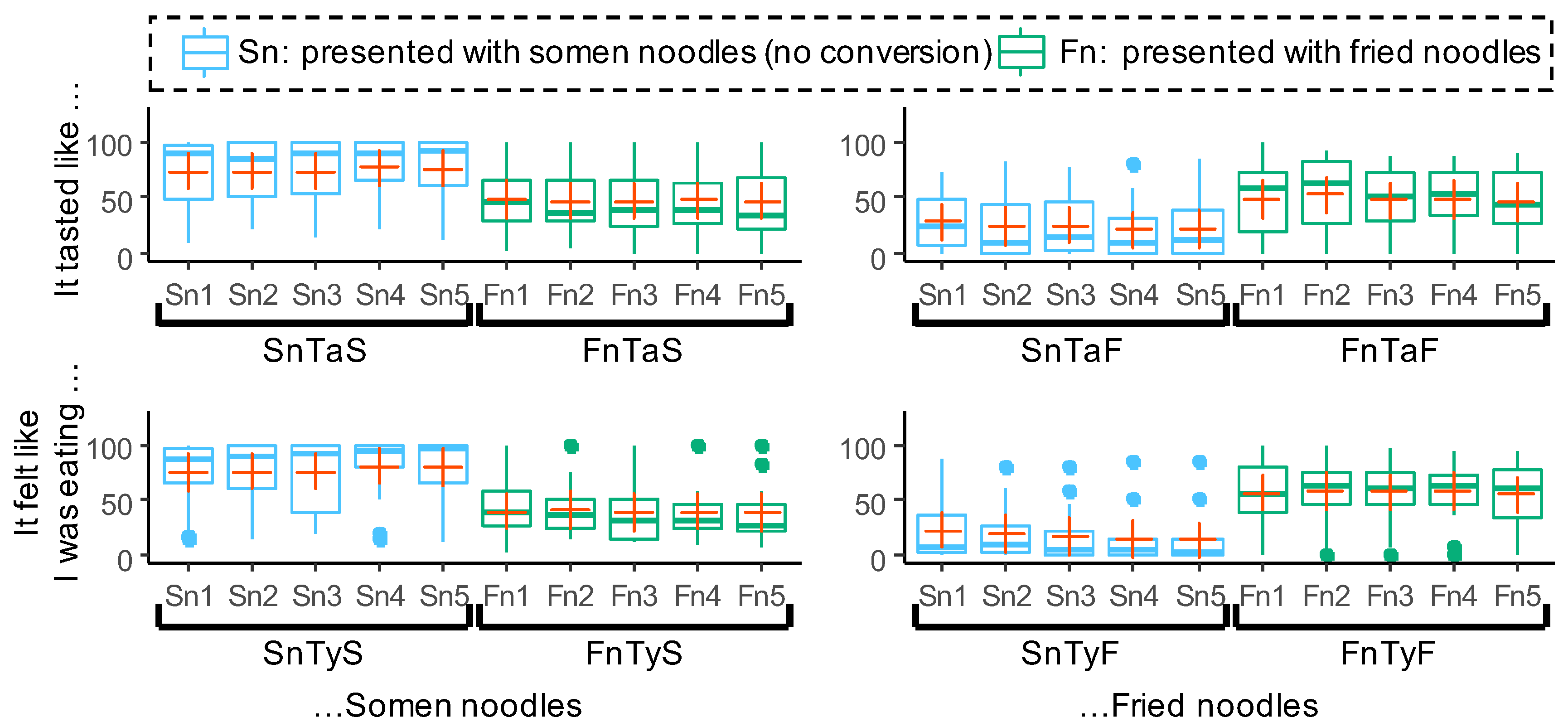

Figure 4.

VAS scores in the noodle conditions. The box plots in the upper and lower rows correspond to the results for Q1 and Q2, and Q3 and Q4, respectively. The red crosses indicate the mean values, and the dots indicate the outliers. For example, the upper left graph, where “It tasted like …” and “…Somen noodles” intersect, shows the results of Q1, where “It tasted like somen noodles” was asked, and Sn1 shows the VAS score of the first bite under Sn conditions. In addition, the entire Sn1–Sn5 is denoted as SnTaS for identification purposes.

Figure 5.

Relative change in VAS scores from the first to the fifth bite for each participant. Participants were divided into three groups based on the differences in VAS scores between the first and fifth bites: Up group (those increased by 10 points or more), Down group (those decreased by 10 points or more), and Stay group (those changed by less than 10 points). For example, the upper left graph, where “It tasted like…” and “…Somen noodles” intersect, shows the results of SnTaS, where “It tasted like somen noodles” was asked, and the n-th VAS score shows the VAS score of the n-th bite under Sn conditions.
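The grouping rule in this caption can be sketched in a few lines of Python. This is a minimal illustration of the stated 10-point threshold, not the authors' analysis code; the function names and the sample scores are hypothetical.

```python
from collections import Counter


def classify_change(first, fifth, threshold=10):
    """Assign a participant to Up, Down, or Stay from the first and fifth bite."""
    change = fifth - first
    if change >= threshold:
        return "Up"
    if change <= -threshold:
        return "Down"
    return "Stay"


def count_groups(bite_scores, threshold=10):
    """Count participants per group; each entry holds five per-bite VAS scores."""
    groups = Counter(
        classify_change(scores[0], scores[4], threshold) for scores in bite_scores
    )
    return {name: groups.get(name, 0) for name in ("Up", "Stay", "Down")}


# Hypothetical VAS scores for four participants (first to fifth bite).
sample_scores = [
    [40, 45, 50, 55, 60],  # change of +20
    [70, 68, 66, 64, 65],  # change of -5
    [80, 70, 60, 55, 50],  # change of -30
    [30, 32, 35, 38, 39],  # change of +9
]
summary = count_groups(sample_scores)
```

Only the first and fifth scores enter the rule, so a participant whose scores fluctuate in between but end within 10 points of the start still falls in the Stay group.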

Figure 6.

VAS scores with regard to nationality and gender for Q1 and Q2 in the noodle conditions. Interactions are indicated to the right of the result group names SnTaS, FnTaS, and FnTaF. The red crosses indicate the mean values, and the dots indicate the outliers. Significant differences are indicated with the symbols ***, **, *, and +. The upper graphs show the classification by nationality, where Int indicates international participants and Jpn indicates Japanese participants. The lower graphs show the classification by gender, where F indicates female participants and M indicates male participants. For example, the upper left graph shows the values of Sn1–Sn5 of SnTaS by nationality.

Figure 7.

VAS scores with regard to nationality and gender for Q3 and Q4 in the noodle conditions. Interactions are indicated to the right of the result group names SnTyS and FnTyS. The red crosses indicate the mean values, and the dots indicate the outliers. Significant differences are indicated with the symbols ***, **, *, and +. The upper graphs show the classification by nationality, where Int indicates international participants and Jpn indicates Japanese participants. The lower graphs show the classification by gender, where F indicates female participants and M indicates male participants. For example, the upper left graph shows the values of Sn1–Sn5 of SnTyS by nationality.

Figure 8.

VAS scores in the rice conditions. The box plots in the upper and lower rows correspond to the results for Q1 and Q2, and Q3 and Q4, respectively. The red crosses indicate the mean values, and the dots indicate the outliers. For example, the upper left graph, where “It tasted like …” and “…Steamed rice” intersect, shows the results of Q1, where “It tasted like steamed rice” was asked, and Sr1 shows the VAS score of the first bite under Sr conditions. In addition, the entire Sr1–Sr5 is denoted as SrTaS for identification purposes.

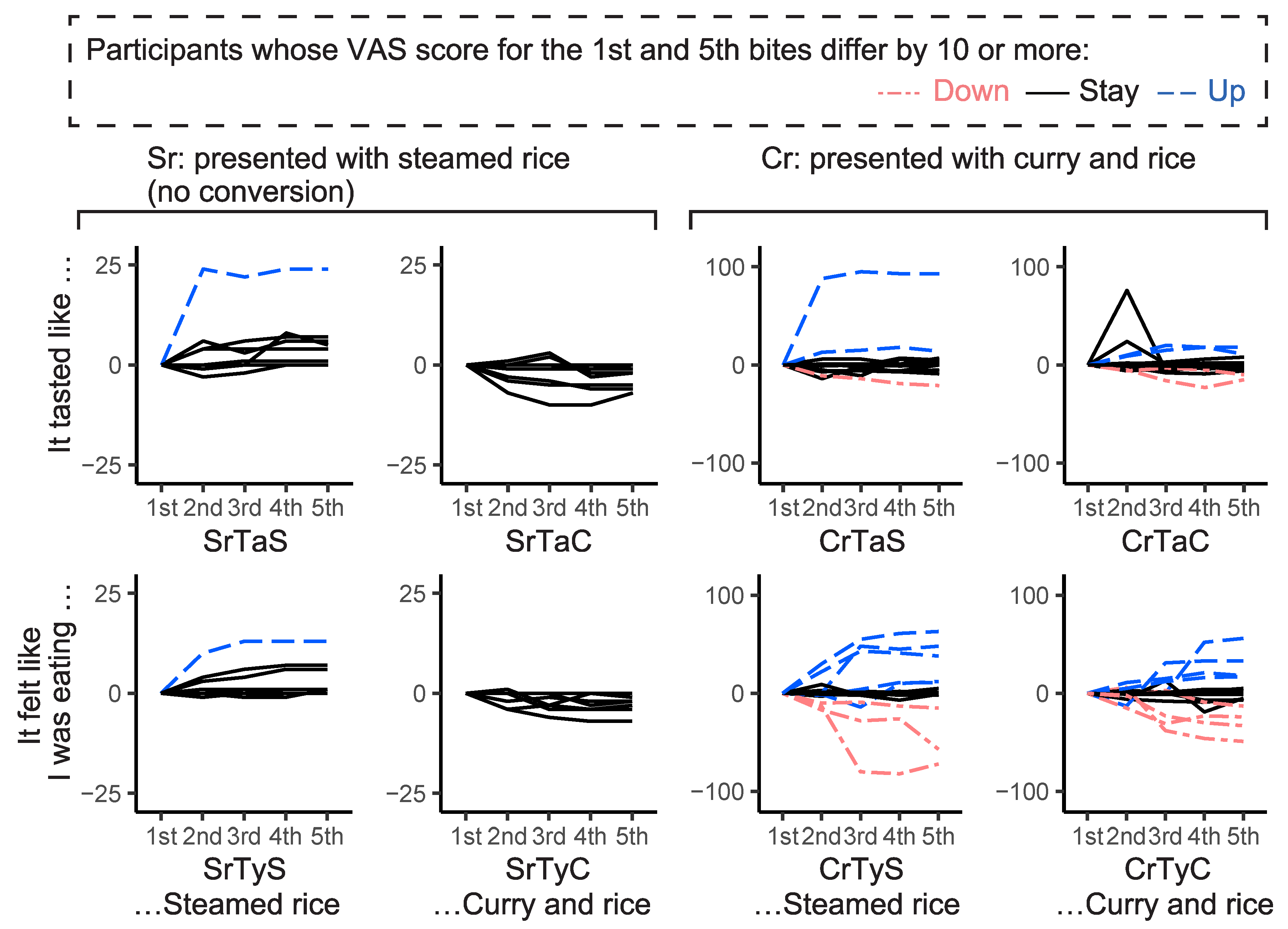

Figure 9.

Relative change in VAS scores from the first to the fifth bite for each participant. Participants were divided into three groups based on the difference in VAS scores between the first and fifth bites: Up group (those increased by 10 points or more), Down group (those decreased by 10 points or more), and Stay group (those changed by less than 10 points). For example, the upper left graph, where “It tasted like …” and “…Steamed rice” intersect, shows the results of SrTaS, where “It tasted like steamed rice” was asked, and the n-th VAS score shows the VAS score of the n-th bite under Sr conditions.

Figure 10.

VAS scores with regard to nationality and gender for Q1 and Q2 in the rice conditions. Interactions are indicated to the right of the result group names CrTaS and CrTaC. The red crosses indicate the mean values, and the dots indicate the outliers. Significant differences are indicated with the symbols ***, **, *, and +. The upper graphs show the classification by nationality, where Int indicates international participants and Jpn indicates Japanese participants. The lower graphs show the classification by gender, where F indicates female participants and M indicates male participants. For example, the upper left graph shows the values of Sr1–Sr5 of SrTaS by nationality.

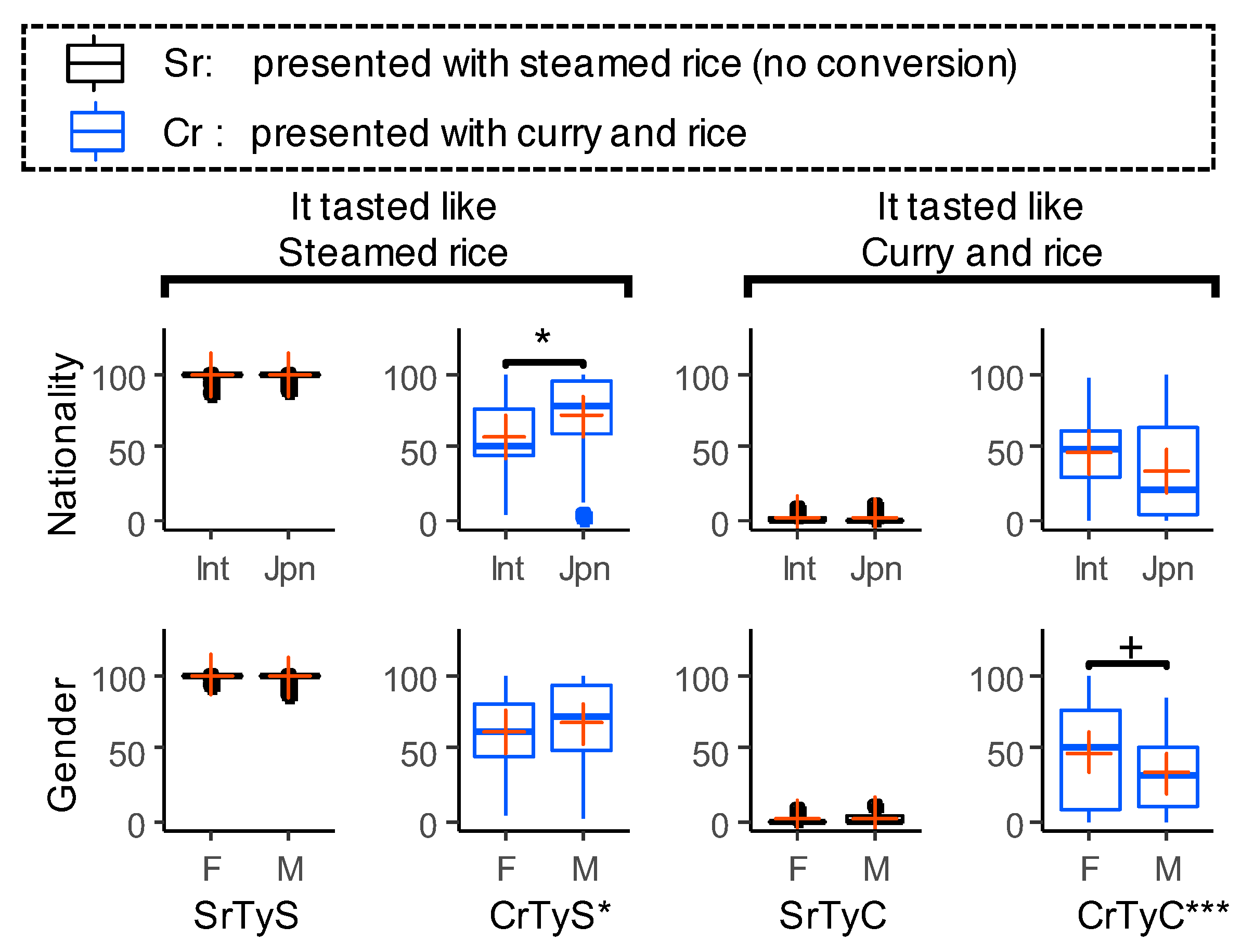

Figure 11.

VAS scores with regard to nationality and gender for Q3 and Q4 in the rice conditions. Interactions are indicated to the right of the result group names CrTyS and CrTyC. The red crosses indicate the mean values, and the dots indicate the outliers. Significant differences are indicated with the symbols ***, **, *, and +. The upper graphs show the classification by nationality, where Int indicates international participants and Jpn indicates Japanese participants. The lower graphs show the classification by gender, where F indicates female participants and M indicates male participants. For example, the upper left graph shows the values of Sr1–Sr5 of SrTyS by nationality.

Table 1.

Image dataset used to train the network.

| Category | Number of Images |
|---|---|
| Ramen noodles | 75,350 |
| Fried noodles | 28,400 |
| Steamed rice | 7,390 |
| Curry & rice | 9,830 |
| Fried rice | 28,400 |
| Total | 149,370 |
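The reported total can be checked directly from the per-category counts, and the same counts give each category's share of the dataset. The sketch below is only a sanity check on the table; the variable names are illustrative.

```python
# Per-category image counts as listed in Table 1.
image_counts = {
    "Ramen noodles": 75_350,
    "Fried noodles": 28_400,
    "Steamed rice": 7_390,
    "Curry & rice": 9_830,
    "Fried rice": 28_400,
}
reported_total = 149_370

# Sum the categories and compare against the total row of the table.
computed_total = sum(image_counts.values())
totals_match = computed_total == reported_total

# Fraction of the dataset contributed by each category.
shares = {
    category: count / computed_total for category, count in image_counts.items()
}

for category, share in shares.items():
    print(f"{category}: {share:.1%}")
```

The counts are uneven: ramen noodles account for roughly half of the images, about ten times the count for steamed rice.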

Table 2.

Number of participants in each group according to the change in VAS score between the first and fifth bites in the noodle conditions (up: VAS score increased by 10 or more; stay: VAS score changed by less than 10; down: VAS score decreased by 10 or more).

| | Sn | Sn | Sn | Sn | Fn | Fn | Fn | Fn |
|---|---|---|---|---|---|---|---|---|
| | Taste | Taste | Type | Type | Taste | Taste | Type | Type |
| | SnTaS | SnTaF | SnTyS | SnTyF | FnTaS | FnTaF | FnTyS | FnTyF |
| up | 3 | 2 | 4 | 0 | 4 | 5 | 5 | 6 |
| stay | 11 | 8 | 12 | 11 | 6 | 6 | 4 | 5 |
| down | 2 | 6 | 0 | 5 | 6 | 5 | 7 | 5 |

Table 3.

ANOVA results for Q1 and Q2 in the noodle conditions. Significant differences are indicated with the symbols ***, **, *, and +; n.s. indicates no significant difference. “Nationality: Gender” shows the interaction between nationality and gender. The interactions “Persistency: Nationality”, “Persistency: Gender”, and “Persistency: Nationality: Gender” are omitted because none showed a significant difference.

| It Tasted Like… | …Somen Noodles | …Somen Noodles | …Fried Noodles | …Fried Noodles |
|---|---|---|---|---|
| Condition | SnTaS | FnTaS | SnTaF | FnTaF |
| Persistency | n.s. | n.s. | n.s. | n.s. |
| Nationality | *** | n.s. | + | * |
| Gender | *** | *** | + | *** |
| Nationality: Gender | *** | *** | n.s. | * |

Table 4.

ANOVA results for Q3 and Q4 in the noodle conditions. Significant differences are indicated with the symbols ***, **, *, and +; n.s. indicates no significant difference. “Nationality: Gender” shows the interaction between nationality and gender. The interactions “Persistency: Nationality”, “Persistency: Gender”, and “Persistency: Nationality: Gender” are omitted because none showed a significant difference.

| It Felt Like I Was Eating… | …Somen Noodles | …Somen Noodles | …Fried Noodles | …Fried Noodles |
|---|---|---|---|---|
| Condition | SnTyS | FnTyS | SnTyF | FnTyF |
| Persistency | n.s. | n.s. | n.s. | n.s. |
| Nationality | *** | n.s. | * | * |
| Gender | *** | n.s. | * | n.s. |
| Nationality: Gender | *** | ** | n.s. | n.s. |

Table 5.

Number of participants in each group according to the change in VAS score between the first and fifth bites in the rice conditions (up: VAS score increased by 10 or more; stay: VAS score changed by less than 10; down: VAS score decreased by 10 or more).

| | Sr | Sr | Sr | Sr | Cr | Cr | Cr | Cr |
|---|---|---|---|---|---|---|---|---|
| | Taste | Taste | Type | Type | Taste | Taste | Type | Type |
| | SrTaS | SrTaC | SrTyS | SrTyC | CrTaS | CrTaC | CrTyS | CrTyC |
| up | 1 | 0 | 1 | 0 | 2 | 2 | 5 | 4 |
| stay | 15 | 16 | 15 | 16 | 13 | 12 | 8 | 8 |
| down | 0 | 0 | 0 | 0 | 1 | 2 | 3 | 4 |

Table 6.

ANOVA results for Q1 and Q2 in the rice conditions. Significant differences are indicated with the symbols ***, **, *, and +; n.s. indicates no significant difference. “Nationality: Gender” shows the interaction between nationality and gender. The interactions “Persistency: Nationality”, “Persistency: Gender”, and “Persistency: Nationality: Gender” are omitted because none showed a significant difference.

| It Tasted Like… | …Steamed Rice | …Steamed Rice | …Curry and Rice | …Curry and Rice |
|---|---|---|---|---|
| Condition | SrTaS | CrTaS | SrTaC | CrTaC |
| Persistency | ** | n.s. | n.s. | n.s. |
| Nationality | * | *** | + | *** |
| Gender | + | *** | n.s. | *** |
| Nationality: Gender | n.s. | *** | n.s. | ** |

Table 7.

ANOVA results for Q3 and Q4 in the rice conditions. Significant differences are indicated with the symbols ***, **, *, and +; n.s. indicates no significant difference. “Nationality: Gender” shows the interaction between nationality and gender. The interactions “Persistency: Nationality”, “Persistency: Gender”, and “Persistency: Nationality: Gender” are omitted because none showed a significant difference.

| It Felt Like I Was Eating… | …Steamed Rice | …Steamed Rice | …Curry and Rice | …Curry and Rice |
|---|---|---|---|---|
| Condition | SrTyS | CrTyS | SrTyC | CrTyC |
| Persistency | n.s. | n.s. | n.s. | n.s. |
| Nationality | n.s. | * | n.s. | n.s. |
| Gender | n.s. | n.s. | n.s. | + |
| Nationality: Gender | n.s. | * | n.s. | *** |