PF-TL: Payload Feature-Based Transfer Learning for Dealing with the Lack of Training Data

Abstract

1. Introduction

2. Preliminaries

2.1. Transfer Learning

2.1.1. Categorization According to Similarity between Domains and Feature Space

2.1.2. Categorization According to Transition Content

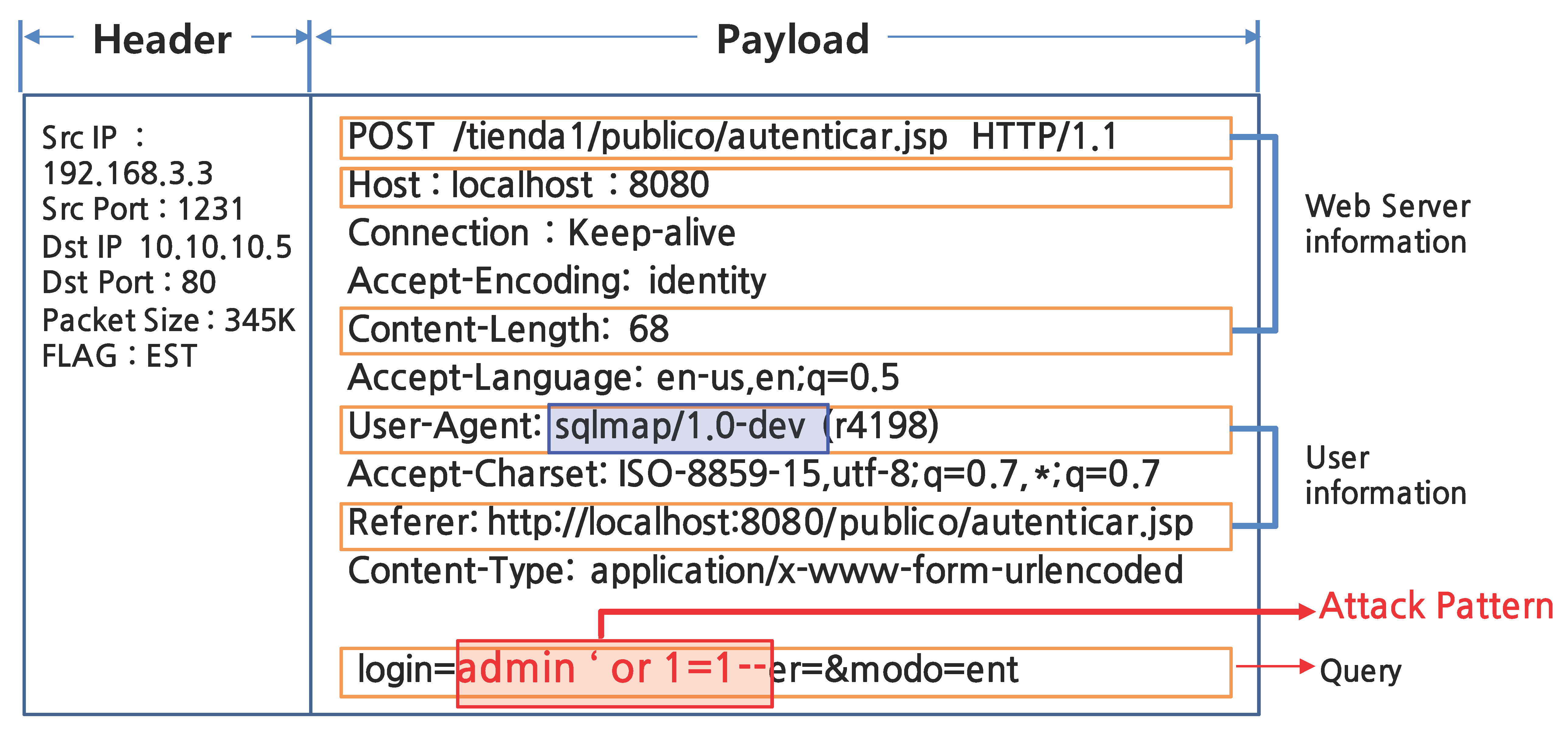

2.2. Intrusion Detection

2.2.1. Signature-Based Intrusion Detection

2.2.2. Anomaly-Based Intrusion Detection

3. PF-TL: Payload Feature-Based Transfer Learning

3.1. Overall Process of Proposed Approach

3.2. Hybrid Feature Extraction for Identifying Attack and Domain Characteristics

3.3. Optimizing Latent Subspace for Both Source and Target Domain Data

- All operations are carried out as matrix operations.

- Both matrices being optimized are initialized with random values drawn from N(0, 1).

- The size of each change is estimated using the Frobenius norm.

- The resulting matrices, whether taken from the initial or the calculated values, are then made mutually orthogonal through QR decomposition.
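
The points above can be illustrated with a minimal NumPy sketch. The matrix names `P_s` and `P_t`, their shapes, and the helper functions are assumptions made for illustration only; the paper's own symbols are not recoverable from this section.

```python
import numpy as np


def init_orthonormal(n_rows, n_cols, rng):
    """Draw an N(0, 1) matrix and orthonormalize its columns with QR."""
    raw = rng.standard_normal((n_rows, n_cols))
    q, _ = np.linalg.qr(raw)  # reduced QR: q has orthonormal columns
    return q


def frobenius_change(new, old):
    """Size of a change between two matrices, measured with the Frobenius norm."""
    return np.linalg.norm(new - old, ord="fro")


rng = np.random.default_rng(0)
P_s = init_orthonormal(8, 3, rng)  # hypothetical source-side matrix
P_t = init_orthonormal(8, 3, rng)  # hypothetical target-side matrix
```

Orthonormalizing with QR keeps the columns of each matrix mutually orthogonal, so the Frobenius norm of a difference reflects how far an update moved rather than a change of scale.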

| Algorithm 1: Optimization performance algorithm. |
|---|
| Input: step = 10,000, tol = |
| Output: |
| Step 1. Normalize T′, S′ |
| Step 2. PCA(S), PCA(T), for Features k |
| Step 3. Initialize (QR denotes QR decomposition) |
| Step 4. While Steps 4-1 to 4-6 have not converged and step < steps |
| Step 4-1. Calculate gradient |
| Step 4-2. Calculate Frobenius norm |
| Step 4-3. Update |
| Step 4-4. Update |
| Step 4-5. Update |
| Step 4-6. Calculate |
| End while |
| Step 5. Calculate orthogonal matrix Q (QR denotes QR decomposition) |
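
The control flow of Algorithm 1 (normalize, reduce with PCA, iterate until the Frobenius norm of the change falls below a tolerance or the step limit is reached, then orthogonalize with QR) can be sketched as follows. The objective used here, a simple alignment of the source PCA basis to the target PCA basis, is a stand-in chosen for illustration and is not the paper's objective; the function names and the values of `tol`, `lr`, and `seed` are likewise assumptions.

```python
import numpy as np


def zscore(x):
    """Step 1: column-wise standardization (constant columns are left at zero)."""
    std = x.std(axis=0)
    std[std == 0] = 1.0
    return (x - x.mean(axis=0)) / std


def pca_basis(x, k):
    """Step 2: top-k principal directions (d x k) via SVD of the centered data."""
    centered = x - x.mean(axis=0)
    _, _, vt = np.linalg.svd(centered, full_matrices=False)
    return vt[:k].T


def align_subspaces(source, target, k, steps=10_000, tol=1e-8, lr=0.1, seed=0):
    """Control-flow sketch with a stand-in objective ||B_s M - B_t||_F^2."""
    b_s = pca_basis(zscore(source), k)
    b_t = pca_basis(zscore(target), k)
    rng = np.random.default_rng(seed)
    m, _ = np.linalg.qr(rng.standard_normal((k, k)))  # Step 3: N(0, 1) init + QR
    n_steps = 0
    for _ in range(steps):                             # Step 4: iterate
        n_steps += 1
        grad = 2.0 * b_s.T @ (b_s @ m - b_t)           # gradient of the stand-in objective
        change = lr * np.linalg.norm(grad, ord="fro")  # Frobenius norm of the update
        m = m - lr * grad                              # gradient-descent update
        if change < tol:                               # converged: stop early
            break
    q, _ = np.linalg.qr(m)                             # Step 5: orthogonal matrix Q
    return q, n_steps
```

The loop stops on whichever comes first, convergence or the step limit, which is why the continuation test combines both conditions.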

4. Experiments and Evaluation

4.1. Dataset

4.1.1. PKDD2007

4.1.2. CSIC2012

4.1.3. WAF2020

4.2. Data Preprocessing

4.2.1. Normalization

| Sample 1: Contents of XML file [54]. |
|---|
| <sample id="88888"> <reqContext> <os>WINDOWS</os> ….. </reqContext> <class> <type>xxxxxxx</type> <inContext>FALSE</inContext> <attackIntervall>xxxxxx</attackIntervall> </class> <request> <method>POST</method> <protocol>HTTP/1.0</protocol> <uri></cxxxxcktp/WKKj_l3333/iAdgxxxx6mMT.gif]></uri> <query><D1d=%5Bddt%2Fsxl&loh=5nddd5Ni=aL1]></query> …… Accept-Language: ++insert+??+++from+++ith+ Referer: http://www.xxxxx.biz/icexxx/uoxxx.zip User-Agent: Mozilla/7.9 (Machintosh; K; PPD 6.1]></headers> </request> </sample> |
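
A record of this shape can be read with Python's standard `xml.etree.ElementTree` module, as in the sketch below. The `RECORD` string is a hypothetical, well-formed stand-in that reuses the element names visible in Sample 1; its values are placeholders, not data from the dataset. The percent-decoding step is one plausible normalization and is an assumption, not a description of the paper's procedure.

```python
import xml.etree.ElementTree as ET
from urllib.parse import unquote

# Hypothetical record mirroring the element names in Sample 1 (placeholder values).
RECORD = """<sample id="1">
  <reqContext><os>WINDOWS</os></reqContext>
  <class><type>XSS</type><inContext>FALSE</inContext></class>
  <request>
    <method>POST</method>
    <protocol>HTTP/1.0</protocol>
    <uri><![CDATA[/index.php]]></uri>
    <query><![CDATA[id=%5B1%5D&name=a]]></query>
    <headers><![CDATA[User-Agent: Mozilla/5.0]]></headers>
  </request>
</sample>"""


def parse_sample(xml_text):
    """Pull the label and the payload-bearing fields out of one record."""
    root = ET.fromstring(xml_text)
    request = root.find("request")
    query = request.findtext("query", default="")
    return {
        "id": root.get("id"),
        "label": root.findtext("class/type"),
        "method": request.findtext("method"),
        "uri": request.findtext("uri"),
        "query": query,
        "query_decoded": unquote(query),  # assumed normalization: percent-decoding
        "headers": request.findtext("headers"),
    }


record = parse_sample(RECORD)
```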

4.2.2. Field Selection

4.2.3. Feature Extractor and Selection

4.2.4. Sampling

4.3. Evaluation Environment & Metrics

4.4. Experimental Result

4.4.1. Scenario 1: Transfer Learning Comparison for Different Attack Types on the Same Equipment

4.4.2. Scenario 2: Transfer Learning Comparison for Attack Types from Other Equipment

4.4.3. Scenario 3: Application of Scenario 1 and 2 to the Real Environment

4.5. Limitations and Discussion

4.5.1. Algorithm Selection

4.5.2. Parameter Sensitivity

4.5.3. Comparison of Methods of Creating Training Data

5. Related Works

5.1. Payload Based Intrusion Detection

5.2. Transfer Learning for Intrusion Detection

6. Conclusions and Future Work

Author Contributions

Funding

Conflicts of Interest

References

- Hussain, A.; Mohamed, A.; Razali, S. A Review on Cybersecurity: Challenges & Emerging Threats. In Proceedings of the 3rd International Conference on Networking, Information Systems & Security, Marrakech, Morocco, 31 March 2020; pp. 1–7.

- Ibor, A.E.; Oladeji, F.A.; Okunoye, O.B. A Survey of Cyber Security Approaches for Attack Detection, Prediction, and Prevention. IJSIA 2018, 12, 15–28.

- Vinayakumar, R.; Soman, K.P.; Poornachandran, P.; Akarsh, S. Application of Deep Learning Architectures for Cyber Security. In Cybersecurity and Secure Information Systems; Hassanien, A.E., Elhoseny, M., Eds.; Advanced Sciences and Technologies for Security Applications; Springer International Publishing: Cham, Switzerland, 2019; pp. 125–160. ISBN 978-3-030-16836-0.

- Prasad, R.; Rohokale, V. Artificial Intelligence and Machine Learning in Cyber Security. In Cyber Security: The Lifeline of Information and Communication Technology; Springer Series in Wireless Technology; Springer International Publishing: Cham, Switzerland, 2020; pp. 231–247. ISBN 978-3-030-31702-7.

- Khraisat, A.; Gondal, I.; Vamplew, P.; Kamruzzaman, J. Survey of Intrusion Detection Systems: Techniques, Datasets and Challenges. Cybersecurity 2019, 2, 20.

- Pachghare, V.K.; Khatavkar, V.K.; Kulkarni, P.A. Pattern Based Network Security Using Semi-Supervised Learning. IJINS 2012, 1, 228–234.

- Gou, S.; Wang, Y.; Jiao, L.; Feng, J.; Yao, Y. Distributed Transfer Network Learning Based Intrusion Detection. In Proceedings of the 2009 IEEE International Symposium on Parallel and Distributed Processing with Applications, Chengdu, China, 10–12 August 2009; pp. 511–515.

- Epp, N.; Funk, R.; Cappo, C. Anomaly-based Web Application Firewall using HTTP-specific features and One-Class SVM. In Proceedings of the 2nd Workshop Regional de Segurança da Informação e de Sistemas Computacionais, UFSM, Sta María, Brazil, 25–29 September 2017.

- RFC 7540-Hypertext Transfer Protocol Version 2 (HTTP/2). Available online: https://tools.ietf.org/html/rfc7540 (accessed on 18 February 2021).

- Ojagbule, O.; Wimmer, H.; Haddad, R.J. Vulnerability Analysis of Content Management Systems to SQL Injection Using SQLMAP. In Proceedings of the SoutheastCon 2018, St. Petersburg, FL, USA, 19–22 April 2018; pp. 1–7.

- Blowers, M.; Williams, J. Machine Learning Applied to Cyber Operations. In Network Science and Cybersecurity; Pino, R.E., Ed.; Advances in Information Security; Springer: New York, NY, USA, 2014; Volume 55, pp. 155–175. ISBN 978-1-4614-7596-5.

- Zhang, M.; Xu, B.; Bai, S.; Lu, S.; Lin, Z. A Deep Learning Method to Detect Web Attacks Using a Specially Designed CNN. In Neural Information Processing; Liu, D., Xie, S., Li, Y., Zhao, D., El-Alfy, E.-S.M., Eds.; Lecture Notes in Computer Science; Springer International Publishing: Cham, Switzerland, 2017; Volume 10638, pp. 828–836. ISBN 978-3-319-70138-7.

- Pastrana, S.; Torrano-Gimenez, C.; Nguyen, H.T.; Orfila, A. Anomalous Web Payload Detection: Evaluating the Resilience of 1-Grams Based Classifiers. In Intelligent Distributed Computing VIII; Camacho, D., Braubach, L., Venticinque, S., Badica, C., Eds.; Studies in Computational Intelligence; Springer International Publishing: Cham, Switzerland, 2015; Volume 570, pp. 195–200. ISBN 978-3-319-10421-8.

- Betarte, G.; Pardo, A.; Martinez, R. Web Application Attacks Detection Using Machine Learning Techniques. In Proceedings of the 2018 17th IEEE International Conference on Machine Learning and Applications (ICMLA), Orlando, FL, USA, 17–20 December 2018; pp. 1065–1072.

- Chen, C.; Gong, Y.; Tian, Y. Semi-Supervised Learning Methods for Network Intrusion Detection. In Proceedings of the 2008 IEEE International Conference on Systems, Man and Cybernetics, Singapore, 12–15 October 2008; pp. 2603–2608.

- Kim, J.; Jeong, J.; Shin, J. M2m: Imbalanced Classification via Major-to-Minor Translation. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 16–18 June 2020; pp. 13893–13902.

- Csurka, G. A Comprehensive Survey on Domain Adaptation for Visual Applications. In Domain Adaptation in Computer Vision Applications; Csurka, G., Ed.; Advances in Computer Vision and Pattern Recognition; Springer International Publishing: Cham, Switzerland, 2017; pp. 1–35. ISBN 978-3-319-58346-4.

- Zhuang, F.; Qi, Z.; Duan, K.; Xi, D.; Zhu, Y.; Zhu, H.; Xiong, H.; He, Q. A Comprehensive Survey on Transfer Learning. Proc. IEEE 2021, 109, 43–76.

- Weiss, K.; Khoshgoftaar, T.M.; Wang, D. A Survey of Transfer Learning. J. Big Data 2016, 3, 9.

- Pan, S.J.; Yang, Q. A Survey on Transfer Learning. IEEE Trans. Knowl. Data Eng. 2010, 22, 1345–1359.

- Microsoft Researchers Work with Intel Labs to Explore New Deep. Available online: https://www.microsoft.com/security/blog/2020/05/08/microsoft-researchers-work-with-intel-labs-to-explore-new-deep-learning-approaches-for-malware-classification/ (accessed on 18 February 2021).

- Use Transfer Learning for Efficient Deep Learning Training on Intel. Available online: https://software.intel.com/content/www/us/en/develop/articles/use-transfer-learning-for-efficient-deep-learning-training-on-intel-xeon-processors.html (accessed on 18 February 2021).

- Wu, P.; Guo, H.; Buckland, R. A Transfer Learning Approach for Network Intrusion Detection. In Proceedings of the 2019 IEEE 4th International Conference on Big Data Analytics (ICBDA), Suzhou, China, 15–18 March 2019; pp. 281–285.

- Zhao, J.; Shetty, S.; Pan, J.W.; Kamhoua, C.; Kwiat, K. Transfer Learning for Detecting Unknown Network Attacks. EURASIP J. Inf. Secur. 2019, 1.

- Zhao, J.; Shetty, S.; Pan, J.W. Feature-Based Transfer Learning for Network Security. In Proceedings of the MILCOM 2017-2017 IEEE Military Communications Conference (MILCOM), Baltimore, MD, USA, 23–25 October 2017; pp. 17–22.

- KDD Cup 1999 Dataset. Available online: http://kdd.ics.uci.edu/databases/kddcup99/kddcup99.html (accessed on 18 February 2021).

- Wang, J.; Chen, Y.; Yu, H.; Huang, M.; Yang, Q. Easy Transfer Learning By Exploiting Intra-Domain Structures. In Proceedings of the 2019 IEEE International Conference on Multimedia and Expo (ICME), Shanghai, China, 8–12 July 2019; pp. 1210–1215.

- Buczak, A.L.; Guven, E. A Survey of Data Mining and Machine Learning Methods for Cyber Security Intrusion Detection. IEEE Commun. Surv. Tutor. 2016, 18, 1153–1176.

- Althubiti, S.; Yuan, X.; Esterline, A. Analyzing HTTP requests for web intrusion detection. In Proceedings of the 2017 KSU Conference on Cybersecurity Education, Research and Practice, Kennesaw, GA, USA, 20–21 October 2017; pp. 1–11.

- Appelt, D.; Nguyen, C.D.; Panichella, A.; Briand, L.C. A Machine-Learning-Driven Evolutionary Approach for Testing Web Application Firewalls. IEEE Trans. Rel. 2018, 67, 733–757.

- Wang, J.; Zhou, Z.; Chen, J. Evaluating CNN and LSTM for Web Attack Detection. In Proceedings of the 2018 10th International Conference on Machine Learning and Computing, Macau, China, 26 February 2018; pp. 283–287.

- Betarte, G.; Giménez, E.; Martínez, R.; Pardo, Á. Machine Learning-Assisted Virtual Patching of Web Applications. arXiv 2018, arXiv:1803.05529.

- Mac, H.; Truong, D.; Nguyen, L.; Nguyen, H.; Tran, H.A.; Tran, D. Detecting Attacks on Web Applications using Autoencoder. In Proceedings of the Ninth International Symposium on Information and Communication Technology (SoICT 2018), Danang, Vietnam, 6–7 December 2018; pp. 1–6.

- Yin, C.; Zhu, Y.; Fei, J.; He, X. A Deep Learning Approach for Intrusion Detection Using Recurrent Neural Networks. IEEE Access 2017, 5, 21954–21961.

- Xin, Y.; Kong, L.; Liu, Z.; Chen, Y.; Li, Y.; Zhu, H.; Gao, M.; Hou, H.; Wang, C. Machine Learning and Deep Learning Methods for Cybersecurity. IEEE Access 2018, 6, 35365–35381.

- Wang, K.; Stolfo, S.J. Anomalous Payload-Based Network Intrusion Detection. In Recent Advances in Intrusion Detection; Jonsson, E., Valdes, A., Almgren, M., Eds.; Lecture Notes in Computer Science; Springer: Berlin/Heidelberg, Germany, 2004; Volume 3224, pp. 203–222. ISBN 978-3-540-23123-3.

- Torrano-Gimenez, C.; Nguyen, H.T.; Alvarez, G.; Petrovic, S.; Franke, K. Applying Feature Selection to Payload-Based Web Application Firewalls. In Proceedings of the 2011 Third International Workshop on Security and Communication Networks (IWSCN), Gjovik, Norway, 18–20 May 2011; pp. 75–81.

- Raissi, C.; Brissaud, J.; Dray, G.; Poncelet, P.; Roche, M.; Teisseire, M. Web Analysis Traffic Challenge: Description and Results. In Proceedings of the ECML/PKDD, Warsaw, Poland, 17–21 September 2007; pp. 1–6.

- Liu, X.; Liu, Z.; Wang, G.; Cai, Z.; Zhang, H. Ensemble Transfer Learning Algorithm. IEEE Access 2018, 6, 2389–2396.

- Ge, W.; Yu, Y. Borrowing Treasures from the Wealthy: Deep Transfer Learning through Selective Joint Fine-Tuning. arXiv 2017, arXiv:1702.08690.

- Gao, X.; Shan, C.; Hu, C.; Niu, Z.; Liu, Z. An Adaptive Ensemble Machine Learning Model for Intrusion Detection. IEEE Access 2019, 7, 82512–82521.

- Kreibich, C.; Crowcroft, J. Honeycomb: Creating intrusion detection signatures using honeypots. ACM SIGCOMM Comput. Commun. Rev. 2004, 34, 51–56.

- Nguyen, H.; Franke, K.; Petrovic, S. Improving Effectiveness of Intrusion Detection by Correlation Feature Selection. In Proceedings of the 2010 International Conference on Availability, Reliability and Security, Krakow, Poland, 15–18 February 2010; pp. 17–24.

- Chanthini, S.; Latha, K. Log based internal intrusion detection for web applications. Int. J. Adv. Res. Ideas Innov. Technol. 2019, 5, 350–353.

- Vinayakumar, R.; Alazab, M.; Soman, K.P.; Poornachandran, P.; Al-Nemrat, A.; Venkatraman, S. Deep Learning Approach for Intelligent Intrusion Detection System. IEEE Access 2019, 7, 41525–41550.

- Zhong, X.; Guo, S.; Shan, H.; Gao, L.; Xue, D.; Zhao, N. Feature-Based Transfer Learning Based on Distribution Similarity. IEEE Access 2018, 6, 35551–35557.

- Zeiler, M.D. ADADELTA: An Adaptive Learning Rate Method. arXiv 2012, arXiv:1212.5701.

- Gallagher, B.; Eliassi-Rad, T. Classification of HTTP Attacks: A Study on the ECML/PKDD 2007 Discovery Challenge. Available online: https://www.osti.gov/biblio/1113394-classification-http-attacks-study-ecml-pkdd-discovery-challenge (accessed on 18 February 2021).

- Csic Torpeda 2012, http Data Sets. Available online: http://www.tic.itefi.csic.es/torpeda (accessed on 18 February 2021).

- IGLOO Security. Available online: http://www.igloosec.com/ (accessed on 18 February 2021).

- Kok, J.; Koronacki, J.; Mantaras, R.L.; Matwin, S.; Mladenič, D.; Skowron, A. Knowledge Discovery in Databases: PKDD 2007. In Proceedings of the 11th European Conference on Principles and Practice of Knowledge Discovery in Databases, Warsaw, Poland, 17–21 September 2007.

- Torrano-Gimenez, C.; Perez-Villegas, A.; Alvarez, G. TORPEDA: Una Especificacion Abierta de Conjuntos de Datos para la Evaluacion de Cortafuegos de Aplicaciones Web; TIN2011-29709-C0201; RECSI: San Sebastián, Spain, 2012.

- Web Attacks Detection Based on CNN-Csic Torpedo 2012 http Data Sets-GitHub. Available online: https://github.com/DuckDuckBug/cnn_waf (accessed on 18 February 2021).

- ECML/PKDD2007 Datasets. Available online: http://www.lirmm.fr/pkdd2007-challenge/index.html#dataset (accessed on 18 February 2021).

- Kim, A.; Park, M.; Lee, D.H. AI-IDS: Application of Deep Learning to Real-Time Web Intrusion Detection. IEEE Access 2020, 8, 70245–70261.

- Wu, H.; Yan, Y.; Ye, Y.; Min, H.; Ng, M.K.; Wu, Q. Online Heterogeneous Transfer Learning by Knowledge Transition. ACM Trans. Intell. Syst. Technol. 2019, 10, 1–19.

- Yuan, D.; Ota, K.; Dong, M.; Zhu, X.; Wu, T.; Zhang, L.; Ma, J. Intrusion Detection for Smart Home Security Based on Data Augmentation with Edge Computing. In Proceedings of the ICC 2020–2020 IEEE International Conference on Communications (ICC), Dublin, Ireland, 7–11 June 2020; pp. 1–6.

- Wang, H.; Gu, J.; Wang, S. An Effective Intrusion Detection Framework Based on SVM with Feature Augmentation. Knowl.-Based Syst. 2017, 136, 130–139.

- Gu, J.; Wang, L.; Wang, H.; Wang, S. A Novel Approach to Intrusion Detection Using SVM Ensemble with Feature Augmentation. Comput. Secur. 2019, 86, 53–62.

- Zhang, H.; Yu, X.; Ren, P.; Luo, C.; Min, G. Deep Adversarial Learning in Intrusion Detection: A Data Augmentation Enhanced Framework. arXiv 2019, arXiv:1901.07949.

- Merino, T.; Stillwell, M.; Steele, M.; Coplan, M.; Patton, J.; Stoyanov, A.; Deng, L. Expansion of Cyber Attack Data from Unbalanced Datasets Using Generative Adversarial Networks. In Software Engineering Research, Management and Applications; Lee, R., Ed.; Studies in Computational Intelligence; Springer International Publishing: Cham, Switzerland, 2020; Volume 845, pp. 131–145. ISBN 978-3-030-24343-2.

- Min, E.; Long, J.; Liu, Q.; Cui, J.; Chen, W. TR-IDS: Anomaly-Based Intrusion Detection through Text-Convolutional Neural Network and Random Forest. Secur. Commun. Netw. 2018, 2018, 4943509.

- The Benefits of Transfer Learning with AI for Cyber Security. Available online: https://www.patternex.com/blog/benefits-transfer-learning-ai-cyber-security (accessed on 18 February 2021).

- Kneale, C.; Sadeghi, K. Semisupervised Adversarial Neural Networks for Cyber Security Transfer Learning. arXiv 2019, arXiv:1907.11129.

| Attack Class | Feature List |
|---|---|
| XSS | ’&’, ’%’, ’/’, ’\\’, ’+’, ”’”, ’?’, ’!’, ’;’, ’#’, ’=’, ’[’, ’]’, ’$’, ’(’, ’)’, ’∧’, ’*’, ’,’, , ’<’, ’>’, ’@’, ’ ’, ’:’, ’{’, ’}’, ’ ’, ’.’, ’ ’, ’\|’, ’”’, ’<>’, ’‘’, ’<>’, ’[]’, ”==”, ’createelement’, ’cookie’, ’document’, ’div’, ’eval()’, ’href’, ’http’, ’iframe’, ’img’, ’location’, ’onerror’, ’onload’, ’string.fromcharcode’, ’src’, ’search’, ’this’, ’var’, ’window’, ’.js’, ’<script’ |
| SQL Injection | ’char’, ’,’, ’’, ’<’, ’>’, ’ ’, ’.’, ’\|’, ’”’, ’(’, ’)’, ’<>’, ’<=’, ’>=’, ’&&’, ’\|\|’, ’:’, ’!=’, ’+’, ’;’, ’ ’, ’count’, ’into’, ’or’, ’and’, ’not’, ’null’, ’select’, ’union’, ’#’, ’insert’, ’update’, ’delete’, ’drop’, ’replace’, ’all’, ’any’, ’from’, ’count’, ’user’, ’where’, ’sp’, ’xp’, ’like’, ’exec’, ’admin’, ’table’, ’sleep’, ’commit’, ’()’, ’between’ |
| LDAP Injection | ’\\’, ’*’, ’(’, ’)’, ’/’, ’+’, ’<’, ’>’, ’;’, ’”’, ’&’, ’\|’, ’(&’, ’(\|’, ’)(’, ’,’, ’!’, ’=’, ’)&’, ’ ’, ’*)’, ’))’, ’&(’, ’+)’, ’=)’, ’cn=’, ’sn=’, ’=*’, ’(\|’, ’mail’, ’objectclass’, ’name’ |
| SSI | ’<!−’, ’−−>’, ’#’, ’+’, ’,’, ’”’, ’access.log’, ’bin/’, ’cmd’, ’dir’, ’dategmt’, ’etc/’, ’#exec’, ’email’, ’fromhost’, ’httpd’, ’log/’, ’message’, ’odbc’, ’replyto’, ’sender’, ’toaddress’, ’virtual’, ’windows’, ’#echo’, ’#include’, ’var’, ’+connect’, ’+statement’, ’/passwd’, ’.conf’, ’+l’, ’.com’, ’:\\’ |
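
One way to turn such per-class lists into numeric features is to count how often each entry occurs in a payload. The sketch below assumes case-insensitive substring counting and uses only a handful of entries copied from the table; the dictionary `KEYWORDS` and the function `keyword_counts` are hypothetical names, and the paper's exact counting rule is not stated in this section.

```python
# A few entries copied from the table above; the full lists are longer.
KEYWORDS = {
    "XSS": ["<script", "onerror", "iframe", "document", "cookie"],
    "SQL Injection": ["select", "union", "insert", "drop", "sleep"],
}


def keyword_counts(payload, attack_class):
    """Count case-insensitive substring occurrences of each keyword."""
    text = payload.lower()
    return {kw: text.count(kw) for kw in KEYWORDS[attack_class]}


xss_vector = keyword_counts("<SCRIPT>alert(document.cookie)</script>", "XSS")
sqli_vector = keyword_counts("id=1 UNION SELECT name FROM users", "SQL Injection")
```

Plain substring counting is simple but coarse: a short entry such as ’or’ would also match inside longer words, so a tokenizing variant may be preferable for the shortest keywords.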

| Feature | Description |
|---|---|
| url_length | Length of the URL area |
| url_kwd_wget_cnt | Number of occurrences of ‘wget’ in the URL area |
| url_kwd_cat_cnt | Number of occurrences of ‘cat’ in the URL area |
| url_kwd_passwd_cnt | Number of occurrences of ‘passwd’ in the URL area |
| url_query_length | Length of the QUERY area |
| query_kwd_wget_cnt | Number of occurrences of ‘wget’ in the QUERY area |
| body_length | Length of the BODY area |
| body_kwd_wget_cnt | Number of occurrences of ‘wget’ in the BODY area |
| http_method_HEAD | Whether the HTTP method is ‘HEAD’ |
| http_method_PUT | Whether the HTTP method is ‘PUT’ |
| digits_freq | Frequency of digits in the URL and QUERY areas |
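
The features in this table could be computed from a parsed request roughly as follows. The function name and its four string arguments are assumptions for illustration, and `digits_freq` is taken here to be a raw count of digit characters, which is one reading of the description.

```python
def extract_features(method, url, query, body):
    """Compute the listed features from the fields of one HTTP request."""
    return {
        "url_length": len(url),
        "url_kwd_wget_cnt": url.count("wget"),
        "url_kwd_cat_cnt": url.count("cat"),
        "url_kwd_passwd_cnt": url.count("passwd"),
        "url_query_length": len(query),
        "query_kwd_wget_cnt": query.count("wget"),
        "body_length": len(body),
        "body_kwd_wget_cnt": body.count("wget"),
        "http_method_HEAD": int(method == "HEAD"),
        "http_method_PUT": int(method == "PUT"),
        # Assumption: "frequency" is read as the number of digit characters.
        "digits_freq": sum(ch.isdigit() for ch in url + query),
    }


features = extract_features(
    method="PUT",
    url="/cgi/cat.php",
    query="f=/etc/passwd&n=42",
    body="wget http://x",
)
```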

| | Scenario 1 | Scenario 2 | Scenario 3 |
|---|---|---|---|
| Objective | Is an accurate detection possible when a new type of attack occurs that does not exist in the training dataset? | Is a well-trained model reusable in other network environments? | Will it perform well in the real environment? |
| Experiment setting | Transfer learning comparison experiment for different attack types on the same equipment | Transfer learning comparison experiment for attack types on different equipment of the same model | Scenario 1 and Scenario 2 |
| Source Domain | Specific attack type dataset with label | Specific target dataset with label | Labeled dataset |
| Target Domain | Unlabeled specific attack type dataset | Unlabeled specific target dataset | Unlabeled dataset |
| Inter-domain Dataset | Same (PKDD2007) | Different (PKDD2007 and CSIC2012) | Different (PKCS, WAF2020) |
| Inter-domain Feature space | Same | Same | Same |
| Inter-domain Feature distribution | Different | Different | Different |

| Dataset | Labeled | Classes | Payload | Total | Breakdown |
|---|---|---|---|---|---|
| PKDD2007 | Yes | 7 | Yes | 50,116 | Normal: 35,006; Attack: 15,110 |
| CSIC2012 | Yes | 10 | Yes | 65,767 | Normal: 8,363; Attack: 57,404 |
| WAF2020 | Yes | 13 | Yes | 67,407 | Normal: 10,000; Attack: 57,407 |

| | Predicted Positive | Predicted Negative |
|---|---|---|
| Labeled Positive | True Positive (TP) | False Negative (FN) |
| Labeled Negative | False Positive (FP) | True Negative (TN) |

| Metric | Formula |
|---|---|
| Accuracy | (TP + TN)/(TP + TN + FP + FN) |
| Precision | TP/(TP + FP) |
| Recall | TP/(TP + FN) |
| F1-Score | (2 × Recall × Precision)/(Recall + Precision) |
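
As a worked illustration, the four metrics can be computed directly from confusion-matrix counts. The function name and the example counts are hypothetical; recall follows the standard definition TP/(TP + FN), and undefined ratios are returned as 0.0 by convention.

```python
def classification_metrics(tp, fp, tn, fn):
    """Accuracy, precision, recall and F1-score from confusion-matrix counts."""
    accuracy = (tp + tn) / (tp + tn + fp + fn)
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    denom = recall + precision
    f1 = (2 * recall * precision) / denom if denom else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}


# Hypothetical counts, chosen only to exercise the formulas.
metrics = classification_metrics(tp=8, fp=2, tn=85, fn=5)
```

With imbalanced data, accuracy can stay high while the F1-score collapses, which is why the result tables report both.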

| Source | PKDD2007 | SQLi | LDAPi | XSS |
|---|---|---|---|---|
| Target | PKDD2007 | LDAPi | XSS | SSI |
| Accuracy | No-TL | 0.6548 | 0.8134 | 0.8004 |
| | HeTL(2017) | 0.9696 | 0.9690 | 0.9668 |
| | PF-TL | 0.9988 | 0.9990 | 0.9996 |
| F1-Score | No-TL | 0.4418 | 0.4398 | 0.0060 |
| | HeTL(2017) | 0.9180 | 0.9109 | 0.9096 |
| | PF-TL | 0.9997 | 0.9990 | 0.9990 |

| Source | CSIC2012 | SQLi | LDAPi | XSS |
|---|---|---|---|---|
| Target | CSIC2012 | LDAPi | XSS | SSI |
| Accuracy | No-TL | 0.9730 | 1 | 0.8638 |
| | HeTL(2017) | 0.9676 | 0.9686 | 0.9678 |
| | PF-TL | 1 | 1 | 1 |
| F1-Score | No-TL | 0.9368 | 1 | 0.4837 |
| | HeTL(2017) | 0.9120 | 0.9150 | 0.9126 |
| | PF-TL | 1 | 1 | 1 |

| Source | PKDD2007 | SQLi | LDAPi | XSS |
|---|---|---|---|---|
| Target | PKDD2007 | LDAPi | XSS | SSI |
| Accuracy | No-TL | 0.6548 | 0.7954 | 0.8598 |
| | HeTL(2017) | 0.9696 | 0.9678 | 0.9704 |
| | PF-TL | 0.9988 | 0.9994 | 0.9992 |
| F1-Score | No-TL | 0.4418 | 0.6129 | 0.5980 |
| | HeTL(2017) | 0.9180 | 0.9127 | 0.9203 |
| | PF-TL | 0.9997 | 0.9985 | 0.9980 |

| Source | CSIC2012 | SQLi | SQLi | SQLi |
|---|---|---|---|---|
| Target | CSIC2012 | LDAPi | XSS | SSI |
| Accuracy | No-TL | 0.9730 | 0.9730 | 0.8176 |
| | HeTL(2017) | 0.9696 | 0.9678 | 0.9704 |
| | PF-TL | 0.9988 | 0.9994 | 0.9992 |
| F1-Score | No-TL | 0.9368 | 0.0.936 | 0.1648 |
| | HeTL(2017) | 0.9180 | 0.9127 | 0.9203 |
| | PF-TL | 0.9997 | 0.9985 | 0.9980 |

| Source | PKDD2007 | XSS | XSS | XSS | XSS |
|---|---|---|---|---|---|
| Target | CSIC2012 | XSS | SSI | SQLi | LDAPi |
| Accuracy | No-TL | 0.1204 | 0.8086 | 0.8370 | 0.2166 |
| | HeTL(2017) | 0.9690 | 0.9694 | 0.9698 | 0.9682 |
| | PF-TL | 0.9998 | 1 | 0.9994 | 0.9996 |
| F1-Score | No-TL | 0.1804 | 0.0825 | 0.3369 | 0.3301 |
| | HeTL(2017) | 0.9162 | 0.9172 | 0.9187 | 0.9138 |
| | PF-TL | 0.9995 | 1 | 0.9985 | 0.9990 |

| Source | WAF2020 | SQLi | XSS | OS Cmd |
|---|---|---|---|---|
| Target | WAF2020 | XSS | OS Cmd1 | SQLi |
| Accuracy | No-TL | 0.8755 | 0.8157 | 0.2116 |
| | HeTL(2017) | 0.9720 | 0.9726 | 0.9732 |
| | PF-TL | 0.9999 | 0.9999 | 0.9999 |
| F1-Score | No-TL | 0.5546 | 0.1456 | 0.2196 |
| | HeTL(2017) | 0.9247 | 0.9265 | 0.9282 |
| | PF-TL | 0.9999 | 0.9999 | 0.9999 |

| Source | PKCS | SQLi | SQLi | XSS | XSS |
|---|---|---|---|---|---|
| Target | WAF2020 | SQLi | XSS | XSS | SQLi |
| Accuracy | No-TL | 0.1951 | 0.2555 | 0.5296 | 0.5333 |
| | HeTL(2017) | 0.9715 | 0.9727 | 0.9708 | 0.9716 |
| | PF-TL | 0.9998 | 0.9998 | 0.9999 | 0.9999 |
| F1-Score | No-TL | 0.2512 | 0.3112 | 0.4596 | 0.4405 |
| | HeTL(2017) | 0.9234 | 0.9269 | 0.9215 | 0.9236 |
| | PF-TL | 0.9995 | 0.9995 | 0.9997 | 0.9997 |

| Source / Target | PKDD2007 / CSIC2012 | RF 1 | SVM | MLP | KNN |
|---|---|---|---|---|---|
| Accuracy | No-TL | 0.2010 | 0.1900 | 0.2112 | 0.6946 |
| | HeTL(2017) | 0.9624 | 0.5162 | 0.7414 | 0.8132 |
| | PF-TL | 0.9638 | 0.8988 | 0.8966 | 0.9146 |
| F1-Score | No-TL | 0.3135 | 0.3193 | 0.3219 | 0.1724 |
| | HeTL(2017) | 0.9260 | 0.3091 | 0.1465 | 0.2634 |
| | PF-TL | 0.9032 | 0.6645 | 0.7608 | 0.7303 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Jung, I.; Lim, J.; Kim, H.K. PF-TL: Payload Feature-Based Transfer Learning for Dealing with the Lack of Training Data. Electronics 2021, 10, 1148. https://doi.org/10.3390/electronics10101148
