Abstract

With increasing core-count, the cache demand of modern processors has also increased. However, due to strict area/power budgets and presence of poor data-locality workloads, blindly scaling cache capacity is both infeasible and ineffective. Cache bypassing is a promising technique to increase effective cache capacity without incurring power/area costs of a larger sized cache. However, injudicious use of cache bypassing can lead to bandwidth congestion and increased miss-rate and hence, intelligent techniques are required to harness its full potential. This paper presents a survey of cache bypassing techniques for CPUs, GPUs and CPU-GPU heterogeneous systems, and for caches designed with SRAM, non-volatile memory (NVM) and die-stacked DRAM. By classifying the techniques based on key parameters, it underscores their differences and similarities. We hope that this paper will provide insights into cache bypassing techniques and associated tradeoffs and will be useful for computer architects, system designers and other researchers.

1. Introduction

In face of increasing performance demands and on-chip core count, the processor industry has steadily increased the depth of cache hierarchy and cache size on modern processors. As a result, the size of last level cache on CPUs has reached tens of megabytes, for example, POWER8 and Haswell processors have 96 MB and 128 MB eDRAM (embedded DRAM) last level caches, respectively [1,2]. GPUs have also followed this trend in recent years, and thus, the size of last level cache (LLC) has increased from 768 KB on Fermi to 1536 KB on Kepler and 2048 KB on Maxwell [3,4,5,6].

Over-provisioning of cache resources, however, is unlikely to continue providing performance benefits for a long time. Caches already occupy more than 30% of the chip area and power budget and this constrains the area/power budget available for cores. For applications with little data reuse, caches harm performance since every cache access only adds to the total latency. Due to this, performance with cache can even be worse than that with no cache [7,8]. These factors have motivated the researchers to explore alternate techniques to improve performance without incurring the overheads of a large-size cache.

Cache bypassing is a promising approach for striking a balance between cache capacity scaling and its efficient utilization. Also known as selective caching [9] and cache exclusion [10], cache bypassing scheme skips placing certain data of selected cores/thread-blocks in the cache to improve its efficiency and save on-die interconnect bandwidth. However, to be fully effective, cache bypassing techniques need to account for several factors and emerging trends, such as nature of processing unit (CPU or GPU), memory technology (SRAM, NVM or DRAM), cache level (first or last level cache), application characteristics, etc. For example, cache bypassing techniques (CBTs) proposed for CPUs may not fully exploit the optimization opportunities in GPUs [11] and those proposed for SRAM caches may not be effective for NVM caches [12]. It is clear that naively applying bypassing can even harm performance by greatly increasing the off-chip traffic and hence, intelligent techniques are required for realizing the full potential of bypassing. Several recently proposed techniques seek to address these challenges.

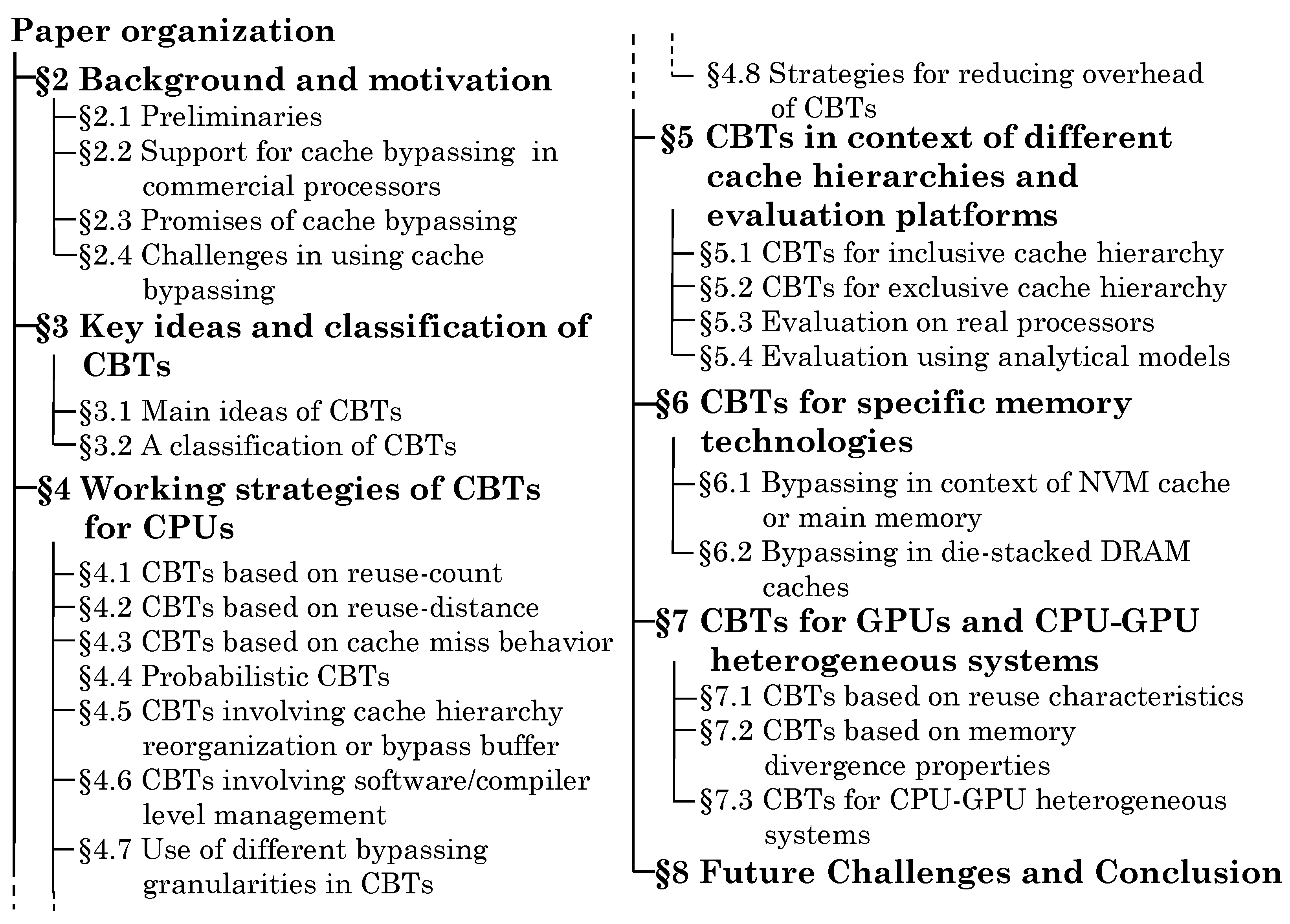

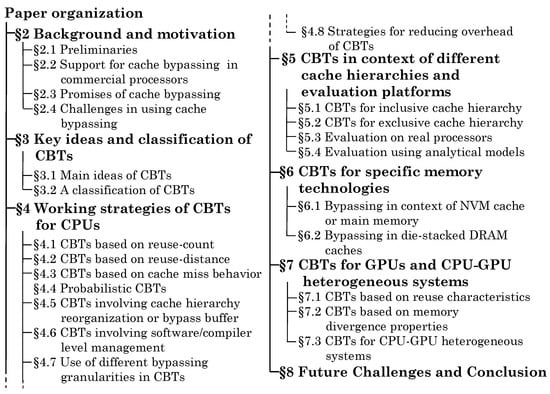

This paper presents a survey of techniques for cache bypassing in CPUs, GPUs and CPU-GPU heterogeneous systems. Figure 1 shows the organization of this paper. Section 2 discusses some concepts related to cache bypassing and support for it in commercial processors. It also discusses opportunities and obstacles in using cache bypassing. Section 3 summarizes the main ideas of several CBTs and classifies the CBTs based on key parameters to highlight their differences and similarities.

Figure 1.

Organization of the paper in different sections.

Section 4 presents CBTs proposed for CPUs in context of conventional SRAM caches. Section 5 reviews CBTs proposed for inclusive/exclusive cache hierarchies. It also discusses techniques evaluated using analytical models and real processors. Section 6 discusses bypassing techniques specific to caches designed with NVM and DRAM memory technologies. Section 7 presents CBTs for GPUs and CPU-GPU systems. In many works, bypassing is used along with other approaches e.g., cache insertion policies. While discussing these works, we mainly focus on the bypassing technique, but also briefly discuss other approaches for showing their connection and the overall approach. Since different works have used different evaluation platforms and methodologies, we mainly focus on their qualitative results. Section 8 presents the conclusion and also discusses some future challenges. We use the following acronyms frequently in this paper: cache bypassing technique (CBT), dead block predictor (DBP), explicitly parallel instruction computing (EPIC), instruction set architecture (ISA), last level cache (LLC), least recently used (LRU), miss-status holding register (MSHR), most recently used (MRU), network-on-chip (NoC), non-volatile memory (NVM), program counter (PC), spin transfer torque RAM (STT-RAM), thread-level parallelism (TLP).

2. Background and Motivation

In this section, we first discuss some concepts and terminologies which will be useful for understanding several CBTs. We then show the support for cache bypassing in commercial processors. Finally, we discuss the promises and challenges of using cache bypassing.

2.1. Preliminaries

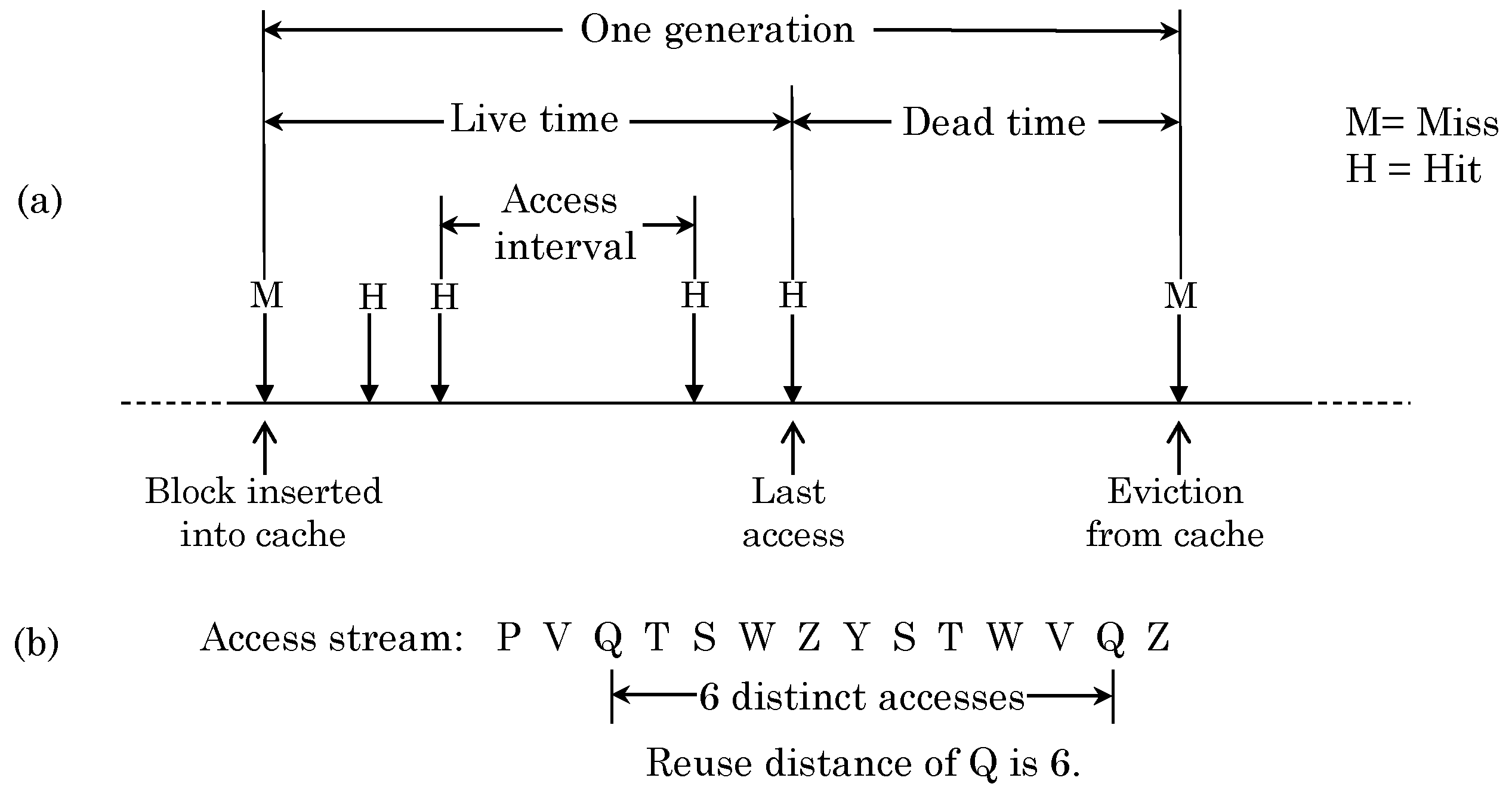

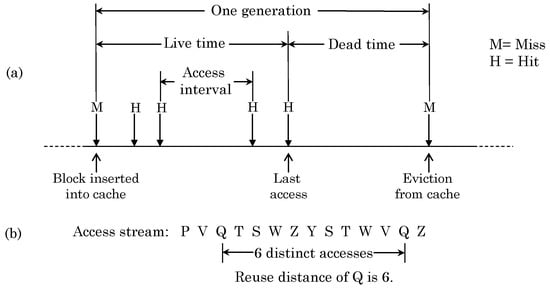

The access stream to a given cache block can be logically divided into multiple generations. Figure 2a shows a typical access stream for one cache block. A cache miss brings a block into the cache, which begins a generation. The time period during which the block sees multiple accesses is termed as live time and time periods between different accesses are termed as access intervals. The reuse count shows the number of references to a block while staying in the cache and in Figure 2a, the reuse count is 4. The last access/write before eviction is termed as closing access/write, respectively [13]. After the last access, the block is termed as dead because it has no more reuse. Clearly, a block with zero reuse count is called dead-on-arrival. Eviction of a block from the cache ends one generation and the time period from insertion to eviction is called generation time.

Figure 2.

(a) Illustration of one generation in cache access stream and (b) Determining reuse distance.

The reuse distance shows the number of accesses seen by a cache set between two accesses to the same cache line (For other definitions of reuse distance, we refer the reader to [14]). This is illustrated in Figure 2b. The program working set is defined as the unique addresses referenced in a given time window [15]. Belady’s OPT [16] is an offline replacement scheme that evicts the block accessed furthest in the future and thus provides a theoretical lower bound on miss-count.

2.2. Support for Cache Bypassing in Commercial Processors

Several commercial processors provide support for cache bypassing. For instance, Intel’s i860 processor [17] provides a special load instruction termed PFLD (pipelined floating-point load). The items fetched using PFLD instruction are bypassed from cache to avoid thrashing or displacing the existing useful data in cache. The additional latency of off-chip access is avoided by virtue of pipelining, such that the load is issued several cycles before those data are actually required. The result is stored in a FIFO (first-in first-out) buffer, which is used by the processor. Due to this, use of PFLD provides better performance than making data noncacheable using page-table entries. Use of PFLD allows mixing load commands to the cache and to the external memory. Also, coherence for PFLD is maintained by first checking the cache since a normal load instruction may have brought the requested data in the cache already.

Similarly, x86 ISA provides bypass instructions for reads/writes with no temporal locality. For example, using MOVNTI instruction, a write can be sent directly to memory through a write-combining buffer, bypassing the cache [18]. For GPUs of compute capability 2.0 or higher, PTX (parallel thread execution) ISA provides load/store instructions to support bypassing [19]. For example, ld.cg specifies that a load bypasses L1 cache and is cached only in L2 cache and below. This request also evicts any existing L1 cache block with the same address [19].

2.3. Promises of Cache Bypassing

Cache bypassing is a promising approach for several reasons.

2.3.1. Performance and Energy Benefits

As discussed earlier, caching data of poor-locality applications can harm performance and this effect becomes increasingly pronounced with non-uniform cache access (NUCA) designs where the latency to the farthest bank greatly exceeds the average access latency. Similarly, in deep cache hierarchies, blocks which are frequently reused in higher-level caches may not show a high reuse in lower-level caches due to filtering by higher-level caches [20] and hence, bypassing these blocks can improve performance.

With an already good replacement policy (e.g., Belady’s OPT policy), a bypass policy does not improve LLC hit rate, although bypassing may still save on-die interconnect bandwidth [21]. However, with inferior replacement policies (e.g., random policy), bypassing can provide large improvement in hit rate [21].

CBTs can also be helpful for saving cache energy. For example, cache reconfiguration techniques work by turning off portions of cache for applications/phases with low data locality [22,23,24]. Since bypassing reduces the data traffic to cache, it can allow cache reconfiguration techniques to more aggressively turn-off cache for saving even larger amount of energy.

2.3.2. Benefits in NVM and DRAM Caches

High leakage and low density of SRAM has motivated researchers to explore its alternatives for designing on-chip caches, such as NVMs and die-stacked DRAM, which provide high density and consume lower leakage power than SRAM [25,26]. However, these technologies also have some limitations, for example, NVMs have low write-endurance and high write latency/energy [27,28] and hence, harmful impact of low-reuse data can be more severe in NVM caches than in SRAM caches [29]. In addition, at small feature size (e.g., smaller than 32 nm), STT-RAM suffers from read-disturbance error where a read operation can disturb the cell being read. CBTs reduce read/write traffic to NVM caches since bypassed blocks need not be accessed from cache, and thus, CBTs can address the above mentioned issues in NVM caches.

Similarly, gigabyte size DRAM caches use large block size (e.g., 2 KB) to reduce metadata overhead [30] which increases cache pollution due to low-reuse data and also wastes the bandwidth. CBTs can allow placing only high-reuse data in the DRAM caches to avoid destructive interference from low-reuse data. Clearly, CBTs can provide additional benefits for these emerging technologies.

2.3.3. Benefits in GPUs

Typical graphics applications have little locality and caching them can lead to severe thrashing. Further, the design philosophy in GPUs is to dedicate large fraction of chip resources for computation, which leaves little resources for caches. Hence, GPUs share small caches between large number of threads, for example, NVIDIA Fermi and Kepler have (up to) 48 KB L1 cache shared between 1536 and 2048 threads/core (respectively), for a per-thread capacity of 32 B and 24 B, respectively [3,4]. Similarly, the per-thread L1 cache capacity for NVIDIA Maxwell is 16 B (24 KB for 2048 threads/core) and for AMD Radeon-7, it is only 6.4 B (16 KB for 2560 threads/core) [5,31,32]. By comparison, per-thread L1 capacity in CPUs is in few KBs, for example, Intel’s Broadwell processor has 32 KB L1 cache for 2 threads per core [33].

Due to the limited cache capacity, equally caching data from all threads can lead to cache pollution and hence, interference in L1D cache and in L1D-L2 interconnect generally causes major bottlenecks in GPU performance [34]. CBTs are vital for addressing these challenges since they can allow achieving performance of a larger cache (e.g., a double size cache [35,36]) without incurring the associated area/power overheads of a larger cache.

2.4. Challenges in Using Cache Bypassing

Despite its promises, cache bypassing also present several challenges.

2.4.1. Implementation Overhead

Since performing naive bypassing for all data structures or/and for entire execution can degrade performance [21,32,37], accurate identification of bypassing candidates is required for reaping the benefits of bypassing. This necessitates predicting future program behavior using either static profiling or dynamic profiling [38]; however, each of these have their limitations. Static profiling techniques use compiler to identify memory access pattern. However, lack of runtime information and variation in input datasets limits the effectiveness of these approaches.

Dynamic profiling techniques infer application characteristics based on runtime behavior. Although they can account for input variation, they incur large latency/storage overhead due to the need of maintaining large predictor tables (e.g., [39]) or per-block counters (e.g., [40]) that need to be accessed/updated frequently. Also, the techniques which make predictions based on PC (e.g., [23]) require this information to be sent to LLC with every access, which requires special circuitry.

2.4.2. Memory Bandwidth and Performance Overhead

Since bypassed requests go directly to the next level cache or memory, they may saturate the network bandwidth and create severe congestion. This increases cache/memory access latency sharply which leads to memory stalls. Further, compute resources remain un-utilized and dissipate power without performing useful work [11]. Further, in CPU-GPU systems, blindly bypassing all GPU requests may increase the cache hit rate of CPU, however, it can degrade the performance of both CPU and GPU [41]. This is because the huge number of bypassed GPU requests cause main memory contention and due to their high row-buffer locality, they may be scheduled before CPU requests.

2.4.3. Challenges in GPUs

Use of cache bypassing in GPUs often requires co-management of thread-scheduling policies (refer to Section 7), such as thread-throttling. However, reducing the degree of multithreading for improving cache utilization may lead to under-utilization of computational and off-chip memory resources [42]. Also, bypassing and thread throttling may have unforeseen impacts on algorithm and avoiding this may demand reformulation of algorithm. This requires significant programmer efforts.

2.4.4. Challenges in Inclusive Caches

A multi-level cache hierarchy is said to be inclusive if the contents of all higher level caches are subset of the LLC and is termed as non-inclusive when the higher level caches may not be subsets of LLC. An exclusive hierarchy guarantees that no two cache levels have common cache contents. While processors such as AMD Opteron use non-inclusive LLC, other processors such as Intel Core i7 use inclusive LLC.

Bypassing violates the assumption of inclusion and hence, using bypassing with inclusive cache hierarchies requires special provisions (refer to Section 5 for more details). For example, the bypassed block can be inserted into the LRU position [39] which ensures that the block is evicted on the next miss to cache set. This, however, still replaces one potentially useful block from the cache, which can be especially harmful for low-associativity caches. Also, in a corner case, where many consecutive accesses are mapped to a cache set, bypassed blocks compete for the LRU position. This reduces their lifetime and causes victimization of same blocks in upper level caches, degrading the performance of inclusive LLCs [43]. Other works use additional storage to track the tags of bypassed blocks for satisfying inclusion property [44]. The limitations of this approach are the additional design complexity and latency/energy overheads.

The techniques presented in next sections aim to address these challenges.

3. Key Ideas and Classification of CBTs

In this section, we first discuss some salient ideas used by different CBTs and then classify the CBTs on key parameters to underscore their features.

3.1. Main Ideas of CBTs

To get insights, we now discuss the essential ideas of architecture-level cache management which are used by various CBTs. Note that these ideas are not mutually exclusive.

- Criterion for making bypass decisions:

- To perform bypassing, different CBTs make decisions based on reuse count [7,9,12,20,21,23,35,36,37,40,45,46,47,48,49,50] or reuse distance [11,14,43,45,51,52,53,54,55,56,57] which are both related (refer to Section 4.1 and Section 4.2).

- Some other CBTs take decision based on miss-rate [10,38,48,58,59,60,61,62], while a few others make decision based on NoC congestion [11], cache port obstruction [29], ratio of read/write energy of the cache [12] or stacked-DRAM bandwidth-utilization [37] (refer to Section 4.3).

- Some techniques bypass thread-private data from shared cache [63], while others bypass physical pages that are shared by multiple page tables [48].

- Some techniques keep counters for every data-block and to make bypassing decision or getting feedback, they compare counters of incoming and existing data to see which one is accessed first or more frequently [39,43,54,56,64,65]. Thus, these and a few other techniques [35,66] use a learning approach where the value of its parameters (e.g., threshold) are continuously updated based on correctness of a bypassing decision.

- Some techniques predict reuse behavior of a line based on its behavior in its previous generation (i.e., last residency in cache) [20,23,40,49,66]. Other techniques infer reuse behavior of a line from that of another line adjacent to it in memory address space, since adjacent lines show similar access properties [54]. Similarly, the reuse pattern of a block in one cache (e.g., L2) can guide bypassing decisions for this block in another cache (e.g., L3) [21,45].

- Classifying accesses/warps for guiding bypassing: Some works classify the accesses, misses or warps into different categories to selectively bypass certain categories. Ahn et al. [13] classify the writes into dead-on-arrival fills, dead-value fills and closing writes (refer to Section 5.1). Wang et al. [53] classify the LLC write accesses into core-write (write to LLC through a higher-level write-through cache or eviction of dirty data from a higher-level writeback cache), write due to prefetch-miss and due to demand miss (refer to Section 6.1). Similarly, Chaudhuri et al. [45] classify cache blocks based on the number of reuses seen by it and its state at the time of eviction from L2, etc. In the work of Wang et al. [67], the LLC blocks which are frequently written back to memory in an access interval are termed as frequent writeback blocks and remaining blocks (either dirty or clean) are termed as infrequent writeback blocks.Collins et al. [10] classify the misses into conflict and capacity (which includes compulsory) misses. Tyson et al. [61] classify the misses based on whether they fetch useful or dead-on-arrival data. For GPUs, Wang et al. [68] classify the warps into locality warps and thrashing warps depending on the reuse pattern shown by them. Liang et al. [69] classify access patterns as partial or full sharing (few or all threads share the same data, respectively) and streaming pattern.

- Adaptive bypassing: Since bypassing all requests degrades performance, some techniques perform bypass only when no invalid block is available [21,45,64] or a no-reuse block is available [14,20].

- Cache hierarchy organization: Some CBTs work by reorganizing the cache and/ore cache hierarchy (refer to Section 4.5). Malkowski et al. [70] split L1 cache into a regular and a bypass cache. B. Wang et al. [68] assume logical division of a cache into a locality region and a thrashing region for storing data with different characteristics and Z. Wang et al. [67] logically divide each cache set into a frequent writeback and an infrequent writeback list. Das et al. [55] divide a large wire-delay-dominated cache into multiple sublevels based on distance of cache banks from processor, e.g., three sublevels may consist of the nearest 4, next 4 and furthest 8 banks from the processor, respectively. Gonzalez et al. [71] divide the data cache into a spatial cache and a temporal cache, which exploit spatial and temporal locality, respectively.Xu and Li [46] study page-mapping in systems with a main cache (8 KB) and a mini cache (512 B), where a page can be mapped to either of them or bypassed. Etsion and Feitelson [36] propose replacing a 32 K 4-way cache with a 16 KB direct-mapped cache (for storing frequently reused data) and a 2 K filter (for storing transient data).Wu et al. [57] present a CBT for micro-cache in EPIC processor. Xu and Li [46] present a technique for bypassing data from main cache or mini cache or both caches. Wang et al. [53] evaluate their CBT for an SRAM-NVM hybrid cache.

- Use of bypass buffers: Some works use buffer/table to store both tags and data [9,10,36,49,65,66,72] or only tags [43,56] of the bypassed blocks. Access to the cache is avoided for the blocks found in these buffers and with effective bypassing algorithms, the size of these buffers are expected to be small [43,49]. The bypassed blocks stored in the buffer may be moved to the main cache only if they show temporal reuse [9,49,73,74]. Chou et al. [37] buffer tags of recently accessed adjacent DRAM cache lines. On a miss to last level SRAM cache, the request is first searched in this buffer and a hit result avoids the need of miss probe in DRAM cache.

- Granularity: Most techniques make prediction at the granularity of a block of size 64 B or 128 B. Stacked-DRAM cache designs may use 64 B block size [37] to reduce cache pollution or 4 KB block size [30,48] to reduce metadata overhead. By comparison, Alves et al. [23] predict when a sub-block (8 B) is dead, while Johnson and Hwu [65] make prediction at the level of a macroblock (1 KB) which consists of multiple adjacent blocks. Lee et al. [48] also discuss bypassing at superpage (2 MB to 1 GB) level (refer to Section 6.2). Khairy et al. [58] disable the entire cache and thus, all data bypass the cache (refer to Section 4.7). Use of larger granularity allows lowering the metadata overhead at the cost of reducing the accuracy of information collected about reuse pattern.

- Use of compiler: Many CBTs use a compiler for their functioning [8,38,46,51,52,57,63,69], while most other CBTs work based on runtime information only (refer to Section 4.6). The compiler can identify thread-sharing behavior [69], communication pattern [52,63], reuse count [8,38,46] and reuse distance [51,57]. This information can be used by compiler itself (e.g., for performing intelligent instruction scheduling [57]) or by hardware for making bypassing decisions.

- Co-management policies: In addition to bypassing, the information about cache accesses or dead blocks has been used for other optimizations such as power-gating [23,75], prefetching [10,50] and intelligent replacement policy decisions [14,23,50,76]. For example, data can be prefetched into dead blocks and while replacing, first preference can be given to dead blocks. The energy overhead of CBTs (e.g., due to predictors) can be offset by using dynamic voltage/frequency scaling (DVFS) technique [70].

- Solution algorithm: Liang et al. [69] present an integer linear programming (ILP) based and a greedy algorithm for solving L2 traffic reduction problem. Xu and Li [46] present a greedy algorithm to solve page-to-cache mapping problem.

- Other features: While most CBTs work with any cache replacement policy, some CBTs assume specific replacement policy (e.g., LRU replacement policy [8]).

Several strategies have been used for reducing implementation overhead of CBTs.

- 11.

- Probabilistic bypassing: To avoid the overhead of maintaining full metadata, many CBTs use probabilistic bypassing approach [36,37,43,56] (refer to Section 4.4).

- 12.

- Set sampling: Several key characteristics (e.g., miss rate) of a set associative cache can be estimated by evaluating only a few of its sets. This strategy, known as set sampling, has been used for reducing the overhead of cache profiling [13,21,37,43,45,51,56,67,76,77]. Also, it has been shown that keeping only a few bits of tags is sufficient for achieving reasonable accuracy [10,76] (refer to Section 4.8).

- 13.

- Predictor organization: Many CBTs use predictors (e.g., dead block predictors) for storing metadata and making bypassing decisions. The predictors indexed by PC of memory instructions incur less overhead than those indexed by addresses [20,23,35,39,53,61,70,71].

3.2. A Classification of CBTs

To emphasize the differences and similarities of the CBTs, Table 1 classifies them based on key parameters. We first categorize the CBTs based on their objectives and from this, it is clear that CBTs have been used for multiple optimizations such as performance, energy, etc. Generally, CBTs aimed at improving performance also save energy, for example, by reducing miss-rate and main memory accesses. The key difference between techniques to improve performance and energy efficiency are that their algorithms may be guided by a performance or energy metric. For example, an energy saving CBT may occasionally bypass a block for saving energy even at the cost of performance loss (e.g., due to higher miss-rate). Similarly, a bypassed block may later need to be accessed directly from main memory which may incur larger energy than the case where the block was in cache already. A CBT designed to improve performance may still bypass this block if it improves performance, whereas a technique designed for saving energy may not bypass this block.

Table 1.

A classification of research works.

As for CBTs designed to ensure timing predictability, they work on the idea that the access latency of a bypassed block is equal to the memory access latency. Also, bypassing some blocks can allow ensuring that other blocks always remain in cache and the access latency for these blocks is equal to cache access latency. Since caches are major sources of execution time variability [78], CBTs can be useful in alleviating the impact of such timing unpredictability. Thus, these CBTs may primarily focus on removing uncertainty in hit/miss prediction instead of improving performance.

Table 1 also classifies the works based on the level in cache hierarchy where a CBT is used and the nature of cache hierarchy. First-level and last-level caches show different properties [79,80], for example, filtering by first-level cache reduces the locality seen by last level cache and hence, dead-block prediction schemes, the length of access intervals, etc. are different in those caches (also see Section 2.3.1).

4. Working Strategies of CBTs for CPUs

In this and the coming sections, we discuss many CBTs by roughly organizing them into several groups. Although many of these techniques fall into multiple groups, we discuss them in a single group only.

4.1. CBTs Based on Reuse-Count

Kharbutli and Solihin [20] present cache replacement and bypassing schemes which utilize counter-based dead block predictors. They note that both live times and access intervals (refer to Section 2.1) are predictable across generations. Based on it, they design two predictors, a live-time predictor and an access interval predictor. The former predictor counts the references to a cache block during the time it stays in the cache continuously and the latter predictor counts the references to a set between two successive references to a given cache block. When these counters reach a threshold, the block is considered dead and becomes a candidate for replacement. The threshold is chosen as the largest of all live times (or access intervals) in current and previous generations. Thus, the threshold of every block is potentially different and is learnt dynamically. These generations are identified by the program counter of the instruction that misses on the block. A predictor table stores this information for blocks that are not in cache and that are fetched again in cache. They further note that bursty accesses to blocks are typically filtered by L1 cache and hence, many blocks in L2 cache are never-reused. Their predictors identify such blocks by seeing whether their thresholds were zero in previous two generations, implying that they were not reused while residing in L2 cache. If the target set has no dead block, then the predicted no-reuse block is bypassed from the cache, otherwise, it is allocated in the L2 cache. They show that their CBT improves performance significantly.

Xiang et al. [49] note that CBTs generally bypass never-reused lines, however, such lines do not occur frequently in many applications and this limits the effectiveness of those CBTs. Instead of bypassing only never reused lines, they propose bypassing less reused lines (LRLs). Their technique predicts the reuse frequency of a miss line based on reuse frequency observed in the previous occurrence of that miss. Then, LRLs are bypassed and kept in a separate buffer. The short lifespan of LRLs enables their technique to use a small buffer and quickly retire majority of LRLs. The lines which cannot be retired are inserted back to L2, or are discarded based on a per-application retirement threshold. Thus, bypassing LRLs enables L2 cache to effectively serve applications with large working set sizes. They show that their technique reduces cache miss-rate and improves performance.

Kharbutli et al. [40] present a CBT that makes bypass decisions on a cache miss based on previous access/bypass pattern of the blocks. With each cache line, a ”USED” bit is employed that is set to zero when the block is allocated in cache and set to one on a cache hit. Thus, at the time of replacement, USED bit shows whether an access was made to the block during its residency in cache. Their technique also uses a history table to record the access/bypass history of every block in their previous generations using 2-bit saturating counter per block. On a cache miss on block P, its counter in the table is read. If the counter value is smaller than 3, P is not expected to be reused while residing in cache and hence, P is bypassed and its counter is incremented. However, if counter value is 3, P is inserted into cache. A victim block is found using cache replacement policy and the counter value of this victim block in the table is updated depending on whether it was accessed while residing in cache (counter set to 3) or not (counter set to 0). Thus, a block is allocated in the cache, if it was accessed in the cache during its residency in the last generation or if has been bypassed 3 times. To adapt to changing application behavior, all counter values are periodically set to zero. Their technique provides speedup by reducing the miss rate.

4.2. CBTs Based on Reuse-Distance

Duong et al. [14] propose a reuse-distance based technique for optimizing replacement and performing bypass. Their technique aims to keep a line in the cache only until expected reuse happens and cache pollution is avoided. This reuse distance is termed `protecting distance’ (PD) and it balances timely eviction with maximal reuse. For LRU policy, PD equals cache associativity, but their technique can also provide PD larger than the associativity. When a line is inserted in cache or promoted, its distance is set to be PD. On each access to the set, this value for each line in the set is decreased by 1 and when this value for a line reaches 0, it becomes unprotected and hence, a candidate for replacement (victim). Since protected lines have higher likelihood of reuse than missed lines, on a miss fetch, if no unprotected line is found in the set, the fetched block bypasses the cache and is allocated in higher level cache. Thus, both replacement scheme and bypass scheme together protect the cache lines. PD is periodically recomputed based on dynamic reuse history such that the hit rate is maximized. By reducing the miss rate, their technique improves performance significantly.

Das et al. [55] note that wire energy contributes significantly to the total energy consumption of large LLCs. They propose a technique which reduces this overhead by controlling data placement and movement in wire-energy-dominated caches. They partition the cache into multiple cache sublevels of dissimilar sizes. Each sublevel is a group of ways with similar access energy, e.g., sublevel 0, 1 and 2 may consist of the nearest 4, next 4 and furthest 8 banks from the processor, respectively. Based on the recent reuse distance distribution of a line, a suitable insertion and movement scheme is used for it to save energy. For example, if a line is expected to receive only one hit after insertion in the cache, moving it to closer cache locations incurs larger energy than accessing them once from a farther location. Similarly, if some lines show reuse within first 4 ways, but no further reuse until cache capacity is exceeded, then these lines can be inserted in the 4 nearest ways and when they are evicted from these ways, they can be evicted from the cache (instead of being placed in the remaining 12 ways). In a similar vein, the lines which are expected to show no reuse are bypassed from the cache. They use their technique for both L2 and L3 caches and achieve large energy saving.

Yu et al. [54] note that cache lines which are adjacent in memory address space show similar access properties, for example re-reference intervals (RRI) [86], reuse distance, etc. For example, if P and Q are consecutive, and P is a dead block, then Q is also expected to be dead. Based on this, they use a table for recording the RRI of cache blocks. When a cache block is to be inserted in an LLC set, the table is accessed for obtaining the expected RRI from that of an adjacent block. This is compared with a threshold (maximum RRI) and if the RRI of the new block is greater than the threshold, it is considered dead and bypassed from the cache. Otherwise, the cache block with the largest RRI from that LLC set is considered and its RRI is compared with that of incoming block. If the RRI of incoming block is greater, the incoming block is bypassed, otherwise, it is inserted in the cache. The RRI of an entry in the table is decreased on a hit in LLC and is increased when a corresponding victim block is replaced. They show that their technique reduces cache misses and improves performance.

Feng et al. [51] present a CBT for avoiding thrashing in LLC. They note that in case of cache thrashing, the forward reuse distance of most accesses are greater than cache associativity. Their technique inserts additional phantom blocks in the regular LRU stack, which gives the illusion of higher associativity. A phantom block does not store tag or data, but otherwise works in the same way as the normal block in LRU stack. When the replacement candidate chosen is a phantom block, the cache is bypassed and data are directly sent to the processor. To find the suitable number of added phantom blocks, they use different number of phantom blocks (e.g., 0, 16, 48 etc.) with a few sampled sets. Periodically, the phantom-block count which leads to fewest cache misses is selected for the whole cache and this helps in adapting to different applications/phases and keeping high-locality data in cache while bypassing dead data. They show that their technique improves performance by reducing cache misses. They also study use of compiler to provide hints. For this, the application is executed with training data set and for each main loop in the application, optimal phantom-block count is obtained by experimenting with different values. These hints are inserted in the application before each main loop and are used during application execution. They show that by using these hints, further reduction in cache misses can be obtained for benchmarks with high miss-rate.

Li et al. [39] note that an optimal bypass policy (that bypasses a fetched block if its reuse distance equals or exceeds that of the victim chosen by replacement policy) achieves performance close to Belady’s OPT plus bypass policy (that first allocates blocks with smallest reuse distance in cache and then bypasses remaining blocks). Since optimal bypass policy cannot be practically implemented, they present a CBT that makes bypass decisions by emulating the operation of optimal bypass policy. Their technique uses a `replacement history table’ that tracks recent incoming-victim block tuples. Then, every incoming-victim block tuple are compared with this table to ascertain the decision of optimal bypass on a recorded tuple. For example, if incoming block is accessed before victim block, no bypassing should be done, but if victim block is accessed first or none of them are accessed in future, bypassing should be performed. To record these learning results, PC-indexed `bypass decision counters’ are used, which are decremented or incremented, depending on whether replacement or bypassing (respectively) is performed for an incoming block. On a future miss, this counter is consulted for an incoming block. A non-negative value of the counter signifies that optimal bypass policy would perform larger number of bypasses for this block in a recent execution window, and hence, their technique decides to perform bypassing. Conversely, a negative value leads to insertion of the incoming block in the cache with replacement of a victim block. They show that their technique provides speedup by reducing the miss rate.

4.3. CBTs Based on Cache Miss Behavior

Collins et al. [10] present a CBT which is based on a miss-classification scheme. They use a table which stores the tag of most recently evicted cache block from each set. If the tag of the next miss in a set is same as that stored in the table, it is marked as conflict miss, since it might have been hit with slightly-higher associativity. Otherwise, the miss is a capacity miss (which also includes compulsory miss). Even storing few (e.g., lower eight) bits of the tag provides reasonably high classification accuracy, although the accuracy increases with increasing tag bits that are stored. They use this information for multiple optimizations, such as cache prefetching, victim cache and cache bypassing, etc. For bypassing, they note that accesses leading to capacity misses show short and temporary bursts of activity. Based on it, their technique bypasses any capacity miss and places it in a bypass buffer. By reducing miss rate, their technique provides large performance improvement.

Tyson et al. [61] note that a small fraction of load instructions are responsible for the majority of data cache misses. They present a CBT which measures miss rates of individual load/store instructions. The data references generated by the instructions, which lead to highest miss rate, are bypassed from the cache. They also propose another version of this technique which records the instruction address which brought a line into the cache. Using this, a distinction is made between those misses that fetch useful (i.e., later reused) data into cache and those misses that fetch dead-on-arrival data. Based on this, only the latter category of data references are bypassed from the cache. They show that their technique improves hit rate and bandwidth utilization.

4.4. Probabilistic CBTs

Etsion et al. [36] note that of the blocks comprising program working set, a few blocks are accessed very frequently and for the longest duration of time, while remaining blocks are accessed infrequently and in a bursty manner. Hence, set-associative caches serve majority of references from the MRU position and thus, they effectively work as direct-mapped caches, while expending energy and latency of set-associative caches. Based on this, they propose a technique which serves hot blocks efficiently and bypasses transient (cold) blocks. They propose two approaches for identifying hot blocks. In threshold based approach, a block that is accessed more than a threshold number of times (e.g., 16) is considered `hot’ and in probabilistic approach, a hot block is identified by randomly selecting memory references by running a Bernoulli trial on every memory access since the long-residency blocks are most likely to get selected. Of these, probabilistic approach does not require any state information and provides comparable accuracy as the threshold-based predictor. In place of a set-associative cache, they propose using a direct-mapped cache for serving hot blocks and a small fully-associative filter to serve the transient blocks. To reduce the overhead of the filter, they use a buffer that caches recent lookups. They show that with a 16 KB direct-mapped L1 cache and a 2 K filter, their technique outperforms a 32 K 4-way cache and also provides energy savings.

Gao et al. [56] present a CBT which performs random bypassing of cache lines based on a probability. This probability is increased or reduced depending on the effectiveness of bypassing, which is recorded based on whether a bypassed line is referenced before the replacement victim. For this, an additional tag and a competitor pointer is used with each set. On a line bypass, this tag holds the tag of the bypassed line and competitor pointer records the replacement victim which would have been evicted without bypassing. Bypassing is considered effective or ineffective, depending on whether the competitor or bypassed tag (respectively) is accessed before the another. When a cache fill happens at the location pointed by competitor pointer, both the competitor pointer and additional tag are invalidated. To evaluate the effect of bypassing when `no-bypassing’ decision is chosen, some recently allocated lines are randomly selected for `virtual bypassing’. Also, the additional tag holds the tag of replacement victim and competitor pointer holds the position of incoming block. If access to replacement victim happens before the incoming block, bypassing is deemed effective. Using set sampling, two dueling policies are evaluated and the winner policy is finally used for the cache.

4.5. CBTs Involving Cache Hierarchy Reorganization or Bypass Buffer

Malkowski et al. [70] present a CBT which reduces memory latencies by bypassing L2 cache for load requests which are expected to miss. They divide the 32 KB L1D cache into a 16 KB regular and a 16 KB bypass portion. The regular L1D cache uses line size of 32 B, while bypass L1D cache uses line size of 128 KB, which is the line size of L2 and data-size transferred from memory in each request. A load-miss predictor (LMP) is also used, which is indexed by PC of the load instruction. The data request of a load instruction accesses both regular and bypass caches. If both show a miss, LMP is accessed, which predicts either a hit or a miss. LMP tracks only those loads that miss in both bypass and regular cache and a newly encountered load is always predicted hit. A predicted hit progresses along the regular cache hierarchy. Depending on whether data are found on a predicted hit, a correct or incorrect prediction is noted in the LMP. If data are found at any cache level, they are not fetched into bypass cache. On a predicted miss, a request is sent to L2 cache by regular L1 and in parallel, an early load request is sent to main memory by bypass cache. If data are found in L2 cache, the ongoing memory request is cancelled, data are stored in regular cache, and the load is considered a correct prediction. If data are not found in L2 cache, main memory provides the data and since the memory access was issued early, its latency is partially hidden, which improves performance. The prediction is flagged as correct and the data are stored in bypass cache. A store instruction proceeds along the path used by load instruction to that address. The L2 cache acts as victim cache for the bypass cache. They show that their technique provides speedup but increases power consumption. By using DVFS along with their technique, both performance and power efficiency can be improved.

John and Subramanian [9] present a CBT which uses an assist structure called annex cache to store blocks which are bypassed from main cache. In their design, all entries to main cache come from annex cache, except for filling at cold start. A block in annex cache is exchanged with a conflicting block in main cache, only when the former has seen two accesses after the latter was accessed. Thus, low-reuse items are bypassed from main cache and only those blocks which have shown locality are stored in main cache. The main difference between annex cache and victim cache is that the annex cache can be directly accessed by the processor. They show that their technique outperforms conventional cache, and performs comparably to victim caches.

Jalminger and Stenström [66] present a CBT which makes bypassing decisions based on reuse behavior of a block in previous generations. Since the reuse history pattern of a block may span over multiple lifetimes in cache, they use a predictor to estimate future reuse behavior by finding repetitive patterns in blocks’ reuse history. A block with no predicted reuse is stored in a bypass buffer while remaining blocks are stored in the cache. For both allocated and bypassed blocks, their actual reuse pattern is used to find whether the prediction was correct and to update the predictor. The predictor is organized as a two-level design such that one table tracks reuse history of each block and using this as an index, a second table is accessed whose output is used for predicting future reuse. They show that even with a single-entry bypass buffer, their technique reduces the L1 cache miss rate significantly.

4.6. CBTs Involving Software/Compiler Level Management

Wu et al. [57] present a bypassing technique for micro-cache (μcache) in EPIC processor, such as Itanium. The μcache is a small cache between core and L1 cache and its size may be 64 B with a 2 KB L1 cache. In statically scheduled EPIC processors, compiler is aware of the distance between a load and it reuse. Based on it, their technique uses compiler analysis and profiling to find loads which should bypass μcache. Assuming L1 latency as T1 cycles, μcache should only store data that will be required before next T1 cycles, otherwise, the load should directly access L1. Thus, an effective bypassing technique can allow μcache to store only critical data that are immediately reused. In their technique, the compiler performs program dependency analysis before instruction scheduling for identifying loads which are reused T1 (or more) cycles after they are issued. The scheduler tries to schedule these loads with T1 cycle latency, since otherwise, they would be scheduled such that their results are required in Tμ cycles (the latency of accessing μcache) due to the fact that by default, the scheduler assumes the load to hit in μcache. At the completion of instruction scheduling, the loads with no reuse in next T1 cycles are marked to bypass the μcache. Finally, cache profiling is done to identify additional loads for bypassing. If a load misses μcache and the loaded data are not reused, the load is marked for bypassing μcache, which avoids the overhead of accessing μcache. They show that their technique reduces μcache miss rate and improves program performance.

Chi et al. [8] present a CBT which makes bypassing decisions based on cost and benefit of allocating a data-item in the cache. The cost of caching is the time to access memory for fetching data and is doubled if caching a block replaces a dirty block. The benefit from caching is the product of number of accesses to data during its cache residency and the difference between access time of memory and cache. In their technique, the compiler builds the control flow graph of the program. For each control flow path, initially all the references are assumed to be cached. Then, for each reference, the cost and benefit from caching the associated line are evaluated assuming LRU replacement policy. At the end, all references for which the cost of caching exceeds its benefit are marked for bypassing. They show that their technique provides large application speedup.

Park et al. [52] note that several memory access patterns such as streaming and producer-consumer communication may lead to inefficient use of caches. They propose two instructions for controlling the level where data structures are stored. Unallocating load signifies a read to a data-item that should not be inserted in caches smaller than reuse distance of the data. Pushing store is a write which stores data in a specific cache-level on a specific core (e.g., a consumer thread’s core). For example, in producer-consumer communication, the data written by one thread are read by other threads. On using an invalidation-based coherence protocol, for each cache block, the producer invalidates its current sharers, fetches the block in its cache(s) and completes the write. After this, the consumer searches the producer and copies the block from the cache of the producer. However, any block fetched in any cache of the producer is not read by it, unless both producer and consumer share a cache. Using pushing store, the block can be directly pushed to the cache of the consumer, instead of invalidating it. On a subsequent read operation to the shared data by the consumer, it’s local cache will already have the updated block. Thus, these instructions decrease coherence traffic and coherence misses for the consumer. Similarly, to improve cache efficiency with streaming pattern, the reuse distance can be provided with their proposed instructions for any variable, based on which the variable can be bypassed from any cache level. Thus, through these instructions, application knowledge can be conveyed to the hardware and they are useful primarily when the working set exceeds a certain cache level. Their approach maintains program correctness and improves performance and energy efficiency.

4.7. Use of Different Bypassing Granularities in CBTs

Alves et al. [23] note that a large fraction of cache subblocks (e.g., 8 B subblock in a 64 B block) brought in the cache are never reused. Also, most of the remaining subblocks are only used a few times (e.g., 2 or 3 times). They present a technique for predicting the reference pattern of cache sub-blocks, including which subblocks will be accessed and how many times they will be accessed. They store past usage pattern at subblock level in a table. This table is indexed by PC of load/store instruction which led to cache miss and the cache block offset of the miss address. Use of PC with offset provides high coverage even with reasonable-size table because a memory instruction sequence generally references the same fields (subblock) of a record (block). Based on the information from this table, on a cache miss, only the subblocks that are expected to be useful are fetched. Also, when a subblock has been touched for expected number of times, it is turned-off. They also optimize the replacement policy to first evict those blocks for which all subblocks have become dead. This helps in offsetting any cache misses caused due to mispredictions in their scheme. They show that their technique saves both leakage and dynamic energy.

Johnson and Hwu [65] present a CBT that performs cache management based on memory usage pattern of the application. Since tracking the access frequency of each cache block incurs prohibitive overheads, they combine adjacent blocks into larger-sized `macroblocks’, although the limitation of using large granularity is that their technique cannot distinguish whether a single block was accessed N times or N blocks saw one access each. The size of macroblocks is chosen such that the cache blocks in a macroblock see relatively uniform access frequency, and the total number of macroblocks in the accessed portion of memory still remains small. For example, by experimenting with 256 B, 1 KB, 4 KB and 16 KB, they find that 1 KB macroblock size provides a good balance. They also use a memory access table (MAT), which uses one counter for each macroblock. MAT is accessed in parallel to data cache and its counters are incremented on every access to the corresponding macroblocks. On a cache miss, the MAT counter of victim candidate is decremented and then compared with the MAT counter of the fetched block. If the former is larger, the fetched block is bypassed, otherwise, the victim block is replaced as done in normal caches. Decreasing the counter of victim block ensures that after a change in the program phase, new blocks can replace existing blocks which have now become useless. In case where data show temporal locality but low access frequency, many useful blocks may be bypassed from the cache. To avoid this, they place the bypassed blocks in a small buffer which allows accessing them with low latency and exploiting temporal locality. To exploit spatial locality, they provision dynamically choosing the size of fetched data on a cache bypass to balance bus traffic reduction and miss-rate reduction. They show that their technique provides large application speedup.

4.8. Strategies for Reducing Overhead of CBTs

Khan et al. [76] note that consistency of memory access patterns across sets allows sampling references to a fraction of sets for making accurate prediction compared to the techniques which track every reference [22]. Further, a majority of temporal locality from LLC access stream is filtered by the mid-level cache which reduces the effectiveness of trace-based predictors in LLC. Based on these, they propose a sampling dead block predictor which samples PCs to find dead blocks. It uses a sampler with partial tag array. For example, with a sampling ratio of 1/64 and 2048 sets in LLC, the sampler has only 32 sets. Only lower 15 bits of tags are maintained since exact matching is not required. Use of sampling reduces area and power requirements. Further, sampler decouples the prediction scheme and LLC design and hence, while LLC may use a low-cost replacement policy, the predictor can use LRU policy since by virtue of being deterministic, LRU allows easier learning and is not affected by random evictions. Also, the sampler can have different associativity than LLC, e.g., using a 12-way sampler with a 16-way LLC provides better accuracy than a 16-way sampler as it evicts the dead blocks more quickly. To predict if a block is dead, their technique uses only the PC of last-access instruction, instead of the trace of instructions referring to that block. Although the sampler still stores the trace metadata, the small size of sample tag array keeps the area and timing overhead small. To reduce the conflicts in prediction table, they use a skewed organization whereby three tables are used instead of one, and each table is indexed by a different hash function. If their DBP predicts a block to be dead-on-arrival, it is bypassed from LLC. Also, their replacement policy preferentially evicts dead blocks. They show that their technique reduces cache misses and improves performance.

5. CBTs for Different Hierarchies and Evaluation Using Different Platforms

Due to the inclusion/non-inclusion requirement, the nature of cache hierarchy impacts the design and operation of the bypassing technique (also see Section 2.4.4). For this reason, in Table 2 we classify the CBTs based on cache hierarchy for which they are proposed.

Table 2.

A classification based on cache hierarchy and evaluation platform.

Table 2 also classifies the works based on their evaluation platform. This is important since real systems allow accurate evaluation and full design-space exploration whereas simulators offer flexibility to evaluate variety of techniques which may even be infeasible to implement on real hardware. Analytical performance models show limit gains from CBTs, independent of a particular application or hardware. Clearly, all three approaches are indispensable for obtaining important insights about CBTs.

We now discuss the CBTs for inclusive and exclusive cache hierarchies and those evaluated on real processors (also see Section 2.2) and using analytical models.

5.1. CBTs for Inclusive Cache Hierarchy

Gupta et al. [43] present a CBT for inclusive caches. Their technique uses a bypass buffer (BB) which stores the tags (but no data) of the cache lines that are bypassed (skipped) from LLC. When BB becomes full, a victim tag is evicted from it and the corresponding cache lines in higher level caches are invalidated to satisfy inclusion property. They note that with an effective bypassing algorithm, the lifetime of a bypassed line in higher level caches should be relatively short and these lines are expected to be dead or already evicted when the tag is evicted from the BB. Hence, a small BB is adequate for ensuring inclusion and achieving most performance gains of bypassing. They show that use of BB enables a bypassing algorithm designed for non-inclusive caches [56] to provide nearly same performance gains for inclusive caches. They also use BB to reduce the implementation cost of CBT proposed by [56] (refer to Section 4.4). For this, a competitor pointer is added with each BB-entry and not with each cache set. Also, for virtual bypassing, a BB-entry is allocated for the replaced block. Thus, the reuse information collected by BB can help in simplifying the design of bypassing algorithms.

Ahn et al. [13] present a technique which bypasses dead writes to reduce write overhead in NVM LLCs. They classify the writes into three types, viz. (1) dead-on-arrival fills; (2) dead-value fills and (3) closing writes (refer to Section 2.1). A dead-on-arrival fill happens due to streaming pattern (a memory region is never re-accessed after a cache fill) and thrashing pattern (between two accesses to the block, many other blocks in the same set are also accessed). Dead-value fill write is one where the filled block gets overwritten before being read. They use a dead-block predictor which predicts (1) and (2) by correlating dead blocks with instruction addresses that lead to those cache accesses. Further, (3) is predicted using the last-touch instruction address of the block to be written back. This scheme works well for non-inclusive caches. For inclusive caches, however, bypassing (1) and (2) violates inclusion property. To address this, they insert these blocks into the LRU position without writing their data and flag it as ”void”. Accesses to ”void” blocks are treated as misses, but the coherence state bits of `void’ blocks are updated as if they were valid. This maintains inclusion while still reducing write-energy through bypassing. They show that their technique provides large speedup and energy saving.

5.2. CBTs for Exclusive Cache Hierarchy

Chaudhuri et al. [45] present a cache hierarchy-aware bypassing scheme for exclusive LLC (L3) and replacement scheme for inclusive LLC. They note that at the end of a block’s residency in L2 cache, the future reuse pattern can be estimated based on that observed during its stay in L2 cache. Based on the factors such as number of reuses seen by a block, its state at the time of eviction from L2 and the request (L3 hit or L3 miss) that inserted the block in L2, they categorize L2 blocks in different classes. For example, one class shows the blocks that were filled in L2 on LLC miss, had `modified’ state at the time of eviction and observed exactly one demand use while it was resident in L2. Their technique dynamically learns the reuse probabilities of these classes and by comparing them with a threshold, flags an L2 evicted block as dead or live. Based on this, if the upcoming reuse distance of this block is much larger than LLC capacity, then this block is marked as a candidate for early eviction in LLC, which allows keeping high locality blocks in the LLC. Further, this information is used to make bypassing decisions in an exclusive LLC. When an L2 evicted block is dead, if the target L3 set has an invalid way, the evicted block is allocated in L3 at the LRU position (i.e., highest age). However, if the L3 set has no invalid way, the evicted dead block is bypassed from L3 and is written to memory (if dirty) or dropped (if clean). Their experiments show that their technique reduces cache misses and improves performance.

Gaur et al. [21] present bypass and insertion algorithms for an exclusive LLC (L3). A block resides in an exclusive LLC from the time of eviction from L2 to the time it is evicted from LLC or is recalled by L2. For an LLC block, they define the recall distance as the average number of LLC allocations between this block’s allocation in LLC and its recall from L2. With an exclusive LLC (L3), a block is allocated in L2 after being fetched from main memory. When it is evicted, it makes its first trip to LLC, which is defined as trip count (TC) being zero. If it is recalled by L2, it makes second trip to LLC (TC = 1). Thus, a large value of trip count shows low average recall distance for a block and the blocks with TC = 0 are candidates for bypassing. Further, the L2 use count of a block shows the number of demand fills plus demand hits seen by it while it stays in L2. Thus, trip count relates with the mean distance between short-term reuse clusters of a block and the use count shows the size of last such cluster. Using these, their technique identifies dead and live blocks and based on this, dead blocks are inserted in LLC only if an invalid location exists in the corresponding set, otherwise, they are bypassed from LLC. They show that their technique improves the performance significantly.

5.3. Evaluation on Real Processor

HP-7200 processor [73] uses a fully-associative on-chip assist cache (2 KB) which is placed parallel to a large direct-mapped data cache (4 KB to 1 MB). A block fetched from memory is first allocated in assist cache. Only when a block shows temporal reuse, it is moved to the data cache, otherwise, it is written-back to memory, bypassing the data cache. This avoids thrashing commonly-observed in direct-mapped data caches.

Xu and Li [46] present a CBT for processors which allow specifying the cache mapping for every virtual page (i.e., whether it is mapped to main cache, mini-cache or is bypassed). For example, Intel StrongARM SA-1110 processor [87] uses an 8 KB 32-way main cache and an 512 B 2-way mini-cache, both of which have 32 B line size and are indexed and tagged by virtual addresses. These caches are mutually exclusive and the compiler can map a page to either of them or bypass it by setting suitable bits. The purpose of mini cache is to hold large data structures so that cache thrashing in main cache is avoided. They show that the optimal page-to-cache mapping problem, which minimizes average memory access time, is NP-hard. Hence, they propose a polynomial-time heuristic that uses greedy strategy to map most accessed pages in the main cache. This memory-profiling guided heuristic begins with the assumption that all pages are mapped to main cache. Then, pages are considered in decreasing order of access count, and they are selectively mapped to mini-cache or are bypassed such that memory access time does not increase. They show that their technique reduces execution time and energy consumption.

5.4. Evaluation Using Analytical Models

Some CBTs use analytical models to guide their bypassing algorithm. Use of analytical models does not incur overhead at run-time, however the limitation of these models is that they may not accurately account for input and runtime variations. We now discuss some of these techniques.

Zhang et al. [12] present a CBT for NVM caches that works based on statistical behavior of the entire cache, and not merely a single block. They define data reuse count (DRC) as the total number of references to a block after its allocation in cache. They analytically model the energy cost of bypassing or not-bypassing a block in L2 cache, in terms of read and write energy-values of L2 and L3 cache. They note that for symmetric memory technologies (e.g., SRAM and eDRAM), L2 write energy is much smaller than L3 read energy, but for asymmetric technologies (NVMs), they can be comparable. Hence, only those blocks which show DRC higher than a threshold (called bypassing depth) should be allocated in L2. For example, a block with DRC ≥ 1 can be allocated in an SRAM L2, but only those with DRC ≥ 6 should be allocated in an STT-RAM L2 (L3 is STT-RAM in both cases). The value of bypassing depth is updated periodically. They show that their technique improves the performance and energy efficiency significantly.

Wang et al. [29] note that for area optimization, large LLCs are typically designed using single-port memory bitcells instead of multi-port bitcells. However, in a single-port cache, an ongoing write may block the port and delay subsequent performance-critical read requests. Also, in a multicore processor, write requests from one core may obstruct accesses from other cores. Also, due to the high latency of NVM, this issue is more severe in NVM caches than in SRAM caches. They propose a technique to mitigate such port obstruction in NVM LLCs. They analytically model the cost and benefit from cache bypassing in terms of read/write latency of LLC and main memory. Based on it, the processes which may cause LLC port obstruction in any execution interval are detected and the data from these processes are bypassed from LLC. They show that their technique saves energy and also improves performance.

6. CBTs for Specific Memory Technologies

As discussed in Section 2.3.2, CBTs can be highly effective in addressing limitations and exploiting opportunities presented by NVMs and DRAM. For example, cache bypassing reduces accesses to cache which improves the lifetime of NVM caches [72]. Similarly, cache bypassing can mitigate bandwidth bottleneck in large DRAM caches [37]. Table 3 summarizes the CBTs proposed for these technologies and we now discuss them briefly.

Table 3.

A classification of CBTs for NVM and DRAM caches.

6.1. Bypassing in Context of NVM Cache or Main Memory

Wang et al. [53] present a block placement and migration policy for SRAM-NVM hybrid caches. They classify the LLC write accesses into three classes: core-write (write to LLC through a higher-level write-through cache or eviction of dirty data from a higher-level writeback cache), prefetch-write (write due to prefetch-miss) and demand-write (write due to demand miss). They use access pattern predictors to identify dead blocks and write-burst blocks. These predictors work on the intuition that the future access pattern of a memory access instruction PC is likely to be similar to that in previous accesses. They define the read-range of a demand/prefetch-write access as the largest interval between consecutive reads of the block from the time of filling until time of eviction. The demand-write blocks with zero read range are dead-on-arrival and such blocks are bypassed from the LLC. Further, demand-write blocks with immediate or distant read-range are placed in NVM (e.g., STT-RAM) ways, which reduces the pressure on SRAM ways and leverages the large capacity provided by NVM. They show that their technique reduces writes to NVM and improves performance.

Wang et al. [67] note that writing back dirty data to NVM main memory incurs high latency and energy overheads. They propose a technique which aims to keep frequently used data blocks in LLC, based on the insight that such data are also frequently written-back data. They dynamically partition each LLC set into a `frequent’ and an `infrequent’ writeback list. Then, the optimal size of each list is found based on the miss penalty for clean and dirty blocks. For example, for a 16-way cache, the size of these lists can be 4 and 12, respectively. If the optimal size of frequent writeback list equals the associativity of cache, their technique further uses set-sampling to check whether bypassing the read requests from cache provides smaller number of misses than not bypassing them. Based on this, the decision about bypassing the cache is taken. They show that thrashing workloads especially benefit from bypassing and overall, their technique leads to significant reduction in writebacks to NVM main memory.

6.2. Bypassing in Die-Stacked DRAM Caches

Chou et al. [37] note that DRAM caches consume bandwidth not only for data transfers on cache hits, but also for secondary operations e.g., miss detection, fill on a miss and writeback probe. They propose a technique which minimizes bandwidth used for each of these secondary operations. Since DRAM caches can stream multiple tags on every access, their technique buffers the tags of recently referenced adjacent cache lines in a separate storage. On a miss to on-chip last level SRAM cache (LLC), the request is first looked up in this storage and a hit in this avoids the need of miss probe in DRAM cache. To reduce bandwidth of cache fills, no-reuse lines can be bypassed. Since naive bypassing hurts hit rate, they perform bandwidth-aware bypass. They define a probabilistic-bypassing scheme which bypasses certain fraction (e.g., 90%) of total misses from the cache. Their technique uses set-dueling to dynamically choose a scheme from no-bypassing and probabilistic-bypassing that provides least miss-rate and then uses this scheme for the whole cache. Thus, their technique trades off bandwidth-saving with hit-rate degradation and allows controlling the hit-rate loss. To reduce bandwidth wasted in writeback probe, they use one bit with each cache line in LLC that tracks whether the line is present in DRAM cache. On eviction of a dirty line from LLC, this bit is checked, and if this bit indicates that the line is not present in DRAM cache, a writeback probe is avoided. By virtue of reducing bandwidth consumption, their technique reduces queuing delay which leads to reduced cache hit latency and improved performance.

Lee et al. [48] note that traditional DRAM caches use both TLB and cache tag array for performing virtual-to-physical and physical-to-cache address translation. However, these designs incur significant tag store overhead. They propose using caching granularity to be the same as OS page size (e.g., 4 KB) which avoids the need of tags altogether. They use a cache-map TLB (cTLB) which holds virtual-to-cache address mappings, instead of virtual-to-physical mappings. On a TLB miss, the requested block is allocated in cache (if not there already) and both page table and cTLB are updated with virtual-to-cache mapping. With large DRAM caches, an access to memory region within TLB reach always produces a cache hit since TLB directly provides the cache address of the desired block without requiring tag-checking. The remaining cache space works as victim cache for recently evicted memory pages of cTLB. For performing bypassing, they use an additional bit in page table which decides whether a page bypasses the DRAM cache (but not the on-chip caches e.g., L1 and L2). Using this, pages containing no or few useful blocks can be bypassed from DRAM cache. Similarly, for architectures that use superpages (e.g., 2 MB–1 GB), a superpage can be bypassed from DRAM cache if it does not have sufficient temporal or spatial locality. Further, when a same physical page is shared by multiple page tables, a physical page may be cached at multiple locations and to avoid this, shared pages can be bypassed from DRAM cache. To illustrate the potential of their design, they propose a CBT which sets bypassing flag for pages that have access count smaller than 32, assuming a page size of 4 KB and block size of 64 B. Their technique improves performance by reducing bandwidth consumption and increasing DRAM cache hit-rate.

7. CBTs for GPUs and CPU-GPU Heterogeneous Systems

Table 4 summarizes the CBTs proposed for GPUs and CPU-GPU systems and also highlights their characteristics.

Table 4.

A classification of CBTs proposed for GPUs and CPU-GPU systems.

We first discuss some key ideas used by these CBTs and then discuss several CBTs.

- In CPU-GPU systems, requests from GPUs can be bypassed by leveraging latency tolerance of GPU accesses (Table 4).

- Several techniques perform bypassing primarily based on reuse characteristics (or utility) of a block (Table 4). For example, these techniques may bypass streaming or thrashing blocks.

- Under GPU’s lock-step execution model, using different cache/bypass decision for different threads of a warp would create differences in their latencies and hence, all the threads would be stalled till the completion of last memory request. By making identical caching/bypassing decision for all threads, and by caching few warps at a time, these memory divergence issues can be avoided (Table 4). Based on these, some techniques seek to cache a warp fully and not partially [11,32,34,38,59,60,68]. Some techniques work by caching/bypassing two warps together or individually [69] or performing request reordering [34,64]. Thus, these techniques perform bypassing together with a thread management scheme.

- Some techniques perform bypassing when the resources (e.g., MSHR) for servicing a miss cannot be allocated (Table 4).

- For several GPU applications, the cores show symmetric behavior and hence, by comparatively evaluating different policies on just few cores, the optimal policy can be selected for all the cores. This strategy, referred to as core sampling, has been used by several CBTs to reduce their metadata overheads (Table 4). Li et al. [47] use core-sampling to ascertain cache friendliness of an application such that one core uses their bypassing scheme and another core uses default caching scheme and best scheme is found by comparing their miss-rates. Mekkat et al. [77] determine the impact of bypassing on GPU performance by using two different bypassing thresholds with two different cores. Chen et al. [11] estimate `protecting distance’ on a few cores and use this value for the remaining cores.

7.1. CBTs Based on Reuse Characteristics

Li et al. [47] propose a CBT for L1D caches in GPU. Their technique decouples the tag and data stores of L1D cache and uses locality filtering in tag store to decide which memory requests can allocate data blocks in data store. Each tag store entry keeps a reference counter (RC) to record the reuse frequency for that address. On a memory request, the tag store is probed. On a miss, a new tag entry is allocated and if no free entry is available, the entry with the smallest RC is selected as victim. In both cases, the request is bypassed from the cache. If tag store probe shows a hit, the corresponding data store entry is checked. If such an entry exists, the request proceeds as the regular cache hit. Otherwise, RC is incremented and compared against a threshold. If RC is lower than the threshold, the block is assumed to show little or no reuse and hence, is bypassed from the cache. However, if RC exceeds the threshold, a new entry is allocated in the data store and if no free block exists, a victim block is evicted. Also, the RC value of all other entries in the set are reduced by one, to ensure that entries with no reuse or distant reuse are eventually evicted from the tag store. This approach benefits cache-unfriendly applications, however, for cache-friendly applications, it delays storage of data in the cache which harms performance. To avoid this, they detect cache friendliness of an application during execution. Using core-sampling, one core uses their approach, while another core uses default caching approach. Periodically, the miss-rates of both the cores are compared and the approach used in the core showing lower miss-rate is then used for all the cores. They show that their technique provides energy saving and speedup in cache-unfriendly irregular applications without affecting cache-friendly regular applications.

Tian et al. [35] present a CBT which bypasses streaming values from L1 cache. They use PC of the last memory instruction to predict dead blocks since indexing the predictor using PCs of memory instructions incurs smaller storage overhead than indexing using addresses accessed as there are only few distinct PCs. On every access to the predictor table, a confidence value is obtained. If this value exceeds a threshold, the block accessed by that PC is predicted to be dead. Since wrong predictions lead to additional accesses to lower-level caches, they propose a scheme to correct mispredictions. On an L1 cache bypass, the information is sent to the L2 cache and is stored with the L2 block. If the block is accessed again before eviction from L2, this information is also sent along with the requested data. This indicates a possible error in prior bypass prediction. Based on it, this block is not bypassed the next time, but is inserted in L1 cache for verification and exploiting possible data reuse. By virtue of reducing cache pollution and avoiding unbeneficial cache fills/evictions, their technique improves performance and saves energy.

Choi et al. [63] propose write-buffering and read-bypassing for managing GPU caches. These techniques control data placement in shared L2 cache for reducing the memory traffic. They identify the data usage characteristics by code analysis and use this information to perform data placement for individual load/store instructions in the cache. By leveraging this, write-buffering uses shared cache for inter-block communication so that intermediate data need not be stored in off-chip memory. Read-bypassing avoids allocating streaming data in shared cache which are used by one thread-block only. These data are directly allocated in per-block shared memory or L1 cache. This frees L2 cache for storing shared data and/or data allocated for write-buffering. By virtue of reducing off-chip traffic, their techniques achieve large performance improvement.