Abstract

Neuromorphic circuits emulate the brain’s massively parallel, energy-efficient, and robust information processing by reproducing the behavior of neurons and synapses in dense networks. Memristive technologies have emerged as key enablers of such systems, offering compact and low-power implementations. In particular, locally active memristors (LAMs), which can amplify small perturbations within a locally active domain to generate action potential-like responses, provide powerful building blocks for neuromorphic circuits and offer new perspectives on the mechanisms underlying neuronal firing dynamics. This paper introduces a novel second-order LAM governed by two coupled state variables, enabling richer nonlinear dynamics than conventional first-order devices. Even when the capacitances controlling the two states are equal, the device retains two independent memory states, which broaden the design space for hysteresis tuning and allow flexible modulation of the current–voltage response. The second-order LAM is then integrated into a FitzHugh–Nagumo neuron circuit. The proposed circuit exhibits oscillatory firing behavior under specific parameter regimes and is further investigated under both DC and AC external stimulation. A comprehensive analysis of its equilibrium points is provided, followed by bifurcation diagrams and Lyapunov exponent spectra for key system parameters, revealing distinct regions of periodic, chaotic, and quasi-periodic dynamics. Representative time-domain patterns corresponding to these regimes are also presented, highlighting the circuit’s ability to reproduce a rich variety of neuronal firing behaviors. Finally, two unknown system parameters, the capacitances of the second-order LAM, are estimated using the Aquila Optimization algorithm with a cost function based on the system’s return map. Simulation results confirm the effectiveness of the algorithm for parameter estimation.

1. Introduction

Spiking and bursting activities represent fundamental signaling patterns in biological nervous systems, forming the basis for complex processes involved in computation, communication, and neurological disorders [1]. Over the past decades, researchers have proposed a variety of electronic and computational models to emulate the intricate behaviors of neurons and synapses, demonstrating their potential in artificial intelligence applications [2,3,4]. Neuromorphic computing has thus emerged as a transformative approach for developing future generations of intelligent hardware [5,6]. Recent studies have further highlighted the diversity of memristor-based neuromorphic applications. For example, a cross-hemispheric memristive neural network with a discrete corsage memristor has been proposed for secure telemedicine encryption, demonstrating how neuromorphic circuits can be leveraged in medical IoT contexts [7]. Similarly, memristor-based GFMM neural network circuits have been applied to multi-objective decision making in industrial autonomous firefighting systems [8]. More recently, tri-memristor hyperchaotic ring neural networks have been reported, combining dynamic analysis with hardware implementation, and applied to image encryption [9]. These works underline the increasing maturity of memristive neuromorphic technologies across different application domains. Such systems rely on networks of artificial neurons and synapses capable of processing sensory inputs with massive parallelism while consuming minimal energy [10,11]. Achieving scalable, high-density, and high-performance neuromorphic architectures, however, critically depends on the integration of memory-based devices that can function as both synaptic connections and neuron-like elements [12,13,14].

Various approaches have been explored for implementing artificial neurons in analog neural network circuits, including designs based on operational amplifiers, analog comparators, and CMOS inverters [15,16,17,18]. Among these, the memristor has emerged as one of the most promising components for constructing brain-inspired analog systems [19,20]. Its unique combination of non-volatility, low power operation, high integration density, and tunable resistance makes it an ideal candidate for neuromorphic circuits. Memristors have been extensively utilized in the design of neuron models, synaptic elements, and large-scale neural networks [21,22,23,24,25,26]. Compared with traditional silicon-based devices, memristors offer smaller device dimensions, superior energy efficiency, and seamless scalability. Moreover, their intrinsic nonlinear and non-volatile behavior mirrors the adaptive properties of biological synapses, while single-compartment memristors can emulate ion-channel dynamics underlying neuronal spiking [27,28]. Consequently, memristors have become a key building block for developing compact, energy-efficient neuromorphic systems capable of complex information processing [29,30,31]. Recent application-oriented contributions include memristive neural networks designed for secure telemedicine [32] and for multi-objective decision-making [33], which highlight the growing role of memristive devices in system-level neuromorphic applications.

Replicating the dynamic behaviors of biological neural networks through memristive neuromorphic systems has become a compelling strategy for advancing artificial intelligence devices. A significant development in this context is the concept of the locally active memristor (LAM), first introduced by Chua in 2014 [34]. Unlike conventional passive memristive devices, a LAM exhibits negative differential resistance or conductance in its current-voltage characteristic [35,36]. This unique property enables it to operate within a locally active domain (LAD), where even small perturbations can be amplified to generate action potential-like responses. Such intrinsic excitability makes LAMs particularly advantageous for implementing spiking and bursting mechanisms in neuromorphic circuits, providing a natural pathway toward compact and energy-efficient neuron emulators [37,38]. Xu et al. [39] proposed an implementable Hodgkin–Huxley (HH) circuit incorporating two N-type LAMs to emulate ion channel properties, successfully reproducing both periodic and chaotic firing activities. Ding et al. [40] developed a fully memory-element-emulator-based bionic circuit and investigated its rich dynamical behaviors and firing patterns. More recently, Mou et al. [41] employed a novel LAM model to couple FitzHugh–Nagumo and Hindmarsh–Rose neurons, revealing complex dynamical characteristics and further proposing a digital signal processing (DSP)-based implementation. These studies collectively demonstrate the promise of first-order LAM devices for modeling neuronal excitability, hardware prototyping, and application-driven architectures. However, they remain fundamentally constrained by the single-state nature of the memristor models employed.

The FitzHugh–Nagumo (FHN) neuron circuit, derived as a simplification of the Hodgkin–Huxley model, is widely used due to its simplicity and ability to reproduce spiking dynamics. Over the years, this circuit and its enhanced variants have been extensively studied in the literature [42,43,44]. Incorporating locally active memristors into the FHN framework represents an important advancement, as it enhances the circuit’s capability to generate diverse neural firing behaviors. In this paper, we propose a novel second-order locally active memristor (LAM) integrated into the FHN circuit.

The main contributions of this work are summarized as follows. First, we design a second-order LAM featuring two coupled state variables. Unlike conventional first-order LAMs, which contain only a single state variable and therefore a single memory timescale, the proposed second-order structure provides a higher-dimensional dynamic memory. This enables richer hysteresis characteristics, flexible modulation of nonlinear responses, and the reproduction of complex firing transitions such as quasi-periodic oscillations and mixed-mode bursting that are difficult to capture with first-order devices. Even when the two capacitances are equal, the device maintains independent state evolutions, broadening the potential for neuromorphic design. Second, we integrate the proposed second-order LAM into the FitzHugh–Nagumo neuron model and study its response under both direct current (DC) and alternating current (AC) external excitations. Compared with previously reported N-type LAM-based FHN circuits [37], which reproduced periodic and chaotic firing behaviors with hardware validation but were limited to first-order memristor dynamics, our approach introduces an additional degree of freedom in the device itself. This higher-order formulation allows the system to exhibit a broader range of firing regimes, including chaotic bursting even in the absence of AC stimulation and periodic spiking even in the absence of any stimulation. Third, we address the parameter estimation problem by applying the Aquila Optimization algorithm with a return-map-based cost function. The estimation task focuses on identifying the two capacitances of the second-order LAM, which are essential for reproducing the correct firing behaviors of the circuit.
While earlier LAM–FHN studies primarily focused on dynamical analysis and experimental verification, our work complements them by demonstrating that parameter identification remains feasible even in chaotic regimes, where estimation based on direct time-series comparison typically fails.

2. Circuit Modeling

2.1. Description of the Second-Order LAM

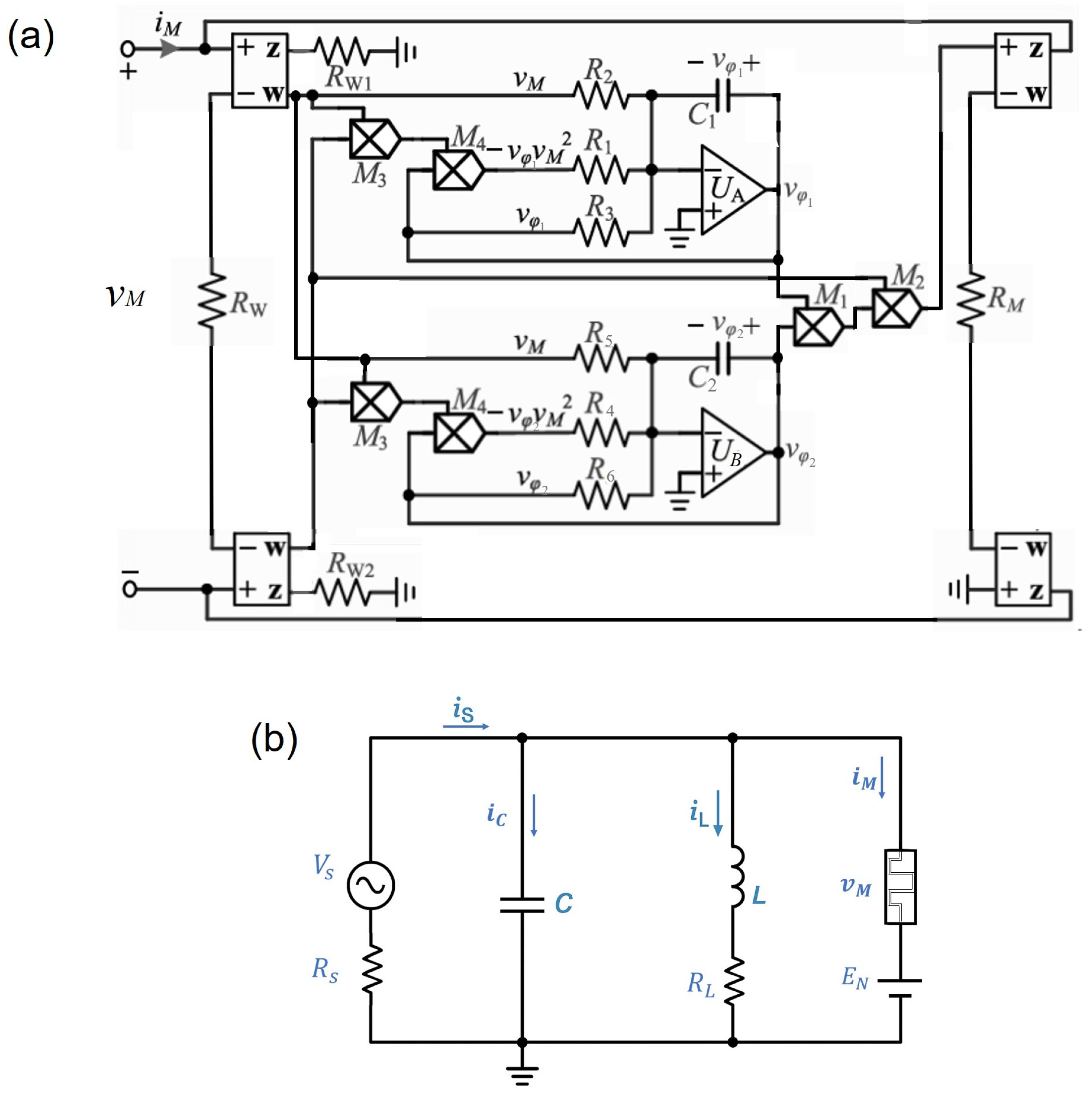

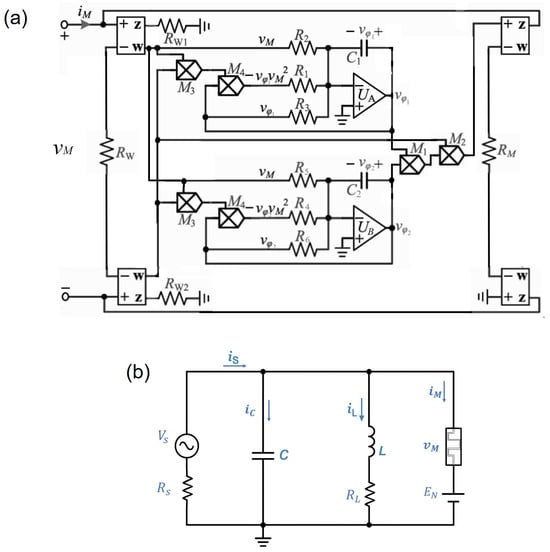

In this study, a second-order LAM is introduced, depicted schematically in Figure 1a. The LAM is constructed using a combination of operational amplifiers and analog multipliers to emulate the properties of a memristor. The setup consists of two main components: a floating ground module and an integrator module. The floating ground module is implemented using current-feedback operational amplifiers, which create a virtual ground decoupled from the rest of the system, enabling precise control of voltage and current states. The integrator module, implemented with an operational amplifier, is responsible for capturing the internal state of the memristor and providing feedback into the system. This configuration enables the LAM to simulate memristive behaviors, with the voltage and current relationship governed by two internal state variables. The state equations for this system can be expressed as:

where represents the current passing through the LAM, the terminal voltage, and and are the internal state variables. are the gains of analog multipliers to . We adjust the circuit parameters of the N-type LAM as , , kΩ, kΩ, kΩ, kΩ, kΩ, kΩ. The capacitance values are initially set to C1 = C2 = 100 nF, and later varied during dynamical analysis to obtain richer behaviors.

Figure 1.

(a) Schematic of the second-order locally active memristor (LAM); (b) Schematic of the FitzHugh–Nagumo circuit containing the second-order LAM.

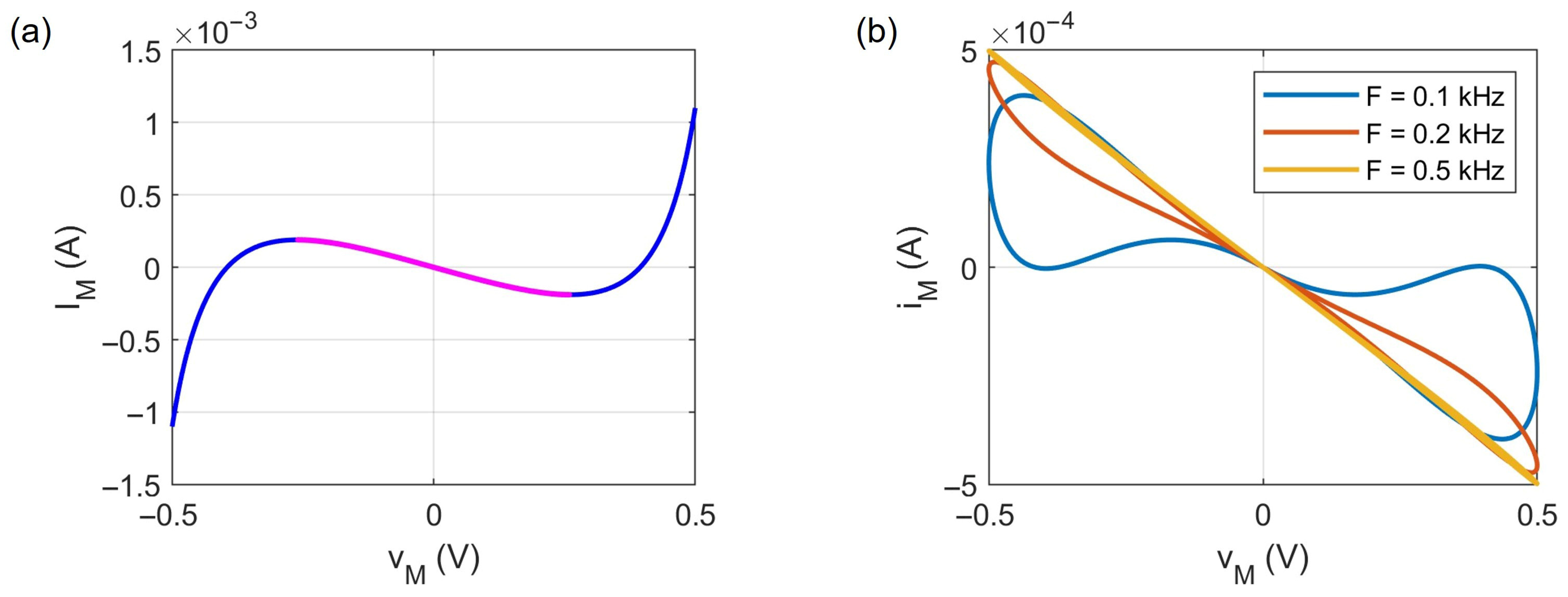

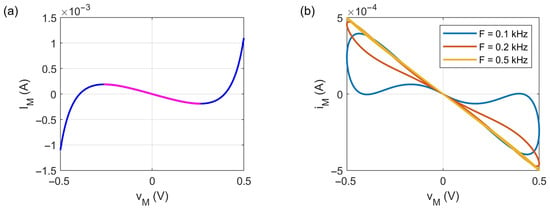

To validate the behavior of the second-order LAM, we examine its DC curve and hysteresis loops. The DC voltage-current (V-I) characteristic provides insight into the internal properties of the device, while the hysteresis loops reflect its dynamic response to alternating signals and demonstrate its memristive nature.

The DC curve is obtained by setting the time derivatives of the internal state variables to zero. The resulting V-I curve, shown in Figure 2a, exhibits the N-shaped characteristic typical of LAM devices. For terminal voltages between −0.267 V and 0.267 V, the curve has a negative slope (negative differential conductance), indicating that the device operates in its locally active domain (LAD) when biased in this range. This negative-slope region is the hallmark of local activity, whereas the memristive nature of the device is confirmed by the hysteresis loops. The loops in Figure 2b are obtained by applying an AC signal at several frequencies (e.g., 0.1 kHz, 0.2 kHz, and 0.5 kHz). They are pinched, i.e., they pass through the origin, and the area enclosed by their lobes shrinks as the frequency increases. This frequency-dependent behavior is a defining fingerprint of memristive systems and agrees with theoretical expectations for such devices. The hysteresis loops also reflect the ability of the second-order LAM to retain memory of past voltage and current states. Together, the N-shaped DC curve and the frequency-dependent pinched hysteresis loops confirm that the second-order LAM operates as a voltage-controlled memristor with the memory and nonlinearity needed for practical applications in memory storage and neuromorphic computing. Having established the device characteristics of the second-order LAM, we next investigate how it behaves when embedded in a neuromorphic circuit.
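A quick way to locate the locally active domain from a sampled DC curve is to find where the numerical slope dI/dV is negative. The sketch below does this with NumPy. Since the LAM state equations are not reproduced here, it uses a hypothetical cubic stand-in, i = v³ − 3a²v with a = 0.267, chosen only because its negative-slope interval is exactly |v| < a; the function name `ndc_intervals` is illustrative.

```python
import numpy as np

def ndc_intervals(v, i):
    """Return (v_start, v_end) pairs where the sampled DC curve has dI/dV < 0.

    v must be increasing; the slope is estimated with central differences.
    """
    v = np.asarray(v, dtype=float)
    i = np.asarray(i, dtype=float)
    slope = np.gradient(i, v)            # numerical dI/dV at every sample
    negative = slope < 0.0
    intervals = []
    start = None
    for k, is_neg in enumerate(negative):
        if is_neg and start is None:
            start = k                     # entering a negative-slope run
        elif not is_neg and start is not None:
            intervals.append((v[start], v[k - 1]))
            start = None
    if start is not None:                 # run extends to the end of the sweep
        intervals.append((v[start], v[-1]))
    return intervals

# Hypothetical stand-in for the DC curve (NOT the paper's LAM model):
# i = v**3 - 3*a**2*v is N-shaped and has a negative slope exactly for |v| < a.
a = 0.267
v = np.linspace(-1.0, 1.0, 2001)
i = v**3 - 3 * a**2 * v
print(ndc_intervals(v, i))
```

Applied to a DC sweep of the actual device model, the same function should return the locally active interval up to the resolution of the voltage grid; central differences can shift the interval edges by about one grid step.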

Figure 2.

(a) The DC V-I curve of the second-order LAM; the blue color represents the passive region with positive slope, while the magenta color shows the region with negative slope defining the locally active domain. (b) Pinched hysteresis loops of the second-order LAM under an applied AC signal 0.5 sin(2πft).

2.2. The Neuromorphic Circuit Using the Second-Order LAM

In this section, the second-order LAM is integrated into a neuromorphic circuit based on the FitzHugh–Nagumo (FHN) model, as depicted in Figure 1b. The circuit is designed to emulate excitable dynamics of the kind commonly observed in neurons. The incorporation of the second-order LAM enables the circuit to exhibit complex, memory-dependent responses suitable for neuromorphic applications.

The system consists of several key components: the second-order LAM, an inductor, resistors, a capacitor, and an external voltage source. The LAM introduces a memristive element that interacts with the other components, enabling the circuit to exhibit the nonlinear and hysteretic behavior typical of biological neurons.

The key equations governing the behavior of the circuit are as follows:

where is the membrane potential of the neuron and is the inductor current. is the voltage across the memristor, which is equal to , where is the reference voltage (set to 0 so that the LAM operates in its locally active domain). is the external voltage source, and is the membrane capacitance. The constants are chosen based on the circuit design as kΩ, kΩ, mH. In the subsequent dynamical analysis, two different sets of capacitance values are considered. In the first case, the parameters are chosen as C1 = C2 = 100 nF and C = 10 nF. In the second case, the capacitances are reduced to C1 = C2 = 0.1 nF and C = 1 nF. The larger capacitances of the first set slow down the circuit dynamics, leading primarily to stable equilibria or low-frequency oscillations, whereas the smaller capacitances of the second set accelerate the dynamics and promote oscillatory and chaotic bursting regimes. This choice reflects the role of capacitance in biological neurons, where membrane capacitance determines how quickly neurons integrate and respond to stimuli. Larger capacitances mimic the slower dynamics of regular spiking neurons, whereas smaller capacitances emulate excitable neurons or synapses with faster response characteristics. By employing these two configurations, we demonstrate that the proposed second-order LAM circuit can flexibly reproduce diverse neuronal firing behaviors relevant to real neural systems.

Given the inherently fast dynamics of the proposed circuit, direct analysis in the original time scale can be cumbersome and less illustrative. To address this, we perform a time rescaling by substituting t with , where is chosen to normalize the system’s temporal evolution and facilitate both analytical treatment and numerical visualization. For the first parameter set of capacitances, , reflecting the moderate timescale of the dynamics, while for the second parameter set, , corresponding to the significantly faster response of the system. The subsequent analysis is conducted on this rescaled model, enabling a clearer representation of the system’s behavior and more efficient computation of its dynamical properties. To better understand the fundamental behaviors of the system, we now analyze its equilibrium points and stability.
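The effect of such a substitution can be written out generically. In the sketch below, k > 0 is a placeholder for the rescaling factor (its values for the two capacitance sets are not reproduced here), f is the right-hand side of the circuit equations, and the capacitor relation is included only to show how each coefficient changes.

```latex
\begin{aligned}
t = k\,\tau,\; k>0 \quad &\Longrightarrow\quad \frac{d\mathbf{x}}{d\tau} = \frac{d\mathbf{x}}{dt}\,\frac{dt}{d\tau} = k\,\mathbf{f}(\mathbf{x}),\\
C\,\frac{dv}{dt} = i \quad &\Longrightarrow\quad \frac{dv}{d\tau} = \frac{k}{C}\, i .
\end{aligned}
```

Because k f(x) = 0 exactly when f(x) = 0, the rescaling leaves the equilibria unchanged. It multiplies every Jacobian eigenvalue and every Lyapunov exponent by k > 0, so their signs, and hence the stability and regime classifications, carry over to the original time scale. Keeping coefficients such as k/C of order one suggests a smaller k for smaller capacitances.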

2.3. Equilibrium Analysis

In the equilibrium analysis, it is assumed that the external stimulus is constant. To analyze the equilibrium point of the system, we set the time derivatives of the state variables to zero. This corresponds to a situation where there is no change in the state of the system over time, implying that the circuit is at rest. The equilibrium point is determined by solving the system of equations where all the derivatives are zero, as shown below,

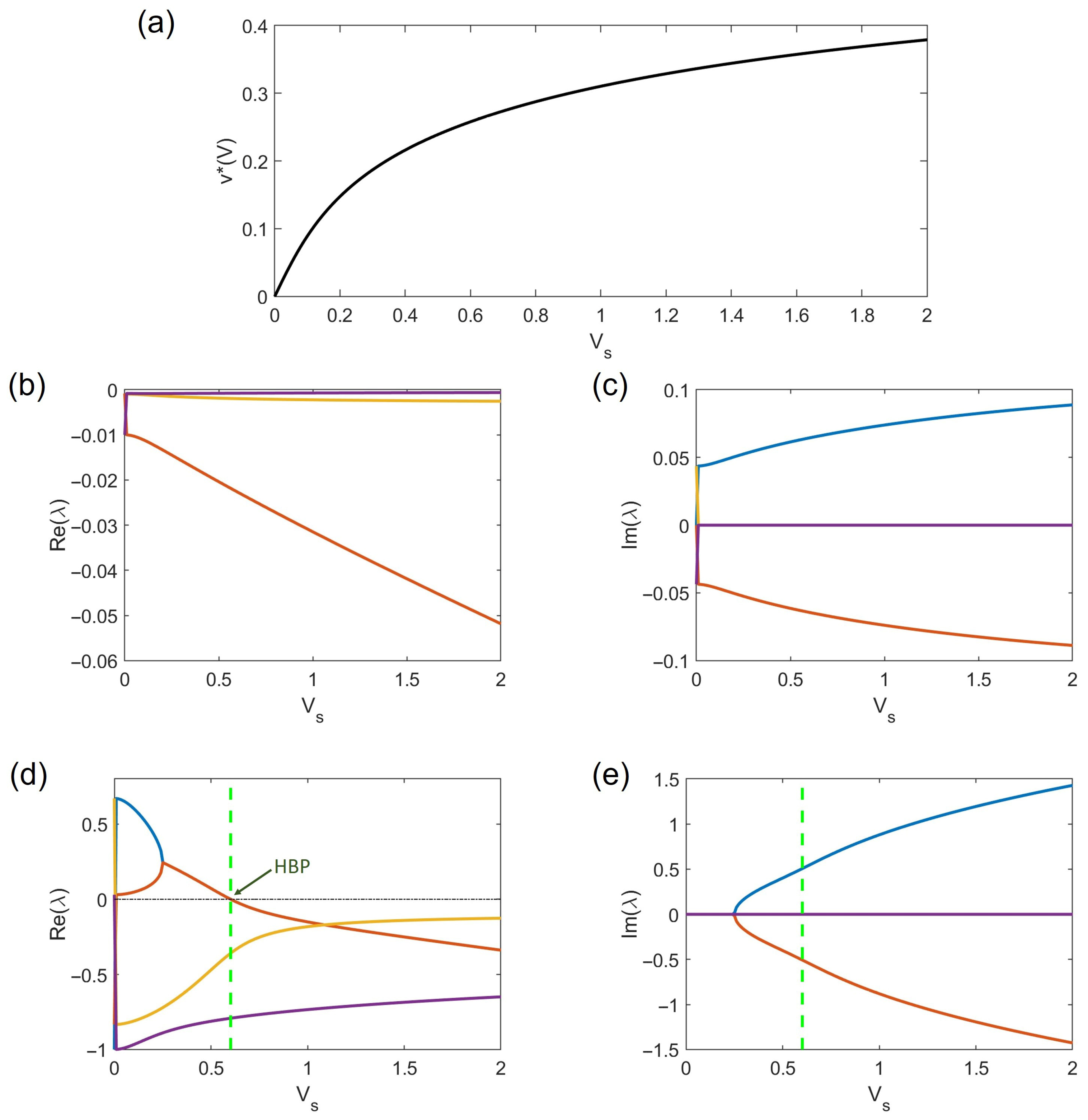

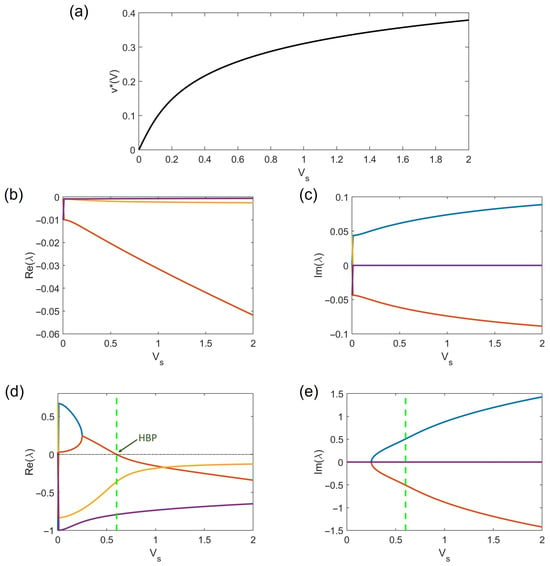

Figure 3a shows the equilibrium voltage v* as a function of Vs. This figure shows only the continuation of the equilibrium solution as the external stimulus increases; the smooth variation indicates that an equilibrium point persists over the whole range. Its stability, however, must be determined from the eigenvalue spectrum of the Jacobian. The Jacobian matrix at E can be derived as,

Substituting the system parameter values into the Jacobian matrix yields the eigenvalues at each equilibrium point. These eigenvalues determine local stability: if all eigenvalues have negative real parts, the equilibrium is asymptotically stable; if any eigenvalue has a positive real part, it is unstable. The location of the equilibrium point is independent of the capacitance values, since the capacitances only scale the time derivatives and therefore drop out when these are set to zero. The stability of the equilibrium, however, is affected by the capacitances through their influence on the Jacobian matrix. We therefore show the eigenvalues of the Jacobian at the equilibrium for both capacitance sets.
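The two steps described above, locating the equilibrium and reading stability off the Jacobian eigenvalues, can be sketched numerically. Because the circuit equations are not reproduced in this section, the sketch applies the procedure to a hypothetical stand-in: the classic two-variable FitzHugh–Nagumo model with textbook parameters (a = 0.7, b = 0.8, ε = 0.08) and a constant stimulus I playing the role of Vs. The helper names are illustrative. Newton's method with a central-difference Jacobian finds the equilibrium, each solution seeds the next stimulus value (a simple continuation, as in Figure 3a), and the largest real part of the eigenvalues gives the classification.

```python
import numpy as np

# Hypothetical stand-in (NOT the four-variable LAM circuit):
# dv/dt = v - v**3/3 - w + I,  dw/dt = EPS*(v + A - B*w)
A, B, EPS = 0.7, 0.8, 0.08

def fhn(x, I):
    v, w = x
    return np.array([v - v**3 / 3.0 - w + I, EPS * (v + A - B * w)])

def numerical_jacobian(f, x, h=1e-6):
    """Central-difference Jacobian of f at x."""
    x = np.asarray(x, dtype=float)
    cols = []
    for j in range(x.size):
        dx = np.zeros_like(x)
        dx[j] = h
        cols.append((f(x + dx) - f(x - dx)) / (2.0 * h))
    return np.column_stack(cols)

def newton_equilibrium(f, x0, tol=1e-12, max_iter=50):
    """Solve f(x) = 0 by Newton's method with a numerical Jacobian."""
    x = np.asarray(x0, dtype=float)
    for _ in range(max_iter):
        fx = f(x)
        if np.linalg.norm(fx) < tol:
            return x
        x = x + np.linalg.solve(numerical_jacobian(f, x), -fx)
    raise RuntimeError("Newton iteration did not converge")

def classify(eigenvalues, tol=1e-9):
    """Linear stability from the largest real part of the eigenvalues."""
    max_re = np.max(np.real(eigenvalues))
    if max_re < -tol:
        return "stable"
    if max_re > tol:
        return "unstable"
    return "non-hyperbolic (e.g. at a Hopf point)"

# Continuation in the stimulus I: reuse each equilibrium as the next guess.
guess = np.array([-1.0, -0.5])
for I in np.linspace(0.0, 1.0, 11):
    f = lambda x, I=I: fhn(x, I)
    guess = newton_equilibrium(f, guess)
    eig = np.linalg.eigvals(numerical_jacobian(f, guess))
    print(f"I={I:.1f}  v*={guess[0]:+.4f}  {classify(eig)}")
```

For the actual circuit, the same functions would operate on the four-dimensional vector field, giving a 4 × 4 Jacobian and the four eigenvalues plotted in Figure 3.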

Figure 3.

(a) The equilibrium voltage v* as a function of constant Vs; (b,c) Real and imaginary parts of the eigenvalues of the Jacobian matrix for the capacitance values C1 = C2 = 100 nF and C = 10 nF; (d,e) Real and imaginary parts of the eigenvalues of the Jacobian matrix for the capacitance values C1 = C2 = 0.1 nF and C = 1 nF. The four eigenvalues are shown in blue, red, yellow and purple colors and the dashed green line shows the Hopf bifurcation point.

The real and imaginary parts of the Jacobian’s eigenvalues are shown in Figure 3b,c for the first capacitance set and in Figure 3d,e for the second. For the first parameter set, all eigenvalues have negative real parts for every stimulus Vs considered; therefore, the equilibrium point is always stable. For the second parameter set, the stability of the equilibrium point depends on the value of Vs.

For small Vs, one complex-conjugate pair of eigenvalues lies in the right half-plane (Re λ > 0); therefore, the equilibrium, although still present, is unstable. As Vs increases, this pair moves continuously toward the imaginary axis, and at a critical value of Vs its real part crosses zero while its imaginary part remains nonzero. This is the eigenvalue condition for a Hopf bifurcation: the equilibrium changes stability as a pair of complex-conjugate eigenvalues crosses the imaginary axis. The dashed green line in Figure 3d,e marks this Hopf bifurcation point.

The qualitative nature of a Hopf bifurcation is determined by the sign of the first Lyapunov coefficient ℓ1, which governs the amplitude equation on the center manifold. If ℓ1 < 0, the Hopf bifurcation is supercritical: a stable small-amplitude limit cycle is born on the side where the equilibrium is unstable, while the equilibrium is stable on the other side. If ℓ1 > 0, the bifurcation is subcritical: an unstable limit cycle surrounds the equilibrium on its stable side, and loss of stability leads to an abrupt jump to large-amplitude oscillations, often accompanied by bistability and hysteresis. In this system, ℓ1 was computed from the nonlinear terms of the vector field projected onto the critical eigenvectors, following the standard formula, and was found to be negative, which confirms that the Hopf bifurcation is supercritical. This classification matches direct time-domain simulations, in which stable small-amplitude oscillations appear for Vs just below the Hopf point and shrink to zero as Vs approaches it.
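To make the role of ℓ1 concrete, the truncated Hopf normal form in polar coordinates is sketched below. Here μ is a generic unfolding parameter standing for the (rescaled) real part of the critical eigenvalue pair, with μ > 0 on the unstable side of the equilibrium, which for this circuit corresponds to Vs below the Hopf point. The cubic coefficient is assumed to equal ℓ1 up to a positive normalization factor.

```latex
\begin{aligned}
\dot r &= \mu\, r + \ell_1\, r^{3}, \qquad \dot\theta \approx \omega_0,\\
\dot r = 0,\ r > 0 \;&\Longrightarrow\; r^{\ast} = \sqrt{-\mu/\ell_1}\quad (\text{requires } \mu/\ell_1 < 0),\\
\left.\frac{\partial \dot r}{\partial r}\right|_{r^{\ast}} &= \mu + 3\ell_1 r^{\ast 2} = -2\mu .
\end{aligned}
```

With ℓ1 < 0 the cycle exists only for μ > 0, where the last line shows it is stable, and its amplitude grows like √μ, i.e., like the square root of the distance from the Hopf point. With ℓ1 > 0 the cycle exists for μ < 0 and is unstable, which is the subcritical case.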

3. Dynamical Behaviors

This section presents the nonlinear dynamical behaviors of the proposed circuit under DC and AC external stimulation. We first study the case of DC excitation (Section 3.1) and then investigate AC-driven dynamics (Section 3.2).

3.1. DC External Stimulation

As shown in Section 2.3, the location of the equilibrium point is independent of the capacitance values, while its stability depends on them through the Jacobian matrix. For the first parameter set of capacitances (C1 = C2 = 100 nF, C = 10 nF), the equilibrium point remains stable for every DC stimulus considered, corresponding to a quiescent neuronal state.

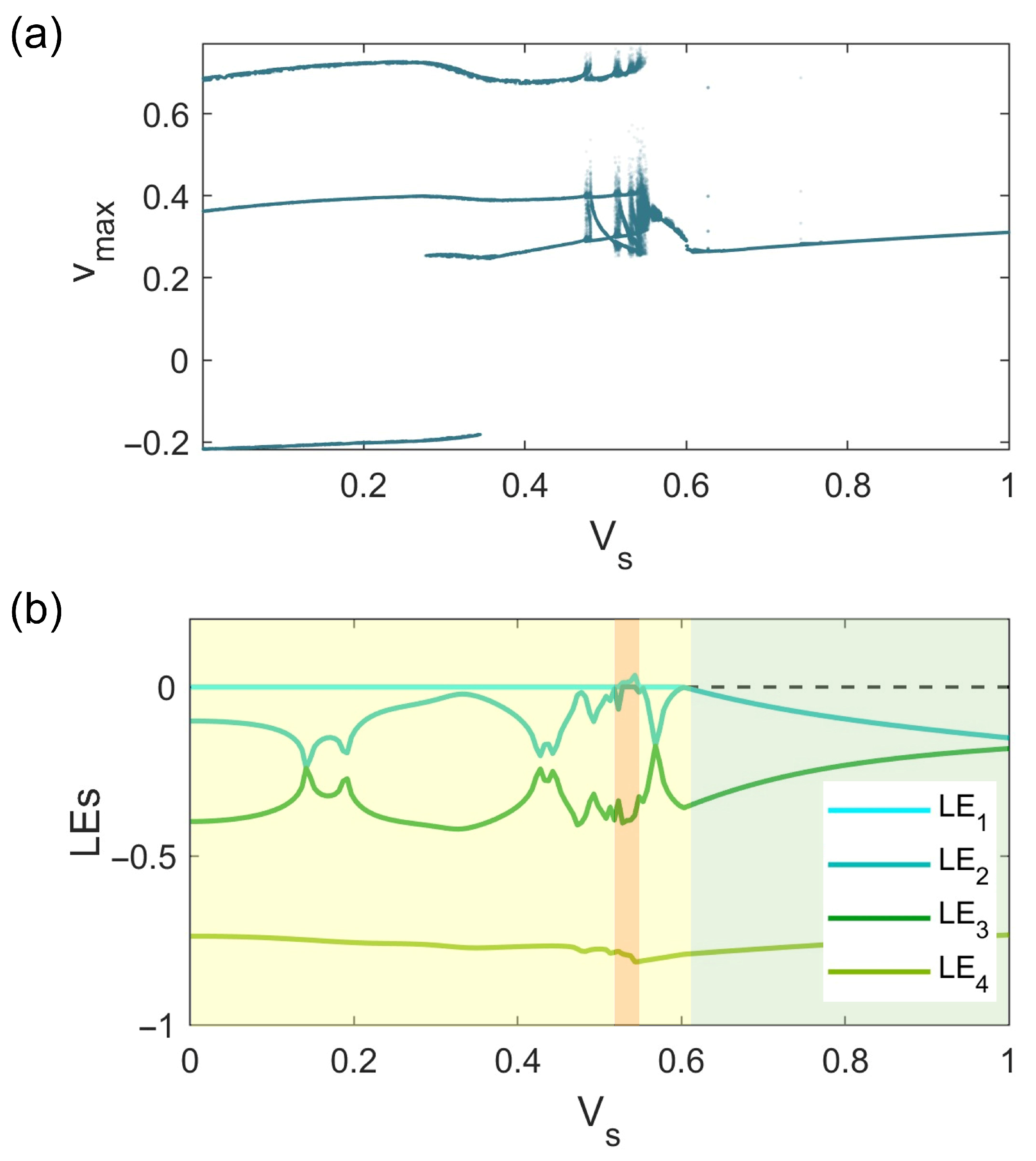

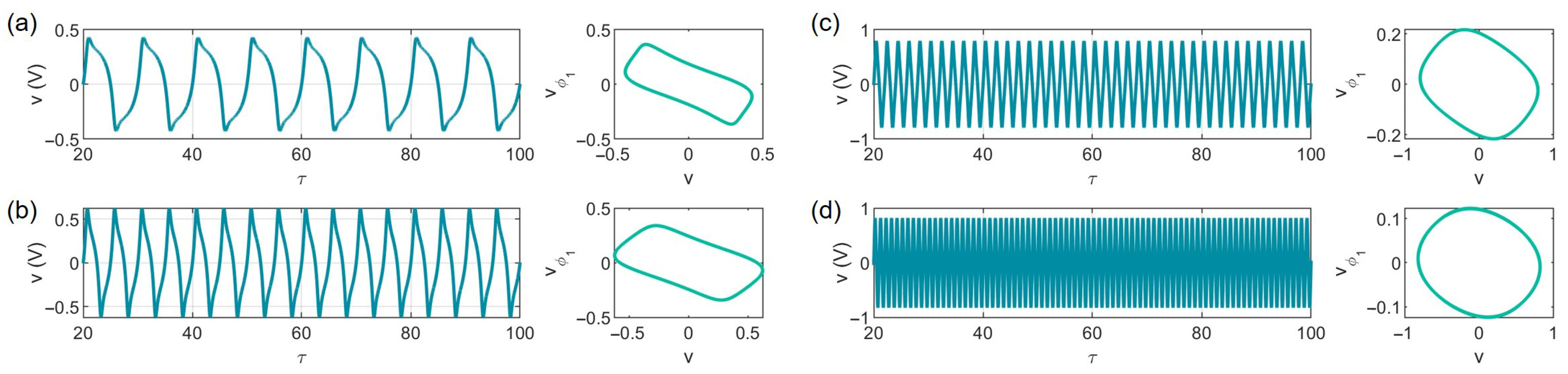

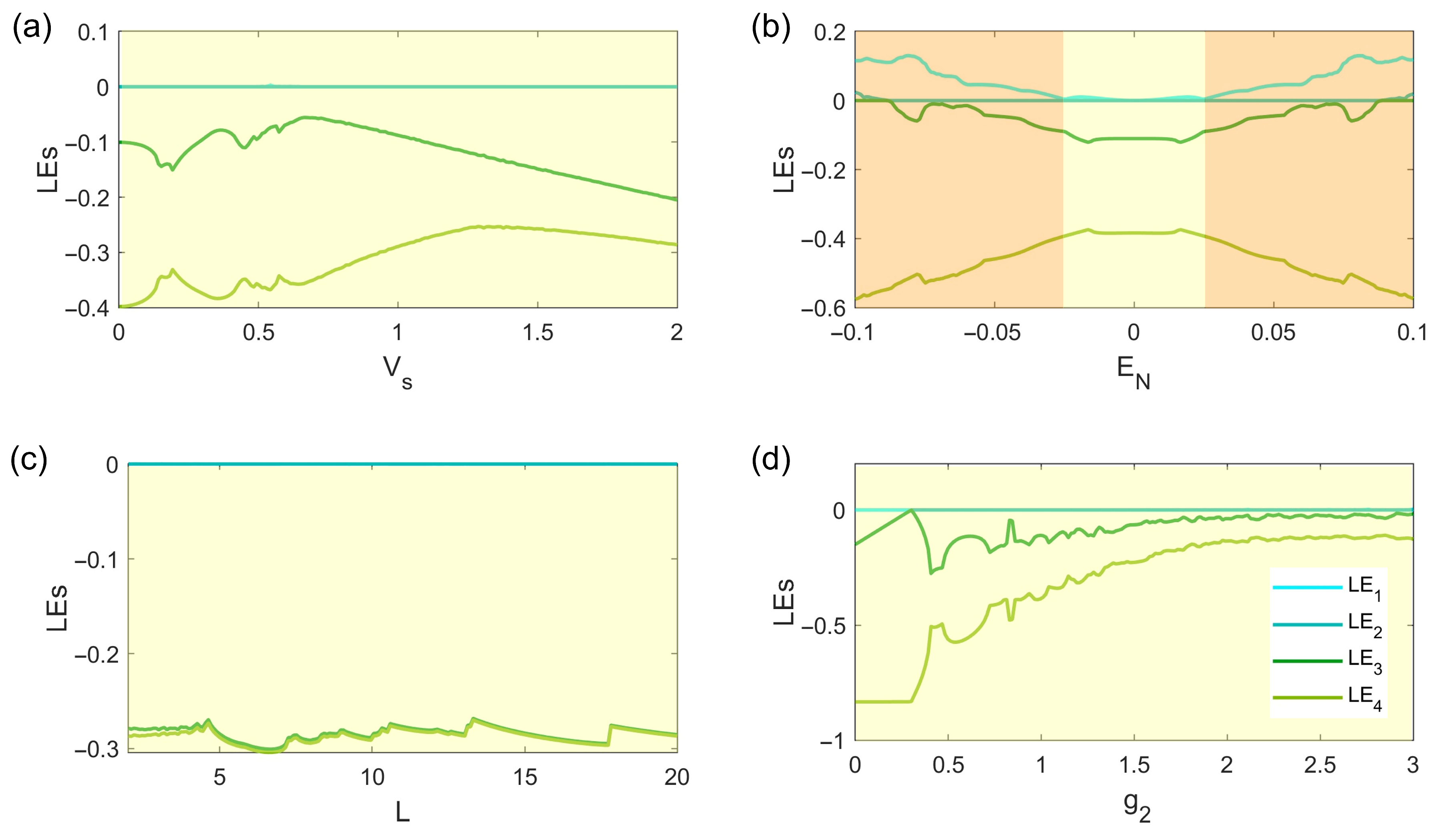

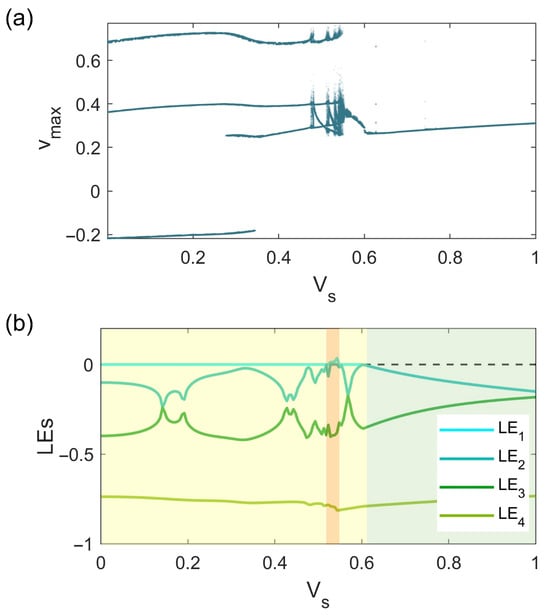

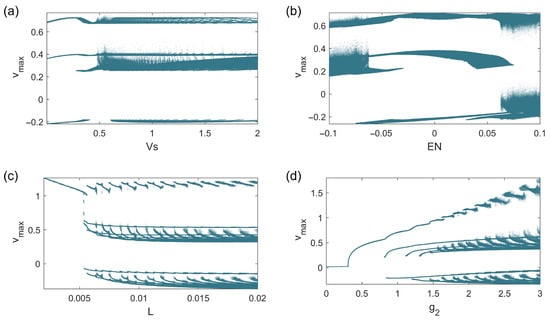

In contrast, for the second parameter set (C1 = C2 = 0.1 nF, C = 1 nF), the system undergoes a Hopf bifurcation, giving rise to sustained oscillations analogous to regular spiking. The bifurcation diagram for this case is shown in Figure 4a, and the corresponding Lyapunov exponents (LEs) are presented in Figure 4b. The LEs are computed using the Wolf algorithm [45]. For Vs above a critical threshold (green region in Figure 4b), all LEs are negative, indicating convergence to a stable fixed point. Below this threshold, the largest Lyapunov exponent (LLE) is zero, signifying periodic oscillations, except in the interval shaded orange in Figure 4b, where LLE > 0 and the system exhibits chaotic dynamics.
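The LE computation can be illustrated with a compact estimator of the largest exponent. The paper uses the Wolf algorithm [45]; the sketch below instead uses the closely related two-trajectory renormalization scheme (commonly attributed to Benettin et al.), which follows a reference orbit and a nearby companion, periodically rescales their separation back to d0, and averages the logarithmic growth. The helper names, the RK4 integrator, and the default step sizes are assumptions. The sketch yields only the LLE; the full spectrum in Figure 4b would require evolving several tangent vectors with repeated Gram–Schmidt (QR) orthonormalization, and for the AC-driven case the forcing phase would have to be appended to the state to make the system autonomous.

```python
import numpy as np

def rk4_step(f, x, dt):
    """One classical fourth-order Runge-Kutta step for dx/dt = f(x)."""
    k1 = f(x)
    k2 = f(x + 0.5 * dt * k1)
    k3 = f(x + 0.5 * dt * k2)
    k4 = f(x + dt * k3)
    return x + dt / 6.0 * (k1 + 2 * k2 + 2 * k3 + k4)

def largest_lyapunov(f, x0, dt=0.01, n_transient=1000, n_steps=100_000,
                     renorm_every=10, d0=1e-8):
    """Two-trajectory (Benettin-style) estimate of the largest LE."""
    x = np.asarray(x0, dtype=float)
    for _ in range(n_transient):           # let the reference orbit settle
        x = rk4_step(f, x, dt)
    direction = np.ones_like(x) / np.sqrt(x.size)
    y = x + d0 * direction                  # nearby companion trajectory
    log_sum = 0.0
    n_renorm = n_steps // renorm_every
    for _ in range(n_renorm):
        for _ in range(renorm_every):
            x = rk4_step(f, x, dt)
            y = rk4_step(f, y, dt)
        d = np.linalg.norm(y - x)
        log_sum += np.log(d / d0)           # growth over this interval
        y = x + (y - x) * (d0 / d)          # rescale separation back to d0
    return log_sum / (n_renorm * renorm_every * dt)
```

As a sanity check, for the Lorenz system with σ = 10, ρ = 28, β = 8/3, the estimate should come out close to the commonly reported value of about 0.9 once n_steps is large enough.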

Figure 4.

Bifurcation diagram (a) and the Lyapunov exponents (b) of the system versus the constant Vs (DC external stimulation). Background colors indicate dynamical regimes classified by the largest Lyapunov exponent (LLE): green background = fixed point (LLE < 0), yellow background = periodic or quasi-periodic (LLE ≈ 0), orange background = chaotic (LLE > 0). The dashed black line shows zero axis.

According to the bifurcation diagram, the system demonstrates period-3 oscillations for , followed by period-4 dynamics up to , and then returns to period-3 behavior. In the range , the dynamics alternate between different periodic regimes. Finally, for , the system transitions to period-1 oscillations before converging to a fixed point for higher values of .
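The bifurcation diagrams in this section plot the local maxima of a state variable against the control parameter after transients have died out. A minimal sketch of that bookkeeping is given below, assuming a user-supplied (hypothetical) `simulate(p)` function that returns a sampled time series for parameter value p. The helper `count_distinct_levels` is only a rough heuristic for reading off a period-n label, not necessarily how the periods above were determined: peaks of equal height within one cycle would be merged, and chaotic or quasi-periodic windows show up as a large count.

```python
import numpy as np

def local_maxima(x):
    """Values of the strict interior local maxima of a sampled signal."""
    x = np.asarray(x, dtype=float)
    interior = x[1:-1]
    return interior[(interior > x[:-2]) & (interior > x[2:])]

def count_distinct_levels(values, tol=1e-3):
    """Number of peak-height clusters separated by gaps larger than tol."""
    v = np.sort(np.asarray(values, dtype=float))
    if v.size == 0:
        return 0
    return int(1 + np.count_nonzero(np.diff(v) > tol))

def bifurcation_data(params, simulate, discard_fraction=0.5):
    """(parameter, peak) pairs after discarding the transient part of each run.

    simulate(p) is a hypothetical user-supplied function returning a sampled
    time series of the observed state variable for parameter value p.
    """
    points = []
    for p in params:
        x = np.asarray(simulate(p), dtype=float)
        x = x[int(discard_fraction * x.size):]      # keep the steady-state part
        points.extend((p, peak) for peak in local_maxima(x))
    return np.array(points, dtype=float).reshape(-1, 2)

# Toy signal whose cycle has three distinct peak heights (a "period-3" pattern).
toy = np.tile([0.0, 1.0, 0.0, 2.0, 0.0, 3.0], 10)
print(count_distinct_levels(local_maxima(toy)))
```

Scattering the two columns returned by `bifurcation_data` gives a diagram in the style of Figure 4a.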

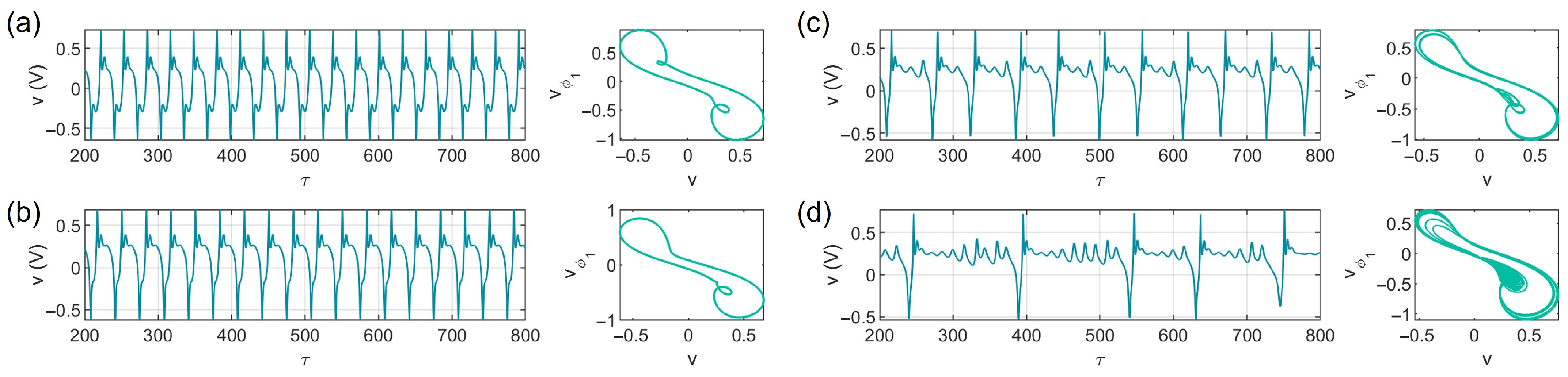

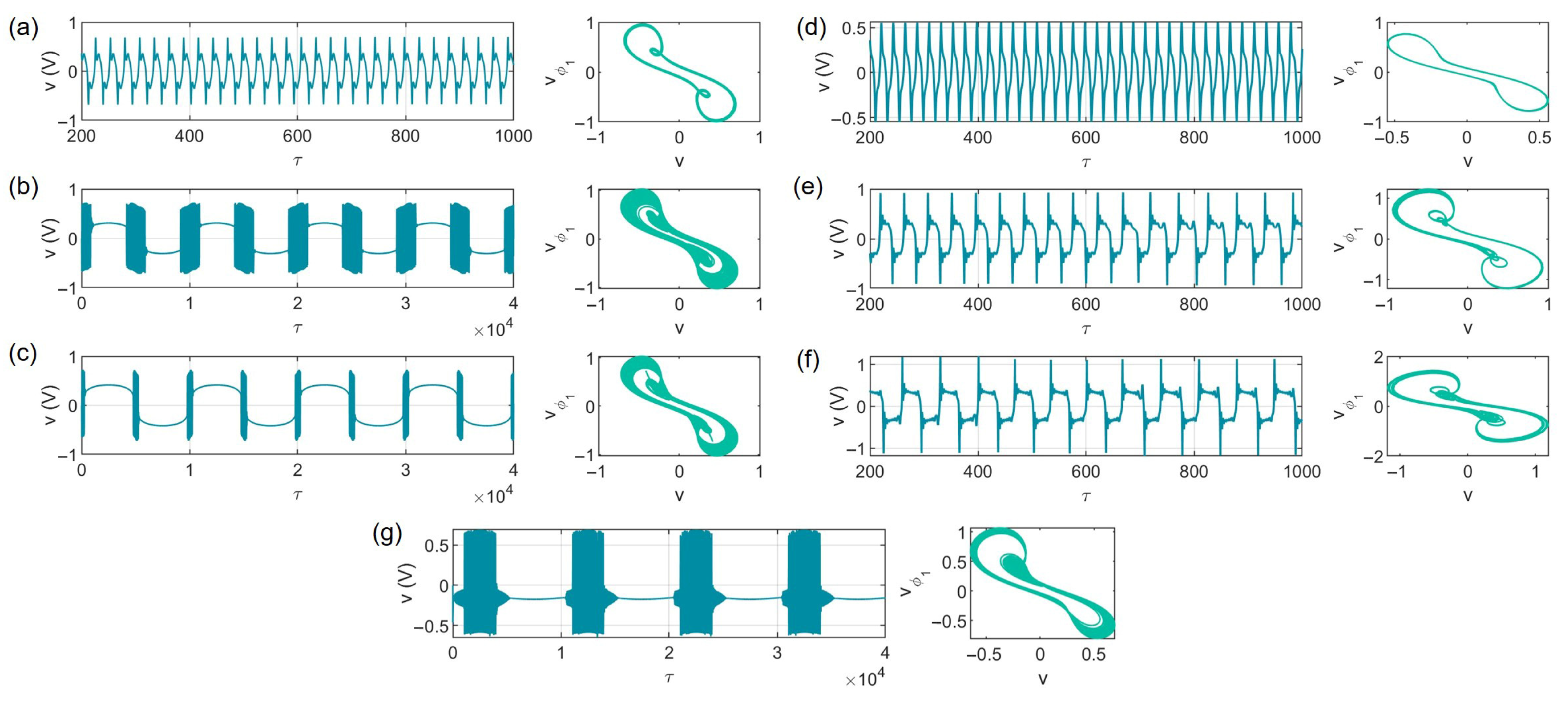

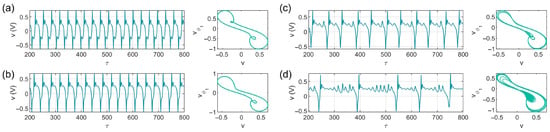

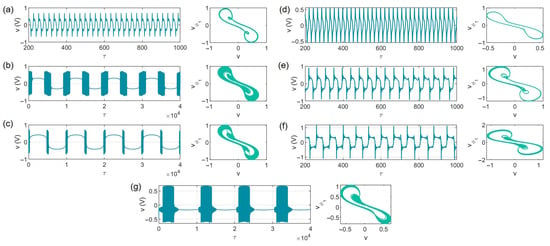

Representative examples of the system dynamics are illustrated in Figure 5. In Figure 5a, for Vs = 0.2, the system exhibits a period-3 firing pattern, which can be interpreted as a form of bursting activity in which clusters of spikes repeat with a fixed rhythm. Another variant of period-3 oscillation is shown in Figure 5b for Vs = 0.4, representing a different type of repetitive spiking. At Vs = 0.514 (Figure 5c), the circuit displays multi-periodic firing, with spaced bursts that resemble the mixed-mode oscillations often reported in neuronal models. Finally, for Vs = 0.54, Figure 5d shows chaotic firing. In general, the periodic limit cycles observed here are relatively robust: perturbations of a few percent in the model parameters typically change the spike amplitude or period but do not destroy periodicity. The parameter points in Figure 5a,b lie well inside periodic windows and are therefore likely to be robust to small perturbations. In contrast, parameter points near bifurcations or inside chaotic regimes are more sensitive; the cases in Figure 5c,d lie near bifurcation points, where small parameter changes may induce transitions between regimes. These firing transitions can be interpreted physically in terms of the system’s bifurcation structure. For small capacitances, the system undergoes a Hopf bifurcation, giving rise to sustained oscillations analogous to regular spiking. Further parameter variations induce multi-periodic or quasi-periodic oscillations, in which interactions between the two state variables of the LAM generate mixed-mode dynamics resembling irregular bursts. At higher excitation levels, the nonlinear feedback of the LAM drives the system into chaos, mimicking the irregular spiking patterns observed in cortical neurons under strong or noisy stimulation.

Figure 5.

Firing patterns (time series and attractors) of the system with DC external stimulation for C1 = C2 = 0.1 nF and C = 1 nF. (a) Vs = 0.2, (b) Vs = 0.4, (c) Vs = 0.514, (d) Vs = 0.54.

3.2. AC External Stimulation

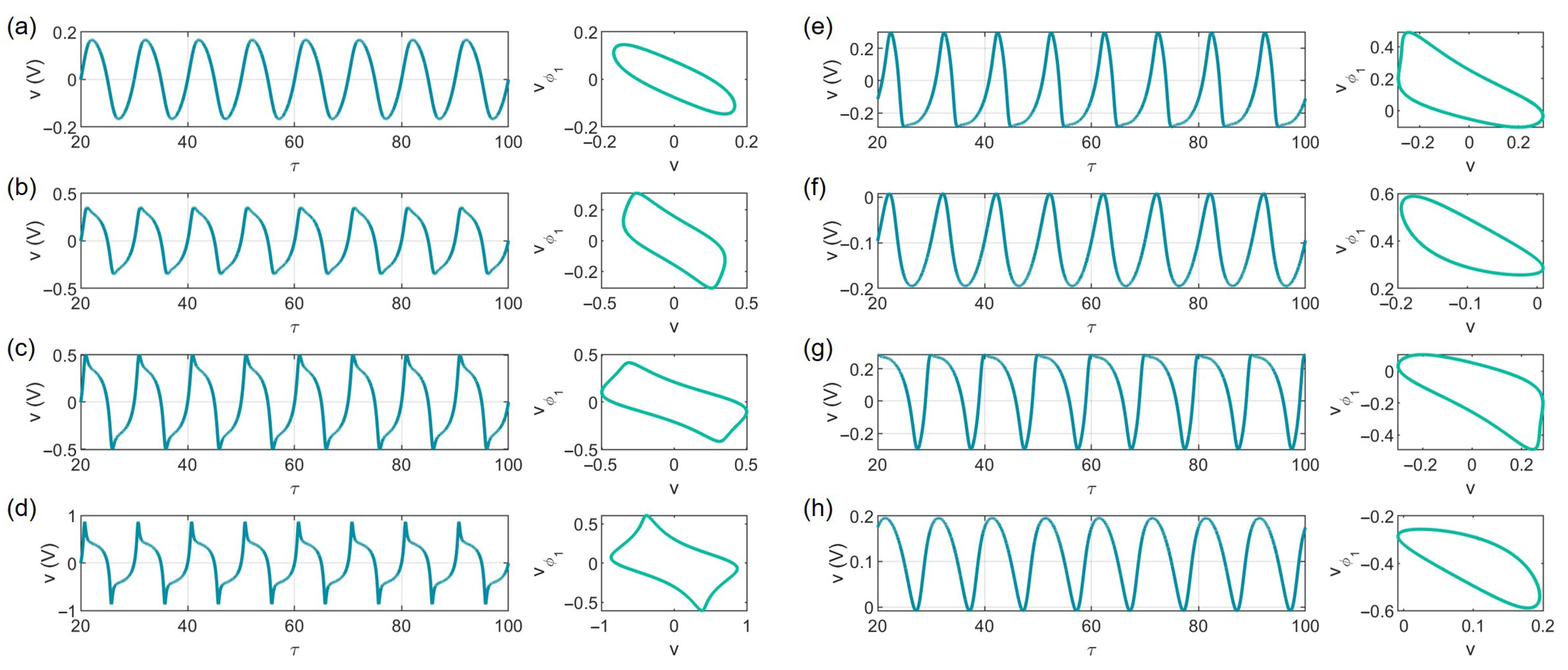

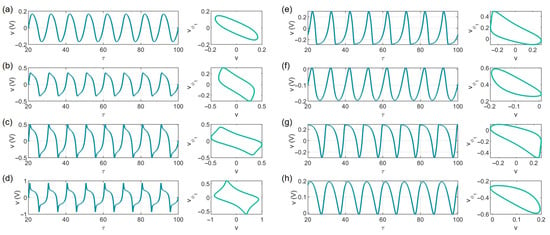

When the system is subjected to external sinusoidal stimulation, it can exhibit richer dynamical responses. We first examine the case of larger capacitances (C1 = C2 = 100 nF, C = 10 nF) under the input Vs sin(2πft). Illustrations of the system dynamics are shown in Figure 6, where transients are omitted to highlight the steady oscillatory regime. In Figure 6a–d, the stimulus amplitude Vs is varied while the frequency is fixed at f = 100 Hz and EN = 0. The dynamics consistently exhibit period-1 spiking, although the waveform shape and the corresponding attractors change as the stimulus amplitude increases. In Figure 6e–h, the amplitude is fixed at Vs = 0.8, while the bias parameter EN is varied (EN = ±0.1, ±0.2). The system still produces period-1 spiking in all cases, but the spike morphology and the phase-space trajectory are noticeably altered, reflecting sensitivity to the bias conditions. Positive and negative bias lead to mirror-inverted but not perfectly symmetric oscillations, owing to the nonlinear characteristics of the circuit. From a neuromorphic perspective, the observed period-1 spiking corresponds directly to the behavior of regular spiking neurons in biology. Variations in stimulus amplitude and bias (Vs and EN) primarily affect the waveform and morphology of the spikes without destroying their rhythm, analogous to how membrane bias and ionic currents modulate spike shape and duration in real neurons.

Figure 6.

Firing patterns (time series and attractors) of the system with AC external stimulation Vs sin(2πft), f = 100 Hz, for C1 = C2 = 100 nF and C = 10 nF and varying Vs and EN: (a) Vs = 0.2, EN = 0; (b) Vs = 0.6, EN = 0; (c) Vs = 1, EN = 0; (d) Vs = 2, EN = 0; (e) Vs = 0.8, EN = 0.1; (f) Vs = 0.8, EN = 0.2; (g) Vs = 0.8, EN = −0.1; (h) Vs = 0.8, EN = −0.2.

Figure 7 illustrates the effect of changing the stimulus frequency. The circuit again maintains period-1 spiking across the tested frequency range (f = 100 to 1000 Hz). As the stimulation frequency increases, the firing rate of the circuit increases accordingly, which parallels frequency coding in biological systems, and the oscillations gradually evolve toward a more sinusoidal waveform, resembling subthreshold oscillations or entrainment phenomena reported in cortical and thalamic neurons.

Figure 7.

Firing patterns of the system with AC external stimulation Vs sin(2πft) for C1 = C2 = 100 nF and C = 10 nF, Vs = 0.8, EN = 0, and varying f: (a) f = 100 Hz; (b) f = 200 Hz; (c) f = 500 Hz; (d) f = 1000 Hz.

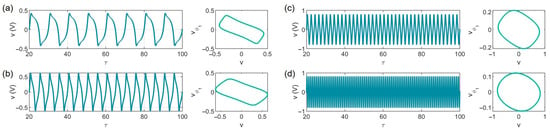

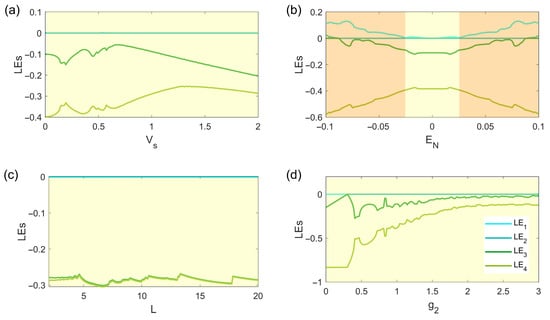

Next, we investigate the system with the second parameter set under AC stimulation. The bifurcation diagram as a function of the stimulus amplitude Vs is presented in Figure 8a, with the corresponding Lyapunov exponents shown in Figure 9a. For all values of Vs considered, two exponents remain zero, indicating that the dynamics are predominantly quasi-periodic. Nevertheless, bifurcations occur as Vs is varied, leading to qualitative changes in the neuronal firing patterns.
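The captions classify regimes by the sign of the LLE; counting near-zero exponents as well is what separates periodic from quasi-periodic motion, as in the reading of Figure 9a above. The sketch below encodes that rule. The function name `classify_regime`, the tolerance, and the treatment of the forcing phase are assumptions: with `forced=True`, the spectrum is taken to include the trivial zero exponent of the periodic drive, and whether the reported spectra include it is not stated here.

```python
import numpy as np

def classify_regime(exponents, tol=1e-3, forced=False):
    """Heuristic dynamical-regime label from a Lyapunov spectrum.

    tol: exponents with |lambda| <= tol count as zero (finite-time estimates
    never vanish exactly). forced=True: the spectrum belongs to a periodically
    driven system written in autonomous form, whose forcing phase contributes
    one trivial zero exponent.
    """
    le = np.sort(np.asarray(exponents, dtype=float))[::-1]
    if le[0] > tol:
        return "chaotic"
    n_zero = int(np.count_nonzero(np.abs(le) <= tol))
    if forced:
        n_zero -= 1                        # discount the forcing-phase exponent
        return "periodic" if n_zero <= 0 else "quasi-periodic"
    if n_zero == 0:
        return "fixed point"
    return "periodic" if n_zero == 1 else "quasi-periodic"

print(classify_regime([0.0, 0.0, -0.4, -1.2], forced=True))
```

The tolerance should be chosen from the observed scatter of the estimated exponents; too tight a value would misread numerically noisy zero exponents as nonzero.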

Figure 8.

Bifurcation diagrams of the system with AC external stimulation Vs sin(2πft) with f = 100 Hz for C1 = C2 = 0.1 nF and C = 1 nF: (a) versus Vs for EN = 0, g2 = 1, L = 10 mH; (b) versus EN for Vs = 0.1, g2 = 1, L = 10 mH; (c) versus L for EN = 0, Vs = 0.1, g2 = 2; (d) versus g2 for EN = 0, Vs = 0.1, L = 10 mH.

Figure 9.

Lyapunov exponents of the system with AC external stimulation Vs sin(2πft) with f = 100 Hz for C1 = C2 = 0.1 nF and C = 1 nF. Panels (a–d) correspond to the bifurcation diagrams in Figure 8a–d. Background colors indicate dynamical regimes classified by the largest Lyapunov exponent (LLE): yellow background = periodic or quasi-periodic (LLE ≈ 0), orange background = chaotic (LLE > 0).

Representative examples are provided in Figure 10a–c for Vs = 0.1, 1, and 3. At a small amplitude (Vs = 0.1), the system exhibits a firing pattern similar to the periodic spiking observed in the DC-stimulated case. When the stimulus amplitude is increased (Vs = 1), bursting emerges, with clusters of spikes separated by quiescent intervals. As the amplitude is increased further (Vs = 3 V), the width of each burst decreases, leading to more compact firing episodes. This progressive reduction in burst width under stronger forcing mirrors the modulation of burst duration seen in certain neuronal populations, where stronger excitatory drive shortens bursts and compresses firing activity.

Figure 10.

Firing patterns (time series and attractors) of the system with AC external stimulation Vs sin(2πft) with f = 100 Hz for C1 = C2 = 0.1 nF and C = 1 nF: (a) Vs = 0.1, EN = 0, g2 = 1, L = 10 mH; (b) Vs = 1, EN = 0, g2 = 1, L = 10 mH; (c) Vs = 3, EN = 0, g2 = 1, L = 10 mH; (d) Vs = 0.1, EN = 0.1, g2 = 1, L = 10 mH; (e) Vs = 0.1, EN = 0, g2 = 0.8, L = 10 mH; (f) Vs = 0.1, EN = 0, g2 = 1.5, L = 10 mH; (g) Vs = 0.1, EN = 0, g2 = 2, L = 10 mH.

Figure 8b presents the bifurcation diagram as a function of the bias voltage EN, with the corresponding Lyapunov exponents shown in Figure 9b. The LLE becomes positive in the EN range shaded orange in Figure 9b, indicating the onset of chaotic firing, while outside this range the dynamics remain quasi-periodic. A particularly interesting case occurs at EN = 0.1, where the system generates chaotic bursting, as illustrated in Figure 10d. This behavior resembles the irregular bursting observed in cortical and thalamic neurons under strong bias or modulation, where active and silent phases alternate chaotically.

Figure 8c,d show the bifurcation diagrams with respect to the parameters L and g2, respectively. As either parameter increases, the system dynamics become more complex, with a richer set of oscillatory behaviors appearing. However, the corresponding Lyapunov exponents confirm that for all tested values of L and g2, the system remains in a quasi-periodic regime. Representative examples of these patterns are shown in Figure 10e–g. As g2 is increased, the firing activity transitions from period-1 spiking to quasi-periodic bursting.

The observed transitions under AC stimulation can be interpreted as frequency- and amplitude-dependent modulations of neuronal firing. Increasing the input amplitude or bias shifts the circuit from tonic spiking to bursting, similar to how stronger synaptic drive modulates burst duration in real neurons. Likewise, chaotic bursting observed at higher bias reflects irregular alternation between active and silent phases, a behavior widely reported in thalamic and cortical neurons. These results reinforce the circuit’s capability to emulate diverse neuronal coding strategies.

4. Parameter Estimation

Having characterized the circuit’s dynamics, we now turn to the problem of parameter estimation. Parameter estimation plays a critical role in modeling nonlinear and chaotic systems, where accurate identification of system parameters is essential for reproducing observed dynamics. However, due to the inherent sensitivity to initial conditions, traditional cost functions based on direct time-domain comparisons between model output and observed data often fail in chaotic systems. Even with nearly identical parameters, small deviations in initial conditions can produce completely different trajectories, making time-series-based error measures unreliable.
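This failure of time-domain cost functions can be reproduced with any chaotic system. The sketch below uses the logistic map x_{k+1} = r x_k (1 − x_k) with r = 3.9 as a hypothetical stand-in for the circuit. Two orbits with identical parameters, starting 10⁻¹⁰ apart, agree closely at first, but after a few dozen iterations their mean squared difference becomes comparable to the spread of the signal itself, so a time-series error would heavily penalize even the exact parameters.

```python
import numpy as np

def logistic_orbit(x0, r=3.9, n=300):
    """Iterate the logistic map x_{k+1} = r * x_k * (1 - x_k) starting at x0."""
    x = np.empty(n)
    x[0] = x0
    for k in range(1, n):
        x[k] = r * x[k - 1] * (1.0 - x[k - 1])
    return x

a = logistic_orbit(0.3)
b = logistic_orbit(0.3 + 1e-10)     # same parameters, tiny initial-condition mismatch
early_mse = np.mean((a[:10] - b[:10]) ** 2)
late_mse = np.mean((a[100:] - b[100:]) ** 2)
print(f"MSE, first 10 steps: {early_mse:.1e}; steps 100-299: {late_mse:.2f}")
```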

To overcome this difficulty, alternative approaches compare the geometric structure of attractors in state space rather than individual time series. One effective method employs return maps constructed from the sequence of local maxima of a system variable [46]. Plotting each local maximum against the preceding one yields a return map that captures the underlying structure of the chaotic attractor in reduced form. The cost function is then defined as the average bidirectional Euclidean distance between the points of the experimental and model-generated return maps, measuring how closely the model reproduces the system’s geometric patterns. Because it is less affected by mismatched initial conditions, this approach provides a more robust criterion for parameter identification in chaotic and multistable systems.
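
A minimal sketch of the return-map construction and the bidirectional cost follows. It assumes a uniformly sampled time series and uses M_n for the n-th local maximum, a notation introduced here. The helper names `local_maxima`, `return_map` and `return_map_cost` are hypothetical. The cost is one plausible reading of "average bidirectional Euclidean distance": it averages two quantities, the mean nearest-neighbor distance from reference points to model points and the mean distance in the opposite direction. The exact normalization used in [46] and in this work may differ.

```python
import numpy as np


def local_maxima(x):
    """Strict local maxima of a uniformly sampled signal, in time order."""
    mid = x[1:-1]
    return mid[(mid > x[:-2]) & (mid > x[2:])]


def return_map(x):
    """Return map as an array of pairs (M_n, M_{n+1}), shape (K - 1, 2)."""
    m = local_maxima(np.asarray(x, dtype=float))
    return np.column_stack((m[:-1], m[1:]))


def return_map_cost(ref, model):
    """Average bidirectional nearest-neighbor Euclidean distance between two maps."""
    if len(ref) == 0 or len(model) == 0:
        return float("inf")                      # no peaks: treat as maximally dissimilar
    # Pairwise distances, shape (len(ref), len(model))
    d = np.linalg.norm(ref[:, None, :] - model[None, :, :], axis=-1)
    ref_to_model = d.min(axis=1).mean()          # each reference point -> closest model point
    model_to_ref = d.min(axis=0).mean()          # each model point -> closest reference point
    return float(0.5 * (ref_to_model + model_to_ref))


# Usage on a synthetic periodic trace: one peak value repeats, so the map is a single cluster
t = np.linspace(0.0, 20 * np.pi, 5000)
ref_map = return_map(np.sin(t))
print(return_map_cost(ref_map, ref_map))        # identical maps give zero cost
print(return_map_cost(ref_map, ref_map + 0.1))  # a (0.1, 0.1) shift costs about 0.141
```

For long recordings, the O(K²) distance matrix can be replaced by a k-d tree query (for example, `scipy.spatial.cKDTree(...).query`), which returns the same nearest-neighbor distances.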

In practice, the cost function is minimized with an optimization algorithm, and the parameter set yielding the lowest cost is taken as the best estimate of the true system parameters. The resulting cost landscape typically exhibits a well-defined global minimum at the actual parameter values, which supports the validity of this return-map-based formulation. Here, we use the Aquila Optimization (AO) algorithm [47] for parameter estimation. AO is a recent metaheuristic inspired by the hunting behavior of Aquila eagles, and it offers a strong balance between global exploration and local exploitation. It requires only a small number of control parameters, which reduces implementation complexity and sensitivity to parameter tuning. These characteristics make AO well suited to estimating parameters of nonlinear chaotic systems, whose search spaces are highly multimodal.

The Aquila eagle is a highly intelligent and agile bird of prey, known for its strategic adaptability and swift decision-making in diverse environments. In the wild, Aquilas employ a range of sophisticated tactics to capture prey: they may hover at high altitudes before executing rapid dives on moving targets, skim just above the ground to chase fast animals, perform controlled descents to seize slower prey, or even walk on land to capture concealed or stationary targets. These varied techniques reflect the eagle’s exceptional ability to alternate between broad environmental surveillance and precise, targeted actions, balancing exploration and exploitation based on context.

The AO algorithm emulates this natural intelligence by transforming these biological strategies into a structured computational framework for solving optimization problems. It starts with a randomly initialized population of candidate solutions, each representing a possible set of unknown parameters. This initial phase mirrors an eagle scanning a vast territory from above, casting a wide net to identify promising regions. Each solution is evaluated using a cost function, which quantifies how well the solution replicates the desired system behavior. The most effective solution in each iteration is treated as the prey that the algorithm aims to capture.

The AO algorithm operates in two key phases, exploration and exploitation, each modeled on a distinct stage of the eagle’s hunting sequence. Exploration dominates the early stages of the optimization, when AO prioritizes global search. Candidate solutions are dispersed across the search space, mimicking the eagle’s high-altitude soaring or long gliding flights. This broad scanning helps prevent premature convergence to suboptimal solutions and ensures that a wide variety of potential solutions is considered. As the search progresses and promising regions are identified, the algorithm enters the exploitation phase and shifts its focus toward refining the best candidates. Solutions begin to converge around the current optimum, simulating the eagle’s low-altitude approaches, rapid descents, or ground-level pursuits. This phase improves solution accuracy by intensively searching the vicinity of high-performing regions, gradually minimizing the cost function.

A core strength of AO lies in its adaptive balance between exploration and exploitation. Just as the Aquila adjusts its tactics in real time to prey movement and terrain, the algorithm modulates its search behavior according to the iteration stage. Initially, exploration dominates to ensure comprehensive coverage of the parameter space. Later, exploitation becomes more pronounced and guides the population toward fine-tuned convergence. Throughout successive iterations, candidate solutions evolve through two mechanisms: stochastic perturbations, which maintain population diversity, and deterministic attraction toward the best-known solution, which ensures steady progress toward optimality. The process continues until a stopping condition is met, such as reaching a maximum number of iterations or observing negligible improvement in solution quality. The best-performing solution at that point is returned as the final result.
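
The sketch below is a compact Python implementation written from the update rules described by Abualigah et al. [47]. During roughly the first two-thirds of the iterations it applies expanded exploration (X1) and narrowed exploration (X2, with a Lévy flight and a spiral term). It then switches to expanded exploitation (X3) and narrowed exploitation (X4), and each candidate is accepted only if it improves on the individual it would replace (greedy replacement). The constants (α = δ = 0.1, U = 0.00565, ω = 0.005, Lévy β = 1.5 with scale 0.01) follow that paper. Several choices are assumptions of this sketch and not details of the implementation used in this work: the function names, the default population and iteration counts, bound clipping, and redrawing r1 at every step. The toy quadratic cost at the end stands in for the return-map cost.

```python
import math
import numpy as np


def levy_step(dim, rng, beta=1.5, s=0.01):
    """Levy-flight step (Mantegna's method) used by the X2 and X4 updates."""
    sigma = (math.gamma(1 + beta) * math.sin(math.pi * beta / 2)
             / (math.gamma((1 + beta) / 2) * beta * 2 ** ((beta - 1) / 2))) ** (1 / beta)
    u = rng.normal(0.0, sigma, dim)
    v = rng.normal(0.0, 1.0, dim)
    return s * u / np.abs(v) ** (1 / beta)


def aquila_optimize(cost, lb, ub, pop_size=20, max_iter=200, seed=0):
    """Minimize `cost` over the box [lb, ub]; returns (best_x, best_cost, history)."""
    rng = np.random.default_rng(seed)
    lb, ub = np.asarray(lb, dtype=float), np.asarray(ub, dtype=float)
    dim = lb.size
    d1 = np.arange(1, dim + 1)                     # D1 = 1..dim, used by the spiral in X2
    # Random initial population: the "high-altitude scan" of the whole search box
    X = lb + rng.random((pop_size, dim)) * (ub - lb)
    fit = np.array([cost(x) for x in X])
    best, best_fit = X[fit.argmin()].copy(), fit.min()
    history = []
    for t in range(1, max_iter + 1):
        x_mean = X.mean(axis=0)
        for i in range(pop_size):
            if t <= (2 / 3) * max_iter:            # exploration phase
                if rng.random() <= 0.5:            # X1: expanded exploration (high soar)
                    cand = best * (1 - t / max_iter) + (x_mean - best * rng.random())
                else:                              # X2: narrowed exploration (contour flight)
                    r = rng.integers(1, 21) + 0.00565 * d1   # r1 in [1, 20], U = 0.00565
                    theta = -0.005 * d1 + 3 * math.pi / 2    # omega = 0.005, theta1 = 3*pi/2
                    y, x = r * np.cos(theta), r * np.sin(theta)
                    x_rand = X[rng.integers(pop_size)]
                    cand = best * levy_step(dim, rng) + x_rand + (y - x) * rng.random()
            else:                                  # exploitation phase
                if rng.random() <= 0.5:            # X3: expanded exploitation (low flight)
                    alpha = delta = 0.1
                    cand = ((best - x_mean) * alpha - rng.random()
                            + ((ub - lb) * rng.random() + lb) * delta)
                else:                              # X4: narrowed exploitation (walk and grab)
                    qf = t ** ((2 * rng.random() - 1) / max((1 - max_iter) ** 2, 1))
                    g1 = 2 * rng.random() - 1
                    g2 = 2 * (1 - t / max_iter)
                    cand = (qf * best - g1 * X[i] * rng.random()
                            - g2 * levy_step(dim, rng) + rng.random() * g1)
            cand = np.clip(cand, lb, ub)           # keep candidates inside the search bounds
            f = cost(cand)
            if f < fit[i]:                         # greedy replacement of the i-th eagle
                X[i], fit[i] = cand, f
                if f < best_fit:
                    best, best_fit = cand.copy(), f
        history.append(best_fit)
    return best, best_fit, history


# Toy usage: a quadratic bowl stands in for the return-map cost
target = np.array([1.5, -2.0])
best_x, best_cost, history = aquila_optimize(
    lambda p: float(np.sum((p - target) ** 2)), lb=[-5.0, -5.0], ub=[5.0, 5.0])
print(best_x, best_cost)
```

To mirror the setting described in this section, `cost` would wrap a simulation of the circuit for a candidate (C1, C2, C), followed by the return-map cost, with `max_iter=600`.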

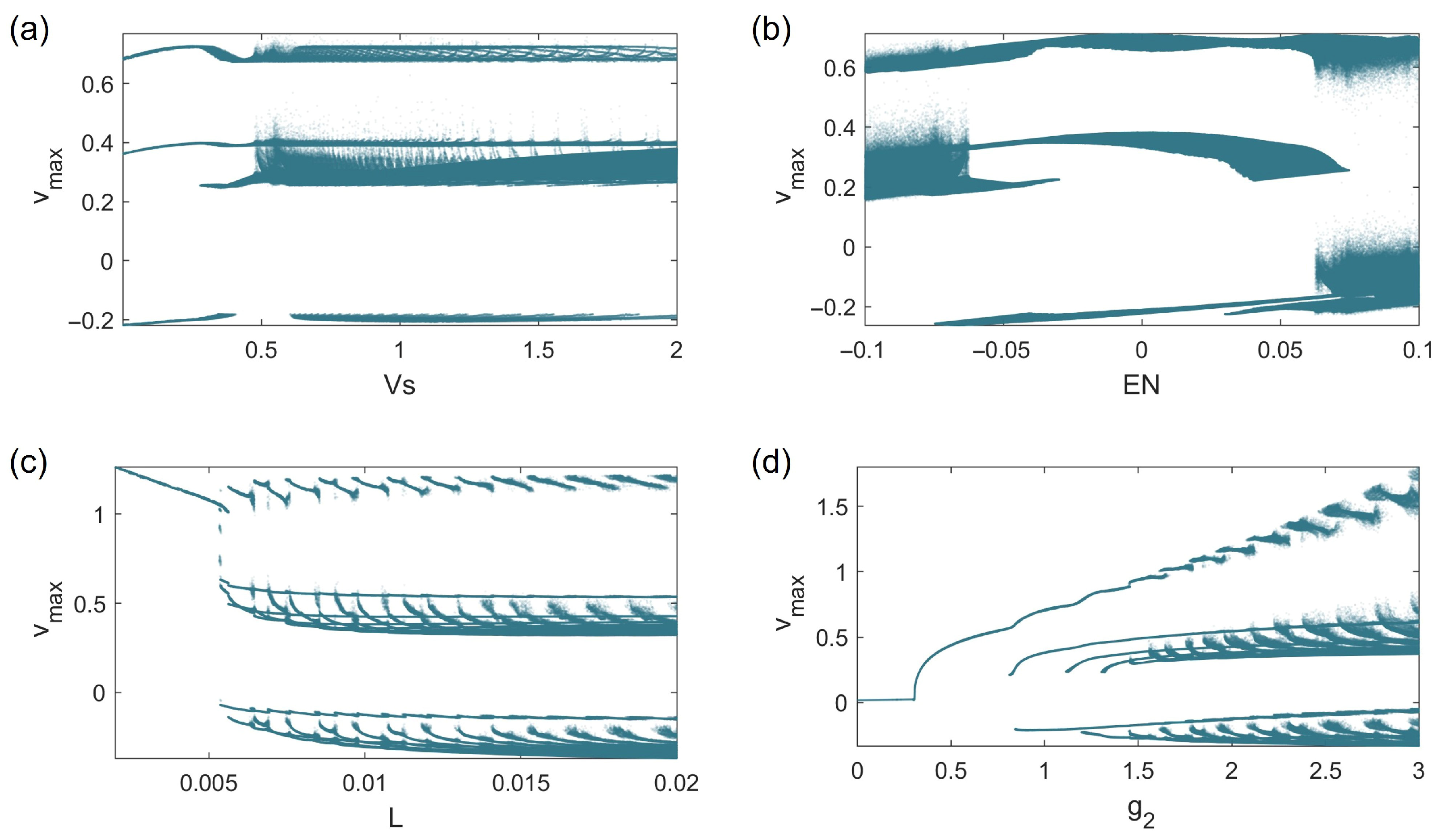

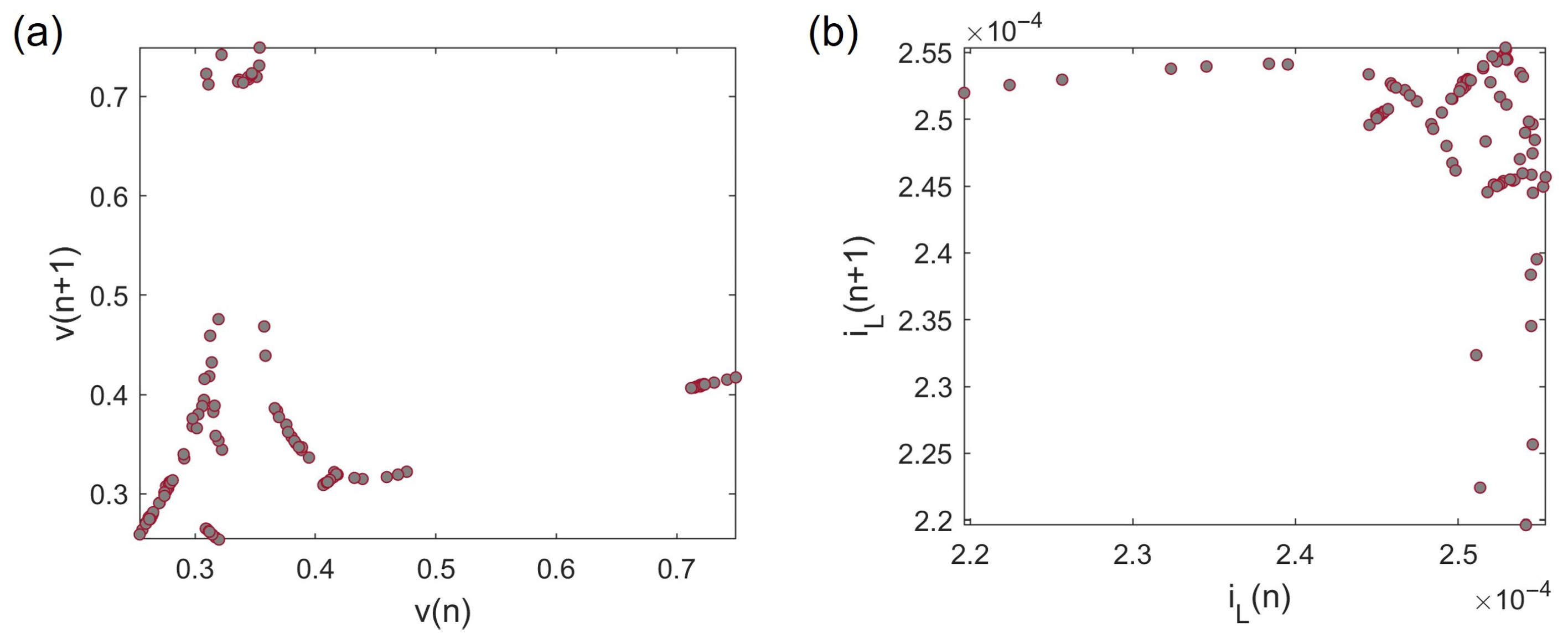

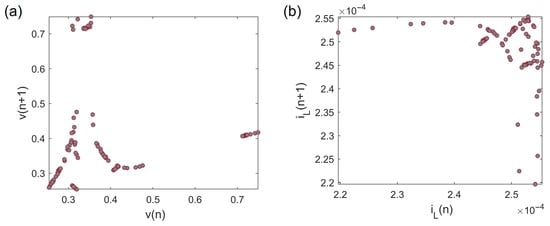

In this study, we assume that the capacitor values in the system are unknown and must be estimated, while all other parameters are known and fixed. A constant external voltage stimulus of is applied, which, under the nominal capacitance values C1 = C2 = 0.1 nF and C = 1 nF, induces chaotic bursting dynamics, as illustrated in Figure 5d. To further verify the chaotic nature of the system under these conditions, return maps are constructed from the time series of the state variables v and iL, as shown in Figure 11. In both return maps, the points are irregularly distributed rather than concentrated in a few clusters, as would be expected for periodic firing. This distribution is consistent with chaotic behavior.

Figure 11.

Return maps of the system with C1 = C2 = 0.1 nF and C = 1 nF, and initial conditions [0, 0, 0, 0] based on the local maxima of v (a) and iL (b).

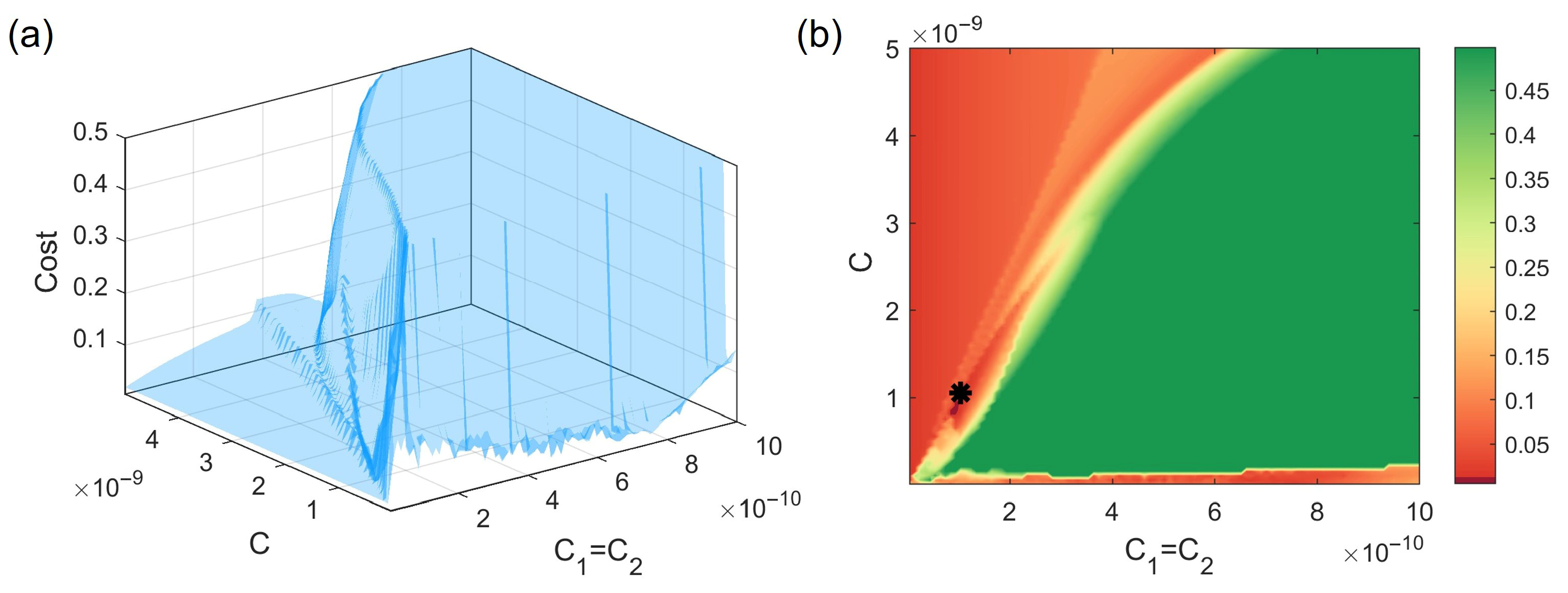

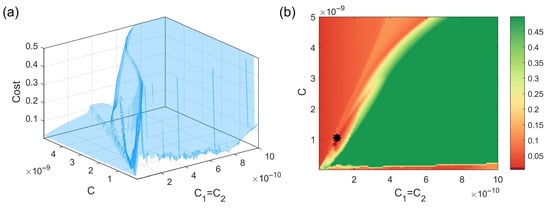

Given the uncertainty in capacitor values, the search bounds for C1 and C2 are set within the interval nF, and those for C within nF. These bounds were selected for computational feasibility rather than to reflect strict hardware limitations. They were set wide enough to include the true parameter values used in the simulations, yet narrow enough to promote efficient convergence. Within these ranges, the cost function is evaluated on the reconstructed return map of the voltage variable v, with the aim of minimizing the discrepancy between the simulated and target chaotic dynamics. The resulting 3D and 2D visualizations of the cost landscape are presented in Figure 12. In the 2D representation, dark red regions indicate lower cost values, while a shift toward green signifies increasing cost and reduced accuracy.
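
The landscape evaluation can be sketched as a grid sweep of the cost over the search interval. The circuit model is not reproduced here, so the sketch uses the logistic map as a stand-in and sweeps its single parameter r on a grid around an assumed true value r = 3.9, in place of the capacitances. The reference and model runs share the same initial condition, so the cost is exactly zero at the true value and positive elsewhere. The function names are hypothetical. For a two-parameter search such as (C1, C), the same loop extends to a 2D grid (for example, built with `numpy.meshgrid`).

```python
import numpy as np


def logistic_series(r, x0=0.2, n=3000, transient=500):
    """Stand-in chaotic signal: logistic map x_{k+1} = r x_k (1 - x_k), transient dropped."""
    x = np.empty(n + transient)
    x[0] = x0
    for k in range(n + transient - 1):
        x[k + 1] = r * x[k] * (1.0 - x[k])
    return x[transient:]


def peak_return_map(x):
    """Pairs of successive strict local maxima (M_n, M_{n+1})."""
    mid = x[1:-1]
    peaks = mid[(mid > x[:-2]) & (mid > x[2:])]
    return np.column_stack((peaks[:-1], peaks[1:]))


def bidirectional_cost(a, b):
    """Mean nearest-neighbor distance a->b and b->a, averaged."""
    d = np.linalg.norm(a[:, None, :] - b[None, :, :], axis=-1)
    return 0.5 * (d.min(axis=1).mean() + d.min(axis=0).mean())


r_true = 3.9                                    # assumed "true" parameter of the stand-in
ref_map = peak_return_map(logistic_series(r_true))
r_grid = r_true + np.arange(-10, 11) * 0.005    # 21 grid points, r_true exactly at index 10
costs = np.array([bidirectional_cost(ref_map, peak_return_map(logistic_series(r)))
                  for r in r_grid])
r_best = r_grid[costs.argmin()]
print(f"lowest cost {costs.min():.3g} at r = {r_best:.3f}")
```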

Figure 12.

The cost function of the system (blue) based on the return map of the state variable v for and . (a) Three-dimensional surface; (b) two-dimensional projection. The black star marks the parameters with the lowest cost.

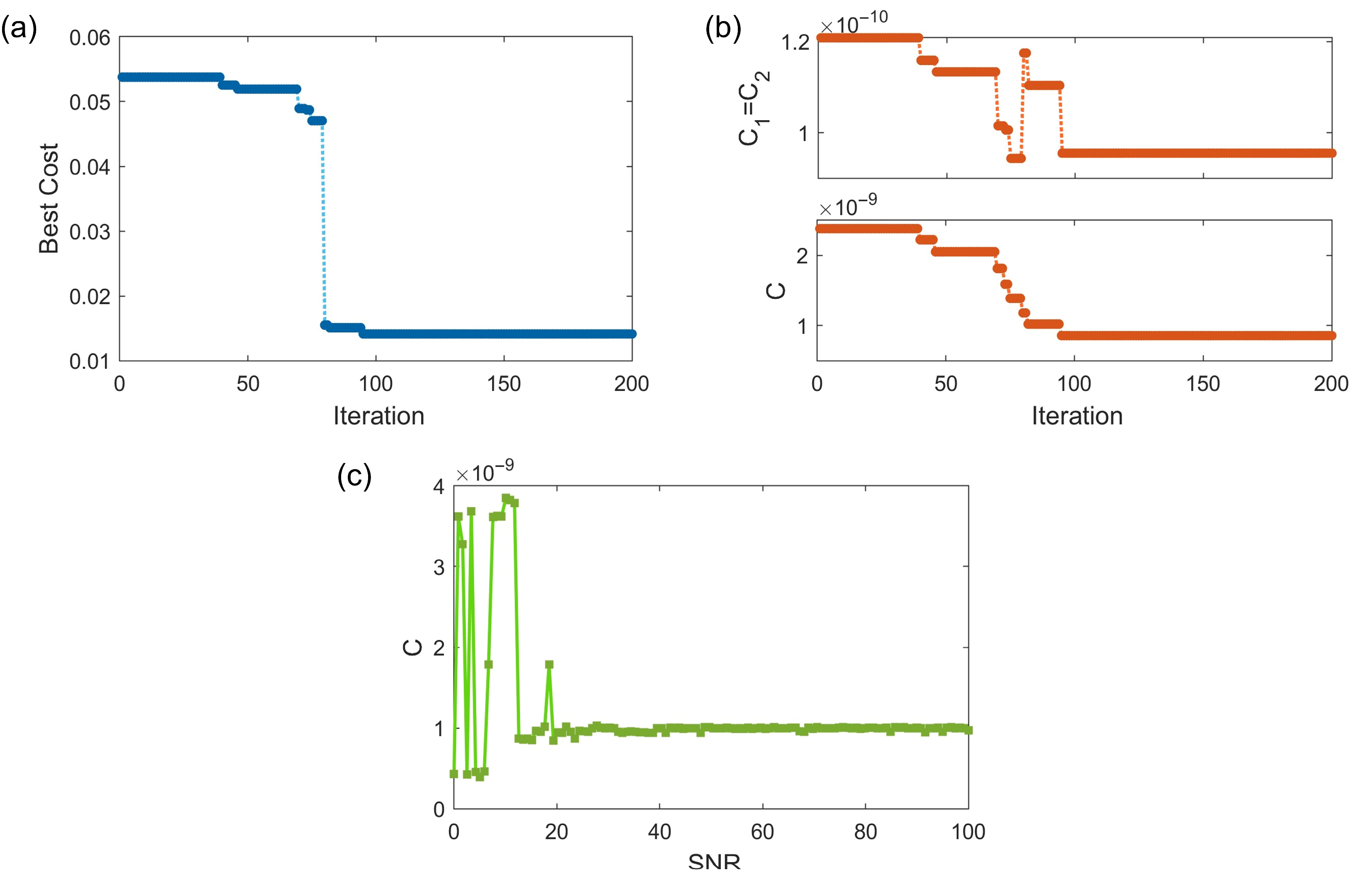

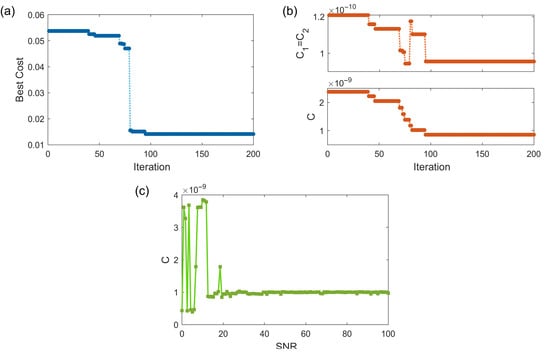

To identify the optimal capacitor values, the Aquila Optimization (AO) algorithm is run with a population of candidate solutions and a maximum of 600 iterations. Figure 13a shows the evolution of the best cost value across iterations, which decreases steadily as the algorithm converges. The parameter set with the lowest cost, marked by a black star in Figure 12b, closely matches the true values used to generate the reference dynamics. Figure 13b tracks the best-estimated parameters throughout the optimization and shows rapid convergence toward the target region.

Figure 13.

(a) Best cost at each iteration of the AO algorithm, converging to 0.014. (b) Best parameters found at each iteration. (c) Estimated parameter C as a function of the SNR, with C1 = C2 = 0.1 nF fixed.

The optimization yields a minimum cost of 0.014, indicating a high degree of similarity between the reconstructed and original system behaviors. Numerical simulations confirm that the estimated parameters successfully replicate the chaotic bursting patterns observed in the reference system. It is worth noting that the AO-based estimation framework is not restricted to chaotic regimes. In periodic and quasi-periodic regimes, the method can still be applied and typically converges successfully.

To further evaluate the robustness of the proposed estimation method, we tested the AO algorithm under noisy conditions. Gaussian noise was added to the time series at different signal-to-noise ratios (SNRs), and the estimation of the capacitance parameter C was monitored for the fixed configuration C1 = C2 = 0.1 nF. The results are presented in Figure 13c. It can be observed that for SNR > 20, the estimated capacitance value remains very close to the true value, demonstrating that the AO algorithm retains high accuracy despite measurement noise. At lower SNR levels, the estimation accuracy degrades slightly, as expected. These findings highlight the robustness of the AO algorithm and the return-map-based cost function in the presence of noise, an essential feature for practical neuromorphic implementations.
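
The noise-injection step can be sketched as follows. The text does not state the SNR units or the power definition, so this sketch assumes SNR in decibels and takes signal power as the mean square of the clean trace. Gaussian noise with variance P_signal / 10^(SNR/10) is then added. The function name `add_noise_at_snr` is hypothetical, and the sine wave is a placeholder for a simulated state-variable trace.

```python
import numpy as np


def add_noise_at_snr(signal, snr_db, rng):
    """Return signal + white Gaussian noise at the requested SNR (in dB).

    Signal power is taken as the mean square of the clean trace (DC included).
    """
    p_signal = np.mean(np.square(signal))
    p_noise = p_signal / 10.0 ** (snr_db / 10.0)
    return signal + rng.normal(0.0, np.sqrt(p_noise), size=signal.shape)


rng = np.random.default_rng(1)
t = np.linspace(0.0, 100.0, 100_000)
clean = np.sin(t)                                # placeholder for a simulated trace such as v(t)
noisy = add_noise_at_snr(clean, snr_db=20.0, rng=rng)
noise = noisy - clean
measured_snr = 10 * np.log10(np.mean(clean ** 2) / np.mean(noise ** 2))
print(f"measured SNR: {measured_snr:.2f} dB")
```

In a study like the one described, one would sweep `snr_db` over the tested values, rebuild the reference return map from each noisy trace, and rerun the estimation.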

5. Conclusions

Memristive neuromorphic circuits offer a promising pathway for emulating neuronal dynamics with minimal energy consumption. Locally active memristors (LAMs) are particularly effective at generating action potential-like responses when operated within their locally active domain, which makes them valuable building blocks for brain-inspired hardware. In this work, we proposed a novel second-order LAM with two coupled state variables, extending the functionality of conventional first-order devices. Even when the capacitances of the two states are equal, the memristor retains a higher-dimensional internal memory, enabling richer hysteresis and nonlinear dynamics. We integrated this device into a FitzHugh–Nagumo (FHN)-based neuromorphic circuit. By selecting two capacitance sets corresponding to slow and fast timescales, we established an analogy with biological neurons: large capacitances produce slower, regular spiking, while small capacitances produce faster bursting or chaotic firing. This analogy highlights the relevance of the proposed circuit for modeling the heterogeneous dynamics observed in dense neural networks. We investigated the circuit’s response to both DC and AC external stimuli and explored its nonlinear dynamics through bifurcation diagrams and Lyapunov exponent spectra. The results revealed a diverse range of firing behaviors, including periodic spiking, multi-periodic oscillations, and chaotic bursting, underscoring the ability of the proposed design to replicate complex neuronal activity. These findings point to second-order LAMs as fundamental elements for energy-efficient neuromorphic systems capable of biologically relevant dynamics.

Finally, this structural and dynamical contribution was complemented by a methodological one: the application of the Aquila Optimization (AO) algorithm with a return-map-based cost function to parameter estimation. Key capacitance parameters of the circuit were treated as unknown and estimated with AO. The cost function was built on the system’s return map, which remains informative in the presence of chaotic behavior. Simulation results showed that AO converged efficiently to parameter values close to the true ones, with strong accuracy and robustness throughout the optimization. We also evaluated the robustness of the estimation under noisy conditions. For SNR > 20, the estimated capacitance remained very close to the true value, confirming that the return-map-based cost function provides reliable estimates in the presence of measurement noise.

In real-world implementations, several non-idealities are expected to influence performance. These include device mismatch, parameter drift, parasitic capacitances, finite op-amp bandwidth, and electronic noise. Moreover, practical memristive devices may exhibit asymmetric or stochastic switching characteristics that deviate from the idealized model used in the study. While our parameter estimation results already indicated robustness under moderate Gaussian noise, further validation under realistic hardware conditions will be necessary. Therefore, an important avenue for future work is the construction of a hardware prototype of the proposed circuit and the validation of its firing patterns, which will complement and strengthen the present simulation results.

Author Contributions

Conceptualization, F.P. and S.J.; methodology, V.-T.P. and K.R.; software, S.R. and F.P.; validation, V.-T.P. and K.R.; investigation, S.R.; writing—original draft preparation, S.R. and V.-T.P.; writing—review and editing, F.P., K.R. and S.J.; supervision, S.J. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

No new data were created or analyzed in this study.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- MacGregor, R. Neural Modeling: Electrical Signal Processing in the Nervous System; Springer Science & Business Media: Berlin/Heidelberg, Germany, 2012.

- Shlyonsky, V.; Dupuis, F.; de Prelle, B.; Erneux, T.; Osee, M.; Nonclercq, A.; Gall, D. Compact hybrid type electronic neuron and computational model of its dynamics. Nonlinear Dyn. 2024, 112, 14343–14362.

- Tang, H.; Tan, K.C.; Yi, Z. Neural Networks: Computational Models and Applications; Springer: Berlin/Heidelberg, Germany, 2007.

- Xu, Q.; Ding, X.; Chen, B.; Parastesh, F.; Iu, H.H.-C.; Wang, N. A universal configuration framework for mem-element-emulator-based bionic firing circuits. IEEE Trans. Circuits Syst. I Regul. Pap. 2024, 71, 4120–4130.

- Schuman, C.D.; Kulkarni, S.R.; Parsa, M.; Mitchell, J.P.; Date, P.; Kay, B. Opportunities for neuromorphic computing algorithms and applications. Nat. Comput. Sci. 2022, 2, 10–19.

- Marković, D.; Mizrahi, A.; Querlioz, D.; Grollier, J. Physics for neuromorphic computing. Nat. Rev. Phys. 2020, 2, 499–510.

- Li, H.; Min, F. Cross-Hemispheric Memristive Neural Network with Discrete Corsage Memristor for Telemedicine Encryption Application. IEEE Internet Things J. 2025, 12, 29018–29031.

- Wang, Y.; Tao, K.; Wang, Z.; Sun, J. Memristor-based GFMM neural network circuit of biology with multiobjective decision and its application in industrial autonomous firefighting. IEEE Trans. Ind. Inform. 2025, 21, 5777–5786.

- Wang, J.; Zhang, S.; Zheng, Y.; Li, Y.; Li, C.; Wang, Y.; Ding, X. Tri-Memristor Hyperchaotic Ring Neural Network with Hidden Firings: Dynamic Analysis, Hardware Implementation and Application to Image Encryption. IEEE Internet Things J. 2025, in press.

- Vazquez-Guerrero, P.; Tuladhar, R.; Psychalinos, C.; Elwakil, A.; Chacron, M.J.; Santamaria, F. Fractional order memcapacitive neuromorphic elements reproduce and predict neuronal function. Sci. Rep. 2024, 14, 5817.

- Zhang, X.; Li, C.; Lei, T.; Iu, H.H.-C.; Kapitaniak, T. Coexisting Hyperchaos in a Memristive Neuromorphic Oscillator. IEEE Trans. Comput.-Aided Des. Integr. Circuits Syst. 2025, 44, 3179–3188.

- Wei, Q.; Gao, B.; Tang, J.; Qian, H.; Wu, H. Emerging memory-based chip development for neuromorphic computing: Status, challenges, and perspectives. IEEE Electron Devices Mag. 2023, 1, 33–49.

- Burr, G.W.; Shelby, R.M.; Sebastian, A.; Kim, S.; Kim, S.; Sidler, S.; Virwani, K.; Ishii, M.; Narayanan, P.; Fumarola, A. Neuromorphic computing using non-volatile memory. Adv. Phys. X 2017, 2, 89–124.

- Milo, V.; Malavena, G.; Monzio Compagnoni, C.; Ielmini, D. Memristive and CMOS devices for neuromorphic computing. Materials 2020, 13, 166.

- Yammenavar, B.D.; Gurunaik, V.R.; Bevinagidad, R.N.; Gandage, V.U. Design and analog VLSI implementation of artificial neural network. Int. J. Artif. Intell. Appl. 2011, 2, 96–109.

- Chen, X.; Byambadorj, Z.; Yajima, T.; Inoue, H.; Inoue, I.H.; Iizuka, T. CMOS-based area-and-power-efficient neuron and synapse circuits for time-domain analog spiking neural networks. Appl. Phys. Lett. 2023, 122, 074102.

- Khanday, F.A.; Kant, N.A.; Dar, M.R.; Zulkifli, T.Z.A.; Psychalinos, C. Low-voltage low-power integrable CMOS circuit implementation of integer-and fractional–order FitzHugh–Nagumo neuron model. IEEE Trans. Neural Netw. Learn. Syst. 2018, 30, 2108–2122.

- Khanday, F.A.; Dar, M.R.; Kant, N.A.; Zulkifli, T.Z.A.; Psychalinos, C. Ultra-low-voltage integrable electronic implementation of delayed inertial neural networks for complex dynamical behavior using multiple activation functions. Neural Comput. Appl. 2020, 32, 8297–8314.

- Aguirre, F.; Sebastian, A.; Le Gallo, M.; Song, W.; Wang, T.; Yang, J.J.; Lu, W.; Chang, M.-F.; Ielmini, D.; Yang, Y. Hardware implementation of memristor-based artificial neural networks. Nat. Commun. 2024, 15, 1974.

- Xu, Q.; Wang, Y.; Wu, H.; Chen, M.; Chen, B. Periodic and chaotic spiking behaviors in a simplified memristive Hodgkin-Huxley circuit. Chaos Solitons Fractals 2024, 179, 114458.

- Jeong, H.; Shi, L. Memristor devices for neural networks. J. Phys. D 2018, 52, 023003.

- Indiveri, G.; Linares-Barranco, B.; Legenstein, R.; Deligeorgis, G.; Prodromakis, T. Integration of nanoscale memristor synapses in neuromorphic computing architectures. Nanotechnology 2013, 24, 384010.

- Ye, L.; Gao, Z.; Fu, J.; Ren, W.; Yang, C.; Wen, J.; Wan, X.; Ren, Q.; Gu, S.; Liu, X. Overview of memristor-based neural network design and applications. Front. Phys. 2022, 10, 839243.

- He, S.; Liu, J.; Wang, H.; Sun, K. A discrete memristive neural network and its application for character recognition. Neurocomputing 2023, 523, 1–8.

- Li, F.; Chen, Z.; Zhang, Y.; Bai, L.; Bao, B. Cascade tri-neuron hopfield neural network: Dynamical analysis and analog circuit implementation. AEU-Int. J. Electron. Commun. 2024, 174, 155037.

- Lai, Q.; Lai, C.; Zhang, H.; Li, C. Hidden coexisting hyperchaos of new memristive neuron model and its application in image encryption. Chaos Solitons Fractals 2022, 158, 112017.

- Ma, M.; Xiong, K.; Li, Z.; He, S. Dynamical behavior of memristor-coupled heterogeneous discrete neural networks with synaptic crosstalk. Chin. Phys. B 2024, 33, 028706.

- Bao, H.; Zhang, Y.; Liu, W.; Bao, B. Memristor synapse-coupled memristive neuron network: Synchronization transition and occurrence of chimera. Nonlinear Dyn. 2020, 100, 937–950.

- Kozma, R.; Pino, R.E.; Pazienza, G.E. Advances in Neuromorphic Memristor Science and Applications; Springer Science & Business Media: Berlin/Heidelberg, Germany, 2012; Volume 4.

- Zhang, X.; Huang, A.; Hu, Q.; Xiao, Z.; Chu, P.K. Neuromorphic computing with memristor crossbar. Phys. Status Solidi 2018, 215, 1700875.

- Weilenmann, C.; Ziogas, A.N.; Zellweger, T.; Portner, K.; Mladenović, M.; Kaniselvan, M.; Moraitis, T.; Luisier, M.; Emboras, A. Single neuromorphic memristor closely emulates multiple synaptic mechanisms for energy efficient neural networks. Nat. Commun. 2024, 15, 6898.

- Bao, H.; Fan, J.; Hua, Z.; Xu, Q.; Bao, B. Discrete Memristive Hopfield Neural Network and Application in Memristor-State-Based Encryption. IEEE Internet Things J. 2025, 12, 31843–31855.

- Sun, J.; Shen, Y.; Wang, Y.; Wang, Y. A Memristor-Based Neural Network Circuit with Retrospective Revaluation Effect and Application in Intelligent Household Robots. IEEE Trans. Neural Netw. Learn. Syst. 2025, 36, 11466–11478.

- Chua, L. If it’s pinched it’s a memristor. Semicond. Sci. Technol. 2014, 29, 104001.

- Tan, Y.; Wang, C. A simple locally active memristor and its application in HR neurons. Chaos 2020, 30, 053118.

- Li, H.; Li, C.; He, S. Locally active memristor with variable parameters and its oscillation circuit. Int. J. Bifurc. Chaos 2023, 33, 2350032.

- Xu, Q.; Fang, Y.; Wu, H.; Bao, H.; Wang, N. Firing patterns and fast–slow dynamics in an N-type LAM-based FitzHugh–Nagumo circuit. Chaos Solitons Fractals 2024, 187, 115376.

- Lu, R.; Ying, J.; Min, F.; Wang, G. Neurodynamics of Different Neurons Based on a Memristor with S-Type and N-Type Locally Active Domains. Int. J. Bifurc. Chaos 2025, 35, 2530008.

- Xu, Q.; Fang, Y.; Feng, C.; Parastesh, F.; Chen, M.; Wang, N. Firing activity in an N-type locally active memristor-based Hodgkin–Huxley circuit. Nonlinear Dyn. 2024, 112, 13451–13464.

- Ding, X.; Fan, W.; Wang, N.; Su, Y.; Chen, M.; Lin, Y.; Xu, Q. Dynamical behaviors and firing patterns in a fully memory-element emulator-based bionic circuit. Chaos Solitons Fractals 2025, 199, 116658.

- Mou, J.; Cao, H.; Zhou, N.; Cao, Y. An FHN-HR neuron network coupled with a novel locally active memristor and its DSP implementation. IEEE Trans. Cybern. 2024, 54, 7333–7342.

- Tabekoueng Njitacke, Z.; Sriram, G.; Rajagopal, K.; Karthikeyan, A.; Awrejcewicz, J. Energy computation and multiplier-less implementation of the two-dimensional FitzHugh–Nagumo (FHN) neural circuit. Eur. Phys. J. E 2023, 46, 60.

- Njitacke, Z.T.; Ramadoss, J.; Takembo, C.N.; Rajagopal, K.; Awrejcewicz, J. An enhanced FitzHugh–Nagumo neuron circuit, microcontroller-based hardware implementation: Light illumination and magnetic field effects on information patterns. Chaos Solitons Fractals 2023, 167, 113014.

- Chen, L.; Bao, H.; Zhang, X.; Zhang, Y.; Bao, B. DC-bias induced chaotic dynamics and periodic bursting in Chua’s diode-based FitzHugh-Nagumo circuit. Chaos Solitons Fractals 2025, 199, 116739.

- Wolf, A.; Swift, J.B.; Swinney, H.L.; Vastano, J.A. Determining Lyapunov exponents from a time series. Phys. D 1985, 16, 285–317.

- Jafari, S.; Sprott, J.C.; Pham, V.-T.; Golpayegani, S.M.R.H.; Jafari, A.H. A new cost function for parameter estimation of chaotic systems using return maps as fingerprints. Int. J. Bifurc. Chaos 2014, 24, 1450134.

- Abualigah, L.; Yousri, D.; Abd Elaziz, M.; Ewees, A.A.; Al-Qaness, M.A.; Gandomi, A.H. Aquila optimizer: A novel meta-heuristic optimization algorithm. Comput. Ind. Eng. 2021, 157, 107250.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).