Abstract

The field of systemology does not yet have a standardised terminology; there are multiple glossaries and diverse perspectives even about the meanings of fundamental terms. This situation undermines researchers’ and practitioners’ ability to communicate clearly both within and outside their own specialist communities. Our perspective is that different vocabularies can in principle be reconciled by seeking more generalised definitions that reduce, in specialised contexts, to the nuanced meaning intended in those contexts. To this end, this paper lays the groundwork for a community effort to develop an ‘Ontology of Systemology’. In particular we argue that the standard methods for ontology development can be enhanced by drawing on systems thinking principles, and show via four examples how these can be applied for both domain-specific and upper ontologies. We then use this insight to derive a systemic and systematic framework for selecting and organising the terminology of systemology. The outcome of this paper is therefore twofold: We show the value in applying a systems perspective to ontology development in any discipline, and we provide a starting outline for an Ontology of Systemology. We suggest that both outcomes could help to make systems concepts more accessible to other lines of inquiry.

1. Introduction: Glossaries and Ontologies in the Context of Systemology

Systemology is a field with a rich variety of important concepts but no standardised way of expressing them. One of the most important terminology resources in the field is Charles François’ International Encyclopedia of Systems and Cybernetics (hereafter, ‘Encyclopedia’) [1], with fully 3800 entries. It is an encyclopedia, though, not an ontology, which means that terms may have multiple definitions. In fact, this manifests in extremis: the Encyclopedia contains 18 pages of definitions for the term ‘system’ and nearly four pages of definitions for ‘hierarchy’.

In the absence of an agreed terminology for the field, and perhaps also because the Encyclopedia’s cost puts it out of reach of many systemologists, individuals will often develop vocabularies for how they use systems terms in their own work. If explicit, these may be published as glossaries to their books or papers, e.g., [2] (pp. 21–33), [3] (pp. 11–46), [4] and [5] (pp. 13–68), [6] (pp. 353–360), and [7] (pp. 205–212). However, those who do this rarely use formal methods or develop any structure for their terminologies, and their term use is often inconsistent with that of other researchers, even within the same community. Readers are left to try to rationalise different meanings across the resources they consult, and often simply make assumptions about an author’s intent.

The outcome is not just terminological confusion, but also conceptual blurring. In 2016 the International Council on Systems Engineering (INCOSE) appointed a team of INCOSE Fellows to propose a definition of ‘system’ that would be suitable for systems engineering (SE). The team found that even amongst the relatively small number of current INCOSE Fellows there are seven different perspectives on the meaning of ‘system’ [8].

These conceptual differences can have profound implications. For example, in the SE field the term “emergence” is often taken to refer to system behaviours that were not designed for [9,10], while in systems science it is usually taken to refer to properties that the system has but that its parts by themselves do not [11]. This is a significant difference because it entails that for systems scientists the notion of emergence is central to the notion of what a system is, whereas for some systems engineers it is incidental.

Having multiple systems terminologies in use clearly inhibits communication between specialists even within the same community. Moreover, it is hard to build on ambiguous foundations, and we suggest that this state of affairs has impeded progress in the systems field. We have argued elsewhere [12] (p. 7) that the initial energy behind the search for a General Systems Theory (GST) was dissipated by Ludwig von Bertalanffy’s inconsistency throughout his eponymous book [13], where he used the term GST in 16 different ways.

Most importantly, we suggest that this ontological confusion has constrained the uptake of systems concepts in other disciplines that might well have benefitted from them. We will elaborate on the mechanism for this last-mentioned consequence in a later section.

Our perspective in seeking a resolution to this situation is that different researchers’ vocabularies are designed to capture nuances of meaning that are important in their special case contexts, and these can in principle be reconciled by seeking a more generalised concept that is consilient with each special case. An example of this in practice is the INCOSE Fellows’ project mentioned above, which resulted in a definition for the word “system” that can reduce, in different situations, to the different perspectives they identified.

Drawing on a distinction from Information Science between a “domain ontology”, which involves domain-specific terms, and an “upper ontology”, which involves terms that are applicable across multiple domains, we argue that Systemology has the unusual potential to provide both types of concepts. In fact, we suggest that it is the failure to distinguish between the different types of terminologies applicable in Systemology that has in large part led to the terminological confusions mentioned above.

Although the unique nature of Systemology has exacerbated the challenges involved in developing its terminology, it also provides the means to resolve them. In this paper we show how systems thinking can itself be applied to the process of ontology development, usefully complementing the extensive formal methods that already exist. Amongst the examples we develop is a proposed structure for a concept map for Systemology, which builds on the General Inquiry Framework we introduced in a companion paper in this journal issue [14].

The purpose of the present paper is to lay the basic groundwork for a community effort to develop an ‘Ontology of Systemology’, by:

- providing arguments for the need for such an ontology;

- disambiguating different concepts relating to termbases, vocabularies, and ontologies;

- providing background on how such ontologies are constructed;

- making suggestions for how systems thinking can aid the building of ontologies; and

- proposing a systemic and systematic framework for selecting and organising the terminology of Systemology.

We hold that the application of systems thinking to the building of ontologies would be valuable in all attempts to build ontologies, especially in areas of study with many ambiguous terms (e.g., consciousness studies) or high uncertainty about which terms are relevant for the study area (e.g., frontier science). Moreover, we believe that the structure we will propose for organising the Ontology of Systemology will aid the application of systems concepts in doing specialised research, by linking kinds of systems concepts to general kinds of lines of inquiry.

2. General Background on Ontologies and Concept Maps

2.1. Scientific and Philosophical Uses of the Term Ontology

The term ‘ontology’ has different meanings to philosophers and scientists. The scientific meaning derives from Information Science, where ‘ontology’ refers to a shared conceptualisation of a domain, presented as an organised technical vocabulary for that domain [15]. The term ‘ontology’ is here meant to evoke the idea that the terms and their associated concepts are the building blocks of theories in the discipline or domain, and hence reflect the most basic units of thought about the subjects under study [16] (p. 9). This is different from, but not unrelated to, the use of this term in Philosophy. In Philosophy, the term ‘Ontology’ refers to a discipline investigating what exists most fundamentally in the real world [17,18], and hence reflects the most fundamental units of thought for theorising about the nature of reality. In this paper we primarily intend the meaning from Information Science, although there are a few places where we will need to draw on the philosophical concept. We have tried to make the usage clear in each case.

The above scientific definition embeds nuanced meaning for the phrase “an organised technical vocabulary”. In the following sections we introduce key concepts in ontology development that elaborate on this definition as a foundation for the arguments we will develop later.

2.2. Definition of a Category in Ontology

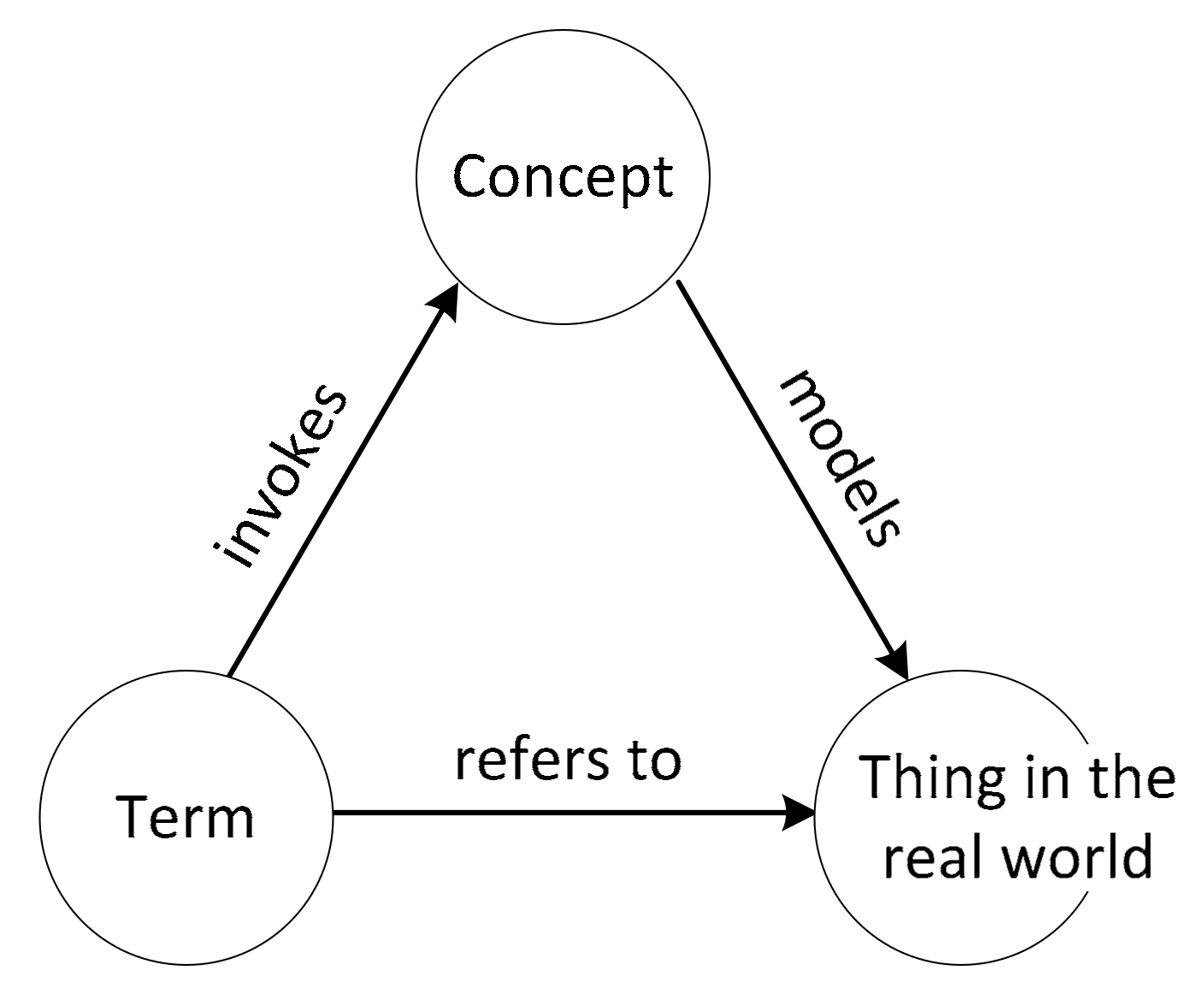

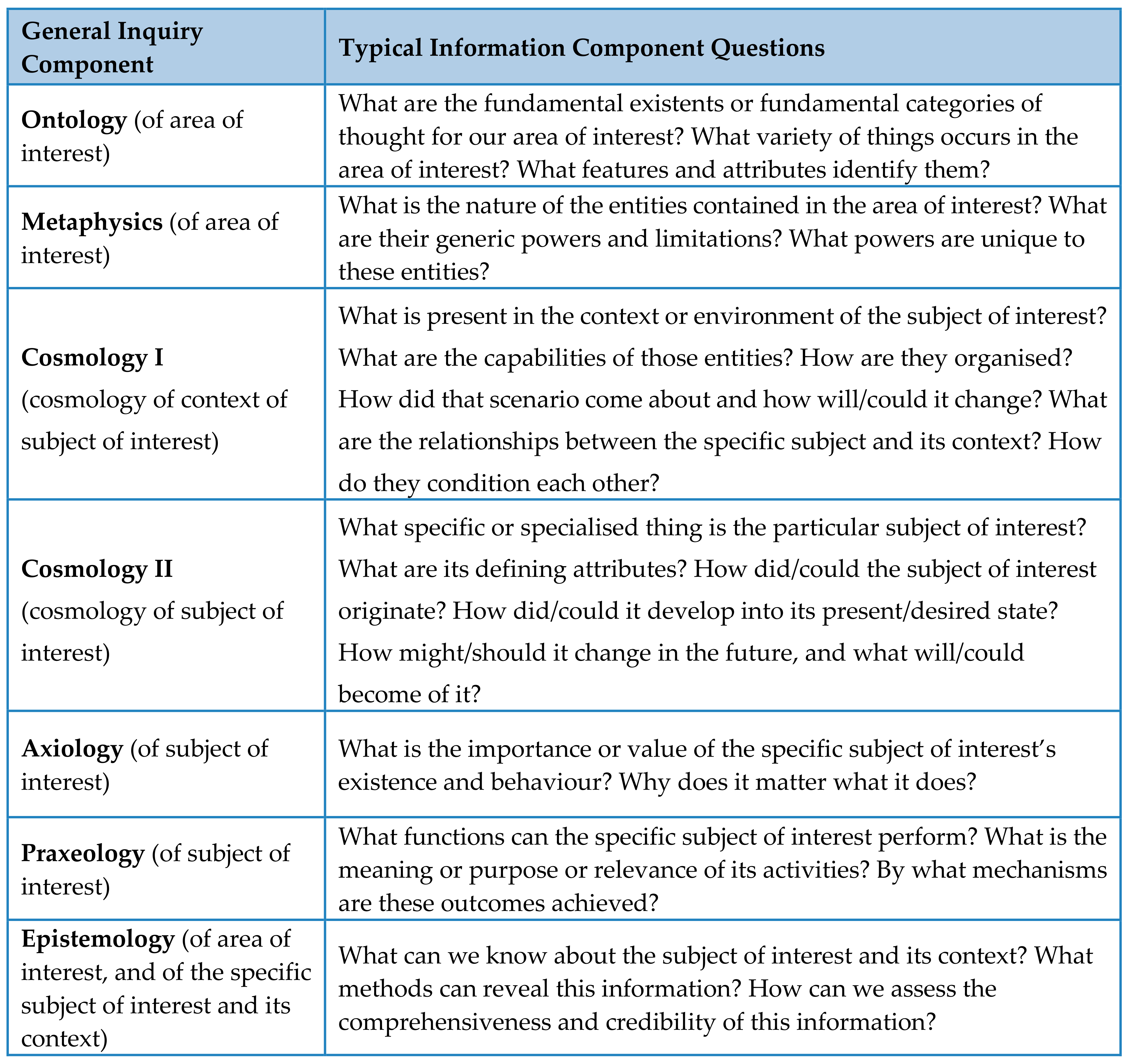

The semiotic triangle, also known as the triangle of meaning, is a simple model about the meaning of words, specifically the words that point to or reference something [19,20].

A basic analysis, which we find already in Aristotle, shows that we use certain words (called “terms”) to point to or refer to ‘things’ that exist ‘in the world’, e.g., flowers, buildings, persons, love, values, numbers, emotions, ideas, beliefs and myths. We think these things exist because we have concepts in our minds that represent them to us, and experiences that we can interpret using these concepts.1 In this way, we find our perceptions meaningful insofar as they can be associated with concepts. We can model this in terms of the semiotic triangle, as illustrated in Figure 1.

Figure 1.

The semiotic triangle.

Note that according to this model each term has a ‘referent’, namely the ‘thing’ (phenomenon) in the real world it points to, and a ‘meaning’, which is the idea (concept) it evokes in our or other minds when we use the term. The concept is the mental model one has of the thing or phenomenon being referred to by the term, and hence the concept is the meaning the perceived phenomenon has for the observer.2 The concepts we have about things in the real world are ‘categories of thought’, i.e., ideas that help us to understand our perceptions and hence make judgements or take action as we go about our lives. When we consciously associate these concepts with terms, we establish the elements of a language.

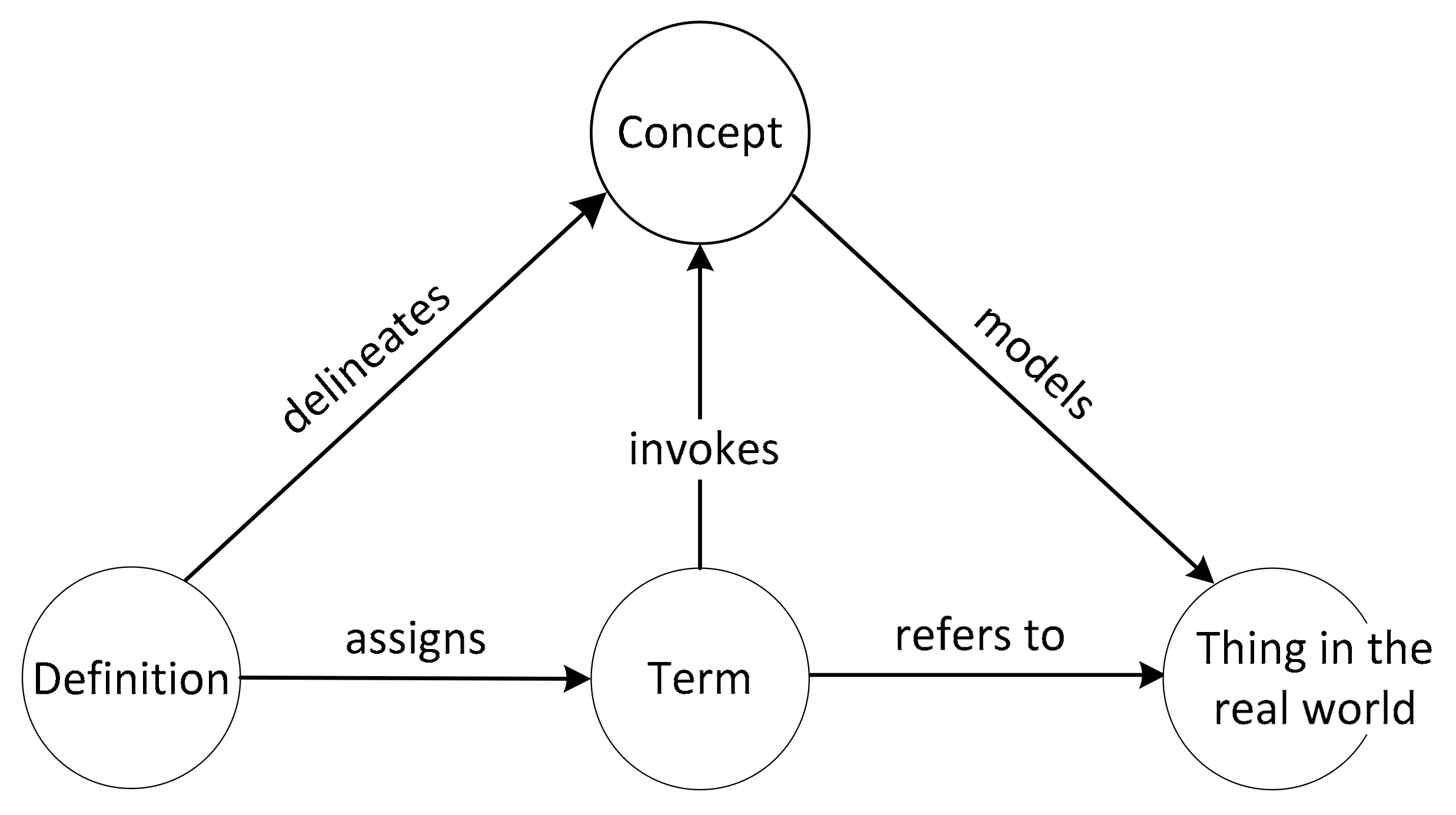

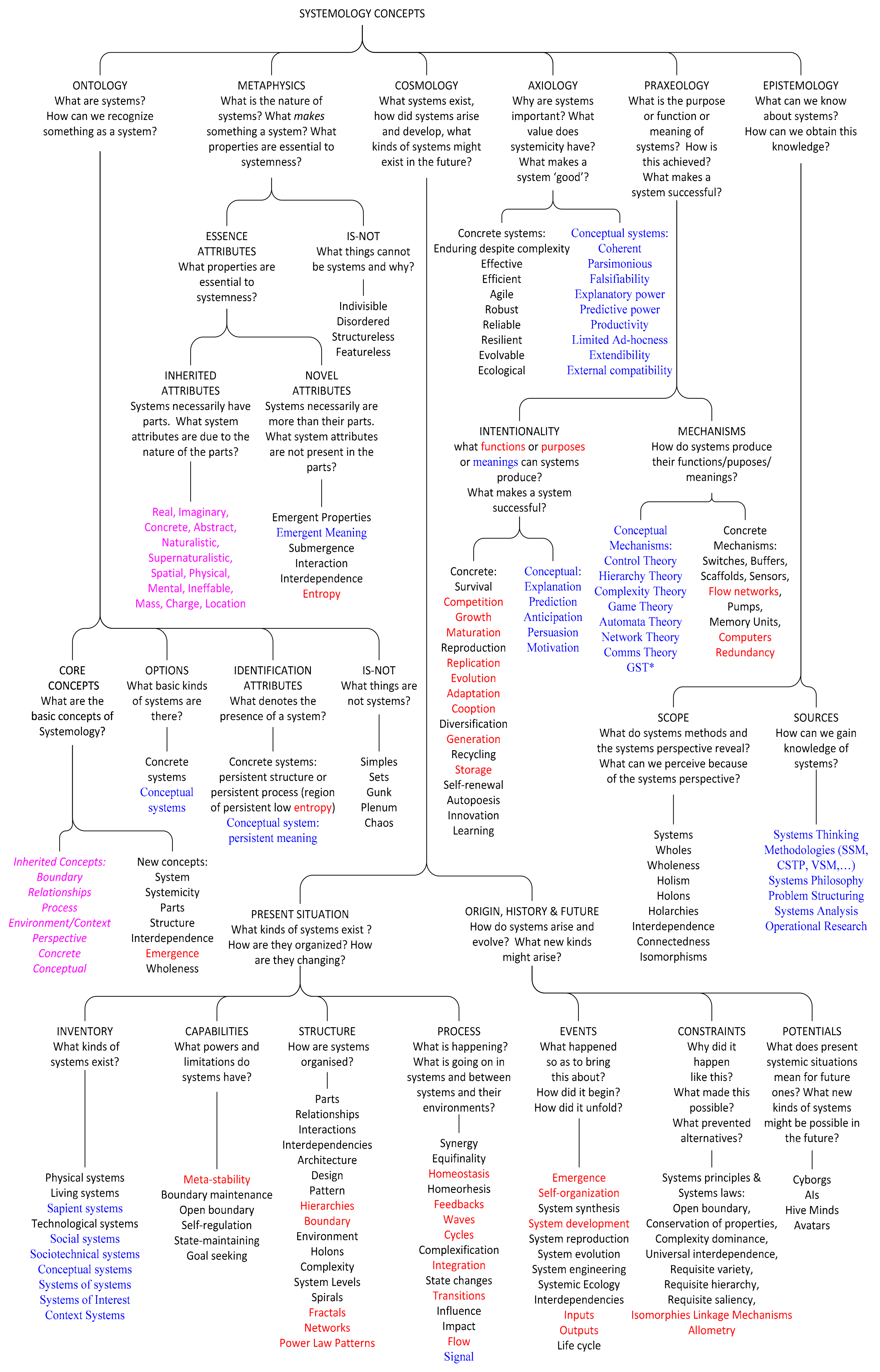

There is, however, a difference in variety between ordinary language and thought. This simple model shows how miscommunication arises in everyday discourse: even when two people use the same term to refer to the same thing in the real world, the concepts the term invokes in each of them may differ, so they may misunderstand each other despite using the term correctly. The polysemy of terms is a problem in addition to that of terms pointing to different referents in different contexts, and it is a frequent occurrence in the everyday use of natural language. For the 500 most common words in the English language, the Oxford English Dictionary records 14,070 distinct meanings, an average of 28 meanings per word [22].

We clearly have many more concepts than we have terms, and this is why we have to create ‘technical terminologies’ (also called ‘protected vocabularies’ or ‘technical vocabularies’) for specific disciplines or areas of investigation, so that the terms we use have commonly agreed, unique meanings in those contexts.3 Without such communal consent about the use of terms, we could not hope to collaborate effectively within or across disciplines. We would be unable to agree on what we are about to do, explain why it is important, teach what we have learnt, or think in a consistent way about our subject matter. To create such a context-specific vocabulary we place constraints on meanings through definitions, which specify what attributes of the thing in question are to be included in or excluded from the concept entailed by the term. This is illustrated in Figure 2.

Figure 2.

A category of analysis in relation to a thing in the real world.
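The semiotic triangle and the problem of polysemy can be made concrete with a small data structure. The following Python sketch is illustrative only: the `Concept` class, the `technical_vocabulary` function and the example term ‘bank’ with its two senses are assumptions introduced here, not part of any standard. It models ordinary language as a mapping in which one term can point to several concepts, and a technical vocabulary as a mapping that admits exactly one agreed concept per term.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Concept:
    """The 'meaning' vertex of the semiotic triangle: a mental model of a referent."""
    label: str
    description: str


# Ordinary language: one term (the hypothetical example 'bank') evokes several concepts.
NATURAL_LANGUAGE: dict[str, set[Concept]] = {
    "bank": {
        Concept("bank/finance", "an institution that holds deposits"),
        Concept("bank/river", "the sloping land beside a river"),
    },
}


def technical_vocabulary(senses: dict[str, set[Concept]],
                         agreed: dict[str, str]) -> dict[str, Concept]:
    """Restrict each term to the single concept a community has agreed on.

    `agreed` maps a term to the label of its agreed sense; the result maps
    each term to exactly one concept, as a technical vocabulary requires.
    """
    vocabulary: dict[str, Concept] = {}
    for term, label in agreed.items():
        matches = [c for c in senses.get(term, set()) if c.label == label]
        if len(matches) != 1:
            raise ValueError(f"no unique sense {label!r} for term {term!r}")
        vocabulary[term] = matches[0]
    return vocabulary


hydrology = technical_vocabulary(NATURAL_LANGUAGE, {"bank": "bank/river"})
finance = technical_vocabulary(NATURAL_LANGUAGE, {"bank": "bank/finance"})
print(hydrology["bank"].description)  # the sloping land beside a river
print(finance["bank"].description)    # an institution that holds deposits
```

Two communities can thus build different, equally valid vocabularies from the same polysemous term; what matters is that within each vocabulary the term has a single meaning.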

Note that definitions can come in multiple forms [24], some of which are stronger than others, for example:

- intensional definitions, which specify the meaning of a term by giving the necessary and sufficient conditions for when the term applies;

- extensional definitions, which define applicability by listing everything that falls under that definition;

- operational definitions, which define applicable ranges of measurable parameters within which the term applies;

- ostensive definitions, which suggest where the term applies by giving indicative examples (without being exhaustive); and

- negative definitions, which articulate applicability by specifying what is excluded from the meaning of the term.
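To illustrate why some kinds of definition are stronger than others, the following Python sketch treats each kind as a rule for deciding whether a term applies. All example terms, instances and the temperature band are hypothetical, and modelling ostensive and negative definitions as partial rules (returning `None` for ‘undecided’) is an interpretive assumption of the sketch rather than a claim from the literature on definitions.

```python
from typing import Optional


def intensional_even(n: int) -> bool:
    """Intensional: a necessary and sufficient condition decides every case."""
    return n % 2 == 0


WEEKDAYS = frozenset({"Mon", "Tue", "Wed", "Thu", "Fri"})


def extensional_weekday(day: str) -> bool:
    """Extensional: the complete list of instances decides every case."""
    return day in WEEKDAYS


def operational_warm(temp_c: float) -> bool:
    """Operational: a range of a measurable parameter (the 20-30 degC band is assumed)."""
    return 20.0 <= temp_c < 30.0


def ostensive_mammal(animal: str) -> Optional[bool]:
    """Ostensive: indicative examples confirm some cases; None means 'undecided'."""
    return True if animal in {"dog", "whale", "bat"} else None


def negative_mammal(animal: str) -> Optional[bool]:
    """Negative: stated exclusions (a bird, a fish, a reptile) rule some cases out."""
    return False if animal in {"sparrow", "salmon", "lizard"} else None


def mammal(animal: str) -> Optional[bool]:
    """Combining the two weaker definitions narrows, but does not close, the gap."""
    verdict = ostensive_mammal(animal)
    return verdict if verdict is not None else negative_mammal(animal)


for animal in ("whale", "salmon", "mouse"):
    print(animal, mammal(animal))  # whale True, salmon False, mouse None
```

In this reading, intensional, extensional and operational definitions settle every case in their domain, while ostensive and negative definitions, even when combined, leave some cases undecided.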

By using a careful definition, a concept can be made more precise, and the distinctions that can be made in discussing the subject can be made finer, thus improving the rigour and the expressive power of the associated term.

When we consciously restrict the meaning of a concept evoked by a term, by associating it with a definition, then we create a ‘category of analysis’. In ontology development, this is referred to as a ‘category’.

2.3. Definitions of a Vocabulary and a Termbase

Ontology construction typically starts with collecting terms and assigning their definitions, and such a collection is called the domain’s (technical) ‘vocabulary’. Such a vocabulary differs from a dictionary in that every term has a unique meaning. If the vocabulary is captured in a database, then the database is called a ‘termbase’, and the process of collecting, defining, translating, and storing those terms is known as ‘terminology management’.
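A termbase can be as simple as a database table in which the term is the key. The following Python sketch uses the standard-library `sqlite3` module with an in-memory database; the table layout and the `add_term` and `lookup` helpers are hypothetical and far simpler than the data models described in terminology standards. Making the term the primary key is one way of enforcing the property that distinguishes a vocabulary from a dictionary: each term has exactly one meaning.

```python
import sqlite3
from typing import Optional

# In-memory termbase with a hypothetical minimal schema.
conn = sqlite3.connect(":memory:")
conn.execute(
    """
    CREATE TABLE term (
        term       TEXT PRIMARY KEY,  -- one row per term: a vocabulary, not a dictionary
        definition TEXT NOT NULL,
        source     TEXT
    )
    """
)


def add_term(term: str, definition: str, source: Optional[str] = None) -> bool:
    """Store a term; refuse (return False) if the term already has a definition."""
    try:
        with conn:  # commits on success, rolls back on error
            conn.execute("INSERT INTO term VALUES (?, ?, ?)", (term, definition, source))
        return True
    except sqlite3.IntegrityError:
        return False


def lookup(term: str) -> Optional[str]:
    """Return the single definition of a term, or None if it is not in the termbase."""
    row = conn.execute("SELECT definition FROM term WHERE term = ?", (term,)).fetchone()
    return row[0] if row else None


print(add_term("category", "a concept whose meaning is restricted by a definition"))  # True
print(add_term("category", "any class of things"))  # False: a second meaning is rejected
print(lookup("category"))
```

A dictionary-style store would instead key on (term, sense) pairs and allow many rows per term.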

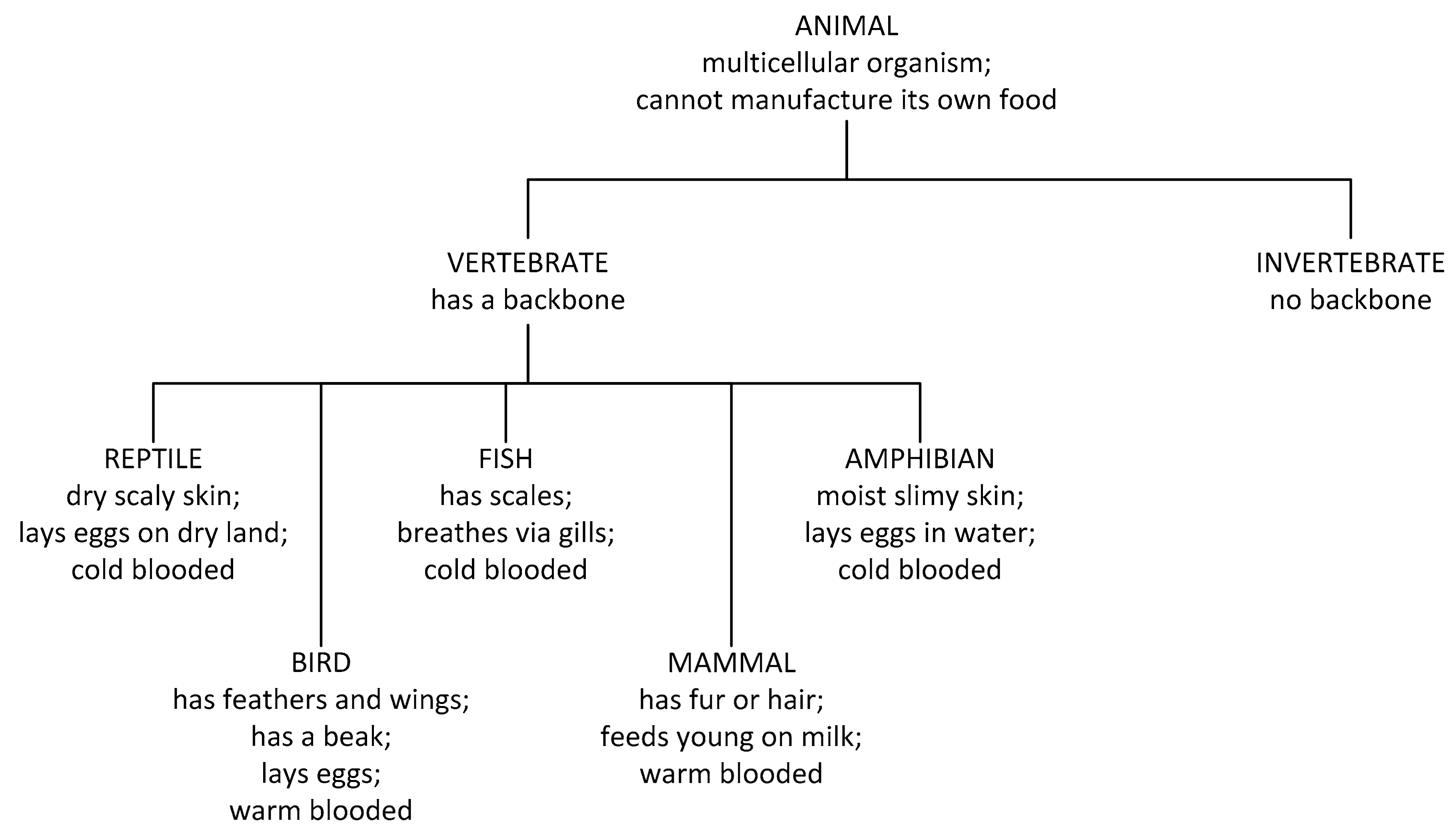

2.4. Definition of a Concept Map

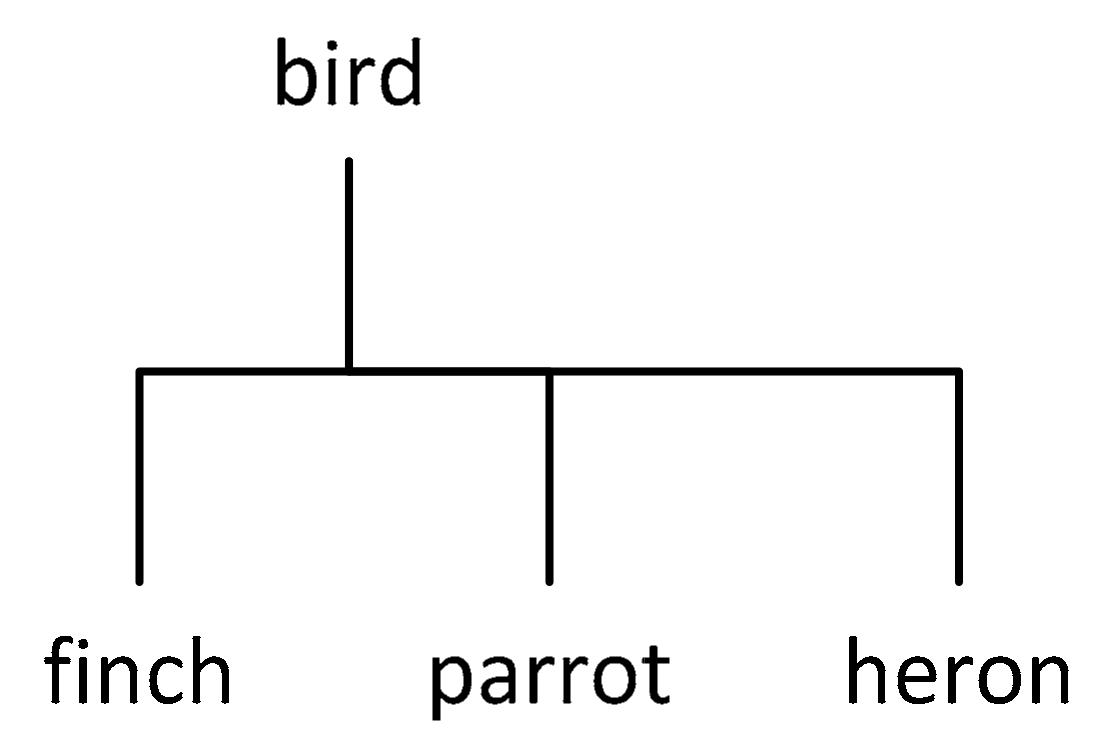

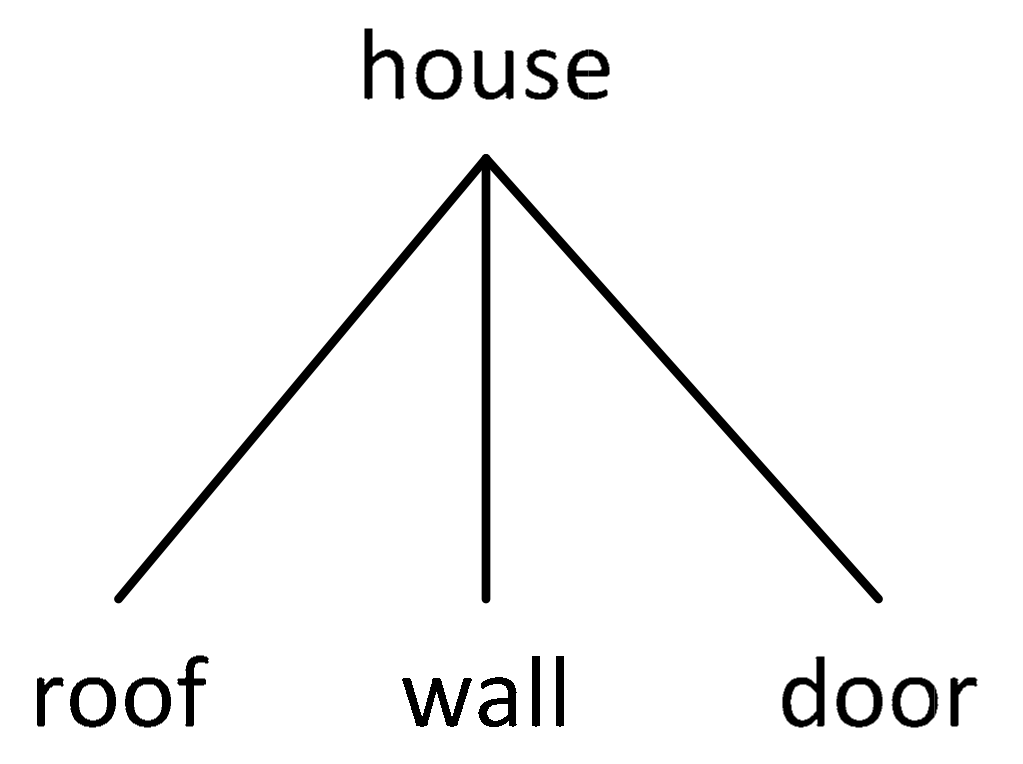

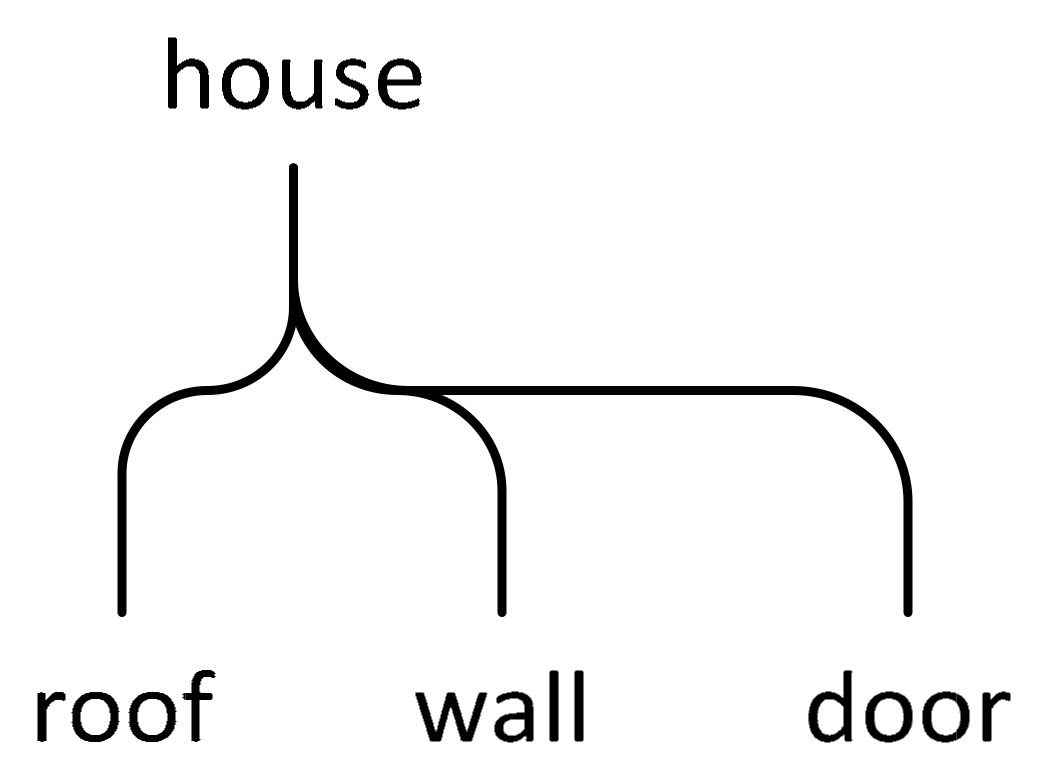

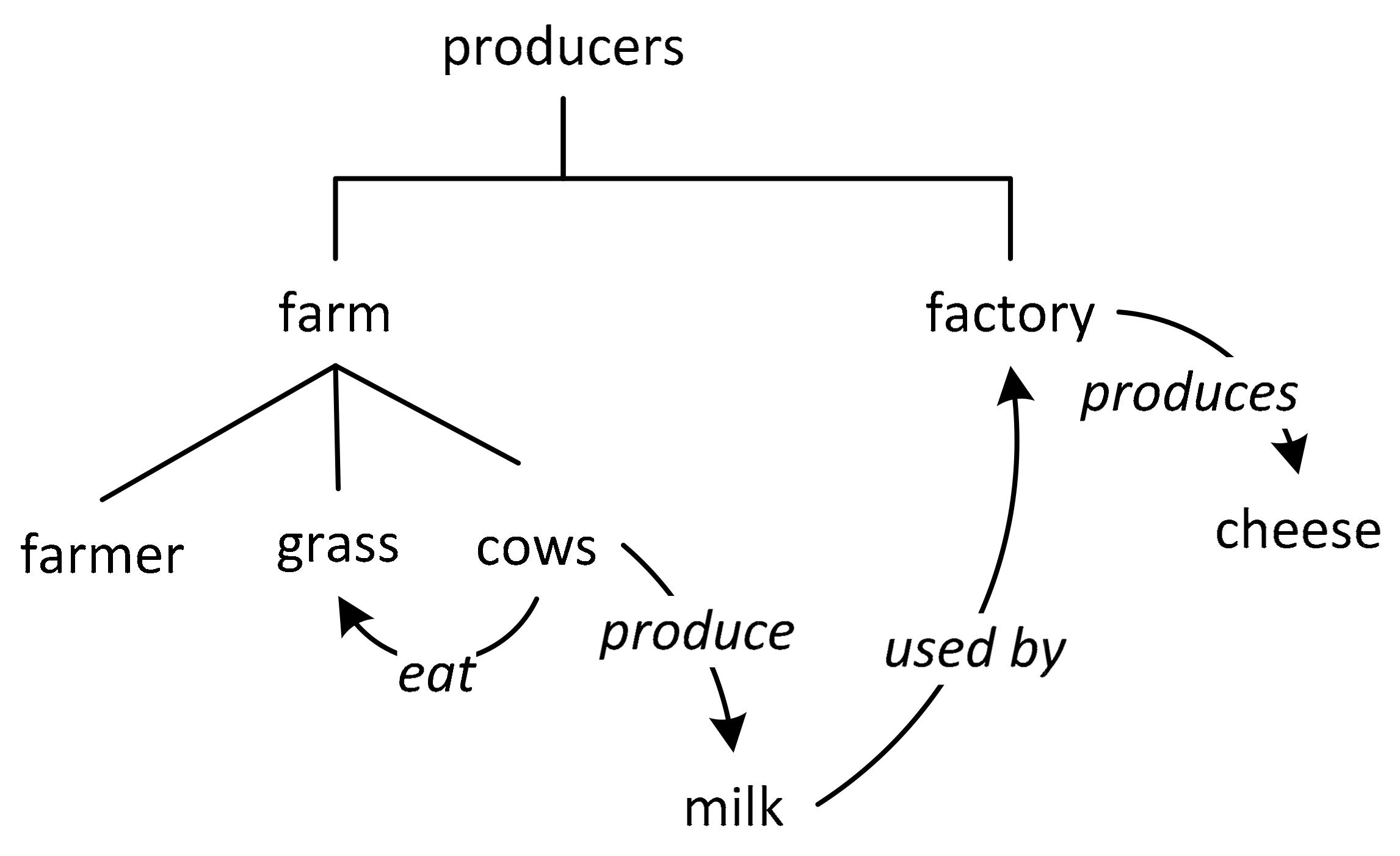

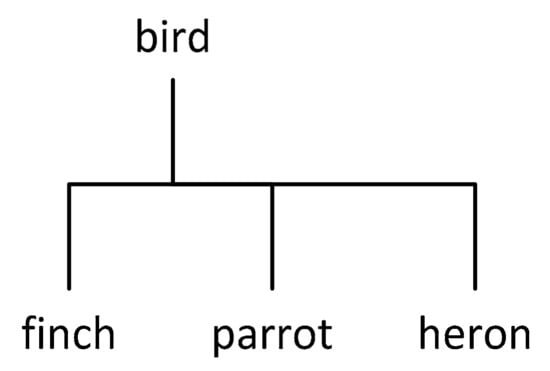

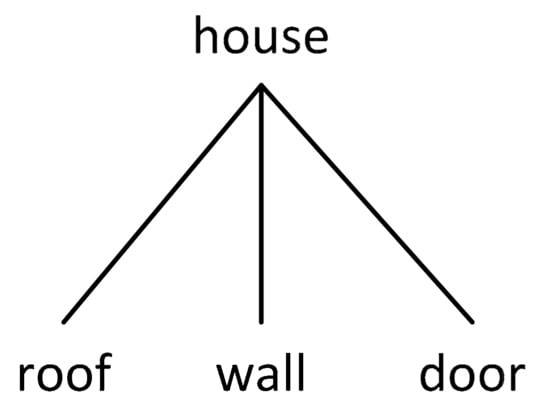

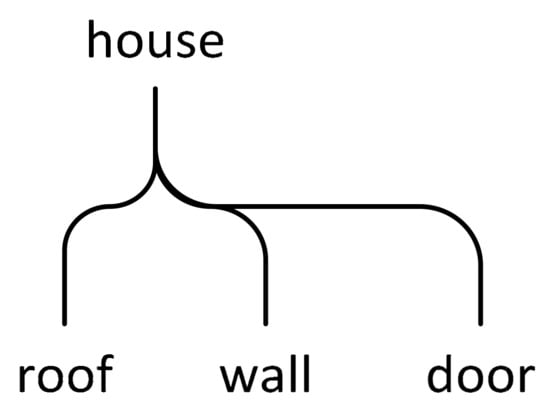

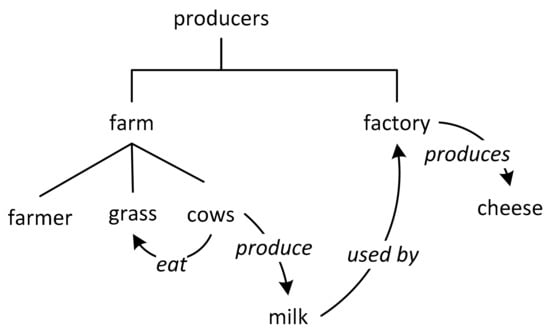

Concepts are not formed or used in isolation, and it is common practice to visualise the relationships among them using a graphical representation, called a ‘concept map’. A concept map is typically presented as a network, with the concepts placed at the nodes and the relationships as connecting lines, which can be labelled to indicate the relationship. Figure 1 and Figure 2 are diagrams of this sort. One can make such a map as simple or as complex as the study at hand requires. There are many kinds of relationships that can exist between concepts. Three common ones are the superordinate/subordinate relationship, where the characteristics of the more general concept are inherited by the more specialised ones (as shown in the “inheritance hierarchy” given in Figure 3), the whole/part relationship, where the characteristics are not inherited (as shown in the “partitive hierarchy” given in Figure 4), and the associative relationship (as used in the “relationships network” given in Figure 2) (refs).

Note the use of graphical conventions such as tree forms, fan forms and labelled arrows to indicate kinds of relationships. Such conventions are important, but they are not standardised; for example, we could equally denote a partitive hierarchy using a tree with rounded corners to distinguish it from the square-cornered tree used to denote an inheritance hierarchy. Such a convention for a partitive hierarchy is shown in Figure 5. The kinds of relationships can of course be used in combination in the same concept map, so long as the conventions used are made clear. An example of such a mixed-model concept map is shown in Figure 6.

Figure 3.

An inheritance hierarchy.

Figure 4.

A partitive hierarchy.

Figure 5.

An alternative convention for showing a partitive hierarchy.

Figure 6.

A concept map with multiple kinds of relationships.
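The difference between inheritance and partitive relationships can be shown with a concept map stored as labelled edges. In the following Python sketch, the concepts, the relation labels `is_a`, `part_of` and `operates`, and the characteristics are hypothetical, and the `is_a` edges are assumed to form an acyclic hierarchy. Characteristics propagate along `is_a` edges only, so a car inherits what is true of vehicles, but a wheel inherits nothing from the car it is part of.

```python
# Hypothetical concept map as labelled edges: (source, relation, target).
EDGES = [
    ("car", "is_a", "vehicle"),      # inheritance hierarchy
    ("bicycle", "is_a", "vehicle"),
    ("wheel", "part_of", "car"),     # partitive hierarchy
    ("engine", "part_of", "car"),
    ("driver", "operates", "car"),   # associative relationship
]

# Characteristics attached directly to some concepts (illustrative only).
OWN_CHARACTERISTICS = {
    "vehicle": {"transports people or goods"},
    "car": {"has an engine"},
    "wheel": {"is round"},
}


def characteristics(concept: str) -> set[str]:
    """Collect a concept's characteristics, inheriting only along is_a edges."""
    result = set(OWN_CHARACTERISTICS.get(concept, set()))
    for source, relation, target in EDGES:
        if source == concept and relation == "is_a":
            result |= characteristics(target)
    return result


def related(concept: str, relation: str) -> list[str]:
    """Concepts that stand in the given relation to `concept` (incoming edges)."""
    return [s for s, r, t in EDGES if r == relation and t == concept]


print(sorted(characteristics("car")))    # ['has an engine', 'transports people or goods']
print(sorted(characteristics("wheel")))  # ['is round']: nothing inherited from 'car'
print(related("car", "part_of"))         # ['wheel', 'engine']
```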

2.5. Definition of an Ontology

An ontology consists of categories, as defined above, arranged according to some subject matter classification scheme, such as a taxonomy or typology [25]. The classification scheme provides a structure into which the concepts in the vocabulary can be sorted, and establishes the relationships between the concepts.

Ontologies are often specified in a formal way so that they can be made machine-readable, and thus be used in application areas involving the processing of information by computers, for example in AI, machine translation, and knowledge management. However, as shown above, ontologies can also be represented graphically, via a concept map. Because of their visual character, concept maps are useful tools for making a concise representation of all or part of an ontology, and thus are often used to guide the early stages of ontology development. Formal representations, on the other hand, can accommodate very large ontologies, which concept maps cannot easily or usefully do.

2.6. Types of Ontologies

In addition to domain ontologies, there are also ‘upper ontologies’ (also called ‘foundational ontologies’ or ‘top ontologies’), which contain general categories that are applicable across multiple domains. Because these general concepts are common to all domains, an upper ontology can provide a common foundation for domain ontologies and thereby support semantic interoperability between them.
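The role of an upper ontology as a common foundation can be sketched as follows. In this Python example, the upper categories, the two domain ontologies (`MEDICINE` and `ENGINEERING`) and their terms are hypothetical, and each domain is assumed to be acyclic and to anchor every category in the upper ontology. Because both domains share the same upper categories, a term from one domain can be related to a term from the other through their most specific common upper category.

```python
from typing import Optional

# Hypothetical upper ontology (child -> parent; the root maps to None).
UPPER: dict[str, Optional[str]] = {"entity": None, "object": "entity", "process": "entity"}

# Two hypothetical domain ontologies, each anchoring its categories in UPPER.
MEDICINE = {"patient": "person", "person": "object", "surgery": "process"}
ENGINEERING = {"pump": "device", "device": "object", "assembly": "process"}


def upper_chain(term: str, domain: dict[str, str]) -> list[str]:
    """Follow is-a links through the domain ontology, recording the upper categories reached."""
    chain: list[str] = []
    node: Optional[str] = term
    while node is not None:
        if node in domain:
            node = domain[node]
        elif node in UPPER:
            chain.append(node)
            node = UPPER[node]
        else:
            raise KeyError(f"{node!r} is not anchored in the upper ontology")
    return chain


def common_upper(a: str, dom_a: dict[str, str], b: str, dom_b: dict[str, str]) -> str:
    """Most specific upper category shared by two terms from different domains."""
    chain_b = set(upper_chain(b, dom_b))
    return next(c for c in upper_chain(a, dom_a) if c in chain_b)


print(common_upper("patient", MEDICINE, "pump", ENGINEERING))  # object
print(common_upper("surgery", MEDICINE, "pump", ENGINEERING))  # entity
```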

Multiple upper ontologies have been developed, reflecting differences in interests or worldviews (for example, differing in how they envision the nature of time, e.g., as consisting of points or intervals). An upper ontology may draw on contributions from various philosophical disciplines. For example, one part of an upper ontology might be derived from the branches of philosophy called ‘Ontology’ and ‘Metaphysics’, providing general categories denoted by terms such as object, process, property, relation, space, time, role, function, individual, etc. [17]. Another part might be derived from the philosophy of science, providing categories denoted by terms such as energy, force, entropy, quantum, momentum, mechanism, interaction, species, etc. [26]. Another part might be derived from the philosophy of worldviews, providing general categories denoted by terms such as meaning, value, purpose, agency, freedom, knowledge, belief, etc. [14]. We can now see that another part might be derived from the philosophy of systemology, providing general categories denoted by terms such as system, hierarchy, emergence, wholeness, holon, complexity, integration, feedback, meta-stability, design pattern, etc.

The historical inconsistency and ambiguity of systemology’s terms have impeded the construction of an ‘ontology of systemology’, and this has limited the impact of systemology in the building of upper ontologies. This is what we meant earlier when we claimed that having multiple systems terminologies limits the ability to transfer insights from systemology to other disciplines that might be able to employ them, and thus opportunities are lost for accelerating progress and avoiding duplication of effort in the specialised disciplines.

2.7. State of the Art in Ontology Development

There are several important standards that have been established for ontology development, several significant implementations of these standards, and several examples of uses of these implementations relevant to the systems community. We will not review these in detail here, but in this section we mention some of the key ones in order to give a sense of the ‘state of the art’ of the foundations and the technical maturity of the field of ontology development.

The following are the most relevant standards for terminology management.

- ISO 704:2000 Terminology work—Principles and methods

- ISO 860:1996 Terminology work—Harmonisation of concepts and terms

- ISO 1087-1:2000 Terminology work—Vocabulary-Part 1: Theory and application

- ISO 1087-2:2000 Terminology work—Vocabulary-Part 2: Computer applications

- ISO 10241:1992 Preparation and layout of international terminology standards

- NISO Z39.19-200x Guidelines for the Construction, Format, and Management of Monolingual Controlled Vocabularies

Several upper ontologies have been constructed to potentially serve as foundations for building domain ontologies (in ontology development these are called ‘implementations’ of the standards mentioned above). Significant implementations include:

- BFO (Basic Formal Ontology) [27]

- DOLCE (Descriptive Ontology for Linguistic and Cognitive Engineering) [28,29]

- GFO (General Formal Ontology) [30]

- SUMO (The Suggested Upper Merged Ontology) [31]

- KR Ontology [32]

- YAMATO (Yet Another More Advanced Top-level Ontology) [33]

- UFO (Unified Foundational Ontology) [34]

- PROTON (PROTo ONtology) [35]

- Cyc [36]

Some of these implementations are extensive. For example, SUMO, which is owned by the IEEE, underpins the largest formal public ontology in existence today [31]. It consists of SUMO itself (the upper ontology), a mid-level ontology called MILO (for MId-Level Ontology), and more than 30 domain ontologies (e.g., Communications, Countries, Distributed computing and User interfaces, Economy, Finance, Automobiles and Engineering components, Food, Sport, Geography, Government and Justice, People and their Emotions, Viruses, and Weather). The SUMO-based ontology cluster contains ~25,000 terms and ~80,000 relationship axioms. This ontology is free to use, unlike, for example, Cyc, which is licensed.

Different implementations make different assumptions about what should be included and how it should be represented, and these choices give the corresponding ontology implementations different strengths and weaknesses. A comparison of different upper ontology implementations can be found in [37], and illustrative examples of some of these implementations, together with a discussion of differences in their commitments and their pros and cons, are available at https://cw.fel.cvut.cz/wiki/_media/courses/osw/lecture-08matching-h.pdf.

Ontology standards and formal implementations have thus far had little recognition or impact in the systems community. For example, INCOSE’s Systems Science Working Group (SSWG) initiated a project in early 2011 to develop a unified ontology around the concept of ‘system’, but effectively abandoned the project after 2.5 years, concluding that “the attempt to converge on unified concept maps let alone any conversion of these to ontologies may be futile”.4 The working group did consult ISO standards about specific terms (e.g., ‘context’ and ‘environment’5) but none of the ISO standards on ontology development. They did reflect on the KR ontology, but there is little trace of it in the concept maps the working group produced.6

A significant, but limited, attempt to apply formal ontologies occurred in 2018, as part of the abovementioned INCOSE Fellows Project on the definition of ‘system’. This project demonstrated how their proposed definition of ‘system’ might be represented using BFO [41].

Although we have well-developed standards for the procedure of building up a concept map for a domain of interest (for a general overview, see [42]), the task can be intellectually challenging. We will discuss some of these challenges in the next section.

3. Systems Thinking as an Aid to Ontology Development

3.1. General Challenges in Ontology Development

As mentioned above, there are well-established methods and frameworks for constructing ontologies. Nevertheless, constructing a domain ontology can be extremely challenging as an intellectual exercise. The basic challenges are to:

- Keep the definitions clear while expressing them as compactly as possible;

- Limit the conceptual scope of a term to the minimum without trivialising it;

- Minimise the number of terms employed without leaving out important distinctions;

- Maintain coherence of the network of terms and definitions;

- Maximise the use of categories and relationships already established in a relevant upper ontology, to avoid duplication of effort and to maximise interoperability with other domain ontologies; and

- Maximise compatibility between proposed terms, definitions and meanings already present in the scholarly literature.

We shall refer to the items in the above list, taken as goals for ontology development, as ‘general principles in ontology development’.

It is unlikely that all of these criteria can be satisfied simultaneously, so some compromises will have to be reached. It is therefore important to bear in mind the impact of changes at every step, not only on the new additions themselves but also on the whole ontology developed thus far.
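Some of these principles, notably maintaining the coherence of the network of terms and definitions, lend themselves to mechanical checks on a draft ontology. The following Python sketch is a minimal illustration under stated assumptions: each category records its parent and the terms its definition uses, and both the deliberately faulty draft and the `coherence_problems` helper are hypothetical. It reports definitions that use undefined terms and inheritance chains that loop back on themselves.

```python
from typing import Optional

# Hypothetical, deliberately faulty draft: each category names its parent
# and the terms its definition uses.
DRAFT: dict[str, dict] = {
    "entity": {"is_a": None, "uses": set()},
    "part": {"is_a": "entity", "uses": set()},
    "system": {"is_a": "whole", "uses": {"part", "interaction"}},  # 'interaction' undefined
    "whole": {"is_a": "holon", "uses": {"part"}},
    "holon": {"is_a": "whole", "uses": set()},  # whole/holon form an is_a cycle
}


def coherence_problems(ontology: dict[str, dict]) -> list[str]:
    """Report undefined terms in definitions and broken or cyclic is_a chains."""
    problems: list[str] = []
    for term, entry in ontology.items():
        for used in sorted(entry["uses"]):
            if used not in ontology:
                problems.append(f"{term}: definition uses undefined term {used!r}")
        seen = {term}
        parent: Optional[str] = entry["is_a"]
        while parent is not None:
            if parent in seen:  # revisiting a category means the chain loops
                problems.append(f"{term}: is_a cycle through {parent!r}")
                break
            if parent not in ontology:
                problems.append(f"{term}: parent {parent!r} is undefined")
                break
            seen.add(parent)
            parent = ontology[parent]["is_a"]
    return problems


for problem in coherence_problems(DRAFT):
    print(problem)
```

Such checks cannot judge whether a definition is good, only whether the network is internally consistent; the trade-offs between the principles still call for human judgement.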

3.2. The Nature of Systems, and the Systemicity of Ontologies

We mentioned earlier the interdependencies between the concepts in an ontology, without exploring an important implication of these relationships, namely that an ontology is a system in a sense that is much deeper and richer than the fact that it instantiates a taxonomic system.

According to one systems definition, due to Anatol Rapoport and popular in the systems science community, a system is a whole that functions as a whole because of the interactions between its parts [43]. This is consistent with the definition of systems now emerging in the systems engineering community, according to which “A physical system is a structured set of parts or elements, which together exhibit behaviour that the individual parts do not” and “A conceptual system is a structured set of parts or elements, which together exhibit meaning that the individual parts do not” [8,44].

In the sense of these definitions, an ontology is a conceptual system. An ontology’s systemicity is evident in the way that the scope of the definitions of existing terms is constantly adjusted to accommodate new terms and their definitions, in a way that maintains the distinctness of each term’s meaning, the overall coherence of the relationships between the terms, and alignment with changes in the relationships between the domain of interest and other domains.
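The ripple effect of adding a term can be sketched in Python. Purely for illustration, we assume that sibling categories are meant to be mutually exclusive and that each is given extensionally over a small, hypothetical sample of instances; `rescoping_needed` is likewise a hypothetical helper. Adding a new sibling then flags every existing category whose scope overlaps the newcomer, and hence whose definition would need adjusting to keep meanings distinct.

```python
# Hypothetical sibling categories, given extensionally over a small sample of instances.
# Assumption for illustration: siblings are meant to be mutually exclusive.
SIBLINGS = {
    "hand tool": {"hammer", "lever"},
    "machine": {"pump", "engine", "robot"},
}


def rescoping_needed(siblings: dict[str, set[str]], new_term: str,
                     extension: set[str]) -> dict[str, set[str]]:
    """Return each existing category whose scope overlaps the new term, with the overlap."""
    return {
        term: ext & extension
        for term, ext in siblings.items()
        if term != new_term and ext & extension
    }


print(rescoping_needed(SIBLINGS, "automaton", {"robot", "thermostat"}))
# {'machine': {'robot'}}
```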

In our view, recognising that an ontology is a system is important because it implies that we can leverage knowledge that we have about systems in general, to help us meet the specific challenges outlined above. In the subsections to follow, we will demonstrate various ways in which systems concepts and principles either enrich our understanding of existing practices in ontology development or suggest helpful new approaches to it.

3.3. Systems Thinking for Ontology Development

To leverage our knowledge of systems in the context of ontology development, we have to employ what is known as “Systems Thinking”. There is no general agreement on what ‘Systems Thinking’ is, but there have been many attempts to define a ‘common core’ for it, e.g., [45,46]. A challenge for such syntheses is that the body of literature on this subject is extensive, and consequently it is easy for any particular review to overlook important works.

We regard the framework developed initially by Derek Cabrera as the most representative account of what Systems Thinking is, because he used formal scientific methods to distill and evaluate the concepts and themes relevant to systems thinking. Cornell University awarded a PhD for this work in 2006 [47]. It has since been extensively discussed in the academic literature (e.g., [48]) and was recently published as a popular book co-authored with translational scientist Laura Cabrera [49].

We took Cabrera’s definition of ‘Systems Thinking’ as our standard for the present study [47] (p. 176):

“Systems thinking is a conceptual framework, derived from patterns in systems science concepts, theories and methods, in which a concept about a phenomenon evolves by recursively applying rules to each construct and thus changes or eliminates existing constructs or creates new ones until an internally consistent conclusion is reached. The rules are:

- Distinction making: differentiating between a concept’s identity (what it is) and the other (what it is not), between what is internal and what is external to the boundaries of the concept or system of concepts;

- Interrelating: inter linking one concept to another by identifying reciprocal (i.e., 2 × 2) causes and effects;

- Organising Systems: lumping or splitting concepts into larger wholes or smaller parts; and

- Perspective taking: reorienting a system of concepts by determining the focal point from which observation occurs by attributing to a point in the system a view of the other objects in the system (e.g., a point of view)”.

Cabrera called this the ‘DSRP’ framework, after Distinction-making (D), Organising Systems (S), Inter-relating (R), and Perspective-taking (P), and from this he developed an application method based on what he now calls ‘the Distinction Rule’, ‘the Relationships Rule’, ‘the Systems Rule’, and ‘the Perspectives Rule’ [49] (p. 9). Each rule is defined in terms of a concept and its dual, namely thing/idea and other (D), cause and effect (R), whole and part (S), and point/subject and viewpoint/object (P) [47] (p. 178), [49] (p. 9).

In what follows, we will introduce various concepts that are important in systems science and show how these concepts and Systems Thinking (in the mold of DSRP) can support efforts in ontology development.

3.4. Systems Principles in Ontology Development

3.4.1. Dialectical Feedback

As mentioned above, a key challenge for ontology development is to ensure that concepts are clear and their definitions succinct without sacrificing their utility: after all, a “good” concept represents the smallest unit of knowledge carrying as much meaning as possible [16] (p. 9). One way to achieve concise definitions is to leverage a systems principle that originated in dialectics. Dialectics was an ancient debating technique, dating back to Plato, in which people with opposing points of view tried to find out the truth about some matter through reasoned means, by analysing the implications of their opposing views. Dialectical reasoning found a modern incarnation in Hegel, whose dialectical method explicitly sought insight by exploring the tension between opposing concepts (rather than between persons with opposing views). Hegelian dialectics has found a more modern home in systems theory, in the sense that the systems perspective seeks understanding by considering not only the nature of the parts (the reductionist perspective) but also their relationship to the context or environment, and hence aims to explain a phenomenon not only in terms of its composition but also in terms of its relationship to what it is not, that is, to what is different from or contrastive to it [50,51].7 In this way, the meaning of the initial ‘concept of interest’ is deepened and clarified by making it contingent on also understanding the concept associated with its opposite, and the nature of the relationship between the two. This suggests that looking for dialectical opposites might be a useful strategy for clarifying and compacting the definitions of terms in an ontology, because it is often easier to say what something is not than to say what it is.

Note that this is not merely a matter of making a negative definition of the ‘concept of interest’, but rather of defining it and its contrary jointly, making the relationship between them part of how either concept is understood.8 In practice, this technique would typically be applied where the initial concept is difficult to define precisely, or where there is controversy about how the representing term should be used.

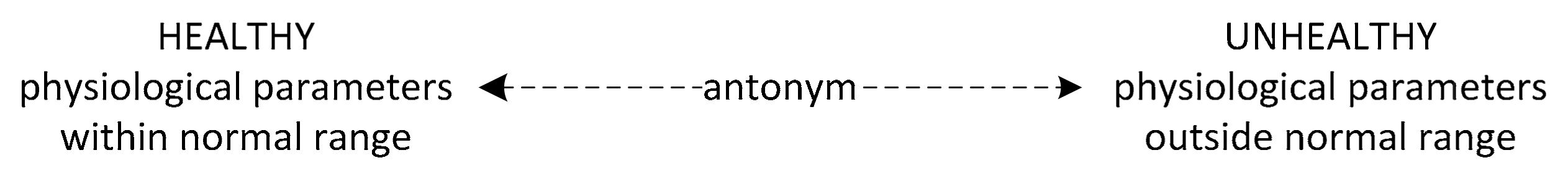

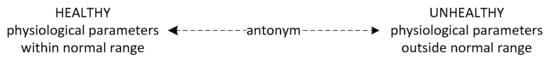

For example, imagine we need to define a term such as ‘healthy’ in the context of human persons. Defining a basis on which we might, in general, regard a person as healthy is a challenge, because human beings are complex systems with multiple properties and state variables, and there may be a large number of ways in which these can be balanced so as to add up to an assessment of ‘healthy’. How can we know that our definition is adequate and comprehensive? The systems principle of dialectical feedback suggests that we start by looking at its opposite, ‘unhealthy’. We can conceptualise this easily, because an unhealthy person could be defined as a person presenting any of the signs that we know of as originating in diseases or traumas, e.g., fevers, pains, cardiac arrhythmias, swellings, etc.9 We could recognise such signs even without being able to diagnose the specific disease or trauma, so this definition of ‘unhealthy’ is not contingent on how comprehensive our knowledge of diseases and traumas is. However, the list of signs suggests a list of relevant physiological parameters that are disturbed by diseases and traumas. We can in principle determine the normal ranges of those parameters in persons not suffering from a disease or trauma, and thus we can now define a healthy person as someone exhibiting physiological signs that are within normal ranges, as shown in Figure 7. This is a simplified example, but it demonstrates how we can quickly arrive at a satisfactory definition by working with the relationship between contraries. Note that the two definitions are linked by the concept of ‘signs’, which we had to define along the way, and also by a special notion of ‘normal’, which was introduced to express the nature of the contrast between the two definitions.
The dialectical tension is captured not by the fact that both definitions involve the notion of ‘signs’, but by their being grounded in different ranges of values for the underlying parameters. When refining terms in this way, it is quite common to also have to define or refine other terms and to articulate their relationship to the categories we are working on. This is a positive phenomenon in ontology development because it improves the terminology more broadly than we set out to do, and this may make it easier to refine other terms later on.
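To make the joint definition concrete, the following Python sketch treats ‘unhealthy’ as presenting at least one sign outside its normal range and ‘healthy’ as its contrary, both defined over the same set of parameters. The parameter names and ranges in `NORMAL_RANGES` are hypothetical placeholders chosen for illustration, not clinical reference values, and the function names are likewise invented for this example.

```python
# Hypothetical parameters and ranges, for illustration only (not clinical values).
NORMAL_RANGES = {
    "body_temp_c": (36.1, 37.5),
    "resting_heart_rate_bpm": (60, 100),
}

def abnormal_signs(measurements: dict[str, float]) -> list[str]:
    """Return the measured parameters whose values fall outside their normal range."""
    return [
        name
        for name, (low, high) in NORMAL_RANGES.items()
        if name in measurements and not (low <= measurements[name] <= high)
    ]

def is_unhealthy(measurements: dict[str, float]) -> bool:
    # 'Unhealthy': presents at least one sign outside its normal range.
    return len(abnormal_signs(measurements)) > 0

def is_healthy(measurements: dict[str, float]) -> bool:
    # 'Healthy': every measured parameter lies within its normal range.
    # It is defined jointly with is_unhealthy via the shared parameters and ranges.
    return not is_unhealthy(measurements)

print(abnormal_signs({"body_temp_c": 39.0, "resting_heart_rate_bpm": 72}))  # ['body_temp_c']
```

The shared `NORMAL_RANGES` table plays the role of the linking concepts ‘signs’ and ‘normal’: changing it changes both definitions at once.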

Figure 7.

A concept map for the categories ‘healthy’ and ‘unhealthy’.

The mapping convention used here is to display the categories in upper case, terse definitions below them in lower case, and the relationships using graphical elements, in this case a dashed arrow to indicate the ‘antonym’ relationship.

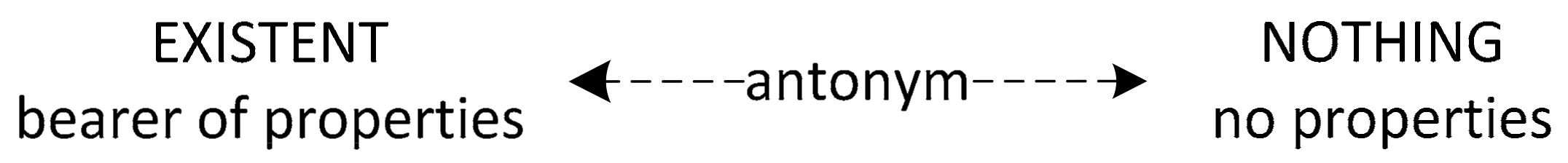

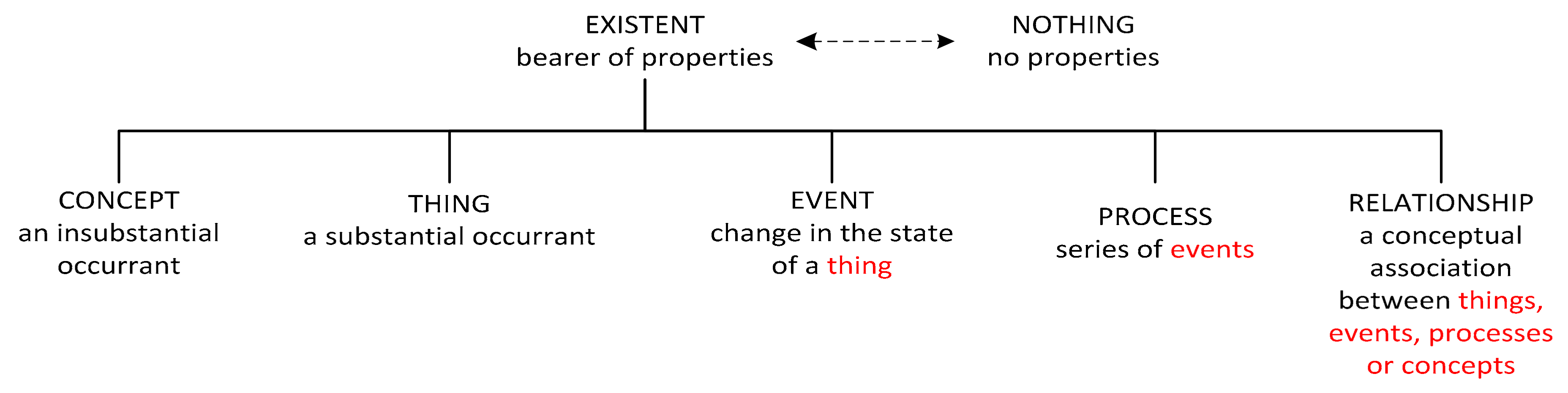

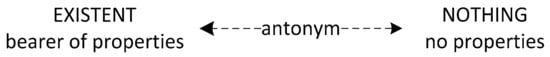

We will now illustrate this use of the dialectical feedback principle in a more complicated case involving a category for an upper ontology. Imagine we want to create a top category for an upper ontology. This would be the most general category in our ontology, so that every other category will be a specialisation of it. The term must be able to refer to anything of any nature whatsoever, that is, irrespective of whether the referent is real, imaginary, abstract, ideal, etc. We need to select a term that could usefully stand for that meaning and determine a nontrivial definition. It would be trivial to define it as “the designator for anything that exists”, because such a definition (a) begs the question of what we mean by ‘exists’, thus subverting our quest for creating a top category, and (b) gives us no guidance for then creating subcategories (e.g., via inheritance relationships).

To start, consider terms that might serve, for example: thing, object, entity, existent, particular, universal type, or individual. All of these have been used as top categories, e.g., BFO, SUMO, and DOLCE use ‘entity’, Cyc uses ‘thing’, and KR Ontology uses a symbol, T, defined as ‘universal type’. In the branch of philosophy called ‘Ontology’, common choices are ‘object’ and ‘particular’ (e.g., [17,52]). Given this variety, it will be hard to achieve one of the goals listed in Section 3.1, namely to “maximise the use of categories and relationships already established in a relevant upper ontology”. However, we can reason about our choice in terms of another goal mentioned there, namely to “maximise compatibility between proposed terms and meanings already present in the literature”. None of the available terms seems ideal:

- ‘thing’ seems inappropriate for a category that might have ‘values’ or ‘ideas’ or ‘processes’ as subcategories;

- ‘object’ seems inappropriate for a category that might have ‘force fields’ or ‘consciousness’ as subcategories;

- ‘entity’ sounds like a term more appropriate for referring to some kind of living being;

- ‘individual’ sounds like a term more appropriate for referring to persons;

- ‘particular’ could suggest an interpretation in the sense of ‘not general’, whereas generality is exactly what is being aimed for;

- ‘existent’ is naturally an adjective and seems clunky when used as a noun; and

- ‘T’ is not a term but a symbol.

The least potentially confusing option seems to be ‘existent’, and therefore we recommend its selection while acknowledging that other choices also have merit.

Moving on to defining it, consider the dialectical opposites of the candidate terms. For most of them, the obvious opposite is inappropriate for a top category. Consider the pairings thing/nothing, object/subject, entity/non-entity, existent/non-existent, particular/universal, individual/group, and, in KR Ontology, T and its opposite (universal type/paradoxical type).

Only ‘non-existent’ and ‘nothing’ are relevant as antonyms of the top category (‘existent’).

A common definition of ‘non-existent’ is: “does not exist or is not present in a particular place”10. This is not helpful. Its first clause is a simple negative definition (which is trivial), and its second is misleading from the dialectical feedback perspective: ‘not present’ suggests that an ‘existent’ has to be somewhere, which is an unwarranted constraint, because some kinds of existents, e.g., numbers or geometrical shapes, are not inherently located ‘somewhere’.11

The antonym ‘nothing’ is helpful, however, because it suggests that ‘nothing’ is a special kind of thing, namely a ‘no-thing’. This is perhaps just a quirk of language, but it puts us on the right mental track, because we can now say that a no-thing is something that has no properties.12 If it had any, those properties would indicate what sort of thing it is, in which case it would not be a no-thing. This suggests the idea that to be an existent is to have properties. Properties cannot be free-floating but have to belong to some existent (there are no actual Cheshire cat smiles without Cheshire cats). So this gives us a concise and useful definition: an ‘existent’ is ‘a bearer of properties’, and ‘nothing’ is ‘not a bearer of properties’. Note the role of properties as a link between these categories, analogously to the role of ‘physiological parameter’ in the previous example about health.
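The resulting pair of definitions can be sketched in Python as two predicates over a hypothetical `Candidate` record: an existent is anything bearing at least one property, and ‘nothing’ is its contrary. The class and function names are illustrative assumptions only, and representing properties as a dictionary is a deliberate simplification.

```python
from dataclasses import dataclass, field

@dataclass
class Candidate:
    """Something we might want to classify; 'properties' maps names to values."""
    label: str
    properties: dict = field(default_factory=dict)

def is_existent(candidate: Candidate) -> bool:
    # An existent is a bearer of properties: it has at least one.
    return bool(candidate.properties)

def is_nothing(candidate: Candidate) -> bool:
    # 'Nothing' is not a bearer of properties, defined as the contrary of existent.
    return not is_existent(candidate)

# A number bears properties without being located anywhere in space.
seven = Candidate("seven", {"parity": "odd", "is_prime": True})
print(is_existent(seven))  # True
```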

This work enables us now to draw the diagram given in Figure 8. It is simple, but its utility will be demonstrated in the next section.

Figure 8.

A concept map for the categories ‘existent’ and ‘nothing’.

3.4.2. Emergence, Wholeness and Coherence

Systems are wholes, that is to say they have properties (called ‘emergent properties’) that only exist at the level of ‘the whole’, and are not present in the parts or the relationships among the parts [8] and [11] (p. 12), [53] (p. 55). For systems to function as wholes, their parts must work together in a coherent way, otherwise instabilities would arise to undermine the integrity of the system and its ‘wholeness’ would break down (the emergent properties would degrade or be lost). This idea is important when thinking about ontologies. In a dictionary, terms have multiple meanings, and the applicable meaning is determined by the context in which the term is used. In an ontology, terms must always have unique meanings. This can ostensibly be achieved via a list of terms each with an associated single definition, but in practice this can be confounded by the fact that a term definition may well use other terms. This opens up the possibility of definitions being ambiguous, circular, or incoherent because of the connected meanings of those other terms. The risk of this occurring can be managed by creating a network of categories in which the relationships and interdependencies between the categories are made explicit, easy to trace, and open to assessment. This makes it possible to check that definitions are not circular, that concepts do not have unwarranted overlapping meanings, and that the definitions express all the nuances we need to cover in order to discuss the subject of interest.
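One way to make such a network checkable is to record, for each term, the other terms its definition uses, and then search that dependency graph for cycles. The Python sketch below does this with a depth-first search; the function name and the sample terms are hypothetical, and practical ontology tooling would typically work on richer structures than a plain dictionary.

```python
from typing import Optional

def find_definition_cycle(uses: dict[str, set[str]]) -> Optional[list[str]]:
    """Return one circular chain of definitions, or None if there is none.

    `uses` maps each term to the terms that appear in its definition.
    Terms that are used but never defined are treated as primitives.
    """
    WHITE, GREY, BLACK = 0, 1, 2  # unvisited, on the current path, finished
    state = {term: WHITE for term in uses}
    path: list[str] = []

    def visit(term: str) -> Optional[list[str]]:
        state[term] = GREY
        path.append(term)
        for used in sorted(uses.get(term, ())):
            status = state.get(used, WHITE)
            if status == GREY:
                # Back edge: the cycle runs from `used` along the path and back.
                return path[path.index(used):] + [used]
            if status == WHITE:
                found = visit(used)
                if found:
                    return found
        path.pop()
        state[term] = BLACK
        return None

    for term in sorted(uses):
        if state[term] == WHITE:
            found = visit(term)
            if found:
                return found
    return None

uses = {"system": {"part", "whole"}, "whole": {"system"}, "part": {"component"}}
print(find_definition_cycle(uses))  # ['system', 'whole', 'system']
```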

The network of terms thus forms a coherent system, in which the mutual coherence of the definitions makes the system stable as a whole, and the distinctness of individual categories makes the categories powerful tools for analysis, enabling lucid thinking, theorising, and discussion in the disciplinary context. The net effect is an ontology that as a system has the (emergent) stability and coherence needed to ensure and sustain the stability and coherence of the discipline of which it is the conceptualisation.

Maintenance of the ontology as a system is thus of ongoing importance. As a discipline grows in conceptual richness, we cannot merely add terms and new definitions but must continuously review the relationships between the employed categories to ensure we maintain the coherence of the systemic ontology.

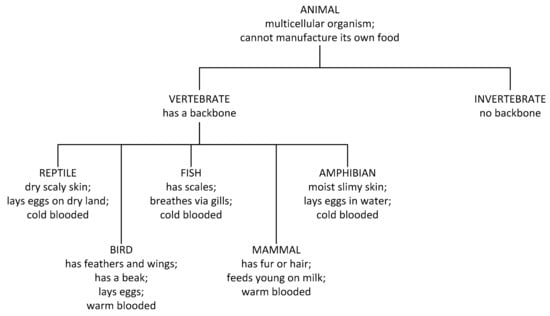

A well-known example of an ontological system that derives its utility from the clarity and distinctiveness of its categories, and from its overall coherence as a system, is the Linnaean system for classifying organisms. A simplified representation of a fragment of it is given in Figure 9. This is an inheritance hierarchy. The relationships are not labelled because they are all of the same kind, in this case the ‘is a’ relationship (reading upwards), e.g., a reptile is a vertebrate, and a vertebrate is an animal.

Figure 9.

A concept map of a part of the Linnaean classification system.

Although the illustration in Figure 9 is very incomplete, it serves to show how a concise concept map can help to identify the concepts that recur across definitions and check for ambiguity and circularity. It also simplifies the communication of a definition, given that inherited aspects do not need to be repeated, so the distinctive defining characteristics are clearly highlighted at each level.
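The economy of inheritance can be sketched in Python by storing, for each category, only its parent and its own distinguishing characteristics, and assembling the full definition by walking up the ‘is a’ links. The fragment and the characteristics listed are a simplified illustration assumed for this example; they are not a transcription of Figure 9, and the function names are hypothetical.

```python
# Simplified, illustrative fragment: each category stores only its parent
# ('is a', read upwards) and its own distinguishing characteristics.
HIERARCHY = {
    "Animal": (None, ["multicellular", "heterotrophic"]),
    "Vertebrate": ("Animal", ["has a backbone"]),
    "Reptile": ("Vertebrate", ["scaly skin"]),
    "Mammal": ("Vertebrate", ["has hair", "produces milk"]),
}

def ancestors(category: str) -> list[str]:
    """Categories reached by following 'is a' upwards, nearest first."""
    chain = []
    parent = HIERARCHY[category][0]
    while parent is not None:
        chain.append(parent)
        parent = HIERARCHY[parent][0]
    return chain

def is_a(category: str, other: str) -> bool:
    """True if `category` is `other` or inherits from it."""
    return category == other or other in ancestors(category)

def full_definition(category: str) -> list[str]:
    """Inherited characteristics (most general first) followed by the category's own."""
    traits = []
    for cat in reversed([category] + ancestors(category)):
        traits.extend(HIERARCHY[cat][1])
    return traits

print(full_definition("Reptile"))
# ['multicellular', 'heterotrophic', 'has a backbone', 'scaly skin']
```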

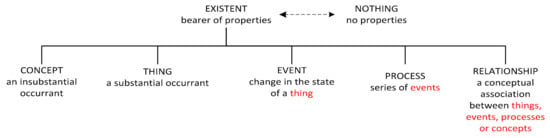

We will now demonstrate the application of these systems principles in a more complicated example, expanding the upper ontology model we worked on in Section 3.4.1. We have a ‘most general’ category, ‘existent’, in hand, so let us now consider what might appear on the next level(s) of our upper ontology and sort them into a systematic and coherent arrangement. First, let us assemble a list of candidate general categories, such as might be denoted by terms like thing, process, event, relationship, and concept. For this demonstration, we will set aside whether that selection is an exhaustive set or not, and we will gloss over some of the deep debates in philosophy about the nature of fundamental quantities such as space and time.

To get started in thinking about these categories, we need to establish some additional concepts, for which we can find examples in the existing philosophical literature and formal ontology frameworks. For now we will list them as assumptions or conventions for this demonstration, as follows:

- We will use the term ‘substance’ to refer to ‘stuff something might be composed of’, so if something is made of stuff we will refer to it as ‘substantial’, and if not, as ‘insubstantial’. The difference between them is that substances are part of the real world and have inherent causal powers;

- We will regard time as a kind of metric and not as a kind of thing or substance, enabling us to specify, in relation to the temporal metric, such notions as ‘before’, ‘during’, ‘interval’, and ‘after’;

- We will regard space as having metrical properties, enabling us to specify, in relation to the spatial metric, such notions as size, shape, ‘next to’, ‘to the left of’, and ‘above’; and also as being substantial. This is consistent with the idea in contemporary physics that ‘empty space’ is not really empty but is a substance comprised of virtual particles, thus constituting what is called the quantum vacuum;

- If all the aspects of an existent exist at the same time, we will call it an ‘occurant’ (e.g., this apple), and if the aspects of an existent are spread out over an interval we will call it a ‘continuant’ (e.g., this football match). We will disambiguate ‘continuant’ from the case where an occurant persists in time by describing such an occurant as being also an ‘endurant’. An occurant that does not endure is an instantaneous one;

- We will take it for granted that for a substance to exist it must be located in time and space; and

- We will take ‘state’ to stand for the values of an existent’s properties at a given moment.

Now we can construct initial minimal definitions for our basic categories, inter alia using the general and systems principles already discussed. First, we might assign attributes to our categories as follows:

- Thing: spatial & temporal properties, substantial, occurant (things could be either enduring or instantaneous);

- Process: spatial & temporal properties, substantial, continuant;

- Event: spatial & temporal properties, substantial, instantaneous;

- Relationship: spatial and/or temporal properties, insubstantial, occurant or continuant; and

- Concept: no spatial properties, logical properties, insubstantial, occurant.

With these characterisations in mind, we can now propose definitions that encapsulate the relationships between the categories, for example:

- Thing: a substantial occurant;

- Concept: an insubstantial occurant;

- Event: a change in the state of an existent;

- Process: a series of events; and

- Relationship: a conceptual association between things, processes, events, or concepts.

Note that this list has been arranged so that we define the categories in a sequential way, so that no definition employs categories that have not yet been defined. This not only helps us to check for collective coherence but it also entails that some categories are more fundamental than others. We can now illustrate this in a concept map as shown in Figure 10.
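The sequential ordering can be checked mechanically. The Python sketch below encodes the five definitions in the order listed, each paired with the basic categories it mentions, and reports any definition that relies on a category not yet defined (including itself). The encoding treats notions such as ‘substantial’, ‘state’, and ‘existent’ as already established primitives, which is an assumption of this sketch, and the names used are hypothetical.

```python
# The five definitions in the order listed, each with the basic categories it
# mentions. Pre-established notions ('substantial', 'state', 'existent') are
# treated as primitives and omitted.
ORDERED_DEFINITIONS = [
    ("Thing", set()),
    ("Concept", set()),
    ("Event", set()),
    ("Process", {"Event"}),
    ("Relationship", {"Thing", "Process", "Event", "Concept"}),
]

def undefined_references(definitions):
    """List (category, missing) pairs where a definition uses a category
    that has not been defined earlier in the sequence (self-use included)."""
    defined = set()
    problems = []
    for category, uses in definitions:
        missing = uses - defined
        if missing:
            problems.append((category, sorted(missing)))
        defined.add(category)
    return problems

print(undefined_references(ORDERED_DEFINITIONS))  # []
```

Swapping the order of ‘Event’ and ‘Process’ would make the check report that ‘Process’ relies on the not-yet-defined ‘Event’.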

Figure 10.

A partial concept map of upper kinds of existents.

In Figure 10 we have an inheritance hierarchy, except for the antonym relationship indicated by the dashed arrow. The categories have been arranged so that the more fundamental ones are above or to the left of more derivative ones. This systematic buildup of meanings is indicated by the colored terms.13 An implication of these systematic relationships is that, in a domain in which these definitions are used, the foundational existents are things and concepts, not processes or events, and this will be reflected in research planning and theory building. This prioritisation, however, reflects only one possible metaphysical stance, and there are other perspectives in which processes or events, or even other categories such as percepts, measurements, or information, are considered fundamental. This debate is inconsequential for the purposes of the present demonstration of how general systems principles can, in principle, aid in the development of an upper ontology.

Having demonstrated this method, it is now possible to apply it to a more extensive effort involving further foundational categories, such as space, time, law, truth, cause, real, abstract, imaginary, naturalistic, etc. These terms are ubiquitous in general texts in Ontology and Metaphysics, which can be consulted for identifying both category instances and the controversies over their definitions. Useful texts include the two volumes on ontology by Mario Bunge [54,55] and recent works on the metaphysics of scientific realism, e.g., [56,57]. Other useful references include [26,58,59,60,61].

That said, the method discussed here is not specifically aimed at developing upper ontologies but may be useful in distilling any specialised vocabulary into an ontology.

3.4.3. Boundaries, Contexts and Levels

The notion of ‘boundary’ is central to both systems science and systems thinking, and because ontologies are (conceptual) systems, we can gain helpful insights for ontology development by considering an ontology’s boundary from a systems perspective. As we will show, proper attention to the ontology’s boundary can facilitate leveraging existing work in a thoughtful and managed way, thereby increasing a project’s power while constraining its scope and improving its efficiency, and consequently producing an ontology with greater potential impact.

The ‘boundary’ concept is a nuanced one in systemology. Key defining characteristics of a ‘boundary’ have to do with where the boundary is drawn, which can be objective or subjective, and how permeable it is to flows of influence. We will discuss these aspects more deeply first, pointing out how each aspect relates to an ontology’s boundary, before bringing the ideas together to apply to both the system of interest and the conceptual system that is the ontology, which may well have different boundaries.

First, consider systems with inherent boundaries for which there can be objective criteria. Such boundaries can be recognised in two ways, each drawing on a mechanism by which they are established. Systems are called ‘wholes’ because they exhibit properties the parts do not have by themselves [11] (p. 17), and the system boundary is defined by the limits of the region over which these emergent properties are present [11] (p. 36), [62]. In an ontology system, a key emergent property is its coherent meaning, as discussed before. Another recognisable sign derives from the fact that a system’s parts interact or interrelate in such a manner as to establish and maintain a boundary that demarcates the identifying characteristics of the system, via which it can be distinguished from its environment or context [62,63]. These interactions and relationships between parts produce a region of high organisation compared to their immediate environment, so that we could characterise systems as persistent regions of low entropy in a generally dissipative environment [63] (p. 3). This enables us to recognise a system boundary by the gradient between regions of low entropy and relatively higher entropy. In an ontology, this might manifest in the connectedness and mutual coherence of concepts.
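As a rough illustration of how connectedness might signal a boundary in an ontology, the Python sketch below computes, for a candidate set of terms, the fraction of links touching that set which stay entirely inside it. This ratio is an assumed proxy chosen for illustration, not a measure proposed in the cited works, and the function name, terms, and links are hypothetical.

```python
def internal_link_ratio(links, members):
    """Fraction of links touching `members` that stay entirely inside it.

    `links` is an iterable of (term, term) pairs, treated as undirected.
    A value near 1 suggests a tightly connected cluster with few outward
    ties; it is only a rough, assumed proxy for a boundary 'gradient'.
    """
    members = set(members)
    internal = crossing = 0
    for a, b in links:
        inside = (a in members) + (b in members)  # 0, 1 or 2 endpoints inside
        if inside == 2:
            internal += 1
        elif inside == 1:
            crossing += 1
    touching = internal + crossing
    return internal / touching if touching else 0.0

links = [
    ("system", "boundary"), ("system", "environment"),
    ("boundary", "environment"), ("system", "emergence"),
    ("environment", "ecology"),
]
print(internal_link_ratio(links, {"system", "boundary", "environment", "emergence"}))  # 0.8
```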

Where the boundary of a system is not clear, researchers can determine it intuitively to encompass what they judge to be the limits of the system of interest. This is a common phenomenon in systems practice, where it is often difficult to identify all the stakeholders in a systemic intervention. It is also common in frontier research, where it may be radically unclear what factors contribute to the phenomenon under investigation. In this case, a subjective assumption followed by monitoring and adjustment is appropriate.

Turning secondly to the question of permeability, the boundaries of all known systems, apart from the universe, are ‘open’, that is to say, it is possible for matter, energy, and/or information to flow across the boundary. The internal systemic interactions modulate these transfers, so that the system can change without undermining its identity. The system thus changes in a way that pursues some kind of balance between the state and properties of the system and the state and properties of its environment. As a consequence, both the position of the boundary and the emergent properties of the system can change over time. Open boundaries thus create the possibility for systems to evolve or adapt (or be adapted via interactions coming from the environment). For ontology development, a key point is that the boundary is managed in a controlled way from within, but it is not rigid.

Lastly, it is important to note that although the environment is distinct from the system, the environment can be systemic in its own right or contain other autonomously existing systems. To prevent confusion given these possibilities, the system that is the focus of study, design, or intervention is often called the ‘system of interest’. When the system of interest is part of a larger system, it is called a ‘holon’ [64], and some holons cannot exist autonomously, for example an organ in a body. In such a case, an ontology for a system of interest would be of limited use unless it was integrated with the broader ontology in a consistent way. If the environment contains autonomous systems that can interact with the system of interest to produce a higher-order system, then that collection of systems is called a ‘system of systems’, for example a military defense force composed of operational units that work in a centrally coordinated way but can in principle operate independently. Here, the ontology development must take account of the actual or potential interdependencies. For example, the ontology of genomics is a holon within the ontology of biology, and if a multi-disciplinary team works on the same project, then their specialist ontologies form a system of systems.

We will turn now to how these ideas can collectively impact ontology development.

Any specific ontology supports a discipline that is concerned with some subject matter, and therefore the ontology is restricted to the scope of that interest. This implies a ‘boundary’ that demarcates between categories that are of direct interest to the specific discipline and categories that are indirectly relevant or only relevant to other disciplines. If such a spectrum of relevance can be identified, it would allow us to manage the scope of the ontology representing our area of interest, and identify other ontologies we can employ or influence without having to take responsibility for their development. This increases both the effectiveness and the efficiency of our ontology development.

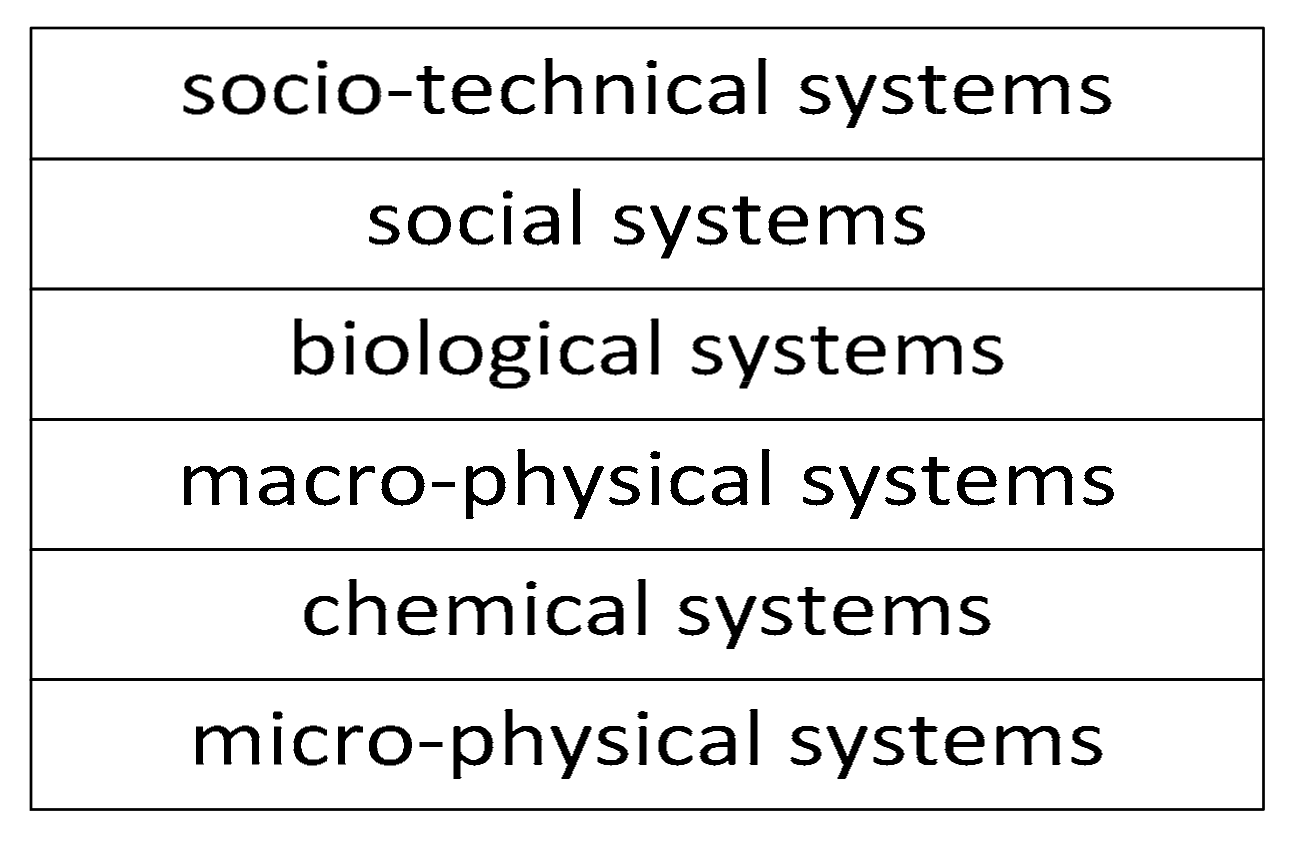

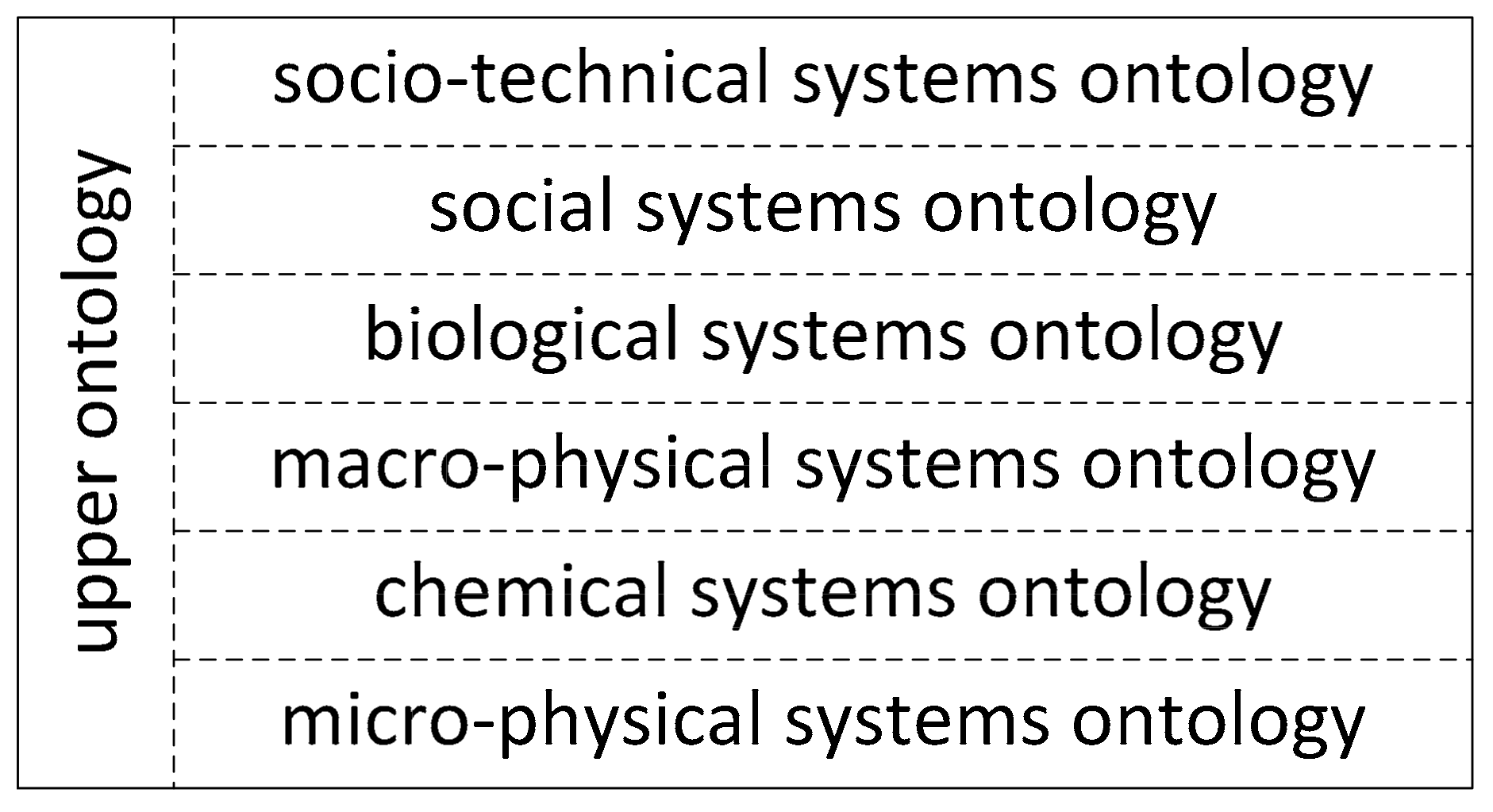

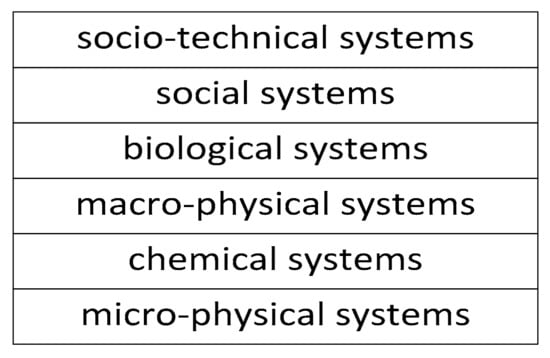

There are different ways to identify the nearest or most relevant neighbours. For example, different disciplines study different kinds of systems, and these can be organised into levels according to their complexity, resulting in a subsumption hierarchy, as shown in a simplified way in Figure 11. At every level, systems can have as parts systems from the ‘lower’ levels, and any system can have in its environment any of the kinds of systems in the hierarchy.
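The part-whole constraint of such a levels hierarchy can be expressed as a simple check, sketched below in Python. The four levels listed are a simplified ordering assumed for this example rather than a copy of Figure 11, the names are hypothetical, and ‘lower’ is read strictly, so a looser reading would replace `<` with `<=`. Environments are deliberately left unconstrained, since any kind of system may appear there.

```python
# Simplified, assumed ordering of levels (least to most complex);
# not a transcription of Figure 11.
LEVELS = ["physical", "chemical", "biological", "social"]
RANK = {name: i for i, name in enumerate(LEVELS)}

def valid_composition(whole_level: str, part_levels: list[str]) -> bool:
    """True if every part comes from a level strictly below the whole's level.

    No check is made on a system's environment, which may contain systems
    of any level.
    """
    return all(RANK[part] < RANK[whole_level] for part in part_levels)

print(valid_composition("biological", ["chemical", "physical"]))  # True
```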

Figure 11.

A levels hierarchy of systems ordered by complexity.

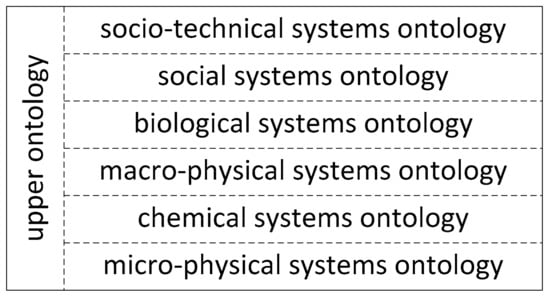

Each level can be associated with a disciplinary ontology, e.g., physics, chemistry, geology, biology, sociology (the list is not comprehensive here, but just meant to be illustrative of the principle at stake). What we can now see is that every domain ontology has boundaries that link it both to an environment composed of other domain ontologies and to the upper ontology that is general across those domains. We can illustrate this as shown in Figure 12, which is of course not a subsumption hierarchy. The boundaries between the ontologies are indicated with a dashed line to remind us that these are open boundaries that will in fact be located more flexibly than, and differently from, the disciplinary ones.

Figure 12.

A systemic hierarchy of ontologies.

The implication is that to build an adequate ontology, the developer must not only take into account the upper ontology as providing relevant categories that do not have to be independently developed, but also the categories in adjoining domains, especially those of its immediate neighbours. What we therefore have is a kind of ontology ecosystem, in which the development of any one ontology has (ideally) to proceed in a way that preserves a coherent balance between its own categories and the categories in the ontologies reflecting its environment. If this is not done, the result is silofication of the disciplinary domain. This can inhibit progress (through lack of awareness of useful categories of analysis existing in other domains) or waste resources (through duplication of effort to create categories already existing elsewhere or pursuing blind alleys already excluded from being potentially fruitful via insights captured in alternative domain categories).
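A first, mechanical step towards avoiding duplicated effort is to check which terms in a domain ontology already appear in the upper ontology or in neighbouring ontologies. The Python sketch below does this by matching term labels after simple normalisation; the function name and the sample ontologies are hypothetical, and matching labels alone cannot establish that the meanings agree, so any hits are only candidates for review.

```python
def reuse_candidates(domain_terms, neighbour_ontologies):
    """Map each domain term to the neighbouring ontologies that already list it.

    Labels are compared after lower-casing and collapsing whitespace, which
    is an assumption; a real alignment would also compare the definitions.
    """
    def norm(term):
        return " ".join(term.lower().split())

    found = {}
    for term in domain_terms:
        owners = sorted(
            name
            for name, terms in neighbour_ontologies.items()
            if norm(term) in {norm(t) for t in terms}
        )
        if owners:
            found[term] = owners
    return found

neighbours = {
    "upper": ["existent", "process", "event"],
    "biology": ["homeostasis", "feedback"],
    "engineering": ["Feedback", "control loop"],
}
print(reuse_candidates(["Feedback", "Emergence", "Holon"], neighbours))
# {'Feedback': ['biology', 'engineering']}
```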

Recognising and working with boundaries in this way not only makes ontology development more effective, efficient, and impactful, but such open boundaries can also enhance the abilities of researchers who cultivate awareness of categories in related domains. An accessible example of the value of such ‘multidisciplinary’ competence is the case of William Harvey, who was a physician but also had a good grasp of the categories of the modern scientific method then emerging from the work of people such as his contemporary Galileo, and also of the categories of mechanics. This allowed him to make systematic observations in his research and to propose a mechanical analogue for the nature of the heart, by characterising it as a kind of pump [65] (pp. 49–54). Such multi-disciplinarity and inter-disciplinarity are currently driving a rapid rise in technological innovation, and are being heavily promoted by funding bodies under the rubric of ‘convergence’ [66]. This is an important development, but much more needs to be done to break down silos between ontology development projects, because, as has been noted, silos often persist due to political or economic considerations rather than because scholars or practitioners are overly protective of their niche in the academic ecosystem, or unappreciative of the systemicity of academic knowledge [67]. While funding bodies and commercial interests are driving convergence in technological projects, ontology projects are not yet showing significant convergence [8,68], especially in the area of upper ontology development (as shown by the plethora of ontology implementations listed in Section 2.6). Lack of ontology convergence can raise risks for complex projects through lack of clear communication within a multi-disciplinary team.

An important consequence of the openness of the boundaries of ontologies (and their corresponding disciplines) is that the boundaries change over time as information flows across them, changing our understanding of where the boundaries should usefully lie. In this way, boundaries could be changed to include categories newly discovered or formerly viewed as not relevant, or to drop categories that have become superfluous or been taken over by other ontologies. In this sense, ontologies evolve over time, and the work of ontology development is not only never complete, but ontologists must also keep tracking developments in adjacent ontologies so they can effectively and efficiently manage where the boundaries of their ontologies are.

3.4.4. Isomorphic Systems Patterns

A key concept in systems science is the idea of ‘isomorphies’. They represent similar patterns of structure or behaviour that recur across differences in scale or composition, and so have significance across multiple kinds of systems. For example, spiral patterns occur in sea shells, sunflowers, peacock tails, tornados, and galaxies. Such patterns represent systemic solutions to similar optimisation problems in varied contexts [12] (pp. 109–110), and therefore can help designers to find optimal or elegant solutions to design challenges [69]. Many systemic isomorphies have been identified so far, and useful overviews and discussions of them can be found in the works of Len Troncale [70,71,72].

These systemic isomorphisms suggest the existence of universal principles behind the way in which enduring complex systems are ordered. This is essentially the argument for the existence of a general theory of systems [12] (pp. 108–110) and for the general systems community’s belief that not only is nature a unity despite its phenomenological diversity, but also our knowledge of reality can be unified despite the many apparently intractable gaps in our current body of knowledge [12,13]. However, the most significant point about isomorphisms for present purposes is that they are routes to achieving optimality and robustness in system designs. Given that an ontology is a system, we can now look for isomorphisms that might help us design robust or elegant ontologies. Most of the isomorphisms noted to date have been discovered by studying concrete systems, but some have arisen in the study of conceptual systems and some occur in both types. For example, Zipf’s Law patterns occur in both physical and conceptual systems [73].
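Zipf’s Law states, roughly, that the frequency of the r-th most common item is proportional to 1/r^s, with s close to 1. As an illustration of how one might test for this pattern in a conceptual system, such as term usage in a body of systems literature, the Python sketch below estimates s by a least-squares fit of log frequency against log rank. The function name is hypothetical, and a straight-line fit on a log-log plot is a simple heuristic rather than a rigorous statistical test.

```python
import math
from collections import Counter

def zipf_exponent(tokens):
    """Estimate s in f(r) ~ C / r**s by least squares on log f versus log r.

    Needs at least two distinct tokens.
    """
    freqs = sorted(Counter(tokens).values(), reverse=True)
    xs = [math.log(rank) for rank in range(1, len(freqs) + 1)]
    ys = [math.log(f) for f in freqs]
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    slope = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys)) / sum(
        (x - mean_x) ** 2 for x in xs
    )
    return -slope  # Zipf-like data gives a negative slope, so s is positive
```

Applied to a token list in which the r-th most common term occurs in proportion to 1/r, the estimate comes out very close to 1.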

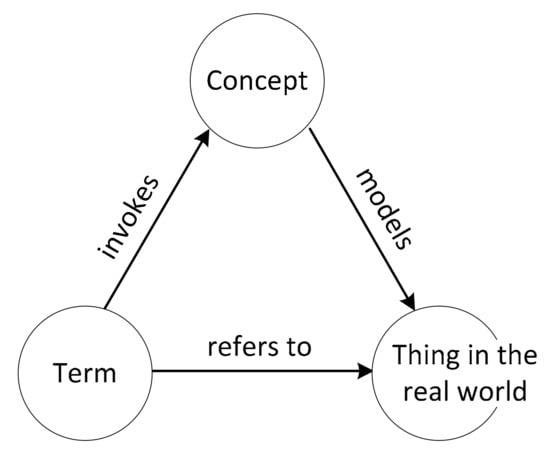

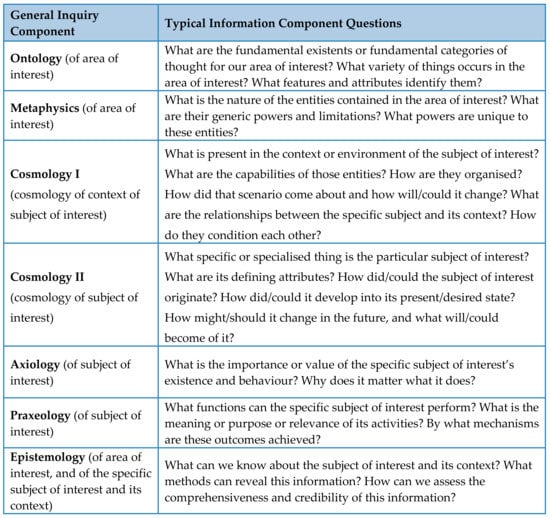

Ontologies capture foundational information about a field of interest, and recent work in systemology has revealed the existence of a systems isomorphism related to kinds of knowledge. A paper discussing this is included in this special issue of Systems [14]. The isomorphism is captured in a systematic and systemic “General Inquiry Framework” that provides categories for organising knowledge in multiple specialised contexts, such as when designing a product, solving a problem, investigating a phenomenon, or documenting a worldview. The framework was generalised by abstracting from a more specialised “Worldview Inquiry Framework” used for exploring, documenting, and comparing worldviews.

In the next section, we will show how this framework can be adapted to provide a starting point for an Ontology of Systemology. For a start, consider the top-level categories of the General Inquiry Framework given in Figure 13.

Figure 13.

The Top Categories of the “General Inquiry Framework” [14].

Note that the term ‘Ontology’, here denoting the first category of knowledge, refers to Ontology in the philosophical sense, which deals with foundational categories, and is distinct from the idea of an ontology as the conceptualisation of a domain. The knowledge isomorphy suggests that any systematic pool of knowledge can be organised according to this structure, including, of course, the information in an ontology. An example is provided in [14], whose Figure 1 presents a concept map for worldview categories. We would suggest that the General Inquiry Framework, treated as a systemic isomorphy, provides a useful tool for ontology development, particularly for developing ontologies for areas of study where there is uncertainty about what categories are needed, for example in nascent domains such as systemology, or frontier domains such as consciousness studies. The list of possible knowledge categories, as illustrated in more detail in [14], can help to identify gaps in the knowledge base or opportunities for investigation in research. In the next section, we will illustrate the application of this isomorphy for the case of Systemology.

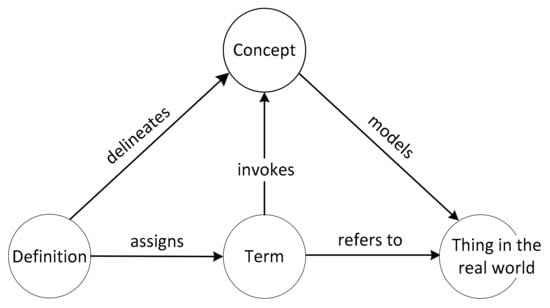

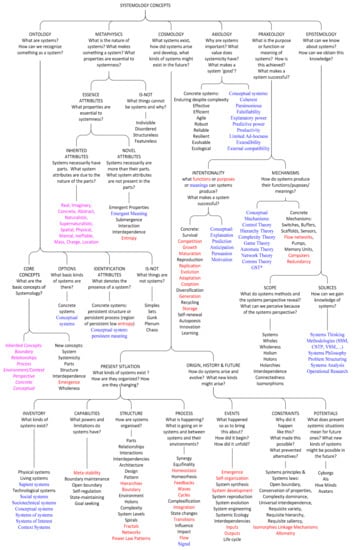

4. A Concept Map towards an Ontology of Systemology

We have in this paper argued for the need for an ontology of systemology, discussed the value of concept maps especially in the early stages of ontology development, and suggested how systems thinking can help advance the practice of ontology development. The overarching purpose of the present paper is to advocate for, and help start work towards, the development of a standardised ontology of Systemology. To show how the principles of ontology development and systems thinking as discussed in the present paper can facilitate this development, we now present in Figure 14 a first draft of a concept map representing core terms from systemology, arranged according to the categories of the General Inquiry Framework. This diagram is meant to be provisional and illustrative only, as a basis for discussion and further development.

Figure 14.

The Top Categories of the “General Inquiry Framework”.

It is important to note that the given concepts are not intended as answers to the questions posed under each knowledge category. The questions are there, in this context, to stimulate reflection on what concepts might be required to answer such questions. The listed concepts are then candidate concepts to be defined in the ontology for systemology, in order to facilitate proposing or developing answers to be captured in the knowledge base of systemology. The concepts listed are by no means a complete set. We have tried to show relevant concepts in each knowledge area, to show how to employ this framework, but a more detailed development is needed based on collections of definitions such as those mentioned in Section 1.

In order to facilitate discussion relative to upper ontologies, contextual domain ontologies, and other works on the categories of systemology, we have changed some of the colors and fonts in the presented concept map to illustrate some of these linkages, as follows:

- Blue serif font indicates concepts relating to conceptual systems rather than physical ones;

- Red indicates concepts occurring on the isomorphy lists of Len Troncale, to show how this presented structure can assist in their organisation; and

- Pink indicates concepts inherited from upper ontologies.

Note that ‘Ontology’ only appears once; there is no need to distinguish between Ontology I and Ontology II because the same concepts are needed to describe the cosmology of the system of interest and the context.

5. Conclusions

Systemology is a transdiscipline, which means that its principles and methods could potentially be useful across a wide variety of disciplines and problem contexts. However, as we have argued, these potential benefits are undermined by the lack of a consistent disciplinary terminology, which makes it hard to convey systems concepts to other disciplines. Indeed, this lack can even hinder communication between different systems specialisations. In this paper we have presented a conceptual framework for selecting and organising the terminology of systemology, and we call for a community effort to use it to develop an ‘Ontology of Systemology’. We also offer the approach by which we arrived at this framework as an example of how the systems perspective can enrich the methodologies of other disciplines.

Author Contributions

The project was conceived and managed by David Rousseau. David Rousseau, Julie Billingham and Javier Calvo-Amodio contributed equally to the research and the writing of the paper. David Rousseau prepared the diagrams.

Funding

Financial and material support for the project was provided by the Centre for Systems Philosophy, INCOSE and the University of Hull’s Centre for Systems Studies.

Acknowledgments

We would like to thank the two anonymous reviewers of this paper for their helpful comments, which have greatly aided us in improving the structure and clarity of this paper.

Conflicts of Interest

The authors declare no conflict of interest.

References and Notes

- François, C. (Ed.) International Encyclopedia of Systems and Cybernetics; Saur Verlag: Munich, Germany, 2004.

- Heylighen, F. Self-Organization of Complex, Intelligent Systems: An Action Ontology for Transdisciplinary Integration. Integral Rev. 2012. Available online: http://pespmc1.vub.ac.be/papers/ECCO-paradigm.pdf (accessed on 22 November 2013).

- Kramer, N.J.T.A.; de Smit, J. Systems Thinking: Concepts and Notions; Martinus Nijhoff: Leiden, The Netherlands, 1977.

- Schindel, W.D. Abbreviated Systematica™ 4.0 Glossary—Ordered by Concept; ICTT System Sciences: Terre Haute, IN, USA, 2013.

- Schoderbek, P.P.; Schoderbek, C.G.; Kefalas, A.G. Management Systems: Conceptual Considerations, revised ed.; IRWIN: Boston, MA, USA, 1990.

- Wimsatt, W. Re-Engineering Philosophy for Limited Beings: Piecewise Approximations to Reality; Harvard University Press: Cambridge, MA, USA, 2007.

- Madni, A.M.; Boehm, B.; Ghanem, R.G.; Erwin, D.; Wheaton, M.J. (Eds.) Disciplinary Convergence in Systems Engineering Research; Springer: New York, NY, USA, 2017.

- Sillitto, H.; Martin, J.; McKinney, D.; Dori, D.; Eileen Arnold, R.G.; Godfrey, P.; Krob, D.; Jackson, S. What do we mean by “system”?—System beliefs and worldviews in the INCOSE community. In Proceedings of the INCOSE International Symposium, Washington, DC, USA, 7–12 July 2018; p. 17.

- Dyson, G.B. Darwin among the Machines; Addison-Wesley: Reading, MA, USA, 1997.

- Moshirpour, M.; Mani, N.; Eberlein, A.; Far, B. Model Based Approach to Detect Emergent Behavior in Multi-Agent Systems. In Proceedings of the 2013 International Conference on Autonomous Agents and Multi-Agent Systems, Saint Paul, MN, USA, 6–10 May 2013; International Foundation for Autonomous Agents and Multiagent Systems: Richland, SC, USA; pp. 1285–1286.

- Bunge, M. Emergence and Convergence: Qualitative Novelty and the Unity of Knowledge; University of Toronto Press: Toronto, ON, Canada, 2003.

- Rousseau, D.; Wilby, J.M.; Billingham, J.; Blachfellner, S. General Systemology—Transdisciplinarity for Discovery, Insight, and Innovation; Springer: Kyoto, Japan, 2018.

- Von Bertalanffy, L. General System Theory: Foundations, Development, Applications, revised ed.; Braziller: New York, NY, USA, 1976.

- Rousseau, D.; Billingham, J. A Systemic Framework for Exploring Worldviews and its Generalization as a Multi-Purpose Inquiry Framework. Systems 2018, 6, 27.

- Gruber, T.R. A translation approach to portable ontology specifications. Knowl. Acquis. 1993, 5, 199–220.

- Jakus, G.; Milutinović, V.; Omerović, S.; Tomažič, S. Concepts, Ontologies, and Knowledge Representation; Springer: New York, NY, USA, 2013.

- Heil, J. From an Ontological Point of View; Oxford University Press: Oxford, UK, 2003.

- Varzi, A.C. On Doing Ontology without Metaphysics. Philos. Perspect. 2011, 25, 407–423.

- Kudashev, I.; Kudasheva, I. Semiotic Triangle Revisited for the Purposes of Ontology-Based Terminology Management; Institut Porphyre: Annecy, France, 2010.

- Ogden, C.K.; Richards, I.A. The Meaning of Meaning: A Study of the Influence of Language upon Thought and of the Science of Symbolism; Kegan Paul: London, UK, 1923.

- Hanson, N.R. Patterns of Discovery: An Inquiry into the Conceptual Foundations of Science; Cambridge University Press: London, UK, 1958.

- Fries, C.C. Linguistics and Reading; Holt Rinehart and Winston: New York, NY, USA, 1963.

- Kuhn, T. The Structure of Scientific Revolutions, 3rd ed.; Original 1962; University of Chicago Press: Chicago, IL, USA, 1996.

- Gupta, A. Definitions. In The Stanford Encyclopedia of Philosophy, Summer 2015; Zalta, E.N., Ed.; Metaphysics Research Lab, Stanford University: Stanford, CA, USA, 2015.

- Hoehndorf, R. What Is an Upper Level Ontology? 2010. Available online: http://ontogenesis.knowledgeblog.org/740 (accessed on 21 April 2015).

- Schrenk, M. Metaphysics of Science: A Systematic and Historical Introduction; Routledge: New York, NY, USA, 2016. [Google Scholar]

- Ruttenberg, A. Basic Formal Ontology (BFO)|Home. Available online: http://basic-formal-ontology.org/ (accessed on 30 June 2018).

- Gangemi, A.; Guarino, N.; Masolo, C. Laboratory for Applied Ontology—DOLCE. Available online: http://www.loa.istc.cnr.it/old/DOLCE.html (accessed on 20 June 2018).

- Gangemi, A.; Guarino, N.; Masolo, C. DOLCE: A Descriptive Ontology for Linguistic and Cognitive Engineering Institute of Cognitive Sciences and Technologies. Available online: /en/content/dolce-descriptive-ontology-linguistic-and-cognitive-engineering (accessed on 20 June 2018).

- Hoop, K.-U. General Formal Ontology (GFO). Available online: http://www.onto-med.de/ontologies/gfo/ (accessed on 20 June 2017).

- Pease, A. The Suggested Upper Merged Ontology (SUMO)—Ontology Portal. Available online: http://www.adampease.org/OP/ (accessed on 30 October 2017).

- Sowa, J.F. Ontology. 2010. Available online: http://www.jfsowa.com/ontology/ (accessed on 30 November 2017).

- Mizoguchi, R. YAMATO: Yet Another More Advanced Top-Level Ontology. 2016. Available online: http://download.hozo.jp/onto_library/upperOnto.htm (accessed on 1 May 2018).

- Guizzardi, G. Ontology Project. 2015. Available online: http://ontology.com.br/ (accessed on 1 July 2018).

- Semantic Knowledge Technologies (SEKT) Project. Proton—LightWeight Upper Level Ontology; Ontotext: Sofia, Bulgaria, 2004; Available online: https://ontotext.com/products/proton/ (accessed on 5 November 2017).

- Wikipedia. Cyc; Wikipedia: San Francisco, CA, USA, 2018.

- Mascardi, V.; Cordì, V.; Rosso, P. A Comparison of Upper Ontologies. In Proceedings of the WOA 2007: Dagli Oggetti Agli Agenti, Genova, Italy, 24–25 September 2007; Volume 2007, pp. 55–64.

- Ring, J. Final Report—Unified Ontology of Science and Systems Project—System Science Working Group, International Council on Systems Engineering 23 June 2013. 2013. Available online: https://docs.google.com/viewer?a=v&pid=sites&srcid=ZGVmYXVsdGRvbWFpbnxzeXNzY2l3Z3xneDo1YmMxMTgyZmFjNjJkYmI1 (accessed on 10 July 2018).

- Ring, J. System Science Working Group—Unified Ontology Project—Progress Report #2′. 2011. Available online: s.google.com/viewer?a=v&pid=sites&srcid=ZGVmYXVsdGRvbWFpbnxzeXNzY2l3Z3xneDoyMmRlYjg1ZmQ1ZTdmNTZh (accessed on 10 July 2018).

- Martin, R. Leveraging System Science When Doing System Engineering. 2013. Available online: https://www.google.co.uk/url?sa=i&source=images&cd=&cad=rja&uact=8&ved=2ahUKEwj3wvS00KHcAhXJvRQKHehFDhUQjhx6BAgBEAI&url=https%3A%2F%2Fwww.incose.org%2Fdocs%2Fdefault-source%2Fenchantment%2F130710_jamesmartin_leveragingsystemscience.pdf%3Fsfvrsn%3D2%26sfvrsn%3D2&psig=AOvVaw3Srz2k9d5jBnsjS3AiiqPb&ust=1531762029620572 (accessed on 10 July 2018).

- Sillitto, H. What Is a System?—INCOSE Webinar 111. Presented on the 17th of April 2018. Available online: https://connect.incose.org/Library/Webinars/Documents/INCOSE Webinar 111 What is a System.mp4 (accessed on 1 May 2018).

- Novak, J.D.; Cañas, A.J. The Theory Underlying Concept Maps and How to Construct and Use Them; Technical Report IHMC CmapTools 2006-01 Rev 01-2008; Institute for Human and Machine Cognition: Pensacola, FL, USA, 2008; Available online: http://cmap.ihmc.us/docs/pdf/TheoryUnderlyingConceptMaps.pdf (accessed on 1 June 2013).

- Rapoport, A. General System Theory. In The International Encyclopedia of Social Sciences; Sills, D.L., Ed.; Macmillan & The Free Press: New York, NY, USA, 1968; Volume 15, pp. 452–458.

- Sillitto, H.; Martin, J.; Dori, D.; Griego, R.M.; Jackson, S.; Krob, D.; Godfrey, P.; Arnold, E.; McKinney, D. SystemDef13MAy18.docx. In Working Paper of the INCOSE Fellows Project on the Definition of “System”; INCOSE: San Diego, CA, USA, 2018.

- SEBoK Contributors. Systems Thinking. 2015. Available online: http://www.sebokwiki.org/w/index.php?title=Systems_Thinking&oldid=50563 (accessed on 17 July 2018).

- Monat, J.P.; Gannon, T.F. What is Systems Thinking? A Review of Selected Literature Plus Recommendations. Am. J. Syst. Sci. 2015, 4, 11–26.

- Cabrera, D.A. Systems Thinking; Cornell University: Ithaca, NY, USA, 2006.

- Cabrera, D.; Colosi, L.; Lobdell, C. Systems thinking. Eval. Progr. Plan. 2008, 31, 299–310.

- Cabrera, D.; Cabrera, L. Systems Thinking Made Simple: New Hope for Solving Wicked Problems; Odyssean Press: Ithaca, NY, USA, 2017.

- Levins, R. Dialectics and Systems Theory. In Dialectics for the New Century; Palgrave Macmillan: London, UK, 2008; pp. 26–49.

- Maybee, J.E. Hegel’s Dialectics. In The Stanford Encyclopedia of Philosophy; Winter 2016; Zalta, E.N., Ed.; Metaphysics Research Lab, Stanford University: Stanford, CA, USA, 2016.

- Bunge, M. Matter and Mind: A Philosophical Inquiry; Springer: New York, NY, USA, 2010.

- Von Bertalanffy, L. General System Theory: Foundations, Development, Applications; Braziller: New York, NY, USA, 1969.

- Bunge, M. Ontology I: The Furniture of the World; Reidel: Dordrecht, The Netherlands, 1977.

- Bunge, M. Ontology II: A World of Systems; Reidel: Dordrecht, The Netherlands, 1979.

- Chakravartty, A. A Metaphysics for Scientific Realism: Knowing the Unobservable; Cambridge University Press: Cambridge, UK, 2010.

- Ellis, B. The Metaphysics of Scientific Realism; Routledge: Durham, UK, 2009.

- Koons, R.C.; Pickavance, T. Metaphysics: The Fundamentals, 1st ed.; Wiley-Blackwell: Hoboken, NJ, USA, 2015.

- Lowe, E.J. The Possibility of Metaphysics: Substance, Identity, and Time; Clarendon Press: Oxford, UK, 2004.

- Van Inwagen, P.; Zimmerman, D.W. Metaphysics: The Big Questions, 2nd ed.; Blackwell: Oxford, UK, 2008.

- Whitehead, A.N. Process and Reality, an Essay in Cosmology; Gifford Lectures Delivered in the University of Edinburgh During the Session 1927–28; Cambridge University Press: Cambridge, UK, 1929.

- Davis, G.B. (Ed.) Blackwell Encyclopedic Dictionary of Management Information Systems; Wiley-Blackwell: Malden, MA, USA, 1997.

- Sillitto, H. What is systems science?—Some Thoughts. Draft manuscript towards. In Proceedings of the IFSR’s 2018 Linz Conversation, Linz, Austria, 8–13 April 2018.

- Koestler, A. The Ghost in the Machine; Henry Regnery Co.: Chicago, IL, USA, 1967.

- Butterfield, H. The Origins of Modern Science 1300–1800, 1973 ed.; Bell & Sons: London, UK, 1951.

- MIT Washington Office. The Convergence Revolution. Available online: http://www.convergencerevolution.net/ (accessed on 4 February 2018).

- Parkhurst, J.O.; Hunsmann, M. Breaking out of silos—The need for critical paradigm reflection in HIV prevention. Rev. Afr. Political Econ. 2015, 42, 477–487.

- Sillitto, H.; Martin, J.; Griego, R.; McKinney, D.; Arnold, E.; Godfrey, P.; Dori, D.; Krob, D. A fresh look at Systems Engineering—what is it, how should it work? In Proceedings of the INCOSE International Symposium, Washington, DC, USA, 7–12 July 2018; p. 16.

- Zyga, L. Scientists Find Clues to the Formation of Fibonacci Spirals in Nature. 2007. Available online: https://phys.org/news/2007-05-scientists-clues-formation-fibonacci-spirals.html (accessed on 17 February 2017).