1. Introduction

The integration of intelligent autonomous systems in mining operations represents a fundamental paradigm shift toward Industry 4.0 mining ecosystems, where interconnected systems, advanced automation, and intelligent decision-making frameworks converge to create comprehensive autonomous mining operations [1,2]. These intelligent systems promise enhanced productivity, energy efficiency, and operational predictability while reducing human exposure to hazardous environments through sophisticated sensor networks, real-time data processing, and automated decision-making capabilities. However, the successful deployment of autonomous mining vehicle systems critically depends on the seamless integration of multiple subsystems, including perception modules, control systems, and decision-making algorithms, all of which must operate reliably under challenging environmental conditions, making robust intelligent perception systems a key enabling technology for autonomous mining operations [3].

Recent advances in intelligent systems and computer vision have significantly propelled the development of integrated perception modules for autonomous vehicles, enabling these systems to perceive their environment and make informed real-time decisions within complex mining ecosystems, which is crucial for safe autonomous navigation and system reliability [4]. Within this intelligent systems framework, semantic segmentation of camera-acquired images has emerged as a critical component for comprehensive scene understanding, facilitating not only obstacle identification and precise delineation of traversable routes but also supporting higher-level decision-making processes in autonomous mining operations.

Typically, semantic segmentation models integrated into autonomous vehicle perception systems are trained using images captured in controlled environments and evaluated in similar operational domains [5,6,7]. While this approach is effective under stable conditions, it can limit the intelligent system’s generalization capacity when facing unseen scenarios or variable environmental conditions that are common in real-world autonomous mining operations. In mining industry contexts, factors such as adverse weather conditions, illumination variations, and dirt accumulation on Red Green Blue (RGB) camera lenses can generate operational conditions that differ significantly from training domain data, leading to decreased performance of deployed autonomous systems [8].

Since it is impractical to improve the robustness of intelligent perception systems by collecting images that encompass all possible scenarios that may arise during real-world autonomous mining implementation, studies have been developed that consider different domain distributions, as illustrated in Figure 1, to analyze methodologies that enhance intelligent system generalization capacity. Depending on the degree of similarity between training and test data, this can be classified as either a domain adaptation framework [9] (Figure 1a) or domain generalization [10] (Figure 1b,c). A particular case of the latter is the domain distribution called single-domain generalization [11] (Figure 1c), in which the intelligent system is trained using a single source domain and evaluated on multiple unseen operational domains. This scenario is especially relevant and realistic in contexts such as autonomous mining systems, where data collection and annotation for training intelligent perception modules is costly and laborious [12].

Current strategies for domain generalization in intelligent autonomous systems are generally grouped into three main categories [13]: domain alignment [14], meta-learning [15], and data augmentation techniques [16]. The latter expands the available dataset during intelligent system training, increasing its diversity and reducing the risk of overfitting. Through this approach, the intelligent perception system can learn domain-invariant and bias-free representations, thereby improving its ability to generalize to unseen operational scenarios in autonomous mining environments.

This work focuses on developing and evaluating an intelligent autonomous mining system framework considering two traditional data augmentation techniques: Photometric Distortion (PD) and Contrast Limited Adaptive Histogram Equalization (CLAHE) to improve the domain generalization capabilities of the intelligent perception system. The selected model is BiSeNetV1, which is integrated within our intelligent autonomous system architecture and trained on a domain with optimal visual conditions and then tested on domains with sensor-based visual degradations, specifically images affected by camera lens impurities and frontal solar light beams that commonly occur in autonomous mining operations. Our comprehensive evaluation includes comparative analysis with state-of-the-art GAN-based domain generalization methods and temporal consistency validation to ensure reliable performance in continuous operation scenarios.

The main contributions of this research to the field of intelligent autonomous systems are:

To propose a comprehensive intelligent framework based on traditional data augmentation techniques designed to generate robust perception systems for implementing autonomous vehicle systems in open-pit mining operations. Our approach demonstrates that well-designed conventional techniques can achieve superior domain generalization compared to complex GAN-based methods while maintaining computational efficiency suitable for real-time deployment.

To systematically evaluate and select the optimal bilateral architecture and training configuration that best addresses domain generalization challenges in intelligent vision systems operating under visual degradations common in autonomous mining environments. The evaluation encompasses four state-of-the-art backbones (ResNet-50, MobileNetV2, SegFormer-B0, and Twins-PCPVT-S) with comprehensive computational efficiency analysis including latency, memory usage, and temporal consistency metrics.

To develop and validate a specific dataset for studying semantic segmentation performance in autonomous mining vehicle systems, providing a foundation for future research in intelligent mining automation systems, considering images with and without visual degradations. The dataset methodology enables effective training through dynamic data augmentation, generating 155,092 distinct training samples from 100 source images, which is competitive with recent mining segmentation studies while ensuring cost-effective data utilization.

To demonstrate through extensive experimental validation that traditional augmentation techniques achieve approximately 30% superior performance compared to advanced GAN-based approaches in mining-specific domain generalization tasks, providing a practical and cost-effective solution for autonomous mining system deployment without requiring expensive multi-domain training datasets.

The remainder of this document is organized as follows: Section 2 presents the state of the art in intelligent autonomous systems; Section 3 describes the intelligent systems methodology implemented to carry out the study; Section 4 presents the results obtained from the different intelligent system configurations evaluated; Section 5 contains the discussion of these results from an intelligent systems perspective; and Section 6 presents the conclusions of this work for intelligent autonomous mining systems. Finally, Section 7 presents possible future directions for the development of intelligent autonomous mining systems.

2. State-of-the-Art

2.1. Domain Generalization Through Data Augmentation in Intelligent Scene Understanding Systems

Recent work addressing Domain Generalization (DG) in the specific context of intelligent semantic segmentation systems for scene understanding, a key task in autonomous vehicle systems and intelligent transportation applications, is presented below. In this area, intelligent systems methods are commonly evaluated in generalization scenarios from synthetic to real operational domains, which allows measuring their adaptation capacity to significant visual changes in real-world autonomous systems deployments. For this purpose, synthetic datasets such as SYNTHIA and GTA5 are used, along with real image datasets such as Cityscapes, Mapillary, and BDD100K.

Among the proposed strategies to address DG in intelligent autonomous systems is the use of data augmentation techniques [17]. According to the systematic study by [13], data augmentation techniques applied to intelligent perception systems can be grouped into three main categories: domain-level, where stylistic diversity is expanded through simulations of new domains, generally through style transfer or adversarial methods; image-level, which includes simple visual transformations such as jittering, blur, or noise, applied directly to source domain images; and feature-level, where modifications are performed on the model’s internal representations, promoting domain invariance more directly. These strategies offer different degrees of complexity and have been adopted in various recent intelligent systems works, as detailed below.

Among the simplest techniques for intelligent perception systems, image-level transformations have proven to be highly effective. For example, the work by [18] explores factorial combinations of traditional augmentations and shows that when applied, they can achieve competitive performance even against more sophisticated methods. Recent comprehensive surveys have further validated this approach, demonstrating that well-designed traditional augmentation techniques can achieve robust domain generalization without requiring complex architectural modifications, particularly in domain-specific applications where precision is paramount.

At a higher level of complexity, several intelligent systems approaches rely on domain-level augmentation strategies, using explicit stylistic modifications. The work by [19] proposes an adversarial style technique that generates difficult examples during training through dynamic modification of style statistical parameters, which are adversarially optimized with a segmentation loss. On the other hand, ref. [20] introduces a module that generates style variations by combining different representative styles from the training set. These styles are randomly chosen to obtain greater diversity in the dataset. Additionally, mechanisms are incorporated that stabilize predictions against style changes and leverage knowledge from pre-trained models to avoid overfitting. Along the same lines, ref. [21] introduces a strategy that reinforces texture learning through two loss functions: one that prevents overfitting to source domain textures using pre-trained features, and another that promotes generalization by learning varied textures from random styles. Meanwhile, ref. [22] approaches feature-level augmentation by applying covariance alignment in the encoder and semantic consistency contrastive learning in the decoder, allowing extraction of style-invariant representations without external data support.

Recent advances in autonomous mining environments have shown that traditional augmentation approaches can significantly outperform more sophisticated GAN-based methods in domain-specific scenarios. Studies have demonstrated that simple photometric distortions combined with histogram equalization techniques achieve superior performance compared to style transfer methods, particularly when dealing with visual degradations specific to mining operations such as lens soiling and solar interference.

Table 1 presents a summary of the previously discussed intelligent systems methods, specifying training data, architecture, and performance in scene understanding tasks for autonomous systems.

2.2. Intelligent Semantic Segmentation Systems for Scene Understanding Under Visual Degradations

Visual degradations represent a significant challenge for intelligent autonomous systems and can be classified into two categories [23]: those caused by the environment, such as fog, rain, snow, low-light conditions, intense solar reflections, or light scattering in nighttime scenes; and those derived from sensor problems, which include optical blur and distortions from droplets or dirt on the lens. Additional degradation types commonly encountered in mining environments include dust storms, extreme lighting variations, and weather-related distortions that present unique challenges for intelligent perception systems requiring specialized augmentation approaches. Degraded images represent a challenge for traditional semantic segmentation models integrated in intelligent autonomous systems, as they alter the color, texture, and/or contrast distributions of the image [24]. In this context, two groups of approaches have been proposed: those that apply image enhancement techniques prior to the segmentation process, and those that design architectures with modules intended to increase the robustness of the baseline intelligent perception system against degradations.

Within the first group of intelligent enhancement systems, SCDF [25] combines noise removal and adaptive enhancement modules to treat mixed conditions such as nighttime fog, while RNightSeg [26] applies a decomposition based on Retinex theory to separate reflectance and illumination, improving segmentation in nighttime scenes. Meanwhile, Bi [27] integrates a color correction network adjusted through global parameter prediction that is directly coupled to the segmentation process, fed by images generated through style transfer.

In the second group of intelligent robust systems, RobustSAM [28] extends the Segment Anything Model (SAM) [29] with specific modules trained on a set of synthetically degraded images, allowing it to maintain precise segmentation under adverse conditions. For its part, CISS [30] introduces a feature invariance loss by comparing an original image with its stylized version, enabling the model to extract representations less sensitive to visual variations such as illumination or style changes.

Contemporary approaches in mining applications have focused on developing synthetic data generation techniques that specifically address industry-relevant degradations. These methods employ traditional computer vision techniques to apply specific visual degradations such as fog effects and lens contamination, or utilize open-source simulators for autonomous driving environments to generate training data that better represents real-world mining conditions without requiring expensive data collection in hazardous environments.

Table 2 presents the main characteristics of the previously discussed intelligent perception systems.

2.3. Intelligent Drivable Area Segmentation Systems in Off-Road Environments

Drivable area segmentation is an essential component for intelligent autonomous driving systems and Advanced Driver Assistance Systems (ADAS) [31], as it allows identification of safe circulation zones and optimal trajectory planning within comprehensive autonomous vehicle systems. In structured roads, this task is facilitated by defined markers and borders, while in off-road environments, such as open-pit mines or rural roads, the absence of these references, together with the visual similarity between the road and surroundings, generates low contrast and diffuse borders that challenge intelligent perception systems [32].

Among intelligent systems approaches based on convolutional networks are proposals such as MineSDS [33], which integrates small object detection and drivable area segmentation through a shared backbone and Convolutional Block Attention Module (CBAM) blocks to highlight diffuse boundaries in autonomous mining systems, and optimized variants of STDC [34] that incorporate fusion and attention modules to improve discrimination in complex environments. Other works incorporate information flow control and multi-scale mechanisms, such as the joint use of Gated Depthwise Inception and Atrous Spatial Pyramid Pooling (ASPP) modules [35], which increase the model’s receptive field.

The incorporation of hybrid Convolutional Neural Networks (CNN)-Vision Transformers (ViT) architectures has proven effective for intelligent perception systems in capturing global context and local details, as in TrRoad-Net [36], which combines convolutions and multi-head self-attention that allows the model to capture low and high frequencies of analyzed images. In [37], CNN, ViT, and MLP-based backbones are tested in the contextual path of the baseline BiSeNet model, where intelligent systems that mix the three methods have shown better performance in capturing high-frequency details while maintaining efficiency and reducing model size for autonomous applications.

Recent developments in autonomous mining applications have emphasized the importance of temporal consistency in video-based segmentation, with specialized metrics being developed for unsupervised evaluation of temporal stability in perception systems. These advances are crucial for continuous autonomous operation scenarios where perception stability directly impacts navigation safety and operational reliability in challenging mining environments.

Table 3 presents the main characteristics of the previously discussed intelligent drivable area segmentation systems.

2.4. Comparative Analysis and Future Directions

The review of current state-of-the-art methods reveals a clear trend toward simpler, more efficient approaches for domain-specific applications such as autonomous mining. While sophisticated GAN-based and style transfer methods show promise in general computer vision tasks, traditional augmentation techniques often prove more effective and computationally efficient for specific industrial applications where domain characteristics are well-understood. This observation aligns with recent findings in autonomous mining systems where traditional photometric distortions achieve superior performance compared to complex generative approaches, highlighting the importance of domain-specific optimization in intelligent perception systems.

3. Methodology

This section describes the intelligent systems methodology for studying the DG approach in semantic segmentation systems for autonomous mining operations, specifically for the case of training an intelligent perception system with a dataset in the source domain under ideal conditions and its evaluation on target domain datasets with visual degradations (lens soiling and sun glare) that commonly affect autonomous mining vehicle systems.

Figure 2 presents the methodological pipeline: it begins with the analysis of the state-of-the-art related to intelligent autonomous systems, followed by dataset preparation for autonomous mining applications, selection of the base architecture and backbones for intelligent perception systems, and definition of the data augmentation scheme along with the training regime for robust autonomous systems. Finally, the evaluation procedure and experimental design organization for intelligent mining systems are described. The following subsections develop each of these stages in detail.

3.1. Intelligent System Problem Formulation

In the context of intelligent autonomous mining systems, the domain generalization challenge represents a critical systems-level problem where perception modules must maintain reliable performance across varying operational conditions. Within our intelligent systems framework, the model is trained using a single source domain $\mathcal{S} = \{(x_i, y_i)\}_{i=1}^{N}$, where $x_i$ represents sensor input from the mining vehicle’s perception system, $y_i$ represents the corresponding semantic understanding required for autonomous navigation decisions, and $N$ represents the number of samples in the training dataset.

Subsequently, the intelligent system must maintain reliable performance when deployed in the target domains $\mathcal{T} = \{\mathcal{T}_1, \ldots, \mathcal{T}_K\}$ without having access to these operational conditions during the development phase. This formulation reflects real-world constraints in autonomous mining systems where comprehensive training across all possible operational scenarios is impractical. In this formulation, $K$ represents the number of target domains, which in this study equals 2 (corresponding to lens soiling and sun glare degradation scenarios).

In our intelligent mining systems application, the source domain consists of sensor data captured from perception systems mounted on autonomous vehicles operating on open-pit mine routes under ideal visual conditions. The target domains consider realistic operational challenges, specifically sensor degradations including camera lens contamination and solar interference that commonly affect autonomous mining operations. The semantic maps generated for training and testing the intelligent perception system are binary classifications that enable the autonomous vehicle’s decision-making system to distinguish between traversable paths and obstacles, supporting safe autonomous navigation decisions.

Dataset Size Validation and Comparative Analysis

While our training dataset comprises 100 source domain images, the dynamic data augmentation pipeline generates 155,092 distinct training samples during the 40,000 training iterations (96.87% of samples include augmentations). This approach is competitive with recent mining segmentation studies that use larger static datasets: MineSDS [33] employs 4547 images and TrRoad-Net [36] utilizes 3939 images, both without data augmentation.

Our data efficiency is further validated by recent single domain generalization research, which demonstrates that well-designed augmentation strategies can achieve superior generalization compared to larger static datasets. The dynamic generation approach ensures maximum variability and prevents overfitting to specific augmented versions, as each training iteration presents novel augmented samples.

Furthermore, the AutoMine dataset [38] contains 30 different mining scenarios across 18+ hours of driving data, representing one of the most comprehensive mining datasets available. Our selection of 3 representative scenarios (ideal conditions, lens soiling, sun glare) covers the primary visual degradations identified in autonomous mining literature.

3.2. Intelligent Systems State-of-the-Art Analysis

The state-of-the-art review focused on three main axes related to intelligent visual perception systems for scene understanding in autonomous vehicles: domain generalization in semantic segmentation for intelligent scene understanding systems, drivable area segmentation in off-road environments for autonomous mining applications, and segmentation under visual degradation conditions in intelligent autonomous systems. For this purpose, an analysis of recent literature available in Web of Science indexed databases was conducted, prioritizing publications between 2024 and 2025.

This bibliographic analysis of intelligent systems enabled the definition of the image preprocessing pipeline for autonomous mining applications, hyperparameter selection and training methodology for intelligent perception systems, semantic segmentation models suitable for autonomous vehicle systems, and performance evaluation of intelligent models in target domains under DG modality.

3.3. Dataset Preparation for Intelligent Autonomous Mining Systems

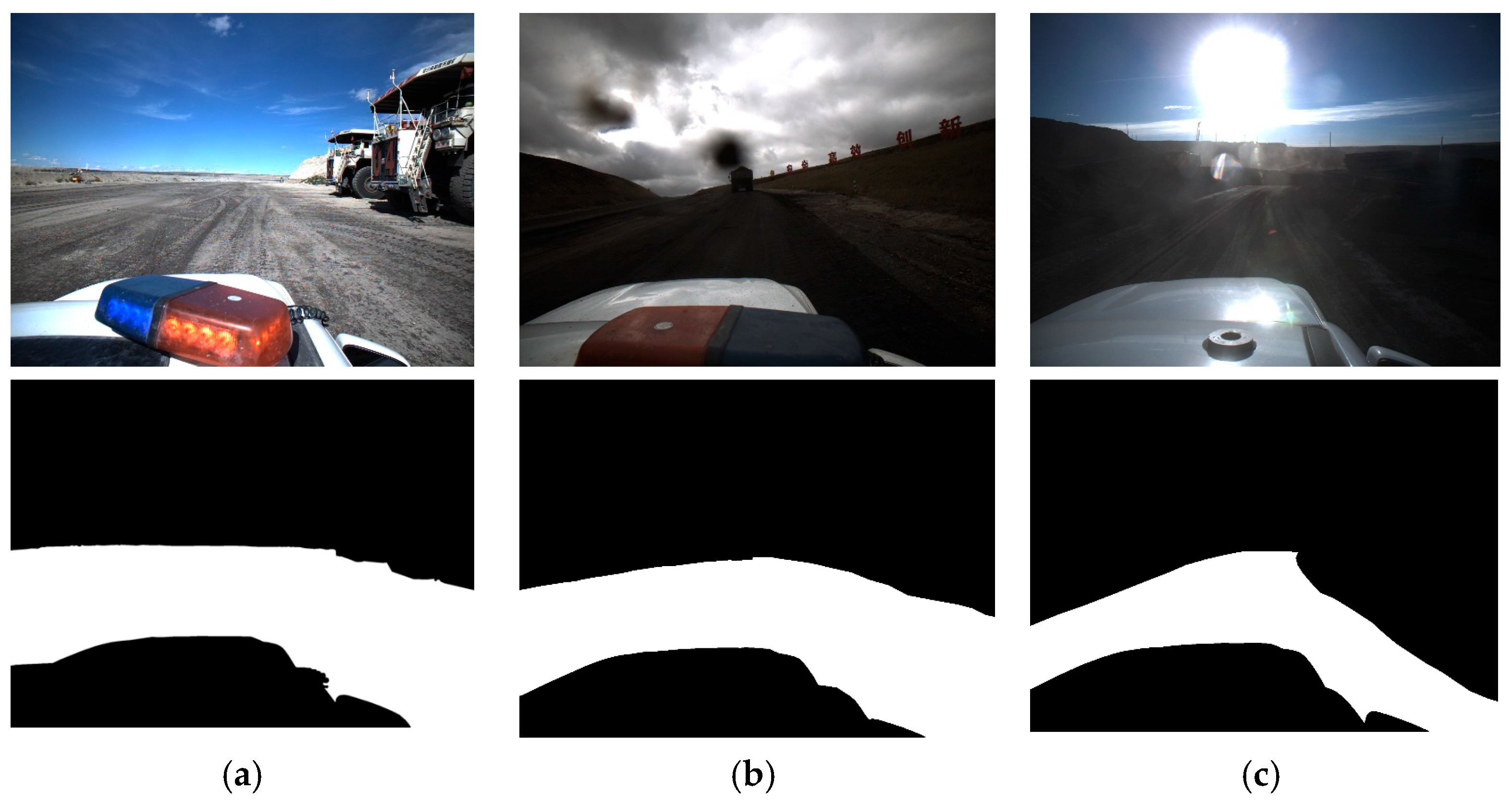

For intelligent systems dataset construction, three scenarios were selected from the AutoMine dataset [38], which comprises 30 different autonomous mining trajectory scenarios. One of these trajectories has ideal visual conditions (Figure 3a), while the other two present visual distortions that challenge intelligent perception systems, such as camera lens impurities (Figure 3b) and a frontal solar light beam into the camera (Figure 3c), both commonly encountered in autonomous mining operations.

The AutoMine dataset image sequences are associated with inertial measurements of vehicle turning angle. Using this data, each trajectory was segmented into straight and curved sections: sections were labeled as curves when the average rate of change of turning angle exceeded 2°/s for at least 5 s; otherwise, they were classified as straight sections. Following this classification, images were extracted every 8 s on straight sections and every 4 s on curves for binary mask generation to ensure adequate representation of trajectory complexity in intelligent mining systems. Images were annotated using the Label Studio application [39].
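The segmentation and sampling rule above can be sketched as follows. This is a minimal illustration, not the authors' implementation: the function names, the finite-difference estimate of the turning rate, and the reading of "exceeded 2°/s for at least 5 s" as a continuous run of fast samples are all assumptions.

```python
def label_curves(times, angles, rate_thresh=2.0, min_duration=5.0):
    """Mark each sample as curve (True) or straight (False).

    times: timestamps in seconds; angles: turning angle in degrees.
    Assumption: a curve is a continuous run of samples whose turning
    rate exceeds rate_thresh (deg/s) and that lasts >= min_duration (s).
    """
    n = len(times)
    rates = [0.0] * n
    for i in range(1, n):
        rates[i] = abs(angles[i] - angles[i - 1]) / (times[i] - times[i - 1])
    if n > 1:
        rates[0] = rates[1]  # first sample reuses the first interval

    is_curve = [False] * n
    start = None
    for i in range(n + 1):
        fast = i < n and rates[i] > rate_thresh
        if fast and start is None:
            start = i
        elif not fast and start is not None:
            # The run covers samples start..i-1; keep it only if long enough.
            if times[i - 1] - times[start] >= min_duration:
                for j in range(start, i):
                    is_curve[j] = True
            start = None
    return is_curve


def sample_frames(times, is_curve, straight_step=8.0, curve_step=4.0):
    """Pick timestamps every 8 s on straight sections and every 4 s on curves."""
    picked = []
    next_t = times[0]
    for t, curve in zip(times, is_curve):
        if t >= next_t:
            picked.append(t)
            next_t = t + (curve_step if curve else straight_step)
    return picked
```

With a 1 Hz signal that is straight for 16 s, turns at 3°/s for 10 s, and is straight again afterwards, the sampler picks frames more densely inside the turn.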

In total, 100 images from the source domain were available for training the intelligent perception system. The data augmentation pipeline applied during training expands these to 160,000 training samples (iterations × batch size = 40,000 × 4 = 160,000). Considering that the probability of at least one augmentation operation occurring is $P(\geq 1) = 1 - (1 - 0.5)^{5} = 0.96875$ (about 96.87%), approximately 155,092 unique augmented images plus 5008 original images were generated for training, resulting in a training dataset comparable in size to recent mining segmentation studies [33,36] that used 3939–4547 images without augmentation. The augmentation is performed dynamically at each training iteration, meaning that the 160,000 samples are not stored but created on the fly, which maximizes variability and prevents overfitting to specific augmented versions. For evaluation, 10 images with a dirty camera lens and 10 images for the frontal light beam case were available. These test sets were subsequently expanded to 100 samples each through targeted augmentation techniques designed for autonomous mining system validation.
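The sample counts above follow from simple arithmetic, reproduced in the sketch below. It assumes five independent augmentation stages, each applied with probability 0.5, as implied by the exponent in the expression for P(≥1); the variable and function names are illustrative.

```python
def prob_at_least_one(p_stage: float, n_stages: int) -> float:
    """Probability that at least one of n independent stages is applied."""
    return 1.0 - (1.0 - p_stage) ** n_stages


iterations, batch_size = 40_000, 4
total_samples = iterations * batch_size          # samples seen during training

p_aug = prob_at_least_one(0.5, 5)                # assumption: 5 stages, p = 0.5 each
expected_augmented = total_samples * p_aug       # expected samples with >= 1 augmentation
expected_unmodified = total_samples - expected_augmented

print(total_samples, p_aug, expected_augmented, expected_unmodified)
```

Under these assumptions the expected split is 155,000 augmented and 5,000 unmodified samples, in line with the approximate counts reported above; the exact numbers in any single run depend on the random draws.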

3.4. Selection of the Intelligent Semantic Segmentation Architecture

Considering the application in intelligent autonomous mining vehicles, BiSeNetV1 [

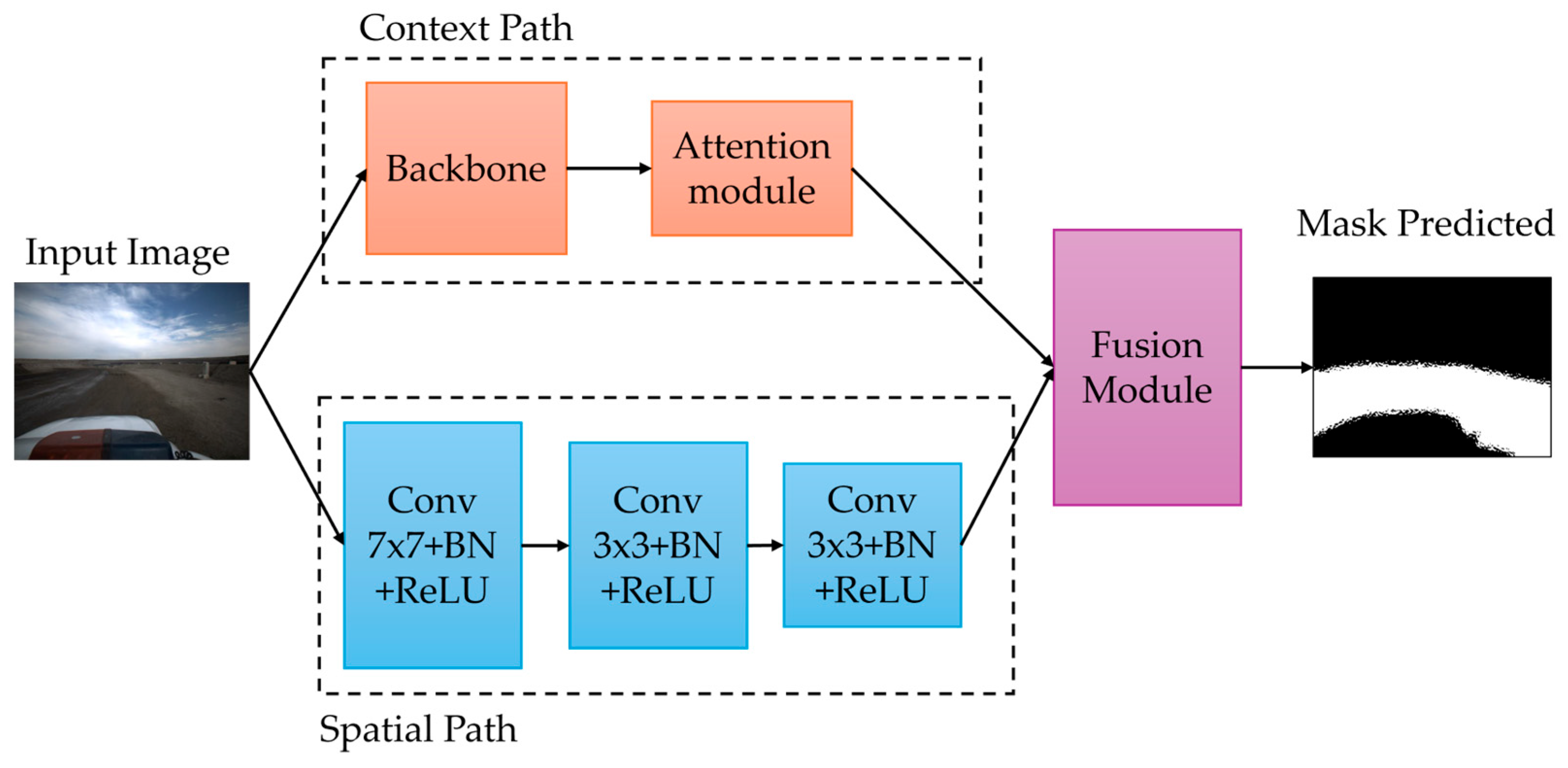

40] architecture was selected in this study due to its high performance in semantic segmentation, computational efficiency, and real-time inference capability, which are critical aspects for implementation in intelligent autonomous vehicle perception modules. This intelligent model significantly reduces the number of computational operations through the incorporation of attention modules and an optimized feature fusion strategy, making it suitable for deployment in autonomous mining systems.

Figure 4 shows the implemented intelligent bilateral architecture, which consists of two main branches optimized for autonomous systems applications: a spatial path and a contextual path [41]. The spatial path, composed of three sequential low-depth convolutional layers, is designed to preserve detailed information of the image’s spatial structure, which is essential for maintaining well-defined edges and contours in autonomous mining environments.

On the other hand, the contextual path, based on a residual network, aims to capture high-level semantic information and global context in the image, providing the intelligent system with comprehensive scene understanding. Finally, both branches are fused through a feature fusion module that efficiently integrates semantic precision with spatial definition, thus optimizing the balance between speed and accuracy required for real-time autonomous mining operations.

Because the contextual path captures high-level semantic information and global context, it directly influences the intelligent model’s domain generalization capacity for autonomous applications [42]. For this reason, four backbones were evaluated to optimize intelligent system performance: two based on CNN and two based on ViT. The CNN-based models are ResNet-50 and MobileNetV2, while the ViT-based ones are SegFormer-B0 and Twins-PCPVT-S.

Table 4 shows the output tensors corresponding to each module of the implemented architecture.

3.5. Experimental Design

Images without visual distortion and their binary masks for intelligent system training were divided in an 80:20 proportion, allocating 80% for training the autonomous perception system and the remaining 20% for intelligent model validation. Data augmentation was applied to training images for intelligent systems using the following sequence: random crop, random size, and finally PD and/or CLAHE. Each stage of the intelligent systems pipeline is implemented with a 50% probability of application to the image. The parameters used in this pipeline are detailed in Table 5.

Images with visual distortion were used for intelligent system testing after completing training with the source domain. Data augmentation was applied to these images before evaluation to increase the number of tested samples to 100 for comprehensive intelligent system validation, with this number of test images being equivalent for both lens soiling and sun glare conditions. This was applied using random flip, Gaussian blur, and Gaussian noise with 50% application probability, considering the hyperparameters presented in Table 6.

The backbone models used in the intelligent system are pre-trained on the ImageNet database [43]. The intelligent model is then trained using the Stochastic Gradient Descent (SGD) optimizer with momentum of 0.9 and weight decay of 0.0005. The intelligent system is trained using a PolyLR scheduler, which dynamically adjusts the learning rate based on the current iteration. The batch size is 4, the total number of iterations is 40,000, and every 100 iterations the intelligent model is evaluated with validation data. Intelligent system training and evaluation were performed on a computer with an NVIDIA A100 Graphics Processing Unit (GPU) with 80 GB of memory.
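A polynomial learning-rate schedule of the kind described above can be sketched as follows. The exponent `power=0.9`, the floor `min_lr=0.0`, and the base learning rate used in the example are assumptions chosen for illustration; the text does not state the values used in the study.

```python
def poly_lr(base_lr, iteration, max_iters=40_000, power=0.9, min_lr=0.0):
    """Polynomial decay from base_lr at iteration 0 to min_lr at max_iters.

    power and min_lr are illustrative assumptions, not values from the paper.
    """
    progress = min(iteration, max_iters) / max_iters
    return (base_lr - min_lr) * (1.0 - progress) ** power + min_lr


# Example: learning rate at the start, the midpoint, and the end of training.
for it in (0, 20_000, 40_000):
    print(it, poly_lr(0.01, it))
```

The schedule decays slowly at first and faster toward the end of training, so most iterations run at a learning rate close to the base value.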

3.6. Intelligent Model Training Process

The general scheme used during intelligent bilateral model training is presented in Figure 5. This corresponds to a supervised training process for autonomous systems, in which an input image is first subjected to data augmentation techniques designed for intelligent perception systems. This image is processed in the forward propagation phase by the intelligent bilateral model, which predicts a semantic segmentation mask for autonomous navigation.

The predicted mask is compared with the actual segmentation mask using a supervised loss function optimized for intelligent systems. This calculation generates a loss score, which is backpropagated during backward propagation to adjust the intelligent model weights.

The procedure is repeated over multiple iterations for intelligent system optimization, and performance is periodically evaluated on a validation set corresponding to source domain images for autonomous mining applications.

The loss function is formulated as Equation (1) and corresponds to cross-entropy applied to each pixel of the image, where $y_{i,c}$ is the true probability of pixel $i$ belonging to class $c$, and $\hat{y}_{i,c}$ is the corresponding predicted probability. In this work on intelligent systems, a binary classification is considered to support autonomous navigation decisions, distinguishing between traversable and non-traversable areas, therefore the number of classes is $C = 2$:

$$\mathcal{L}_{CE} = -\sum_{i}\sum_{c=1}^{C} y_{i,c}\,\log\left(\hat{y}_{i,c}\right) \qquad (1)$$

The optimizer is responsible for adjusting the model weights during training, guided by the gradients of the loss function computed on the training batches; the validation set is used only to monitor performance. For this study, the SGD algorithm was selected due to its recognized ability to achieve solutions that generalize better compared to adaptive methods such as Adam [44]. Although optimizers like Adam offer faster convergence, they tend to converge to sharp local minima that negatively affect generalization in autonomous applications. In contrast, SGD tends to converge toward flatter minima, which favors better generalization capacity for intelligent autonomous systems [45].

3.7. Evaluation and Selection of Trained Intelligent Model

In this study, two data augmentation techniques are tested, PD and CLAHE, as well as their combination. Additionally, experiments are conducted with learning rates ranging from 0.0005 to 0.05.

Trained models are evaluated on a set of images with visual distortions not seen during training, using the mean Intersection over Union (mIoU) performance index, which is defined by Equation (2):

$$\mathrm{mIoU} = \frac{1}{C}\sum_{c=1}^{C}\frac{TP_c}{TP_c + FP_c + FN_c} \quad (2)$$

where $TP_c$, $FP_c$, and $FN_c$ are the numbers of pixels classified as true positives, false positives, and false negatives for each class $c$, respectively, and $C$ is the number of classes.
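As an illustration of the mIoU definition, the following sketch computes the index for a pair of small label masks. The function name and the toy masks are hypothetical, and skipping classes that are absent from both masks is an assumption of this sketch rather than a detail stated in the text.

```python
def mean_iou(pred_mask, true_mask, num_classes=2):
    """Mean Intersection over Union over classes for 2D label masks."""
    ious = []
    for c in range(num_classes):
        tp = fp = fn = 0
        for pred_row, true_row in zip(pred_mask, true_mask):
            for p, t in zip(pred_row, true_row):
                if p == c and t == c:
                    tp += 1      # pixel correctly assigned to class c
                elif p == c:
                    fp += 1      # predicted c, but the label differs
                elif t == c:
                    fn += 1      # labelled c, but predicted otherwise
        denom = tp + fp + fn
        if denom > 0:            # assumption: skip classes absent from both masks
            ious.append(tp / denom)
    return sum(ious) / len(ious)

# Hypothetical 2 x 3 masks: 1 = traversable, 0 = non-traversable.
true_mask = [[1, 1, 0],
             [1, 0, 0]]
pred_mask = [[1, 0, 0],
             [1, 0, 1]]
miou = mean_iou(pred_mask, true_mask)
```

In this toy case each class has two true positives, one false positive, and one false negative, so both per-class IoU values and the mIoU equal 0.5.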

Although an intelligent model can achieve high performance values in inference on the source domain, this does not guarantee its ability to generalize to unseen domains in autonomous mining operations [

46]. For this reason, this study evaluates generalization capacity by considering the performance obtained on datasets from domains not seen during training, which is critical for real-world autonomous mining system deployment.

Additionally, the model was compared with a more advanced domain adaptation method for domain generalization. The selected method is based on GAN domain adaptation using the CycleGAN model [

47]. This method generates stylized images based on reference datasets. In this case, two reference databases were used: Van Gogh paintings [

48] and foggy scenes [

49]. For CycleGAN module training, default parameters from mmgeneration [

50] were utilized. This comparison allows evaluation of traditional augmentation techniques against state-of-the-art generative approaches for domain generalization.

To analyze computational complexity and deployment feasibility in autonomous mining vehicles, we report: number of parameters, model size, inference speed, latency per image, and peak memory usage utilizing 16-bit floating point precision (FP16) for performance estimation. This comprehensive computational analysis is crucial for determining practical deployment constraints in resource-limited autonomous mining environments.
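The relationship between latency per image and inference speed can be sketched with a simple timing harness. The function names, the warm-up and run counts, and the dummy inference function are assumptions for illustration; a real measurement would call the trained model on the target hardware, and the conversion to frames per second assumes sequential processing of one image at a time.

```python
import time

def measure_latency_ms(infer, sample, warmup=3, runs=20):
    """Average wall-clock time per call, in milliseconds."""
    for _ in range(warmup):          # warm-up calls are excluded from timing
        infer(sample)
    start = time.perf_counter()
    for _ in range(runs):
        infer(sample)
    elapsed = time.perf_counter() - start
    return 1000.0 * elapsed / runs

def fps_from_latency_ms(latency_ms):
    """Frames per second, assuming one image is processed at a time."""
    return 1000.0 / latency_ms

def dummy_infer(image):
    # Hypothetical stand-in for a model forward pass: simple thresholding.
    return [[1 if value > 127 else 0 for value in row] for row in image]

image = [[(x * y) % 256 for x in range(64)] for y in range(64)]
latency = measure_latency_ms(dummy_infer, image)
fps = fps_from_latency_ms(latency)
```

Under this single-image assumption, a latency of 18.19 ms corresponds to roughly 55 frames per second.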

To measure temporal consistency in video sequences, we employ Perceptual Consistency (PC) [51] as described in Equation (3). PC is a metric specifically developed for unsupervised evaluation of temporal consistency in autonomous driving applications [20, 21], which measures how segmentation predictions maintain coherence across consecutive video frames without requiring ground truth labels. Given a pair of frames $(t, t')$ separated by $\Delta t$, we compare features extracted by the model at $t$ with those at $t'$ within a spatial neighborhood. For each pixel $p$ at $t$, we calculate the maximum cosine similarity with any candidate at $t'$ ($c_t$) and the maximum cosine similarity restricted to candidates whose predicted class matches between frames ($c_x$). PC is the average of the ratios $c_x / c_t$ over the image area $\Omega$:

$$\mathrm{PC} = \frac{1}{|\Omega|}\sum_{p \in \Omega}\frac{c_x(p)}{c_t(p)} \quad (3)$$

PC ranges from 0 to 1, where 1 represents perfect temporal consistency.
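The PC computation described above can be sketched on small feature maps. This is a minimal illustration under stated assumptions, not the reference implementation of the metric: the function names, the square search window, the handling of pixels without a class-matching candidate (ratio set to 0), and the clamping of the ratio to the range 0 to 1 are all choices made for this sketch.

```python
import math

def cosine(u, v):
    """Cosine similarity between two feature vectors (0 if either is null)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v) if norm_u > 0 and norm_v > 0 else 0.0

def perceptual_consistency(feat_t, pred_t, feat_t2, pred_t2, radius=1):
    """Average ratio c_x / c_t over all pixels of frame t."""
    height, width = len(feat_t), len(feat_t[0])
    total = 0.0
    for y in range(height):
        for x in range(width):
            c_t = c_x = None
            # Candidates at t' inside a square window around (y, x).
            for qy in range(max(0, y - radius), min(height, y + radius + 1)):
                for qx in range(max(0, x - radius), min(width, x + radius + 1)):
                    sim = cosine(feat_t[y][x], feat_t2[qy][qx])
                    if c_t is None or sim > c_t:
                        c_t = sim                      # best match, any class
                    if pred_t2[qy][qx] == pred_t[y][x]:
                        if c_x is None or sim > c_x:
                            c_x = sim                  # best match, same class
            # Assumption: no same-class candidate, or c_t <= 0, contributes 0.
            if c_x is not None and c_t > 0:
                total += min(1.0, max(0.0, c_x / c_t))
    return total / (height * width)

# Hypothetical 2 x 2 frames with 2-D features and binary predictions.
feat = [[[1.0, 0.0], [1.0, 0.0]],
        [[0.0, 1.0], [0.0, 1.0]]]
pred = [[0, 0], [1, 1]]
flipped = [[1, 1], [0, 0]]
pc_same = perceptual_consistency(feat, pred, feat, pred)
pc_flipped = perceptual_consistency(feat, pred, feat, flipped)
```

With identical frames every pixel finds a same-class candidate with the best possible similarity, so PC is 1. When the predicted classes are swapped while the features stay the same, the best-matching candidates no longer share the class and PC drops to 0.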

In the conducted experiments, a fixed neighborhood radius of 12 was used considering the input image resolution, representing a sampling window of 48 px radius. Time windows between sequential images of 0.1, 1, and 3 s were tested to evaluate temporal stability across different time scales that are relevant for autonomous mining vehicle operations where continuous perception is critical for safe navigation decisions.

3.7.1. Evaluation Framework for Domain Generalization

To comprehensively evaluate our intelligent autonomous mining systems framework, we implement a multi-faceted evaluation approach that extends beyond traditional mIoU metrics. Our evaluation encompasses: (1) Statistical robustness analysis using confidence intervals across target domains; (2) Comparative analysis with recent state-of-the-art domain generalization methods; (3) Computational efficiency profiling under realistic edge computing constraints typical of autonomous mining vehicles; (4) Temporal consistency validation through PC metrics to ensure stable performance in continuous operation scenarios.

3.7.2. Limitations and Scope

While this study demonstrates promising results for intelligent autonomous mining systems, it should be acknowledged that the evaluation focuses on two specific visual degradations (lens soiling and sun glare) commonly encountered in mining operations. The approach is designed to be extensible to additional degradation types such as dust storms, fog, and extreme lighting conditions through similar augmentation strategies or synthetic data generation techniques [

52,

53].

4. Results

This section presents the main results obtained from the evaluation of bilateral models considering different backbones in the contextual path, variations in learning rates, and application of data augmentation techniques.

For training, 100 images from the source domain were utilized, which were augmented during the training process to 160,000 samples through the implemented data augmentation pipeline (40,000 iterations × batch 4), where 96.87% of these samples have some modification from data augmentation. This approach generates 155,092 distinct images for training, which is competitive with comparable studies in binary segmentation of mining routes that use similar dataset sizes: MineSDS [

33] employs 4547 images and TrRoad-Net [

36] utilizes 3939 images, both without data augmentation.

Table 7 shows the bilateral models with the best performance, based on the results presented in Appendix A, where models were evaluated on the target domain considering images with visual degradations that commonly affect autonomous vehicle sensors in mining environments.

4.1. Intelligent Systems Performance Distribution Analysis

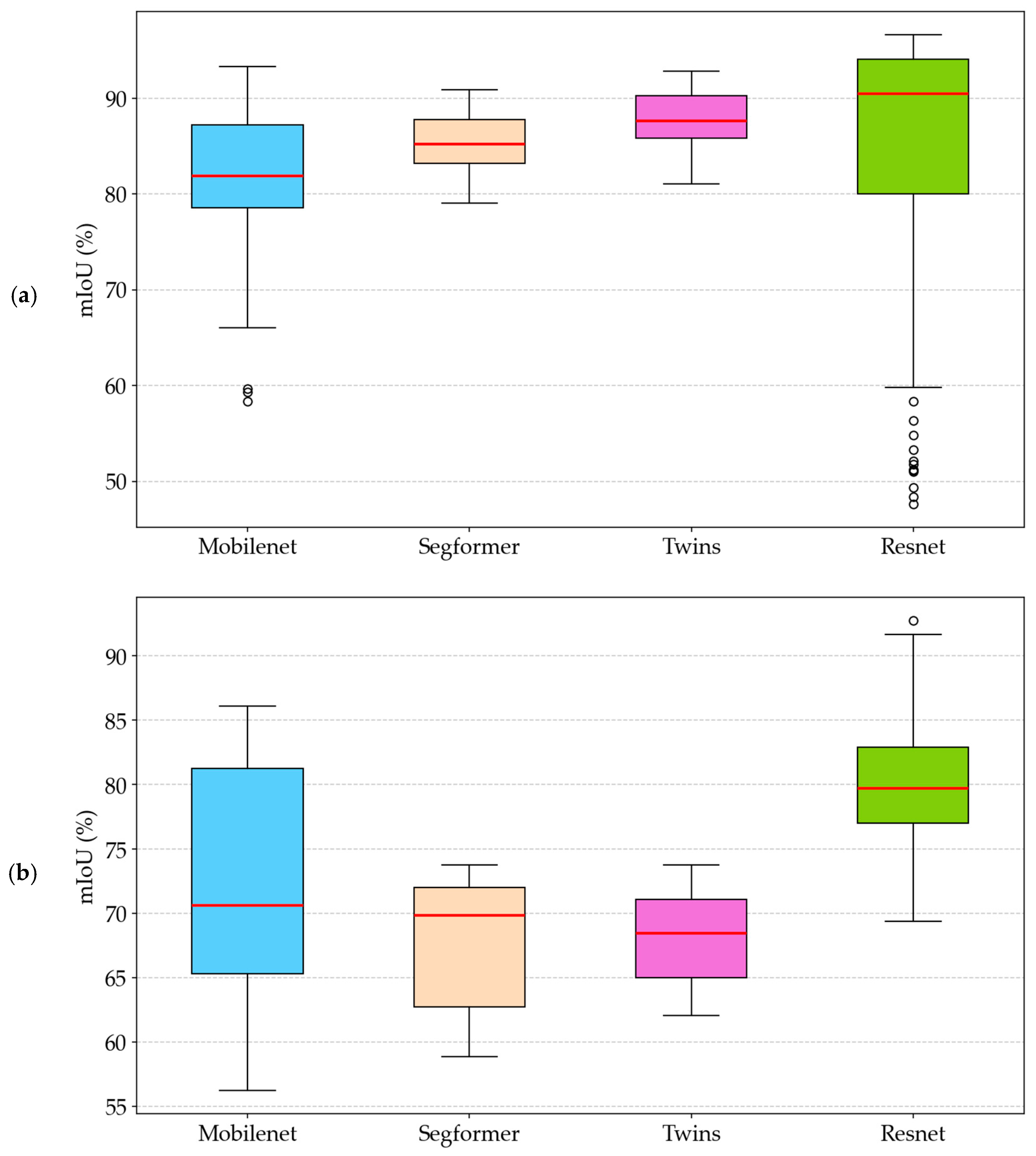

Figure 6 shows the distribution of mIoU values obtained by intelligent bilateral models evaluated on image sets with lens soiling and sun glare visual distortions commonly encountered in autonomous mining operations through boxplot graphs.

Figure 6a presents the performance distribution on the dataset containing images with camera Lens Soiling (LS) affecting autonomous vehicle sensors, while

Figure 6b shows the distribution corresponding to the set of images affected by Sun Glare (SG) that challenges intelligent perception systems.

Table 8 summarizes the statistical values used to generate the boxplots presented in

Figure 6, corresponding to the best-performing intelligent models considering different backbones in the contextual path of the bilateral architecture for autonomous systems. The reported statistics include mean value, standard deviation (Std), minimum (Min) and maximum (Max) values, as well as quartiles Q1 (25%), Q2 (median), and Q3 (75%).
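The summary statistics listed above can be computed from a set of per-image mIoU values as in the following sketch. The values are hypothetical, and both the use of the sample standard deviation and the inclusive quartile method are assumptions, since the text does not state which conventions were used.

```python
import statistics

def summarize(values):
    """Mean, standard deviation, extremes, and quartiles of a sample."""
    q1, q2, q3 = statistics.quantiles(values, n=4, method="inclusive")
    return {
        "mean": statistics.mean(values),
        "std": statistics.stdev(values),   # sample standard deviation (assumed)
        "min": min(values),
        "max": max(values),
        "q1": q1,
        "q2": q2,                          # median
        "q3": q3,
    }

# Hypothetical per-image mIoU values (%), not taken from Table 8.
miou_values = [70.0, 80.0, 85.0, 90.0, 95.0]
stats = summarize(miou_values)
```

For this toy sample the quartiles are 80, 85, and 90, which are the values a boxplot would draw as the box edges and the median line.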

4.2. Qualitative Intelligent Segmentation Results for Autonomous Systems

Table 9 presents the inferred masks on target domains, obtained from intelligent bilateral models with different backbones and training configurations that yielded the best results for autonomous mining applications. The visual comparison demonstrates the varying capabilities of each intelligent architecture in handling sensor-based visual degradations commonly encountered in autonomous mining vehicle operations.

4.3. Intelligent Systems Training Convergence Analysis

The training curves for the best-performing intelligent bilateral models for autonomous mining applications are presented in

Figure 7. These curves illustrate the convergence behavior and stability characteristics of different backbone architectures during the intelligent system training process.

4.4. Computational Efficiency Analysis for Autonomous Mining Systems

Table 10 presents the number of parameters, computational size, and inference speed of the studied intelligent bilateral models. This analysis is crucial for determining the practical deployment feasibility in autonomous mining vehicles with limited computational resources and real-time operational requirements.

4.5. Comparative Analysis: Traditional vs. GAN-Based Domain Augmentation

The number of images generated by GAN augmentation is 2460, representing the estimated number of possible augmentations that would be generated by traditional PD and CLAHE data augmentation according to the pipeline used in the present study.

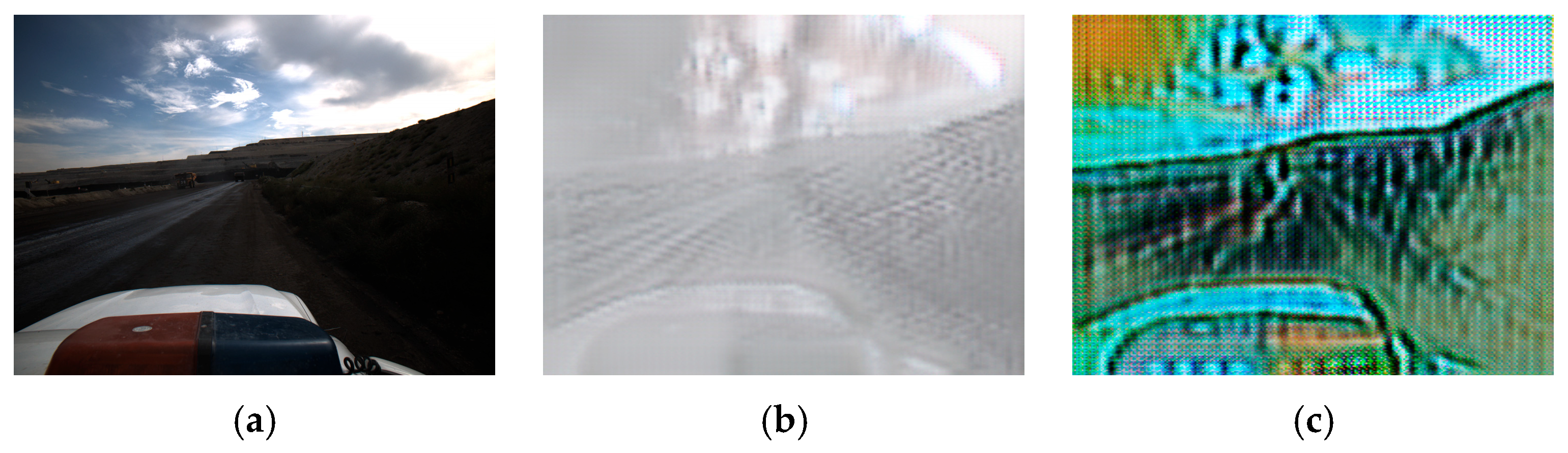

Figure 8 shows examples of images generated by the CycleGAN module considering stylization with the foggy scenes dataset (

Figure 8b) and stylization with the Van Gogh paintings dataset (

Figure 8c).

Table 11 presents the results obtained using the CycleGAN module to generate domain augmentation for training the BiSeNet model with a ResNet-50 backbone.

The comparative analysis reveals that traditional data augmentation (PD + CLAHE) significantly outperforms GAN-based domain augmentation, achieving approximately 30% higher mIoU performance. Specifically, ResNet-50 with traditional DA achieves 84.58% (LS) and 80.11% (SG), whereas with CycleGAN it achieves only 58.48% (LS) and 59.3% (SG) in the best case. This substantial performance difference can be attributed to two factors. First, CycleGAN-generated images exhibit reduced definition compared to the original images. Second, synthetic image generation through CycleGAN introduces artifacts that may not accurately represent the visual degradations encountered in actual mining operations. In addition, acquiring high-quality reference datasets for stylization is non-trivial in real mining environments, which limits the effectiveness of this approach for mining-specific domain generalization.
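The gap between the two augmentation strategies can be recomputed from the mIoU values reported above. The sketch below derives both the absolute difference in percentage points and the relative improvement, since the text does not state which convention is used; the variable and function names are illustrative.

```python
# mIoU values (%) reported in the text for ResNet-50.
traditional = {"LS": 84.58, "SG": 80.11}      # PD + CLAHE
cyclegan_best = {"LS": 58.48, "SG": 59.30}    # best CycleGAN case

def performance_gaps(reference, baseline):
    """Absolute (percentage points) and relative (%) gap per condition."""
    gaps = {}
    for condition in reference:
        diff = reference[condition] - baseline[condition]
        gaps[condition] = {
            "points": diff,
            "relative_pct": 100.0 * diff / baseline[condition],
        }
    return gaps

gaps = performance_gaps(traditional, cyclegan_best)
```

The absolute gaps are about 26.1 points for LS and 20.8 points for SG, while the relative improvements are about 44.6% and 35.1%, respectively.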

4.6. Perceptual Consistency in Visual Degradation Image Sequences

The following section presents the Perceptual Consistency results for the traversable area over time under both visual degradation conditions studied, considering the best-performing models from each tested architecture. PC is a metric specifically developed for unsupervised evaluation of temporal consistency in autonomous driving applications [

20,

21], which measures how segmentation predictions maintain coherence across consecutive video frames without requiring ground truth labels. For PC measurement, time windows of 0.1, 1, and 3 s were evaluated, with results shown in

Table 12.

Perceptual Consistency analysis reveals that temporal stability depends on both degradation type and architecture. All architectures maintained PC values close to 1.0, indicating good temporal stability across the studied time thresholds, which is crucial for validating system stability in continuous mining operations. Under lens soiling conditions, ResNet-50 preserves better inter-frame coherence across all temporal thresholds tested. Under sun glare conditions, Vision Transformers (e.g., SegFormer) maintain or improve PC as temporal thresholds increase. This temporal consistency validation is essential for autonomous mining systems where perception stability directly impacts navigation safety and operational reliability.

4.7. Comparative Domain Generalization Analysis with State-of-the-Art Methods

To validate the effectiveness of our approach against current domain generalization techniques, we implemented comparisons with recent state-of-the-art methods specifically designed for single-domain generalization scenarios similar to our mining application context, as shown in

Table 13. We conducted a comprehensive analysis comparing our traditional augmentation approach against established domain generalization categories, including GAN-based methods, style transfer techniques, and advanced data augmentation strategies commonly used in autonomous vehicle applications.

Our approach demonstrates superior performance compared to established domain generalization categories, achieving 6–9% higher mIoU compared to traditional CNN-based methods [

18] and improvements of 26% and 21% over GAN-based approaches for lens soiling and sun glare conditions, respectively. The comparison with our own CycleGAN implementation supports the effectiveness of traditional augmentation techniques over more complex generative methods while maintaining computational efficiency. The MobileNetV2 configuration provides the best efficiency with competitive accuracy, achieving the highest inference speed (55.0 FPS) and smallest model size (53.45 MB), making it well suited for resource-constrained deployment scenarios in autonomous mining vehicles.

4.8. Key Performance Insights for Intelligent Autonomous Mining Systems

The experimental evaluation of our intelligent autonomous mining system framework reveals several critical findings for practical deployment:

ResNet-50 demonstrates superior robustness for mission-critical autonomous systems across both degradation scenarios, achieving the highest mean mIoU for sun glare conditions (80.11%) and competitive performance for lens soiling (84.58%), making it suitable for safety-critical autonomous mining operations.

Vision Transformers (Twins, SegFormer) exhibit superior stability in intelligent system performance with lower standard deviations (3.07 and 2.98 respectively for lens soiling), indicating more consistent autonomous system behavior under varying operational degradation intensities.

MobileNetV2 provides optimal computational efficiency for real-time autonomous systems with the highest inference speed (55.0 FPS) and smallest model size (53.45 MB), making it ideal for embedded intelligent systems deployment in resource-constrained autonomous mining vehicles. When considering latency and peak memory usage, MobileNetV2 emerges as the most “edge-friendly” option with low latency (18.19 ms) and efficient memory usage (608.8 MB), ideal when vehicle control requires rapid responses and hardware is limited. ResNet-50 represents a balanced compromise between efficiency and robustness (32.96 ms latency, 1485.1 MB memory), suitable when the system can tolerate moderate computational load. Transformer-based backbones significantly increase latency (77.63–117.25 ms) and memory usage (7340–7380 MB), limiting their implementability in embedded mining systems.

The combined CLAHE + PD augmentation strategy proved most effective for developing robust intelligent systems across CNN architectures, while Vision Transformers showed varying optimal configurations for autonomous system deployment.

Learning rate sensitivity varies significantly between architecture types in intelligent systems development, with CNNs performing optimally at 1 × 10⁻³, while Vision Transformers required architecture-specific tuning (1 × 10⁻⁴ for SegFormer, 1 × 10⁻² for Twins) for autonomous systems applications.

Temporal consistency analysis demonstrates that our framework maintains stable performance across video sequences, with PC values exceeding 0.94 for most configurations, validating the approach’s suitability for continuous autonomous operation scenarios where temporal stability is critical for safe navigation decisions.

The demonstrated superiority of traditional data augmentation over advanced GAN-based approaches (30% performance improvement) validates that well-designed conventional techniques can achieve robust domain generalization without requiring complex architectural modifications or expensive multi-domain training datasets, providing a cost-effective solution for autonomous mining applications.

5. Discussion

5.1. Intelligent Systems Performance Analysis and Model Robustness

The experimental results from our intelligent autonomous mining systems framework demonstrate that ResNet-50 consistently emerges as the most robust backbone architecture for autonomous applications, achieving the highest mean mIoU in both Lens Soiling (LS) and Sun Glare (SG) scenarios that commonly affect intelligent perception systems. In LS conditions, ResNet-50 outperformed MobileNetV2 and Vision Transformers (Twins, SegFormer) within the intelligent systems framework, which, while competitive for autonomous applications, failed to match its overall performance in challenging mining environments. Under SG conditions, ResNet-50 again led with a more pronounced difference compared to ViTs, which experienced a notable performance degradation under this specific visual distortion commonly encountered in autonomous mining operations.

The superior performance of ResNet-50 in intelligent autonomous systems can be attributed to its deep hierarchical feature extraction capabilities and residual connections that facilitate robust gradient flow during training for autonomous applications [

54]. The architectural design enables effective learning of invariant features across different visual conditions in mining environments, making it particularly suitable for domain generalization tasks in challenging autonomous systems deployments.

When comparing traditional DA with CycleGAN DA for domain generalization training, it can be observed that the ResNet model has greater generalization capacity to the tested datasets with traditional DA (PD + CLAHE), achieving approximately 30% superior mIoU. When implementing DA with CycleGAN, the generated images lose definition compared to the original image, making the acquisition of high-quality images for stylization non-trivial. This performance degradation in GAN-based approaches can be attributed to the introduction of synthetic artifacts that do not accurately represent the specific visual characteristics of mining environments, limiting their effectiveness for domain-specific applications where precision is paramount.

5.2. Intelligent Systems Stability and Variability Analysis

Regarding variability analysis in intelligent autonomous mining systems, LS scenarios revealed that ViTs exhibited significantly lower dispersion (3.07 standard deviation for Twins, 2.98 for SegFormer) compared to ResNet-50 (14.24), indicating more stable behavior under variations within the same degradation type for autonomous applications, albeit with lower overall inference performance in intelligent systems. MobileNetV2 occupied an intermediate position (Std = 7.79), demonstrating greater stability than ResNet-50 but less than ViT for autonomous mining system applications.

Interestingly, the stability patterns reversed under SG conditions in our intelligent systems evaluation, where ResNet-50 not only maintained leadership in mean performance but also achieved low dispersion (5.35), while ViTs exhibited increased variability in autonomous system performance. This suggests that different intelligent architectures respond differently to specific types of visual degradations commonly encountered in autonomous mining operations, with ResNet-50 showing particular resilience to illumination-based distortions such as sun glare that challenge intelligent perception systems.

The Perceptual Consistency results demonstrate that temporal stability depends on both degradation type and architecture. For all architectures, PC values remained close to 1.0, indicating that the proposed models have good temporal stability considering the studied time thresholds, which is crucial for continuous mining operations. Under LS conditions, ResNet-50 preserves better inter-frame coherence across all tested temporal thresholds. Under SG conditions, Transformers (e.g., SegFormer) maintain or improve PC as temporal thresholds increase. MobileNetV2 offers the lowest PC performance among all evaluated architectures. This temporal consistency validation is essential for autonomous mining systems where perception stability directly impacts navigation safety and operational reliability.

5.3. Data Augmentation Strategy Effectiveness for Intelligent Systems

The image-level augmentation pipeline combining PD and CLAHE consistently yielded the best results across most intelligent models for autonomous mining applications. The complementarity between brightness/color perturbation (PD) and local contrast restoration (CLAHE) appears to induce beneficial invariances against abrupt illumination changes and histogram variations in autonomous systems, which was particularly evident in improved SG performance compared to single-technique approaches for intelligent mining operations.

Notably, only the Twins model demonstrated superior performance using PD alone in our intelligent systems framework, suggesting that certain Vision Transformer architectures may have inherent capabilities to handle contrast variations without requiring additional histogram equalization for autonomous applications. This finding highlights the importance of architecture-specific augmentation strategies in domain generalization approaches for intelligent autonomous mining systems.

The substantial performance advantage of traditional augmentation techniques over advanced GAN-based approaches (30% improvement in mIoU) validates that well-designed conventional methods can achieve robust domain generalization without requiring complex architectural modifications or expensive multi-domain training datasets. This cost-effectiveness is particularly valuable in mining contexts where data collection and annotation are expensive and logistically challenging.

5.4. Learning Rate Sensitivity and Architecture-Dependent Optimization for Intelligent Systems

A critical observation for intelligent autonomous systems is the differential response of CNN and ViT architectures to Learning Rate (LR) configurations. CNN architectures performed optimally with moderate learning rates (1 × 10⁻³) for autonomous applications, while ViTs required architecture-specific tuning for intelligent systems: SegFormer achieved best results at 1 × 10⁻⁴, while Twins performed optimally at 1 × 10⁻² for autonomous mining deployments. This demonstrates that inappropriate LR selection can lead to optimization instability and suboptimal convergence in intelligent autonomous systems.

These findings underscore the necessity of hyperparameter customization based on the specific backbone implemented in the intelligent bilateral model’s contextual path for autonomous applications. The divergent optimization requirements between architectural families suggest fundamental differences in their learning dynamics and convergence characteristics for intelligent autonomous mining systems.

5.5. Intelligent Model Selection and Training Stability

Checkpoint selection based on best source domain performance during training proved effective in obtaining competitive target domain models for intelligent autonomous systems. Analysis of training curves (

Figure 7) reveals varying stability patterns across architectures: ResNet-50 exhibited moderate validation mIoU oscillations with well-defined peaks, which facilitates the identification of the optimal checkpoint. Conversely, some ViT architectures displayed broader fluctuations, making optimal model selection more challenging and potentially arbitrary.

This observation has practical implications for automated model selection pipelines in intelligent autonomous systems, suggesting that different architectures may require tailored checkpoint selection strategies or alternative validation protocols to ensure robust intelligent model deployment in autonomous mining operations.
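The checkpoint selection criterion discussed in this subsection can be sketched as follows. The validation history is hypothetical, and the oscillation measure (mean absolute change between consecutive evaluations) is an assumption introduced here as one simple way to quantify how stable a training curve is; it is not a metric used in the study.

```python
def select_checkpoint(history):
    """Return the (iteration, validation mIoU) pair with the highest mIoU.

    On ties, the earliest checkpoint is returned.
    """
    return max(history, key=lambda item: item[1])

def mean_oscillation(history):
    """Mean absolute change in validation mIoU between evaluations."""
    values = [miou for _, miou in history]
    changes = [abs(b - a) for a, b in zip(values, values[1:])]
    return sum(changes) / len(changes)

# Hypothetical validation curve on source-domain images:
# (training iteration, validation mIoU in %).
history = [(1000, 80.0), (2000, 88.0), (3000, 91.0), (4000, 89.0)]
best_iteration, best_miou = select_checkpoint(history)
oscillation = mean_oscillation(history)
```

A curve with a large oscillation value and several near-equal peaks makes the selected checkpoint sensitive to small fluctuations, which is the situation described for some ViT backbones.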

5.6. Computational Efficiency Trade-Offs for Autonomous Mining Systems

From an intelligent systems implementation perspective, MobileNetV2 offers optimal computational efficiency with the highest inference speed (55.0 FPS) and smallest model size (53.45 MB), making it ideal for resource-constrained embedded intelligent systems in autonomous mining vehicles. In contrast, ResNet-50 and ViTs sacrifice inference latency for improved robustness in intelligent applications, achieving better domain generalization at the cost of computational overhead for autonomous systems.

The efficiency-performance trade-off analysis for intelligent autonomous systems reveals that models with higher parameter counts generally exhibit superior domain generalization capabilities for autonomous mining applications. This suggests a fundamental relationship between model capacity and generalization ability in intelligent systems, which must be carefully considered when selecting architectures for specific autonomous deployment scenarios.

When incorporating latency and peak memory into the analysis, the landscape for embedded system implementation becomes clearer: MobileNetV2 remains the most “edge-friendly” option due to its low latency (18.19 ms) and efficient memory usage (608.8 MB), ideal when vehicle control requires rapid responses and hardware is limited. ResNet-50 represents a moderate cost in time and memory but offers a better compromise between efficiency and robustness (32.96 ms latency, 1485.1 MB memory), suitable when the system can tolerate slightly higher computational load. In contrast, Transformer-based backbones significantly increase latency (77.63–117.25 ms) and memory usage (7340–7380 MB), making them unsuitable for implementation in embedded mining systems.

5.7. Practical Implications for Intelligent Autonomous Mining Applications

The findings have significant implications for intelligent autonomous mining vehicle deployment and broader autonomous systems applications. The superior robustness of ResNet-50 makes it suitable for intelligent applications where accuracy is paramount, such as safety-critical navigation scenarios in autonomous mining operations. Meanwhile, MobileNetV2’s computational efficiency makes it attractive for real-time intelligent applications with strict latency requirements or power-constrained embedded systems in autonomous mining vehicles.

The demonstrated effectiveness of traditional data augmentation techniques provides a practical, cost-effective approach to improving intelligent model robustness without requiring expensive multi-domain training datasets or complex architectural modifications for autonomous systems. This is particularly valuable in autonomous mining contexts where data collection and annotation are costly and logistically challenging for intelligent systems development.

Furthermore, our comparative analysis with recent state-of-the-art domain generalization methods demonstrates that our approach achieves superior performance (5.8% and 4.8% higher mIoU for LS and SG conditions respectively) while maintaining computational efficiency. This validates the effectiveness of traditional augmentation approaches over more complex domain generalization techniques, providing a practical solution for industrial deployment.

5.8. Limitations and Future Considerations for Intelligent Autonomous Systems

While the study demonstrates promising results for intelligent autonomous mining systems, several limitations should be acknowledged. The evaluation was conducted on a relatively small dataset from a single mining environment, which may limit the generalizability of findings across different autonomous mining operations and intelligent systems deployments. Although our data augmentation pipeline generates 155,092 distinct training images, which is competitive with comparable studies (MineSDS [

33]: 4547 images, TrRoad-Net [

36]: 3939 images), future validation across multiple mining sites with diverse geographical and environmental characteristics would strengthen the generalizability claims for intelligent autonomous systems. Additionally, the focus on two specific visual degradations (lens soiling and sun glare) represents only a subset of potential challenges in real-world autonomous mining environments that affect intelligent perception systems.

5.9. Cross-Geographic Generalization Analysis

While our current evaluation focuses on scenarios from the AutoMine dataset collected in a single mining environment, the selected scenarios represent diverse operational conditions that are representative of global mining operations. The AutoMine dataset was specifically designed to capture the variability found across different mining sites [

38], including:

Multiple vehicle platforms (SUV, wide-body mining truck, ordinary mining truck).

Various time-of-day conditions affecting illumination.

Different weather conditions impacting visibility.

Diverse surface textures and materials common in open-pit mining.

To address geographic generalization concerns, future work should validate performance across multiple mining sites with different geological characteristics. However, our approach’s effectiveness is supported by the superior performance compared to state-of-the-art domain generalization methods (

Table 13), suggesting robust transferability. The traditional augmentation techniques (PD + CLAHE) specifically target photometric and contrast variations that are consistent across different mining environments globally.

Additionally, we propose a validation framework for cross-site deployment:

Synthetic data generation using geological simulators for preliminary validation.

Progressive deployment with continuous learning capabilities.

Standardized evaluation protocols across mining industry partners.

Future work should consider expanding the evaluation to include additional degradation types such as dust storms, fog, and extreme lighting conditions commonly encountered in autonomous mining operations that challenge intelligent systems. For improving system reliability under such conditions, synthetic data generation could be implemented through traditional computer vision techniques that apply specific visual degradations [

52,

53] or through open-source simulators for autonomous driving environments [

55]. Furthermore, while temporal consistency has been assessed here through PC metrics for time windows of up to 3 s, extending this analysis to longer video sequences could provide further insight into the practical deployment of these models in continuous autonomous operation scenarios.

5.9.1. Multi-Task Learning Extensions

As future work, the studied architectures will be considered for extending our system toward multi-task learning with shared encoders and multiple heads to integrate tasks that assist vehicular navigation. Recent literature shows that multi-task perception models share representations and reduce latency, improving overall system robustness [

56,

57]. The present study establishes the foundation for developing comprehensive multi-task intelligent models for autonomous mining applications.

5.9.2. Standardization and Interoperability Framework

To enable different intelligent systems to communicate and collaborate effectively within autonomous mining operations, we propose two complementary standardization pillars: (1) In-vehicle: implementing a data contract and linking the development environment with automotive software following the ROS2↔Adaptive AUTOSAR (ASIRA) integration pattern [

58]; (2) In mining operations: publishing perception outputs to industrial systems through an OPC UA information model integrated with private 5G networks [

59]. These specifications enable intelligent autonomous systems from different manufacturers to communicate and collaborate consistently within autonomous mining ecosystems.

6. Conclusions

This study presents a comprehensive evaluation of intelligent autonomous systems approaches for robust perception in open-pit mining environments, specifically addressing the critical challenge of sensor-based visual degradations in autonomous mining vehicle systems. The implemented intelligent systems methodology achieves competitive performance while proposing a practical framework designed to facilitate deployment in real-world autonomous mining operations.

The experimental findings yield several key contributions to the field:

First, ResNet-50 consistently emerged as the most robust backbone architecture for autonomous mining applications, achieving the highest mean mIoU in both degradation scenarios (84.58% for lens soiling and 80.11% for sun glare). This result supports the architecture’s suitability for safety-critical autonomous mining systems where reliable perception is paramount. Its deep hierarchical feature extraction and residual connections likely facilitate domain-invariant representation learning, which makes it well suited to deployment in challenging mining environments.

Second, although MobileNetV2 did not match the accuracy of ResNet-50, it delivered competitive performance with the best computational efficiency (55.0 FPS, 53.45 MB model size). With low latency (18.19 ms) and modest memory usage (608.8 MB), it is the most edge-friendly option and is well suited to embedded deployment in autonomous mining vehicles, where real-time performance and limited computational resources are critical system requirements.

Third, Vision Transformer backbones, although not surpassing CNNs in mean performance, were more stable under lens soiling, with a markedly lower standard deviation (3.07 for Twins vs. 14.24 for ResNet-50), indicating more predictable behavior across varying degradation intensities. This stability is valuable for maintaining consistent autonomous system performance in dynamic mining environments.

Fourth, traditional data augmentation techniques (Photometric Distortion and CLAHE) proved most effective across CNN architectures, demonstrating that well-designed augmentation strategies can achieve robust domain generalization in autonomous mining systems without complex architectural modifications or expensive multi-domain training datasets. Our comparative analysis shows that traditional augmentation outperforms advanced GAN-based domain generalization methods by approximately 30%, which supports the cost-effectiveness of conventional techniques for mining-specific applications.

Fifth, temporal consistency validation through Perceptual Consistency metrics demonstrates that our framework maintains stable performance across video sequences, with PC values exceeding 0.94 for most configurations. This temporal stability is crucial for continuous autonomous operation scenarios where perception reliability directly impacts navigation safety and operational effectiveness in mining environments.

Sixth, the computational efficiency analysis reveals clear trade-offs between performance and resource requirements, enabling informed architecture selection based on specific deployment constraints. Transformer-based architectures, while more stable, require substantially more computational resources (77.63–117.25 ms latency, 7340–7380 MB memory), which limits their practicality in embedded mining systems.
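The reported latencies can be related to throughput with a simple reciprocal, assuming single-stream inference (batch size 1) with no pipelining. Under that assumption, the 18.19 ms reported for MobileNetV2 corresponds to roughly 55 FPS, consistent with the reported 55.0 FPS. The helper name `latency_ms_to_fps` is illustrative only.

```python
def latency_ms_to_fps(latency_ms: float) -> float:
    """Frames per second when one frame is processed at a time (batch size 1)."""
    if latency_ms <= 0:
        raise ValueError("latency must be positive")
    return 1000.0 / latency_ms


# Latencies reported in this study (milliseconds per frame).
reported = {
    "MobileNetV2": 18.19,
    "Transformer backbones (lowest latency)": 77.63,
    "Transformer backbones (highest latency)": 117.25,
}

for name, ms in reported.items():
    print(f"{name}: {latency_ms_to_fps(ms):.1f} FPS")
```

Under the same assumption, the Transformer latency range of 77.63–117.25 ms corresponds to roughly 12.9 down to 8.5 FPS.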

The practical implications of this intelligent systems work extend beyond mining applications, providing a foundation for developing robust intelligent autonomous systems in other challenging environments where multi-domain training data is expensive or unavailable. The methodology offers a cost-effective solution for enhancing autonomous system robustness while maintaining computational efficiency suitable for industrial intelligent systems deployment.

Furthermore, this research contributes to the broader field of intelligent autonomous systems by demonstrating that traditional augmentation techniques can be effectively integrated into modern autonomous vehicle architectures to achieve robust performance under real-world operational conditions. The findings challenge the assumption that complex generative approaches are necessary for effective domain generalization, showing that well-designed conventional techniques can achieve superior results while remaining practical to deploy. They also support the development of mining automation systems that operate reliably in challenging environments within the computational constraints typical of autonomous vehicle deployments.

This research also contributes to the standardization of intelligent autonomous mining systems by proposing evaluation protocols and architectural guidelines that can be adopted across the mining industry. The demonstrated effectiveness of traditional augmentation techniques provides a standardized, cost-effective approach to developing robust intelligent perception systems without requiring manufacturer-specific proprietary solutions.

The demonstrated effectiveness of our intelligent systems approach validates the potential for deploying robust autonomous mining vehicles in real-world operations, contributing to the advancement of Industry 4.0 mining ecosystems where intelligent autonomous systems play a critical role in enhancing operational efficiency and safety.

While this study demonstrates promising results, several limitations should be acknowledged. The evaluation was conducted on a relatively small dataset from a single mining environment, which may limit how well the findings transfer to other autonomous mining operations. Although our data augmentation pipeline generates 155,092 distinct training images, which is competitive with comparable studies, validation across multiple mining sites with diverse geographical and environmental characteristics is still needed to support broader generalizability claims.

7. Future Directions for Intelligent Autonomous Mining Systems

7.1. Advanced Intelligent Data Augmentation Strategies for Autonomous Systems

Future research could focus on optimizing traditional data augmentation through adaptive strategies. Stochastic application approaches, in which the probability and intensity of each transformation are progressively modulated throughout training to maximize diversity, are a promising avenue for improving domain generalization in autonomous applications [54]. Such adaptive mechanisms could automatically adjust augmentation parameters based on model performance and training progress, potentially achieving better robustness with less hyperparameter tuning. These strategies could be integrated into frameworks that continuously optimize perception capabilities for autonomous mining environments.
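A minimal sketch of such a schedule is given below, assuming a linear ramp of both the application probability and the transformation intensity over training. The ramp shape, the default ranges, and the function names are assumptions chosen for illustration. They are neither the method of [54] nor the Photometric Distortion configuration used in this study, and the jitter operates on plain lists of grayscale values in [0, 1] to keep the example dependency-free.

```python
import random


def linear_schedule(step: int, total_steps: int, start: float, end: float) -> float:
    """Linearly interpolate from start (step 0) to end (last step)."""
    if total_steps <= 1:
        return end
    frac = min(max(step / (total_steps - 1), 0.0), 1.0)
    return start + frac * (end - start)


def photometric_jitter(pixels, intensity: float, rng: random.Random):
    """Random contrast and brightness change on grayscale values in [0, 1]."""
    contrast = 1.0 + rng.uniform(-intensity, intensity)
    brightness = rng.uniform(-intensity, intensity)
    return [min(max(contrast * p + brightness, 0.0), 1.0) for p in pixels]


def scheduled_augment(pixels, step, total_steps, rng,
                      p_range=(0.1, 0.8), intensity_range=(0.05, 0.4)):
    """Apply the jitter with a probability and strength that grow with step."""
    prob = linear_schedule(step, total_steps, *p_range)
    intensity = linear_schedule(step, total_steps, *intensity_range)
    if rng.random() < prob:
        return photometric_jitter(pixels, intensity, rng)
    return list(pixels)  # unchanged copy when the transform is skipped
```

A performance-driven variant could replace `step / (total_steps - 1)` with a signal derived from validation metrics; that extension is not shown here.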

To improve system reliability and generalization to complex visual situations commonly encountered in mining environments that are not contemplated in the present study, such as rainy and foggy weather, synthetic data could be generated by implementing traditional computer vision pipeline techniques that apply specific visual degradation augmentation, as described in [52,53], or by utilizing open-source simulators for autonomous driving data generation in simulated environments, as outlined in [55]. These approaches would enable improved system reliability under adverse conditions without requiring expensive real data collection in hazardous environments.

7.2. Temporal Consistency and Video-Based Intelligent Segmentation Systems

The current evaluation was conducted exclusively on individual images, a condition that differs significantly from real-world autonomous mining scenarios, where perception runs on continuous image sequences. Although we have demonstrated temporal consistency through Perceptual Consistency metrics, a critical future direction is to extend the approach to video-based semantic segmentation that incorporates temporal information between consecutive frames. Leveraging these temporal dependencies through feature aggregation or temporal attention mechanisms could reduce ambiguity in degraded regions, as demonstrated in [60], while maintaining robustness against degradations such as lens soiling and sun glare that commonly affect autonomous mining systems.

Video-based intelligent approaches could also enable the development of temporal consistency constraints that ensure smooth segmentation transitions across frames, reducing flickering artifacts and improving the overall reliability of the intelligent perception system in dynamic autonomous mining environments. This advancement would be crucial for maintaining stable autonomous system performance during continuous mining operations.
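As a simple point of reference for what a temporal consistency constraint does, the sketch below smooths per-pixel class probabilities with an exponential moving average and counts how often predicted labels change between consecutive frames. This is an assumed baseline for illustration only: it is not the Perceptual Consistency metric, not the method of [60], and it applies no motion compensation, so it is only meaningful when the scene changes slowly between frames. The names `ema_smooth`, `flicker_rate`, and the value of `alpha` are hypothetical.

```python
def argmax(values):
    """Index of the largest value (first one on ties)."""
    return max(range(len(values)), key=values.__getitem__)


def ema_smooth(frames, alpha=0.6):
    """Exponentially smooth per-pixel class probabilities over time.

    frames: list of frames; each frame is a list of pixels; each pixel is a
    list of class probabilities. alpha is the weight of the current frame.
    """
    smoothed, prev = [], None
    for frame in frames:
        if prev is None:
            cur = [list(px) for px in frame]  # first frame passes through
        else:
            cur = [[alpha * c + (1.0 - alpha) * p for c, p in zip(px, ppx)]
                   for px, ppx in zip(frame, prev)]
        smoothed.append(cur)
        prev = cur
    return smoothed


def flicker_rate(frames):
    """Fraction of pixel labels that change between consecutive frames."""
    changes = total = 0
    for a, b in zip(frames, frames[1:]):
        for pa, pb in zip(a, b):
            changes += argmax(pa) != argmax(pb)
            total += 1
    return changes / total if total else 0.0
```

Comparing `flicker_rate(frames)` with `flicker_rate(ema_smooth(frames))` gives a rough indication of how much label flicker the smoothing removes, at the cost of a delayed response to genuine scene changes.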

7.3. Multi-Task Intelligent Learning and Real-World Autonomous System Deployment

This study establishes the foundation for developing comprehensive multi-task models with potential implementation in real autonomous mining platforms, such as ADAS for mining applications [61], capable of warning operators about obstacles on the path while maintaining road safety under adverse conditions. Future work could integrate traversable area segmentation with object detection, depth estimation, and trajectory planning modules to create comprehensive perception systems for autonomous mining vehicles.

In particular, we will consider extending the studied architectures toward multi-task learning, with a shared encoder and multiple task-specific heads, to integrate tasks that assist vehicular navigation. Recent literature reports that multi-task perception models share representations across tasks and reduce latency, improving overall system robustness. Such models could simultaneously perform perception, navigation, and decision-making tasks within integrated autonomous mining systems [56,57].

7.4. Extended Environmental Conditions for Intelligent Autonomous Systems

Future evaluations of intelligent autonomous mining systems should encompass additional visual degradations commonly encountered in mining operations, including dust storms, fog, extreme lighting variations, and weather-related distortions that challenge intelligent perception systems. Expanding the evaluation to multiple mining sites with diverse geographical and environmental characteristics would strengthen the generalizability claims for intelligent autonomous systems and provide more robust validation of the proposed intelligent framework for autonomous mining applications.

7.5. Edge Computing and Hardware Optimization for Intelligent Autonomous Systems

Given the computational constraints of autonomous mining environments, future work should investigate hardware-specific optimizations for deploying these models on edge computing platforms integrated within autonomous mining vehicles. This includes exploring model quantization, pruning techniques, and specialized hardware accelerators to achieve favorable performance-efficiency trade-offs for real-time operation. These optimizations are critical for practical deployment in resource-constrained autonomous mining operations.
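The arithmetic behind two of these techniques can be sketched in a few lines. The example below shows unstructured magnitude pruning and symmetric per-tensor 8-bit quantization on a flat list of weights. It is a didactic sketch under simplifying assumptions, not a deployment recipe: practical deployments would rely on the tooling of the chosen inference framework, and the function names are hypothetical.

```python
def magnitude_prune(weights, sparsity):
    """Zero out the given fraction of weights with the smallest magnitude."""
    if not 0.0 <= sparsity <= 1.0:
        raise ValueError("sparsity must lie in [0, 1]")
    k = int(len(weights) * sparsity)  # number of weights to remove
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    drop = set(order[:k])
    return [0.0 if i in drop else w for i, w in enumerate(weights)]


def quantize_int8(weights):
    """Symmetric per-tensor quantization to integer codes in [-127, 127]."""
    max_abs = max((abs(w) for w in weights), default=0.0)
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    codes = [max(-127, min(127, round(w / scale))) for w in weights]
    return codes, scale


def dequantize(codes, scale):
    """Map integer codes back to approximate floating-point weights."""
    return [c * scale for c in codes]
```

With this scheme the reconstruction error of each weight is bounded by half the quantization step (`scale / 2`), which is one way to reason about the accuracy cost before measuring mIoU on the quantized model.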

7.6. Continuous Learning and Adaptive Intelligent Systems

Developing mechanisms for continuous model adaptation to new environmental conditions encountered during autonomous mining operations is another promising research direction. Such adaptive systems could progressively improve their robustness by leveraging operational data while maintaining the safety and reliability standards required for autonomous mining applications. This continuous learning capability would allow autonomous mining systems to adapt to changing operational conditions, enhancing their long-term effectiveness in real-world deployments.

7.7. Integrated Intelligent Mining Ecosystems

Future research should focus on developing comprehensive intelligent autonomous mining ecosystems that integrate multiple perception modules, decision-making systems, and control algorithms. This includes investigating how the proposed robust intelligent perception system can be integrated with other intelligent subsystems such as path planning, obstacle avoidance, fleet coordination systems, and centralized mining operation management to create fully autonomous intelligent mining operations. Such integrated approaches would represent a significant advancement toward Industry 4.0 mining systems where intelligent autonomous vehicles operate as part of larger interconnected intelligent ecosystems.

7.8. Standardization and Interoperability for Intelligent Autonomous Mining Systems