In this Section, we employ publicly available datasets to validate the effectiveness of the methods proposed earlier and discuss them.

4.1. Datasets

In order to fully verify the generalization of the proposed method, experiments were conducted using the bearing dataset from Harbin Institute of Technology [27] and the bearing fault dataset from Jiangnan University [28].

- A. The Bearing Dataset of Harbin Institute of Technology (HIT)

The engine used in the test bench of this dataset retains a dual rotor structure (low-pressure/high-pressure compressor and turbine) and key bearings, with six sensors installed: two displacement sensors (low-pressure rotor) and four acceleration sensors (casing). The test was conducted under 28 sets of high/low pressure speed combinations (speed range 1000–6000 rpm) with a sampling frequency of 25 kHz. The collected data included acceleration vibration signals and displacement vibration signals of the low pressure rotor, totaling 2412 sets of data. Each set was a truncated segment of the 15 s vibration signal with 20,480 data points.

Faults (depth 0.5 mm, length 0.5/1.0 mm) were machined into the inner and outer rings of the intermediate bearing by wire cutting to simulate the periodic impacts of real faults. The dataset contains four types of labels: one bearing with an outer ring fault, two bearings with inner ring faults, and healthy bearings. Detailed information on the bearings is shown in Table 1.

- B. The Bearing Dataset of Jiangnan University (JNU)

The bearing fault dataset of Jiangnan University has a sampling frequency of 50 kHz and contains four health states: normal state, inner ring fault, outer ring fault, and rolling element fault. During the experiment, an accelerometer was used to collect vibration signals at three different speeds of 600, 800, and 1000 r/min. Combining the four states with the three working conditions therefore gives a total of 12 rolling bearing categories. In this study, the sliding window method is used to segment each signal into fixed-length samples of 2048 data points. As a result, a total of 89,850 samples were extracted, with a total of 183,091,200 data points.

4.2. Data Preparation and Evaluation

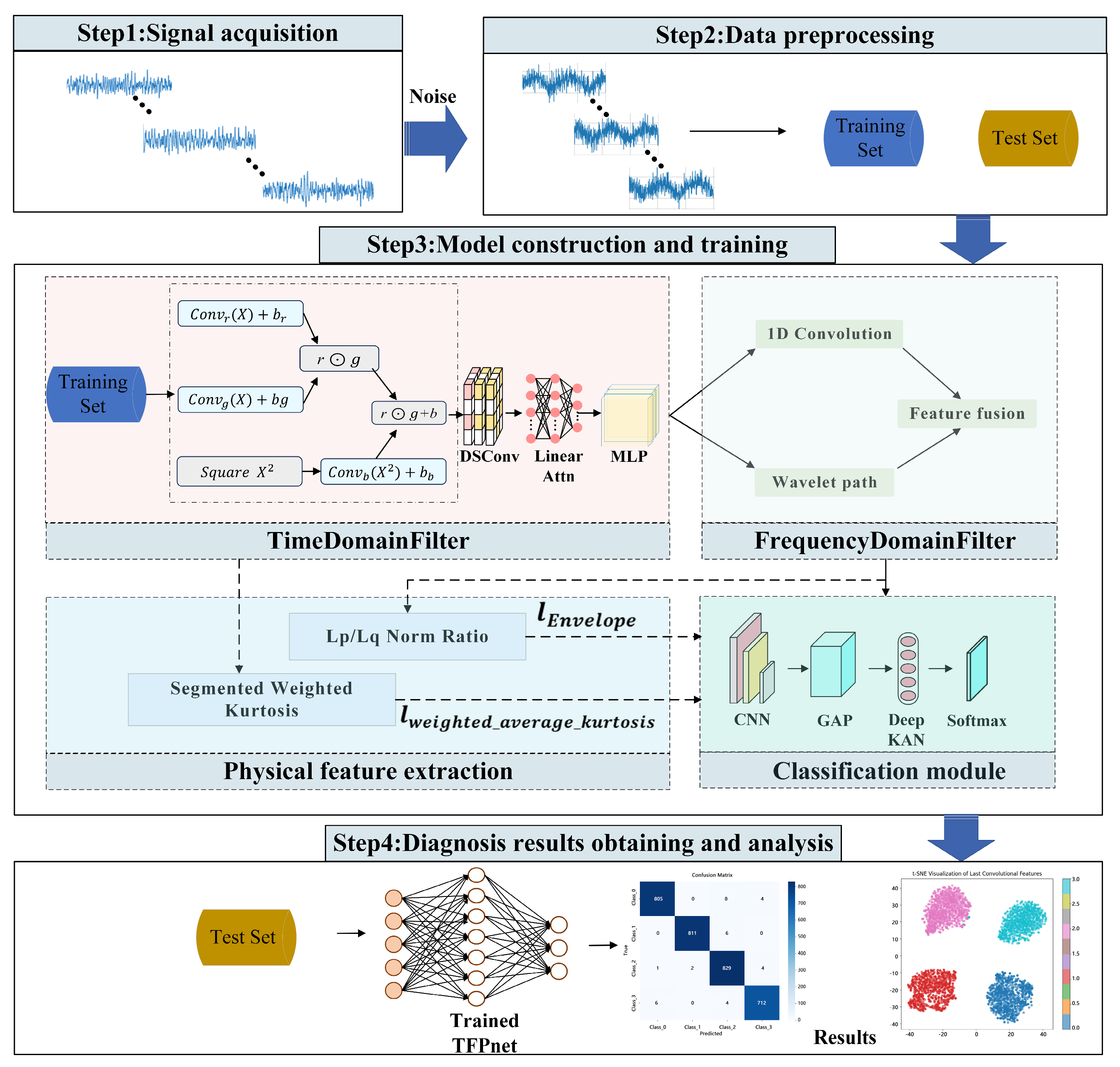

To improve the generalization ability and robustness of the model under strong noise environments, the data preprocessing in this article includes multiple steps, such as sample segmentation, noise injection, and data augmentation.

The original vibration signal is divided into fixed-length segments for subsequent modeling. The duration of a single-channel acceleration signal is 15 s, with a sampling rate of 25 kHz. Each signal is divided into a structured sequence of sub-samples using a sliding window with a length of 2048 points. The training, validation, and testing sets are constructed in proportions of 70%, 20%, and 10%, respectively, and the split is performed at the level of the original samples, so that all windows of the same original sample fall into a single subset. This avoids data leakage between subsets.
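The segmentation and leakage-free split described above can be sketched as follows. This is a minimal illustration rather than the authors' implementation: the function names, the non-overlapping stride, the random seed, and the synthetic records are all assumptions, since the text does not state the window overlap or how records are assigned to subsets.

```python
import numpy as np

def segment_signal(signal, window=2048, stride=2048):
    """Cut a 1-D signal into fixed-length windows.
    The stride (here equal to the window, i.e. no overlap) is an assumption."""
    n = (len(signal) - window) // stride + 1
    if n <= 0:
        return np.empty((0, window), dtype=signal.dtype)
    return np.stack([signal[i * stride: i * stride + window] for i in range(n)])

def split_by_record(num_records, ratios=(0.7, 0.2, 0.1), seed=0):
    """Assign whole records to train/val/test so that windows cut from one
    record never end up in more than one subset."""
    rng = np.random.default_rng(seed)
    order = rng.permutation(num_records)
    n_train = int(round(ratios[0] * num_records))
    n_val = int(round(ratios[1] * num_records))
    return order[:n_train], order[n_train:n_train + n_val], order[n_train + n_val:]

# Toy data: 10 synthetic records of 20,480 points each (placeholder values).
records = [np.random.default_rng(i).standard_normal(20480) for i in range(10)]
train_ids, val_ids, test_ids = split_by_record(len(records))

# Windows are cut only after the record-level split has been fixed.
train_windows = np.concatenate([segment_signal(records[i]) for i in train_ids])
```

Splitting record indices first and windowing afterwards is what guarantees that no original sample contributes windows to more than one subset.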

Gaussian white noise of different intensities is added to the raw data samples before they are fed to the model, which simulates vibration-based fault diagnosis in real noisy environments. The signal-to-noise ratio (SNR) is commonly used to quantify the noise intensity, and its calculation is given in Equation (34).

$$\mathrm{SNR} = 10\log_{10}\left(\frac{P_{\mathrm{signal}}}{P_{\mathrm{noise}}}\right) \tag{34}$$

where $P_{\mathrm{signal}}$ and $P_{\mathrm{noise}}$ are the power of the original signal and the noise signal, respectively. The smaller the signal-to-noise ratio, the stronger the noise. When the signal-to-noise ratio is less than 0 dB, the power of the noise signal is greater than that of the original signal, which corresponds to a strong noise environment. The signal-to-noise ratio range in the experiment was set to −8 to 0 dB. Subsequently, a data augmentation strategy was applied: the window signal was randomly scaled to enhance the robustness of the model to changes in signal strength, and fifteen percent of the frequency band was randomly masked in the frequency domain to simulate missing frequency components.
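As a hedged illustration of the noise injection and augmentation steps, the sketch below adds white Gaussian noise at a target SNR, applies a random gain, and zeroes part of the spectrum. The function names, the gain range of 0.8 to 1.2, the choice of one contiguous masked band, and the 500 Hz test tone are assumptions made for the example; the text specifies only the SNR range and the 15% masking ratio.

```python
import numpy as np

def add_awgn(x, snr_db, rng):
    """Add white Gaussian noise whose expected power satisfies
    10*log10(P_signal / P_noise) = snr_db."""
    p_signal = np.mean(x ** 2)
    p_noise = p_signal / (10 ** (snr_db / 10))
    noise = rng.standard_normal(x.shape) * np.sqrt(p_noise)
    return x + noise, noise

def random_scale(x, rng, low=0.8, high=1.2):
    """Multiply the window by a random gain (the range is an assumption)."""
    return x * rng.uniform(low, high)

def random_band_mask(x, rng, fraction=0.15):
    """Zero one contiguous band covering `fraction` of the rFFT bins
    (contiguity is an assumption) and return the time-domain signal."""
    spec = np.fft.rfft(x)
    width = int(round(fraction * len(spec)))
    start = rng.integers(0, len(spec) - width + 1)
    spec[start:start + width] = 0
    return np.fft.irfft(spec, n=len(x))

rng = np.random.default_rng(0)
t = np.arange(2048) / 25_000              # one 2048-point window at 25 kHz
clean = np.sin(2 * np.pi * 500 * t)       # placeholder test tone
noisy, noise = add_awgn(clean, snr_db=-8, rng=rng)

# Empirical SNR of this realization; it fluctuates slightly around -8 dB.
measured_snr = 10 * np.log10(np.mean(clean ** 2) / np.mean(noise ** 2))
augmented = random_band_mask(random_scale(noisy, rng), rng)
```

Because the noise is scaled from the measured signal power of each window, the same function can be reused for every SNR level in the −8 to 0 dB range.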

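The performance of the proposed method is evaluated with commonly used indicators, including Accuracy (Acc), false positive rate (FPR), Recall, Precision, and F1 score, as defined in Equations (35)–(39). The FPR represents the proportion of samples that are actually “healthy” but incorrectly identified as “faulty” by the model. The F1 score in Equation (39) comprehensively reflects the model's balance between the Precision and Recall of fault types.

$$\mathrm{Acc} = \frac{TP + TN}{TP + TN + FP + FN} \tag{35}$$

$$\mathrm{FPR} = \frac{FP}{FP + TN} \tag{36}$$

$$\mathrm{Recall} = \frac{TP}{TP + FN} \tag{37}$$

$$\mathrm{Precision} = \frac{TP}{TP + FP} \tag{38}$$

$$F1 = \frac{2 \times \mathrm{Precision} \times \mathrm{Recall}}{\mathrm{Precision} + \mathrm{Recall}} \tag{39}$$

where TP, TN, FP, and FN represent the number of true positives, true negatives, false positives, and false negatives, respectively.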
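For a multi-class task, these indicators are often computed per class in a one-vs-rest fashion from the confusion matrix. The sketch below shows one way to do this, using the standard definitions of the five indicators; the helper names and the 3-class confusion matrix are invented for illustration and are not results from this paper.

```python
def one_vs_rest_counts(cm, k):
    """Return (TP, TN, FP, FN) for class k, where cm[i][j] is the number of
    samples with true class i that were predicted as class j."""
    total = sum(sum(row) for row in cm)
    tp = cm[k][k]
    fn = sum(cm[k]) - tp                  # class k predicted as something else
    fp = sum(row[k] for row in cm) - tp   # other classes predicted as class k
    tn = total - tp - fn - fp
    return tp, tn, fp, fn

def metrics_from_counts(tp, tn, fp, fn):
    """Standard Acc, FPR, Recall, Precision and F1 from the four counts."""
    acc = (tp + tn) / (tp + tn + fp + fn)
    fpr = fp / (fp + tn) if (fp + tn) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    return {"acc": acc, "fpr": fpr, "recall": recall,
            "precision": precision, "f1": f1}

# Hypothetical 3-class confusion matrix (rows: true class, columns: predicted).
cm = [[48, 2, 0],
      [3, 45, 2],
      [0, 1, 49]]

counts = one_vs_rest_counts(cm, 0)
class0 = metrics_from_counts(*counts)
```

Averaging the per-class values over all classes gives macro-averaged indicators; whether the reported values are macro-averaged or computed differently is not stated in the text.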

4.3. Results and Discussion

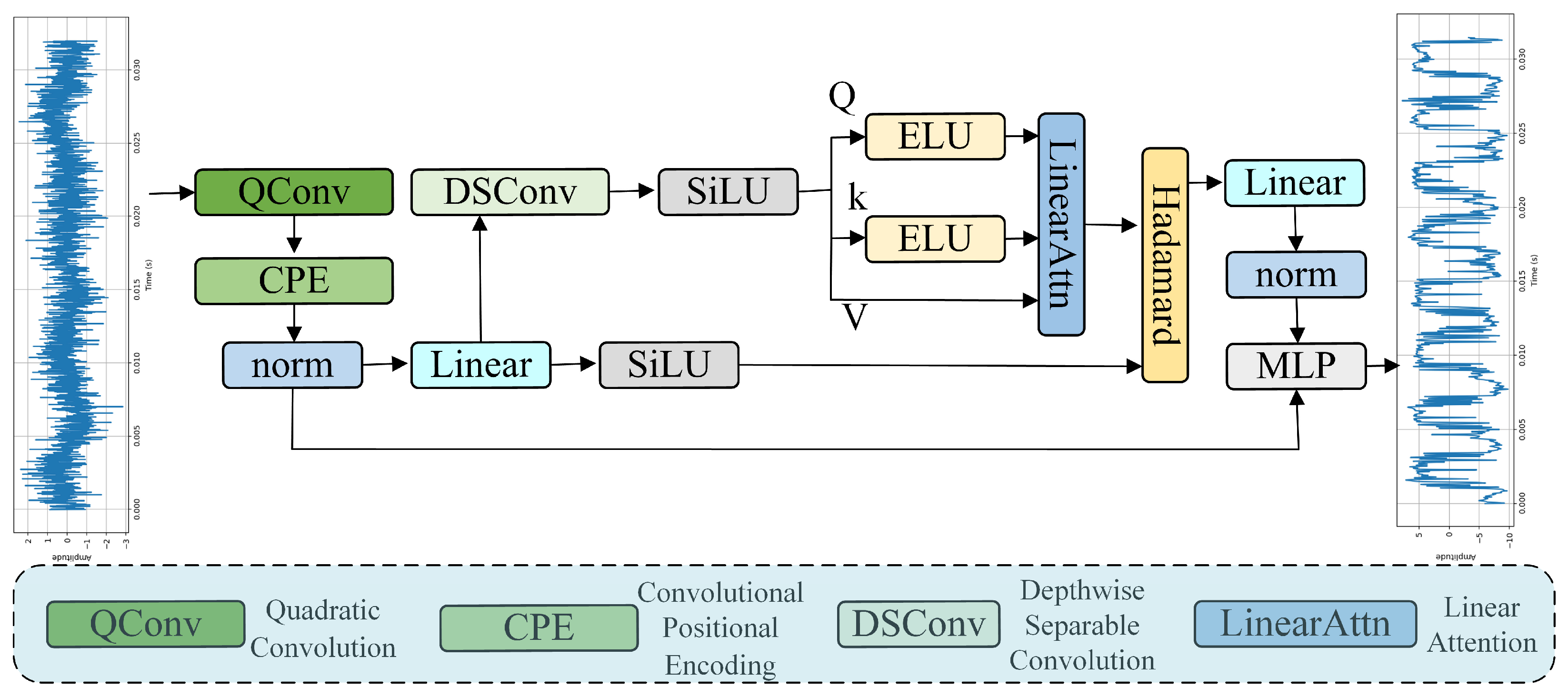

In this Section, we analyze the performance of the proposed TFPNet model through multiple sets of experimental results.

- A. Classification Results of TFPNet Model on Two Datasets

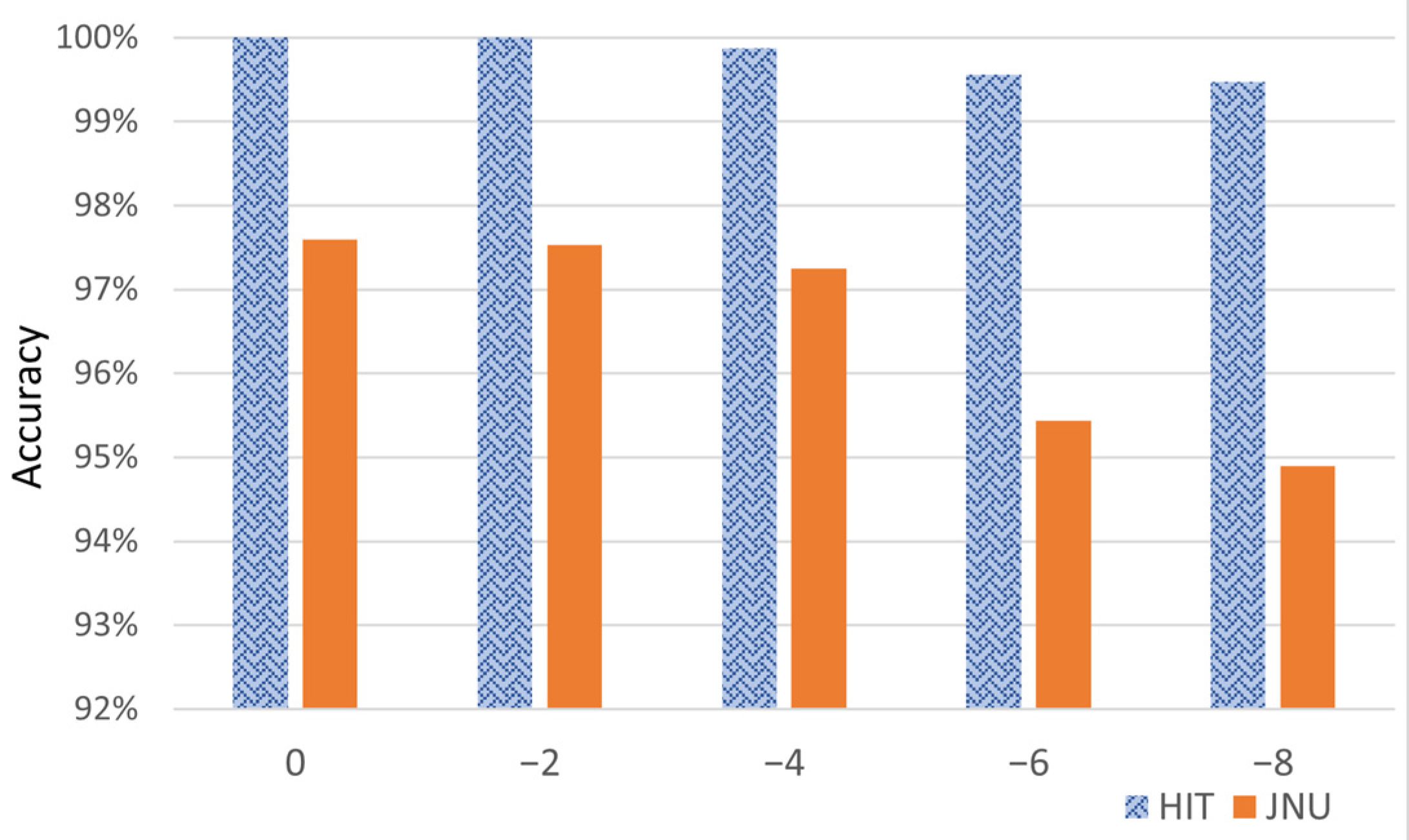

To evaluate the model’s stability under noise, we train and test the proposed model 10 times and report the average test accuracy as the final diagnostic performance. The accuracy of the proposed model on the two datasets under different signal-to-noise ratios (SNR) is given in Figure 6. As the noise intensity increases, both datasets exhibit a gradual decline in classification accuracy.

The proposed TFPNet model maintains high fault detection performance even in strong noise backgrounds, demonstrating good robustness. Taking the HIT dataset as an example, the proposed model achieves perfect classification accuracy (100%) under an SNR of 0 dB, and even in a high-noise environment with an SNR of −8 dB, the model maintains a high accuracy of 98.78%. Similarly, on the JNU dataset, the model delivers robust performance, attaining 98.28% accuracy at 0 dB and still achieving 93.65% under the challenging −8 dB condition. These results demonstrate the model’s strong generalization capability across different scenarios, even in the presence of intense noise interference.

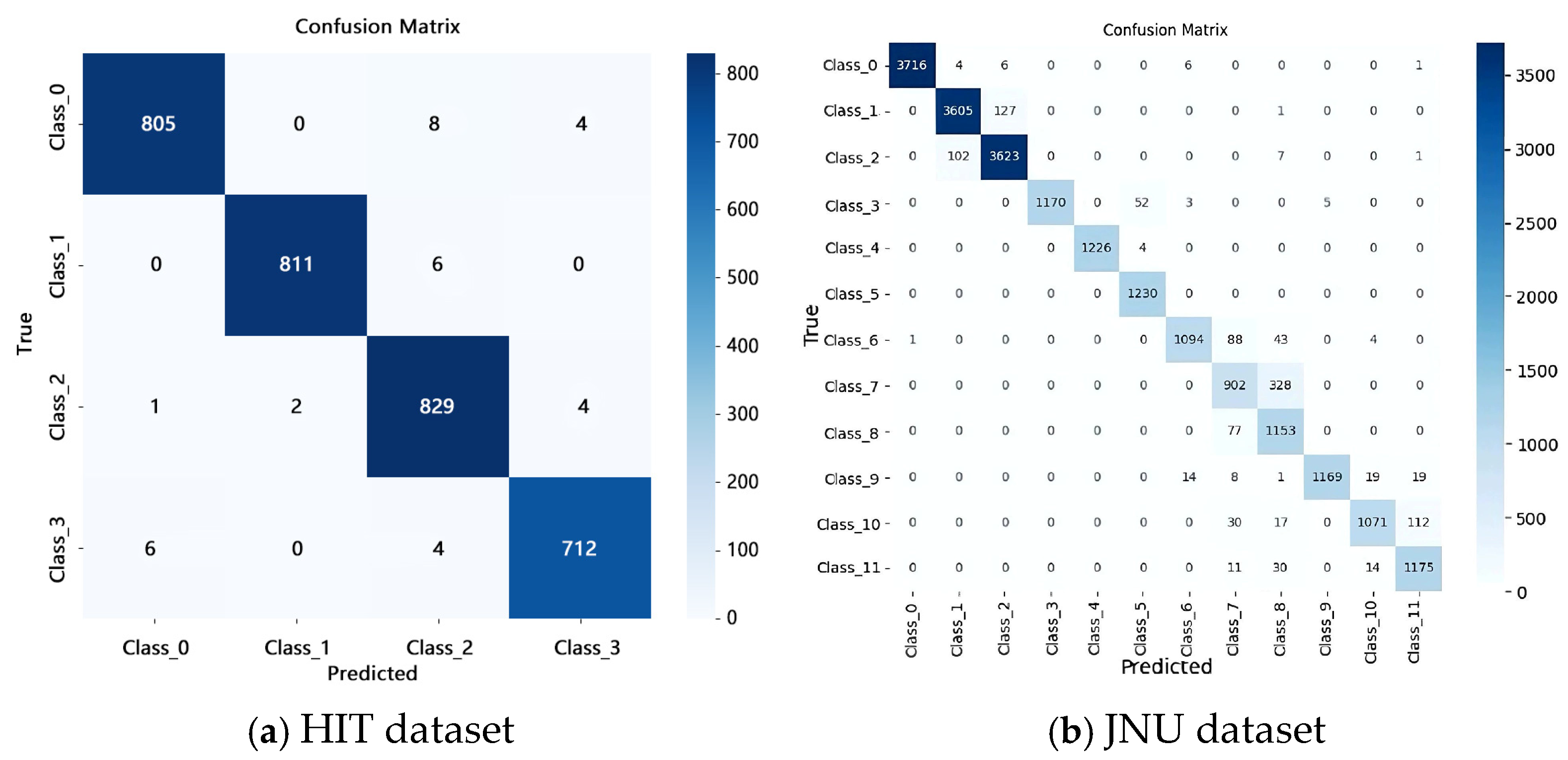

To further verify the discriminative ability of the proposed model in handling various types of bearing faults, confusion matrices are utilized to illustrate the correspondence between classification results and true labels. Diagnostic results on two datasets with a signal-to-noise ratio (SNR) of −8 dB demonstrate that TFPNet maintains high accuracy and fault distinction even under strong noise conditions. These results are given in Figure 7.

We conduct a qualitative analysis of data distribution using t-SNE under varying noise conditions (−8 dB, −4 dB, and 0 dB) to further demonstrate the model’s ability to classify and separate different sample categories in the feature space. The resulting two-dimensional embeddings are shown in Figure 8. Even at the lowest SNR of −8 dB, samples of the same class form compact clusters, while heterogeneous classes remain clearly separated, confirming the model’s robustness against strong noise.

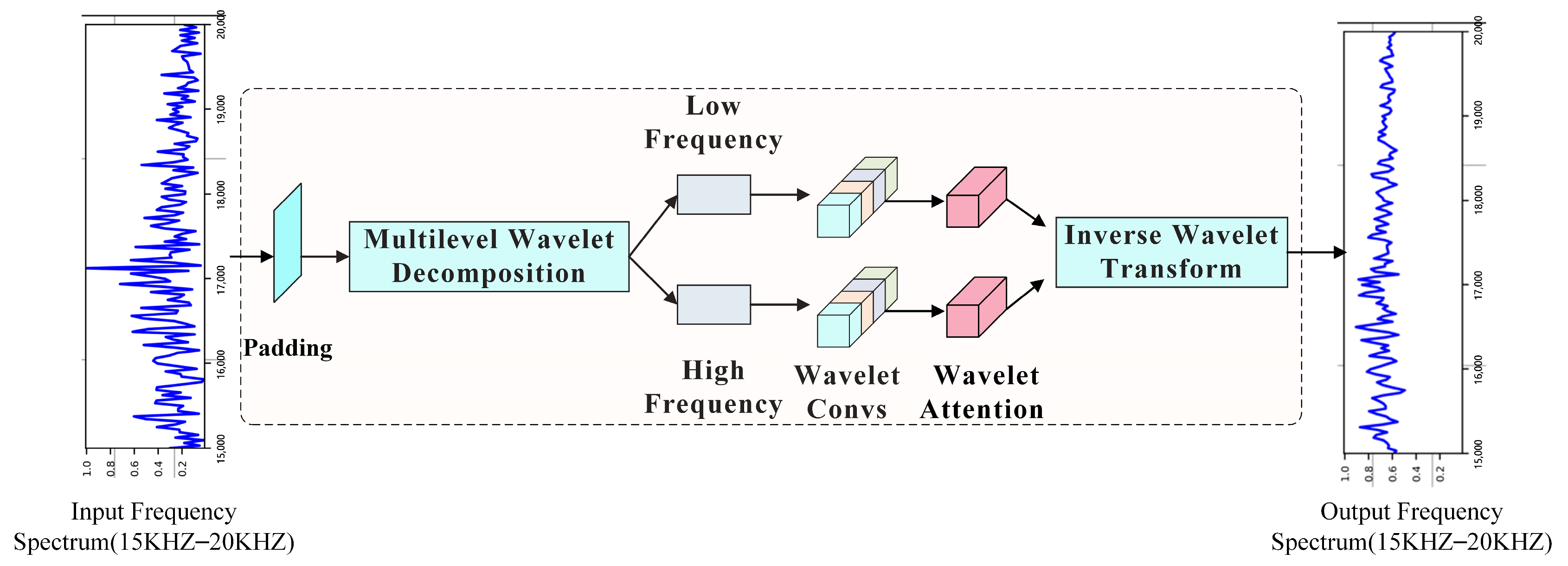

To further evaluate the contribution of each module in the proposed TFPNet model, ablation experiments are conducted. The following eight ablation models are designed.

- (1) TFPNet-NoTime: This model completely removes the Time-Domain Filter and directly performs Fourier transform on the normalized signal, using the frequency spectrum as the input starting point to extract fault information from frequency domain features.

- (2) TFPNet-NoFreq: This model completely removes the Frequency-Domain Filters and relies only on time domain features for subsequent classification, aiming to verify the impact of spectral filtering on fault detection performance.

- (3) TFPNet-NoAK: This model removes the weighted average kurtosis feature ak from the time-domain signal while retaining the spectral sparsity measure (Lp/Lq norm), verifying whether the model still has good representation ability without introducing time-domain statistical features.

- (4) TFPNet-NoG: This model removes the Lp/Lq spectral sparsity index g and retains the auxiliary branch based on weighted average kurtosis to explore whether the model still has good representation ability in the absence of spectral sparsity assistance.

- (5) TFPNet-NoAKG: This model simultaneously removes the two auxiliary features, weighted average kurtosis ak and Lp/Lq norm g, and retains only the main classification path. It is used to test the basic performance of the model when it depends completely on the backbone structure, and to verify the feasibility of the model without the assistance of prior physical information.

- (6)

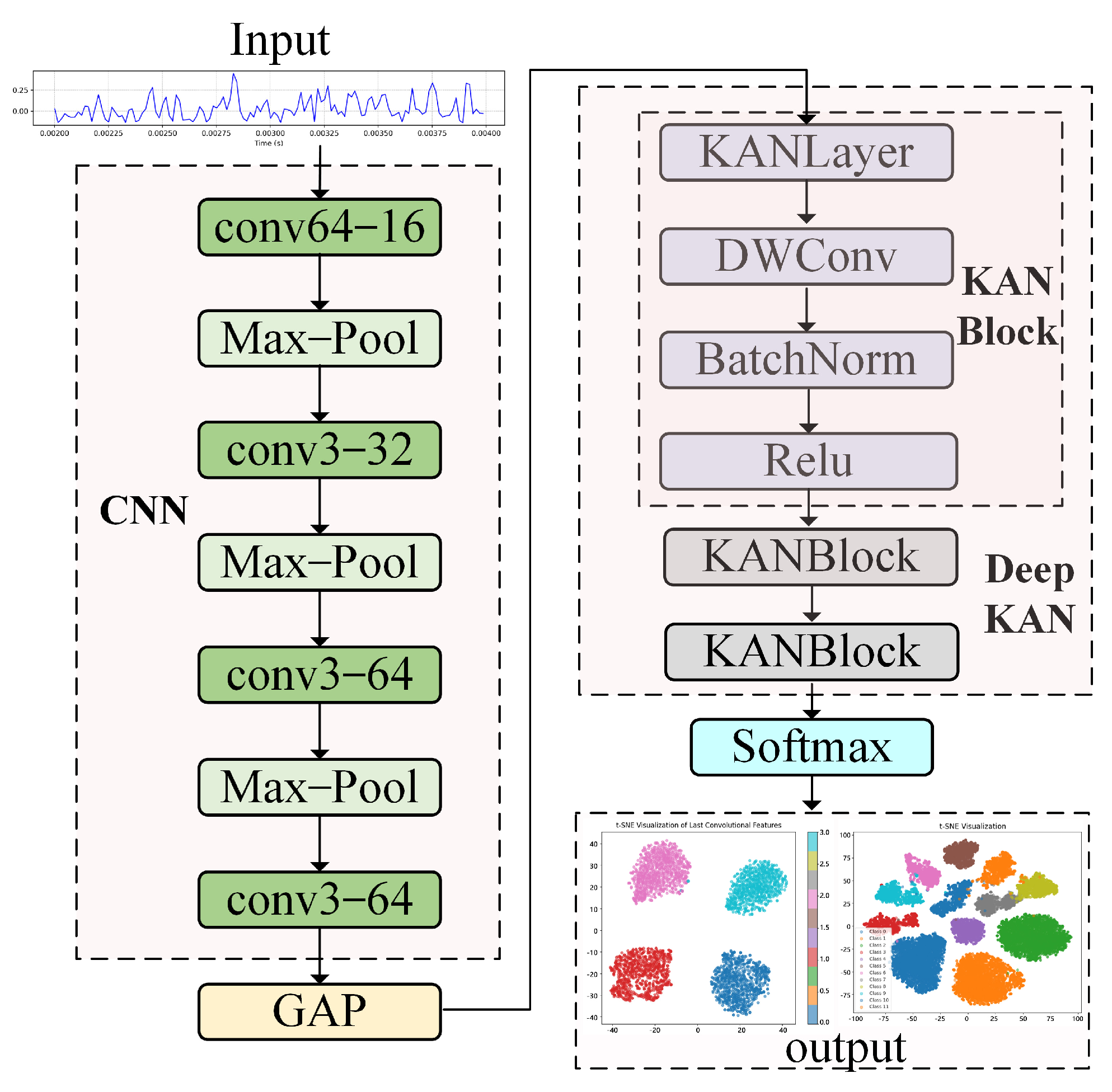

TFPNet-CNN: This model removes the DeepKAN module from the original structure and only retains the CNN backbone for feature extraction and classification, aiming to evaluate the improvement effect of the deep plasticity nonlinear modeling module (KAN) on the final performance.

- (7) TFPNet-Transformer: This model replaces the original classification feature extraction module with a Transformer architecture, explores the modeling ability of Transformer in fault detection tasks, and compares it with the original classification module.

- (8) TFPNet-ResNet: This model replaces the original classification feature extraction module with a ResNet-like convolutional neural network structure, aiming to verify the effectiveness of the original classification model in modeling fault features.

The ablation experiment is conducted on the JNU dataset under the condition of SNR = −4 dB. By comparing these ablation models, the effects of different modules on fault detection and diagnosis can be observed. The results are shown in Table 2 and Figure 9.

From the overall results in Table 2 and Figure 9, TFPNet performs the best in Acc (97.25%), F1 score (96.47%), and FPR (0.25%), indicating that the collaborative fusion of each module plays a key role in performance improvement.

In the ablation experiment of Time–Frequency Domain Filters, TFPNet-NoTime and TFPNet-NoFreq remove the Time-Domain and Frequency-Domain Filters, respectively. The accuracy of TFPNet-NoFreq decreases to 91.11%, and the FPR increases to 0.82%, indicating that the spectral filter plays a more critical role in extracting weak fault information. The performance of TFPNet-NoTime is slightly better than TFPNet-NoFreq, but still lower than the original model, indicating the effectiveness of the Time-Domain Filter.

In the ablation experiment of the physical feature extraction module, the accuracies of TFPNet-NoAK and TFPNet-NoG are 95.77% and 96.81%, respectively, both slightly lower than that of the full model, indicating that the two features enhance the model in complementary ways. In particular, TFPNet-NoAKG shows a more significant performance decline after ak and g are removed simultaneously (Acc of 95.29%, F1 score decreased to 93.69%), further confirming the synergistic value of these two pieces of physical prior information.

In the ablation experiment of the classification feature extraction module, TFPNet-CNN only retains the convolutional feature extractor, and the accuracy decreases significantly to 86.20%, indicating that the feature mapping structure of KAN has stronger fault detection ability in the current task. After the classification model is completely replaced with the Transformer architecture (TFPNet-Transformer), the accuracy further decreases to 80.60%, the F1 score is only 76.21%, and the FPR increases to 1.78%, indicating that the Transformer’s modeling ability is unstable in low signal-to-noise ratio environments and that it has difficulty handling complex signal feature extraction. In contrast, although TFPNet-ResNet outperforms the two models mentioned above (with an accuracy of 92.15%), it is still significantly inferior to the original CNN-DeepKAN module, demonstrating the superiority of this classification module over mainstream convolutional neural networks.

In conclusion, the ablation experiment shows that the Time–Frequency Domain Filters, the physical feature extraction module, and the classification model based on CNN and KAN each play an important role in improving the diagnostic performance of TFPNet. Removing any of these modules degrades performance to a varying degree, which supports the rationality and effectiveness of the overall architecture design.

In order to verify the performance of the TFPNet model proposed in this paper under noisy environments, it is compared with three models, DRSN [29], Laplace Wavelet [30], and CSSTNet [31], at SNRs from −8 dB to 0 dB, as shown in Table 3.

Table 3 shows that TFPNet consistently exhibits higher robustness and generalization ability under various noise environments. For the F1 indicator, TFPNet achieves good performance under all SNR conditions, especially in the case of −6 dB and −8 dB strong noise, where it is significantly higher than the other models, demonstrating superior classification performance and stability.

Meanwhile, the average FPR of the proposed TFPNet is overall the lowest, far lower than the FPR levels of other models under the same conditions. This performance indicates that TFPNet can not only accurately identify real fault samples but also effectively suppress misjudgments under noise interference, improving availability and safety in industrial scenarios.

To demonstrate the lightweight nature of the proposed model more intuitively, we compare the TFPNet model with the three models above, and the results are given in Table 4. The total number of parameters and the number of floating-point operations are adopted to evaluate the complexity and computational cost of each model. Params (M) represents the total number of parameters of a model, in millions. A smaller value usually indicates that the model is more compact and easier to deploy. FLOPs (M) represents the number of floating-point operations required for a single forward inference pass, also in millions. A lower FLOPs value means the model has lower computational overhead and, typically, faster inference.
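To make the two complexity measures concrete, the sketch below counts parameters and multiply-accumulate operations (MACs) for a 1-D convolution and a fully connected layer. The layer sizes are hypothetical and do not describe TFPNet. Note also that counting conventions differ between tools: some report FLOPs as MACs and others as 2 × MACs, and the text does not state which convention is used in Table 4.

```python
def conv1d_cost(c_in, c_out, kernel, length_out, bias=True):
    """Parameters and MACs of a 1-D convolution layer.
    Each output channel holds c_in * kernel weights plus an optional bias,
    and every output position needs c_in * kernel multiply-accumulates."""
    params = c_out * (c_in * kernel + (1 if bias else 0))
    macs = c_out * c_in * kernel * length_out
    return params, macs

def linear_cost(n_in, n_out, bias=True):
    """Parameters and MACs of a fully connected layer."""
    params = n_out * (n_in + (1 if bias else 0))
    macs = n_in * n_out
    return params, macs

# Hypothetical two-layer model, used only to show the bookkeeping.
layers = [conv1d_cost(1, 16, 7, 1024), linear_cost(64, 12)]
total_params = sum(p for p, _ in layers)
total_macs = sum(m for _, m in layers)

params_m = total_params / 1e6   # parameters in millions, as in "Params (M)"
macs_m = total_macs / 1e6       # MACs in millions
```

In this toy model almost all of the computation comes from the convolution, while most of the parameters sit in the fully connected layer, which is why the two measures are reported separately.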

As shown in Table 4, TFPNet achieves the lowest parameter count (0.0274 M) and computational cost (4.96 M FLOPs) among all comparison models, representing only 4.4% and 7.1% of CSSTNet, respectively. Compared with other lightweight schemes, such as DRSN and Laplace Wavelet, TFPNet also demonstrates superior efficiency and compactness. These results indicate that the proposed model provides an obvious advantage for lightweight deployment while maintaining strong diagnostic performance.

In addition, to further demonstrate the performance of the proposed model, its results on the two datasets are compared with those reported in the existing literature, as shown in Table 5 and Table 6.

The above results indicate that the proposed TFPNet achieves good fault detection accuracy under different noise conditions, showing that the design of the model framework enables bearing fault diagnosis in strong noise environments.

- D. Cross-Condition Experiment under a Noisy Environment

This Section evaluates the cross-domain diagnostic performance of the proposed model by designing six specific migration tasks under different speed switching.

The experiment used a sliding window with a length of 2048 to sample healthy samples and various types of fault samples at a sampling rate of 50 kHz. The datasets at different operating speeds (600 RPM, 800 RPM, 1000 RPM) are denoted by X, Y, and Z, respectively. The detailed settings of the six migration tasks are shown in Table 7.
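With three speed domains, six source-to-target tasks correspond to all ordered pairs of distinct domains. The short sketch below enumerates them under that assumption; the variable names are illustrative, and the exact task definitions are those listed in Table 7.

```python
from itertools import permutations

# Domain labels and operating speeds in RPM, as named in the text.
speeds = {"X": 600, "Y": 800, "Z": 1000}

# Every ordered (source, target) pair of distinct domains.
tasks = list(permutations(speeds, 2))

for src, tgt in tasks:
    print(f"{src}->{tgt}: train at {speeds[src]} RPM, test at {speeds[tgt]} RPM")
```

Ordered pairs are used because transfer is directional: training at 600 RPM and testing at 1000 RPM (X→Z) is a different task from the reverse direction (Z→X).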

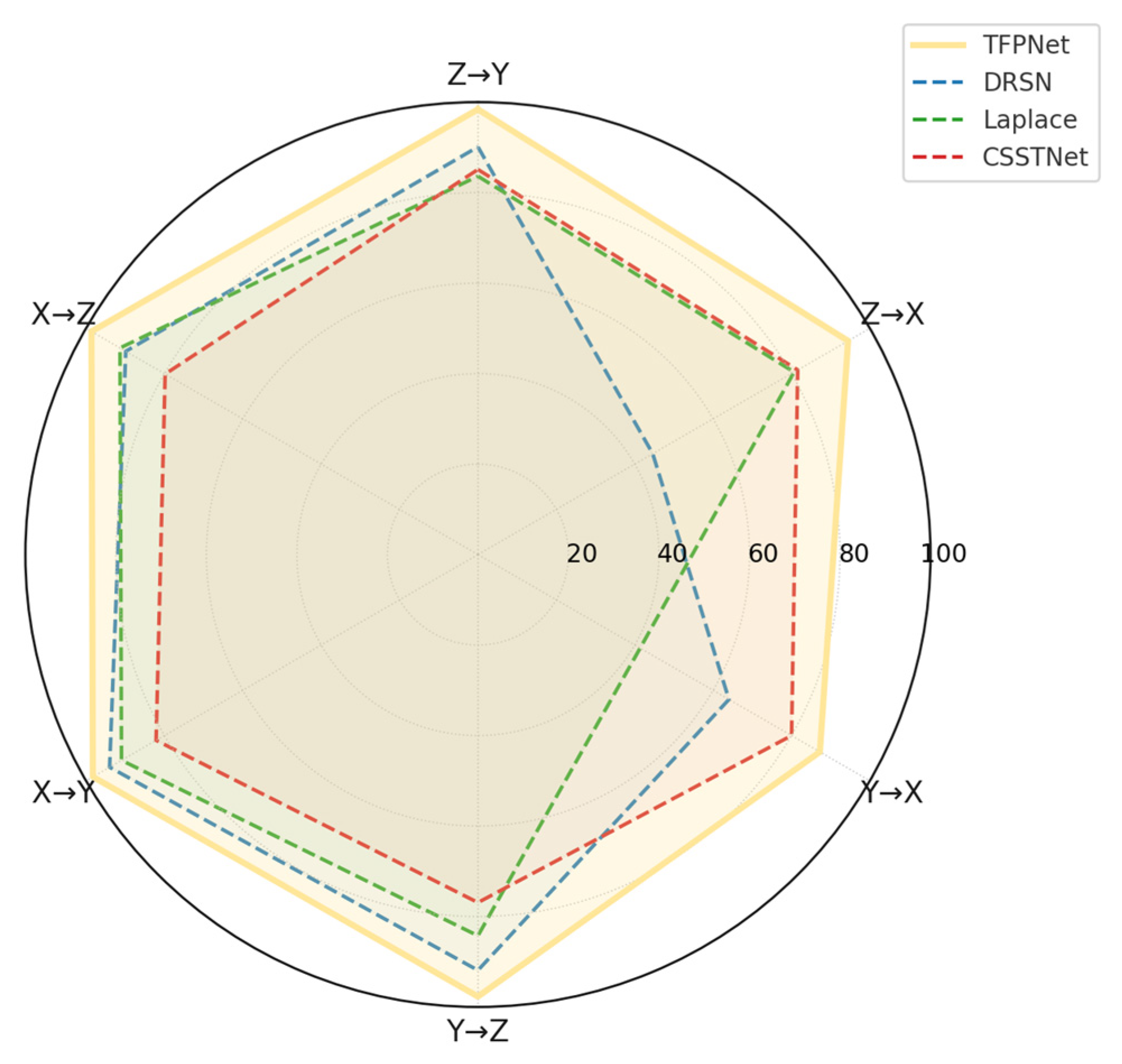

Six migration tasks are constructed to simulate domain shifts across different operating speeds for evaluating the transfer learning performance of the proposed model. As shown in Table 8, TFPNet consistently achieves the best accuracy among all compared methods, with the highest reaching 98.62% (X→Z) and the lowest remaining above 87.24% (Y→X). In particular, for tasks with large speed disparities, such as Z→X and X→Z, the model still maintains high accuracy of 94.44% and 98.62%, respectively. The trend of classification performance across different transfer scenarios is further visualized in Figure 10, supporting the model’s robustness and adaptability to complex domain differences.

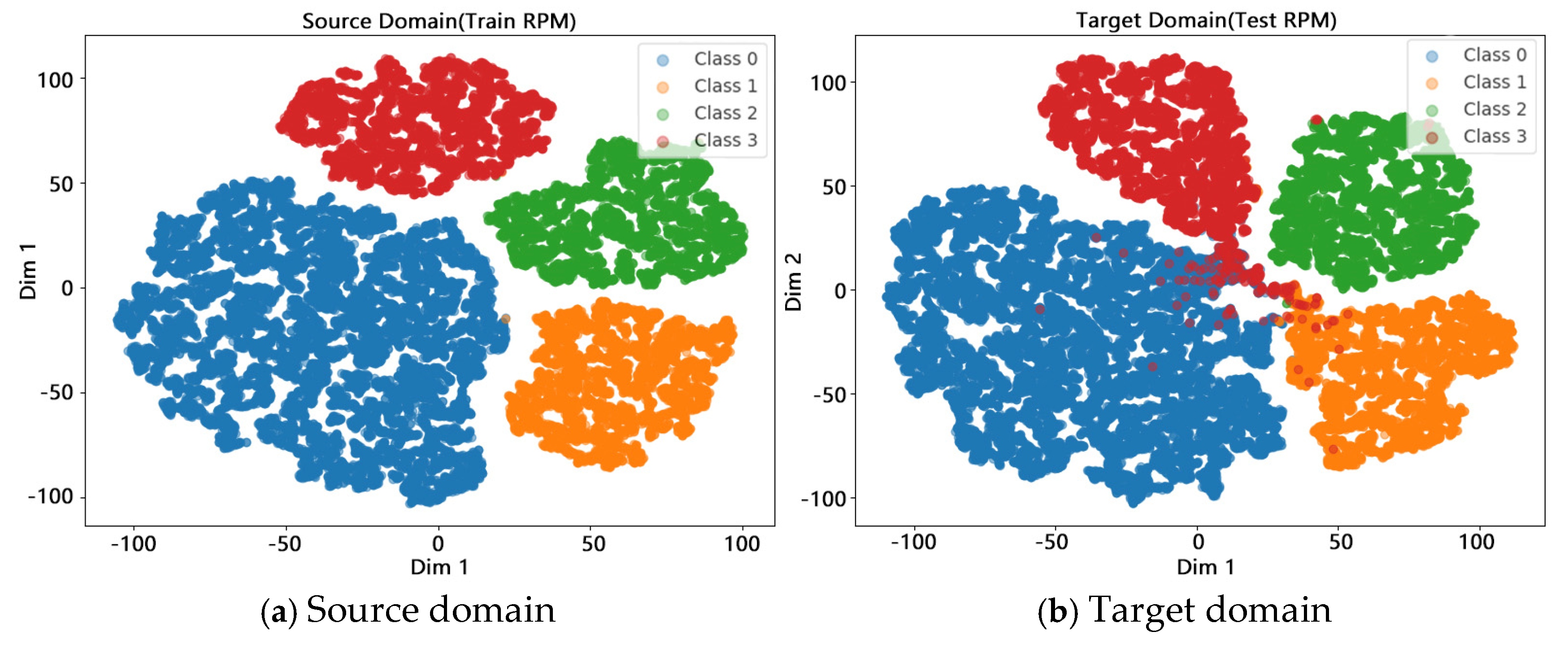

To observe more intuitively how well the noisy source domain (600 RPM) and target domain (1000 RPM) data are aligned in the feature space, the t-SNE algorithm is employed for dimensionality reduction and visualization at SNR = −4 dB, as shown in Figure 11. In Figure 11a, the four classes of samples in the source domain show a good clustering structure, and each class is clearly separated from the others. Figure 11b shows that the clustering of the samples remains good after migration, with tight clusters and a stable feature distribution. A small number of boundary samples in Class 1 and Class 2 shift towards the Class 0 region, resulting in some features being blurred. Overall, however, the source and target domain features are well aligned in the latent space, which verifies the feature transfer and domain adaptation ability of the TFPNet model under different rotational speed conditions.

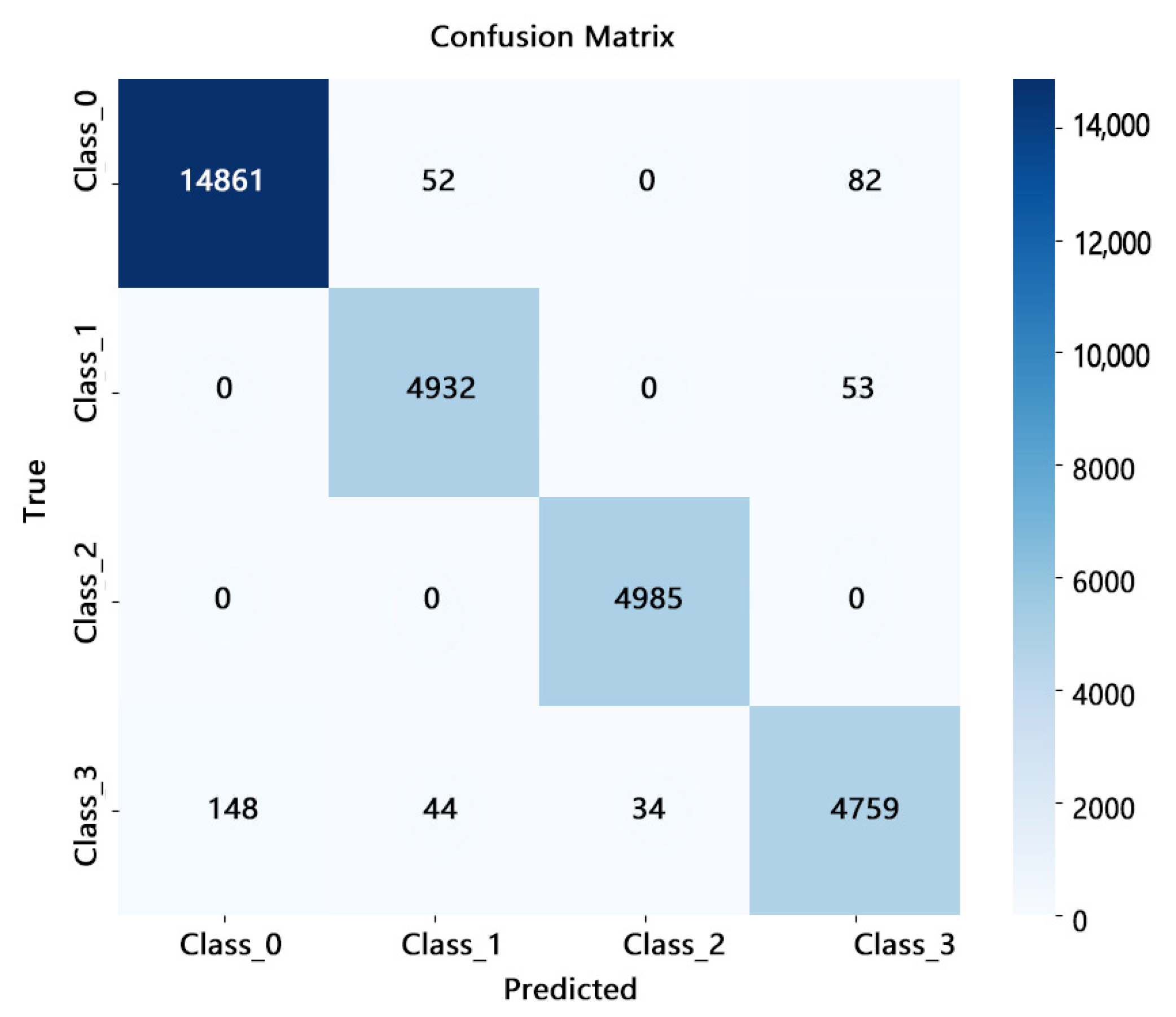

A confusion matrix is presented to further analyze the model performance on individual fault types in the target domain, as shown in Figure 12. Class 2 exhibits near-zero misclassification, indicating the model’s strong representational ability for this fault category. This observation is consistent with the clear separation of Class 2 in the t-SNE visualization. In contrast, misclassifications are observed between Class 0 and Class 3, with approximately 2.8% of Class 3 samples being predicted as Class 0 or Class 1. This aligns with the partial overlap of these classes at the boundaries of the feature space in the t-SNE plot. Despite these local misclassifications, the overall diagnostic accuracy remains high, confirming the robustness and generalization capability of the proposed model in cross-condition fault diagnosis tasks.