1. Introduction

The development of highway infrastructure is a pivotal component of national modernization and serves as the backbone of contemporary transportation networks. With the rapid growth in motor vehicle ownership, highway congestion has emerged as a pressing challenge, with traffic volumes reaching critical thresholds during holiday periods. This congestion not only reduces the efficiency of public mobility but also constrains improvements in service quality, thereby impeding regional economic integration and social development.

Traffic flow dynamics exhibit complex spatiotemporal patterns influenced by multiple factors. In particular, holidays and meteorological conditions have been identified as primary determinants of traffic volatility, producing nonlinear relationships that traditional prediction models struggle to capture. In response to these challenges, this paper proposes a novel framework for highway traffic prediction that integrates key contextual factors, such as holiday-induced travel patterns and weather conditions, into a multidimensional analysis.

In recent years, with the rapid development of deep learning algorithms, an increasing number of models have been employed in traffic flow prediction research. To improve model accuracy, this paper proposes a hybrid CNN-LSTM-GRU model. This model extracts spatial features from the data using Convolutional Neural Networks (CNN) and then captures temporal features through Long Short-Term Memory (LSTM) and Gated Recurrent Unit (GRU) networks. The advantages of this approach are twofold. (1) Spatial Feature Enhancement: by constructing two-dimensional feature matrices of the highway network, the CNN captures spatial correlations between adjacent road segments, addressing the limitation of traditional time-series models that often overlook spatial dependencies. (2) Temporal Feature Synergy: the stacked architecture, in which a GRU layer processes the output sequence of an LSTM layer, preserves LSTM’s strength in long-term memory retention while leveraging GRU’s computational efficiency, creating a complementary mechanism for temporal sequence analysis. This integrated framework enables more accurate traffic flow prediction by jointly modeling spatial dependencies and temporal dynamics, offering a robust solution for modern highway management challenges.

2. Literature Review

Currently, research on short-term traffic flow prediction is diverse, and with the continuous advancement of technology, prediction levels and accuracy are also improving. This section summarizes traffic flow prediction methods based on a review of the existing literature.

Firstly, the most common methods are those based on mathematical statistics, which include both linear and nonlinear models. Linear models include Kalman filtering and historical average models, while nonlinear methods include nonparametric regression models and chaos theory. Guo et al. [1] proposed using Kalman filtering to achieve real-time data processing, employing process variance to handle real-world data. Wang and Papageorgiou [2] combined a macroscopic traffic flow model with a measurement model designed using the extended Kalman filter. Other studies relied on time series analysis, such as autoregressive integrated moving average (ARIMA) models and their seasonal variant (SARIMA), to capture periodic patterns under linear assumptions. ARIMA was first applied to traffic flow prediction by Ahmed et al. [3] in 1979. Smith et al. [4] investigated the theoretical underpinnings of nonparametric regression, with the dual objectives of establishing its methodological framework and evaluating whether heuristically improved forecast generation methods in nonparametric regression could attain single-interval traffic flow prediction accuracy comparable to that of seasonal ARIMA models. Kumar et al. [5] developed a predictive framework using the Seasonal Autoregressive Integrated Moving Average (SARIMA) model for short-term traffic flow forecasting, achieving reliable performance even with limited input data. However, this type of method adapts poorly to nonlinear behavior and unexpected events, and it ignores spatial correlation. To address the nonlinearity of complex urban traffic flow, Chen and Xiao [6] proposed a switching ARIMA model and used it to explore how traffic flow varies over time.

There are also many studies that apply machine learning algorithms to traffic flow prediction. Algorithms such as Support Vector Machine (SVM), Random Forest (RF), and k-Nearest Neighbor (k-NN) enhance predictive ability through nonlinear mapping. Zhang et al. [7] developed a high-precision multi-step traffic flow prediction model using SVM. The framework uses actual traffic volume data as input vectors and systematically compares four input vector configurations to evaluate their predictive performance. Hou et al. [8] developed four traffic flow forecasting models (Random Forest, regression tree, multilayer feed-forward neural network, and nonparametric regression) specifically for planned work zone events. Zhang et al. [9] proposed a short-term traffic flow prediction method using k-NN-based nonparametric regression, systematically analyzing how key parameter configurations influence model performance. Building on these efforts, Lu et al. [10] introduced a hybrid traffic flow prediction framework combining signal decomposition and machine learning: improved complete ensemble empirical mode decomposition with adaptive noise (ICEEMDAN) decomposes traffic sequences into multiple intrinsic mode functions (IMFs), which are then processed by machine learning algorithms to improve predictive accuracy. Nevertheless, traditional machine learning approaches often require extensive manual feature engineering and have limited capacity to process high-dimensional spatiotemporal datasets.

In recent years, deep learning has attracted significant attention and has been widely applied to traffic flow prediction. Foundational models such as CNN use spatial convolution to capture topological relationships in road networks. For instance, Zhang et al. [11] proposed ST-ResNet, which models the spatiotemporal correlation of urban traffic flow through a residual CNN. LSTM, whose gating mechanism addresses long-range dependencies, has become a benchmark for processing temporal data. Ma et al. [12] were the first to apply LSTM to traffic prediction, demonstrating its superiority over traditional methods. Numerous studies have also combined different models for traffic flow prediction. A spatiotemporal fusion architecture combining CNN and LSTM [13] extracts spatial features with the CNN and models their temporal dependencies with the LSTM. Mackenzie et al. [14] used HTM (Hierarchical Temporal Memory) to integrate and evaluate data from the adaptive traffic system on the main roads of Sydney and Adelaide; HTM achieved prediction accuracy comparable to that of an LSTM model. Tian et al. [15] proposed using multi-scale temporal smoothing to infer missing data and compared it on the PEMS dataset and their own dataset, showing that the method achieved high accuracy. Feng et al. [16] proposed the AMSVM-STC model, based on support vector machines, to predict traffic congestion, using an adaptive particle swarm optimization algorithm to find optimal parameters. Validation showed that, even during periods of abnormal traffic flow fluctuations, the model provided accurate and timely predictions and outperformed the other models compared. The CNN-LSTM fusion idea has further evolved into combinations of 3D convolution (C3D) and Transformer [17], which enhance multi-scale feature extraction. For the non-Euclidean structure of road networks, Graph Neural Networks (GNNs), such as GCN and GAT, capture inter-node relationships through graph convolution and graph attention. The DCRNN model proposed by Li et al. [18], which combines graph diffusion convolution with sequence modeling, has become a classic framework.

In research on highway traffic flow prediction, because highway traffic flow is periodic, a given road section tends to show relatively stable volumes and trends at the same time of day across different historical periods. Historical data for the same period are therefore an important entry point for predicting traffic flow. Fang et al. [19] added an attention mechanism to the LSTM model, assigning different weights to different inputs so that the model focuses on the most important information, and then used four datasets to demonstrate the model’s accuracy. Shuai et al. [20] decomposed traffic flow into components through SSA, predicted the components with LSTM and SVR, and combined the respective predictions to obtain the final result. Validation on traffic flow data from the Guizhou expressway showed that the combined model based on component decomposition outperformed the single models. Bing et al. [21] proposed a multi-step prediction method combining VMD and LSTM: VMD decomposes traffic flow into IMF components, each LSTM predicts one component, and the predictions are then integrated. Xu et al. [22] used the whale optimization algorithm (WOA) to search for the best parameters of a BiLSTM-Attention network, forming the WOA_BiLSTM-Attention model.

The literature also contains a substantial body of work on traffic flow prediction that considers both time and space. Because highways are access-controlled, the traffic flow of a given section is affected by its upstream and downstream nodes, so the spatial influence within the highway network must be considered. Zheng et al. [23] considered spatiotemporal correlation in predicting urban road network traffic flow, selecting similar road segments and the target segment as independent and dependent variables, respectively, and using them as inputs to a CNN-LSTM model; the results showed that this model was more accurate than the other models compared. Zheng et al. [24] added an attention mechanism to ConvLSTM to address poor spatial feature capture and used a multi-layer architecture for feature extraction to strengthen BiLSTM’s ability to capture temporal features, thereby improving the overall prediction accuracy of the model. Other combination models have also been proposed for short-term traffic flow prediction, such as Bi-LSTM-CNN [25] and GTO-CNN-LSTM [26].

Traffic flow prediction is influenced by a multitude of factors. Beyond the inherent temporal and spatial characteristics of traffic flow itself, it is also affected by fundamental elements such as driver behavior and road conditions. These conventional factors can generally be categorized as routine influences, as their impact on traffic flow tends to remain relatively stable. This study, however, places particular emphasis on analyzing the effects of dynamic factors—including weather conditions, holidays, and other time-varying elements—on traffic flow. For instance, adverse weather such as rain or snow can render road surfaces slippery to varying degrees, compromising driver control, reducing vehicle speeds, and ultimately leading to congestion. Similarly, holidays often induce significant fluctuations in highway traffic flow, with notable surges observed before and after holiday periods. Hence, this research aims to integrate these dynamic factors (weather, holidays, etc.) with the intrinsic temporal and spatial attributes of traffic flow to develop a more comprehensive predictive framework.

In terms of prediction methods, a combination model can exploit the complementary advantages of its component models and more fully explore the features of the data. Therefore, this study constructs a combination model based on LSTM-GRU, with a CNN that extracts the spatial features of the data before the prediction is made.

3. Data Sources and Characteristics Analysis

3.1. Data Sources and Preprocessing

The dataset used in this study consists of traffic flow data and weather data collected from toll stations on a provincial highway from 19 July 2016 to 24 October 2016. The traffic flow data include entry and exit traffic data from three toll stations, labeled Station 1, Station 2, and Station 3. Each toll station has two directions (0 and 1), where 0 represents the entrance and 1 represents the exit. Because of maintenance, Station 2 operated as a one-way passage that only allowed vehicles to enter the highway. The weather data span from 19 July 2016, 00:00, to 24 October 2016, 24:00, and include the following seven fields: atmospheric pressure, sea-level pressure, wind direction, wind speed, temperature, humidity, and rainfall.

The raw traffic flow data were collected at 20 min intervals. Specifically, the entrance of Station 1 (direction 1-0) contains 2084 records, the exit of Station 1 (1-1) contains 2084 records, the entrance of Station 2 (2-0) contains 1725 records, the exit of Station 2 has no records in this dataset, the entrance of Station 3 (3-0) contains 2086 records, and the exit of Station 3 (3-1) contains 2085 records.

The weather data consist of 231 records collected every 3 h.

Preprocessing raw data is an essential step in data mining, machine learning, and artificial intelligence. Data collected directly often lack quality assurance, and missing values caused by machine malfunctions or human errors are a common issue. Missing data can lead to errors during feature extraction, thereby affecting prediction accuracy. Linear interpolation, the simplest form of algebraic interpolation, estimates a missing value from the straight line between its nearest known neighbors and is widely used to approximate (possibly nonlinear) functions between sampled points. Therefore, this study employs linear interpolation to fill in missing values.

The traffic flow data for direction 1-0 from 19 July 2016 to 24 October 2016 contain four missing values. During the same period, the data for direction 2-0 contain 362 missing values, the data for direction 3-0 contain two, the data for direction 1-1 contain four, and the data for direction 3-1 contain three. Table 1 shows a portion of the raw traffic flow data for direction 1-0, which includes one missing value. This value is filled using the mean imputation method; since the data represent the number of vehicles passing through in each 20 min interval, the repaired value is rounded to one decimal place, giving 14.5. Because direction 2-0 has a large number of missing values, likely caused by detector malfunctions, linear interpolation is used to fill them.

Since the weather data collection frequency differs from that of the traffic flow data, linear interpolation is applied to align the weather data to a 20 min frequency, resulting in a total of 2088 weather data records. A portion of the filled weather data is shown in Table 2.
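The two interpolation steps described above (filling gaps in a 20 min count series and raising the 3 h weather series to the 20 min grid) can be sketched with NumPy’s `np.interp`. This is a minimal illustration under stated assumptions: the function names `fill_missing_linear` and `resample_linear` are hypothetical, the series are plain arrays rather than the authors’ tables, and the sample numbers are invented for demonstration.

```python
import numpy as np

def fill_missing_linear(series):
    """Replace NaN entries with values linearly interpolated from the nearest
    valid neighbours; leading/trailing gaps take the nearest edge value."""
    y = np.asarray(series, dtype=float)
    positions = np.arange(len(y))
    missing = np.isnan(y)
    filled = y.copy()
    filled[missing] = np.interp(positions[missing], positions[~missing], y[~missing])
    return filled

def resample_linear(times_min, values, step_min=20):
    """Linearly interpolate a series observed at times_min (in minutes) onto a
    regular grid with spacing step_min, e.g. 3 h weather -> 20 min."""
    times_min = np.asarray(times_min, dtype=float)
    grid = np.arange(times_min[0], times_min[-1] + step_min / 2, step_min)
    return grid, np.interp(grid, times_min, np.asarray(values, dtype=float))

# Hypothetical 20 min vehicle counts with one gap
counts = [12, 14, np.nan, 15, 16]
print(fill_missing_linear(counts))  # the gap becomes (14 + 15) / 2 = 14.5

# Hypothetical 3-hourly temperatures observed at 0, 180 and 360 min
grid, temperature = resample_linear([0, 180, 360], [20.0, 23.0, 26.0])
print(len(grid), temperature[:4])
```

For a single interior gap, linear interpolation coincides with the mean of the two neighbours; for longer runs of gaps (such as direction 2-0) it draws a straight line across the whole run.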

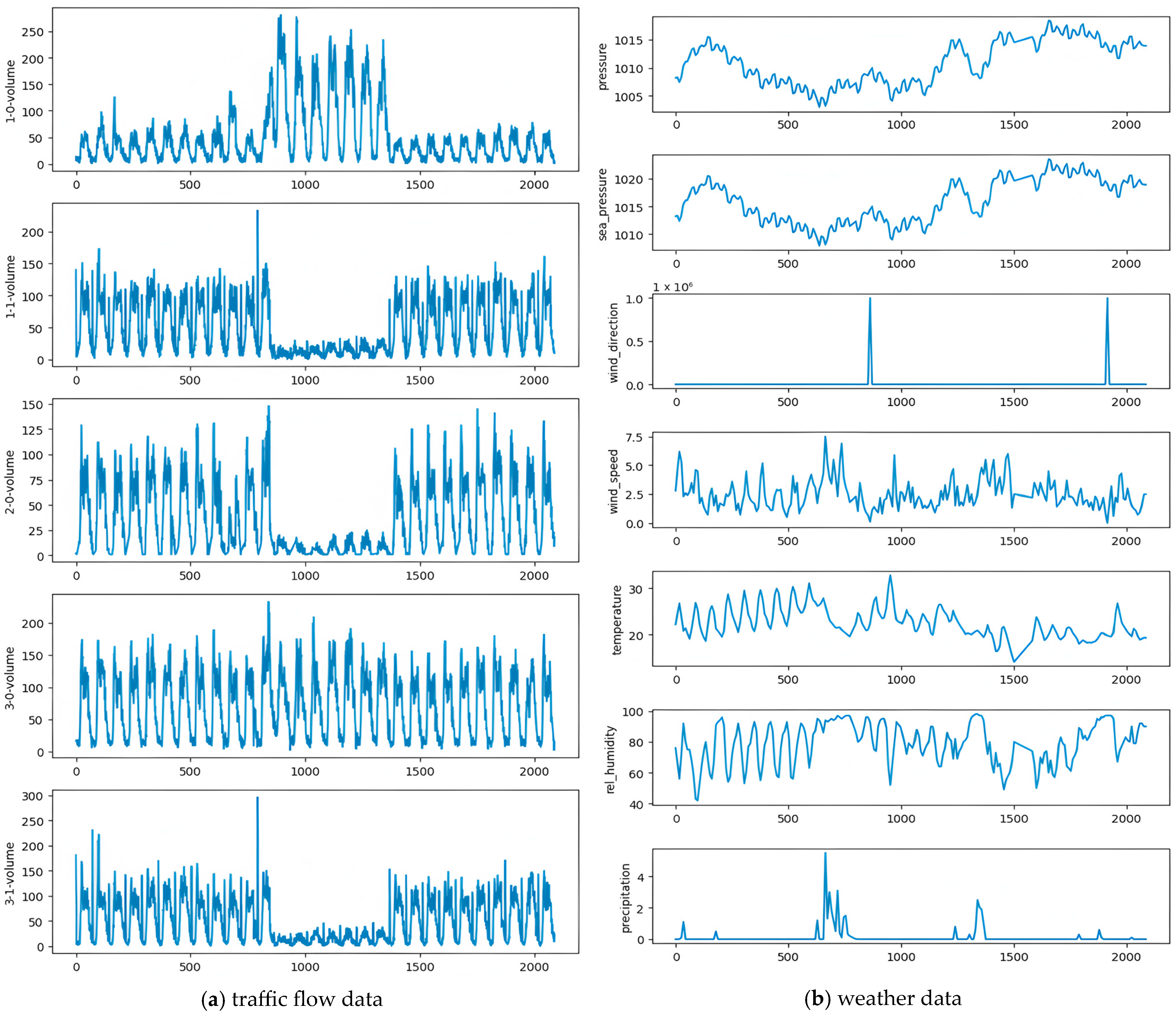

Data visualization is crucial for in-depth analysis and trend prediction. By presenting traffic flow and weather data in graphical form, it helps uncover patterns over time and space, explore correlations between the two, analyze long-term traffic demand trends, and provide scientific references for future traffic flow predictions and traffic management under extreme weather conditions.

Therefore, to better observe the characteristics of the data, the features of the traffic flow data and weather data are visualized in Figure 1.

From the figure, it can be observed that the traffic flow trends at each station are similar over certain periods. When studying traffic flow prediction, integrating data from all stations can be considered. Additionally, understanding traffic flow trends can help traffic management departments to allocate resources more effectively and to simplify management models.

Based on the previous analysis, periodic changes in traffic flow, holidays, and weather are key factors influencing traffic flow. Therefore, the weather and traffic flow data are integrated, and a portion of the integrated data is shown in Table 3.

After integration, the data features are found not to be on the same scale, which could affect prediction accuracy. Therefore, the data were normalized before analysis using the commonly used min–max method, which scales the data to the range [0, 1], as shown in Formula (1):

$$x' = \frac{x - x_{\min}}{x_{\max} - x_{\min}} \tag{1}$$

where $x$ represents the original data, $x'$ represents the normalized data, $x_{\min}$ is the minimum value, and $x_{\max}$ is the maximum value.
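Min–max normalization can be applied column by column, so that traffic counts and each weather variable are scaled independently. The sketch below is a minimal illustration assuming the features are the columns of a 2-D array; the function names are hypothetical. It also returns each column’s minimum and maximum, because predictions in normalized units must be mapped back to vehicle counts before errors are reported.

```python
import numpy as np

def min_max_normalize(data):
    """Scale each column of a 2-D array to [0, 1] with min-max normalization.

    Returns the scaled array together with the per-column minimum and
    maximum, which are needed to map predictions back to original units.
    """
    x = np.asarray(data, dtype=float)
    x_min = x.min(axis=0)
    x_max = x.max(axis=0)
    # Guard against division by zero for constant columns.
    span = np.where(x_max > x_min, x_max - x_min, 1.0)
    return (x - x_min) / span, x_min, x_max

def min_max_inverse(scaled, x_min, x_max):
    """Undo min_max_normalize: x = x' * (x_max - x_min) + x_min."""
    span = np.where(x_max > x_min, x_max - x_min, 1.0)
    return np.asarray(scaled, dtype=float) * span + x_min

# Hypothetical rows: [traffic count, rainfall]
data = [[10, 0.0], [20, 0.5], [30, 1.0]]
scaled, lo, hi = min_max_normalize(data)
print(scaled)
print(min_max_inverse(scaled, lo, hi))
```

A common precaution, not stated in the text, is to compute the minimum and maximum on the training portion only and reuse them for the test period, so that no information from the prediction window leaks into the scaling.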

3.2. Analysis of Highway Traffic Flow Characteristics

Highway traffic flow, as a crucial indicator reflecting the operational status of road transportation systems, is shaped and driven by a series of complex internal conditions and external environments. Therefore, to better predict traffic flow, it is necessary to analyze both internal and external characteristics.

(1) Analysis of Internal Traffic Flow Characteristics

(a) Periodicity

The periodicity analysis of highway traffic flow focuses on temporal characteristics, which manifest primarily in daily and weekly cycles and exhibit both complexity and regularity, as shown in Figure 2. Figure 2a shows the traffic flow from 00:00 on 7 October 2016 to 00:00 on 8 October 2016; the traffic flow fluctuates significantly within a single day, indicating strong complexity.

Figure 2b shows the traffic flow data from 19 September 2016 to 25 September 2016, revealing a high degree of similarity in traffic flow trends over a week, with peak commuting times being largely consistent.

Figure 2c displays traffic flow data from 21 September 2016 to 11 October 2016, including both workdays and holidays. The data show two distinct patterns, but within individual workdays or holiday periods, the trends remain similar.

By leveraging long-term accumulated highway traffic data, these periodic phenomena can be precisely quantified, and the patterns can be used to predict future traffic conditions. This, in turn, guides the optimal allocation of traffic resources and the design of more accurate and efficient traffic management and control measures. Additionally, the results of the periodicity analysis provide indispensable scientific support for highway system expansion plans and the development of emergency response strategies for unexpected events.

(b) Spatial Correlation

In the highway system, the spatial correlation of traffic flow refers to the inherent connections and influencing mechanisms between traffic volume, density, and speed across different road segments. Understanding this characteristic allows for a global analysis of the operational patterns of the traffic system. This not only provides recommendations for optimizing existing traffic management models but also enables better allocation of road resources to alleviate congestion. In the long term, grasping the spatial correlation of highway traffic flow helps in designing more forward-looking and adaptable road network layouts, thereby enhancing the operational efficiency and service quality of the road transportation system.

Figure 3 shows the traffic flow trends from 20 September 2016 to 29 September 2016 for the 0-direction of the three adjacent toll stations (Station 1, Station 2 and Station 3). It can be observed that the traffic flow changes at adjacent stations are highly correlated, with consistent trends.

(2) Analysis of External Traffic Flow Characteristics

Traffic flow is uncertain: factors outside the transportation system can cause dynamic changes in traffic flow. Experience shows that unconventional congestion usually arises from factors such as commuting times, holidays, and weather. To predict highway traffic flow more accurately, this article incorporates the impact of these key factors on traffic flow.

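The Pearson correlation analysis method is used to calculate the correlation between dynamic weather factors and traffic flow, as well as between the traffic flows of different stations. The Pearson correlation coefficient is calculated using Formula (2):

$$r = \frac{\sum_{i=1}^{n}(x_i - \bar{x})(y_i - \bar{y})}{\sqrt{\sum_{i=1}^{n}(x_i - \bar{x})^2}\,\sqrt{\sum_{i=1}^{n}(y_i - \bar{y})^2}} \tag{2}$$

where $x_i$ and $y_i$ are the $i$-th paired observations of the two variables, $\bar{x}$ and $\bar{y}$ are their means, and $n$ is the number of observations.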
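The Pearson coefficient can be computed directly from its definition. The sketch below assumes two aligned series on the same 20 min grid (for example, one station’s traffic flow and one weather variable); the function name `pearson_r` and the sample values are hypothetical. A full pairwise matrix of the kind visualized in Figure 4 can be obtained with `np.corrcoef`, which is used here as a cross-check.

```python
import numpy as np

def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length series."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    if x.shape != y.shape:
        raise ValueError("x and y must have the same length")
    dx = x - x.mean()  # deviations from the mean of x
    dy = y - y.mean()  # deviations from the mean of y
    return float(np.sum(dx * dy) / np.sqrt(np.sum(dx ** 2) * np.sum(dy ** 2)))

# Hypothetical 20 min series: traffic counts at one station and rainfall (mm)
flow = [30, 42, 55, 47, 38, 60]
rain = [0.0, 0.2, 0.8, 0.5, 0.1, 1.0]
r = pearson_r(flow, rain)
print(round(r, 3))

# Pairwise matrix (rows are variables), as used for a correlation heat map
print(np.corrcoef([flow, rain]))
```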

The strength of the correlation is judged based on the ranges shown in Table 4.

The calculation results are shown in Figure 4.

As shown in Figure 4, the correlations between Station 1-0 and the other stations are 0.65, 0.74, 0.64, and 0.60, all at least 0.6, indicating strong correlations between the traffic flows at different stations. Therefore, when predicting traffic flow at a target station, the traffic flow data from other stations should also be considered.

Taking Station 1-0 as an example, the correlations between traffic flow and the weather factors atmospheric pressure (pressure), sea-level pressure (sea_pressure), wind direction (wind_direction), wind speed (wind_speed), temperature (temperature), and relative humidity (rel_humidity) are −0.14, −0.017, 0.09, 0.03, 0.039, and 0.14, respectively, all below 0.2 in absolute value. This indicates that these factors have almost no correlation with the traffic flow at this station. However, the correlation between precipitation (precipitation) and traffic flow is 0.35, clearly stronger than that of the other weather factors. This suggests that natural factors such as weather do influence traffic flow.

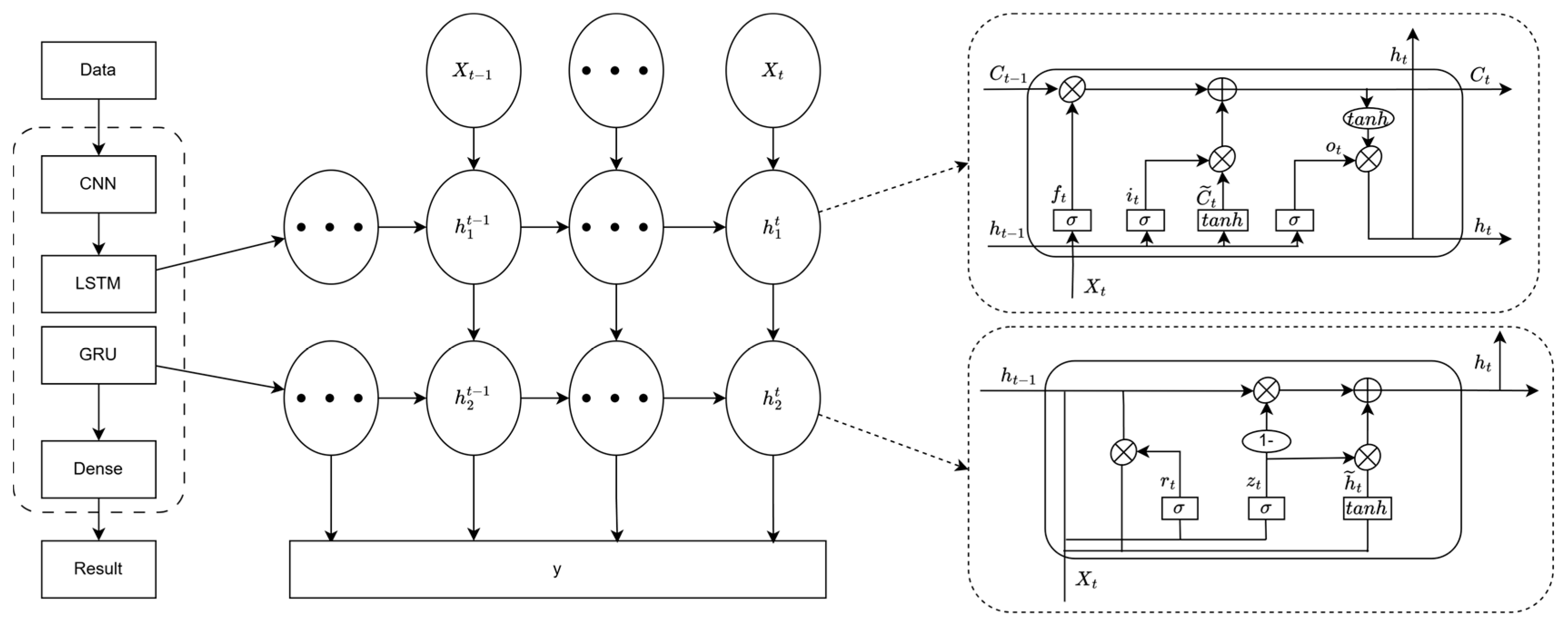

4. Methodology

LSTM (Long Short-Term Memory) and GRU (Gated Recurrent Unit) networks were developed to address the vanishing gradient problem that recurrent neural networks (RNNs) encounter when processing long sequences. Both models introduce gating mechanisms that regulate the flow and storage of information more effectively, thereby improving the processing of time-series data. LSTM handles long-term dependencies well but has a complex structure, whereas GRU has a simpler structure but may sacrifice some prediction accuracy. Therefore, this study adopts an integrated LSTM-GRU approach to leverage the strengths of both models in handling long-term dependencies. However, neither model captures the spatial features in traffic flow data. To address this, the study incorporates a CNN (Convolutional Neural Network) to extract spatial features and validates the model using highway traffic flow data and weather data from a specific province.

4.1. Model Architecture Design

The architecture mainly consists of three parts: data preprocessing, feature matrix construction, and model building. First, missing values in the raw traffic flow and weather data are filled, and the data are normalized. Then, the processed data are arranged into a matrix containing time, space, and weather features, which serves as the input to the model. In the model, the CNN extracts spatial features, while a combination of LSTM and GRU layers processes the temporal features. Finally, a fully connected (Dense) layer outputs the results. The main architecture of the model is shown in Figure 5.

To extract temporal features with LSTM-GRU, a Keras Sequential model is first created, and an LSTM layer is added with its add() method. The LSTM parameter return_sequences is set to True so that the layer outputs its hidden state at every time step rather than only the last one; this sequence is the three-dimensional input that the subsequent recurrent layer requires. A GRU layer is then added, taking the output sequence of the LSTM layer as its input. Finally, a fully connected (Dense) layer and an output layer are appended to obtain the final prediction. The specific structure is shown in Figure 6.
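The role of return_sequences in this stacking can be illustrated by tracking array shapes. The sketch below is not the authors’ model: it replaces both the LSTM and the GRU with a hypothetical `toy_recurrent_layer` (a plain tanh RNN with random weights) purely to show the shapes, omits the CNN stage, and assumes 8 input features. The unit counts 128 and 96 and the 504-step window are taken from the settings reported later in this paper.

```python
import numpy as np

rng = np.random.default_rng(0)

def toy_recurrent_layer(x, units, return_sequences):
    """Plain tanh RNN used only as a stand-in for an LSTM or GRU layer, to show
    how return_sequences changes the output shape. x: (batch, time, features)."""
    batch, steps, features = x.shape
    W = rng.normal(scale=0.1, size=(features, units))  # input weights
    U = rng.normal(scale=0.1, size=(units, units))     # recurrent weights
    h = np.zeros((batch, units))
    states = []
    for t in range(steps):
        h = np.tanh(x[:, t, :] @ W + h @ U)
        states.append(h)
    # return_sequences=True -> every hidden state; False -> only the last one
    return np.stack(states, axis=1) if return_sequences else h

# Hypothetical batch: 2 samples, 504 time steps (7 days of 20 min data), 8 features
x = rng.normal(size=(2, 504, 8))
seq = toy_recurrent_layer(x, units=128, return_sequences=True)    # "LSTM" stage
last = toy_recurrent_layer(seq, units=96, return_sequences=False) # "GRU" stage
w_dense = rng.normal(scale=0.1, size=(96, 1))
y_hat = last @ w_dense                                             # Dense output
print(seq.shape, last.shape, y_hat.shape)  # (2, 504, 128) (2, 96) (2, 1)
```

In Keras, the corresponding layers would presumably be `LSTM(128, return_sequences=True)` followed by `GRU(96)` (whose `return_sequences` defaults to False); without `return_sequences=True` on the LSTM, the GRU would receive a 2-D array and Keras would reject it.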

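(1) The LSTM layer mainly consists of the forget gate, input gate, and output gate. The calculation process is shown in Formulas (3)–(8):

$$f_t = \sigma(W_f x_t + U_f h_{t-1} + b_f) \tag{3}$$

$$i_t = \sigma(W_i x_t + U_i h_{t-1} + b_i) \tag{4}$$

$$\tilde{C}_t = \tanh(W_C x_t + U_C h_{t-1} + b_C) \tag{5}$$

$$C_t = f_t \odot C_{t-1} + i_t \odot \tilde{C}_t \tag{6}$$

$$o_t = \sigma(W_o x_t + U_o h_{t-1} + b_o) \tag{7}$$

$$h_t = o_t \odot \tanh(C_t) \tag{8}$$

By integrating the above formulas, the gate computations can be written compactly as:

$$\begin{bmatrix} f_t \\ i_t \\ o_t \\ \tilde{C}_t \end{bmatrix} = \begin{bmatrix} \sigma \\ \sigma \\ \sigma \\ \tanh \end{bmatrix} \left( W x_t + U h_{t-1} + b \right)$$

where $x_t$ represents the input variable at the current time step; $h_{t-1}$ represents the hidden state at the previous time step; $C_{t-1}$ represents the cell memory at time $t-1$; $C_t$ represents the cell memory at time $t$; $\tilde{C}_t$ represents the candidate cell memory; $W$ and $U$ represent the weight matrices for the input and hidden state, respectively (stacked across the four gates in the compact form); $b$ represents the bias; $\sigma$ denotes the Sigmoid function; and $\odot$ denotes element-wise multiplication.

The hidden state $h_{t-1}$ and input $x_t$ are passed through the output gate’s Sigmoid function to calculate $o_t$. The cell memory $C_t$ is activated using the tanh function and multiplied by $o_t$, yielding the updated hidden state $h_t$.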
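A single LSTM time step can be traced in NumPy, one line per gate, following Formulas (3)–(8). This is a minimal sketch under stated assumptions: the function names `lstm_step` and `init_lstm_params`, the dict-of-gates parameter layout, and the random initialization are hypothetical choices for readability, not the Keras internals used by the authors.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def lstm_step(x_t, h_prev, c_prev, W, U, b):
    """One LSTM time step following Formulas (3)-(8).

    W, U and b are dicts keyed by 'f', 'i', 'c', 'o' (forget, input,
    candidate, output); W[g] acts on the input, U[g] on the previous state.
    """
    pre = {g: W[g] @ x_t + U[g] @ h_prev + b[g] for g in "fico"}
    f_t = sigmoid(pre["f"])              # forget gate, Formula (3)
    i_t = sigmoid(pre["i"])              # input gate, Formula (4)
    c_tilde = np.tanh(pre["c"])          # candidate cell memory, Formula (5)
    c_t = f_t * c_prev + i_t * c_tilde   # cell memory update, Formula (6)
    o_t = sigmoid(pre["o"])              # output gate, Formula (7)
    h_t = o_t * np.tanh(c_t)             # new hidden state, Formula (8)
    return h_t, c_t

def init_lstm_params(n_in, n_hidden, rng):
    """Small random weights and zero biases (hypothetical initialization)."""
    W = {g: rng.normal(scale=0.1, size=(n_hidden, n_in)) for g in "fico"}
    U = {g: rng.normal(scale=0.1, size=(n_hidden, n_hidden)) for g in "fico"}
    b = {g: np.zeros(n_hidden) for g in "fico"}
    return W, U, b

rng = np.random.default_rng(42)
W, U, b = init_lstm_params(n_in=8, n_hidden=4, rng=rng)
h, c = np.zeros(4), np.zeros(4)
for x_t in rng.normal(size=(6, 8)):      # run over a 6-step toy sequence
    h, c = lstm_step(x_t, h, c, W, U, b)
print(h.shape, c.shape)
```

The additive update of the cell memory (forget-gated old memory plus input-gated candidate) is what lets gradients flow over many steps, which is the property the text credits to LSTM.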

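(2) The GRU layer mainly consists of the reset gate and update gate. The calculation process is shown in Formulas (9)–(13):

$$r_t = \sigma(W_r [h_{t-1}, x_t]) \tag{9}$$

$$z_t = \sigma(W_z [h_{t-1}, x_t]) \tag{10}$$

$$h'_{t-1} = r_t \odot h_{t-1} \tag{11}$$

$$\tilde{h}_t = \tanh(W_h [h'_{t-1}, x_t]) \tag{12}$$

$$h_t = (1 - z_t) \odot h_{t-1} + z_t \odot \tilde{h}_t \tag{13}$$

where $r_t$ is the reset gate, which determines the portion of the hidden state from the previous time step that needs to be reset; $\sigma$ is the Sigmoid activation function, whose output ranges from 0 to 1 and quantifies the degree of selective forgetting; $h_{t-1}$ represents the hidden state from the previous time step; $x_t$ is the current input; $[\cdot,\cdot]$ denotes concatenation; $W_r$, $W_z$, and $W_h$ are the relevant weight matrices; and $z_t$ is the update gate, which represents the degree of retention for the current data and ranges from 0 to 1, where a value closer to 0 indicates more forgetting and a value closer to 1 indicates more retention.

During the calculation of $\tilde{h}_t$, the reset gate $r_t$ is first combined with $h_{t-1}$ to obtain $h'_{t-1}$. Then, $h'_{t-1}$ is combined with $x_t$ and scaled using the tanh function.

Finally, the updated hidden state $h_t$ is obtained by using $z_t$ to blend $h_{t-1}$ with $\tilde{h}_t$.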
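The GRU update described in this subsection can likewise be sketched as one NumPy time step. The sketch assumes the formulation in which the update gate z weights the new candidate state (matching the text’s description of z as the retention of current data) and omits biases, since the text defines none; the name `gru_step` and the random weights are hypothetical.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def gru_step(x_t, h_prev, W_r, W_z, W_h):
    """One GRU time step (biases omitted). Each weight matrix has shape
    (hidden, hidden + inputs) and acts on the concatenation [h, x]."""
    hx = np.concatenate([h_prev, x_t])
    r_t = sigmoid(W_r @ hx)                                   # reset gate
    z_t = sigmoid(W_z @ hx)                                   # update gate
    h_reset = r_t * h_prev                                    # reset previous state
    h_tilde = np.tanh(W_h @ np.concatenate([h_reset, x_t]))   # candidate state
    return (1.0 - z_t) * h_prev + z_t * h_tilde               # blended new state

rng = np.random.default_rng(7)
n_in, n_hidden = 8, 4
W_r, W_z, W_h = (rng.normal(scale=0.1, size=(n_hidden, n_hidden + n_in)) for _ in range(3))
h = np.zeros(n_hidden)
for x_t in rng.normal(size=(6, n_in)):   # run over a 6-step toy sequence
    h = gru_step(x_t, h, W_r, W_z, W_h)
print(h)
```

Libraries differ in this detail: the Keras GRU layer, for instance, weights the previous state by z and the candidate by 1 − z, which amounts to relabeling the gate rather than a different model. Compared with the LSTM step, the GRU keeps a single state vector and two gates, which is the source of its lower computational cost.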

4.2. Construction of the Two-Dimensional Matrix

As mentioned earlier, highway traffic flow is influenced by both internal and external factors. Specifically, since traffic flow is time-series data, historical data are required for traffic flow prediction to forecast future traffic based on historical trends. Additionally, traffic flow at adjacent stations and upstream/downstream stations can also impact the target station’s traffic flow. External dynamic factors can cause fluctuations in traffic as well. Therefore, considering these aspects, a matrix containing time, space, and weather features is constructed.

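The specific construction is shown in Formula (14):

$$M_t = \begin{bmatrix} X_t \\ E_t \end{bmatrix} = \begin{bmatrix} x^{(1)}_{t-n+1} & x^{(1)}_{t-n+2} & \cdots & x^{(1)}_{t} \\ \vdots & \vdots & & \vdots \\ x^{(k)}_{t-n+1} & x^{(k)}_{t-n+2} & \cdots & x^{(k)}_{t} \\ e^{(1)}_{t-n+1} & e^{(1)}_{t-n+2} & \cdots & e^{(1)}_{t} \\ \vdots & \vdots & & \vdots \\ e^{(m)}_{t-n+1} & e^{(m)}_{t-n+2} & \cdots & e^{(m)}_{t} \end{bmatrix} \tag{14}$$

where the rows $x^{(1)}, \ldots, x^{(k)}$ (collectively $X_t$) represent the traffic flow data, and the rows $e^{(1)}, \ldots, e^{(m)}$ (collectively $E_t$) represent the weather data for the same time period. $X_t$ contains two types of data: the historical traffic flow data of the station itself and the traffic flow data from adjacent stations. The columns of the matrix are sorted in chronological order and represent the $n$ most recent historical data points.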
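The matrix described above can be assembled by stacking the traffic rows (target station plus adjacent stations) on top of the weather rows and keeping the most recent columns. This is a minimal sketch assuming all series are already aligned on the same 20 min grid; the function name `build_feature_matrix` and the argument layout (k traffic series and m weather series, each of length T) are hypothetical.

```python
import numpy as np

def build_feature_matrix(flows, weather, t, n):
    """Stack traffic-flow rows on top of weather rows and keep the n most
    recent columns ending at time index t (inclusive).

    flows:   (k, T) array, target station first, then adjacent stations
    weather: (m, T) array on the same time grid
    returns: (k + m, n) array whose columns run in chronological order
    """
    flows = np.asarray(flows, dtype=float)
    weather = np.asarray(weather, dtype=float)
    if flows.shape[1] != weather.shape[1]:
        raise ValueError("traffic and weather series must share the time grid")
    if t + 1 < n:
        raise ValueError("not enough history for a window of length n")
    full = np.vstack([flows, weather])    # (k + m, T)
    return full[:, t - n + 1 : t + 1]     # last n columns up to and including t

# Hypothetical toy data: 2 stations and 1 weather variable over 5 time steps
flows = np.arange(10).reshape(2, 5)       # rows: [0..4] and [5..9]
weather = np.arange(5).reshape(1, 5) * 10 # row: [0, 10, 20, 30, 40]
print(build_feature_matrix(flows, weather, t=4, n=3))
```

Recurrent layers in Keras expect inputs shaped (time steps, features), so in practice the matrix would be transposed before being fed to the network.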

4.3. Model Parameter Settings

The configuration of the model’s network structure significantly impacts its predictive performance. For the highway toll station traffic flow data in this study, parameters such as the number of filters, convolution kernels, pooling layers, LSTM layers and units, GRU layers and units, learning rate, and optimizer are determined to suit the model.

First, the learning rate and step size are determined. The learning rate is tuned with a fixed-parameter approach, in which the other parameters are held constant while the learning rate varies. Table 5 shows the model’s MAE and RMSE values under different learning rates.

From the table, when the learning rate is 0.005, the model’s error values are the smallest. Therefore, the learning rate is set to 0.005.

Next, with the learning rate fixed at 0.005, Table 6 shows the MAE and RMSE values of the model under different iteration counts.

From the table, when the iteration count is 250, the model’s error values are the smallest. Thus, the iteration count is set to 250.
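The fixed-parameter procedure (sweep one hyperparameter while the others stay fixed, keep the best value, then move to the next) can be sketched as a small coordinate-wise search. The `evaluate` callback is a hypothetical stand-in for training and validating the model; `toy_evaluate` is a synthetic error surface whose optimum is placed at 0.005 and 250 only to mirror the outcome reported above, and the candidate lists are illustrative rather than the values in Tables 5 and 6.

```python
import math

def tune_one_at_a_time(evaluate, base_params, search_space):
    """Tune hyperparameters one by one: each is swept over its candidates while
    the others stay fixed, and the value with the lowest (MAE, RMSE) is kept
    before moving on to the next hyperparameter.

    evaluate(params) -> (mae, rmse) is a caller-supplied function that would
    train the model and score it on validation data.
    """
    params = dict(base_params)
    for name, candidates in search_space.items():
        results = {value: evaluate({**params, name: value}) for value in candidates}
        params[name] = min(results, key=results.get)  # tuples compare MAE first, then RMSE
    return params

def toy_evaluate(params):
    """Synthetic error surface standing in for real training (illustration only)."""
    mae = abs(math.log10(params["learning_rate"] / 0.005)) + abs(params["epochs"] - 250) / 500
    return mae, 1.3 * mae

best = tune_one_at_a_time(
    toy_evaluate,
    base_params={"learning_rate": 0.001, "epochs": 100},
    search_space={"learning_rate": [0.001, 0.005, 0.01], "epochs": [100, 250, 400]},
)
print(best)
```

The search order matters: the learning rate is chosen first with the iteration count held at its initial value, and the iteration count is then tuned at the chosen learning rate, as in the text. Interactions between hyperparameters are not explored by this scheme.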

Three convolutional layers are used, with 224, 104, and 72 filters and kernel sizes of 6, 5, and 6, respectively, together with two pooling layers. One LSTM layer with 128 units and one GRU layer with 96 units are used. The learning rate is fixed at 0.005, and the Adam optimizer is chosen. Other parameter settings are shown in Table 7.

4.4. Sliding Window and Evaluation Metrics

In time-series prediction tasks, the model’s prediction accuracy is influenced by the chosen sequence length. This study therefore uses the sliding window technique to select an appropriate sequence length: a window of fixed size is moved along the series one step at a time, and each window of past observations forms one input sample whose target is the subsequent value.

The choice of window size directly affects the number of generated samples and the number of time steps included in each sample. For a given dataset, a smaller window captures shorter time segments and yields more samples, whereas a larger window captures longer time spans and yields fewer samples. Selecting an appropriate window size is therefore crucial for accurately predicting traffic flow.
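The trade-off between window size and sample count can be made concrete with a small windowing function. This sketch assumes one-step-ahead targets taken from the first feature column (the target station) and a 2088-step series, matching the number of 20 min records reported in Section 3.1; the function name `sliding_windows` and its parameters are hypothetical.

```python
import numpy as np

def sliding_windows(series, window, horizon=1, target_col=0):
    """Cut a (T, features) series into overlapping input windows of `window`
    steps; each target is column `target_col`, `horizon` steps after the window."""
    data = np.asarray(series, dtype=float)
    if data.ndim == 1:
        data = data[:, None]          # treat a 1-D series as a single feature
    n_samples = len(data) - window - horizon + 1
    if n_samples <= 0:
        raise ValueError("series is too short for this window and horizon")
    X = np.stack([data[i:i + window] for i in range(n_samples)])
    start = window + horizon - 1      # index of the first target
    y = data[start:start + n_samples, target_col]
    return X, y

# Sample counts for a 2088-step series and windows of 1 to 8 days (72 steps per day)
for days in range(1, 9):
    X, _ = sliding_windows(np.zeros(2088), 72 * days)
    print(days, 72 * days, len(X))    # len(X) = 2088 - window when horizon = 1
```

With a one-step horizon, each extra day of history removes 72 samples, so the 504-step (7-day) window leaves 1584 samples while the 72-step window leaves 2016. Consecutive windows overlap in all but one step, so the samples are not independent of one another.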

In terms of evaluation metrics, following the approaches in [27,28], the study employs MAE (Mean Absolute Error) and RMSE (Root Mean Square Error) to evaluate the predictive performance of the regression model. RMSE is sensitive to extreme values in the data and measures the gap between predicted results and actual observations. MAE calculates the average of the absolute errors between predicted values and true values, intuitively reflecting the average level of model prediction bias. The mathematical formulas for these two evaluation metrics are as follows:

$$\mathrm{MAE} = \frac{1}{N}\sum_{i=1}^{N} \left| y_i - \hat{y}_i \right|$$

$$\mathrm{RMSE} = \sqrt{\frac{1}{N}\sum_{i=1}^{N} \left( y_i - \hat{y}_i \right)^2}$$

where $y_i$ and $\hat{y}_i$ represent the true values and predicted values, respectively, and $N$ is the number of samples.
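Both metrics are one-liners in NumPy. The sketch below (function names hypothetical, sample values invented) also illustrates the point made above that RMSE reacts more strongly than MAE to a single large error.

```python
import numpy as np

def mae(y_true, y_pred):
    """Mean absolute error."""
    err = np.asarray(y_true, dtype=float) - np.asarray(y_pred, dtype=float)
    return float(np.mean(np.abs(err)))

def rmse(y_true, y_pred):
    """Root mean square error."""
    err = np.asarray(y_true, dtype=float) - np.asarray(y_pred, dtype=float)
    return float(np.sqrt(np.mean(err ** 2)))

y_true = [10, 20, 30]
# Several small errors (2, 2, 3)
print(mae(y_true, [12, 18, 33]), rmse(y_true, [12, 18, 33]))
# One large error (0, 0, 10)
print(mae(y_true, [10, 20, 40]), rmse(y_true, [10, 20, 40]))
```

In the first case the two metrics are close (MAE = 7/3 ≈ 2.33, RMSE = √(17/3) ≈ 2.38); in the second, a single miss of 10 vehicles gives MAE ≈ 3.33 but RMSE ≈ 5.77, because squaring amplifies the large error.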

For these reasons, an appropriate window size must be determined for this provincial highway dataset. The window sizes were set to 72 (1 day), 144 (2 days), 216 (3 days), 288 (4 days), 360 (5 days), 432 (6 days), 504 (7 days), and 576 (8 days) time steps, where each time step is a 20 min interval, and the MAE and RMSE values were calculated for each. The specific results are shown in Table 8.

From the table, it can be observed that, when the window size is 504 (7 days), the MAE and RMSE values are the lowest, indicating that the model’s prediction results are better under this window size. Based on these results, the window size is ultimately set to 504.

5. Results Comparison and Analysis

The experimental results in this section are analyzed from the following two perspectives: whether it is a holiday and whether weather factors are included. To better demonstrate the predictive performance of the proposed model, this study compares it with four other models, which are LSTM, GRU, CNN-LSTM, and CNN-GRU. The following presents the comparison of prediction results.

5.1. Comparison and Analysis of Workday Traffic Flow Prediction Results

This study takes 11–17 October 2016 as the prediction target for workdays and calculates the prediction errors of the model for each station, direction, and date, as shown in Table 9.

To better evaluate the model’s prediction accuracy, Table 10 compares the average prediction errors of the five models for 11–17 October 2016.

From the table above, it can be observed that, when predicting workday traffic flow, the proposed model achieves the lowest MAE and RMSE values, indicating superior prediction accuracy.

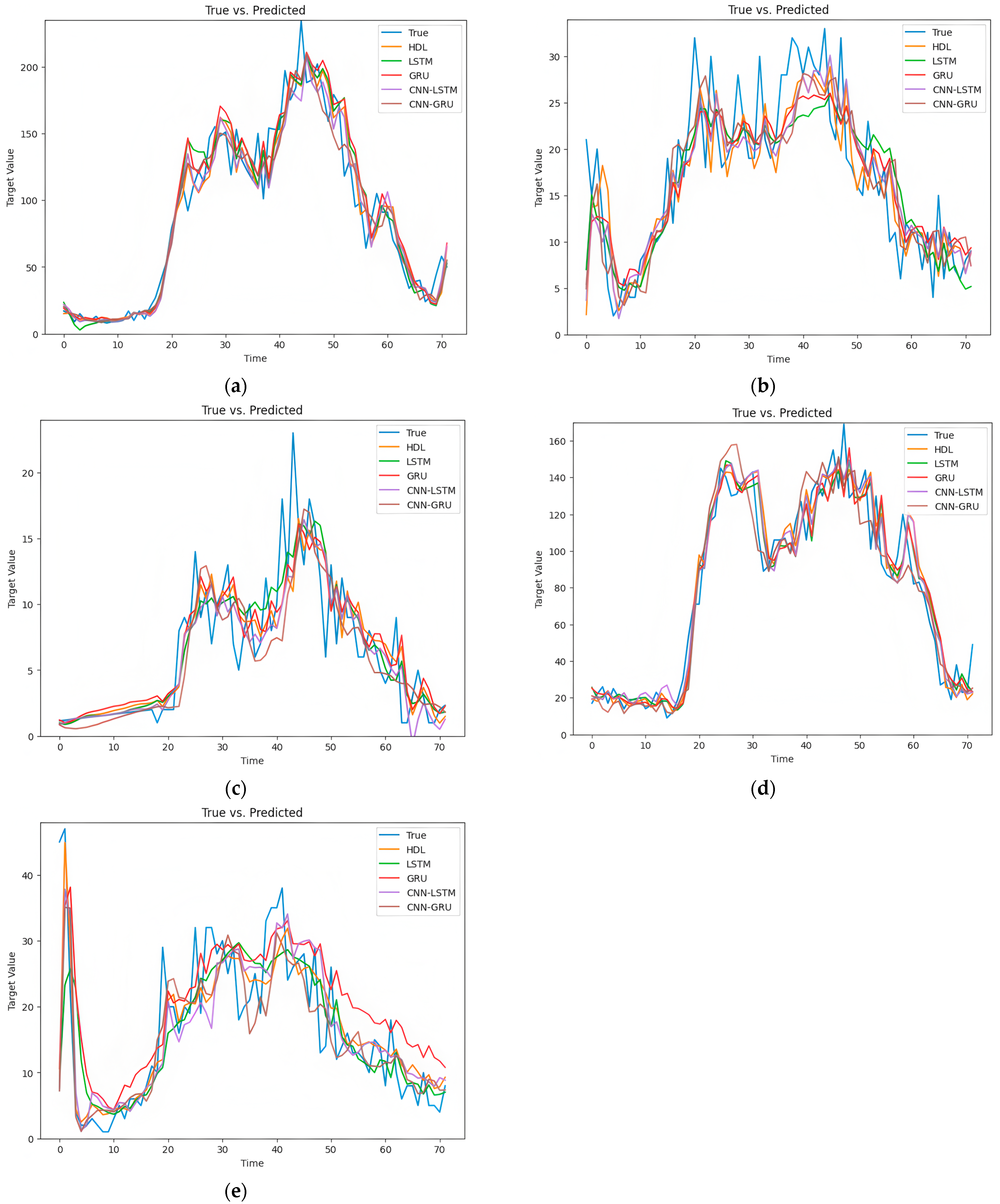

To demonstrate the prediction accuracy of the model more clearly, Figure 7 compares the prediction results of the proposed model (HDL) with those of LSTM, GRU, CNN-LSTM, and CNN-GRU for 11 October 2016.

As noted in the previous analysis, weather factors can affect the entire traffic system and cause fluctuations in traffic flow. This study therefore incorporates weather factors into the model. Using 11–17 October 2016 as the prediction target, the average prediction errors for each station are calculated and compared with those of the other four models, as shown in Table 11.
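This section does not specify how weather is encoded as model input. One common option is sketched here purely as an assumption. A categorical weather label is one-hot encoded and appended, together with a holiday flag, to each time step's flow value, so the network receives a multichannel input. The category names and the `augment_step` helper are hypothetical.

```python
from typing import List, Sequence

# Hypothetical weather categories; the paper's actual encoding is not given here.
WEATHER_CATEGORIES = ["sunny", "cloudy", "rain", "snow", "fog"]


def one_hot(category: str, categories: Sequence[str]) -> List[float]:
    """One-hot encode a categorical weather label."""
    if category not in categories:
        raise ValueError(f"unknown category: {category}")
    return [1.0 if c == category else 0.0 for c in categories]


def augment_step(flow: float, weather: str, is_holiday: bool) -> List[float]:
    """Build one time step's feature vector: [flow, holiday flag, weather one-hot...]."""
    return [flow, 1.0 if is_holiday else 0.0] + one_hot(weather, WEATHER_CATEGORIES)


# One rainy workday step with a flow of 350 vehicles (illustrative value).
features = augment_step(350.0, "rain", is_holiday=False)
# features has 1 + 1 + 5 = 7 entries
```

One-hot encoding avoids implying an order among weather types. An ordinal code, such as 0 = sunny and 1 = rain, would impose an arbitrary ranking.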

After external (weather) factors are incorporated, the model’s prediction accuracy improves at every station, in some cases substantially. For the CNN-LSTM-GRU predictions at the five stations, the MAE and RMSE decreased by 32.8% and 27.8%, 14.9% and 11.23%, 1.91% and 2.42%, 4.22% and 6.75%, and 36.3% and 30.79%, respectively. This indicates that including weather factors effectively enhances prediction accuracy.
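The percentage decreases above are relative reductions. The calculation is sketched below, assuming they are computed as (error without weather − error with weather) / error without weather × 100. The inputs are placeholders, not figures from Tables 10 or 11.

```python
def relative_reduction(before: float, after: float) -> float:
    """Percentage decrease from a baseline error `before` to a new error `after`."""
    if before <= 0:
        raise ValueError("baseline error must be positive")
    return (before - after) / before * 100.0


# Placeholder example: an MAE falling from 20.0 to 15.0.
print(relative_reduction(20.0, 15.0))  # → 25.0
```

Because the reduction is relative, a station with an already low baseline error can show a small percentage gain even when the absolute improvement is meaningful.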

5.2. Comparison and Analysis of Holiday Traffic Flow Prediction Results

In this section, 1–7 October 2016 is taken as the prediction target for holidays. The model's prediction errors for each station, direction, and date are calculated, as shown in Table 12.

To better evaluate the model’s prediction accuracy, Table 13 compares the average prediction errors of the five models for 1–7 October 2016.

Table 13 shows that, for holiday traffic flow, the proposed model achieves the lowest MAE and RMSE, indicating superior prediction accuracy.

As in the workday experiments, weather factors are then incorporated into the model. Using 1–7 October 2016 as the prediction target, the average prediction errors for each station are calculated and compared with those of the other four models, as shown in Table 14.

After external (weather) factors are incorporated, the model’s prediction accuracy also improves for holidays. For the CNN-LSTM-GRU predictions at the five stations, the MAE and RMSE decreased by 7.08% and 6.96%, 1.48% and 3.65%, 17.3% and 1.45%, 2.31% and 4.10%, and 1.62% and 9.35%, respectively. This indicates that including weather factors also improves prediction accuracy for holiday traffic flow. However, holiday prediction accuracy remains noticeably lower than workday accuracy. This gap is attributed to two causes. Less data are available for the National Day holiday than for workdays, and holiday traffic flow is influenced by more uncontrollable factors and fluctuates more strongly.

Taken together, the two sets of comparisons show that, on both workdays and holidays, the integrated model yields smaller prediction errors than the individual models, and that incorporating weather and holiday factors reduces its errors further. The proposed model outperforms the other four typical deep learning models in highway traffic flow prediction, with improvements of varying degrees.

Table 15 summarizes results reported in recent studies that apply relevant datasets to traffic flow prediction.

Table 15 shows that, on the same dataset used in this study, the proposed model outperforms the CEEMD-CNN-LSTM-Attention model.

6. Conclusions

The main work of this paper comprises two aspects. First, a deep learning model based on CNN-LSTM-GRU was proposed. LSTM handles long-term dependencies in data effectively but has a complex structure, whereas GRU has a simpler structure at the cost of some prediction accuracy. This paper therefore adopted an integrated LSTM-GRU framework to combine their respective strengths in modeling long-term dependencies. Because neither model captures spatial features in traffic flow data, the LSTM-GRU framework was further combined with a CNN to extract spatial features. The model was validated using highway traffic flow data from one province.

Second, to account for factors that affect traffic flow, this paper incorporated temporal (holiday) factors and weather data into the experiments. The results were analyzed from two perspectives: workday versus holiday, and with versus without weather data. The results show that the proposed model outperforms LSTM, GRU, CNN-LSTM, and CNN-GRU, and that incorporating temporal and weather factors further improves its prediction accuracy.

This study nonetheless has limitations that future work should address. First, the dataset used is relatively limited and outdated; to verify the applicability of the algorithm, experiments on additional and more recent datasets are needed. Second, the effects of road conditions and driver-related factors on traffic flow were not taken into account. Future research could establish a more comprehensive system of influencing factors.