1. Introduction

The advancement of the “Dual Carbon” (carbon peaking and carbon neutrality) goals profoundly impacts energy structures, industrial transformation, and public lifestyles, involving changes in energy prices, employment, and daily habits, which readily trigger widespread attention and public opinion fluctuations [1]. Information asymmetry or inadequate communication during policy implementation may lead to public misunderstandings, while the amplification effect of social media can escalate local sentiments into national events. Moreover, economic shocks in high-energy-consuming industries or resource-dependent regions, without effective transitional measures, may intensify social conflicts and generate negative public opinion [2]. Therefore, systematic analysis of “Dual Carbon” public opinion is critical for optimizing policy implementation, guiding public sentiment, and enhancing social support.

Research on “Dual Carbon” public opinion offers multi-dimensional value. First, public opinion analysis based on high-frequency big data reveals public attitudes, providing a foundation for policy design and communication strategies [3]. Second, it captures the spatiotemporal dynamics of cognition and attitudes, enabling refined governance. Third, monitoring and predicting public opinion trends help address doubts and build social consensus. Additionally, such research promotes the application of digital technologies in low-carbon governance, such as the development of intelligent analysis systems, fostering the integration of technology and policy. Thus, “Dual Carbon” public opinion research is a key driver of green and low-carbon development.

However, current studies have notable shortcomings, failing to meet policy demands. Although the dynamic and heterogeneous nature of public opinion is recognized, several challenges persist. Integration and semantic extraction of multi-source heterogeneous data, such as policy texts and social media, remain limited. There is also a lack of systematic causal modeling tools to accurately depict the dynamic relationships among policy, technology, economy, and public sentiment. Predicting the behavior of multiple stakeholders, including government, enterprises, and the public, is constrained, particularly in scenarios with information asymmetry, making it difficult to model public opinion evolution. Lastly, the generation and impact assessment of intervention strategies lack specificity and real-time applicability.

To address these issues, this study proposes a “Framework for Causal Inference and Dynamic Intervention in Dual Carbon Public Opinion Based on Reinforcement Learning Enhanced Large Language Models and Diffusion Models”. To tackle data integration challenges, we design a multimodal causal knowledge graph, leveraging improved large language models (LLMs) and diffusion models to construct a four-dimensional causal network of policy, technology, economy, and public sentiment, with dynamic updates enabled by a multi-agent system. To overcome deficiencies in causal modeling and stakeholder interaction prediction, we propose a multi-agent collaborative approach for causal inference and interaction simulation, modeling stakeholder behaviors to enhance complex dynamic forecasting capabilities. To meet the need for optimized interventions, we develop dynamic intervention strategies using generative models, with reinforcement learning from human feedback (RLHF) optimizing LLM outputs for reliable policy recommendations, validated by pass@k metrics, to enhance intervention effectiveness and trustworthiness. This study aims to provide scientific support for “Dual Carbon” policymaking and public opinion guidance, facilitating the green and low-carbon transition and fostering social consensus.

2. Literature Review

As global climate change intensifies and the urgent need for green, low-carbon transformation grows, public awareness and the evolution of public opinion regarding the “Dual Carbon” goals have become research hotspots. Scholars worldwide have conducted extensive studies on “Dual Carbon” public opinion, focusing on three key areas: public opinion identification and analysis, public opinion evolution modeling, and dynamic public opinion monitoring. While significant progress has been made, shortcomings persist.

2.1. Public Opinion Identification and Analysis

Public opinion identification and analysis technologies have advanced significantly under the “dual carbon” agenda, particularly through the integration of clustering methods and semantic-driven approaches to enhance recognition accuracy. In terms of clustering methods, Mashayekhi et al. proposed an evolutionary clustering method based on a Bayesian nonparametric Dirichlet process mixture model [4]. George et al. proposed an integrated clustering and BERT framework to improve topic modeling [5]. Chen et al. applied an improved Single-Pass clustering method to disaster-related opinion monitoring, offering a reference for intelligent monitoring of policy-related opinions on carbon neutrality [6]. On the semantic-driven front, Zhang et al. introduced an innovative integration framework that merges two representation approaches, enabling the transfer of topic information into the corresponding semantic embedding structure [7]. Diego et al. integrated word embeddings with topic models to improve the semantic representation of documents [8]. Jiang et al. proposed a topic detection and tracking method based on time-aware document embeddings, suitable for real-time news and microblog data [9]. Chen et al. developed a simulation and control strategy for online opinion by integrating sentiment analysis models, topic computation models, and the SEIR model [10].

2.2. Public Opinion Evolution Modeling

Modeling the evolution of public opinion primarily relies on graph-based methods and topic modeling to reveal dynamic trends. Regarding graph-based approaches, Hassan et al. proposed the KeyGraph algorithm to analyze public opinion structures based on keyword co-occurrence [11]. Lv et al. proposed a method combining document and knowledge-level similarity to generate timelines of news events, segmenting sub-events using community detection algorithms [12]. Weng et al. used Transformer models and the HDBSCAN clustering algorithm to identify key topics in scientific publications [13]. Li et al. proposed a new Revised Medoid-Shift method and compared the performance of different methods on datasets with and without ground truth labels [14]. In the domain of topic modeling, Churchill et al. proposed the Dynamic Topic-Noise Discriminator and Dynamic Noiseless Latent Dirichlet Allocation, improving dynamic topic modeling for social media by effectively handling noise [15]. Xue et al. used topic modeling to analyze public opinions on waste classification from Sina Weibo, identifying sentiment trends and specific concerns, and proposed policy improvement suggestions based on these findings [16]. Muthusami et al. evaluated the quality of short-text topic modeling using clustering methods and silhouette analysis [17].

2.3. Dynamic Public Opinion Monitoring

Dynamic monitoring of public opinion is achieved through semantic similarity and statistical learning methods. For semantic similarity methods, Xu et al. utilized the Latent Dirichlet Allocation model and Gibbs Sampling method to extract and track topics in online news texts [18]. Sun et al. proposed a context-based sentence similarity framework that measures similarity by comparing the generation probabilities of two sentences [19]. For statistical learning methods, Zhang et al. used LS-SVM in conjunction with LSI to reduce feature dimensionality in vector space and improve classification accuracy [20]. Yeh et al. proposed a conceptual dynamic Latent Dirichlet Allocation model for topic detection and tracking [21]. Rizky et al. developed a retweet prediction system using an Artificial Neural Network optimized with Harmony Search [22].

2.4. Limitations and This Study

Although research on “dual carbon” public opinion has made progress in opinion identification and analysis, evolution modeling, and dynamic monitoring, several limitations persist, summarized as follows:

First, there is insufficient integration between technology and data. Most existing studies rely on single-method approaches, making it difficult to handle the complexity of heterogeneous data from multiple sources such as policy documents and social media. Moreover, the depth of multimodal data integration is limited, restricting the ability to capture deep semantic relationships among policy, economy, and public sentiment. This hinders a comprehensive depiction of the “policy-public” interaction and the transmission chains of public opinion.

Second, there is a lack of causal reasoning and dynamic modeling. Many studies remain at the level of correlation analysis and fail to accurately model the causal chain of “policy stimulus-public sentiment-opinion dissemination”. Furthermore, the reliance on static rules or historical data makes it difficult to predict real-time opinion changes or capture dynamic polarization processes, which limits the adaptability and predictive power of current models.

Lastly, existing intervention strategies lack scientific rigor. Most current interventions depend on static rules and do not optimize dynamic interventions based on causal effects. There is also a lack of quantitative evaluation of intervention strength and design of collaborative mechanisms among multiple stakeholders, affecting the sustainability of intervention outcomes.

Recent advancements in resilient consensus for multi-agent systems (MASs) have provided valuable insights into handling adversarial conditions in networked systems, which are analogous to the challenges of misinformation or collusive negative sentiments in public opinion dynamics. Related studies primarily focus on attack isolation, detection, consensus achievement, and applications in specific scenarios. For instance, Zhao et al. [23] proposed a generalized graph-dependent isolation strategy based on graph topology to detect and isolate collusive false data injection and covert attacks in interconnected systems, thereby maintaining system integrity. Wang et al. [24] investigated resilient consensus in discrete-time MASs with dynamic leaders and time delays under cyber-attacks, combining theoretical analysis with experimental validation using UAVs to extend applications in time-delay scenarios. Zhao et al. [25] studied resilient consensus in high-order networks under collusive attacks, developing algorithms to ensure agent agreement despite malicious interference. Yang et al. [26] focused on resilient bipartite consensus for high-order heterogeneous MASs under Byzantine attacks, supporting cooperation and competition among agents while mitigating malicious behaviors, highlighting the handling of heterogeneous systems. Zhao et al. [27] adopted an attack isolation-based approach for higher-order multi-agent networks, achieving consensus by excluding extreme values and isolating compromised nodes. Jahangiri-Heidari et al. [28] developed a resilient consensus scheme for nonlinear MASs under false data injection attacks on communication channels, employing detection and isolation techniques to further emphasize attack detection. Wang et al. [29] proposed distributed resilient adaptive consensus tracking based on K-filters to address deception attacks in nonlinear MASs, ensuring tracking performance despite uncertainties and attacks. Zhu et al. [30] introduced secure consensus control using improved PBFT and Raft blockchain algorithms to enhance the security of MASs against attacks in industrial environments, integrating blockchain technology into consensus mechanisms.

Although these studies have advanced resilient MASs by emphasizing attack isolation and detection, consensus achievement, adaptive tracking, and blockchain integration, they primarily target engineered systems and do not incorporate natural language processing or generative AI to handle unstructured, multimodal data from social contexts. In contrast, the priority of this study lies in adapting and extending MAS resilience concepts to the domain of “Dual Carbon” public opinion, where “attacks” manifest as collusive misinformation or sentiment shifts rather than direct cyber threats. By integrating reinforcement learning-enhanced LLMs for semantic alignment and causal discovery, diffusion models for simulating opinion propagation, and MASs for modeling interactions among stakeholders like government, enterprises, and the public, this framework constructs a four-dimensional causal network encompassing policy, technology, economy, and public sentiment. This approach goes beyond consensus to include predictive causal inference and real-time dynamic interventions. Unlike the control-focused methods mentioned above, which lack mechanisms for generative strategy optimization or quantitative causal effect estimation, such as through Conditional Average Treatment Effect, this study employs multi-agent deep reinforcement learning integrated with causal Bayesian networks to design collaborative, adaptive intervention measures, enhancing sustainability and applicability to policy guidance. This interdisciplinary integration addresses the unique challenges of public opinion evolution, such as information asymmetry and spatiotemporal dynamics, offering superior causal modeling, multi-agent collaboration, and intervention efficacy compared to existing resilient MAS works, as validated through comparisons with baselines like LDA, BERT, and SIR models.

In light of these limitations, this study proposes a collaborative framework integrating reinforcement learning-enhanced LLMs, diffusion models, and MASs. The framework constructs a four-dimensional causal network covering “policy–technology–economy–public sentiment”, employing reinforcement learning to achieve semantic alignment and causal discovery across multimodal data. It embeds LLM-based decision logic generators and designs a multi-agent game model that incorporates a dual-loop reasoning mechanism based on counterfactual and intervention responses, thereby enhancing the dynamic modeling capacity needed to address the real-time nature of opinion evolution. Additionally, the study develops a quantitative model for intervention intensity estimation based on Conditional Average Treatment Effect (CATE) and optimizes collaborative intervention strategies and risk warning mechanisms for government, media, and platforms by integrating multi-agent deep reinforcement learning (MADRL) with causal Bayesian networks. This enhances the accuracy and sustainability of public opinion modeling and intervention efforts.

3. Multimodal Causal Inference and Collaborative Architecture Design

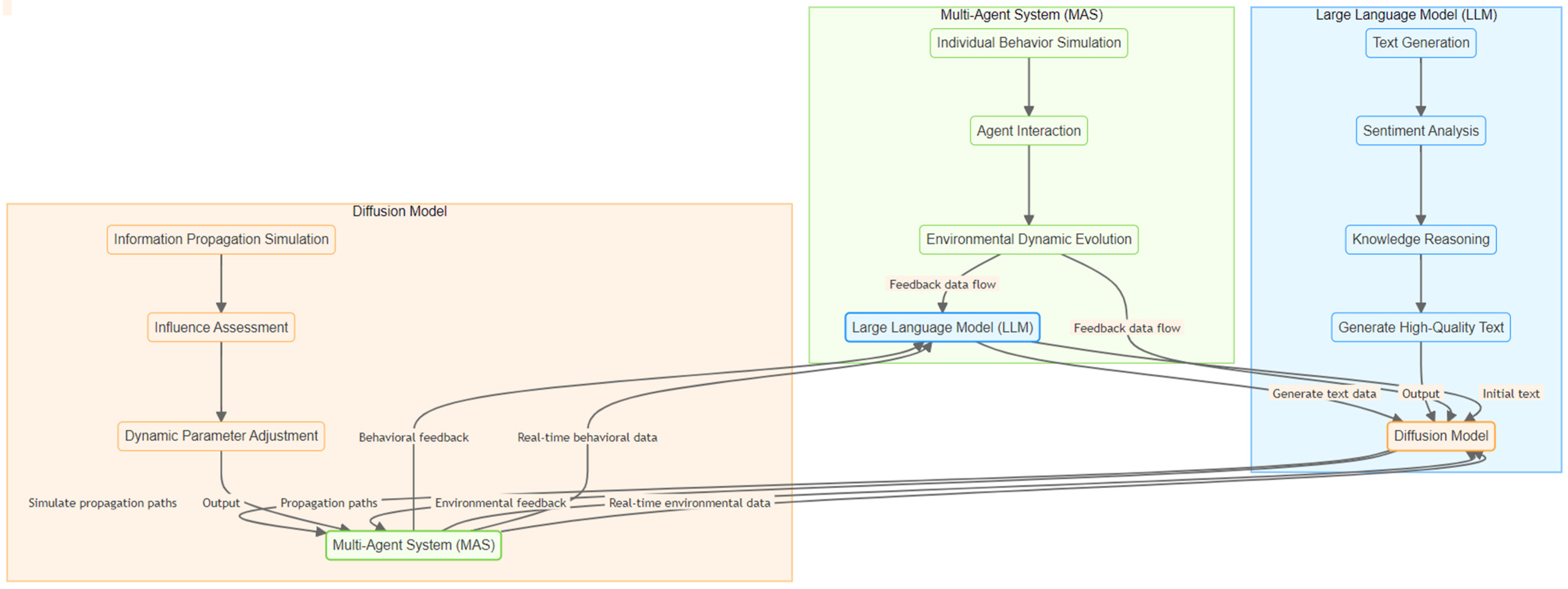

“Dual Carbon” public opinion involves multi-source heterogeneous data, including policy texts, social media, and expert opinions, which traditional methods struggle to analyze for complex causal relationships. This study proposes a collaborative architecture integrating LLMs, diffusion models, and MASs to construct a multimodal causal knowledge graph, simulate multi-agent interactions, and reveal causal emergence patterns through counterfactual reasoning. This framework provides scientific support for public opinion evolution modeling and intervention. The collaborative architecture is illustrated in Figure 1.

3.1. Multimodal Data Fusion and Causal Graph Generation

Multimodal data fusion aims to integrate policy texts, social media posts, and economic data to uncover deep causal relationships. This study designs the following steps:

First, the semantic understanding capabilities of large language models are utilized to extract preliminary causal hypotheses. Large language models process multi-source data through pre-trained text analysis modules to identify causal patterns, such as policy announcements triggering media coverage. MASs provide collaborative inputs and incorporate environmental feedback to optimize large language model inference, generating high-quality causal hypotheses.

Second, diffusion models simulate the temporal and spatial dissemination paths of public opinion, quantifying the dynamic characteristics of causal relationships. These models take preprocessed multi-source data and use time series modeling and spatial propagation analysis to create a dynamic picture of public opinion spread, such as media coverage triggering cascading emotional reactions across regions. The behavior decision module of MASs predicts public opinion evolution trends based on these features and feeds the results back to the large language model.

To ensure the reliability of causal relationships, a causal discovery algorithm based on adversarial training is designed. Agents within the multi-agent system are divided into two types: hypothesis generators and counterfactual validators. Through interaction, they verify the robustness of causal hypotheses. For example, one agent generates a hypothesis like policy announcement leading to public sentiment change, while another validates it through counterfactual analysis to rule out confounding effects. Environmental feedback adjusts agent strategies, forming a closed-loop optimization that eliminates spurious correlations.

Ultimately, a multimodal causal knowledge graph is constructed, encompassing policy, public behavior, and corporate actions. Large language models integrate multi-source data to generate causal chains, diffusion models provide temporal and spatial dimensions, and MASs validate causal directions. The graph is output in natural language descriptions or visualizations, aiding decision-makers in understanding complex causal mechanisms. This design enhances the precision of causal analysis, providing data support for policymaking and public opinion management.

3.2. Multi-Agent Interaction Simulation and Counterfactual Causal Inference

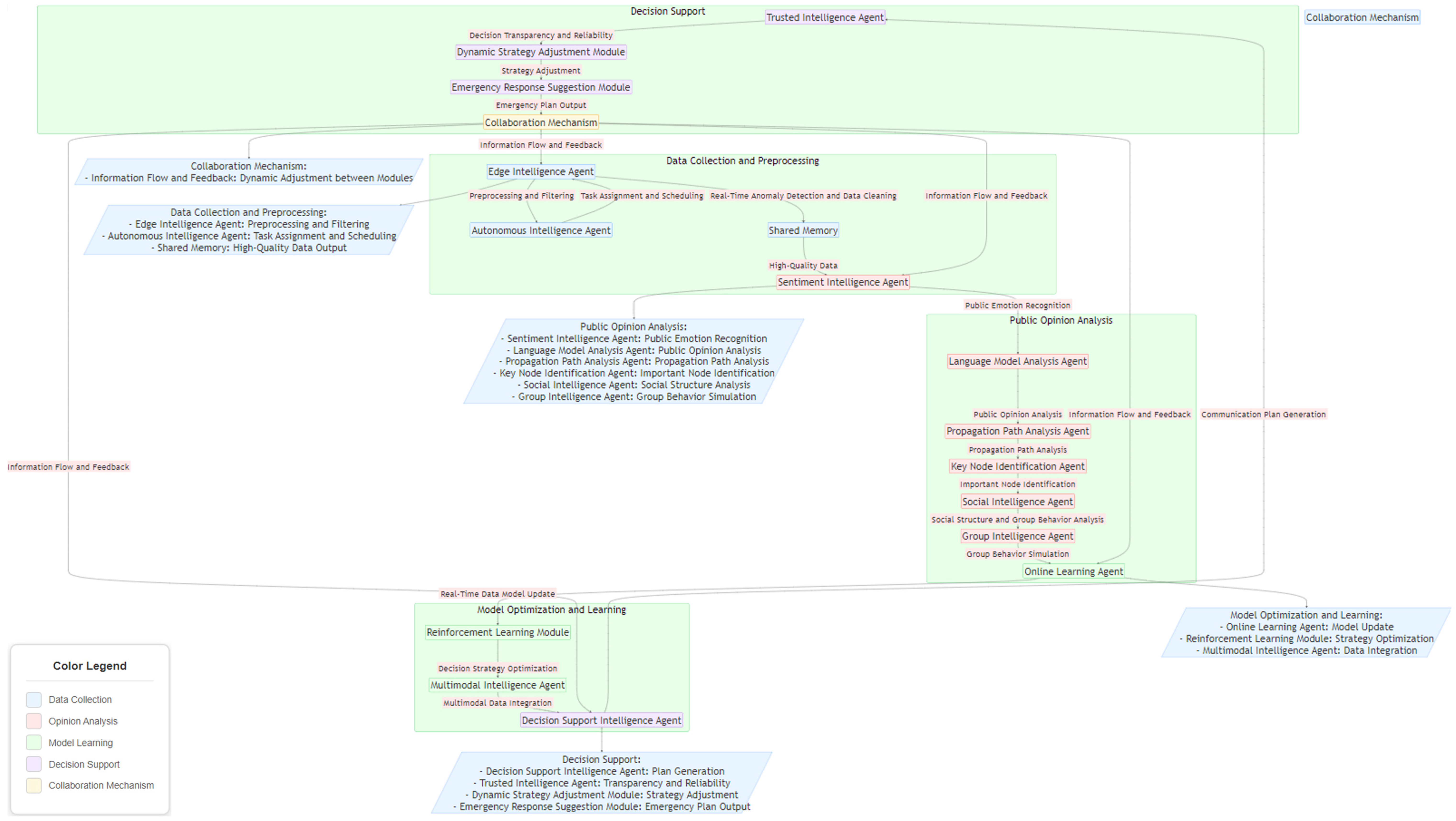

The evolution of “Dual Carbon” public opinion involves interest-based interactions among multiple stakeholders, including government, enterprises, and the public, which single models struggle to simulate due to their complex dynamics. This study employs a MAS to construct a collaborative decision-making and causal inference framework, designing differentiated agents and a dual-loop inference mechanism of counterfactuals and intervention responses to predict causal emergence patterns in interaction scenarios. The architecture of the MAS is illustrated in Figure 2.

First, differentiated agents are constructed to simulate multi-agent behaviors. Agents represent roles such as the government optimizing emission reduction policies, enterprises balancing emission reduction costs, and the public reacting based on emotions, with decision logic generators based on large language models embedded to generate behavior strategies aligned with their respective objectives. Multi-level objective functions couple macro-constraints like carbon emission targets with micro-characteristics such as public approval rates, coordinating decisions across agents through weighted optimization.

Second, the collaborative decision-making module of the multi-agent system simulates dynamic interactions among agents to predict the cascading effects of interventions. For example, it analyzes how policy announcements trigger public emotional fluctuations through media dissemination, which in turn influence corporate actions. Diffusion models provide spatiotemporal propagation simulations while large language models evaluate the semantic impacts of interventions such as the amplifying effects of media coverage. The multi-agent system integrates results to predict the transmission pathways of causal chains.

Third, a counterfactual–intervention response dual-loop reasoning mechanism is developed to assess causal relationships. The counterfactual reasoning module compares intervention scenarios such as policy announcements with non-intervention scenarios like public reactions without policies to identify the authenticity of causality. The decision support module integrates multi-agent system results to generate optimization suggestions such as adjusting policy release timings to mitigate negative emotions. Large language models translate analyses into interpretable outputs while the multi-agent system feedback mechanism optimizes agent behaviors to ensure reasoning robustness.

Finally, the risk early-warning module of the multi-agent system monitors the effectiveness of game strategies in real time. When detecting abnormal public opinion such as high-risk emotional diffusion, it combines semantic analysis from large language models and propagation predictions from diffusion models to adjust agent behaviors and generate new intervention strategies. A closed-loop feedback mechanism ensures the timeliness of reasoning, providing support for dynamic interventions. For instance, the system may recommend optimizing policy communication to enhance public acceptance.

This framework simulates multi-agent games in dual carbon public opinion through differentiated agents, multi-level objective functions, and dual-loop reasoning mechanisms, revealing laws of causal emergence and supporting policy optimization and public opinion management.
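The counterfactual comparison in the dual-loop mechanism can be illustrated with a minimal sketch. It assumes that the simulation produces one scalar outcome per run (here a hypothetical share of negative-sentiment posts) for both the intervention and the non-intervention scenario. The function name `counterfactual_effect` and the sample values are illustrative assumptions, not part of the framework, and a plain difference in means does not by itself rule out confounding.

```python
from statistics import mean


def counterfactual_effect(outcomes_with, outcomes_without):
    """Mean outcome under intervention minus mean outcome without it."""
    if not outcomes_with or not outcomes_without:
        raise ValueError("both scenarios need at least one simulated outcome")
    return mean(outcomes_with) - mean(outcomes_without)


# Hypothetical negative-sentiment shares from repeated simulation runs.
with_policy = [0.30, 0.34, 0.32]
without_policy = [0.22, 0.26, 0.24]
effect = counterfactual_effect(with_policy, without_policy)
```

A positive `effect` here would suggest that the simulated announcement raises negative sentiment relative to the non-intervention baseline, which is the kind of signal the decision support module could use when proposing a different release timing.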

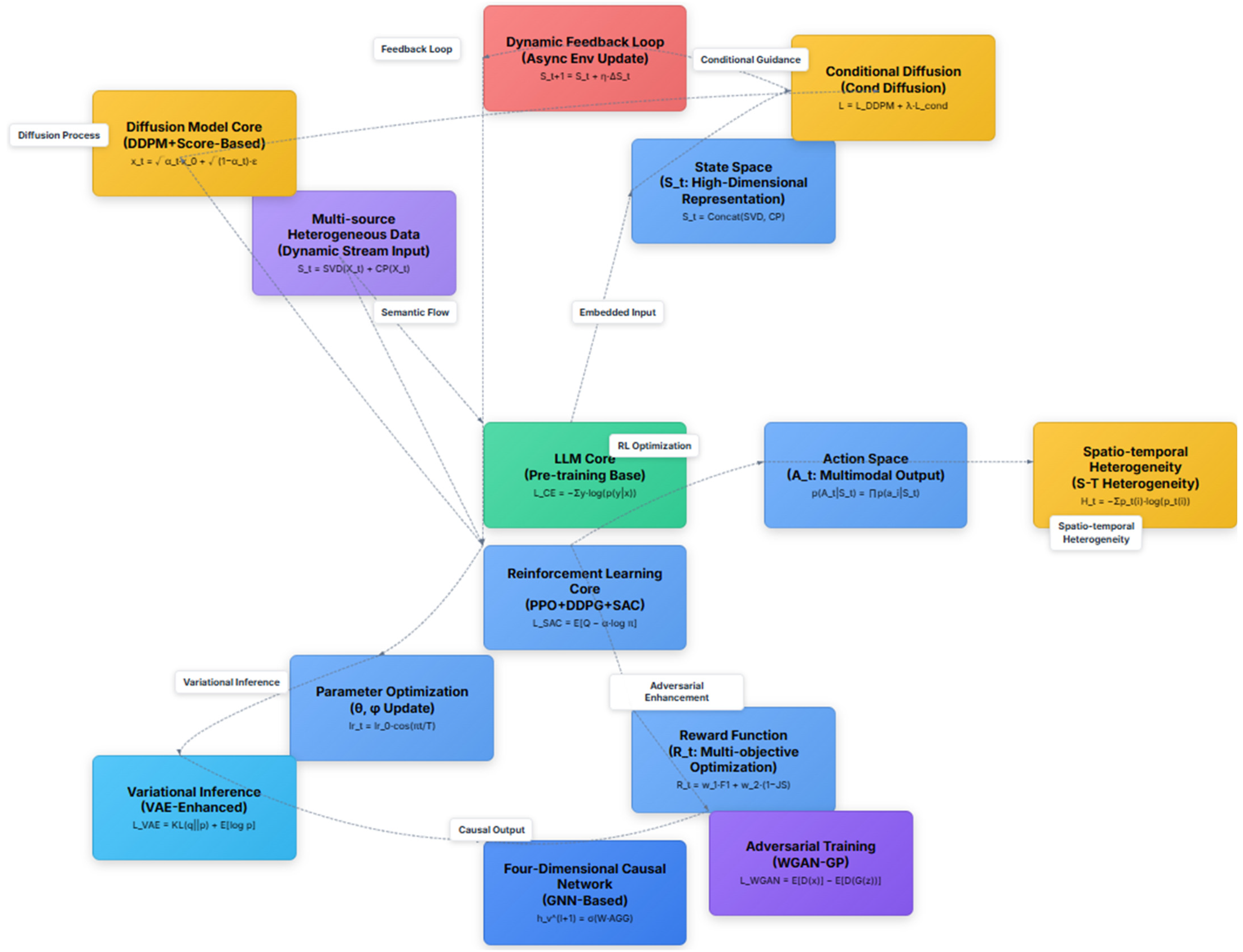

4. Construction of Four-Dimensional Causal Network

To capture the spatiotemporal dynamics and underlying causal patterns of dual carbon public opinion dissemination, this study develops a reinforcement learning-enhanced framework that integrates large language models and diffusion models to construct a four-dimensional causal network comprising policy, technology, economy, and public sentiment. In this network, nodes represent key entities such as policy directives, technological developments, economic indicators, and emotional responses, while edges indicate directional causal relationships, forming a structured knowledge graph. The framework combines the semantic parsing capabilities of large language models, the spatiotemporal modeling strength of diffusion models, and the strategy optimization of reinforcement learning to reveal the causal mechanisms governing information spread and sentiment evolution. The technical architecture, as shown in Figure 3, outlines the complete process of input processing, reinforcement learning optimization, diffusion-based generation, and feedback output.

4.1. Data Input and Pre-Training

In the input stage, multi-source heterogeneous data such as policy documents, social media posts, and economic indicators are processed to generate high-quality representations through feature extraction. The multi-source heterogeneous data module is based on matrix and tensor decomposition theories, utilizing Singular Value Decomposition (SVD) and conditional probability (CP) distributions to extract semantic and structural features. The formulations are as follows:

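$$s_t = \mathrm{Concat}\big(\mathrm{SVD}(X),\ \mathrm{CP}(X)\big)$$

where $s_t$ denotes the state vector at time step $t$, and $X$ represents the input data matrix. $\mathrm{SVD}(X)$ preserves the principal semantic information, while $\mathrm{CP}(X)$ captures conditional dependencies and reduces data complexity.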

Subsequently, the core LLM module is built upon a pre-trained model and optimizes semantic understanding through cross-entropy loss.

$$\mathcal{L}_{CE} = -\sum_{i} y_i \log \hat{y}_i$$

where $\mathcal{L}_{CE}$ denotes the cross-entropy loss, $y_i$ is the ground truth label, and $\hat{y}_i$ represents the predicted probability distribution. The symbol $\sum$ represents summation, aggregating the loss terms over all classes or samples, and $\log$ denotes the logarithm function, typically the natural logarithm, used to compute the information-theoretic divergence in the loss calculation. This module provides a robust semantic foundation for the reinforcement learning and diffusion processes.
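As a minimal sketch of the cross-entropy loss described above, the following computes the negative sum of label-weighted log-probabilities for a single sample. The clamp value `eps` and the three-class example are assumptions for illustration only.

```python
import math


def cross_entropy(y_true, y_pred, eps=1e-12):
    """Cross-entropy -sum_i y_i * log(y_hat_i) for one sample."""
    if len(y_true) != len(y_pred):
        raise ValueError("label and prediction vectors must have equal length")
    # Clamp predictions away from zero so that log() is always defined.
    return -sum(y * math.log(max(p, eps)) for y, p in zip(y_true, y_pred))


# Hypothetical 3-class example with a one-hot label for the second class.
loss = cross_entropy([0.0, 1.0, 0.0], [0.2, 0.7, 0.1])
```

With a one-hot label, only the predicted probability of the true class contributes, so the loss reduces to the negative log of that probability.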

4.2. Reinforcement Learning Optimization

Reinforcement learning (RL) drives the dynamic construction of the four-dimensional causal network through Soft Actor-Critic (SAC) and Proximal Policy Optimization (PPO), within the framework of a Markov Decision Process (MDP), supporting strategy optimization in multi-agent game settings. The formulations are as follows [31,32,33].

① SAC loss function:

$$L_{SAC} = \mathbb{E}_{s_t,\, a_t \sim \pi}\big[\alpha \log \pi(a_t \mid s_t) - Q(s_t, a_t)\big]$$

where $L_{SAC}$ denotes the SAC loss function, guiding the optimization of the policy. $\mathbb{E}$ denotes the mathematical expectation operator, computing the expected value over the distribution. $Q(s_t, a_t)$ denotes the state-action value function, $\alpha$ denotes the entropy regularization coefficient, and $\pi(a_t \mid s_t)$ represents the action probability distribution. $\log$ denotes the logarithm function, typically the natural logarithm, used in entropy calculations. SAC balances exploration and exploitation by maximizing entropy.
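A sample-based estimate of the SAC policy loss can be sketched as follows, assuming the standard form in which the loss is the expectation of the entropy-weighted log-probability minus the state-action value. The default `alpha` and the input values are hypothetical.

```python
import math


def sac_policy_loss(log_probs, q_values, alpha=0.2):
    """Sample mean of alpha * log pi(a|s) - Q(s, a)."""
    if len(log_probs) != len(q_values) or not log_probs:
        raise ValueError("need equally sized, non-empty samples")
    terms = [alpha * lp - q for lp, q in zip(log_probs, q_values)]
    return sum(terms) / len(terms)


# Two hypothetical sampled actions with their log-probabilities and Q-values.
loss = sac_policy_loss([math.log(0.5), math.log(0.25)], [1.0, 2.0], alpha=0.5)
```

Minimizing this quantity favors actions with high value and, through the `alpha` term, policies that keep their log-probabilities low, that is, policies with higher entropy.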

② PPO objective function:

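$$L^{CLIP}(\theta) = \mathbb{E}_t\Big[\min\big(r_t(\theta)\,\hat{A}_t,\ \mathrm{clip}\big(r_t(\theta),\, 1-\epsilon,\, 1+\epsilon\big)\,\hat{A}_t\big)\Big]$$

where $L^{CLIP}(\theta)$ denotes the clipped objective function for the PPO algorithm, guiding policy optimization. $\mathbb{E}_t$ denotes the mathematical expectation operator, computing the expected value over the distribution. $r_t(\theta)$ denotes the probability ratio between the new and old policies, $\hat{A}_t$ is the advantage function, $\epsilon$ is the clipping parameter, $\mathrm{clip}(\cdot)$ denotes the clipping function, limiting the probability ratio within the range $[1-\epsilon,\, 1+\epsilon]$, and $\min(\cdot)$ denotes the minimum function, selecting the smaller value between the clipped and unclipped terms. PPO stabilizes policy updates through a clipping mechanism.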
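The clipping mechanism can be made concrete with a short sketch of the standard PPO clipped surrogate, averaged over a batch of samples. The ratio and advantage values are assumed to be precomputed, and the helper names are illustrative.

```python
def clip(x, lo, hi):
    """Limit x to the closed interval [lo, hi]."""
    return max(lo, min(hi, x))


def ppo_clip_objective(ratios, advantages, eps=0.2):
    """Batch mean of min(r * A, clip(r, 1 - eps, 1 + eps) * A)."""
    if len(ratios) != len(advantages) or not ratios:
        raise ValueError("need equally sized, non-empty samples")
    terms = []
    for r, adv in zip(ratios, advantages):
        unclipped = r * adv
        clipped = clip(r, 1.0 - eps, 1.0 + eps) * adv
        terms.append(min(unclipped, clipped))
    return sum(terms) / len(terms)
```

For a positive advantage, a ratio of 1.5 with `eps=0.2` is capped at 1.2, so the policy gains nothing by moving further from the old policy in a single update.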

③ Multi-agent value function:

$$Q_i(s, a_i, a_{-i}) = \mathbb{E}\big[r_i + \gamma\, Q_i(s', a_i', a_{-i}')\big]$$

where $Q_i$ denotes the value function for the $i$-th agent, estimating the expected return given state $s$, action $a_i$, and the actions of other agents $a_{-i}$. $\mathbb{E}$ denotes the mathematical expectation operator, computing the expected value over the distribution. $r_i$ denotes the immediate reward received by the $i$-th agent. $\gamma$ denotes the discount factor, weighting the importance of future rewards. $s$ denotes the current state of the environment. $a_i$ denotes the action taken by the $i$-th agent. $a_{-i}$ denotes the actions taken by all other agents except the $i$-th agent. $s'$ denotes the next state of the environment. $a_i'$ denotes the next action of the $i$-th agent. $a_{-i}'$ denotes the next actions of all other agents. This formulation evaluates the expected return in multi-agent game scenarios.
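One way to read the multi-agent value function is as a temporal-difference target: the agent's immediate reward plus the discounted value of the next joint state-action. The sketch below applies one tabular update toward that target. The table layout, the learning rate `lr`, and the state and action labels are hypothetical choices made for illustration.

```python
def q_update(q_table, key, reward_i, gamma, next_key, lr=0.5):
    """Move Q_i(s, a_i, a_-i) one step toward r_i + gamma * Q_i(s', a_i', a_-i')."""
    target = reward_i + gamma * q_table.get(next_key, 0.0)
    old = q_table.get(key, 0.0)
    q_table[key] = old + lr * (target - old)
    return q_table[key]


# Keys are (state, own action, tuple of other agents' actions).
now = ("s1", "cut_emissions", ("comply",))
nxt = ("s2", "hold", ("comply",))
q = {now: 0.0, nxt: 2.0}
updated = q_update(q, now, reward_i=1.0, gamma=0.9, next_key=nxt, lr=0.5)
```

Because the key includes the other agents' actions, the same own action can receive different values depending on how the remaining stakeholders respond.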

④ State vector:

$$s_t = \mathrm{Concat}\big(\mathrm{SVD}(X),\ \mathrm{CP}(X)\big)$$

where $s_t$ denotes the state vector at time step $t$, integrating semantic and conditional features, and serves as the input to the action distribution. $\mathrm{Concat}(\cdot)$ denotes the concatenation operation, combining the outputs of $\mathrm{SVD}$ and $\mathrm{CP}$. $\mathrm{SVD}$ denotes the Singular Value Decomposition, extracting principal semantic information. $\mathrm{CP}$ denotes the conditional probability or dependency capture operation, modeling conditional relationships.

⑤ Action probability distribution:

$$\pi(A \mid s_t) = \prod_{a \in A} \pi(a \mid s_t)$$

where $A$ denotes the action set, $s_t$ denotes the state vector at time step $t$, and $a$ represents an individual action, supporting multimodal action modeling. $\prod$ denotes the product operator, aggregating probabilities over individual actions. $\pi(a \mid s_t)$ denotes the probability of an individual action $a$ given the state vector $s_t$.
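The factorized action distribution can be sketched as a product of per-action probabilities, assuming the individual actions are conditionally independent given the state vector. The action names and probabilities below are hypothetical.

```python
import math


def joint_action_prob(action_probs):
    """Product of pi(a | s_t) over the individual actions in the set."""
    if any(p < 0.0 or p > 1.0 for p in action_probs):
        raise ValueError("probabilities must lie in [0, 1]")
    return math.prod(action_probs)


# Hypothetical per-action probabilities given the current state vector.
per_action = {"release_policy_brief": 0.6, "media_clarification": 0.5}
joint = joint_action_prob(per_action.values())
```

For long action sets, summing log-probabilities and exponentiating at the end is usually more stable numerically than multiplying raw probabilities.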

⑥ Reward function:

$$R_t = w_1 \cdot F_1 - w_2 \cdot \mathrm{JS}$$

where $R_t$ denotes the reward function at time step $t$, quantifying the objective for optimization. $F_1$ denotes the $F_1$ score, $\mathrm{JS}$ represents the Jensen–Shannon divergence, and $w_1$ and $w_2$ are the corresponding weights, guiding semantic accuracy and distributional consistency.
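The reward combines an F1 score with a Jensen–Shannon divergence. The sketch below assumes the divergence enters as a penalty, so that distributional mismatch lowers the reward; that sign convention, the weight names `w1` and `w2`, and the example counts are assumptions.

```python
import math


def f1_score(tp, fp, fn):
    """F1 from true positives, false positives, and false negatives."""
    denom = 2 * tp + fp + fn
    return 2 * tp / denom if denom else 0.0


def kl(p, q):
    """KL divergence for discrete distributions; terms with p_i = 0 vanish."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)


def js_divergence(p, q):
    """Jensen-Shannon divergence via the mixture m = (p + q) / 2."""
    m = [(pi + qi) / 2 for pi, qi in zip(p, q)]
    return 0.5 * kl(p, m) + 0.5 * kl(q, m)


def reward(f1, js, w1=1.0, w2=1.0):
    """Weighted reward: accuracy term minus divergence penalty."""
    return w1 * f1 - w2 * js
```

With natural logarithms, the Jensen–Shannon divergence is bounded by log 2, so the penalty term stays on a scale comparable to the F1 score.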

⑦ Learning rate scheduling:

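$$\eta_t = \frac{\eta_0}{2}\left(1 + \cos\left(\frac{\pi t}{T}\right)\right)$$

where $\eta_t$ denotes the learning rate at time step $t$, determining the step size for model updates. $\eta_0$ denotes the initial learning rate, and $T$ denotes the total number of training steps. $\cos$ denotes the cosine function, used to modulate the learning rate, with $\pi$ here being the mathematical constant rather than the policy. The cosine annealing schedule is employed to enhance training stability.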
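Cosine annealing can be sketched in a few lines, assuming the common variant that decays from the initial learning rate to zero over the total number of training steps without restarts or a minimum floor.

```python
import math


def cosine_lr(step, total_steps, lr0):
    """Cosine-annealed learning rate: lr0 at step 0, decaying to 0 at total_steps."""
    if total_steps <= 0 or not 0 <= step <= total_steps:
        raise ValueError("step must lie in [0, total_steps]")
    return 0.5 * lr0 * (1.0 + math.cos(math.pi * step / total_steps))
```

The schedule changes slowly near the start and end of training and fastest around the midpoint, where the rate equals half of its initial value.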

⑧ Value function:

$$V^{\pi}(s_t) = \mathbb{E}_{\pi}\big[R_t + \gamma\, V^{\pi}(s_{t+1})\big]$$

where $V^{\pi}(s_t)$ denotes the value function for state $s_t$ under policy $\pi$, estimating the expected return. $R_t$ denotes the reward received at time step $t$. $\gamma$ denotes the discount factor, controlling the weight of future rewards. $s_t$ denotes the state at time step $t$. $s_{t+1}$ denotes the state at the next time step $t + 1$. The reinforcement learning module optimizes the policy through the state $s_t$, reward $R_t$, and value function $V^{\pi}(s_t)$, supporting the dynamic construction of the four-dimensional causal network.
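The recursive structure of the value function, in which the value of a state is the reward plus the discounted value of the next state, can be illustrated along a single recorded trajectory. This is a one-sample estimate under the assumption that the value after the final step is zero; the reward sequence is hypothetical.

```python
def state_values(rewards, gamma=0.9):
    """Backward recursion V_t = R_t + gamma * V_{t+1} along one trajectory."""
    values = [0.0] * (len(rewards) + 1)  # value after the last step is 0
    for t in range(len(rewards) - 1, -1, -1):
        values[t] = rewards[t] + gamma * values[t + 1]
    return values[:-1]


# Three steps with unit reward and a discount factor of 0.5.
vals = state_values([1.0, 1.0, 1.0], gamma=0.5)
```

Averaging such estimates over many trajectories sampled from the policy approximates the expectation in the definition.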

4.3. Diffusion Model Generation

The diffusion model simulates the spatiotemporal dynamics of public opinion dissemination using the Denoising Diffusion Probabilistic Model (DDPM), combined with Wasserstein GAN (WGAN) and Variational Autoencoder (VAE) to optimize the generation of causal relationships [34,35,36]. The formulations are as follows.

① Forward diffusion:

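$$x_t = \sqrt{\bar{\alpha}_t}\, x_0 + \sqrt{1 - \bar{\alpha}_t}\, \epsilon, \quad \epsilon \sim \mathcal{N}(0, I)$$

where $x_t$ denotes the noisy data, $x_0$ represents the original data, $\bar{\alpha}_t$ is the scheduling parameter, $\sqrt{\cdot}$ denotes the square root function, and $\epsilon$ is Gaussian noise following the standard normal distribution $\mathcal{N}(0, I)$. The forward process provides the foundation for the subsequent reverse denoising procedure.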
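The forward noising step has a closed form in the standard DDPM formulation: the original data are scaled by the square root of the cumulative schedule value and mixed with standard Gaussian noise scaled by the square root of its complement. The sketch below assumes that form and a caller-supplied random generator; the data vector is hypothetical.

```python
import math
import random


def forward_diffuse(x0, alpha_bar_t, rng):
    """Return (x_t, noise) with x_t = sqrt(a) * x0 + sqrt(1 - a) * noise."""
    if not 0.0 <= alpha_bar_t <= 1.0:
        raise ValueError("alpha_bar_t must lie in [0, 1]")
    scale_signal = math.sqrt(alpha_bar_t)
    scale_noise = math.sqrt(1.0 - alpha_bar_t)
    noise = [rng.gauss(0.0, 1.0) for _ in x0]  # eps ~ N(0, I)
    xt = [scale_signal * x + scale_noise * e for x, e in zip(x0, noise)]
    return xt, noise


rng = random.Random(0)
x_t, eps = forward_diffuse([1.0, -2.0], alpha_bar_t=0.5, rng=rng)
```

At a schedule value of 1 the data pass through unchanged, and at 0 the output is pure noise, which are the two ends of the forward process.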

② DDPM Loss:

$$L_{DDPM} = \mathbb{E}_{x_0,\, \epsilon,\, t}\Big[\big\lVert \epsilon - \epsilon_\theta(x_t, t) \big\rVert^2\Big]$$

where $L_{DDPM}$ denotes the DDPM loss function, quantifying the denoising objective. $\epsilon$ denotes the true Gaussian noise added during the diffusion process. $\epsilon_\theta(x_t, t)$ denotes the noise predicted by the model parameterized by $\theta$, based on the noisy data $x_t$ and time step $t$. $x_t$ denotes the noisy data at time step $t$. $t$ denotes the time step index, indicating the stage in the diffusion process. $\lVert \cdot \rVert^2$ denotes the squared L2 norm, measuring the squared difference between true and predicted noise. $\theta$ denotes the model parameters being optimized.
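The denoising objective compares the true noise with the model's prediction through a squared L2 norm. A minimal batch estimate, assuming the predicted noise vectors are already available from some model, can be written as follows.

```python
def squared_l2(true_noise, predicted_noise):
    """Squared L2 norm of the difference between two noise vectors."""
    return sum((e - p) ** 2 for e, p in zip(true_noise, predicted_noise))


def ddpm_loss(batch_true, batch_pred):
    """Batch mean of ||eps - eps_theta(x_t, t)||^2."""
    if len(batch_true) != len(batch_pred) or not batch_true:
        raise ValueError("need equally sized, non-empty batches")
    total = sum(squared_l2(e, p) for e, p in zip(batch_true, batch_pred))
    return total / len(batch_true)
```

The batch mean stands in for the expectation over data, noise, and time steps in the full objective.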

③ Denoising Distribution:

$$p_\theta(x_{t-1} \mid x_t) = \mathcal{N}\big(x_{t-1};\ \mu_\theta(x_t, t),\ \Sigma_\theta(x_t, t)\big)$$

where $p_\theta(x_{t-1} \mid x_t)$ denotes the conditional probability distribution of the data at time step $t - 1$ given the data at time step $t$, parameterized by $\theta$. $\mathcal{N}$ denotes the Gaussian distribution. $x_{t-1}$ denotes the data at time step $t - 1$. $x_t$ denotes the noisy data at time step $t$. $\mu_\theta(x_t, t)$ denotes the mean of the Gaussian distribution, predicted by the model with parameters $\theta$. $\Sigma_\theta(x_t, t)$ denotes the covariance matrix of the Gaussian distribution, predicted by the model with parameters $\theta$. $t$ denotes the time step index, indicating the stage in the diffusion process. $\theta$ denotes the model parameters being optimized. $\mid$ denotes conditioning, indicating the dependency of $x_{t-1}$ on $x_t$.

④ Total Loss:

$$L_{total} = L_{DDPM} + \lambda\, L_{cond}$$

where $L_{cond}$ denotes the conditional loss based on LLM features, and $\lambda$ is a balancing coefficient between the two loss components. $L_{DDPM}$ refers to the loss function of the DDPM.

⑤ Temporal Entropy:

$$H_t = -\sum_{s} p_t(s) \log p_t(s)$$

where $H_t$ denotes the entropy at time step $t$, and $p_t(s)$ represents the probability of state $s$ at time step $t$. $\sum$ denotes the summation operator, aggregating over all possible states $s$. $\log$ denotes the logarithm function, typically the natural logarithm, used to compute the entropy term. This formulation captures the dynamic variations in spatiotemporal features and serves as a basis for adjusting the covariance in the denoising distribution.
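The temporal entropy is the Shannon entropy of the state distribution at a given time step. The sketch below uses natural logarithms and treats zero-probability states as contributing nothing; the example distributions are hypothetical.

```python
import math


def temporal_entropy(probs):
    """Shannon entropy -sum_s p(s) * log p(s) of one time step's distribution."""
    if abs(sum(probs) - 1.0) > 1e-9:
        raise ValueError("probabilities must sum to 1")
    return -sum(p * math.log(p) for p in probs if p > 0)


# Entropy of a hypothetical sentiment-state distribution at two time steps.
h_early = temporal_entropy([0.25, 0.25, 0.25, 0.25])
h_late = temporal_entropy([0.85, 0.05, 0.05, 0.05])
```

A drop in entropy between time steps indicates that opinion mass is concentrating on fewer states.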

⑥ WGAN Loss Function:

$$L_{WGAN} = \mathbb{E}_{x \sim p_{data}}\big[D(x)\big] - \mathbb{E}_{z \sim p_z}\big[D(G(z))\big]$$

where $L_{WGAN}$ denotes the loss function of the WGAN, $D(x)$ is the output of the discriminator for real data $x$, $G(z)$ represents the fake data generated by the generator based on the latent variable $z$, $D(G(z))$ is the discriminator’s output for the generated data, and $\mathbb{E}_{z \sim p_z}[D(G(z))]$ refers to the expected score for the fake data. The generator and discriminator are optimized using the Wasserstein distance, which enhances generation quality and addresses the instability issues commonly encountered in traditional GAN training.
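The WGAN objective contrasts the discriminator's average score on real data with its average score on generated data. The sketch below estimates that gap from precomputed scores; the sign convention (real minus fake, maximized by the discriminator) is the common one and is assumed here, and the Lipschitz constraint on the discriminator is not modeled.

```python
def wgan_score_gap(real_scores, fake_scores):
    """Sample estimate of E[D(x)] - E[D(G(z))]."""
    if not real_scores or not fake_scores:
        raise ValueError("both score lists must be non-empty")
    mean_real = sum(real_scores) / len(real_scores)
    mean_fake = sum(fake_scores) / len(fake_scores)
    return mean_real - mean_fake
```

In training, the discriminator would be updated to increase this gap and the generator to raise the second term, which shrinks the gap.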

⑦ VAE Loss:

$$L_{VAE} = D_{KL}\big(q(z \mid x)\,\|\,p(z)\big) - \mathbb{E}_{q(z \mid x)}\big[\log p(x \mid z)\big]$$

where $L_{VAE}$ denotes the loss function of the VAE, $D_{KL}\big(q(z \mid x)\,\|\,p(z)\big)$ represents the Kullback–Leibler (KL) divergence, $\|$ denotes the divergence operator in the context of KL divergence, and $\mathbb{E}_{q(z \mid x)}[\log p(x \mid z)]$ is the expected reconstruction likelihood. The variational inference module optimizes the latent representation by minimizing the KL divergence and maximizing the reconstruction likelihood, thereby ensuring effective modeling of the latent space to support generative tasks.
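For a concrete instance of the VAE loss, the sketch below assumes a diagonal Gaussian encoder, a standard normal prior, and a unit-variance Gaussian decoder. Under those assumptions the KL term has a closed form and the negative reconstruction log-likelihood reduces, up to an additive constant, to half the squared error. None of these distributional choices are stated in the source.

```python
import math


def gaussian_kl(mu, log_var):
    """KL(N(mu, diag(exp(log_var))) || N(0, I)) in closed form."""
    return 0.5 * sum(m * m + math.exp(lv) - 1.0 - lv for m, lv in zip(mu, log_var))


def vae_loss(x, x_recon, mu, log_var):
    """KL term plus negative log-likelihood (up to a constant) of the reconstruction."""
    recon_nll = 0.5 * sum((a - b) ** 2 for a, b in zip(x, x_recon))
    return gaussian_kl(mu, log_var) + recon_nll
```

The loss is zero only when the encoder output matches the prior and the reconstruction is exact, which shows the tension between the two terms.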

⑧ MAE of propagation paths

$$\mathrm{MAE} = \frac{1}{N T} \sum_{n=1}^{N} \sum_{t=1}^{T} \big| \hat{y}_{n,t} - y_{n,t} \big|$$

where $\mathrm{MAE}$ denotes the mean absolute error of the propagation paths, with lower values indicating closer alignment between predicted and actual diffusion paths. $N$ denotes the number of propagation paths or samples in the evaluation set. $T$ denotes the length of each path. $\hat{y}_{n,t}$ denotes the predicted value at time step $t$ for the $n$-th path, generated from the denoising process in Formulation (7). $y_{n,t}$ denotes the ground truth value at time step $t$ for the $n$-th path, obtained from actual data observations. The inner summation $\sum_{t=1}^{T}$ aggregates absolute errors over each path’s steps, while the outer summation $\sum_{n=1}^{N}$ averages across all paths. Division by $N T$ computes the overall mean.

⑨ Sample coverage rate

$$\mathrm{Coverage} = \frac{1}{N_r} \sum_{i=1}^{N_r} \mathbb{1}\!\left[\min_{j}\, d(x_i, \hat{x}_j) \le \epsilon\right] \times 100\% \tag{10}$$

where $\mathrm{Coverage}$ denotes the sample coverage rate, expressed as a percentage, with higher values indicating better representation of real data by generated samples. $N_r$ denotes the number of real samples, and $\mathbb{1}[\cdot]$ denotes the indicator function. $d(x_i, \hat{x}_j)$ denotes the distance between real sample $x_i$ and generated sample $\hat{x}_j$, computed using KL divergence from Formulation (8), and $\epsilon$ denotes a predefined threshold for coverage. The minimization over $j$ finds the closest generated neighbor for each real sample, and the summation counts covered samples, divided by $N_r$ for the average rate.
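As a rough illustration of the sample coverage rate, the sketch below counts the real samples whose nearest generated sample lies within a threshold. The function name `coverage_rate`, the use of "less than or equal" at the threshold, and the absolute-difference distance in the example are assumptions made for illustration; the paper's distance is based on KL divergence.

```python
def coverage_rate(real, generated, distance, threshold):
    """Percentage of real samples with a generated neighbour within `threshold`.

    `distance` is any callable d(real_sample, generated_sample) -> float.
    """
    if not real or not generated:
        raise ValueError("both sample sets must be non-empty")
    covered = sum(
        1
        for r in real
        # Nearest generated neighbour of this real sample.
        if min(distance(r, g) for g in generated) <= threshold
    )
    return 100.0 * covered / len(real)


# Toy one-dimensional example with absolute difference as a stand-in distance.
rate = coverage_rate(
    real=[0.0, 1.0, 5.0],
    generated=[0.1, 0.9],
    distance=lambda a, b: abs(a - b),
    threshold=0.2,
)
```

In this toy example two of the three real samples have a generated neighbour within the threshold, so the rate is about 66.7%.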

The diffusion model generates public opinion propagation paths through a forward noise injection and reverse denoising process, while LLM features guide the conditional generation. WGAN and VAE modules further enhance the quality of generation.

4.4. Feedback and Four-Dimensional Causal Output

The feedback mechanism generates structured causal outputs through dynamic state updates and graph neural networks (GNNs) [37, 38]. The formulations are as follows:

① Dynamic Feedback

$$s_{t+1} = s_t + \eta\,\Delta s_t$$

where $s_{t+1}$ denotes the state vector at time step $t+1$, $s_t$ denotes the state vector at time step $t$, $\Delta s_t$ denotes the state difference computed based on the Wasserstein distance, and $\eta$ is the learning rate, which ensures adaptive adjustment of the system.

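② Four-Dimensional Causal Network

$$h_v^{(l+1)} = \sigma\!\left(W^{(l)} \cdot \mathrm{AGG}\big(\{h_u^{(l)} : u \in \mathcal{N}(v)\}\big)\right)$$

where $h_v^{(l)}$ denotes the representation of node $v$ at layer $l$, $\sigma$ denotes the activation function, introducing nonlinearity to the model, $W^{(l)}$ denotes the weight matrix, transforming the aggregated information, and $\mathrm{AGG}$ denotes the aggregation function, combining information from the neighboring nodes $\mathcal{N}(v)$. The GNNs capture causal relationships through iterative aggregation.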

The state update formula supports system adaptability, and the GNN formulation generates the four-dimensional causal network, producing causal chains such as “policy release-technology investment-economic cost-public sentiment change”, which assist in analyzing public opinion related to the “dual carbon” goals and optimizing policies.

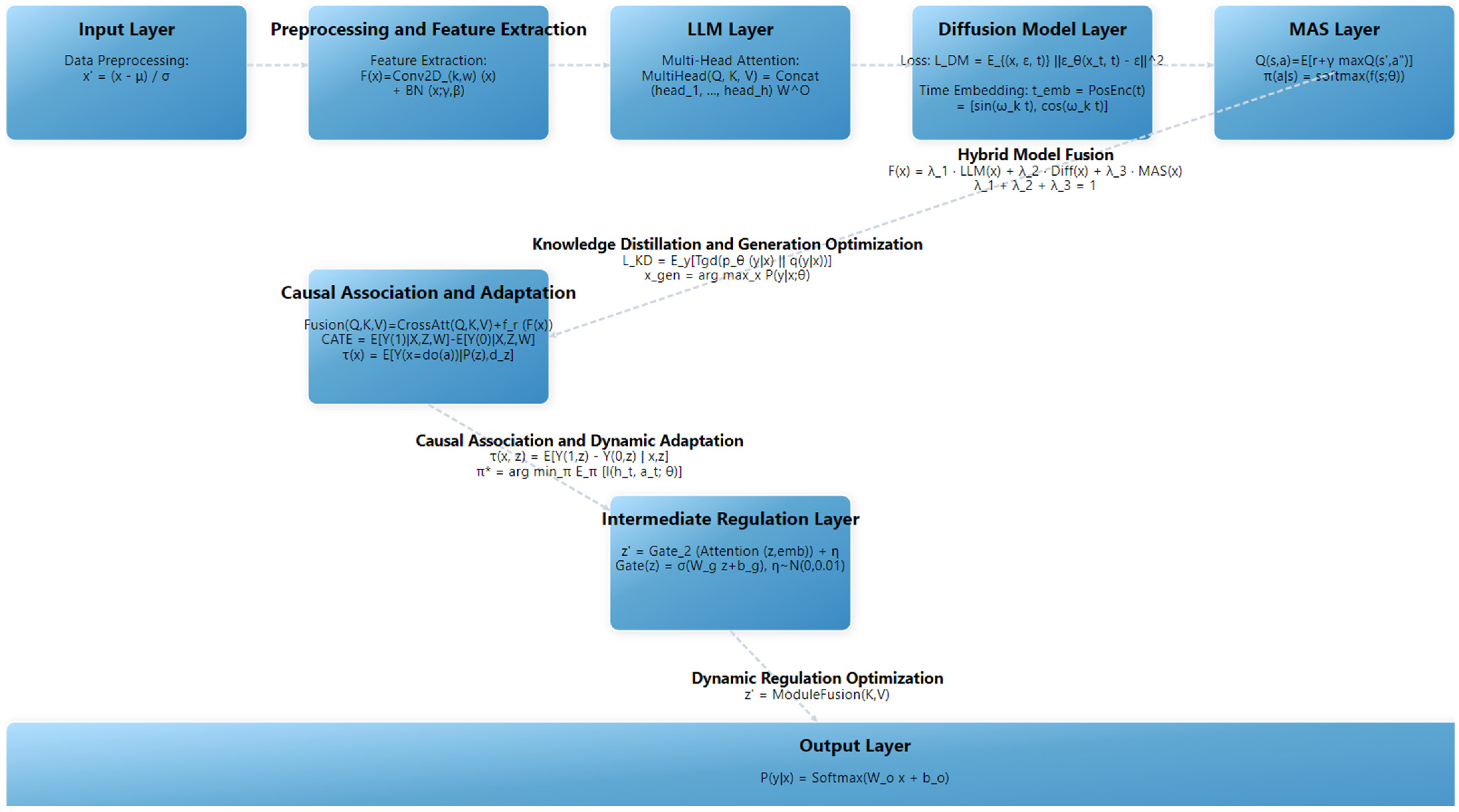

5. Dynamic Public Opinion Intervention Through Multimodal Collaboration

Against the backdrop of global climate change, the “dual carbon” goals have triggered complex public opinion dynamics involving policy documents, social media, and multi-agent interactions. Traditional static intervention approaches struggle to achieve accurate guidance and real-time prediction. This study proposes a collaborative framework that integrates RL-enhanced LLMs, diffusion models, and MASs. Through multimodal feature extraction, causal inference, and dynamic strategy optimization, the framework enables effective intervention in public opinion related to the “dual carbon” initiative. The technical roadmap, as illustrated in Figure 4, presents the complete workflow encompassing data input, feature extraction, propagation modeling, causal reasoning, and policy output.

5.1. Data Input and Preprocessing

The input layer receives multi-source heterogeneous data, including policy documents, public discussions, news reports, and environmental monitoring data. The data formats include text vectors, image pixels, and time series, with the target variable being public support rate or sentiment value. Preprocessing involves the tokenization, denoising, vectorization, and formatting of image and spatiotemporal data to ensure data consistency and quality. The formulation is as follows:

$$x' = \frac{x - \mu}{\sigma}$$

where $x'$ denotes the standardized input data, $x$ is the original input data, $\mu$ is the mean of the input data, and $\sigma$ is the standard deviation. This process reduces the impact of noise and provides reliable input for subsequent modules.
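The standardization step can be sketched in a few lines. This is a minimal illustration that assumes a single numeric feature and the population standard deviation; the paper does not state whether the population or sample estimate is used, and the function name `standardize` is hypothetical.

```python
import statistics


def standardize(values):
    """Z-score a list of numbers: subtract the mean, divide by the std."""
    mu = statistics.fmean(values)
    sigma = statistics.pstdev(values)  # population std (an assumption)
    if sigma == 0:
        raise ValueError("cannot standardize a constant feature")
    return [(v - mu) / sigma for v in values]


z = standardize([2.0, 4.0, 6.0])
```

After the transformation the feature has zero mean and unit standard deviation, which keeps text-derived scores, pixel intensities, and time-series values on comparable scales.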

5.2. Multimodal Feature Extraction and Semantic Understanding

Multimodal feature extraction integrates features from text, images, and time series to support semantic understanding and the construction of causal networks [39, 40]. The formulations are as follows.

① Feature Extraction:

$$F = \mathrm{BN}_{\gamma,\beta}\big(\mathrm{Conv}_{k}(X;\, W)\big)$$

where $F$ denotes the extracted features, $\mathrm{Conv}_{k}(X;\, W)$ represents the convolution operation where $k$ is the kernel size and $W$ is the weight, and BN refers to batch normalization with $\gamma$ and $\beta$ as the scaling and shifting parameters. Convolution captures local features such as propagation patterns, while batch normalization enhances training stability.

② Multi-Head Attention:

$$\mathrm{MultiHead}(Q, K, V) = \mathrm{Concat}(\mathrm{head}_1, \dots, \mathrm{head}_h)\, W^{O}$$

where $Q$, $K$, and $V$ denote the query, key, and value matrices, respectively, $\mathrm{head}_i$ is the $i$-th attention head, $h$ is the number of attention heads, $W^{O}$ is the output projection matrix, and $\mathrm{Concat}$ denotes the concatenation operation, combining outputs from all attention heads. Multi-head attention extracts deep semantic associations between policy texts and public sentiment.

③ Temporal Embedding:

$$e_t = \mathrm{PE}(t), \qquad \mathrm{PE}(t)_{2i} = \sin(\omega_i t), \quad \mathrm{PE}(t)_{2i+1} = \cos(\omega_i t)$$

where $e_t$ denotes the temporal embedding vector at time step $t$, encoding temporal information, and $\mathrm{PE}$ denotes the positional encoding function, combining sine and cosine terms. $\omega_i$ denotes the frequency parameter for the $i$-th dimension, controlling the oscillation rate, and $t$ denotes the time step index, indicating the temporal position. Temporal embedding provides time-awareness to the diffusion model, enabling it to capture the dynamics of public opinion.
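A sine and cosine temporal embedding of this kind can be sketched as follows. The frequency schedule (geometric decay with base 10000, as in the original Transformer positional encoding) and the interleaved sine/cosine layout are assumptions; the paper only states that each dimension has its own frequency parameter.

```python
import math


def temporal_embedding(t, dim, base=10000.0):
    """Sinusoidal embedding of time step `t` with `dim` components."""
    if dim <= 0 or dim % 2 != 0:
        raise ValueError("dim must be a positive even number")
    embedding = []
    for i in range(dim // 2):
        omega = base ** (-2 * i / dim)  # assumed frequency for pair i
        embedding.append(math.sin(omega * t))
        embedding.append(math.cos(omega * t))
    return embedding


e0 = temporal_embedding(0, 4)
e3 = temporal_embedding(3, 8)
```

Low-index pairs oscillate quickly and capture short-term changes, while high-index pairs oscillate slowly and encode position over longer horizons.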

④ Representation quality separation

where

denotes the representation quality separation, ranging from [0, 1], with higher values indicating better inter-class separation and lower intra-class variance in the feature embeddings.

denotes the number of classes or categories in the dataset.

denotes the average inter-class distance between class

and class l.

denotes the average intra-class distance for class

. The summation over inter-class pairs

aggregates distances across all unique class pairs, divided by the number of such pairs

. The summation over intra-class

averages the within-class distances, divided by

.

denotes the maximum possible inter-class distance, used for normalization.

⑤ Feature robustness MSE

$$\mathrm{MSE}_{\mathrm{rob}} = \frac{1}{N} \sum_{i=1}^{N} \big\| f(x_i + \epsilon) - f(x_i) \big\|^2 \tag{12}$$

where $\mathrm{MSE}_{\mathrm{rob}}$ denotes the Feature Robustness Mean Squared Error (MSE), with lower values indicating greater stability of features under noise perturbations. $N$ denotes the number of samples, $f$ denotes the feature extraction function, $\epsilon$ denotes the noise term, and $x_i$ denotes the $i$-th input sample. The norm $\|\cdot\|^2$ denotes the squared L2 distance. The summation aggregates squared errors over samples, divided by $N$ for the mean.

5.3. Dynamic Propagation Modeling

The diffusion model captures the spatiotemporal heterogeneity of public opinion dissemination through a DDPM, predicting the diffusion paths of emerging hotspots [41]. The formulations are as follows.

① Denoising Loss for Diffusion Model

$$L_{\mathrm{diff}} = \mathbb{E}_{x_t,\,\epsilon}\Big[\big\| \epsilon - \epsilon_\theta(x_t, t) \big\|^2\Big] \tag{13}$$

where $L_{\mathrm{diff}}$ denotes the loss function for the diffusion model, quantifying the denoising objective; $\mathbb{E}$ denotes the expectation over the distributions of $x_t$ and $\epsilon$; $\epsilon_\theta(x_t, t)$ denotes the noise predicted by the model with parameters $\theta$, based on the noisy data $x_t$ and time step $t$; $\epsilon$ denotes the true Gaussian noise added during the diffusion process; $x_t$ denotes the noisy data at time step $t$; $t$ denotes the time step index, indicating the stage in the diffusion process; $\|\cdot\|^2$ denotes the squared L2 norm, measuring the squared difference between predicted and true noise; and $\theta$ denotes the model parameters being optimized. The model simulates the regional propagation and emotional dynamics following policy announcements.

② DTW distance

$$\mathrm{DTW}(X, Y) = \min_{P} \sqrt{\sum_{(i,j) \in P} \big\| x_i - y_j \big\|^2} \tag{14}$$

where $\mathrm{DTW}(X, Y)$ denotes the Dynamic Time Warping distance between sequences $X$ and $Y$, with lower values indicating greater similarity in propagation paths, accounting for temporal shifts. $X$ and $Y$ denote the predicted and ground truth sequences, respectively, where points $x_i$ and $y_j$ are generated from time steps in the diffusion process. The norm $\|x_i - y_j\|^2$ denotes the squared Euclidean distance used as the local cost, reusing the L2 norm in Formulation (13). The summation along the warping path $P$ aggregates the local costs, and the minimum over $P$, found via dynamic programming, selects the best alignment. The square root returns the result to a distance scale.
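The dynamic-programming search for the best warping path can be sketched as below. This is a minimal version for scalar sequences with the standard three-way step pattern (match, insertion, deletion) and no window constraint; those choices, and the function name `dtw_distance`, are assumptions rather than details given in the paper.

```python
import math


def dtw_distance(x, y):
    """DTW distance between two scalar sequences (squared-difference cost)."""
    n, m = len(x), len(y)
    if n == 0 or m == 0:
        raise ValueError("sequences must be non-empty")
    # cost[i][j]: minimal accumulated cost aligning x[:i] with y[:j].
    cost = [[math.inf] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            local = (x[i - 1] - y[j - 1]) ** 2
            cost[i][j] = local + min(
                cost[i - 1][j],      # x advances, y repeats
                cost[i][j - 1],      # y advances, x repeats
                cost[i - 1][j - 1],  # both advance
            )
    return math.sqrt(cost[n][m])


same = dtw_distance([1, 2, 3], [1, 2, 3])
stretched = dtw_distance([1, 2, 3], [1, 2, 2, 3])
offset = dtw_distance([0, 0], [1, 1])
```

The second call shows why DTW suits propagation paths: a sequence that lingers one extra step at the same level is still judged identical, whereas a point-by-point error would penalize the shift.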

5.4. Model Optimization and Sample Generation

The model is optimized to enhance prediction accuracy and efficiency through knowledge distillation and optimal sample generation [42]. The formulations are as follows.

① Knowledge Distillation Loss:

$$L_{\mathrm{KD}} = \mathbb{E}_{y}\Big[ D\big( p_\theta(y \mid x) \,\|\, p_T(y \mid x) \big) \Big]$$

where $L_{\mathrm{KD}}$ denotes the knowledge distillation loss function, quantifying the transfer of knowledge; $\mathbb{E}_{y}$ denotes the expectation over the output label $y$; and $D(\cdot\,\|\,\cdot)$ denotes the divergence measure, assessing the difference between two distributions. $p_\theta(y \mid x)$ denotes the predictive probability distribution of the student model parameterized by $\theta$, for label $y$ given input $x$, and $p_T(y \mid x)$ denotes the predictive probability distribution of the teacher model for the same label and input. Here $y$ denotes the output label or target variable, $x$ denotes the input data, and $\theta$ denotes the parameters of the student model. Knowledge distillation transfers the knowledge of a complex teacher model to a lightweight student model, thereby reducing computational cost and making it suitable for public opinion prediction tasks.

② Optimal Sample Generation:

$$y^{*} = \arg\max_{y}\, P(y \mid x;\, \theta)$$

where $y^{*}$ denotes the generated optimal samples, $\arg\max$ denotes the argument of the maximum, selecting the $y$ that maximizes the given function, and $P(y \mid x;\, \theta)$ represents the probability of output $y$ given input $x$ and model parameters $\theta$. This process provides high-quality data to support model optimization and public opinion analysis.

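③ Generation quality improvement

$$\mathrm{GQI} = \frac{\mathrm{KL}_{\mathrm{pre}} - \mathrm{KL}_{\mathrm{post}}}{\mathrm{KL}_{\mathrm{pre}}} \times 100\% \tag{15}$$

where $\mathrm{GQI}$ denotes the generation quality improvement, expressed as a percentage, with positive values indicating reduced divergence and better alignment between generated and real distributions after optimization. $\mathrm{KL}_{\mathrm{pre}}$ denotes the pre-optimization KL divergence, and $\mathrm{KL}_{\mathrm{post}}$ denotes the post-optimization KL divergence. The subtraction, $\mathrm{KL}_{\mathrm{pre}} - \mathrm{KL}_{\mathrm{post}}$, captures the absolute reduction in divergence. Division by $\mathrm{KL}_{\mathrm{pre}}$ normalizes to a relative improvement.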

5.5. Multi-Model Integration

Model integration combines the outputs of LLMs, diffusion models, and MASs to enhance performance and robustness. The formulation is as follows:

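$$y_{\mathrm{int}} = w_1\, y_{\mathrm{LLM}} + w_2\, y_{\mathrm{diff}} + w_3\, y_{\mathrm{MAS}}, \qquad w_1 + w_2 + w_3 = 1$$

where $y_{\mathrm{int}}$ denotes the output of the integrated model; $y_{\mathrm{LLM}}$, $y_{\mathrm{diff}}$, and $y_{\mathrm{MAS}}$ represent the outputs of the large language model, diffusion model, and multi-agent system, respectively. The coefficients $w_1$, $w_2$, and $w_3$ are the weighting factors for each model’s output, subject to the constraint $w_1 + w_2 + w_3 = 1$, and are determined via grid search. The integration combines semantic understanding, dynamic dissemination, and multi-agent interaction to enhance prediction robustness.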
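One way to carry out such a grid search is sketched below. It assumes the three component outputs and a validation target are available as equal-length lists, uses non-negative weights on a regular grid, and selects the combination with the lowest mean squared error; the selection criterion, the grid step, and the function name `grid_search_weights` are assumptions, since the paper does not specify them.

```python
def grid_search_weights(llm, diff, mas, target, step=0.1):
    """Search non-negative weights summing to 1 that minimise validation MSE."""
    n = round(1 / step)
    best_mse, best_weights = None, None
    for i in range(n + 1):
        for j in range(n + 1 - i):
            # The third weight is fixed by the sum-to-one constraint.
            w1, w2, w3 = i / n, j / n, (n - i - j) / n
            mse = sum(
                (w1 * a + w2 * b + w3 * c - t) ** 2
                for a, b, c, t in zip(llm, diff, mas, target)
            ) / len(target)
            if best_mse is None or mse < best_mse:
                best_mse, best_weights = mse, (w1, w2, w3)
    return best_weights, best_mse


# Toy check: the target coincides with the diffusion output,
# so all weight should go to the second component.
weights, mse = grid_search_weights(
    llm=[0.2, 0.4, 0.9],
    diff=[0.5, 0.6, 0.7],
    mas=[0.9, 0.1, 0.3],
    target=[0.5, 0.6, 0.7],
)
```

With a step of 0.1 the search evaluates 66 weight triples, which is cheap enough to rerun whenever the validation data change.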

5.6. Causal Inference and Effect Estimation

Causal inference leverages multimodal feature fusion to quantify the effects of interventions [43]. The formulations are as follows.

① Fusion Operation:

where

denotes the fusion operation, combining cross-attention and remapped features.

denotes the cross-attention mechanism output, computed using query

,

, and

matrices.

denotes the query matrix, used to compute attention scores.

denotes the key matrix, used to compare the queries.

denotes the value matrix, providing the information to be weighted.

denotes the feature remapping function, transforming the extracted features.

denotes the output of the feature extraction layer, processing input

. This fusion provides a comprehensive input for causal inference.

② Causal Bayesian Network:

$$P(X_1, \dots, X_n) = \prod_{i=1}^{n} P\big(X_i \mid \mathrm{Pa}(X_i)\big) \tag{16}$$

where $P(X_1, \dots, X_n)$ denotes the joint probability distribution over the set of variables $\{X_1, \dots, X_n\}$, and $\prod$ denotes the product operator, aggregating conditional probabilities across all variables. $P(X_i \mid \mathrm{Pa}(X_i))$ denotes the conditional probability of variable $X_i$ given its parent nodes $\mathrm{Pa}(X_i)$, where $X_i$ denotes the $i$-th variable in the set and $\mathrm{Pa}(X_i)$ denotes the set of parent nodes of $X_i$ in the Bayesian network. The bar $\mid$ denotes conditioning, indicating that the probability of $X_i$ depends on its parent nodes. This formulation models causal relationships by decomposing the joint distribution into conditional probabilities, enabling dynamic updates of the graph structure and inference of causal chains.

③ CATE:

$$\mathrm{CATE} = \mathbb{E}\big[\, Y(1) - Y(0) \mid X,\, C,\, W \,\big] \tag{17}$$

where $\mathrm{CATE}$ denotes the Conditional Average Treatment Effect, measuring the expected difference in outcomes due to intervention, and $\mathbb{E}$ denotes the expectation operator. $Y(1)$ denotes the outcome under intervention and $Y(0)$ represents the outcome without intervention. $X$ denotes the feature variables, capturing characteristics of the data; $C$ denotes the contextual variables, providing additional context for the analysis; and $W$ denotes the weighting variables, used to adjust for confounding factors. The bar $\mid$ denotes conditioning, indicating that the expectation is conditioned on $X$, $C$, and $W$.

④ Conditional Causal Effect:

$$\tau(x, c) = \mathbb{E}\big[\, Y(1, c) - Y(0, c) \mid X = x,\ C = c \,\big]$$

where $\tau(x, c)$ denotes the conditional causal effect, representing the expected treatment effect given variables $X$ and $C$, and $\mathbb{E}$ denotes the expectation operator. $Y(1, c)$ denotes the potential outcome under intervention with context $c$, and $Y(0, c)$ denotes the potential outcome without intervention with context $c$. $X$ denotes the feature variables, capturing characteristics of the data, and $C$ denotes the contextual variables, providing specific conditions for the analysis. The bar $\mid$ denotes conditioning, indicating that the expectation is conditioned on $X$ and $C$. This formulation refines the CATE by estimating causal effects under specific conditions, thereby improving the precision of causal inference.

⑤ Causal Effect Estimation:

where

denotes the causal effect for feature variable

, quantifying the impact of the intervention.

denotes the mathematical expectation operator, computing the expected value over the distribution.

denotes the outcome under intervention

.

denotes the intervention operator, indicating a specific action

is enforced.

denotes the conditional probability distribution over contextual variable

.

denotes the intervention variable, representing the specific intervention applied to

.

denotes the feature variable, capturing characteristics of the data.

denotes the contextual variable, providing additional context.

denotes conditioning, indicating that the expectation is conditioned on

and

. This formulation evaluates the causal effect of a specific intervention, supporting the design of precise intervention strategies.

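⑥ Causal Chain Precision

$$\mathrm{Precision}_{\mathrm{chain}} = \frac{1}{|\hat{\mathcal{C}}|} \sum_{c \in \hat{\mathcal{C}}} \mathbb{1}\big[\, c \in \mathcal{C}^{*} \,\big] \tag{18}$$

where $\mathrm{Precision}_{\mathrm{chain}}$ denotes the causal chain precision, ranging from 0 to 1, with higher values indicating greater accuracy of the model’s predicted causal chains. $\hat{\mathcal{C}}$ denotes the set of causal chains predicted by the model, derived from the Bayesian network decomposition in Formulation (16), and $\mathcal{C}^{*}$ denotes the ground truth set of causal chains, obtained from the dataset or expert annotations. $\mathbb{1}[\cdot]$ denotes the indicator function, which is 1 if the predicted chain $c$ is in the ground truth set $\mathcal{C}^{*}$, and 0 otherwise. $|\hat{\mathcal{C}}|$ denotes the total number of predicted chains.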

⑦ Counterfactual inference MSE

$$\mathrm{MSE}_{\mathrm{cf}} = \frac{1}{N} \sum_{i=1}^{N} \big( \hat{y}_i^{\mathrm{cf}} - y_i^{\mathrm{cf}} \big)^2 \tag{19}$$

where $\mathrm{MSE}_{\mathrm{cf}}$ denotes the counterfactual inference MSE, measuring the average squared difference between the predicted and actual counterfactual outcomes, with lower values indicating better accuracy in counterfactual prediction. $N$ denotes the number of samples in the dataset. $\hat{y}_i^{\mathrm{cf}}$ denotes the model’s predicted counterfactual outcome for the $i$-th sample, derived from the conditional causal effect in the CATE in Formulation (17), and $y_i^{\mathrm{cf}}$ denotes the ground truth counterfactual outcome for the $i$-th sample, obtained from dataset annotations or simulated baselines. The summation aggregates the squared errors over all samples, and division by $N$ computes the mean.

5.7. Policy Optimization and Reward Design

The multi-agent coordination layer enhances intervention efficiency through reward functions and policy updates. The formulations are as follows.

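① Reward Function:

$$R = \lambda_1\, \Delta S + \lambda_2\, \big( H_{\mathrm{pre}} - H_{\mathrm{post}} \big) \tag{20}$$

where $R$ denotes the reward function, quantifying the objective to optimize. $\Delta S$ denotes the change in support rate, while $H_{\mathrm{pre}}$ and $H_{\mathrm{post}}$ denote the entropy values before and after the intervention, respectively. $\lambda_1$ and $\lambda_2$ are weighting coefficients. The reward function is designed to encourage increased support rates and reduced uncertainty.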
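A reward of this shape, which rises with the support rate and with the drop in entropy, can be sketched as follows. The additive form, the default weights of 1.0, and the names `entropy` and `intervention_reward` are assumptions made for illustration.

```python
import math


def entropy(dist):
    """Shannon entropy (natural log) of a normalised distribution."""
    return -sum(p * math.log(p) for p in dist if p > 0)


def intervention_reward(support_pre, support_post, dist_pre, dist_post,
                        w_support=1.0, w_entropy=1.0):
    """Reward = weighted support gain + weighted entropy reduction."""
    delta_support = support_post - support_pre
    entropy_drop = entropy(dist_pre) - entropy(dist_post)
    return w_support * delta_support + w_entropy * entropy_drop


# Support rises from 50% to 60% and opinion becomes less evenly split.
gain = intervention_reward(0.5, 0.6, [0.5, 0.5], [0.6, 0.4])
# The reverse movement is penalised by the same amount.
loss = intervention_reward(0.6, 0.5, [0.6, 0.4], [0.5, 0.5])
```

The two weights set the trade-off: a larger support weight favors interventions that shift sentiment, while a larger entropy weight favors interventions that settle a divided debate.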

② Policy Update:

$$\pi_{t+1} = \pi_t + \eta\, \nabla_{\pi} J(\pi_t)$$

where $\pi_{t+1}$ denotes the policy at time step $t+1$, representing the updated action selection strategy, $\pi_t$ denotes the policy at time step $t$, serving as the current policy, $\eta$ is the learning rate, and $\nabla_{\pi} J(\pi_t)$ represents the policy gradient, which adapts to the dynamics of public opinion.

③ Optimal Policy:

$$\pi^{*} = \arg\min_{\pi}\ \mathbb{E}_{\pi}\big[\, L(h_t, a_t;\, \theta) \,\big], \qquad a_t \in \mathcal{A}$$

where $\pi^{*}$ denotes the optimal policy, representing the best action selection strategy; $\arg\min$ denotes the argument of the minimum, selecting the policy $\pi$ that minimizes the expected loss; and $\mathbb{E}_{\pi}$ denotes the expectation under policy $\pi$. $L(h_t, a_t;\, \theta)$ denotes the loss function, evaluating the performance of action $a_t$ given historical state $h_t$ and model parameters $\theta$. $h_t$ denotes the historical state at time step $t$, capturing past information; $a_t$ denotes the action taken at time step $t$; $\theta$ denotes the model parameters; $\mathcal{A}$ denotes the action space, the set of possible actions; and $t$ denotes the time step index. This formulation selects the optimal policy by minimizing the loss function, thereby optimizing complex intervention scenarios.

④ Support rate improvement

$$\mathrm{SRI} = \frac{\Delta S}{S_{\mathrm{pre}}} \times 100\% = \frac{S_{\mathrm{post}} - S_{\mathrm{pre}}}{S_{\mathrm{pre}}} \times 100\% \tag{21}$$

where $\mathrm{SRI}$ denotes the support rate improvement, expressed as a percentage, with positive values indicating an increase in positive sentiment or support after intervention. $\Delta S$ denotes the absolute change in the support rate, directly derived from Formulation (20) as the difference in support rates before and after intervention. $S_{\mathrm{post}}$ denotes the post-intervention support rate, calculated as the proportion of positive samples after applying the policy or intervention, and $S_{\mathrm{pre}}$ denotes the pre-intervention support rate, serving as the baseline proportion of positive samples. Division by $S_{\mathrm{pre}}$ normalizes the change to a relative improvement.

⑤ State update stability

$$\sigma_{\Delta}^{2} = \frac{1}{T - 1} \sum_{t=1}^{T} \big( \Delta_t - \bar{\Delta} \big)^2 \tag{22}$$

where $\sigma_{\Delta}^{2}$ denotes the state update stability, represented as variance, with lower values indicating more consistent updates and reduced oscillation in the system. $T$ denotes the number of time steps or update iterations, $\Delta_t$ denotes the update delta at step $t$, and $\bar{\Delta}$ denotes the mean update delta over all steps. The summation aggregates squared deviations from the mean, divided by $T - 1$ for the sample variance.

⑥ Reduction in convergence iterations

$$\mathrm{RCI} = \frac{I_{\mathrm{base}} - I_{\mathrm{prop}}}{I_{\mathrm{base}}} \times 100\% \tag{23}$$

where $\mathrm{RCI}$ denotes the reduction in convergence iterations, expressed as a percentage, with positive values indicating fewer iterations needed for convergence in the proposed model compared to baselines. $I_{\mathrm{base}}$ denotes the number of iterations for convergence in the baseline model, and $I_{\mathrm{prop}}$ denotes the number of iterations for convergence in the proposed model. The subtraction $I_{\mathrm{base}} - I_{\mathrm{prop}}$ captures the absolute reduction in iterations. Division by $I_{\mathrm{base}}$ normalizes to a relative improvement.

5.8. Intermediate Feature Regulation

The intermediate regulation layer optimizes feature robustness. The formulations are as follows [44, 45].

① Feature Regulation Mechanism

where

denotes the regulated intermediate feature, representing the optimized feature output.

denotes the gating function, controlling the flow of information.

denotes the output of the attention mechanism, computed using the intermediate feature

and embedding vector

.

denotes the intermediate feature, serving as input to the attention mechanism.

denotes the embedding vector, providing contextual or learned representations.

denotes the noise term, introducing stochasticity for robustness. The formulation is as follows.

② Gating Function with Noise

where

denotes the output of the gating function, processing the input feature

.

denotes the activation function, introducing nonlinearity.

denotes the weight matrix, transforming the input feature.

denotes the input feature, serving as the input to the gating function.

denotes the bias vector, adjusting the transformed feature.

represents Gaussian noise with a mean of 0 and variance of 0.01.

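③ Regulation MSE

$$\mathrm{MSE}_{\mathrm{reg}} = \frac{1}{N} \sum_{i=1}^{N} \big\| \tilde{F}_i - F_i^{\mathrm{target}} \big\|^2 \tag{25}$$

where $\mathrm{MSE}_{\mathrm{reg}}$ denotes the regulation MSE, with lower values indicating minimal error in the regulated features, reflecting effective regulation. $N$ denotes the number of samples or features, $\tilde{F}_i$ denotes the regulated feature for the $i$-th sample, computed using Formulation (24), and $F_i^{\mathrm{target}}$ denotes the target feature. The norm $\|\cdot\|^2$ denotes the squared L2 distance. The summation aggregates squared errors over samples, divided by $N$ for the mean.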

5.9. Multi-Agent Collaborative Decision-Making

The multi-agent coordination layer simulates the behaviors of entities such as government, media, and the public. The formulations are as follows.

① State-Action Value Function:

$$Q(s, a) = \mathbb{E}\big[\, r(s, a) + \gamma \max_{a'} Q(s', a') \,\big]$$

where $Q(s, a)$ denotes the state-action value function, estimating the expected return for taking action $a$ in state $s$, and $\mathbb{E}$ denotes the expectation operator. $r(s, a)$ denotes the immediate reward received after taking action $a$ in state $s$, $\gamma$ denotes the discount factor, weighting the importance of future rewards, and $\max_{a'} Q(s', a')$ denotes the maximum state-action value for the next state $s'$ over all possible actions $a'$. Here $s$ and $a$ denote the current state and action, and $s'$ and $a'$ denote the next state and action.
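A sampled, tabular version of this Bellman backup is sketched below. The paper states the value function in expectation form; the incremental update with a step size `alpha`, the dictionary-based table, and the state and action labels are assumptions introduced only to make the recursion concrete.

```python
def q_update(q, state, action, reward, next_state, actions,
             alpha=0.5, gamma=0.9):
    """One tabular Q-learning step towards r + gamma * max_a' Q(s', a')."""
    best_next = max(q.get((next_state, a), 0.0) for a in actions)
    target = reward + gamma * best_next
    old = q.get((state, action), 0.0)
    q[(state, action)] = old + alpha * (target - old)
    return q[(state, action)]


# Hypothetical agent choosing between two communication actions.
actions = ["publish", "wait"]
q_table = {}
first = q_update(q_table, "s0", "publish", 1.0, "s1", actions)
q_table[("s1", "wait")] = 2.0  # pretend a later state is already valued
second = q_update(q_table, "s0", "publish", 1.0, "s1", actions)
```

The second update is larger than the first because the next state now carries value, which is how future rewards propagate back to earlier decisions through the discount factor.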

② Policy Function:

$$\pi_\theta(a \mid s) = \mathrm{softmax}\big( f_\theta(s) \big) \tag{26}$$

where $\pi_\theta(a \mid s)$ denotes the policy function, representing the action probability distribution given state $s$; softmax is the normalization function; and $f_\theta(s)$ denotes the output of the policy network, parameterized by $\theta$, for state $s$. This formulation enables agents to flexibly select actions in dynamic public opinion environments.

③ Module Integration:

where

denotes the optimized feature after integration; ModuleFusion represents the module fusion function; and

are the key and value matrices, respectively. This formulation coordinates multi-agent collaboration and reduces public opinion conflicts.

④ Collaboration efficiency

$$\mathrm{CE} = \frac{1}{T} \sum_{t=1}^{T} \left[ \frac{2}{M(M-1)} \sum_{i<j} \cos\big( \pi_i^{t}, \pi_j^{t} \big) \right] \times 100\% \tag{27}$$

where $\mathrm{CE}$ denotes the collaboration efficiency, expressed as a percentage, with higher values indicating greater action consistency among agents. $T$ denotes the number of time steps or interactions, and $M$ denotes the number of agents. $\pi_i^{t}$ denotes the policy distribution for agent $i$ at time $t$, computed using Formulation (26), and $\cos(\pi_i^{t}, \pi_j^{t})$ denotes the cosine similarity between the policies of agents $i$ and $j$, measuring alignment. The inner summation over pairs $i < j$ aggregates pairwise alignments, divided by the number of unique pairs $M(M-1)/2$. The outer summation over $t$ averages across interactions, divided by $T$ for overall efficiency.
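The pairwise-alignment computation can be sketched as follows, assuming each agent's policy at each step is a probability vector over the same action set. The data layout `policies[t][i]` and the function names are hypothetical.

```python
import math
from itertools import combinations


def cosine(u, v):
    """Cosine similarity between two non-zero vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def collaboration_efficiency(policies):
    """Mean pairwise policy alignment over time, as a percentage.

    policies[t][i] is agent i's action distribution at step t.
    """
    per_step = []
    for step in policies:
        pairs = list(combinations(step, 2))  # all unique agent pairs
        per_step.append(sum(cosine(u, v) for u, v in pairs) / len(pairs))
    return 100.0 * sum(per_step) / len(per_step)


aligned = collaboration_efficiency([[[0.5, 0.5], [0.5, 0.5]]])
opposed = collaboration_efficiency([[[1.0, 0.0], [0.0, 1.0]]])
mixed = collaboration_efficiency(
    [[[0.5, 0.5], [0.5, 0.5]], [[1.0, 0.0], [0.0, 1.0]]]
)
```

Identical policies score 100%, orthogonal policies score 0%, and a run that alternates between the two averages to 50%.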

⑤ Action accuracy

$$\mathrm{Acc} = \frac{1}{N} \sum_{i=1}^{N} \mathbb{1}\big[\, \hat{a}_i = a_i \,\big] \times 100\% \tag{28}$$

where $\mathrm{Acc}$ denotes the action accuracy, expressed as a percentage, with higher values indicating better match between predicted and actual actions. $N$ denotes the number of samples or actions in the evaluation set. $\mathbb{1}[\cdot]$ denotes the indicator function: 1 if the predicted action $\hat{a}_i$ equals the ground truth action $a_i$, 0 otherwise. $\hat{a}_i$ denotes the predicted action for the $i$-th sample, and $a_i$ denotes the ground truth action, obtained from dataset observations or simulations. The summation aggregates correct predictions over all samples, divided by $N$ for the average rate.

5.10. Output and Visualization

The output layer generates public opinion trends, intervention strategies, and risk warnings. The formulation is as follows:

$$P(y \mid x) = \mathrm{softmax}(W x + b)$$

where $P(y \mid x)$ denotes the conditional probability distribution of the predicted label $y$ given input feature $x$, and softmax denotes the normalization function, converting raw scores into a probability distribution. $W$ denotes the weight matrix of the output layer, transforming the input feature; $x$ denotes the input feature, representing the data provided to the model; and $b$ denotes the bias vector of the output layer, adjusting the transformed feature. The model outputs predictions of “dual carbon” public opinion trends, intervention strategies, and risk warnings, providing intuitive decision support for policymakers.

6. Experiments and Analysis

To validate the effectiveness of the proposed collaborative framework that integrates large language models, diffusion models, and MASs for analyzing public opinion on the “dual carbon” policy, this study conducts a comprehensive set of experiments using an open-source Twitter dataset related to climate change. The experimental design incorporates multiple analytical dimensions, including causal inference, dynamic intervention, and multi-agent collaboration, and compares the results with several baseline models.

6.1. Dataset and Experimental Setup

The experiments utilize the Twitter Climate Change Sentiment Dataset, which contains 43,943 tweets [46]. The dataset covers a wide range of topics such as climate change, carbon emissions, carbon taxes, and renewable energy. Each entry includes the tweet text, sentiment label, and an anonymized user ID. To improve model robustness, data augmentation techniques are applied to expand the dataset, generating diverse semantic variants to better capture the complexity and dynamics of “dual carbon” public opinion.

The experimental setup consists of three key components. First, a causal network encompassing policy, technology, economy, and public sentiment is constructed to quantify the effects of policy interventions. Second, the diffusion of public opinion following policy announcements is simulated to optimize support levels and emotional stability. Third, strategic interactions among stakeholders such as governments, enterprises, and the public are modeled to evaluate the efficiency of collaborative decision-making. Baseline models used for comparison include Latent Dirichlet Allocation for topic modeling, BERT as a representative pre-trained language model, and the SIR model for simulating information diffusion dynamics.

6.2. Evaluation Metrics and Analysis of Results

This subsection presents a detailed analysis of the experimental results across key dimensions: causal inference, dynamic intervention, and multi-agent collaboration. Comparisons with baseline models (LDA, BERT, and SIR) highlight the proposed framework’s superior performance, particularly in terms of accuracy, responsiveness, and coordination. Additionally, we discuss the evolving nature of evaluation metrics for deep generative models and agent-based simulations, emphasizing the need for ongoing adaptations to better align with real-world policy dynamics.

6.2.1. Causal Inference

The primary evaluation metrics include the F1 score and the Area Under the Curve (AUC), which assess classification performance and the model’s discrimination ability, respectively.

$$F_1 = \frac{2 \cdot \mathrm{Precision} \cdot \mathrm{Recall}}{\mathrm{Precision} + \mathrm{Recall}}$$

where $F_1$ denotes the F1 score, the harmonic mean of precision and recall, measuring the model’s classification performance. Precision denotes the ratio of true positives to the total predicted positives, quantifying prediction accuracy, Precision = TP/(TP + FP). Recall denotes the ratio of true positives to the total actual positives, measuring the model’s ability to identify positive instances, Recall = TP/(TP + FN). TP denotes true positives, the number of correctly predicted positive instances. FP denotes false positives, the number of incorrectly predicted positive instances. FN denotes false negatives, the number of positive instances incorrectly predicted as negative.

$$\mathrm{AUC} = \int_{0}^{1} \mathrm{TPR}\ d(\mathrm{FPR})$$

where AUC denotes the Area Under the Curve, specifically the area under the Receiver Operating Characteristic (ROC) curve, measuring the model’s discriminative ability. TPR denotes the True Positive Rate, the ratio of true positives to total actual positives, TPR = TP/(TP + FN). FPR denotes the False Positive Rate, the ratio of false positives to total actual negatives, FPR = FP/(FP + TN), where TN denotes true negatives, the number of correctly predicted negative instances. The definite integral from 0 to 1 computes the area under the TPR curve with respect to FPR, and $d(\mathrm{FPR})$ is the differential of the False Positive Rate, used as the integration variable.
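Both metrics are straightforward to compute from confusion-matrix counts and ROC points. The sketch below is illustrative only: the zero-division convention for F1 and the trapezoidal approximation of the integral are assumptions, and the function names are hypothetical.

```python
def f1_score(tp, fp, fn):
    """Harmonic mean of precision and recall from raw counts."""
    if tp == 0:
        return 0.0  # convention: no true positives means F1 = 0
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)


def auc_trapezoid(fpr, tpr):
    """Area under the ROC curve via the trapezoidal rule.

    `fpr` and `tpr` are matching lists sorted by increasing FPR.
    """
    return sum(
        (fpr[i] - fpr[i - 1]) * (tpr[i] + tpr[i - 1]) / 2
        for i in range(1, len(fpr))
    )


balanced = f1_score(tp=8, fp=2, fn=2)
chance = auc_trapezoid([0.0, 1.0], [0.0, 1.0])
perfect = auc_trapezoid([0.0, 0.0, 1.0], [0.0, 1.0, 1.0])
```

A classifier that ranks at random traces the diagonal and scores 0.5, while a perfect ranking reaches the top-left corner and scores 1.0.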

Other metrics include causal chain precision, which measures the proportion of correctly predicted causal relationships, see Equation (18) for details; counterfactual inference MSE, representing the MSE between counterfactual predictions and actual outcomes, see Equation (19) for details; and representation quality separation, indicating the inter-class separation degree of feature embeddings based on cosine similarity, see Equation (11) for details.

The experimental results, along with Table 1, demonstrate that the proposed model significantly outperforms LDA, BERT, and SIR in terms of F1 score, AUC, and causal chain precision. The model also achieves the lowest counterfactual inference MSE, indicating higher accuracy in causal prediction. The representation quality separation is relatively high, reflecting the advantage in feature expression diversity.

In comparison to baselines, the proposed framework’s integration of reinforcement learning-enhanced LLMs allows for more nuanced causal modeling, capturing subtle interdependencies between policy announcements and public sentiment shifts that LDA and BERT often overlook due to their lack of dynamic adaptation. The SIR model, while effective for basic diffusion, fails to incorporate multi-faceted causal chains, leading to lower precision. These improvements translate to a 12.4–35.8% gain in F1 score and a 13.3–32.4% gain in AUC over the baselines, underscoring the framework’s enhanced accuracy in inferring causal links from fragmented social media data.

6.2.2. Dynamic Intervention

$H_t$ represents the entropy value at time step $t$, which measures the uncertainty of the distribution. A decrease in entropy indicates a reduction in public opinion disorder and an increase in support rate. The formulations are as follows.

① Temporal entropy

$$H_t = -\sum_{s} p_t(s)\,\log p_t(s) \tag{31}$$

where $H_t$ denotes the temporal entropy at time step $t$, quantifying the uncertainty of the state distribution. The summation aggregates terms over all possible states $s$, $p_t(s)$ denotes the probability of state $s$ at time step $t$, and $\log$ denotes the logarithm function, typically the natural logarithm.

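② Decrease in entropy

$$\Delta H = \frac{H_{\mathrm{pre}} - H_{\mathrm{post}}}{H_{\mathrm{pre}}} \times 100\%$$

where $\Delta H$ denotes the decrease in entropy, expressed as a percentage, with positive values indicating reduced uncertainty and improved stability in public opinion dynamics. $H_{\mathrm{pre}}$ denotes the entropy before intervention, computed using Formulation (31) as $H_{\mathrm{pre}} = -\sum_{s} p_{\mathrm{pre}}(s) \log p_{\mathrm{pre}}(s)$, where $p_{\mathrm{pre}}$ is the probability distribution of states pre-intervention. $H_{\mathrm{post}}$ denotes the entropy after intervention, similarly computed using Formulation (31) as $H_{\mathrm{post}} = -\sum_{s} p_{\mathrm{post}}(s) \log p_{\mathrm{post}}(s)$, where $p_{\mathrm{post}}$ is the post-intervention probability distribution. The subtraction $H_{\mathrm{pre}} - H_{\mathrm{post}}$ captures the absolute reduction. Division by $H_{\mathrm{pre}}$ normalizes to a relative decrease.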

Other evaluation metrics include the following: support rate improvement, defined as the increase in the proportion of positive sentiment after intervention, see Equation (21) for details; mean absolute error (MAE) of propagation paths, which measures the average absolute deviation between predicted and actual diffusion paths, see Equation (9) for details; Dynamic Time Warping (DTW) distance, which quantifies the similarity between propagation sequences, see Equation (14) for details; sample coverage rate, indicating the proportion of real data covered by the generated samples, see Equation (10) for details; generation quality improvement, measured by the consistency between the generated and real data distributions using KL divergence, see Equation (15) for details; and state update stability, represented by the variance in policy updates, see Equation (22) for details.

As shown in Table 2, the proposed model achieves a 15.2% increase in support rate and a 12.6% reduction in entropy. It also records the lowest propagation path MAE and DTW distance, with a sample coverage rate of 96% and a 10% improvement in generation quality. The state update variance is 0.05, outperforming baseline models. These results demonstrate the model’s robustness in dynamic diffusion prediction and intervention optimization, attributed to the spatiotemporal modeling capacity of the diffusion module and the efficiency of knowledge distillation.

The proposed framework employs diffusion models enhanced by LLMs to simulate real-time opinion spreads, allowing for proactive adjustments, with support rate improvements and entropy reductions that are 50–157.1% higher than baselines. Compared to BERT and LDA, the framework lowers MAE by 51.7–60% and DTW by 62.5–67.9%, and boosts sample coverage by 7.9–18.5%, indicating more stable and responsive emotional outcomes. This highlights significant improvements in intervention responsiveness, as the multi-agent system enables rapid coordination between simulated stakeholders, optimizing support for “dual carbon” policies in dynamic scenarios.

The evaluation metrics for deep generative models, such as diffusion processes, are still evolving, particularly in capturing long-term stability. For instance, DTW distance assesses dynamic matching by quantifying the similarity between predicted and actual opinion propagation sequences, making it suitable for temporal evolution analysis in policy contexts; sample coverage rate measures the representativeness of generated samples against real data, supporting the simulation of diverse opinions. Both require further adaptation, such as incorporating temporal decay factors to reflect real-world opinion fatigue or integrating group fairness checks to address bias amplification risks. Agent-based simulations need continuous metric refinement, incorporating real-time feedback loops to ensure both stability and fairness in influencing “dual carbon” policy responses while reducing uncertainties in public discourse.

6.2.3. Multi-Agent Collaboration

The evaluation metrics include collaboration efficiency, which reflects the consistency of actions among multiple agents and is measured by the action alignment ratio (Equation (27)); action accuracy, representing the match between the predicted and actual actions (Equation (28)); reduction in convergence iterations, indicating the percentage decrease in the number of iterations required for convergence (Equation (23)); feature robustness MSE, measuring the MSE of features under noise (Equation (12)); and regulation MSE, representing the error after feature modulation (Equation (25)).
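As a rough illustration of the first three metrics, the sketch below makes explicit assumptions that are not confirmed by the paper: the action alignment ratio is taken to be the fraction of time steps at which all agents choose the same action, action accuracy is the fraction of exact matches, and the convergence reduction is measured relative to a baseline iteration count. Equations (27), (28), and (23) may define these quantities differently.

```python
def action_alignment_ratio(joint_actions):
    """Fraction of time steps at which every agent chose the same action.

    `joint_actions` is a list of per-step tuples, one action per agent
    (an assumed representation, for illustration only).
    """
    if not joint_actions:
        raise ValueError("need at least one time step")
    aligned = sum(1 for step in joint_actions if len(set(step)) == 1)
    return aligned / len(joint_actions)


def action_accuracy(predicted, actual):
    """Fraction of predicted actions that match the actual actions."""
    if len(predicted) != len(actual) or not predicted:
        raise ValueError("sequences must be non-empty and of equal length")
    return sum(p == a for p, a in zip(predicted, actual)) / len(predicted)


def convergence_reduction_pct(baseline_iters, model_iters):
    """Percentage decrease in iterations needed to converge vs. a baseline."""
    if baseline_iters <= 0:
        raise ValueError("baseline iteration count must be positive")
    return 100.0 * (baseline_iters - model_iters) / baseline_iters


# Hypothetical joint actions for (government, enterprise, public) agents.
steps = [("support", "support", "support"),
         ("support", "oppose", "support"),
         ("neutral", "neutral", "neutral"),
         ("oppose", "oppose", "oppose")]
ratio = action_alignment_ratio(steps)
```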

The results in Table 3 show that the proposed model outperforms the baselines, with a collaboration efficiency of 8.7%, an action accuracy of 0.92, and a 25% reduction in convergence iterations. It also achieves the lowest feature robustness MSE and regulation MSE. These performance advantages stem from the MAS-based state-action value function and optimized module fusion.

The MAS component, integrated with LLMs and diffusion models, models interactions among governments, enterprises, and the public, leading to more efficient decision-making. The proposed model outperforms baselines with collaboration efficiency that is 77.6–234.6% higher and action accuracy that is 16.5–48.4% higher than SIR’s and BERT’s. LDA, being non-interactive, scores lowest. The framework’s reinforcement learning enables faster convergence, demonstrating marked improvements in coordination by adapting to diverse agent behaviors and reducing MSE metrics by 55.6–75%.

Agent-based simulations for policy influence require constant metric adaptation, as real-world responses involve unpredictable human elements. Metrics like collaboration efficiency must incorporate diversity indices to handle evolving scenarios, ensuring the framework remains relevant for shaping “dual carbon” discourse while addressing potential biases in multi-stakeholder alignments.

6.2.4. Ablation Study

To evaluate the contribution of each module, ablation experiments were conducted. The results of the ablation study are presented in Table 4.

The ablation study validates the importance of the action probability, the learning rate, the entropy regularization term, the clipped surrogate objective, the state value function, and the reward signal. The formulas for each component can be found in Equation (1) through Equation (6). Removing any of these components results in notable performance degradation, especially in collaboration efficiency, which can decline by up to 26.4%, and in the improvement of the support rate, which can decrease by as much as 15.8%. More specifically, removing the action probability increases the randomness in action selection, leading to a 25.3% reduction in collaboration efficiency and a 7.6% decrease in action accuracy, since this term defines the probability distribution required for generating the policy. Replacing the adaptive learning rate with a fixed one cuts the reduction in convergence iterations by 40% and lowers the improvement in the support rate by 11.2%, because a fixed learning rate fails to properly balance exploration and refinement. The removal of the entropy regularization term weakens the exploratory capacity of the policy, resulting in a 19.5% drop in collaboration efficiency and a 7.9% decrease in support rate improvement. Excluding the clipped surrogate objective leads to overly aggressive updates during policy optimization, decreasing the convergence gain by 36% and lowering action accuracy by 6.5%. The absence of the state value function causes the policy to become short-sighted, which reduces the improvement in the support rate by 13.2% and leads to a 26.4% decline in collaboration efficiency. Finally, removing the reward signal, which ensures consistency in distributional learning, causes the F1 score to drop by 4.4% and support rate improvement to decrease by 15.8%, ultimately undermining the effectiveness of policy interventions. These findings demonstrate that each element plays an essential role, and their synergy is vital for effective causal reasoning and dynamic intervention in the context of “dual-carbon” public opinion management.
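To make the roles of the ablated terms concrete, the following sketch combines a clipped surrogate objective, a state value loss, and an entropy bonus in the standard PPO form. It is a minimal illustration, not the paper's Equations (1) through (6): the sample layout, the coefficient values (`clip_eps`, `value_coef`, `entropy_coef`), and the function names are all assumptions.

```python
import math


def clipped_surrogate(ratio, advantage, clip_eps=0.2):
    """PPO clipped surrogate for one sample: min(r * A, clip(r) * A)."""
    clipped_ratio = max(1.0 - clip_eps, min(ratio, 1.0 + clip_eps))
    return min(ratio * advantage, clipped_ratio * advantage)


def policy_entropy(action_probs):
    """Entropy of the action distribution; higher means more exploration."""
    return -sum(p * math.log(p) for p in action_probs if p > 0)


def ppo_loss(samples, clip_eps=0.2, value_coef=0.5, entropy_coef=0.01):
    """Average PPO loss over samples (to be minimized).

    Each sample is a dict with keys: new_prob, old_prob (probability of the
    taken action under the new and old policy), advantage, value (predicted
    state value), ret (observed return), action_probs (full distribution).
    """
    total = 0.0
    for s in samples:
        ratio = s["new_prob"] / s["old_prob"]
        surrogate = clipped_surrogate(ratio, s["advantage"], clip_eps)
        value_loss = (s["value"] - s["ret"]) ** 2
        entropy = policy_entropy(s["action_probs"])
        total += -surrogate + value_coef * value_loss - entropy_coef * entropy
    return total / len(samples)
```

In this form, dropping the clipping lets a large probability ratio produce an arbitrarily large update, and setting `entropy_coef` to zero removes the incentive to keep the action distribution spread out, which matches the qualitative effects reported in the ablation.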

6.2.5. Evaluation of LLM Components in Reinforcement Learning Settings

In the collaborative framework integrating LLMs, diffusion models, and MASs, LLMs generate diverse “dual-carbon” policy recommendations and sentiment explanations through policy optimization in RL settings, significantly enhancing the efficacy of causal inference, dynamic intervention, and multi-agent collaboration. Based on previous experimental results, this subsection systematically evaluates LLM performance from the perspectives of generation capability using the pass@k metric, operationalization of RLHF, and modular collaborative contributions via Pearson correlation analysis. It reveals the LLM’s ability to mitigate public opinion uncertainty through diverse outputs and provide actionable insights for policy interventions.

① Core Role of the pass@k Evaluation Metric

Generative programming has advanced computationally supported coding and language models, establishing pass@k metrics for evaluating output diversity and correctness. Allamanis et al. [47] outlined challenges in code generation, emphasizing robust quality assessment. Han et al. [48] explored pre-trained models’ applications, including code understanding. Xu et al. [49] introduced benchmarks for large language models in code generation, promoting pass@k adoption. Chen et al. [50] pioneered pass@k to assess functional correctness in code synthesis. Wong et al. [51] analyzed natural language and big code understanding, reinforcing the role of pass@k. Zan et al. [52] surveyed LLMs in NL2Code tasks, solidifying pass@k as a standard. Wong et al. [53] enhanced reliability via RLHF, applying pass@k to complex tasks. For “dual-carbon” tasks, where single outputs limit LLM capabilities, pass@k, defined in Formulation (30), ensures diverse, accurate policy recommendations.

$$\text{pass@}k = \mathbb{E}\left[1 - \frac{\binom{n-c}{k}}{\binom{n}{k}}\right] \quad (30)$$

where pass@k denotes the probability that at least one sample is correct among $k$ generated outputs. $\mathbb{E}$ denotes the mathematical expectation operator, averaging the metric. $n$ represents the total number of generated samples. $c$ represents the number of correct samples determined by predefined test criteria. $\binom{n-c}{k}$ and $\binom{n}{k}$ represent binomial coefficients used to calculate the probability estimate’s combinations.

The experiment sampled 1000 prompts from the Twitter Climate Change Sentiment Dataset (43,943 tweets), generating 100 samples per prompt. To balance diversity and correctness, the LLM’s pass@k was evaluated with k = 10, adapting to the task’s multi-solution demands. These pass@10 values provide the data foundation for subsequent correlation analysis.
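The pass@k estimate described above, with n generated samples per prompt of which c are correct, can be computed with exact binomial coefficients as in the sketch below. The function names and the example counts are hypothetical; only n = 100 and k = 10 come from the experimental setup.

```python
from math import comb


def pass_at_k(n, c, k):
    """Per-prompt pass@k estimate: 1 - C(n - c, k) / C(n, k).

    n: samples generated for the prompt, c: samples judged correct,
    k: number of samples drawn without replacement.
    """
    if not 0 <= c <= n or not 1 <= k <= n:
        raise ValueError("require 0 <= c <= n and 1 <= k <= n")
    if n - c < k:
        # Fewer than k incorrect samples: any draw of k contains a correct one.
        return 1.0
    return 1.0 - comb(n - c, k) / comb(n, k)


def mean_pass_at_k(correct_counts, n, k):
    """Average the per-prompt estimates (the expectation over prompts)."""
    return sum(pass_at_k(n, c, k) for c in correct_counts) / len(correct_counts)


# Hypothetical correct-sample counts for three prompts, n = 100, k = 10.
score = mean_pass_at_k([0, 5, 100], n=100, k=10)
```

Using `math.comb` keeps the computation exact for n = 100; for much larger n, a product form of the same ratio avoids very large intermediate integers.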

② RLHF

RLHF optimizes LLMs through human preferences, ensuring the practicality and ethicality of generated outputs and indirectly improving pass@10 accuracy. In “dual-carbon” tasks, the focus is on outputs aligned with “dual-carbon” goals, such as enhancing public support while avoiding the amplification of misinformation. Human feedback is collected as pairwise comparisons, which capture nuanced judgments on output quality, relevance, and ethical considerations, such as avoiding exaggerated climate claims or cultural insensitivity in sentiment explanations.

The reward function is designed based on the Bradley–Terry model [54], which estimates relative preferences from pairwise data:

$$P(A \succ B \mid x) = \frac{\exp\left(r_\theta(x, A)\right)}{\exp\left(r_\theta(x, A)\right) + \exp\left(r_\theta(x, B)\right)}$$

where $r_\theta$ is the reward function, $\theta$ are the model parameters, and $P(A \succ B \mid x)$ is the conditional probability that output A is preferred over B given the prompt $x$. The alignment mechanism employs PPO, which prevents drastic changes through clipped policy updates, ensuring training stability and avoiding divergence. Clipping limits how far the new policy can move from the old one in a single update, which acts as an approximate constraint on the KL divergence between the two policies and maintains model robustness. Bias mitigation checks are incorporated as well. For example, during training, outputs are screened to avoid potential biases in public opinion frameworks, ensuring fairness to different stakeholder groups in “dual-carbon” discussions. RLHF significantly reduces hallucination risks, as reflected in a strong negative correlation with counterfactual MSE, which supports its role in enhancing model robustness and ensuring ethical alignment by optimizing the accuracy of generated outputs.
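The Bradley–Terry preference model above reduces to a logistic function of the reward difference, and a reward model is typically fitted by minimizing the negative log-likelihood of the human-preferred output. The sketch below shows that standard form; the function names and the scalar rewards are hypothetical stand-ins for the outputs of a learned reward model.

```python
import math


def preference_probability(reward_a, reward_b):
    """Bradley-Terry probability that A is preferred over B.

    exp(r_A) / (exp(r_A) + exp(r_B)) equals sigmoid(r_A - r_B); the two
    branches avoid overflow for large reward differences.
    """
    diff = reward_a - reward_b
    if diff >= 0:
        return 1.0 / (1.0 + math.exp(-diff))
    e = math.exp(diff)
    return e / (1.0 + e)


def pairwise_loss(reward_chosen, reward_rejected):
    """Negative log-likelihood of the preferred output: log(1 + exp(-diff))."""
    diff = reward_chosen - reward_rejected
    if diff >= 0:
        return math.log1p(math.exp(-diff))
    return -diff + math.log1p(math.exp(diff))
```

The loss shrinks as the reward model scores the human-preferred output higher than the rejected one, and it grows roughly linearly when the ranking is badly wrong.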

③ Performance Analysis and Collaborative Contributions

To quantify the LLM’s contributions in RL settings, Pearson correlation coefficient ($r$) analysis is used to examine the linear associations between pass@10 and framework metrics, with the following formula:

$$r = \frac{\sum_{i=1}^{n} (x_i - \bar{x})(y_i - \bar{y})}{\sqrt{\sum_{i=1}^{n} (x_i - \bar{x})^2}\,\sqrt{\sum_{i=1}^{n} (y_i - \bar{y})^2}}$$

where $x_i$ is the pass@10 value, $y_i$ is the framework metric, and $\bar{x}$, $\bar{y}$ are the means. The statistical significance of the correlation coefficient is calculated via $t$-test, with the following $t$-statistic formula:

$$t = r\sqrt{\frac{n-2}{1-r^2}}$$

where n = 10 and the degrees of freedom are n − 2 = 8. The p-value is calculated via a two-tailed t-distribution test:

$$p = 2\,P\left(T_{n-2} > |t|\right)$$

where $P(T_{n-2} > |t|)$ is the right-tail probability of the t-distribution’s cumulative distribution function at $|t|$, reflecting the probability of observing the current or more extreme t-value under the null hypothesis.
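The correlation test described above can be reproduced with the standard library alone, as in the sketch below. It assumes the usual sample Pearson coefficient and the statistic t = r·sqrt((n − 2)/(1 − r²)); the right-tail probability is obtained by integrating the Student t density numerically with Simpson's rule, which is an implementation choice made here, not the paper's procedure. All function names are illustrative.

```python
import math


def pearson_r(xs, ys):
    """Sample Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    if n != len(ys) or n < 3:
        raise ValueError("need two sequences of equal length >= 3")
    mx, my = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    if sxx == 0 or syy == 0:
        raise ValueError("correlation undefined for a constant sequence")
    return sxy / math.sqrt(sxx * syy)


def t_statistic(r, n):
    """t = r * sqrt((n - 2) / (1 - r^2)), with n - 2 degrees of freedom."""
    if abs(r) >= 1.0:
        return math.copysign(math.inf, r)
    return r * math.sqrt((n - 2) / (1.0 - r * r))


def t_pdf(x, df):
    """Density of Student's t-distribution with df degrees of freedom."""
    coef = math.gamma((df + 1) / 2) / (math.sqrt(df * math.pi) * math.gamma(df / 2))
    return coef * (1.0 + x * x / df) ** (-(df + 1) / 2)


def two_tailed_p(t, df, steps=2000):
    """Two-tailed p-value: 1 minus the density mass inside [-|t|, |t|]."""
    upper = abs(t)
    if upper == 0:
        return 1.0
    if math.isinf(upper):
        return 0.0
    h = upper / steps  # steps must be even for Simpson's rule
    total = t_pdf(0.0, df) + t_pdf(upper, df)
    for i in range(1, steps):
        total += (4 if i % 2 else 2) * t_pdf(i * h, df)
    central_mass = 2.0 * (h / 3.0) * total  # symmetric density: double [0, |t|]
    return max(0.0, 1.0 - central_mass)


# With n = 10 observations, r = 0.80 gives 8 degrees of freedom.
t_value = t_statistic(0.80, 10)
p_value = two_tailed_p(t_value, df=8)
```

With only n = 10 paired observations, the test has 8 degrees of freedom, so a coefficient must be fairly large in magnitude before it reaches p < 0.05.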

Table 5 analyzes the collaborative contributions of pass@10 via Pearson correlation coefficients (r), demonstrating how it significantly enhances the performance of causal inference, dynamic intervention, and multi-agent collaboration through diverse outputs.

The results indicate that pass@10 shows strong positive correlations with causal inference metrics, suggesting that diverse outputs improve classification and prediction performance; correlations with dynamic intervention metrics indicate that high pass@10 reduces uncertainty and propagation errors; correlations with multi-agent collaboration metrics validate that aligned outputs enhance collaboration consistency and robustness. All r values are statistically significant (p < 0.05), with an average r > 0.80, indicating that pass@10 significantly drives framework performance.

6.3. Results Analysis