1. Introduction

Artificial intelligence (AI) has evolved from symbolic systems in the 1950s to advanced machine learning architectures, transforming education through breakthroughs in image recognition, natural language processing, and adaptive learning [1]. Enabled by increased computational power and data availability, AI has reshaped teaching methods and expanded learning beyond traditional classroom boundaries [2,3].

As AI reconfigures labor markets and economic structures [4], educational institutions face unprecedented opportunities and challenges. The convergence of digital technologies with physical learning environments necessitates adaptation of traditional pedagogical approaches to address emerging educational demands [5]. This transformation positions AI as a catalyst for educational innovation, enhancing learning experiences and preparing individuals for an increasingly digital future [6]. The 2021 Horizon Report’s recognition of AI as a key educational technology has intensified research interest in Artificial Intelligence in Education (AIED), while simultaneously highlighting implementation complexities.

AI demonstrates significant potential in educational advancement, supporting both structured academic programs and continuous learning initiatives [7]. Its applications span four key domains: analytical assessment, intelligent instruction, evaluation systems, and personalized learning platforms [8]. Current educational uses of AI fall into three main categories: (i) conversational AI systems like ChatGPT that handle routine queries and free educators for higher-order tasks [9]; (ii) intelligent tutoring systems offering real-time personalized feedback [10]; and (iii) adaptive learning platforms such as Socratic and Habitica, which adjust content dynamically based on student performance [11].

AI holds promise for enhancing equity and teaching quality [12,13]. It facilitates personalized learning and relieves educators from repetitive tasks, allowing focus on complex pedagogy [14]. Yet, its integration raises concerns about authenticity, job security, and human connection [8]. Automation risks reducing the nuanced feedback essential for effective education. Moreover, ethical issues such as diminished teacher autonomy, student data surveillance, and opaque algorithmic decisions have drawn increasing attention [15], underscoring the need for human-centered, transparent AI design.

Further barriers include data privacy risks, algorithmic bias, and financial constraints, particularly in resource-limited institutions [16,17]. These complexities suggest that AI adoption is not merely a technical matter, but a sociotechnical process shaped by individual, organizational, and systemic dynamics. Global initiatives such as the UNESCO AI Competency Framework for Teachers emphasize the educator’s role in ensuring responsible adoption [18], making it essential to understand the psychological and contextual factors influencing their engagement.

To this end, Rogers’ Diffusion of Innovations (DoI) theory (2014) [19] provides a useful lens, outlining five key attributes that affect adoption: relative advantage, compatibility, complexity, trialability, and observability. Prior research confirms DoI’s utility in explaining divergent faculty responses to educational technologies [20,21]. Integrating this framework helps reveal how perceived benefits and risks shape AI adoption decisions, particularly in constrained institutional settings [17].

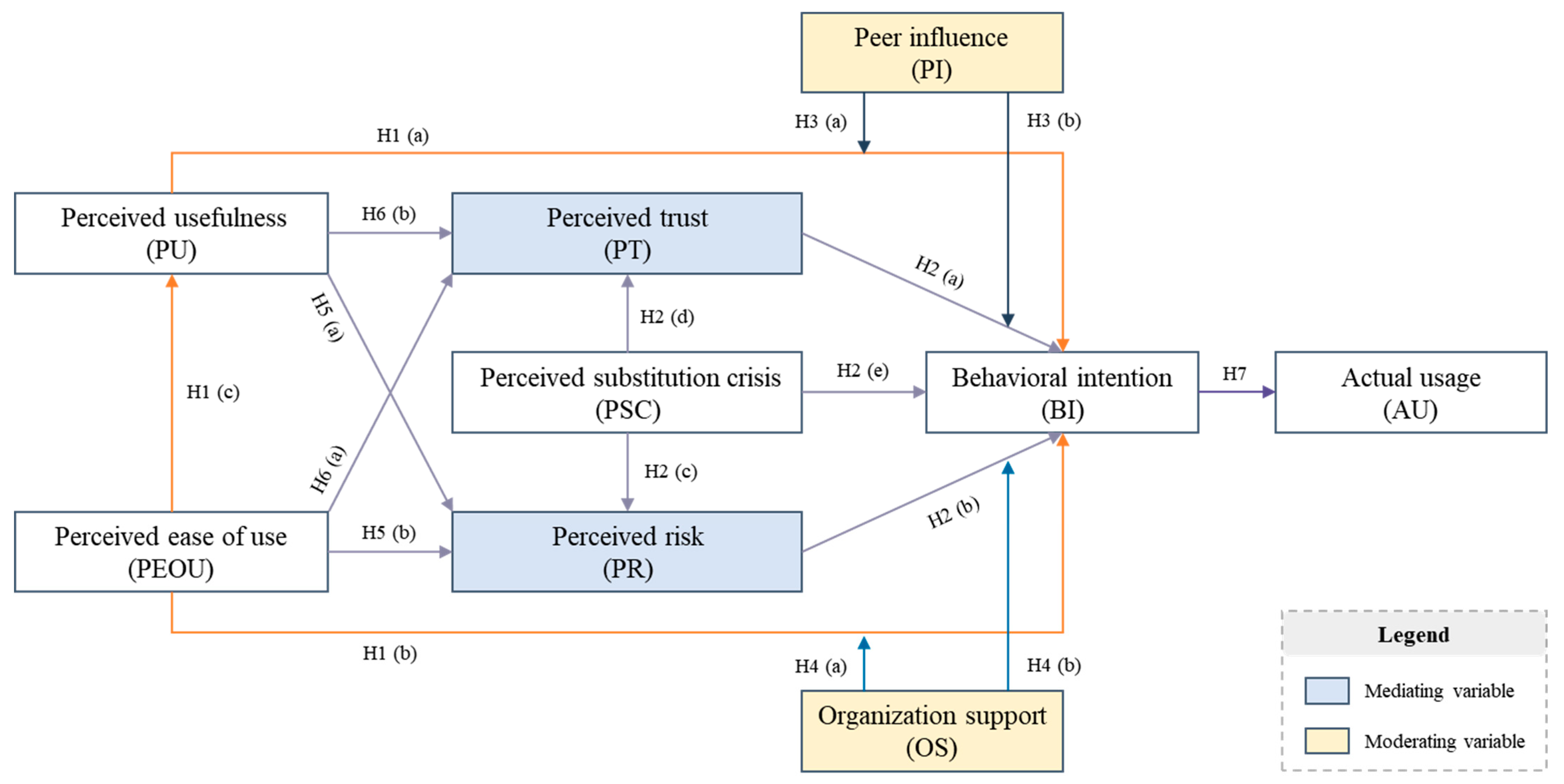

This tension between technological advancement and educational integrity positions university faculty as central actors in the AI adoption process. Their perceptions determine whether AI functions as a collaborative pedagogical tool or a disruptive force [22]. Understanding these dynamics requires examining not only educators’ perceptions (such as trust, risk, and usefulness) but also how these translate into behavioral intention and actual usage. To capture this process, this study adopts a perception–intention–behavior framework to explore the psychological and organizational factors influencing AI adoption among university educators. Accordingly, this study seeks to address the following research questions:

- (1) How do university teachers perceive the role and impact of AI integration in higher education?

- (2) What individual, interpersonal, and organizational factors influence university teachers’ behavioral intention and actual use of AI technologies in teaching?

The remainder of the paper is structured as follows. Section 2 presents the theoretical foundation and hypotheses development. Section 3 details the methodology, including data collection, sample characteristics, and analytical techniques. Section 4 presents the results and analysis. Section 5 offers conclusions and future outlook, considering the implications of the findings for educational reform and policy.

4. Results and Analysis

4.1. Common Method Bias

To address common method bias (CMB), we combined procedural and statistical remedies. Procedurally, we constructed the survey questions carefully, pre-tested them extensively for clarity, and applied strict anonymity protocols to elicit authentic responses [61]. Participant trust was further strengthened by transparent communication of research objectives and data confidentiality assurances. Statistically, we employed Harman’s single-factor test, which showed that the maximum variance explained by any single factor was substantially below the 50% threshold, suggesting that CMB is unlikely to be a serious concern in these data [62]. This combination of procedural and statistical controls aligns with best practices in survey research methodology [63] and strengthens the credibility of our findings. The low share of variance attributable to any single factor is also consistent with sound measurement design and data collection procedures.
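As a rough illustration of the statistical check described above, the sketch below computes the share of total item variance captured by the first unrotated component of the item correlation matrix and compares it with the 50% threshold. It assumes principal-component extraction, which the text does not specify, and it runs on simulated responses rather than the study's data; all function and variable names are hypothetical.

```python
import math
import random

def correlation_matrix(rows):
    """Pearson correlations between items; rows = respondents, columns = items."""
    n, k = len(rows), len(rows[0])
    means = [sum(r[j] for r in rows) / n for j in range(k)]
    cols = [[r[j] - means[j] for r in rows] for j in range(k)]  # centered columns
    norms = [math.sqrt(sum(v * v for v in col)) for col in cols]
    return [[sum(a * b for a, b in zip(cols[i], cols[j])) / (norms[i] * norms[j])
             for j in range(k)] for i in range(k)]

def largest_eigenvalue(matrix, iterations=500, seed=0):
    """Dominant eigenvalue of a symmetric positive semi-definite matrix (power iteration)."""
    rng = random.Random(seed)
    vec = [rng.random() + 0.1 for _ in matrix]  # random start avoids a degenerate guess
    value = 0.0
    for _ in range(iterations):
        nxt = [sum(m * v for m, v in zip(row, vec)) for row in matrix]
        value = math.sqrt(sum(x * x for x in nxt))
        vec = [x / value for x in nxt]
    return value

def first_factor_share(rows):
    """Share of total item variance captured by the first unrotated component."""
    corr = correlation_matrix(rows)
    return largest_eigenvalue(corr) / len(corr)

# Simulated responses (NOT the study's data): two independent latent traits,
# three items each, plus item-level noise.
rng = random.Random(42)
responses = []
for _ in range(300):
    f1, f2 = rng.gauss(0, 1), rng.gauss(0, 1)
    responses.append([f1 + rng.gauss(0, 1) for _ in range(3)] +
                     [f2 + rng.gauss(0, 1) for _ in range(3)])

share = first_factor_share(responses)
print(f"first factor share: {share:.1%}; below 50% threshold: {share < 0.5}")
```

A share well under 0.5 is read as evidence against a single dominant method factor; a share near 1.0 would indicate that one factor drives almost all item covariance.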

4.2. Measurement Model Evaluation

The Partial Least Squares Structural Equation Modeling (PLS-SEM) methodology encompasses two phases: measurement model assessment and structural model assessment. In the first phase, the measurement model is evaluated to establish the reliability, convergent validity, and discriminant validity of the constructs, by examining both the internal consistency of each construct and the degree of agreement among its indicators. Table 2 presents a detailed interpretation of the model estimates.

Construct reliability was assessed with two widely used metrics in structural equation modeling: Cronbach’s α and composite reliability (CR). Cronbach’s α, introduced by Lee Cronbach, quantifies a construct’s internal consistency through the analysis of inter-item correlations [64]. Internal consistency indicates the degree of interrelation among the items within a construct, ensuring that they collectively represent the underlying theoretical concept; α rises as the correlations among a construct’s items increase. In reliability assessment, a Cronbach’s α of 0.70 or higher is the conventional standard for acceptable reliability [65]. As shown in Table 2, all constructs had Cronbach’s α values above the 0.70 threshold, supporting the internal consistency of the measures.

In addition, CR was computed to evaluate construct consistency from the outer loadings of the indicators [66]. CR is often regarded as a more comprehensive reliability measure than Cronbach’s α because it accounts for the differential contributions of individual indicators rather than treating them as equally weighted. A CR value above the conventional threshold of 0.70 signifies adequate internal consistency among a construct’s indicators. As shown in Table 2, all CR scores exceeded this benchmark, further supporting the measurement model’s reliability.
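The two reliability metrics above, together with the average variance extracted (AVE) used in the validity checks, can be sketched with their standard textbook formulas. This is a minimal illustration that assumes standardized outer loadings; the loadings and item scores are invented for the example and are not taken from Table 2.

```python
from statistics import variance

def cronbach_alpha(items):
    """Cronbach's alpha; items is a list of columns, one list of scores per indicator."""
    k = len(items)
    totals = [sum(scores) for scores in zip(*items)]  # per-respondent sum score
    item_variance = sum(variance(col) for col in items)
    return (k / (k - 1)) * (1 - item_variance / variance(totals))

def composite_reliability(loadings):
    """CR from standardized loadings: (sum l)^2 / ((sum l)^2 + sum(1 - l^2))."""
    squared_sum = sum(loadings) ** 2
    error = sum(1 - l ** 2 for l in loadings)
    return squared_sum / (squared_sum + error)

def average_variance_extracted(loadings):
    """AVE: mean of the squared standardized loadings."""
    return sum(l ** 2 for l in loadings) / len(loadings)

# Hypothetical loadings for a three-indicator construct.
example_loadings = [0.78, 0.82, 0.85]
print(f"CR  = {composite_reliability(example_loadings):.3f}")
print(f"AVE = {average_variance_extracted(example_loadings):.3f}")

# Hypothetical Likert responses: three indicators, six respondents.
example_items = [[4, 5, 3, 4, 2, 5], [4, 4, 3, 5, 2, 5], [5, 5, 2, 4, 3, 4]]
print(f"alpha = {cronbach_alpha(example_items):.3f}")
```

Because CR weights each indicator by its own loading, it and α can diverge when loadings are uneven; with equal loadings the two tend to agree closely.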


Furthermore, this study employed the Fornell-Larcker correlation matrix, the square root of the AVE, and the Heterotrait-Monotrait (HTMT) ratio to evaluate discriminant validity [67]. These methods collectively provide a robust assessment of whether constructs are sufficiently distinct from one another.

Table 3 presents the Fornell-Larcker correlation matrix, in which the diagonal elements represent the square root of the AVE for each construct. In accordance with the Fornell-Larcker criterion, the square root of the AVE for each construct exceeds its correlations with any other construct. This result demonstrates strong discriminant validity, as each construct shares more variance with its own indicators than with other constructs in the model.

Table 4 presents the Heterotrait-Monotrait (HTMT) ratios, calculated following the methodological guidelines of Hair et al. (2023) [68]. The HTMT ratio compares the average correlation between indicators of different constructs with the average correlation among indicators of the same construct, offering a more stringent test of discriminant validity. All HTMT values reported in Table 4 fell below the conservative threshold of 0.85, with the highest observed ratio being 0.827. These results indicate that the constructs are empirically distinct and capture unique theoretical concepts. Because some scholars consider values up to 0.90 acceptable [69], the conclusion holds under either criterion.
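Both discriminant validity checks reduce to simple comparisons, sketched below under stated assumptions. The HTMT function follows the usual definition (mean between-construct item correlation divided by the geometric mean of the within-construct item correlations); the item correlation matrix, AVE values, and construct names `A` and `B` are hypothetical and do not come from Tables 3 or 4.

```python
from itertools import combinations
from math import sqrt
from statistics import fmean

def htmt(corr, items_a, items_b):
    """HTMT ratio for two constructs, given an item correlation matrix and item indices."""
    hetero = [corr[i][j] for i in items_a for j in items_b]
    mono_a = [corr[i][j] for i, j in combinations(items_a, 2)]
    mono_b = [corr[i][j] for i, j in combinations(items_b, 2)]
    return fmean(hetero) / sqrt(fmean(mono_a) * fmean(mono_b))

def fornell_larcker_holds(ave, correlations):
    """True if sqrt(AVE) of each construct exceeds its correlation with every other one."""
    return all(sqrt(ave[a]) > abs(r) and sqrt(ave[b]) > abs(r)
               for (a, b), r in correlations.items())

# Hypothetical item correlations: items 0-1 measure construct A, items 2-3 measure B.
item_corr = [
    [1.0, 0.6, 0.3, 0.3],
    [0.6, 1.0, 0.3, 0.3],
    [0.3, 0.3, 1.0, 0.7],
    [0.3, 0.3, 0.7, 1.0],
]
ratio = htmt(item_corr, [0, 1], [2, 3])
print(f"HTMT(A, B) = {ratio:.3f}; below 0.85: {ratio < 0.85}")
print(fornell_larcker_holds({"A": 0.64, "B": 0.50}, {("A", "B"): 0.60}))
```

The 0.85 cut-off is passed in the comparison rather than hard-coded in `htmt`, so the more liberal 0.90 criterion mentioned in the text can be checked the same way.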

4.3. Structural Model Evaluation

This study applied PLS-SEM to analyze the relationships between the constructs in the structural model, using SmartPLS 4 for hypothesis testing. A sample of 487 valid responses was analyzed, and bootstrapping with 5000 subsamples was employed to assess the statistical significance of the path coefficients. The large number of subsamples yields stable estimates of standard errors and significance levels, which is particularly important when examining complex path relationships in educational technology adoption models. Control variables were not included in the model, consistent with prior research in technology acceptance studies [70], as the focus was on examining the direct effects of key theoretical constructs. Table 5 summarizes the main effects, providing detailed insights into the factors that influence teachers’ BI and AU of AI tools in higher education.
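To make the link between bootstrapping, t-values, and p-values concrete, the sketch below bootstraps a single standardized path (a Pearson correlation between two simulated variables) and converts the resulting t-value into a two-tailed p-value. It is a simplification: a real PLS-SEM run re-estimates the whole model in each subsample, the normal approximation is an assumption, and the data and function names are hypothetical.

```python
import math
import random
from statistics import mean, stdev

def two_tailed_p(t_value):
    """Two-tailed p-value under a standard normal approximation."""
    return math.erfc(abs(t_value) / math.sqrt(2))

def pearson(xs, ys):
    mx, my = mean(xs), mean(ys)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    return sxy / math.sqrt(sxx * syy)

def bootstrap_t(xs, ys, n_boot=5000, seed=1):
    """Estimate, bootstrap t-value (estimate / bootstrap SE), and two-tailed p-value."""
    rng = random.Random(seed)
    n = len(xs)
    estimate = pearson(xs, ys)
    draws = []
    for _ in range(n_boot):
        idx = [rng.randrange(n) for _ in range(n)]  # resample respondents with replacement
        draws.append(pearson([xs[i] for i in idx], [ys[i] for i in idx]))
    t_value = estimate / stdev(draws)
    return estimate, t_value, two_tailed_p(t_value)

# Simulated data (NOT the study's data); 1000 subsamples keep the example quick.
data_rng = random.Random(3)
xs = [data_rng.gauss(0, 1) for _ in range(100)]
ys = [0.5 * x + data_rng.gauss(0, 1) for x in xs]
est, t_val, p_val = bootstrap_t(xs, ys, n_boot=1000)
print(f"estimate = {est:.3f}, t = {t_val:.2f}, p = {p_val:.4f}")

# A t-value of 1.687 corresponds to a two-tailed p of roughly 0.09 under this approximation.
print(f"p for t = 1.687: {two_tailed_p(1.687):.3f}")
```

Under this approximation a path needs |t| of about 1.96 to reach p < 0.05, which is a convenient yardstick when reading the t-values reported for each hypothesis.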

PU had a positive effect on BI that reached only marginal significance (β = 0.550, t = 1.687, p = 0.091), which supports H1(a) at the 10% level but not at the conventional 5% level. This finding suggests that teachers are more inclined to adopt AI tools when they perceive them as useful for improving teaching effectiveness, enhancing student engagement, and optimizing course design. The marginal significance level implies that other factors, such as perceived risks, institutional context, or individual differences in technology readiness, may moderate this relationship and warrant investigation in future research. The direction of the effect aligns with core technology acceptance theories while highlighting the complexity of AI adoption in educational settings.

Similarly, PEOU exhibited a strong positive influence on BI (β = 0.551, t = 6.482, p < 0.001), validating H1(b). This robust relationship indicates that when AI tools are intuitive and user-friendly, teachers are more likely to integrate them into their teaching practices. The findings emphasize the critical importance of designing AI tools with simplicity and ease of use to encourage widespread adoption among educators in higher education settings, particularly given the diverse technological proficiency levels among faculty members.

PEOU also significantly influenced PU (β = 0.417, t = 4.893, p < 0.001), supporting H1(c). This finding reflects the intertwined nature of ease of use and perceived usefulness, where user-friendly tools not only lower barriers to adoption but also enhance teachers’ perceptions of their practical utility in educational contexts. This relationship underscores the importance of considering both usability and functionality in AI tool development, particularly in the complex context of higher education teaching.

Additionally, PT positively impacted BI (β = 0.482, t = 8.951, p < 0.001), affirming H2(a). The path coefficient and highly significant t-value highlight trust as a crucial determinant of AI adoption intentions. The findings suggest that trust may be particularly important for AI adoption compared to traditional educational technologies, given AI’s unique characteristics such as autonomous decision-making and data processing capabilities. Institutions must build trust by ensuring robust data protection measures and transparent policies to support successful AI adoption in education.

In contrast, PSC yielded mixed results. While PSC negatively influenced PT (β = −0.131, t = 5.031, p < 0.001), its direct effect on BI (β = −0.048, t = 1.296, p = 0.201) was insignificant, leading to the rejection of H2(e). The significant negative effect on trust but insignificant direct effect on behavioral intention suggests a complex relationship between job displacement concerns and AI adoption. This nuanced finding suggests that while concerns about AI replacing teachers may erode trust in AI systems, they do not directly deter adoption intentions in this educational context. The relatively small path coefficient from PSC to PT (β = −0.131) indicates that while job displacement concerns influence trust, other factors such as system reliability and data privacy may be more crucial in trust formation. These findings highlight the critical need for addressing teachers’ concerns about their professional role in an AI-enhanced educational environment to ensure smooth and effective technology integration, particularly through professional development programs and institutional policies that emphasize AI as a complementary tool rather than a replacement for human educators.

4.4. Moderation Analysis

This study examined the moderation effects of PI and OS on BI. Table 6 summarizes the results of the path analysis for moderation effects, revealing complex interactions between social, organizational, and individual factors in AI adoption decisions.

The moderation effect of PI on the relationship between PU and BI was not significant (β = 0.035, t = 0.621, p = 0.511), indicating that PU’s influence on BI is independent of peer interactions. This finding suggests that teachers’ evaluation of AI tools’ usefulness is primarily driven by intrinsic assessments and personal teaching experiences, rather than being substantially influenced by colleagues’ opinions. This independence from peer influence in usefulness assessment highlights the importance of demonstrating concrete benefits to individual teachers’ pedagogical practices. However, PI significantly moderated the relationship between PT and BI (β = 0.244, t = 2.172, p = 0.035), highlighting the crucial role of social dynamics in trust formation and adoption decisions. The positive moderation effect suggests that teachers are more likely to trust and subsequently adopt AI tools when colleagues demonstrate successful usage experiences, aligning with core tenets of Social Influence Theory. This social validation effect is particularly important in educational settings where peer learning and collaborative professional development are common.

The moderation effect of OS on the relationship between PEOU and BI was significant and substantial (β = 0.289, t = 5.211, p < 0.001), suggesting that institutional support mechanisms significantly enhance the impact of PEOU on adoption intentions. The strong positive moderation effect underscores the critical need for robust organizational support systems to reinforce teachers’ adoption intentions, particularly when dealing with sophisticated AI technologies. This finding implies that even user-friendly AI tools may face adoption barriers without adequate institutional backing. Conversely, OS did not significantly moderate the relationship between PR and BI (β = 0.011, t = 0.782, p = 0.619), indicating that general organizational support measures are insufficient to address specific risk-related concerns. Targeted measures, such as transparent data security protocols and system reliability guarantees, are essential to mitigate PR and encourage AI adoption.
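One way to read a moderation coefficient is through conditional ("simple") slopes: the effect of the predictor at low, average, and high levels of the moderator. The sketch below applies this to the PEOU to BI path and the OS interaction term quoted above. It assumes a standardized moderator and that both coefficients can be combined in one equation, which the text does not confirm, so the resulting slopes are illustrative only.

```python
def conditional_slope(main_effect, interaction, moderator_z):
    """Slope of the predictor on the outcome at a given standardized moderator level."""
    return main_effect + interaction * moderator_z

# Coefficients quoted in the text; combining them in one equation is an assumption.
PEOU_TO_BI = 0.551
OS_X_PEOU = 0.289

slopes = {z: round(conditional_slope(PEOU_TO_BI, OS_X_PEOU, z), 3) for z in (-1, 0, 1)}
for z, slope in slopes.items():
    print(f"OS at {z:+d} SD: PEOU -> BI slope = {slope}")
```

Read this way, a positive interaction term means the ease-of-use effect grows as organizational support increases, which is the pattern the paragraph describes.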

4.5. Mediation Analysis

Table 7 presents the mediation effects of PR and PT on BI. The mediation analysis revealed complex relationships between perceptions, risk assessment, trust formation, and adoption intentions in the context of AI tool integration in higher education.

The mediation effect of PR on the relationship between PU and BI was insignificant (β = −0.015, t = 0.178, p = 0.859), leading to the rejection of H5(a). This notable finding indicates that perceptions of AI tools’ utility do not effectively mitigate fundamental risk concerns such as data privacy, algorithmic bias, or system reliability. The weak mediation effect suggests that PR may be more strongly influenced by external factors such as institutional policies, technical safeguards, or explicit security assurances, highlighting the critical need for targeted risk mitigation strategies beyond merely emphasizing AI’s practical benefits. This finding underscores the importance of addressing security and privacy concerns directly through robust institutional frameworks. Conversely, PR significantly mediated the relationship between PEOU and BI (β = −0.259, t = 3.931, p < 0.001), supporting H5(b). The substantial negative mediation effect indicates that user-friendly and intuitive AI tools effectively reduce risk perceptions, thereby enhancing adoption intentions. This robust finding emphasizes the crucial importance of designing accessible and transparent tools to alleviate operational concerns and foster confidence in AI usage. The significant mediation suggests that well-designed interfaces and intuitive interactions serve as powerful mechanisms for reducing perceived risks associated with AI adoption.

PT demonstrated a significant mediating effect between PU and BI (β = 0.322, t = 2.011, p = 0.045), supporting H5(a). This substantial positive mediation underscores the pivotal role of trust in translating perceived usefulness into concrete adoption intentions. The finding suggests that teachers who recognize AI tools’ potential for improving teaching outcomes are more likely to develop trust in these systems, which subsequently serves as a critical bridge facilitating adoption decisions. This highlights the importance of demonstrating clear pedagogical benefits while simultaneously building trust through transparent and reliable system performance. Similarly, the mediation effect of PT on the relationship between PEOU and BI was marginally significant (β = 0.149, t = 1.782, p = 0.075), supporting H5(b). While this marginal significance suggests that ease of use contributes to trust formation, the relatively modest effect size indicates that its impact may vary based on multiple factors, including tool design sophistication, institutional support mechanisms, and peer endorsements. This nuanced finding suggests that while user-friendly interfaces can help build trust, other contextual factors play important roles in strengthening this relationship and ultimately influencing adoption intentions.
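The logic of an indirect (mediated) effect can be sketched as the product of two paths, a (predictor to mediator) and b (mediator to outcome, controlling for the predictor), with a percentile bootstrap interval around the product. The example uses ordinary least squares on simulated observed scores, not the latent variable scores a PLS-SEM tool would use, and the variables `x`, `m`, and `y` are hypothetical stand-ins for a predictor, a mediator, and an outcome.

```python
import random

def cov(u, v):
    mu, mv = sum(u) / len(u), sum(v) / len(v)
    return sum((a - mu) * (b - mv) for a, b in zip(u, v)) / (len(u) - 1)

def indirect_effect(x, m, y):
    """a * b, where a = slope of m on x and b = partial slope of y on m given x."""
    a = cov(x, m) / cov(x, x)
    denom = cov(x, x) * cov(m, m) - cov(x, m) ** 2
    b = (cov(m, y) * cov(x, x) - cov(x, m) * cov(x, y)) / denom
    return a * b

def bootstrap_ci(x, m, y, n_boot=1000, seed=7, level=0.95):
    """Percentile bootstrap confidence interval for the indirect effect."""
    rng = random.Random(seed)
    n = len(x)
    draws = []
    for _ in range(n_boot):
        idx = [rng.randrange(n) for _ in range(n)]
        draws.append(indirect_effect([x[i] for i in idx],
                                     [m[i] for i in idx],
                                     [y[i] for i in idx]))
    draws.sort()
    lower = draws[int((1 - level) / 2 * n_boot)]
    upper = draws[int((1 + level) / 2 * n_boot) - 1]
    return lower, upper

# Simulated data (NOT the study's data) with a true indirect effect of 0.6 * 0.5 = 0.3.
rng = random.Random(11)
x = [rng.gauss(0, 1) for _ in range(300)]
m = [0.6 * xi + rng.gauss(0, 1) for xi in x]
y = [0.5 * mi + 0.1 * xi + rng.gauss(0, 1) for xi, mi in zip(x, m)]

effect = indirect_effect(x, m, y)
low, high = bootstrap_ci(x, m, y, n_boot=500)
print(f"indirect effect = {effect:.3f}, 95% CI = [{low:.3f}, {high:.3f}]")
```

An interval that excludes zero is the usual criterion for a significant indirect effect; an interval that straddles zero corresponds to the non-significant mediation paths reported above.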

4.6. Model Explanatory and Predictive Power

The empirical validation of the research model was conducted through a comprehensive assessment of both explanatory and predictive capabilities using multiple analytical metrics. The model’s explanatory power was primarily evaluated through the coefficient of determination (R²), which quantifies the proportion of variance explained in endogenous constructs. All R² values substantially exceeded the conservative threshold of 0.10 established by Bagozzi and Yi (1998) [65], demonstrating robust explanatory capacity. Notably, the model explained 67.2% of the variance in BI to adopt AI tools (R² = 0.672), representing a substantial level of explanatory power. The model also effectively explained the variance in PT (R² = 0.538), PR (R² = 0.412), and AU (R² = 0.389), indicating strong predictive relationships among the constructs.

The model’s structural validity was further substantiated by the Standardized Root Mean Square Residual (SRMR) value of 0.043, which falls well below the threshold of 0.080 recommended by Hair et al. (2020) [71]. This demonstrates excellent model fit and confirms the theoretical framework’s alignment with empirical observations. The effect size analysis (f²) revealed hierarchical impacts among the constructs, with PT exhibiting a large effect on BI (f² = 0.35), while PR and PU demonstrated moderate effects (f² = 0.21 and 0.18, respectively). These findings align with recent literature suggesting the paramount importance of trust-building mechanisms in technology adoption contexts [72].
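For readers less familiar with f², the sketch below shows the standard definition (the change in R² when a predictor is removed, scaled by the unexplained variance) and Cohen's conventional cut-offs of 0.02, 0.15, and 0.35. It assumes the f² values quoted above refer to effects on BI and that 0.672 is the R² of the full model; the implied "excluded" R² is back-calculated for illustration and is not a value reported in the paper.

```python
def f_squared(r2_included, r2_excluded):
    """Effect size of one predictor: (R2_incl - R2_excl) / (1 - R2_incl)."""
    return (r2_included - r2_excluded) / (1 - r2_included)

def implied_r2_excluded(r2_included, f2):
    """R2 the model would have without the predictor, back-calculated from f2."""
    return r2_included - f2 * (1 - r2_included)

def effect_label(f2):
    """Cohen's conventional labels for f2."""
    if f2 >= 0.35:
        return "large"
    if f2 >= 0.15:
        return "medium"
    if f2 >= 0.02:
        return "small"
    return "negligible"

R2_BI = 0.672  # reported R2 for BI; treating it as the 'included' R2 is an assumption
for name, f2 in (("PT", 0.35), ("PR", 0.21), ("PU", 0.18)):
    without = implied_r2_excluded(R2_BI, f2)
    print(f"{name}: f2 = {f2:.2f} ({effect_label(f2)}), implied R2 without it = {without:.3f}")
```

The labels produced by these cut-offs match the "large" and "moderate" descriptions in the paragraph above.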

The model’s predictive capability was assessed through cross-validated redundancy analysis (Q²) using the blindfolding procedure with an omission distance of 7. The analysis yielded positive Q² values for all endogenous constructs, exceeding the zero threshold and confirming the model’s predictive relevance (Shahzad et al., 2024). The pattern of Q² values, BI (0.571), PT (0.386), PR (0.342), and AU (0.321), shows that predictive accuracy is strongest for behavioural intention, the study’s central outcome variable, suggesting that the model is particularly well suited to forecasting teachers’ propensity to adopt AI tools in higher education settings.
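For reference, the cross-validated redundancy statistic is conventionally defined as below (the Stone-Geisser form). Here D is the omission distance (7 in this study), SSE_d is the sum of squared prediction errors for the data points omitted in blindfolding round d, and SSO_d is the corresponding sum of squares when the mean is used as the prediction. The notation is an assumption, since the paper does not write out the formula.

```latex
Q^2 = 1 - \frac{\sum_{d=1}^{D} SSE_d}{\sum_{d=1}^{D} SSO_d}
```

A value above zero means the model predicts the omitted data points better than the mean does, which is why zero serves as the threshold for predictive relevance.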

4.7. Discussion

The findings of this study shed light on the complex interplay of cognitive, affective, and contextual factors influencing university teachers’ adoption of AI tools in higher education.

One contribution is the demonstrated mediating role of PT between cognitive appraisals (PU and PEOU) and behavioral intention (BI). This highlights that trust is not merely an external factor, but an affective conduit that transforms rational assessments into committed adoption decisions. In contrast, PR functioned more as a contextual inhibitor, particularly shaped by perceived ease of use—suggesting that operational complexity, rather than perceived utility, is the main trigger of risk perceptions. This nuance deepens our understanding of risk cognition in educational AI, a relatively underexplored domain in existing TAM- or UTAUT-based models.

Beyond individual psychology, this study foregrounds the significance of social and institutional moderators, specifically PI and OS, in amplifying or suppressing adoption pathways. These findings emphasize that AI adoption is not merely a personal decision, but one shaped by interpersonal trust networks and institutional enablers, aligning with Teo (2011) [73] and Zhao & Frank (2003) [74].

Additionally, the study introduces PSC as a novel, emotionally charged construct that interacts with trust but not directly with behavioral intention. This suggests that identity threat—a teacher’s fear of being replaced by AI—undermines affective receptivity without necessarily deterring pragmatic engagement. This insight contributes to the growing conversation around human-machine role negotiation in AI-enhanced education, indicating the importance of reframing AI not as a competitor, but as a collaborator.

These patterns resonate closely with Everett Rogers’ DoI theory. Constructs like PU and PEOU mirror Rogers’ notions of relative advantage and complexity, while PI and OS reflect the importance of observability, trialability, and system readiness in facilitating adoption. By integrating DoI into the interpretation of results, this study provides a dual-theoretical perspective that enriches our understanding of AI diffusion in complex organizational environments like higher education.

5. Conclusions and Implications

This study investigated the factors influencing university teachers’ adoption of AI tools in higher education, employing a PLS-SEM approach. The findings highlight the pivotal roles of PU, PEOU, PT, and PR in shaping teachers’ BI and AU of AI tools. The study reached four main conclusions:

- (i) PU and PEOU emerged as significant predictors of BI, indicating that teachers are more likely to adopt AI tools when they perceive them as beneficial and intuitive. PEOU also exhibited a strong influence on PU, highlighting the intertwined relationship between ease of use and perceived utility.

- (ii) PT played a pivotal mediating role in translating PU and PEOU into stronger adoption intentions, while PR served as a barrier to adoption. Notably, while PR was significantly mitigated by PEOU, it was not influenced by PU, suggesting that risk perceptions are more closely tied to the perceived simplicity and operational feasibility of AI tools than their utility. This highlights the importance of designing accessible and user-friendly tools to alleviate operational concerns and foster adoption.

- (iii) The moderating roles of PI and OS further emphasize the importance of social and institutional contexts in facilitating AI adoption. PI significantly strengthened the relationship between PT and BI, suggesting that peer demonstrations and endorsements can enhance trust and encourage adoption. OS, on the other hand, amplified the effect of PEOU on BI, underscoring the necessity of robust institutional support systems, such as training programs and technical resources, to reinforce teachers’ intentions to integrate AI tools into their teaching practices. Additionally, PSC demonstrated a complex relationship with adoption: while it negatively influenced PT (β = −0.131, t = 5.031, p < 0.001), its direct effect on BI was insignificant (β = −0.048, t = 1.296, p = 0.201). This nuanced finding suggests that while concerns about AI replacing teachers may erode trust in AI systems, they do not directly deter adoption intentions, highlighting the importance of addressing professional role concerns through institutional policies that emphasize AI as a complementary tool rather than a replacement for human educators.

- (iv) The study’s explanatory and predictive power metrics, including high R² and Q² values, demonstrate the robustness of the research model in capturing the key dynamics of AI adoption in higher education. The R² for BI was 0.672, indicating that 67.2% of its variance was explained by the model. Similarly, Q² values for BI (0.571), PT (0.386), and AU (0.321) confirmed the model’s strong predictive relevance.

Based on the study’s findings, the following strategies are proposed to facilitate the adoption of AI tools among university teachers:

- (i) AI tools should be tailored to the actual teaching needs of university educators and continuously refined based on teacher feedback during development. AI developers and universities should collaborate on user-friendly tools that address practical classroom needs such as streamlining course design, enhancing student engagement, and improving assessment processes. Ongoing teacher feedback during development keeps tools aligned with classroom requirements, increasing both PU and PEOU.

- (ii) Higher education institutions should build trust by establishing transparent data protection policies, which will facilitate AI tool adoption. Trust is crucial for AI adoption, especially given concerns over data privacy and system reliability, so institutions and policymakers should implement robust data protection measures and transparent AI usage policies. Communicating these efforts through regular updates, transparent audits, and faculty briefings can alleviate fears and foster confidence among educators, addressing the critical role of PT.

- (iii) Universities should leverage social influence, which plays a significant role in technology adoption, by encouraging experienced educators to act as AI champions who share their positive experiences and best practices with peers. Peer-led workshops and collaborative forums can normalize AI use, increase trust, and reduce resistance among hesitant teachers.

- (iv) Higher education institutions should establish a comprehensive professional development roadmap, including continuous technical support, to reinforce teachers’ intentions to adopt AI tools. Universities should develop training programs focused on building digital literacy and AI competence, including discipline-specific workshops, hands-on sessions, and access to technical resources. Real-time technical support and dedicated help desks would make AI tools more approachable, reducing barriers for educators unfamiliar with the technology.

- (v) Policymakers and university leaders should prioritize AI adoption within the broader digital transformation strategy, setting clear goals for integrating AI into teaching and learning and providing the necessary policy support and resources. Adequate funding for digital infrastructure and continuous faculty training must be ensured. Universities should integrate AI-related metrics into performance evaluations and funding allocations to incentivize continuous progress. Collaboration with national strategies, such as China’s “Smart Education”, will ensure alignment and foster systemic innovation.

Despite its contributions, this study has certain limitations. First, the sample was limited to university teachers in one country, which may affect generalizability across regions or cultures and restricts the ability to conduct subgroup analyses based on demographic or institutional characteristics. Second, while disciplinary data were collected, field-specific adoption differences were not analyzed and warrant future attention. Third, perceived risk was examined mainly at the individual level, focusing on usability and professional concerns; broader ethical risks—such as AI’s potential to deepen educational inequality—were not addressed. Lastly, the cross-sectional design limits causal interpretation. Longitudinal studies are needed to track changes in trust, risk, and sustained adoption over time.